Abstract

People’s decisions and judgments are disproportionately swayed by improbable but extreme eventualities, such as terrorism, that come to mind easily. This article explores whether such availability biases can be reconciled with rational information processing by taking into account the fact that decision-makers value their time and have limited cognitive resources. Our analysis suggests that to make optimal use of their finite time decision-makers should over-represent the most important potential consequences relative to less important, put potentially more probable, outcomes. To evaluate this account we derive and test a model we call utility-weighted sampling. Utility-weighted sampling estimates the expected utility of potential actions by simulating their outcomes. Critically, outcomes with more extreme utilities have a higher probability of being simulated. We demonstrate that this model can explain not only people’s availability bias in judging the frequency of extreme events but also a wide range of cognitive biases in decisions from experience, decisions from description, and memory recall.

Keywords: judgment and decision-making, bounded rationality, cognitive biases, heuristics, probabilistic models of cognition

Human judgment and decision making have been found to systematically violate the axioms of logic, probability theory, and expected utility theory (Tversky & Kahneman, 1974). These violations are known as cognitive biases and are assumed to result from people’s use of heuristics – simple and efficient cognitive strategies that work well for certain problems but fail on others. While some have interpreted the abundance of cognitive biases as a sign that people are fundamentally irrational (Ariely, 2009; Marcus, 2009; Sutherland, 1992; McRaney, 2011) others have argued that people appear irrational only because their reasoning has been evaluated against the wrong normative standards (Oaksford & Chater, 2007), that the heuristics giving rise to these biases are rational given the structure of the environment (Simon, 1956; Todd & Gigerenzer, 2012), or that the mind makes rational use of limited cognitive resources (Simon, 1956; Lieder, Griffiths, & Goodman, 2013; Griffiths, Lieder, & Goodman, 2015; Wiederholt, 2010; Dickhaut, Rustichini, & Smith, 2009).

One of the first biases interpreted as evidence against human rationality is the availability bias (Tversky & Kahneman, 1973): people overestimate the probability of events that come to mind easily. This bias violates the axioms of probability theory. It leads people to overestimate the frequency of extreme events (Lichtenstein, Slovic, Fischhoff, Layman, & Combs, 1978) and this in turn contributes to overreactions to the risk of terrorism (Sunstein & Zeckhauser, 2011) and other threats (Lichtenstein et al., 1978; Rothman, Klein, & Weinstein, 1996). Such availability biases result from the fact that not all memories are created equal: while most unremarkable events are quickly forgotten, the strength of a memory increases with the magnitude of its positive or negative emotional valence (Cruciani, Berardi, Cabib, & Conversi, 2011). This may be why memories of extreme events, such as a traumatic car accident (Brown & Kulik, 1977; Christianson & Loftus, 1987) or a big win in the casino, come to mind much more easily (Madan, Ludvig, & Spetch, 2014) and affect people’s decisions more strongly (Ludvig, Madan, & Spetch, 2014) than moderate events, such as the 2476th time you drove home safely and the 1739th time a gambler lost $1 (Thaler & Johnson, 1990).

The availability bias is commonly assumed to be irrational, but here we propose that it might reflect the rational use of finite time and limited cognitive resources (Griffiths et al., 2015). We explore the implications of these bounded resources within a rational modeling framework (Griffiths, Vul, & Sanborn, 2012) that captures the inherent variability of people’s decisions (Vul, Goodman, Griffiths, & Tenenbaum, 2014) and judgments (Griffiths & Tenenbaum, 2006). According to our mathematical analysis, the availability bias could serve to help decision-makers focus their limited resources on the most important eventualities. In other words, we argue that the overweighting of extreme events ensures that the most important possible outcomes (i.e., those with extreme utilities) are always taken into account even when only a tiny fraction of all possible outcomes can be considered. Concretely, we show that maximizing decision quality under time constraints requires biases compatible with those observed in human memory, judgment, and decision-making. Without those biases the decision-maker’s expected utility estimates would be so much more variable that her decisions would be significantly worse. This follows directly from a statistical principle known as the bias-variance tradeoff (Hastie, Tibshirani, & Friedman, 2009).

Starting from this principle, we derive a rational process model of memory encoding, judgment, and decision making that we call utility-weighted learning (UWL). Concretely, we assume that the mind achieves a near-optimal bias-variance tradeoff by approximating the optimal importance sampling algorithm (Hammersley & Handscomb, 1964; Geweke, 1989) from computational statistics. This algorithm estimates the expected value of a function (e.g., a utility function) by a weighted average of its values for a small number of possible outcomes. To ensure that important potential outcomes are taken into account, optimal importance sampling optimally prioritizes outcomes according to their probability and the extremity of their function value. The resulting estimate is biased towards extreme outcomes but its reduced variance makes it more accurate. To develop our model, we apply optimal importance sampling to estimating expected utilities. We find that this enables better decisions under constrained resources. The intuitive reason for this benefit is that overweighting extreme events ensures that the most important possible outcomes (e.g., a catastrophe that has to be avoided or an epic opportunity that should be seized) are always taken into account even when only a tiny fraction of all possible outcomes can be considered.

According to our model, each experience o creates a memory trace whose strength w is proportional to the extremity of the event’s utility u(o) (i.e., where is a reference point established by past experience). This means that when a person experiences an extremely bad event (e.g., a traumatic accident) or an extremely good event (e.g., winning the jackpot) the resulting memory trace will be much stronger than when the utility of the event was close to zero (e.g., lying in bed and looking at the ceiling). Here, we refer to events such as winning the jackpot and traumatic car accidents as ‘extreme’ not because they are rare or because their utility is far from zero but because they engender a large positive or large negative difference in utility between one choice (e.g., to play the slots) versus another (e.g., to leave the casino).

In subsequent decisions (e.g., whether to continue gambling or call it a day), the model probabilistically recalls past outcomes of the considered action (e.g., the amounts won and lost in previous rounds of gambling) according to the strengths of their memory traces. As a result, the frequency with which each outcome is recalled is biased by its utility even though the recall mechanism is oblivious to the content of each memory.

Concretely, the probability that the first recalled outcome is an instance of losing $1 would be proportional to the sum of its memory traces’ strengths. Although this event might have occurred very frequently, each of its memory traces would be very weak. For instance, while there might be 1345 memory traces their strengths would be small (e.g., with close to u(−$1)). Thus, the experience of losing $1 in the gamble would be only moderately available in the gambler’s memory (total memory strength . Therefore, the one time when the gambler won $1000 might have a similarly high probability of coming to mind because its memory trace is significantly stronger (e.g., one memory trace of strength . According to our model, this probabilistic retrieval mechanism will sample a few possible outcomes from memory. These simulated outcomes (e.g., o1 = $1000, o2 = $ − 1, o5 = $1000) are then used to estimate the expected utility of the considered action by a weighted sum of their utilities where the theoretically derived weights partly correct for the utility-weighting of the memory traces (i.e., with . Finally, the considered action is chosen if and only if the resulting estimate of the expected utility gain is positive.

Our model explains why extreme events come to mind more easily, why people overestimate their frequency, and why they are overweighted in decision-making. It captures published findings on biases in memory recall, frequency estimation, and decisions from experience (Ludvig et al., 2014; Madan et al., 2014; Erev et al., 2010) as well as three classic violations of expected utility theory in decisions from description. Our model is competitive with the best existing models of decisions from experience and correctly predicted the previously unobserved correlation between events’ perceived extremity and the overestimation of their frequencies. The empirical evidence that we present strongly supports the model’s assumption that the stronger memory encoding of events with extreme utilities causes biases in memory recall that in turn lead to biases in frequency estimation and decision-making. Concretely, people remember extreme events more frequently than equally frequent events of moderate utility, overestimate their frequency, and overweight them in decision-making (Ludvig et al., 2014). Furthermore, the magnitude of overweighting increases significantly with the magnitude of the memory bias (Madan et al., 2014), and we found that the extent to which people overestimate an event’s frequency correlates significantly with its extremity. The theoretical significance of our analysis is twofold: it provides a unifying mechanistic and teleological explanation for a wide range of seemingly disparate cognitive biases and it suggests that at least some heuristics and biases might reflect the rational use of finite time and limited cognitive resources (Griffiths et al., 2015).

The remainder of this paper proceeds as follows: We start by deriving a novel decision mechanism as the rational use of finite time under reasonable, abstract assumptions about the mind’s computational architecture. We show that the derived mechanism captures people’s availability biases in frequency judgment and memory recall. Next, we demonstrate that the same mechanism can also account for three classic violations of expected utility theory and evaluate it against alternative models of decisions from description. We proceed to show that our model can also capture the heightened availability, overestimation, and overweighting of extreme events in decisions from experience. Finally, we show that utility-weighted sampling can emerge from a biologically-plausible learning mechanism that captures the temporal evolution of people’s risk preferences in decisions from experience and evaluate it against alternative models of decisions from experience. We conclude with implications for the debate on human rationality and directions for future research.

Resource-rational decision-making by utility-weighted sampling

According to expected utility theory (Von Neumann & Morgenstern, 1944), decision-makers should evaluate each potential action a by integrating the probabilities P(o|A = a) of its possible outcomes o with their utilities u(o) into the action’s expected utility . Unlike simple laboratory tasks where each choice can yield only a small number of possible payoffs, many real-life decisions have infinitely many possible outcomes.1 As a consequence, the expected utility of action a becomes an integral:

| (1) |

In the general case, this integral is intractable to compute. Below we investigate how the brain might approximate the solution to this intractable problem.

Sampling as a decision strategy

To explore the implications of resource constraints on decision-making under uncertainty, we model the cognitive resources available for decision-making within a formal computational framework that has been successfully used to develop rational process models of human cognition and can capture the variability of human performance, namely sampling (Griffiths et al., 2012). Sampling methods can provide an efficient approximation to integrals such as the expected utility in Equation 1 (Hammersley & Handscomb, 1964), and mental simulations of a decision’s potential consequences can be thought of as samples. The idea that the mind handles uncertainty by sampling is consistent with neural variability in perception (Fiser, Berkes, Orbán, & Lengyel, 2010) and the variability of people’s judgments (Vul et al., 2014; Denison, Bonawitz, Gopnik, & Griffiths, 2013; Griffiths & Tenenbaum, 2006). For instance, people’s predictions of an uncertain quantity X given partial information y are roughly distributed according to its posterior distribution p(X|y) as if they were sampled from it (Griffiths & Tenenbaum, 2006; Vul et al., 2014). Such variability has also been observed in decision-making: in repeated binary choices from experience animals chose each option stochastically with a frequency roughly proportional to the probability that it will be rewarded (Herrnstein & Loveland, 1975). This pattern of choice variability, called probability matching, is consistent with the hypothesis that animals perform a single simulation and chose the simulated action whenever its simulated outcome is positive. People also exhibit probability matching when the stakes are low, but as the stakes increase their choices transition from probability matching to maximization (Vulkan, 2000). This transition might arise from people gradually increasing the number of samples they generate to maximize the amount of reward they receive per unit time (Vul et al., 2014). Decision mechanisms based on sampling from memory can explain a wide range of phenomena (N. Stewart, Chater, & Brown, 2006). Concordant with recent drift-diffusion models (Shadlen & Shohamy, 2016) and query theory (Johnson, Häubl, & Keinan, 2007; Weber et al., 2007), this approach assumes that preferences are constructed (Payne, Bettman, & Johnson, 1992) through a sequential, memory-based cognitive process.

Assuming that people make decisions by sampling, we can express time and resource-constraints as a limit on the number of samples, where each sample is a simulated outcome: According to our theory, the decision-maker’s primary cognitive resource is a probabilistic simulator of the environment. The decision-maker can use this resource to anticipate some of the many potential futures that could result from taking one action versus another, but each simulation takes a non-negligible amount of time. Since time is valuable and the simulator can perform only one simulation at a time, the cost of using this cognitive resource is thus proportional to the number of simulations (i.e. samples).

If a decision has to be based on only a small number of simulated outcomes, what is the optimal way to generate them? Intuitively, the rational way to decide whether to take action a is to simulate its consequences o according to one’s best knowledge of the probability p that they will occur and average the resulting gain in utility Δu(o) to obtain an estimate of of the expected gain or loss in utility for taking action a over not taking it, that is

| (2) |

This decision strategy, which we call representative sampling (RS), generates an unbiased utility estimate. Yet – surprisingly – representative sampling is insufficient for making good decisions with very few samples. Consider, for instance, the choice between accepting versus declining a game of Russian roulette with the standard issue six-round NGant M1895 revolver. Playing the game will most likely, i.e. with probability , reward you with a thrill and save you some ridicule (Δu(o1) = 1) but kill you otherwise . Ensuring that representative sampling declines a game of Russian roulette at least 99.99% of the time, would require 51 samples – potentially a very time-consuming computation.

Like Russian roulette, many real-life decisions are complicated by an inverse relationship between the magnitude of the outcome and its probability (Pleskac & Hertwig, 2014). Many of these problems are much more challenging than declining a game of Russian roulette, because their probability of disaster is orders of magnitude smaller than and it may or may not be large enough to warrant caution. Examples include risky driving, medical decisions, diplomacy, the stock market, and air travel. For some of these choices (e.g., riding a motor cycle without wearing a helmet) there may be a one in a million chance of disaster while all other outcomes have negligible utilities:

| (3) |

If people decided based on n representative samples, they would completely ignore the potential disaster with probability 1 − (1 − 10−6)n. Thus to have at least a 50% chance of taking the potential disaster into account they would have to generate almost 700000 samples. This is clearly infeasible; thus one would almost always take this risk even though the expected utility gain is about −1000. In conclusion, representative sampling is insufficient for resource-bounded decision-making when some of the outcomes are highly improbable but so extreme that they are nevertheless important. Therefore, the robustness of human decision-making suggests that our brains use a more sophisticated sampling algorithm—such as importance sampling.

Importance sampling is a popular sampling algorithm in computer science and statistics (Hammersley & Handscomb, 1964; Geweke, 1989) with connections to both neural networks (Shi & Griffiths, 2009) and psychological process models (Shi, Griffiths, Feldman, & Sanborn, 2010). It estimates a function’s expected value with respect to a probability distribution p by sampling from an importance distribution q and correcting for the difference between p and q by down-weighting samples that are less likely under p than under q and up-weighting samples that are more likely under p than under q. Concretely, self-normalized importance sampling (Robert & Casella, 2009) draws s samples x1, ⋯, xs from a distribution q, weights the function’s value f (xj) at each point xj by the weight and then normalizes its estimate by the sum of the weights:

| (4) |

| (5) |

With finitely many samples, this estimate is generally biased. Following Zabaras (2010), we approximate its bias and variance by

| (6) |

| (7) |

We hypothesize that the brain uses a strategy similar to importance sampling to approximate the expected utility gain of taking action a and approximate the optimal decision by

| (8) |

| (9) |

Note that importance sampling is a family of algorithms: each importance distribution q yields a different estimator, and two estimators may recommend opposite decisions. This leads us to investigate which distribution q yields the best decisions.

Which distribution should we sample from?

Representative sampling is a special case of importance sampling in which the simulation distribution q is equal to the outcome probabilities p. Representative sampling fails when it neglects crucial eventualities. Neglecting some eventualities is necessary, but particular eventualities are more important than others. Intuitively, the importance of potential outcome oi is determined by |p(oi) · u(oi)| because neglecting oi amounts to dropping the addend p(oi) · u(oi) from the expected-utility integral (Equation 1). Thus, intuitively, the problem of representative sampling can be overcome by considering outcomes whose importance (|p(oi) · u(oi)|) is high and ignoring those whose importance is low.

Formally, the agent’s goal is to maximize the expected utility gain of a decision made from only s samples. The utility foregone by choosing a sub-optimal action can be upper-bounded by the error in a rational agent’s utility estimate. Therefore the agent should minimize the expected squared error of its estimate of the expected utility gain, which is the sum of its squared bias and variance, that is (Hastie et al., 2009). As the number of samples s increases, the estimate’s squared bias decays much faster (O(s−2)) than its variance (O(s−1)); see Equations 6–7. Therefore, as the number of samples s increases, minimizing the estimator’s variance becomes a good approximation to minimizing its expected squared error.

According to variational calculus the importance distribution

| (10) |

minimizes the variance (Equation 7) of the utility estimate in Equation 9 (Geweke, 1998; Zabaras, 2010; see Appendix A). This means that the optimal way to simulate outcomes in the service of estimating an action’s expected utility gain is to over-represent outcomes whose utility is much smaller or much larger than the action’s expected utility gain. Each outcome’s probability is weighted by how disappointing or elating it would be to a decision-maker anticipating to receive the gamble’s expected utility gain . But unlike in disappointment theory (Bell, 1985; Loomes & Sugden, 1984, 1986), the disappointment or elation is not added to the decision-maker’s utility function but increases the event’s subjective probability by prompting the decision-maker to simulate that event more frequently. Unlike in previous theories, this distortion was not introduced to describe human behavior but derived from first principles of resource-rational information processing: Importance sampling over-simulates extreme outcomes to minimize the mean-squared error of its estimate of the action’s expected utility gain. It tolerates the resulting bias because it is more important to shrink the estimate’s variance.

Unfortunately, importance sampling with qvar is intractable, because it presupposes the expected utility gain that importance sampling is supposed to approximate. However, the average utility of the outcomes of previous decisions made in a similar context could be used as a proxy for the expected utility gain . That quantity has been shown to be automatically estimated by model-free reinforcement learning in the midbrain (Schultz, Dayan, & Montague, 1997). Therefore, people should be able to sample from the approximate importance distribution

| (11) |

This distribution weights each outcome’s probability by the extremity of its utility. Thus, on average, extreme events will be simulated more often than equiprobable outcomes of moderate utility. We therefore refer to simulating potential outcomes by sampling from this distribution as utility-weighted sampling.

Utility-weighted sampling

Having derived the optimal way to simulate a small number of outcomes (Equation 11), we now turn to the question how those simulated outcomes should be used to make decisions under uncertainty. The general idea is to estimate each action’s expected utility gain from a small number of simulated outcomes, and then choose the action for which this estimate is highest.

If the simulated outcomes were drawn representatively from the outcome distribution p, then we could obtain an unbiased expected utility gain estimate by simply averaging their utilities (Equation 2). However, since the simulated outcomes were drawn from the importance distribution rather than p, we have to correct for the difference between these two distributions by computing a weighted average instead (Equation 5). Concretely, we have to weight each simulated outcome oj by the ratio of its probability under the outcome distribution p over its probability under the importance distribution from which it was sampled. Thus, the extreme outcomes that are overrepresented among the samples from will be down-weighted whereas the moderate outcomes that are underrepresented among the samples from will be up-weighted. Because , the weight wj of outcome oj is for some constant z. Since the weighted average in Equation 5 is divided by the sum of all weights, the normalization constant z cancels out. Hence, given samples o1, … ,os from the utility-weighted sampling distribution , the expected utility gain of an action or prospect can be estimated by

| (12) |

If no information is available a priori, then there is no reason to assume that the expected utility gain of a prospect whose outcomes may be positive or negative should be positive, or that it should be negative. Therefore, in these situations, the most principled guess an agent can make for the expected utility gain in Equation 10 – before computing it – is . Thus, when the expected utility gain is not too far from zero, then the importance distribution qvar for estimating the expected utility gain of a single prospect can be efficiently approximated by

| (13) |

This approximation simplifies the UWS estimator of a prospect’s expected utility gain (Equation 12) into

| (14) |

where sign(x) is −1 for x < 0, 0 for x = 0, and +1 for x > 0.

This utility-weighted sampling mechanism succeeds where representative sampling failed. For Russian roulette, the probability that a sample drawn from the utility-weighted sampling distribution (Equation 13) considers the possibility of death (o2) is

| (15) |

Consequently, utility-weighted sampling requires only 1 rather than 51 samples to recommend the correct decision at least 99.99% of the time, because the first sample is almost always the most important potential outcome (i.e., death). In this case, the utility estimate defined in Equation 14 would be 1/|109|·−1 = −109 and its expected value for a single sample is also very close to −109. While this mechanism is biased to overestimate the risk of playing Russian roulette , that bias is beneficial because it makes it easier to arrive at the correct decision. Likewise, a single utility-weighted sample suffices to consider the potential disaster (Equation 3) at least 99.85% of the time, whereas even 700, 000 representative samples would miss the disaster almost half of the time. Thus, utility-weighted sampling would allow people to make good decisions even under extreme time pressure. This suggests that to achieve the optimal bias-variance tradeoff (Hastie et al., 2009) the sampling distribution has to be biased towards extreme outcomes. This bias reduces the variance of the utility estimate enough to enable better decisions than representative sampling whose expected utility gain estimate is unbiased but has high variance.

To apply the utility-weighted sampling model to decisions people face in life and experiments, we have to specify the utility u(o) of the outcomes o. To do so, we interpret an outcome’s utility as the subjective value that the decision-maker’s brain assigns to it in the choice context. Concretely, we follow the proposal of Summerfield and Tsetsos (2015) that the brain represents value in an efficient neural code. This proposal is based on psychophysical and neural data (Louie, Grattan, & Glimcher, 2011; Louie, Khaw, & Glimcher, 2013; Mullett & Tunney, 2013) and fits into our resource-rational framework: The brain’s representational bandwidth is finite, because the possible range of neural firing rates is limited. Efficient coding makes rational use of the brain’s finite representational bandwidth by adapting the neural code to the range of values that have to be represented in a given context. This implies rescaling the values of potential outcomes such that all of them lie within the representational bandwidth. If the representational bandwidth is 1 and the largest and the smallest possible values in the current context c are and respectively, then the utility of an outcome o should be represented by

| (16) |

where is neural noise that reflects uncertainty about the outcome’s value. Since it is the neural representation of value rather than value itself that drives choice, we interpret u(o) as the subjective utility of outcome o in context c. We will consistently use this formal definition of utility (Equation 16) in this and all following sections.

Our basic UWS model of how people estimate a prospect’s expected utility thus has only two parameters: the number of samples s and the unreliability of the decision-maker’s representation of utility.

Utility-weighted sampling in binary choices yields a simple heuristic

Having derived a resource-rational mechanism for estimating expected utilities, we now translate it into a decision strategy. Many real-world decisions and most laboratory tasks involve choosing between two actions a1 and a2 with uncertain outcomes and that depend on the unknown state of the world. Consider, for example, the choice between two lottery tickets: the first ticket offers a 1% chance to win $1000 at the expense of a 99% risk to lose $1 (O(1))∈{−1,1000}) and the second ticket offers a 10% chance to win $1000 at the expense of a 90% risk to lose $100 (O(2) ∈{−100, 1000}). According to expected utility theory, one should choose the first lottery (taking action a1) if and the second lottery (action a2) if This is equivalent to taking the first action if the expected utility difference is positive and the second action if it is negative. The latter approach can be approximated very efficiently by focusing computation on those outcomes for which the utilities of the two actions are very different and ignoring events for which they are (almost) the same. For instance, it would be of no use to simulate the event that both lotteries yield $1000 because it would not change the decision-maker’s estimate of the differential utility and thus have no impact on her decision. To make rational use of their finite resources, people should thus use utility-weighted sampling to estimate the expected value of the two actions’ differential utility ΔU = u(O(1) – u(O(2)) as efficiently as possible. This is accomplished by sampling pairs of outcomes from the bivariate importance distribution

| (17) |

integrating their differential utilities according to

| (18) |

and then choosing the first action if the estimated differential utility is positive, that is

| (19) |

Note that each simulation considers a pair of outcomes: one for the first alternative and one for the second alternative. This is especially plausible when the outcomes of both actions are determined by a common cause. For instance, the utilities of wearing a shirt versus a jacket on a hike are both primarily determined by the weather. Hence, reasoning about the weather naturally entails reasoning about the outcomes of both alternatives simultaneously and evaluating their differential utilities in each case (e.g. rain, sun, wind, etc.) instead of first estimating the utility of wearing a shirt and then starting all over again to estimate the utility of wearing a jacket.

Given that there is no a priori reason to expect the first option to be better or worse than the second option, is 0 and the equation simplifies to

| (20) |

This distribution captures the fact that the decision-maker should never simulate the possibility that both lotteries yield the same amount of money– no matter how large it is. It does not overweight extreme utilities per se, but rather pairs of outcomes whose utilities are very different. Its rationale is to focus on the outcomes that are most informative about which action is best. For instance, in the example above, our UWS model of binary choice overweights the unlikely event in which the first ticket wins $1000 and the second ticket loses $100. Plugging the optimal importance distribution (Equation 20) into the UWS estimate for the expected differential utility yields an intuitive heuristic for choosing between two options. Formally, the optimal importance sampling estimator for the expected value of the differential utility is

| (21) |

where sign(x) is +1 for positive x and −1 for negative x. If the heightened availability of extreme events roughly corresponded to the utility-weighted sampling distribution (Equation 20), then the decision rule in Equation 21 could be realized by the following simple and psychologically plausible heuristic for choosing between two actions:

Imagine a few possible events (e.g., 1. Ticket 1 wins and ticket 2 loses. 2. Ticket 2 wins and ticket 1 loses. 3. Ticket 1 winning and ticket 2 losing comes to mind again. 4. Both tickets lose.).

For each imagined scenario, evaluate which action would fare better (1. ticket 1, 2. ticket 2, 3. ticket 1, 4. ticket 1).

Count how often the first action fared better than the second one (3 out of 4 times).

If the first action fared better more often than the second action, then choose the first action, else choose the second action (Get ticket 1!).

As a quantitative example, consider how UWS would choose between a ticket with a 10% chance of winning $99 and a 90% chance of losing $1 versus winning $1 for sure. If, the frequency with which events come to mind reflects utility-weighted sampling, then people could simply tally whether winning came to mind more often than losing. According to UWS, winning should came to mind about 86% of the time whereas losing should come to mind only about 14% of the time (the derivation of these simulation frequencies is provided in Appendix B). Hence, if the decision-maker imagined the outcome of choosing the gamble twice, there would be a 71.4% chance that winning came to mind twice, a 26.2% chance that winning and losing each came to mind once, and an only 2.4% chance of imagining losing twice. In the first case, the heuristic would always choose the gamble, in the second case it would choose it half of the time, and in the last case it would always decline the gamble. Hence, simply tallying which option (gambling vs. playing it safe) the imagined outcomes favored more frequently (and breaking ties at random) would be sufficient to make the correct decision 84% of the time despite having imagined the outcome only twice. Appendix B provides a complete description of this worked example, and Appendix C applies UWS to the general case of choosing between a gamble and its expected value.

The overweighting of outcomes that strongly favor one action over another in UWS is similar to the effect of anticipated regret in regret theory (Loomes & Sugden, 1982), but in UWS extremity changes the frequency with which an event is simulated and does not affect its utility. Magnifying the subjective probabilities of extreme events makes UWS more similar to salience theory (Bordalo, Gennaioli, & Shleifer, 2012) according to which pairs of payoffs that are very different receive more attention than pairs of payoffs that are similar. Yet, while salience theory provides a descriptive account of binary choice frequencies in decisions from description, UWS additionally provides a resource-rational mechanistic account of decisions from experience, memory recall, and frequency judgments.

Summary and outlook

In summary, our analysis suggested that the rational use of finite cognitive resources implies that extreme events should be overrepresented in decision-making under uncertainty. Utility-weighted sampling is a rational process model that formalizes this prediction. This biased mechanism leads to better decisions than its unbiased alternative (i.e. representative sampling). Utility-weighted sampling thereby enables robust decisions under time constraints that prohibit the careful consideration of many possible outcomes.

We have derived two versions of utility-weighted sampling: The first version estimates the expected utility gain of a single action. The second version chooses between two actions. Although both mechanisms overweight extreme events their notions of extremity are different. The UWS mechanism for estimating the expected utility gain of a single action overweights individual outcomes with extreme utilities. By contrast, the UWS mechanism for choosing between two actions overweights pairs of outcomes whose utilities are very different. In the remainder of this article, we will use the first mechanism to simulate frequency judgment, pricing, and decisions from experience and the second mechanism to simulate binary decisions from description. Despite this difference, we can interpret the first mechanism as a special case of the second one, because its importance distribution (Equation 11) compares the utility of the prospect’s outcomes against the average utility of alternative actions. Hence, UWS always overweights events that entail a large difference between the utility of the considered action and some alternative. The frequency with which a state has been experienced or its stated probability also influence how often it will be sampled. Thus, impossible and highly improbable states are generally unlikely to be sampled. However, states with high differential utility are sampled more frequently than is warranted by how often they have been experienced or their stated probability. This increases the probability that improbable states with extreme differential utility will be considered. We support the proposed mechanism by showing that it can capture people’s memory biases for extreme events, the overestimation of the frequency of extreme events, biases in decisions from description, and biases in decisions from experience.

Biases in frequency judgment confirm predictions of UWS

If people remembered the past as if they were sampling from the UWS distribution (Equation 11), they would recall their best experience and their worst experience much more frequently than an unremarkable one (cf. Madan et al., 2014). If people relied on such a biased memory system to estimate frequencies and assess probabilities, then their estimate of the frequency fk = p(ok) of the event ok would be

| (22) |

where is the utility-weighted sampling distribution. Since over-represents each event ok proportionally to its extremity , that is , we predict that people’s relative over-estimation is a monotonically increasing function of the event’s extremity , Formally, the bias (Equation 6) of utility-weighted probability estimation (Equation 22) implies that the relative amount by which people overestimate an event’s frequency (i.e., ) should increase with the event’s extremity , according to

| (23) |

where c is an upper bound on people’s relative overestimation. This predicts that people should overestimate the frequency of an event more the more extreme it is regardless of its frequency. In this section, we test this prediction against people’s judgments: we first report an experiment suggesting that frequency overestimation increases with perceived extremity, and then we show that UWS can capture the finding that overestimation occurs regardless of the event’s frequency (Madan et al., 2014).

Frequency overestimation increases with perceived extremity

Lichtenstein et al. (1978) and Pachur, Hertwig, and Steinmann (2012) found that people’s estimates of the frequencies of lethal events are strongly correlated with how many instances of each event they can recall. Furthermore, Lichtenstein et al. (1978) also found that overestimation was positively correlated with the number of lives lost in a single instance of each event, the likelihood that an occurrence of the event would be lethal, and the amount of media coverage it would typically attract. We hypothesize that extremity-weighted memory encoding contributed to these effects. If this were true, then overestimation should increase with perceived extremity. Here, we test this prediction of UWS in a new experiment that measures perceived extremity and correlates it with the biases in people’s frequency estimates.

Methods

We recruited 100 participants on Amazon Mechanical Turk. Participants received a baseline payment of $1.25 for about 30 minutes of work. Participants were asked to estimate how many American adults had experienced each of 39 events in 2015 as accurately as possible and accurate frequency estimation was incentivized by a performance dependent bonus of up to $2. In addition, participants judged each event’s valence (good or bad) and extremity (0: neutral – 100: extreme). The 39 events comprised 30 stressful life events from Hobson et al. (1998), four lethal events (suicide, homicide, lethal accidents, and dying from disease/old age), three rather mundane events (going to the movies, headache, and food-poisoning), and two attention-checks. As a reference, participants were told the total number of American adults and how many of them retire each year.

To assess overestimation we compared our participants’ estimates to the true frequencies of the events according to official statistics.2 The complete experiment can be inspected online.3 Out of 100 participants 22 failed one or more attention checks (number of Americans elected president, number of Americans who slept between 2h and 10h at least once) and were therefore excluded.

Results and Discussion

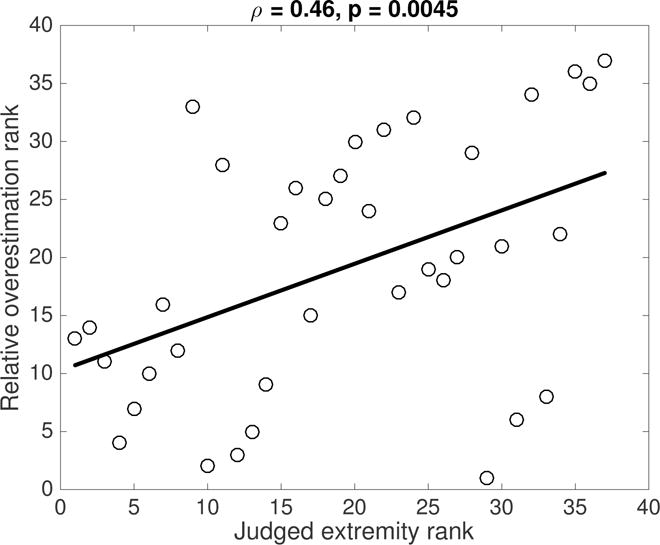

A significant rank correlation4 between the average extremity judgments of the 37 events and average relative overestimation confirmed our model’s prediction (Spearman’s ρ = 0.46, p = .0045, see Figure 1), and we observed the same effect at the level of individual judgments (Spearman’s ρ = 0.14, p < 10−12). The frequencies of the five most extreme events, that is murder (93.3%), suicide (92.6% extreme), dying in an accident (90% extreme), the death of one’s partner (86% extreme), and suffering a major injury or serious illness (85% extreme) were overestimated by a factor of 159 (p = 0.0001), 9 (p = 0.0026), 35 (p = 0.0035), 1.01 (p = 0.03), and −0.22 (p = 0.25) respectively. By contrast, the prevalences of the five least extreme events, that is headache (20% extreme), change in work responsibilities (21% extreme), getting a traffic ticket (26% extreme), moving flat (26% extreme), and career change (32% extreme) were underestimated by 4% (p = 0.42), 1% (p = 0.95), 10% (p = 0.52), 52% (p < 0.0001), and 24% (p = 0.0211) respectively. Like Rothman et al. (1996), we found that people overestimate the frequency of suicide (overestimated by 927%) more heavily than the frequency of divorce (overestimated by 27%). According to our theory, this is because suicide is perceived as more extreme than divorce (92.6% extreme vs. 59% extreme).

Figure 1.

Relative overestimation increases with perceived extremity . Each circle represents one event’s average ratings.

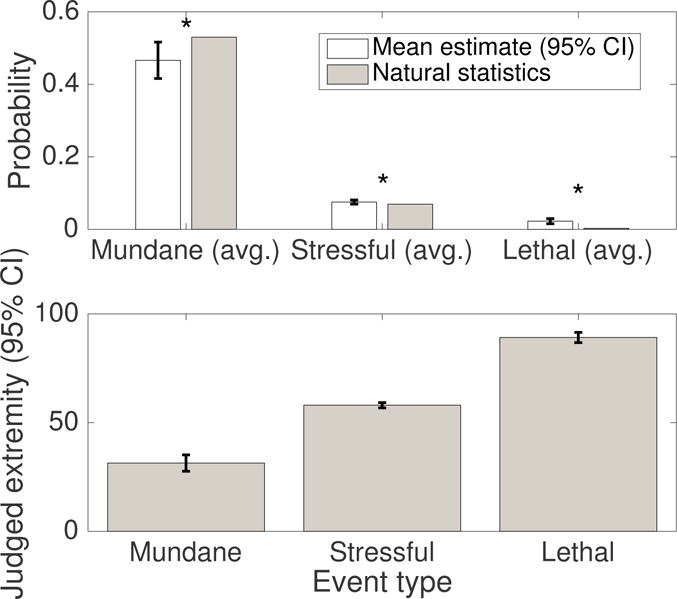

Furthermore, we found that the effect of extremity on overestimation also holds across the three categories the events were drawn from (see Figure 2): people significantly underestimated the frequency of mundane events (t(233)=−3.66,p=0.0003) while overestimating the frequency of stressful life events (t(2338) = 2.02, p = 0.0433) and lethal events (t(311) = 5.46, p < 10−7). Two-sample t-tests confirmed that relative overestimation was larger for stressful life events than for mundane events (t(2571) = 3.16, p = 0.0016) and even larger for lethal events t(544) = 12.70, p < 10−15). Figure 2 illustrates that overestimation and perceived extremity increased together.

Figure 2.

Judged frequency and extremity by event type.

While people’s judgments were biased for the events studied here, there are many quantities, such as the length of poems, for which people’s predictions are unbiased (Griffiths & Tenenbaum, 2006). This is consistent with UWS because unlike monetary gains and losses they impart no (dis)utility on their observer. For instance, hearing that a poem is 8 lines long carries virtually the same utility as hearing that another poem is 25 lines long. Hence, for such quantities, UWL would predict effectively unbiased memory encoding, recall, and prediction. Our theory’s ability to differentiate situations where human judgment is biased from situations where it is unbiased speaks to its validity.

In conclusion, the experiment confirmed our theory’s prediction that an event’s extremity increase the relative overestimation of its frequency. However, additional experiments are required to disentangle the effects of extremity and low probability, because these two variables were anti-correlated (ρ(36) = −0.67, p < 0.0001). To address this problem, we examined our model’s predictions using two published studies that kept frequency constant across events (Madan et al., 2014).

UWS captures that extreme events are overestimated regardless of frequency

The results reported above supported the hypothesis that people overestimate the frequency of extreme events, but most extreme events in that experiment were also rare. Therefore our findings could also be explained by postulating that people overestimate extreme events only because they are rare (Hertwig, Pachur, & Kurzenhäuser, 2005). This possibility is supported by empirical evidence for regression to the mean effects in frequency estimation (Attneave, 1953; Lichtenstein et al., 1978; Hertwig et al., 2005; Zhang & Maloney, 2012). Yet, extremity per se also contributes to overestimation: Madan et al. (2014) found that people overestimate the frequency of an extreme event relative to a non-extreme event even when both were equally frequent. The hypothesis that people overestimate the frequency of extreme events because those events are rare cannot account for this finding, but utility-weighted sampling can. To demonstrate this, we simulated the experiments by Madan et al. (2014) using utility-weighted sampling.

In the first experiment by Madan et al. (2014) participants repeatedly chose between two doors. Each door probabilistically generated one of two outcomes, and different doors were available on different trials. There were a total of four doors generating a sure gain of +20 points, a sure loss of −20 points, a risky gain offering a 50/50 chance of +40 or 0, and a risky loss offering a 50/50 chance of 0 or −40 points. In most trials participants either chose between the risky and the sure gain (gain trials) or between the risky and the sure loss (loss trials). After each choice, participants were shown the number of points earned, and they received no additional information about the options. After 6 blocks of 48 such choices participants were asked to estimate the probability with which each door generated each of the possible outcomes and to report the first outcome that came to their mind for each of the four doors. In their second experiment Madan et al. (2014) shifted all outcomes from Experiment 1 by +40 points.

We estimated the two parameters of the UWS model (i.e., the number of samples s and the noisiness σε of the utility function) from the choice frequencies reported by Madan et al. (2014) using the maximum-likelihood principle. While participants had to learn the outcome probabilities from experience, the model developed so far assumes known probabilities. We thus restricted our analysis to the last block of each experiment. For each experiment, our model defines a likelihood function over the number of risky choices in gain trials and the number of risky choices in loss trials. We maximized the product of these likelihood functions with respect to our model’s parameters using grid search over possible numbers of samples and global optimization with respect to σε. The resulting parameter estimates were s = 4 samples and σε = 0.05.

With these parameters, utility-weighted sampling correctly predicted that extreme outcomes come to mind first more often than the equally frequent moderate outcomes; see Table 1A. Next, we simulated people’s frequency estimates according to Equation 22. UWS correctly predicted that people overestimate the frequency of extreme outcomes relative to the equally frequent moderate outcome; see Table 1B. In addition, UWS captured that participants were more risk-seeking for gains than for losses (see Table 2), and a later section investigates this phenomenon in more detail.

Table 1.

UWS simulation of people’s memory recall (A) and frequency estimates (B) after the Experiments by Madan et al. (2014).

| A | ||

|---|---|---|

| Comes to mind first: | Extreme Gain vs. Neutral | Extreme Loss vs. Neutral |

| Experiment 1 | 64.5% vs. 35.5% | 71% vs. 29% |

| Experiment 2 | 70.0% vs. 30% | 72.6% vs. 27.4% |

| B | ||

| Estimated Frequency of … | Extreme Gain vs. Neutral | Extreme Loss vs. Neutral |

|

| ||

| Experiment 1 | 83.0% vs. 17.0% | 87.5% vs. 12.5% |

| Experiment 2 | 87.5% vs. 12.5% | 90.0% vs. 10.0% |

Table 2.

UWS captures people’s risk preferences in the Experiments by Madan et al. (2014).

| Risky Choices in | Gain Trials | Loss Trials |

|---|---|---|

| Experiment 1: | UWS: 54% People: 45% | UWS: 36%, People: 35% |

| Experiment 2: | UWS: 60%, People: 55% | UWS: 31%, People: 14% |

Summary and Discussion

The findings presented in this section provide strong support for our hypothesis that utility-weighting is the reason why people over-represent extreme events: First, Experiment 1 showed that there is a significant correlation between an event’s utility and the degree to which people overestimate its frequency. Second, the data from Madan et al. (2014) rule out the major alternative explanation that people overestimate the frequency of extreme events only because they are rare and also demonstrate that the overestimation is mediated by a memory bias for events with extreme utility. Furthermore, we found that the adaptive bias predicted by our theory exists not only in decision-making but also in frequency estimation and memory.

A parsimonious explanation for these three phenomena could be that the over-representation of extreme events results from a known bias in learning: emotional salience enhances memory formation (Cruciani et al., 2011). While overestimation has been previously explained by high “availability” of salient memories (Tversky & Kahneman, 1973), our theory specifies what exactly the availability of events should correspond to – namely their importance distribution (Equation 13) – and why this is useful. Our empirical findings were consistent with utility-weighted sampling but inconsistent with the hypothesis that the bias in frequency estimation is merely a reflection of the regression to the mean effect (Hertwig et al., 2005). While alternative accounts of why people overestimate the frequency of extreme events, such as selective media coverage (Lichtenstein & Slovic, 1971), can explain the overestimation of certain lethal events, they cannot account for the data of Madan et al. (2014). Thus at least part of the overestimation of extreme events appears to be due to utility-weighted sampling. Hence, an event’s extremity may sway people’s decisions by increasing their propensity to remember it, and this is clearly distinct from extremity’s potential effects on the subjective utility of anticipated outcomes (Loomes & Sugden, 1982; Bell, 1985; Loomes & Sugden, 1984, 1986).

Our model’s predictions are qualitatively consistent with the data of Madan et al. (2014) but often more extreme. This difference might result from the idealistic assumption that there is no forgetting. We revisit this issue with a more realistic learning model later in the paper.

Biases in decisions from description

According to decision theory, an event’s probability determines its weight in decision-making under uncertainty. Therefore, the biased probability estimates induced by utility-weighted sampling suggest that people should overweight extreme events in decisions under uncertainty. We will test this prediction in the domain of decisions from experience. Since this will require a model of learning, we model decisions from description as an intermediate step towards building a model of decisions from experience.

In the decisions from description paradigm participants choose between gambles that are described by their payoffs and outcome probabilities (Allais, 1953; Kahneman & Tversky, 1979). Typically participants make binary choices between pairs of gambles or between a monetary gamble and a sure payoff. While people could, in principle, make these decisions by computing and comparing the gamble’s expected values, ample empirical evidence demonstrates that they do not. Instead, people might reuse their strategies for everyday decisions. Everyday decisions are usually based on memories of past outcomes in similar situations. Hence, if people reused their natural decision strategies, then their decisions from description should be affected by the availability biases that have been observed in memory recall and frequency judgments. Our section on utility-weighted learning in decision from experience provides a precise, mechanistic account of how these biases arise from biased memory encoding. Here, we assume that similar mechanisms are at play in decisions from description. For instance, it is conceivable that the high salience of large differential payoffs in decisions from description (Bordalo et al., 2012) attracts a disproportionate amount of people’s attention, making them more memorable, and increasing the frequency with which they will be considered. We think that such mechanisms could roughly approximate the utility-weighting prescribed by our model, at least for simple gambles whose outcomes are displayed appropriately.

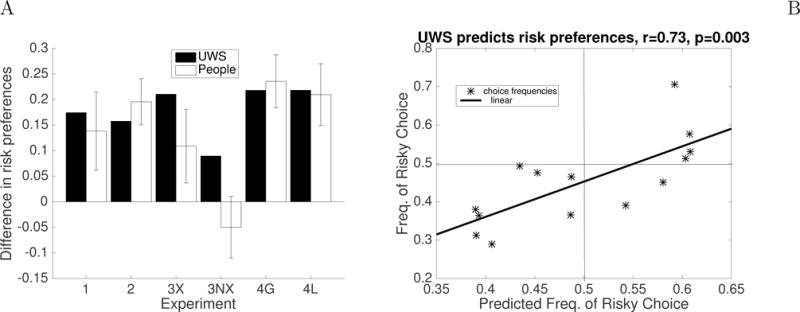

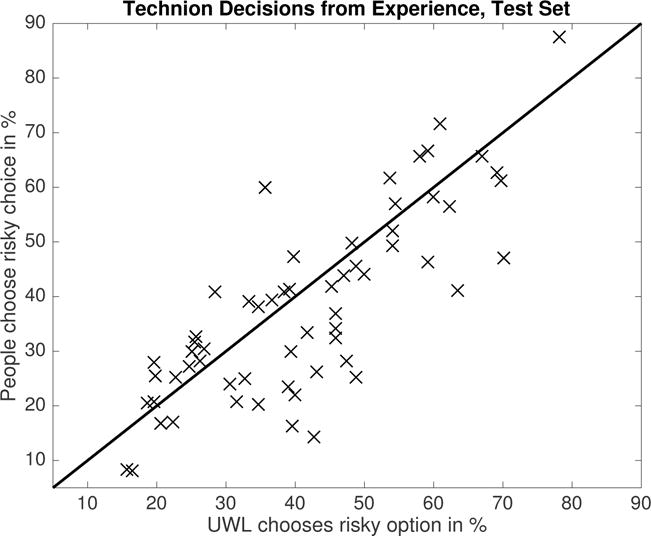

In this section, we therefore apply UWS to decisions from description, validate the resulting model on the data from the Technion choice prediction competition (Erev et al., 2010), and demonstrate that it can capture three classic violations of expected utility theory.

Validation on decisions from description

We validated the utility-weighted sampling model of binary choices (Equations 18–21) with the stochastic normalized utility function defined in Equation 16 against people’s decisions from description in the Technion choice prediction tournament (Erev et al., 2010). There are many factors that influence people’s responses that are outside the scope of our model. These include accidental button presses, mind-wandering, misperception, and the occasional use of additional decision strategies that might be well adapted to the specific problems to which they are applied (Lieder & Griffiths, 2015, under review). We therefore extended UWS to allow for an unknown proportion of choices (prandom) that are determined other factors. We model the net effect of those choices as choosing either option with a probability of 0.50.

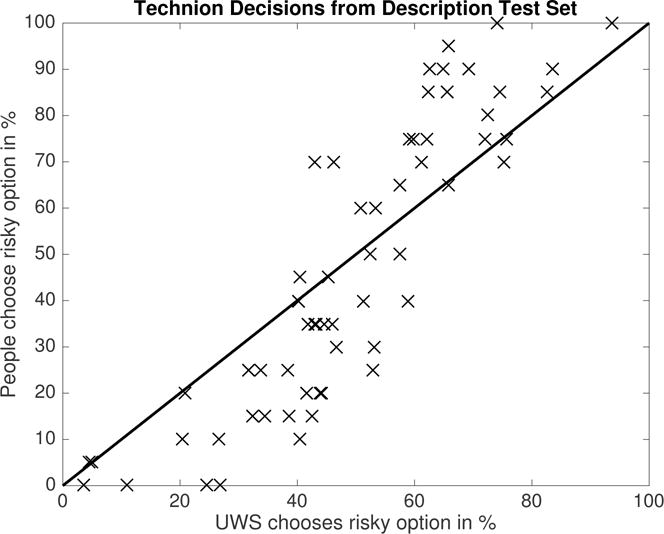

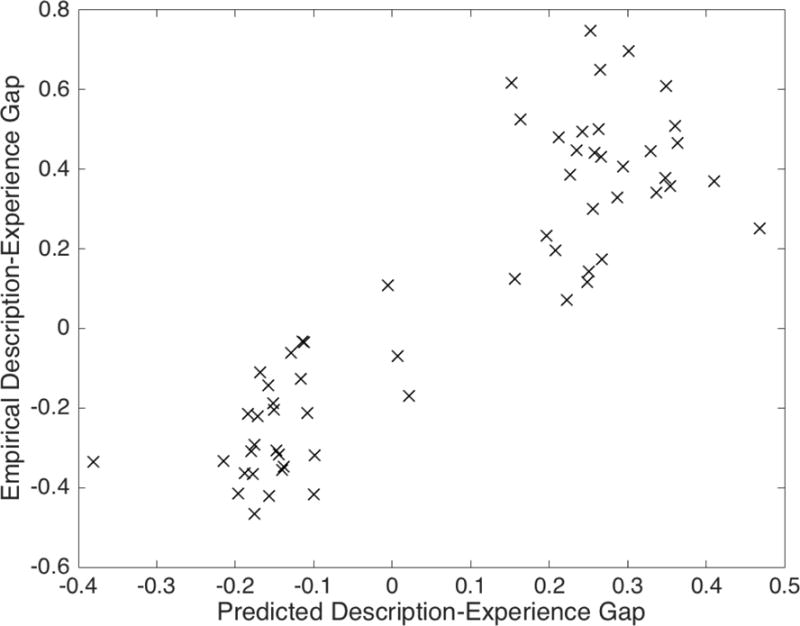

We fitted the number of samples s, the noisiness σε of the utility function, and the percentage of trials in which people choose at random to the training data of the Technion choice prediction competition. The maximum likelihood estimates of these model parameters were s = 10 samples, σε = 0.1703, and prandom = 0.07. We then used these parameter estimates to predict people’s choices in the decision problems of the test set of the Technion choice prediction competition. Figure 3 shows our model’s predictions and compares them to people’s choice frequencies. On average across the 60 problems, people chose the risky option about 46.75 ± 3.98% of the time and the UWS model chose the risky option about 48.92 ± 2.56% of the time. This difference was not statistically significant (t(59) = −1.03, p = 0.31) suggesting that the predictions of UWS were unbiased. While there was no bias—on average—the predictions of UWS were regressed towards 50/50 compared to people’s choice frequencies: On problems where people were risk-seeking UWS chose the risky option less often than people (66.11% vs. 79.20%, t(24) = −6.48, p < .0001). But on problems where people were risk-averse, UWS chose the risky option more often than people (35.54% vs. 21.97%, p < .0001).

Figure 3.

Predictions of UWS on the test set of the Technion choice prediction tournament for decisions from description according to the parameters estimated from the training set. Each data point reports the frequency with which UWS (horizontal axis) versus people (vertical axis) chose the risky option in one of the 60 decision problems of the Technion competition, and the solid line is the identity line.

Our model predicted people’s choice frequencies more accurately than cumulative prospect theory (CPT; Tversky, & Kahneman, 1992) or the priority heuristic (Brandstätter, Gigerenzer, & Hertwig, 2006): Its mean squared error (MSDUWS = 0.0266) was significantly lower than for cumulative prospect theory (MSDCPT = 0.0837, t(59) = − 5.4, p < .001) or the priority heuristic (MSDpriority = 0.1437, t(59) = −4.9,p < .001). Furthermore, the predicted risk preference agreed with people’s risk preferences in 87% of the trials (CPT: 93%, priority heuristic: 81%) and the predicted choice frequencies were highly correlated with people’s choice frequencies (rUWS(59) = 0.88, p < 10−15 versus rCPT = 0.86 and rpriority = 0.65). Our model’s predictive accuracy was similar to those of the best existing models, namely stochastic cumulative prospect theory with normalization (r = 0.92, MSD = 0.0116) and Haruvy’s seven parameter logistic regression model that won the competition (r = 0.92, MSD = 0.0126), although the differences were still statistically significant (t(59) = 3.5, p < .001 and t(59) = 3.97, p < .001). In addition to performing about as well as the best existing models our model is distinctly principled: UWS is the only accurate mathematical process model that is derived from first principles. All alternative models that perform similarly well were tailored to capture known empirical phenomena or fail to specify the mechanisms of decision-making.

Having estimated our model’s parameters and validated it, we now proceed to demonstrate that it can explain three paradoxes in risky choice, namely the Allais paradox (Allais, 1953), the fourfold pattern of risk preferences (Tversky & Kahneman, 1992), and preference reversals (Lichtenstein & Slovic, 1971).

The Allais paradox

In the two lotteries L1(z) and L2(z) defined in Table 3 the chance of winning z dollars is exactly the same. Yet, when z = 2400 most people prefer lottery L2 over lottery L1, but when z = 0 the same people prefer L1 over L2. This inconsistency is known as the Allais paradox (Allais, 1953).

Table 3.

The Allais gambles: Participants choose between lottery L1 and lottery L2 for z = 2400 versus z = 0.

| (ol, pl) | (o2, p2) | (o3, p3) | |

|---|---|---|---|

| L1(z): | (z, 0.66) | (2500, 0.33) | (0, 0.01) |

| L2 (z): | (z, 0.66) | (2400, 0.34) |

We simulated people’s choices between both pairs of lotteries according to utility-weighted sampling with the parameters estimated from the Technion training set. To do so, we computed the probability p and utility difference ΔU for each possible pair of outcomes of the first lottery L1 and the second lottery L2. Since the outcomes of the two lotteries are statistically independent, the probability that the first lottery yields outcome O1 while the second lottery yields O2 is P(O1) · P(O2). To apply UWS to predict people’s choices between the two lotteries, we determined all possible values of the differential utility ΔU and their respective probabilities. For instance, when z = 0, then the possible differential utilities are 0, −u(2400), u(2500) − u(2400), and u(2500) (see Tables 3 and 4). In this case, ΔU is −u(2400) if the first or the third outcome is drawn for the first lottery and the second outcome is drawn for the second lottery. The probability of the first scenario is p1 · p2 = 0.66 · 0.34 and the probability of the second scenario is p3 · p2 = 0.01 · 0.34; hence the probability of ΔU = −u(2400) is 0.67 · 0.34. Next, we computed the simulation frequency which is proportional to p(ΔU) · |Δu|. For instance, in this example, and normalizing this probability distribution yields suggesting that this extreme eventuality would occupy half of the decision-maker’s mental simulations even though its probability is less than 23%. This corresponds to overweighting this event by a factor of 2.19. Table 4 presents these numbers for all differential utilities possible with z = 2400 or z = 0.

Table 4.

Utility-weighted sampling explains the Allais paradox.

| ΔU | p |

|

/p | ||

|---|---|---|---|---|---|

|

|

|||||

| 0 | 0.66 | 0 | 0 | ||

| z = 2400: | |||||

| u(2500) − u(2400) | 0.33 | 0.58 | 1.8 | ||

| −u(2400) | 0.01 | 0.42 | 42 | ||

| ΔU | p |

|

/p | ||

|

|

|||||

| 0 | 0.66 · 0.67 | 0 | 0 | ||

| z = 0 : | −u(2400) | 0.67 · 0.34 | 0.5 | 2.19 | |

| u(2500) −u(2400) | 0.33 · 0.34 | 0.01 | 0.08 | ||

| u(2500) | 0.33 · 0.66 | 0.49 | 2.26 | ||

Note: The agent’s simulation yields ΔU = Δ u with probability where p is Δ u’s objective probability.

Our simulations with UWS predicted people’s seemingly inconsistent preferences in the Allais paradox. For the first pair of lotteries (z = 2400), UWS preferred the second lottery to the first one, choosing L2 55.66% of the time and L1 only 44.34% of the time. But for the second pair of lotteries (z = 0), UWS choose the first lottery more often than the second one (50.38% vs. 49.62%). Table 4 shows how our theory explains why people’s preferences reverse when z changes from 2400 to 0: According to the importance distribution (Equation 13), people overweight the event for which the utility difference between the two gambles’ outcomes (O1 and O2) is largest (ΔU = u(O1) − u(O2)). Thus when z = 2400, the most over-weighted event is the possibility that gamble L1 yields o1 = 0 and gamble L2 yields o2 = 2400 (ΔU = −u(2400)); consequently the bias is negative and the first gamble appears inferior to the second ( which corresponds to $ − 75.54). But when z = 0, then L1 yielding o1 = 2500 and L2 yielding o2 = 0 (ΔU = +u(2500)) becomes the most over-weighted event making the first gamble appear superior which corresponds to $3.25). Our model’s predictions are qualitatively consistent with the empirical findings by Kahneman and Tversky (1979) but less extreme; this is primarily because fitting the model to the data from the Technion choice prediction Tournament led to large number of samples (s = 10) and the predicted availability biases decrease with the number of samples; for a smaller number of samples, the model predictions would have been closer to the empirical data.

The fourfold pattern of risk preferences

Framing outcomes as losses rather than gains can reverse people’s risk preferences (Tversky & Kahneman, 1992): In the domain of gains people prefer a lottery (o dollars with probability p) to its expected value (risk seeking) when p < .5, but when p > .5 they prefer the expected value (risk aversion). In contrast, in the domain of losses, people are risk averse for p < .5 but risk seeking for p > .5. This phenomenon is known as the fourfold pattern of risk preferences. Formally, decision-makers are risk seeking when they prefer a gamble (p, o; 0) which yields $o with probability p and nothing otherwise to its expected value p · o dollars, and risk averse if they prefer receiving the expected value for sure to playing the gamble. We therefore determined the risk preferences predicted by utility-weighted sampling by simulating choices between such gambles and their expected values. Concretely, we used the gambles (p, o; 0) for 0 < p < 1 and −1000 < o < 1000 and applied UWS with the parameters estimated from the Technion choice prediction tournament. Appendix C illustrates how utility-weighted sampling makes these decisions and how this leads to inconsistent risk preferences.

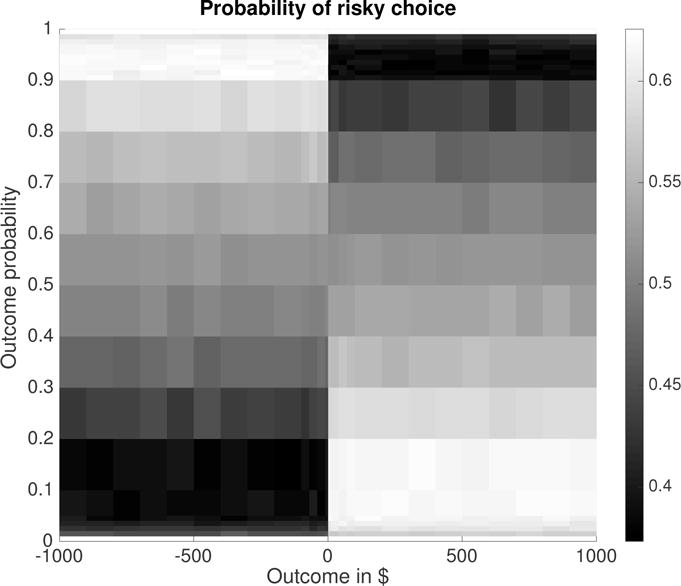

We found that utility-weighted sampling predicts the fourfold pattern of risk preferences (Tversky & Kahneman, 1992); see Figure 4. To understand how utility-weighted sampling explains this phenomenon, remember that it estimates the expected value of the differential utility ΔU by sampling from the importance distribution . The differential utility of choosing a gamble that yields o with probability p over its expected value p · o is

| (24) |

Utility-weighted sampling thus overweights the gain/loss o of the lottery if p is small, because then |u(o) − u(p · o)| > |u(p · o)|. Conversely, it underweights the gain/loss o if p is large, because then |u(o) − u(p · o)| < |u(p · o)|. Concretely, when choosing between a two-outcome gamble and its expected value, UWS simulates the outcome of the gamble as if winning and losing were equally probable even when the probability of winning is much larger or much smaller than 0.5 (see Appendix C). On top of this over-simulation of the more extreme outcome, the noise term of the utility function (Equation 16) stochastically flips the sign of the differential utilities of some of the simulated outcomes. When the probability of winning is close to 0 or 1, then this happens almost exclusively for the outcome whose differential utility is closer to zero. Combined with the over-simulation of the more extreme outcome this asymmetry renders the decision-maker’s bias positive (risk-seeking) for improbable gains and probable losses but negative (risk-aversion) for probable gains and improbable losses (see Figure 4). Appendix C elaborates this explanation with detailed worked examples.

Figure 4.

Utility-weighted sampling predicts the fourfold pattern of risk preferences. The color scale indicates the probability people make the risky choice as a function of the probability and dollar value of the outcome.

In everyday life the fourfold pattern of risk preferences manifests itself in the apparent paradox that people who are so risk-averse that they buy insurance can also be so risk-seeking that they play the lottery. Our simulations resolved this apparent contradiction: First, we simulated the decision whether or not to play the Powerball lottery.5 The jackpot is at least $40 million, but the odds of winning it are less than 1:175 million. In brief, people pay $2 to play a gamble whose expected value is only $1. We simulated how much people would be willing to pay for a ticket of the Powerball lottery according to UWS. We found that UWS overestimates the value of a lottery ticket by more than a factor of 2 more than 36% of the time. Thus, a person who evaluates lottery tickets often should consider them underpriced about one third of the time. Applied to choice, UWS predicts that people buy lottery tickets almost every second time they consider it (PUWS(buy lottery ticket) = 0.497), because they over-represent the possibility of winning big. Next, we applied UWS to predict how much the same people would be willing to pay for insurance. Our simulation assumed that the total insured loss follows the heavy-tailed power-law distribution of debits (N. Stewart et al., 2006) over the range from $1 to $1 000 000. To simplify the application of UWS to this continuous distribution, we set the reward expectancy ū to zero and assumed that the simulation distribution is not affected by noise. We determined the certainty equivalents of the utility-weighted sampling estimates of the utility of an insurance against a loss drawn from this distribution. To do so, we applied the inverse of the utility function to the UWS estimates of the expected disutility of the hazard. We found that UWS overestimates the expected hazard about 80% of time, and it overestimates it by a factor of at least 2 in 64% of all cases. Therefore, most people should be motivated to buy insurance even when they just bought a lottery ticket. The prediction of utility-weighted sampling for whether people actually decide to buy an overpriced insurance policy are more moderate, because the high price of insurance makes the possibility of paying nothing and losing nothing more salient. Nevertheless, UWS predicts that people would be willing to buy insurance for 130% of its expected value about 37.3% of the time. Thus 90% of customers would buy 130% overpriced insurance after considering at most 5 offers.

Utility-weighted sampling thereby resolves the paradox that people who are so risk-seeking that they buy lottery tickets can also be so risk-averse as to buy insurance by suggesting that people overweight extreme events regardless of whether they are gains (as in the case of lotteries) or losses (as in the case of insurance).

Preference reversals

When people first price a risky gamble and a safe gamble with similar expected value and then choose between them, their preferences are inconsistent almost 50% of the time: most people price the risky gamble higher than the safe one, but many of them nevertheless choose the safer one (Lichtenstein & Slovic, 1971). This inconsistency does not result from mere randomness, as preference reversals in the opposite direction are rare.

To evaluate whether our theory can capture this inconsistency, we simulated the pricing of a safe gamble offering an 80% chance of winning $1 and a risky gamble offering a 40% chance of winning $2, and the subsequent choice between them according to UWS with the parameters estimated from the Technion choice prediction tournament for decisions from description. Since the largest and the smallest possible outcome are omax = 2 and omin = 0 respectively, the utility function from Equation 16 becomes with .

We assumed that people price a gamble by estimating its expected utility gain according to Equation 14 and then convert the resulting utility estimate into its monetary equivalent. Plugging the payoffs and outcome probabilities of the safe gamble in to Equation 14 reveals that, for the safe gamble, winning (o = 1) and losing (o = 0) would be simulated with the frequencies

| (25) |

| (26) |

respectively. For the risky gamble the possibility of winning is over-represented more:

| (27) |

| (28) |

Each simulated decision-maker sampled 10 possible outcomes. We then applied Equation 14 to translate the 10 samples from qsafe into the UWS estimate of the expected utility gain of playing the safe gamble and the 10 samples from qrisky into the UWS estimate of the expected utility gain of playing the risky gamble . Finally, we converted each estimated utility gain into the equivalent monetary amount m by inverting the utility function u without adding any noise, that is

| (29) |

| (30) |

Each value of mrisky corresponds to one participant’s judgment of the fair price for the risky gamble and likewise for the values of msafe.

To simulate choice, we applied the UWS model for binary decisions from description (Equations 20–21) with the parameters estimated from the Technion choice prediction tournament. To choose between the risky versus the safe gamble, this model estimates the expected differential utility directly instead of estimating the gambles’ expected utilities and separately. Consequently, it overweights pairs of outcomes whose utilities are very different instead of individual outcomes whose utilities are far from 0. Concretely, it simulates pairs of outcomes (i.e., one outcome for the risky gamble and one outcome for the safe gamble) according to the distribution qΔ defined in Equation 20, which weights their joint probability by the absolute value of their difference in utility. The differential utilities Δu1,…, Δu10 of the simulated outcome pairs are then translated into an estimate of the difference between the expected utility of the risky gamble versus the safe gamble according to Equation 21. If the resulting decision variable is positive, the simulated decision-maker chooses the risky gamble, if it is negative they choose the safe gamble, and if it is 0 then they choose randomly.

Since the utilities u(o) that drive the overweighting of extreme outcomes are stochastic (Equation 16), we conducted 100 000 simulations to average over a large number of utility-weighted sampling distributions q. Each simulation generated one price for the safe gamble, one price for the risky gamble, and one simulated choice between the two. At the beginning of each simulation, the utilities u(0), u(1), and u(2) were drawn from for each possible outcome o ∈ {0,1, 2} and plugged into Equations 25–28 to yield the distributions the decision-maker would sample from in that simulation. Within each simulation, the sampled outcomes were evaluated by independent applications of the noisy utility function (Equation 16). Hence, even when the same outcome was sampled multiple times in a simulation, its subjective utility could be different every time.

UWS predicted that 42% of participants should reverse their risk preference from pricing to choice. In 66% of these reversals the model prices the risky gamble higher but choose the safe one. As a result, utility-weighted sampling typically prices the risky gamble higher than the safe gamble (67% of the time), but it choses the safe gamble almost every second time (49% of the time). The rational decision mechanism of utility-weighted sampling weights events differently depending on whether it is tasked to perform pricing versus choice. Given that its shift in attention is a rational adaption to the task, the inconsistency between people’s apparent risk preferences in pricing versus choice is consistent with resource-rationality.

While the laboratory experiments that demonstrated the effects simulated above can be criticized as artificial because their stakes were low or hypothetical, the overweighting of outcomes with extreme differential utility has also been observed in high-stakes, financial decisions whose outcomes do count (Post, Van den Assem, Baltussen, & Thaler, 2008), and UWS can capture those effects as well (see Section “Deal or No Deal: Overweighting of extreme events in real-life high-stakes economic decisions” of the Supplemental Online Material).

Summary

In this section, we have shown that utility-weighted sampling accurately predicts people’s decisions from description across a wide range of problems including those that elicit inconsistent risk preferences. Our utility-weighted sampling model of decisions from description rests on three assumptions: Its central assumption is that expected utilities are estimated by importance sampling. In addition, we assumed that binary choices from description are made by directly estimating the differential utility of choosing the first option over the second option. This assumption was important to predict the fourfold pattern of risk preferences, preference reversals, and the Allais paradox. Finally, we assumed that the mapping from payoffs to utilities is implemented by efficient coding. This assumption is not critical to the simulations reported here, but it will become important in our simulations of decisions from experience in the next section.

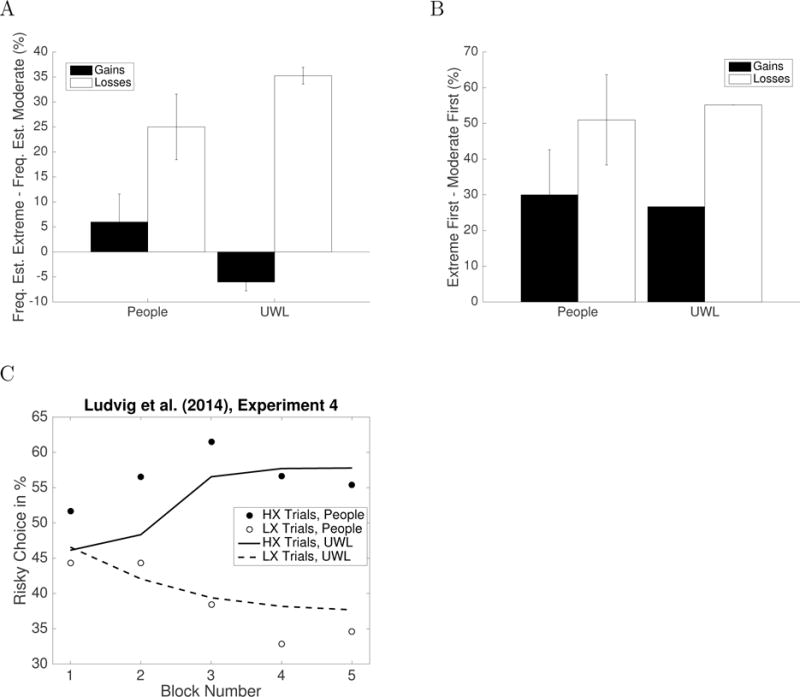

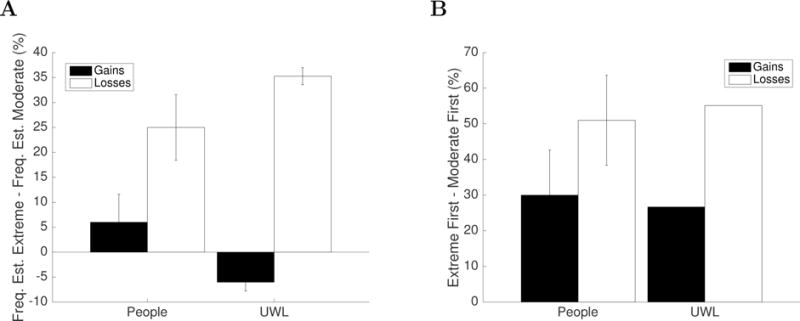

Overweighting of extreme events in decisions from experience

In decisions outside the laboratory we are rarely given a list of all possible outcomes and their respective probabilities. Instead, we have to estimate these probabilities from past experience. When people learn outcome probabilities from experience their risk preferences are systematically different than when the probabilities are described to them (Hertwig & Erev, 2009). For instance, people overweight rare outcomes in decisions from description but tend to underweight them in decisions from experience (Hertwig, Barron, Weber, & Erev, 2004).

A common paradigm for studying decisions from experience is repeated binary choices with feedback. In this paradigm, the outcomes and their probabilities are initially unknown and must be learned from experience. Madan et al. (2014) discovered an interesting memory bias in this paradigm: people remember extreme outcomes more often than moderate ones and overestimate their frequency. Ludvig et al. (2014) showed that people also overweight the same extreme outcomes in their decisions when their probability is . Above we showed that utility-weighted sampling can account for the memory biases discovered by Madan et al. (2014), and in this section we investigate whether utility-weighted sampling can also account for the corresponding biases in decisions from experience by simulating the experiments by Ludvig et al. (2014). Our analysis suggests that biased memory encoding serves to help people make future decisions more efficiently by making the most important desiderata come to mind first.

Ludvig et al. (2014) conducted a series of four experiments. In each of the four experiments people made a series of decisions from experience. For instance, Experiment 1 comprised 5 blocks with 48 choices each. There were a total of four options: a sure gain of +20 points, a sure loss of −20 points, a risky gain offering a 50/50 chance of +40 or 0, and a risky loss offering a 50/50 chance of 0 or −40 points. In most trials participants either chose between the risky and the sure gain (gain trials) or between the risky and the sure loss (loss trials). After each choice subjects were shown the number of points earned, and they received no additional information about the options. Experiments 2–4 used different outcomes but were otherwise similar. In Experiment 2 the absolute values of all outcomes of Experiment 1 were shifted by 5 points. In Experiment 3 the gain and loss trials were supplemented by extreme gain trials and extreme loss trials whose outcomes were double the outcomes in Experiment 1. Experiment 4 had a loss condition in which all outcomes were losses (4L) and a gain condition in which all outcomes were gains (4G). Both conditions comprised risky gambles in which only the high outcome was extreme (HX), gambles in which only the low outcome was extreme (LX), and gambles in which both outcomes were extreme (BX).

To simulate these experiments, we assumed that Ludvig et al.’s participants had learned the outcome probabilities in the first four blocks and modeled their choice frequencies in the final block of each experiment. We can therefore model each individual decision as the choice between two lotteries each of which is defined by the value of the high outcome ohigh, the probability phigh of receiving it, and the low outcome olow:

| (31) |

| (32) |

We model utility-weighted sampling as simulating s possible outcomes of each action a by sampling from the importance distribution defined in Equation 11:

| (33) |