Abstract

Background

Decisions on treatment are guided not only by the potential for benefit but also by the nature and severity of adverse drug reactions. However, some researchers have found numerous deficiencies in trial reports of adverse effects. We sought to confirm these findings by evaluating trials of drug therapy published in seven eminent medical journals in 1997.

Methods

Literature review to determine whether the definition, recording and reporting of adverse drug reactions in clinical trials were in accordance with published recommendations on structured reporting.

Results

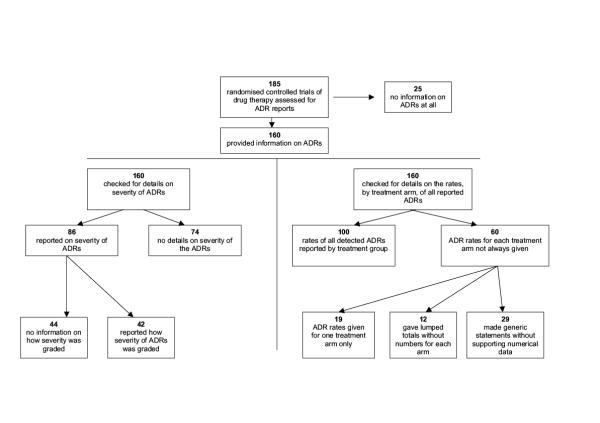

Of the 185 trials reviewed, 25 (14%) made no mention of adverse drug reactions. Data in a further 60 (32%) could not be fully evaluated, either because numbers were not given for each treatment arm (31 trials), or because a generic statement was made without full details (29 trials). When adverse drug reactions such as clinical events or patient symptoms were mentioned in the reports, details on how they had been recorded were given in only 14/95 (15%) and 18/104 (17%) trials respectively. Of the 86 trials that mentioned severity of adverse drug reactions, only 42 (49%) stated how severity had been defined. The median amount of space used for safety data in the Results and Discussion sections was 5.8%.

Conclusions

Trial reports often failed to provide details on how adverse drug reactions were defined or recorded. The absence of such methodological information makes comparative evaluation of adverse reaction rates potentially unreliable. Authors and journals should adopt recommendations on the structured reporting of adverse effects.

Background

Although drug safety information is of vital importance, evidence is now emerging that adverse drug reactions (ADRs) are often inadequately reported in randomised, controlled trials [1,2]. Lack of consistency in ADR reporting has important implications – it makes systematic reviews of ADR data extremely difficult and also hinders comparisons of ADR rates between trials. For example, the authors of a recent systematic review of beta-blockers in myocardial infarction were able to draw conclusions on the efficacy of treatment, but not safety, because "different definitions and reporting made comparisons between trials problematic" [3].

Two aspects of reporting are particularly important when comparing rates of ADRs in different trials [4]. Firstly, adverse event recording in trials may frequently include unfavourable outcomes that arise from disease progression or co-morbidity, and are unrelated to drug treatment. Trial reports of adverse events need to make it clear which ones are thought to be ADRs. Secondly, different methods of recording ADRs can lead to significantly different results. For example, a study of hypertensive patients by Olsen et al. showed that rates of ADRs varied depending on whether spontaneous reporting, general enquiry, or specific questioning had been used in detecting ADRs (rates of 16%, 24%, and 62% respectively) [5]. ADR data can be reliably interpreted only when there is information on how they were recorded.

The original CONSORT statement made no mention of adverse effects, and the recent revised statement recommends only that "all important adverse events or side effects [are reported] in each intervention group" [6]. More detailed recommendations on the structured reporting of ADRs were made by the SORT committee in 1994 [7]. Trials should:

• define what constituted adverse events and how they were monitored by the intervention group;

• present sufficient simple (unadjusted) summary data on primary outcome measures and important adverse effects for the reader to reproduce the result.

We carried out our study to determine if the reporting of ADRs in a wide selection of randomised controlled trials was in accordance with these recommendations.

Methods

Selection of trials

We used a sample of randomised controlled trials that had been published in seven general medical journals: The New England Journal of Medicine, The Journal of the American Medical Association, The Lancet, The British Medical Journal, Annals of Internal Medicine, Archives of Internal Medicine, and The American Journal of Medicine. We selected these journals because they are highly ranked in the Science Citation Index and provide a relatively broad coverage of general medical topics. The Cochrane Controlled Trials Register was used to identify all the randomised controlled trials from these journals in the year 1997 [8]. As our primary objective was to evaluate the reporting of drug ADRs, we reviewed only those trials in which at least one treatment arm involved a pharmacological intervention. We excluded papers in which the main bulk of the data had been published elsewhere, e.g. post hoc or subgroup analyses of trials, or papers that stated that adverse effects data were or would be available in a separate publication. A complete list of the trials evaluated is available from the authors.

Two reviewers assessed all trials independently, using a checklist, and any discrepancies were resolved through discussion.

Evaluation

The review checklist was based largely on the recommendations of SORT; our main criteria were that:

1. ADRs should be clearly and appropriately defined;

2. there should be sufficient detail on how the reported ADRs were elicited and recorded (including definitions of severity) so that the reader can fully interpret the result or reproduce the study if necessary;

3. frequencies of adverse effects should be reported in a simple, coherent manner.

We also measured the amount of space (area in cm²) in the Results and Discussion sections that was devoted to ADR reports.

Details are given below on the specific criteria used in evaluating ADR reports.

Clear and appropriate use of ADR definitions

Adverse drug reactions have been defined as those "where the causal relation between the product and an adverse event is at least a reasonable possibility", while the less specific (but widely used) term "adverse events" should be reserved for describing untoward occurrences that are not necessarily causally related to the treatment [9,10]. The terms should not be used interchangeably.

The use of terminology was considered appropriate if trials reported drug safety with terms such as "adverse drug reactions", "adverse or side effects" or "drug toxicity", or if they explicitly stated which adverse events were possibly related to drug treatment. Reports of adverse outcomes without clarification of their relation to drug treatment were considered to be inadequate.

Sufficient detail on methods used in monitoring of ADRs for the reader to interpret or reproduce the result

When ADRs were reported, we asked whether it was possible for readers to tell which specific methods had been used in the detection or recording of ADRs. We evaluated the following areas of ADR monitoring – symptoms reported by patients, events diagnosed clinically by physicians, and abnormalities detected using investigative tests.

Patients' symptoms

We asked whether the trial report explained how the rates of symptomatic ADRs had been determined during the course of the study; for example, by spontaneous reporting, patient diary or checklist questionnaire.

ADRs presenting as clinical events diagnosed by physicians

We asked whether the reported ADRs had been detected with specific, targeted clinical assessments or purely from spontaneous reports as they arose. In particular, we looked for details of systematic surveillance measures, e.g. investigators were asked to take blood pressure readings at 15 minute intervals to look for hypotension; rates of gastrointestinal haemorrhages were monitored through routine checking of hospital records.

ADRs detected through the use of diagnostic tests

We asked whether the trial specified which diagnostic techniques had been used in detecting the reported ADRs; for example, was deep venous thrombosis diagnosed by ultrasonography or by contrast venography? In cases in which laboratory tests were used, we asked whether the laboratory values or criteria for diagnosing an abnormality were stated; for example, was hypokalaemia diagnosed at serum potassium concentrations below 3.0 mmol/l?

Severity

We looked for details on the severity of the reported ADRs and, if present, whether the measures of severity were made according to recognized scales (such as the National Cancer Institute Toxicity Grades [11]), or otherwise defined, e.g. "major bleeds were those that were fatal or required transfusion".

Nature of ADR reporting

Numerical data

When ADRs were reported, we asked whether simple summary (unadjusted) data were always given for each treatment arm; were there instances in which data were given as a lumped total (e.g. headache noted in 5% of all patients in the trial), or for only one treatment arm?

We also looked at the prevalence of statements that could not be fully interpreted owing to lack of detail on the frequency and/or type of adverse effect; e.g. "The pattern and profile of adverse effects were similar" or "The treatments were well tolerated". Such generic statements, in the absence of any supporting information, are not helpful in evaluating the frequency of ADRs [2].

Space for reporting adverse events

We measured the area (cm²) used for reporting ADRs (text, figures and tables) in the Results and Discussion sections of the published paper, and expressed it as a percentage of the total area of the Results and Discussion sections.

Results

Of the 185 eligible trials, 25 (13.5%) made no mention of adverse effects in any part of the paper and were therefore not analysed further.

Definition of ADRs

We found 48 trials out of 160 (30%) in which it was not clear which (if any) of the reported adverse events were considered to be drug-related. For instance, in a study of captopril for heart failure, cough (a well-recognised ADR of captopril) was listed together with other untoward incidents, such as myocardial infarction, in a table of adverse events [12]. Systematic reviewers and members of the public who are unfamiliar with pharmacology may end up erroneously believing that myocardial infarction is a harmful drug-related effect of captopril, when in fact, myocardial infarction is a recognised complication of the underlying illness.

Monitoring of ADRs

Details of the methods used in ascertaining the rate of ADRs were more likely to be given when an investigative test was required for diagnosis of the ADR (Table 1). In contrast, many trials merely said that patients were routinely assessed for the presence of ADRs such as clinical events, or new symptoms. It was usually unclear whether this involved targeted clinical assessments for particular ADRs, or if it was left to the patients to report any untoward events.

Table 1.

Availability of information on the methods used in recording ADRs, categorized according to type of ADRs reported by the trial.

| Types of ADRs | No. of trials reporting | Specific details given on how rate of reported ADRs were recorded | Did not specify methods used in determining the rate of reported ADRs |
| --- | --- | --- | --- |
| Detected by investigative tests | 68 | 37 (55%) | 31 (45%) |
| Clinical events | 95 | 14 (15%) | 81 (85%) |
| Patient symptoms | 104 | 18 (17%) | 86 (83%) |

Grade of severity

Of the 86 trials that commented on the severity of the reported ADRs, more than half (44 trials, 51%) did not give details on how severity was defined or which, if any, recognized severity grading system was used.

Reporting

Numerical data

Of the 160 trials that mentioned ADRs, analysis of the full dataset was not possible in 60 (38%) trials. Nineteen gave figures on ADRs for one treatment arm only (without details of figures for the other arms) and 12 provided lumped totals without specifying the numbers that occurred in individual arms. Information on ADRs in a further 29 trials could not be fully analysed because it was based on generic statements without adequate definition or supporting numerical data.
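The counts in this section can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the variable names are our own, and the numbers are those quoted in the Results text. It simply tallies the trials and expresses the totals against both possible denominators (all 185 trials, and the 160 that mentioned ADRs).

```python
# Counts taken from the Results text; variable names are illustrative.
TOTAL_TRIALS = 185
NO_MENTION = 25          # trials with no mention of adverse effects
ONE_ARM_ONLY = 19        # figures given for one treatment arm only
LUMPED_TOTALS = 12       # totals not split by treatment arm
GENERIC_STATEMENT = 29   # generic statements without supporting numbers


def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


mentioned = TOTAL_TRIALS - NO_MENTION
not_analysable = ONE_ARM_ONLY + LUMPED_TOTALS + GENERIC_STATEMENT

summary = {
    "no mention of ADRs (% of all trials)": pct(NO_MENTION, TOTAL_TRIALS),
    "not fully analysable (% of all trials)": pct(not_analysable, TOTAL_TRIALS),
    "not fully analysable (% of trials mentioning ADRs)": pct(not_analysable, mentioned),
}

for label, value in summary.items():
    print(f"{label}: {value}%")
```

The two denominators explain why the same 60 trials appear as 32% in the Abstract (of all 185 trials) and as 38% here (of the 160 trials that mentioned ADRs).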

A summary of the findings is shown in Figure 1.

Figure 1.

Reporting of adverse drug reactions – flow chart summary of results.

Proportion of space for reporting adverse effects in the Results and Discussion section

The median proportion of space devoted to ADR reporting in the Results and Discussion section was 5.8% (interquartile range 1.7–12%), and the mean was 8.2%.

Discussion

"Readers should not have to infer what was probably done, they should be told explicitly" wrote Altman in support of the recommendations on better reporting of randomised controlled trials [13]. Disappointingly, in this series of trials it was often not possible to tell what had been done to define or detect the reported ADRs. Most authors were content either to say that adverse effects were assessed routinely (leaving the reader to infer the exact methods), or gave no information at all, ignoring the SORT recommendation that sufficient detail should be given for readers to reproduce the results.

This leaves us in a quandary when we try to interpret rates of ADRs. Perceived differences in drug safety profiles may result simply from the use of different methods, rather than reflecting any genuine disparity. For example, trials that found relatively high rates of ADRs may have done so because patients' diaries, rather than spontaneous reporting, were used in monitoring ADRs [1]. A similar lack of information on the criteria (or grading system) used in gauging the severity of ADRs makes it difficult to assess their clinical impact. There will be differences in the reported rate, and clinical significance, of an ADR such as hypokalaemia if it was diagnosed at plasma potassium concentrations below 3.0 mmol/l in one trial, and at levels below 3.5 mmol/l in another. As these methodological details are seldom fully described in trial reports, any comparative evaluation of ADR rates between trials should be viewed as being potentially unreliable.
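The effect of the diagnostic threshold can be illustrated with a minimal sketch. The potassium values below are invented purely for illustration and come from no trial; the function name and the two cut-offs (3.0 and 3.5 mmol/l, as in the example above) are the only inputs. The same set of patients yields different "rates" of hypokalaemia depending solely on the definition applied.

```python
# Hypothetical plasma potassium values (mmol/l) for one treatment arm.
# These numbers are invented for illustration and come from no trial.
potassium_mmol_l = [2.8, 3.1, 3.2, 3.4, 3.6, 3.8, 4.0, 4.1, 4.3, 4.5]


def hypokalaemia_rate(values, threshold):
    """Percentage of patients whose potassium falls below the threshold."""
    cases = sum(1 for v in values if v < threshold)
    return 100 * cases / len(values)


# Same patients, two different definitions of the ADR.
rate_strict = hypokalaemia_rate(potassium_mmol_l, 3.0)
rate_lenient = hypokalaemia_rate(potassium_mmol_l, 3.5)

print(f"Threshold 3.0 mmol/l: {rate_strict:.0f}% with hypokalaemia")
print(f"Threshold 3.5 mmol/l: {rate_lenient:.0f}% with hypokalaemia")
```

With these made-up values the rate rises from 10% to 40% without any change in the underlying data, which is why a report that omits the threshold cannot be compared with one that uses a different cut-off.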

Although deviations from a precise definition of the term "ADR" may seem to be a minor issue, closer analysis reveals how misleading conclusions may result from the inappropriate use of terminology. A systematic review of postoperative analgesia found piroxicam to have a significantly better safety profile than placebo [14]. However, on closer examination, we found that symptoms such as fever and headache were recorded as adverse effects in the piroxicam trials. Piroxicam (a non-steroidal anti-inflammatory drug) is an effective treatment for fevers and headaches, and it is therefore no surprise that placebo turned out to have a greater rate of "adverse effects" and was considered less safe. In order to avoid potential confusion, authors should provide sufficient information in adverse event reports for readers to be aware of which events were defined as ADRs, and which were considered to be adverse clinical events unrelated to therapy.

We recognise that trials may be insufficiently powered for detection of rare adverse effects and cannot be expected to detect and report all adverse effects associated with a drug. We also do not mean to imply that trials providing long lists of ADRs are better than those that report few. However, our primary concern is that adverse effects data, when available, should be reported in a consistent manner. Specific steps for monitoring ADRs have already been laid down under the International Conference on Harmonisation – Good Clinical Practice guidelines for clinical trials, and it is regrettable that much of the information collected is not reported in a useful format [9].

Authors may argue that space constraints in journals prevent them from reporting ADRs in more detail. However, we found that many papers used only a small proportion of space for reporting safety data – 68% of the reviewed trials devoted less than 10% of the total area in the Results and Discussion section to describing adverse effects. Greater clarity in the reporting of ADRs could be achieved if authors were prepared to reduce the emphasis on efficacy and devote a greater proportion of space to safety. In addition, journals that maintain a web presence could be asked to provide dedicated areas for the reporting of adverse effects data.

Systematic reviews summarising the effects of health care have recently been accused of bias, because they emphasise efficacy rather than safety [15]. However, the disparate nature of ADR reporting does not facilitate systematic analysis, and it is therefore no surprise that systematic reviews are unable to provide a balanced viewpoint. Patients and their physicians will not be able to make accurate judgements on the benefit:harm ratio unless safety reporting receives equal attention. Widespread adoption of the SORT recommendations on the reporting of adverse effects would substantially improve the quality of information without adding greatly to the burden of work for authors.

In summary, we believe that the absence of methodological detail and the lack of coherence in reporting the rates of ADRs create significant problems for those trying to interpret the data. Authors need to:

• specify the methods used in detecting ADRs (incidental reporting, routine recording, or active seeking)

• define ADRs, and the scale of severity used

• report the frequencies of ADRs for each treatment arm

Valuable evidence on ADRs will continue to be misinterpreted or lost unless these measures are adopted by authors and journal editors.
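One way to picture the three measures listed above is as a structured record that a trial report would need to fill in for each ADR. The following is a hypothetical sketch, not a format proposed by SORT or any journal: the class names, field names, and the completeness check are all our own assumptions, and the example counts are invented.

```python
from dataclasses import dataclass, field
from enum import Enum


class DetectionMethod(Enum):
    """The three broad detection approaches named in the summary list."""
    INCIDENTAL_REPORTING = "incidental reporting"
    ROUTINE_RECORDING = "routine recording"
    ACTIVE_SEEKING = "active seeking"


@dataclass
class ArmCount:
    """Unadjusted ADR count for a single treatment arm."""
    arm: str
    patients: int
    patients_with_adr: int

    def rate_percent(self) -> float:
        return round(100 * self.patients_with_adr / self.patients, 1)


@dataclass
class AdrReport:
    """Hypothetical structured record for one reported ADR."""
    adr: str
    considered_drug_related: bool
    detection_method: DetectionMethod
    severity_scale: str
    arms: list[ArmCount] = field(default_factory=list)

    def missing_items(self) -> list[str]:
        """List reporting gaps; the rules here are a crude assumption."""
        problems = []
        if not self.severity_scale.strip():
            problems.append("severity scale not defined")
        if len(self.arms) < 2:
            problems.append("frequencies not given for each treatment arm")
        return problems


# Invented example: counts are illustrative, not taken from any trial.
report = AdrReport(
    adr="cough",
    considered_drug_related=True,
    detection_method=DetectionMethod.ACTIVE_SEEKING,
    severity_scale="mild / moderate / severe (hypothetical scale)",
    arms=[ArmCount("drug", 200, 30), ArmCount("placebo", 200, 10)],
)

print(report.missing_items())
for arm in report.arms:
    print(arm.arm, arm.rate_percent())
```

A record with only a lumped total or a single arm would be flagged by `missing_items()`, mirroring the reporting problems described in the Results.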

Conclusions

Trial reports often failed to provide details on how adverse drug reactions were defined or recorded. The absence of such methodological details makes comparative evaluation of adverse effects rates between trials potentially unreliable, and hinders systematic review or meta-analysis of such data. The quality of the information would be significantly improved if authors and journals adopted recommendations on structured reporting of adverse effects.

Abbreviations

ADR: Adverse drug reaction

Competing interests

None

Pre-publication history

The pre-publication history for this paper can be accessed here:

http://www.biomedcentral.com/content/backmatter/1472-6904-1-3-b1.pdf

Acknowledgments

Thanks go to Jeff Aronson for help with the manuscript.

Funding: SD was supported by grants from Oxford University and The Sir Jules Thorne Trust.

Contributor Information

Yoon Kong Loke, Email: yoon.loke@clinpharm.ox.ac.uk.

Sheena Derry, Email: sheena.derry@clinpharm.ox.ac.uk.

References

1. Edwards JE, McQuay HJ, Moore RA, Collins SL. Reporting of adverse effects in clinical trials should be improved: lessons from acute postoperative pain. J Pain Symptom Manage. 1999;18:427–437. doi: 10.1016/S0885-3924(99)00093-7.

2. Ioannidis JPA, Lau J. Completeness of safety reporting in randomized trials – an evaluation of seven medical areas. J Am Med Assoc. 2001;285:437–443. doi: 10.1001/jama.285.4.437.

3. Freemantle N, Cleland J, Young P, Mason J, Harrison J. Beta blockade after myocardial infarction: systematic review and meta regression analysis. BMJ. 1999;318:1730–1737. doi: 10.1136/bmj.318.7200.1730.

4. Moore RA, McQuay HJ. Adverse events: can we trust the data? Bandolier. 2001;8:6–7. http://www.jr2.ox.ac.uk/bandolier/band85/b85-5.html

5. Olsen H, Klemetsrud T, Stokke HP, Tretli S, Westheim A. Adverse drug reactions in current antihypertensive therapy: a general practice survey of 2586 patients in Norway. Blood Press. 1999;8:94–101. doi: 10.1080/080370599438266.

6. Moher D, Schultz KF, Altman DG, for the CONSORT Group. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet. 2001;357:1191–1194. doi: 10.1016/S0140-6736(00)04337-3.

7. The Standards of Reporting Trials Group. A proposal for structured reporting of randomized controlled trials. J Am Med Assoc. 1994;272:1926–1931. doi: 10.1001/jama.272.24.1926.

8. Cochrane Controlled Trials Register. The Cochrane Library, Issue 2. Oxford: Update Software; 2000.

9. International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use: ICH Harmonised Tripartite Guideline – Clinical Safety Data Management: Definitions and Standards for Expedited Reporting. Federal Register. 1995;60:11284–11287. http://www.ifpma.org/pdfifpma/e2a.pdf

10. WHO Collaborating Centre for International Drug Monitoring: Definitions. 2002. http://www.who-umc.org/defs.html

11. Cancer Therapy Evaluation Program: Common Toxicity Criteria, Version 2.0. DCTD, NCI, NIH. 2002. http://ctep.info.nih.gov/CTC3/Download/CTCv20%204-30-992.pdf

12. Pitt B, Segal R, Martinez FA, Meurers G, Cowley AJ, Thomas I, Deedwania PC, Ney DE, Snavely DB, Chang PI. Randomised trial of losartan versus captopril in patients over 65 with heart failure (Evaluation of Losartan in the Elderly Study, ELITE). Lancet. 1997;349:747–752. doi: 10.1016/S0140-6736(97)01187-2.

13. Altman DG. Better reporting of randomised controlled trials: the CONSORT statement. Br Med J. 1996;313:570–571. doi: 10.1136/bmj.313.7057.570.

14. Edwards JE, Loke YK, Moore RA, McQuay HJ. Single dose piroxicam in acute postoperative pain: a quantitative systematic review. (Cochrane Review) The Cochrane Library, Issue 1. Oxford: Update Software; 2001.

15. Walker A. Meta-style and expert review. Lancet. 1999;354:1834–1835. doi: 10.1016/S0140-6736(99)00355-4.