Abstract

Our purpose was to compare reading performance measured with the MNREAD Acuity Chart and an iPad application (app) version of the same test for both normally sighted and low-vision participants. Our methods included 165 participants with normal vision and 43 participants with low vision tested on the standard printed MNREAD and on the iPad app version of the test. Maximum Reading Speed, Critical Print Size, Reading Acuity, and Reading Accessibility Index were compared using linear mixed-effects models to identify any potential differences in test performance between the printed chart and the iPad app. Our results showed the following: For normal vision, chart and iPad yield similar estimates of Critical Print Size and Reading Acuity. The iPad provides significantly slower estimates of Maximum Reading Speed than the chart, with a greater difference for faster readers. The difference was on average 3% at 100 words per minute (wpm), 6% at 150 wpm, 9% at 200 wpm, and 12% at 250 wpm. For low vision, Maximum Reading Speed, Reading Accessibility Index, and Critical Print Size are equivalent on the iPad and chart. Only the Reading Acuity is significantly smaller (i.e., better) when measured on the digital version of the test, and only by 0.03 logMAR (p = 0.013). Our conclusions were that, overall, MNREAD parameters measured with the printed chart and the iPad app are very similar. The difference found in Maximum Reading Speed for the normally sighted participants can be explained by differences in the method for timing the reading trials.

Keywords: reading test, MNREAD, iPad, normal vision, low vision

Introduction

Reading speed is a strong predictor of visual ability and vision-related quality of life for patients with vision loss (Hazel, Petre, Armstrong, Benson, & Frost, 2000; McClure, Hart, Jackson, Stevenson, & Chakravarthy, 2000). For this reason, reading performance has been used as an outcome measure in clinical trials for judging the effectiveness of treatments (Mahmood et al., 2015), surgical procedures (Jonker et al., 2015; Tang, Zhuang, & Liu, 2014), or rehabilitation techniques (Binns et al., 2012; Stelmack et al., 2017). Several reading tests have been developed to assess aspects of reading vision (Bailey & Lovie, 1980; Baldasare, Watson, Whittaker, & Miller-Shaffer, 1986; Colenbrander & Fletcher, 2005; Hahn et al., 2006; MacKeben, Nair, Walker, & Fletcher, 2015; Mansfield, Ahn, Legge, & Luebker, 1993; Radner et al., 2002; Ramulu, Swenor, Jefferys, & Rubin, 2013; Trauzettel-Klosinski, Dietz, & IReST Study Group, 2012). Most of these tests were designed as text printed on a card or on paper (Radner, 2017). Recent advances in digital technology offer researchers and health professionals a valuable opportunity to develop new screening tools that are portable and can combine multiple tests within a single device (Berger, 2010). The measurement of reading performance is one area that may benefit significantly from this use of new technology. The Salzburg Reading Desk (Dexl, Schlögel, Wolfbauer, & Grabner, 2010), for example, is a new test that has made the transition to electronic test displays. Tablet computers, like the Apple iPad, are widely available at a moderate cost and provide highly portable devices with relatively large, high-resolution screens. Such devices enable the display of optotypes at both large and small print sizes and at various contrasts. They also feature software that can ensure correct scoring of reading performance and store or transmit the data collected.
They can thus improve the current methods for assessing reading performance, for which scoring algorithms need to be optimized and simplified so that the reliability and responsiveness of reading tests can be improved (Patel, Chen, Da Cruz, Rubin, & Tufail, 2011; Rubin, 2013).

The MNREAD acuity chart is a continuous-text reading-acuity test designed to measure the reading performance of people with normal and low vision (Figure 1; Mansfield et al., 1993). The chart is printed on laminated, semigloss paper mounted on high-impact polystyrene. The examiner is responsible for timing trials, counting errors, and deriving the four MNREAD measures: the Reading Acuity (RA), the smallest print that one can read without making significant errors; the Critical Print Size (CPS), the smallest print that one can read at maximum speed; the Maximum Reading Speed (MRS), one's reading speed when reading is not limited by print size; and the Reading Accessibility Index (ACC), a single-valued measure of one's visual access to commonly encountered printed material, where 0 means no access to print and 1.0 represents average normal access (Calabrèse, Owsley, McGwin, & Legge, 2016).

Figure 1.

Printed MNREAD chart. (A) Front and back of the MNREAD acuity chart printed in black-on-white (regular polarity); (B) Example MNREAD curve showing the four MNREAD parameters: the Maximum Reading Speed (MRS, red star) is 225 words/min, and the Critical Print Size (CPS, green diamond) and Reading Acuity (RA, blue triangle) are 0.0 and −0.2 logMAR, respectively. The Reading Accessibility Index (ACC) is 1.12.

The MNREAD test would benefit from a digital transition in four ways: first, by simplifying and standardizing the testing methods; second, by automating the scoring methods that have been applied to calculate the MRS and CPS (Patel et al., 2011); third, by making data easy to share; and fourth, by increasing portability, with several test versions (two contrast polarities, several languages) available within a single device. Here we describe a newly developed version of MNREAD, implemented on an iPad tablet with Retina display (resolution 264 pixels per inch [ppi], or 104 pixels/cm): the MNREAD iPad App ©2017 (https://itunes.apple.com/us/app/mnread/id1196638274?ls=1&mt=8). In the app, sentences are presented one at a time, centered on the screen (Figure 2). As on the printed chart, sentences are displayed on three lines in the Times Roman font, with print size decreasing in steps of 0.1 log unit. The app uses the same short sentences as the chart but with a reduced range of print sizes (14 sentences compared to 19 in the printed version). This reduction is due to screen size limitations (for the largest print size) and pixel-resolution limitations (for the four smallest print sizes). The physical print size ranges from 6.3 M to 0.32 M (in Sloan M notation), or 9.3 mm to 0.5 mm (in x-height). From the recommended viewing distance of 40 cm (16 in.), the corresponding angular print size ranges from 1.2 to −0.1 logMAR (Snellen equivalents 20/320 to 20/16). This range can be shifted by using a shorter or longer viewing distance. When testing normal vision, a longer viewing distance is recommended, to extend the range of print sizes at the small end and thus obtain accurate measures of Critical Print Size and Reading Acuity. For low-vision individuals with substantially reduced acuity, a shorter viewing distance is recommended.
In addition to the sentence display, the MNREAD app provides features that are not available with the printed version: reading time recording, graphical display of the results, MNREAD curve fitting, MNREAD parameter estimation, data recording and export, and a practice test.
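The relation between physical print size, viewing distance, and angular print size stated above can be checked with a short calculation. The Python sketch below assumes the usual convention that 1 M text viewed from 1 m corresponds to 0.0 logMAR (20/20), so that logMAR = log10(M / distance in metres); the function names are hypothetical and are not taken from the app.

```python
import math


def logmar_from_m(m_size: float, distance_cm: float) -> float:
    """Angular print size (logMAR) of text of `m_size` Sloan M units viewed at `distance_cm`.

    Assumes the convention that 1 M viewed from 100 cm is 0.0 logMAR (20/20).
    """
    if m_size <= 0 or distance_cm <= 0:
        raise ValueError("print size and viewing distance must be positive")
    return math.log10(m_size / (distance_cm / 100.0))


def snellen_denominator(logmar: float) -> float:
    """Denominator x of the 20/x Snellen equivalent of a logMAR value."""
    return 20.0 * 10.0 ** logmar


def app_print_sizes_m(largest_m: float = 6.3, n_sizes: int = 14) -> list:
    """Nominal print sizes (M), shrinking by 0.1 log unit per sentence."""
    return [largest_m * 10.0 ** (-0.1 * i) for i in range(n_sizes)]


if __name__ == "__main__":
    sizes = app_print_sizes_m()
    # 40 cm is the recommended distance; 80 cm is the distance used for the iPad in Experiment 1.
    for distance in (40, 80):
        largest = logmar_from_m(sizes[0], distance)
        smallest = logmar_from_m(sizes[-1], distance)
        print(f"{distance} cm: {largest:.1f} to {smallest:.1f} logMAR "
              f"(about 20/{snellen_denominator(largest):.0f} to 20/{snellen_denominator(smallest):.0f})")
```

At 40 cm this reproduces the 1.2 to −0.1 logMAR range quoted above; doubling the distance to 80 cm shifts every size down by log10(2) ≈ 0.3 log unit, giving roughly 0.9 to −0.4 logMAR.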

Figure 2.

MNREAD testing sequence using the iPad app. (A) Preparation screen displayed before each sentence; (B) After the experimenter clicks the “GO” button, the first sentence is displayed in the center of the screen, launching the time recording; (C) Once the participant is done reading, a simple click will stop the trial and record the reading time. A score screen appears, allowing the experimenter to enter the number of errors and launch the next trial; (D) When reading becomes impossible, the test is stopped and the app displays the MNREAD data plot and parameter estimates.

The goal of the current report is to compare test performance between the printed chart and the iPad app. Comparison is made for both normally sighted (Experiment 1) and low-vision participants (Experiment 2).

Methods

Participants

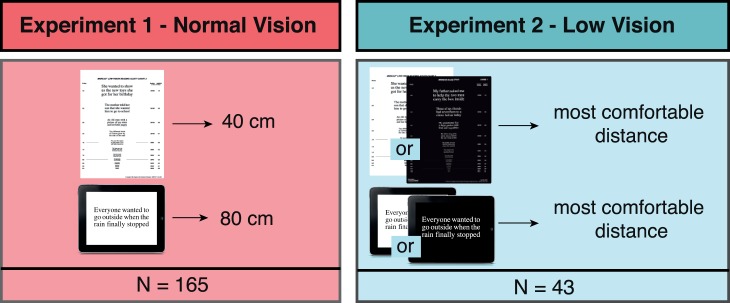

One hundred sixty-five normally sighted participants (Experiment 1) and 43 low-vision participants (Experiment 2) were recruited for this project. The normally sighted participants had normal or corrected-to-normal vision with no reported cognitive or reading impairment. Low-vision participants had heterogeneous diagnoses and levels of visual impairment and were able to read large print. They had no reported cognitive impairment and no reading deficit diagnosed prior to their visual impairment. All participants were either native or fluent English speakers. Data were collected with informed consent, using procedures approved by the Institutional Review Board (IRB) of the University of Minnesota.

Experimental design

In Experiment 1, normally sighted participants were tested in two conditions in one session: on the chart at 40 cm and on the app at 80 cm (this longer viewing distance was chosen to compensate for the reduced range of print sizes on the iPad). All testing in Experiment 1 was performed with the regular polarity of the test (black print on white background; Figure 3). In Experiment 2, low-vision participants were tested once on the chart and once on the iPad. Both tests were run with the participant's self-reported preferred ("most comfortable") polarity and at their preferred viewing distance, both determined during a practice test at the beginning of the testing session. Preferred viewing distance was determined by having participants choose the "most comfortable" distance permitting them to read down to the fourth largest print size on the chart (20/200 Snellen equivalent at 40 cm). Regular polarity was chosen by 19 participants, and 24 chose the reverse polarity. Preferred viewing distance averaged 30 ± 10 cm (mean ± SD). In both experiments, tests were run with different sentence sets to avoid memorization. Participants were tested binocularly with their most up-to-date near refractive corrections, if any.

Figure 3.

Experimental protocol for Experiments 1 and 2.

Chart testing was performed using the printed MNREAD acuity chart (Precision Vision). A desk lamp was used in addition to the ceiling light to provide uniform luminance across the chart (200 cd/m²; Black et al., 2013; Livingstone et al., 2016). Following the recommended standard procedure, the experimenter revealed each sentence one at a time. Participants were instructed to read the test sentences aloud as quickly and accurately as possible, beginning with the largest print size and progressing to the smallest print size that could be read. The experimenter used a stopwatch to record the reading time (in seconds, to the nearest 0.01 s) and counted the number of errors (misread or missing words) for each sentence. Testing stopped when the print size was so small that the participant could no longer read any words.
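Per-sentence reading speed follows from the recorded time and error count. The sketch below assumes the conventional MNREAD scoring in which each sentence counts as 10 standard-length words, so that speed in words/min is 60 × (10 − errors) / time; it is an illustration, not the mnreadR or app source code, and the function name is hypothetical.

```python
WORDS_PER_SENTENCE = 10  # assumed: each MNREAD sentence is scored as 10 standard-length words


def reading_speed_wpm(time_s: float, errors: int) -> float:
    """Reading speed (words/min) for one sentence read in `time_s` seconds with `errors` errors."""
    if time_s <= 0:
        raise ValueError("reading time must be positive")
    if errors < 0:
        raise ValueError("error count cannot be negative")
    words_correct = max(WORDS_PER_SENTENCE - errors, 0)  # 10 or more errors scores as 0 words
    return 60.0 * words_correct / time_s


if __name__ == "__main__":
    print(reading_speed_wpm(3.0, 0))  # error-free sentence in 3.0 s → 200.0
    print(reading_speed_wpm(6.0, 1))  # one error in 6.0 s → 90.0
```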

iPad app testing was conducted with a prerelease version of the MNREAD iPad app running on an iPad with Retina display (9.7-in. diagonal; LED-backlit). To avoid reflections on the screen, an antiglare screen protector was applied (Black et al., 2013). The iPad was mounted vertically on a stand in landscape orientation, with its height adjusted individually so that the center of the screen was at eye level. The ceiling light provided uniform illumination of the room, with no additional lighting. Screen luminance was set to 200 cd/m². Sentence presentation was initiated by the experimenter with a finger tap on the screen, which also started the timer (Figure 2A). Each sentence was displayed instantly (Figure 2B). Participants were asked to start reading aloud, as quickly and accurately as possible, as soon as a sentence appeared. Once reading was completed, the experimenter tapped the screen a second time to end the trial, and the app recorded the reading time. After each sentence, a score screen was displayed, where the experimenter entered the number of errors, if any (Figure 2C). Testing stopped when the print size was so small that the participant could no longer read any words. The app then displayed the corresponding MNREAD curve of log reading speed as a function of print size, along with the four MNREAD parameters (Figure 2D). If the estimates of MRS and CPS looked erroneous, the examiner had the option to override the automatic fit and estimate these parameters manually.

MNREAD parameters estimation

Data collected with the printed chart were analyzed offline in R with the mnreadR package (Calabrèse, Mansfield, & Legge, 2017) to extract the four MNREAD parameters (MRS, CPS, RA, and ACC). For each testing run with the iPad app, MNREAD data were analyzed in real time by the app software, which returned the four MNREAD parameters. Both methods used the same calculations: MRS and CPS were estimated using the original algorithm described in Legge (2007); RA and ACC were calculated according to the standard formulas (Calabrèse, Owsley et al., 2016). To ensure proper estimation of the MRS and CPS, individual MNREAD curves of log reading speed as a function of print size were plotted (either in R or within the app) and visually inspected. The algorithm's estimate was judged erroneous in 4% of the chart tests and 2% of the iPad tests in Experiment 1, and in 11% of the chart tests and 9% of the iPad tests in Experiment 2. In such cases, parameters were estimated visually: MRS was taken as the plateau of the curve, and CPS as the smallest print size sustaining MRS. Following Subramanian and Pardhan's (2009) suggestion, MRS was log10-transformed and expressed in log words/min (logWPM).
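Two of the scoring steps described above can be sketched in Python. The Reading Acuity function assumes the usual MNREAD rule that each misread word adds 0.01 logMAR to the smallest print size attempted (10 words per 0.1-log-unit step). The plateau function is only a hypothetical rendering of the visual fallback procedure (MRS as the plateau, CPS as the smallest print size sustaining it); its `tolerance` of 0.1 logWPM is our assumption, and it is not the Legge (2007) algorithm used by mnreadR and the app.

```python
from statistics import mean


def reading_acuity(print_sizes_logmar, errors):
    """RA = smallest print size attempted + 0.01 logMAR per error (errors capped at 10 per sentence)."""
    if not print_sizes_logmar or len(print_sizes_logmar) != len(errors):
        raise ValueError("need one error count per print size")
    total_errors = sum(min(e, 10) for e in errors)
    return min(print_sizes_logmar) + 0.01 * total_errors


def plateau_mrs_cps(print_sizes_logmar, log_speeds, tolerance=0.1):
    """Hypothetical plateau rule: returns (MRS in logWPM, CPS in logMAR)."""
    if not print_sizes_logmar or len(print_sizes_logmar) != len(log_speeds):
        raise ValueError("need one reading speed per print size")
    # Order the curve from the largest to the smallest print size.
    curve = sorted(zip(print_sizes_logmar, log_speeds), key=lambda p: p[0], reverse=True)
    speeds = [s for _, s in curve]
    peak = max(speeds)
    threshold = peak - tolerance
    # Walk from the fastest point toward smaller print until speed drops below the plateau.
    last = speeds.index(peak)
    while last + 1 < len(curve) and speeds[last + 1] >= threshold:
        last += 1
    cps = curve[last][0]
    # MRS: mean log speed of the points at or above CPS that lie on the plateau.
    mrs = mean(s for s in speeds[: last + 1] if s >= threshold)
    return mrs, cps
```

One property of the RA rule is worth noting: scoring an unread final sentence as 10 errors leaves RA unchanged, because the extra 0.1 logMAR of errors cancels the 0.1 logMAR step down in print size.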

Statistical analysis

We measured the effect of test version (chart vs. app) on the four MNREAD measures using linear mixed-effects (LME) models (Bates, Mächler, Bolker, & Walker, 2015). For each experiment, each MNREAD parameter was used as the dependent variable in an LME model with "test version" as a fixed effect and "participant" as a random effect. Optimal model selection was performed using Akaike's Information Criterion (AIC). In the Results section, we report the mean values estimated by the models for each group, with their 95% CIs and p values.
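For a design like this one, in which every participant contributes exactly one chart value and one iPad value, a linear mixed model with a fixed effect of test version and a random intercept per participant gives a version effect equal to the mean within-participant difference and, under REML when the estimated participant variance is positive, a standard error equal to the SD of the differences divided by √n. The stdlib-only Python sketch below relies on that equivalence and uses a normal-approximation (Wald) 95% CI; it is an illustration under those assumptions, with a hypothetical function name, not a replacement for the lme4 models and AIC-based selection used here.

```python
import math
from statistics import mean, stdev


def version_effect(chart, ipad, z=1.96):
    """Estimated iPad - chart effect with a Wald 95% CI, for complete paired data."""
    if len(chart) != len(ipad):
        raise ValueError("each participant needs one chart and one iPad value")
    if len(chart) < 2:
        raise ValueError("need at least two participants")
    diffs = [i - c for c, i in zip(chart, ipad)]  # within-participant differences
    estimate = mean(diffs)
    se = stdev(diffs) / math.sqrt(len(diffs))
    return estimate, (estimate - z * se, estimate + z * se)
```

When some participants lack one of the two versions, or when covariates are added, this shortcut no longer applies and a full mixed-model fit (for example `lmer()` from lme4 in R) is needed.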

Results

Participants

Normally sighted participants (N = 165) ranged in age from eight to 72 years, and low-vision participants (N = 43) ranged in age from 22 to 93 years (Table 1). Low-vision participants had a wide variety of diagnoses, including age-related macular degeneration (nine), retinitis pigmentosa (seven), optic neuropathy (six), glaucoma (five), retinal detachment (three), Stargardt's disease (two), myopic degeneration (two), retinal detachment (two), diabetic retinopathy (two), rod-cone dystrophy (two), congenital cataract (one), macular hole (one), and central areolar choroidal dystrophy (one).

Table 1.

Characteristics of the participants included in Experiments 1 and 2.

Experiment 1: Normal vision

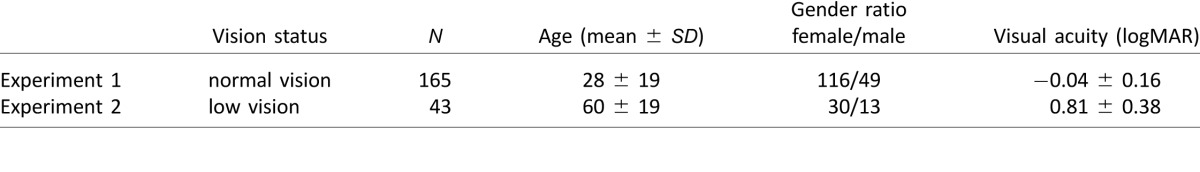

MRS was significantly faster on the chart (2.26 logWPM; 95% CI [2.24, 2.27]) than on the iPad (2.22 logWPM; 95% CI [2.20, 2.23]; p < 0.001; Figure 4A). Expressed as reading time per sentence, this corresponds to an average difference of 0.3 s. We also found that the percentage difference between chart MRS and iPad MRS was significantly correlated with chart MRS (r = 0.38; 95% CI [0.25, 0.49]; p < 0.001). On average, the difference between chart and iPad was 3% at 100 wpm, 6% at 150 wpm, 9% at 200 wpm, and 12% at 250 wpm (Figure 5); that is, the chart gave increasingly faster estimates than the iPad as reading speed increased.
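Converting the model means back to linear units shows where the 0.3 s figure comes from. The sketch below assumes an error-free 10-word sentence read at MRS and uses the Experiment 1 means reported above; the helper names are hypothetical.

```python
def wpm_from_logwpm(log_wpm: float) -> float:
    """Undo the log10 transform applied to MRS."""
    return 10.0 ** log_wpm


def sentence_time_s(wpm: float, words: int = 10) -> float:
    """Time (s) to read an error-free sentence of `words` words at `wpm`."""
    return 60.0 * words / wpm


chart_wpm = wpm_from_logwpm(2.26)  # about 182 wpm
ipad_wpm = wpm_from_logwpm(2.22)   # about 166 wpm
time_gap_s = sentence_time_s(ipad_wpm) - sentence_time_s(chart_wpm)
print(f"chart {chart_wpm:.0f} wpm, iPad {ipad_wpm:.0f} wpm, time gap {time_gap_s:.2f} s")
```

The gap comes out at about 0.32 s per sentence, consistent with the average 0.3 s difference reported above.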

Figure 4.

Agreement between the Maximum Reading Speed (MRS) measured with the chart and the iPad app. (A) shows data from Experiment 1 (normally sighted participants). (B) shows data from Experiment 2 (low-vision participants). Bland-Altman plots show the difference between measured MRS (iPad − Chart) plotted against the mean MRS. The red dashed lines represent the average difference. The blue dashed lines represent the agreement limits (±1.96 SD). The dotted lines show the 95% CI limits. Top and right histograms show the data distributions along the x- and y-axes, respectively. Tables summarize the chart and iPad average values, as well as their difference (as given by the LME models).
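The Bland-Altman quantities drawn in the agreement figures can be computed as in the minimal Python sketch below. Differences are taken as iPad − chart and agreement limits as the mean difference ± 1.96 SD; the confidence intervals use a normal approximation with the standard errors commonly attributed to Bland and Altman (SD/√n for the mean difference, about √(3·SD²/n) for each limit). Whether the published figures used exactly these CI formulas is our assumption, and the function name is hypothetical.

```python
import math
from statistics import mean, stdev


def bland_altman(chart, ipad, z=1.96):
    """Per-participant means and differences, bias, agreement limits, and approximate 95% CIs."""
    if len(chart) != len(ipad) or len(chart) < 2:
        raise ValueError("need at least two complete chart/iPad pairs")
    n = len(chart)
    means = [(c + i) / 2.0 for c, i in zip(chart, ipad)]  # x-axis of the plot
    diffs = [i - c for c, i in zip(chart, ipad)]          # y-axis: iPad - chart
    bias = mean(diffs)
    sd = stdev(diffs)
    lower, upper = bias - z * sd, bias + z * sd
    se_bias = sd / math.sqrt(n)
    se_limit = math.sqrt(3.0 * sd ** 2 / n)  # approximate SE of each agreement limit
    return {
        "means": means,
        "diffs": diffs,
        "bias": bias,
        "bias_ci": (bias - z * se_bias, bias + z * se_bias),
        "limits": (lower, upper),
        "lower_ci": (lower - z * se_limit, lower + z * se_limit),
        "upper_ci": (upper - z * se_limit, upper + z * se_limit),
    }
```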

Figure 5.

Percentage difference in MRS between chart and iPad as a function of MRS as measured on the chart. As shown by the regression lines, the percentage difference between the two measures increases when the MRS (as measured on the chart) increases.

Mean ACC was 0.92 on the chart (95% CI [0.89, 0.94]) and 0.83 on the iPad (95% CI [0.79, 0.87]; Figure 6A). This difference of −0.09 units (i.e., 9.7%) was significant (95% CI [−0.10, −0.07], p < 0.001).

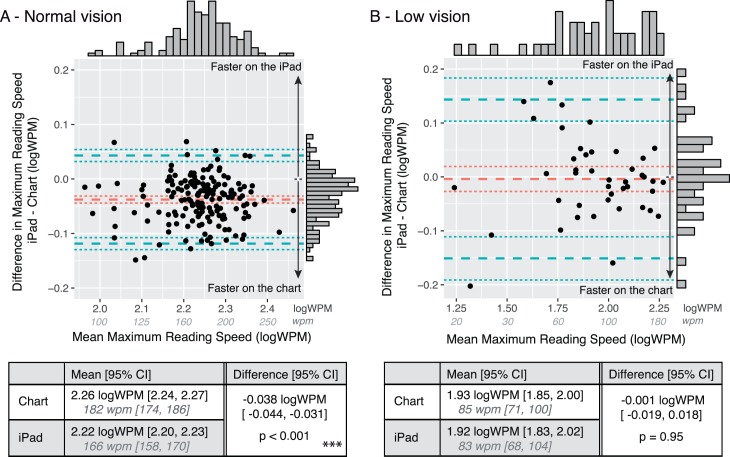

On average, the CPS estimated with the chart was 0.09 logMAR (95% CI [0.06, 0.11]), while the CPS estimated with the iPad app was 0.06 logMAR (95% CI [0.01, 0.11]). This difference of −0.03 logMAR was not statistically significant (95% CI [−0.06, 0.001], p = 0.06; Figure 7A).

Figure 7.

Agreement between the Critical Print Size (CPS) measured with the chart and the iPad app. (A) shows data from Experiment 1 (normally sighted participants). (B) shows data from Experiment 2 (low-vision participants). Bland-Altman plots show the difference between measured CPS (iPad − Chart) plotted against the mean CPS. To avoid overlapping data points, similar CPS values are grouped and shown with a size code in (A). The red dashed lines represent the average difference. The blue dashed lines represent the agreement limits (±1.96 SD). The dotted lines show the 95% CI limits. Top and right histograms show the data distributions along the x- and y-axes, respectively. Tables summarize the chart and iPad average values, as well as their difference (as given by the LME models).

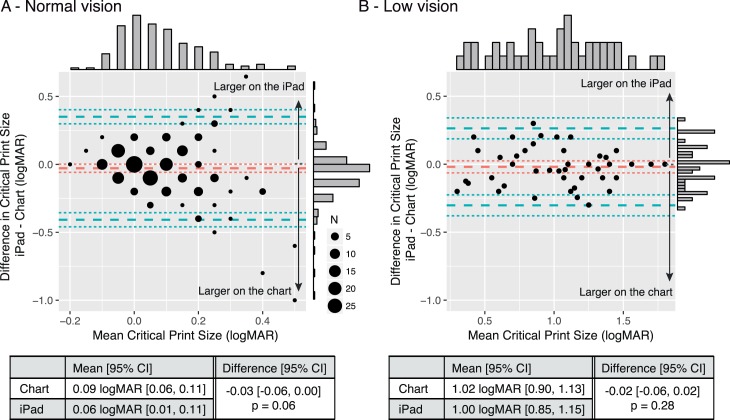

Similarly, we found no significant difference between the RA estimated with the chart (−0.13 logMAR; 95% CI [−0.15, −0.12]) and the RA estimated with the app (−0.14 logMAR; 95% CI [−0.17, −0.11]; Figure 8A).

Figure 8.

Agreement between the Reading Acuity (RA) measured with the chart and the iPad app. (A) shows data from Experiment 1 (normally sighted participants). (B) shows data from Experiment 2 (low-vision participants). Bland-Altman plots show the difference between measured RA (iPad − Chart) plotted against the mean RA. The red dashed lines represent the average difference. The blue dashed lines represent the agreement limits (±1.96 SD). The dotted lines show the 95% CI limits. Top and right histograms show the data distributions along the x- and y-axes, respectively. Tables summarize the chart and iPad average values, as well as their difference (as given by the LME models).

Experiment 2: Low vision

On average, the MRS measured with the chart was 1.93 logWPM (95% CI [1.85, 2.00]) and was not significantly different (95% CI [−0.019, 0.018], p = 0.95) from measurement with the app (1.92 logWPM, 95% CI [1.83, 2.02]; Figure 4B).

Similarly, we found no significant difference between the ACC estimated with the chart (0.36; 95% CI [0.29, 0.42]) and the ACC estimated with the iPad app (0.37; 95% CI [0.28, 0.45]; Figure 6B).

Figure 6.

Agreement between the Reading Accessibility Index (ACC) measured with the chart and the iPad app. (A) shows data from Experiment 1 (normally sighted participants). (B) shows data from Experiment 2 (low-vision participants). Bland-Altman plots show the difference between measured ACC (iPad − Chart) plotted against the mean ACC. The red dashed lines represent the average difference. The blue dashed lines represent the agreement limits (±1.96 SD). The dotted lines show the 95% CI limits. Top and right histograms show the data distributions along the x- and y-axes, respectively. Tables summarize the chart and iPad average values, as well as their difference (as given by the LME models).

On average, the CPS estimated with the chart was 1.02 logMAR (95% CI [0.90, 1.13]) and was not significantly different (95% CI [−0.06, 0.02], p = 0.28) from that estimated with the app (1.00 logMAR, 95% CI [0.85, 1.15]; Figure 7B).

The average RA was smaller (i.e., better) on the iPad app (0.74 logMAR, 95% CI [0.60, 0.88]) than on the chart (0.77 logMAR, 95% CI [0.65, 0.89]). This difference (4%) was small but significant (95% CI [−0.05, −0.01], p = 0.01; Figure 8B).

Discussion

The MNREAD iPad App is a newly developed digital version of the MNREAD acuity chart. It was designed to include the primary MNREAD layout and linguistic principles, with the main differences that reading stimuli are displayed on a screen and that MNREAD parameters are estimated automatically in real time. Overall, we found that the MNREAD parameters measured with the printed chart and the iPad app are very similar.

In Experiment 1, we tested a sample of normally sighted participants covering a wide age range, including children and older adults. We found that CPS and RA measured on the iPad and chart were not significantly different. The between-test differences we found for CPS (0.03 logMAR) and RA (0.01 logMAR) both fall within the test-retest repeatability range of the printed chart reported for normal vision in adults (0.12 logMAR for CPS and 0.05 logMAR for RA; Subramanian & Pardhan, 2006) and in children (0.14 and 0.19 logMAR for CPS and RA, respectively; Virgili et al., 2004). The RA consistency between chart and iPad confirms that sentences at the smallest print sizes are rendered with sufficient resolution on the iPad screen.

MRS was significantly faster with the chart than with the iPad app, more so for faster readers. On average, the difference between chart and iPad was 3% at 100 wpm and increased to 12% at 250 wpm. These values are larger than the 4% test-retest repeatability range reported for the MNREAD chart in adults with normal vision (Subramanian & Pardhan, 2006), but smaller than the 19% change between test and retest reported in children (Virgili et al., 2004). The between-test discrepancy we found for MRS is likely the result of differences in the timing method. With the printed chart, the experimenter uses a blank piece of paper to mask the following sentences. To initiate a trial, the experimenter must reveal the test sentence and start the timer. Even for an experienced experimenter, there is a small delay between the moment the mask starts moving and the moment the test sentence is fully revealed (when the timer is started). During this delay, the participant may begin to read the first line(s) of text. The result would be an underestimate of reading time, and consequently an overestimate of reading speed. In comparison, the app's instant presentation of the test sentences, paired with an automatic start of the internal timer, would lead to a slightly longer and more accurate estimate of reading time, and therefore a slower reading speed. This possibility is supported by a recent study that compared stopwatch and automated timing in a computer-based reading test (Xu & Bradley, 2015). These authors reported that stopwatch timing underestimated reading time by about 0.3 s. For a 10-word MNREAD sentence, such a difference translates into a 5% difference in reading speed at 100 wpm, 7.5% at 150 wpm, 10% at 200 wpm, and 12.5% at 250 wpm. These numbers are in close agreement with those we found in Experiment 1.
The shrinking difference at slower reading speeds likely also explains why we found no significant difference in MRS for the low-vision participants, whose reading speed averaged only 85 wpm. It therefore seems likely that the discrepancy in MRS between chart and iPad app can be explained by the difference in timing method, leading to the conclusion that the automated timing provided by the iPad app gives more accurate and consistent measurements of reading time.
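The arithmetic behind the timing-offset percentages discussed above can be made explicit. If the automatically timed reading time of a 10-word sentence is T = 600/v seconds at speed v wpm, a stopwatch that starts Δ seconds late yields a chart speed that exceeds v by the fraction Δ/T of the chart speed itself. The sketch below assumes Δ = 0.3 s (the value reported by Xu and Bradley, 2015) and error-free sentences; the function name is hypothetical.

```python
def timing_gap_percent(true_wpm: float, offset_s: float = 0.3, words: int = 10) -> float:
    """Percent by which a late-started stopwatch inflates speed, relative to the stopwatch speed."""
    true_time_s = 60.0 * words / true_wpm  # automatically timed reading time
    if offset_s >= true_time_s:
        raise ValueError("offset must be shorter than the reading time")
    chart_wpm = 60.0 * words / (true_time_s - offset_s)  # speed from the shortened stopwatch time
    return 100.0 * (chart_wpm - true_wpm) / chart_wpm


for wpm in (100, 150, 200, 250):
    print(wpm, round(timing_gap_percent(wpm), 1))
```

This prints 5.0, 7.5, 10.0, and 12.5 for 100, 150, 200, and 250 wpm, matching the percentages given above and showing why the gap shrinks at slower reading speeds.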

We found that the iPad app gives a smaller ACC than the chart (0.83 vs. 0.92). The Reading Accessibility Index is scaled so that a value of 0 means no reading within the commonly encountered range of print sizes, and 1.0 is the average value for normally sighted young adults between the ages of 18 and 39 (Calabrèse, Owsley et al., 2016). The mean ACC we report here for the normally sighted group is smaller than 1.0 because the group includes children and older adults, whose ACC values are usually lower than the baseline values for young adults (Calabrèse, Cheong et al., 2016). For our participants aged 18–39 years (N = 33), the average ACC values were 1.02 on the chart and 0.92 on the iPad. Taken at face value, this difference seems to indicate that text is slightly less accessible on the iPad. But the computation of ACC involves averaging reading speeds across 10 print sizes. As discussed above, reading speeds on the iPad are slightly slower than those on the chart because of differences in the timing method. As a result, the corresponding ACC values are lower. One way of addressing this discrepancy between chart and iPad, and of maintaining uniformity in ACC values, would be to apply an adjustment factor to ACC measured on the iPad. In Experiment 1, participants aged 18–39 years read about 10% slower on the iPad (183 wpm) than on the chart (202 wpm), suggesting that a multiplicative factor of 1.1 should be applied to the ACC measured with the iPad to compensate for the difference in procedure with the printed MNREAD chart.
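The ACC computation and the proposed iPad correction can be sketched together. We assume, following our reading of Calabrèse, Owsley et al. (2016), that ACC is the mean reading speed over the 10 print sizes from 1.3 to 0.4 logMAR, with sizes the participant could not read counted as 0 wpm, divided by a normalizing speed of 200 wpm; treat both the constant and the hypothetical function names as assumptions to be checked against that paper and the mnreadR documentation.

```python
ACC_SIZES_LOGMAR = [round(1.3 - 0.1 * i, 1) for i in range(10)]  # 1.3, 1.2, ..., 0.4
NORMALIZING_WPM = 200.0  # assumed reference speed


def reading_accessibility_index(speeds_wpm):
    """ACC from speeds listed in order 1.3, 1.2, ... logMAR; unread trailing sizes may be omitted."""
    if len(speeds_wpm) > len(ACC_SIZES_LOGMAR):
        raise ValueError("at most one speed per ACC print size")
    # Sizes that could not be read contribute 0 wpm to the average.
    padded = list(speeds_wpm) + [0.0] * (len(ACC_SIZES_LOGMAR) - len(speeds_wpm))
    return sum(padded) / len(ACC_SIZES_LOGMAR) / NORMALIZING_WPM


def ipad_adjustment_factor(chart_wpm: float, ipad_wpm: float) -> float:
    """Multiplicative factor that rescales iPad ACC to chart-equivalent values."""
    return chart_wpm / ipad_wpm


factor = ipad_adjustment_factor(202, 183)  # Experiment 1 means, ages 18-39
print(round(factor, 2), round(0.92 * factor, 2))
```

With these values the factor is about 1.10, and applying it to the iPad ACC of 0.92 gives about 1.02, the chart value for the same age group.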

In Experiment 2, we inspected chart versus iPad app differences in a low-vision sample of participants with a wide range of visual impairment. We found that MRS, ACC, and CPS were equivalent on iPad and chart measurements. Only the RA was significantly better when measured on the digital version of the test, but by only 0.03 logMAR. Considering that the coefficient of repeatability for RA in a low-vision population was estimated to be between ± 0.036 logMAR (Maaijwee, Mulder, Radner, & Van Meurs, 2008) and ± 0.1 logMAR (Subramanian & Pardhan, 2009), the small difference we measured in the present study does not seem to be clinically important.

A main difference between the iPad app and the chart is the digital display of text compared with standard print. Recent studies have reported few significant differences in reading speed between paper and liquid crystal display (LCD) screens (Köpper, Mayr, & Buchner, 2016; Noyes & Garland, 2008), but whether reading is better on paper or on LCD screens is still a matter of debate (Kang, Wang, & Lin, 2009). To render the sentences accurately, the iPad app is designed to run on the Retina display, the LCD screen available on the iPad 3 and later versions. The Retina display has a higher pixel density (264 ppi, 2048 × 1536 pixels) than older screen versions (132 ppi, 1024 × 768 pixels). Compared with reading a printed book, reading on an iPad with a lower pixel-density screen resulted in a small but nonsignificant decrease in reading speed (Nielsen, 2010). When paragraph reading was compared on higher and lower pixel-density screens, the Retina display led to less fatigue but similar reading speed (Mayr, Köpper, & Buchner, 2017). Overall, these results suggest that reading speed on the Retina display is largely equivalent to reading hard copy, especially for MNREAD-like short sentence reading.

One disadvantage of testing with the iPad app is the reduced range of print sizes. However, the present study confirms that increasing the viewing distance is an efficient strategy for testing normally sighted individuals, compensating for the loss of the four smallest sentences. As for the one sentence missing at the large end of the print size range, our results for MRS suggest that starting the test at 6.3 M leads to accurate estimates, even for low-vision individuals.

Conclusions

Digital eye tests like the MNREAD iPad app have several advantages over chart tests. First, they are very likely to increase intertester reliability, thanks to more consistent sentence display, more accurate timing measurement, and unified methods for parameter estimation. Second, they can shorten testing time, thanks to a smoother and quicker administration paired with instantaneous presentation of the results. Such time savings can be especially valuable in clinics where many patients need to be seen. Third, they allow for potential self-administration: patients can launch and stop a trial with a simple click, automatically recording their reading time. Self-administration is a positive feature that helps motivate and engage patients during testing. Finally, a major advantage of digital reading tests is that they provide more uniform, automated methods for measuring reading performance and therefore help standardize reading assessment.

Acknowledgments

The authors would like to thank J. Stephen Mansfield and Charles Bigelow for their work on the MNREAD iPad app development, as well as Michael Crossland for his guiding comments during the app development process. The authors would also like to thank the researchers and clinicians who agreed to test the beta version of the app, providing helpful feedback and suggestions during the debug process. This work was supported by the National Institutes of Health (grant EY002934).

Commercial relationships: none.

Corresponding author: Aurélie Calabrèse.

Email: acalabre@umn.edu.

Address: Aix-Marseille University, CNRS, LPC, Marseille, France.

References

- Bailey, I. L., Lovie, J. E.. (1980). The design and use of a new near-vision chart. American Journal of Optometry and Physiological Optics, 57, 378– 387. [DOI] [PubMed] [Google Scholar]

- Baldasare, J., Watson, G. R., Whittaker, S. G., Miller-Shaffer, H.. (1986). The development and evaluation of a reading test for low vision individuals with macular loss. Journal of Visual Impairment & Blindness, 80, 785– 789. [Google Scholar]

- Bates, D., Mächler, M., Bolker, B., Walker, S.. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67, 1– 48. [Google Scholar]

- Berger, E. (2010). The iPad: Gadget or medical godsend? Annals of Emergency Medicine, 56, A21– A22. [DOI] [PubMed] [Google Scholar]

- Binns, A. M., Bunce, C., Dickinson, C., Harper, R., Tudor-Edwards, R., Woodhouse, M.,… Margrain, T. H.. (2012). How effective is low vision service provision? A systematic review. Survey of Ophthalmology, 57, 34– 65. [DOI] [PubMed] [Google Scholar]

- Black, J. M., Jacobs, R. J., Phillips, G., Chen, L., Tan, E., Tran, A., Thompson, B.. (2013). An assessment of the iPad as a testing platform for distance visual acuity in adults. BMJ Open, 3 6, e002730, doi:10.1136/bmjopen-2013-002730. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Calabrèse, A., Cheong, A. M. Y., Cheung, S.-H., He, Y., Kwon, M., Mansfield, J. S.,… Legge, G. E.. (2016). Baseline MNREAD measures for normally sighted subjects from childhood to old age. Investigative Ophthalmology & Visual Science, 57 8, 3836– 3843, doi:10.1167/iovs.16-19580. [PubMed] [Article] [DOI] [PMC free article] [PubMed] [Google Scholar]

- Calabrèse, A., Mansfield, J. S., Legge, G. E.. (2017). mnreadR, an R package to analyze MNREAD data. Retrieved from https://CRAN.R-project.org/package=mnreadR

- Calabrèse, A., Owsley, C., McGwin, G., Legge, G. E.. (2016). Development of a reading accessibility index using the MNREAD acuity chart. JAMA Ophthalmology, 134, 398– 405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Colenbrander, A., Fletcher, D. C.. (2005). The mixed contrast reading card, a new screening test for contrast sensitivity. International Congress Series 1282, 492– 497. [Google Scholar]

- Dexl, A. K., Schlögel, H., Wolfbauer, M., Grabner, G.. (2010). Device for improving quantification of reading acuity and reading speed. Journal of Refractive Surgery, 26, 682– 688. [DOI] [PubMed] [Google Scholar]

- Hahn, G. A., Penka, D., Gehrlich, C., Messias, A., Weismann, M., Hyvarinen, L.,… Trauzettel-Klosinski, S.. (2006). New standardised texts for assessing reading performance in four European languages. The British Journal of Ophthalmology, 90, 480– 484. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hazel, C. A., Petre, K. L., Armstrong, R. A., Benson, M. T., & Frost, N. A. (2000). Visual function and subjective quality of life compared in subjects with acquired macular disease. Investigative Ophthalmology & Visual Science, 41, 1309–1315.

- Jonker, S. M. R., Bauer, N. J. C., Makhotkina, N. Y., Berendschot, T. T. J. M., van den Biggelaar, F. J. H. M., & Nuijts, R. M. M. A. (2015). Comparison of a trifocal intraocular lens with a +3.0 D bifocal IOL: Results of a prospective randomized clinical trial. Journal of Cataract and Refractive Surgery, 41, 1631–1640.

- Kang, Y.-Y., Wang, M.-J. J., & Lin, R. (2009). Usability evaluation of E-books. Displays, 30, 49–52.

- Köpper, M., Mayr, S., & Buchner, A. (2016). Reading from computer screen versus reading from paper: Does it still make a difference? Ergonomics, 59, 615–632.

- Legge, G. E. (2007). Psychophysics of reading in normal and low vision. Mahwah, NJ and London: Lawrence Erlbaum Associates.

- Livingstone, I. A. T., Tarbert, C. M., Giardini, M. E., Bastawrous, A., Middleton, D., & Hamilton, R. (2016). Photometric compliance of tablet screens and retro-illuminated acuity charts as visual acuity measurement devices. PLoS One, 11, e0150676.

- Maaijwee, K., Mulder, P., Radner, W., & Van Meurs, J. C. (2008). Reliability testing of the Dutch version of the Radner Reading Charts. Optometry and Vision Science, 85, 353–358.

- MacKeben, M., Nair, U. K. W., Walker, L. L., & Fletcher, D. C. (2015). Random word recognition chart helps scotoma assessment in low vision. Optometry and Vision Science, 92, 421–428.

- Mahmood, S., Roberts, S. A., Aslam, T. M., Parkes, J., Barugh, K., Bishop, P. N., & GMAN Study Group. (2015). Routine versus as-needed Bevacizumab with 12-weekly assessment intervals for neovascular age-related macular degeneration: 92-week results of the GMAN trial. Ophthalmology, 122, 1348–1355.

- Mansfield, J. S., Ahn, S. J., Legge, G. E., & Luebker, A. (1993). A new reading-acuity chart for normal and low vision. Ophthalmic and Visual Optics/Noninvasive Assessment of the Visual System Technical Digest, 3, 232–235.

- Mayr, S., Köpper, M., & Buchner, A. (2017). Effects of high pixel density on reading comprehension, proofreading performance, mood state, and physical discomfort. Displays, 48, 41–49.

- McClure, M. E., Hart, P. M., Jackson, A. J., Stevenson, M. R., & Chakravarthy, U. (2000). Macular degeneration: Do conventional measurements of impaired visual function equate with visual disability? The British Journal of Ophthalmology, 84, 244–250.

- Nielsen, J. (2010). iPad and Kindle reading speeds. Jakob Nielsen's Alertbox. Retrieved from http://www.useit.com/alertbox/ipad-kindle-reading.html

- Noyes, J. M., & Garland, K. J. (2008). Computer- vs. paper-based tasks: Are they equivalent? Ergonomics, 51, 1352–1375.

- Patel, P. J., Chen, F. K., Da Cruz, L., Rubin, G. S., & Tufail, A. (2011). Test-retest variability of reading performance metrics using MNREAD in patients with age-related macular degeneration. Investigative Ophthalmology & Visual Science, 52, 3854–3859. doi:10.1167/iovs.10-6601

- Radner, W. (2017). Reading charts in ophthalmology. Graefe's Archive for Clinical and Experimental Ophthalmology, 255, 1465–1482.

- Radner, W., Obermayer, W., Richter-Mueksch, S., Willinger, U., Velikay-Parel, M., & Eisenwort, B. (2002). The validity and reliability of short German sentences for measuring reading speed. Graefe's Archive for Clinical and Experimental Ophthalmology, 240, 461–467.

- Ramulu, P. Y., Swenor, B. K., Jefferys, J. L., & Rubin, G. S. (2013). Description and validation of a test to evaluate sustained silent reading. Investigative Ophthalmology & Visual Science, 54, 673–680. doi:10.1167/iovs.12-10617

- Rubin, G. S. (2013). Measuring reading performance. Vision Research, 90, 43–51.

- Stelmack, J. A., Tang, X. C., Wei, Y., Wilcox, D. T., Morand, T., Brahm, K., … LOVIT II Study Group. (2017). Outcomes of the Veterans Affairs Low Vision Intervention Trial II (LOVIT II): A randomized clinical trial. JAMA Ophthalmology, 135, 96–104.

- Subramanian, A., & Pardhan, S. (2006). The repeatability of MNREAD acuity charts and variability at different test distances. Optometry and Vision Science, 83, 572–576.

- Subramanian, A., & Pardhan, S. (2009). Repeatability of reading ability indices in subjects with impaired vision. Investigative Ophthalmology & Visual Science, 50, 3643–3647. doi:10.1167/iovs.08-2823

- Tang, W., Zhuang, S., & Liu, G. (2014). Comparison of visual function after multifocal and accommodative IOL implantation. Eye Science, 29, 95–99.

- Trauzettel-Klosinski, S., Dietz, K., & IReST Study Group. (2012). Standardized assessment of reading performance: The New International Reading Speed Texts IReST. Investigative Ophthalmology & Visual Science, 53, 5452–5461. doi:10.1167/iovs.11-8284

- Virgili, G., Cordaro, C., Bigoni, A., Crovato, S., Cecchini, P., & Menchini, U. (2004). Reading acuity in children: Evaluation and reliability using MNREAD charts. Investigative Ophthalmology & Visual Science, 45, 3349–3354. doi:10.1167/iovs.03-1304

- Xu, R., & Bradley, A. (2015). IURead: A new computer-based reading test. Ophthalmic and Physiological Optics, 35, 500–513.