Abstract

Musical rhythms performed by humans typically show temporal fluctuations. While these have been characterized in simple rhythmic tasks, the nature of temporal fluctuations remains an open question when several musicians perform music jointly in all its natural complexity. To study such fluctuations in over 100 original jazz and rock/pop recordings played with and without metronome, we developed a semi-automated workflow allowing the extraction of cymbal beat onsets with millisecond precision. Analyzing the inter-beat interval (IBI) time series revealed evidence for two long-range correlated processes characterized by power laws in the IBI power spectral densities. One process dominates on short timescales (t < 8 beats) and reflects microtiming variability in the generation of single beats. The other dominates on longer timescales and reflects slow tempo variations. Whereas the latter did not show differences between musical genres (jazz vs. rock/pop), the process on short timescales showed higher variability for jazz recordings, indicating that jazz makes stronger use of microtiming fluctuations within a measure than rock/pop. Our results elucidate principles of rhythmic performance and can inspire algorithms for artificial music generation. By studying microtiming fluctuations in original music recordings, we bridge the gap between minimalistic tapping paradigms and expressive rhythmic performances.

Introduction

The art of creating music involves a balance of surprise and predictability. This balance needs to be achieved on many scales, and for many musical components like melody, dynamics, and rhythm. Such a balance is believed to be essential for making music interesting and appealing [1–5]. While musicians achieve this balance intuitively, the principles generating it remain unknown. A core hypothesis conjectures that this balance manifests itself in long-range correlations (LRCs) and self-similar structure of melody, dynamics, and rhythm. In fact, first evidence for this hypothesis was provided by Voss and Clarke [6], who identified LRCs in pitch and loudness fluctuations. More recently, LRCs were found in the rhythmic structure of Western classical music compositions [2], i.e. in written notations, where the rhythm is represented in a metrically organized, precise fashion. Such compositions may be played back in this precise fashion, e.g., by computers, but are often perceived to sound mechanical and unnatural [7]. In performed music, in contrast, musicians introduce subtle deviations from the metrically precise temporal location, which make the performance sound human.

Such microtiming deviations on the one hand are inevitable in human performances as human abilities to produce precisely timed temporal intervals are limited [8, 9]. On the other hand, they can be introduced on purpose and contribute to a musician’s individual expression. It is thus worthwhile elucidating the nature of temporal fluctuations and factors contributing to them in various musical contexts. Inferring such microtiming deviations from ready-made musical recordings is a challenge, however, because beat onsets must be determined with millisecond precision. In past studies this precision was achieved using fairly reduced settings, e.g. simple finger-tapping tasks [7, 10–20]. For those performed with metronome, LRCs were identified for microtiming deviations ($e_i$) from metronome clicks [7, 10–12]. Here, LRCs manifest themselves as power laws $P(f) \propto f^{-\beta}$, with $0.5 \lesssim \beta \lesssim 1.5$, in the power spectral density (PSD) of the $e_i$. In contrast, if the deviations $e_i$ were independent, one would expect $\beta = 0$. For unpaced tapping, i.e. tasks performed without a metronome, LRCs were recovered for tempo fluctuations, i.e. the PSD of the inter-beat interval (IBI) time series showed power laws $P(f)$ with $0.5 \lesssim \beta \lesssim 1.5$ [7, 11, 15–20]. Hennig and colleagues extended this framework to more complex rhythms (with metronome), but still in a laboratory setting. They provided evidence for LRCs of microtiming deviations, consistent with those of simple finger tapping [7]. More recently, LRCs were identified for drumming in a single pop song [5]. Together, these results may suggest that both microtiming deviations from beats and tempo fluctuations show LRCs. Detailed analyses are required to investigate this hypothesis, in particular with respect to the precise scaling properties (i.e. β), and their dependence on genres. Differences in scaling may occur, as the cognitive involvement clearly differs between simple tapping tasks and the flow experienced when making music together.

In our present study, we carry the analysis of human beat performance from the laboratory to real-world conditions of musical performances with all their complexity. To this end, we compiled beat onset time series from over 100 music recordings. To estimate the beat onset for each recording with millisecond precision, we devised a semi-automated beat extraction workflow. The resulting IBI time series allowed us to investigate both unpaced and paced recordings, and to compare their scaling properties to those from finger tapping. Making use of our large dataset, we extended our analysis to investigate different genres, jazz and rock/pop, to elucidate how genre-dependence manifests itself in the beat structure.

Based on the millisecond-precise beat time series, we could identify signatures of two processes, a clock and a motor process. Both processes influence the beat microtiming and showed similar long-range correlations. However, the motor process revealed stronger timing fluctuations within a measure for jazz compared to rock/pop. On the one hand, our results point to general dynamics of microtiming fluctuations across musical genres on long time scales, reflecting the temporal organization of musical pieces. On the other hand, the stronger fluctuations on fast time scales in jazz music might be attributed to the higher degree of freedom as compared to rock/pop.

Results

Millisecond-precise beat extraction

Human rhythmic performance can be precise down to the scale of several milliseconds [8, 9]. Therefore, our analyses required a millisecond-precise, consistent estimation of beat onsets. As this precision is not reached by any of the currently available methods, we developed a specialized semi-automated workflow.

A conceptual challenge in beat detection of original performances is that the beat is not uniquely defined. We approximated the beat by cymbal onsets, because drummers provide a rhythmic foundation, because cymbal onsets can be well separated from other instrument onsets, and because the short attack times allow for millisecond-precise onset detection. This precise onset detection is crucial for the subsequent systematic and reproducible analyses of large datasets.

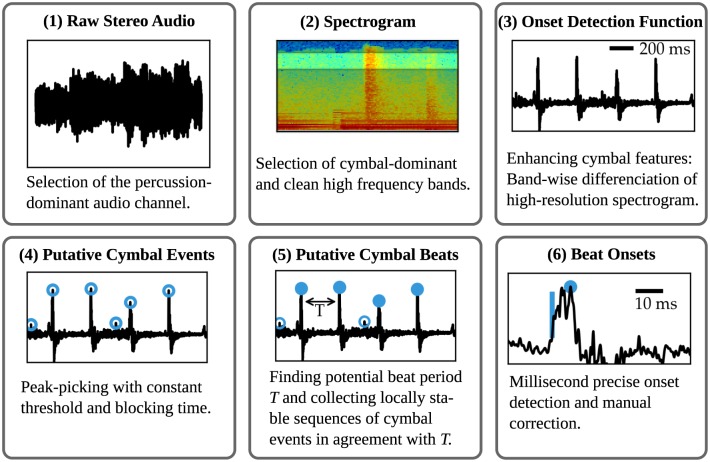

In the following, we sketch the semi-automated workflow for beat extraction (see Fig 1). More details are given in the Materials and methods section. (1) The percussion-dominated channel is selected. (2) The frequency range in which the cymbal dominates is isolated. (3,4) Using differentiation, putative cymbal events are identified. (5) Of those, the cymbal onsets that form a regular beat sequence are combined into a beat-onset time series. This step excludes cymbal onsets that are not on the regular beat. (6) To improve the temporal precision of the extracted beat onsets, the precise onset time is estimated on the rising slope of the cymbal beat. This workflow allowed us to acquire beat time series from more than 100 recordings, each comprising about 600 beat onsets. All songs we analyzed are listed in S1–S3 Tables.

Fig 1. Workflow for the estimation of the beat-onset time series from one channel of a music recording.

Human beat performance in music

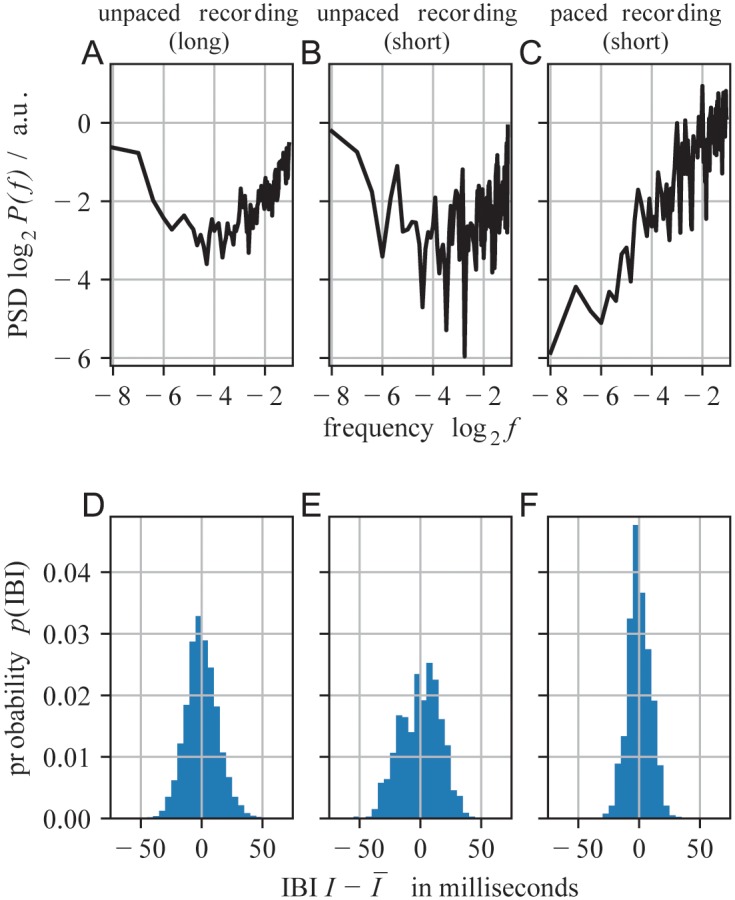

We analyzed recordings played with or without metronome. For those played with a metronome (paced recordings), we consistently found a power law for the power spectral density (PSD) of the inter-beat intervals (IBIs) (Fig 2C, sketch in Fig 3). The exponents $\beta_M$ of the motor or microtiming deviations varied across recordings, but consistently indicated long-range correlations (LRCs). Their median was $\beta_M \approx -1$ (throughout, the standard deviation is given in parentheses after the median). The exponent $\beta_M$ is negative, because the IBI time series, compared to the deviations from the metronome, represents a differentiated signal (see below). It significantly differed from an independence assumption ($\beta_M = -2$, $p < 10^{-30}$, where significance was obtained by analytical calculation of the bootstrap distribution; Fig 4A). Qualitatively, these results are consistent with those for simple finger tapping tasks, indicating that a similar process underlies beat generation in simple tapping tasks as well as in music.

Fig 2.

A-C. Power spectral densities (PSD) of inter-beat intervals (IBIs) for unpaced and paced (metronome-guided) recordings. “Long” refers to studio drum recordings of about 30 min and “short” to jazz and rock/pop recordings of typically ≈ 3 min (A: long jazz recording; B: Buster Smith, Kansas City Riffs; C: Bee Gees, How Deep Is Your Love). D-F. IBI distribution for the same example recordings.

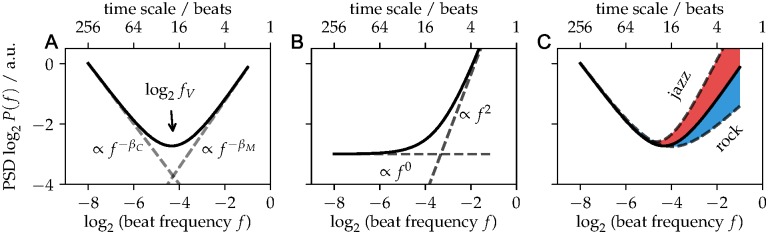

Fig 3. Scheme of the power-spectral density (PSD) of inter-beat interval (IBI) time series.

A. A long-range correlated clock process and a long-range correlated motor process (dashed lines) superpose to a V-shaped PSD of the IBIs (solid line). The characteristic frequency (or time scale, see upper axis) at the minimum determines the turnover between the clock-dominated and motor-dominated regime. B. Same as panel A, but assuming that both the clock and the motor process are uncorrelated. C. Sketch of genre-induced differences in the PSD.

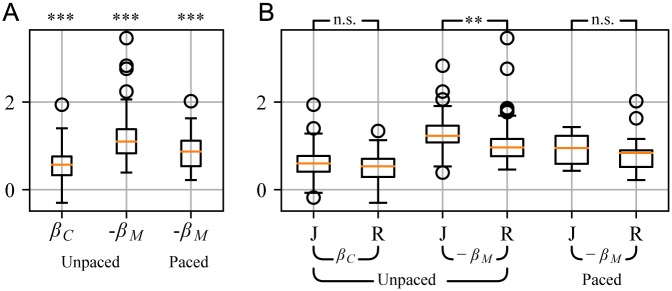

Fig 4. Scaling exponents (β) obtained from analyzing the PSDs of IBI time series.

A. Neither the clock nor the motor process is random but clearly long-range persistent, i.e. $\beta_C > 0$, $-\beta_M < 2$. *** denotes $p \ll 10^{-3}$ (significance obtained by bootstrapping). B. Genre-dependence of the scaling exponents. The motor process showed significantly stronger long-range persistence in rock/pop (R) than in jazz (J). The box plot depicts the median in red, boxes at the first and third quartile, whiskers at 1.5 ⋅ IQR (interquartile range), and circles represent outliers. ** denotes p = 0.001, and n.s. denotes not significant.

IBI time series from unpaced music recordings showed characteristic V-shapes for the PSD (Fig 2A and 2B). Such V-shapes can be generated by the superposition of two stochastic processes, each of them contributing to the PSD. In analogy to finger-tapping experiments, we interpret the two processes as a “clock process” C governing temporal interval estimation, and a “motor process” M governing the motor execution of a planned interval (Fig 3A) [13, 15, 16]. In this general framework, an inter-beat interval $I_i$ is generated by a clock estimate $C_i$ and motor deviations $M_i$, which represent the microtiming deviations from the intended clock interval $C_i$ [13, 15, 16, 18]:

$$I_i = C_i + M_i - M_{i-1} \tag{1}$$

The PSD of the intervals I is thus generated by the PSDs of the two stochastic processes, C and M. The clock process contributes with a power law $P(f) \propto f^{-\beta_C}$, where $0.5 \le \beta_C \le 1.5$ for long-range correlations. For an uncorrelated process one expects $\beta_C = 0$ (Fig 3A and 3B). The motor process M enters I as a difference, and hence contributes to the PSD with $-1.5 \le \beta_M \le -0.5$ for long-range correlations, whereas $\beta_M = -2$ would reflect an uncorrelated process (Fig 3A and 3B). As the clock and motor processes contribute to I with exponents of opposite sign, $\beta_C > 0$ and $\beta_M < 0$, respectively, C dominates the PSD at low frequencies, whereas M dominates at high frequencies. This generates the characteristic V-shape and allows both scaling exponents to be estimated from the PSD of the IBIs (Figs 2A, 2B and 3A). When the rhythm is performed with a metronome (paced), the dynamics of the clock process is strongly confined, and the motor process alone dominates the PSD over the entire frequency range, i.e. one observes a single power law regime in the PSD of the IBI time series, as reported above (Fig 2C) [7, 15].

We systematically quantified the scaling exponents $\beta_C$ and $\beta_M$ for unpaced recordings (Figs 4 and 5). The clock process showed LRCs with $\beta_C \approx 0.6$. It significantly differed from an independent process, which would be characterized by $\beta_C = 0$ ($p = 10^{-29}$, bootstrap). Our results indicate that tempo fluctuations across the entire recording do not occur independently, but ultimately are related to fluctuations at any other time. The motor process contributed with $\beta_M \approx -1$, and significantly differed from an independent process as well ($\beta_M = -2$, $p = 10^{-29}$, bootstrap).

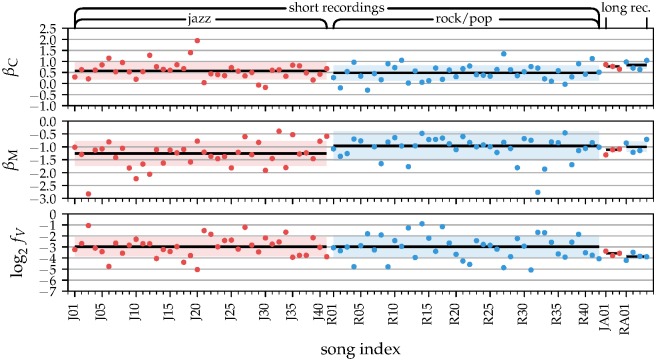

Fig 5. Fit parameters of the unpaced musical recordings by genre (jazz: red, rock/pop: blue).

Both the music performances and our studio recordings showed consistent tendencies (median (SD) given as black lines (colored ranges) for the individual parameters). Most prominently, the motor persistence βM is genre-dependent.

These results can be interpreted as follows: As the local tempo, governed by the clock process, needs to be maintained, any deviation from the local clock or metronome shortens one interval and at the same time lengthens the other, resulting in anti-correlations in I, and negative values of $\beta_M$. Last, the turnover between the clock- and motor-dominated regimes was generally at about $\log_2 f_V \approx -3$. That is, the motor process dominated the PSD only on time scales of up to about $2^3 = 8$ beats or a few measures, while for time scales spanning more than about 8 beats the clock process dominated.
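The anti-correlation argument can be illustrated with a minimal simulation. The sketch below assumes, purely for illustration, an uncorrelated (white Gaussian) motor process and a constant clock interval; this is a simplification, since both processes were found to be long-range correlated in the recordings. All parameter values and the helper name `lag1_autocorrelation` are hypothetical. Because each motor deviation lengthens one interval and shortens the neighboring one, the lag-1 autocorrelation of the intervals comes out close to −0.5.

```python
import random
import statistics

def lag1_autocorrelation(x):
    """Sample autocorrelation of the sequence x at lag 1."""
    mean = statistics.fmean(x)
    num = sum((a - mean) * (b - mean) for a, b in zip(x[:-1], x[1:]))
    den = sum((a - mean) ** 2 for a in x)
    return num / den

rng = random.Random(0)     # fixed seed for reproducibility
n_beats = 20000            # illustrative length, far longer than a song
clock_ms = 300.0           # constant clock interval (assumption)
motor_sd_ms = 10.0         # SD of the motor deviations (assumption)

# Motor deviation of every beat onset, drawn independently (white noise).
motor = [rng.gauss(0.0, motor_sd_ms) for _ in range(n_beats + 1)]

# Each interval is the clock interval plus the difference of two deviations.
ibi = [clock_ms + motor[i + 1] - motor[i] for i in range(n_beats)]

r1 = lag1_autocorrelation(ibi)
print(f"lag-1 autocorrelation of the IBIs: {r1:.3f}")
```

Replacing the white motor noise by a long-range correlated series would weaken this anti-correlation, which corresponds to exponents between −2 and 0 in the spectral picture.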

Interestingly, unpaced and paced recordings only differed slightly in their IBI distributions p(IBI) (Fig 2D–2F). Despite the absence of a metronome, unpaced recordings showed only slightly broader p(IBI) (σ = 13.1(3.8) ms and σ = 11.5(4.3) ms, respectively, p = 0.067, d = 0.393). Moreover, for both conditions, the microtiming deviations showed similar scaling properties, i.e. the βM did not differ between the conditions (p > 0.05). In contrast, the characteristic V-shape was clearly present for the PSD of I when recordings were played without a metronome, whereas those played with metronome showed a single power law, because the metronome presumably suppressed or replaced the clock process (Fig 2). This result supports the hypothesis of two independent processes, one being suppressed when beats are performed under pacing by a metronome.

As many recordings are fairly short (about 3 minutes, median of 580 beats), the effect of spectral averaging was small and thus the PSDs were noisy. To obtain PSDs from longer time series, we recorded seven unpaced, genuine drum performances from a professional musician in a studio setup, lasting 20 to 30 minutes each and comprising 3189(612) beats. For these long time series, the PSDs were very clear due to better spectral averaging (Fig 2A). The parameters obtained from these PSDs ($\beta_C$, $\beta_M$, and $\log_2 f_V$) were consistent with those of the short musical recordings analyzed above. As the short and long recordings did not differ significantly in any of the parameters, we merged both for the analysis of genre-dependence in the following sections. Note that we obtained the same results when we considered the short recordings alone, and the same as a trend for the seven long recordings, which alone, however, would not be numerous enough to reach significance.

Genre-dependence

Do the scaling properties of the clock and motor process depend on the musical genre or are they a general feature of music? With the highly precise IBI time series from jazz and rock/pop music we were able to test for genre-dependence.

Most interestingly, we found that jazz recordings showed smaller βM for the unpaced songs than rock/pop recordings (Fig 4B, p = 0.001, d = 0.509, restricted permutation test (RPT), for details on the statistical tests see methods). The medians for jazz and rock/pop are shown in Fig 4B. The same trend was observed for the paced songs. In contrast, jazz and rock/pop did not differ in the clock exponent βC (Fig 4B). These results indicate that in jazz, musicians make more use of microtiming deviations on very short time scales, i.e. they introduce stronger deviations from the local tempo. In rock/pop, musicians play with a more regular beat on these short time scales. On longer time scales, where the clock process dominates, the tempo variations do not differ between jazz and rock/pop, indicating that the overall musical structure from short motifs to long blocks does not differ between these genres, and we hypothesize that other genres might show similar LRCs for the clock process as well.

Basic beat variability

In addition to the very prominent genre-dependence in the motor process, we found that on average the beat in jazz was slightly slower than in rock/pop. In detail, the median IBI in jazz (rock/pop) was 330 ms (288 ms) for the unpaced recordings, and 400 ms (282 ms) for the paced recordings (p = 0.013, d = 0.574, RPT). More interestingly, the variability (i.e. the standard deviation of I) was higher for jazz than for rock/pop; it was 14.3 ms (11.9 ms) for the unpaced performances, and 14.6 ms (9.2 ms) for the paced performances (p = 0.001, d = 0.745, RPT). It is to be expected that faster performances show less variability (smaller SD). To account for this, we compared the tempo-normalized variability of the IBI time series, i.e. the Fano factor $F = \sigma^2 / \tilde{I}$, where σ denotes the standard deviation and $\tilde{I}$ the median of I. Consistent with the differences above, the Fano factor was higher in jazz than in rock/pop across the paced and unpaced recordings (p = 0.016, d = 0.294, RPT, Bonferroni corrected for multiple comparisons), although the effect size was smaller. When analyzing the paced and unpaced recordings separately, both showed the same trend (p = 0.049, d = 1.211 for paced, p = 0.132, d = 0.182 for unpaced, Bonferroni corrected for multiple comparisons); however, the effect size was more pronounced for the paced songs. Together, these results suggest that jazz makes more use of temporal variability, especially when recordings are played with a metronome (paced), i.e. when only the motor, but not the clock process can be used as an expressive component of music.

Discussion

Interestingly, our results for rhythm generation in music revealed evidence for the same two underlying processes as inferred for simple finger tapping tasks: a clock and a motor process, both of them long-range correlated. In both settings, music and finger tapping, the clock process disappeared when a metronome was used, and the remaining motor process showed a single power law with slope around unity. However, although the LRCs in both settings have similar characteristics, their origin may be very different: When a piece of music is performed, it has structure on all scales, from motifs, phrases and themes to verses and movements. This structure is reflected in the tempo and is likely to underlie the observed long-range correlations. Such structure is absent in finger tapping tasks. Those tasks are somewhat dull for the subjects, and hence it may well be that their mind is wandering during tapping. As a consequence, concentration may wax and wane, certainly on many different scales as well, thereby generating LRCs. Studies relating neural activity to motor precision and perception hint in that direction [12, 21]. Smit et al., for example, showed a clear correlation between βC and the scaling exponent derived from neural alpha oscillations [12]. Hence, although the signatures of the beat time series are similar for music and finger tapping, their origin may differ vastly: one lying in the multi-scale structure of a musical composition, which aims at keeping us captivated, the other making our mind wander owing to the dullness of tapping a simple beat for minutes in a row. Such waxing and waning of concentration and performance can be tested in future studies by simultaneously measuring markers of attention in brain activity or pupil diameter and relating this to the microtiming deviations in finger-tapping tasks; in songs, the microtiming deviations might be related to the structure of each song.

For beat generation in music as well as in simple tapping tasks, the generative models still remain unknown. In past studies, LRCs in finger tapping were attributed either generically to models for 1/f noise, e.g. long-range correlated (critical) brain dynamics or the superposition of processes on different time scales [12, 22–28]. Alternatively, they were explained by more mechanistic models, such as the linear phase correction model [29], the shifting strategy model [30], or the hopping model [31], as summarized by Torre et al. [15].

We found that both the clock and the motor process show LRCs, characterized by βC ≈ 0.6 and βM ≈ −1, respectively. Are these results for beat in music consistent with those found for finger tapping? Early studies on finger tapping assumed that both the motor and the clock process showed uncorrelated Gaussian noise (βC ≈ 0, βM ≈ −2), but never tested that explicitly by evaluating e.g. the PSD [13, 18]. First analyses of the PSD showed 0.9 < βC < 1.2 for the clock process [16, 19]. The exponent βM was not fitted but assumed to reflect an uncorrelated process (βM ≈ −2), although the spectra were clearly flatter, hinting at LRCs in the motor process as well. For the clock process, βC ≈ 1 was found consistently in various simple finger tapping tasks [12, 16–19]. When two subjects tapped in synchrony, βC was a bit smaller (βC ≈ 0.85), and in an exemplary pop song, βC was found to be even smaller (βC ≈ 0.56), which is very similar to our results on the over 100 music recordings. Overall, our study, together with the past ones, suggests that finger-tapping tasks have a larger βC than beat generation in music. This indicates stronger persistence of the tempo drifts in tapping compared to music pieces. The origin of this difference, though, remains unknown. It is conceivable that professional musicians are better trained at keeping a constant tempo, whereas the subjects in the tapping tasks typically did not have any training.

Regarding the motor process, results are very scarce for unpaced finger tapping. For paced finger tapping, the βM were typically estimated in a different manner. Instead of the IBI time series, the deviations from the metronome, i.e. the error time series, were used. For those, the exponent is expected to differ by 2, i.e. $\beta_M = \beta - 2$, where β denotes the exponent of the error time series. We found βM ≈ −1, both for paced and unpaced music recordings. For tapping, earlier studies reported βM ≈ −1.5 or βM ≈ −1.3 [7, 10, 11, 32]. Hence, fluctuations on short time scales are stronger for tapping than for music beat generation. Whether these differences are attributed to the different cognitive involvements, or whether they reflect differences between lay people’s tapping performance and professional musicians’ beat generation, remains an open question.

Materials and methods

Datasets

All datasets are available in the supplementary material S1 Dataset.

Dataset 1: Real-world musical performances

We analyzed in total 100 recordings (47 jazz and 53 rock/pop), listed in S1–S3 Tables. Of these recordings, 9 jazz and 13 rock/pop recordings were played with metronome (“paced recordings”). The recordings are denoted by J** and R** with ** denoting a consecutive (arbitrary) number of the jazz or rock/pop song, respectively. All recordings satisfied the following criteria:

- The cymbals were clearly audible even when other high-pitched sounds were interfering.
- The cymbals’ main rhythmic function was pace-keeping, i.e. we discarded recordings where the cymbals only occurred occasionally or were used in a mainly expressive way, where the cymbal patterns frequently changed, or where drum-play was virtuoso in general.
- The audio quality for MP3-encoded recordings was at least 320 kBit/s.

Dataset 2: Experimental performances

To obtain a complementary dataset, we asked a professional drummer to play jazz and rock/pop music as genuinely as possible on his own and in absence of a metronome. The drummer gave informed, verbal consent that we use the recording for timing analysis.

The drummer was free in all musical decisions like tempo, rhythms and musical structure but was asked to avoid the crash cymbal and not to interrupt his performance. Additionally he was aware of the fact that we focused on the cymbals in our analysis and thus paid attention to use them consistently.

The drummer was a professional musician with a conservatory degree in drumming, and long standing experience with live and studio jazz performances. We obtained 7 drum performances in total (3 jazz and 4 rock/pop) with a length of 20–30 minutes each.

A setup with six drum microphones (Shure PGA Drumkit 6) was used to record the ride cymbal, hi-hat, the toms, the snare and the bass drum separately. In order to reduce cross-talk, we aligned each of the two overhead microphones (Shure PGA 81) to point towards the hi-hat and ride cymbal surface within a close distance (≈5 cm) and away from the other drums.

For all these recordings and performances, we estimated the beat time series as described below.

Time series extraction

In the following we detail our semi-automated workflow for millisecond-precise, reproducible beat extraction. It consists of six transformation and refinement steps in order to obtain high-precision cymbal beat time series from the initial audio signal.

(1) From the stereo audio signal, recorded at a sampling frequency of 44.1 kHz, the percussion-dominant channel was isolated (Fig 1(1)). It is denoted by ξ(t′), where t′ is the discrete time sampled at about 0.02 ms.

(2) To detect the onsets of the cymbal, we used a time-frequency representation of ξ(t′). More specifically, we calculated the short-time Fourier transform (STFT) of ξ(t′), using in the time domain a window size of 128 samples (≈3 ms) and a step size of 8 samples (≈0.2 ms), with each window smoothed by a Hann function.

In the frequency domain this results in 64 bands of fNyquist/64 ≈ 345 Hz. Hence for each time step t (corresponding to 8 samples of t′) and frequency window k, we obtained the spectrogram S(k, t). The cymbal was most prominent in the band from ≈15 kHz to ≈19 kHz. For higher frequencies, MP3 compression artifacts distorted the signal, and for lower frequencies, other instruments interfered. The precise values of the frequency band depended on the specific piece and were adjusted if necessary.
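The numbers quoted in steps (1) and (2) follow directly from the sampling frequency and the STFT settings. The short check below recomputes them; the helper `band_index` and its convention (bands counted from 0, each band spanning one band width) are assumptions made for illustration only.

```python
fs_hz = 44100            # sampling frequency stated in step (1)
window = 128             # STFT window length in samples
hop = 8                  # STFT step size in samples
n_bands = window // 2    # 64 frequency bands up to the Nyquist frequency

window_ms = 1000 * window / fs_hz      # duration of one window
hop_ms = 1000 * hop / fs_hz            # duration of one time step
band_hz = (fs_hz / 2) / n_bands        # width of one frequency band

def band_index(freq_hz):
    """Index of the band that contains freq_hz (bands counted from 0)."""
    return int(freq_hz // band_hz)

# Band indices covering the cymbal range of roughly 15 kHz to 19 kHz.
cymbal_bands = range(band_index(15000), band_index(19000) + 1)

print(f"window {window_ms:.2f} ms, step {hop_ms:.3f} ms, band {band_hz:.1f} Hz")
print(f"cymbal bands {cymbal_bands.start}..{cymbal_bands.stop - 1}")
```

With these settings, 51 time steps correspond to 51 ⋅ 8 / 44100 s ≈ 9.3 ms, which matches the averaging window used in step (3).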

(3) For every frequency band k, a rise in power, potentially indicating a cymbal onset, was determined by subtracting the average power of the past 9.3 ms (i.e. 51 time steps) from the current sample:

$$S'(k, t) = S(k, t) - \frac{1}{51} \sum_{j=1}^{51} S(k, t - j)$$

The onset detection function y(t) is the average of S′(k, t) over the cymbal-dominated frequency bands k from ≈15 kHz to ≈19 kHz.
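Step (3) can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the spectrogram is assumed to be a list of bands, each a list of power values per time step, and the function name `onset_detection_function` as well as the handling of the first 51 steps (set to zero) are assumptions.

```python
def onset_detection_function(spec, cymbal_bands, n_past=51):
    """Average over bands of (current power - mean power of n_past earlier steps).

    spec[k][t] is the power in band k at time step t. For the first n_past
    steps the history is incomplete, so the output is set to 0 there.
    """
    n_steps = len(spec[0])
    y = [0.0] * n_steps
    for t in range(n_past, n_steps):
        total = 0.0
        for k in cymbal_bands:
            baseline = sum(spec[k][t - n_past:t]) / n_past
            total += spec[k][t] - baseline
        y[t] = total / len(cymbal_bands)
    return y

# Toy spectrogram: 3 bands, silence followed by a sudden rise at step 100.
toy = [[0.0] * 100 + [1.0] * 100 for _ in range(3)]
y = onset_detection_function(toy, cymbal_bands=[0, 1, 2])
onset_step = max(range(len(y)), key=y.__getitem__)
print(onset_step)
```

In the toy example the detection function peaks exactly at the step where the power rises and decays back to zero once the trailing average has caught up.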

(4) To extract putative cymbal events, we applied a simple peak-picking algorithm: we first applied a threshold ythresh = 0.07 ⋅ max{y(t)} and then discarded all but the maximal value of y(t) within any time window of size Tblock = 70 ms. This resulted in a minimum interval of 2 ⋅ Tblock between local maxima. Occasionally the threshold had to be manually lowered.
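One possible reading of this peak-picking step is sketched below. The greedy strategy (accept the tallest samples first, discard anything within one block of an accepted peak) and the name `pick_peaks` are assumptions; the exact windowing used in the study may differ, in particular with respect to the stated minimum interval of 2 ⋅ Tblock.

```python
def pick_peaks(y, step_ms, rel_threshold=0.07, t_block_ms=70.0):
    """Return indices of putative events in the onset detection function y.

    Samples below rel_threshold * max(y) are ignored. Of the remaining
    samples, the largest are accepted first, and any sample within
    t_block_ms of an already accepted one is discarded.
    """
    threshold = rel_threshold * max(y)
    block = t_block_ms / step_ms                        # block size in steps
    candidates = [i for i, v in enumerate(y) if v >= threshold]
    candidates.sort(key=lambda i: y[i], reverse=True)   # tallest first
    accepted = []
    for i in candidates:
        if all(abs(i - j) > block for j in accepted):
            accepted.append(i)
    return sorted(accepted)

# Toy signal with 1 ms steps: two clear peaks and a weaker one 30 ms after the first.
y = [0.0] * 500
y[100], y[130], y[400] = 1.0, 0.5, 0.8
peaks = pick_peaks(y, step_ms=1.0)
print(peaks)
```

The weaker peak at 130 ms is suppressed because it falls within the 70 ms block around the stronger peak at 100 ms.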

(5) To exclude all cymbal events that are not part of the beat, we first estimated the beat period T. To this end, we calculated the intervals δt between each putative cymbal event and the m = 2 following cymbal events. The beat period T then manifested as a strong peak in a histogram of the δt within a range [0 ms; 1000 ms].
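The histogram-based estimate of the beat period can be sketched as follows. The bin width of 10 ms, the use of the median inside the most populated bin, and the function name `estimate_beat_period` are assumptions for illustration; the text only specifies m = 2 and the range of 0 to 1000 ms.

```python
from collections import Counter
import statistics

def estimate_beat_period(events_ms, m=2, bin_ms=10.0, max_ms=1000.0):
    """Estimate the beat period as the mode of inter-event intervals.

    For every event, the intervals to the m following events are collected.
    Intervals up to max_ms are histogrammed with bins of width bin_ms
    (the bin width is an assumption), and the median interval inside the
    most populated bin is returned.
    """
    events = sorted(events_ms)
    intervals = []
    for i, t in enumerate(events):
        for later in events[i + 1:i + 1 + m]:
            dt = later - t
            if 0 < dt <= max_ms:
                intervals.append(dt)
    counts = Counter(int(dt // bin_ms) for dt in intervals)
    best_bin = max(counts, key=counts.get)
    in_bin = [dt for dt in intervals if int(dt // bin_ms) == best_bin]
    return statistics.median(in_bin)

# Toy example: a regular 300 ms beat plus a few off-beat cymbal hits.
beat = [300.0 * i for i in range(50)]
off_beat = [300.0 * i + 150.0 for i in (5, 17, 30)]
period = estimate_beat_period(beat + off_beat)
print(period)
```

Considering more than one following event (m ≥ 2) keeps the beat interval visible in the histogram even where an off-beat hit sits between two beats.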

The local rhythmic structure of the song was obtained by plotting the δt versus the time of the corresponding cymbal event. In this representation, regions of the song with, e.g., fainter or missing cymbals appeared as sparsely populated regions. For such pieces, the procedure described above was repeated with a lower threshold. If this led to many false detections, we increased the default value of m to 3 or 4.

Having estimated the beat period T, we grouped the cymbal events into labeled sequences that were locally in agreement with T: Two cymbal events were assigned the same label if their time difference was within T ± τ. τ was set to 35 ms and adjusted if necessary. These labeled sequences were manually assembled into full beat time series. Only sequences of length 256 or longer were used for further analysis, apart from 3 slightly shorter time series (see below).
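The grouping rule can be illustrated with a small sketch. The backward search and the function name `label_sequences` are assumptions; in the study the resulting sequences were additionally assembled by hand, which is not modeled here.

```python
def label_sequences(events_ms, period_ms, tol_ms=35.0):
    """Assign a sequence label to every event.

    An event inherits the label of an earlier event if the two are
    separated by period_ms within +/- tol_ms; otherwise it starts a new
    sequence. Returns the sorted events and their labels.
    """
    events = sorted(events_ms)
    labels = []
    next_label = 0
    for i, t in enumerate(events):
        label = None
        for j in range(i - 1, -1, -1):       # search backwards in time
            dt = t - events[j]
            if dt > period_ms + tol_ms:
                break                        # too far back, stop searching
            if abs(dt - period_ms) <= tol_ms:
                label = labels[j]
                break
        if label is None:
            label = next_label
            next_label += 1
        labels.append(label)
    return events, labels

# Toy example: a 300 ms beat with one off-beat hit at 450 ms.
events, labels = label_sequences([0.0, 300.0, 450.0, 600.0, 900.0], period_ms=300.0)
print(labels)
```

The off-beat event receives its own label and can therefore be excluded when the beat time series is assembled.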

The steps described up to this point needed about one minute of quality checking per recording. They were optimized to quickly validate whether a sufficiently long sequence of beats could be extracted reliably. In the following, we describe how the millisecond-precise onsets were extracted for these 107 recordings.

(6) First, all of the putative beat time series were checked for validity and corrected manually if necessary. Then we determined with millisecond precision the physical onset time ti as the time where the onset detection function y(t) (see step 3) first rose above baseline (the blue line in panel (6) of Fig 1 indicates the estimated physical onset time ti). ti is expected to be at most 50 ms before the corresponding putative cymbal event. Hence, starting 50 ms before that event, we scanned the entire window to find the last t for which y(t) exceeded its own preceding 5 ms baseline, i.e. for which y(t) > max{y(t − 5 ms), …, y(t − 1 sample)} is fulfilled. In a few pieces, e.g. with prominent rim-shots, which result in multiple closely spaced local maxima, the most reliable type of maximum was used by defining a target interval in which y(ti) was expected to lie. Typically, the correct onset times were unambiguously visible in the spectrograms. These automatically detected onset times ti were all checked audio-visually and adjusted if necessary.

Time series analysis

We calculated the power spectral density (PSD) of the inter-beat interval (IBI) time series, i.e. the temporal difference between two successive beat onsets, $d_i = t_{i+1} - t_i$. Here, any missing $t_i$ was handled as NaN. The IBIs were detrended with a polynomial of degree 3. Afterwards, the NaNs were discarded, because this procedure leads to a better estimate of the PSD. Time series with fewer than 256 data points were centered and zero-padded; this applied to three out of the 107 time series (R16, R32, R48), of which R48 was the shortest with N = 194.

To estimate the exponents, we applied the standard Welch PSD method introduced in [33] with window size Nwin = 256. To suppress spectral leakage, each segment was multiplied with a Hann window $w_n = \frac{1}{2}\left[1 - \cos\left(\frac{2\pi n}{N_{\mathrm{win}} - 1}\right)\right]$, where n denotes the index n = 0, 1, …, Nwin − 1. The overlap was set to Noverlap ≈ Nwin/2, i.e. first the number of windows fitting in the time series of length N was calculated and the overlap was adjusted to cover the whole time series instead of the next-smaller multiple of Nwin/2.
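A Welch-type estimate with an adjusted overlap can be sketched in plain Python as follows. This is one possible reading of the overlap rule (segment starts spread evenly so that the whole series is covered, giving an overlap of at least Nwin/2); the symmetric Hann form, the normalization, and the names `hann` and `welch_psd` are assumptions. In practice a library routine would normally be used instead of the explicit Fourier sum.

```python
import cmath
import math

def hann(n_win):
    """Symmetric Hann window of length n_win."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / (n_win - 1)) for n in range(n_win)]

def welch_psd(x, n_win=256):
    """Average periodogram over Hann-windowed, overlapping segments.

    Segment starts are spread evenly from the first sample to the last
    possible start, so the whole series is covered.
    Returns (frequencies in cycles per beat, PSD), zero frequency excluded.
    """
    n = len(x)
    if n < n_win:
        raise ValueError("time series shorter than one window")
    span = n - n_win
    n_seg = math.ceil(span / (n_win / 2)) + 1 if span > 0 else 1
    starts = [round(k * span / (n_seg - 1)) for k in range(n_seg)] if n_seg > 1 else [0]
    w = hann(n_win)
    norm = sum(v * v for v in w)
    # Precomputed factors exp(-2*pi*i*j/n_win) of the discrete Fourier transform.
    twiddle = [cmath.exp(-2j * math.pi * j / n_win) for j in range(n_win)]
    half = n_win // 2
    psd = [0.0] * half
    for s in starts:
        seg = x[s:s + n_win]
        mean = sum(seg) / n_win
        seg = [(v - mean) * wi for v, wi in zip(seg, w)]   # center, then window
        for f in range(1, half + 1):
            coeff = sum(v * twiddle[(f * j) % n_win] for j, v in enumerate(seg))
            psd[f - 1] += abs(coeff) ** 2 / (norm * n_seg)
    freqs = [f / n_win for f in range(1, half + 1)]
    return freqs, psd

# Toy check: a sinusoid with a period of 8 beats peaks at f = 1/8 per beat.
x = [math.sin(2 * math.pi * 0.125 * i) for i in range(600)]
freqs, psd = welch_psd(x)
peak_freq = freqs[psd.index(max(psd))]
print(peak_freq)
```

For a series of 600 beats this yields four segments of 256 beats each, which illustrates why spectral averaging is limited for recordings of about three minutes.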

For unpaced performances, we fitted a V-shaped PSD (see Fig 3) using a superposition of two power laws with opposite-signed scaling parameters βC and βM:

$$P(f) = P_C\, f^{-\beta_C} + P_M\, f^{-\beta_M} \tag{2}$$

where βC putatively quantifies how the clock process is correlated over time, while βM quantifies how the “microtiming deviation” from the beat or “motor process” is (anti)correlated over time. PC and PM describe the power of the clock and motor components, respectively. As the exponents of the clock and the motor components have opposite sign, each of them dominates one side of the spectrum, and the turnover frequency, i.e. the resulting minimum of P(f), is denoted by fV (Fig 3A). fV is a function of the exponents and power of the motor and clock components, which follows from setting dP/df = 0 in Eq (2):

$$f_V = \left(\frac{\beta_C P_C}{-\beta_M P_M}\right)^{1/(\beta_C - \beta_M)}$$
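For a V-shaped spectrum built from two power laws, P(f) = PC f^(−βC) + PM f^(−βM) with βC > 0 > βM, the position of the minimum can be written in closed form by setting the derivative to zero. The sketch below evaluates this expression and cross-checks it numerically on a frequency grid; the parameter values are illustrative only and are not taken from the recordings, and the function names are hypothetical.

```python
def v_shaped_psd(f, p_c, beta_c, p_m, beta_m):
    """Sum of a clock and a motor power law, P(f) = P_C f^-beta_C + P_M f^-beta_M."""
    return p_c * f ** (-beta_c) + p_m * f ** (-beta_m)

def turnover_frequency(p_c, beta_c, p_m, beta_m):
    """Frequency at which the V-shaped PSD is minimal (needs beta_c > 0 > beta_m)."""
    if not (beta_c > 0 > beta_m):
        raise ValueError("expected beta_c > 0 and beta_m < 0")
    return (beta_c * p_c / (-beta_m * p_m)) ** (1.0 / (beta_c - beta_m))

# Illustrative parameters only: exponents of the order reported in the text,
# amplitudes chosen arbitrarily so that the minimum lies well below 0.5 per beat.
p_c, beta_c, p_m, beta_m = 1.0, 0.6, 30.0, -1.0
f_v = turnover_frequency(p_c, beta_c, p_m, beta_m)

# Numerical cross-check on a fine logarithmic grid from 2^-10 to 2^-1 per beat.
grid = [2 ** (-10 + 9 * i / 20000) for i in range(20001)]
f_min = min(grid, key=lambda f: v_shaped_psd(f, p_c, beta_c, p_m, beta_m))
print(f_v, f_min)
```

The closed-form value and the grid minimum agree to within the grid resolution, which is a quick sanity check when a fitted turnover frequency looks implausible.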

When fitting all the free parameters θ = (fV, βC, βM) of the spectrum, we aimed at weighting the motor and clock contributions equally. To this end, we assumed a turnover frequency $\tilde{f}_V$ and weighted the fit residuals on both sides of $\tilde{f}_V$ equally. This results in a weighting function

$$w(f) \propto \begin{cases} 1/N_- & \text{for } f < \tilde{f}_V \\ 1/N_+ & \text{for } f > \tilde{f}_V \end{cases}$$

where N− (N+) denotes the number of frequency bins f that are smaller (larger) than $\tilde{f}_V$. The residual between model and data, weighted with w(f), was minimized using the Broyden-Fletcher-Goldfarb-Shanno algorithm [34]. To initialize the minimization, for each trial each side of the log-transformed spectrum was approximated by a linear relationship, using the Theil-Sen method [35]. As $\tilde{f}_V$ is not known a priori, we scanned it over ten equidistant values. For each time series we thus obtained ten parameter sets θ. Note that $\tilde{f}_V$ was only used to define the weighting function w(f), while fV proper is a free parameter. We still used different $\tilde{f}_V$, because it allowed for an estimate of the variability of the parameters θ.
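The Theil-Sen initialization can be sketched as follows: each side of the spectrum is approximated by a straight line in log-log space, whose slope is the median of all pairwise slopes. The function names, the split frequency argument `f_split`, and the toy spectrum are assumptions for illustration; the subsequent minimization of the weighted residual is not shown.

```python
import math
import statistics
from itertools import combinations

def theil_sen_exponent(freqs, psd):
    """Estimate beta in P(f) ~ f^-beta as minus the Theil-Sen slope in log-log space.

    The Theil-Sen slope is the median of the slopes between all pairs of
    points, which makes it robust against outliers in a noisy spectrum.
    """
    pts = [(math.log2(f), math.log2(p)) for f, p in zip(freqs, psd)]
    slopes = [(y2 - y1) / (x2 - x1)
              for (x1, y1), (x2, y2) in combinations(pts, 2) if x2 != x1]
    return -statistics.median(slopes)

def initial_exponents(freqs, psd, f_split):
    """Separate exponent estimates for the two sides of an assumed turnover."""
    low = [(f, p) for f, p in zip(freqs, psd) if f < f_split]
    high = [(f, p) for f, p in zip(freqs, psd) if f > f_split]
    beta_c = theil_sen_exponent(*zip(*low))
    beta_m = theil_sen_exponent(*zip(*high))
    return beta_c, beta_m

# Toy spectrum: exact V-shape built from two power laws meeting at f = 1/8.
freqs = [k / 256 for k in range(1, 129)]
psd = [max((f * 8) ** -0.6, (f * 8) ** 1.0) for f in freqs]
beta_c0, beta_m0 = initial_exponents(freqs, psd, f_split=1 / 8)
print(round(beta_c0, 3), round(beta_m0, 3))
```

On the noise-free toy spectrum the two initial estimates recover the exponents used to build it, 0.6 for the low-frequency side and −1 for the high-frequency side.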

In the case of the paced (metronome-guided) recordings we expected the clock component to be missing. As a consequence, the PSD approximates a single power law $P(f) \propto f^{-\beta_M}$ with βM < 0. Thus, when fitting with the procedure above, the parameters βM and βC are expected to be both negative. This condition was used as a test for paced versus unpaced pieces. The βM of the paced pieces can then be estimated either by fitting a single power law, or by fitting the V-shape as above.
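The sign criterion and the single power-law alternative can be illustrated with a short sketch. It assumes the convention P(f) ∝ f^(−β) and uses an ordinary least-squares line through the log-log spectrum, which is one possible way to fit a single power law and is not specified in the text. The function names are hypothetical.

```python
import math


def fit_single_power_law(freqs, psd):
    """Least-squares fit of P(f) = p * f**(-beta) in log-log space.

    freqs must not contain the zero-frequency bin.
    """
    x = [math.log(f) for f in freqs]
    y = [math.log(p) for p in psd]
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = my - slope * mx
    return math.exp(intercept), -slope   # (p, beta)


def looks_paced(beta_c, beta_m):
    """Sign test: both fitted exponents negative suggests a paced piece."""
    return beta_c < 0 and beta_m < 0
```

A spectrum that rises as the square root of frequency, for example, gives β = −0.5 under this convention, which is the anticorrelated regime expected when the clock component is absent.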

Permutation test and effect size

To test for differences between jazz (J) and rock/pop (R), we applied a median-based two-sided permutation test on the estimated parameters βC, βM and log2 fV obtained by the V-shaped fit. To this end, the median values for jazz and rock/pop were compared by their difference $\tilde{x}_J - \tilde{x}_R$ for each parameter x.
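A median-based two-sided permutation test of this kind can be sketched as below. The number of permutations, the fixed random seed, and the "+1" correction in the p-value are assumptions made for illustration and are not taken from the text; the function name is hypothetical.

```python
import random
from statistics import median


def median_permutation_test(jazz, rock, n_perm=10000, seed=0):
    """Two-sided permutation test on the difference of group medians.

    Returns the observed difference median(jazz) - median(rock) and the
    fraction of label permutations whose absolute difference is at
    least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = median(jazz) - median(rock)
    pooled = list(jazz) + list(rock)
    n_j = len(jazz)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)              # random reassignment of labels
        diff = median(pooled[:n_j]) - median(pooled[n_j:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # The +1 terms count the observed labelling as one permutation.
    p_value = (extreme + 1) / (n_perm + 1)
    return observed, p_value
```

The test would be run once per parameter, for instance on the fitted βM values of all jazz recordings versus those of all rock/pop recordings.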

In addition to the p-values from the permutation test, we reported the effect size for the differences between jazz and rock/pop recordings. We used a modified Cohen’s d

$$d = \frac{\tilde{x}_J - \tilde{x}_R}{\sigma}$$

where $\tilde{x}$ denotes the median of the respective values x = βC, βM, or log2 fV. The pooled standard deviation σ was computed from the population sizes nJ, nR and standard deviations σJ, σR of the respective populations:

$$\sigma = \sqrt{\frac{(n_J - 1)\,\sigma_J^2 + (n_R - 1)\,\sigma_R^2}{n_J + n_R - 2}}$$

Effect sizes are considered small for 0.2 < |d| < 0.5, medium for 0.5 < |d| < 0.8 and large for |d| > 0.8.
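The effect size computation can be sketched as follows, assuming the usual pooled standard deviation of Cohen's d with the group medians in place of the means, and sample standard deviations (n − 1 in the denominator). The function names and the label "negligible" for |d| ≤ 0.2 are illustrative assumptions.

```python
import math
from statistics import median, stdev


def median_cohens_d(jazz, rock):
    """Cohen's d variant using the difference of medians."""
    n_j, n_r = len(jazz), len(rock)
    s_j, s_r = stdev(jazz), stdev(rock)
    pooled = math.sqrt(((n_j - 1) * s_j ** 2 + (n_r - 1) * s_r ** 2)
                       / (n_j + n_r - 2))
    return (median(jazz) - median(rock)) / pooled


def effect_label(d):
    """Verbal category for |d| following the thresholds given above."""
    size = abs(d)
    if size > 0.8:
        return "large"
    if size > 0.5:
        return "medium"
    if size > 0.2:
        return "small"
    return "negligible"
```

For two groups `[1, 2, 3]` and `[3, 4, 5]`, both with unit standard deviation, the medians differ by 2, so d = −2 and the effect is classified as large.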

Supporting information

(ZIP)

(ODS)

(ODS)

(ODS)

Acknowledgments

We thank Annette Witt and George Datseris for helpful discussions and support, and Tim Dudek for making studio drum recordings for us.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

All authors were supported by the Max Planck Society. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Huron DB. Sweet Anticipation: Music and the Psychology of Expectation. Bradford Bks: MIT Press; 2006.

- 2. Levitin DJ, Chordia P, Menon V. From the Cover: Musical rhythm spectra from Bach to Joplin obey a 1/f power law. Proceedings of the National Academy of Sciences. 2012;109(10):3716–3720. doi: 10.1073/pnas.1113828109

- 3. Hennig H, Fleischmann R, Geisel T. Musical rhythms: The science of being slightly off. Physics Today. 2012;65(7):64–65. doi: 10.1063/PT.3.1650

- 4. Schmidhuber J. Driven by Compression Progress: A Simple Principle Explains Essential Aspects of Subjective Beauty, Novelty, Surprise, Interestingness, Attention, Curiosity, Creativity, Art, Science, Music, Jokes. In: Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). vol. 5499 LNAI; 2009. p. 48–76. Available from: http://link.springer.com/10.1007/978-3-642-02565-5_4

- 5. Räsänen E, Pulkkinen O, Virtanen T, Zollner M, Hennig H. Fluctuations of Hi-Hat Timing and Dynamics in a Virtuoso Drum Track of a Popular Music Recording. PLoS ONE. 2015;10(6):e0127902. doi: 10.1371/journal.pone.0127902

- 6. Voss RF, Clarke J. ‘1/f noise’ in music and speech. Nature. 1975;258(5533):317–318. doi: 10.1038/258317a0

- 7. Hennig H, Fleischmann R, Fredebohm A, Hagmayer Y, Nagler J, Witt A, et al. The nature and perception of fluctuations in human musical rhythms. PLoS ONE. 2011;6(10):e26457. doi: 10.1371/journal.pone.0026457

- 8. Repp BH, Su YH. Sensorimotor synchronization: A review of recent research (2006-2012). Psychonomic Bulletin & Review. 2013;20(3):403–452. doi: 10.3758/s13423-012-0371-2

- 9. Merchant H, Zarco W, Prado L. Do We Have a Common Mechanism for Measuring Time in the Hundreds of Millisecond Range? Evidence From Multiple-Interval Timing Tasks. Journal of Neurophysiology. 2008; p. 939–949. doi: 10.1152/jn.01225.2007

- 10. Ding M, Chen Y, Kelso JAS. Statistical Analysis of Timing Errors. Brain and Cognition. 2002;48(1):98–106. doi: 10.1006/brcg.2001.1306

- 11. Torre K, Delignières D. Unraveling the finding of 1/f^β noise in self-paced and synchronized tapping: a unifying mechanistic model. Biological Cybernetics. 2008;99(2):159–70. doi: 10.1007/s00422-008-0247-8

- 12. Smit DJA, Linkenkaer-Hansen K, de Geus EJC. Long-range temporal correlations in resting-state α oscillations predict human timing-error dynamics. The Journal of Neuroscience. 2013;33(27):11212–20. doi: 10.1523/JNEUROSCI.2816-12.2013

- 13. Wing AM, Kristofferson AB. Response delays and the timing of discrete motor responses. Perception & Psychophysics. 1973;14(1):5–12. doi: 10.3758/BF03198607

- 14. Delignières D, Torre K, Lemoine L. Long-range correlation in synchronization and syncopation tapping: A linear phase correction model. PLoS ONE. 2009;4(11):1–7.

- 15. Torre K, Wagenmakers EJ. Theories and models for 1/f^β noise in human movement science. Human Movement Science. 2009;28(3):297–318. doi: 10.1016/j.humov.2009.01.001

- 16. Gilden D, Thornton T, Mallon M. 1/f noise in human cognition. Science. 1995;267(5205):1837–1839. doi: 10.1126/science.7892611

- 17. Chen Y, Repp BH, Patel AD. Spectral decomposition of variability in synchronization and continuation tapping: Comparisons between auditory and visual pacing and feedback conditions. Human Movement Science. 2002;21(4):515–532. doi: 10.1016/S0167-9457(02)00138-0

- 18. Delignières D, Lemoine L, Torre K. Time intervals production in tapping and oscillatory motion. Human Movement Science. 2004;23(2):87–103. doi: 10.1016/j.humov.2004.07.001

- 19. Musha T, Katsurai K, Teramachi Y. Fluctuations of Human Tapping Intervals. IEEE Transactions on Biomedical Engineering. 1985;BME-32(8):578–582. doi: 10.1109/TBME.1985.325457

- 20. Hennig H. Synchronization in human musical rhythms and mutually interacting complex systems. Proceedings of the National Academy of Sciences of the United States of America. 2014;2014(36):1–6.

- 21. Palva JM, Zhigalov A, Hirvonen J, Korhonen O, Linkenkaer-Hansen K, Palva S. Neuronal long-range temporal correlations and avalanche dynamics are correlated with behavioral scaling laws. Proceedings of the National Academy of Sciences of the United States of America. 2013;110(9):3585–90. doi: 10.1073/pnas.1216855110

- 22. Bak P, Tang C, Wiesenfeld K. Self-organized criticality: An explanation of the 1/f noise. Physical Review Letters. 1987;59(4):381–384. doi: 10.1103/PhysRevLett.59.381

- 23. Priesemann V, Valderrama M, Wibral M, Le Van Quyen M. Neuronal Avalanches Differ from Wakefulness to Deep Sleep—Evidence from Intracranial Depth Recordings in Humans. PLoS Computational Biology. 2013;9(3). doi: 10.1371/journal.pcbi.1002985

- 24. Priesemann V, Wibral M, Valderrama M, Propper R, Le Van Quyen M, Geisel T, Triesch J, Nikolic D, Munk MHJ. Spike avalanches in vivo suggest a driven, slightly subcritical brain state. Frontiers in Systems Neuroscience. 2014;8:108. doi: 10.3389/fnsys.2014.00108

- 25. Linkenkaer-Hansen K, Nikouline VV, Palva JM, Ilmoniemi RJ. Long-range temporal correlations and scaling behavior in human brain oscillations. Journal of Neuroscience. 2001;21(4):1370–7.

- 26. Beggs JM, Plenz D. Neuronal Avalanches in Neocortical Circuits. The Journal of Neuroscience. 2003;23(35):11167–11177.

- 27. Marom S. Neural timescales or lack thereof. Progress in Neurobiology. 2010;90(1):16–28. doi: 10.1016/j.pneurobio.2009.10.003

- 28. Levina A, Priesemann V. Subsampling scaling. Nature Communications. 2017;8:15140. doi: 10.1038/ncomms15140

- 29. Vorberg D, Wing A. Chapter 4 Modeling variability and dependence in timing. In: Handbook of Perception and Action. vol. 2; 1996. p. 181–262. Available from: http://linkinghub.elsevier.com/retrieve/pii/S1874582206800071

- 30. Wagenmakers EJ, Farrell S, Ratcliff R. Estimation and interpretation of 1/f^α noise in human cognition. Psychonomic Bulletin & Review. 2004;11(4):579–615. doi: 10.3758/BF03196615

- 31. West BJ, Scafetta N. Nonlinear dynamical model of human gait. Physical Review E. 2003;67(5):051917. doi: 10.1103/PhysRevE.67.051917

- 32. Chen Y, Ding M, Kelso J. Long Memory Processes in Human Coordination. Physical Review Letters. 1997;79(22):4501–4504. doi: 10.1103/PhysRevLett.79.4501

- 33. Welch PD. The Use of Fast Fourier Transform for the Estimation of Power Spectra: A Method Based on Time Averaging Over Short, Modified Periodograms. IEEE Transactions on Audio and Electroacoustics. 1967;15(2):70–73. doi: 10.1109/TAU.1967.1161901

- 34. Broyden C. The Convergence of a Class of Double-rank Minimization Algorithms. IMA Journal of Applied Mathematics. 1970;6(3):222–231. doi: 10.1093/imamat/6.3.222

- 35. Birkes D, Dodge Y. Alternative Methods of Regression. Wiley Series in Probability and Statistics. Hoboken NJ, USA: John Wiley & Sons, Inc.; 1993. Available from: http://doi.wiley.com/10.1002/9781118150238
