Abstract

Context

The Centers for Disease Control and Prevention’s Core State Violence and Injury Prevention Program (Core SVIPP) provides an opportunity for states to engage with their partners to implement, evaluate, and disseminate strategies that lead to the reduction and prevention of injury and violence. Core SVIPP requires awardees to develop or update their state injury and violence plans. Currently, literature informing state planning efforts is limited, especially for injury and violence. Presumably, higher-quality plans have a greater impact on preventing injury and violence, and literature on improving plan quality would benefit prevention programming.

Objective

(1) To create a comprehensive injury-specific index to aid in the development and revision of state injury and violence prevention plans, and (2) to assess the reliability and utility of this index.

Design

Through an iterative development process, a workgroup of subject matter experts created the Violence and Injury Prevention: Comprehensive Index Tool (VIP:CIT). The tool was pilot tested on 3 state injury and violence prevention plans and assessed for initial usability. Following revisions to the tool (ie, a rubric was developed to further delineate consistent criteria for rating; items were added and clarified), the same state plans were reassessed to test interrater reliability and tool utility.

Results

In the second assessment, the interrater reliability of the VIP:CIT improved, suggesting that the rubric was a useful addition. Qualitative feedback from states suggested that the tool substantially helped guide plan development and communication about planning processes.

Conclusion

The final VIP:CIT is a tool that can help increase plan quality, decrease the research-to-practice gap, and increase connectivity to emerging public health paradigms. The tool provides an example of tailoring guidance materials to reflect academic literature, and it can be easily adapted to other topic areas to promote quality of strategic plans for numerous outcomes.

Keywords: index, injury prevention, program implementation, state health departments, state plans, strategic planning, violence prevention

Twenty-three state health departments are funded from 2016 to 2021 through the Core State Violence and Injury Prevention Program (Core SVIPP).1 Core SVIPP provides an opportunity for states to engage with their partners to implement, evaluate, and disseminate strategies that lead to the reduction and prevention of injury and violence. Engaging partners to develop or update an existing state injury and violence prevention plan is a required component of this funding initiative.

Quality Strategic Planning

Developing strategic plans is recommended for identifying goals and priorities; specifying the relevant details regarding objectives, actions, time frames, and resources; and leveraging efforts to achieve intended outcomes within these specifications.2 Strategic planning is considered a vital component of developing quality prevention programs, practices, and policies. For instance, the Component Model of Infrastructure includes responsive state plans as one of 5 necessary components for functioning programmatic infrastructure.3 Similarly, the Getting to Outcomes4 model devotes 6 of its 10 steps for increasing capacity for interventions to the planning process.

The desire to create quality strategic plans has resulted in the emergence of trainings, guidance documents, and other resources to assist prevention planners. For instance, manuals providing “how-to” guidance to local health departments, such as the guide provided by the National Association of County & City Health Officials (NACCHO),5 and technical resources, such as those provided by the Community Toolbox,6 are openly available to prevention planners. Overall, the purpose of these materials is to provide semistructured spaces to assist practitioners in organizing plans and initiating action on various planning-related activities.

These materials help practitioners explore complex and nuanced public health obstacles; however, an open-ended approach has an intrinsic downside: it provides no standardized, quantitative feedback. This lack of quantified feedback leaves a gap for prevention planners; without a standardized assessment that scores the various elements of a plan, it is difficult to discern the plan’s quality (even when planning guidance was used to create it). Unfortunately, the literature on assessing plans with structured, quantified assessments is limited,7 even though awardees and funders have a vested interest in knowing and improving the quality of prevention planning in hopes of increasing impact.

To fill this gap, inventories that are standardized, quantitative, and specific to a field of public health have emerged. For example, the Centers for Disease Control and Prevention’s (CDC’s) Division of Cancer Prevention and Control created the Cancer Plan Self-Assessment Tool,8 which was adapted from the State Plan Index from CDC’s Nutrition and Physical Activity Program to Prevent Obesity and Other Chronic Diseases.9 Both tools were designed to comprehensively cover key points of state plans through standardized subsections and items. The State Plan Index includes 60 items over 9 subsections (Involvement of Stakeholders, Presentation of Data on Disease Burden and Existing Efforts to Control Obesity, Goals, Objectives, Selecting Population(s) and Strategies for Intervention, Integration of Strategies with Other Programs and Implementation of Plan, Resources for Implementation of Plan, Evaluation, and Accessibility of Plan), and these items are graded on a 5-point Likert-type scale. The State Plan Index was developed to score plans quantitatively both from the perspective of government staff who review state plans and as a self-assessment for plan developers.

Reflecting Shifting Paradigms in Strategic Plans

In addition to being quantitative, as in the Cancer Plan Self-Assessment Tool8 and State Plan Index,9 strategic planning guidance must be aligned with the field’s most current paradigms. This is especially important for complex outcomes such as injury: the real-world context surrounding injury and violence involves multiple risk/protective factors, barriers to prevention, and resource limitations, and these variables are interconnected and influence one another. Traditional interventions aimed at reducing injury and violence are often singular and focused on specific risk/protective factors and specific outcomes. This approach often misses the complexity of these interconnections; to account for evolving changes within a system, interventions must be able to adapt quickly to the complex and ever-changing public health landscape.10 Systems thinking is a perspective that encourages approaching a problem by considering its surrounding context, including the context as a whole, its parts, the connections between these parts, and the multitude of diverse perspectives represented within it (L. Smith, N. Wilkins, R. McClure; CDC Systems Thinking Working Group, unpublished data, 2016-2017).10,11 A systems-thinking perspective offers 2 advantages: it accounts for influential factors that might otherwise go unnoticed, and it fosters an approach that assumes the need for adaptability and flexibility. In this sense, prevention programs require a broader-reaching, systems-thinking perspective to remain relevant,11 and the field has increasingly encouraged integrating this line of thinking into prevention plans.

Utilizing the systems-thinking paradigm also requires collaboration with partners representing the diverse perspectives within that system, an approach well suited to constructing quality state plans. Ideally, state plans result from a dynamic process that is just as important as the plan itself, because the collaborative nature and diverse viewpoints of multiple stakeholders foster shared ownership of and responsibility for the goals and objectives among the various programs, practices, and policies.3 Therefore, including systems thinking is advantageous not only because the resulting plans are designed to be responsive and adaptable but also because partners are fully engaged throughout the project. Plans that foster systems-level thinking and collaboration (eg, emphasizing relationship building across different levels of the social ecology, incorporating distal barriers and risk/protective factors, and sustaining efforts at the policy level) are likely better designed to result in measurable impact.10

Including a systems-thinking perspective in prevention planning confers benefits beyond those that are expressly part of the process: understanding how public health risk factors, protective factors, and outcomes map onto their broader system yields a bird’s-eye view that clarifies areas of overlap with other related health factors and outcomes. This understanding gives practitioners a unique opportunity to maximize impact while minimizing resource expenditure by using crosscutting interventions. Within the field of violence prevention specifically, there has been increased focus on violence outcomes that share risk and protective factors.12 For example, a single risk/protective factor such as connection to and support by one’s community may influence multiple violence outcomes, such as child abuse and neglect and intimate partner violence. If violence prevention interventions (whether programmatic, practice, or policy interventions) focus on risk and protective factors that are shared across outcomes, they may affect multiple forms of violence while making the most of limited financial and personnel resources.13 Indeed, the greatest impact may come from interventions that target shared risk and protective factors at the community or societal levels (eg, cultural norms that support aggression, high alcohol outlet density, weak policies) rather than those that affect only individuals or relationship dynamics (eg, history of violence victimization, family conflict).13,14 Therefore, strong prevention planning might benefit from systematically incorporating a shared risk/protective factor perspective.

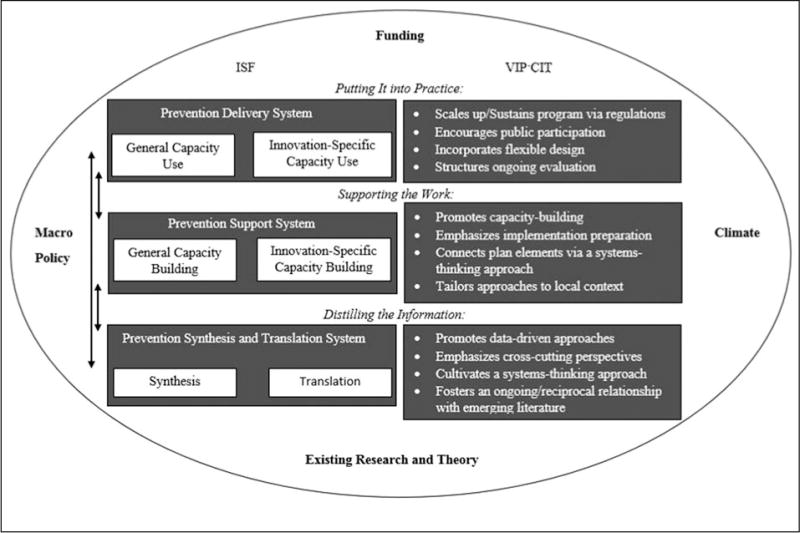

Adopting a systems-thinking perspective and an approach reliant on crosscutting, shared factors can be a complex and uncharted process for prevention practitioners. Fortunately, detailed guidance in developing interventions that are dynamic, collaborative, and ever-evolving can be found within the Interactive Systems Framework (ISF).15 The ISF provides a guiding perspective for increasing the use of evidence-based interventions in prevention efforts. The ISF emphasizes that prevention work is a collaboration between researchers and practitioners across 3 distinct subprocesses that contribute to the overall product: the Prevention Synthesis and Translation System (collaboratively reviewing and synthesizing relevant research science), the Prevention Support System (supporting the work of developing an intervention), and the Prevention Delivery System (supporting the delivery of that intervention). Per the ISF, for evidence-based interventions to become ubiquitous, prevention programming and policy require integrating these 3 systems as one continuous, interrelated process rather than as discrete actions that build upon one another over time. Other frameworks, such as NACCHO’s Mobilizing for Action through Planning and Partnerships (MAPP),16 also stress the importance of taking a nonlinear approach to public health and emphasize the role of equitable participation across agencies and community stakeholders.

In addition, the ISF provides a structure for housing these 3 subprocesses within the larger system that influences them, specifically identifying 4 systems-level components: (1) Macro Policy, (2) Funding, (3) Climate, and (4) Existing Research and Theory. Changes to any of these individual parts of the system may require altering the intervention being developed. The interconnectedness prioritized by the ISF promotes strategies that maintain their rigor while being flexible and tailored to the needs of each unique context. Through continuous collaboration, a nonlinear approach, and recognition of unique systems, the ISF gives practitioners a tangible process for developing plans that are inherently mindful of the surrounding system, crosscutting, and dynamic and flexible. Guidance for strategic planning that incorporates an ISF perspective would help practitioners create strategic plans that are systems-informed, dynamic, and collaborative with diverse partners.

Design

The goal of this project was to create a strategic planning tool that is both psychometrically rigorous and useful to the states that will implement it. The tool is intended to be used by states to score their own plans, in an effort to improve the consistency of strategic plans and align them with current public health paradigms, and by public health researchers as a standardized way of classifying plans for research purposes. To fill the gap in strategic planning materials within the field of injury, the aim was for this tool to (1) provide standardized, quantified scores, (2) reflect the emerging systems-thinking work in injury, (3) cultivate plans with dynamic collaboration between diverse partners and stakeholders, and (4) encourage adaptive, flexible programming.

To accomplish this goal, the VIP:CIT was created by a team on the basis of the Cancer Plan Self-Assessment Tool and the State Plan Index.8,9 Items from these tools were modified with input from subject matter experts to reflect crucial elements in injury and violence prevention. As a result, the initial VIP:CIT consisted of 70 items over 12 sections (Development of the Plan, Involvement of Stakeholders, Involvement of Policy and Regulations, Presentation of Data on Injury and Violence Burden & Current Efforts Implemented, State Goals, Objectives, Strategies for Interventions, Reducing Injury and Violence Disparities, Implementation of Plan, Resources for Implementation, Evaluation, and Plan Accessibility and Usability). A 5-point Likert-type scale ranging from “item not addressed at all” (1) to “strongly addressed in plan” (5) was provided to grade each item. The VIP:CIT items (including initial and finalized items) can be found in Supplemental Digital Content Appendix A (available at http://links.lww.com/JPHMP/A379).

Pilot assessment

To determine the reliability of the tool, a pilot assessment based on previous studies was conducted.8,9 For this initial assessment, 3 individuals each rated 3 state plans, which were selected on the basis of whether the state had received Core VIPP funding from 2011 to 2016 and whether it was funded for Core SVIPP beginning in 2016. State plans were selected with input from project officers familiar with the states to ensure that the 3 states differed in their past planning approaches. The 3 states included a state funded for Core VIPP but not Core SVIPP (state 1), a state funded for both Core VIPP and Core SVIPP (state 2), and a state funded for Core SVIPP but not Core VIPP (state 3). We expected scores to be higher for the 2 states that received Core SVIPP funding (states 2 and 3) and lower for the state that was funded for Core VIPP but did not succeed in competing for Core SVIPP funds (state 1).

Results

Data analysis plan

For each of the 3 state plans, the 3 raters’ scores were averaged within each section. Each plan therefore yielded 12 section scores and a total score, each reflecting the average of the 3 raters (Table 1).
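The averaging step can be sketched in a few lines. This is a minimal illustration, not the authors’ procedure or software (which the paper does not describe): it assumes each rater’s scores are stored as a list of 1 to 5 ratings in item order, and that a hypothetical `item_sections` list maps each item to its VIP:CIT section. With complete ratings, pooling all raters’ item scores within a section gives the same value as averaging each rater’s section mean.

```python
from statistics import mean
from typing import Dict, List


def average_scores(ratings: Dict[str, List[int]], item_sections: List[str]) -> Dict[str, float]:
    """Average 1-5 item ratings across raters, per section and over all items.

    ratings maps a (hypothetical) rater ID to that rater's scores in item order;
    item_sections[i] names the section that item i belongs to.
    """
    pooled: Dict[str, List[int]] = {}
    all_scores: List[int] = []
    for rater, scores in ratings.items():
        if len(scores) != len(item_sections):
            raise ValueError(f"rater {rater} did not score every item")
        for section, score in zip(item_sections, scores):
            if not 1 <= score <= 5:
                raise ValueError(f"score {score} is outside the 1-5 Likert range")
            pooled.setdefault(section, []).append(score)
            all_scores.append(score)
    # Section score: mean of every rater's ratings on the items in that section.
    averages = {section: mean(values) for section, values in pooled.items()}
    # Total score: mean over all items and all raters, as in the "Total (all items)" row.
    averages["Total (all items)"] = mean(all_scores)
    return averages


if __name__ == "__main__":
    # Hypothetical toy data: 3 raters, 3 items spread over 2 sections.
    sections = ["State Goals", "State Goals", "Evaluation"]
    toy_ratings = {"A": [4, 5, 2], "B": [3, 5, 1], "C": [4, 4, 2]}
    for name, value in average_scores(toy_ratings, sections).items():
        print(f"{name}: {value:.2f}")
```

For the toy data, this prints State Goals: 4.17, Evaluation: 1.67, and Total (all items): 3.33.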

Table 1.

Average Scores Across Raters for VIP:CIT Subsections, Pilot (Period 1) and Revised (Period 2) Versions

| Subsection | State 1, Period 1 | State 1, Period 2 | State 2, Period 1 | State 2, Period 2 | State 3, Period 1 | State 3, Period 2 |
|---|---|---|---|---|---|---|
| Section 1: Development of the Plan | 2.78 | 2.33 | 3.11 | 2.67 | 4.44 | 4.22 |
| Section 2: Involvement of Stakeholders | 3.62 | 2.10 | 3.95 | 1.86 | 4.29 | 2.95 |
| Section 3: Involvement of Policy and Regulations | 2.00 | 1.40 | 2.25 | 1.07 | 2.33 | 1.73 |
| Section 4: Presentation of Data on Injury and Violence Burden & Current Efforts Implemented | 2.59 | 2.33 | 3.24 | 2.89 | 3.52 | 3.30 |
| Section 5: State Goals | 3.73 | 3.67 | 4.13 | 3.67 | 4.40 | 4.07 |
| Section 6: Objectives | 3.86 | 2.48 | 3.86 | 3.19 | 3.72 | 2.57 |
| Section 7: Strategies for Interventions | 3.39 | 2.83 | 3.56 | 3.06 | 3.50 | 3.06 |
| Section 8: Reducing Injury and Violence Disparities | 1.83 | 2.33 | 2.17 | 1.83 | 3.00 | 3.00 |
| Section 9: Implementation of Plan | 1.33 | 1.44 | 2.11 | 1.56 | 2.28 | 1.39 |
| Section 10: Resources for Implementation | 1.39 | 1.44 | 1.89 | 1.44 | 2.22 | 1.44 |
| Section 11: Evaluation | 2.43 | 1.86 | 2.24 | 1.62 | 2.29 | 1.29 |
| Section 12: Plan Accessibility and Usability | 3.76 | 3.18 | 3.81 | 3.00 | 3.10 | 2.33 |
| Total (all items) | 2.79 | 2.26 | 3.08 | 2.33 | 3.24 | 2.52 |

Abbreviation: VIP:CIT, Violence and Injury Prevention: Comprehensive Index Tool.

Krippendorff’s17 α coefficients were calculated using the ReCal calculator18 to determine interrater reliability. Alpha was chosen because it is suited to Likert-type data, allows more than 2 raters, and is flexible with missing data.17,19 Krippendorff’s α ranges from −1 to 1, with values closer to 1 indicating better interrater reliability. When interpreting α, Krippendorff cautions against decontextualizing the results of a content analysis from the immediate research question (ie, whether the scores indicate usable data depends on how the data are to be used). When cutoff criteria are required, he suggests that values greater than .667 indicate tentatively adequate reliability and values greater than .800 indicate adequate reliability.20
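The α computation can also be reproduced outside ReCal. The sketch below is a minimal, hypothetical implementation of Krippendorff’s coincidence-matrix formulation, assuming each VIP:CIT item is a unit and each rater supplies one 1 to 5 rating per item (`None` for a missing rating). The paper does not report which level of measurement (nominal, ordinal, or interval) was selected in ReCal; the ordinal metric is the default here only because Likert-type ratings are ordinal.

```python
from collections import defaultdict
from itertools import permutations
from typing import Dict, Optional, Sequence, Tuple


def coincidence_matrix(units: Sequence[Sequence[Optional[int]]]) -> Dict[Tuple[int, int], float]:
    """Krippendorff's coincidence counts o[(c, k)], one unit per item."""
    o: Dict[Tuple[int, int], float] = defaultdict(float)
    for unit in units:
        values = [v for v in unit if v is not None]  # drop missing ratings
        m = len(values)
        if m < 2:
            continue  # a unit needs at least 2 ratings to be pairable
        # permutations() yields every ordered pair of distinct raters: m * (m - 1) pairs,
        # each weighted 1 / (m - 1) so every pairable value contributes a total of 1.
        for c, k in permutations(values, 2):
            o[(c, k)] += 1.0 / (m - 1)
    return dict(o)


def krippendorff_alpha(units: Sequence[Sequence[Optional[int]]], metric: str = "ordinal") -> float:
    """Alpha = 1 - observed disagreement / expected disagreement."""
    o = coincidence_matrix(units)
    n_c: Dict[int, float] = defaultdict(float)  # marginal total for each rating value
    for (c, _k), weight in o.items():
        n_c[c] += weight
    n = sum(n_c.values())
    if n == 0:
        raise ValueError("no unit has 2 or more ratings")
    categories = sorted(n_c)

    def delta_sq(c: int, k: int) -> float:
        """Squared distance between two rating values under the chosen metric."""
        if metric == "nominal":
            return 0.0 if c == k else 1.0
        if metric == "interval":
            return float(c - k) ** 2
        if metric == "ordinal":
            lo, hi = min(c, k), max(c, k)
            between = sum(n_c[g] for g in categories if lo <= g <= hi)
            return (between - (n_c[c] + n_c[k]) / 2.0) ** 2
        raise ValueError(f"unknown metric: {metric}")

    observed = sum(w * delta_sq(c, k) for (c, k), w in o.items()) / n
    expected = sum(
        n_c[c] * n_c[k] * delta_sq(c, k) for c in categories for k in categories
    ) / (n * (n - 1))
    if expected == 0:
        raise ValueError("alpha is undefined when every rating is identical")
    return 1.0 - observed / expected


if __name__ == "__main__":
    # Hypothetical ratings: rows are items (units), columns are 3 raters.
    items = [[4, 4, 5], [2, 3, 2], [5, 5, 5], [1, 2, None], [3, 3, 4]]
    for name in ("nominal", "ordinal", "interval"):
        print(name, round(krippendorff_alpha(items, name), 3))
```

The coincidence-matrix form is used because it handles more than 2 raters and missing ratings without special cases, which are the same properties the authors cite for choosing α.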

Pilot results and VIP:CIT revisions

Initial results from the pilot study revealed poor interrater reliability (Table 2). Across the 3 state plan total scores, α coefficients ranged from .28 to .56. Across the 12 subsections, interrater reliability varied widely, both between sections within a state and between states within a section.

Table 2.

Interrater Reliabilities (Krippendorff’s α) for VIP:CIT Subsections, Pilot (Period 1) and Revised (Period 2) Versions

| Subsection | State 1, Period 1 | State 1, Period 2 | State 2, Period 1 | State 2, Period 2 | State 3, Period 1 | State 3, Period 2 |
|---|---|---|---|---|---|---|
| Section 1: Development of the Plan | .30 | .11 | −.21 | **.67**ᵇ | −.26 | **−.14** |
| Section 2: Involvement of Stakeholders | .11 | .12 | −.28 | **.26** | −.11 | −.42 |
| Section 3: Involvement of Policy and Regulations | .43 | .48 | −.22 | **.00** | −.08 | −.93 |
| Section 4: Presentation of Data on Injury and Violence Burden & Current Efforts Implemented | .78ᵇ | .74ᵇ | .88ᶜ | .95ᶜ | .47 | .47 |
| Section 5: State Goals | .55 | **.78**ᵇ | .16 | **.60** | −.18 | **.58** |
| Section 6: Objectives | .64 | **.87**ᶜ | .27 | **.58** | −.04 | **.22** |
| Section 7: Strategies for Interventions | .62 | .65 | .60 | .60 | .44 | −.05 |
| Section 8: Reducing Injury and Violence Disparities | −.25 | **.71**ᵇ | .22 | .15 | −.18 | −.12 |
| Section 9: Implementation of Plan | −.01 | **.15** | .32 | −.10 | .31 | .12 |
| Section 10: Resources for Implementation | .21 | .03 | −.14 | −.40 | −.24 | **−.12** |
| Section 11: Evaluation | .08 | .00 | −.13 | **.26** | −.26 | **−.11** |
| Section 12: Plan Accessibility and Usability | .61 | .67ᵇ | .47 | .15 | −.02 | **.38** |
| Total (all items) | .56 | .62 | .45 | **.65** | .28 | **.50** |

Bolded cells indicate where interrater reliability improved 0.10 points or more from period 1 to period 2.

ᵇScores at or above .66.

ᶜScores at or above .80.
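The two flag rules in the Table 2 notes (a gain of 0.10 or more from period 1 to period 2, and the .667/.800 cutoffs Krippendorff suggests) are simple enough to check mechanically. The sketch below assumes the α values are transcribed from Table 2 exactly as printed; the names `TABLE_2`, `improved_cells`, and `cutoff_label` are illustrative only.

```python
from typing import Dict, List, Tuple

# Krippendorff's alpha transcribed from Table 2, in the column order
# (state 1 P1, state 1 P2, state 2 P1, state 2 P2, state 3 P1, state 3 P2).
TABLE_2: Dict[str, Tuple[float, ...]] = {
    "Section 1": (0.30, 0.11, -0.21, 0.67, -0.26, -0.14),
    "Section 2": (0.11, 0.12, -0.28, 0.26, -0.11, -0.42),
    "Section 3": (0.43, 0.48, -0.22, 0.00, -0.08, -0.93),
    "Section 4": (0.78, 0.74, 0.88, 0.95, 0.47, 0.47),
    "Section 5": (0.55, 0.78, 0.16, 0.60, -0.18, 0.58),
    "Section 6": (0.64, 0.87, 0.27, 0.58, -0.04, 0.22),
    "Section 7": (0.62, 0.65, 0.60, 0.60, 0.44, -0.05),
    "Section 8": (-0.25, 0.71, 0.22, 0.15, -0.18, -0.12),
    "Section 9": (-0.01, 0.15, 0.32, -0.10, 0.31, 0.12),
    "Section 10": (0.21, 0.03, -0.14, -0.40, -0.24, -0.12),
    "Section 11": (0.08, 0.00, -0.13, 0.26, -0.26, -0.11),
    "Section 12": (0.61, 0.67, 0.47, 0.15, -0.02, 0.38),
    "Total": (0.56, 0.62, 0.45, 0.65, 0.28, 0.50),
}


def cutoff_label(alpha: float) -> str:
    """Label an alpha value with the cutoffs Krippendorff suggests."""
    if alpha >= 0.800:
        return "adequate"
    if alpha >= 0.667:
        return "tentatively adequate"
    return "below .667"


def improved_cells(table: Dict[str, Tuple[float, ...]], min_gain: float = 0.10) -> List[Tuple[str, int]]:
    """Return (subsection, state number) pairs whose alpha rose by at least min_gain."""
    flagged = []
    for name, values in table.items():
        for state in range(3):
            p1, p2 = values[2 * state], values[2 * state + 1]
            # Round to the table's 2 decimals so float noise cannot flip a flag.
            if round(p2 - p1, 2) >= min_gain:
                flagged.append((name, state + 1))
    return flagged


if __name__ == "__main__":
    print(len(improved_cells(TABLE_2)), "cells improved by 0.10 or more")
    for state in range(3):
        total_p2 = TABLE_2["Total"][2 * state + 1]
        print(f"State {state + 1} total, period 2: {total_p2:.2f} ({cutoff_label(total_p2)})")
```

For the transcribed values, this flags 18 state-by-subsection cells (including the totals for states 2 and 3) and labels all 3 period 2 totals as below .667.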

The original raters suggested that a rubric might improve scoring consistency by clarifying the intention of each item. In addition, it was hoped that a rubric would help clarify the ideal content of state plans. In this sense, adding a rubric would make the VIP:CIT not only a way to measure plan quality but also a guide to improving plan quality during development. To develop the rubric, 2 of the developers generated behavioral anchors on a 5-point scale for all items: each developer generated half of the anchors and then reviewed the anchors generated by the other. The 2 developers then jointly discussed and revised all anchors until agreement was reached. The resulting rubric provides behavioral and, when applicable, quantified anchors for each item. The VIP:CIT rubric can be found in Supplemental Digital Content Appendix B (available at http://links.lww.com/JPHMP/A380).

Qualitative feedback

The VIP:CIT was then presented to subject matter experts to elicit feedback. Revisions were made to enhance the materials further, including adding items and amending language. The Rapid Synthesis and Translation Process,21 a process of synthesizing information based on the ISF,15 was used to integrate practitioner input with this expert feedback. Practitioners were from 2 states with Core SVIPP funding that requested additional guidance for updating their injury plans; neither of these states was among the 3 state plans rated in the pilot assessment. This state feedback was consistently positive, with 3 predominant themes emerging: (1) the rubric provides detailed and specific information on the content necessary for a quality plan, easing the process of addressing plan weaknesses; (2) the quantified scores provide an easier way to track plan improvement with each iteration; and (3) the standardized sections increase and ease communication about strategic planning with stakeholders and with other state injury programs. One state elaborated that the standardized sections would be helpful in ensuring that communication is consistent in “comparing apples to apples” when discussing content with partners, other states, or CDC staff. Both states indicated that they were using the tool to update their plans for Core SVIPP.

Incorporating practitioner feedback ensured not only that the ISF was followed when developing the VIP:CIT but also that the VIP:CIT elements closely align with the 3 ISF15 processes (Figure 1). Following the ISF ensured that practitioners helped shape the tool and its elements, resulting in a product that is applicable to the future practitioners who might use it. The VIP:CIT is intentionally structured to promote plans formed alongside data and scientific research (as reflected in the Prevention Synthesis and Translation System), to acknowledge and change the systemic and structural components that influence plan success (as reflected in the Prevention Support System), and to create sustainable, wide-scale plans that are continuously reviewed (as reflected in the Prevention Delivery System). The VIP:CIT was intentionally designed to be flexible to the surrounding context of interventions, and because it was informed by practitioner perspectives, it guides practitioners in interacting with contextual elements that may influence their efforts, including relevant policy, funding, climate, and science.

Figure 1. The Interactive Systems Framework (ISF) and VIP:CIT Support.

Abbreviations: ISF, Interactive Systems Framework; VIP:CIT, Violence and Injury Prevention: Comprehensive Index Tool.

Period 2 assessment

To retest the reliability of the VIP:CIT after adding the rubric, a second round of rating state plans was conducted. To allow a direct comparison of interrater reliability, the same 3 state plans were rated again, and 2 of the 3 raters remained the same. Overall, interrater reliability improved moderately for all state total scores (ranging from 0.32 to 0.61). Across the 12 subsections, interrater reliability generally improved for states 1 and 2 (ie, the states with lower average scores; see Table 1) and worsened for state 3 (ie, the state with the highest average scores). Score consistency thus appeared to increase as plan quality decreased, suggesting that the rubric helped raters score lower- and moderate-quality plans but may have confused scoring of higher-quality plans. Ratings were also generally more consistent (higher α) when taken over the entire measure rather than each subsection, which is consistent with previous validation testing of the State Plan Index.9,22 For total scores, the reliability of the updated VIP:CIT was tentatively promising across all 3 plans (ranging from 0.50 to 0.65), suggesting that the tool may be useful for states with varying levels of infrastructure. However, these results were below Krippendorff’s20 recommended cutoffs, indicating that future versions will need to focus on improving reliability. On the basis of rater feedback on the rubric, 1 additional item was created, bringing the item total to 73.

Discussion

Strategic planning is a complex process, and guidance materials assisting with plan formulation are limited. To respond to the multitude of changes occurring in public health (eg, changes in scientific evidence, priorities, funding levels, external support), state plans must be dynamic and evolve relative to these changes; such plans are a necessary component for functioning public health infrastructure.3 Crafting these types of quality plans requires more than capacity building alone,23 leading to a need to create planning tools that are both comprehensive and flexible to practitioner needs.

The VIP:CIT is a tool designed to promote consistency in strategic planning for injury. It promotes diverse collaboration, adaptability, connections to the emerging academic literature, and systems perspectives in strategic planning. Initial feedback indicates that the VIP:CIT fulfills this role for state strategic planning by providing an in-depth rubric and a standardized, quantifiable structure for measuring plans. Reliability for VIP:CIT total scores suggests that the tool may be useful for states regardless of their level of capacity. So far, however, the VIP:CIT appears to be useful for comparing total scores rather than section scores across states, because agreement between raters remains only moderately reliable for the sections. Future iterations of the VIP:CIT will require changes or added training to increase reliability between raters. In addition, it will be important to test how the VIP:CIT’s ratings perform when the rater’s perspective differs (eg, federal vs state staff raters), as previous work with the State Plan Index9 has shown that ratings may change on the basis of these perspectives.22

Implications

Although agreement between raters requires improvement, the VIP:CIT’s unique design and relevance to the public health field offer clear benefits for practitioners while encouraging policies that are impactful, evidence-based, and resource-friendly.

Implications for research

The VIP:CIT also contributes to academic discussion in multiple ways. Its design is based on the ISF, a model that emphasizes the importance of creating and supporting an integrated relationship between science and practice. The VIP:CIT’s structure holds promise to reduce the persistent gap between emerging research and actual prevention practice. Finally, the VIP:CIT’s quantified scores provide the means to advance further research; a quantified score facilitates further research into whether this operationalization of plan quality is a meaningful predictor of program impact and outcomes. Since research in this area is sparse, the VIP:CIT has potential to encourage future research into plan development and its relationship to meaningful public health intervention.

This work has notable methodological and analytical limitations. Although interrater reliability improved following the addition of the rubric, overall α coefficients have reached only moderate levels, and further work is required to increase reliability to high levels; for instance, increased training or access to additional scoring materials may be needed. The investigation was further limited by the small number of raters, the small number of plans rated, and the lack of a link between VIP:CIT ratings and injury outcomes. Specifically, the generalizability of the current findings would be improved by another analysis using different raters and different state plans in period 1 and period 2, to demonstrate that the tool is reliable regardless of which plans are rated or who rates them.

Further work is required to assess and enhance the VIP:CIT’s utility for state health departments and their partners. Specifically, additional collaborative work with state health departments, their stakeholders, and program experts may be useful for improving the VIP:CIT and state plans in general. In addition, future research might focus on whether the VIP:CIT’s quantitative scores predict prevention outcomes or result in stronger program impacts. Also, although the VIP:CIT’s comprehensive nature is a strength, a plan that achieved a perfect score on all items would likely be intensive to create, require untenable resources, and be overwhelming in nature; the VIP:CIT is meant to be applied flexibly to improve the planning process rather than to seek a perfect score. Future work may nonetheless help streamline the VIP:CIT’s items to reduce complexity and identify the items most related to program success. Public health practitioners may benefit from additional technical assistance materials on using the tool or assistance tailored to individual states (eg, assistance improving scores on a specific section that is lower than desired). Assessment of state plans has been used in other topic areas to identify emerging themes and gaps across states to provide a national perspective.24–26 Assessing all state injury and violence prevention plans may help inform training and technical assistance opportunities, track state priorities, and assess whether the use of evidence-based strategies increases over time.

The VIP:CIT’s utility for state partners is well aligned with how previous CDC tools have been used, such as the State Plan Index9; through application, the State Plan Index was found to be beneficial for improving communication around, guiding technical assistance for, and providing training for strategic planning.22 Although designed to target violence and injury specifically, the VIP:CIT may serve as a model for not only an index but also a comprehensive rubric in other public health areas where comprehensive planning is beneficial.

Supplementary Material

Implications for Policy & Practice.

The VIP:CIT:

- Quantifies plan quality, allowing practitioners to observe changes longitudinally and alongside external context.
- Provides a standardized rubric that creates a common language for practitioners and their peers while maintaining flexibility for modification to other public health outcomes.
- Aligns state plans with current perspectives regarding research and practice interconnectivity, intervention flexibility, systems thinking, and crosscutting approaches.
- Incorporates systems-level components and encourages practitioners to consider policy as a core component of creating goals, engaging stakeholders, and implementing strategies.
- Ensures that partnerships are diverse, equal, and continuously engaged, which is essential to successful program implementation, as identified by former CDC director Dr Frieden.27
- Encourages codeveloping plans alongside partners and stakeholders with consistent communication of program missions, goals, and key activities.
- Assists practitioners in considering implementation outcomes in complex systems, including across population subgroups, policies, and social determinants of health.2
- Includes broader practices and social policies, regulations, and laws as interventions alongside traditional programs.

Acknowledgments

The authors thank Emily Sims, MPH, Practice Integration & Evaluation Branch, Division of Analysis, Research, and Practice Integration, National Center for Injury Prevention and Control, Centers for Disease Control and Prevention, Atlanta, Georgia, for her expert feedback and acting as a rater for the validation process.

The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

Footnotes

The authors declare no conflicts of interest.

Supplemental digital content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal’s Web site (http://www.JPHMP.com).

References

1. Centers for Disease Control and Prevention. Core State Violence and Injury Prevention Program (Core SVIPP) Web site. http://www.cdc.gov/injury/stateprograms/index.html. Updated July 5, 2016. Accessed October 14, 2016.

2. Institute of Medicine. Living Well With Chronic Illness: A Call for Public Health Action. Washington, DC: The National Academies Press; 2012.

3. Lavinghouze SR, Snyder K, Rieker PP. The Component Model of Infrastructure: a practical approach to understanding public health program infrastructure. Am J Public Health. 2014;104(8):e14–e24. doi: 10.2105/AJPH.2014.302033.

4. Wandersman A, Imm P, Chinman M, Kaftarian S. Getting to outcomes: a results-based approach to accountability. Eval Program Plann. 2000;23(3):389–395.

5. National Association of County & City Health Officials. Developing a local health department strategic plan: a how-to guide. http://www.naccho.org/uploads/downloadable-resources/Programs/Public-Health-Infrastructure/StrategicPlanningGuideFinal.pdf. Published 2010. Accessed December 29, 2016.

6. KU Work Group for Community Health and Development. The Community Toolbox Web site. http://ctb.ku.edu/en. Accessed December 29, 2016.

7. Rochester P, Adams E, Porterfield DS, Holden D, McAleer K, Steele CB. Cancer Plan Index: a measure for assessing the quality of cancer plans. J Public Health Manag Pract. 2011;17(6):E12–E17. doi: 10.1097/PHH.0b013e318215a603.

8. Division of Cancer Prevention and Control. Cancer Plan Self-Assessment Tool. www.cdc.gov/cancer/ncccp/pdf/CancerSelfAssessTool.pdf. Accessed October 14, 2016.

9. Butterfoss FD, Dunět DO. State Plan Index: a tool for assessing the quality of state public health plans. Prev Chronic Dis. http://www.cdc.gov/pcd/issues/2005/apr/04_0089.htm. Published April 2005. Accessed October 14, 2016.

10. Leischow SJ, Best A, Trochim WM, et al. Systems thinking to improve the public’s health. Am J Prev Med. 2008;35(2 suppl):S196–S203. doi: 10.1016/j.amepre.2008.05.014.

11. Leischow SJ, Milstein B. Systems thinking and modeling for public health practice. Am J Public Health. 2006;96(3):403–405. doi: 10.2105/AJPH.2005.082842.

12. Wilkins N, Tsao B, Hertz M, Davis R, Klevens J. Connecting the Dots: An Overview of the Links Among Multiple Forms of Violence. Atlanta, GA: National Center for Injury Prevention and Control, Centers for Disease Control and Prevention; 2014.

13. Wilkins N, Myers L, Kuehl T, Bauman A, Hertz M. Connecting the Dots: state health department approaches to addressing shared risk and protective factors across multiple forms of violence. J Public Health Manag Pract. 2017;24:S32–S41. doi: 10.1097/PHH.0000000000000669.

14. Armstead T, Wilkins N, Doreson A, Allen C. Community indicators for evaluating violence prevention programs. J Public Health Manag Pract. 2017;24:S42–S50. doi: 10.1097/PHH.0000000000000681.

15. Wandersman A, Duffy J, Flaspohler P, et al. Bridging the gap between prevention research and practice: the interactive systems framework for dissemination and implementation. Am J Community Psychol. 2008;41(3/4):171–181. doi: 10.1007/s10464-008-9174-z.

16. National Association of County & City Health Officials. Mobilizing for Action through Planning and Partnerships Web site. http://www.naccho.org/programs/public-health-infrastructure/mapp. Accessed December 29, 2016.

17. Krippendorff K. Estimating the reliability, systematic error, and random error of interval data. Educ Psychol Meas. 1970;30:61–70.

18. Freelon D. ReCal OIR: ordinal, interval, and ratio intercoder reliability as a Web service. Int J Internet Sci. 2013;8(1):10–16.

19. Hayes AF, Krippendorff K. Answering the call for a standard reliability measure for coding data. Commun Methods Meas. 2007;1(1):77–89.

20. Krippendorff K. Reliability in content analysis: some common misconceptions and recommendations. Hum Commun Res. 2004;30(3):411–433.

21. Thigpen S, Puddy RW, Singer HH, Hall DM. Moving knowledge into action: developing the Rapid Synthesis and Translation Process within the Interactive Systems Framework. Am J Community Psychol. 2012;50(3/4):285–294. doi: 10.1007/s10464-012-9537-3.

22. Dunět DO, Butterfoss FD, Hamre R, Kuester S. Using the State Plan Index to evaluate the quality of state plans to prevent obesity and other chronic diseases. Prev Chronic Dis. 2005;2(2):A10.

23. Leeman J, Calancie L, Hartman MA, et al. What strategies are used to build practitioners’ capacity to implement community-based interventions and are they effective? A systematic review. Implement Sci. 2015;10(1):80. doi: 10.1186/s13012-015-0272-7.

24. Holmberg SD, Layton CM, Ghneim GS, et al. State plans for containment of pandemic influenza. Emerg Infect Dis. 2006;12(9):1414–1417. doi: 10.3201/eid1209.060369.

25. Ory MG, Sanner B, Vollmer Dahlke D, Melvin CL. Promoting public health through state cancer control plans: a review of capacity and sustainability. Front Public Health. 2015;3:40. doi: 10.3389/fpubh.2015.00040.

26. Thomas JC, Dasgupta N, Martinot A. Ethics in a pandemic: a survey of the state pandemic influenza plans. Am J Public Health. 2007;97(suppl 1):S26–S31. doi: 10.2105/AJPH.2006.093443.

27. Frieden T. Six components necessary for effective public health program implementation. Am J Public Health. 2014;104:17–22. doi: 10.2105/AJPH.2013.301608.
