Abstract

Objective

To develop an automated, data‐driven, and scale‐flexible method to delineate hospital service areas (HSAs) and hospital referral regions (HRRs) that are up‐to‐date, representative of all patients, and have the optimal localization of hospital visits.

Data Sources

The 2011 state inpatient database in Florida from the Healthcare Cost and Utilization Project.

Study Design

A network optimization method was used to redefine HSAs and HRRs by maximizing patient‐to‐hospital flows within each HSA/HRR while minimizing flows between them. We first constructed as many HSAs/HRRs as there are existing Dartmouth units in Florida and then compared the two sets of units on various metrics. Next, we sought to derive the optimal numbers and configurations of HSAs/HRRs that best reflect the modularity of hospitalization patterns in Florida.

Principal Findings

The HSAs/HRRs derived by our method outperform the Dartmouth units in balance of region size, market structure, shape, and, most important, localization of hospital visits.

Conclusions

The new method is automated, scale‐flexible, and effective in capturing the natural structure of the health care system. It has great potential for applications in delineating other health care service areas or in larger geographic regions.

Keywords: Hospital service area, hospital referral region, HCUP, community detection method, Dartmouth method

A reliable geographic unit is critical to researchers, practitioners, and policy makers involved in health care economics and policy, health services, and population health research (Kilaru et al. 2015). Such analysis units can be defined by health care markets (e.g., hospital service areas [HSAs] and hospital referral regions [HRRs] provided by the Dartmouth Atlas of Health Care, http://www.dartmouthatlas.org/), political boundaries (e.g., county, state), administrative areas (e.g., township), or census units (e.g., census tracts, metropolitan statistical areas). Although ancillary data sources are usually available for administrative, census, and political units, these units may be inadequate for health care research because they are (i) not intended for health care studies, and (ii) prone to the modifiable areal unit problem (MAUP) as a result of the multiple levels of aggregation available. By contrast, the Dartmouth HSAs/HRRs are designed to capture local hospitalization patterns and thus have been chosen by many studies as appropriate analysis units for health care research, such as examining the geographic variation of the health care system (Klauss et al. 2005; AHA [American Hospital Association] 2009; Zuckerman et al. 2010; MedPAC [Medicare Payment Advisory Commission] 2011, 2014; Zhang et al. 2012; Newhouse et al. 2013; Kilaru et al. 2015).

The Dartmouth HSAs/HRRs were defined through a three‐step process. The first step assigns all acute care hospitals to the town or city where they are located. Based on 1992–1993 Medicare hospitalization records, the second step uses a plurality rule that assigns each ZIP code to the town or city containing the hospitals most often used by local residents; the set of ZIP codes assigned to a town or city becomes a preliminary HSA. The third step examines the geographic contiguity of all ZIP codes in an HSA and reassigns any enclave ZIP codes to adjacent HSAs. In a similar fashion, the larger HRRs were subsequently constructed from HSAs based on cardiovascular surgery and neurosurgery referral patterns using the same data (Cooper 1996).
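To make the plurality rule of the second step concrete, the following Python sketch assigns each ZIP code to the town whose hospitals receive most of its residents' admissions and groups the ZIP codes into preliminary HSAs. It is an illustration under stated assumptions, not the Dartmouth implementation: the function names, the toy hospitals and admissions, and the tie‐breaking rule (the first town encountered wins) are hypothetical, and the third step (reassigning enclaves) is omitted.

```python
from collections import Counter, defaultdict

def plurality_assign(admissions, hospital_town):
    """Step 2 (sketch): assign each patient ZIP code to the town whose hospitals
    received the most admissions from that ZIP code."""
    counts = defaultdict(Counter)
    for patient_zip, hospital_id in admissions:
        counts[patient_zip][hospital_town[hospital_id]] += 1  # step 1: hospital -> town
    # most_common(1) returns the top town; ties keep the first town encountered (assumption).
    return {z: towns.most_common(1)[0][0] for z, towns in counts.items()}

def preliminary_hsas(assignment):
    """Group the ZIP codes assigned to the same town into one preliminary HSA."""
    hsas = defaultdict(list)
    for patient_zip, town in sorted(assignment.items()):
        hsas[town].append(patient_zip)
    return dict(hsas)

# Hypothetical toy data: hospital IDs, towns, and admission counts are made up.
hospital_town = {"H1": "Gainesville", "H2": "Gainesville", "H3": "Ocala"}
admissions = ([("32601", "H1")] * 3 + [("32601", "H3")]
              + [("34470", "H3")] * 2 + [("34470", "H2")] + [("32609", "H2")])

assignment = plurality_assign(admissions, hospital_town)
hsas = preliminary_hsas(assignment)
print(hsas)  # {'Gainesville': ['32601', '32609'], 'Ocala': ['34470']}
```

ZIP code 32601 sends three admissions to a Gainesville hospital and one to Ocala, so it joins the Gainesville HSA; the rule looks only at the plurality town and ignores the size of the margin.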

However, the Dartmouth HSAs and HRRs are not free of concerns and need to be improved and updated (Guagliardo et al. 2004; Jia, Xierali, and Wang 2015; Kilaru et al. 2015). The first concern is that they were defined about two decades ago, and residents' hospitalization patterns may have changed significantly since then as a result of changes in hospitals (e.g., hospital closures, mergers, or openings), population distribution, infrastructure (e.g., new roads), political regulations, and insurance policies. For example, three of the 114 Dartmouth HSAs in Florida no longer contain any hospital. Such units no longer reflect current hospital utilization patterns.

Second, the representativeness of the Dartmouth units across patient groups is questionable. As mentioned, the Dartmouth HSAs/HRRs were based on Medicare patients only and hence are not representative of other patient groups. Guagliardo et al. (2004) assessed the fit of the Dartmouth HSAs for pediatric patients in California and found that they were not appropriate for all age groups and service types. Jia, Xierali, and Wang (2015) compared the HSAs derived from Medicare records with HSAs based on all‐payer data in Florida in 2011 and found significantly different HSA configurations. Kilaru et al. (2015) found that how well Dartmouth units characterize inpatient hospital care patterns varies significantly by patient attributes (e.g., insurance type and use of emergency department services) as well as by HSA characteristics (e.g., number of hospitals and urban or rural location).

The final concern relates to the scientific soundness of the Dartmouth approach to HSA/HRR delineation. The Dartmouth method and other similar approaches (e.g., Klauss et al. 2005; Jia, Xierali, and Wang 2015) involve uncertainty or arbitrary choices (e.g., assigning an HSA or enclave to one of its adjacent HSAs to ensure geographic contiguity), and the processes are not completely automated. Additionally, without an explicit objective function in the delineation process, one cannot guarantee that the derived HSAs are optimal and robust in terms of their structures and connections. Given that the very goal of defining HSAs is to promote hospital localization, Dartmouth HSAs fall short in ensuring that patient‐to‐hospital flows are maximally localized within the HSAs.

The main goal of this study was to develop a completely automated, data‐driven, and scale‐flexible method to redelineate HSAs and HRRs that are up‐to‐date, representative of all patients, and have the optimal hospital localization patterns. The proposed method is based on an optimization technique often used in social network studies to define communities (regions) by repeatedly merging subregions (e.g., ZIP code areas) that maximize intraregional flows (e.g., patient‐to‐hospital flows) and minimize interregional flows (Zhao, Levina, and Zhu 2011). In essence, it is a data‐driven approach that more accurately detects patient communities hidden in the data, and it can be automated in a program for easy implementation. In addition, this method is scale‐flexible and can produce a number of HSAs (or HRRs) defined by the user, an important feature that can help policy analysts to examine policy effectiveness at multiple scales. The method is illustrated in a case study in Florida. Various indices are used to demonstrate the benefits of the method.

Methods

Study Area and Data Sources

The study area is the state of Florida. Florida shares land borders with only two states (Alabama and Georgia) to the north and is bordered by the Gulf of Mexico to the west, the Atlantic Ocean to the east, and the Straits of Florida to the south. This unique geography makes Florida an ideal study area, as only a very small population seeks hospital care outside the state (Jia, Xierali, and Wang 2015).

The major data source used in this research is the Florida 2011 state inpatient databases (SID) from the Healthcare Cost and Utilization Project (HCUP) sponsored by the Agency for Healthcare Research and Quality (AHRQ 2011). The SID includes individual inpatient discharge records for all patients, regardless of payer, from community hospitals in Florida in 2011, and contains variables such as principal and secondary diagnoses and procedures, payment source (e.g., Medicare, Medicaid, and private insurance), total charges, patient ZIP codes, and a unique hospital identifier (http://www.hcup-us.ahrq.gov/sidoverview.jsp). We linked the data with the 2013 American Hospital Association (AHA) survey through the unique hospital identifier and appended hospital information such as hospital ZIP codes, bed size, and hospital type. The Florida 2011 SID consists of 2,656,249 inpatient discharge records from 281 hospitals, including hospital transfers. For patients admitted to multiple hospitals, each admission is treated as a separate record in this analysis. We excluded 17,178 records associated with 13 hospitals that could not be identified, 22,733 records without residence ZIP codes, 174,004 records from hospitals not defined as general medical and surgical hospitals by the AHA survey (e.g., pediatric, psychiatric, long‐term acute care, rehabilitation, women's, and other specialty centers), and 96,302 admissions of out‐of‐state patients. After preprocessing, 2,346,032 all‐payer records remained (88.32 percent of the original records), associated with 202 hospitals (72 percent of all hospitals) and 983 ZIP codes (100 percent of all ZIP codes) in Florida in 2011. ZIP code areas were represented by their population‐weighted centroids (calibrated from population data at the census block level) for improved spatial accuracy, especially for large rural ZIP code areas (Wang 2015:78). See Figure S1 for more details about our study population.
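The exclusion steps above can be checked with a few lines of Python. This only re‐derives the reported totals from the counts given in the text; it is not part of the authors' preprocessing code.

```python
# Consistency check of the record counts reported above (numbers taken from the text).
original_records = 2_656_249
excluded = {
    "records from 13 unidentifiable hospitals": 17_178,
    "records without a residence ZIP code": 22_733,
    "records from non-general medical/surgical hospitals": 174_004,
    "admissions of out-of-state patients": 96_302,
}

retained = original_records - sum(excluded.values())
print(f"retained records: {retained:,}")                        # retained records: 2,346,032
print(f"share of original: {retained / original_records:.2%}")  # share of original: 88.32%
print(f"share of hospitals kept: {202 / 281:.0%}")              # share of hospitals kept: 72%
```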

The Community Detection Method

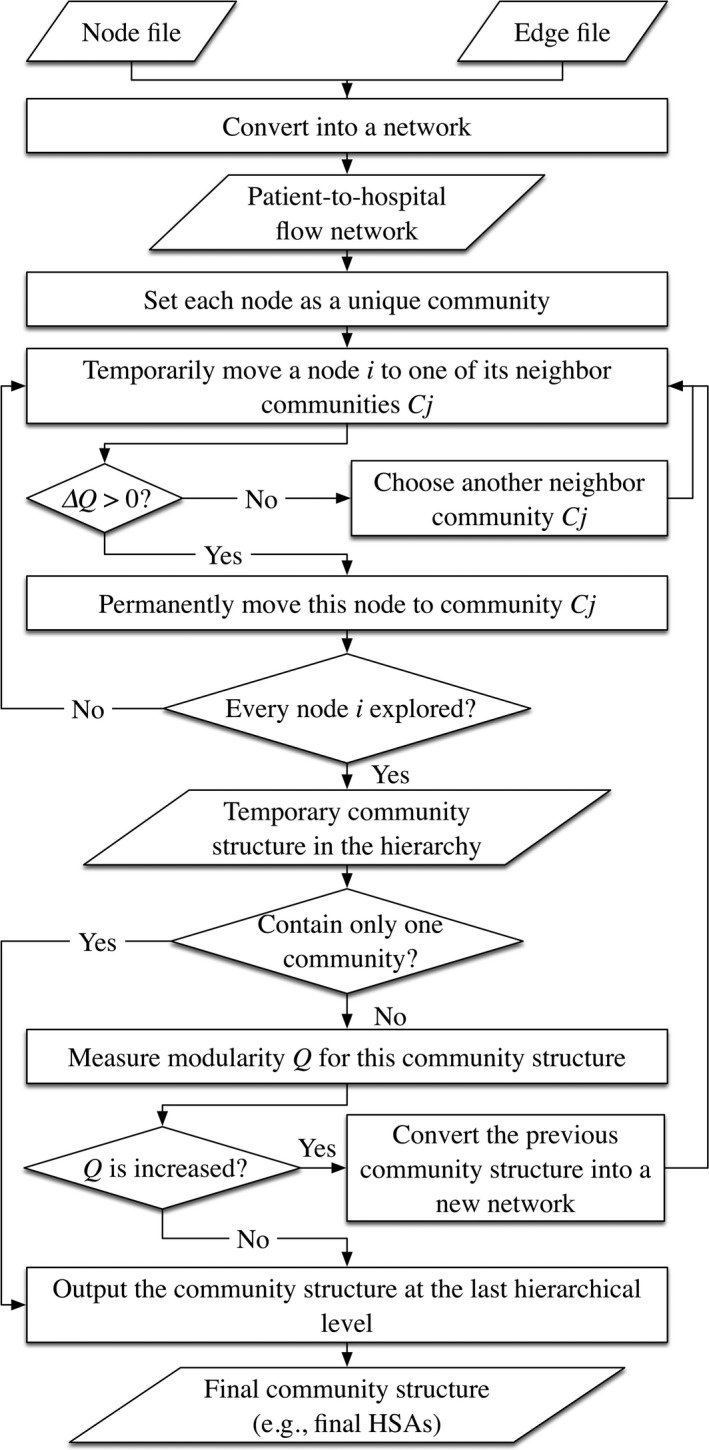

This research applies the Louvain community detection method (Blondel et al. 2008) to delineate HSAs and HRRs. Widely used in social network studies, community detection partitions the nodes of a network into natural groups (communities) of nodes through an optimization process (Newman 2004a, 2010). The Louvain method is an agglomerative (i.e., bottom‐up) hierarchical clustering approach. Initially, the algorithm treats every node as its own community and then successively merges communities into larger ones, choosing at each step the best agglomeration with respect to the previous community configuration, until all nodes in the network are grouped into one large community or no further improvement in the community configuration is observed.

To examine the quality of each agglomeration (i.e., the configuration of the resulting communities), Newman and Girvan (2004) designed a measure called modularity, which is now the most widely accepted quality measure. Similar in principle to a statistical significance test, it compares the total number of edges (or the total edge weight, for a weighted network) falling within communities in a given network with that expected in a null model (i.e., a random network); a good division has more within‐community edges than expected by chance (Newman 2004a). Mathematically, the modularity Q of a weighted network is formulated as (Newman 2004b)

$$Q = \frac{1}{2m}\sum_{i,j}\left[A_{ij} - \frac{k_i k_j}{2m}\right]\delta(c_i, c_j) \tag{1}$$

where $A_{ij}$ is the weight of the edge between nodes i and j, $m = \frac{1}{2}\sum_{ij} A_{ij}$ is the sum of the weights of all edges in the network, $k_i = \sum_j A_{ij}$ is the sum of the weights of edges linked to node i (also termed the degree of node i), $c_i$ is the community to which node i is assigned, and $\delta(x, y)$ equals 1 when x = y and 0 otherwise. In essence, the equation calculates the difference in total within‐community edge weight between the observed flow network and an expected (random) flow network; a higher modularity score indicates a better division (i.e., a more stable community structure). A modularity score of 0 indicates that the within‐community edge weight is no greater than expected by chance, and thus no community structure exists in the network; scores approaching 1 indicate a very strong community structure. In practice, Q ranges between 0.3 and 0.7 for most real‐world networks (Newman and Girvan 2004; Newman 2006). Community detection is therefore ultimately a modularity optimization process: it searches for the agglomeration that produces communities with the maximal modularity score, in other words, maximal intracommunity flows and minimal intercommunity flows (Zhao, Levina, and Zhu 2011).
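Equation (1) can be computed directly from a weighted adjacency structure. The following minimal Python sketch is illustrative only: the dictionary‐of‐dictionaries representation, the function names, and the two‐triangle toy network are assumptions, not the study's data or software. It uses the identity that the null‐model term summed over all pairs in one community equals the squared total degree of that community divided by 2m.

```python
from collections import defaultdict

def modularity(adj, community):
    """Equation (1) for a weighted, undirected network.

    adj: symmetric adjacency, adj[i][j] == adj[j][i] == A_ij.
    community: node -> community label c_i.
    """
    k = {i: sum(nbrs.values()) for i, nbrs in adj.items()}  # k_i = sum_j A_ij
    two_m = sum(k.values())                                 # 2m = sum_ij A_ij
    # Observed: sum of A_ij over ordered pairs (i, j) in the same community.
    observed = sum(w for i, nbrs in adj.items() for j, w in nbrs.items()
                   if community[i] == community[j])
    # Expected under the null model: sum_ij k_i k_j / 2m within a community,
    # which equals (sum of k_i in the community)^2 / 2m.
    k_sum = defaultdict(float)
    for i, ki in k.items():
        k_sum[community[i]] += ki
    expected = sum(s * s for s in k_sum.values()) / two_m
    return (observed - expected) / two_m

def undirected(edges):
    """Build a symmetric adjacency from (u, v, weight) triples."""
    adj = defaultdict(dict)
    for u, v, w in edges:
        adj[u][v] = adj[u].get(v, 0) + w
        adj[v][u] = adj[v].get(u, 0) + w
    return dict(adj)

# Toy network: two triangles joined by a single edge (unit weights).
adj = undirected([("a", "b", 1), ("b", "c", 1), ("a", "c", 1),
                  ("d", "e", 1), ("e", "f", 1), ("d", "f", 1), ("c", "d", 1)])
split = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
lumped = dict.fromkeys(adj, 0)
print(round(modularity(adj, split), 4), modularity(adj, lumped))  # 0.3571 0.0
```

Splitting the toy network into its two triangles gives Q = 5/14 ≈ 0.357, whereas putting every node into a single community gives Q = 0, matching the interpretation of a zero score above.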

Here, we illustrate the implementation of the Louvain community detection method in delineating HSAs in Florida based on its 2011 SID. First, the all‐payer patient‐to‐hospital flows extracted from the SID are used to construct a network with (i) ZIP code centroids (of both patient residential ZIP codes and hospital ZIP codes) as its nodes, (ii) flows between a patient ZIP code and a hospital ZIP code as its edges, and (iii) flow volumes as the edge weights. This network is represented by two ASCII files, one for nodes and the other for the edges between them, which are then fed into the community detection algorithm. Figure 1 illustrates this flow network constructed from the Florida 2011 SID. More flows are observed in central Florida and along the western and eastern coastlines where major metropolitan areas are located. One may also observe a distance decay effect: flow volumes decrease as the distance between patients and hospitals increases.
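A minimal sketch of the network construction step, assuming each discharge record has already been reduced to a (patient ZIP, hospital ZIP) pair. The function names, the space‐separated layout of the two text files, and the decision to treat flows as undirected (so that they fit the undirected modularity of Equation 1) are assumptions; the text does not specify the file format. The ZIP codes below are hypothetical.

```python
from collections import Counter

def build_flow_network(records):
    """Aggregate discharge records into a weighted ZIP-to-ZIP network.

    records: iterable of (patient_zip, hospital_zip) pairs, one per discharge.
    Assumption: flows are treated as undirected, so each ZIP pair is stored sorted.
    """
    flows = Counter(tuple(sorted(pair)) for pair in records)  # edge -> flow volume
    nodes = sorted({z for edge in flows for z in edge})
    return nodes, dict(flows)

def to_ascii(nodes, flows):
    """Render the node file (one ZIP per line) and the edge file ('zip_a zip_b weight')."""
    node_text = "\n".join(nodes)
    edge_text = "\n".join(f"{a} {b} {w}" for (a, b), w in sorted(flows.items()))
    return node_text, edge_text

# Hypothetical discharges; the last one is a patient treated in their own ZIP code.
records = [("32601", "32610"), ("32603", "32610"), ("32601", "32610"), ("32610", "32610")]
nodes, flows = build_flow_network(records)
node_text, edge_text = to_ascii(nodes, flows)
print(edge_text)
```

A patient treated in a hospital located in their own ZIP code produces a self‐loop, which stays inside whichever community that ZIP code eventually joins.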

Figure 1.

All‐Payer‐Based Patient‐to‐Hospital Flow Network in Florida 2011

- Note. Line width is proportional to the flow volume on it. [Color figure can be viewed at wileyonlinelibrary.com]

Specifically, the algorithm iterates a two‐phase process. The first phase treats each ZIP code centroid (hereafter, node) in the network as its own community (e.g., HSA); then, for each node i, it considers removing i from its current community and placing it in one of its neighboring communities. A local modularity gain is calculated for each candidate to identify the destination community that yields the largest increase in modularity; if no move yields a positive gain, node i stays in its original community. Equation (2) gives the local modularity gain from moving an isolated node i into a neighboring community $C_j$ (Blondel et al. 2008):

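$$\Delta Q = \left[\frac{\Sigma_{in} + 2k_{i,in}}{2m} - \left(\frac{\Sigma_{total} + k_i}{2m}\right)^2\right] - \left[\frac{\Sigma_{in}}{2m} - \left(\frac{\Sigma_{total}}{2m}\right)^2 - \left(\frac{k_i}{2m}\right)^2\right] \tag{2}$$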

where $\Sigma_{in}$ is the sum of the weights of all edges inside $C_j$ (each edge counted from both of its ends, consistent with Equation 1), $\Sigma_{total}$ is the sum of the weights of all edges incident to nodes in $C_j$, $k_i$ is the sum of the weights of edges linked to node i, and $k_{i,in}$ is the sum of the weights of edges from node i to nodes in $C_j$. This process is repeated for all nodes until no further modularity gain can be obtained. The community configuration (i.e., overall modularity) returned by the first phase, however, is merely a local optimum in this hierarchical approach. The second phase therefore builds a new network whose nodes are the communities identified in the first phase; the weight of an edge between two new nodes is the sum of the weights of the edges between nodes in the corresponding two communities, and edges within a community become a self‐loop. The first phase is then reapplied to this new network. The two‐phase process is repeated until only one community remains or no positive change in overall modularity can be achieved. Figure 2 shows the workflow of this method.
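To check Equation (2) numerically, the sketch below compares the formula with the change in Equation (1) computed directly for a toy network of two triangles joined by one edge. The function names and toy values are illustrative assumptions. Because $\Sigma_{in}$ counts each internal edge from both ends, $k_{i,in}$ enters with a factor of 2, and the gain simplifies to $k_{i,in}/m - \Sigma_{total}k_i/(2m^2)$.

```python
def delta_q(sigma_in, sigma_total, k_i, k_i_in, m):
    """Equation (2): modularity gain from moving isolated node i into community C_j.

    sigma_in counts each edge inside C_j from both ends (as in Equation 1),
    sigma_total is the summed degree of nodes in C_j, and m is the total edge weight.
    """
    after = (sigma_in + 2 * k_i_in) / (2 * m) - ((sigma_total + k_i) / (2 * m)) ** 2
    before = sigma_in / (2 * m) - (sigma_total / (2 * m)) ** 2 - (k_i / (2 * m)) ** 2
    return after - before

def q_from_totals(totals, m):
    """Equation (1) written per community: sum over c of in_c/(2m) - (tot_c/(2m))^2."""
    return sum(s_in / (2 * m) - (s_tot / (2 * m)) ** 2 for s_in, s_tot in totals)

# Toy network: triangles a-b-c and d-e-f joined by edge c-d (unit weights), so m = 7.
m = 7
before = q_from_totals([(2, 4), (0, 3), (6, 7)], m)  # communities {a,b}, {c}, {d,e,f}
after = q_from_totals([(6, 7), (6, 7)], m)           # communities {a,b,c}, {d,e,f}
gain = delta_q(sigma_in=2, sigma_total=4, k_i=3, k_i_in=2, m=m)
print(round(gain, 4), round(after - before, 4))      # 0.1633 0.1633
```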

Figure 2.

Workflow of the Proposed Method
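The two‐phase workflow can be sketched in pure Python as follows. This is a minimal illustration of the procedure described by Blondel et al. (2008), not the authors' program: the function names, the deterministic node order, the 1e‐12 tolerance, and the toy network are assumptions. Like the original algorithm, it is a greedy heuristic whose result can depend on node order, so it returns the best partition it finds at each level.

```python
from collections import defaultdict

def one_level(adj):
    """Phase 1: repeatedly move single nodes to the neighbouring community with the
    largest positive modularity gain (Eq. 2) until no move improves modularity."""
    k = {u: sum(nbrs.values()) for u, nbrs in adj.items()}  # k_i (self-loops counted once)
    two_m = sum(k.values())                                 # 2m
    comm = {u: u for u in adj}                              # each node starts alone
    tot = dict(k)                                           # Sigma_total of each community
    moved_any, improved = False, True
    while improved:
        improved = False
        for u in adj:
            old = comm[u]
            links = defaultdict(float)                      # k_{u,in} per neighbouring community
            for v, w in adj[u].items():
                if v != u:
                    links[comm[v]] += w
            tot[old] -= k[u]                                # take u out of its community
            # Eq. (2) multiplied by m: the common factor does not change which move is best.
            best, best_gain = old, links[old] - tot[old] * k[u] / two_m
            for c, w in links.items():
                gain = w - tot[c] * k[u] / two_m
                if gain > best_gain + 1e-12:
                    best, best_gain = c, gain
            tot[best] += k[u]
            if best != old:
                comm[u] = best
                improved = moved_any = True
    return comm, moved_any

def aggregate(adj, comm):
    """Phase 2: communities become nodes; edge weights are summed, and edges inside a
    community become a self-loop."""
    new = defaultdict(lambda: defaultdict(float))
    for u, nbrs in adj.items():
        for v, w in nbrs.items():
            new[comm[u]][comm[v]] += w
    return {c: dict(nbrs) for c, nbrs in new.items()}

def louvain(adj):
    """Repeat both phases until no node moves; return the membership of the original
    nodes at every level (finest first)."""
    levels, membership = [], {u: u for u in adj}
    while True:
        comm, moved = one_level(adj)
        if not moved:
            break
        membership = {u: comm[c] for u, c in membership.items()}
        levels.append(membership)
        adj = aggregate(adj, comm)
    return levels

def modularity(adj, membership):
    """Equation (1) on the original network."""
    k = {u: sum(n.values()) for u, n in adj.items()}
    two_m = sum(k.values())
    inside = sum(w for u, n in adj.items() for v, w in n.items()
                 if membership[u] == membership[v])
    tot = defaultdict(float)
    for u, ku in k.items():
        tot[membership[u]] += ku
    return (inside - sum(t * t for t in tot.values()) / two_m) / two_m

# Toy network: two triangles joined by one edge (unit weights, hypothetical).
adj = defaultdict(dict)
for u, v, w in [("a", "b", 1), ("b", "c", 1), ("a", "c", 1),
                ("d", "e", 1), ("e", "f", 1), ("d", "f", 1), ("c", "d", 1)]:
    adj[u][v] = adj[u].get(v, 0) + w
    adj[v][u] = adj[v].get(u, 0) + w
adj = dict(adj)

for level in louvain(adj):
    print(len(set(level.values())), round(modularity(adj, level), 4))  # 2 0.3571
```

On the toy network the first pass recovers the two triangles; aggregating them into two nodes offers no further positive gain, so the procedure stops after one level.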

From the extracted 2,346,032 records, we further selected those associated with cardiovascular surgery and neurosurgery and applied the above workflow again to delineate HRRs in Florida.

Besides the Louvain method, other community detection methods exist in the literature (see reviews in Schaeffer 2007; Fortunato 2010; and Newman 2010). However, this approach has one significant advantage over others: it is scale‐flexible. It records every level of the community hierarchy and thus facilitates the investigation of network communities at multiple scales (Fortunato 2010; Ratti et al. 2010). Therefore, one may trace back through all hierarchical levels to find the desired community structure. This scale‐flexible nature can help researchers and policy makers examine the effectiveness of planning and policies targeted at multiple scales, or the impacts of the MAUP.
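Because every level of the hierarchy is recorded, a user can pick the level whose number of communities is closest to a desired count. The sketch below assumes the levels are given as a list of node‐to‐community dictionaries (finest first), as a Louvain run could return them; the hierarchy is a hard‐coded toy, and the function name and tie‐breaking rule are hypothetical. Since Louvain levels offer only a few discrete community counts, matching an exact target such as 114 HSAs may require more than this simple selection.

```python
def pick_level(levels, target):
    """Return (index, membership) of the recorded level whose community count is
    closest to target; ties are resolved toward the finer (earlier) level."""
    def count(membership):
        return len(set(membership.values()))
    best = min(range(len(levels)), key=lambda i: (abs(count(levels[i]) - target), i))
    return best, levels[best]

# Hypothetical hierarchy for eight ZIP codes: level 0 has 4 communities, level 1 has 2.
zips = ["z1", "z2", "z3", "z4", "z5", "z6", "z7", "z8"]
levels = [
    dict(zip(zips, ["A", "A", "B", "B", "C", "C", "D", "D"])),
    dict(zip(zips, ["AB", "AB", "AB", "AB", "CD", "CD", "CD", "CD"])),
]
print(pick_level(levels, 4)[0], pick_level(levels, 2)[0], pick_level(levels, 3)[0])  # 0 1 0
```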

Evaluation Metrics

We selected nine metrics to characterize HSAs/HRRs and evaluate the performance of the proposed method. These metrics include the localization index (LI), market share index (MSI), and net patient flow (NPF), which are commonly used to characterize health care service regions (Kilaru et al. 2015), as well as geometric compactness, region size balance, and hospital market structure, which are common measures for examining regionalization products from a geographic perspective (Guo 2008). The most widely used indicator of local hospitalization patterns, LI is the proportion of patients who are treated in the same HSA/HRR as where they live; it is designed to capture the propensity of patients to visit local hospitals (Guagliardo et al. 2004). A higher LI indicates a more accurate or representative delineation of HSA or HRR boundaries. MSI is the proportion of an HSA's or HRR's patients who do not live in the region (Kilaru et al. 2015). It represents the tendency of hospitals in an HSA (or HRR) to attract out‐of‐area residents; hence, a lower value generally means better delineation. NPF is the ratio of incoming to outgoing patients, that is, non‐residents treated inside the HSA (or HRR) versus residents treated outside it (Klauss et al. 2005). A value >1 indicates that more patients travel into the area for hospital care than leave it, while a value <1 indicates that more patients leave the area for hospital care than enter it.
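The three localization metrics can be computed from a table of patient‐to‐hospital flows and a ZIP‐to‐region assignment. This minimal sketch follows the definitions above; the input layout, the function name, and returning NaN when a denominator is zero (e.g., a region with no hospital) are assumptions, and the flows are made up.

```python
from collections import defaultdict

def region_metrics(flows, region_of):
    """flows: {(patient_zip, hospital_zip): discharges}; region_of: zip -> region id.
    Returns {region: (LI, MSI, NPF)} following the definitions in the text."""
    residents = defaultdict(float)  # discharges of patients living in the region
    local = defaultdict(float)      # ... of which treated in the region's own hospitals
    treated = defaultdict(float)    # discharges from hospitals located in the region
    for (patient_zip, hospital_zip), n in flows.items():
        home, care = region_of[patient_zip], region_of[hospital_zip]
        residents[home] += n
        treated[care] += n
        if home == care:
            local[home] += n

    def ratio(a, b):
        return a / b if b else float("nan")  # assumption: undefined ratios reported as NaN

    metrics = {}
    for r in residents.keys() | treated.keys():
        incoming = treated[r] - local[r]     # non-residents treated inside r
        outgoing = residents[r] - local[r]   # residents treated outside r
        metrics[r] = (ratio(local[r], residents[r]),  # LI
                      ratio(incoming, treated[r]),     # MSI
                      ratio(incoming, outgoing))       # NPF
    return metrics

# Hypothetical flows between three ZIP codes grouped into two regions.
flows = {("z1", "z1"): 50, ("z2", "z1"): 30, ("z1", "z3"): 20, ("z3", "z3"): 60, ("z3", "z1"): 10}
region_of = {"z1": "A", "z2": "A", "z3": "B"}
metrics = region_metrics(flows, region_of)
for r in sorted(metrics):
    print(r, [round(x, 3) for x in metrics[r]])  # A [0.8, 0.111, 0.5] / B [0.857, 0.25, 2.0]
```

Region A keeps 80 percent of its residents' discharges (LI = 0.8) but sends out more patients than it receives (NPF = 0.5), while region B is a net importer (NPF = 2.0).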

Different from these metrics focused on health care–related characteristics, the following metrics focus on the geographic structure of HSAs/HRRs. Geographic compactness, which is commonly used in evaluating redistricting plans (Siegel 1996), characterizes the regularity of a region's shape by the perimeter–area corrected ratio, PAC = P/(3.54√A), where P is the perimeter and A the area of the region; because 3.54 ≈ 2√π, a circle has a PAC of about 1, and less regular shapes have higher values. A geographically compact region (i.e., one with a lower PAC value) is consolidated rather than spread out, or, put another way, packed around its central point (Shirabe and Tomlin 2002); compactness has been shown to benefit systems planning (Mu and Wang 2008).
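A minimal sketch of the PAC calculation for a single polygon, using the shoelace formula for area. It assumes planar (projected) coordinates and a simple polygon without holes or multiple parts; real HSA boundaries assembled from ZIP code areas would typically be handled with a GIS library instead. Function names and shapes are illustrative.

```python
import math

def polygon_area(coords):
    """Shoelace formula for a simple polygon given as [(x, y), ...] in projected units."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(coords, coords[1:] + coords[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2

def polygon_perimeter(coords):
    """Sum of edge lengths, closing the ring back to the first vertex."""
    return sum(math.dist(p, q) for p, q in zip(coords, coords[1:] + coords[:1]))

def pac(coords):
    """PAC = P / (3.54 * sqrt(A)); 3.54 is about 2*sqrt(pi), so a circle scores about 1."""
    return polygon_perimeter(coords) / (3.54 * math.sqrt(polygon_area(coords)))

square = [(0, 0), (1, 0), (1, 1), (0, 1)]
strip = [(0, 0), (4, 0), (4, 1), (0, 1)]
circle = [(math.cos(2 * math.pi * i / 360), math.sin(2 * math.pi * i / 360)) for i in range(360)]
print(round(pac(circle), 3), round(pac(square), 3), round(pac(strip), 3))
```

The 360‐vertex circle scores close to 1, the unit square about 1.13, and the elongated 4 × 1 strip about 1.41, so less compact shapes receive higher PAC values.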

Balance in region size, that is, relatively even numbers of subregions (here, ZIP codes), hospitals, patients, and population across HSAs (or HRRs), leads to regions that are more comparable. This is an important property desirable for any regionalization method (Guo 2008), so that the constructed regions provide fairer samples for statistical analysis (Wang, Guo, and McLafferty 2012). It also avoids the need for a mitigation measure adopted in some studies, namely assigning different weights to samples that differ drastically in population (Wang 2015:122–126).
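Balance can be summarized per measure by the spread of region sizes. This sketch uses the population standard deviation and the range (an assumption; the text does not state which standard deviation is used) and two hypothetical partitions with the same number of regions and the same total, so their means coincide, as with the Dartmouth‐comparable and Dartmouth units, while their spreads differ.

```python
from statistics import mean, pstdev

def balance_summary(sizes):
    """Summarize one balance measure (e.g., ZIP codes, hospitals, inpatients, or
    population per region) by its mean, population standard deviation, and range."""
    values = list(sizes.values())
    return {"mean": mean(values), "sd": pstdev(values), "range": max(values) - min(values)}

# Two hypothetical partitions with the same number of regions and the same total size.
balanced = {"R1": 10, "R2": 11, "R3": 9}
unbalanced = {"R1": 2, "R2": 25, "R3": 3}
for name, sizes in (("balanced", balanced), ("unbalanced", unbalanced)):
    s = balance_summary(sizes)
    print(name, s["mean"], round(s["sd"], 2), s["range"])
```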

Herfindahl Index (HHI), an economic concept measuring the amount of competition among firms in a local market, is adopted here to characterize the hospital market structure, specifically, the competitive environment in an HSA (or HRR) in terms of the share of admissions to local hospitals. Investigating HHI may help researchers and policy makers better understand hospital behaviors and answer such questions as “are there higher costs or more specialized services, on average, when hospitals are located in more competitive hospital market areas?” (Garnick et al. 1987). HHI is the sum of the squared market shares of all hospitals in the market (i.e., HSA or HRR) multiplied by 10,000, where the market share of a hospital is its share of inpatient admissions in that HSA (or HRR). For example, an HSA with only one hospital has an HHI of 10,000. By contrast, an HSA with many hospitals that have relatively even shares of inpatient admissions has an HHI near 0. Conventionally, a hospital market is considered highly concentrated (or monopolistic) if the HHI is larger than 2,500, moderately concentrated if between 1,500 and 2,500, unconcentrated if between 100 and 1,500, and highly competitive if below 100 (Cutler and Morton 2013).
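The HHI definition and the conventional thresholds translate directly into code. In this sketch the function names and admission counts are hypothetical, and assigning the exact boundary values (1,500 and 100) to the more concentrated class is an assumption, since the text gives overlapping ranges.

```python
def hhi(admissions):
    """Herfindahl Index: sum of squared admission shares of the hospitals in one
    HSA (or HRR), multiplied by 10,000."""
    total = sum(admissions)
    return 10_000 * sum((a / total) ** 2 for a in admissions)

def market_structure(h):
    """Thresholds from the text; boundary values go to the more concentrated class
    (assumption)."""
    if h > 2_500:
        return "highly concentrated"
    if h >= 1_500:
        return "moderately concentrated"
    if h >= 100:
        return "unconcentrated"
    return "highly competitive"

# Hypothetical admission counts for the hospitals of four HSAs.
for admissions in ([500], [400, 300, 200, 100], [10] * 20, [1] * 200):
    h = hhi(admissions)
    print(round(h), market_structure(h))
```

A single hospital yields the maximum of 10,000, while 200 equally sized hospitals yield 50, illustrating how HHI falls as admissions are spread over more hospitals.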

Results and Comparison to the Dartmouth Units

We designed an experiment to evaluate the performance of our method in comparison with the Dartmouth method. First, taking advantage of the scale‐flexible feature of the method, we used it to construct as many HSAs/HRRs as there are Dartmouth units in Florida; these are hereafter referred to as Dartmouth‐comparable units. We then applied the aforementioned metrics to characterize and compare the two sets of units. Because the Dartmouth‐comparable units may not have the global‐optimal modularity, the next section derives the HSAs/HRRs with the global‐optimal modularity value and applies the same metrics to explore the “optimal” (or “natural”) configuration of the hierarchical hospitalization system in Florida.

Florida has 114 HSAs and 18 HRRs delineated by Dartmouth. We therefore constructed 114 Dartmouth‐comparable HSAs from the all‐payer‐based patient‐to‐hospital flow network (see Figure 1) and 18 Dartmouth‐comparable HRRs from the flow network of the cardiovascular surgery and neurosurgery subset. Given that modularity typically ranges from 0.3 to 0.7 in real‐world networks, the modularity scores of the Dartmouth‐comparable units (0.63 for HSAs and 0.8 for HRRs) are high and indicate a significant nonrandom region structure in the newly derived units. The following compares these units with the Dartmouth units on the nine indices.

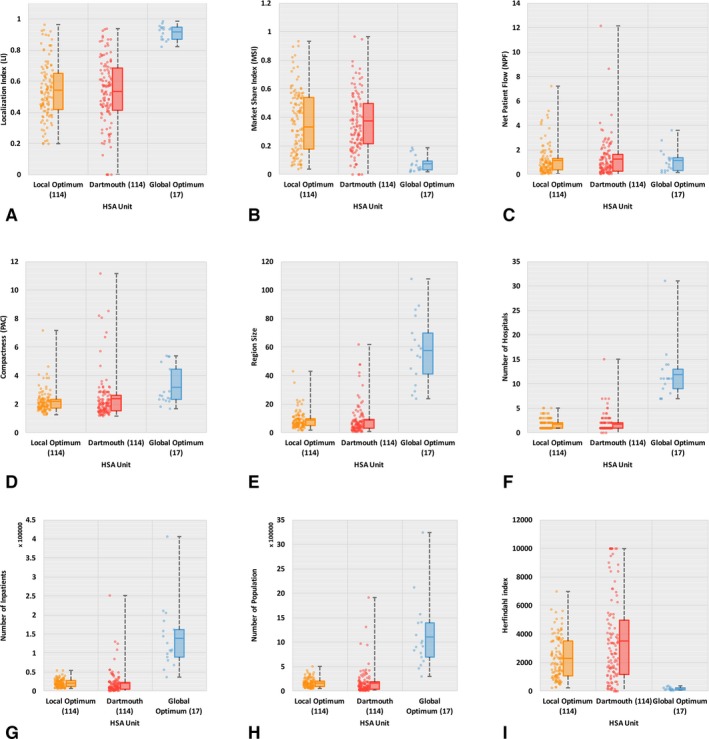

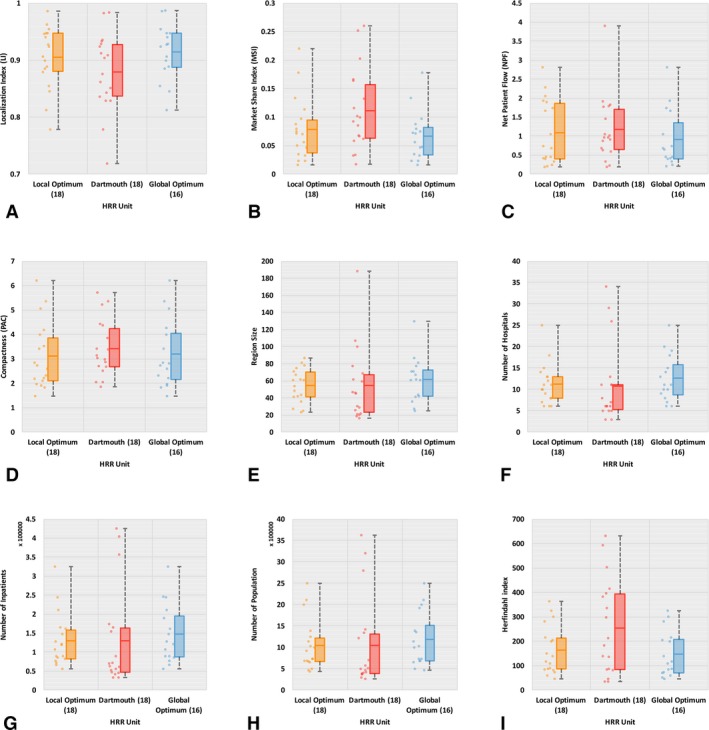

Localization index is the proportion of patients that are treated in the same HSA/HRR as where they live, and a higher value represents more favorable delineation. As shown in Figure 3A, the mean LI in Dartmouth‐comparable HSAs (labeled “Local Optimum”) is slightly higher than that in the Dartmouth HSAs (0.54 vs. 0.53), though not statistically significant. For HRRs (see Figure 4A), Dartmouth‐comparable HRRs (labeled “Local Optimum”) return a higher mean LI than Dartmouth HRRs (0.9 vs. 0.87) and the difference is significant at the 0.01 level. In addition, a significantly smaller range of LI is observed in both Dartmouth‐comparable HSAs and HRRs. That is to say, our modularity optimization approach shows an advantage in improving hospitalization LI, and such advantage is more evident in HRRs than HSAs.

Figure 3.

Basic Statistics of Nine Criteria for Evaluating HSAs

- Note. The line in the middle of a box represents the mean, not the median, value. [Color figure can be viewed at wileyonlinelibrary.com]

Figure 4.

Basic Statistics of Nine Criteria for Evaluating HRRs

- Note. The line in the middle of a box represents the mean, not the median, value. [Color figure can be viewed at wileyonlinelibrary.com]

Market share index reflects the proportion of an HSA's or HRR's patients who do not live in the region; therefore, a lower value indicates better delineation. As illustrated in Figures 3B and 4B, mean MSI is lower in Dartmouth‐comparable units than in Dartmouth ones (0.33 vs. 0.37 for HSAs, and 0.09 vs. 0.11 for HRRs; significant at the 0.01 level). This again indicates the better performance of our method in delineating hospital service regions with improved local health care patterns.

Net patient flow is the ratio of incoming patients to outgoing patients, and a value closer to 1 indicates a more balanced health care service structure, that is, a better localization pattern in the region. Both Figures 3C and 4C show lower mean NPF values in Dartmouth‐comparable units than in Dartmouth units (1.15 vs. 1.24 for HSAs and 1.09 vs. 1.17 for HRRs), and the differences are significant at the 0.01 level. Again, the smaller deviation of NPFs from 1, as well as their narrower range, in Dartmouth‐comparable units demonstrates improved local health care patterns.

Compactness is measured by PAC as explained previously. As shown in Figures 3D and 4D, the Dartmouth‐comparable HSAs and HRRs have lower mean PAC values than Dartmouth ones (2.18 vs. 2.4 for HSAs, and 3.1 vs. 3.44 for HRRs, both significant at the 0.01 level), indicating that the newly constructed HSAs and HRRs are more regularly shaped and consolidated. The wider variability in compactness of Dartmouth HSAs is especially noticeable.

Balance in region size is measured in four aspects in this study: subregion count, number of hospitals, number of inpatients, and population size. In practice, relatively even region sizes are often desired by planners (Guo 2008). Figures 3E and 4E show more balanced subregion counts (here referred to simply as region sizes) in Dartmouth‐comparable units than in Dartmouth ones: the region sizes of Dartmouth‐comparable units have narrower ranges and lower standard deviations. Because we generated the same number of HSAs (and HRRs) as Dartmouth units, the mean region size of the two sets is identical. As shown in Figures 3F–H and 4F–H, the number of hospitals per HSA (and HRR) also varies within a significantly narrower range, with a lower standard deviation, in Dartmouth‐comparable HSAs (and HRRs) than in Dartmouth units. Similar findings are observed for the number of inpatients and population size. That is to say, our Dartmouth‐comparable units are more balanced in size, in hospital count as well as in inpatients and population served, and are therefore more comparable across HSAs or across HRRs.

Herfindahl Index measures the competitive environment in an HSA (or HRR) in terms of the share of admissions to local hospitals; a smaller value indicates more competition (i.e., more balanced shares of inpatient admissions) among hospitals in the same region. Dartmouth HSAs show a highly concentrated or monopolistic market structure (mean HHI = 3510), whereas Dartmouth‐comparable HSAs show only a moderately concentrated structure (mean HHI = 2275). At the HRR level, both units have unconcentrated markets, but Dartmouth‐comparable HRRs (mean HHI = 162) are more competitive than Dartmouth HRRs (mean HHI = 255). See Figures 3I and 4I for more details.

In summary, the newly derived HSAs and HRRs are more favorable than the Dartmouth units in all nine indices used: localization of hospital visits (in LI, MSI, and NPF), compactness in shape, balanced region sizes (in four measures), and market structure.

Exploratory Analysis of Optimal Configuration of Hospital Service Areas

As mentioned previously, the Dartmouth‐comparable units may not represent the best feasible configuration of the hospital service market in Florida. In fact, the 114 Dartmouth‐comparable HSAs and 18 HRRs have only locally optimal modularity scores. This raises a question: is there an optimal number of HSAs (or HRRs) that best captures the “natural” structure of the health care system?

Based on the patient‐to‐hospital flow pattern for all patients, our method yielded 17 HSAs with the global‐optimal modularity score of 0.85 (see Figure S2), hereafter referred to as “global‐optimal HSAs.” For the cardiac and neurosurgery patient flow pattern used to delineate HRRs, our method yielded 16 HRRs with the global‐optimal modularity score of 0.83 (the graph of modularity scores vs. HRR size is not shown here), hereafter referred to as “global‐optimal HRRs.” Note that the number of global‐optimal HRRs derived from the specialized‐care discharge pattern is just one fewer than the number of global‐optimal HSAs based on all patients, and their configurations (17 HSAs vs. 16 HRRs) are largely consistent (see Figure 5A and B). Both numbers are also very close to the 18 Dartmouth HRRs. To our knowledge, this is the first such analysis in the context of the health care market, and thus both the results and the related discussion are exploratory in nature.

Figure 5.

Major Discharge Flows and Global‐Optimal Units in Florida: (A) HSAs with ≥200 Inpatient Discharges and (B) HRRs with ≥50 Inpatient Discharges

- Note. White space is water areas. [Color figure can be viewed at wileyonlinelibrary.com]

The most remarkable improvement is observed in the health care localization pattern. The mean LI increased from 0.54 in the 114 Dartmouth‐comparable HSAs (0.53 in the 114 traditional Dartmouth HSAs) to 0.92 in the 17 global‐optimal HSAs. Granted, much of the improvement in LI is attributable to aggregation into far fewer HSAs; still, the significant increase in modularity from a local optimal score of 0.63 to a global‐optimal 0.85 signals the need to further consolidate HSAs in order to capture contemporary hospitalization patterns that are increasingly interwoven and integrated. That is to say, the number of HSAs defined by the traditional Dartmouth method might be too high (i.e., Dartmouth HSAs might be too small) for today's health care market. A similar, though understandably less drastic, increasing trend is detected in HRRs: the mean LI increased from 0.87 in the 18 Dartmouth HRRs to 0.90 in the 18 Dartmouth‐comparable HRRs, and then to 0.92 in the 16 global‐optimal HRRs. Both the aggregation into slightly fewer HRRs and the optimal configuration of internal patient flows contributed to the improved LI. As for the related index MSI, we found a significant decline in the mean MSI from 0.33 in Dartmouth‐comparable HSAs to 0.07 in global‐optimal HSAs. In other words, if the 17 global‐optimal HSAs were adopted in Florida, hospitalization would be highly localized, with only 7 percent of patients visiting hospitals outside their assigned HSAs. Similarly, overall 9 percent of patients visited hospitals outside the 18 Dartmouth‐comparable HRRs, and 7 percent outside the 16 global‐optimal HRRs. Once again, the convergence of global‐optimal HSAs and global‐optimal HRRs (though one is based on all patients and the other on “specialized” patients) is noticeable and has important implications. The same message can be read from the NPF results: the mean value was 1.12, 1.15, and 1.24 in the global‐optimal, Dartmouth‐comparable, and Dartmouth HSAs, respectively, and 0.94, 1.09, and 1.17 in the global‐optimal, Dartmouth‐comparable, and Dartmouth HRRs, respectively.

Other indices, such as balance in region size, shape compactness, and market structure, are strongly dependent on scale. For example, according to the HHI, the global‐optimal HSAs had a significantly more equitable share of patient admissions among local hospitals (mean HHI = 150) than the moderately concentrated market structure of Dartmouth‐comparable HSAs (mean HHI = 2275). This is mainly caused by the large difference in the number of hospitals enclosed in the two systems (i.e., the smaller and more numerous HSAs in a Dartmouth‐comparable system are more likely to be dominated by one or a few hospitals). Comparing the 114 Dartmouth‐comparable HSAs with the 17 global‐optimal HSAs on these indices is therefore of limited value. Interested readers may refer to Figures 3 and 4 (the three boxplots in each graph correspond to Dartmouth‐comparable, Dartmouth, and global‐optimal units).

To recap this exploratory analysis, Figure 5A and B show the global‐optimal HSAs (only flows of ≥200 inpatient discharges are displayed to highlight the pattern) and HRRs (only flows of ≥50 “specialized” inpatient discharges are displayed). Both flow maps align well with the delineated geographic units: most patient‐to‐hospital flows fall within a region, flows between regions are negligible, and hence the health care service pattern is highly localized.

Concluding Remarks

Several issues may be identified in the widely used Dartmouth HSAs and HRRs as analysis units for the health care market. They are outdated and unrepresentative of the overall patient population, and their delineation process is not automated and involves some arbitrary choices. Based on the 2011 SID data in Florida, the primary purpose of this research was to adopt a community detection method from complex network science to delineate HSAs and HRRs that are (i) up‐to‐date, (ii) representative of the overall population, and (iii) automatically and consistently generated. The derived HSAs/HRRs possess significant advantages over the Dartmouth units. Our units are more balanced in region size and more compact in shape, two important properties in the design of any regionalization method. Most important, our units have higher local hospitalization ratios, the very foundation of any approach to HSA delineation.

The exploratory search for the optimal number of HSAs also yields some interesting findings and sheds light on several important issues in the field. First, the number of global‐optimal HSAs (in terms of modularity of the existing overall patient flows) is very close to the number of HRRs, whether those are based on the optimized modularity of cardiac and neurosurgery patient flows or on the traditional Dartmouth method. This convergence may suggest the need to revisit the use of these two services in defining HRRs. The increasing prevalence of such services (especially cardiac surgery) may have reached a point where they are no longer as specialized or as limited in availability as they once were. A recent study (Jia, Wang, and Xierali 2017) shows similar distance decay behavior among general inpatients and inpatients undergoing cardiac procedures in Florida hospitals. Second, by extension, one may consider the larger HRRs aggregated from HSAs a more appropriate unit for analyzing today's health care market, which is increasingly consolidated as well as integrated. Third, both points call for further studies expanded temporally (e.g., to verify whether the health care market has indeed become more geographically interwoven over time) and spatially (e.g., to a larger geographic region with enough patients receiving specialized care for reliable statistical analysis). For now, the above discussion is suggestive, perhaps speculative, and should be taken with caution.

This research has several limitations, some of which relate to the data source. The SID data include hospital transfers, and we treated each discharge as a separate record; about 20 percent of the 2,346,032 selected records in Florida were hospital transfers. Including or separating transfer patients, readmissions, and treat‐and‐release outpatients may affect the results, and future research should account for them to assess the health care system and patient communities more fully. In addition, owing to data availability, we used only inpatient flows (SID) and not outpatient flow data. When available, both data sources may be integrated (or analyzed separately) to assess the full pattern of hospital health care service. The geographic level of the SID data is also inconsistent across states (e.g., data for five states are not aggregated at the ZIP code level). Although our method can be applied at any scale, this inconsistency needs to be resolved for a study area spanning multiple states. Furthermore, the selected SID data are available for only about half of U.S. states, which limits the feasibility of a national analysis. Like the Dartmouth Atlas Project, one may turn to data sources available nationwide, such as CMS Medicare claims data, even though they cover only Medicare patients. This paper focuses on methodological issues in defining HSAs; we hope to report future work that uses the new units to compare geographic variation in spending, population, utilization, and other measures of the health care system, as raised in a recent Institute of Medicine report (Newhouse et al. 2013).

Supporting information

Appendix SA1: Author Matrix

Figure S1. Extraction of the Study Population.

Figure S2. Modularity Change by HSA Size.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: The financial support from the Graduate School of Louisiana State University to Yujie Hu under the Economic Development Assistantship (EDA) is gratefully acknowledged. We thank three reviewers and the editors for their very detailed and constructive comments, which helped improve the revision. The authors declare no conflicts of interest.

Disclosures: None.

Disclaimers: None.

References

- Agency for Healthcare Research and Quality (AHRQ). 2011. Healthcare Cost and Utilization Project (HCUP) State Inpatient Databases (SID)—Florida. Rockville, MD. Available at www.hcup-us.ahrq.gov/sidoverview.jsp

- AHA (American Hospital Association). 2009. “Geographic Variation in Health Care Spending: A Closer Look.” TrendWatch 2009: 1–16.

- Blondel, V. D., J. L. Guillaume, R. Lambiotte, and E. Lefebvre. 2008. “Fast Unfolding of Communities in Large Networks.” Journal of Statistical Mechanics: Theory and Experiment 2008 (10): P10008.

- Cooper, M. M. 1996. The Dartmouth Atlas of Health Care, pp. 2–26. Chicago, IL: American Hospital Publishing.

- Cutler, D. M., and F. S. Morton. 2013. “Hospitals, Market Share, and Consolidation.” Journal of the American Medical Association 310 (18): 1964–70.

- Fortunato, S. 2010. “Community Detection in Graphs.” Physics Reports 486 (3): 75–174.

- Garnick, D. W., H. S. Luft, J. C. Robinson, and J. Tetreault. 1987. “Appropriate Measures of Hospital Market Areas.” Health Services Research 22 (1): 69–89.

- Guagliardo, M. F., K. A. Jablonski, J. G. Joseph, and D. C. Goodman. 2004. “Do Pediatric Hospitalizations Have a Unique Geography?” BMC Health Services Research 4 (1): 2.

- Guo, D. 2008. “Regionalization with Dynamically Constrained Agglomerative Clustering and Partitioning (REDCAP).” International Journal of Geographical Information Science 22 (7): 801–23.

- Jia, P., F. Wang, and I. M. Xierali. 2017. “Delineating Hierarchical Hospital Service Areas in Florida.” Geographical Review. doi: 10.1111/j.1931-0846.2016.12207.x

- Jia, P., I. M. Xierali, and F. Wang. 2015. “Evaluating and Re‐Demarcating the Hospital Service Areas in Florida.” Applied Geography 60: 248–53.

- Kilaru, A. S., D. J. Wiebe, D. N. Karp, J. Love, M. J. Kallan, and B. G. Carr. 2015. “Do Hospital Service Areas and Hospital Referral Regions Define Discrete Health Care Populations?” Medical Care 53 (6): 510–6.

- Klauss, G., L. Staub, M. Widmer, and A. Busato. 2005. “Hospital Service Areas–A New Tool for Health Care Planning in Switzerland.” BMC Health Services Research 5 (1): 33.

- MedPAC (Medicare Payment Advisory Commission). 2011. Regional Variation in Medicare Service Use. Report to the Congress: January 2011. Washington, DC: MedPAC.

- MedPAC (Medicare Payment Advisory Commission). 2014. Report to the Congress: Medicare Payment Policy: March 2014. Washington, DC: MedPAC.

- Mu, L., and F. Wang. 2008. “A Scale‐Space Clustering Method: Mitigating the Effect of Scale in the Analysis of Zone‐Based Data.” Annals of the Association of American Geographers 98 (1): 85–101.

- Newhouse, J. P., A. M. Garber, R. P. Graham, M. A. McCoy, M. Mancher, and A. Kibria, eds. 2013. Variation in Health Care Spending: Target Decision Making, Not Geography. Washington, DC: National Academies Press.

- Newman, M. E. 2004a. “Fast Algorithm for Detecting Community Structure in Networks.” Physical Review E 69 (6): 066133.

- Newman, M. E. 2004b. “Analysis of Weighted Networks.” Physical Review E 70 (5): 056131.

- Newman, M. E. 2006. “Modularity and Community Structure in Networks.” Proceedings of the National Academy of Sciences 103 (23): 8577–82.

- Newman, M. E. 2010. Networks: An Introduction. Oxford, UK: Oxford University Press.

- Newman, M. E., and M. Girvan. 2004. “Finding and Evaluating Community Structure in Networks.” Physical Review E 69 (2): 026113.

- Ratti, C., S. Sobolevsky, F. Calabrese, C. Andris, J. Reades, M. Martino, R. Claxton, and S. H. Strogatz. 2010. “Redrawing the Map of Great Britain from a Network of Human Interactions.” PLoS ONE 5 (12): e14248.

- Schaeffer, S. E. 2007. “Graph Clustering.” Computer Science Review 1 (1): 27–64.

- Shirabe, T., and C. D. Tomlin. 2002. Decomposing Integer Programming Models for Spatial Allocation, pp. 300–12. Berlin: Springer.

- Siegel, J. S. 1996. “Geographic Compactness vs. Race/Ethnic Compactness and Other Criteria in the Delineation of Legislative Districts.” Population Research and Policy Review 15 (2): 147–64.

- Wang, F. 2015. Quantitative Methods and Socioeconomic Applications in GIS, 2d ed. Boca Raton, FL: CRC Press.

- Wang, F., D. Guo, and S. McLafferty. 2012. “Constructing Geographic Areas for Cancer Data Analysis: A Case Study on Late‐Stage Breast Cancer Risk in Illinois.” Applied Geography 35: 1–11.

- Zhang, Y., S. H. Baik, A. M. Fendrick, and K. Baicker. 2012. “Comparing Local and Regional Variation in Health Care Spending.” New England Journal of Medicine 367 (18): 1724–31.

- Zhao, Y., E. Levina, and J. Zhu. 2011. “Community Extraction for Social Networks.” Proceedings of the National Academy of Sciences of the United States of America 108 (18): 7321–6.

- Zuckerman, S., T. Waidmann, R. Berenson, and J. Hadley. 2010. “Clarifying Sources of Geographic Differences in Medicare Spending.” New England Journal of Medicine 363 (1): 54–62.
