Abstract

In de novo drug design, computational strategies are used to generate novel molecules with good affinity to the desired biological target. In this work, we show that recurrent neural networks can be trained as generative models for molecular structures, similar to statistical language models in natural language processing. We demonstrate that the properties of the generated molecules correlate very well with the properties of the molecules used to train the model. In order to enrich libraries with molecules active toward a given biological target, we propose to fine-tune the model with small sets of molecules, which are known to be active against that target. Against Staphylococcus aureus, the model reproduced 14% of 6051 hold-out test molecules that medicinal chemists designed, whereas against Plasmodium falciparum (Malaria), it reproduced 28% of 1240 test molecules. When coupled with a scoring function, our model can perform the complete de novo drug design cycle to generate large sets of novel molecules for drug discovery.

Short abstract

Using artificial neural networks, computers can learn to generate molecules with desired target properties. This can aid in the creative process of drug design.

Introduction

Chemistry is the language of nature. Chemists speak it fluently and have made their discipline one of the true contributors to human well-being, which has “change[d] the way you live and die”.1 This is particularly true for medicinal chemistry. However, creating novel drugs is an extraordinarily hard and complex problem.2 One of the many challenges in drug design is the sheer size of the search space for novel molecules. It has been estimated that 1060 drug-like molecules could possibly be synthetically accessible.3 Chemists have to select and examine molecules from this large space to find molecules that are active toward a biological target. Active means for example that a molecule binds to a biomolecule, which causes an effect in the living organism, or inhibits replication of bacteria. Modern high-throughput screening techniques allow testing of molecules on the order of 106 in the lab.4 However, larger experiments will get prohibitively expensive. Given this practical limitation of in vitro experiments, it is desirable to have computational tools to narrow down the enormous search space. Virtual screening is a commonly used strategy to search for promising molecules among millions of existing or billions of virtual molecules.5 Searching can be carried out using similarity-based metrics, which provides a quantifiable numerical indicator of closeness between molecules. In contrast, in de novo drug design, one aims to directly create novel molecules that are active toward the desired biological target.6,7 Here, like in any molecular design task, the computer has to

-

(i)

create molecules,

-

(ii)

score and filter them, and

-

(iii)

search for better molecules, building on the knowledge gained in the previous steps.

Task i, the generation of novel molecules, is usually solved with one of two different protocols.7 One strategy is to build molecules from predefined groups of atoms or fragments. Unfortunately, these approaches often lead to molecules that are very hard to synthesize.8 Therefore, another established approach is to conduct virtual chemical reactions based on expert coded rules, with the hope that these reactions could then also be applied in practice to make the molecules in the laboratory.9 These systems give reasonable drug-like molecules and are considered as “the solution” to the structure generation problem.2 We generally share this view. However, we have recently shown that the predicted reactions from these rule-based expert systems can sometimes fail.10−12 Also, focusing on a small set of robust reactions can unnecessarily restrict the possibly accessible chemical space.

Task ii, scoring molecules and filtering out undesired structures, can be solved with substructure filters for undesirable reactive groups in conjunction with established approaches such as docking13 or machine learning (ML) approaches.7,14,15 The ML approaches are split into two branches: Target prediction classifies molecules into active and inactive, and quantitative structure–activity relationships (QSAR) seek to quantitatively predict a real-valued measure for the effectiveness of a substance (as a regression problem). As molecular descriptors, signature fingerprints, extended-connectivity (ECFP), and atom pair (APFP) fingerprints and their fuzzy variants are the de facto standard today.16−18 Convolutional networks on graphs are a more recent addition to the field of molecular descriptors.19−22 Jastrzebski et al. proposed to use convolutional neural networks to learn descriptors directly from SMILES strings.23 Random forests, support vector machines, and neural networks are currently the most widely used machine learning models for target prediction.24−35

This leads to task iii, the search for molecules with the right binding affinity combined with optimal molecular properties. In earlier work, this was performed (among others) with classical global optimization techniques, for example genetic algorithms or ant-colony optimization.7,36 Furthermore, de novo design is related to inverse QSAR.37−40 While in de novo design a regular QSAR mapping X → y from molecular descriptor space X to properties y is used as the scoring function for the global optimizer, in inverse QSAR one aims to find an explicit inverse mapping y → X, and then maps back from optimal points in descriptor space X to valid molecules. However, this is not well-defined, because molecules are inherently discrete (the space is not continuously populated), and the mapping from a target property value y to possible structures X is one-to-many, as usually several different structures with very similar properties can be found. Several protocols have been developed to address this, for example enumerating all structures within the constraints of hyper-rectangles in the descriptor space.37−42 Gómez-Bombarelli et al. proposed to learn continuous representations of molecules with variational autoencoders, based on the model by Bowman et al.,43 and to perform Bayesian optimization in this vector space to optimize molecular properties.44 While promising, this approach was not applied to create active drug molecules and often produced syntactically invalid molecules and highly strained or reactive structures, for example cyclobutadienes.44

In this work, we suggest a complementary, completely data-driven de novo drug design approach. It relies only on a generative model for molecular structures, based on a recurrent neural network, that is trained on large sets of molecules. Generative models learn a probability distribution over the training examples; sampling from this distribution generates new examples similar to the training data. Intuitively, a generative model for molecules trained on drug molecules would “know” how valid and reasonable drug-like molecules look and could be used to generate more drug-like molecules. However, for molecules, these models have been studied rarely, and rigorously only with traditional models such as Gaussian mixture models (GMM).41,45,46 Recently, recurrent neural networks (RNNs) have emerged as powerful generative models in very different domains, such as natural language processing,47 speech,48 images,49 video,50 formal languages,51 computer code generation,52 and music scores.53 In this work, we highlight the analogy of language and chemistry, and show that RNNs can also generate reasonable molecules. Furthermore, we demonstrate that RNNs can also transfer their learned knowledge from large molecule sets to directly produce novel molecules that are biologically active by retraining the models on small sets of already known actives. We test our models by reproducing hold-out test sets of known biologically active molecules.

Methods

Representing Molecules

To connect chemistry with language, it is important to understand how molecules are represented. Usually, they are modeled by molecular graphs, also called Lewis structures in chemistry. In molecular graphs, atoms are labeled nodes. The edges are the bonds between atoms, which are labeled with the bond order (e.g., single, double, or triple). One could therefore envision having a model that reads and outputs graphs. Several common chemistry formats store molecules in such a manner. However, in models for natural language processing, the input and output of the model are usually sequences of single letters, strings or words. We therefore employ the SMILES format, which encodes molecular graphs compactly as human-readable strings. SMILES is a formal grammar which describes molecules with an alphabet of characters, for example c and C for aromatic and aliphatic carbon atoms, O for oxygen, and −, =, and # for single, double, and triple bonds (see Figure 1).54 To indicate rings, a number is introduced at the two atoms where the ring is closed. For example, benzene in aromatic SMILES notation would be c1ccccc1. Side chains are denoted by round brackets. To generate valid SMILES, the generative model would have to learn the SMILES grammar, which includes keeping track of rings and brackets to eventually close them. In morphine, a complex natural product, the number of steps between the first 1 and the second 1, indicating a ring, is 32. Having established a link between molecules and (formal) language, we can now discuss language models.

Figure 1.

Examples of molecules and their SMILES representation. To correctly create smiles, the model has to learn long-term dependencies, for example, to close rings (indicated by numbers) and brackets.

Language Models and Recurrent Neural Networks

Given a sequence of words (w1, ..., wi), language models predict the distribution of the (i+1)th word wi+1.55 For example, if a language model received the sequence “Chemistry is”, it would assign different probabilities to possible next words: “fascinating”, “important”, or “challenging” would receive high probabilities, while “runs” or “potato” would receive very low probabilities. Language models can both capture the grammatical correctness (“runs” in this sentence is wrong) and the meaning (“potato” does not make sense). Language models are implemented, for example, in message autocorrection in many modern smartphones. Interestingly, language models do not have to use words. They can also be based on characters or letters.55 In that case, when receiving the sequence of characters chemistr, it would assign a high probability to y, but a low probability to q. To model molecules instead of language, we simply swap words or letters with atoms, or, more practically, characters in the SMILES alphabet, which form a (formal) language. For example, if the model receives the sequence c1ccccc, there is a high probability that the next symbol would be a “1”, which closes the ring, and yields benzene.

More formally, to a sequence S of symbols si at steps ti ∈ T, the language model assigns a probability of

| 1 |

where the parameters θ are learned from the training set.55 In this work, we use a recurrent neural network (RNN) to estimate the probabilities of eq 1. In contrast to regular feedforward neural networks, RNNs maintain state, which is needed to keep track of the symbols seen earlier in the sequence. In abstract terms, an RNN takes a sequence of input vectors x1:n = (x1, ..., xn) and an initial state vector h0, and returns a sequence of state vectors h1:n = (h1, ..., hn) and a sequence of output vectors y1:n = (y1, ..., yn). The RNN consists of a recursively defined function R, which takes a state vector hi and input vector xi+1 and returns a new state vector hi+1. Another function O maps a state vector hi to an output vector yi.55

| 2 |

| 3 |

| 4 |

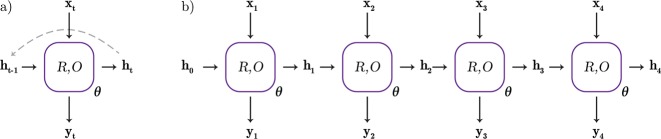

The state vector hi stores a representation of the information about all symbols seen in the sequence so far. As an alternative to the recursive definition, the recurrent network can also be unrolled for finite sequences (see Figure 2). An unrolled RNN can be seen as a very deep neural network, in which the parameters θ are shared among the layers, and the hidden state ht is passed as an additional input to the next layer. Training the unrolled RNN to fit the parameters θ can then simply be done by using backpropagation to compute the gradients with respect to the loss function, which is categorical cross-entropy in this work.55

Figure 2.

(a) Recursively defined RNN. (b) The same RNN, unrolled. The parameters θ (the weight matrices of the neural network) are shared over all time steps.

As the specific RNN function, in this work, we use the long short-term memory (LSTM), which was introduced by Hochreiter and Schmidhuber.56 It has been used successfully in many natural language processing tasks,47 for example in Google’s neural machine translation system.57 For excellent in-depth discussions of the LSTM, we refer to the articles by Goldberg,55 Graves,58 Olah,59 and Greff et al.60

To encode the SMILES symbols as input vectors xt, we employ the “one-hot” representation.58 This means that if there are K symbols, and k is the symbol to be input at step t, then we can construct an input vector xt with length K, whose entries are all zero except the kth entry, which is one. If we assume a very restricted set of symbols {c, 1, \n}, input c would correspond to xt = (1, 0, 0), 1 to xt = (0, 1, 0), and \n to xt = (0, 0, 1).

The probability distribution Pθ(st+1|st, ..., s1) of the next symbol given the already seen sequence is thus a multinomial distribution, which is estimated using the output vector yt of the recurrent neural network at time step t by

| 5 |

where ytk corresponds to the kth element of vector yt.58 Sampling from this distribution would then allow generating novel molecules: After sampling a SMILES symbol st+1 for the next time step t + 1, we can construct a new input vector xt+1, which is fed into the model, and via yt+1 and eq 5 yields Pθ(st+2|st+1, ..., s1). Sampling from the latter generates st+2, which serves again also as the model’s input for the next step (see Figure 3). This symbol-by-symbol sampling procedure is repeated until the desired number of characters have been generated.58

Figure 3.

Symbol generation and sampling process. We start with a random seed symbol s1, here c, which gets converted into a one-hot vector x1 and input into the model. The model then updates its internal state h0 to h1 and outputs y1, which is the probability distribution over the next symbols. Here, sampling yields s2 = 1. Converting s2 to x2 and feeding it to the model leads to updated hidden state h2 and output y2, from which we can sample again. This iterative symbol-by-symbol procedure can be continued as long as desired. In this example, we stop it after observing an EOL (\n) symbol, and obtain the SMILES for benzene. The hidden state hi allows the model to keep track of opened brackets and rings, to ensure that they will be closed again later.

To indicate that a molecule is “completed”, each molecule in our training data finishes with an “end of line” (EOL) symbol, in our case the single character \n (which means that the training data is just a simple SMILES file). Thus, when the system outputs an EOL, a generated molecule is finished. However, we simply continue sampling, thus generating a regular SMILES file that contains one molecule per line.

In this work, we used a network with three stacked LSTM layers, using the Keras library.61 The model was trained with back-propagation through time,58 using the ADAM optimizer at standard settings.62 To mitigate the problem of exploding gradients during training, a gradient norm clipping of 5 is applied.58

Transfer Learning

For many machine learning tasks, only small data sets are available, which might lead to overfitting with powerful models such as neural networks. In this situation, transfer learning can help.63 Here, a model is first trained on a large data set for a different task. Then, the model is retrained on the smaller data set, which is also called fine-tuning. The aim of transfer learning is to learn general features on the bigger data set, which also might be useful for the second task in the smaller data regime. To generate focused molecule libraries, we first train on a large, general set of molecules, then perform fine-tuning on a smaller set of specific molecules, and after that start the sampling procedure.

Target Prediction

To verify whether the generated molecules are active on the desired targets, standard target prediction was employed. Machine learning based target prediction aims to learn a classifier c: M → {1, 0} to decide whether a molecule m ∈ molecular descriptor space M is active or not against a target.14,15 The molecules are split into actives and inactives using a threshold on a measure for the substance effectiveness. pIC50 = −log10(IC50) is one of the most widely used metrics for this purpose. IC50 is the half maximal inhibitory concentration, that is, the concentration of drug that is required to inhibit 50% of a biological target’s function in vitro.

To predict whether the generated molecules are active toward the biological target of interest, target prediction models (TPMs) were trained for all the tested targets (5-HT2A, Plasmodium falciparum and Staphylococcus aureus). We evaluated random forest, logistic regression, (deep) neural networks, and gradient boosting trees (GBT) as models with ECFP4 (extended connectivity fingerprint with a diameter of 4) as the molecular descriptor.16,17 We found that GBTs slightly outperformed all other models and used these as our virtual assay in all studies (AUC[5-HT2A] = 0.877, AUC[Staph. aur.] = 0.916). ECFP4 fingerprints were generated with CDK version 1.5.13.64,65 scikit-learn,66 XGBoost,67 and Keras61 were used as the machine learning libraries. For 5-HT2A and Plasmodium, molecules are considered as active for the TPM if their IC50 reported in ChEMBL is <100 nM, which translates to a pIC50 > 7, whereas for Staphylococcus, we used pMIC > 3.

Data

The chemical language model was trained on a SMILES file containing 1.4 million molecules from the ChEMBL database, which contains molecules and measured biological activity data. The SMILES strings of the molecules were canonicalized (which means finding a unique representation that is the same for isomorphic molecular graphs)68,69 before training with the CDK chemoinformatics library, yielding a SMILES file that contained one molecule per line.64,65 It has to be noted that ChEMBL contains many peptides, natural products with complex scaffolds, Michael acceptors, benzoquinones, hydroxylamines, hydrazines, etc., which is reflected in the generated structures (see below). This corresponds to 72 million individual characters, with a vocabulary size of 51 unique characters. 51 characters is only a subset of all SMILES symbols, since the molecules in ChEMBL do not contain many of the heavy elements. As we have to set the number of symbols as a hyperparameter during model construction, and the model can only learn the distribution over the symbols present in the training data, this implies that only molecules with these 51 SMILES symbols seen during training can be generated during sampling.

The 5-HT2A, the Plasmodium falciparum, and the Staphylococcus aureus data sets were also obtained from ChEMBL. As these molecules were intended to be used in the rediscovery studies, they were removed from the training data before fitting the chemical language model.

Model Evaluation

To evaluate the

models for a test set T, and a set of molecules GN generated from the model

by sampling, we report the ratio of reproduced molecules  , and enrichment

over random (EOR), which is defined as

, and enrichment

over random (EOR), which is defined as

| 6 |

where n = |GN ∩ T| is the number of reproduced molecules from T by sampling a set GN of |GN| = N molecules from the fine-tuned generative model, and m = |RM ∩ T| is the number of reproduced molecules from T by sampling a set RM of |RM| = M molecules from the generic, unbiased generative model trained only on the large data set. Intuitively, EOR indicates how much better the fine-tuned models work when compared to the general model.

Results and Discussion

In this work, we address two points: First, we want to generate large sets of diverse molecules for virtual screening campaigns. Second, we want to generate smaller, focused libraries enriched with possibly active molecules for a specific target. For the first task, we can train a model on a large, general set of molecules to learn the SMILES grammar. Sampling from this model would generate sets of diverse, but unfocused molecules. To address the second task, and to obtain novel active drug molecules for a target of interest, we perform transfer learning: We select a small set of known actives for that target and we refit our pretrained chemical language model with this small data set. After each epoch, we sample from the model to generate novel actives. Furthermore, we investigate if the model actually benefits from transfer learning, by comparing it to a model trained from scratch on the small sets without pretraining.

Training the Recurrent Network

We employed a recurrent neural network with three stacked LSTM layers, each with 1024 dimensions, and each one followed by a dropout70 layer, with a dropout ratio of 0.2, to regularize the neural network. The model was trained until convergence, using a batch size of 128. The RNN was unrolled for 64 steps. It had 21.3 × 106 parameters.

During training, we sampled a few molecules from the model every 1000 minibatches to inspect progress. Within a few 1000 steps, the model starts to output valid molecules (see Table 1).

Table 1. Molecules Sampled during Training.

Generating Novel Molecules

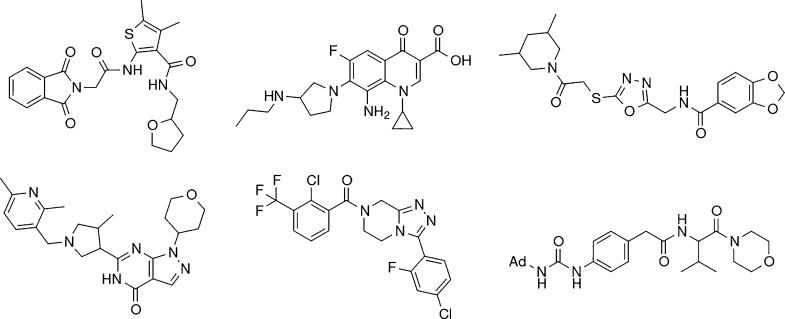

To generate novel molecules, 50,000,000 SMILES symbols were sampled from the model symbol-by-symbol. This corresponded to 976,327 lines, from which 97.7% were valid molecules after parsing with the CDK toolkit. Removing all molecules already seen during training yielded 864,880 structures. After filtering out duplicates, we obtained 847,955 novel molecules. A few generated molecules were randomly selected and depicted in Figure 4. The Supporting Information contains more structures. The created structures are not just formally valid but also mostly chemically reasonable.

Figure 4.

A few randomly selected, generated molecules. Ad = Adamantyl.

In order to check if the de novo compounds could be considered as valid starting points for a drug discovery program, we applied the internal AstraZeneca filters.71 At AstraZeneca, this flagging system is used to determine if a compound is suitable to be part of the high-throughput screening collection (if flagged as “core” or “backup”) or should be restricted for particular use (flagged as “undesirable” since it contains one or several unwanted substructures, e.g., undesired reactive functional groups). The filters were applied to the generated set of 848 k molecules, and they flagged most of them, 640 k (75%), as either core or backup. Since the same ratio (75%) of core and backup compounds has been observed for the ChEMBL collection, we therefore conclude that the algorithm generates preponderantly valid screening molecules and faithfully reproduces the distribution of the training data.

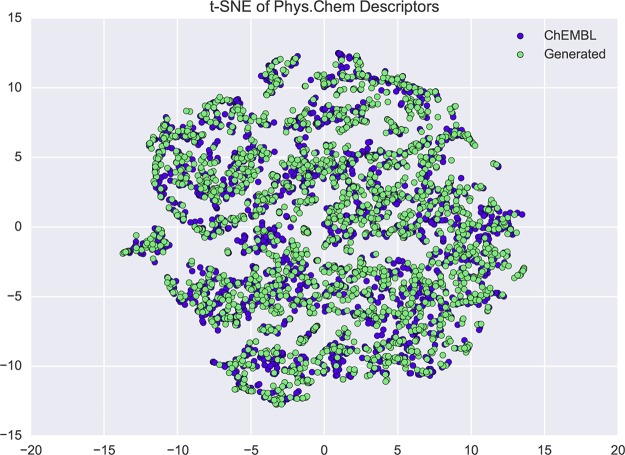

To determine whether the properties of the generated molecules match the properties of the training data from ChEMBL, we followed the procedure of Kolb:72 We computed several molecular properties, namely, molecular weight, BertzCT, the number of H-donors, H-acceptors, and rotatable bonds, logP, and total polar surface area for randomly selected subsets from both sets with the RDKit73 library version 2016.03.1. Then, we performed dimensionality reduction to 2D with t-SNE (t-distributed stochastic neighbor embedding, a technique analogous to PCA), which is shown in Figure 5.74 Both sets overlap almost completely, which indicates that the generated molecules very well recreate the properties of the training molecules.

Figure 5.

t-SNE projection of 7 physicochemical descriptors of random molecules from ChEMBL (blue) and molecules generated with the neural network trained on ChEMBL (green), to two unitless dimensions. The distributions of both sets overlap significantly.

Furthermore, we analyzed the Bemis–Murcko scaffolds of the training molecules and the sampled molecules.75 Bemis–Murcko scaffolds contain the ring systems of a molecule and the moieties that link these ring systems, while removing any side chains. They represent the scaffold, or “core” of a molecule, which series of drug molecules often have in common. The number of common scaffolds in both sets divided by the union of all scaffolds in both sets (Jaccard index) is 0.12, which indicates that the language model does not just modify side chain substituents but also introduces modifications at the molecular core.

Generating Active Drug Molecules and Focused Libraries

Targeting the 5-HT2A Receptor

To generate novel ligands for the 5-HT2A receptor, we first selected all molecules with pIC50 > 7 which were tested on 5-HT2A from ChEMBL (732 molecules), and then fine-tuned our pretrained chemical language model on this set. After each epoch, we sampled 100,000 chars, canonicalized the molecules, and removed any sampled molecules that were already contained in the training set. Following this, we evaluated the generated molecules of each round of retraining with our 5-HT2A target prediction model (TPM). In Figure 6, the ratio of molecules predicted to be active by the TPM after each round of fine-tuning is shown. Before fine-tuning (corresponding to epoch 0), the model generates almost exclusively inactive molecules. Already after 4 epochs of fine-tuning the model produced a set in which 50% of the molecules are predicted to be active.

Figure 6.

Epochs of fine-tuning vs ratio of actives.

Diversity Analysis

In order to assess the novelty of the de novo molecules generated with the fine-tuned model, a nearest neighbor similarity/diversity analysis has been conducted using a commonly used 2D fingerprint (ECFP4) based similarity method (Tanimoto index).72Figure 7 shows the distribution of the nearest neighbor Tanimoto index generated by comparing all the novel molecules and the training molecules before and after n epochs of fine-tuning. For each bin, the white bars indicate the molecules generated from the unbiased, general model, while the darker bars indicate the molecules after several epochs of fine-tuning. Within the bins corresponding to lower similarity, the number of molecules decreases, while the bins of higher similarity get populated with increasing numbers of molecules. The plot thus shows that the model starts to output more and more similar molecules to the target-specific training set. Notably, after a few rounds of training not only are highly similar molecules produced but also molecules covering the whole range of similarity, indicating that our method could deliver not only close analogues but also new chemotypes or scaffold ideas to a drug discovery project.5 To have the best of both worlds, that is, diverse and focused molecules, we therefore suggest to sample after each epoch of retraining and not just after the final epoch.

Figure 7.

Nearest-neighbor Tanimoto similarity distribution of the generated molecules for 5-HT2A after n epochs of fine-tuning against the known actives. The generated molecules are distributed over the whole similarity range. Generated molecules with a medium similarity can be interesting for scaffold-hopping.5

Targeting Plasmodium falciparum (Malaria)

Plasmodium falciparum is a parasite that causes the most dangerous form of malaria.76 To probe our model on this important target, we used a more challenging validation strategy. We wanted to investigate whether the model could also propose the same molecules that medicinal chemists chose to evaluate in published studies. To test this, first, the known actives against Plasmodium falciparum with a pIC50 > 8 were selected from ChEMBL (Table 2). Then, this set was split randomly into a training (1239 molecules) and a test set (1240 molecules). The chemical language model was then fine-tuned on the training set. 7500 molecules were sampled after each of the 20 epochs of refitting.

Table 2. Reproducing Known Actives in the Plasmodium Test Set.

| no. | pIC50 | training | test | gen mols | reprod (%) | EORa |

|---|---|---|---|---|---|---|

| 1 | >8 | 1239 | 1240 | 128,256 | 28 | 66.9 |

| 2 | >8 | 100 | 1240 | 93,721 | 7 | 19.0 |

| 3 | >9 | 100 | 1022 | 91,034 | 11 | 35.7 |

EOR: Enrichment over random.

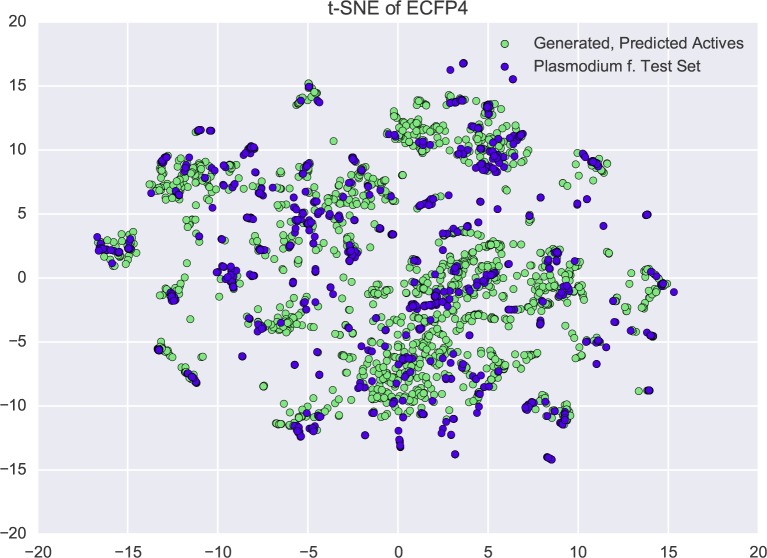

This yielded 128,256 unique molecules. Interestingly, we found that our model was able to “redesign” 28% of the unseen molecules of the test set. In comparison to molecules sampled from the unspecific, untuned model, an enrichment over random (EOR) of 66.9 is obtained. With a smaller training set of 100 molecules, the model can still reproduce 7% of the test set, with an EOR of 19.0. To test the reliance on pIC50 we chose to use another cutoff of pIC50 > 9, and took 100 molecules in the training set and 1022 in the test set. 11% of the test set could be recreated, with an EOR of 35.7. To visually explore how the model populates chemical space, Figure 8 shows a t-SNE plot of the ECFP4 fingerprints of the test molecules and 2000 generated molecules that were predicted to be active by the target prediction model for Plasmodium falciparum. It indicates that the model has generated many similar molecules around the test examples.

Figure 8.

t-SNE plot of the pIC50 > 9 test set (blue) and the de novo molecules predicted to be active (green). The language model populates chemical space around the test molecules.

Targeting Staphylococcus aureus (Golden Staph)

To evaluate a different target, we furthermore conducted a series of experiments to reproduce known active molecules against Staphylococcus aureus. Here, we used actives with a pMIC > 3. MIC is the mean inhibitory concentration, the lowest concentration of a compound that prevents visible growth of a microorganism. As above, the actives were split into a training and a test set. However, here, the availability of the data allows larger test sets to be used. After fine-tuning on the training set of 1000 molecules (Table 3, entry 1), our model could retrieve 14% of the 6051 test molecules. When scaling down to a smaller training set of 50 molecules (the model gets trained on less than 1% of the data!), it can still reproduce 2.5% of the test set, and performs 21.6 times better than the unbiased model (Table 3, entry 2). Using a lower learning rate (0.0001, entry 3) for fine-tuning, which is often done in transfer learning, does not work as well as the standard learning rate (0.001, entry 2). We additionally examined whether the model benefits from transfer learning. When trained from scratch, the model performs much worse than the pretrained and subsequently fine-tuned model (see Figure 9 and Table 3, entry 4). Pretraining on the large data set is thus crucial to achieve good performance against Staphylococcus aureus.

Table 3. Reproducing Known Actives in the Staphylococcus Test Set.

| entry | pMIC | training | test | gen mols | reprod (%) | EORa |

|---|---|---|---|---|---|---|

| 1 | >3 | 1000 | 6051 | 51,052 | 14 | 155.9 |

| 2 | >3 | 50 | 7001 | 70,891 | 2.5 | 21.6 |

| 3b | >3 | 50 | 7001 | 85,755 | 1.8 | 6.3 |

| 4c | >3 | 50 | 7001 | 285 | 0 | |

| 5d | >3 | 0 | 7001 | 60,988 | 6 | 59.6 |

EOR: Enrichment over random.

Fine-tuning learning rate = 10–4.

No pretraining.

8 generate-test cycles.

Figure 9.

Different training strategies on the Staphylococcus aureus data set with 1000 training and 6051 test examples. Fine-tuning the pretrained model performs better than training from scratch (lower test loss [cross entropy] is better).

Simulating Design-Synthesis-Test Cycles

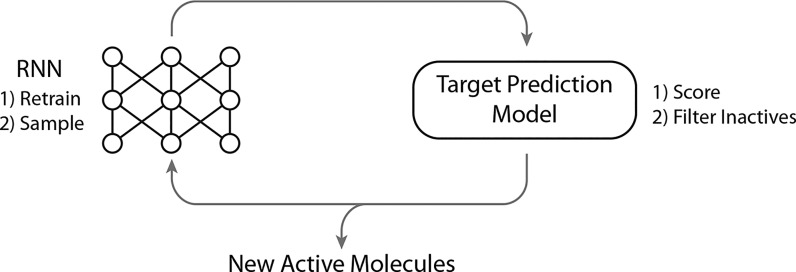

The experiments we conducted so far are applicable if one already knows several actives. However, in drug discovery, one often does not have such a set to start with. Therefore, high throughput screenings are conducted to identify a few hits, which serve as a starting point for the typical cyclical drug discovery process: Molecules get designed, synthesized, and then tested in assays. Then, the best molecules are selected, and based on the gained knowledge new molecules are designed, which closes the cycle. Therefore, as a final challenge for our model, we simulated this cycle by iterating molecule generation (“synthesis”), selection of the best molecules with the machine learning based target prediction (“virtual assay”), and retraining the language model with the best molecules (“design”) with Staphylococcus aureus as the target. We thus do not use a set of known actives to start the structure generation procedure (see Figure 10).

Figure 10.

Scheme of our de novo design cycle. Molecules are generated by the chemical language model and then scored with the target prediction model (TPM). The inactives are filtered out, and the RNN is retrained. Here, the TPM is a machine learning model, but it could also be a robot conducting synthesis and biological assays, or a docking program.

We started with 100,000 sampled molecules from the unbiased chemical language model. Then, using our target prediction model, we extracted the molecules classified as actives. After that, the RNN was fine-tuned for 5 epochs on the actives, sampling ≈10,000 molecules after each epoch. The resulting molecules were filtered with the target prediction model, and the new actives appended to the actives from the previous round, closing the loop.

Already after 8 iterations, the model reproduced 416 of the 7001 test molecules from the previous task, which is 6% (Table 3, entry 5), and exhibits an EOR of 59.6. This EOR is higher than if the model is retrained directly on a set of 50 actives (entry 2). Additionally, we obtained 60,988 unique molecules that the target prediction model classified as active. This suggests that, in combination with a target prediction or scoring model, our model can at least simulate the complete de novo design cycle.

Why Does the Model Work?

Our results indicate that the general model trained on a large molecule set has learned the SMILES rules and can output valid, drug-like molecules, which resemble the training data. However, sampling from this model does not help much if we want to generate actives for a specific target: We would have to generate very large sets to find actives for that target among the diverse range of molecules the model creates, which is indicated by the high EOR scores in our experiments.

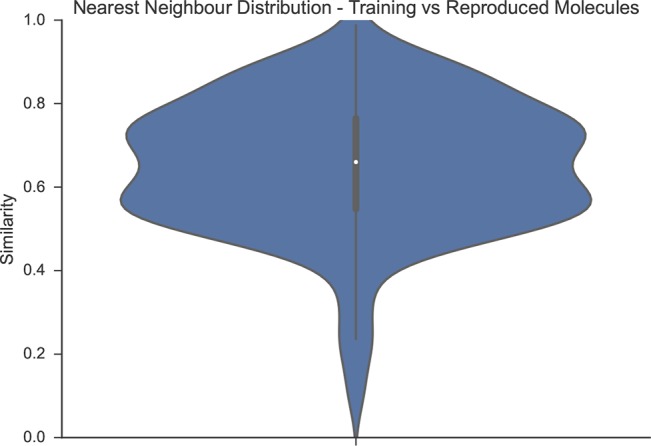

When fine-tuned to a set of actives, the probability distribution over the molecules captured by our model is shifted toward molecules active toward our target. To study this, we compare the Levenshtein (string edit) distance of the generated SMILES to their nearest neighbors in the training set in Figure 11. The Levenshtein distance of, e.g., benzene c1ccccc1 and pyridine c1ccncc1 would be 1. Figure 11 shows that while the model often seems to have made small replacements in the underlying SMILES, in many cases it also made more complex modifications or even generated completely different SMILES. This is supported also by the distribution of the nearest neighbor fingerprint similarities of training and rediscovered molecules (ECFP4, Tanimoto, Figure 12). Many rediscovered molecules are in the medium similarity regime.

Figure 11.

Histogram of Levenshtein (string edit) distances of the SMILES of the reproduced molecules to their nearest neighbor in the training set (Staphylococcus aureus, model retrained on 50 actives). While in many cases the model makes changes of a few symbols in the SMILES, resembling the typical modifications applied when exploring series of compounds, the distribution of the distances indicates that the RNN also performs more complex changes by introducing larger moieties or generating molecules that are structurally different, but isofunctional to the training set.

Figure 12.

Violin plot of the nearest-neighbor ECFP4-Tanimoto similarity distribution of the 50 training molecules against the rediscovered molecules in Table 3, entry 2. The distribution suggests that the model has learned to make typical small functional group replacements, but can also reproduce molecules which are not too similar to the training data.

Because we perform transfer learning, during fine-tuning, the model does not “forget” what it has learned. A plausible explanation why the model works is therefore that it can transfer the modifications that are regularly applied when series of molecules are studied, to the molecules it has seen during fine-tuning.

Conclusion

In this work, we have shown that recurrent neural networks based on the long short-term memory (LSTM) can be applied to learn a statistical chemical language model. The model can generate large sets of novel molecules with similar physicochemical properties to the training molecules. This can be used to generate libraries for virtual screening. Furthermore, we demonstrated that the model performs transfer learning when fine-tuned to smaller sets of molecules active toward a specific biological target, which enables the creation of novel molecules with the desired activity. By iterating cycles of structure generation with the language model, scoring with a target prediction model (TPM) and retraining of the model with increasingly larger sets of highly scored molecules, we showed that we do not even need a set of known active molecules to start our procedure with, as the TPM could also be a docking program, or a robot conducting synthesis77 and biological testing.

We see three main advantages of our method. First, it is conceptually orthogonal to established molecule generation approaches, as it learns a generative model for molecular structures. Second, our method is very simple to set up, to train, and to use; it can be adapted to different data sets without any modifications to the model architecture; and it does not depend on hand-encoded expert knowledge. Furthermore, it merges structure generation and optimization in one model. A weakness of our model is interpretability. In contrast, existing de novo design methods settled on virtual reactions to generate molecules, which has advantages as it minimizes the chance of obtaining “overfit”, weird molecules, and increases the chances to find synthesizable compounds.2,7

To extend our work, it is just a small step to cast molecule generation as a reinforcement learning problem, where the pretrained LSTM generator could be seen as a policy, which can be encouraged to create better molecules with a reward signal obtained from a target prediction model.78 In addition, different approaches for target prediction, for example, docking, could be evaluated.7,13

Deep learning is not a panacea, and we join Gawehn et al. in expressing “some healthy skepticism” regarding its application in drug discovery.31 Generating molecules that are almost right is not enough, because in chemistry, a miss is as good as a mile, and drug discovery is a “needle in the haystack” problem—in which also the needle looks like hay. Nevertheless, given that we have shown in this work that our model can rediscover those needles, and other recent developments,31,79 we believe that deep neural networks can be complementary to established approaches in drug discovery. The complexity of the problem certainly warrants the investigation of novel approaches. Eventually, success in the wet lab will determine if the new wave26 of neural networks will prevail.

Acknowledgments

The project was conducted during a research stay of M.H.S.S. at AstraZeneca R&D Gothenburg. We thank H. Chen and O. Engkvist for valuable discussions and feedback on the manuscript, and G. Klambauer for helpful suggestions.

Supporting Information Available

The Supporting Information is available free of charge on the ACS Publications website at DOI: 10.1021/acscentsci.7b00512.

M.H.S.S. and M.P.W. acknowledge funding by Deutsche Forschungsgemeinschaft (DFG, SFB 858). M.P.W. would like to acknowledge support from the Shanghai Eastern Scholar Program. T.K. and C.T. are employees of AstraZeneca.

The authors declare no competing financial interest.

Supplementary Material

References

- Whitesides G. M. Reinventing chemistry. Angew. Chem., Int. Ed. 2015, 54, 3196–3209. 10.1002/anie.201410884. [DOI] [PubMed] [Google Scholar]

- Schneider P.; Schneider G. De Novo Design at the Edge of Chaos: Miniperspective. J. Med. Chem. 2016, 59, 4077–4086. 10.1021/acs.jmedchem.5b01849. [DOI] [PubMed] [Google Scholar]

- Reymond J.-L.; Ruddigkeit L.; Blum L.; van Deursen R. The enumeration of chemical space. Wiley Interdisc. Rev. Comp. Mol. Sci. 2012, 2, 717–733. 10.1002/wcms.1104. [DOI] [Google Scholar]

- Schneider G.; Baringhaus K.-H.. Molecular design: concepts and applications; John Wiley & Sons: 2008. [Google Scholar]

- Stumpfe D.; Bajorath J. Similarity searching. Wiley Interdisc. Rev. Comp. Mol. Sci. 2011, 1, 260–282. 10.1002/wcms.23. [DOI] [Google Scholar]

- Schneider G.; Fechner U. Computer-based de novo design of drug-like molecules. Nat. Rev. Drug Discovery 2005, 4, 649–663. 10.1038/nrd1799. [DOI] [PubMed] [Google Scholar]

- Hartenfeller M.; Schneider G. Enabling future drug discovery by de novo design. Wiley Interdisc. Rev. Comp. Mol. Sci. 2011, 1, 742–759. 10.1002/wcms.49. [DOI] [Google Scholar]

- Hartenfeller M.; Zettl H.; Walter M.; Rupp M.; Reisen F.; Proschak E.; Weggen S.; Stark H.; Schneider G. DOGS: reaction-driven de novo design of bioactive compounds. PLoS Comput. Biol. 2012, 8, e1002380. 10.1371/journal.pcbi.1002380. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hartenfeller M.; Eberle M.; Meier P.; Nieto-Oberhuber C.; Altmann K.-H.; Schneider G.; Jacoby E.; Renner S. A collection of robust organic synthesis reactions for in silico molecule design. J. Chem. Inf. Model. 2011, 51, 3093–3098. 10.1021/ci200379p. [DOI] [PubMed] [Google Scholar]

- Segler M. H.; Waller M. P. Modelling chemical reasoning to predict and invent reactions. Chem. - Eur. J. 2017, 23, 6118–6128. 10.1002/chem.201604556. [DOI] [PubMed] [Google Scholar]

- Segler M. H.; Waller M. P. Neural-Symbolic Machine Learning for Retrosynthesis and Reaction Prediction. Chem. - Eur. J. 2017, 23, 5966–5971. 10.1002/chem.201605499. [DOI] [PubMed] [Google Scholar]

- Segler M. H.; Preuss M.; Waller M. P. Learning to Plan Chemical Syntheses. ArXiv 2017, 1708.04202. [Google Scholar]

- Kitchen D. B.; Decornez H.; Furr J. R.; Bajorath J. Docking and scoring in virtual screening for drug discovery: methods and applications. Nat. Rev. Drug Discovery 2004, 3, 935–949. 10.1038/nrd1549. [DOI] [PubMed] [Google Scholar]

- Varnek A.; Baskin I. Machine learning methods for property prediction in chemoinformatics: quo vadis?. J. Chem. Inf. Model. 2012, 52, 1413–1437. 10.1021/ci200409x. [DOI] [PubMed] [Google Scholar]

- Mitchell J. B. Machine learning methods in chemoinformatics. Wiley Interdisc. Rev. Comp. Mol. Sci. 2014, 4, 468–481. 10.1002/wcms.1183. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Riniker S.; Landrum G. A. Open-source platform to benchmark fingerprints for ligand-based virtual screening. J. Cheminf. 2013, 5, 26. 10.1186/1758-2946-5-26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rogers D.; Hahn M. Extended-connectivity fingerprints. J. Chem. Inf. Model. 2010, 50, 742–754. 10.1021/ci100050t. [DOI] [PubMed] [Google Scholar]

- Alvarsson J.; Eklund M.; Engkvist O.; Spjuth O.; Carlsson L.; Wikberg J. E.; Noeske T. Ligand-based target prediction with signature fingerprints. J. Chem. Inf. Model. 2014, 54, 2647–2653. 10.1021/ci500361u. [DOI] [PubMed] [Google Scholar]

- Baskin I. I.; Palyulin V. A.; Zefirov N. S. A neural device for searching direct correlations between structures and properties of chemical compounds. J. Chem. Inf. Comp. Sci. 1997, 37, 715–721. 10.1021/ci940128y. [DOI] [Google Scholar]

- Merkwirth C.; Lengauer T. Automatic generation of complementary descriptors with molecular graph networks. J. Chem. Inf. Model. 2005, 45, 1159–1168. 10.1021/ci049613b. [DOI] [PubMed] [Google Scholar]

- Duvenaud D. K.; Maclaurin D.; Iparraguirre J.; Bombarell R.; Hirzel T.; Aspuru-Guzik A.; Adams R. P. Convolutional networks on graphs for learning molecular fingerprints. Adv. Neural Inf. Proc. Syst. 2015, 2224–2232. [Google Scholar]

- Altae-Tran H.; Ramsundar B.; Pappu A. S.; Pande V. Low Data Drug Discovery with One-Shot Learning. ACS Cent. Sci. 2017, 3, 283–293. 10.1021/acscentsci.6b00367. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jastrzebski S.; Lesniak D.; Czarnecki W. M.. Learning to SMILE(S). In International Conference on Learning Representations; 2016.

- Zupan J.; Gasteiger J. Neural networks: A new method for solving chemical problems or just a passing phase?. Anal. Chim. Acta 1991, 248, 1–30. 10.1016/S0003-2670(00)80865-X. [DOI] [Google Scholar]

- Gasteiger J.; Zupan J. Neural networks in chemistry. Angew. Chem., Int. Ed. Engl. 1993, 32, 503–527. 10.1002/anie.199305031. [DOI] [Google Scholar]

- Zupan J.; Gasteiger J.. Neural networks in chemistry and drug design; John Wiley & Sons, Inc: 1999. [Google Scholar]

- Lusci A.; Pollastri G.; Baldi P. Deep architectures and deep learning in chemoinformatics: the prediction of aqueous solubility for drug-like molecules. J. Chem. Inf. Model. 2013, 53, 1563–1575. 10.1021/ci400187y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Unterthiner T.; Mayr A.; Klambauer G.; Steijaert M.; Wegner J. K.; Ceulemans H.; Hochreiter S.. Deep learning as an opportunity in virtual screening. In Proceedings of the Deep Learning Workshop at NIPS; 2014; Vol. 27, pp 1–9.

- Unterthiner T.; Mayr A.; Klambauer G.; Hochreiter S. Toxicity prediction using deep learning. ArXiv 2015, 1503.01445. [Google Scholar]

- Schneider P.; Müller A. T.; Gabernet G.; Button A. L.; Posselt G.; Wessler S.; Hiss J. A.; Schneider G. Hybrid Network Model for “Deep Learning” of Chemical Data: Application to Antimicrobial Peptides. Mol. Inf. 2017, 36, 1600011. 10.1002/minf.201600011. [DOI] [PubMed] [Google Scholar]

- Gawehn E.; Hiss J. A.; Schneider G. Deep learning in drug discovery. Mol. Inf. 2016, 35, 3–14. 10.1002/minf.201501008. [DOI] [PubMed] [Google Scholar]

- Ramsundar B.; Kearnes S.; Riley P.; Webster D.; Konerding D.; Pande V. Massively multitask networks for drug discovery. ArXiv 2015, 1502.02072. [Google Scholar]

- Behler J. Constructing high-dimensional neural network potentials: A tutorial review. Int. J. Quantum Chem. 2015, 115, 1032–1050. 10.1002/qua.24890. [DOI] [Google Scholar]

- Behler J.; Parrinello M. Generalized neural-network representation of high-dimensional potential-energy surfaces. Phys. Rev. Lett. 2007, 98, 146401. 10.1103/PhysRevLett.98.146401. [DOI] [PubMed] [Google Scholar]

- Ma J.; Sheridan R. P.; Liaw A.; Dahl G. E.; Svetnik V. Deep neural nets as a method for quantitative structure–activity relationships. J. Chem. Inf. Model. 2015, 55, 263–274. 10.1021/ci500747n. [DOI] [PubMed] [Google Scholar]

- Reutlinger M.; Rodrigues T.; Schneider P.; Schneider G. Multi-Objective Molecular De Novo Design by Adaptive Fragment Prioritization. Angew. Chem., Int. Ed. 2014, 53, 4244–4248. 10.1002/anie.201310864. [DOI] [PubMed] [Google Scholar]

- Miyao T.; Arakawa M.; Funatsu K. Exhaustive Structure Generation for Inverse-QSPR/QSAR. Mol. Inf. 2010, 29, 111–125. 10.1002/minf.200900038. [DOI] [PubMed] [Google Scholar]

- Miyao T.; Kaneko H.; Funatsu K. Inverse QSPR/QSAR Analysis for Chemical Structure Generation (from y to x). J. Chem. Inf. Model. 2016, 56, 286–299. 10.1021/acs.jcim.5b00628. [DOI] [PubMed] [Google Scholar]

- Takeda S.; Kaneko H.; Funatsu K. Chemical-Space-Based de Novo Design Method To Generate Drug-Like Molecules. J. Chem. Inf. Model. 2016, 56, 1885–1893. 10.1021/acs.jcim.6b00038. [DOI] [PubMed] [Google Scholar]

- Mishima K.; Kaneko H.; Funatsu K. Development of a new de novo design algorithm for exploring chemical space. Mol. Inf. 2014, 33, 779–789. 10.1002/minf.201400056. [DOI] [PubMed] [Google Scholar]

- White D.; Wilson R. C. Generative models for chemical structures. J. Chem. Inf. Model. 2010, 50, 1257–1274. 10.1021/ci9004089. [DOI] [PubMed] [Google Scholar]

- Patel H.; Bodkin M. J.; Chen B.; Gillet V. J. Knowledge-based approach to de novo design using reaction vectors. J. Chem. Inf. Model. 2009, 49, 1163–1184. 10.1021/ci800413m. [DOI] [PubMed] [Google Scholar]

- Bowman S. R.; Vilnis L.; Vinyals O.; Dai A. M.; Jozefowicz R.; Bengio S.. Generating sentences from a continuous space. In SIGNLL Conference on Computational Natural Language Learning (CONLL); 2016.

- Gómez-Bombarelli R.; Duvenaud D.; Hernández-Lobato J. M.; Aguilera-Iparraguirre J.; Hirzel T. D.; Adams R. P.; Aspuru-Guzik A. Automatic chemical design using a data-driven continuous representation of molecules. ArXiv 2016, 1610.02415. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Voss C.Modeling Molecules with Recurrent Neural Networks; 2015; http://csvoss.github.io/projects/2015/10/08/rnns-and-chemistry.html.

- Firth N.de novo Design Without the Chemistry; 2016; https://medium.com/@nf508/de-novo-design-without-the-chemistry-d183e8a9f150.

- Jozefowicz R.; Vinyals O.; Schuster M.; Shazeer N.; Wu Y. Exploring the limits of language modeling. ArXiv 2016, 1602.02410. [Google Scholar]

- Graves A.; Eck D.; Beringer N.; Schmidhuber J.. Biologically plausible speech recognition with LSTM neural nets. In International Workshop on Biologically Inspired Approaches to Advanced Information Technology; 2004; pp 127–136.

- van den Oord A.; Kalchbrenner N.; Kavukcuoglu K.. Pixel Recurrent Neural Networks. In International Conference on Machine Learning; 2016.

- Srivastava N.; Mansimov E.; Salakhutdinov R.. Unsupervised learning of video representations using LSTMs. In Proceedings of the 32nd International Conference on Machine Learning; 2015; pp 843–852.

- Gers F. A.; Schmidhuber E. LSTM recurrent networks learn simple context-free and context-sensitive languages. IEEE Transactions on Neural Networks 2001, 12, 1333–1340. 10.1109/72.963769. [DOI] [PubMed] [Google Scholar]

- Bhoopchand A.; Rocktäschel T.; Barr E.; Riedel S. Learning Python Code Suggestion with a Sparse Pointer Network. ArXiv 2016, 1611.08307. [Google Scholar]

- Eck D.; Schmidhuber J.. Finding temporal structure in music: Blues improvisation with LSTM recurrent networks. In Proc. 12th IEEE Workshop Neural Networks for Signal Processing; 2002; pp 747–756.

- Weininger D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J. Chem. Inf. Model. 1988, 28, 31–36. 10.1021/ci00057a005. [DOI] [Google Scholar]

- Goldberg Y. A Primer on Neural Network Models for Natural Language Processing. J. Artif. Intell. Res. 2016, 57, 345–420. [Google Scholar]

- Hochreiter S.; Schmidhuber J. Long short-term memory. Neural computation 1997, 9, 1735–1780. 10.1162/neco.1997.9.8.1735. [DOI] [PubMed] [Google Scholar]

- Johnson M.; Schuster M.; Le Q. V.; Krikun M.; Wu Y.; Chen Z.; Thorat N.; Viégas F.; Wattenberg M.; Corrado G. Google’s Multilingual Neural Machine Translation System: Enabling Zero-Shot Translation. ArXiv 2016, 1611.04558. [Google Scholar]

- Graves A. Generating sequences with recurrent neural networks. ArXiv 2013, 1308.0850. [Google Scholar]

- Olah C.Understanding LSTM Networks; http://colah.github.io/posts/2015-08-Understanding-LSTMs/.

- Greff K.; Srivastava R. K.; Koutník J.; Steunebrink B. R.; Schmidhuber J. LSTM: A search space odyssey. IEEE transactions on neural networks and learning systems 2017, 28, 2222–2232. 10.1109/TNNLS.2016.2582924. [DOI] [PubMed] [Google Scholar]

- Chollet F.Keras; https://github.com/fchollet/keras; retrieved on 2016-10–-24.

- Kingma D.; Ba J.. Adam: A method for stochastic optimization. In International Conference for Learning Representations; 2015.

- Cireşan D. C.; Meier U.; Schmidhuber J.. Transfer learning for Latin and Chinese characters with deep neural networks. In The 2012 International Joint Conference on Neural Networks (IJCNN); 2012; pp 1–6. [Google Scholar]

- Steinbeck C.; Hoppe C.; Kuhn S.; Floris M.; Guha R.; Willighagen E. L. Recent developments of the chemistry development kit (CDK)-an open-source java library for chemo-and bioinformatics. Curr. Pharm. Des. 2006, 12, 2111–2120. 10.2174/138161206777585274. [DOI] [PubMed] [Google Scholar]

- Steinbeck C.; Han Y.; Kuhn S.; Horlacher O.; Luttmann E.; Willighagen E. The Chemistry Development Kit (CDK): An open-source Java library for chemo-and bioinformatics. J. Chem. Inf. Comp. Sci. 2003, 43, 493–500. 10.1021/ci025584y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pedregosa F.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Chen T.; Guestrin C. XGBoost: A scalable tree boosting system. 22nd ACM SIGKDD Int. Conf. 2016, 785. 10.1145/2939672.2939785. [DOI] [Google Scholar]

- Weininger D.; Weininger A.; Weininger J. L. SMILES. 2. Algorithm for generation of unique SMILES notation. J. Chem. Inf. Model. 1989, 29, 97–101. 10.1021/ci00062a008. [DOI] [Google Scholar]

- https://en.wikipedia.org/wiki/Graph_canonization.

- Srivastava N.; Hinton G. E.; Krizhevsky A.; Sutskever I.; Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958. [Google Scholar]

- Cumming J. G.; Davis A. M.; Muresan S.; Haeberlein M.; Chen H. Chemical predictive modelling to improve compound quality. Nat. Rev. Drug Discovery 2013, 12, 948–962. 10.1038/nrd4128. [DOI] [PubMed] [Google Scholar]

- Chevillard F.; Kolb P. SCUBIDOO: A Large yet Screenable and Easily Searchable Database of Computationally Created Chemical Compounds Optimized toward High Likelihood of Synthetic Tractability. J. Chem. Inf. Model. 2015, 55, 1824–1835. 10.1021/acs.jcim.5b00203. [DOI] [PubMed] [Google Scholar]

- RDKit: Open-source cheminformatics; http://www.rdkit.org.

- Maaten L. v. d.; Hinton G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605. [Google Scholar]

- Bemis G. W.; Murcko M. A. The properties of known drugs. 1. Molecular frameworks. J. Med. Chem. 1996, 39, 2887–2893. 10.1021/jm9602928. [DOI] [PubMed] [Google Scholar]

- Williamson A. E.; Todd M. H.; et al. Open source drug discovery: highly potent antimalarial compounds derived from the Tres Cantos arylpyrroles. ACS Cent. Sci. 2016, 2, 687–701. 10.1021/acscentsci.6b00086. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ley S. V.; Fitzpatrick D. E.; Ingham R.; Myers R. M. Organic synthesis: march of the machines. Angew. Chem., Int. Ed. 2015, 54, 3449–3464. 10.1002/anie.201410744. [DOI] [PubMed] [Google Scholar]

- Sutton R. S.; Barto A. G.. Reinforcement learning: An introduction; MIT Press Cambridge; 1998; Vol. 1. [Google Scholar]

- Ching T.; Himmelstein D. S.; et al. Opportunities And Obstacles For Deep Learning In Biology And Medicine. bioRxiv 2017, 142760. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.