Abstract

Feedback is an essential part of medical training, in which trainees are given information about their performance and direction for further improvement. In diagnostic radiology, feedback entails a detailed review of the differences between the residents’ preliminary interpretation and the attendings’ final interpretation of imaging studies. While the on-call experience of independently interpreting complex cases is important to resident education, the more traditional synchronous “read-out,” or joint review, is often not feasible because of multiple constraints. Without an efficient method to compare reports, grade discrepancies, convey salient teaching points, and view images, valuable lessons in image interpretation and report construction are lost. We developed a streamlined web-based system, including report comparison and image viewing, to minimize barriers to asynchronous communication between attending radiologists and on-call residents. Our system provides real-time, end-to-end delivery of case-specific and user-specific feedback in an easy-to-view format. We assessed quality improvement subjectively through surveys and objectively through participation metrics. Our web-based feedback system improved user satisfaction among both attending and resident radiologists and increased attending participation, particularly for cases in which substantive discrepancies were identified.

Keywords: Radiology education, Dashboard, Resident-attending discrepancy

Introduction

Feedback refers to a dynamic communication process in which information about a trainee’s performance is conveyed to facilitate growth [1]. However, there is no universally agreed-upon methodology for providing feedback, and its quality and resulting effectiveness vary. For feedback to be effective, it needs to be relevant, accurate, timely, specific, and easy to understand [2]. Specific, detailed feedback is received more enthusiastically than brief, non-specific feedback [3]. Timeliness is also important: immediate feedback is viewed as more helpful, and it allows trainees to reflect and make active changes in their behavior [1, 4, 5]. Consistent, systemized feedback is recommended over informal, day-to-day feedback; with informal methods, superiors are more likely to voice negative evaluations and overlook good work [1], even though positive feedback has been shown to increase performance and morale [2].

Feedback is an integral part of residency training, through which residents learn from their mistakes. In addition, consistent, systemized assessment of resident competency can prevent delays in detecting deficiencies, allowing timely educational intervention [6]. Feedback in radiology residency training involves a detailed review of discordance between residents’ interpretations and the final interpretations [7]. While the on-call experience of independently interpreting complex cases and forming clinically relevant conclusions is an essential part of radiology resident education, providing timely, constructive feedback to on-call residents is not always easy, particularly given increasing clinical demands in imaging volume and turnaround times, as well as differences in scheduled work hours [8–10]. Important teaching points in image interpretation and report construction are lost without an efficient way to view changes, grade discrepancies, provide case-specific direction, and easily access imaging studies [9–11]. In many institutions, including ours, trainees are responsible for manually checking the finalized versions of their preliminary reports to learn from their on-call cases [9, 10]. Even when attending radiologists take the initiative to provide feedback, such one-on-one feedback tends to be inconsistent [8]; at our institution, attending radiologists used varying methods for constructive criticism, with some choosing e-mail while others waited for an in-person conversation.

It is imperative that a consistent and easy method of providing feedback, with real-time or near real-time review by the resident, be in place in each residency program. In addition to improved resident learning, quality feedback with regular documentation and review is helpful in ensuring high quality interpretation of imaging studies during off-hours [12]. We developed a web-based system in which giving feedback to radiology residents is simple, and a correlative dashboard where residents can easily consume this feedback with reference to the associated radiology report and imaging. Our goal is to minimize barriers to asynchronous communication between attending radiologists and on-call residents, as well as to document quality improvement subjectively through surveys and objectively through participation metrics.

Materials and Methods

We developed a web-based system to facilitate both the provision and receipt of case-specific feedback on on-call residents’ preliminary reports. The foundation of this system is our departmental server, which receives real-time Health Level 7 (HL7) order and report data from our radiology information system (RIS) (Siemens). Data are filtered by a Mirth Connect HL7 engine (Mirth Corporation) and stored in a MySQL (Oracle) database with associated metadata. This server also runs an Apache web server (Apache Software Foundation), which receives feedback data and serves the dashboard for feedback review. Authentication is performed primarily through our institutional Active Directory (Microsoft) system, together with a local Lightweight Directory Access Protocol (LDAP) server (OpenLDAP) for local group management and access control.
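As an illustration of the kind of field extraction that happens upstream of the database, the minimal Python sketch below pulls an accession number, result status, and report text out of an HL7 v2 ORU result message. It is not our Mirth Connect channel. The field positions (OBR-3 as the accession, OBR-25 as the result status, OBX-5 as a line of report text) follow common HL7 v2 conventions, but which fields a given RIS actually populates is an assumption, and escape sequences and repeating fields are ignored.

```python
from dataclasses import dataclass


@dataclass
class ReportMessage:
    accession: str
    status: str  # OBR-25 result status, e.g. "P" (preliminary) or "F" (final)
    text: str


def parse_oru(message: str) -> ReportMessage:
    """Extract accession, result status and report text from an HL7 v2 ORU message.

    Hypothetical sketch: assumes carriage-return segment separators, '|' field
    separators, '^' component separators, and no HL7 escape sequences.
    """
    accession, status, lines = "", "", []
    for segment in message.strip().split("\r"):
        fields = segment.split("|")
        if fields[0] == "OBR":
            # OBR-3 (filler order number) is commonly used as the accession number.
            accession = fields[3].split("^")[0] if len(fields) > 3 else ""
            # OBR-25 is the result status.
            status = fields[25] if len(fields) > 25 else ""
        elif fields[0] == "OBX" and len(fields) > 5:
            # Many RIS feeds send one line of report text per OBX-5.
            lines.append(fields[5])
    return ReportMessage(accession, status, "\n".join(lines))
```

A status of "P" versus "F" is what would let a preliminary report be stored separately from its finalized version for later comparison.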

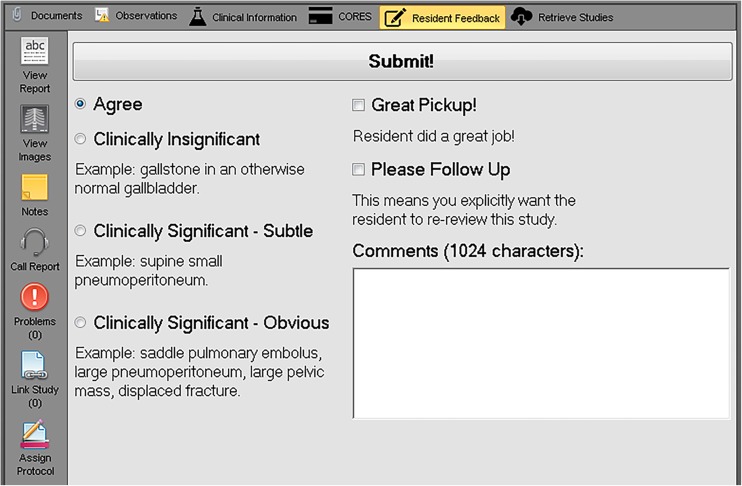

A C#/.NET (Microsoft, Inc) plug-in is integrated into our radiology workflow application (Medicalis Corp.) to collect feedback on resident reports. The plug-in is displayed when the exam is from our local teaching institution, the user is an attending radiologist as determined by our local LDAP server, and a preliminary report from a resident exists for the exam (Fig. 1). At our institution, the on-call resident generates full dictations for the vast majority of cases; for the few exceptions, a brief preliminary report is still generated through our dictation system. The attending radiologist can then provide a discrepancy grade, free-text comments, a positive-feedback flag, and/or a request for follow-up or review by the resident. Discrepancy grading is based on our institution’s modified RADPEER scoring, similar to that used at other academic institutions [13, 14], and has four categories: agreement, clinically insignificant discrepancy, clinically significant discrepancy with subtle findings, and clinically significant discrepancy with not subtle findings. The feedback data are posted through our web server and stored in our database along with our report and order data.
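The display rule and four-level grading scheme above can be sketched as follows. The production plug-in is written in C#/.NET; this Python version only mirrors the logic described in the text. The site code `MAIN`, the LDAP group name `attending-radiologists`, and all class and field names are hypothetical placeholders.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Set


class DiscrepancyGrade(Enum):
    """Modified RADPEER-style categories described in the text."""
    AGREE = 1
    INSIGNIFICANT = 2           # clinically insignificant discrepancy
    SIGNIFICANT_SUBTLE = 3      # clinically significant, subtle findings
    SIGNIFICANT_NOT_SUBTLE = 4  # clinically significant, not subtle findings


@dataclass
class Exam:
    accession: str
    institution: str
    resident_prelim: Optional[str]  # None when no resident preliminary report exists


# Hypothetical placeholders for the teaching-site code and the LDAP attending group.
TEACHING_SITE = "MAIN"
ATTENDING_GROUP = "attending-radiologists"


def show_feedback_plugin(exam: Exam, user_groups: Set[str]) -> bool:
    """All three display conditions from the text must hold."""
    return (
        exam.institution == TEACHING_SITE
        and ATTENDING_GROUP in user_groups
        and exam.resident_prelim is not None
    )


@dataclass
class Feedback:
    accession: str
    grade: DiscrepancyGrade
    comment: str = ""        # free-text comment shown on the resident's dashboard
    positive: bool = False   # positive-feedback flag
    follow_up: bool = False  # request for follow-up or review by the resident
```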

Fig. 1.

Resident feedback plug-in integrated in the radiology worklist application

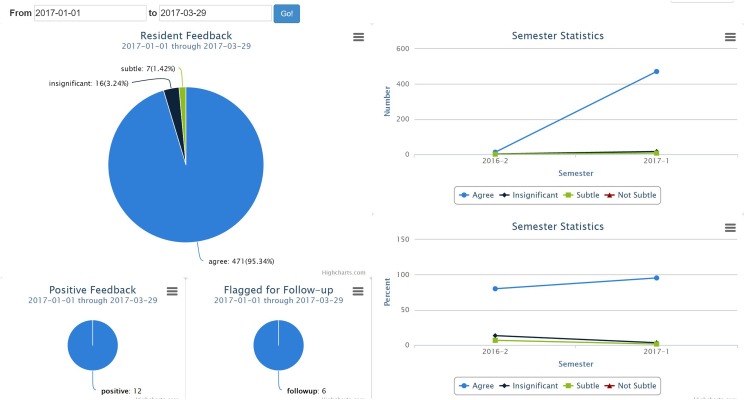

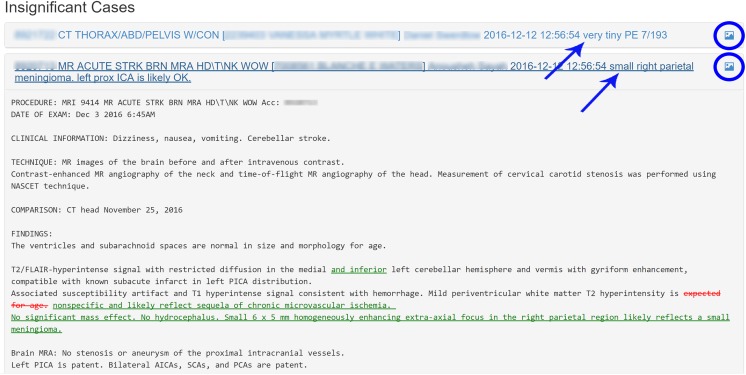

Our web-based dashboard is accessible via our institution’s intranet or virtual private network (VPN). Summary statistics are user-specific and can be customized to a desired date range (Fig. 2). Feedback categories, as well as exams flagged for positive feedback or follow-up, can be clicked interactively to display exam details and attending radiologist comments for each group. Each exam can then be expanded to show highlighted report differences, using methods similar to those previously reported from our institution [11]. A direct link to launch the associated images in context within the web browser is also available (Fig. 3). Our residency program director has access to all residents’ dashboards for administrative and educational progress review and is able to adjudicate discrepancy scores; for the purposes of our study, the scores given by the original interpreting radiologists were analyzed.
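The user-specific, date-range summary on the dashboard amounts to a filtered count by discrepancy category, with the positive-feedback and follow-up flags tallied separately. A minimal in-memory sketch follows; against a MySQL database this would more likely be a single `GROUP BY` query. The record layout and category labels are assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable


@dataclass
class FeedbackRow:
    resident: str
    exam_date: date
    grade: str  # hypothetical labels, e.g. "agree", "insignificant", "significant_subtle"
    positive: bool = False
    follow_up: bool = False


def summarize(rows: Iterable[FeedbackRow], resident: str,
              start: date, end: date) -> Dict[str, int]:
    """Count one resident's graded exams per category in [start, end] (inclusive)."""
    counts: Counter = Counter()
    for r in rows:
        if r.resident != resident or not (start <= r.exam_date <= end):
            continue
        counts[r.grade] += 1
        # Flags are counted in addition to, not instead of, the discrepancy grade.
        if r.positive:
            counts["positive"] += 1
        if r.follow_up:
            counts["follow_up"] += 1
    return dict(counts)
```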

Fig. 2.

Screenshot of the resident feedback dashboard interface, which includes customizable date range selection and the distribution of reviewed reports by discrepancy grade. Reports with positive feedback and those flagged for follow-up are displayed separately. The bar chart on the right is intended to track the resident’s progress over time

Fig. 3.

Display of cases. Exam information and reviewer’s comments (arrows) are shown and can be expanded to view highlighted differences between preliminary and finalized versions of reports

Exemption from full review was obtained from our institutional review board (IRB). Attending radiologists and trainees were surveyed about the ease or difficulty of providing or receiving feedback before and 3 months after the implementation of our system. The survey included several questions about ease of use and satisfaction, graded on a Likert scale. Respondents also provided suggestions via free-text comments. Attending radiologist participation was quantified objectively by tallying the number and percentage of cases with feedback for the 3 months before and 4 months after implementation. Before implementation, attending radiologists could “bulk agree” all cases from overnight on our paper feedback sheets, whereas the new system required explicit feedback for each case; for this reason, we also examined the number and percentage of cases graded anything other than “agree” before and after implementation. The total number of on-call cases was estimated as the number of preliminary reports issued by a resident between 6 pm and 7 am or at any time on Saturday or Sunday. Participation data before and after implementation were compared using a chi-squared test with Yates continuity correction.
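The on-call estimate above reduces to a simple timestamp predicate. The sketch below assumes the 6 pm boundary is inclusive and the 7 am boundary exclusive, which the text does not specify; the function name is hypothetical.

```python
from datetime import datetime, time


def is_on_call(issued: datetime) -> bool:
    """True if a preliminary report was issued in the assumed on-call window.

    Window (assumption): [18:00, 07:00) on weekdays, and all day Saturday and Sunday.
    """
    if issued.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return True
    t = issued.time()
    # The overnight window wraps past midnight, so it is the union of two ranges.
    return t >= time(18, 0) or t < time(7, 0)
```

The monthly denominator is then `sum(is_on_call(t) for t in issue_times)` over the resident report issue timestamps for that month.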

Results

Survey Data

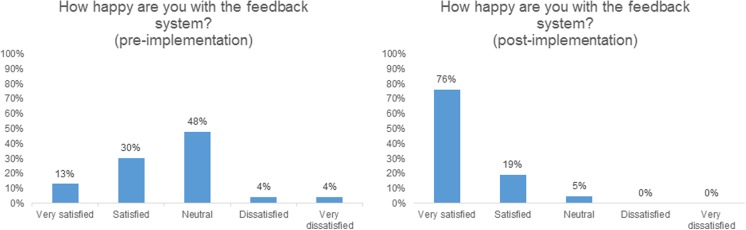

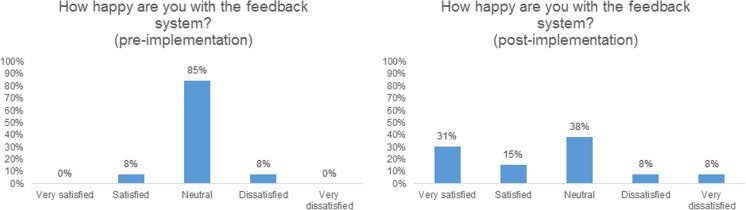

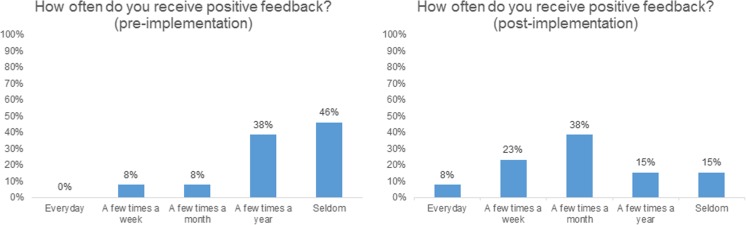

Twenty-three of 31 attending radiologists (74%) responded to the pre-intervention survey, and 21 (68%) responded to the post-intervention survey. All 13 (100%) 2nd-, 3rd-, and 4th-year residents taking call responded to the survey. Before the intervention, only 9% of attendings were satisfied with the existing methods of feedback, 48% were neutral, and 43% were dissatisfied; after the intervention, 85% were satisfied, 5% were neutral, and none were dissatisfied (Fig. 4). On post-intervention surveys, nearly all attendings (95%) responded that they used the plug-in to provide feedback to on-call residents. Attending radiologists were more likely to give explicit feedback for subtle and clinically insignificant discrepancies with the new system: before implementation, only 22% of attendings would communicate clinically insignificant discrepancies, whereas after implementation, 43% stated they would give feedback for such cases.

Most residents, 11 of 13 (85%), were neutral toward the pre-existing system, while one resident was satisfied and one was dissatisfied. On post-intervention surveys, six residents (46%) responded that they were satisfied, five (38%) were neutral, and two stated they were not satisfied (Fig. 5).

Our feedback system also encouraged positive feedback. On the pre-intervention survey, 46% of residents responded that they seldom received commendation, and all residents desired more positive feedback. With the new system, the proportion who seldom received commendation decreased to 15%, and 70% of residents received positive feedback at least a few times a month (Fig. 6). The attending survey showed the same trend: while only 48% gave positive feedback at least a few times a month before the intervention, 76% did so with the new system.

Fig. 4.

Attending radiologist satisfaction with the feedback system before and after implementation

Fig. 5.

Resident radiologist satisfaction with the feedback system before and after implementation

Fig. 6.

Frequency of positive feedback received by residents before and after implementation of our feedback system

Objective Quantification Data

Attending radiologist response rates before and after implementation showed a statistically significant increase both overall (p < 0.0001; two-tailed chi-squared test with Yates correction) and for cases with feedback other than “agree” (p < 0.0001) (Table 1). Before implementation, 31.9% of all on-call cases received feedback, compared with 35.8% in the months after implementation. The attending response rate increased notably in the third and fourth months after implementation, when feedback was given for 48–49% of all on-call cases. Non-agree feedback was given for 2.1% of cases before implementation and 4.2% after implementation. The proportion of cases with clinically significant discrepancies, with either subtle or not subtle findings, also increased, from 0.5 to 1.7% (p < 0.0001).
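The 2×2 comparison behind these p values can be sketched with the Python standard library alone. For a table [[a, b], [c, d]] with N = a + b + c + d, the Yates-corrected statistic is χ² = N(max(0, |ad − bc| − N/2))² / ((a + b)(c + d)(a + c)(b + d)), and with one degree of freedom the p value is erfc(√(χ²/2)). This is a generic implementation of the test, not the statistical software used in the study.

```python
import math
from typing import Tuple


def yates_chi2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Chi-squared test with Yates continuity correction for the 2x2 table
    [[a, b], [c, d]] (rows = periods, columns = outcome present / absent).

    Returns (chi2, p) where p is the upper-tail probability for 1 degree of freedom.
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("a row or column total is zero")
    # Yates correction: shrink |ad - bc| by n/2, but never below zero.
    diff = max(0.0, abs(a * d - b * c) - n / 2)
    chi2 = n * diff ** 2 / denom
    # For 1 df, P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p
```

For the non-agree counts in Table 1 (145 of 6936 cases before, 394 of 9459 after), `yates_chi2(145, 6936 - 145, 394, 9459 - 394)` gives χ² of roughly 53.5, with p far below 0.0001.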

Table 1.

Objective quantification of attending radiologist response rate before and after implementation of web-based feedback system

| Month | Total number of cases with feedback (%)ᵃ | Number of cases with non-agree feedback (%) | Number of cases with clinically significant discrepancies (%) | Total number of preliminary reportsᵇ |
|---|---|---|---|---|
| **Before implementation** | | | | |
| July 2016 | 901 (45) | 60 (3) | 16 (0.8) | 2019 |
| August 2016 | 683 (28) | 51 (2.1) | 6 (0.2) | 2418 |
| September 2016 | 627 (25) | 34 (1.4) | 11 (0.4) | 2499 |
| Total cases | 2211 (31.9) | 145 (2.1) | 33 (0.5) | 6936 |
| Average | 737 | 48.3 | 11 | 2312 |
| **After implementation** | | | | |
| November 2016 | 583 (24) | 112 (4.5) | 42 (1.7) | 2463 |
| December 2016 | 243 (22) | 53 (4.8) | 52 (2.0) | 2093 |
| January 2017 | 1189 (48) | 134 (5) | 44 (1.8) | 2478 |
| February 2017 | 1187 (49) | 95 (4) | 25 (1.0) | 2425 |
| Total cases | 3202 (35.8) | 394 (4.2) | 163 (1.7) | 9459 |
| Average | 800.5 | 98.5 | 41.8 | 2364.8 |
| p value | p < 0.0001 | p < 0.0001 | p < 0.0001 | |

ᵃPercentage relative to the total number of preliminary reports

ᵇThe total number of preliminary reports issued by a resident between 6 pm and 7 am or on Saturday or Sunday was used as an estimate of the number of on-call cases

Discussion

Feedback needs to be timely, specific, and easy to understand to be effective [2, 4]. For example, “great job on handling the phone call to Mr. Jones” is more helpful than “great job” [3]. Immediate feedback is better, partly because a trainee’s ability to recall the details of a particular task declines as time passes [4]. The trainee may also assume that the giver of feedback can judge their performance or skills more accurately when the assessment is made immediately after the task is completed. Timely feedback is better received [5] and allows trainees to reflect and make active changes to their behavior [1]. Consistent, specific feedback allows early detection of residents’ deficiencies [6]. In addition, systemized assessment of residents’ performance can help ensure high-quality interpretation of imaging studies [12].

While several tools for tracking discrepancies in resident preliminary reports and for report comparison have been described [8, 15], our approach focuses on ease of use and completeness for both the attending radiologist and the trainee, with survey and objective compliance data supporting acceptance and improvement at our institution. This workflow is seamlessly integrated into the radiology worklist without taking up valuable screen real estate on diagnostic monitors. The attending radiologist can provide feedback in as few as two mouse clicks, and after the completion of this project we enabled an auto-agree function, which assumes that the attending agrees if they sign the report without explicitly providing feedback, further reducing the number of required steps. Unlike some workflows in which discrepancy tracking is based on macros, our system also allows case-specific instruction via free-text comments from faculty, which are displayed only on the individual resident’s dashboard. Additional flags for positive feedback and specific follow-up are available and encouraged, based on the assessment of the resident’s preliminary interpretation.
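The auto-agree behavior described above can be sketched as a default applied when the attending signs the report. Tagging each grade with its source keeps explicit agreement separable from auto-agreement, which the future-directions discussion relies on. The `explicit` and `auto` labels, grade strings, and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class GradeRecord:
    accession: str
    grade: str   # hypothetical labels, e.g. "agree", "insignificant", ...
    source: str  # "explicit" if the attending chose a grade, "auto" otherwise


def grade_on_sign(accession: str, explicit_grade: Optional[str]) -> GradeRecord:
    """Record a grade when the attending signs the final report.

    With no feedback entered, agreement is assumed but tagged "auto", so it can
    still be told apart from an explicit "agree" in later analyses.
    """
    if explicit_grade is None:
        return GradeRecord(accession, "agree", "auto")
    return GradeRecord(accession, explicit_grade, "explicit")


def split_agreements(records: Iterable[GradeRecord]) -> Tuple[int, int]:
    """Return (explicit agree count, auto agree count)."""
    explicit = auto = 0
    for r in records:
        if r.grade == "agree":
            if r.source == "auto":
                auto += 1
            else:
                explicit += 1
    return explicit, auto
```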

The graphical format of the resident feedback dashboard provides a streamlined display of the resident’s performance. Customizable date ranges, summary visualizations, interactive drill-down capability, and integrated web-based image launch allow complete review of feedback along with report and imaging data in one easily accessible location. The dashboard is accessible via our institution’s intranet and VPN, and cases can be viewed in full without a vendor-specific PACS. Many previously described report review tools have focused on the number of words or characters changed as a measure of resident performance. However, we and others have found that many of these changes are stylistic and do not necessarily affect the pertinent findings or diagnoses that influence patient care [8, 11]. While we still highlight these changes using previously reported methods [11], our emphasis for resident education is on discrepancy grading and comments.
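The highlighted report differences rely on the previously reported Comparison-Bot methods [11]. The Python sketch below is not that implementation; it is a simple word-level diff built on the standard library's `difflib.SequenceMatcher`, which also returns a changed-word count, the kind of metric earlier tools emphasized. The markup style (`[-deleted-]`, `{+inserted+}`) is an arbitrary choice for illustration.

```python
import difflib
from typing import List, Tuple


def word_diff(prelim: str, final: str) -> Tuple[str, int]:
    """Mark deleted words as [-word-] and inserted words as {+word+}.

    Returns the marked-up text and the number of words deleted or inserted.
    """
    a, b = prelim.split(), final.split()
    out: List[str] = []
    changed = 0
    sm = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    for tag, i1, i2, j1, j2 in sm.get_opcodes():
        if tag == "equal":
            out.extend(a[i1:i2])
            continue
        # "replace", "delete" and "insert" opcodes all contribute changed words.
        out.extend(f"[-{w}-]" for w in a[i1:i2])
        out.extend(f"{{+{w}+}}" for w in b[j1:j2])
        changed += (i2 - i1) + (j2 - j1)
    return " ".join(out), changed
```

For example, `word_diff("No acute intracranial hemorrhage.", "No evidence of acute intracranial hemorrhage.")` returns `("No {+evidence+} {+of+} acute intracranial hemorrhage.", 2)`: a purely stylistic edit still counts as two changed words, which is why raw change counts are a weak proxy for interpretive performance.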

Our web-based feedback system was well received at our institution, with greatly improved satisfaction among attending radiologists. Resident satisfaction also improved, but to a lesser extent. We hypothesize that this is related to a falsely elevated discrepancy rate: (a) attending radiologists are more likely to remember to submit explicit feedback for discrepant cases than for “Agree” cases; and (b) with the convenience of the new web-based system, attendings more frequently provided case-specific direction, even for changes that did not make a clinically significant difference. Department-wide implementation of the new system also facilitated communication between residents and attendings about how on-call feedback can be improved. One of the keys to providing useful, well-received feedback is to involve trainees in the process and elicit their input on the type of feedback they want [16]; this process has resulted in a more feedback-friendly culture at our institution.

Our study has several limitations. Direct comparison of objective participation data was somewhat limited, primarily because with our old paper-based system, attendings would commonly mark all reports in their queue as “Agree” in bulk without reporting on each exam individually. During the study period, the new system did not assume that an exam was graded “Agree”; feedback had to be given explicitly. Despite the ability to bulk agree with the old paper-based system, the attending radiologist response rate increased significantly overall after implementation, particularly in the most recent months. After implementation, the number and proportion of cases with discrepancy grades other than “Agree” also increased significantly, reflecting the ease with which attending radiologists could provide precise, case-specific descriptions of errors and suggestions for refinement. The proportion of cases with clinically significant discrepancies also increased, but we believe this reflects underreporting bias in our old system. The discrepancy rate of 1.7% with our new feedback system is comparable to the attending-resident discrepancy rates of 0.1–2.6% cited by Brown et al. [17] and to attending-attending discrepancy rates of 0.8–2.7% [18–21].

Our survey sample sizes were small, as ours is a medium-sized residency program with only 13 residents (excluding the author) taking call in a single academic year. Attending survey participation was also somewhat limited. The longer-term impact of our system on resident education and patient care requires further evaluation; larger, likely multi-institutional studies would be required to fully validate our results.

Future directions include increasing faculty participation and standardizing feedback. While we believe the plug-in is relatively easy to use, further education or other workflow improvements, such as our recently implemented auto-agree function, may make it easier still for attending radiologists. Assuming that the attending agrees when no feedback is explicitly given has some limitations, but our attendings felt it was best for workflow, and we can still distinguish explicit agreement from auto-agreement in future analyses. We have also found that some attendings grade resident reports using different internal scales. Our program director can adjudicate discrepancy scores, which we will study as more data become available, and we are considering notifications for attending radiologists whose grading required adjudication. In the future, we also hope to incorporate feedback data into our report search tool, create anonymized teaching files for sharing great calls and missed cases in conference, and improve the report comparison function using natural language processing.
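Keeping both the original and the adjudicated grade makes it possible to analyze the interpreting radiologist's scores, as in this study, while also identifying attendings whose grading was changed and who might be notified. The sketch below is hypothetical: the field names, and the choice to display the adjudicated grade when one exists, are assumptions rather than a description of our system.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass
class GradedCase:
    accession: str
    attending: str
    attending_grade: str                     # grade from the original interpreting radiologist
    adjudicated_grade: Optional[str] = None  # set only if the program director overrides it

    @property
    def effective_grade(self) -> str:
        # Assumption: an adjudicated grade, when present, supersedes the original for display.
        if self.adjudicated_grade is not None:
            return self.adjudicated_grade
        return self.attending_grade

    @property
    def was_changed(self) -> bool:
        return self.adjudicated_grade is not None and self.adjudicated_grade != self.attending_grade


def attendings_to_notify(cases: Iterable[GradedCase]) -> Set[str]:
    """Attendings with at least one grade changed on adjudication."""
    return {c.attending for c in cases if c.was_changed}
```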

Conclusion

Feedback is an essential method of instruction for radiology residents on call. An efficient, streamlined web-based system that delivers end-to-end feedback, including report and image viewing, can enhance the delivery of constructive criticism from attending radiologists to on-call residents. Our system has improved user satisfaction among both attending and resident radiologists. Quality improvement is also documented objectively through increased participation metrics.

References

1. Baker A, Perreault D, Reid A, Blanchard CM. Feedback and organizations: feedback is good, feedback-friendly culture is better. Can Psychol. 2013;54(4):260–268. doi: 10.1037/a0034691.

2. Baker N. Employee feedback technologies in the human performance system. Hum Resour Dev Int. 2010;13(4):477–485. doi: 10.1080/13678868.2010.501994.

3. Raemdonck I, Strijbos J-W. Feedback perceptions and attribution by secretarial employees. Eur J Train Dev. 2013;37(1):24–48. doi: 10.1108/03090591311293275.

4. Northcraft GB, Schmidt AM, Ashford SJ. Feedback and the rationing of time and effort among competing tasks. J Appl Psychol. 2011;96(5):1076–1086. doi: 10.1037/a0023221.

5. Bindal T, Wall D, Goodyear HM. Trainee doctors’ views on workplace-based assessments: are they just a tick box exercise? Med Teach. 2011;33(11):919–927. doi: 10.3109/0142159X.2011.558140.

6. Mar C, Chang S, Forster B. Remedial training for the radiology resident: a template for optimization of the learning plan. Acad Radiol. 2015;22(2):240–246. doi: 10.1016/j.acra.2014.10.005.

7. Lee S, Baek HJ, Jung HK, Il MJ, Cho SB, Choi BH, Bae K, Jeon KN, Choi DS, Shin HS, Kim DW. Interpretations of diffusion-weighted MR imaging by radiology residents in the emergency department: is diagnostic performance influenced by the level of residency training? Radiol Med. 2017;122(1):35–42. doi: 10.1007/s11547-016-0688-4.

8. Gorniak RJT, Flanders AE, Sharpe RE. Trainee report dashboard: tool for enhancing feedback to radiology trainees about their reports. Radiographics. 2013;33(7):2105–2113. doi: 10.1148/rg.337135705.

9. Harari AA, Conti MB, Jamal Bokhari SA, Staib LH, Taylor CR. The role of report comparison, analysis, and discrepancy categorization in resident education. Am J Roentgenol. 2016;207(6):1223–1231. doi: 10.2214/AJR.16.16245.

10. Sharpe RE, Surrey D, Gorniak RJT, Nazarian L, Rao VM, Flanders AE. Radiology report comparator: a novel method to augment resident education. J Digit Imaging. 2012;25(3):330–336. doi: 10.1007/s10278-011-9419-5.

11. Kalaria AD, Filice RW. Comparison-Bot: an automated preliminary-final report comparison system. J Digit Imaging. 2016;29(3):325–330. doi: 10.1007/s10278-015-9840-2.

12. Ruchman RB, Jaeger J, Wiggins EF, Seinfeld S, Thakral V, Bolla S, Wallach S. Preliminary radiology resident interpretations versus final attending radiologist interpretations and the impact on patient care in a community hospital. Am J Roentgenol. 2007;189(3):523–526. doi: 10.2214/AJR.07.2307.

13. Ruma J, Klein KA, Chong S, Wesolowski J, Kazerooni EA, Ellis JH, Myles JD. Cross-sectional examination interpretation discrepancies between on-call diagnostic radiology residents and subspecialty faculty radiologists: analysis by imaging modality and subspecialty. J Am Coll Radiol. 2011;8(6):409–414. doi: 10.1016/j.jacr.2011.01.012.

14. Weinberg BD, Richter MD, Champine JG, Morriss MC, Browning T. Radiology resident preliminary reporting in an independent call environment: multiyear assessment of volume, timeliness, and accuracy. J Am Coll Radiol. 2015;12(1):95–100. doi: 10.1016/j.jacr.2014.08.005.

15. Itri JN, Kim W, Scanlon MH. Orion: a web-based application designed to monitor resident and fellow performance on-call. J Digit Imaging. 2011;24(5):897–907. doi: 10.1007/s10278-011-9360-7.

16. Boud D, Molloy E. Rethinking models of feedback for learning: the challenge of design. Assess Eval High Educ. 2013;38(6):698–712. doi: 10.1080/02602938.2012.691462.

17. Brown JM, Dickerson EC, Rabinowitz LC, Cohan RH, Ellis JH, Litell JM, Kaza RK, Lopez AN, Theyyunni NR, Weber JT, Kocher KE, Davenport MS. “Concordance” revisited: a multispecialty appraisal of “concordant” preliminary abdominopelvic CT reports. J Am Coll Radiol. 2016;13(9):1111–1117. doi: 10.1016/j.jacr.2016.04.019.

18. Branstetter BF IV, Morgan MB, Nesbit CE, Phillips JA, Lionetti DM, Chang PJ, Towers JD. Preliminary reports in the emergency department: is a subspecialist radiologist more accurate than a radiology resident? Acad Radiol. 2007;14(2):201–206. doi: 10.1016/j.acra.2006.11.001.

19. Harvey HB, Alkasab TK, Prabhakar AM, Halpern EF, Rosenthal DI, Pandharipande PV, Gazelle GS. Radiologist peer review by group consensus. J Am Coll Radiol. 2016;13(6):656–662. doi: 10.1016/j.jacr.2015.11.013.

20. Cheng T, Dumire R, Golden S, Gregory J. Impact on patient care of discordance in radiology readings between external overnight radiology services and staff radiology readings at a level 1 trauma center. Am J Surg. 2013;205(3):280–283. doi: 10.1016/j.amjsurg.2012.10.017.

21. Borgstede JP, Lewis RS, Bhargavan M, Sunshine JH. RADPEER quality assurance program: a multifacility study of interpretive disagreement rates. J Am Coll Radiol. 2004;1(1):59–65. doi: 10.1016/S1546-1440(03)00002-4.