Abstract

Objective The electronic chart review habits of intensive care unit (ICU) clinicians admitting new patients are largely unknown but necessary to inform the design of existing and future critical care information systems.

Methods We conducted a survey study to assess the electronic chart review practices, information needs, workflow, and data display preferences among medical ICU clinicians admitting new patients. We surveyed rotating residents, critical care fellows, advanced practice providers, and attending physicians at three Mayo Clinic sites (Minnesota, Florida, and Arizona) via email with a single follow-up reminder message.

Results Of 234 clinicians invited, 156 completed the full survey (67% response rate). Ninety-two percent of medical ICU clinicians performed electronic chart review for the majority of new patients. Clinicians estimated spending a median (interquartile range (IQR)) of 15 (10–20) minutes for a typical case, and 25 (15–40) minutes for complex cases, with no difference across training levels. Chart review spans 3 or more years for two-thirds of clinicians, with the most relevant categories being imaging, laboratory studies, diagnostic studies, microbiology reports, and clinical notes, although most time is spent reviewing notes. Most clinicians (77%) worry about overlooking important information due to the volume of data (74%) and inadequate display/organization (63%). Potential solutions are chronologic ordering of disparate data types, color coding, and explicit data filtering techniques. The ability to dynamically customize information display for different users and varying clinical scenarios is paramount.

Conclusion Electronic chart review of historical data is an important, prevalent, and potentially time-consuming activity among medical ICU clinicians who would benefit from improved information display systems.

Keywords: information needs, electronic health records, clinical informatics, intensive care units, data display

Background and Significance

With the widespread dissemination of electronic medical records (EMRs), clinicians have the ability to perform information retrieval on a comprehensive, longitudinal medical history database to familiarize themselves with new patients, referred to as electronic chart review. 1 In fact, the EMR now represents the most common initial information source for new patients. 2 Performing an electronic chart review (or “chart biopsy”) 3 is perhaps most important for patients with complex medical histories, prolonged hospital courses, or cognitive/mental status limitations that do not permit accurate history-taking. Given the availability of all this information, clinicians may spend nearly 15% of their time performing chart review tasks. 4 Surprisingly, we found little published literature about how the EMR is reviewed by clinicians performing direct patient care for new admissions. 3 5 6 As electronic chart review is an increasingly common and important task, 7 the paucity of informative “use-case” studies on the topic may contribute to poor EMR design and low EMR satisfaction, 8 despite an increasing recognition of the importance of EMR usability. 9 10 11 The wider potential implications of suboptimal electronic chart review tools include medical error and patient safety concerns, which are increasingly recognized unintended consequences of digital health records. 10 12 13

The intensive care unit (ICU) represents a unique information environment, where massive data collection 14 combines with physician–patient communication that is frequently impaired by intubation, sedation, and limited consciousness in high-acuity conditions. Although the information needs of ICU clinicians have been previously studied by our group, they remain incompletely understood, especially regarding the review of historical EHR information. 15 Our research group is developing an electronic tool that can organize and filter historical EMR data into a distilled, meaningful narrative to reduce information overload and improve chart review efficiency. 16 However, the knowledge gaps about electronic chart review habits at the time of ICU admission represented both an unmet clinical informatics need and a barrier to such tool development.

Objective

Through the study of clinicians' electronic chart review habits during medical ICU admission, we aim to address a general informatics knowledge gap for different critical care areas and inform the design, workflow, and usability of existing and future critical care informatics tools. Specifically, we had four objectives:

1. Quantify the time health care providers estimate spending on electronic chart review for new patients admitted to medical ICUs.

2. Assess the type and amount of historical data elements sought during electronic chart review and their relative importance in building a clinical narrative.

3. Assess the information workflow patterns used to complete an electronic chart review.

4. Elicit clinician preferences on the method for display of historical clinical information.

Methods

Design

We conducted a prospective survey study of clinicians that inquired about their chart review habits, historical information needs, workflow, and data display preferences during electronic chart review for newly admitted medical ICU patients.

Setting and Participants

The survey was sent to all clinicians who practice within a primary medical or mixed medical/surgical ICU setting at three academic medical centers: Mayo Clinic Minnesota, Mayo Clinic Arizona, and Mayo Clinic Florida. Among all sites, we included ICU attendings, fellows, and advanced practice providers (APPs), and at two sites (Mayo Clinic Florida and Arizona) we also included rotating residents. ICU clinicians were identified through departmental email lists and rotation schedules. There were no specific exclusion criteria for clinicians. All three sites used three core medical record applications that were developed internally for electronic medical record (EMR) viewing (Synthesis, AWARE, QREADS), as well as a combination of two commercial electronic health records (EHRs; customized versions of General Electric Company Centricity and Cerner PowerChart).

Survey Procedure

We emailed clinicians an explanation of our study and electronic survey link between December 2016 and January 2017. It was explained that the survey responses were anonymous and had no bearing on their employment or trainee evaluations. For those who did not initially complete the survey, a single follow-up request was sent approximately 2 weeks later. The survey remained open for a maximum of 6 weeks. The electronic surveys were administered and managed using the Research Electronic Data Capture (REDCap) tool version 4.13.17 (Vanderbilt University, Nashville, Tennessee, United States). 17 This study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects and approved by the Mayo Clinic Institutional Review Board.

Survey Instrument

The survey was developed through a modified Delphi process 18 among the authors after identifying gaps in the existing literature and with the additional goal of informing a critical care information display tool we are designing. The three survey design participants were M.E.N. (pulmonary/critical care fellow with experience in designing web applications), V.H. (a clinical informaticist with experience in prior survey design and information-needs assessment), and P.M.-F. (intensivist with experience in deployment and assessment of novel critical care information systems). The survey assessed clinician impressions of their electronic chart review habits in the following five domains: general behaviors (including time spent), type of information sought, chart review workflow, information display preferences, and demographic information. M.E.N. and V.H. first worked together to identify the survey question domains that would meet the stated research needs. M.E.N. developed the foundational survey questions, which were then evaluated iteratively by the rest of the group. During the first evaluation round, two questions were removed and five questions were updated to improve clarity (no questions added). In the second round of evaluation, 10 questions were modified to improve clarity, with no questions added or deleted. The final consensus survey contained approximately 74 elements within 30 core questions. The questions were developed to be meaningful irrespective of the EHR software. Most of the ordinal, multiple-choice questions had a central/neutral anchor. Multiple-choice questions with potentially subjective answers all had an option for free-text input, and “general comments” input was offered for each domain to ensure an opportunity for subjective input. No questions had “mandatory” responses, and survey completeness was defined by reaching the final survey screen and “submitting” the electronic survey. Most questions are shown in Tables 1, 2, and 3 and Figs. 2 and 3, with the full survey provided in the Supplementary Material (available in the online version only).

Table 1. General habits/behaviors for electronic chart review of new medical ICU admissions.

| Prompt | Answer option | N (%) |
|---|---|---|
| Aside from reviewing the immediate ICU admission data (most recent vitals/imaging/laboratories), do you perform any form of historical electronic “chart review”? | | N = 156 |
| | Yes | 155 (99) |
| | No | 1 (1) |
| For what proportion of your new patients do you perform a chart review? | | N = 155 a |
| | 75–100% | 143 (92) |
| | 50–74% | 10 (6) |
| | 25–49% | 2 (1) |
| | 0–24% | 0 (0) |
| The primary reason you perform an electronic chart review is: | | N = 155 a |
| | Primarily construct my own clinical narrative to understand the events leading to the patient's current state | 125 (81) |
| | Confirm the major narrative events/data points as relayed by the patient or another provider | 20 (13) |
| | Search for omitted narrative events/data points that may be relevant | 8 (5) |
| | Other reason | 2 (1) |
| For what percentage of new ICU admissions is your diagnosis or treatment strategy mostly established by chart review alone (i.e., excluding bedside history/exam from the patient)? | | N = 156 |
| | 0–24% | 23 (15) |
| | 25–49% | 50 (32) |
| | 50–74% | 65 (42) |
| | 75–100% | 18 (12) |

Abbreviation: ICU, intensive care unit.

Note: Responses representing the plurality/majority appear in bold.

a N < 156 (“complete response” number) indicates missing values for that question.

Table 2. Electronic chart review workflow habits among medical ICU clinicians.

| Prompt | Answer option | N (%) |
|---|---|---|
| Which statement best describes your usual chart review workflow: | | N = 154 a |
| | I have a methodical chart review workflow | 78 (51) |
| | My chart review workflow is haphazard/disorganized | 76 (49) |
| If a “methodical” workflow, first data category reviewed (free-text entry): | | N = 63 b |
| | Clinical notes | 40 (63) |
| | Vital sign data | 11 (17) |
| | Various other | 12 (19) |
| What is the main reason for a haphazard chart review workflow? c | | N = 76 |
| | Each piece of information leads me in different directions (inherently disorganized data) | 60 (79) |
| | Data are spread across different tabs/screens (interface design) | 39 (51) |
| | Other reason | 6 (8) |
| Do you worry about overlooking important pieces of information during your chart review? | | N = 153 a |
| | No | 35 (23) |
| | Yes | 118 (77) |
| If so, what do you think would be the main reasons for an oversight? c | | N = 118 |
| | Too many total data elements to review | 87 (74) |
| | Data are poorly displayed or organized for mass review | 74 (63) |
| | Didn't review far enough back in the record | 41 (35) |
| | Misread a report or value | 24 (20) |
| | Too busy/inadequate time for chart review (free-text entry) | 5 (4) |
| | Other reason | 3 (3) |

Abbreviation: ICU, intensive care unit.

Note: Responses representing the plurality/majority appear in bold.

a N < 156 (“complete response” number) indicates missing values for that question.

b “Methodical” subgroup, total N = 78.

c Multiple responses allowed, percentages will not sum to 100%.

Table 3. Information display preferences for ICU electronic record systems.

| Prompt | Answer option | N (%) |
|---|---|---|
| When considering the vast amount of information available for review in some electronic health records, would you prefer that the computer system intelligently “hide” low-yield data from view? | | N = 154 a |
| | Yes | 98 (64) |
| | … “hiding” low-yield data would be helpful | 11 (7) |
| | … but I would want some indicator about what type of information is “hidden” | 86 (56) |
| | … other reason | 1 (1) |
| | No | 56 (36) |
| | … I would not trust the “hiding” rules, which may suppress important data | 35 (23) |
| | … I want to see and review all the data myself | 17 (11) |
| | … other reason | 4 (3) |
| When browsing a list of clinical notes or reports in the electronic medical record, would you prefer seeing: | | N = 153 a |
| | All metadata b (favors comprehensiveness over screen information density, requiring more scrolling) | 35 (23) |
| | Limited group of metadata | 90 (59) |
| | Very few metadata (favors screen information density over comprehensiveness, fewer sorting options) | 28 (18) |
| For the display of clinical metadata text in the electronic medical record, would you prefer: | | N = 153 a |
| | More clinical abbreviations c (favors screen information density, possible acronym/abbreviation ambiguity) | 96 (63) |
| | Fewer clinical abbreviations (favors unambiguous language, requires more screen space/scrolling) | 57 (37) |
| For the visual display of data elements in the electronic medical record, would you prefer: d | | N = 152 a |
| | Color coding e | 91 (60) |
| | Abbreviated text-based descriptors | 87 (57) |
| | Icons | 49 (32) |
| | Verbose text-based descriptors | 23 (15) |

Abbreviation: ICU, intensive care unit.

Note: Responses representing the plurality/majority appear in bold.

a N < 156 (“complete response” number) indicates missing values for that question.

b Prompt: Metadata refers to attributes of a data element beyond the intrinsic “value.” For a clinical report/note, the report text is the “value,” while the “author,” “date time,” “service description,” “finalization status,” “subtype,” “service group,” “department,” and “facility” are metadata.

c Prompt: Clinical abbreviations and acronyms can be used to condense the visual display of clinical metadata, for example “PFTs” rather than “pulmonary function tests” or “TTE” rather than “echocardiogram-transthoracic.”

d Multiple responses allowed, percentages will not sum to 100%.

e Prompt: Color-coding and iconography can be used to visually describe data elements and augment or even replace the need for text-based descriptors of meta-data. (Potential downsides to these tools are needing familiarity with the key/legend and possible visual distraction.) As an example, a small icon of a “QRS” complex could represent an “ECG tracing” report, or all cardiovascular study report items could have a red background color.

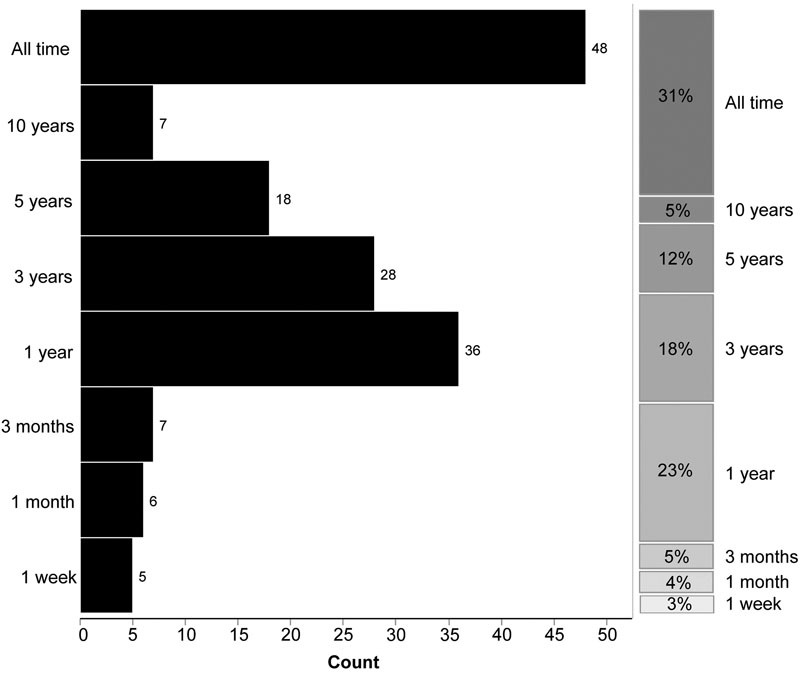

Fig. 2.

Self-reported historical depth of electronic chart review for new medical ICU patients (n = 155). “On average, how far back into the record do you review during a typical chart review?” (Y-axis). Histogram bars and counts appear on the left side of the figure, with the right stacked column indicating relative percentages. ICU, intensive care unit.

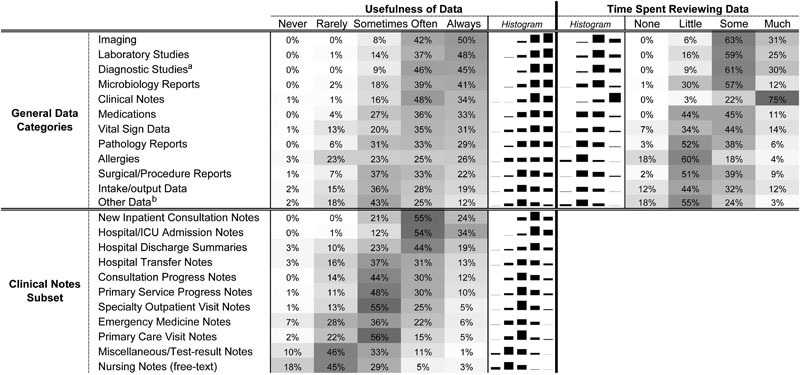

Fig. 3.

Perceived usefulness and time spent reviewing electronic information for new medical ICU patients (n = 155). “Please rate the following data elements for USEFULNESS in satisfying your chart review information needs” and “Please rate the following data elements for AVERAGE TIME SPENT per element (compared to total time spent in chart review) in satisfying your chart review information needs.” Data elements ordered in descending order by perceived usefulness, with darker heatmap coloring indicating a higher proportion of respondents. Side-by-side mini-histograms correspond to the adjacent percentages within a column and allow visual estimation of concordance/discordance between the “utility” of information and the relative “time spent” in review. ICU, intensive care unit. a Diagnostic studies excluding primary imaging studies. b Other data (e.g., advance directives, upcoming appointments, pending orders, nursing flowsheet data).

Results Analysis

Results are reported with descriptive statistics, using median/interquartile range (IQR) due to data skew. Qualitative results from free-text input were aggregated and reviewed for themes, with representative quotations reported where applicable. The Kruskal–Wallis rank-sums test was used to check for a pooled significant difference for time spent performing chart review. All analyses were performed using JMP Statistical Software for Windows version 12.2.0 (SAS Institute Inc, Cary, North Carolina, United States). Only surveys that were completed in full were included in final analysis.
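As an illustration of the statistics named above, the following minimal sketch computes a median with IQR and a tie-corrected Kruskal–Wallis H statistic using only the Python standard library. It is not the authors' analysis (which was run in JMP); the function names and the per-role minute values are hypothetical and chosen only to show the mechanics.

```python
from itertools import chain
from statistics import median, quantiles


def median_iqr(values):
    """Return (median, Q1, Q3) using inclusive quartiles."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), q1, q3


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic with the standard tie correction.

    Raises ZeroDivisionError if every pooled value is identical.
    """
    pooled = sorted(chain.from_iterable(groups))
    n = len(pooled)
    rank_of = {}   # value -> average rank among the pooled data
    tie_term = 0   # sum of (t^3 - t) over groups of tied values
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        t = j - i
        rank_of[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        tie_term += t**3 - t
        i = j
    rank_sum_term = sum(
        sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups
    )
    h = 12 / (n * (n + 1)) * rank_sum_term - 3 * (n + 1)
    return h / (1 - tie_term / (n**3 - n))


# Hypothetical self-reported minutes per "average" patient, by team role.
minutes_by_role = {
    "attending": [10, 15, 15, 20, 30],
    "fellow": [10, 10, 15, 20, 25],
    "app": [5, 15, 15, 20, 20],
    "resident": [10, 15, 20, 20, 30],
}

for role, minutes in minutes_by_role.items():
    print(role, median_iqr(minutes))

h_stat = kruskal_wallis_h(list(minutes_by_role.values()))
# With four groups, H is referred to a chi-square distribution with 3 degrees
# of freedom; the 0.05 critical value is about 7.815.
print("H =", round(h_stat, 3), "significant at 0.05:", h_stat > 7.815)
```

Ranks rather than raw minutes drive the test, which is why it suits skewed, self-reported time estimates.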

Results

Respondent Demographics

Table 4 provides respondent demographics. In total, 156 complete responses were received among 234 emailed surveys, with an overall complete response rate of 67%. Complete response rates by individual Mayo Clinic sites were 76, 58, and 61% for Minnesota, Florida, and Arizona, respectively. There was a fairly even distribution of ICU team roles/training levels with the largest spread being among residents (19% of responses) and attendings (35% of responses). As expected, the “number of years in clinical practice” was right skewed, with a median of 6 years but 25% of respondents having 15 or more years of clinical experience. Fifty-three percent of respondents were internal medicine and/or pulmonary and critical care–trained intensivists, and accordingly the majority of practice ICUs were either medical or mixed medical/surgical units. Fewer than 12% of clinicians reported only “beginner” skills with their institution's EHR, indicating a largely experienced group of software users. In total, across all sites, clinicians used seven different electronic health data systems to perform their chart review, with 62% of clinicians preferring Mayo Clinic's internally developed “Synthesis” application for the task.

Table 4. Respondent demographics.

| Clinicians surveyed | N |
|---|---|
| Total surveys sent | 234 |
| Partial responses | 3 |
| Complete responses | 156 |
| Complete response rate (%) | 67% |
| Complete respondents (N = 156) | N (% of total) |
| ICU team role | N = 154 a |
| Attending | 53 (34) |
| Fellows | 37 (24) |
| APP | 34 (22) |
| Residents | 30 (19) |
| Years in clinical practice | N = 143 a |
| Minimum | < 1 |
| Median (IQR) | 6 (4–15) |
| Maximum | 38 |
| Mayo Clinic site b | N = 156 |
| Rochester, MN | 81 (52) |
| Jacksonville, FL | 47 (30) |
| Scottsdale, AZ | 28 (18) |
| Usual ICU practice c | N = 153 a |
| Medical | 78 (50) |
| Mixed medical/surgical | 78 (50) |
| Surgical | 24 (15) |
| Other (eICU, cardiac, etc.) | 13 (8) |
| Primary specialty | N = 150 a |
| Pulmonary and critical care | 54 (36) |
| Critical care (internal medicine pathway) | 26 (17) |
| Critical care (anesthesiology pathway) | 15 (10) |
| Other critical care | 24 (16) |
| Internal medicine residency/preliminary year | 27 (18) |
| Other residency/practice | 16 (11) |
| Familiarity with existing EMR software | N = 154 a |
| Beginner | 18 (12) |
| Intermediate | 63 (41) |
| Advanced | 73 (47) |

Abbreviations: APP, advanced practice provider; eICU, electronic intensive care unit; EMR, electronic medical record; IQR, interquartile range.

a N < 156 (“complete response” number) indicates missing values for that question.

b Percentages reflect proportion of total respondents across all sites. Individual site-specific response rates were 76, 58, and 61% for Rochester, Jacksonville, and Scottsdale, respectively.

c Multiple responses allowed, percentages will not sum to 100%.

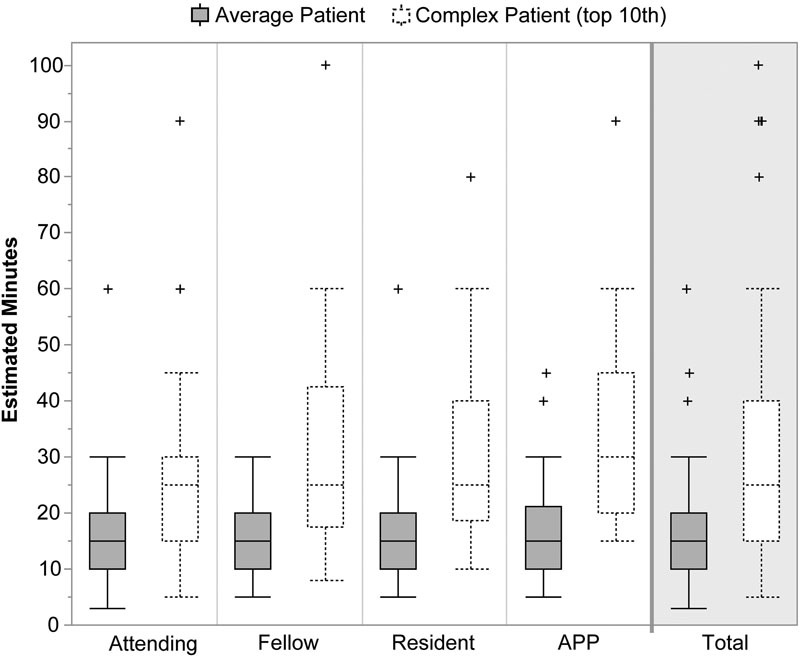

General Behaviors

Ninety-two percent of clinicians reported performing some form of historical electronic chart review for nearly all (75–100%) of their new ICU admissions (Table 1). For the “average” new patient admission, clinicians estimated spending a median of 15 minutes (IQR: 10–20) reviewing the electronic record, and a median of 25 minutes (IQR: 15–40) for patients in the top 10th percentile for medical complexity, with no significant differences between ICU team roles (Fig. 1). Additional subgroup analysis by “usual ICU practice location” being primarily a medical ICU versus other ICU also showed no significant differences in duration of chart review (p = 0.59 for “average” patients, p = 0.59 for “complex” patients). When asked about the historical depth of clinical chart review, two-thirds of clinicians reported reviewing data at least 3 years back, and over one-third of clinicians reviewed data from 10 years or more (Fig. 2).

Fig. 1.

Self-reported duration of electronic chart review for new medical ICU patients, grouped by team role (n = 153). X-axis represents team role. Box plots represent the 25th, 50th (median), and 75th percentiles, with whiskers extending to 1.5 × the interquartile range. Kruskal–Wallis test for pooled analysis of variance showed no significant difference among ICU team role groupings for “average” patients (p = 0.33) or “complex” patients (p = 0.41). “Complex” patients were defined as those patients in the top 10th percentile for medical complexity or protracted hospital course. APP, advanced practice provider; ICU, intensive care unit.

Regarding the overall informational relevance of electronic chart review, 53% of clinicians indicated that for at least half of new admissions the “diagnosis and treatment strategy [is] mostly established by chart review alone (i.e., excluding bedside history/exam […] obtained from the patient),” with 85% of clinicians finding this true for at least one in four admissions (Table 1). When given the opportunity for free-text feedback about general chart review habits, one theme mentioned by eight respondents was that the stability and/or clinical scenario of the admitted patient are primarily responsible for the depth and content of the chart review.
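The 53% and 85% figures are cumulative proportions derived from the last question in Table 1. A short sketch of that arithmetic follows; the counts are taken from Table 1, while the variable and function names are illustrative only.

```python
# Counts from Table 1: share of new ICU admissions for which diagnosis or
# treatment strategy is mostly established by chart review alone.
counts = {"0-24%": 23, "25-49%": 50, "50-74%": 65, "75-100%": 18}
total = sum(counts.values())


def pct(numerator, denominator):
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


# Clinicians answering "50-74%" or "75-100%" (at least half of admissions).
at_least_half = counts["50-74%"] + counts["75-100%"]
# Everyone except the "0-24%" group (at least one in four admissions).
at_least_quarter = total - counts["0-24%"]

print(total, pct(at_least_half, total), pct(at_least_quarter, total))
```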

Information Needs

Fig. 3 provides a summary of clinician perceptions about their information use at the time of ICU admission. Clinicians were asked to distinguish between the usefulness of various data elements and the amount of time they spend in reviewing those elements, which allowed ranking the various elements against each other for informational importance. Over 70% of clinicians indicated the following data elements are “always” or “often” useful to review, indicating the most critical information categories (in descending order): imaging, laboratory studies, diagnostic studies, microbiology reports, and clinical notes. In general, clinicians spent more time reviewing typically “useful” data, but there was a notable discrepancy within clinical notes: 75% of clinicians reported spending “much time” reviewing them, while only 34% reported notes to be “always” useful, suggesting these may be important but often low-yield information sources.

When drilling down on subtypes of clinical notes, the top 4 most useful note types were new inpatient consultations, admission notes, discharge summaries, and service-to-service transfer notes. Interestingly, emergency medicine notes ranked low, with only 30% of clinicians reporting them as “often/always” useful, despite this being a very common pathway for medical ICU admission. When asked about the typical amount of each note's content that was actually read, only 21% of clinicians read the “entire note,” while the remaining 79% read only some combination of the “history of present illness,” “problem/diagnosis list,” and “impression/plan.”

Free-text feedback about EMR information use revealed several themes. One was that there can be high variability in the chart review process depending on the clinical scenario. Another theme was the frequent inaccuracy of clinical note documents and mistrust of the verbally relayed history from other providers, with some respondents noting that accuracy often correlates with the department/service performing the documentation. Finally, some clinicians viewed the patient interview as a mechanism to confirm data discovered during the antecedent chart review.

Electronic Chart Review Workflow

A summary of electronic chart review workflow responses is found in Table 2. Only 51% of clinicians reported using a “methodical” workflow when performing historical chart review. Of those who did, the most common starting point was “clinical notes” (63%), in particular making reference to hospital discharge summaries, admission notes, and diagnosis/problem lists. Among the 64 respondents providing free-text input about their “methodical” workflow, 5 clinicians reported following a strict chronologic order in their chart review, one clinician specifically mentioned using a checklist/rubric to ensure completeness, and the rest largely followed a sequential data category review process. Of respondents who reported a “haphazard” (nonmethodical) workflow, 79% cited the cause to be inherently disorganized data. However, 51% endorsed that interface design issues contributed to a disorganized chart review process. One user commented that the desire to review the record in chronological order caused their record review to be “haphazard and mixed” as the data are spread across multiple tabs/screens.

Interestingly, 77% of medical ICU clinicians reported “worrying” about overlooking important information in the medical record, citing both the amount of data (74%) and the display/organization of data (63%) as the most likely cause for important oversights.
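Because respondents could select more than one reason for a potential oversight, each percentage in Table 2 uses the number of respondents (118) as its denominator, not the number of selections. The sketch below reproduces the reported percentages under that reading; the counts come from Table 2 and the variable names are illustrative.

```python
# Counts from Table 2 (multiple responses allowed), among the 118 clinicians
# who reported worrying about overlooking information.
respondents = 118
reasons = {
    "Too many total data elements to review": 87,
    "Data are poorly displayed or organized for mass review": 74,
    "Didn't review far enough back in the record": 41,
    "Misread a report or value": 24,
    "Too busy/inadequate time for chart review": 5,
    "Other reason": 3,
}

# Each percentage is "respondents selecting this reason / all respondents".
percentages = {
    reason: round(100 * n / respondents) for reason, n in reasons.items()
}
total_selections = sum(reasons.values())

for reason, share in percentages.items():
    print(f"{share:>3}%  {reason}")
print("selections per respondent:", round(total_selections / respondents, 2))
```

The percentages sum to well over 100 because the average respondent selected roughly two reasons.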

Data Display Preferences

Table 3 outlines user responses to general interface design questions. Given the abundance of data contained in the EMR, creating a filtered patient summary that only displays pertinent information is an area of active interest and previous review. 19 Sixty-four percent of clinicians indicated they would “prefer that the computer system intelligently ‘hide’ low-yield data,” but most (56% of all respondents) would require an “indicator about what type of information is ‘hidden.’” However, 23% of respondents said they “would not trust the ‘hiding’ rules, which may suppress important data.” Several additional questions were asked about metadata, as it pertains to unstructured/semistructured data found in clinical notes or reports. Only 23% of clinicians prefer to see “all metadata” about a document (which favors comprehensiveness over screen information density, requiring more scrolling), with 59% of clinicians wanting just a “limited group” of metadata with which to process/sort reports. Although rarely implemented in most EMR applications, 63% of clinicians would prefer the use of “more clinical abbreviations” (e.g., “PFT” rather than “pulmonary function test”), which could improve screen information density. Finally, when prompted about the use of abbreviated/verbose text-based descriptors, color-coding, and iconography to aid in visual display of clinical metadata, only 15% would prefer “verbose text-based descriptors,” while the majority prefer either abbreviated text descriptors (57%) or color-coding (60%).

Clinicians were prompted for free-text input about EMR information display preferences, with several themes arising. One was a strong need for robust “search” features within the EMR. One user described the chart review process as a “scavenger hunt” in which the clinician “pulls” data from the EMR rather than having only relevant data “pushed.” Similarly, others prefer “natural highlighting of important notes/events” and improved “flagging” of abnormal data to reduce the information review burden. Several users commented on the potential utility of better methods to summarize data/events in the EMR to facilitate review, such as using “logical, time-based organization with extraneous data removed … visual tools and hovering pop-ups declutter the interface and allow for focus on important areas.”

It was noted that while application “tabs” help organize data, they cause the unwanted need for more “clicks” to complete data review. As clinicians completing the survey use several EMR applications, the desire for a unified, single application was apparent, as was the desire for consistency of nomenclature/metadata between different record systems.
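To make two of the reported preferences concrete (a single chronological view across data types, and hiding low-yield data while indicating what was hidden), here is a minimal sketch. It does not describe any of the EMR applications used at the study sites; the `ChartItem` structure, the `build_timeline` function, and the sample records are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ChartItem:
    """One historical data element, regardless of its source screen or tab."""
    when: date
    category: str  # e.g., "imaging", "clinical note", "nursing note"
    title: str


def build_timeline(items, hidden_categories):
    """Return (visible items newest-first, count of hidden items per category)."""
    visible = sorted(
        (it for it in items if it.category not in hidden_categories),
        key=lambda it: it.when,
        reverse=True,
    )
    hidden = Counter(
        it.category for it in items if it.category in hidden_categories
    )
    return visible, hidden


# Hypothetical records for a single patient.
records = [
    ChartItem(date(2016, 11, 2), "clinical note", "Discharge summary"),
    ChartItem(date(2016, 12, 20), "imaging", "Chest CT"),
    ChartItem(date(2016, 12, 21), "nursing note", "Shift assessment"),
    ChartItem(date(2016, 12, 22), "nursing note", "Shift assessment"),
    ChartItem(date(2016, 12, 23), "microbiology", "Blood culture"),
]

timeline, hidden_counts = build_timeline(records, {"nursing note"})
for item in timeline:
    print(item.when.isoformat(), item.category, item.title)
for category, n in hidden_counts.items():
    print(f"[{n} {category} item(s) hidden]")
```

Returning the hidden counts alongside the visible list keeps the suppression explicit, which is the condition most respondents attached to any automatic hiding.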

Discussion

This study provides original and valuable insight about the type and depth of information used within the EMR by ICU clinicians admitting new patients, particularly focusing on the use and relative value of unstructured clinical data. We found that nearly every clinician (99%) performs a review of the electronic record when admitting new patients, spending an estimated 15 minutes reviewing typical cases, and 25 minutes for the most complex patients, which was surprisingly consistent across all experience categories (residents through attendings). We are aware of two studies that directly quantified time spent in EMR review for unfamiliar patients: a hypothetical “cross-cover” scenario found chart review averaged 6.2 minutes (range: 1–17), 20 and a hypothetical MICU admission scenario averaged 11.0 minutes, 6 both of which are less than clinicians in the present study estimate spending. However, the hypothetical and varying clinical scenarios, as well as this study's reliance on clinician recall, complicate direct comparison. Block et al reported that modern internal medicine interns spend 14.5% of their duty hours “reviewing [the] patient chart” and 40% of work hours performing some computer task. 4 Combined with our results, we find ICU clinicians may spend a significant proportion of time at the computer, likely limiting the time spent directly interviewing and examining the new patient. Furthermore, we were surprised to find that two-thirds of ICU clinicians reported searching back into the record 3 or more years to find relevant information. This indicates that ICU clinicians require ready access to the majority of the historical medical record, emphasizing both the importance and simultaneous difficulty in providing/displaying this information succinctly.

One may wonder why clinicians are choosing to spend valuable time at the computer reviewing the EMR. We found the main goal of electronic chart review is to construct or reconstruct a clinical narrative leading to the patient's current state. During the course of care, especially with an ICU admission, many verbal handoffs and written documents contain the clinical story, but our results suggest that nearly all clinicians repeat this process anew when assuming care. Although this might seem like unnecessary redundancy, it may actually indicate skepticism or confirmation seeking about what other clinicians have said or documented, which could be partly influenced by the lack of a single curated medical history and problem list within our particular EMR.

Other explanations for narrative reconstruction are that information needs vary from provider to provider, or that electronic chart review is part of the “learning” process to develop familiarity with new patients. Varpio et al described the act of “building the patient's story” as a “vitally important skill,” but that ironically “EMR use obstructed [this process] by fragmenting data interconnections.” 7 Although medical training curricula have well-defined techniques to learn and perform the physical exam or take a verbal patient history, to our knowledge there are no formal education programs on how to perform electronic chart review. Determining an “ideal” methodologic approach to electronic chart review represents an area for further study and potential curriculum development given the modern importance of chart review as a skill.

Two additional findings that explain the significant time spent reviewing the EMR are the sheer volume of data available to review, and its organization within the EMR. Manor-Shulman et al studied the volume of clinical data collected over 24 hours for patients admitted to a pediatric ICU, finding that over 1,400 discrete items are documented each day per patient (as of 2005), which did not even include clinical note data. 14 A subsequent study by Pickering et al at our institution found that, despite the abundance of data, relatively few clinical concepts are needed for ICU decision making, 15 which demonstrates the need to better understand which historical data elements are most important for prioritization purposes. Our research augments this understanding, finding that the most frequently helpful historical information for ICU care fell into the following five categories: imaging, laboratory studies, diagnostic studies, microbiology reports, and clinical notes. These data may provide a set of “core” categories around which a well-designed critical care admission information display system could revolve. It is also worth noting that clinicians may need to review significantly more information than is ultimately important for their final decision making, implying information systems need the ability to display high volumes of information, not just high-yield data.

Reichert et al studied physicians tasked with creating a hypothetical patient summary for unfamiliar patients. 5 They found that clinicians spent the most time reviewing clinical notes, similar to our research ( Fig. 3 ). Our study elaborates on this category by ranking these documents' perceived utility in the ICU, with the caveat that these findings describe averages and do not apply equally in all clinical situations. We show that certain notes could be given visual/informational preference (inpatient consultations, admission notes), while others are possible candidates for filtering/suppression (primary care, nursing, and “miscellaneous” notes). Furthermore, we found that the large majority of ICU clinicians (∼80%) read only the history of present illness, problem list, and/or assessment/plan, indicating that clinical note display could probably be abbreviated by default. While we offer these suggestions with the goal of alleviating information overload in the ICU, we acknowledge that any form of default data suppression or filtering must be carefully considered and studied during implementation to ensure patient safety.
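As a rough illustration of the default note filtering suggested above, the following Python sketch moves preferred note types to the top and hides lower-yield types, while always reporting how many notes were hidden. The type labels, the `Note` structure, and the `apply_default_filter` function are hypothetical names chosen for this example; the type sets simply mirror the survey rankings and are not a validated rule set.

```python
from collections import Counter
from dataclasses import dataclass

# Assumed defaults mirroring the survey rankings; not a validated rule set.
PRIORITY_TYPES = {"inpatient consultation", "admission"}
SUPPRESSED_TYPES = {"primary care", "nursing", "miscellaneous"}


@dataclass
class Note:
    note_type: str  # hypothetical free-text label for the kind of note
    title: str


def apply_default_filter(notes, show_all=False):
    """Return (visible notes, count of hidden notes per type)."""
    if show_all:
        # The clinician has overridden the default; nothing is hidden.
        return list(notes), Counter()
    visible = [n for n in notes if n.note_type not in SUPPRESSED_TYPES]
    hidden = Counter(n.note_type for n in notes if n.note_type in SUPPRESSED_TYPES)
    # Stable sort: priority types move to the front, other order is preserved.
    visible.sort(key=lambda n: n.note_type not in PRIORITY_TYPES)
    return visible, hidden


notes = [
    Note("nursing", "Shift assessment"),
    Note("discharge summary", "Prior hospitalization"),
    Note("admission", "Hospital admission H&P"),
    Note("nursing", "Shift assessment"),
]
visible, hidden = apply_default_filter(notes)
for note_type, count in hidden.items():
    print(f"{count} {note_type} note(s) hidden by default filter")
```

Returning the hidden counts alongside the visible notes is one way to keep suppression explicit, so that a display could always show what was filtered and offer the `show_all` override.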

Similar to the findings of Reichert et al about EMR review sequence, clinicians in our study commonly reported a haphazard workflow, in large part due to the data itself, but half of respondents also cited interface design issues. This may contribute to the high rate of “worry” (77%) clinicians reported about overlooking important data. We found that clinicians preferred a chronologic review of the record, but the dispersion of data across multiple screens forces them to flip back and forth in the interface. As Varpio et al commented, “the EMR was scattering the different elements of the patient's story into different screens and content categories, thus making it harder to consolidate and interpret the data. In other words, the EHR made it harder to build the patient's story.” 7 This suggests that the EMR should allow the display of disparate data types/categories within the same interface so that temporally related data can be properly synthesized. We propose that a chronologic timeline may best satisfy this display need, which could be further augmented using problem-oriented 21 or organ system-based organization. 22
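The idea of a single chronologic timeline can be sketched in a few lines of Python. This is a minimal illustration only, assuming each data category can be exported as a list of timestamped events; the `Event` structure and `build_timeline` function are hypothetical and do not describe any particular EMR.

```python
from dataclasses import dataclass
from datetime import datetime
from itertools import chain


@dataclass(frozen=True)
class Event:
    when: datetime
    category: str  # e.g., "imaging", "laboratory", "clinical note"
    summary: str


def build_timeline(*sources, newest_first=True):
    """Merge per-category event lists into one chronologically ordered list."""
    return sorted(
        chain.from_iterable(sources),
        key=lambda event: event.when,
        reverse=newest_first,
    )


# Illustrative, made-up events from three separate data categories.
labs = [Event(datetime(2017, 1, 3, 6, 0), "laboratory", "Creatinine 2.1 mg/dL")]
imaging = [Event(datetime(2017, 1, 2, 14, 30), "imaging", "Chest radiograph")]
notes = [Event(datetime(2017, 1, 3, 9, 15), "clinical note", "Nephrology consult")]

for event in build_timeline(labs, imaging, notes):
    print(event.when.isoformat(), event.category, event.summary, sep=" | ")
```

Because every event carries its category, the same merged list could later be grouped or filtered by problem or organ system without giving up the chronologic ordering.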

Nonetheless, it was very clear from multiple clinicians' feedback that a uniform approach to information display will be inadequate due to large variation in data needs. Electronic display systems need to be customizable for highly variable workflows between providers and even between patients/cases with differing data granularity needs. The use of color-coding, common clinical abbreviations, metadata parsimony, explicit data filtering techniques, and improved highlighting/flagging of normal/abnormal results may be helpful toward these goals. While many EMRs (including those studied) have basic flagging of abnormal laboratory results, we envision that “flagging” could additionally include other (unstructured) diagnostic reports that contain new/important findings, such as highlighting echocardiographic reports when there has been a significant change in ejection fraction. The findings also reinforce an important caveat about filtering clinical data: the need for explicit rules and indicators when information is suppressed to ensure safety and minimize the clinician's “worry” about overlooking data. It is imperative that clinicians first understand a filtered display system before they will trust and use it.
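The echocardiography example can be made concrete with a small Python sketch. It assumes the ejection fraction has already been extracted from each unstructured report, which is itself a nontrivial step, and it uses an arbitrary 10-percentage-point threshold purely for illustration; `EchoReport`, `flag_ef_changes`, and the threshold are hypothetical and would need clinical validation.

```python
from dataclasses import dataclass
from datetime import date

# Assumed threshold (absolute percentage points); not a clinical standard.
EF_CHANGE_THRESHOLD = 10.0


@dataclass
class EchoReport:
    performed: date
    ejection_fraction: float  # percent, assumed already parsed from the report


def flag_ef_changes(reports, threshold=EF_CHANGE_THRESHOLD):
    """Return reports whose EF differs from the preceding study by >= threshold."""
    ordered = sorted(reports, key=lambda r: r.performed)
    flagged = []
    # Compare each study with the one immediately before it in time.
    for previous, current in zip(ordered, ordered[1:]):
        if abs(current.ejection_fraction - previous.ejection_fraction) >= threshold:
            flagged.append(current)
    return flagged


# Illustrative, made-up history for one patient.
history = [
    EchoReport(date(2014, 5, 1), 60.0),
    EchoReport(date(2015, 6, 1), 58.0),
    EchoReport(date(2016, 7, 1), 35.0),
]
for report in flag_ef_changes(history):
    print(f"Flag echo from {report.performed}: EF {report.ejection_fraction}%")
```

Comparing each study only with its immediate predecessor keeps the rule simple and explainable, which matters if clinicians are expected to understand why a report was highlighted.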

Finally, we found that over half of our ICU clinicians endorsed that the bulk of medical decision making/treatment strategy could be formed by electronic chart review alone in 50% or more of new ICU cases. Certainly, ICU patients are a unique population who are frequently unable to provide reliable history due to altered mental status/sedation or tracheal intubation, and this finding supports the increasing importance of the EMR as a primary decision-making tool. EMR systems cannot be conceptualized as the mere “front-end” for a complex database, with data siloed into the same categories used in the era of paper charts. This approach fails to leverage the power of modern computer systems and human–computer interaction tenets, perpetuates the problem of information overload, and leads to continued clinician-EMR dissatisfaction.

Future Research

While this survey study provides a basic understanding of the electronic chart review habits and information needs among medical ICU clinicians, it raises several ongoing questions, including the following:

Are clinician beliefs about their data utilization consistent with observed behaviors of their chart review habits?

As many clinicians report a “haphazard” workflow through the EMR, how exactly are users accessing and navigating the data?

Are there inefficiencies in the chart review workflow that may contribute to this potentially time-consuming activity (and could be targets for system improvement)?

To help answer these questions, a prospective study is being planned at our institution.

Strengths and Limitations

Great efforts were made to recruit a large number of academic medical ICU practitioners across three states with different training levels and varying EMR systems, yielding a good overall response rate (67%). Nonetheless, ICU practitioners at nonacademic medical centers, who may have differing habits or opinions about EMR use, were not surveyed. A similar threat to generalizability is the use of only a handful of EMR systems within the studied institutions. Although questions were tailored to elicit generic information needs, it is possible that clinicians who use different medical record systems would report different behaviors or preferences. Also, several clinicians provided feedback that the survey questions and associated findings about “average” information time, usefulness, etc., should be interpreted with caution given the high variability. While sweeping conclusions should not be drawn from this survey study, the data remain valuable in offering a foundation for understanding ICU chart review habits. As with any survey study, recall bias remains a major limitation, especially for objective measurements like the time spent performing chart review. While survey questions were designed to be basic and unambiguous, the instrument was not formally validated and it is possible that the questions failed to capture the intended concepts. Finally, all self-reported time measures should be considered estimates; only a prospective, observational study could help address this concern, as discussed above.

Conclusion

Independent of their training level, medical ICU clinicians estimate spending significant amounts of time performing electronic chart review for the majority of new patients, with a frequently haphazard/disorganized approach. In doing so, they are primarily attempting to construct a clinical narrative, often reviewing data 3 or more years old, with the most commonly useful data being imaging, laboratory studies, diagnostic studies, microbiology reports, and clinical notes. Clinicians spend the most time reviewing clinical notes, finding inpatient consultations, admission notes, discharge summaries, and service transfer notes the most helpful. Most ICU clinicians worry about overlooking important information in the medical record, burdened by both the volume of information in the EMR and inadequate display interfaces. Potentially helpful solutions are chronologic ordering of disparate data, use of color-coding and common clinical abbreviations, limiting metadata display, explicit data filtering techniques, and improved “flagging” of normal/abnormal results. The ability to dynamically customize the information display for different users and varying clinical scenarios is paramount. Given the prevalence and importance of clinical electronic chart review in modern medicine, which can often allow a diagnosis and treatment plan independent of the patient interview or exam, more research is needed on this topic. This study can help inform the design of clinically oriented, visually optimized electronic medical information display systems so that ICU clinicians can step away from the computer and return to the bedside, providing better care for their patients.

Clinical Relevance Statement

This study shines new light on the opaque but prevalent process of electronic chart review among ICU practitioners. By elucidating the patterns of information-retrieval depth and clinical content relevance within a sea of medical record data, we hope informaticists will use our findings to help design and optimize medical record display systems so that ICU clinicians may spend less time at the computer and more time at the bedside in direct patient care.

Multiple Choice Questions

1. How long do medical ICU clinicians estimate spending performing an electronic chart review for a new complex (top 10th percentile) patient admission?

A. 10 minutes

B. 15 minutes

C. 20 minutes

D. 25 minutes

Correct Answer: The correct answer is D, 25 minutes. We prompted medical ICU clinicians to estimate the total time they spent on electronic chart review for their top 10th percentile-complexity patients. The median time was 25 minutes, with an interquartile range of 15 to 40 minutes. This was a somewhat surprising finding in that it represents a significant amount of clinicians' time, which was consistent across training levels (from residents to attendings). It highlights the importance of optimizing the efficiency of information access and display within the EMR.

2. Which of the following clinical notes might be most important to include in a filtered list or clinical summary for ICU clinicians admitting new patients?

A. Inpatient consultation notes, emergency medicine notes

B. Emergency medicine notes, nursing notes

C. Hospital admission notes, inpatient consultation notes

D. Primary care notes, emergency medicine notes

Correct Answer: The correct answer is C, Hospital admission notes and inpatient consultation notes. When we surveyed clinicians about which clinical note types they found to be most useful, the top types (rated to be “often” or “always” useful by the most clinicians) were inpatient consultation notes, hospital/ICU admission notes, and hospital discharge summaries. Nursing notes, primary care visits, and surprisingly even emergency medicine notes were less frequently helpful compared with the previously mentioned note types. Of course, relevant information can be highly contextual for each patient, but these findings could assist with the creation of default information filters.

Protection of Human and Animal Subjects

This study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects and approved by the Mayo Clinic Institutional Review Board.

Conflict of Interest

None.

Funding

There were no specific intramural or extramural funds for this project. Mayo Clinic's installation of REDCap software is supported by the Mayo Clinic Center for Clinical and Translational Science, through an NIH Clinical and Translational Science Award (UL1 TR000135).

Supplementary Material

References

- 1. Hanauer D A, Mei Q, Law J, Khanna R, Zheng K. Supporting information retrieval from electronic health records: a report of University of Michigan's nine-year experience in developing and using the Electronic Medical Record Search Engine (EMERSE). J Biomed Inform. 2015;55:290–300. doi: 10.1016/j.jbi.2015.05.003.

- 2. Redd T K, Doberne J W, Lattin D et al. Variability in electronic health record usage and perceptions among specialty vs. primary care physicians. AMIA Annu Symp Proc. 2015;2015:2053–2062.

- 3. Hilligoss B, Zheng K. Chart biopsy: an emerging medical practice enabled by electronic health records and its impacts on emergency department-inpatient admission handoffs. J Am Med Inform Assoc. 2013;20(02):260–267. doi: 10.1136/amiajnl-2012-001065.

- 4. Block L, Habicht R, Wu A W et al. In the wake of the 2003 and 2011 duty hours regulations, how do internal medicine interns spend their time? J Gen Intern Med. 2013;28(08):1042–1047. doi: 10.1007/s11606-013-2376-6.

- 5. Reichert D, Kaufman D, Bloxham B, Chase H, Elhadad N. Cognitive analysis of the summarization of longitudinal patient records. AMIA Annu Symp Proc. 2010;2010:667–671.

- 6. Kannampallil T G, Franklin A, Mishra R, Almoosa K F, Cohen T, Patel V L. Understanding the nature of information seeking behavior in critical care: implications for the design of health information technology. Artif Intell Med. 2013;57(01):21–29. doi: 10.1016/j.artmed.2012.10.002.

- 7. Varpio L, Rashotte J, Day K, King J, Kuziemsky C, Parush A. The EHR and building the patient's story: a qualitative investigation of how EHR use obstructs a vital clinical activity. Int J Med Inform. 2015;84(12):1019–1028. doi: 10.1016/j.ijmedinf.2015.09.004.

- 8. American EHR & the American Medical Association. Physicians Use of EHR Systems 2014. 2014. Available at: http://www.americanehr.com/research/reports/Physicians-Use-of-EHR-Systems-2014.aspx. Accessed April 10, 2017.

- 9. Schumacher R M, Patterson E S, North R, Quinn M T. NISTIR 7804 Technical Evaluation, Testing and Validation of the Usability of Electronic Health Records. National Institute of Standards and Technology, U.S. Department of Commerce; 2012.

- 10. Middleton B, Bloomrosen M, Dente M A et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013;20(e1):e2–e8.

- 11. Belden J L, Grayson R, Barnes J. Defining and Testing EMR Usability: Principles and Proposed Methods of EMR Usability Evaluation and Rating. Healthcare Information and Management Systems Society (HIMSS); 2009.

- 12. Committee on Patient Safety and Health Information Technology. Health IT and Patient Safety: Building Safer Systems for Better Care. Institute of Medicine. Washington, DC: National Academies Press; 2011.

- 13. Sittig D F, Ash J S, Singh H. The SAFER guides: empowering organizations to improve the safety and effectiveness of electronic health records. Am J Manag Care. 2014;20(05):418–423.

- 14. Manor-Shulman O, Beyene J, Frndova H, Parshuram C S. Quantifying the volume of documented clinical information in critical illness. J Crit Care. 2008;23(02):245–250. doi: 10.1016/j.jcrc.2007.06.003.

- 15. Pickering B W, Gajic O, Ahmed A, Herasevich V, Keegan M T. Data utilization for medical decision making at the time of patient admission to ICU. Crit Care Med. 2013;41(06):1502–1510. doi: 10.1097/CCM.0b013e318287f0c0.

- 16. Nolan M E, Pickering B W, Herasevich V. Initial clinician impressions of a novel interactive Medical Record Timeline (MeRLin) to facilitate historical chart review during new patient encounters in the ICU. Am J Respir Crit Care Med. 2016;193:A1101.

- 17. Harris P A, Taylor R, Thielke R, Payne J, Gonzalez N, Conde J G. Research electronic data capture (REDCap)–a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(02):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 18. Dalkey N, Helmer O. An experimental application of the DELPHI method to the use of experts. Manage Sci. 1963;9(03):458–467.

- 19. Pivovarov R, Elhadad N. Automated methods for the summarization of electronic health records. J Am Med Inform Assoc. 2015;22(05):938–947. doi: 10.1093/jamia/ocv032.

- 20. Kendall L, Klasnja P, Iwasaki J et al. Use of simulated physician handoffs to study cross-cover chart biopsy in the electronic medical record. AMIA Annu Symp Proc. 2013;2013:766–775.

- 21. Weed L L. Medical records that guide and teach. N Engl J Med. 1968;278(11):593–600. doi: 10.1056/NEJM196803142781105.

- 22. Tange H J, Schouten H C, Kester A DM, Hasman A. The granularity of medical narratives and its effect on the speed and completeness of information retrieval. J Am Med Inform Assoc. 1998;5(06):571–582. doi: 10.1136/jamia.1998.0050571.
