Abstract

Background A detailed understanding of electronic health record (EHR) workflow patterns and information use is necessary to inform user-centered design of critical care information systems. While developing a longitudinal medical record visualization tool to facilitate electronic chart review (ECR) for medical intensive care unit (MICU) clinicians, we found inadequate research on clinician–EHR interactions.

Objective We systematically studied EHR information use and workflow among MICU clinicians to determine the optimal selection and display of core data for a revised EHR interface.

Methods We conducted a direct observational study of MICU clinicians performing ECR for unfamiliar patients during their routine daily practice at an academic medical center. Using a customized manual data collection instrument, we unobtrusively recorded the content and sequence of EHR data reviewed by clinicians.

Results We performed 32 ECR observations among 24 clinicians. The median (interquartile range [IQR]) chart review duration was 9.2 (7.3–14.7) minutes, with the largest shares of time spent reviewing clinical notes (44.4%), laboratories (13.3%), and imaging studies (11.7%), and on searching/scrolling (9.4%). Historical vital sign and intake/output data were never viewed in 31% and 59% of observations, respectively. Clinical notes and diagnostic reports were browsed ≥10 years back in time in 60% of ECR sessions. Clinicians viewed a median of 7 clinical notes, 2.5 imaging studies, and 1.5 diagnostic studies, typically referencing a select few subtypes. Clinicians browsed a median (IQR) of 26.5 (22.5–37.25) data screens to complete their ECR, demonstrating high variability in navigation patterns and frequent back-and-forth switching between screens. Nonetheless, 47% of ECRs began with review of clinical notes, which were also the most common navigation destination.

Conclusion Electronic chart review among MICU clinicians centers on the viewing of clinical notes. Convoluted workflows and prolonged searching activities indicate room for system improvement. Based on the study findings, we provide specific design recommendations to enhance the usability of critical care information systems.

Keywords: information seeking behavior, electronic health records, clinical information, intensive care units, EHR interface, data display

Background and Significance

The modern electronic health record (EHR) allows quick access to vast troves of clinical information, which is simultaneously its biggest promise and greatest pitfall. Recognizing a deficiency between existing software design and clinical utility, the seminal Computational Technology for Effective Healthcare report emphasized the importance of user-centered design to support the varied cognitive tasks of clinicians, including review of the electronic medical record. 1 A thorough understanding of the digital information needs and EHR workflow patterns among clinicians is paramount for user-centered design of clinical applications.

One common EHR task is general electronic chart review, in which clinicians browse the record of new patients to develop familiarity with their medical history and inform subsequent decision making. Hilligoss and Zheng and Varpio et al helped define the cognitive processes underpinning this “chart biopsy” 2 and the role of the EHR in helping or hindering this process. 3 Diving further into this concept, Wright et al 4 conducted a cognitive assessment of electronic information use and access among medical intensive care unit (ICU) clinicians, finding that information needs during a “new patient assessment” are fundamentally different from those of “reviewing the status of a known patient,” and therefore require different information displays. Accordingly, we are designing a longitudinal medical record visualization tool for ICU clinicians to facilitate the admission chart review process and reduce information overload. 5 The goal of this tool is to distill the EHR into a timeline of key data, which requires a detailed understanding of relative informational value and information access patterns: which data to show, when, and how? While existing research has evaluated some aspects of EHR information use and workflow in critical care, 6 7 8 9 10 11 we could not identify detailed descriptions of these concepts, particularly for unstructured/semistructured data like clinical notes. After conducting a preliminary survey study on the topic, 12 we identified the need for direct observation of clinician–EHR behavior to overcome the limitations of recall bias and to analyze specific workflow sequences, prompting the present study.

Objective

We aimed to quantify EHR information use and workflow patterns among medical ICU clinicians evaluating new patients to determine the optimal selection and display of core data for a revised EHR interface. Specifically, we sought to answer the following questions:

1. What are the most commonly viewed data during electronic chart review?

2. How far back do clinicians browse historical EHR data?

3. Which data categories tend to be viewed together or sequentially?

Methods

Design

We conducted a prospective, direct-observation study of ICU clinicians performing electronic chart review as part of their routine care for newly admitted medical ICU patients, attempting to adhere to Zheng et al's “Suggested Time and Motion Procedures” guidelines. 13

Setting and Electronic Environment

The study was performed within a 24-bed medical ICU and a 21-bed mixed medical/surgical ICU at a tertiary academic medical center (Mayo Clinic Rochester) between December 2016 and March 2017. Each ICU is staffed by one to two in-house attendings 24 hours per day. Rather than a single commercial solution, Mayo currently hosts a previously described suite of externally and internally developed applications for viewing clinical data. 14

Participants

All critical care attending physicians, fellows, and advanced practice providers (APPs, i.e., nurse practitioners and physician assistants) rotating through the target ICUs during the study interval were invited to participate. Patient/chart inclusion criteria were all new ICU admissions for primary medical (nonsurgical) indications for whom the ICU team was assuming primary or comanagement responsibility. Patient exclusion criteria were age younger than 18 years, pregnancy, current incarceration, readmission to the same ICU of a patient already known to the ICU team, a primarily postoperative indication for ICU admission, and declined general research authorization at Mayo Clinic Rochester.

Recruitment and Consent

Clinicians were recruited for participation by email and then approached by study staff to obtain verbal consent while in the ICU. We received a waiver of informed consent for patient participants as their inclusion comprised only retrospective review of their record following study completion. This study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects and approved by the Mayo Clinic Institutional Review Board.

Study Procedure

During observation periods, study personnel remained in close physical proximity to the ICU and received a verbal notice or text-page from participating ICU clinicians when new admissions were anticipated. Before the clinician had opened the electronic medical record, study staff would immediately meet the clinician at their existing computer workstation and silently record their on-screen chart review actions while remaining out of direct line-of-sight. Observation sessions were conducted during both days and nights to ensure a representative sample. We did not conduct observation sessions over the weekend, as ICU staffing levels do not differ from the weekdays at our institution.

Clinicians were monitored for the time spent reviewing individual categories of clinical data within the EHR and their workflow accessing that data (navigating from one section to another). It was anticipated that the chart review process would be frequently interrupted by other clinical activities, during which time the study personnel silently recorded the type and duration of interruption and paused/resumed the chart review timer accordingly. This also permitted clinicians to switch clinical workstations while continuing to capture the full extent of clinical data review. A maximum of three chart review observations were permitted per clinician.

Study Instrument

Finding existing software solutions lacking for our study needs, we developed a simple tablet-based HTML/JavaScript data collection instrument that captured over 120 distinct elements. See Fig. 1 in Supplementary Appendix 1 and Supplementary Appendix 2 for a full description of the instrument (available in the online version). Although application-specific data were captured, the data collection instrument was designed primarily to capture generic clinical information concepts that are EHR independent, to enhance generalizability. Among the clinical data captured were clinical notes, imaging/radiology studies, vital sign data (heart/pulse rate, blood pressure, body temperature, respiration rate, oxygen saturation, and ventilator-related data—subsequently referred to as “vitals”), laboratory studies (diagnostic testing of blood-based samples—subsequently referred to as “labs”), nonimaging/nonlaboratory diagnostic studies, and medications. Viewing of multimodal dashboards and searching/scrolling were recorded as individual activities. When possible, the most-historical datum reviewed within a given category was tracked. As a clinician participant navigated the EHR, the study personnel would watch and manually record their chart review using the data collection instrument. Interruptions were permitted and recorded but did not count toward the total chart review time if they caused deviation of gaze away from the computer screen. Handwritten personal notes taken during the review process were recorded as a chart review activity.

In addition to following a highly detailed protocol (see Supplementary Appendix 1 , available in the online version), the two study staff performing the observations (M.E.N. and R.S.) did three qualitative coobservations to ensure consistency of observation session methods and data collection. Given the objective nature of the data collection, no formal interrater testing was deemed necessary.
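The pause/resume timing described above can be illustrated with a minimal Python sketch. This is not the study's tablet-based HTML/JavaScript instrument; the class name `ChartReviewTimer`, its methods, and the category labels are all hypothetical, and timestamps are passed in explicitly (in seconds) so the logic is easy to follow and test.

```python
from collections import defaultdict


class ChartReviewTimer:
    """Hypothetical sketch: accumulate active review time per data category,
    pausing the clock while an interruption is in progress."""

    def __init__(self):
        self.seconds_by_category = defaultdict(float)
        self.interruptions = []      # list of (kind, duration_seconds)
        self._category = None        # data category currently on screen
        self._since = None           # start time of the running segment
        self._paused_at = None
        self._pause_kind = None

    def _close_segment(self, now):
        # Credit the elapsed time to the category being viewed, if the clock is running.
        if self._category is not None and self._since is not None:
            self.seconds_by_category[self._category] += now - self._since
        self._since = None

    def view(self, category, now):
        """The clinician navigates to a data category at time `now`."""
        self._close_segment(now)
        self._category = category
        self._since = now

    def interrupt(self, kind, now):
        """An interruption starts: stop the clock and remember when and why."""
        self._close_segment(now)
        self._paused_at = now
        self._pause_kind = kind

    def resume(self, now):
        """The interruption ends: log it and restart the clock on the same category."""
        self.interruptions.append((self._pause_kind, now - self._paused_at))
        self._paused_at = None
        self._since = now

    def stop(self, now):
        """End of the chart review session."""
        self._close_segment(now)
        self._category = None


timer = ChartReviewTimer()
timer.view("notes", 0)
timer.interrupt("communication", 60)   # 60 s of notes, then a 30 s conversation
timer.resume(90)
timer.view("labs", 120)                # 30 more seconds of notes
timer.stop(150)                        # 30 s of labs
print(dict(timer.seconds_by_category), timer.interruptions)
```

In this toy session the 30-second interruption is logged but excluded from the per-category totals, mirroring the rule that interruptions did not count toward chart review time.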

Data Analysis

Aligned with our aforementioned objectives, the primary data analysis goals were to define:

1. Total and relative percentage of time spent performing electronic chart review by data category.

2. Number and relative percentage of discrete documents/reports viewed.

3. Most historical piece of information reviewed per data category.

4. Chart review workflow and transition analysis showing the relative probability of viewing one data category following another.

The results are reported with descriptive statistics. As there were no paired observations of different providers reviewing the same medical record, between-group analyses were not performed. Analyses were performed in JMP Statistical Software for Windows version 12.2 (SAS Institute Inc, Cary, North Carolina, United States) and Microsoft Excel 2010 for Windows version 14.0 (Microsoft Corporation, Redmond, Washington, United States).
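As an illustration of the descriptive statistics used throughout, the following Python sketch computes a median and interquartile range. It is not the JMP or Excel procedure used in the study: the quartile interpolation rule (`method="inclusive"`) is an assumption, since the source does not state which rule was applied, and the durations are made-up values.

```python
from statistics import median, quantiles


def median_iqr(values):
    """Return (median, (Q1, Q3)) for a sequence of at least two numbers.

    The "inclusive" interpolation rule is an assumption; other rules can give
    slightly different quartiles for small samples.
    """
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), (q1, q3)


# Hypothetical chart review durations in minutes (not study data).
durations = [5.0, 7.5, 9.0, 12.0, 20.0]
print(median_iqr(durations))  # (9.0, (7.5, 12.0))
```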

Results

Electronic Chart Review Characteristics

Between 21 December 2016 and 8 March 2017, there were 32 electronic chart review observations collected among 24 unique ICU clinicians admitting new patients to their care, capturing a total of 6.2 hours of active chart review activity. Table 1 provides a summary of observation sessions and clinician characteristics, showing the recruitment of clinicians with varying experience levels and patients of varying backgrounds. The overall median (interquartile range [IQR]) duration of electronic chart review was 9.2 (7.3–14.7) minutes, with a range of 2.6 to 29.8 minutes ( Fig. 2 in Supplementary Appendix 1 , available in the online version). Fellows, APPs, and attendings had median (IQR) chart review durations of 9.4 (6.3–15.1), 9.9 (8.7–16.0), and 8.3 (7.0–13.1) minutes, respectively, although no direct comparison can be made as they were reviewing different records.

Table 1. Observation and participant characteristics.

| Chart review observations | N (%) |
|---|---|
| Total observations | 32 |
| Unique patient charts reviewed | 31 |
| Unique clinicians observed | 24 |
| ICU team role | |
| Attending physicians (10 unique) | 13 (41%) |
| Fellows (7 unique) | 9 (28%) |
| APP (7 unique) | 10 (31%) |
| Shift | |
| Day | 23 (72%) |
| Night | 9 (28%) |
| Preobservation ICU team census percent (of maximum) a | Mean (SD) |
| Total | 68 (±16)% |
| Admitting patient syndrome/diagnosis b | |
| Respiratory failure | 15 (48%) |
| Renal failure | 7 (23%) |
| Pneumonia | 5 (16%) |
| Septic shock | 4 (13%) |
| Altered mental status | 4 (13%) |
| Hypotension NOS | 3 (10%) |
| Heart failure | 3 (10%) |
| GI bleeding | 3 (10%) |
| Liver failure | 2 (6%) |
| Hemorrhagic shock | 2 (6%) |
| Arrhythmia | 2 (6%) |
| Other | 13 (42%) |
| APACHE IV score | Median (IQR) |
| Total | 69 (57–79) |
| Route of patient admission | |
| Internal emergency department | 11 (35%) |
| General hospital ward | 7 (23%) |
| External emergency department transfer | 6 (19%) |
| Outpatient clinic/procedure | 4 (13%) |
| Outside hospital transfer | 3 (10%) |
| Clinician demographics (N = 24) | N (%) |
| Years in clinical practice | |
| Minimum | 1 |
| Median (IQR) | 7.5 (4–13.5) |
| Maximum | 34 |
| Usual ICU practice (N = 19 c) | |
| Medical | 16 (84%) |
| Mixed medical/surgical | 3 (16%) |
| Primary specialty | |
| Pulmonary and critical care | 14 (58%) |
| Critical care—internal medicine | 1 (4%) |
| Critical care—anesthesiology | 2 (8%) |
| APP training (critical care) | 7 (29%) |
| Familiarity with existing EHR software (N = 19 c) | |
| Beginner | 2 (11%) |
| Intermediate | 7 (37%) |
| Advanced | 10 (53%) |

Abbreviations: APACHE, Acute Physiology and Chronic Health Evaluation; APP, advanced practice provider; NOS, not otherwise specified; SD, standard deviation.

a Provides an estimate of the workload burden at the time of the chart review.

b More than one diagnosis permitted per unique patient (N = 31); percentages will not sum to 100%.

c Not all clinician participants completed the demographic assessment.

Amount and Type of Clinical Data Reviewed

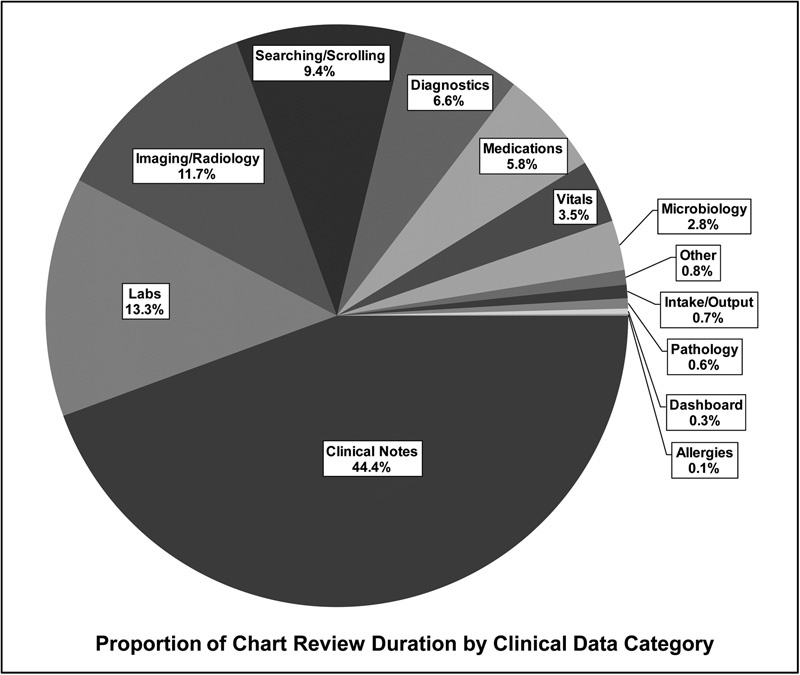

Fig. 1 shows the proportion of time spent reviewing each clinical data category, with clinical notes representing by far the most time-consuming section with 44% of the total duration. Interestingly, 9.4% of the time was spent searching or scrolling through the record looking for clinical data.

Fig. 1.

Results aggregated from 32 chart review observation sessions. “Vitals” = heart/pulse rate, blood pressure, body temperature, respiration rate, oxygen saturation, and ventilator-related data. “Labs” = diagnostic testing of blood-based samples. “Searching/scrolling” = time spent scrolling through lists of note/report metadata before actually selecting a document to read. “Diagnostics” = nonlaboratory, nonimaging/radiology diagnostic study that generates a text report. “Medications” = active outpatient and inpatient medication lists, and inpatient medical administration record (MAR). “Dashboard” = viewing a multimodal data window. “Other” = clinical data not otherwise categorized, such as flowsheet records, appointment schedule, administrative demographic data, advanced directives, and active inpatient orders.
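The time-share calculation behind a figure like this can be sketched in Python as follows. The sketch assumes that seconds are pooled across all sessions before percentages are taken; the source does not say whether pooling or per-session averaging was used, and the function name `time_share` and the sample values are hypothetical.

```python
from collections import Counter


def time_share(sessions):
    """Pool seconds per data category across sessions and return the
    percentage of total review time per category, largest first.

    Pooling across sessions is an assumption about how aggregation was done.
    """
    pooled = Counter()
    for seconds_by_category in sessions:
        pooled.update(seconds_by_category)   # adds seconds per category
    total = sum(pooled.values())
    if total == 0:
        raise ValueError("no review time recorded")
    return {cat: round(100 * secs / total, 1) for cat, secs in pooled.most_common()}


# Two made-up sessions (seconds per category); not study data.
sessions = [
    {"notes": 300, "labs": 100},
    {"notes": 100, "imaging": 100},
]
print(time_share(sessions))  # {'notes': 66.7, 'labs': 16.7, 'imaging': 16.7}
```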

For textual report data, we quantified and categorized the type of information viewed ( Fig. 3 in Supplementary Appendix 1 , available in the online version). Clinicians viewed a median (IQR) of 7 (4.25–10.75) clinical notes, 2.5 (1–4) imaging/radiographic studies, and 1.5 (0.25–3.75) nonimaging diagnostic studies. Table 2 specifies the number and relative percentage of report types viewed, with the caveat that one extreme outlier chart review (performed during an overnight shift by an attending for a patient with pyelonephritis and pancytopenia, with 78 clinical notes, 10 imaging, and 8 diagnostic reports) was purposefully excluded as it would unduly weight aggregate percentages. Among clinical notes, the most commonly viewed subtypes were outpatient specialist notes, hospital discharge summaries, hospital progress notes (primary inpatient service), and “miscellaneous”-type notes, together representing 51% of all the notes viewed. Chest X-rays and chest CTs alone accounted for 63% of all imaging studies viewed, and electrocardiograms and echocardiograms represented 72% of all nonimaging diagnostic studies viewed. Among the 32 chart review sessions, clinicians never reviewed historical vitals data in 10 sessions (31%), never reviewed intake/output data in 19 sessions (59%), and never reviewed the medication administration record in 23 sessions (72%).

Table 2. Aggregate total and relative percent of unique notes/reports viewed among all chart review observations ( N = 31 a ) .

| Data category | Note/Report type | Viewed (N) | Category (%) | Total (%) |
|---|---|---|---|---|
| Clinical notes | Outpatient specialty notes | 50 | 19.2 | 12.3 |
| | Hospital discharge summary | 32 | 12.3 | 7.9 |
| | Progress note (primary inpatient) | 28 | 10.7 | 6.9 |
| | Miscellaneous note | 22 | 8.4 | 5.4 |
| | Emergency department | 17 | 6.5 | 4.2 |
| | Hospital admission | 13 | 5.0 | 3.2 |
| | Inpatient specialty consult | 12 | 4.6 | 3.0 |
| | Operative report | 10 | 3.8 | 2.5 |
| | Inpatient specialty progress | 9 | 3.4 | 2.2 |
| | Direct admission notification | 9 | 3.4 | 2.2 |
| | Minor procedure report | 8 | 3.1 | 2.0 |
| | Outside records (electronic) | 7 | 2.7 | 1.7 |
| | Primary care | 7 | 2.7 | 1.7 |
| | Clinical problem list | 5 | 1.9 | 1.2 |
| | “Other” inpatient notes | 5 | 1.9 | 1.2 |
| | Past medical history list | 5 | 1.9 | 1.2 |
| | Rapid response team note | 5 | 1.9 | 1.2 |
| | All other clinical notes | 14 | 6.5 | 4.2 |
| | Subtotal | 258 | – | 65.6 |
| Imaging | Chest X-ray | 35 | 43.8 | 8.6 |
| | Chest CT | 15 | 18.8 | 3.7 |
| | Abdominal/Pelvic CT | 7 | 8.8 | 1.7 |
| | Head CT | 5 | 6.3 | 1.2 |
| | All other imaging | 13 | 22.5 | 4.4 |
| | Subtotal | 75 | – | 19.1 |
| Diagnostics | Echocardiography | 24 | 37.5 | 5.9 |
| | Electrocardiography | 22 | 34.4 | 5.4 |
| | Pulmonary function testing | 5 | 7.8 | 1.2 |
| | All other diagnostics | 9 | 20.3 | 3.2 |
| | Subtotal | 60 | – | 15.3 |
| Grand total | | 393 | – | 100 |

a One extreme outlier chart review observation was excluded to avoid biasing results (see text).

Review of Historical Data

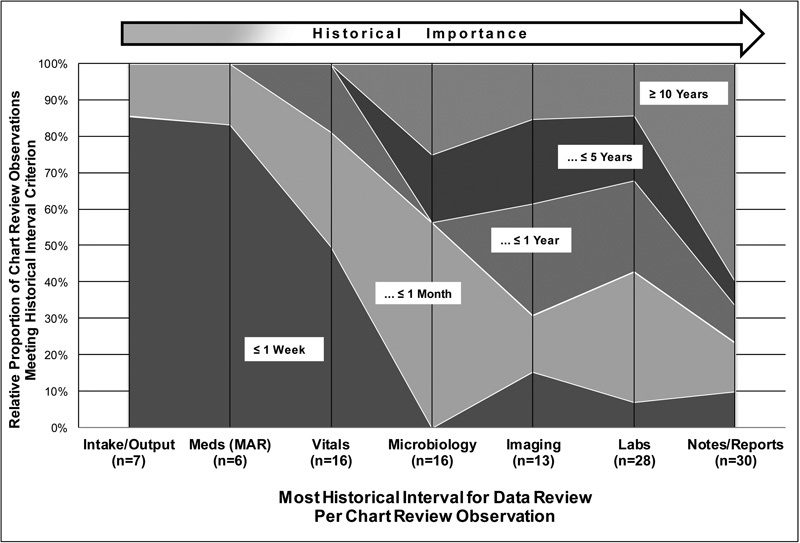

As clinicians browsed through the electronic medical record, it was possible to capture the earliest date of data reviewed or searched, recorded as a time interval from the date of admission ( Fig. 2 ). Vitals data, intake/output data, and the medication administration record were never browsed more than 1 year back in time, whereas clinical notes and diagnostic reports were browsed ≥10 years back in time in 60% of chart review sessions. As mentioned earlier, certain data categories were frequently omitted during the chart review process, which limited the number of historical interval observations captured for those elements.

Fig. 2.

The most historical interval over which data were searched or viewed (in relation to the admission date) was recorded and categorized in intervals from ≤ 1 week to ≥ 10 years. Area plots define the relative proportion of chart review observations within each historical time interval (overlaid labels), grouped by data category and sorted in order of increasing likelihood to review older, more historical data. “ n ” per category = number of observations for which the data category was captured for historical analysis. “MAR” = medication administration record. “Notes/Reports” = clinical notes and diagnostic reports.
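The interval categorization described in this caption could be sketched in Python as below. Only the two end bins (≤ 1 week and ≥ 10 years) come from the caption; the intermediate cut points, the bin labels, and the function names are assumptions made for illustration.

```python
from collections import Counter
from datetime import date

# Upper bounds in days. The intermediate cut points are assumptions; only the
# first and last bins are named in the figure caption.
BINS = [(7, "<=1 week"), (31, "<=1 month"), (365, "<=1 year"), (5 * 365, "<=5 years")]
TEN_YEARS = 10 * 365


def lookback_bin(admission, earliest_viewed):
    """Categorize how far before admission the oldest viewed datum lies."""
    days = (admission - earliest_viewed).days
    if days < 0:
        raise ValueError("earliest_viewed is after the admission date")
    if days >= TEN_YEARS:
        return ">=10 years"
    for upper, label in BINS:
        if days <= upper:
            return label
    return "5 to <10 years"


def bin_proportions(pairs):
    """Percentage of observations per bin, given (admission, earliest_viewed) pairs."""
    counts = Counter(lookback_bin(a, e) for a, e in pairs)
    total = sum(counts.values())
    return {label: 100 * n / total for label, n in counts.items()}


admission = date(2017, 1, 15)  # made-up admission date
print(lookback_bin(admission, date(2017, 1, 10)))  # <=1 week
print(lookback_bin(admission, date(2005, 1, 1)))   # >=10 years
```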

Workflow Analysis

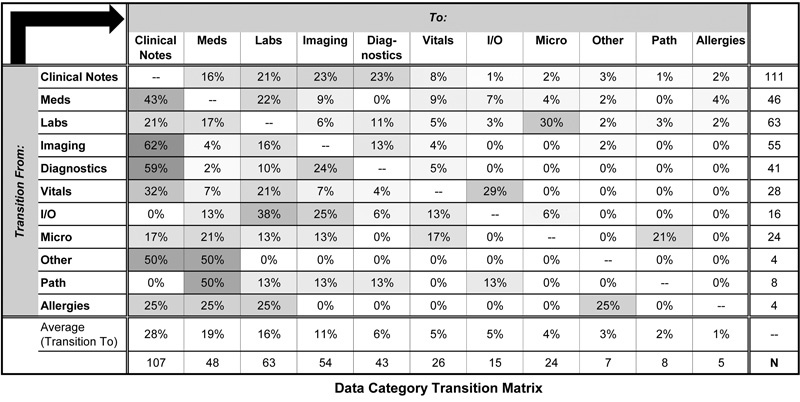

We tracked the electronic workflow, including transitions between applications and data categories/screens as well as interruptions, and found highly variable (and frequently interrupted) patterns among clinicians navigating the data. See Fig. 4 in Supplementary Appendix 1 for example workflow diagrams (available in the online version). Clinicians switched between software applications (for example, reading a clinical note in the documentation viewer and then launching the radiology imaging viewer) a median (IQR) of 4 (2–6) times. We also quantified the number of transitions from viewing one data element or category to the next: clinicians viewed a median (IQR) of 26.5 (22.5–37.25) data screens to complete their chart review. Forty-seven percent of chart reviews began with clinical notes, 22% with laboratories, 13% with imaging, and 13% with vitals data, followed by medications and intake/output data at 3% each. Fig. 3 provides a matrix of the probability of transitioning from one data category to the next. From clinical notes, clinicians were most likely to browse imaging, nonimaging diagnostics, or laboratories. As the heatmap shows, when navigating from most other categories, clinicians were usually returning to view clinical notes, although there were notable relationships for laboratories → microbiology, vitals → intake/output, and intake/output → laboratories.

Fig. 3.

Heatmap figure showing the probability of transitioning from viewing one data category (rows) to the next (columns), as a continuous gradation from the lowest value (0%, white) to the highest (62%, gray). Cell values represent percentages within each row. “Meds” = active outpatient and inpatient medication lists, and inpatient medical administration record (MAR). “Diagnostics” = nonlaboratory, nonimaging diagnostic study that generates a text report. “I/O” = intake/output data. “Micro” = microbiology data. “Path” = pathology data. “Other” = clinical data not otherwise categorized, such as flowsheet records, appointment schedule, administrative demographic data, advanced directives, and active inpatient orders. “Allergies” = allergy and immunization list.
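A row-normalized transition matrix of the kind shown in this heatmap can be derived from ordered navigation sequences, as in the following Python sketch. It is an illustration under assumptions, not the authors' analysis code: the function names and sample sequences are hypothetical, and every consecutive pair of screens is counted as one transition (the source does not state how repeated views of the same category were handled).

```python
from collections import Counter, defaultdict


def transition_matrix(sessions):
    """Return {source: {destination: percent}} with each row summing to 100.

    `sessions` is a list of navigation sequences, each an ordered list of
    data-category names as viewed during one chart review.
    """
    counts = defaultdict(Counter)
    for sequence in sessions:
        for src, dst in zip(sequence, sequence[1:]):
            counts[src][dst] += 1
    return {
        src: {dst: 100 * n / sum(row.values()) for dst, n in row.items()}
        for src, row in counts.items()
    }


def starting_share(sessions):
    """Percentage of sessions that begin with each data category."""
    firsts = Counter(seq[0] for seq in sessions if seq)
    total = sum(firsts.values())
    return {cat: 100 * n / total for cat, n in firsts.items()}


# Two made-up navigation sequences; not study data.
sessions = [
    ["notes", "imaging", "notes", "labs"],
    ["labs", "micro", "notes"],
]
print(transition_matrix(sessions)["notes"])  # {'imaging': 50.0, 'labs': 50.0}
print(starting_share(sessions))              # {'notes': 50.0, 'labs': 50.0}
```

Normalizing within each row matches the caption's statement that cell values represent percentages within each row.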

Clinicians were interrupted from their primary data review a median (IQR) of 12 (6.5–15) times per chart review, with one user experiencing 50 interruptions. Because the transcription of electronic data into personal handwritten notes was categorized as an interruption, it was by far the most prevalent interruption event, representing 74% of the total, with a median (IQR) of 9 (2.75–12.25) pauses to transcribe notes. In total, 27 of the 32 chart review sessions included the use of handwritten notes, with clinicians spending a median (IQR) of 1.4 (0.7–1.8) minutes in transcription per chart review session. Excluding handwritten notes, the median (IQR) number of interruptions per chart review was 2 (1–4), with 59% being “communication” with other clinicians/nurses, 20% being documentation activities, 9% being order entry, and 7% requiring the clinician to physically leave the workstation, typically to evaluate one of their patients at the bedside.

Discussion

Summary of Results and Trends

This study provides a highly detailed and unique account of information use trends and EHR workflow among medical ICU clinicians performing electronic chart review for new patients. In an age of ever-increasing electronic data collection, a thorough understanding of this topic is necessary to combat information overload and optimize system design, and it addresses calls to define data utilization within specific clinical use cases (such as that proposed by Apker et al for handoff evaluation 15 ). The present study identifies several important trends in electronic chart review, the most important being the primacy of clinical notes. Measured both by time spent (44%) and by number of individual documents reviewed (median of 7), clinical notes were by far the largest component of the data reviewed. Nearly half of all chart review sessions began by viewing notes, which were also the data category to which clinicians most often returned after viewing other data. Interestingly, when analyzing the exact subtypes of clinical notes and imaging/diagnostic reports viewed, we found that the majority of data review involved relatively few distinct subtypes (see section “Results”; Table 2 ). These report subtypes likely represent highly important components of medical ICU decision making. Another noteworthy finding was that about one-third of chart review sessions never referenced (or even checked for the existence of) historical vital sign data. While these data are certainly important in some scenarios, for a good number of our observed admissions the relevant vital sign data were presumably obtained only at the bedside.

The workflow and interruption analysis was perhaps most notable for the finding that clinicians spent nearly 10% of their time searching or scrolling through screens of metadata, representing the fourth most common workflow activity by time. While it is possible that useful information can be gleaned during this activity, it may suggest ineffective data presentation. In a similar vein, the median number of screen transitions was over 26 per chart review. While viewing multiple data elements is necessary, screen transitions often involved leaving the existing data screen only to return to that same screen afterward. These workflow findings may represent opportunities to rework clinical data presentation and optimize efficiency.

Context of Other Studies

We are aware of three other clinician studies that quantified the workflow patterns of EHR information access, 16 17 18 although none evaluated ICU clinicians, and two were done using hypothetical case scenarios. 16 17 Zheng et al's study of sequential pattern analysis of ambulatory EHR workflow 18 inspired our own analysis within the ICU setting and the studies are thus complementary. Kendall et al's 16 and Reichert et al's 17 studies of EHR review for patient handoff and outpatient transfer of care, respectively, both identified the importance of clinical notes, and corroborated our findings about highly variable EHR navigation patterns. As mentioned earlier, we first conducted a survey study about medical ICU chart review habits. 12 Comparing those findings to our observational results, users accurately estimated spending the most time reviewing clinical notes, although they estimated spending approximately 5 minutes longer performing chart review than we actually observed. Interestingly, they did not describe “miscellaneous”-type clinical notes as commonly useful, whereas we identified very frequent viewing of these notes in our actual observations.

This study builds on previous work done at our institution on data utilization for medical decision making in the ICU. 6 19 Pickering et al conducted a post-ICU admission survey among clinicians and found that relatively few (mostly structured/numeric) data concepts were “relevant for the diagnosis and treatment” of the new ICU patient. 6 The current observational study found that clinicians spent significant portions of time reviewing unstructured clinical note data, despite Pickering et al's study suggesting these are probably low-yield information sources. This could suggest that data relevance (or information gain 8 ) and data use/review may actually be discrepant concepts, and that review of commonly low-yield sources like clinical notes remains important for clinicians to synthesize a clinical narrative during a new patient admission. Even if clinicians rank information sources as unimportant, the fact that they still seem to review these low-yield data means that designers of critical care information systems must be very judicious when attempting to filter or suppress information.

Usability and System Design Implications

In 2015, Zahabi et al published a comprehensive review and guideline formation about usability considerations in EHR design. 20 This important work provided multiple evidence-based recommendations including designing around a “natural” workflow, reducing the amount of information in EHR displays, ranking data in terms of importance, and considering codependencies among data interfaces to reduce the steps to complete an action. The present study is able to directly inform many of these principles, and we have summarized our findings and recommendations to enhance user-centered design in Table 3 .

Table 3. Design recommendations to enhance usability for longitudinal critical care information display systems a .

| Topic | Finding | Recommendation |
|---|---|---|
| Core Design | Clinical notes were the most frequently viewed and navigated-to category | Information display should center on effective clinical notes presentation and allow on-screen persistence |
| | Clinicians frequently switched back-and-forth between data screens to chronologically correlate data | Systems should allow efficient viewing of multiple data elements on the same screen and minimize use of single-category tabs/windows |
| | Clinical notes, imaging reports, diagnostic studies, medications, and labs were the most extensively reviewed and co-navigated categories | Give visual prominence (or co-display) for clinical notes, imaging reports, diagnostic studies, medications, and labs |
| | Clinicians took highly variable pathways to complete electronic chart review | Systems should accommodate user-defined customization of data display |
| Data Presentation (see Table 2) | Clinicians spent nearly 10% of the time searching/scrolling through lists of metadata | Systems should include robust visual prioritization schemes and search/sort support to expedite information seeking |
| | Over 50% of the clinical notes viewed were one of five specific note subtypes | Give visual priority to the display of these 5 Clinical Note subtypes (see Table 2) |
| | Over 75% of the imaging studies viewed were one of: chest X-ray, chest CT, abdominal/pelvic CT, or head CT | Give visual priority to the display of chest X-rays, chest CTs, abdominal/pelvic CTs, and head CTs |
| | 80% of the diagnostic studies viewed were one of: echocardiograms, ECGs, and PFTs | Give visual priority to the display of echocardiograms, ECGs, and PFTs |
| Data access (see Fig. 2) | Clinicians frequently viewed clinical notes/diagnostic studies/imaging reports beyond 10 y in time | Systems should accommodate efficient query and display of historical clinical notes/diagnostic studies/imaging reports with a minimum availability of 10 y |
| | Labs and microbiology data were rarely viewed beyond 5 y in time | Filter labs and microbiology data to a default of 5 y |
| | Vital sign data were never viewed beyond 1 y in time (rarely more than 1 mo) | Filter historical vitals data to a default of 1 mo b |
| | Intake/Output data and the MAR were never viewed beyond 1 mo in time (typically 1 wk) | Filter intake/output data and the MAR to a default of 1 wk b |
| Navigation (see Fig. 3) | Clinicians often leave one data screen and need to scroll the subsequent data screen to arrive at the corresponding date as the previously viewed data | When navigating between data screens, provide a mechanism to “jump-to” the same date viewed on the previous screen |
| | After reading clinical notes, clinicians usually viewed imaging reports/diagnostic studies/labs | When viewing clinical notes, the system should provide multipane viewing of imaging reports/diagnostic studies/labs or visual prominence of links to these elements |
| | Vital sign viewing was commonly followed by intake/output data review | Vital sign data should be codisplayed with intake/output data |

Abbreviations: CT, computed tomography; ECG, electrocardiography; MAR, medication administration record; PFT, pulmonary function testing.

Findings and design recommendations for longitudinal EHR data presentation for the specific use-case of historical chart review in the medical ICU.

b Findings based on relatively few total observations (see Fig. 2); recommendations should be considered preliminary.
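The data access and navigation recommendations in the table above could be prototyped roughly as follows. This is a minimal sketch, not part of the study: the category names, the `default_view` and `jump_to_index` helpers, and the approximation of 1 y as 365 d and 1 mo as 30 d are all illustrative assumptions. Categories for which the table recommends at least 10 y of availability are left unfiltered here.

```python
from bisect import bisect_left
from datetime import date, timedelta

# Approximate durations (assumption: 1 y = 365 d, 1 mo = 30 d).
YEAR, MONTH, WEEK = timedelta(days=365), timedelta(days=30), timedelta(days=7)

# Default lookback per data category, following the table's recommendations.
# None means "no default filter" (notes, diagnostic studies, and imaging
# should remain available for at least 10 y). Keys are hypothetical names.
DEFAULT_LOOKBACK = {
    "clinical_notes": None,
    "diagnostic_studies": None,
    "imaging_reports": None,
    "labs": 5 * YEAR,
    "microbiology": 5 * YEAR,
    "vitals": MONTH,         # preliminary recommendation (footnote b)
    "intake_output": WEEK,   # preliminary recommendation (footnote b)
    "mar": WEEK,             # preliminary recommendation (footnote b)
}


def default_view(records, category, today):
    """Return the (date, label) records shown by default for a category."""
    window = DEFAULT_LOOKBACK[category]
    if window is None:
        return list(records)
    cutoff = today - window
    return [rec for rec in records if rec[0] >= cutoff]


def jump_to_index(sorted_dates, target):
    """Return the index of the entry closest in time to `target`.

    `sorted_dates` must be in ascending order. This models the "jump-to"
    mechanism: a newly opened screen scrolls to the date last viewed.
    """
    if not sorted_dates:
        raise ValueError("no entries to jump to")
    i = bisect_left(sorted_dates, target)
    if i == 0:
        return 0
    if i == len(sorted_dates):
        return len(sorted_dates) - 1
    before, after = sorted_dates[i - 1], sorted_dates[i]
    # Ties go to the earlier entry.
    return i if (after - target) < (target - before) else i - 1
```

For example, after a clinician reads a note dated 20 May 2016, calling `jump_to_index` on the sorted imaging dates would position the imaging screen at the study nearest that date. A user control to widen any default window would still be needed, since the defaults reflect typical rather than universal use.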

Strengths and Limitations

We believe this study's greatest strength was its in vivo observational method, which allowed clinicians to perform their usual chart review task in their natural environment rather than in a simulation laboratory. Although eye tracking and screen capture methods allow precise recording of clinician–EHR interactions [4,18,21,22], manual observational methods are a common choice in critical care settings [9,11,23,24], likely because they offer greater flexibility and intrude less on native workflows while still providing adequate fidelity. While we acknowledge the possibility of a Hawthorne effect, the observation protocol made every attempt to minimize this influence. Another strength of the study was the observation of clinicians largely experienced with the existing EHR, whose workflow patterns should be well adapted to the system and therefore valid for interpretation (unlike those of novice users).

Interrater variability between the two observers (M.E.N. and R.S.) is possible and was not systematically studied, although the objective nature of the captured data should minimize this concern. We acknowledge the possibility of bias between observed and nonobserved chart reviews, as we may have undersampled high-acuity ICU admissions for which the ICU team did not notify us. The labor-intensive study design meant only 32 observations were ultimately performed, which raises the possibility of sampling bias. We also acknowledge some homogeneity of admitting diagnoses (respiratory failure) and a paucity of some common medical ICU diagnoses (septic shock). Another limitation is that our study occurred at a single academic center with its own unique EHR suite. It is possible that the information workflows we observed arose not from clinical preference but from our EHR's design. Nonetheless, the frequent switching between different data screens may suggest that clinicians were, in fact, navigating according to their fundamental information needs. Finally, we acknowledge that EHR workflow patterns can vary considerably between clinicians, and that our findings and recommendations about common pathways/trends may not adequately satisfy some users' needs.

Conclusion

Among medical ICU clinicians, the electronic chart review process largely centers around the review of clinical notes. The convoluted workflow patterns and prolonged information-seeking activities we identified indicate an opportunity to improve the design of current and future systems using our findings. We provide several specific design recommendations to enhance usability for longitudinal critical care information display systems.

Clinical Relevance Statement

This study provides detailed insight into the information use and workflow patterns among medical ICU clinicians browsing the EHR, which contains ever-increasing amounts of historical information. This analysis is foundational to inform the design of existing and future critical care information systems that may help clinicians achieve optimal accuracy and efficiency in their daily work, with the ultimate goal of improving patient safety and care delivery.

Multiple Choice Question

When performing electronic chart review for new medical ICU patients, clinicians spend the most time viewing:

A. Vital sign data

B. Laboratory data

C. Clinical notes

D. Imaging/radiology data

Correct Answer: The correct answer is C, clinical notes. Based on our study, clinicians spent 44% of their time reviewing clinical notes, 13% reviewing laboratory tests, 12% reviewing imaging/radiology tests, and <4% reviewing vital sign data within the EHR. Within clinical notes, the most frequently viewed subtypes were outpatient specialist notes, hospital discharge summaries, hospital progress notes (primary inpatient service), and “miscellaneous”-type notes. Clinical notes were also the most likely navigation destination after viewing other types of data, indicating their central importance to the electronic chart review process among medical ICU clinicians.

Protection of Human and Animal Subjects

This study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects and approved by the Mayo Clinic Institutional Review Board.

Conflict of Interest

None.

Funding

None.

Supplementary Material

Supplementary Appendix 1 and Figures

Supplementary Appendix 2

References

- 1. Stead W W, Lin H S. Computational Technology for Effective Health Care: Immediate Steps and Strategic Directions. Washington, DC: National Academies Press; 2009.

- 2. Hilligoss B, Zheng K. Chart biopsy: an emerging medical practice enabled by electronic health records and its impacts on emergency department-inpatient admission handoffs. J Am Med Inform Assoc. 2013;20(02):260–267. doi: 10.1136/amiajnl-2012-001065.

- 3. Varpio L, Rashotte J, Day K, King J, Kuziemsky C, Parush A. The EHR and building the patient's story: a qualitative investigation of how EHR use obstructs a vital clinical activity. Int J Med Inform. 2015;84(12):1019–1028. doi: 10.1016/j.ijmedinf.2015.09.004.

- 4. Wright M C, Dunbar S, Macpherson B C et al. Toward designing information display to support critical care. A qualitative contextual evaluation and visioning effort. Appl Clin Inform. 2016;7(04):912–929. doi: 10.4338/ACI-2016-03-RA-0033.

- 5. Nolan M E, Pickering B W, Herasevich V. Initial clinician impressions of a Novel Interactive Medical Record Timeline (MeRLin) to facilitate historical chart review during new patient encounters in the ICU. Am J Respir Crit Care Med. 2016;193:A1101.

- 6. Pickering B W, Gajic O, Ahmed A, Herasevich V, Keegan M T. Data utilization for medical decision making at the time of patient admission to ICU. Crit Care Med. 2013;41(06):1502–1510. doi: 10.1097/CCM.0b013e318287f0c0.

- 7. Manor-Shulman O, Beyene J, Frndova H, Parshuram C S. Quantifying the volume of documented clinical information in critical illness. J Crit Care. 2008;23(02):245–250. doi: 10.1016/j.jcrc.2007.06.003.

- 8. Kannampallil T G, Franklin A, Mishra R, Almoosa K F, Cohen T, Patel V L. Understanding the nature of information seeking behavior in critical care: implications for the design of health information technology. Artif Intell Med. 2013;57(01):21–29. doi: 10.1016/j.artmed.2012.10.002.

- 9. Hefter Y, Madahar P, Eisen L A, Gong M N. A time-motion study of ICU workflow and the impact of strain. Crit Care Med. 2016;44(08):1482–1489. doi: 10.1097/CCM.0000000000001719.

- 10. Carayon P, Wetterneck T B, Alyousef B et al. Impact of electronic health record technology on the work and workflow of physicians in the intensive care unit. Int J Med Inform. 2015;84(08):578–594. doi: 10.1016/j.ijmedinf.2015.04.002.

- 11. Kannampallil T G, Jones L K, Patel V L, Buchman T G, Franklin A. Comparing the information seeking strategies of residents, nurse practitioners, and physician assistants in critical care settings. J Am Med Inform Assoc. 2014;21(e2):e249–e256.

- 12. Nolan M E, Cartin-Ceba R, Moreno-Franco P, Pickering B W, Herasevich V. A multi-site survey study of EMR review habits, information needs, and display preferences among medical ICU clinicians evaluating new patients. Appl Clin Inform. 2017.

- 13. Zheng K, Guo M H, Hanauer D A. Using the time and motion method to study clinical work processes and workflow: methodological inconsistencies and a call for standardized research. J Am Med Inform Assoc. 2011;18(05):704–710. doi: 10.1136/amiajnl-2011-000083.

- 14. Ahmed A, Chandra S, Herasevich V, Gajic O, Pickering B W. The effect of two different electronic health record user interfaces on intensive care provider task load, errors of cognition, and performance. Crit Care Med. 2011;39(07):1626–1634. doi: 10.1097/CCM.0b013e31821858a0.

- 15. Apker J, Beach C, O'Leary K, Ptacek J, Cheung D, Wears R. Handoff communication and electronic health records: exploring transitions in care between emergency physicians and internal medicine/hospitalist physicians. Proc Int Symp Hum Factors Ergon Heal Care. 2014;3(01):162–169.

- 16. Kendall L, Klasnja P, Iwasaki J et al. Use of simulated physician handoffs to study cross-cover chart biopsy in the electronic medical record. AMIA Annu Symp Proc. 2013;2013:766–775.

- 17. Reichert D, Kaufman D, Bloxham B, Chase H, Elhadad N. Cognitive analysis of the summarization of longitudinal patient records. AMIA Annu Symp Proc. 2010;2010:667–671.

- 18. Zheng K, Padman R, Johnson M P, Diamond H S. An interface-driven analysis of user interactions with an electronic health records system. J Am Med Inform Assoc. 2009;16(02):228–237. doi: 10.1197/jamia.M2852.

- 19. Ellsworth M A, Lang T R, Pickering B W, Herasevich V. Clinical data needs in the neonatal intensive care unit electronic medical record. BMC Med Inform Decis Mak. 2014;14(01):92. doi: 10.1186/1472-6947-14-92.

- 20. Zahabi M, Kaber D B, Swangnetr M. Usability and safety in electronic medical records interface design: a review of recent literature and guideline formulation. Hum Factors. 2015;57(05):805–834. doi: 10.1177/0018720815576827.

- 21. Doberne J W, He Z, Mohan V, Gold J A, Marquard J, Chiang M F. Using high-fidelity simulation and eye tracking to characterize EHR workflow patterns among hospital physicians. AMIA Annu Symp Proc. 2015;2015:1881–1889.

- 22. Hirsch J S, Tanenbaum J S, Lipsky Gorman S et al. HARVEST, a longitudinal patient record summarizer. J Am Med Inform Assoc. 2015;22(02):263–274. doi: 10.1136/amiajnl-2014-002945.

- 23. Tang Z, Weavind L, Mazabob J, Thomas E J, Chu-Weininger M Y, Johnson T R. Workflow in intensive care unit remote monitoring: A time-and-motion study. Crit Care Med. 2007;35(09):2057–2063. doi: 10.1097/01.ccm.0000281516.84767.96.

- 24. Ballermann M A, Shaw N T, Mayes D C, Gibney R T, Westbrook J I. Validation of the Work Observation Method By Activity Timing (WOMBAT) method of conducting time-motion observations in critical care settings: an observational study. BMC Med Inform Decis Mak. 2011;11:32. doi: 10.1186/1472-6947-11-32.
