Abstract

Over the last decade, virtual reality (VR) setups for rodents have been developed and utilized to investigate the neural foundations of behavior. Such VR systems have become very popular since they allow the use of state-of-the-art techniques to measure neural activity in behaving rodents that cannot be easily used with classical behavior setups. Here, we provide an overview of rodent VR technologies and review recent results from related research. We discuss commonalities and differences as well as merits and issues of different approaches. A special focus is given to experimental (behavioral) paradigms in use. Finally, we comment on possible use cases that may further exploit the potential of VR in rodent research and hence inspire future studies.

Keywords: behavioral neuroscience, closed loop, multisensory stimulation, neural coding, sensorimotor integration, spatial navigation.

Introduction

We perceive the outside world as a result of continuous sensorimotor interactions. We direct our gaze to what we want to look at; we choose and approach objects to touch, smell or taste. Sensory inputs, in return, guide our motor actions. Traditionally, sensory perception has been studied with passive stimulation of a single sensory modality; however, neuronal processing of even simple controlled stimuli might vary greatly at different behavioral states. To understand how we perceive and interact with our environment, it is, therefore, essential to consider interactive settings that allow naturalistic behaviors. Virtual reality (VR) provides a simulated artificial environment in which one’s actions determine sensory stimulation. Therefore, it closes the loop between sensory stimulation and motor actions and is a valuable tool for investigating a wide spectrum of behaviors from sensorimotor interactions to spatial navigation and cognition. Moreover, sensory stimulation may be provided across multiple modalities in VR to provide a unified perceptual experience that is still precisely controlled. In animal experiments, VR may be combined with recently developed experimental tools (e.g., transgenic mouse lines, viral constructs, and genetically encoded calcium indicators) that allow us to observe neuronal activity at the level of specific cell populations during behavior, permitting dissection of the underlying circuitry of perception and behavior.

VR was introduced into neuroscience about 2 decades ago (Tarr and Warren 2002; Bohil et al. 2011), when sufficient computing power became available. At first, VR was used in behavioral studies with humans, but animal experiments soon began to take advantage of this technique. In insects, visuomotor control of flying was studied in VR (Strauss et al. 1997; Gray et al. 2002), whereas, in mammals, VR was initially utilized to study spatial navigation in nonhuman primates (e.g., Matsumura et al. 1999; Leighty and Fragaszy 2003; Hori et al. 2005). Meanwhile, VR setups were also developed for rodents (e.g., Hölscher et al. 2005; Lee et al. 2007). These setups became very popular because they allow an animal to be held in place while it moves and interacts with a virtual environment (VE). Head fixation, a commonly used approach in rodent VR settings, made it possible to apply recording techniques that require high mechanical stability in behaving animals. Nowadays, whole-cell patch-clamp recordings from single neurons (Harvey et al. 2009; Domnisoru et al. 2013; Schmidt-Hieber and Häusser 2013) and 2-photon calcium imaging of neuronal networks (Harvey et al. 2012; Keller et al. 2012; Heys et al. 2014) in behaving animals are routinely applied in VR settings.

Here, we give an overview of VR technologies for rodents including VR applications that go beyond visual stimulation (e.g., tactile, auditory), as well as the findings from related work. In particular, we discuss the different experimental approaches and behavioral paradigms in use. Finally, we comment on the potential of rodent VR applications, which, in our view, has not yet been fully exploited.

History and State of the Art

Computer-controlled visual stimulation has been applied for years in behavioral testing with rodents. Early studies used computer screens for the presentation of dynamic stimuli to study optic flow utilization and computation of the time to contact an object during spatial behavior (Sun et al. 1992; Shankar and Ellard 2000). Further behavioral paradigms included visual learning and discrimination tasks (Gaffan and Eacott 1995; Eacott et al. 2001; Nekovarova and Klement 2006; Busse et al. 2011) as well as setups to measure visual acuity in rodents (Prusky et al. 2000; Prusky et al. 2004; Benkner et al. 2013). Apparatuses were designed in which visual stimuli are presented on the environment’s floor instead of its walls, which seems to account well for the tendency of rodents to collect visual information from the lower part of the visual field (Furtak et al. 2009). In addition, touch screens are used to provide means of interaction (e.g., Sahgal and Steckler 1994, for an early implementation).

Stimuli may be adapted with the above setups; however, there is no closed loop between sensory stimulation and an animal’s actions, which is the essence of VR (but see Ellard 2004, for an attempt to gain closed-loop control). The first actual VR system for rodents was implemented by Hölscher et al. (2005). The key features of their system, which still form the basis of most rodent VR setups to date, were a display screen and a treadmill. Hölscher et al. (2005) used a panoramic display to provide visual virtual stimulation. They claimed that such a display was the crucial component in designing a functional rodent VR, that is, an immersive visual stimulation that a rodent perceives as an interactive environment. A panoramic display covers a substantial part of a rodent’s field of view. For the same purpose, panoramic displays had been used before in VR experiments with insects (Strauss et al. 1997; Gray et al. 2002). As a treadmill, Hölscher et al. (2005) used a Styrofoam sphere floating on a cushion of pressurized air, to reduce friction and hence the exhaustion of the animal. Again, this component was adapted from setups for insects (Carrel 1972; Dahmen 1980). Movement of the animal on the treadmill was captured and used to adapt the VE and simulate virtual movement on the visual screen.

Shortly after Hölscher et al. (2005), a similar rodent VR setup was built by Lee et al. (2007). That system used a motorized linear belt treadmill and back-projected dome screen. The animal’s attempts to move were detected via a position sensor, and treadmill position and virtual scene were updated accordingly.

Further development of rodent VRs was driven by the wish to record neural activity in behaving animals. Awake mice were head-fixed on top of a treadmill, to perform optical imaging of neural responses (Dombeck et al. 2007), or electrophysiological recordings (Niell and Stryker 2010; Ayaz et al. 2013; Saleem et al. 2013). Taking the final step to VR, head fixation of awake, behaving animals on a treadmill was combined with panoramic displays. Two-photon calcium imaging was performed during spatial navigation in VEs (Dombeck et al. 2010; Harvey et al. 2012; Keller et al. 2012; Heys et al. 2014; Low et al. 2014) and patch-clamp recordings were made to measure the membrane potential dynamics of place and grid cells (Harvey et al. 2009; Domnisoru et al. 2013; Schmidt-Hieber and Häusser 2013). Other lines of VR application with rodents took advantage of VR as a tool to study effects of visual and other sensory conditions on neural activity in neocortex and hippocampus (e.g., Chen et al. 2013; Ravassard et al. 2013; Saleem et al. 2013) or to perform pure behavioral experiments (Youngstrom and Strowbridge 2012; Thurley et al. 2014; Garbers et al. 2015; Kautzky and Thurley 2016).

New developments in rodent VR include techniques that let animals move more freely. In one approach, the treadmill is actively rotated to compensate for the animal’s movements and keep it physically in place (Kaupert and Winter 2013). Again, this approach originates from experiments with insects (Kramer 1975, 1976). In another setup, a freely moving rodent is placed inside a box. The walls of the box are used as a projection screen. The VE is adapted in accord with the animal’s movements with the help of precise head-tracking information (Del Grosso and Sirota 2014). The technique shares close analogies with the CAVE VR systems for humans (cave automatic virtual environment; Cruz-Neira et al. 1992); such systems have recently become less popular for use with humans, where head-mounted displays increasingly dominate the field. Furthermore, systems that restrict the rodent’s movement to circular paths have been developed to provide more natural inertial and proprioceptive feedback (Madhav et al. 2014).

Before we discuss the results from the studies mentioned, we describe the technical specifics of VR systems for rodents in more detail.

Design of VR Systems for Rodents

VR systems share a number of key technical components, which include devices 1) to measure the subjects’ movements and 2) to provide sensory stimulation, i.e. to generate the virtual world. Typically, sensory stimulation in rodent VRs is limited to vision, although providing stimuli to other senses would be similarly relevant—in particular with rodents. The description below mainly concerns visual VR but VR approaches developed for other sensory modalities are briefly introduced as well.

Tracking animal movements

VR setups involve closed-loop presentation of sensory stimuli, i.e. stimulation is modulated according to an animal’s own movement in real time, and, therefore, close tracking of movements during stimulus presentation is of critical importance. In most rodent VR setups, this is achieved with a low friction, passive treadmill, and motion sensors (Figure 1A and, e.g., Hölscher et al. 2005; Harvey et al. 2009). The animal is placed on top of a spherical treadmill, typically a Styrofoam (polystyrene) ball. The ball is sitting in a bowl, for example, made of aluminum, that is slightly larger than the ball’s diameter. Into the bowl a stream of compressed air is directed from below or from multiple outlets within the bowl. The air stream supports the ball, such that it is floating on an air cushion and kept off the bowl’s inner surface in a stable position with nearly no friction (Dahmen 1980). The animal is fixed such that its movements rotate the treadmill. This is accomplished with the muscular power of the animal and, therefore, the mass of the ball should be taken into account in relation to the animal’s body weight (Thurley et al. 2014). Treadmill rotations are measured by movement sensors located in proximity to the treadmill (often 1 or 2 optical computer mice). The signal is fed into a computer that generates and updates the VE. Spherical treadmills allow for movement in 2-dimensional (2D) VEs. Treadmills such as rolling belts (Lee et al. 2007) or cylinders (Domnisoru et al. 2013) as well as spheres (by considering only the movement on a certain axis or by physically limiting the movement to single-dimensional rotation by inserting an axis) are used to track movements in 1-dimensional (1D) VEs. Here, the animals are placed into infinite tracks or they are teleported back to the start upon reaching the track’s end. Such approaches may further increase stability of recordings. Using only 1D information may also simplify training the animals (Keller et al. 2012; Chen et al. 2013).
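As a minimal sketch of the 1D case described above, the following Python snippet converts raw sensor counts into travelled distance and advances a position along a virtual track, either wrapping around (infinite track) or teleporting back to the start at the track’s end. The resolution constant `COUNTS_PER_MM`, the function names, and the example values are hypothetical and chosen only for illustration; they are not taken from any of the cited setups.

```python
COUNTS_PER_MM = 40.0  # hypothetical sensor resolution: counts per mm of surface travel


def counts_to_mm(counts, counts_per_mm=COUNTS_PER_MM):
    """Convert raw optical-sensor counts into treadmill surface travel in mm."""
    return counts / counts_per_mm


def step_1d(position_mm, forward_counts, track_length_mm, mode="teleport"):
    """Advance the virtual position along a 1D track by one sensor reading.

    mode="infinite": the track repeats, so the position wraps around.
    mode="teleport": the animal is set back to the start at the track's end.
    """
    new_position = position_mm + counts_to_mm(forward_counts)
    if mode == "infinite":
        return new_position % track_length_mm
    if mode == "teleport":
        if new_position >= track_length_mm:
            return 0.0  # end reached: teleport back to the start
        return max(new_position, 0.0)  # do not run backwards out of the track
    raise ValueError(f"unknown mode: {mode!r}")
```

In a real setup, such a function would be called once per sensor readout, and the returned position would be passed to the rendering software.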

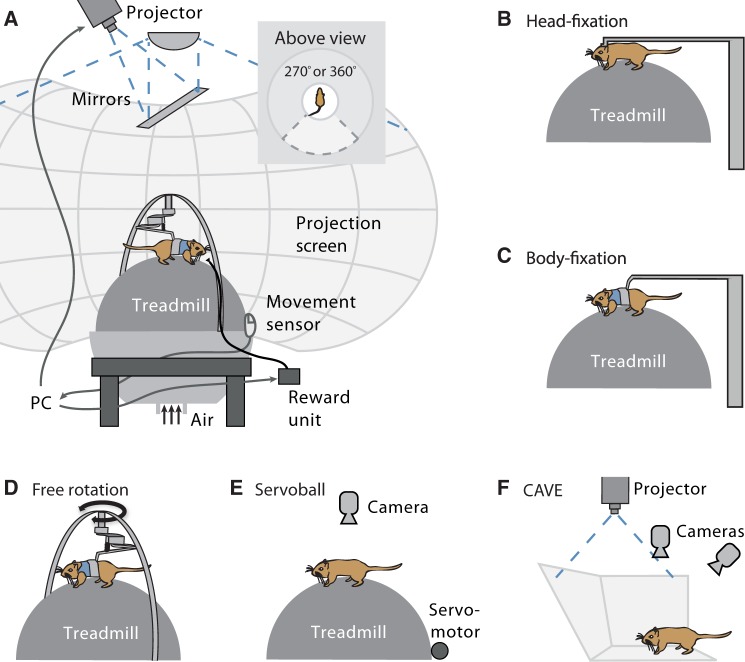

Figure 1.

VR setups for rodents. (A) General components of rodent VR setups include a device to restrain and track the animal’s movement, and equipment to provide sensory stimulation. Here, a system is depicted which comprises a spherical treadmill, fixation permitting free rotation of the animal around its vertical body axis, and projection of a visual VR onto a projection screen (cf. Thurley et al. 2014). (B–F) Types of restraints used in rodent VR systems. See main text for details.

To measure the animal’s movements and to ensure the delivery of appropriate sensory stimuli at the same time, the animal needs to be restrained to some level. The type of fixation depends on the experimental demands and ranges from full head fixation (Figure 1B) to freely moving (Figure 1E, F). Head fixation is usually necessary in situations where high stability is required to record neural activity. For fluorescence imaging of neurons in vivo a stable image is essential for reliable measurements (Dombeck et al. 2007; Dombeck et al. 2010; Harvey et al. 2012; Keller et al. 2012; Heys et al. 2014). Recording of single neurons with whole-cell patch-clamp or juxta-cellular electrodes requires a similar level of mechanical stability (Harvey et al. 2009; Domnisoru et al. 2013; Schmidt-Hieber and Häusser 2013). For head fixation, a mounting clamp is implanted onto the skull of the animal. Animals are fully fixed in place using the clamp, while they are still able to rotate the spherical treadmill by shear movements of their legs. Both translational and rotational movements of the treadmill are simulated in VR.

Head fixation is not required with recording techniques that are typically used in real world experiments, such as extracellular recordings with chronically implanted electrodes (e.g., Buzsaki 2004). If such recording techniques are applied in VR setups, animals may sit in a harness being body-fixed (Figure 1C; Cushman et al. 2013; Ravassard et al. 2013; Aghajan et al. 2015) or can rotate around their vertical body axis (Figure 1D; Hölscher et al. 2005; Aronov and Tank 2014; Thurley et al. 2014). With body fixation, both translational and rotational movements are simulated. When animals are allowed to rotate themselves on top of the ball, only translational movements are simulated, since rotations are performed physically; but rotations of the ball around its vertical axis need to be inhibited for correct movement tracking (e.g., by small wheels, Hölscher et al. 2005). Body fixation leaves the head of the animal free, allowing for more natural visual exploration of the surroundings. Moreover, vestibular and proprioceptive inputs due to head position are available to the animal to a certain extent. Nevertheless, mismatches cannot be avoided between visual and vestibular inputs. With free rotation, vestibular information about rotational movements is available to the animal and it matches the visual input. Still, inputs are lacking from the otolith organs which would provide information on linear acceleration. A way to solve this issue is to restrict movements to circular paths; however, this solution precludes translational movements (Madhav et al. 2014).
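The difference between the fixation types in terms of which movements have to be simulated can be illustrated with a short sketch. It assumes that treadmill readings have already been converted to surface travel in mm, that yaw is measured as surface travel at the ball’s equator, and that with free rotation the ball displacement is expressed directly in the lab frame. The `Pose` class, the function name, and all parameters are hypothetical and do not describe the implementation of any cited system.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0        # mm, virtual world frame
    y: float = 0.0        # mm, virtual world frame
    heading: float = 0.0  # rad, virtual heading (0 = along +x)


def update_pose(pose, forward_mm, lateral_mm, yaw_mm, ball_radius_mm, fixed=True):
    """Return the new virtual pose after one treadmill reading.

    fixed=True  (head or body fixation): rotation and translation are both
        simulated. Yaw travel changes the virtual heading, and translation is
        rotated from the animal's frame into the virtual world frame.
    fixed=False (free rotation): the animal turns physically, so only
        translation is simulated; the two displacement components are taken
        as lab-frame axes and yaw_mm is ignored.
    """
    if not fixed:
        return Pose(pose.x + forward_mm, pose.y + lateral_mm, pose.heading)
    heading = pose.heading + yaw_mm / ball_radius_mm  # arc length / radius
    dx = forward_mm * math.cos(heading) - lateral_mm * math.sin(heading)
    dy = forward_mm * math.sin(heading) + lateral_mm * math.cos(heading)
    return Pose(pose.x + dx, pose.y + dy, heading)
```

For example, a quarter turn of the ball around its vertical axis followed by forward running moves a fixed animal along the virtual y axis, whereas in the free-rotation case the same yaw reading would be discarded.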

More recently, VR systems were developed in which the animals are not fixed directly. One system video-tracks a freely moving animal on top of a treadmill in real time and introduces counter rotations of the treadmill with the help of servomotors, compensating for the animal’s attempts to move (Figure 1E; Winter et al. 2005; Kaupert and Winter 2013). Another approach uses a system where projections are directed to the walls and floor of the physical arena surrounding the animal. The animal’s head position is tracked using a multicamera system to update the VR accordingly. The animal’s movement is not restrained but restricted to the chamber. However, very precise tracking in real time is necessary to make the approach feasible (Figure 1F; Del Grosso and Sirota 2014).

Generating a visual VR

Rodent VR systems use panoramic displays, which are considered crucial to provide appropriate visual stimuli to rodents with their large field of view (Hölscher et al. 2005; Dombeck and Reiser 2011). For instance, in rats the field of view extends ∼300° horizontally and between −45° and 100° vertically (Hughes 1979). Visual stimuli of low spatial resolution and high contrast are commonly used in VEs to match selectivity of rodent vision (Prusky et al. 2000; Prusky et al. 2004; Gao et al. 2010).

Panoramic displays are typically toroidal or cylindrical screens on which the virtual scene is presented with the help of a projector and system of mirrors comprising an angular amplification mirror (Figure 1A; Chahl and Srinivasan 1997). The mirror may be located above (e.g., Hölscher et al. 2005) or below the setup (Schmidt-Hieber and Häusser 2013). For experiments with head or body fixation, displays with 300° of azimuthal angle are sufficient (e.g., Ravassard et al. 2013; Schmidt-Hieber and Häusser 2013); 360° displays are used in setups with free rotation (cf. inset in Figure 1A; Hölscher et al. 2005; Aronov and Tank 2014; Thurley et al. 2014). In elevation, displays reach from a bit below 0° to +60°. Some projection systems do not use angular amplification but directly project the VE onto the screen (Aronov and Tank 2014; Del Grosso and Sirota 2014). Also, displays are used that comprise several computer screens (Keller et al. 2012; Ayaz et al. 2013; Chen et al. 2013; Saleem et al. 2013; Heys et al. 2014).

Although projection screens may cover a large part or even the full field of view of rodents, projections remain 2D; stereo vision is not triggered as in natural situations. Animals may make use of strategies such as motion parallax while moving through the VE to gain depth perception in setups that work with head or body fixation. Nevertheless, strategies like peering, that is, head or body movements that result in changes of head position, cannot be utilized by the animals (cf., e.g., Goodale et al. 1990; Legg and Lambert 1990). A solution to this may be achieved in CAVE VR (Figure 1F; Del Grosso and Sirota 2014), where the VE is updated based on head position. The CAVE approach helps to exploit depth cues and hence 3D visual features. To what extent such setups also assist binocular (stereo) vision in rodents remains to be determined. Again, experimental methods used with insects may be helpful (Nityananda et al. 2016).

Another factor that should be considered when preparing a visual VR is the color sensitivity of the rodent visual system, if one wants to include color in the visual VE. Rodents have dichromatic vision with 2 cone receptors that are most sensitive to light with short “S” and medium “M” wavelength. For instance, mice show highest sensitivity to light at 370 and 510 nm wavelengths (Jacobs et al. 1991). Some studies have already considered this sensitivity by including green color (∼500 nm) in their VEs (Harvey et al. 2009). However, whether the inclusion of the ultraviolet spectrum in VR representation improves perception or behavioral performance remains to be explored.

A critical component of closed-loop stimulation is a close to real-time update of the virtual scene (Dombeck and Reiser 2011). Appropriate update time can be accomplished with modern graphics software that performs the necessary calculations directly on the graphics hardware (GPU). Different software solutions are available and have been used including video game engines (e.g., Harvey et al. 2009; Youngstrom and Strowbridge 2012; Chen et al. 2013); specific 3D graphics software like Blender (http://www.blender.org; Schmidt-Hieber and Häusser 2013), OGRE (http://www.ogre3d.org; Ravassard et al. 2013), OpenSceneGraph (FlyVR, http://flyvr.org/; Stowers et al. 2014), and Vizard (WorldViz, http://www.worldviz.com; Thurley et al. 2014); or direct use of OpenGL, for example, accessed via Matlab or Python (Hölscher et al. 2005; Saleem et al. 2013; Aronov and Tank 2014; Del Grosso and Sirota 2014). However, other factors such as refresh rate and latency of the display as well as configuration of the graphics driver (e.g., vertical sync) commonly determine the VR update rate and the latency.

The time lag between the actions of the animal and the system’s response is also crucial to realistic virtual stimulation. This issue has been investigated in humans (see, e.g., Friston and Steed 2014 for an overview) but, to the best of our knowledge, no study has examined the effects of VR lag on rodent perception and performance. This may be due to the difficulty of fully determining what appears realistic to animals, specifically to rodents (see also discussion in “Potentials and caveats of rodent VRs”).

Another important issue is to limit the movement of the animal in VR such that it remains inside the visual VE. Most setups only provide visual feedback; similar to computer games, virtual walls cannot be passed through and the projection simply stops when a wall is hit. In addition to visual feedback, Schmidt-Hieber and Häusser (2013) also used air puffs to provide tactile, aversive feedback upon touching a virtual wall.
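A simple way to keep the animal inside a rectangular VE is to clamp its virtual position to the arena boundaries, as sketched below. The function name and the rectangular geometry are assumptions made for illustration; the returned flag could be used to trigger additional feedback on wall contact, for instance an air puff as in the setup mentioned above.

```python
def clamp_to_arena(x, y, x_min, x_max, y_min, y_max):
    """Keep a virtual position inside a rectangular arena.

    Returns the clamped position and a flag that is True when a wall was hit.
    """
    clamped_x = min(max(x, x_min), x_max)
    clamped_y = min(max(y, y_min), y_max)
    hit_wall = (clamped_x != x) or (clamped_y != y)
    return clamped_x, clamped_y, hit_wall
```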

VR for other senses

Most rodent VRs currently work only with visual stimulation, but efforts exist to develop closed-loop stimulation of other sensory modalities or even to build multisensory VRs.

Recently, significant effort has been put into creating tactile VRs for rodents. Rodents are highly tactile animals and use their whiskers to explore their environment with sensitivity similar to human fingertips. In their natural habitat, they navigate through dark tunnels by utilizing their whiskers. Two main attempts to provide closed-loop tactile stimulation are as follows: Sofroniew et al. (2014) developed a tactile VR system for head-fixed mice, where running through corridors was simulated by movable walls on both sides of the animal (Figure 2A). Mice were fixed on top of a ball and their movement was measured and translated into the position of the walls, such that if a mouse runs rightward, the wall on the right would come closer. In their setting, 2 walls always kept a certain distance (30 mm) from each other, simulating a fixed-width corridor. To simulate curvatures in the virtual corridor, walls were moved accordingly. Mice successfully steered the ball to follow the turns in the corridor (Figure 2B). Using this tactile VE, Sofroniew et al. (2014) studied whisker-guided locomotion.
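The mapping from the animal’s lateral movement to wall positions can be sketched as follows. The 30 mm corridor width is taken from the description above; the function name, the sign convention (positive = rightward), and the use of a shifting centerline to simulate turns are illustrative assumptions and not the published implementation.

```python
CORRIDOR_WIDTH_MM = 30.0  # wall-to-wall distance as described in the text


def wall_distances(lateral_mm, centerline_mm=0.0, width_mm=CORRIDOR_WIDTH_MM):
    """Return (left, right) wall distances from the animal in mm.

    lateral_mm: virtual lateral position of the animal (positive = rightward).
    centerline_mm: lateral position of the corridor center at the current
        forward position; shifting it simulates a turn in the corridor.
    """
    half = width_mm / 2.0
    offset = lateral_mm - centerline_mm
    offset = max(-half, min(half, offset))  # the animal cannot pass a wall
    return half + offset, half - offset
```

Running rightward increases the offset, so the right wall comes closer while the left wall recedes, and the two distances always add up to the corridor width.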

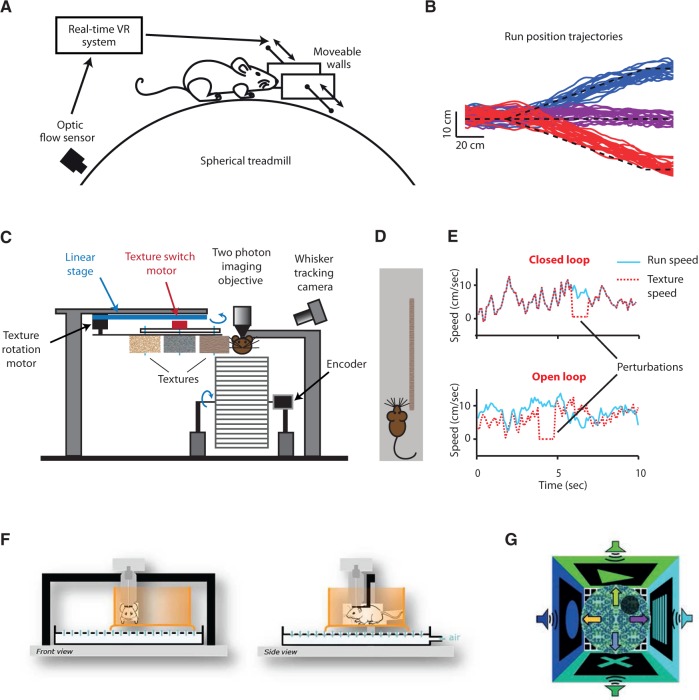

Figure 2.

VRs utilizing nonvisual information. (A) Tactile virtual setup simulating running along corridors with/without turns. Distances of movable walls from whisker pads provide information on whether a turn in the corridor is reached. Mice follow these turns reliably even in the absence of prior training (B). Pictures in (A, B) from Sofroniew et al. (2014). (C) An alternative tactile VE, simulating a free walk along a wall in the dark. Mice are head restrained on a linear treadmill and textures are presented on rotating cylinders in reach of the whiskers. (D) Illustration of a simulated “walk along a wall” in a typical closed-loop trial. (E) This experimental setting allows the speed of the texture to be manipulated independently of the speed of the animal on the treadmill, permitting closed-loop (animal and texture speed are coupled) as well as open-loop (decoupled) conditions. Brief perturbations, where texture rotation is stopped, are introduced during closed loop and replayed during open-loop trials. (F) Flat-floored air-lifted platform. A head-fixed mouse moves around in an air-lifted mobile cage (orange), which can be enriched with different visual, tactile, and olfactory cues. Picture from Kislin et al. (2014). (G) Open field arena for the virtual water maze task used in the study of Cushman et al. (2013). The movement of the animal is restricted to the central disc. Distal visual and acoustic cues are present in the surrounding. Hidden reward zone and 4 start locations are indicated by the small gray disc and arrows, respectively. In addition to the visual cues, ambisonic auditory stimuli can be provided from speakers surrounding the arena. Picture from Cushman et al. (2013).

Similarly, Ayaz et al. (2014) developed a tactile VR for mice to investigate somatosensory processing during active exploration. They simulated the condition in which mice run in the dark along a “wall,” on which varying textures (placed on rotating cylinders) are presented (Figure 2C, D). This experimental setting allows the speed of the texture to be manipulated independently of the speed of the animal on the treadmill (open-loop condition); alternatively, texture and animal speeds can be coupled (closed-loop condition, Figure 2E).
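The distinction between the two conditions can be made concrete with a small sketch. In closed loop the texture speed follows the animal’s speed, except during brief perturbations in which the texture is stopped; in open loop a previously recorded texture trace is replayed regardless of the animal’s current speed. The function names and the sample-by-sample replay are assumptions for illustration only.

```python
def texture_speed(animal_speed, mode, replay_speed=0.0, perturbed=False):
    """Texture speed for one sample in closed-loop or open-loop mode."""
    if perturbed:
        return 0.0  # perturbation: texture rotation is stopped
    if mode == "closed":
        return animal_speed  # texture coupled to the animal's speed
    if mode == "open":
        return replay_speed  # texture decoupled from the animal's speed
    raise ValueError(f"unknown mode: {mode!r}")


def run_trial(animal_speeds, mode, replay=None, perturbed_samples=()):
    """Return the texture speed trace for one trial."""
    trace = []
    for i, speed in enumerate(animal_speeds):
        replay_speed = replay[i] if replay is not None else 0.0
        trace.append(texture_speed(speed, mode, replay_speed, i in perturbed_samples))
    return trace


# A closed-loop trial with a perturbation at sample 1 ...
closed_trace = run_trial([1.0, 2.0, 3.0], "closed", perturbed_samples={1})
# ... is replayed in an open-loop trial while the animal runs differently.
open_trace = run_trial([0.0, 0.0, 5.0], "open", replay=closed_trace)
```

Because the replayed trace already contains the stop, the animal experiences the same texture movement in both trials, but only in the closed-loop trial does that movement depend on its own running.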

Another recent experimental setting that provides closed-loop sensory stimulation is the flat-floored air-lifted platform (Figure 2F; Kislin et al. 2014). In this approach, a head-fixed mouse can move around in an air-lifted mobile cage. This mobile cage can be enriched with different visual, tactile, and olfactory cues, creating a multisensory environment. The setting can be exploited to perform various behavioral paradigms while measuring neural activity in a head-restrained preparation.

Closed-loop auditory information has been incorporated into a VE for a virtual version of a Morris water maze task (Figure 2G; Cushman et al. 2013). Auditory cues were presented by a 7-speaker ambisonic surround sound system. The intensity and the orientation of auditory stimulation could be modified as a rat navigated the VE.

To the best of our knowledge, there is no study utilizing olfactory cues in a VE or providing closed-loop olfactory stimulation to rodents. However, approaches could be adapted, for example, from research on honeybee navigation, in which odor concentration is controlled by feedback from the honeybee’s walk in the VE (Kramer 1976). It is important to note that rodents can travel longer distances in a short time than honeybees, making it more difficult to adjust odor concentrations at such short intervals.

VR as a Method to Investigate Rodent Behavior and Accompanying Neural Activity

Research on spatial cognition and navigation is an obvious application area of VR, in particular when used with rats and mice, the prevailing mammalian models to study spatial behavior and its accompanying neural events (e.g., O'Keefe and Dostrovsky 1971; Mittelstaedt and Mittelstaedt 1980; Morris et al. 1982; Wilson and McNaughton 1993; Etienne and Jeffery 2004; Fyhn et al. 2004; Hafting et al. 2005). The ease with which visual stimulation can be provided in VR also makes it well suited for investigating visual perception. So far, most rodent VR research has focused on spatial and visual perception; nevertheless, a number of studies on nonvisual perception and higher order cognitive abilities also exist. In the present section, we give an overview of research utilizing rodent VRs, introduce their specificities, and review major findings.

Probing spatial navigation with rodent VR

Rodents—especially mice and rats—are usually believed to rely less on their visual sense compared to more visual mammals such as primates (but see Carandini and Churchland 2013). However, during spatial navigation rodents indeed heavily make use of vision; for example, to orient themselves with the help of distant landmarks (reviewed in e.g., Moser et al. 2008). Contributions of vision in relation to other senses and their use to guide spatial behaviors are hard to test with classical “real world” tasks. VR is a convenient tool for disentangling multisensory influences. In particular, visual inputs can be disentangled from nonvisual contributions, such as vestibular, tactile, and proprioceptive. The latter are usually not taken into account explicitly. Acoustic and olfactory stimuli are even ignored, that is, it is hoped that both do not exert relevant influences, since they are de-correlated with the (visual) VR.

One traditional line of research on spatial behavior with rodents uses very simple mazes like linear tracks or small open arenas of square and round shapes. Such mazes are necessary when wide coverage of the maze and a lot of repetitions are needed but no other behavioral responses are required. Usually this is the case when recordings are to be made from spatially selective neurons in the hippocampal formation like place cells, head direction cells, and grid cells (e.g., Moser et al. 2008). More complex and extended mazes can be built with much greater effort but are still restricted to the lab scale (Davidson et al. 2009; Rich et al. 2014). Spatially extended mazes can easily be built within VR. In the first rodent VR implementation, Hölscher et al. (2005) trained rats to navigate to cylinders hanging from the ceiling of a VE (Figure 3A). The cylinders were placed with regular spacing in a large 2D virtual space of several tens of square meters and the rats were rewarded when they entered the area below a cylinder. Comparison experiments were repeated in a similar but smaller real arena with analogous results.

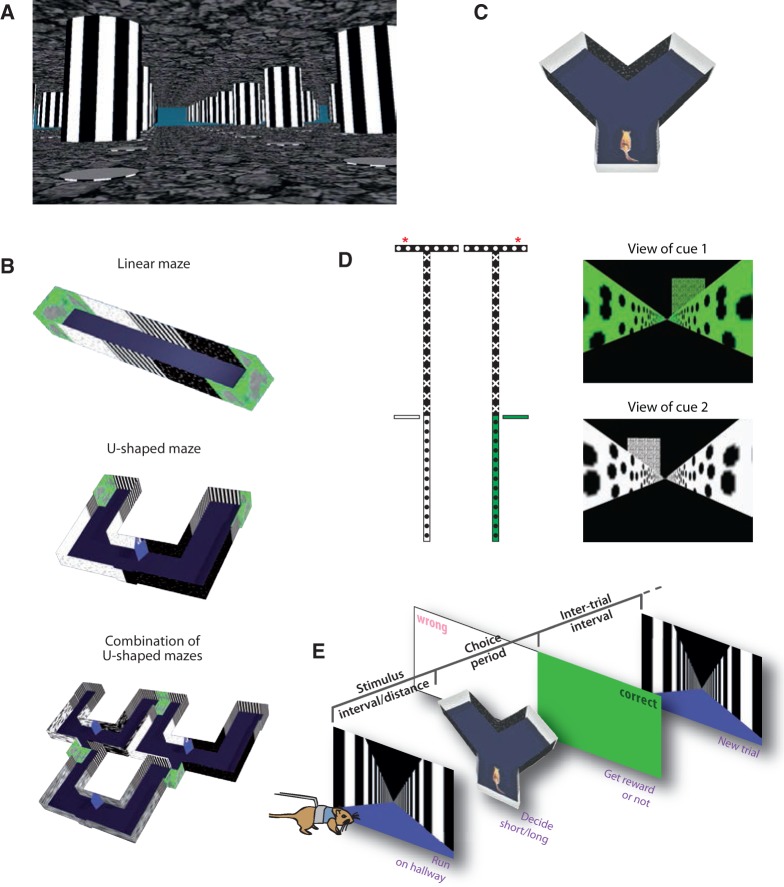

Figure 3.

VEs and tasks. (A) VE with a regular field of pillars suspended from the ceiling as used by Hölscher et al. (2005); picture courtesy of Hansjürgen Dahmen. (B) Virtual tracks of different complexity from Thurley et al. (2014). (C) Y-shaped maze to probe decisions in 2 alternative forced choice (2AFC) tasks (picture from Garbers et al. 2015). (D) Above view of the T-shaped virtual maze (modified from Harvey et al. 2012, courtesy of Christopher Harvey). The rewarded arm of the T-maze (marked with red asterisks on the left) is cued on each trial by the color of the track and the position of an external landmark (cf. right panels). (E) Spatiotemporal bisection task used by Kautzky and Thurley (2016).

Hölscher et al. (2005) provided a first proof-of-concept study for usability of VR with rodents. Later studies were interested in the neural substrate underlying spatial behaviors. Such studies were often conducted with mice, to readily utilize transgenic mouse lines (e.g., to have access to different neural populations) and viral constructs (e.g., genetically encoded calcium indicators). In these studies, virtual linear tracks a few meters in length were provided in which the animals shuttled back and forth between the 2 ends (cf. Figure 3B). The walls of the mazes were textured with dot and stripe patterns and some external landmarks that were beneficial to ensure appropriate performance (Youngstrom and Strowbridge 2012). Patch-clamp recordings from place (Harvey et al. 2009) and grid cells (Domnisoru et al. 2013; Schmidt-Hieber and Häusser 2013) were made and the subthreshold membrane potential could be recorded while an animal moved along such a virtual corridor. Two-photon calcium imaging allowed several place (Dombeck et al. 2010) and grid cells (Heys et al. 2014) to be imaged at the same time to investigate relations between anatomical arrangement, spatial firing patterns, and neuronal interactions across the population of neurons. To gain visual access to neurons that do not lie on the dorsal surface of the cortex (e.g., place cells in hippocampus or grid cells in medial entorhinal cortex), either the overlaying cortex was aspirated (Dombeck et al. 2010) or prisms were implanted (Heys et al. 2014; Low et al. 2014). Further technical advances have enabled recording calcium events in dendrites of place cells, providing evidence of regenerative dendritic events and their contribution to place field formation (Sheffield and Dombeck 2015). It has also been shown that neuronal activity can be perturbed during behavior at cellular resolution by genetically expressing a spectrally isolated optogenetic probe and calcium sensor (Rickgauer et al. 2014). Specifically, in this study single place cell activity was manipulated optogenetically by 2-photon excitation while simultaneously being imaged along with a population of CA1 neurons during behavior in a linear VR track. The authors found that perturbing single place cell activity affected a small subset of observed neurons, suggesting that local interactions play a role in place cell formation (Rickgauer et al. 2014).

Chen et al. (2013) took advantage of VR in a different way. They directly applied modifications in VR to separate visual and nonvisual contributions to hippocampal place coding. They incorporated mismatches between the animal’s own movement and the visual advance in the VE. In addition, they systematically exchanged visual stimuli. Different place cells were differentially influenced by the various sources of sensory information. Some cells were driven by specific visual features, whereas others depended on the animal’s own movement. A majority of place cells required both visual and movement cues but their dependence on these variables was heterogenous across the population.

Sofroniew et al. (2014) demonstrated tactile navigation in VR. In their behavioral study, mice used their whiskers to track walls of curving corridors (cf. Figure 2B). This behavior arose quite naturally to avoid coming too close to walls and did not need previous training. They trimmed whiskers such that only C2 whiskers on both whisker pads were left and they closely tracked these 2 whiskers during behavior. They observed that whisking and running are highly coupled and mice use the information gathered by their whiskers to guide locomotion.

The need to restrain rodents within VR setups leads to restrictions in the sensory information that is available to an animal. This may be considered an advantage, since particular senses can be separated from the others, but it may also cause issues if unintended and unnoticed. For example, head fixation impedes natural head movements and fully removes vestibular inputs, which are important during spatial orientation. In consequence, head fixation as well as body fixation (cf. Figure 1B, C) unavoidably results in mismatches between vestibular, proprioceptive, and visual inputs. Such mismatches may perturb neural processing, as was confirmed by direct comparisons between virtual paradigms and real world analogs (Chen et al. 2013; Ravassard et al. 2013): hippocampal place cells show altered activity patterns in VR compared to the real world (Ravassard et al. 2013). Particularly in 2D VEs, normal place-selective firing has been reported to be absent (Aghajan et al. 2015); rather, firing is strongly directionally modulated and coupled to visual cues (Acharya et al. 2016). In contrast, spatially selective neurons appear normally in setups where the animal can turn around its vertical body axis (Aronov and Tank 2014). However, one may argue that in the latter case nonvirtual landmarks are introduced by the setup’s hardware or the surrounding lab room, which may provide informative spatial inputs. If that view is correct, it would turn VR approaches without head or body fixation (cf. Figure 1D–F) into what in VR terms is called an augmented reality, since both virtual and real world information is available to and utilized by the animal.

Spatial learning and memory

A second line of research on spatial behavior is concerned with spatial learning and memory. The most famous task is perhaps the Morris water maze (Morris et al. 1982). In this task, a rat is put into a large tub filled with opaque water. A submerged platform is located somewhere in the tub and the animal’s goal is to find the fastest route to this platform. An animal can use features of the tub’s surroundings as distal (visual) landmarks to learn and, on later trials, remember the location of the hidden platform. Using water allows for hiding the platform and removing scent cues left by the animal, and, most importantly, being in water adds aversive motivation to search for the escape platform. The drawback of the technique is that it cannot be combined with electrophysiological recordings, a limitation that could be circumvented in VR. A virtual variant of the Morris water maze can be easily implemented (Figure 2C; Cushman et al. 2013). In VR, no water has to be used and electrophysiological recordings are possible. However, the aversive motivation is lost. Cushman et al. (2013) presented distal visual and acoustic cues to test the dependence of spatial learning in rats on multisensory information. The animals were able to find the hidden goal with the help of distal visual cues but not with the help of distal auditory cues.

Other well-known tasks for spatial learning include the radial arm maze (Olton and Samuelson 1976), the W-shaped maze (Karlsson and Frank 2008), and the multiple T-maze (Tolman and Honzik 1930; Johnson and Redish 2007; McNamara et al. 2014). Such tasks can in principle be easily implemented in VR. However, apart from the study by Thurley et al. (2014), more complex spatial tasks have not been applied in VR. Thurley et al. (2014) designed VEs of different complexity (Figure 3B) and successfully trained Mongolian gerbils to navigate in them. They found that the animals could make use of previously acquired knowledge about VEs, i.e., they generalized from simpler to more complex environments.

Sensory processing

Since visual stimulation can be easily provided in VR setups, they are ideally suited for studies on visual processing. Initial studies took advantage of movement on the treadmill during passive stimulation and showed that responses in mouse visual cortex are strongly modulated by an animal’s own movement (i.e., locomotion) (Niell and Stryker 2010; Ayaz et al. 2013). Moreover, visual cortex neurons are not only strongly driven by locomotion but also by mismatches between actual and expected visual feedback. This was demonstrated by introducing brief perturbations of the coupling between optic flow and locomotion (Keller et al. 2012). Another study further investigated how locomotion and visual motion are integrated in the visual cortex (Saleem et al. 2013). The authors let mice run along a virtual linear corridor, in either closed- or open-loop stimulus conditions, while recording from their visual cortex. They demonstrated that both the running speed of the animal and the speed in the visual VE modulated neuronal responses. Single V1 neurons computed a weighted sum of the 2 speeds. V1 neurons were even tuned to speed in the absence of visual stimuli. Poort et al. (2015) imaged neuronal populations in primary visual cortex while mice ran along a virtual hallway and learned to discriminate visual patterns. Improvements in behavioral performance were accompanied by the stabilization of neural representations of task-relevant stimuli.
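The idea that a neuron responds to a weighted sum of running speed and virtual speed can be made concrete with a small sketch. The code below is only an illustration under stated assumptions: the Gaussian tuning curve, the function names, and all parameter values (weight, preferred speed, tuning width, peak rate) are hypothetical and are not taken from Saleem et al. (2013).

```python
import math


def combined_speed(run_speed, virtual_speed, weight_run):
    """Weighted sum of running speed and virtual (visual) speed, both in cm/s.

    weight_run = 1.0 means only locomotion matters,
    weight_run = 0.0 means only the visual speed matters.
    """
    if not 0.0 <= weight_run <= 1.0:
        raise ValueError("weight_run must lie in [0, 1]")
    return weight_run * run_speed + (1.0 - weight_run) * virtual_speed


def firing_rate(run_speed, virtual_speed, weight_run=0.5,
                preferred=20.0, width=10.0, peak_rate=15.0):
    """Hypothetical Gaussian tuning (spikes/s) over the combined speed.

    All default parameter values are illustrative assumptions.
    """
    s = combined_speed(run_speed, virtual_speed, weight_run)
    return peak_rate * math.exp(-0.5 * ((s - preferred) / width) ** 2)


# Closed loop: the virtual speed follows the running speed.
closed_loop_rate = firing_rate(run_speed=20.0, virtual_speed=20.0)

# Open loop: the virtual speed is replayed independently of running.
open_loop_rate = firing_rate(run_speed=10.0, virtual_speed=30.0)
```

With equal weights, the two example conditions yield the same combined speed and therefore the same model response. This shows why open-loop conditions, in which the 2 speeds vary independently, are needed to estimate the weighting of an individual neuron.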

Other studies conducted psychophysical testing of perceptual properties in VR without recording neuronal responses. Garbers et al. (2015) used VR to investigate brightness and color vision in Mongolian gerbils. Although this study did not require closed-loop stimulation (cf. Prusky et al. 2000; Furtak et al. 2009), it still demonstrates how flexibly VR setups can be used.

Investigations of nonvisual modalities with VR are so far rare. First examples focus on somatosensory processing. One study developed a tactile VR to investigate somatosensory processing during active exploration, focusing on the integration of sensory signals with motor components (i.e., whisking and locomotion; Ayaz et al. 2014). While mice were running and whisking along the virtual wall, 2-photon calcium imaging was performed on a population of neurons in superficial and deep layers of vibrissal primary somatosensory cortex (vS1). In a recent study, Sofroniew et al. (2015) showed that vS1 neurons are tuned to the distance between the snout and the wall in their virtual tactile setup, with varying selectivity. They also reported that optogenetic activation of layer 4 neurons in vS1 created an illusory wall effect and drove wall-tracking behavior in mice.

Decision-making

Apart from pure sensory processing, more involved cognitive abilities such as decision-making may also be investigated in VR. Basic decision-making experiments have been implemented in VR already (Harvey et al. 2012; Thurley et al. 2014). Harvey et al. (2012) trained mice on a virtual working memory task, in which the animals were required to remember visual cues at the beginning of a linear track and, after a few more meters of running, to use this information to decide whether to go left or right at a T-junction (Figure 2E; Harvey et al. 2012). Population activity in parietal cortex displayed sequential neuronal activation, which correlated with the choices.

To test the ability of rodents to extract information from self-motion cues, Kautzky and Thurley (2016) developed a paradigm in which a rodent has to run along a virtual hallway for either a certain temporal interval or a certain virtual distance and afterwards categorize the stimulus as “short” or “long” (Figure 3E). VR was necessary for 2 reasons here: 1) landmark-based strategies for task-solving could be excluded using a hallway with infinite length and walls textured with a repetitive pattern of black and white stripes; 2) running time and virtual distance covered could be separated by changing the gain factor between own movement and movement in the VE, that is, the visual advance in the VE.
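How a gain factor separates running time from virtual distance can be sketched in a few lines. The following is a minimal illustration, not the implementation used by Kautzky and Thurley (2016); the function names, the sampling interval, and the example speeds are assumptions made for this sketch.

```python
def simulate_run(run_speeds, dt, gain):
    """Integrate treadmill speed samples (cm/s) into elapsed time and virtual distance.

    gain scales own movement into movement in the VE
    (gain = 1.0 corresponds to veridical coupling).
    """
    elapsed = 0.0
    virtual_distance = 0.0
    for speed in run_speeds:
        elapsed += dt
        virtual_distance += gain * speed * dt
    return elapsed, virtual_distance


def gain_for_target(distance_target, duration_target, mean_speed):
    """Gain at which an animal running at mean_speed covers distance_target
    (virtual cm) within duration_target (s)."""
    return distance_target / (mean_speed * duration_target)


# Hypothetical example: the same 10 s run at 20 cm/s under two gains.
speeds = [20.0] * 100          # 100 samples at dt = 0.1 s
time_a, dist_a = simulate_run(speeds, dt=0.1, gain=1.0)
time_b, dist_b = simulate_run(speeds, dt=0.1, gain=2.0)
```

In this example both runs last equally long, whereas the virtual distance covered doubles with the gain. Varying the gain across trials therefore lets duration and distance be tested independently.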

Potentials and Caveats of Rodent VRs

In the present review article, we examined and portrayed the role of VR as a method to investigate neural substrates of behavior in rodents. The feasibility of applying modern recording techniques that require high stability in navigating mice has undeniably benefited most from VR, which renders VR an exceptional tool for probing neural responses. Nevertheless, rodent VR systems certainly possess a great potential that extends beyond being a mere tool for head fixation.

Being convenient by design for investigating various aspects of spatial cognition, rodent VR has already impressively demonstrated its benefits in aiding classical nonvirtual approaches, as we described above. However, the full potential of VR to probe spatial cognition and navigation has not yet been exploited. A substantial part of spatial cognition research in rodents during the last decades centered on understanding acquisition, processing, and storage of information to build spatial representations, especially in the hippocampal formation. Here, VR goes beyond real world setups, for instance, with the possibility of providing conflicting spatial information. Head and body fixation indirectly realize such an approach, since they only provide informative visual but not vestibular inputs (Aghajan et al. 2015; Acharya et al. 2016). Another option is to alter the relation between own movement and virtual speed to separate inputs that are complementary under natural conditions (cf. Chen et al. 2013; Saleem et al. 2013; Kautzky and Thurley 2016). Nevertheless, how actions related to navigation could and should be performed has been less investigated in rodents. Tackling such questions is common practice in human VR (Tarr and Warren 2002; Bohil et al. 2011), but they may be addressed with rodent VRs as well. A good starting point could be to learn from human VR and adapt paradigms to rodents. Tasks can be designed that require the animals to make decisions based on sensory conditions, which in turn may be modified online as described above (Kautzky and Thurley 2016). Other options are teleportation between virtual mazes or parts thereof, and physically impossible environments (cf. Jezek et al. 2011, for a non-VR attempt). Despite being comparably simple to implement, such uses are rarely found in rodent VR so far. Similarly, adapting traditional psychophysical tasks for VR could further exploit it for understanding perception.

To reach beyond spatial cognition, developing VR techniques for other senses and then combining them across sensory modalities into a multimodal VR would strongly advance the scope of rodent VR. For instance, the 2 tactile VRs introduced earlier (Ayaz et al. 2014; Sofroniew et al. 2014) could be easily combined with other sensory inputs to create a multisensory VR. Although questions related to spatial navigation would highly benefit from multimodal VR, more general objectives could also be pursued. One could try to better understand how information is extracted from single senses, how different sensory inputs interact, and how neural representations are formed (cf., e.g., Kaifosh et al. 2013; Bittner et al. 2015; Villette et al. 2015). An alternative and valuable approach could be studying attention in a multisensory setting. Tasks may be developed in a multisensory VR in which an animal is required to attend to 1 sensory modality at a time in a reward-based task. In such a setting, one could ask: Do salient changes in one modality affect processing in the other one? How does priming animals to attend to one or the other modality modulate coding in either sensory cortex? And how do these modulations map to specific cell populations and what are the underlying mechanisms? Again, combinations of stimuli that are not found in a real world setting or perturbations of the couplings between sensory inputs could be presented to investigate principles of multisensory integration.

In addition to spatial VEs, interactions with virtual objects could be an avenue of research, providing truly interactive situations. An example study might involve detailed observation of whisker movements during exploration of different objects (e.g., objects of different geometries, such as circular vs. rectangular, or objects of different textures) and then reproducing similar whisker movements through multiprobe stimulation (Krupa et al. 2001; Jacob et al. 2010; Ramirez et al. 2014). Such a virtual object setup could be used to study object recognition and might allow systematic investigation of varying stimulus components and their influence on recognition.

Technical advancements of rodent VR could include methods to provide motion parallax and hence enable animals to use depth and distance cues. Such systems would require precise head tracking. Efforts in this direction are underway (Del Grosso and Sirota 2014). In addition, the use of displays or projectors that present short-wavelength light, i.e., blue/UV, would expand the visual component of rodent VRs by better matching the short-wavelength-sensitive photoreceptors of rodents (Jacobs et al. 1991; Garbers et al. 2015).
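Why motion parallax depends on head tracking can be illustrated with simple geometry: the direction in which a virtual object must be drawn changes with head position, and it changes more for near objects than for distant ones. The sketch below assumes a 2D top view with positions in cm; the function names and example coordinates are hypothetical and do not describe any particular VR system.

```python
import math


def bearing_deg(head_xy, object_xy):
    """Direction from the tracked head position to a virtual object, in degrees."""
    dx = object_xy[0] - head_xy[0]
    dy = object_xy[1] - head_xy[1]
    return math.degrees(math.atan2(dy, dx))


def parallax_deg(head_before, head_after, object_xy):
    """Change in an object's bearing caused by a head translation.

    The difference is wrapped to the interval [-180, 180) degrees.
    """
    diff = bearing_deg(head_after, object_xy) - bearing_deg(head_before, object_xy)
    return (diff + 180.0) % 360.0 - 180.0


# Hypothetical example: a 1 cm sideways head movement,
# with one object 10 cm ahead and another 100 cm ahead.
near_shift = parallax_deg((0.0, 0.0), (1.0, 0.0), (0.0, 10.0))
far_shift = parallax_deg((0.0, 0.0), (1.0, 0.0), (0.0, 100.0))
```

In this example the near object shifts by about 5.7 degrees and the far object by about 0.57 degrees. A display that is not updated from the tracked head position cannot reproduce this distance-dependent difference.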

The above descriptions illustrate that the stimulus repertoire of VRs is fairly reduced at the moment compared to “real” reality. For many applications, including behavioral neuroscience, the goal is to create a controlled environment that is as life-like as possible. Related to human VR, it is often stated that computer-generated virtual cues should provide an immersive sensory experience (Tarr and Warren 2002; Bohil et al. 2011). This demand is, however, not to be confused with the need to provide situations that are as natural or realistic as possible, in particular when VR is applied with animals. Of course, it needs to be taken into consideration what a life-like experience is for a rodent, especially since we assess it from the human perspective. However, the actual requirement is that VR experiments generate behavioral responses and accompanying neural activity that are close to what occurs in the real world, not that VEs appear realistic to humans. Unnatural deviations should only be introduced on purpose, and care needs to be taken to avoid accidental perturbations.

New developments try to circumvent limited and unnatural stimulation (Kaupert and Winter 2013; Del Grosso and Sirota 2014) by restraining the animals less. However, this comes at the expense of reduced stimulus control and fewer options for recording neural activity. The latter drawback may be addressed by novel techniques to image and record neural activity without the need for head fixation. Such techniques miniaturize the recording equipment and mount it on the animal’s head (Helmchen et al. 2001; Lee et al. 2006; Lee et al. 2009; Ghosh et al. 2011; Long and Lee 2012). Considerations of stimulus control, however, must not be disregarded. Certainly, stimulation is usually better controlled in VR compared to the real world. Nevertheless, VRs always come with a tradeoff between less restraint and precise control. In freely moving rodent VR, as already mentioned above, animals may make use of parts of the setup’s hardware to form a neural representation of their environment, turning the approach into an augmented reality. With stronger restraint, such influences are better controlled. On the other hand, important sensory inputs may be lacking that may not be entirely made up for by the provided stimuli. Even worse, corruptions may be introduced that considerably alter behavior, neural responses, or both.

The above considerations on stimulus control also apply to attempts to provide multimodal stimulation in VR. Such VRs have to be designed very carefully to achieve perceptually correct synchronization and weighting of the modalities.

The specific VR system that is best suited depends on the particular situation and research objective, as has always been the case with non-VR experiments. Bearing in mind the tradeoffs between different approaches, and the limits and caveats that accompany each approach, VR systems for rodents offer a wide range of tools to promote and inspire future neuroscience research. New applications will expand the scope of rodent VR even further, turning it into a more comprehensive toolset. Nevertheless, VR should always be considered as an addition to the extensive set of methods that is already available in neuroscience. When a certain question can be answered in a real world setup, it may be more readily approached there, and if so, it should be!

Acknowledgments

We are grateful to Ariel Gilad, Nicholas Del Grosso, Aman Saleem, and Andreas Stäuble for comments and discussions on earlier versions of the manuscript and thank Moritz Dittmeyer for assistance with the schematic drawings.

Funding

K.T. was supported by Bernstein Center Munich, Grant number 01GQ1004A, BMBF (Federal Ministry of Education and Research, Germany). A.A. received support from University of Zurich, Swiss National Science Foundation (SNSF) Marie Heim Vögtlin Grant (PMPDP3_145476) and SNSF Ambizione Grant (PZ00P3_161544).

References

- Acharya L, Aghajan ZM, Vuong C, Moore JJ, Mehta MR, 2016. Causal influence of visual cues on hippocampal directional selectivity. Cell 164:197–207.

- Aghajan ZM, Acharya L, Moore JJ, Cushman JD, Vuong C et al., 2015. Impaired spatial selectivity and intact phase precession in two-dimensional virtual reality. Nat Neurosci 18:121–128.

- Aronov D, Tank DW, 2014. Engagement of neural circuits underlying 2D spatial navigation in a rodent virtual reality system. Neuron 84:442–456.

- Ayaz A, Saleem AB, Schölvinck ML, Carandini M, 2013. Locomotion controls spatial integration in mouse visual cortex. Curr Biol 23:890–894.

- Ayaz A, Stäuble A, Helmchen F, 2014. A virtual tactile environment to study somatosensory processing in mouse cortex during locomotion. 9th FENS Forum of Neuroscience; 2014 Jul 5–9; Milan, Italy. Available from: http://fens2014.meetingxpert.net/FENS_427/poster_101150/program.aspx.

- Benkner B, Mutter M, Ecke G, Münch TA, 2013. Characterizing visual performance in mice: an objective and automated system based on the optokinetic reflex. Behav Neurosci 127:788–796.

- Bittner KC, Grienberger C, Vaidya SP, Milstein AD, Macklin JJ et al., 2015. Conjunctive input processing drives feature selectivity in hippocampal CA1 neurons. Nat Neurosci 18:1133–1142.

- Bohil CJ, Alicea B, Biocca FA, 2011. Virtual reality in neuroscience research and therapy. Nat Rev Neurosci 12:752–762.

- Busse L, Ayaz A, Dhruv NT, Katzner S, Saleem AB et al., 2011. The detection of visual contrast in the behaving mouse. J Neurosci 31:11351–11361.

- Buzsaki G, 2004. Large-scale recording of neuronal ensembles. Nat Neurosci 7:446–451.

- Carandini M, Churchland AK, 2013. Probing perceptual decisions in rodents. Nat Neurosci 16:824–831.

- Carrel JS, 1972. An improved treading device for tethered insects. Science 175:1279.

- Chahl JS, Srinivasan MV, 1997. Reflective surfaces for panoramic imaging. Appl Opt 36:8275–8285.

- Chen G, King JA, Burgess N, O'Keefe J, 2013. How vision and movement combine in the hippocampal place code. Proc Natl Acad Sci USA 110:378–383.

- Cruz-Neira C, Sandin DJ, DeFanti TA, Kenyon RV, Hart JC, 1992. The CAVE: audio visual experience automatic virtual environment. Commun ACM 35:64–72.

- Cushman JD, Aharoni DB, Willers B, Ravassard P, Kees A et al., 2013. Multisensory control of multimodal behavior: do the legs know what the tongue is doing? PLoS ONE 8:e80465.

- Dahmen H, 1980. A simple apparatus to investigate the orientation of walking insects. Cell Mol Life Sci 36:685–687.

- Davidson TJ, Kloosterman F, Wilson MA, 2009. Hippocampal replay of extended experience. Neuron 63:497–507.

- Del Grosso N, Sirota A, 2014. ratCAVE: a novel virtual reality system for freely moving rodents. Program No. 466.01/UU37. Neuroscience Meeting Planner. Washington (DC): Society for Neuroscience, Online.

- Dombeck DA, Harvey CD, Tian L, Looger LL, Tank DW, 2010. Functional imaging of hippocampal place cells at cellular resolution during virtual navigation. Nat Neurosci 13:1433–1440.

- Dombeck DA, Khabbaz AN, Collman F, Adelman TL, Tank DW, 2007. Imaging large-scale neural activity with cellular resolution in awake, mobile mice. Neuron 56:43–57.

- Dombeck DA, Reiser MB, 2011. Real neuroscience in virtual worlds. Curr Opin Neurobiol 22:3–10.

- Domnisoru C, Kinkhabwala AA, Tank DW, 2013. Membrane potential dynamics of grid cells. Nature 495:199–204.

- Eacott MJ, Machin PE, Gaffan EA, 2001. Elemental and configural visual discrimination learning following lesions to perirhinal cortex in the rat. Behav Brain Res 124:55–70.

- Ellard CG, 2004. Visually guided locomotion in the gerbil: a comparison of open- and closed-loop control. Behav Brain Res 149:41–48.

- Etienne AS, Jeffery KJ, 2004. Path integration in mammals. Hippocampus 14:180–192.

- Friston S, Steed A, 2014. Measuring latency in virtual environments. IEEE Trans Visual Comput Graph 20:616–625.

- Furtak SC, Cho CE, Kerr KM, Barredo JL, Alleyne JE et al., 2009. The floor projection maze: a novel behavioral apparatus for presenting visual stimuli to rats. J Neurosci Methods 181:82–88.

- Fyhn M, Molden S, Witter MP, Moser EI, Moser M-B, 2004. Spatial representation in the entorhinal cortex. Science 305:1258–1264.

- Gaffan EA, Eacott MJ, 1995. A computer-controlled maze environment for testing visual memory in the rat. J Neurosci Methods 60:23–37.

- Gao E, DeAngelis GC, Burkhalter A, 2010. Parallel input channels to mouse primary visual cortex. J Neurosci 30:5912–5926.

- Garbers C, Henke J, Leibold C, Wachtler T, Thurley K, 2015. Contextual processing of brightness and color in Mongolian gerbils. J Vis 15:1–13.

- Ghosh KK, Burns LD, Cocker ED, Nimmerjahn A, Ziv Y et al., 2011. Miniaturized integration of a fluorescence microscope. Nat Methods 8:871–878.

- Goodale MA, Ellard CG, Booth L, 1990. The role of image size and retinal motion in the computation of absolute distance by the Mongolian gerbil Meriones unguiculatus. Vision Res 30:399–413.

- Gray JR, Pawlowski V, Willis MA, 2002. A method for recording behavior and multineuronal CNS activity from tethered insects flying in virtual space. J Neurosci Methods 120:211–223.

- Hafting T, Fyhn M, Molden S, Moser M-B, Moser EI et al., 2005. Microstructure of a spatial map in the entorhinal cortex. Nature 436:801–806.

- Harvey CD, Coen P, Tank DW, 2012. Choice-specific sequences in parietal cortex during a virtual-navigation decision task. Nature 484:62–68.

- Harvey CD, Collman F, Dombeck DA, Tank DW, 2009. Intracellular dynamics of hippocampal place cells during virtual navigation. Nature 461:941–946.

- Helmchen F, Fee MS, Tank DW, Denk W, 2001. A miniature head-mounted two-photon microscope: high-resolution brain imaging in freely moving animals. Neuron 31:903–912.

- Heys JG, Rangarajan KV, Dombeck DA, 2014. The functional micro-organization of grid cells revealed by cellular-resolution imaging. Neuron 84:1079–1090.

- Hölscher C, Schnee A, Dahmen H, Setia L, Mallot HA, 2005. Rats are able to navigate in virtual environments. J Exp Biol 208:561–569.

- Hori E, Nishio Y, Kazui K, Umeno K, Tabuchi E et al., 2005. Place-related neural responses in the monkey hippocampal formation in a virtual space. Hippocampus 15:991–996.

- Hughes A, 1979. A schematic eye for the rat. Vision Res 19:569–588.

- Jacob V, Estebanez L, Le Cam J, Tiercelin JY, Parra P et al., 2010. The Matrix: a new tool for probing the whisker-to-barrel system with natural stimuli. J Neurosci Methods 189:65–74.

- Jacobs GH, Neitz J, Deegan JF, II, 1991. Retinal receptors in rodents maximally sensitive to ultraviolet light. Nature 353:655–656.

- Jezek K, Henriksen EJ, Treves A, Moser EI, Moser M-B, 2011. Theta-paced flickering between place-cell maps in the hippocampus. Nature 478:246–249.

- Johnson A, Redish AD, 2007. Neural ensembles in CA3 transiently encode paths forward of the animal at a decision point. J Neurosci 27:12176–12189.

- Kaifosh P, Lovett-Barron M, Turi GF, Reardon TR, Losonczy A, 2013. Septo-hippocampal GABAergic signaling across multiple modalities in awake mice. Nat Neurosci 16:1182–1184.

- Karlsson MP, Frank LM, 2008. Network dynamics underlying the formation of sparse, informative representations in the hippocampus. J Neurosci 28:14271–14281.

- Kaupert U, Winter Y, 2013. Rat navigation with visual and acoustic cues in virtual reality on a servo ball. 10th Göttingen Meeting of the German Neuroscience Society; 2013 Mar 13–16; Göttingen, Germany. Poster number T25-3B. Available from: https://www.nwg-goettingen.de/2013/upload/file/Programm_Poster_Contributions.pdf.

- Kautzky M, Thurley K, 2016. Estimation of self-motion duration and distance in rodents. Roy Soc Open Sci 3:160118.

- Keller GB, Bonhoeffer T, Hübener M, 2012. Sensorimotor mismatch signals in primary visual cortex of the behaving mouse. Neuron 74:809–815.

- Kislin M, Mugantseva E, Molotkov D, Kulesskaya N, Khirug S et al., 2014. Flat-floored air-lifted platform: a new method for combining behavior with microscopy or electrophysiology on awake freely moving rodents. J Vis Exp e51869.

- Kramer E, 1975. Orientation of the male silkmoth to the sex attractant bombykol. Olfact Taste 5:329–335.

- Kramer E, 1976. The orientation of walking honeybees in odour fields with small concentration gradients. Physiol Entomol 1:27–37.

- Krupa DJ, Brisben AJ, Nicolelis MA, 2001. A multi-channel whisker stimulator for producing spatiotemporally complex tactile stimuli. J Neurosci Methods 104:199–208.

- Lee AK, Epsztein J, Brecht M, 2009. Head-anchored whole-cell recordings in freely moving rats. Nat Protoc 4:385–392.

- Lee AK, Manns ID, Sakmann B, Brecht M, 2006. Whole-cell recordings in freely moving rats. Neuron 51:399–407.

- Lee H, Kuo M, Chang D, Ou-Yang Y, Chen J, 2007. Development of virtual reality environment for tracking rat behavior. J Med Biol Eng 27:71–78.

- Legg CR, Lambert S, 1990. Distance estimation in the hooded rat: experimental evidence for the role of motion cues. Behav Brain Res 41:11–20.

- Leighty KA, Fragaszy DM, 2003. Primates in cyberspace: using interactive computer tasks to study perception and action in nonhuman animals. Anim Cogn 6:137–139.

- Long MA, Lee AK, 2012. Intracellular recording in behaving animals. Curr Opin Neurobiol 22:34–44.

- Low RJ, Gu Y, Tank DW, 2014. Cellular resolution optical access to brain regions in fissures: imaging medial prefrontal cortex and grid cells in entorhinal cortex. Proc Natl Acad Sci USA 111:18739–18744.

- Madhav MS, Jayakumar RP, Savelli F, Blair HT, Cowan NJ. et al. , 2014. Place cells in virtual reality dome reveal interaction between conflicting self-motion and landmark cues. Society for Neuroscience Annual Meeting 2015; Oct 17–21; Chicago, USA. Available from: http://www.abstractsonline.com/plan/ViewAbstract.aspx?cKey=6d64279d-8b44-466b-b369-900d1066c22f&mID=3744&mKey=d0ff4555-8574-4fbb-b9d4-04eec8ba0c84&sKey=3edb12cb-ccc0-4d8b-b86e-c070a5845819.

- Matsumura N, Nishijo H, Tamura R, Eifuku S, Endo S et al., 1999. Spatial- and task-dependent neuronal responses during real and virtual translocation in the monkey hippocampal formation. J Neurosci 19:2381–2393.

- McNamara CG, Tejero-Cantero A, Trouche S, Campo-Urriza N, Dupret D, 2014. Dopaminergic neurons promote hippocampal reactivation and spatial memory persistence. Nat Neurosci 17:1658–1660.

- Mittelstaedt M, Mittelstaedt H, 1980. Homing by path integration in a mammal. Naturwissenschaften 67:566–567.

- Morris RG, Garrud P, Rawlins JN, O'Keefe J, 1982. Place navigation impaired in rats with hippocampal lesions. Nature 297:681–683.

- Moser EI, Kropff E, Moser M-B, 2008. Place cells, grid cells, and the brain's spatial representation system. Annu Rev Neurosci 31:69–89.

- Nekovarova T, Klement D, 2006. Operant behavior of the rat can be controlled by the configuration of objects in an animated scene displayed on a computer screen. Physiol Res 55:105–113.

- Niell CM, Stryker MP, 2010. Modulation of visual responses by behavioral state in mouse visual cortex. Neuron 65:472–479. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nityananda V, Tarawneh G, Rosner R, Nicolas J, Crichton S. et al. , 2016. Insect stereopsis demonstrated using a 3D insect cinema. Sci Rep 6:18718. [DOI] [PMC free article] [PubMed] [Google Scholar]

- O'Keefe J, Dostrovsky J, 1971. The hippocampus as a spatial map: preliminary evidence from unit activity in the freely-moving rat. Brain Res 34:171–175. [DOI] [PubMed] [Google Scholar]

- Olton DS, Samuelson RJ, 1976. Remembrance of places passed: spatial memory in rats. J Exp Psychol 2:97–116. [Google Scholar]

- Poort J, Khan AG, Pachitariu M, Nemri A, Orsolic I. et al. , 2015. Learning enhances sensory and multiple non-sensory representations in primary visual cortex. Neuron 86:1478–1490. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Prusky GT, Alam NM, Beekman S, Douglas RM, 2004. Rapid quantification of adult and developing mouse spatial vision using a virtual optomotor system. Invest Ophthalmol Vis Sci 45:4611–4616. [DOI] [PubMed] [Google Scholar]

- Prusky GT, West PW, Douglas RM, 2000. Behavioral assessment of visual acuity in mice and rats. Vision Res 40:2201–2209.

- Ramirez A, Pnevmatikakis EA, Merel J, Paninski L, Miller KD et al., 2014. Spatiotemporal receptive fields of barrel cortex revealed by reverse correlation of synaptic input. Nat Neurosci 17:866–875.

- Ravassard P, Kees A, Willers B, Ho D, Aharoni D et al., 2013. Multisensory control of hippocampal spatiotemporal selectivity. Science 340:1342–1346.

- Rich PD, Liaw H-P, Lee AK, 2014. Large environments reveal the statistical structure governing hippocampal representations. Science 345:814–817.

- Rickgauer JP, Deisseroth K, Tank DW, 2014. Simultaneous cellular-resolution optical perturbation and imaging of place cell firing fields. Nat Neurosci 17:1816–1824.

- Sahgal A, Steckler T, 1994. TouchWindows and operant behaviour in rats. J Neurosci Methods 55:59–64.

- Saleem AB, Ayaz A, Jeffery KJ, Harris KD, Carandini M, 2013. Integration of visual motion and locomotion in mouse visual cortex. Nat Neurosci 16:1864–1869.

- Schmidt-Hieber C, Häusser M, 2013. Cellular mechanisms of spatial navigation in the medial entorhinal cortex. Nat Neurosci 16:325–331.

- Shankar S, Ellard C, 2000. Visually guided locomotion and computation of time-to-collision in the Mongolian gerbil Meriones unguiculatus: the effects of frontal and visual cortical lesions. Behav Brain Res 108:21–37.

- Sheffield MEJ, Dombeck DA, 2015. Calcium transient prevalence across the dendritic arbour predicts place field properties. Nature 517:200–204.

- Sofroniew NJ, Cohen JD, Lee AK, Svoboda K, 2014. Natural whisker-guided behavior by head-fixed mice in tactile virtual reality. J Neurosci 34:9537–9550.

- Sofroniew NJ, Vlasov YA, Hires SA, Freeman J, Svoboda K, 2015. Neural coding in barrel cortex during whisker-guided locomotion. eLife 4:e12559.

- Stowers JR, Fuhrmann A, Hofbauer M, Streinzer M, Schmid A et al., 2014. Reverse engineering animal vision with virtual reality and genetics. Computer 47:38–45.

- Strauss R, Schuster S, Götz KG, 1997. Processing of artificial visual feedback in the walking fruit fly Drosophila melanogaster. J Exp Biol 200:1281–1296.

- Sun HJ, Carey DP, Goodale MA, 1992. A mammalian model of optic-flow utilization in the control of locomotion. Exp Brain Res 91:171–175.

- Tarr MJ, Warren WH, 2002. Virtual reality in behavioral neuroscience and beyond. Nat Neurosci 5(Suppl):1089–1092.

- Thurley K, Henke J, Hermann J, Ludwig B, Tatarau C et al., 2014. Mongolian gerbils learn to navigate in complex virtual spaces. Behav Brain Res 266:161–168.

- Tolman EC, Honzik CH, 1930. Introduction and removal of reward, and maze performance in rats. Univ Calif Publ Psychol 4:257–275.

- Villette V, Malvache A, Tressard T, Dupuy N, Cossart R, 2015. Internally recurring hippocampal sequences as a population template of spatiotemporal information. Neuron 88:357–366.

- Wilson MA, McNaughton BL, 1993. Dynamics of the hippocampal ensemble code for space. Science 261:1055–1058.

- Winter Y, Ludwig J, Kaupert U, Kleindienst HU, 2005. Rodent cognition in virtual reality: time dynamics of place and spatial response learning by mice on a servosphere virtual maze. In: 6th Göttingen Meeting of the German Neuroscience Society. Göttingen, Germany.

- Youngstrom IA, Strowbridge BW, 2012. Visual landmarks facilitate rodent spatial navigation in virtual reality environments. Learn Mem 19:84–90.