Abstract

The extent to which development of the brain language system is modulated by the temporal onset of linguistic experience relative to post-natal brain maturation is unknown. This crucial question cannot be investigated with the hearing population because spoken language is ubiquitous in the environment of newborns. Deafness blocks infants’ language experience in a spoken form, and in a signed form when sign language is absent from the environment. Using anatomically constrained magnetoencephalography (aMEG), we neuroimaged lexico-semantic processing in a deaf adult whose linguistic experience began in young adulthood. Despite using language for 30 years after initially learning it, this individual exhibited limited neural response in the perisylvian language areas to signed words during the 300 to 400 ms temporal window, suggesting that the brain language system requires linguistic experience during brain growth to achieve functionality. The present case primarily exhibited neural activations in response to signed words in dorsolateral superior parietal and occipital areas bilaterally, replicating the neural patterns exhibited by two previous case studies of individuals who matured without language until early adolescence (Ferjan Ramirez, Leonard, Torres, Hatrak, Halgren, & Mayberry, 2014). The dorsal pathway appears to assume the task of processing words when the brain matures without experiencing the form-meaning network of a language.

Keywords: brain language development, critical period, neural plasticity, sign language, magnetoencephalography

1. Introduction

The adult brain has long been known to process language primarily in the perisylvian region of the left hemisphere (Geschwind, 1970; Penfield & Roberts, 1959). The young child’s neural response to known words is observed in the same brain areas, with a more bilateral response that becomes more prominent in the left hemisphere with maturation (Berl et al., 2014; Ressel, Wilke, Lidzba, Lutzenberger, & Krägeloh-Mann, 2008; Stiles, Brown, Haist, & Jernigan, 2015). It is unknown how the temporal onset of language experience relative to post-natal brain maturation affects the development of the brain language system. The question is difficult to investigate because spoken language is ubiquitous in human communities, so the onset of linguistic experience and post-natal brain maturation are always temporally coupled when infants hear normally. Infant deafness uncouples the temporal relation between post-natal brain maturation and the onset of linguistic experience. Infants born deaf cannot hear the language spoken around them, nor can they see sign language when no one in the environment signs. As a result, the onset of linguistic experience varies widely in the deaf population. This naturally occurring situation can be used to ask whether and how the temporal onset of linguistic experience relative to post-natal brain maturation affects development of the brain language system. Here we report a case study of an extreme degree of temporal asynchrony between the onset of language experience and post-natal brain maturation. Using anatomically constrained magnetoencephalography (aMEG), we neuroimaged lexico-semantic processing in an adult whose linguistic experience began in young adulthood. The present study builds on previous studies investigating how the age of onset of linguistic experience affects neurolinguistic processing, to which we now turn.

The language learned by the present case, American Sign Language (ASL), is hierarchically organized like spoken language. ASL sentences consist of lexical items interwoven with inflectional and derivational morphology governed by grammatical rules. ASL lexical items, or signs, are further structured sub-lexically: articulatory features (hand configurations, movements, and locations of the fingers, hands, and arms on the head and torso) combine to express signs in highly specified ways analogous to the rules of spoken language phonology (Brentari, 1998; Sandler & Lillo-Martin, 2006). Ample evidence indicates that the psycholinguistic processes underlying ASL comprehension by native signers, individuals who experienced the language from birth, involve the mental manipulation of grammatical structures (Emmorey, 2002). Infants exposed to ASL acquire it spontaneously. Development of ASL comprehension and production by infants and young children follows a trajectory comparable to that shown by hearing children acquiring spoken language (Anderson & Reilly, 2002; Fenson et al., 1994; Mayberry & Squires, 2006). Deaf and hearing individuals can also learn ASL as a second language (L2) after experiencing and acquiring a first language (L1) in infancy. L2 learners of ASL, like L2 learners of spoken languages, can achieve near-native proficiency (Best, Mathur, Miranda, & Lillo-Martin, 2010; Mayberry, 1993; Morford & Carlson, 2011).

When the onset of first language experience lags significantly behind post-natal brain maturation, ultimate language proficiency is attenuated, remaining well below that of native and L2 learners. These effects are progressive: the longer the temporal lag between birth and the onset of language experience, the lower the linguistic proficiency (Boudreault & Mayberry, 2006; Newport, 1990). The outcome of delayed L1 acquisition is thus unlike that of L2 learning, where near-native proficiency can potentially be achieved. The attenuated language proficiency associated with a delayed onset of language experience characterizes the outcome of all subsequent language learning, be it of other languages or of other language forms such as reading (Chamberlain & Mayberry, 2008; Cormier, Schembri, Vinson, & Orfanidou, 2012; Mayberry & Lock, 2003; Mayberry, Lock, & Kazmi, 2002). The deleterious linguistic effects of a delayed onset of language experience suggest that the brain language system may be affected as well. Neurolinguistic research provides initial evidence for this hypothesis.

For the present study, it is important to note that the brain language system underlying sign language processing is remarkably similar to that underlying spoken language processing, despite the sensory-motor differences between the two kinds of language. This holds, however, only when post-natal brain maturation coincides with the onset of linguistic experience, as is the case for native speakers or signers. As would be expected, the neural activation patterns for signed and spoken language differ at the initial sensory stages of brain language processing. The initial cortical processing of spoken words occurs in the primary auditory cortex, whereas the initial cortical processing of signed words occurs in the primary visual areas of the occipital cortex (Leonard et al., 2012). Beyond these initial sensory processing differences, the neural activation patterns for the semantic processing of words are remarkably similar for spoken and signed words and are primarily located in the canonical language areas of the perisylvian cortex (Cardin et al., 2013; MacSweeney et al., 2006; Mayberry, Chen, Witcher, & Klein, 2011; Newman, Supalla, Fernandez, Newport, & Bavelier, 2015; Petitto et al., 2000; Sakai, Tatsuno, Suzuki, Kimura, & Ichida, 2005). Notably, when the onset of language experience is delayed relative to post-natal brain maturation, deviations from these native-like neural activation patterns are observed. Delays in the onset of language experience affect hemodynamic patterns during ASL sentence processing in a linear fashion: activation is reduced in the language areas of the left superior temporal gyrus and inferior frontal gyrus and increased in the middle occipital and lingual gyri of the left hemisphere (Mayberry et al., 2011).

In individuals who experienced minimal language throughout childhood, the neural activation patterns for lexico-semantic processing obtained with aMEG show a marked departure from expectation. Two previously studied adolescents, whose language experience began at approximately age 14, exhibited neural activation patterns to signed words mostly in right dorsolateral parietal and occipital cortex, with some bilateral activation. These neural activation patterns to signed words contrasted sharply with those of two control groups who experienced language in infancy. Deaf native signers exhibited neural activation patterns in the expected left perisylvian regions. Hearing L2 learners of ASL exhibited neural activation patterns nearly indistinguishable from those of the deaf native signers (Ferjan Ramirez, Leonard, Torres, et al., 2014). After an additional year and a half of language experience, the neural activation patterns of the adolescent learners showed some shift toward left and right perisylvian areas. This shift toward the expected language areas was incomplete, however, and was observed mainly for the signed words with which they were most familiar, as indexed by reaction time (RT) (Ferjan Ramirez, Leonard, Davenport, et al., 2014).

The partial shift in neural activation patterns to canonical perisylvian areas observed in the adolescent learners raises two possibilities about the role of language experience in the development of the brain language system. One possibility is that the brain language system retains the capacity to process language throughout maturation, independent of the temporal onset of linguistic experience, so long as some threshold amount of language is eventually experienced. Because the present case had 30 years of linguistic experience after initially learning ASL, this hypothesis predicts that he will exhibit neural activation patterns in response to signed words similar to those of the control groups who experienced language from birth. An alternative possibility is that the canonical perisylvian language areas require language experience during post-natal brain growth in order to fully develop their language processing capabilities. This hypothesis predicts that the present case will exhibit atypical neural activation patterns in response to signed words, because the onset of his language experience occurred in young adulthood, toward the end of the main period of brain growth. To test these hypotheses, we used the same paradigm and neuroimaging modality as in our previous studies of the adolescent L1 learners. Doing so allowed us to investigate the neural activation patterns underlying lexico-semantic processing in relation to three contrasting temporal relationships between post-natal brain maturation and the onset of linguistic experience: 1) young adulthood (the present case); 2) early adolescence (the previous case studies); and 3) infancy (the control groups).

2.0 Materials and Methods

The experimental protocol was approved by the Human Research Protections Program of the University of California San Diego.

2.1 Participants

2.1.1 Case study background

The individual who volunteered for the present study was a healthy 51-year-old male given the pseudonym Martin. Martin was the third of four children born to hearing parents in rural Mexico and the only deaf child. No other deaf individuals lived in the surrounding area, and no one in his hearing family or community knew or used any sign language. Deaf children in such circumstances are observed to communicate with their hearing families through gesture in lieu of spoken language, a form of communication known as homesign (Goldin-Meadow, 2005). Martin probably used some homesign, because he reported communicating with a sister through gesture as a child. Unlike his hearing siblings, Martin was kept at home and not sent to school because he was deaf, although he reported a keen childhood desire to attend school. At the age of 21, he left home alone, made his way to a town where a school for deaf students was located, and began to learn Mexican Sign Language. Two years later, he immigrated to the USA in search of employment and reported learning ASL from deaf co-workers, deaf friends, and community classes. At the time of the present study, Martin had normal vision and was strongly right-handed for all manual activities listed on the Edinburgh Handedness Inventory (Oldfield, 1971). He was employed, married, and used ASL with his deaf wife and friends. Because Martin used ASL daily with other ASL signers, any differences we observe in his neural processing of signed words cannot be attributed to a lack of linguistic experience in adulthood.

2.1.2 Nonverbal cognitive skills and language comprehension

Consistent with previous studies of deaf individuals with minimal language experience during childhood (Braden, 1994; Mayberry, 2002; Morford, 2003), Martin performed within the average range of the hearing population on two nonverbal cognitive tasks, the Block Design and Picture Arrangement subtests of the Wechsler Intelligence Scale for Adults (Wechsler & Naglieri, 2006). Martin’s English reading comprehension was at the 2.1 grade level (Reading Comprehension subtest of the Peabody Individual Achievement Test, Revised [PIAT-R]; Markwardt, 1989). In a separate comprehension experiment using a sentence-to-picture matching task with 18 ASL syntactic structures ranging in complexity from simple (mono-clausal) to complex (inter-sentential), Martin accurately comprehended mostly simple structures (SV, SVO, and SVO with inflected verbs) and simple classifier constructions (p < .01). Consistent with the comprehension patterns shown by other late L1 learners who first began to acquire language in adolescence, Martin did not comprehend the more complex structures. By contrast, L2 learners of ASL who acquired a first language early, both native English speakers and deaf native signers of sign languages other than ASL, perform at near-native levels on this ASL comprehension experiment (Mayberry, Cheng, Hatrak, & Ilkbasaran, 2017).

With respect to ASL production, Martin uses his dominant right hand and his supporting left hand in two-handed sign articulation similarly to native signers, following the phonotactic rules of hand symmetry and dominance in ASL (Battison, 1978; Eccarius & Brentari, 2007; Morgan & Mayberry, 2012). His sign production can be fluent but is sometimes halting when he is unsure of what to say, and his signs are sometimes ill formed. His ASL utterances consist of signs combined into short phrases and clauses interspersed with gestures and facial expressions. He tends not to use grammatical morphology or grammatical facial expressions (Sandler & Lillo-Martin, 2006). When communicating about past experiences or telling a narrative elicited by pictures, he tends to describe the actions and emotions of characters in temporal sequence, but often without introducing or naming new characters, which can make his ASL difficult to understand without knowing the context of his discourse.

Martin’s ASL acquisition appears to have plateaued at a low grammatical level, similar to that of other late L1 learners and young children. Although syntactically limited, his ASL expression does not show the symptoms of aphasia observed in adult signers with left hemisphere brain damage (Corina et al., 1999; Poizner, Klima, & Bellugi, 1987). It is currently unclear whether Martin engages in ASL phonological processing during on-line comprehension. Preliminary research using an eye-tracking paradigm suggests that late L1 learners do not engage in ASL phonological processing during on-line sign recognition in the same fashion as deaf native signers (Lieberman, Borovsky, Hatrak, & Mayberry, 2015).

2.1.3 Informed consent for the present study

Martin had volunteered for previous studies in our laboratory and was thus familiar with participating in ASL experiments and with the research team. To obtain informed consent for the present MEG study, a research assistant, who is a deaf native signer highly experienced in interacting with late L1 learners, deaf aphasics, and deaf children in research settings, explained the goals, protocol, and potential risks and benefits of the present experiment to Martin. She did so face-to-face using simple ASL, gesture, and facial expression (to convey the potential emotions of fear, boredom, or confusion), emphasizing that he was free to decline or stop the experiment at any time. This explanation included photographs of a person sitting in the MEG, sample videos of the stimuli, and an example of the task. Throughout this explanation, the research assistant probed Martin for comprehension. After this explanation, Martin stated in ASL his willingness to participate in the study. His agreement was witnessed by another experimenter who was also highly proficient in ASL and in communicating with late L1 learners. It was explained to Martin that his signature on the written consent form would certify his willingness to participate. He was given a copy of the signed consent form, which included the English text of the experimental protocol. After completing the study, Martin stated that he had enjoyed it and was eager to participate in future studies.

2.1.4 Control groups and previous case studies

For the present study, we compare the neuroimaging results for Martin to those of two control groups and two previous case studies of adolescent L1 learners. The two control groups were deaf native signers who acquired sign language from birth and young hearing adults who learned ASL as an L2. Unlike Martin, both control groups experienced language from birth. The deaf native signers serve as a baseline indicating how ASL is processed in the deaf brain when it is acquired from birth. The L2 signers serve as a second baseline indicating how ASL is processed in the hearing brain when it is learned as an L2 in late adolescence or young adulthood and full proficiency has not yet been attained. Like Martin, the L2 learners began to learn ASL in late adolescence or young adulthood, but unlike Martin, they first experienced language (English) from infancy. We also compare his neuroimaging results to those of two previously studied individuals whose language experience began in adolescence, at the ages of 13 and 14. The adolescent L1 learners show how the adolescent brain processes language after 2 to 4 years of language immersion, and again after 3 to 5 years of language experience. The results from the adolescent learners and control groups have been reported in detail elsewhere (Ferjan Ramirez, Leonard, Davenport, et al., 2014; Ferjan Ramirez, Leonard, Torres, et al., 2014) and are reported here only insofar as they illuminate the present case study results.

2.1.5 Deaf native signers

Twelve healthy right-handed congenitally deaf native signers (6 F, 17–36 years) with no history of neurological or psychological impairment were recruited for participation. All had severe to profound hearing loss from birth and acquired ASL from their deaf parents.

2.1.6 Hearing L2 signers

Eleven hearing native English speakers also participated (10 F; 19–33 years). All the L2 signers were healthy adults with normal hearing and no history of neurological or psychological impairment. All had completed four to five academic quarters (40 to 50 weeks) of college-level ASL instruction and used ASL on a regular basis at the time of the study. Participants completed a self-assessment questionnaire rating their ASL proficiency on a scale from 1 to 10, where 1 meant “not at all” and 10 meant “perfectly”. The average score was 7.1 ± 1.2 for ASL comprehension and 6.5 ± 1.9 for ASL production. In an ongoing study of ASL comprehension using the simple-to-complex range of ASL structures described above, hearing L2 learners similar to those in the present study tend to make errors on verb morphology and on complex inter-sentential structures involving pronouns, but otherwise perform accurately.

2.1.7 Adolescent L1 learners

The two adolescent L1 learners were born severely to profoundly deaf and experienced minimal language during childhood due to varying circumstances. Like the present case study, Carlos was born into a hearing family in rural Mexico, did not attend school, and had little language experience throughout childhood. Unlike the present case study, however, Carlos’s language experience began at a younger age, 13;8 (years;months), when he immigrated to the USA with his family. Shawna was born deaf in the USA and kept at home with hearing caretakers who neither knew nor used any sign language with her. Like Carlos, she had minimal language experience until the age of 14;7. Carlos and Shawna first experienced language through ASL immersion in the same group environment where most individuals were deaf and everyone used ASL. At the time of the first study, they had 3 and 2 years of linguistic experience respectively. At the time of the follow-up study, they had 4 and 3 years of linguistic experience respectively.

2.2 Task and Stimuli

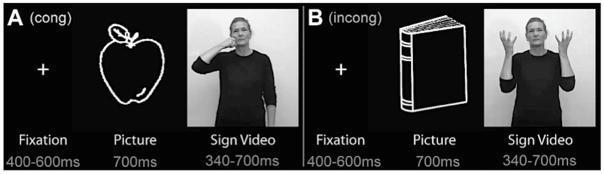

Martin performed a semantic decision task designed to evoke and modulate the N400, an event-related neural response occurring between 200 and 600 ms after the onset of meaningful stimuli (Kutas & Federmeier, 2000; Kutas & Hillyard, 1980), or its MEG counterpart, the N400m (Halgren et al., 2002). In our previous studies, the control participants and the adolescent learners performed the same task. While MEG was recorded, participants saw a line drawing of an object for 700 ms, followed immediately by a sign (mean length: 515.3 ms; range: 340–700 ms) that either matched (congruent; for example, “cat-cat”) or mismatched (incongruent; for example, “cat-ball”) the picture in meaning (Fig. 1). To measure accuracy and maintain attention, participants pressed a button when the sign matched the picture. Response hand was counterbalanced across blocks within participants. Responding only to congruent trials makes the task easy to perform, although it could potentially produce differences between the neural responses to the congruent and incongruent conditions. However, previous analyses indicate that the neural response to the button press does not affect the N400 semantic congruity effect (Ferjan Ramirez, Leonard, Torres, et al., 2014; Travis et al., 2011). Critically, each sign occurred twice, once in the congruent condition and once in the incongruent condition, with half the signs occurring first in the congruent condition and half first in the incongruent condition. Because each stimulus served as its own control, this design allowed the effects of stimulus differences to be rigorously dissociated from those of congruency. The large number of stimuli made it possible to obtain statistically significant results within an individual participant.
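The counterbalancing constraints described above can be illustrated with a short Python sketch. It is not the authors’ list-construction procedure; the function name `build_trials` is hypothetical, and the sketch assumes unique item names, assigns incongruent pictures by a cyclic shift over a shuffled item list (so every picture also appears once in each condition, as in Figure 1), and places all first presentations before all second presentations.

```python
import random


def build_trials(signs, seed=0):
    """Hypothetical sketch: each sign appears once congruent, once incongruent.

    Half the signs are presented first in the congruent condition and half
    first in the incongruent condition. Incongruent pictures come from a cyclic
    shift of a shuffled item list, so no sign is paired with its own picture
    and every picture is used exactly once in each condition.
    """
    if len(signs) < 2:
        raise ValueError("need at least two items to build mismatches")
    rng = random.Random(seed)
    shuffled = list(signs)
    rng.shuffle(shuffled)
    # Cyclic shift: item i is mismatched with the picture of item i + 1.
    mismatch = {s: shuffled[(i + 1) % len(shuffled)] for i, s in enumerate(shuffled)}
    congruent_first = set(shuffled[: len(shuffled) // 2])

    first_pass, second_pass = [], []
    for sign in signs:
        cong = {"picture": sign, "sign": sign, "condition": "congruent"}
        incong = {"picture": mismatch[sign], "sign": sign, "condition": "incongruent"}
        if sign in congruent_first:
            first_pass.append(cong)
            second_pass.append(incong)
        else:
            first_pass.append(incong)
            second_pass.append(cong)

    # Randomize order within each pass; first presentations precede repeats.
    rng.shuffle(first_pass)
    rng.shuffle(second_pass)
    return first_pass + second_pass
```

Because every sign contributes one trial to each condition, an incongruent-minus-congruent average compares responses to physically identical sign videos.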

Figure 1. Schematic diagram of task design.

Each picture and sign appeared in both the congruent (A) and incongruent (B) conditions. Averages of congruent versus incongruent trials compared responses to the same stimuli.

Before the study began, we ensured that Martin was familiar with the ASL stimulus signs, was able to perform the task, was comfortable with the scanner, and knew how to signal that he wished to stop the experiment.

All stimulus signs were imageable, concrete nouns selected from ASL developmental inventories (Anderson & Reilly, 2002; Schick, 1997), picture naming data for English (Bates et al., 2003), and a previous in-depth study of the adolescent learners’ lexical acquisition (Ferjan Ramirez, Lieberman, & Mayberry, 2013). A panel of 6 deaf and hearing fluent signers judged each potential stimulus sign for accurate production and familiarity. Fingerspelling, compound nouns, verbs, and classifier constructions were excluded. Each sign video was edited to begin when all phonological parameters (handshape, location, movement, orientation) were in place and to end when the movement was completed. Each stimulus sign appeared in both the congruent and incongruent conditions. If a trial from one condition was rejected due to artifacts in the MEG signal, the corresponding trial from the other condition was also eliminated, to ensure that sensory processing across the congruent and incongruent trials included in the averages was identical. The native signers saw 6 blocks of 102 trials each, and the L2 signers saw 3 blocks of 102 trials each because they were also scanned on the same task in the auditory and written English modalities, with 3 blocks for each (Leonard et al., 2013). Our previous work with MEG sensor data and aMEG analyses indicates that 300 trials (150 in each condition) are sufficient to capture clean and reliable single-participant responses. Like the native signers and the previous case studies, Martin saw 6 blocks of 102 trials. Before scanning began, he performed a practice run in the scanner using a separate set of stimuli that was not part of the experiment. Martin understood the task and required no repetitions of the practice block in the MEG.
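The cross-condition balancing rule can be expressed as a short function. In this sketch, the name `balance_rejections` and the representation of each sign as a pair of trial identifiers are illustrative assumptions, not part of the original pipeline.

```python
def balance_rejections(pairs, rejected):
    """Drop both members of a congruent/incongruent pair if either was rejected.

    pairs:    dict mapping each sign to (congruent_trial_id, incongruent_trial_id)
    rejected: set of trial ids flagged for artifacts
    Returns the kept congruent ids and kept incongruent ids in matching order,
    so both condition averages are built from the same set of signs.
    """
    kept_congruent, kept_incongruent = [], []
    for congruent_id, incongruent_id in pairs.values():
        if congruent_id in rejected or incongruent_id in rejected:
            continue  # discard the partner trial too
        kept_congruent.append(congruent_id)
        kept_incongruent.append(incongruent_id)
    return kept_congruent, kept_incongruent
```

For example, rejecting only the incongruent “ball” trial also removes the congruent “ball” trial from the congruent average.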

2.3 Procedure

In previous research with ASL signs, we observed a typical N400m effect, measured as the difference in the magnitude of the neural response evoked by congruent versus incongruent trials (Ferjan Ramirez, Leonard, Torres, et al., 2014). In the present study, we estimated the cortical generators of this semantic effect using anatomically constrained magnetoencephalography (aMEG), a non-invasive neurophysiological technique that combines MEG and high-resolution structural MRI (Dale et al., 2000). MEG was recorded in a magnetically shielded room (IMEDCO-AG, Switzerland) with the head in a Neuromag Vectorview helmet-shaped dewar containing 102 magnetometers and 204 planar gradiometers (Elekta AB, Helsinki, Finland). Data were collected at a continuous sampling rate of 1000 Hz with minimal filtering (0.1 to 200 Hz). The positions of four non-magnetic coils affixed to the participants’ heads were digitized along with the main fiducial points, such as the nose, nasion, and preauricular points, for subsequent co-registration with high-resolution MRI images. Structural MRI was acquired on the same day after MEG, and participants were allowed to rest or sleep in the MRI scanner.

Using aMEG we have previously found that, when experienced from birth, ASL is processed in a left fronto-temporal brain network (Leonard et al., 2013), similar to the network used by hearing subjects to understand speech, consistent with other neuroimaging studies (MacSweeney et al., 2006; MacSweeney et al., 2002; Mayberry et al., 2011; Petitto et al., 2000; Sakai et al., 2005).

2.4 Anatomically Constrained MEG (aMEG) Analysis

The data were analyzed using a multimodal imaging approach that constrains the MEG activity to the cortical surface as determined by high-resolution structural MRI (Dale et al., 2000). This noise-normalized minimum norm inverse technique has been used extensively across a variety of paradigms, particularly language tasks that benefit from a distributed source analysis (Leonard et al., 2010; Marinkovic et al., 2003), and has been validated by direct intracranial recordings (Halgren et al., 2002; McDonald et al., 2010).

Martin’s cortical surface was reconstructed from a T1-weighted structural MRI using FreeSurfer (http://surfer.nmr.mgh.harvard.edu/). A boundary element method forward solution was derived from the inner skull boundary (Oostendorp & Van Oosterom, 1992), and the cortical surface was downsampled to approximately 2500 dipole locations per hemisphere (Dale, Fischl, & Sereno, 1999; Fischl, Sereno, Tootell, & Dale, 1999). The orientation-unconstrained MEG activity of each dipole was estimated every 4 ms, and the noise sensitivity at each location was estimated from the average pre-stimulus baseline from −190 to −20 ms. aMEG was performed on the waveforms produced by subtracting congruent from incongruent trials.
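For readers unfamiliar with noise-normalized minimum-norm estimation, the NumPy sketch below shows the core computation in the spirit of Dale et al. (2000), under simplifying assumptions that are ours rather than the authors’: an identity source covariance, a regularization parameter derived from an assumed SNR of 3, three consecutive gain-matrix columns per cortical location (the orientation-unconstrained case), noise covariance taken from the baseline samples of the averaged difference wave, and the 4 ms step implemented by plain subsampling. The function `noise_normalized_mne` and its parameters are hypothetical; this is not the software used in the study.

```python
import numpy as np


def noise_normalized_mne(G, incongruent, congruent, times,
                         baseline=(-0.190, -0.020), step=4, snr=3.0):
    """dSPM-style noise-normalized minimum-norm estimate (illustrative sketch).

    G           : (n_sensors, 3 * n_locations) gain matrix; three consecutive
                  columns hold the three orthogonal dipoles of one location
    incongruent, congruent : (n_sensors, n_times) averaged evoked responses
    times       : (n_times,) latencies in seconds, sampled at 1000 Hz
    Returns (n_locations, n_samples) noise-normalized power and its latencies.
    """
    # Difference wave (incongruent minus congruent), subsampled every `step`
    # samples; a production pipeline would low-pass filter before decimating.
    diff = (incongruent - congruent)[:, ::step]
    t = times[::step]
    n_sensors = G.shape[0]
    n_loc = G.shape[1] // 3

    # Noise covariance: uncentered second moment of the baseline samples.
    base = diff[:, (t >= baseline[0]) & (t <= baseline[1])]
    C = base @ base.T / base.shape[1]

    # Minimum-norm operator W = G^T (G G^T + lambda^2 C)^-1, with C lightly
    # regularized and rescaled to the trace of G G^T for a stable inverse.
    GGt = G @ G.T
    C_reg = C + 1e-6 * np.trace(C) / n_sensors * np.eye(n_sensors)
    lam2 = 1.0 / snr**2
    A = GGt + lam2 * (np.trace(GGt) / np.trace(C_reg)) * C_reg
    W = np.linalg.solve(A, G).T  # A is symmetric, so this equals G^T A^-1

    # Noise sensitivity per location: trace of the 3x3 block of W C W^T.
    noise_var = np.einsum("ij,jk,ik->i", W, C, W).reshape(n_loc, 3).sum(axis=1)

    # Power summed over the three dipole orientations, normalized by noise.
    J = W @ diff
    power = (J**2).reshape(n_loc, 3, -1).sum(axis=1)
    return power / noise_var[:, None], t
```

Because the noise covariance here is the uncentered second moment of the baseline samples, the normalized values average exactly 1 over the baseline at every location, which serves as a convenient sanity check; post-stimulus values well above 1 indicate activity exceeding the baseline noise.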

The data were inspected for bad channels (channels with excessive noise, no signal, or unexplained artifacts), which were excluded from further analyses. Additionally, trials with large (>3000 fT/cm for gradiometers) transients were rejected. Blink artifacts were removed using independent components analysis (Delorme & Makeig, 2004) by pairing each MEG channel with the electrooculogram (EOG) channel and rejecting the independent component that contained the blink. For Martin, fewer than 3% of trials were rejected due either to artifacts or to cross-condition balancing. For native signers, fewer than 3% of trials were rejected; for L2 signers, fewer than 2% were rejected.
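The transient criterion can be sketched as a simple threshold on gradiometer epochs. The text does not state how a transient was measured; this sketch assumes the peak-to-peak amplitude within each epoch on any gradiometer channel, a common convention, and the function name `reject_transients` is hypothetical.

```python
import numpy as np


def reject_transients(grad_epochs, threshold=3000.0):
    """Return a boolean mask of trials to keep.

    grad_epochs : (n_trials, n_channels, n_times) gradiometer data in fT/cm
    Assumption: a trial is rejected when the peak-to-peak amplitude on any
    gradiometer channel exceeds `threshold`.
    """
    peak_to_peak = grad_epochs.max(axis=2) - grad_epochs.min(axis=2)
    return ~(peak_to_peak > threshold).any(axis=1)
```

The proportion of rejected trials reported above then corresponds to `1 - keep.mean()` after this mask is combined with the cross-condition balancing step.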

Individual participant aMEG movies were constructed from the averaged data in the trial epoch for each condition, using only data from the gradiometers. These data were combined across participants by taking the mean activity at each vertex on the cortical surface and plotting it on the FreeSurfer fsaverage brain (version 450) at each latency. Vertices were matched across participants by morphing the reconstructed cortical surfaces onto a common sphere, optimally matching gyral-sulcal patterns and minimizing shear (Fischl et al., 1999; Sereno, Dale, Liu, & Tootell, 1996). All statistical comparisons were made on region of interest (ROI) time courses, which were selected based on the average incongruent-minus-congruent subtraction across all participants (see Table 2).
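Once every participant’s cortical surface has been morphed onto the common template, group averaging and ROI extraction reduce to simple array operations. The sketch assumes the per-participant aMEG values are already expressed on the template (vertex indices shared across participants); the function names are hypothetical.

```python
import numpy as np


def grand_average(subject_maps):
    """Mean activity at each template vertex across participants.

    subject_maps : (n_subjects, n_vertices, n_times) aMEG values, already
    morphed to a common surface so vertex v is the same location for everyone.
    """
    return subject_maps.mean(axis=0)


def roi_timecourse(subject_maps, roi_vertices):
    """Per-participant ROI time course: mean over the ROI's vertices."""
    return subject_maps[:, roi_vertices, :].mean(axis=1)
```

Statistics are then computed on the `(n_subjects, n_times)` ROI time courses rather than vertex by vertex.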

Table 2.

ROI analyses of normalized aMEG values for the N400m effect (subtraction of congruent from incongruent trials)

a) Martin, mean

| ROI | LH | RH |
|---|---|---|
| AI: Anterior Insula | 0.13 | 0.13 |
| IFG: Inferior Frontal Gyrus | 0.20 | 0.22 |
| IPS: Intraparietal Sulcus | 0.51 | 0.31 |
| IT: Inferior Temporal Cortex | 0.13ᵃ | 0.15 |
| LOT: Lateral Occipital Temporal Cortex | 0.19 | 0.32 |
| PT: Planum Temporale | 0.26 | 0.31 |
| STS: Superior Temporal Sulcus | 0.14ᵃ | 0.32 |
| TP: Temporal Pole | 0.15 | 0.12ᵇ |
| pSTS: posterior Superior Temporal Sulcus | 0.39 | 0.31 |

b) Control groups, mean (SD)

| ROI | Native LH | Native RH | L2 LH | L2 RH |
|---|---|---|---|---|
| AI | 0.39 (0.14) | 0.40 (0.18) | 0.33 (0.12) | 0.36 (0.13) |
| IFG | 0.29 (0.12) | 0.30 (0.12) | 0.26 (0.12) | 0.28 (0.14) |
| IPS | 0.37 (0.10) | 0.32 (0.13) | 0.36 (0.13) | 0.28 (0.08) |
| IT | 0.43 (0.12) | 0.35 (0.11) | 0.36 (0.13) | 0.34 (0.18) |
| LOT | 0.29 (0.12) | 0.29 (0.10) | 0.30 (0.16) | 0.32 (0.15) |
| PT | 0.54 (0.14) | 0.45 (0.17) | 0.45 (0.16) | 0.43 (0.18) |
| STS | 0.43 (0.08) | 0.41 (0.18) | 0.32 (0.09) | 0.36 (0.16) |
| TP | 0.45 (0.16) | 0.46 (0.15) | 0.34 (0.14) | 0.38 (0.16) |
| pSTS | 0.33 (0.09) | 0.27 (0.07) | 0.34 (0.16) | 0.32 (0.15) |

c) Adolescent learners

| ROI | Carlos LH | Carlos RH | Shawna LH | Shawna RH |
|---|---|---|---|---|
| AI | 0.27 | 0.43 | 0.38 | 0.43 |
| IFG | 0.26 | 0.31 | 0.34 | 0.60ᵃ |
| IPS | 0.31 | 0.54ᵃ | 0.41 | 0.66ᵃ ᵇ |
| IT | 0.50 | 0.43 | 0.41 | 0.27 |
| LOT | 0.43 | 0.57ᵃ | 0.17 | 0.29 |
| PT | 0.33 | 0.57 | 0.52 | 0.33 |
| STS | 0.26 | 0.40 | 0.47 | 0.23 |
| TP | 0.42 | 0.51 | 0.27 | 0.20 |
| pSTS | 0.26 | 0.47ᵃ | 0.35 | 0.54ᵃ |

Notes for Martin (panel a): ᵃ 2.5 SD (p = .0124) from Native mean; ᵇ 2.0 SD (p = .0234) from Native mean.

Notes for the adolescent learners (panel c): ᵃ 2.5 SD from Native mean; ᵇ 2.5 SD from L2 mean.
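The Table 2 footnotes flag individual ROI values that lie 2.0 or 2.5 SD from a control-group mean. A minimal sketch of that comparison, assuming a z-score relative to the control mean and SD with a two-tailed normal p-value (the exact test behind the footnote p-values is not stated; this approximation reproduces p = .0124 for 2.5 SD). For example, Martin’s left STS value of 0.14 against the native signers’ 0.43 (0.08) gives z = (0.14 − 0.43) / 0.08 ≈ −3.6. The function name is hypothetical.

```python
import math


def case_vs_controls(case_value, control_mean, control_sd):
    """z-score of a single case relative to a control group, with a
    two-tailed p-value under a normal approximation (an assumption)."""
    z = (case_value - control_mean) / control_sd
    p_two_tailed = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return z, p_two_tailed


z, p = case_vs_controls(0.14, 0.43, 0.08)  # Martin, left STS vs. native signers
```

With only 11 or 12 controls per group, the sample mean and SD are themselves uncertain, so a normal approximation is likely somewhat liberal for single-case comparisons.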

3.0 Results

We first report Martin’s behavioral and neuroimaging results and compare them with those of the two control groups (deaf native signers and hearing L2 signers). We then compare his results with those of the two previously studied adolescent L1 learners.

3.1 Behavioral Task Results

Martin performed the picture-sign matching task in the scanner with high accuracy (89%) and fast reaction times (737 ms), comparable to those of the L2 signer control group (89%, 720 ms), indicating that the stimulus signs were well selected and within his vocabulary. His performance was somewhat less accurate and slower than that of the native signer control group (94%, 619 ms; Table 1). Martin’s high performance levels indicate that any differences in neural activation patterns to the signed stimuli between him and the control groups cannot be attributed to differences in task performance.
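Because participants pressed the button only on congruent trials, accuracy combines responses from both conditions. The scoring rule below is one plausible convention and is an assumption, not a documented procedure: a congruent trial counts as correct when a press occurred, an incongruent trial when no press occurred, and RT is summarized over congruent trials with a press. The function name and trial format are hypothetical.

```python
from statistics import mean, stdev


def score_go_nogo(trials):
    """Score a press-on-match task (assumed convention).

    trials : list of (condition, pressed, rt_ms) tuples, where rt_ms is None
             when no button press occurred.
    Returns (accuracy, mean RT, SD of RT); RT uses congruent trials with a press.
    """
    correct = [(condition == "congruent") == pressed for condition, pressed, _ in trials]
    rts = [rt for condition, pressed, rt in trials if condition == "congruent" and pressed]
    return sum(correct) / len(trials), mean(rts), stdev(rts)
```

Under this convention, a false alarm on an incongruent trial lowers accuracy but does not enter the RT average.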

Table 1.

Participant background and task performance: mean accuracy and RT (SD). Ages are given in years or years;months.

| Participant(s) | Gender | Age | Age of Language Onset | Age of ASL Acquisition | Accuracy | RT (ms) |
|---|---|---|---|---|---|---|
| Martin | M | 51 | 21 | 23 | .89 | 762 (174) |
| Native signers | 6M, 6F | 30 (6.4) | Infancy | Infancy | .94 (.04) | 619 (97.5) |
| L2 signers | 1M, 6F | 22;5 (3.8) | Infancy | 20 (3.9) | .89 (.05) | 720 (92.7) |
| Shawna | F | 16;9 | 14;7 | 14;7 | .84 | 812 (210) |
| Carlos | M | 16;10 | 13;8 | 13;8 | .85 | 731 (244) |

3.2 Anatomically-constrained MEG (aMEG) Results

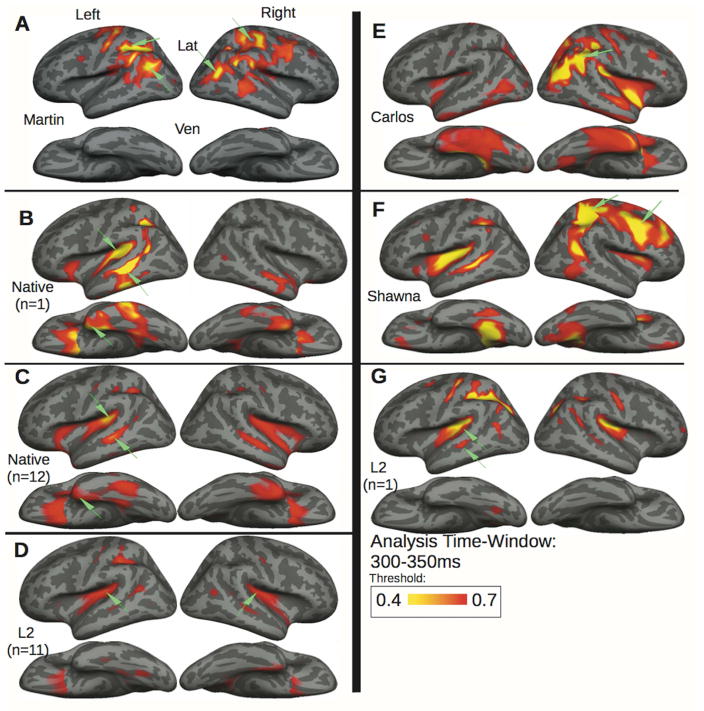

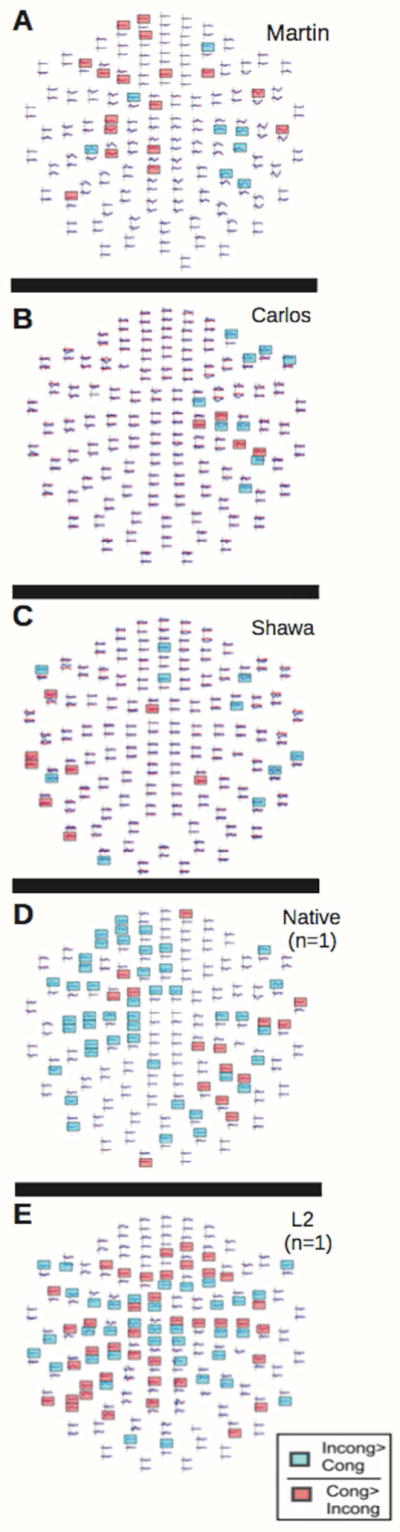

We examined aMEG responses to ASL signs at the group level (two control groups) and at the individual level (Martin and two representative control participants; Martin and the two previously studied adolescent learners) from 300–350ms post-sign onset, a time window during which lexico-semantic encoding is known to occur in spoken and sign languages (Ferjan Ramirez et al., 2016; Kutas & Federmeier, 2011; Kutas & Hillyard, 1980; Leonard et al., 2012; Marinkovic et al., 2003). Across studies, the N400 occurs in a broad 200–600ms post-stimulus time window (Kutas & Federmeier, 2000, 2011). In previous studies of lexico-semantic processing using spoken, written, and sign language stimuli, we have observed the onset of this effect at approximately 220ms post-stimulus, with peak activity occurring slightly before 400ms post-stimulus. We selected the 300–350ms post-stimulus time window because we have previously observed that the semantic effect in picture-priming paradigms with spoken and signed stimuli is strongest in this window (Leonard et al., 2012; Travis et al., 2011). The same was true for Martin and the previously studied adolescent learners (Ferjan Ramirez, Leonard, Torres, et al., 2014).
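To make the windowed measure concrete, the following minimal sketch averages a difference wave (incongruent minus congruent) over the 300–350ms window. It assumes uniformly sampled data and an epoch that starts at a known time relative to sign onset; the function name `window_mean`, the 1000 Hz sampling rate, and the 100 ms pre-onset epoch start are hypothetical values chosen for illustration, not parameters reported in this study.

```python
def window_mean(samples, sfreq_hz, epoch_start_ms, win_start_ms=300.0, win_end_ms=350.0):
    """Average the samples whose time stamps fall inside [win_start_ms, win_end_ms].

    samples: uniformly sampled values (e.g., an incongruent-minus-congruent difference wave).
    sfreq_hz: sampling rate in Hz (hypothetical; not reported here).
    epoch_start_ms: time of the first sample relative to sign onset (e.g., -100.0).
    """
    selected = []
    for i, value in enumerate(samples):
        t_ms = epoch_start_ms + 1000.0 * i / sfreq_hz  # time of sample i relative to onset
        if win_start_ms <= t_ms <= win_end_ms:
            selected.append(value)
    if not selected:
        raise ValueError("analysis window lies outside the epoch")
    return sum(selected) / len(selected)


if __name__ == "__main__":
    # Hypothetical example: 1000 Hz sampling, epoch starting 100 ms before sign onset.
    incongruent = [0.0] * 700
    congruent = [0.0] * 700
    for i in range(400, 451):  # samples falling at 300-350 ms after onset
        incongruent[i] = 2.0
        congruent[i] = 0.5
    difference = [a - b for a, b in zip(incongruent, congruent)]
    print(window_mean(difference, sfreq_hz=1000.0, epoch_start_ms=-100.0))  # prints 1.5
```

Averaging within a fixed window trades temporal detail for a single, stable value per condition or contrast, which is what the ROI and z-score comparisons below operate on.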

To directly compare the strength of semantically modulated neural activity in Martin with that of the control groups, we first considered the neural activation patterns in nine bilateral regions of interest (ROIs). ROIs were selected by inspecting aMEG movies of grand-average activity across the whole brain of 25 participants (all 12 native signers, all 11 L2 signers, and the two adolescent learners). These movies represent the signal-to-noise ratio (SNR), computed as the F-ratio of explained to unexplained variance. The strongest clusters of neural activity across all participants and conditions were selected for statistical comparison, yielding empirically derived ROIs that were independent of our predictions (Ferjan Ramirez, Leonard, Torres, et al., 2014).

Martin's normalized aMEG values for the incongruent-minus-congruent subtraction were then compared with those of the control groups and the adolescent learners in these previously established ROIs (Table 2). We defined as significant those ROIs in which Martin's aMEG values were at least 2.5 standard deviations from the mean of a control group. We applied this conservative threshold (a z-score of 2.5 corresponds to a two-tailed p-value of .0124) to account for the comparisons across multiple ROIs.
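The threshold arithmetic can be checked with a short sketch. It converts an individual ROI value into a z-score relative to a control group and derives the two-tailed p-value for the 2.5 SD criterion. Whether the original analysis used the sample (n − 1) or the population standard deviation is not stated, so the use of `statistics.stdev` is an assumption, as are the helper names and the example values.

```python
import math
import statistics

Z_CRITERION = 2.5  # criterion stated in the text


def two_tailed_p(z):
    """Two-tailed p-value for a standard-normal z-score: P(|Z| >= |z|)."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def roi_z_score(individual_value, group_values):
    """z-score of one participant's ROI value relative to a control group (hypothetical helper)."""
    mean = statistics.mean(group_values)
    sd = statistics.stdev(group_values)  # sample SD (n - 1); an assumption
    return (individual_value - mean) / sd


def exceeds_criterion(z, criterion=Z_CRITERION):
    """True if the participant lies at least `criterion` SDs from the group mean, in either direction."""
    return abs(z) >= criterion


if __name__ == "__main__":
    print(round(two_tailed_p(Z_CRITERION), 4))  # prints 0.0124, matching the value in the text
    z = roi_z_score(0.20, [0.40, 0.45, 0.50, 0.42, 0.48])  # made-up values for illustration
    print(round(z, 2), exceeds_criterion(z))
```

Testing the absolute value of z flags an individual who is unusually low as well as unusually high, which matches the text's use of the criterion for ROIs where Martin showed less activity than the controls.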

Using this ROI approach, Martin exhibited significantly less activity than the native signer group in three ROIs (Table 2): the left inferior temporal cortex, the left superior temporal sulcus, and the right temporal pole. Compared with the L2 signers, Martin showed a trend toward less activity in the left inferior temporal cortex and right anterior insula that did not reach our conservative significance threshold.

Because the ROIs were based on the grand average of the participants in our previous studies (both control groups and the adolescent learners), some brain areas that were strongly activated in Martin may not have been captured by the previously selected ROIs. To investigate this possibility, we analyzed activations across the entire brain surface, which allowed us to focus on Martin's individual neural activation patterns. First, we compared the aMEGs associated with the incongruent vs congruent contrast in Martin with those of the control groups and of two individual control participants. We then examined whether differences between the congruent and incongruent conditions were due to larger signals in one direction or the other by examining the MEG sensor-level data directly. We examined the planar gradiometers because, unlike other MEG sensors, they are most sensitive to the immediately underlying cortex.

The aMEG maps in Figure 2 represent the strength of the congruent-incongruent activity across the whole brain for Martin (panel A), for two representative control participants, a deaf native signer (panel B) and a hearing L2 signer (panel G), and for both control groups (panel C, deaf native signers; panel D, hearing L2 signers). The aMEG maps are a measure of SNR; areas shown in yellow and red are brain regions where the SNR exceeds baseline. The maps are normalized within each control group or individual, allowing a qualitative comparison of overall congruent-incongruent activity patterns.

Figure 2. Semantic activation patterns to picture-primed signs exhibited by Martin compared with the adolescent learners Carlos and Shawna, a representative deaf native signer and hearing L2 signer, and the deaf native signer and hearing L2 control group averages (see Table 1). During semantic processing (300–350ms), Martin (A) shows the strongest effect in occipito-parietal cortex bilaterally, similar to the right-hemisphere effects shown by Carlos (E) and Shawna (F); Shawna also shows additional left superior temporal and right frontal activity. A representative deaf native signer (B) and hearing L2 signer (G) both exhibit semantic effects in left fronto-temporal language areas, similar to the control group averages for the native signers (C) and the L2 signers (D).

Consistent with other neuroimaging studies of sign language, we have previously found that, in deaf native signers, signed words elicit activity in a left-lateralized fronto-temporal network including the temporal pole (TP), planum temporale (PT), and superior temporal sulcus (STS), and to a lesser extent in the homologous right hemisphere areas (Fig. 2, panel C; data from Ferjan Ramirez, Leonard, Torres, et al., 2014). Consistent with previous studies of L2 acquisition (Abutalebi, 2008), this canonical language network was also activated in L2 signers (Fig. 2, panel D; data from Leonard et al., 2012).

The same left-lateralized fronto-temporal activations are observed in the aMEG maps of the two individual control participants (Fig. 2, panels B and G). The normalized aMEG values of these two participants were also compared with the average aMEG values of their respective groups in each of the 18 ROIs (nine per hemisphere; Table 2), and no significant differences were found (i.e., in no ROI was an individual control participant more than 2.5 standard deviations from the respective group mean). Thus, in control participants whose post-natal brain maturation was synchronous with the onset of linguistic experience (deaf native and hearing L2 signers), we find activations in the canonical language network of the left hemisphere at both the individual and the group level.

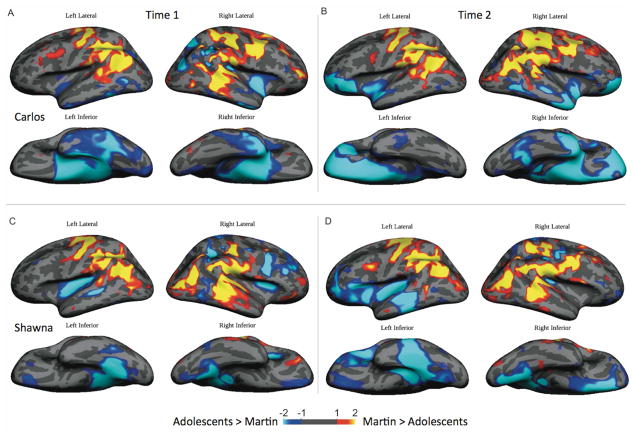

Consistent with his understanding of the stimulus signs (Table 1) and of simple sentences (behavioral results given above), Martin exhibited a semantic modulation effect, the N400 effect. MEG channels with significant semantic effects are highlighted in red and blue in Figure 3 for Martin (panel A) and for two representative control participants, a native signer (panel D) and an L2 signer (panel E). Using a random-effects resampling procedure (Maris & Oostenveld, 2007), we determined in which MEG channels the incongruent>congruent and congruent>incongruent effects were significant (p < .01). Channels with significant congruent>incongruent activity are shown in red, and channels with significant incongruent>congruent activity are shown in blue.
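The resampling procedure of Maris and Oostenveld (2007) is a cluster-based permutation test across channels and time points. As a much-simplified sketch of its core logic only, the code below shuffles trial labels for a single channel and asks how often a shuffled difference between condition means is at least as large as the observed one. The clustering step is omitted, and the per-trial inputs, the number of permutations, the seed, and the function name are assumptions for illustration, not the settings used in this study.

```python
import random
import statistics


def permutation_p_value(cond_a, cond_b, n_permutations=5000, seed=0):
    """Two-sided Monte Carlo permutation p-value for a difference between condition means.

    cond_a, cond_b: per-trial values for one channel (e.g., amplitudes averaged over 300-350 ms).
    """
    rng = random.Random(seed)
    observed = abs(statistics.mean(cond_a) - statistics.mean(cond_b))
    pooled = list(cond_a) + list(cond_b)
    n_a = len(cond_a)
    n_at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign trials to the two conditions
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        if diff >= observed:
            n_at_least_as_extreme += 1
    # Count the observed labeling as one permutation so that p is never exactly zero.
    return (n_at_least_as_extreme + 1) / (n_permutations + 1)


if __name__ == "__main__":
    incongruent = [1.2, 1.5, 1.1, 1.4, 1.6, 1.3, 1.5, 1.2]  # made-up trial amplitudes
    congruent = [0.4, 0.6, 0.5, 0.7, 0.3, 0.6, 0.5, 0.4]
    p = permutation_p_value(incongruent, congruent)
    print(p, p < 0.01)
```

Because the null distribution is built from the data themselves, no normality assumption is needed; the full cluster-based version additionally controls the family-wise error rate across the many channels and time points tested.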

Figure 3. Individual MEG sensor data.

Blue channels represent significant incongruent>congruent activity between 300 and 350ms; red channels represent significant congruent>incongruent effects in the same window. Martin (A) lacks a strong incongruent>congruent effect in left fronto-temporal regions and shows the strongest incongruent>congruent effects in right hemisphere channels (blue); Carlos (B) shows the strongest incongruent>congruent effects in right hemisphere channels (blue); Shawna (C) also shows the most incongruent>congruent effects in right occipito-temporo-parietal channels (blue). Statistical significance was determined by a random-effects resampling procedure (Maris & Oostenveld, 2007) and reflects time periods in which the incongruent and congruent conditions diverge at p < .01. The two representative control participants, native signer (D) and L2 signer (E), are the same individuals whose aMEGs are displayed in Fig. 2.

Simultaneous inspection of the MEG sensor data (Fig. 3, panel A) and the aMEGs (Fig. 2, panel A) shows that the localization of semantically modulated activity in Martin differed from that observed in the control participants. Martin exhibits semantically modulated activity (incongruent>congruent) in response to ASL signs primarily in right dorsolateral prefrontal and superior parietal cortex, with some bilateral activation. Like the previously studied adolescent L1 learners (Fig. 3, panels B, C), Martin shows more congruent>incongruent responses in his sensor map than the representative control participants do (Fig. 3, panels D, E). This suggests that the way in which primed signed words are semantically processed may be affected by a lack of childhood linguistic experience despite accurate recognition, a point to which we return below.

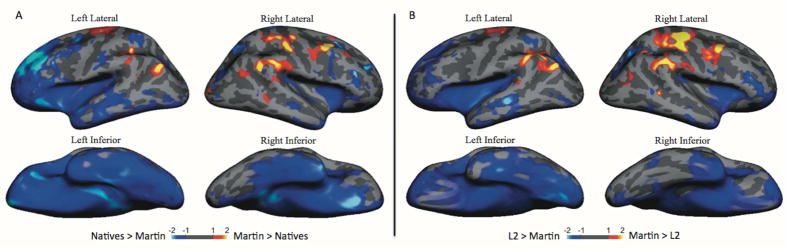

For the next step of the analyses, we created z-score maps of Martin's aMEG relative to each control group. Because the aMEG is calculated from the difference in activity evoked by congruently and incongruently primed signs and is always positive, large z-scores reflect areas where the magnitude of Martin's responses may be unusual; their polarity (congruent larger vs incongruent larger) is uncertain but can be inferred from the sensor data described above. Visual inspection of these comparisons suggests that Martin's neural activity for sign meaning was greater than that of the native signers (Fig. 4, panel A) and the L2 signers (Fig. 4, panel B), predominantly in right dorsolateral prefrontal and parieto-occipital cortex, with some bilateral activation. Native signers exhibited more activity than Martin in the left anterior insula, inferior temporal sulcus, PT, and STS, and in the right inferior temporal sulcus. L2 signers exhibited more activity than Martin in the same areas as the native signers, excluding the left anterior insula.
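A vertex-wise version of this comparison can be sketched as follows. Because the aMEG values are magnitudes, a positive z-score indicates only that the individual's modulation is larger than the group's and says nothing about polarity, which, as noted above, must be read from the sensor data. The vertex-list representation, the use of the sample SD, the 2.5 SD cut-off for the maps (borrowed from the ROI analysis; the figures do not state a map threshold), and the function names are all assumptions for illustration.

```python
import statistics


def z_score_map(individual, group_maps):
    """Vertex-wise z-scores of one participant's aMEG map relative to a group of maps.

    individual: one value per cortical vertex.
    group_maps: one list per group member, with the same vertex ordering as `individual`.
    """
    n_vertices = len(individual)
    if any(len(m) != n_vertices for m in group_maps):
        raise ValueError("all maps must share the same vertex ordering")
    z_values = []
    for v in range(n_vertices):
        values = [m[v] for m in group_maps]
        sd = statistics.stdev(values)  # sample SD across group members (assumption)
        if sd == 0:
            raise ValueError(f"zero variance at vertex {v}")
        z_values.append((individual[v] - statistics.mean(values)) / sd)
    return z_values


def label_vertices(z_values, threshold=2.5):
    """Label each vertex by which side shows the larger modulation magnitude (polarity unknown)."""
    labels = []
    for z in z_values:
        if z >= threshold:
            labels.append("individual > group")  # shown in yellow/red in Fig. 4
        elif z <= -threshold:
            labels.append("group > individual")  # shown in blue in Fig. 4
        else:
            labels.append(None)
    return labels


if __name__ == "__main__":
    group = [[2.0, 5.0, 1.0], [4.0, 6.0, 1.5], [6.0, 7.0, 2.0]]  # made-up maps, 3 vertices
    z = z_score_map([9.0, 3.0, 1.6], group)
    print(z)
    print(label_vertices(z))
```

Computing the statistic independently at each vertex keeps the sketch simple; with many thousands of vertices, some correction for multiple comparisons would be needed before reading any single vertex as reliably different.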

Figure 4.

Z-score maps showing brain areas where semantic modulation (regardless of polarity) is greater in Martin than in the control groups (yellow and red) and areas where it is greater in the control groups than in Martin (blue): (A) Martin vs deaf native signers; (B) Martin vs hearing L2 signers. Martin exhibits stronger activity than the control participants in multiple areas, most prominently in the right parietal cortex but also in the supramarginal gyrus and premotor cortex bilaterally.

In the last step of the analyses, we created z-score maps of Martin's responses relative to those of the two previously studied adolescent learners. Recall that Martin's brain matured without linguistic experience until young adulthood, at age 21. This temporal lag was smaller for Carlos and Shawna, whose language experience began in early adolescence (at 13;8 and 14;7, respectively; Table 1). Although Martin had accumulated 30 years of linguistic experience at the time of the present study, he did not exhibit the activations in the left and right inferior and superior temporal cortices observed in Carlos after 36 months of linguistic experience (Fig. 5, panel A). Nor did Martin exhibit the additional activation that Carlos showed in the left and right inferior frontal cortex after 52 months of linguistic experience (shown in blue in Fig. 5, panel B). Martin's activation patterns also contrasted with those of Shawna. After 24 months of language experience, and especially after 39 months, she exhibited left-lateralized temporal lobe and inferior frontal activations that were not apparent in Martin's activation patterns, even though he had used language for 30 years (shown in blue in Fig. 5, panels C, D).

Figure 5.

Z-score maps showing brain areas where semantic modulation is greater (regardless of polarity) in Martin compared with the previously studied adolescent L1 learners (yellow and red) and areas where semantic modulation is greater in the adolescent learners compared to Martin (blue): (A) Martin vs Carlos with an onset of linguistic experience at age 13 and 3 years of experience; (B) Martin vs Carlos with 5 years of experience; (C) Martin vs Shawna with an onset of linguistic experience at age 14 and 2 years of experience; (D) Martin vs Shawna with 4 years of experience.

4.0 Discussion

In this first study to neuroimage lexico-semantic processing in an individual who grew up without linguistic experience until young adulthood, we tested two alternative hypotheses about how the temporal relation between post-natal brain maturation and the onset of linguistic experience might affect development of the brain language system. If the canonical perisylvian language network develops functionality primarily through mechanisms of brain growth, independent of the temporal onset of linguistic experience, then Martin should have exhibited neural activation in response to signed words in the perisylvian language areas of the left and/or right hemisphere. This is not what we observed. Martin's neural activation in response to single signs in the 300 to 350 ms time window tended to be located outside the left and right perisylvian language areas. This result is not due to a lack of linguistic experience or poor performance in the scanner: he had used sign language for 30 years and performed the scanner task with accuracy and RTs comparable to those of the control group who learned ASL as an L2 at around the same age. It is important to note that the stimuli in the present experiment were single signed words, concrete and imageable nouns that young children acquire early, primed by line drawings. Martin's accurate scanner performance is thus consistent with his spontaneous expression of single signs. However, despite his accurate recognition of primed single signed words, Martin's neural activation patterns during this time window provide preliminary support for the alternative hypothesis, namely, that the perisylvian language network requires linguistic experience during post-natal maturation to develop functionality. This result may relate to Martin's limited syntactic ability.

The present results replicate and extend our previous case studies of post-natal brain maturation without linguistic experience. Martin exhibited neural activation patterns in response to signed words that were similar to those exhibited by two previously studied adolescents after they had experienced language for two to three years. First, Martin's neural activation patterns overlapped in localization and distribution with those of the adolescent learner Carlos. This overlap is noteworthy because Martin and Carlos shared a childhood family and cultural profile: both grew up as the only deaf member of a hearing family in rural Mexico without access to language or education. In contrast to Martin, Carlos began to experience language in adolescence and exhibited additional activation in the right perisylvian language areas after 38 months of language experience. After 53 months of language experience, Carlos further exhibited activation in the left perisylvian language areas.

Second, the adolescent L1 learner Shawna exhibited similar neural activation patterns in dorsolateral superior parietal and occipital areas after 26 months of language experience. Like Carlos, she also exhibited activation in perisylvian language areas, but bilaterally. After 41 months of continued language experience, she exhibited additional activation in the left perisylvian language areas. Thus, although Martin's neural activation patterns resembled those of the adolescent L1 learners after two to three years of linguistic experience, they were also distinctive: he exhibited limited activation in the perisylvian language areas of either hemisphere despite having ten times more linguistic experience than the adolescent L1 learners. These contrasting results provide preliminary and novel insights into the development of the brain language system.

Carlos and Shawna both initially exhibited glimmers of the expected neural activation pattern in response to signed words. As they gained more language experience, their responses to signed words became more prominent in the canonical brain language areas, particularly in the left temporal cortex. By contrast, in the 300 to 350 ms post-stimulus time window, Martin exhibited limited activation in the left or right perisylvian language areas despite 30 years of linguistic experience. The main difference between Martin and the previously studied cases is that his language experience began in young adulthood, whereas the language experience of Carlos and Shawna began in early adolescence. These contrasting results provide initial evidence that the canonical perisylvian language network retains some capacity to process language when linguistic experience begins during brain maturation, as in adolescence (Paus, 2005), but that this capacity is no longer available when language experience begins after brain maturation is nearly complete, in adulthood. Whether this phenomenon is caused by a loss of neurolinguistic processing capacity in the canonical brain language areas due to disuse or, alternatively, by a failure of the neural networks supporting neurolinguistic processing to fully develop in the absence of linguistic experience requires more research. Ongoing research in our laboratory on ASL sentence processing in Martin and other late L1 learners corroborates the present findings at the sentence level with another neuroimaging modality, fMRI.

To our knowledge, no other cases of post-natal brain maturation with minimal language have been neuroimaged. The well-known case of Genie, a hearing adolescent who began learning spoken English at the age of 13 after a childhood of social isolation (Curtiss, 1977), exhibited a strong left-ear advantage on several dichotic listening tests. This pattern suggested right hemisphere dominance for spoken word processing, even though she was right-handed (Fromkin, Krashen, Curtiss, Rigler, & Rigler, 1974). Because dichotic listening is a behavioral rather than a neural measure, this conclusion remains speculative. However, the right hemisphere has been shown to actively contribute to children's language processing, a contribution that appears to decrease with maturation (Berl et al., 2014). The right hemisphere is also involved when adults process their less proficient L2 as compared with their proficient L1 (Leonard et al., 2011). Although neural language processing is known to be bilateral for many language tasks, the right hemisphere may play a special role in learning to comprehend a less well-understood language, given its proclivity, relative to the left hemisphere, for processing coarse rather than fine-grained information and less predictable rather than highly predictable patterns (Federmeier, Wlotko, & Meyer, 2008).

It is notable that all three cases of adolescent or adult onset of linguistic experience exhibited neural activation in response to signed words in dorsolateral occipital and parietal areas, bilaterally for Martin and in the right hemisphere for Carlos and Shawna. These patterns contrast sharply with the well-documented neural response to signed words in the left perisylvian language areas of deaf native signers (MacSweeney et al., 2006; Mayberry et al., 2011; Petitto et al., 2000; Sakai et al., 2005). This left perisylvian pattern was exhibited by both control groups in the present study, each of which had an infant onset of linguistic experience: deaf native signers, whose infant language experience was with ASL in the visual and manual sensory-motor modality, and hearing L2 signers, whose infant language experience was with spoken English in the auditory and vocal sensory-motor modality (Ferjan Ramirez, Leonard, Torres, et al., 2014). This suggests that the superior parietal and occipital response to signed words may be a neural signature of linguistic processing in a brain that matured without language during childhood.

The question arises as to why areas in the superior parietal and occipital cortex of the right, and to some extent the left, dorsal stream respond to signed words when the brain matures without linguistic experience. One possibility is that these activation patterns arise from the deaf child's use of gesture to communicate in lieu of spoken language. However, in hearing adults, meaningful gestures activate brain areas associated with, but not identical to, language comprehension, including the left inferior frontal gyrus (Newman et al., 2015; Skipper, Goldin-Meadow, Nusbaum, & Small, 2007; Willems, Özyürek, & Hagoort, 2007) and the superior temporal sulcus (Holle, Gunter, Rüschemeyer, Hennenlotter, & Iacoboni, 2008). Given that the late learners' responses were instead located in dorsal parietal and occipital areas, this indicates that the onset of language experience relative to post-natal brain maturation affects brain organization for meaningful gesture as well as for language. Although meaningful gestures activate the left perisylvian language areas in hearing individuals, meaningless gestures do not. Instead, meaningless gestures activate the dorsal stream from occipital to superior parietal cortex, primarily in the right hemisphere (Decety & Grèzes, 1999; Decety et al., 1997). The ASL stimulus signs used in the present study were clearly meaningful to Martin, as evidenced by his high accuracy and fast RTs. However, his sensor map (Fig. 3) shows a greater neural response to picture-sign congruity than to incongruity. Whether this indicates that the link between meaning and sign form is more imagistic for Martin than for individuals who experienced language from birth requires further investigation.

Deaf children without access to spoken or signed language use idiosyncratic gestures known as homesign to communicate with their family members (Goldin-Meadow, 2005). But the term homesign may be a misnomer because it implies a consistency of articulatory form that is not observed in the hearing people who communicate with these deaf children: they neither share the gestures used by such deaf children nor fully comprehend them (Carrigan & Coppola, 2017; Goldin-Meadow, Mylander, de Villiers, Bates, & Volterra, 1984). Deaf children growing up under these circumstances must glean what communicative meaning they can from the unpredictable and ad hoc gesture forms, facial expressions, and body postures of the hearing individuals of their families. This lack of predictability between gesture form and meaning may have the effect of establishing occipital to superior parietal brain areas as the main neural pathway for extracting meaning from human actions. By contrast, infant language exposure creates phonological representations allowing infants to bind predictable articulatory forms to events and concepts creating a neural network of form-meaning representations that is the purview of the perisylvian language areas. Once this neural network is created, it can then function to learn and process other languages and language forms.

Although the present study examined only one individual, the results replicate and extend those of our previous case studies. Together, these studies provide initial evidence that the brain language system may require linguistic experience during post-natal brain maturation to achieve functionality. More research with additional cases such as the one studied here, along with confirmatory group studies, is necessary to extend the present findings and to track these neural processing effects across linguistic structures that are larger and more complex than single words.

5. Conclusion

In conclusion, the neural activation patterns in response to signed words shown by an individual who experienced minimal language until young adulthood were located primarily in the dorsal pathway, predominantly in the right hemisphere with some bilateral activation, with significantly less activation in the canonical perisylvian language areas, despite a high degree of performance accuracy and three decades of linguistic experience. The present findings replicate our previous findings of similar neural activation patterns when the onset of linguistic experience occurs in early adolescence. These findings extend our previous work by indicating that the canonical perisylvian language areas retain some capacity to process lexical items when linguistic experience begins in early adolescence, but not when it is postponed until young adulthood.

Highlights.

Language learning for the first time in adulthood has marked effects on brain language processing

Dorsolateral superior parietal and occipital areas are bilaterally activated by known words

Canonical brain language areas minimally process language after prolonged language deprivation

Acknowledgments

The research reported in this publication was supported by National Institutes of Health (grant R01DC012797). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We thank Martin for his gracious participation and Marla Hatrak for invaluable research assistance.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Abutalebi J. Neural aspects of second language representation and language control. Acta psychologica. 2008;128(3):466–478. doi: 10.1016/j.actpsy.2008.03.014.

- Anderson D, Reilly J. The MacArthur communicative development inventory: normative data for American Sign Language. Journal of Deaf Studies and Deaf Education. 2002;7(2):83–106. doi: 10.1093/deafed/7.2.83.

- Bates E, D’Amico S, Jacobsen T, Székely A, Andonova E, Devescovi A, Herron D, Lu CC, Pechmann T, Pléh C. Timed picture naming in seven languages. Psychonomic bulletin & review. 2003;10(2):344–380. doi: 10.3758/bf03196494.

- Battison R. Lexical Borrowing in American Sign Language. Silver Spring, MD: Linstok Press; 1978.

- Berl MM, Mayo J, Parks EN, Rosenberger LR, VanMeter J, Ratner NB, Vaidya CJ, Gaillard WD. Regional differences in the developmental trajectory of lateralization of the language network. Hum Brain Mapp. 2014;35(1):270–284. doi: 10.1002/hbm.22179.

- Best CT, Mathur G, Miranda KA, Lillo-Martin D. Effects of sign language experience on categorical perception of dynamic ASL pseudosigns. Attention, Perception, & Psychophysics. 2010;72(3):747–762. doi: 10.3758/APP.72.3.747.

- Boudreault P, Mayberry RI. Grammatical processing in American Sign Language: Age of first-language acquisition effects in relation to syntactic structure. Language and Cognitive Processes. 2006;21(5):608–635. doi: 10.1080/01690960500139363.

- Braden JP. Deafness, Deprivation, and IQ. New York: Plenum Press; 1994.

- Brentari D. A prosodic model of sign language phonology. MIT Press; 1998.

- Cardin V, Orfanidou E, Ronnberg J, Capek CM, Rudner M, Woll B. Dissociating cognitive and sensory neural plasticity in human superior temporal cortex. Nature Communications. 2013;4:1473. doi: 10.1038/ncomms2463.

- Carrigan EM, Coppola M. Successful communication does not drive language development: Evidence from adult homesign. Cognition. 2017;158:10–27. doi: 10.1016/j.cognition.2016.09.012. [DOI] [PubMed] [Google Scholar]

- Chamberlain C, Mayberry RI. ASL syntactic and narrative comprehension in skilled and less skilled adult readers: Bilingual-bimodal evidence for the linguistic basis of reading. Applied Psycholinguistics. 2008;28(3):537–549. [Google Scholar]

- Corina DP, McBurney SL, Dodrill C, Hinshaw K, Brinkley J, Ojemann G. Functional roles of Broca’s area and SMG: evidence from cortical stimulation mapping in a deaf signer. Neuroimage. 1999;10(5):570–581. doi: 10.1006/nimg.1999.0499. [DOI] [PubMed] [Google Scholar]

- Cormier K, Schembri A, Vinson D, Orfanidou E. First language acquisition differs from second language acquisition in prelingually deaf signers: Evidence from sensitivity to grammaticality judgement in British Sign Language. Cognition. 2012;124:50–65. doi: 10.1016/j.cognition.2012.04.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Curtiss S. Genie: A Psycholinguistic Study of a Modern-Day “Wild Child”. New York: Academic Press; 1977. [Google Scholar]

- Dale AM, Fischl B, Sereno MI. Cortical surface-based analysis: I. Segmentation and surface reconstruction. Neuroimage. 1999;9(2):179–194. doi: 10.1006/nimg.1998.0395. [DOI] [PubMed] [Google Scholar]

- Dale AM, Liu AK, Fischl BR, Buckner RL, Belliveau JW, Lewine JD, Halgren E. Dynamic statistical parametric mapping: combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron. 2000;26(1):55–67. doi: 10.1016/s0896-6273(00)81138-1. [DOI] [PubMed] [Google Scholar]

- Decety J, Grèzes J. Neural mechanisms subserving the perception of human actions. Trends in cognitive sciences. 1999;3(5):172–178. doi: 10.1016/s1364-6613(99)01312-1. [DOI] [PubMed] [Google Scholar]

- Decety J, Grezes J, Costes N, Perani D, Jeannerod M, Procyk E, Grassi F, Fazio F. Brain activity during observation of actions. Influence of action content and subject’s strategy. Brain. 1997;120(10):1763–1777. doi: 10.1093/brain/120.10.1763. [DOI] [PubMed] [Google Scholar]

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of neuroscience methods. 2004;134(1):9–21. doi: 10.1016/j.jneumeth.2003.10.009. [DOI] [PubMed] [Google Scholar]

- Eccarius P, Brentari D. Symmetry and dominance: A cross-linguistic study of signs and classifier constructions. Lingua. 2007;117(7):1169–1201. doi: 10.1016/j.lingua.2005.04.006.

- Emmorey K. Language, cognition, and the brain: Insights from sign language research. Mahwah, NJ: Lawrence Erlbaum Associates; 2002.

- Federmeier KD, Wlotko EW, Meyer AM. What’s “right” in language comprehension: ERPs reveal right hemisphere language capabilities. Lang Linguist Compass. 2008;2(1):1–17. doi: 10.1111/j.1749-818X.2007.00042.x.

- Fenson L, Dale PS, Reznick JS, Bates E, Thal DJ, Pethick SJ, Tomasello M, Mervis CB, Stiles J. Variability in early communicative development. Monographs of the Society for Research in Child Development. 1994:i–185.

- Ferjan Ramirez N, Leonard MK, Davenport T, Torres C, Halgren E, Mayberry RI. Neural language processing in adolescent first-language learners: Longitudinal case studies in American Sign Language. Cereb Cortex (Advance Access). 2014:1–12. doi: 10.1093/cercor/bhu273.

- Ferjan Ramirez N, Leonard MK, Davenport TS, Torres C, Halgren E, Mayberry RI. Neural language processing in adolescent first-language learners: Longitudinal case studies in American Sign Language. Cereb Cortex. 2016;26(3):1015–1026. doi: 10.1093/cercor/bhu273.

- Ferjan Ramirez N, Leonard MK, Torres C, Hatrak M, Halgren E, Mayberry RI. Neural language processing in adolescent first-language learners. Cereb Cortex. 2014;24(10):2772–2783. doi: 10.1093/cercor/bht137.

- Ferjan Ramirez N, Lieberman AM, Mayberry RI. The initial stages of first-language acquisition begun in adolescence: when late looks early. J Child Lang. 2013;40(2):391–414. doi: 10.1017/S0305000911000535.

- Fischl B, Sereno MI, Tootell RB, Dale AM. High-resolution intersubject averaging and a coordinate system for the cortical surface. Human Brain Mapping. 1999;8(4):272–284. doi: 10.1002/(SICI)1097-0193(1999)8:4<272::AID-HBM10>3.0.CO;2-4.

- Fromkin V, Krashen S, Curtiss S, Rigler D, Rigler M. The development of language in Genie: A case of language acquisition beyond the critical period. Brain and Language. 1974;1:81–107.

- Geschwind N. The organization of language and the brain. Science. 1970;170(3961):940–944. doi: 10.1126/science.170.3961.940.

- Goldin-Meadow S. The resilience of language: What gesture creation in deaf children can tell us about how all children learn language. Psychology Press; 2005.

- Goldin-Meadow S, Mylander C, de Villiers J, Bates E, Volterra V. Gestural communication in deaf children: The effects and noneffects of parental input on early language development. Monographs of the Society for Research in Child Development. 1984:1–151.

- Halgren E, Dhond RP, Christensen N, Van Petten C, Marinkovic K, Lewine JD, Dale AM. N400-like magnetoencephalography responses modulated by semantic context, word frequency, and lexical class in sentences. Neuroimage. 2002;17(3):1101–1116. doi: 10.1006/nimg.2002.1268.

- Holle H, Gunter TC, Rüschemeyer SA, Hennenlotter A, Iacoboni M. Neural correlates of the processing of co-speech gestures. Neuroimage. 2008;39(4):2010–2024. doi: 10.1016/j.neuroimage.2007.10.055.

- Kutas M, Federmeier KD. Electrophysiology reveals semantic memory use in language comprehension. Trends in Cognitive Sciences. 2000;4(12):463–470. doi: 10.1016/s1364-6613(00)01560-6.

- Kutas M, Federmeier KD. Thirty years and counting: finding meaning in the N400 component of the event-related brain potential (ERP). Annual Review of Psychology. 2011;62:621–647. doi: 10.1146/annurev.psych.093008.131123.

- Kutas M, Hillyard SA. Reading senseless sentences: Brain potentials reflect semantic incongruity. Science. 1980;207(4427):203–205. doi: 10.1126/science.7350657.

- Leonard MK, Brown TT, Travis KE, Gharapetian L, Hagler DJ, Dale AM, Elman JL, Halgren E. Spatiotemporal dynamics of bilingual word processing. Neuroimage. 2010;49(4):3286–3294. doi: 10.1016/j.neuroimage.2009.12.009.

- Leonard MK, Ferjan Ramirez N, Torres C, Hatrak M, Mayberry RI, Halgren E. Neural stages of spoken, written, and signed word processing in beginning second language learners. Frontiers in Human Neuroscience. 2013;7:322. doi: 10.3389/fnhum.2013.00322.

- Leonard MK, Ferjan Ramirez N, Torres C, Travis KE, Hatrak M, Mayberry RI, Halgren E. Signed words in the congenitally deaf evoke typical late lexicosemantic responses with no early visual responses in left superior temporal cortex. Journal of Neuroscience. 2012;32(28):9700–9705. doi: 10.1523/Jneurosci.1002-12.2012.

- Leonard MK, Torres C, Travis KE, Brown TT, Hagler DJ, Dale AM, Elman JL, Halgren E. Language proficiency modulates the recruitment of non-classical language areas in bilinguals. PLoS One. 2011. doi: 10.1371/journal.pone.0018240.

- Lieberman AM, Borovsky A, Hatrak M, Mayberry RI. Real-time processing of ASL signs: Delayed first language acquisition affects organization of the mental lexicon. J Exp Psychol Learn Mem Cogn. 2015;41(4):1130–1139. doi: 10.1037/xlm0000088.

- MacSweeney M, Campbell R, Woll B, Brammer MJ, Giampietro V, David AS, Calvert GA, McGuire PK. Lexical and sentential processing in British Sign Language. Human Brain Mapping. 2006;27(1):63–76. doi: 10.1002/hbm.20167.

- MacSweeney M, Woll B, Campbell R, McGuire PK, David AS, Williams SC, Suckling J, Calvert GA, Brammer MJ. Neural systems underlying British Sign Language and audio-visual English processing in native users. Brain. 2002;125(7):1583–1593. doi: 10.1093/brain/awf153.

- Marinkovic K, Dhond RP, Dale AM, Glessner M, Carr V, Halgren E. Spatiotemporal dynamics of modality-specific and supramodal word processing. Neuron. 2003;38(3):487–497. doi: 10.1016/s0896-6273(03)00197-1.

- Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods. 2007;164(1):177–190. doi: 10.1016/j.jneumeth.2007.03.024.

- Markwardt FC. Peabody Individual Achievement Test-Revised: PIAT-R. Circle Pines, MN: American Guidance Service; 1989.

- Mayberry RI. First language acquisition after childhood differs from second language acquisition: The case of American Sign Language. Journal of Speech and Hearing Research. 1993;36(6):1258–1270. doi: 10.1044/jshr.3606.1258.

- Mayberry RI. Cognitive development in deaf children: The interface of language and perception in neuropsychology. In: Segalowitz SJ, Rapin I, editors. Handbook of Neuropsychology. 2nd ed. Vol. 8. 2002. pp. 71–107.

- Mayberry RI, Chen JK, Witcher P, Klein D. Age of acquisition effects on the functional organization of language in the adult brain. Brain and Language. 2011;119(1):16–29. doi: 10.1016/J.Bandl.2011.05.007.

- Mayberry RI, Cheng Q, Hatrak M, Ilkbasaran D. Late L1 learners acquire simple but not syntactically complex structures. Paper presented at the International Association for the Study of Child Language; Lyon, France. 2017.

- Mayberry RI, Lock E. Age constraints on first versus second language acquisition: Evidence for linguistic plasticity and epigenesis. Brain and Language. 2003;87(3):369–384. doi: 10.1016/S0093-934x(03)00137-8.

- Mayberry RI, Lock E, Kazmi H. Linguistic ability and early language exposure. Nature. 2002;417(6884):38. doi: 10.1038/417038a.

- Mayberry RI, Squires B. Sign language acquisition. In: Brown K, editor. Encyclopedia of Language and Linguistics. Elsevier; 2006. pp. 11–739.

- McDonald CR, Thesen T, Carlson C, Blumberg M, Girard HM, Trongnetrpunya A, Sherfey JS, Devinsky O, Kuzniecky R, Doyle WK. Multimodal imaging of repetition priming: using fMRI, MEG, and intracranial EEG to reveal spatiotemporal profiles of word processing. Neuroimage. 2010;53(2):707–717. doi: 10.1016/j.neuroimage.2010.06.069.

- Morford JP. Grammatical development in adolescent first-language learners. Linguistics. 2003;41(4):681–722.

- Morford JP, Carlson ML. Sign perception and recognition in non-native signers of ASL. Language Learning and Development. 2011;7(2):149–168. doi: 10.1080/15475441.2011.543393.

- Morgan HE, Mayberry RI. Complexity in two-handed signs in Kenyan Sign Language. Sign Language and Linguistics. 2012;15(1):147–174.

- Newman A, Supalla TR, Fernandez N, Newport EL, Bavelier D. Neural systems supporting linguistic structure, linguistic experience, and symbolic communication in sign language and gesture. Proceedings of the National Academy of Sciences. 2015;112(37):11684–11689. doi: 10.1073/pnas.1510527112.

- Newport EL. Maturational constraints on language learning. Cognitive Science. 1990;14(1):11–28.

- Oldfield RC. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- Oostendorp T, Van Oosterom A. Source parameter estimation using realistic geometry in bioelectricity and biomagnetism. In: Biomagnetic Localization and 3D Modeling. 1992.

- Paus T. Mapping brain maturation and cognitive development during adolescence. Trends Cogn Sci. 2005;9(2):60–68. doi: 10.1016/j.tics.2004.12.008.

- Penfield W, Roberts L. Speech and brain mechanisms. Princeton: Princeton University Press; 1959.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC. Speech-like cerebral activity in profoundly deaf people processing signed languages: Implications for the neural basis of human language. Proceedings of the National Academy of Sciences. 2000;97(25):13961–13966. doi: 10.1073/pnas.97.25.13961.

- Poizner H, Klima ES, Bellugi U. What the hands reveal about the brain. Cambridge: MIT Press; 1987.

- Ressel V, Wilke M, Lidzba K, Lutzenberger W, Krägeloh-Mann I. Increases in language lateralization in normal children as observed using magnetoencephalography. Brain and Language. 2008;106:167–176. doi: 10.1016/j.bandl.2008.01.004.

- Sakai KL, Tatsuno Y, Suzuki K, Kimura H, Ichida Y. Sign and speech: amodal commonality in left hemisphere dominance for comprehension of sentences. Brain. 2005;128(6):1407–1417. doi: 10.1093/brain/awh465.

- Sandler W, Lillo-Martin D. Sign Language and Linguistic Universals. Cambridge University Press; 2006.

- Schick B. The American Sign Language Vocabulary Test. Boulder, CO: University of Colorado at Boulder; 1997.

- Sereno M, Dale A, Liu A, Tootell R. A surface-based coordinate system for a canonical cortex. Neuroimage. 1996;3(3):S252.

- Skipper JI, Goldin-Meadow S, Nusbaum HC, Small SL. Speech-associated gestures, Broca’s area, and the human mirror system. Brain and Language. 2007;101(3):260–277. doi: 10.1016/j.bandl.2007.02.008.

- Stiles J, Brown TT, Haist F, Jernigan TL. Brain and cognitive development. Handbook of Child Psychology and Developmental Science. 2015;2(2):1–54. doi: 10.1002/9781118963418.childpsy202.

- Travis KE, Leonard MK, Brown TT, Hagler DJ, Curran M, Dale AM, Elman JL, Halgren E. Spatiotemporal neural dynamics of word understanding in 12- to 18-month-old infants. Cereb Cortex. 2011;21(8):1832–1839. doi: 10.1093/cercor/bhq259.

- Wechsler D, Naglieri JA. Wechsler Nonverbal Scale of Ability: WNV. PsychCorp; 2006.

- Willems RM, Özyürek A, Hagoort P. When language meets action: the neural integration of gesture and speech. Cereb Cortex. 2007;17(10):2322–2333. doi: 10.1093/cercor/bhl141.