Abstract

Transitions, or periods of change, in medical career pathways can be challenging episodes, requiring the transitioning clinician to take on new roles and responsibilities, adapt to new cultural dynamics, change behaviour patterns, and successfully manage uncertainty. These intensive learning periods present risks to patient safety. Simulation-based education (SBE) is a pedagogic approach that allows clinicians to practise their technical and non-technical skills in a safe environment to increase preparedness for practice. In this commentary, we present the potential uses, strengths, and limitations of SBE for supporting transitions across medical career pathways, discussing educational utility, outcome and process evaluation, and cost and value, and introduce a new perspective on considering the gains from SBE. We provide case-study examples of the application of SBE to illustrate these points and stimulate discussion.

Keywords: Junior Doctor, Deliberate Practice, Boot Camp, Team Task, Repeated Practice

Background

Transitions are inherent in medical education, training, and working life. However, evidence from healthcare and other literature indicates that transitions can be challenging for medical students and doctors, who report feeling underprepared in terms of technical and non-technical skills and who report high levels of associated stress [1–4]. Calls have been made for further formalised training aligned to support doctors in tackling the specific challenges experienced during educational transitions [5]. In this paper, we discuss the potential utility of simulation-based education (SBE) as a mechanism to support transitions in medical careers. We provide some examples of how this is already happening and suggest ways to expand the use of SBE in terms of preparedness for clinical practice in the broadest sense. Whilst identifying concrete specific outcomes of SBE for this purpose is not the focus of this paper, we situate our argument in the wider literature on how formal education and practice-based experiences contribute to the development of medical capacities and dispositions [6] and suggest ways to ensure maximum gain from SBE.

Simulation-based education

First, it is important to be clear what we mean by SBE. Simulation is a means of allowing deliberate hands-on practice of clinical skills and behaviours prior to, and alongside, entry into clinical environments. The aim of SBE is to develop safe clinicians by creating alternative situations and environments in which to learn skills and behaviours. SBE encompasses a breadth of approaches, from low-cost bench simulators to high-fidelity manikins, from simulated patients for learning communication skills to complex ward simulations and haptics.

Simulation is required in medical education for a number of reasons. The natural method of teaching clinicians advocated by Osler (1903), unstructured clinical experience, was shown to be educationally ineffective [7]. Therefore, the focus of medical education and training shifted to a competency- or outcome-based model of teaching and learning where objectives and outcomes, assessment and feedback, and practice and supervision became the norm [8]. Concurrently, reduced availability of patients for teaching and learning medicine, due to changes in healthcare delivery [9], as well as increased emphasis on protecting patients from unnecessary harm [10], placed limits on the nature of patient contact, particularly for relatively inexperienced learners. Last but not least, in many parts of the world, including Europe and to a lesser extent the USA, hours of training are now strictly controlled by working time legislation, which has also led to increased interest in alternative pedagogic paradigms. SBE addresses all of these issues: it decreases reliance on training on real patients, allows for instant feedback for correction of errors and for directing learning, optimises use of valuable clinical time, enhances the transfer of theoretical knowledge into the clinical context, and ensures that learners are competent before exposure to real patients [11–13]. SBE focuses on the needs of the learner: it allows for the deconstruction of clinical work patterns to focus on the mastery of a particular skill or combination of skills of interest, it can be calibrated to meet the needs and level of the individuals or teams involved, and it can be optimised in terms of timing of delivery to support skill development in a preparatory fashion [13].
Additionally, given that individuals find it difficult to reliably self-assess their level of preparedness (their strengths and weaknesses) [14], SBE incorporating rigorous, objective, and relevant measures of performance can contribute stronger predictive data regarding readiness for a role and help to identify areas requiring focused educational attention and self-directed learning prior to making a transition. Research has indicated a positive relationship between SBE and learning outcomes including the development of technical and non-technical skills, confidence, and, critically, patient outcomes [15–18]. Indeed, a number of recent publications have identified that SBE can have a measurable, direct effect on a range of patient and public health outcomes, including lower ICU infection rates, fewer childbirth complications, and reduced post-operative complications and overnight stay [19–21].

How can SBE support transitions in medical education?

It is useful to think of the complexity of transitions before answering this question. As defined by Kilminster et al., the term transition refers to the process of change or movement between one state of work and another [22]. At the undergraduate level, transitions start with entry into medical school and then involve moving from non-clinical to clinical environments and rotating through different medical specialties, culminating for many in the transition from medical school to working as a junior doctor. Following graduation, junior doctors rotate from unit to unit, place to place, speciality to speciality, and then sub-specialty to sub-specialty, before moving on from being a senior trainee or resident to their first fully trained post. Each transition presents an intensive learning period, requiring that the individual adapt to new environments, with their values, norms, and beliefs [23], manage uncertainty, master unfamiliar equipment or technology, work with new colleagues, and perhaps take on new roles/responsibilities and/or work with unfamiliar patient groups. Given the potential “breadth” of unfamiliarity associated with the changing working environment, it is perhaps understandable that transition points present risks for patient safety [24] and may stifle progress in skill acquisition [25, 26].

The focus of the majority of the research on transitions has been that of the move from medical school to junior doctor or internship (Foundation year 1 in the UK), where the learning curve is steep and the challenges facing new doctors are well defined and relatively well understood. During this transition, the emphasis shifts from learning to balancing education with performing a role in the workplace. Research shows that new doctors often feel they lack the skills and competences for work upon graduation [2]. Studies from the UK context suggest that there are particular areas in which senior medical students or new doctors feel unprepared, such as clinical reasoning and making a diagnosis, diagnosing and managing acute medical emergencies, and prescribing, as well as competencies associated with non-technical skills such as communicating effectively in a multidisciplinary team, speaking up, prioritising patients, handover, and breaking bad news [1, 27–29]. (Note that this feeling of being thrown in at the deep end is not unique to medical graduates: the messages from the literature on the transition from student nurse to staff nurse are very similar [30].) There is a paucity of research around transitions later in medical training where, arguably, the role shifts are less dramatic, but what evidence there is suggests that the issues are broadly the same. What we know is that those transitioning from Foundation doctor to specialist trainee (intern to resident) often report a heavy focus on service delivery to the detriment of having time to learn and develop new skills, to pursue sub-specialty interests and to gain exposure to the responsibility necessary for progressing in their roles [31]. Similarly, doctors transitioning from specialist trainee (senior resident) to consultant frequently recognise that they are deficient in several necessary non-clinical skills, e.g. supervision, handling complaints, decision-making, delegation, managing conflict, and providing feedback [32]. Although, as yet, there has been relatively little research on the effects of these later career transitions on doctors’ performance and patient safety, it would seem prudent at this point in time to consider all transitions in medical education and training as challenging and with the potential to lead to harm if poorly managed by the individual and the system.

SBE can aid transitions by allowing medical educators to create the conditions in which learners can undergo the practice to acquire and maintain essential (pre-determined) skills, behaviours, and expertise [33]. Learners can rehearse specific skills and procedures and practise broader tasks such as managing competing demands in acute settings, in artificially created environments which are designed to be authentic and to facilitate acquisition of expertise by individuals and teams, via practice, assessment, and feedback [34]. By doing so, learners are better prepared for clinical practice and hence may be able to manage transitions more effectively. How does this work? Increased knowledge and skill obtained through SBE allows necessary information to be accumulated and stored in long-term memory, and drawn on as required, freeing working memory to focus on other aspects of the task in question. To borrow an example from Leppink et al. [35], a novice reviewing an x-ray for the first time may see a mass of different elements all of which need to be processed to make sense of the x-ray. A more experienced learner, who has learned about x-rays and who has a preliminary cognitive schema of what to expect (in terms of physiology, anatomy, and imaging), can make sense of the x-ray more easily. This leaves him or her more cognitive resources to process other unknown aspects of the task. The same applies to practising any other skill, for example non-technical skills such as patient prioritisation or task delegation—once these skills have been rehearsed and incorporated into cognitive schema in the long-term memory store, they will become more automatic and capacity to process additional information simultaneously will increase. 
Thus, SBE prepares the individual to manage challenging and new situations by supporting them to learn parts of the puzzle in advance (so information is in storage to draw on as required), thus freeing up working memory to focus on what is new, novel, or unexpected [36]. In short, SBE draws on years of research into deliberate practice [8, 35] and cognitive load in a number of high-risk areas—not just medical education but also aviation, oil and gas, and energy [37, 38]—to create safe learning conditions for learners, to ensure safety in real-life situations.

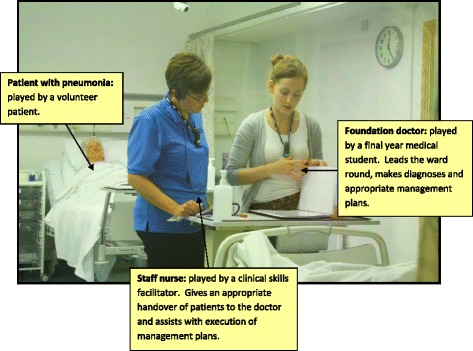

Whilst the theoretical basis of SBE is well recognised and researched, there has been less of a focus on using SBE effectively to support transitions in the medical career pathway. Using SBE effectively to support transitions requires a number of considerations which draw on the wider literature on deliberate practice. First, the simulated scenarios or tasks must be linked to well-designed learning objectives which are appropriately aligned with the learner’s stage of training and to areas known to be problematic at that transition point [7, 11, 39]. Given that, for example, most UK medical students feel adequately trained in terms of basic medical knowledge, history taking, and certain clinical skills, but less confident in other areas, including non-technical skills such as prioritisation and teamwork [29], SBE to support the transition between medical school and internship could usefully focus on the latter areas. To give an example of using SBE to support the development of non-technical skills, a number of years ago, we identified that newly qualified doctors struggled with seeking help from senior staff in out-of-hours situations, particularly where communication was by telephone. We developed a simple simulation-based session which involved realistic scenarios, a phone, clinical staff taking the calls, feedback from those taking the calls and from faculty (observing the student making the call), and “handy hints”. Our data indicate that the block of teaching of which this is part (the “Professional Practice Block”) has been effective in graduating doctors who are more prepared for practice [40]. Second, for SBE to be effective, it should be integrated into the curriculum in a way that promotes transfer of the skills learnt to clinical practice.
For example, it should be initiated at the appropriate educational moment/s, it should include processes for reinforcing learning (including immediate and informative feedback with a focus on areas of weakness), there should be opportunities to amend behaviours (i.e. time for reflection and consideration of current strategies and repeat sessions to allow learning to be put into practice), and, ideally, it should be possible to track performance gains (or losses) using rigorous and objective performance measures [11, 41, 42]. An example of SBE which was grounded in observable difficulties at the time of transition from medical school and included many of these essential features of deliberate practice was recently published by Thomas and colleagues (Table 1, Fig. 1) [43].

Table 1.

Simulated ward round to support the transition from medical student to junior doctor

| Junior doctors are particularly susceptible to error-making within stressful environments. The ward is an excellent example, as distraction is endemic there [64]. Through overwhelming cognitive load, distraction impairs clinical reasoning [65] and contributes to prescribing error [66]. Despite this, medical graduates receive little training in how to cope with hectic work environments and it is perhaps unsurprising that the early years of a medical career are the most error prone [67]. However, the literature suggests that practice with distraction and interruption can dampen their adverse consequences [68]. In response, Thomas and colleagues investigated whether a simulated ward exercise could improve medical student management of distractions to reduce error-making. | |

| A high-fidelity simulated ward round experience was developed. Students played the part of a junior doctor leading the round and completed a number of error-prone tasks, from patient diagnosis to prescribing. At time-critical points, common distractions were deployed (for example, the doctor’s pager being set off or having to deal with a disgruntled relative) (Fig 1). | |

| A non-randomised controlled study was undertaken with 28 final-year medical students. All students participated in a baseline ward round. Fourteen students formed an intervention group and received immediate feedback on their handling of distractions. The 14 students in a control group received no such feedback. After a lag-time of 1 month, students participated in a post-intervention ward round of comparable rigour. Changes in medical error-making and distractor management between simulations were evaluated. | |

| Baseline error rates were high, with 72 and 76 errors witnessed in the intervention and control groups, respectively. Many errors were life threatening and included prescribing patient-allergic antibiotics, inappropriate thrombolysis, and medication overdoses. Similarly, at baseline, distractions were poorly managed in both groups. | |

| All forms of simulation training resulted in error-reduction post-intervention. In the control group, the total number of errors fell to 44, representing a 42.11 % reduction (p value = 0.0003). In the intervention group, the total number of errors fell to 17, representing a 76.39 % reduction (p value <0.0001). The management of distractions only improved significantly in the intervention group. | |

| Students highly valued the simulations [69], which were deemed high fidelity and built confidence. | |

| “I really hope this is a method of education that catches on because I feel it has been one of my most valuable learning experiences in 5th year so far.” | |

| The research shows that students are not inherently equipped with the skills to manage distractions in order to mitigate error. However, practice with distractions minimises their adverse consequences and targeted feedback is key in achieving the greatest educational utility. |
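The percentage reductions quoted in Table 1 follow directly from the reported error counts. As a minimal sketch (the function and variable names are illustrative assumptions and do not come from the study), the arithmetic can be reproduced as follows:

```python
def percent_reduction(before: int, after: int) -> float:
    """Return the percentage fall from `before` to `after`."""
    if before <= 0:
        raise ValueError("baseline count must be positive")
    return 100.0 * (before - after) / before


# Total error counts as reported in Table 1: (baseline, post-intervention).
reported = {
    "intervention": (72, 17),
    "control": (76, 44),
}

# Round to two decimal places, matching the precision used in the table.
reductions = {
    group: round(percent_reduction(before, after), 2)
    for group, (before, after) in reported.items()
}

for group, value in reductions.items():
    print(f"{group}: {value:.2f} % reduction")
```

This only checks the descriptive percentages; it does not reproduce the reported p values, which would require the per-student data.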

Fig. 1.

A simulated ward round experience: bridging the gap between undergraduate and postgraduate years

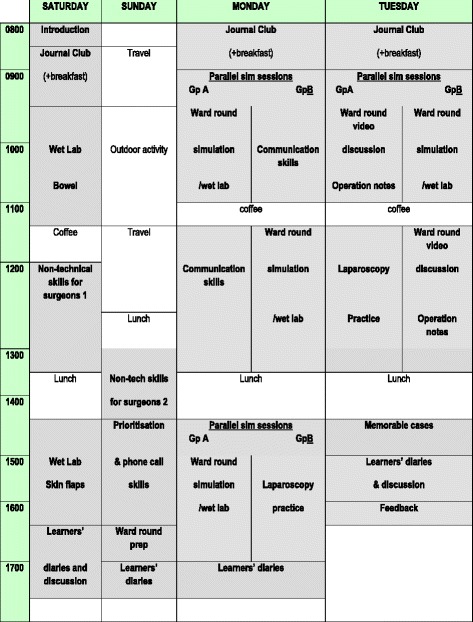

Fewer SBE examples exist to support more senior transitions in a doctor’s career. This is reflective of the relative paucity of research focused on understanding the challenges faced during these later transitions and is mirrored by the fact that whilst the competencies required by junior doctors have generally been outlined by national training bodies, this is not typical for more senior transitions in medical careers. Nevertheless, some promising work is emerging here. One relatively new approach to SBE is the “Boot Camp”. Boot Camps are themed on the principles of a military Boot Camp—intensive, focused training using experiential learning and hands-on practice to learn new skills and knowledge in a safe environment. Several US Boot Camps, designed to support interns transitioning into residency programmes, and drawing heavily on SBE for technical skill development, report improvements in interns’ confidence levels and procedural skill acquisition following repeated exposures to clinical scenarios in a simulated setting [44–46]. A further example of a Boot Camp to support the transition from junior doctor into a surgical training programme in the UK (Fig. 2), one which includes a novel focus on developing core non-technical skills through SBE, is provided in Table 2 [47].

Fig. 2.

Scottish Surgical Boot Camp programme, 2015

Table 2.

Scottish Surgical Boot Camp

| In designing the Scottish Surgical Boot Camp (SSBC), the surgical training faculty in Inverness, Scotland, set out to define and include skills, attitudes, and even values that seemed essential for a safe and “flying” start to surgical training. The content was derived from their observations as trainers of where surgeons (especially trainees) tend to struggle and of which skills had previously been learned “the long way” by apprenticeship or prolonged clinical exposure, or sometimes never learned at all, and which now could be taught early on using a new paradigm of training. Hence, alongside technical skills such as bowel anastomosis and laparoscopic instrument handling, the programme includes sessions devoted to non-technical skills in complex real-life settings, e.g. the leading of a simulated ward round in the face of distractions and the handling of difficult written or spoken communication scenarios. Also included are anecdotal lessons in resilience. | |

| First piloted in 2011, the SSBC was adopted in 2014 by the two Core Surgical Training programmes in Scotland as their introductory course for new start trainees, endorsed by two surgical Royal Colleges (Edinburgh and Glasgow) and fees are subsidised by the NHS Education for Scotland, the body which oversees training for all doctors and healthcare professionals. | |

| The current iteration of the programme is shown in Fig. 2. Most sessions include SBE, and it is not difficult to see from the programme how as a whole it mimics the structure of a “surgical day” and “surgical week”. Also built in is an adherence to Issenberg’s highly evidence-based 10 conditions for effective simulation-based learning [70]. For example, skills are practised in a variety of clinical settings, in valid and controlled simulations, with immediate and individualised feedback. | |

| The technical tasks taught and practised using pig tissue in the “wet labs” are limited to two defined, useful tasks not easily accessible to the new start trainee in real clinical practice, which require discipline and repetition and in which the learner rapidly feels the benefit of repetition. These are small bowel anastomosis, skin flaps, and/or tendon repair. The non-technical skills are taught using the well-established taxonomy “Non-Technical Skills for Surgeons” [71] (NOTSS) and using varying simulated phone call scenarios and a simulated ward round with detailed individual feedback on the core NOTSS behavioural constructs (situation awareness, decision-making, communication and teamwork, and leadership). | |

| Educational theory has been used to understand the complex processes of the Boot Camp by way of a qualitative study [72]. |

Projecting further ahead still, to the transition from specialty training to career grade positions (attending clinicians in the USA and consultant posts in the UK and Europe), it was not possible to identify any SBE approaches designed to support the development of the new skills and behaviours necessary for mastery of these roles. Since the emerging literature is starting to highlight a need for more support for certain non-technical elements of these positions (e.g. supervision, delegation, influencing culture) [32], it may be time to define and specify the precise learning objectives that can be addressed usefully via deliberate practice and to plan SBE interventions that can support preparedness at these higher stages.

What other considerations are important when planning SBE to support transitions?

First, in relation to feedback, it is critical to use trained faculty who are skilled in giving immediate, informative feedback and in engaging participants not just with SBE generally but also with the feedback component of SBE. More contemporary theories of feedback stress the importance of the learner in the feedback process, conceptualising feedback as a dialogic process, where effective feedback depends on learner engagement and activation of the internal regulatory processes required for goal-directed learning [48]. Adopting this view sets expectations for the role of both faculty and learners in the feedback process, which may differ from their experiences of feedback to date, and hence should be explicitly considered in the training and preparation components of any SBE. Associated with this, and as discussed earlier, SBE must be matched with performance standards, educational objective(s), and opportunities for repeated practice (with feedback) in order to reach these standards. Moreover, it is essential to set up SBE to recognise that different people require different amounts of practice to accomplish mastery of predefined educational objectives [49]. In other words, one learner might master the outcomes/objectives associated with a simulated anastomosis activity with relative ease depending on their prior learning, hand-eye coordination, etc., whereas another learner with different skills, knowledge, experience, and/or attitudes towards learning may need repeated practice.

Clearly, as stated above, SBE outcomes need to be defined in advance. When evaluating SBE, it is very important to go beyond acceptability (e.g. “8 out of 10 students reported that they enjoyed the [SBE]”) and move into outcomes based on measurable change in skill acquisition, whether the objectives are technical or non-technical skills [17, 18], all the way to translation into practice—“from VR-to-OR” [50]—and better outcomes for patients [19–21]. Clearly, it is much easier to collect evaluation data immediately after an SBE intervention than it is to follow up learners when they are out in clinical practice, but it is essential to carefully plan long-term follow-up as otherwise it will be hard to justify the value of SBE to education providers and funders. One study assessed the impact of SBE on performance in the clinical environment in an Irish teaching hospital. SBE on ordering blood products was delivered to newly graduated medical students as part of a Boot Camp course prior to working as a junior doctor. The training was found to reduce the risk of a rejected sample by 65 % as compared with junior doctors who did not receive the training. Moreover, the risk of a rejected sample for trained interns was 45 % lower than for much more experienced doctors. The untrained interns required more than 2 months of clinical experience to reach an error rate that was not significantly different from that of the trained interns [51].

In addition to high-quality outcome evaluation, Moore et al. [52] discuss a number of benefits of complementing this with process evaluations (i.e. understanding the functioning of an intervention: how was it implemented, and what are the mechanisms of effect?) in relation to complex interventions (and we believe that educational interventions, including SBE, can be considered complex interventions) [53]. Process evaluations can inform educators (e.g. was the simulated intervention delivered as intended, or does it need to be redesigned in some way?) and/or identify aspects of context which acted as barriers to the new learning being translated into clinical practice.

Value is usually related to cost [54]. Medical schools and medical training providers need to answer to governments, regulators, funders, and the public in terms of whether what they are delivering is fit for purpose. “Fit for purpose” can be considered from a number of perspectives. For example, are we producing the right doctors in terms of knowledge, skills, attitudes, and behaviours to meet the health needs of the communities they serve? Are we delivering these outcomes not just to a high standard but in a fiscally responsible way—can we justify a high-cost simulation over an apparently low-cost clinical experience? What are the gains from SBE that would be unobtainable, or unsafe, in more traditional models of teaching and learning? There is a need to develop an evidence base for SBE which encompasses “fitness for purpose” both educationally and fiscally. A high-cost, low-educational value SBE is the worst of all possible outcomes. A low-cost, low-value SBE will not meet anyone’s needs in the long term, whereas a high-cost, high-value SBE would probably be acceptable. Thomas and colleagues [55] calculated the cost of their simulated ward round and realised that the high cost limited the feasibility of the simulation as it was originally designed. By identifying the main cost components, they were able to develop and evaluate a slightly different approach (e.g. group feedback rather than individual feedback). Costs were significantly reduced, but the positive response from participants was maintained. As these are recent studies, it is not yet known if changing from individual to group feedback impacts differently on more distal outcomes such as clinical care practices. However, paying explicit attention to the cost of their SBE allowed Thomas and colleagues to consider other models of educational delivery without threatening the quality of their product.

Our next consideration is that of fidelity in the broadest sense. Much SBE has focused on individual skill development. However, healthcare is usually a team effort and many of the problems noted in transitions are about team skills, e.g. communication with other members of the multidisciplinary team, supervision, and speaking up across professional hierarchies [28–30]. This means it is essential both to develop SBE team tasks and to develop outcomes that go beyond individual gains to team outcomes. These group objectives might be “hard” outcomes such as systemic improvements in team performance (e.g. fewer errors, cost savings, more efficient patient throughput), but it is also important to assess softer, process-, and team-related outcomes, including the quality of inter-disciplinary teamwork in a global sense and including specific team tasks such as communication during handovers, transfer of leadership, and task-based coordination [56]. Through the observation of teams working together in realistic scenarios and reflecting on performance through debriefing (potentially facilitated by the use of video), SBE might also help to explore more complex team-based dynamics such as social hierarchy, diversity, and divisions which can be difficult to pick up in more traditional classroom-based approaches to inter-professional education [57]. In this sense, SBE might also be tailored to target any specific team-based issues ongoing at a local level [58].

Finally, whilst it is absolutely critical to know what works in SBE and understand how it does so in terms of individual, cognitive, and acquisitive learning, limiting the focus of research to outcome and effectiveness studies means understanding of SBE will remain limited. There has been, to date, no acknowledgement in the literature that much SBE is inherently a social activity, bringing together groups of learners and faculty, away from the everyday clinical environment, sometimes in residential situations. By not recognising this explicitly, we have no understanding of how the relationships between faculty, participants, and activities during SBE influence learning [59] or of the nature or influence of the hidden curriculum [60]. Nor do we know about the influence of the particular cultural contexts, for example, of the wider socio-cultural, institutional, and historical setting and complexities of clinical training [61], in which SBE is situated. The need to extend the range of approaches to researching this field is real because, if SBE is based on limited models of learning, it risks inadequately preparing learners for practice. Moreover, where theoretical frameworks are lacking, explanations of the simulation phenomena that can be elaborated and refined in future research may not be forthcoming. Indeed, recently, some researchers have called for the reconceptualising of simulation education generally, to draw on contemporary practice and to consider questions of learning in complex healthcare systems [62, 63].

Conclusions

Increasing doctors’ preparedness to perform the skills and behaviours required to fulfil the responsibilities of any new role is important for patient safety, service efficiency, and individual psychological well-being. Whilst true mastery of a role cannot be achieved until one is immersed within the workplace itself [6], the literature indicates that we can go some way to preparing individuals for the technical and non-technical elements of any new role, and indeed the associated psychological challenges, through the judicious and imaginative use of SBE. In this paper, we have provided an overview of some of the key factors associated with planning and evaluating SBE for transitions. We have also highlighted a number of areas for future research in SBE to support medical career transitions. These include the development of understanding around the practical factors to be considered when designing SBE, ranging from the delivery of feedback and the incorporation of longer term outcome measures to analysis of the cost-effectiveness of the approach being undertaken, as well as the socio-cultural influences on learning in simulated settings. We urge those working in SBE research to consider how best to identify and evaluate concrete specific outcomes of SBE for this purpose. There remains the need for further investigation into the use of SBE to support the transition from medical student to junior doctor, but we urge those working in this area to not neglect examining the use of SBE to support later medical career transitions where “learners” are working with less supervision and increasing responsibility yet where (largely non-technical) issues pertinent to patient safety remain apparent.

Funding

No funding was required for the preparation of this manuscript.

Footnotes

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors made a significant contribution towards the conception, design, and content of this paper. Whilst no primary data are presented, authors KW and IT specifically contributed the two case studies presented in Tables 1 and 2. Authors JC, SR, RP and PO completed the first draft of the paper, which was then critically revised by all authors for intellectual content. All authors have given approval for this version to be published and agree to be held accountable for all aspects of the work. All authors read and approved the final manuscript.

Contributor Information

Jennifer Cleland, Email: jen.cleland@abdn.ac.uk.

Rona Patey, Email: r.patey@abdn.ac.uk.

Ian Thomas, Email: ianthomas1@nhs.net.

Kenneth Walker, Email: kennethwalker@nhs.net.

Paul O’Connor, Email: paul.oconnor@nuigalway.ie.

Stephanie Russ, Phone: +44 (0) 1224 437818, Email: s.russ@abdn.ac.uk.

References

- 1. Teunissen PW, Westerman M. Junior doctors caught in the clash: the transition from learning to working explored. Med Educ. 2011;45(10):968–70. doi: 10.1111/j.1365-2923.2011.04052.x.

- 2. Cameron A, Miller J, Szmidt N, et al. Can new doctors be prepared for practice? A review. Clin Teach. 2014;11:188–92. doi: 10.1111/tct.12127.

- 3. Westerman M, Teunissen PW, Fokkema JPI, et al. The transition to hospital consultant and the influence of preparedness, social support and perception: a structural equation modelling approach. Med Teach. 2013;35(4):320–7. doi: 10.3109/0142159X.2012.735381.

- 4. Chen A, Kotliar D, Drolet BC. Medical education in the United States: do residents feel prepared? Perspectives on Medical Education. 2015;4:181–5. doi: 10.1007/s40037-015-0194-8.

- 5. Greenaway D. Shape of training: securing the future of excellent patient care. 2013.

- 6. Billett S. The practices of learning through occupations. In: Billett S, editor. Learning through practice: models, traditions, orientations and approaches. Netherlands: Springer; 2010. pp. 59–81.

- 7. McGaghie WC, Kristopaitis T. Deliberate practice and mastery learning: origins of expert medical performance. In: Cleland J, Durning SJ, editors. Researching medical education. Oxford, UK: Wiley Blackwell; 2015. pp. 219–30.

- 8. Harden RM. AMEE Guide No. 14: outcome-based education: part 1 - an introduction to outcome-based education. Med Teach. 1999;21:7–14. doi: 10.1080/01421599979969.

- 9. Ker J, Bradley P. Simulation in medical education. In: Swanwick T, editor. Understanding medical education: evidence, theory and practice. London: Wiley-Blackwell; 2010. pp. 164–80.

- 10. Ziv A, Wolpe PR, Small SD, et al. Simulation-based medical education: an ethical imperative. Acad Med. 2003;78(8):783–8. doi: 10.1097/00001888-200308000-00006.

- 11. Bearman M, Nestel D, Andreatta P. Simulation-based medical education. In: Oxford textbook of medical education. Oxford University Press, Oxford, UK. 2013. p. 186–97.

- 12. Watson K, Wright A, Morris N, et al. Can simulation replace part of clinical time? Two parallel randomised controlled trials. Med Educ. 2012;46:657–67. doi: 10.1111/j.1365-2923.2012.04295.x.

- 13. Weller JM, Nestel D, Marshall SD, et al. Simulation in clinical teaching and learning. Med J Aust. 2012;196:1–5. doi: 10.5694/mja10.11474.

- 14. Eva KW, Regehr G. Self-assessment in the health professions: a reformulation and research agenda. Acad Med. 2005;80:S46–54. doi: 10.1097/00001888-200510001-00015.

- 15. Riley W, Davis S, Miller K, et al. Didactic and simulation nontechnical skills team training to improve perinatal patient outcomes in a community hospital. Joint Comm J Qual Patient Saf. 2011;37(8):357–64. doi: 10.1016/s1553-7250(11)37046-8.

- 16. Fung L, Boet S, Bould MD, et al. Impact of crisis resource management simulation-based training for interprofessional and interdisciplinary teams: a systematic review. J Interprof Care. 2015;29(5):433–44. doi: 10.3109/13561820.2015.1017555.

- 17. Knight LJ, Gabhart JM, Earnest KS, et al. Improving code team performance and survival outcomes: implementation of pediatric resuscitation team training. Crit Care Med. 2014;42(2):243–51. doi: 10.1097/CCM.0b013e3182a6439d.

- 18. Bagai A, O’Brien S, Al Lawati H, et al. Mentored simulation training improves procedural skills in cardiac catheterization: a randomized, controlled pilot study. Circ Cardiovasc Interv. 2012;5(5):672–9. doi: 10.1161/CIRCINTERVENTIONS.112.970772.

- 19. Barsuk JH, Cohen ER, Potts S, et al. Dissemination of a simulation-based mastery learning intervention reduces central line-associated bloodstream infections. BMJ Qual Saf. 2014;23:749–56. doi: 10.1136/bmjqs-2013-002665.

- 20. Zendejas B, Cook D, Bingener J, et al. Simulation-based mastery learning improves patient outcomes in laparoscopic inguinal hernia repair: a randomized controlled trial. Ann Surg. 2011;254:502–11. doi: 10.1097/SLA.0b013e31822c6994.

- 21. Inglis SR, Feier N, Chetiyaar JB, et al. Effects of shoulder dystocia training on the incidence of brachial plexus injury. Am J Obstet Gynecol. 2011;204(4):322-e1. doi: 10.1016/j.ajog.2011.01.027.

- 22. Kilminster S, Zukas M, Quinton N, et al. Preparedness is not enough: understanding transitions as critically intensive learning periods. Med Educ. 2011;45:1006–15. doi: 10.1111/j.1365-2923.2011.04048.x.

- 23. Martin MJ. “That’s the way we do things around here”. An overview of organizational culture. Electronic Journal of Academic and Special Librarianship 2006;7(1).

- 24. Vaughan L, McAlister G, Bell D. ‘August is always a nightmare’: results of the Royal College of Physicians of Edinburgh and Society of Acute Medicine August transition survey. Clin Med. 2011;11(4):322–6. doi: 10.7861/clinmedicine.11-4-322.

- 25. Kellett J, Papageorgiou A, Cavenagh P, et al. The preparedness of newly qualified doctors - views of Foundation doctors and supervisors. Med Teach. 2015;37(10):949–54. doi: 10.3109/0142159X.2014.970619.

- 26. Teunissen PW, Westerman M. Opportunity or threat: the ambiguity of the consequences of transitions in medical education. Med Educ. 2011;45(1):51–9. doi: 10.1111/j.1365-2923.2010.03755.x.

- 27. Goldacre MJ, Taylor K, Lambert TW. Views of junior doctors about whether their medical school prepared them well for work: questionnaire surveys. BMC Med Educ. 2010;10:78. doi: 10.1186/1472-6920-10-78.

- 28. Brennan N, Corrigan O, Allard J, et al. The transition from medical student to junior doctor: today’s experiences of tomorrow’s doctors. Med Educ. 2010;44:449–58. doi: 10.1111/j.1365-2923.2009.03604.x.

- 29. Tallentire VR, Smith SE, Skinner J, et al. Understanding the behavior of newly qualified doctors in acute care contexts. Med Educ. 2011;45:995–1005. doi: 10.1111/j.1365-2923.2011.04024.x.

- 30. Monaghan T. A critical analysis of the literature and theoretical perspectives on theory-practice gap amongst newly qualified nurses within the United Kingdom. Nurse Educ Today. 2015;35(8):1–7. doi: 10.1016/j.nedt.2015.03.006.

- 31. Lambert T, Smith F, Goldacre MJ. Doctors’ views about their work, education and training three years after graduation in the UK: questionnaire survey. JRSM Open. 2015;6(12):2054270415616309. doi: 10.1177/2054270415616309.

- 32. Morrow G, Burford B, Redfern N, et al. Does specialty training prepare doctors for senior roles? A questionnaire study of new UK consultants. Postgrad Med J. 2012;88:558–65. doi: 10.1136/postgradmedj-2011-130460.

- 33. McGaghie WC, Issenberg SB, Cohen ER, et al. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Acad Med. 2011;86:706–11. doi: 10.1097/ACM.0b013e318217e119.

- 34. Causer J, Williams AM. Catheter-based cardiovascular interventions. Berlin Heidelberg: Springer; 2013. Professional expertise in medicine; pp. 97–112.

- 35. Leppink J, van Gog T, Paas F, et al. Cognitive load theory: researching and planning teaching to maximise learning. In: Cleland J, Durning SJ, et al., editors. Researching medical education. Oxford, UK: Wiley Blackwell; 2015. pp. 207–18.

- 36. Qiao YQ, Shen J, Liang X, et al. Using cognitive theory to facilitate medical education. BMC Med Educ. 2014;14:79. doi: 10.1186/1472-6920-14-79.

- 37. McAllister B. Crew resource management: the improvement of awareness, self-discipline, cockpit efficiency and safety. London: Crowood Press; 1997.

- 38. Flin R, O’Connor P, Mearns K. Crew resource management: improving teamwork in high reliability industries. Team Performance Management: An International Journal. 2002;8(3/4):68–78. doi: 10.1108/13527590210433366.

- 39. Nicholas C. An introduction to medical teaching. Netherlands: Springer; 2014. Teaching with simulation; pp. 93–111.

- 40. Thomson AR, Cleland JA, Arnold R. Communication skills in a multi-professional critical illness course. The Association for Medical Education in Europe (AMEE), Berne, 30th Aug-3rd September 2003.

- 41. Cook DA, Hamstra SJ, Brydges R, et al. Comparative effectiveness of instructional design features in simulation-based education: systematic review and meta-analysis. Med Teach. 2013;35(1):e867–98. doi: 10.3109/0142159X.2012.714886.

- 42. Carter O, Brennen M, Ross N, et al. Assessing simulation-based clinical training: comparing the concurrent validity of students’ self-reported satisfaction and confidence measures against objective clinical examinations. BMJ Simulation and Technology Enhanced Learning 2016. In Press.

- 43. Thomas IM, Nicol LG, Regan L, et al. Driven to distraction: a prospective controlled study of a simulated ward round experience to improve patient safety teaching for medical students. BMJ Qual Saf. 2014. doi: 10.1136/bmjqs-2014-003272.

- 44. Fernandez GL, Page DW, Coe NP, et al. Boot cAMP: educational outcomes after 4 successive years of preparatory simulation-based training at onset of internship. J Surg Educ. 2012;69:242–8. doi: 10.1016/j.jsurg.2011.08.007.

- 45. Krajewski A, Filippa D, Staff I, et al. Implementation of an intern boot camp curriculum to address clinical competencies under the new Accreditation Council for Graduate Medical Education supervision requirements and duty hour restrictions. JAMA Surg. 2013;148:727–32. doi: 10.1001/jamasurg.2013.2350.

- 46. Parent RJ, Plerhoples TA, Long EE, et al. Early, intermediate, and late effects of a surgical skills “boot camp” on an objective structured assessment of technical skills: a randomized controlled study. J Am Coll Surg. 2010;210:984–9. doi: 10.1016/j.jamcollsurg.2010.03.006.

- 47. Cleland J, Walker KG, Gale M, et al. Simulation-based education: understanding the complexity of a surgical training boot camp. Medical Education 2016; In Press.

- 48. Boud D, Molloy E. Feedback in higher and professional education: understanding it and doing it well. UK: Routledge; 2012.

- 49. Wong BS, Krang L. Mastery learning in the context of university education. Journal of NUS Teaching Academy. 2012;2:206–22.

- 50. Dawe SR, Pena GN, Windsor JA, et al. Systematic review of skills transfer after surgical simulation-based training. Br J Surg. 2014;101(9):1063–76. doi: 10.1002/bjs.9482.

- 51. Joyce KM, Byrne D, O’Connor P, et al. An evaluation of the use of deliberate practice and simulation to train interns in requesting blood products. Simul Healthc. 2015;10(2):92–7. doi: 10.1097/SIH.0000000000000070.

- 52. Moore GF, Audrey S, Barker M, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:h1258. doi: 10.1136/bmj.h1258.

- 53. Mattick K, Barnes R, Dieppe P. Medical education: a particularly complex intervention to research. Adv Health Sci Educ. 2013;18(4):769–78. doi: 10.1007/s10459-012-9415-7.

- 54. Brown C, Cleland JA, Walsh K. The costs of medical education assessment. Medical Teacher 2015; In Press.

- 55. Ford H, Cleland J, Thomas I. Simulated ward round: reducing costs, not outcomes. The Clinical Teacher 2015; In Press.

- 56. Medical Research Council. Developing and evaluating complex interventions: new guidance. Available at: http://www.mrc.ac.uk/documents/pdf/complex-interventions-guidance/. Accessed on 04.02.16.

- 57. Palaganas JC, Epps C, Raemer DB. A history of simulation-enhanced interprofessional education. J Interprof Care. 2014;28(2):110–5. doi: 10.3109/13561820.2013.869198.

- 58. Decker SI, Anderson M, Epps C, et al. Standards of best practice: Simulation Standard VIII: Simulation-Enhanced Interprofessional Education (SIM-IPE). Clinical Simulation in Nursing. 2015;11:293–7. doi: 10.1016/j.ecns.2015.03.010.

- 59. Durning SJ, Artino AR. Situativity theory: a perspective on how participants and the environment can interact: AMEE Guide no. 52. Med Teach. 2011;33(3):188–99. doi: 10.3109/0142159X.2011.550965.

- 60. Mahood SC. Medical education: beware the hidden curriculum. Can Fam Physician. 2011;57(9):983–5.

- 61. Koens F, Mann KV, Custers EJFM, et al. Analysing the concept of context in medical education. Med Educ. 2005;39(12):1243–9. doi: 10.1111/j.1365-2929.2005.02338.x.

- 62. Fenwick T, Dahlgren MA. Towards socio-material approaches in simulation-based education: lessons from complexity theory. Med Educ. 2015;49:359–67. doi: 10.1111/medu.12638.

- 63. Bleakley A, Bligh J, Browne J. Medical education for the future: identity, power and location. 2011. Springer, London New York.

- 64. Weigl M, Muller A, Zupance A, et al. Hospital doctors’ workflow interruptions and activities: an observation study. BMJ Qual Saf. 2011;20(6):491–7. doi: 10.1136/bmjqs.2010.043281.

- 65. Pottier P, Dejoie T, Hardouin J, et al. Effect of stress on clinical reasoning during simulated ambulatory consultations. Med Teach. 2013;35(6):472–80. doi: 10.3109/0142159X.2013.774336.

- 66. Westbrook J, Woods A, Rob M, et al. Association of interruptions with an increased risk and severity of medication administration errors. Arch Intern Med. 2010;170(8):683–90. doi: 10.1001/archinternmed.2010.65.

- 67. Ryan C, Ross S, Davey P, et al. Prevalence and causes of prescribing errors: the Prescribing Outcomes for Trainee Doctors Engaged in Clinical Training (PROTECT) study. PLoS One. 2014;9(1):e79802. doi: 10.1371/journal.pone.0079802.

- 68. Liu D, Grundgeiger T, Sanderson PM, Jenkins SA, Leane TA. Interruptions and blood transfusion checks: lessons from the simulated operating room. Anesth Analg. 2009;108(1):219–22. doi: 10.1213/ane.0b013e31818e841a.

- 69. Thomas IM. Student views of stressful simulated ward rounds. Clin Teach. 2015. doi: 10.1111/tct.12329.

- 70. Issenberg SB, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ. Features and uses of high fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27:10–28. doi: 10.1080/01421590500046924.

- 71. Yule S, Flin R, Paterson-Brown S, Maran N, Rowley D. Development of a rating system for surgeons’ non-technical skills. Med Educ. 2006;40:1098–104. doi: 10.1111/j.1365-2929.2006.02610.x.

- 72. Cleland J et al. Understanding the sociocultural complexity of a surgical simulation “Boot Camp”. Med Educ. 2015, in press.