Abstract

Simulation is traditionally used to reduce errors and their negative consequences. According to modern safety theories, however, this focus overlooks the learning potential of good performance, which is much more common than errors. A supplementary approach to simulation is therefore needed to unfold its full potential. In our commentary, we describe the learning from success (LFS) approach to simulation and debriefing. Drawing on several theoretical frameworks, we suggest supplementing the widespread deficit-oriented, corrective approach to simulation with an approach that focusses on systematically understanding how good performance is produced in frequent (mundane) simulation scenarios. We advocate investigating and optimizing human activity based on the connected layers of any setting: the embodied competences of the healthcare professionals, the social and organizational rules that guide their actions, and the material aspects of the setting. We discuss implications of these theoretical perspectives for the design and conduct of simulation scenarios, post-simulation debriefings, and faculty development programs.

Keywords: Simulation, Scenarios, Debriefings, Installation theory, Activity theory, Mundane practice, Patient safety, Safety II, Video reflexivity, Faculty development

Background

During the scenario, the patient develops signs of anaphylaxis. The treating nurse calls the medical emergency team (MET). One of the nurses in the MET begins to ventilate the patient. The nurse is tall and easily reaches over the headboard of the bed. Despite effective ventilation, the patient’s saturation is dropping. The MET decides to intubate the patient. The anesthesiologist takes over; she is not as tall as her nurse colleague. The headboard becomes an obstacle. The team struggles a bit to get it loose and out of the way. When they attempt to intubate, the light on the laryngoscope malfunctions. The team reverts to bag mask ventilation while another laryngoscope is fetched. Once the patient is intubated, the saturation rises and the scenario ends. During the debriefing, the group discusses both situations: the headboard and the broken light. In both cases, the undertone is negative. The laryngoscope should not have been broken, nor should the headboard have been an obstacle for the anesthesiologist, as (according to the accepted assumption in the debriefing group) it should have been removed as soon as it became clear that the patient was deteriorating. The team considers options for avoiding such unwanted variation in the future (learning from failure).

While many debriefing structures emphasize learning from positive as well as negative aspects of a scenario [1–3], the case above illustrates a widespread approach in actual simulation practice as we know it. If there is a suboptimal event during the scenario, the debriefing often focuses on how it could have been discovered and solved or, better, avoided. Usually, less attention is paid to how participants adapted to the unexpected and brought the process and their performance back to an acceptable level (good performance or, in short, “success”).

In this paper, we discuss how a focus on everyday positive aspects of work can contribute to improving patient safety-oriented simulation. We first explain the theoretical lenses that show why learning from good performance makes sense for simulation practice. We then summarize our thoughts and describe implications for the design and conduct of simulation scenarios and debriefings, as well as for faculty development.

Theoretical perspectives of the learning from success (LFS) approach

In the example above, we could debrief checking routines for equipment that should be standard. This might have helped to identify the broken laryngoscope. We could also debrief standard approaches to treatment, including preparation of the work environment. This might have led to the immediate removal of the headboard when the MET was called. Thus, we could discuss how to avoid errors in the first place, following the frequent recommendation to define or refine standard approaches to situations in order to avoid errors. But many such procedures already exist and are not always followed [4]. Adding even more policies and procedures is not necessarily effective. The proliferation of policies can be counterproductive, for example, when procedures and checklists are used in a “tick and flick” approach [5]. In that case, the mechanics of checking the boxes may outweigh a thoughtful approach to the task and situation. Our focus here is not on how to avoid errors and unwanted variations. Instead, it is on learning how healthcare professionals actively trade off conflicting goals to find the best possible balance between efficiency, thoroughness, and safety for the individual patient and within the healthcare system. We call this perspective the learning from success (LFS) approach in simulation.

The LFS approach looks at the same scenario from a different angle: How did the team adapt to the unanticipated and problematic disruptions? What triggered their adaptations? Why did the actions chosen make sense to those involved? What trade-off considerations were involved? How did the team organize the search for the new laryngoscope while at the same time reverting to bag mask ventilation? When did they become aware that the headboard was becoming an obstacle? How did they collaborate to remove it, even though this type of bed was unfamiliar to them and they thus had to solve a mechanical problem under time pressure? What effect did the time pressure have on the individuals, on the team, and on their treatment of the patient?

The LFS approach focusses on the (re-)creation of good performance based on experience. It can help broaden the focus of simulation-based training to find positive answers to the many questions in the previous paragraph. In simulation, we therefore shift the focus away from concentrating only on reducing unwanted deviations and their possibly negative effects, and towards reinforcing and increasing efficient and effective adaptation [6–14]. In most cases, our assumption will be that efficient adaptation results in good performance. All other things being equal, this means better outcomes for the patient and greater team satisfaction. Most of the time, good performance is the result of human adaptation in the face of expected and unexpected perturbations. At times, however, these adaptations seem accidental, as when the participants are not fully aware of how they created the good performance. These adaptations do not attract attention automatically, as they are often seen as a “normal” part of everyday work. They are expected and not considered worthy of further thought. Errors (or, conversely, brilliant solutions) are different: they trigger attention, arousal, interest, and often strong emotions.

In this paper, we discuss the implications of a new approach for (a) the design and implementation of simulation scenarios, (b) the conduct of debriefings, and (c) the training of faculty. We see our approach as complementary to existing approaches; it is not intended to replace them. Because the approach is new, we do not yet have empirical evidence of its effectiveness. Instead, we build on the combined experience of the author team and observations in our practices. Our point of departure is everyday routine tasks with good outcomes, in contexts that bring together people, devices, and rules. We use them to understand how healthcare professionals adapt in a goal-oriented fashion to achieve the best outcome for the patient in the given situation. First, we explain our key concepts and their interrelations. Given the number of concepts in this paper, we also provide a summary (see Table 1).

Table 1.

Key terms and their definitions used in this text

| Term | Definition |
|---|---|
| Embodied competences | Describes what the person can do without conscious effort. This can be manual skills; ways of addressing and working with problems (not necessarily solving them); ways of thinking; patterns of interpretation; and ingrained assumptions, norms, values, and beliefs. Embodied competences are “inscribed in the flesh and emerge as cognitions, emotions and movements” [32]. |
| Mundane | Describes the regular and yet not trivial aspect of everyday activities. The mundane does not stand out; it is part of expectations and routines. And yet, it takes a lot of work to maintain the mundane and to prevent it from becoming extraordinary. |
| Exnovation | Describes the idea of developing new insights and actions from what is already given. The new ideas are not brought “in,” as in innovation, but are developed out of what already exists. |
| Installation | Specific, local, societal settings where humans are expected to behave in a predictable way. Installations consist of a set of components that simultaneously support and socially control individual behavior. The components are distributed over the material environment (affordances), the subject (embodied competences), and the social space (institutions, enacted and enforced by other subjects). These components assemble at the time and place the activity is performed [32]. |

Opening up the “mundane” as a learning space

Regular everyday practice is valuable for learning on many levels: “Like the mantelpiece clock that nobody notices until it stops ticking, safe clinical practice tends to be taken for granted as a default state. In fact, safety requires an awareness of the complex processes that underlie routine practice, coupled with an ability to recognise problems at an early stage and head them off before they escalate into adverse events. Ensuring that things go right is as important as knowing what to do when they go wrong” [15]. Concentrating on these mundane activities, however, brings both challenges and advantages. It is challenging to actually “see” or analyze “normal” events when nothing “remarkable” happens. As these events unfold, they do not necessarily trigger attention or invite further thought and cognitive processing. It can be difficult to convince discussion partners that they are relevant. Even when they are discussed, their underlying dynamics might be difficult to explore, because tasks performed automatically are not easily subject to cognitive analysis.

On the other hand, the mundane is frequent and/or widespread: it comprises actions that are done often and/or by many [14]. Mundane actions are thus valuable for understanding the corridor of normal performance for an action. What is the variation in task execution over time and/or between different people with the average skill set for the task? Attending to these variations can reveal trends, for example “drifts into failure” (when we are operating in a riskier environment than we believe [16, 17]) or emerging good ideas. Any insight, improvement, and learning from such situations would have a large effect, as it would be applied frequently and by many.

Further, these “normal” practices often incorporate a vast amount of experience. Well-executed normal practice helps prevent problematic situations and keeps actions within expectations, that is, within the corridor of normal performance. Reflecting on the mundane can trigger deep insights about control and prevention strategies. It can also reveal the rationale behind practices that have often been learned without deep reflection. Finally, what is routine for one team may not be routine for another: best (and good) practice can be shared. As a bonus, making success in performance explicit enhances participants’ self-efficacy (confidence in one’s own ability to achieve intended results). This is likely to affect both their future ability to cope with similar situations [18] and their team spirit.

A positive perspective enables learning from more than failures

Over the last few decades, a set of theoretical frameworks has evolved that emphasize the value of a positive perspective for learning and design. We describe a number of these to demonstrate some of the conceptual complexity that underlies simulation practice. Looking at the different approaches, their partial overlap, and how they supplement each other makes the value of interdisciplinary work and research teams clear. The underlying complexity of simulation requires reflection and is difficult to capture in streamlined algorithms or primers used to assess simulation practice. Therefore, we argue, making simulation effective requires drawing on aspects of these many scientific traditions and using them together.

First, one well-established positive approach is appreciative inquiry. Appreciative inquiry emphasizes learning from what has worked well in the past and turns these successes into a resource. Moreover, appreciative inquiry considers problems as opportunities or sources of inspiration [19, 20]. The team in the vignette could discuss how their previous experiences helped them in coordinating and what they learnt about their way of coordinating.

Positive deviance, on the other hand, focuses on individuals, teams, or organizations that stand out for excellence. These “positive deviants” should inspire others and spread excellent behavior [21, 22]. The team in our vignette could discuss which best practices they had observed during the scenario or in other experiences, and how these could be replicated.

Exnovation aims to explicate the already existing strengths of practices in order to improve those practices [23, 24]. In doing this, exnovation acknowledges that the “ordinary” is an extraordinary accomplishment and that “things or practices are not less valuable simply because they already exist” [25]. Its point of departure is the idea that mundane and implicit routines have become invisible over time but actually play a crucial role in establishing and preserving adequate levels of quality. Therefore, the “hidden” strengths of practices should be made explicit. Video-reflexive ethnography (VRE) focuses on ways of doing and reasoning that are out of sight. It is therefore a key method for exnovating practices, as it provides the required balance between familiarity and unfamiliarity. Known (and filmed) practice can be viewed repeatedly, in slow or fast motion, or in other ways that might open new perspectives on existing processes. In this respect, exnovation and simulation overlap strongly in their use of video in a learning space. While watching the video of their scenario, the team may pick out the many adaptation processes they used during the scenario. Many different actions were performed, many of them unconsciously. The video and the exnovation angle treat them as something valuable and worthy of investigation and reflection.

Finally, in the last few years, we have seen a change of focus in the systems approach in safety research [8]. Whereas Safety I has its focus on the avoidance of negative deviations from expected performance, Safety II concentrates on systematically understanding how good performance is produced and how adaptive mechanisms help in recognizing perturbations in the system and in reacting appropriately to them. There is a growing recognition of Safety II in healthcare [26, 27] as well as in healthcare simulation [28]. The team in the vignette could discuss and analyze in detail the “normal” and good parts of their performance.

The context guides human action and experience

The value of an action and its outcome depends on how it fits into its context. Assessing an action or an outcome as positive, as an error, as a brilliant idea, etc., is a value statement. It is based on a comparison between what was expected and what was realized in a given context: a task is performed in order to reach a goal [29]. A goal is a conscious representation of the desired result of an activity, and at the same time it influences which actions must be taken to reach it [30]. The actions need to be adapted to the context in which they are taken; successful activity means reaching the goal under the given conditions. Every situation is a new situation, somewhat different from the previous one (consider a different patient, different equipment, different colleagues, different mindsets, etc.). Therefore, good performance in a specific context will inevitably be a subtle adaptation that optimizes action under these new conditions. Excellence lies in the capacity to adapt successfully to the dynamic variation of the situation. How can we help the actors grasp the complexity of the situation and analyze its components during the debriefing?

The variability of a situation may be described with three related layers:

1. The embodied competences of the persons involved and the motives that guide their actions
2. The social and institutional rules applicable in the situation
3. The material characteristics of the environment

The embodied competences are all that a human can do, think, experience, feel, etc. They are embedded in the body and set the limits of human performance. Rules can be open or hidden, accepted or opposed, and their official version may or may not be congruent with their unofficial version. They are also subject to a certain degree of interpretation (for example, the appropriateness of jokes in different settings). Work as imagined and described in official documents often differs from work as done in real work situations [7, 8]. The material layer and the actions it affords (its “affordances”) [31] have an obvious influence on what is possible: consider lighting conditions, waiting times for resources, material obstruction, etc. The combination of these three layers is an “installation” that channels (scaffolds and constrains) behavior [32]. Problems may occur when one of the layers is faulty or when the layers contradict each other. Consider the problem with removing the headboard of the bed. It became a material obstacle because the rule of removing it was not followed. The need had not been apparent earlier, since the nurse was tall enough to reach over it. Conversely, one layer can compensate for another. Consider, for example, how the team members collaborated to remove the headboard, finding the solution through a shared problem-solving approach (embodied competences). The interplay between the different layers only unfolds when tasks are actually performed: as long as the anesthesiologist does not attempt to intubate the patient, the broken light of the laryngoscope is not relevant. The installation as a whole produces performance as action unfolds, as in a chemical reaction in which the three layers combine.

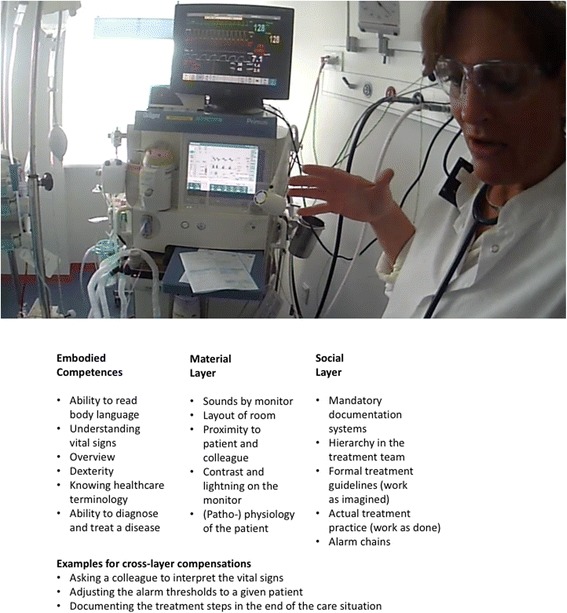

The installation’s three layers provide a framework for enlarging the learning space for the understanding of mundane performance and how it relates to good outcomes. What did the persons involved do, which embodied competences did they draw upon? What guiding rules and standards were there for their actions? What material constraints were relevant (scaffolding or impeding affordances)? Figure 1 illustrates the different layers with a picture from an actual simulation, although not the simulation from the vignette, and examples of elements from each layer. It also provides examples of how the layers can compensate for each other.

Fig. 1.

A picture of an anesthesia simulation to illustrate the different layers in an installation with examples

We have now described the theoretical perspectives that we would like to use to analyze simulation practice. In addition, we have established mundane performance as relevant for learning, described different positive perspectives as frames of reference, and explained the context layers that scaffold human action. Before turning to the practical implications for simulation practice, we describe our rationale for why it is important to consider these perspectives.

The relevance of our theoretical perspectives for simulation practice

The real world for which simulation participants train does have variation. The different layers vary across contexts, as does their interplay. People differ in their abilities, and their performance varies. The material setting in which care unfolds varies, and at times devices do not function as expected. Interpretations of rules and regulations also vary, to name just a few examples. Therefore, focussing only on how to avoid variation cannot be a successful strategy to improve safety, as it builds on the flawed assumption that variation can be removed from the real world [8]. The ability to adapt to the situation can be seen as one cornerstone of good performance [33–39]. Thus, it is important to include variability in simulation to support its ecological validity, that is, its relevance for the real world [40]. If simulation does not include such variability and does not help participants deal with it, it prepares them for an idealized clinical practice, not for the real world. Clinicians would be left alone to reconcile what they learned in “ideal simulation” with the “ill-structured” real world of clinical practice [41]. In this sense, participants in simulation should learn to solve tasks effectively. At the same time, they should learn to adapt their actions to achieve their goals within varying contexts [42].

In real life, the competence to flexibly adapt action to diverse contexts is acquired by embodying experiences from repeated practice under varying conditions. Expertise stems from having performed slightly different actions adapted to a variety of slightly different contexts [32, 43]. As a result, the expert, in a given situation, can consider a large array of possible actions within the range of his or her experience, based on analogous situations previously encountered, and can select the most closely adapted one. Simulation-based learning can expand this array of experiences. The learner is not “limited” to experiences from his or her own clinical practice, in which some situations might be encountered frequently while others might be missed altogether. In fact, the opportunity to practice rare, sensitive, critical, and complex situations is one of the key arguments for simulation practice in the first place.

We suggest supplementing such simulation-based learning with mundane situations, combined with a deeper analysis of what goes well and why it goes well. Simulation offers a systematic way to provide a variety of experiences (within the limitations inherent to simulation). Simulation and the attention to reflection on action also make second-order learning possible (what type of response works), that is, learning how to produce adapted, non-stereotypic responses to new situations [44–47]. Thus, simulation, and especially the debriefing, offers ways to go beyond the immediately relevant and to analyze underlying connections and dynamics. We believe this is easier in mundane cases than in extreme cases. The cognitive demands of processing the mundane can typically be assumed to be lower than those of infrequent and complex cases. By analyzing different views and mindsets during the debriefing, new insights into the interplay of the different context layers may be gained.

Let us give an empirical example. In a debriefing, a very experienced anesthetist watches a video recording of a difficult airway scenario he was involved in. He explains why he did not comment on a non-critical mistake made by the nurse he was working with. His silence was deliberate. He believes that if he rebukes the nurse at that moment, he will jeopardize the full collaboration he needs:

Doctor: “[Not playing the part of] a good team member right now would be very critical. (…) Because we need to be on exactly the same course. We need to stay very good friends right now, because what we’re doing right now is depending completely on each other. And, so opening the risk of any… unwelcome, bad mood is always very risky at this point, and you should never do it (…) because [the nurse] might still act the same, but be angry and then plan to tell me afterwards that she didn’t like something. But then that fills up her brain in some way, and she’ll have to keep room for that, which will give less room for staying in the situation.”

Note that the anesthetist’s primary reason for not discussing the error is not kindness toward the nurse. His deeper reason is to keep the nurse from wanting to justify herself or to talk afterwards for social closure. He knows this would draw mental and emotional resources away from the situation at hand. He is not being polite. He is considering the nurse’s cognitive resources in order to secure her full attention and collaboration on the job. (Conversely, nurses often act the same way when coping with “rude” physicians, for the sake of the physician’s concentration and stress management; such coping mechanisms are not tied to professions.) Not saying anything in this situation can be seen as highly efficient communication and a way to collaborate in the moment. In psychological terms, the decision not to challenge the nurse could be seen as a strategy to avoid the tension of an “open issue.” This resource-intensive tension is well known as the Zeigarnik effect [48]. The example draws attention to a generic positive competence: considering collaboration with others in the light of mundane actions. The dynamic underlying this example is seen often and in many domains, so it can be considered mundane. It is not a matter of doctors vs. nurses, male vs. female, or novice vs. expert. It can happen in any care setting, and it will always pose challenges for effective communication in the face of conflict or simply different views on how to proceed. By combining the observed phenomena with theoretical perspectives and models from various disciplines (including psychology, sociology, and anthropology, to name a few), work as done in practice, with its variability, can be understood in more detail.

The LFS approach has implications for simulation practice. In practice, we advocate for a combination of both the positive and the deficit approach for scenario design and implementation as they can complement each other. Simulation should address the rare, critical, and sensitive situations, as well as the mundane and frequent situations.

Summary of the foundations of the LFS approach

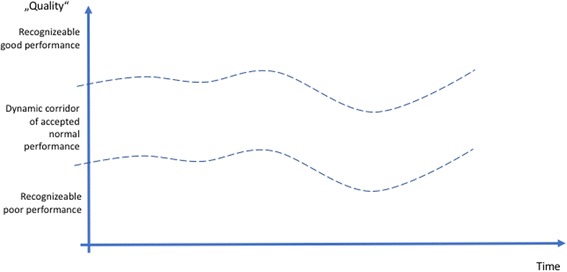

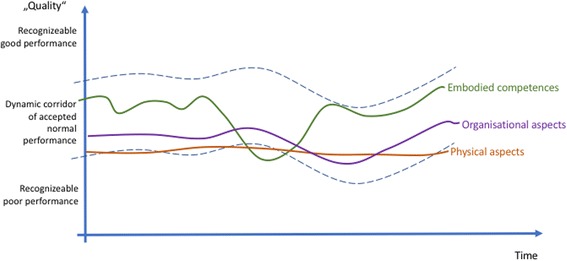

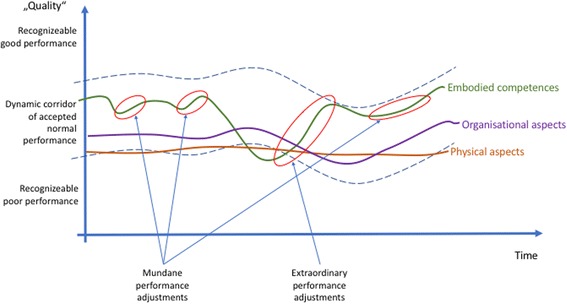

Figures 2, 3, 4, and 5 provide a summary of our considerations that are the base for the practical implications we draw for the design and conduct of simulation-based training.

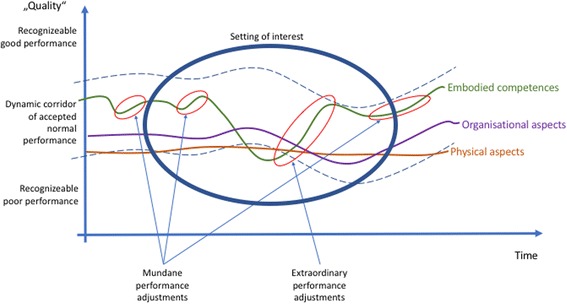

Fig. 2.

The dynamic of the moving corridor of normal performance, as defined by individually or professionally accepted good practice

Fig. 3.

There are constant variations on the three levels of the installation: embodied competences, social- and organizational rules, and the material layer

Fig. 4.

Different possible focus points during debriefings

Fig. 5.

Limits in time and space influence, which aspects of the scenario are discussed

For each process, there is a subjective and/or objective measurement of its quality. Some criteria are measurable (although the results of the measurement still require human interpretation), while others are based on subjective assessment. A profession, a single person, or a group of people will consider certain performance as “normal,” other performance as especially good, and still other performance as poor. This corridor of normal performance is dynamic. What is considered “good” today might be considered poor practice tomorrow or in 50 years [49]. Different people, professions, disciplines, or cultures might define the corridor of normal performance differently, based on variations in norms, values, and beliefs. Therefore, the corridor of normal performance is depicted in a curved fashion (Fig. 2).

All the layers in the installation show variability: human action varies, and organizational procedures change. Even machines and devices function with variability (consider breakdowns, for example). Much of the variation stays within the corridor of normal performance. Some of it, however, is recognized as good or poor. The further the variation moves above or below the corridor of normal performance, the more easily it is recognized (Fig. 3).

Traditional simulation practices often focus on the negative, especially in human performance. The larger and steeper the gap in performance, the higher the likelihood that an issue is addressed. This is especially likely when the border of the corridor of normal performance is crossed [45]. Some debriefing structures suggest also looking at positive movements [1, 2], but this intention does not seem to be fully implemented in practice. We additionally emphasize looking not only at the extraordinary positive adjustments but also at the mundane ones. These small, mundane adjustments occur constantly and build, rebuild, and transmit the foundation of good overall performance (Fig. 4).

Each simulation-based course or clinical debriefing is a snapshot of the overall system. The setting under consideration is limited in time and space. Events before or after and events outside of the scope of the investigation might never become a consideration for the current discussion, even though they might explain the history of the dynamics in the installation and help to anticipate future developments (Fig. 5).

Implications for scenario design

As noted for debriefing, some existing frameworks prescribe approaches similar to ours, and this is also true for the design and implementation of scenarios [50–54]. Therefore, some of the suggestions may seem familiar. Yet, simulation in theory does not always translate into simulation in practice. In addition, we relate frameworks to each other in a new combination that allows us to approach the complexity of simulation as a social practice [55]. Thus, we base our approach on exnovation principles: we look at existing approaches and practices and develop new thoughts from them.

The LFS approach provides a structure for systematically investigating the actual factors and components used in pursuing goals. All three context layers (embodied competences, social and organizational rules, and material aspects) can be used to systematically investigate and design scenarios that maximize the range of experience offered to participants. The material layer may or may not provide what is needed for the task. The people involved could have the right skills for the situation; they could also be under- or overqualified. The rules that guide task execution might be known and accepted by all involved; they might also be unknown to, or opposed by, some of the participants in a scenario. Systematically varying the layers and their interplay allows a more detailed exploration of how a work system functions. For example, what happens to a scenario when a less experienced person participates? Such systematic variation can be used to analyze why the system is functioning well. How was the inexperience of the novice compensated for? Is the integration functioning well, and how could it be improved further? What are the working strategies of novices, and what are those of experts? What different errors would they be involved in? Designing scenarios based on insight into the dynamics of the system’s components may be seen as a strong test of our analysis of the system’s effectiveness. If we understand how good performance is produced, we should be able to design scenarios in which participants systematically demonstrate good performance and can still adapt to the changes introduced. This approach of enabling or supporting good performance can inform scenarios that are designed to help participants improve their performance. Hollnagel’s Functional Resonance Analysis Method provides another approach to the systematic variation of different aspects of scenarios [7]. Such systematic variation could be combined with existing scenario design methods [56–61].

In practical terms, this suggests the need to design more scenarios around mundane situations of care [15]. These situations could then be simulated with greater ecological validity by capturing, across the context layers, the variability typical of the actual clinical situation. Such systematic variation could enable the identification of the key elements that make a difference in reliably creating good performance. This knowledge could then be incorporated into debriefings.

Another way to build scenarios on the LFS approach would be to include all the stakeholders who would typically be involved in clinical care. In current practice, role players often fill some of these positions in place of the actual professionals, frequently for financial and logistical reasons. In inter-professional, inter-departmental, and inter-disciplinary scenarios that focus on the mundane collaboration of stakeholders around patients, it is important to create learning opportunities for all involved. In the LFS approach, potentially more stakeholders might be involved, including those on the other side of the hand-over (e.g., the personnel in the recovery room for anesthesia scenarios) or patients, since, after all, they and their values are the focus of simulation activities [62–64]. Of course, the resources needed should be balanced against the learning goals and the overall system in which they are used.

For the implementation of such scenarios, it would be helpful to anticipate possible cues that would enable the scenario to play out within the mundane area, without drifting (too far) outside the corridor of normal performance [56]. Because of the variation in context layers, even “simple” scenarios might easily develop an unanticipated complexity, especially if the discussions in the debriefing reach deeper reflections.

The more such simulations of mundane situations reveal about the normal functioning of the healthcare system, the more this knowledge could be used to design scenarios that are even more relevant to clinicians. In this way, a stronger feedback loop between training results and training design can be established. Triggers for deviations, error traps, known good solutions, etc. could be used to design scenarios that systematically create learning opportunities for participants:

For example, a sequence of scenarios could show the different aspects of handing over the patient between different departments in a hospital. What distinguishes the LFS approach from some existing approaches is its focus on mundane hand-overs, not difficult ones. By involving the different stakeholders in a participatory approach to the design and conduct of such training, and by jointly discussing the scenarios, connections could be made and mutual understanding increased. Here, in situ simulations might be beneficial [65–69].

Good solutions for handling a certain patient could be distilled from various teams in simulations. Such a model of a good solution could then be introduced to other teams to explore what adaptations a different team composition and a changed context would require.

Implications for the debriefing

The LFS approach requires efforts to help participants see the benefit of analyzing good performance in mundane situations. Many participants expect challenging scenarios that stretch them to their limits, and debriefings in which each error is addressed and corrected. They do not expect to analyze “the regular stuff” and why it turned out well, nor do learners necessarily value, or even recognize, the variation that occurs as part of everyday work. With a focus on good performance, participants are potentially more open to a detailed reflexive discussion about the internal processes that guided their actions. This openness makes it easier to understand differences in the individual frames that different persons hold about a given situation. Understanding those frames in detail requires an open exchange, unhindered by defensive behavior, which is more likely to occur when the focus is on errors [42, 55, 70–75]. Research shows that in-depth analysis of good performance in the context of a study provides access to almost the same learning points as the analysis of mistakes [76]. In the earlier vignette, for example, such a detailed analysis would help to understand the triggers for beginning to coordinate the search for another laryngoscope; the verbal and non-verbal agreements the team members made to coordinate their actions; the monitoring of the search’s progress; the trigger to remove the headboard from the bed; and the coordination of actions to jointly find a way to remove it. As a next step, this reflexive discussion could center on ways of applying similar principles in different situations.

While many published debriefing structures include an analysis of positive points, in practice there seems to be a marked difference between the conversations around errors and those around positive performance. Errors are analyzed in some depth; good performance, however, is often mentioned only superficially. Discussions of good performance should address all the context layers described above: What are the resources and conditions that enhance and enable the team’s ability to adapt? This is not an easy task and requires the facilitator to stimulate deep reflection on what is often taken for granted [77]. Simulation debriefings offer many of the elements that support such a discussion: a trained facilitator who helps participants relate their actions to safety and human-factors theories in addition to the clinical aspects; video recordings that offer an outside view; protected time and space to engage in meaningful discussions; and (hopefully) a working agreement (ground rules) that enables the trust necessary for a discussion that analyzes the “taken-for-granted” [24].

In order to create the atmosphere needed for such an open exchange, many measures to ensure the psychological safety of those involved are important [42, 74, 75, 78–80]. One is the clear distinction between descriptions and interpretations of the underlying perceptions.

Research shows that in-depth analysis of how “normal” situations were successfully handled can provide access to almost the same learning that is generated from “mistakes,” provided the discussion is properly oriented. Lahlou and his working group use videos filmed from a first-person perspective (Fig. 1 provides a still from such a recording) and ask participants to comment on their own videos in so-called replay interviews. The study team systematically asks participants not only about their positive goals (“what state of things were you trying to attain?”) but also about their negative goals (“is there anything you were trying to avoid happening in acting so?”). This procedure makes explicit the potential issues and risks that were at stake for the clinician, and highlights positive and dynamic safety aspects. It also often triggers narratives about variants of the filmed situations and gives an impression of the realistic variability of work as done. Mesman and colleagues have also successfully used video reflexivity at the Mayo Clinic, USA, applying the positive approach and focusing on very mundane day-to-day activities in order to analyze inter-professional collaboration between breast cancer surgeons and pathologists. While watching footage of their own practice, each team realized just how complex both the other team’s work and their own work were. Surgeon: “So one, I’d say my biggest impression from looking at this video is how complex it really is, and we do this every day and we just take it for granted.” They also learned how much they actually do just to facilitate the work of the other team. This resulted in a renewed appreciation and awareness of both the others’ work and their own. The positive approach was crucial in these reflexive meetings.

Even when using the LFS approach, it is common to observe errors in the scenario. Some might be minor and, given limited debriefing time, might not be discussed because other angles are prioritized. Other errors, on the other hand, might be serious enough to warrant discussion. In some cases, it might not be possible to discuss all errors; each case will need a judgment call by the debriefer. We see it as a goal of debriefing that all relevant errors are mentioned, and that this is agreed upon with the participants during the briefing. If warranted, time might have to be allocated for this discussion within or even after the debriefing; this is a challenge of adapting debriefing practice to the situation. Another practical approach could be to analyze how the team prevented negative consequences of the error and mitigated possible damage. Alternatively, participants may be asked to reflect on good performance. Again, we emphasize that the LFS approach would be used in combination with debriefings that focus on errors in more detail. Not every detail can be discussed in a session; the goal of training is to learn something important, not to discuss everything. The desire for exhaustive analysis should not get in the way of a deep discussion of one specific aspect that takes time but leads to an important generic insight.

In practical terms, debriefings in the LFS approach might benefit from:

- Seeing selected relevant aspects of the scenario recording more than once, because participants often focus on the negative aspects when they first see the video. Various recording systems allow the facilitator to mark discrete sections for viewing rather than showing the entire video.

- Consciously observing scenarios and collecting material for the debriefing that focuses on adaptations and success.

- Looking systematically at the influence of all three layers of the installation, not only at the embodied competences, to enhance a systemic understanding of situations.

Implications for simulation trainers and faculty development

Faculty development is much debated in healthcare simulation, as well as in medical education [70, 81–88]. Classically, a key ability of faculty for implementing the positive approach is listening to what was said and observing what was done. Faculty need “passivity competence” [24], meaning the ability and willingness to first observe and listen instead of acting immediately. This delayed response enables participants “to become sensitized to a greater array of impressions” [24], which expands their capacity for effective action. It moves the focus to what learners actually say and do. This requires so-called collaborative attention: the capacity of “having the patience to be open—open to what colleagues are doing and saying, open to the implications of what they are doing and saying, and open to colleagues having questions about what is appropriate to do and say” [24]. Workshops, courses, and interview exercises often show how difficult it actually is to really listen to another person and to practice appreciative inquiry. Thoughts about the next question, early interpretations, stereotypes, and many other aspects influence how much (or how little) faculty actually understand of what participants are saying. Slowing down, concentrating on core issues, and trying to ensure accurate understanding of what the other person said should be core competences. This also includes becoming aware of each participant’s contribution to the conversation in terms of motivations, feelings, assumptions, etc. Following Schein’s “humble inquiry” recommendations may be useful [89].

For the LFS approach, many of the abilities faculty need are similar or identical to those needed by faculty in general simulation practice [70, 90]. Our approach, however, requires an improved understanding of core concepts around human action as it unfolds in context. Guiding reflections around these issues likely requires a more in-depth understanding of the science behind human factors, patient safety, implementation science, and organizational psychology, to name a few. It is about becoming confident in using a new vocabulary and new concepts. In practice, this could begin with systematic, checklist-like investigations of the three context layers during a debriefing. The aim is to stimulate participants to make explicit the material scaffoldings, skills, and rules that were used in producing good practice. Deep understanding of the concepts would likely require substantial study and experience.

Experience from discussing the LFS approach with faculty shows that several concerns are raised. Some relate to the interaction with low-performing learners, the need to correct errors that did occur during the scenario, and dealing with mutual expectations. In many countries, the traditional role of the teacher includes identifying and correcting errors through clear feedback on what is right and wrong, especially with low-performing participants [33, 71, 91]. Debriefers can easily become the most active members during such discussions, focussing on teaching rather than on questioning, reflecting, and understanding [91]. Novice faculty members need to understand the dynamics of such role expectations and reflect on how far they should conform to or violate them in order to optimize the learning opportunities for different learner groups in different work settings.

The approach we suggest here requires sufficient time to discuss the activities and thoughts of the participants during the scenario. Studies show that current debriefing practice may not consistently reach this deep reflective level; too little time for too many topics might be one of the obstacles to deep reflection [77]. It may be enough to focus on a few key moments of the scenario to create the habit and reflexive mindset of learning from success, which participants can then carry into their daily practice [44]. By applying the in-depth analysis of the LFS approach, participants will likely gain insights that they can generalize. From this perspective, simulation debriefing also has the higher-order learning objective of training participants to be analytic and reflexive about their actions on an everyday basis. Table 2 summarizes our practical considerations and contrasts the LFS approach with traditional simulation practice. In practice, both approaches will likely combine and overlap.

Table 2.

Comparison of traditional simulation-based education with the LFS approach, based on selected phases of the simulation setting [72]

| Simulation setting phases | Traditional approach | Learning from good performance approach |
|---|---|---|
| Pre-briefing and setting introduction | Emphasis on the extra-ordinary and the possibility to train rare, critical, sensitive, and complex situations. The debriefer as (facilitating) expert. | Emphasis on the value of existing mundane practice. The debriefer as partner in the common learning process. |
| Scenario conduct | Aim to find the edges of the participants’ competences. Generation of stressful conditions. Use of error traps to generate debriefing-related experiences. Complex scenarios with regard to clinical care, human factors issues, and the use of the simulation. | Aim to work through common scenarios, including systematic variation along the FRAM [7] aspects: • Trigger for an action • Outcome of an action • Prerequisites for an action • Resources needed while the action is performed • Time aspects • Control mechanisms and rules for the action |
| Debriefing | Focus on failures and how to avoid them. Positive performance mentioned and praised, but not analyzed. Focus on events “sticking out”: gaps and peaks (failures and good ideas). | Focus on how to systematically produce good performance by adjusting team and care processes to the context. Focus on the deep analysis of good performance and how to reproduce and re-apply it. Focus on performance within the corridor of normal performance. |

The table emphasizes the contrasts. In practice, both approaches will overlap considerably and/or supplement each other.

Future directions

Using exnovation principles, we analyzed existing simulation practice and reflected on how it could be supplemented with the LFS approach. Our approach brings together a substantial number of frameworks. All of them align in aiming to understand how positive adaptation was reached rather than focussing only on failures. They provide a grid for analysis as well as some practical techniques that colleagues may use in practice. Comparative studies will be needed to identify which topics are actually discussed in LFS-oriented scenarios and debriefings. It will also be important to understand the effects this has on participants’ reactions, on their learning, and on its application in clinical settings. Ultimately, we need to understand how this application influences patients’ experiences and outcomes. Such studies will enable us to identify the types of situations best suited to LFS in simulation training. We anticipate that some effects will be difficult to capture with traditional study designs because of the complex situations that the LFS approach addresses. Therefore, we believe that a focus on the processes enabled by the LFS approach (understanding the system and performance interactions that enhance good performance) would be a good place to start in creating a reflexive culture of LFS.

Conclusion

We described an innovative approach to simulation and debriefing: the LFS approach. LFS focusses on systematically understanding how humans, with their embodied competences, act within social and organizational rules and material contexts to create good performance in mundane situations. To supplement traditional simulation approaches with this perspective, scenarios should be based on common, everyday situations, and debriefings should focus on a detailed analysis of how good performance was produced. Simulation faculty can use the theoretical insights, terminology, and practices described in this paper to implement this approach.

Acknowledgements

We would like to acknowledge the roles of the colleagues in our respective institutions, with whom we had intense discussions around parts of this article. The participants in our courses helped us to shape our view on patient safety and how simulation relates to it. The reviewers and editors of Advances in Simulation helped us tremendously to improve the text.

Funding

The writing of this article was funded by the authors’ institutions.

Availability of data and materials

The only data reported is available in the text above.

Abbreviation

- LFS: Learning from success

Authors’ contributions

PD drafted the first version of this article. All authors contributed substantially to subsequent revisions by providing text. PD integrated the different viewpoints into a coherent text. All authors approved the final version.

Authors’ information

PD—Peter Dieckmann, PhD, Dipl-Psych, is a work and organizational psychologist working with the Copenhagen Academy for Medical Education and Simulation (CAMES) in Herlev, Denmark. Peter has been working with simulation since 1999. His research on simulation focusses on understanding the educational potential of simulation and how to use it. His research with simulation focusses on increasing patient safety by optimizing individual work and organizational safety issues. He is an internationally recognized simulation faculty trainer and conference contributor. Peter is past president of the Society in Europe for Simulation Applied to Medicine (SESAM).

MD—Mary D. Patterson, MD, MEd is a pediatric emergency physician and the Medical Director of the Akron Children’s Hospital Simulation Center for Safety and Reliability. Dr. Patterson has completed a Master’s in Education at the University of Cincinnati and a Patient Safety Fellowship at Virginia Commonwealth University. She served on the Board of Directors of the Society of Simulation in Healthcare from 2006 to 2011 and was the president of the Society in 2010. Her primary research interests are related to the use of medical simulation to improve patient safety and human factors work related to patient safety. She is a federally funded investigator in these areas.

SL—Saadi Lahlou is chair in Social Psychology at the London School of Economics and Political Science. His research is on the impact of context on behavior. For two decades, he has been developing methods for digital ethnography, which are applied in simulation training.

JM—Jessica Mesman, PhD, Associate Professor in Science and Technology Studies, Maastricht University, The Netherlands. Her research interests include the anthropology of informal knowledge and the method of video reflexivity in health care practices, particularly the explication of informal and unarticulated dimensions (exnovation) of establishing and preserving safety in care practices. Her work on video reflexivity is internationally recognized in academia as well as in health care practice. The latter is reflected, for example, in the invitation from the Mayo Clinic (USA) to join its organization as a collaborative researcher, which she happily accepted.

PN—Patrik Nyström, MSc Human Factors and System Safety, has worked with simulation since 2004 at Arcada University of Applied Sciences, Finland. As the director of the Arcada Patient Safety and Learning Center (APSLC) and as degree programme director of the emergency care BSc program, his work has consisted of building, integrating, and enhancing simulation as a method for all health education programs, including nursing, midwifery, emergency care, and public health nursing. He has actively integrated safety theories and an understanding of human factors into basic education, especially into simulation. He has also integrated simulation into fields where it has not traditionally been a part; one example is a course in ethics that is highly appreciated by the students.

RK—Ralf Krage, MD, PhD, DEAA, is an anesthesiologist. He has been involved with simulation-based learning since the 1990s. Currently, he works at the VU University Medical Center in Amsterdam, the Netherlands, where he is the director of the ADAM simulation center. His special interests focus on Human Factors issues. He has given numerous invited presentations at international conferences on Human Factors, patient safety, simulation-based learning, and airway management. He served the Society in Europe for Simulation Applied to Medicine (SESAM) as president between 2013 and 2015 and was vice-president of the DSSH (Dutch Society for Simulation in Healthcare) from 2011 to 2016. He is also a Board member of the GNSH association (Global Network for Simulation in Healthcare) and an international partner of the EuSim group.

Ethics approval and consent to participate

The currently unpublished study in which we collected the data containing the quotation in our text was approved by the ethics committee of the London School of Economics. Danish law exempts studies from formal ethics review if no patients are involved.

Consent for publication

Participants were fully informed about the data recording, storage, and handling. They gave their consent for the scientific publication of the results of the study. The person depicted in Fig. 1 gave her permission for the picture to be used.

Competing interests

PD heads the EuSim group, a network of simulation experts, providing faculty development programs. RK and PN are part of this network as faculty members. Mary Patterson occasionally consults for SimHealth Group.


Contributor Information

Peter Dieckmann, Email: mail@peter-dieckmann.de.

Mary Patterson, Email: mpatterson@childrensnational.org.

Saadi Lahlou, Email: s.lahlou@lse.ac.uk.

Jessica Mesman, Email: j.mesman@maastrichtuniversity.nl.

Patrik Nyström, Email: patrik.nystrom@arcada.fi.

Ralf Krage, Email: r.krage@vumc.nl.

References

- 1. Eppich W, Cheng A. Promoting Excellence and Reflective Learning in Simulation (PEARLS): development and rationale for a blended approach to health care simulation debriefing. Simul Healthc. 2015;10(2):106–115. doi: 10.1097/SIH.0000000000000072.

- 2. Kolbe M, Weiss M, Grote G, Knauth A, Dambach M, Spahn DR, et al. TeamGAINS: a tool for structured debriefings for simulation-based team trainings. BMJ Qual Saf. 2013;22(7):541–553. doi: 10.1136/bmjqs-2012-000917.

- 3. Smith-Jentsch KA, Cannon-Bowers JA, Tannenbaum SR, Salas E. Guided team self-correction impacts on team mental models, processes, and effectiveness. Small Group Res. 2008;39:303–329. doi: 10.1177/1046496408317794.

- 4. Amalberti R, Vincent C, Auroy Y, de Saint Maurice G. Violations and migrations in health care: a framework for understanding and management. Qual Saf Health Care. 2006;15(Suppl 1):i66–i71. doi: 10.1136/qshc.2005.015982.

- 5. Gillespie BM, Withers TK, Lavin J, Gardiner T, Marshall AP. Factors that drive team participation in surgical safety checks: a prospective study. Patient Saf Surg. 2016;10:3. doi: 10.1186/s13037-015-0090-5.

- 6. Hollnagel E. The ETTO principle: efficiency-thoroughness trade-off: why things that go right sometimes go wrong. Burlington: Ashgate; 2009.

- 7. Hollnagel E. FRAM, the functional resonance analysis method: modelling complex socio-technical systems. Farnham, Surrey, UK England. Burlington: Ashgate; 2012.

- 8. Hollnagel E. Safety-I and safety-II: the past and future of safety management. Farnham, Surrey, UK England. Burlington: Ashgate Publishing Company; 2014.

- 9. Braithwaite J, Wears RL, Hollnagel E. Resilient health care: turning patient safety on its head. Int J Qual Health Care. 2015;27(5):418–420. doi: 10.1093/intqhc/mzv063.

- 10. Wears RL, Hollnagel E, Braithwaite J. The resilience of everyday clinical work. Farnham, Surrey. Burlington: Ashgate; 2015.

- 11. Hollnagel E. Safety-II in practice: developing the resilience potentials. Abingdon; New York: Routledge; 2017.

- 12. Dekker S. Just culture: restoring trust and accountability in your organization. 3rd ed. Boca Raton: CRC Press, Taylor & Francis Group; 2017.

- 13. Dekker S. Safety differently: human factors for a new era. 2nd ed. Boca Raton: CRC Press, Taylor & Francis Group; 2015.

- 14. Owen C, Béguin P, Wackers GL. Risky work environments: reappraising human work within fallible systems. Farnham; Burlington: Ashgate; 2009.

- 15. Kneebone RL, Nestel D, Vincent C, Darzi A. Complexity, risk and simulation in learning procedural skills. Med Educ. 2007;41(8):808–814. doi: 10.1111/j.1365-2923.2007.02799.x.

- 16. Dekker S. Drift into failure: from hunting broken components to understanding complex systems. Farnham; Burlington: Ashgate; 2011.

- 17. Woods D. Essential characteristics of resilience. In: Hollnagel E, Woods DD, Leveson N, editors. Resilience engineering: concepts and precepts. Boca Raton: Taylor & Francis; 2006.

- 18. Bandura A. Self-efficacy: toward a unifying theory of behavioral change. Psychol Rev. 1977;84(2):191–215. doi: 10.1037/0033-295X.84.2.191.

- 19. Cooperrider DL, Whitney D. Appreciative inquiry: a positive revolution in change. 1st ed. San Francisco: Berrett-Koehler; 2005.

- 20. Cooperrider DL, Whitney D, Stavros JM. Appreciative inquiry handbook: for leaders of change. 2nd ed. Brunswick; San Francisco: Crown Custom Publishing; 2008.

- 21. Baxter R, Taylor N, Kellar I, Lawton R. What methods are used to apply positive deviance within healthcare organisations? A systematic review. BMJ Qual Saf. 2016;25(3):190–201. doi: 10.1136/bmjqs-2015-004386.

- 22. Bradley EH, Curry LA, Ramanadhan S, Rowe L, Nembhard IM, Krumholz HM. Research in action: using positive deviance to improve quality of health care. Implement Sci. 2009;4:25. doi: 10.1186/1748-5908-4-25.

- 23. Mesman J. Resources of strength: an exnovation of hidden competences to preserve patient safety. In: Rowley E, Waring J, editors. A socio-cultural perspective on patient safety. Aldershot: Ashgate; 2011.

- 24. Iedema R, Mesman J, Carroll K. Visualising health care practice improvement: innovation from within. London: Radcliffe Publishing; 2013.

- 25. De Wilde R. Innovating Innovation: a contribution to the philosophy of the future. Paper read at the Policy Agendas for Sustainable Technology Innovation Conference, London; 1–3 December 2000.

- 26. Staender S. Safety-II and resilience: the way ahead in patient safety in anaesthesiology. Curr Opin Anaesthesiol. 2015;28(6):735–739. doi: 10.1097/ACO.0000000000000252.

- 27. Feeley D. Six Resolutions to Reboot Patient Safety. 2017. Available from: http://www.ihi.org/communities/blogs/_layouts/15/ihi/community/blog/ItemView.aspx?List=7d1126ec-8f63-4a3b-9926-c44ea3036813&ID=365&Web=1e880535-d855-4727-a8c1-27ee672f115d.

- 28. Patterson M, Deutsch E, Jacobson L. Simulation: closing the gap between work-as-imagined and work-as-done. In: Hollnagel E, Braithwaite J, Wears R, editors. Resilient healthcare volume 3: reconciling work-as-imagined and work-as-done. Boca Raton: CRC Press, Taylor and Francis Group; 2016.

- 29. Leontyev AN. The development of Mind. Selected Works of Aleksei Nikolaevich Leontyev. Pacifica: Marxist Internet Archive; 2009. Available from: https://www.marxists.org/archive/leontev/works/development-mind.pdf.

- 30. Le Bellu S, Lahlou S, Nosulenko VN, Samoylenko ES. Studying activity in manual work: a framework for analysis and training. Le Travail Humain. 2016;79(1):216–238. doi: 10.3917/th.791.0007.

- 31. Gibson JJ. The ecological approach to visual perception. Boston: Houghton Mifflin; 1979.

- 32. Lahlou S. Installation theory. The societal construction and regulation of behaviour. Cambridge: Cambridge University Press; 2017.

- 33. Rasmussen MB, Dieckmann P, Barry Issenberg S, Ostergaard D, Soreide E, Ringsted CV. Long-term intended and unintended experiences after Advanced Life Support training. Resuscitation. 2013;84(3):373–377. doi: 10.1016/j.resuscitation.2012.07.030.

- 34. Leplat J, Hoc J-M. Tâche et activité dans l’analyse psychologique des situations. Cah Psychol Cogn. 1983;3(1):49–63.

- 35. Bogdanovic J, Perry J, Guggenheim M, Manser T. Adaptive coordination in surgical teams: an interview study. BMC Health Serv Res. 2015;15:128. doi: 10.1186/s12913-015-0792-5.

- 36. Kolbe M, Burtscher MJ, Manser T. Co-ACT—a framework for observing coordination behaviour in acute care teams. BMJ Qual Saf. 2013;22(7):596–605. doi: 10.1136/bmjqs-2012-001319.

- 37. Burtscher MJ, Manser T, Kolbe M, Grote G, Grande B, Spahn DR, et al. Adaptation in anaesthesia team coordination in response to a simulated critical event and its relationship to clinical performance. Br J Anaesth. 2011;106(6):801–806. doi: 10.1093/bja/aer039.

- 38. Burtscher MJ, Wacker J, Grote G, Manser T. Managing nonroutine events in anesthesia: the role of adaptive coordination. Hum Factors. 2010;52(2):282–294. doi: 10.1177/0018720809359178.

- 39.Manser T, Howard SK, Gaba DM. Adaptive coordination in cardiac anaesthesia: a study of situational changes in coordination patterns using a new observation system. Ergonomics. 2008;51(8):1153–1178. doi: 10.1080/00140130801961919.

- 40.Manser T, Dieckmann P, Wehner T, Rall M. Comparison of anaesthetists’ activity patterns in the operating room and during simulation. Ergonomics. 2007;50(2):246–260. doi: 10.1080/00140130601032655.

- 41.Gaba DM, Fish KJ, Howard SK, Burden AR. Crisis management in anesthesiology. 2nd ed. Philadelphia: Elsevier/Saunders; 2015.

- 42.Dieckmann P, Krage R. Simulation and psychology: creating, recognizing and using learning opportunities. Curr Opin Anaesthesiol. 2013;26(6):714–720. doi: 10.1097/ACO.0000000000000018.

- 43.Hollnagel E. Why do our expectations of how work should be done never correspond exactly to how work is done? In: Hollnagel E, Braithwaite J, Wears R, editors. Resilient healthcare volume 3: reconciling work-as-imagined and work-as-done. Boca Raton: CRC Press, Taylor and Francis Group; 2016.

- 44.Schmutz JB, Eppich WJ. Promoting learning and patient care through shared reflection: a conceptual framework for team reflexivity in health care. Acad Med. 2017. [Epub ahead of print].

- 45.Rudolph JW, Simon R, Raemer DB, Eppich WJ. Debriefing as formative assessment: closing performance gaps in medical education. Acad Emerg Med. 2008;15(11):1010–1016. doi: 10.1111/j.1553-2712.2008.00248.x.

- 46.Visser M. Gregory Bateson on deutero-learning and double bind: a brief conceptual history. J Hist Behav Sci. 2003;39(3):269–278. doi: 10.1002/jhbs.10112.

- 47.Bateson G. Steps to an ecology of mind: collected essays in anthropology, psychiatry, evolution, and epistemology. London; New York: Paladin; 1973.

- 48.Zeigarnik B. Das Behalten erledigter und unerledigter Handlungen. Psychologische Forschung. 1927;9:1–85. Also: Zeigarnik B. On finished and unfinished tasks. In: Ellis WD, editor. A sourcebook of gestalt psychology. New York: Humanities Press; 1967.

- 49.Hodges B. Medical education and the maintenance of incompetence. Med Teach. 2006;28(8):690–696. doi: 10.1080/01421590601102964.

- 50.Nestel D, Bearman M. Simulated patient methodology: theory, evidence and practice. Chichester, West Sussex; Hoboken: John Wiley & Sons Inc.; 2015.

- 51.Kyle RR, Murray WB. Clinical simulation: operations, engineering, and management. Burlington: Academic Press; 2008.

- 52.Levine AI, DeMaria S, Schwartz AD, Sim AJ. The comprehensive textbook of healthcare simulation. New York: Springer; 2014.

- 53.Grant VJ, Cheng A. Comprehensive healthcare simulation: pediatrics. Switzerland: Springer; 2016.

- 54.Riley RH. Manual of simulation in healthcare. Oxford: Oxford University Press; 2008.

- 55.Dieckmann P, Gaba D, Rall M. Deepening the theoretical foundations of patient simulation as social practice. Simul Healthc. 2007;2(3):183–193. doi: 10.1097/SIH.0b013e3180f637f5.

- 56.Dieckmann P, Lippert A, Glavin R, Rall M. When things do not go as expected: scenario life savers. Simul Healthc. 2010;5(4):219–225. doi: 10.1097/SIH.0b013e3181e77f74.

- 57.Benishek LE, Lazzara EH, Gaught WL, Arcaro LL, Okuda Y, Salas E. The Template of Events for Applied and Critical Healthcare Simulation (TEACH Sim): a tool for systematic simulation scenario design. Simul Healthc. 2015;10(1):21–30. doi: 10.1097/SIH.0000000000000058.

- 58.Jarzemsky P, McCarthy J, Ellis N. Incorporating quality and safety education for nurses competencies in simulation scenario design. Nurse Educ. 2010;35(2):90–92. doi: 10.1097/NNE.0b013e3181d52f6e.

- 59.Kobayashi L, Suner S, Shapiro MJ, Jay G, Sullivan F, Overly F, et al. Multipatient disaster scenario design using mixed modality medical simulation for the evaluation of civilian prehospital medical response: a “dirty bomb” case study. Simul Healthc. 2006;1(2):72–78. doi: 10.1097/01.SIH.0000244450.35918.5a.

- 60.Rubio R, del Moral I, Maestre JM. Enhancing learner engagement in simulation through scenario design. Acad Med. 2014;89(10):1316–1317. doi: 10.1097/ACM.0000000000000451.

- 61.Russ AL, Zillich AJ, Melton BL, Russell SA, Chen S, Spina JR, et al. Applying human factors principles to alert design increases efficiency and reduces prescribing errors in a scenario-based simulation. J Am Med Inform Assoc. 2014;21(e2):e287–e296. doi: 10.1136/amiajnl-2013-002045.

- 62.Porter ME. What is value in health care? N Engl J Med. 2010;363(26):2477–2481. doi: 10.1056/NEJMp1011024.

- 63.Porter ME, Lee TH. From volume to value in health care: the work begins. JAMA. 2016;316(10):1047–1048. doi: 10.1001/jama.2016.11698.

- 64.Porter ME, Larsson S, Lee TH. Standardizing patient outcomes measurement. N Engl J Med. 2016;374(6):504–506. doi: 10.1056/NEJMp1511701.

- 65.Chen PP, Tsui NT, Fung AS, Chiu AH, Wong WC, Leong HT, et al. In-situ medical simulation for pre-implementation testing of clinical service in a regional hospital in Hong Kong. Hong Kong Med J. 2017;23(4):404–410. doi: 10.12809/hkmj166090.

- 66.Petrosoniak A, Auerbach M, Wong AH, Hicks CM. In situ simulation in emergency medicine: moving beyond the simulation lab. Emerg Med Australas. 2017;29(1):83–88. doi: 10.1111/1742-6723.12705.

- 67.Garber A, Shenassa H, Singh S, Posner G. In situ simulation: a patient safety frontier. J Minim Invasive Gynecol. 2015;22(6S):S138. doi: 10.1016/j.jmig.2015.08.457.

- 68.Sorensen JL, Navne LE, Martin HM, Ottesen B, Albrechtsen CK, Pedersen BW, et al. Clarifying the learning experiences of healthcare professionals with in situ and off-site simulation-based medical education: a qualitative study. BMJ Open. 2015;5(10):e008345. doi: 10.1136/bmjopen-2015-008345.

- 69.Sorensen JL, van der Vleuten C, Rosthoj S, Ostergaard D, LeBlanc V, Johansen M, et al. Simulation-based multiprofessional obstetric anaesthesia training conducted in situ versus off-site leads to similar individual and team outcomes: a randomised educational trial. BMJ Open. 2015;5(10):e008344. doi: 10.1136/bmjopen-2015-008344.

- 70.Cheng A, Grant V, Dieckmann P, Arora S, Robinson T, Eppich W. Faculty development for simulation programs: five issues for the future of debriefing training. Simul Healthc. 2015;10(4):217–222. doi: 10.1097/SIH.0000000000000090.

- 71.Chung HS, Dieckmann P, Issenberg SB. It is time to consider cultural differences in debriefing. Simul Healthc. 2013;8(3):166–170. doi: 10.1097/SIH.0b013e318291d9ef.

- 72.Dieckmann P. Simulation settings for learning in acute medical care. In: Dieckmann P, editor. Using simulation for education, training and research. Lengerich: Pabst; 2009.

- 73.Rudolph JW, Raemer DB, Simon R. Establishing a safe container for learning in simulation: the role of the presimulation briefing. Simul Healthc. 2014;9(6):339–349. doi: 10.1097/SIH.0000000000000047.

- 74.Edmondson A. Psychological safety and learning behavior in work teams. Adm Sci Q. 1999;44(2):350–383. doi: 10.2307/2666999.

- 75.Edmondson AC. Learning from failure in health care: frequent opportunities, pervasive barriers. Qual Saf Health Care. 2004;13(Suppl 2):ii3–ii9. doi: 10.1136/qshc.2003.009597.

- 76.Lahlou S, Le Bellu S, Boesen-Mariani S. Subjective evidence based ethnography: method and applications. Integr Psychol Behav Sci. 2015;49(2):216–238.

- 77.Kihlgren P, Spanager L, Dieckmann P. Investigating novice doctors’ reflections in debriefings after simulation scenarios. Med Teach. 2015;37(5):437–443. doi: 10.3109/0142159X.2014.956054.

- 78.Casey T, Griffin MA, Flatau Harrison H, Neal A. Safety climate and culture: integrating psychological and systems perspectives. J Occup Health Psychol. 2017. [Epub ahead of print].

- 79.Chen Y, McCabe B, Hyatt D. Impact of individual resilience and safety climate on safety performance and psychological stress of construction workers: a case study of the Ontario construction industry. J Saf Res. 2017;61:167–176. doi: 10.1016/j.jsr.2017.02.014.

- 80.Derickson R, Fishman J, Osatuke K, Teclaw R, Ramsel D. Psychological safety and error reporting within Veterans Health Administration hospitals. J Patient Saf. 2015;11(1):60–66. doi: 10.1097/PTS.0000000000000082.

- 81.Cheng A, Grant V, Huffman J, Burgess G, Szyld D, Robinson T, et al. Coaching the Debriefer: peer coaching to improve debriefing quality in simulation programs. Simul Healthc. 2017. [Epub ahead of print].

- 82.Cheng A, Morse KJ, Rudolph J, Arab AA, Runnacles J, Eppich W. Learner-centered debriefing for health care simulation education: lessons for faculty development. Simul Healthc. 2016;11(1):32–40. doi: 10.1097/SIH.0000000000000136.

- 83.Cheng A, Palaganas J, Eppich W, Rudolph J, Robinson T, Grant V. Co-debriefing for simulation-based education: a primer for facilitators. Simul Healthc. 2015;10(2):69–75. doi: 10.1097/SIH.0000000000000077.

- 84.Bearman M, Tai J, Kent F, Edouard V, Nestel D, Molloy E. What should we teach the teachers? Identifying the learning priorities of clinical supervisors. Adv Health Sci Educ Theory Pract. 2017. [Epub ahead of print].

- 85.Steinert Y, Mann K, Anderson B, Barnett BM, Centeno A, Naismith L, et al. A systematic review of faculty development initiatives designed to enhance teaching effectiveness: a 10-year update: BEME Guide No. 40. Med Teach. 2016;38(8):769–786. doi: 10.1080/0142159X.2016.1181851.

- 86.Irby DM, O’Sullivan PS, Steinert Y. Is it time to recognize excellence in faculty development programs? Med Teach. 2015:1–2. [Epub ahead of print].

- 87.Steinert Y, Naismith L, Mann K. Faculty development initiatives designed to promote leadership in medical education. A BEME systematic review: BEME Guide No. 19. Med Teach. 2012;34(6):483–503. doi: 10.3109/0142159X.2012.680937.

- 88.Steinert Y. Faculty development: on becoming a medical educator. Med Teach. 2012;34(1):74–76. doi: 10.3109/0142159X.2011.596588.

- 89.Schein EH. Humble inquiry: the gentle art of asking instead of telling. San Francisco: Berrett-Koehler Publishers, Inc.; 2013.

- 90.Krogh K, Bearman M, Nestel D. “Thinking on your feet”—a qualitative study of debriefing practice. Adv Simul. 2016;1:12. doi: 10.1186/s41077-016-0011-4.

- 91.Dieckmann P, Molin Friis S, Lippert A, Ostergaard D. The art and science of debriefing in simulation: ideal and practice. Med Teach. 2009;31(7):e287–e294. doi: 10.1080/01421590902866218.

Data Availability Statement

The only data reported is available in the text above.