Abstract

Neural activity in the striatum has consistently been shown to scale with the value of anticipated rewards. As a result, it is common across a number of neuroscientific subdisciplines to associate activation in the striatum with anticipation of a rewarding outcome or a positive emotional state. However, most studies have failed to dissociate expected value from the motivation associated with seeking a reward. Although motivation generally scales positively with increases in potential reward, there are circumstances in which this linkage does not apply. The current study dissociates value-related activation from that induced by motivation alone by employing a task in which motivation increased as anticipated reward decreased. This design reverses the typical relationship between motivation and reward, allowing us to differentially investigate fMRI BOLD responses that scale with each. We report that activity scaled differently with value and motivation across the striatum. Specifically, responses in the caudate and putamen increased with motivation, whereas nucleus accumbens activity increased with expected reward. Consistent with this, self-report ratings indicated a positive association between caudate and putamen activity and arousal, whereas activity in the nucleus accumbens was more associated with liking. We conclude that there exist regional limits on inferring reward expectation from striatal activation.

Introduction

Neural activity in the striatum has consistently been shown to scale with the value of anticipated rewards in humans (Delgado, Locke, Stenger, & Fiez, 2003; Knutson, Adams, Fong, & Hommer, 2001) and other animals (Cromwell, Hassani, & Schultz, 2005; Cromwell & Schultz, 2003; Kawagoe, Takikawa, & Hikosaka, 1998). On the basis of this association, a positive emotional state or the anticipation of a rewarding outcome is often inferred based on activation in many striatal regions, including the nucleus accumbens (Cloutier, Heatherton, Whalen, & Kelley, 2008; Harbaugh, Mayr, & Burghart, 2007), putamen (Kang et al., 2009; Aron et al., 2005), and caudate (Delgado, Frank, & Phelps, 2005; King-Casas et al., 2005).

Most fMRI studies of reward have measured neural activity as people make decisions or anticipate executing actions that may lead to the acquisition of a positive outcome. However, this approach generally fails to dissociate anticipation of reward from the motivation required to obtain the reward, which often scales with value. Thus, anticipated reward and motivation are typically coupled, such that motivation follows from the anticipation of a potential reward or avoidance of a punishment (Niv, Daw, Joel, & Dayan, 2007). This linkage makes adaptive sense, because successful foraging calls for actions that lead to maximal rewards (Pyke, Pulliam, & Charnov, 1977).

Motivation, as we use the term herein, refers to the drive for action that leads one to work to obtain rewards (Kouneiher, Charron, & Koechlin, 2009; Pessoa, 2009; Niv et al., 2007). Under this definition, motivation precisely targets the cognitions and behaviors that occur in preparation for action. Thus, although the “energizing” nature of motivation directly follows from the availability of reward, it is itself a distinct construct that can be manipulated and studied experimentally. Moreover, motivation is preliminary to and distinct from motor preparation itself. Indeed, motivation may be expected to prioritize subsequent action planning.

There is clearly an adaptive value for a tight linkage between reward and motivation. Nonetheless, emerging evidence suggests that they can be neurally dissociated. Activation in the ventral and dorsal striatum is greatest when reward receipt requires direct action, suggesting that reward-related activity may be contingent on motivation (O'Doherty et al., 2004; Zink, Pagnoni, Martin-Skurski, Chappelow, & Berns, 2004). Furthermore, independent manipulation of outcome reward value and motor response requirements (i.e., go vs. no-go) suggests that striatal activity is more dependent on motor demands than anticipated reward (Kurniawan, Guitart-Masip, & Dayan, 2013; Guitart-Masip et al., 2011, 2012).

In this article, we argue that different parts of the striatum specialize in processing anticipated reward versus motivation. Our thesis is consistent with rodent pharmacological studies and studies of striatal anatomical connectivity, which suggest that ventral striatum function is more associated with reward anticipation, whereas dorsal striatal function is more closely associated with processes related to action preparation (Mogenson, Jones, & Yim, 1980). By distinguishing ventral from dorsal striatal components, we show that it is possible to identify distinct responses to anticipated reward value and the motivation required to obtain it.

We distinguished anticipated reward from motivation by using a task that is unique to our knowledge in that participants exerted more effort to obtain rewards with lower probability of occurrence. This reversed the correlation between expected value and motivation evident in most studies for which motivation directly follows anticipated reward. Thus, in our task, if activity in a given striatal region were driven by expected value, its activation should have increased with reward likelihood. On the other hand, if activity in that striatal region were more closely aligned with motivation, its activation should have decreased with reward probability, because increased reward likelihood also involved decreased motivation.

Methods

A total of 16 participants completed the study (seven men, nine women; aged 18–34 years, mean = 21.6 years, SD = 4.2 years). One participant was excluded for excessive motion (>2 mm between acquisitions), leaving 15 participants for all analyses. All participants gave informed consent before participating in the study using procedures approved by the Stanford Institutional Review Board.

RT Task

Before scanning, participants completed a 12-trial motor task to assess their baseline RTs. Participants responded as quickly as possible by pressing a button in response to the appearance of a target that was shown 2–4 sec after the start of each trial (uniformly distributed). The median RT across the 12 trials was taken as the participants' baseline RT for the modified monetary incentive delay task (MID; Knutson, Taylor, Kaufman, Peterson, & Glover, 2005).

Modified MID Task

During the scan, participants engaged in a modified version of the MID task, which was divided into four 40-trial blocks lasting roughly 10 min each. In this task, participants were instructed to respond as quickly as possible following the onset of an unpredictable cued target. If the response occurred before the offset of the target, money was added to the participant's earnings. Participants were informed that they would receive half of this total sum in cash at the end of the session.

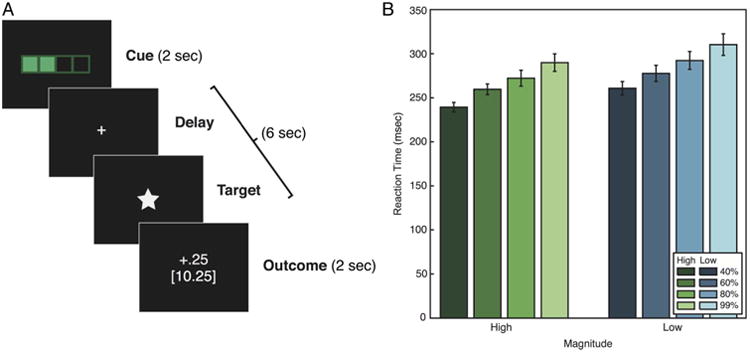

Each trial was divided into four parts: (1) cue, (2) delay, (3) response, and (4) outcome (Figure 1A). During the cue phase (2 sec), participants were shown a bar (cue) whose fill and color denoted that trial's probability of reward (40%, 60%, 80%, or 99%) and magnitude of payout ($0.25 or $1.00), respectively. The bar had four levels of fill, with less fill indicating lower trial difficulty, hence greater probability of obtaining reward. The bar was either white or green in color; white signified a low magnitude trial, whereas green indicated high magnitude. Probability and magnitude were manipulated orthogonally, with each probability–magnitude pair occurring five times within each 40-trial block.

Figure 1.

(A) Participants were scanned while performing a modified version of a MID task. At the start of each trial, participants were cued for 2 sec with an image indicating the difficulty level and the magnitude of reward. After a variable 2- to 4.5-sec delay, a probe appeared on the screen. To obtain reward, participants had to respond to the probe within a threshold RT determined by the difficulty level of the trial. Following this response period, feedback was presented for 2 sec, indicating the reward received on the current trial and cumulatively throughout the experiment. (B) RTs in each condition of the task. RTs were faster for trials of higher difficulty (lower probability of success), F(3, 42) = 25.51, p < .001, and higher reward magnitude, F(1, 14) = 18.33, p < .001. Error bars represent ±1 within-subject standard error.

At the end of the cue phase, a blank screen with a fixation cross was shown for a random 2–4.5 sec (uniform) duration. Following this delay, the target probe (a white star) was presented briefly on the screen, and participants were instructed to press a button to respond before it disappeared. The duration of the response probe presentation was determined based on a staircase procedure (discussed in the next section) that dynamically adjusted the probe duration to achieve the target probability of reward for each trial type. Following the response period, a variable duration ISI was used to maintain a constant 6 sec between cue offset and presentation of the trial outcome.

In the final part of each trial, participants received feedback on their performance for 2 sec. Two numbers were displayed in the center of the screen: the amount of money earned on that trial ($0.25, $1.00, or $0.00 in the case of a failed trial) and the participant's total earnings in the experiment up to that point. Trials were separated by an intertrial interval of 2, 4, or 6 sec.
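The trial structure described above can be sketched in a few lines of Python. This is an illustrative reconstruction rather than the authors' stimulus code. It assumes that trial order was shuffled within each block, that intertrial intervals were drawn with equal probability, and that the ISI filled the time from probe offset to outcome; the names `make_block` and `isi_after_probe` are hypothetical.

```python
import random

PROBABILITIES = (0.40, 0.60, 0.80, 0.99)   # cued reward probabilities
MAGNITUDES = (0.25, 1.00)                  # payout in dollars
REPEATS_PER_BLOCK = 5                      # each pair occurs five times per block
CUE_TO_OUTCOME_SEC = 6.0                   # constant interval from cue offset to outcome


def make_block(rng):
    """Return one 40-trial block with a delay and ITI assigned to every trial."""
    trials = [
        {"probability": p, "magnitude": m}
        for p in PROBABILITIES
        for m in MAGNITUDES
        for _ in range(REPEATS_PER_BLOCK)
    ]
    rng.shuffle(trials)                            # assumption: random order within a block
    for trial in trials:
        trial["delay_sec"] = rng.uniform(2.0, 4.5)   # fixation period before the probe
        trial["iti_sec"] = rng.choice((2, 4, 6))     # assumption: equiprobable ITIs
    return trials


def isi_after_probe(delay_sec, probe_sec):
    """ISI that keeps cue offset to outcome at 6 sec (assumes the ISI starts at probe offset)."""
    return CUE_TO_OUTCOME_SEC - delay_sec - probe_sec


block = make_block(random.Random(0))
print(len(block), "trials in the block")
```

With a 3-sec delay and a 0.25-sec probe, for example, `isi_after_probe(3.0, 0.25)` leaves 2.75 sec before the outcome is shown.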

Staircase Procedure

The probability of success on each trial type was fixed using a staircase procedure that determined the amount of time that the probe would remain on the screen before disappearing. Eight separate staircases were implemented, each corresponding to a separate trial type. The staircases were each seeded with the participants' median RT in the RT task. To ensure that the trial types initially felt qualitatively different according to their respective difficulty levels, an offset of −50, 0, 50, and 100 msec was applied to the initial values for the 40%, 60%, 80%, and 99% reward probability trials, respectively. Following each trial, the staircase corresponding to that trial type was adjusted based on the participants' response. After successful trials, the probe duration was decreased by 60, 40, 20, or 1 msec for the four respective probability levels. After failed trials, the duration was increased by 40, 60, 80, or 99 msec for the four probability levels. Adjusting the duration in this way fixed the probability of success for each trial type and controlled for differences in RTs across participants.
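The step sizes above fix the success rate because a weighted staircase settles where the expected change in probe duration is zero, that is, where p × (step down) = (1 − p) × (step up). The sketch below checks this for the four reported step pairs and simulates the procedure. The simulated participant (Gaussian RTs with a 250-msec mean and 40-msec SD) is purely hypothetical, as are the function names.

```python
import random

# Step sizes in msec from the text, keyed by target reward probability.
STEP_DOWN_MS = {0.40: 60, 0.60: 40, 0.80: 20, 0.99: 1}     # applied after a success
STEP_UP_MS = {0.40: 40, 0.60: 60, 0.80: 80, 0.99: 99}      # applied after a failure
START_OFFSET_MS = {0.40: -50, 0.60: 0, 0.80: 50, 0.99: 100}


def equilibrium_probability(target):
    """Success rate at which p * step_down equals (1 - p) * step_up."""
    down, up = STEP_DOWN_MS[target], STEP_UP_MS[target]
    return up / (up + down)


def update_duration(duration_ms, target, success):
    """Shorten the probe after a success, lengthen it after a failure."""
    if success:
        return duration_ms - STEP_DOWN_MS[target]
    return duration_ms + STEP_UP_MS[target]


def simulate(target, baseline_rt_ms=250.0, rt_sd_ms=40.0, n_trials=2000, seed=0):
    """Run one staircase against a hypothetical participant; return the success rate."""
    rng = random.Random(seed)
    duration = baseline_rt_ms + START_OFFSET_MS[target]   # seeded at baseline RT plus offset
    wins = 0
    for _ in range(n_trials):
        rt = rng.gauss(baseline_rt_ms, rt_sd_ms)          # assumption: Gaussian RTs
        success = rt < duration                           # response beat the probe offset
        wins += int(success)
        duration = update_duration(duration, target, success)
    return wins / n_trials


for target in (0.40, 0.60, 0.80, 0.99):
    print(target, equilibrium_probability(target), round(simulate(target), 3))
```

Because each staircase tracks the participant's own RT distribution, the same step sizes yield the same success rates for fast and slow responders, which is the sense in which the procedure controls for RT differences across participants.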

Self-report Measures

Following scanning, participants were administered surveys that probed individual differences in subjective valuation. For each of the eight cues, participants rated “liking” and “arousal” associated with that trial type. Additionally, participants were asked to estimate the likelihood of earning money for each cue type, allowing us to infer the expected value associated with each cue for each participant.

fMRI Acquisition

Functional images were acquired with a 3T General Electric Discovery scanner (Waukesha, WI) using a 32-channel head coil that minimized susceptibility artifacts in the ventral striatum and ventromedial pFC. Whole-brain BOLD weighted echo-planar images were acquired in 40 oblique axial slices parallel to the AC-PC line with a 2000-msec repetition time (slice thickness = 3.4 mm, no gap, echo time = 30 msec, flip angle = 77°, field of view = 21.8 cm, 64 × 64 matrix, interleaved). High-resolution T2-weighted fast spin-echo structural images (BRAVO) were acquired for anatomical reference (repetition time = 8.2 msec, echo time = 3.2 msec, flip angle = 12°, slice thickness = 1.0 mm, field of view = 24 cm, 256 × 256).

fMRI Analysis

Preprocessing and whole-brain analyses were conducted with Analysis of Functional NeuroImages (AFNI) software (Cox, 1996). Data were slice time-corrected within each volume and corrected for 3-D motion across volumes. The data used in whole-brain analyses were spatially smoothed using a 4-mm FWHM filter over a brain mask that excluded ventricles, surrounding CSF, and skull; no smoothing was applied to the data used for the anatomical ROI analyses. Voxel-wise BOLD signals were converted to percent signal change by normalizing by mean BOLD amplitude across the experiment. No participant included in the analyses moved more than 2 mm in any dimension between volumes.

The preprocessed data were normalized to a Talairach template. We transformed the T2 structural image to normalized space and then applied this transform to the preprocessed functional images. The normalized functional images were then analyzed using a general linear model in AFNI. The model consisted of multiple regressors to estimate responses to each component of the task, which were convolved with a two-parameter gamma variate function. We hypothesized that expected reward and motivation to seek this reward would be evident in neural activity elicited by the cue presentation and persisting into the initial part of the delay period before response onset. We modeled this using eight 4-sec duration regressors spanning the cue period and the initial 2 sec of the delay period, one for each of the eight different trial types. An additional two 2-sec duration regressors were included for the response period to (1) capture mean motor-related activation and (2) control for activation that parametrically varied with RT. Four 2-sec regressors accounted for activation during the outcome period, corresponding to positive and negative feedback at high and low magnitude. Additional covariates accounted for head motion and third-order polynomial trends in BOLD signal amplitude across the duration of the scan blocks.
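To illustrate how one of the cue-period regressors could be built, the sketch below convolves a 4-sec boxcar (the 2-sec cue plus the first 2 sec of the delay) with a two-parameter gamma variate and samples the result once per 2-sec volume. It is not the AFNI implementation. The shape parameters b = 8.6 and c = 0.547 are assumed here because they are the values commonly given as defaults for AFNI's gamma variate basis; the paper does not report which values were used. The onsets and the 0.1-sec grid are hypothetical.

```python
import math

TR_SEC = 2.0    # repetition time from the acquisition section
DT_SEC = 0.1    # fine time grid used for the convolution (assumed)


def gamma_variate(t, b=8.6, c=0.547):
    """Two-parameter gamma variate scaled so that its peak (at t = b * c) equals 1."""
    if t <= 0:
        return 0.0
    return (t / (b * c)) ** b * math.exp(b - t / c)


def make_regressor(onsets_sec, duration_sec, n_volumes):
    """Convolve a boxcar with the gamma variate and sample it at each volume onset."""
    n_fine = int(round(n_volumes * TR_SEC / DT_SEC))
    boxcar = [0.0] * n_fine
    for onset in onsets_sec:
        start = int(round(onset / DT_SEC))
        stop = int(round((onset + duration_sec) / DT_SEC))
        for i in range(start, min(stop, n_fine)):
            boxcar[i] = 1.0
    kernel = [gamma_variate(k * DT_SEC) for k in range(int(round(20.0 / DT_SEC)))]
    step = int(round(TR_SEC / DT_SEC))
    regressor = []
    for volume in range(n_volumes):
        i = volume * step
        # Discrete convolution evaluated only at the sampled time point.
        total = sum(boxcar[i - k] * kernel[k] for k in range(min(i + 1, len(kernel))))
        regressor.append(total * DT_SEC)
    return regressor


# Hypothetical cue onsets (in sec) for a single trial type.
cue_regressor = make_regressor(onsets_sec=[10.0, 40.0], duration_sec=4.0, n_volumes=40)
print([round(x, 2) for x in cue_regressor[:12]])
```

One such regressor per trial type, together with the response, outcome, motion, and polynomial drift terms, would form the columns of the design matrix.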

Two contrasts of interest were performed at each voxel during the cue and delay period: activation that scales linearly with probability, independent of magnitude, and activation that scales with magnitude, independent of probability. Maps of t statistics for each contrast were re-sampled and spatially normalized by warping to Talairach space. Whole-brain statistical maps were generated using one-sample t tests at each voxel to localize regions of the brain that showed significant correlations with each of the contrasts across participants. Whole-brain maps were thresholded at p < .05, corrected based on false discovery rate.
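The two contrasts can be written as weight vectors over the eight cue regressors. The sketch below is one plausible coding, not necessarily the one used in the analysis: it assumes the linear probability contrast uses the mean-centered cued probabilities (equally spaced weights such as −3, −1, 1, 3 would be another reasonable choice), and the function names are hypothetical.

```python
PROBABILITIES = (0.40, 0.60, 0.80, 0.99)
MAGNITUDES = (0.25, 1.00)
# One entry per cue regressor: four probabilities at low magnitude, then four at high.
CONDITIONS = [(p, m) for m in MAGNITUDES for p in PROBABILITIES]


def linear_probability_weights():
    """Mean-centered probability; the weights sum to zero within each magnitude level."""
    mean_p = sum(PROBABILITIES) / len(PROBABILITIES)
    return [p - mean_p for p, _ in CONDITIONS]


def magnitude_weights():
    """+1 for high and -1 for low magnitude; the weights sum to zero within each probability."""
    return [1.0 if m == max(MAGNITUDES) else -1.0 for _, m in CONDITIONS]


def apply_contrast(weights, betas):
    """Weighted sum of the eight condition estimates for one voxel or ROI."""
    return sum(w * b for w, b in zip(weights, betas))


# Hypothetical estimates that depend on magnitude only.
betas = [1.0 if m == 1.00 else 0.5 for _, m in CONDITIONS]
print(apply_contrast(magnitude_weights(), betas))
print(round(apply_contrast(linear_probability_weights(), betas), 9))
```

Because probability and magnitude were crossed, the two weight vectors are orthogonal, so a response driven purely by magnitude contributes nothing to the probability contrast and vice versa.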

Previous work has shown that the striatum differs in function along its dorsal-ventral axis (Haber & Knutson, 2009; Balleine, Delgado, & Hikosaka, 2007). Accordingly, we studied neural activity across different striatal subregions by analyzing task-related activity in anatomically defined (and therefore statistically unbiased) ROIs in addition to performing whole-brain analyses. Critically, although the ventral and dorsal striatum are dissociable in terms of their function, there exists no clear demarcation of the anatomical boundary between subregions (Haber & Knutson, 2009). To be as unbiased as possible, we defined ROIs based on the closest identifiable anatomy: the caudate, putamen, and the NAcc. As will be seen, responses differed in the NAcc relative to the caudate and putamen. We explicitly avoid using the terms “ventral striatum” and “dorsal striatum” throughout the remainder of the manuscript because the patterns of activity evident in our data were consistent across the caudate and putamen, extending into the ventral putamen. Instead, we focus on two ROIs in the analyses that follow, referring to the caudate and putamen together as “CPu” and contrasting these responses with the “NAcc.”

ROI masks for the CPu and NAcc were generated anatomically using the Freesurfer image analysis suite (Fischl et al., 2002) and visually inspected for accuracy in each participant. Percent signal change was averaged across voxels in the ROIs to calculate a mean value for each regressor within the region. Contrasts of interest in each ROI were calculated from these parameter estimates using the lmer function (lme4 package) in R, modeling participant as a random effect. Type I error probabilities (p values) associated with each contrast estimate were generated using Monte Carlo random sampling.

Results

We estimated motivation through the mean RTs for each level of reward probability and magnitude, with greater motivation indicated by lower RTs. The RTs for each cue type are shown in Figure 1B. RTs varied systematically with both reward magnitude and probability. Specifically, RTs were faster for high-magnitude trials, F(1, 14) = 18.33, p < .001, η2 = .088, and slowed linearly with increased probability, F(3, 42) = 25.51, p < .001, η2 = .243. These findings indicate that participants were more motivated on trials involving (1) higher magnitude and (2) lower reward probability.

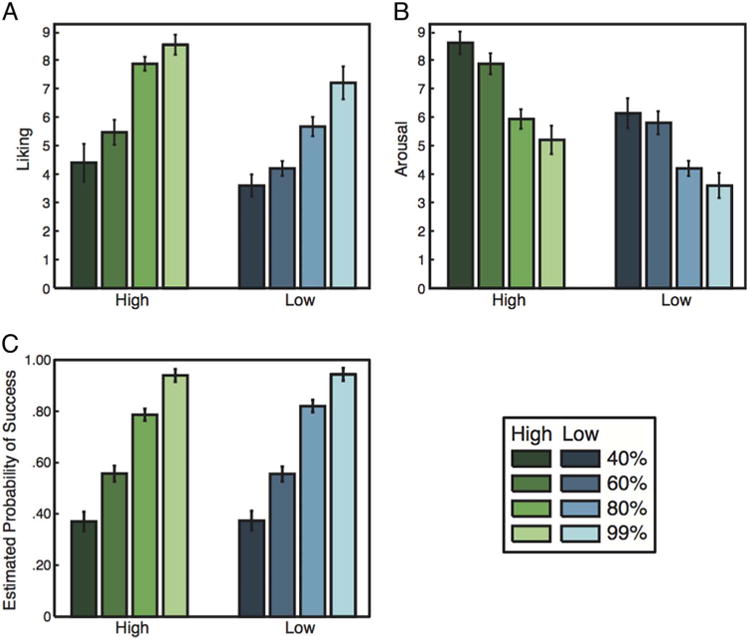

Ratings of liking, arousal, and probability also varied systematically across experimental conditions (Figure 2). As expected, liking ratings were directly related to predictors of higher expected reward. Liking ratings increased with greater probability of winning (Figure 2A), F(3, 42) = 20.05, p < .001, η2 = .433, and were greater on high magnitude than low magnitude trials (Figure 2A), F(1, 14) = 17.26, p < .001, η2 = .140. Arousal increased as probability of winning decreased (Figure 2B), F(3, 42) = 15.49, p < .001, η2 = .320, and was greater in high magnitude trials (Figure 2B), F(1, 14) = 25.10, p < .001, η2 = .234. Finally, participants' subjective ratings of probability of success for each difficulty level were highly related to actual probability (Figure 2C), F(3, 42) = 76.16, p < .001, η2 = .725. Reward magnitude did not bias estimates of probability of success, because estimates did not differ across magnitude conditions (Figure 2C), F(1, 14) = .49, p = .497, η2 = .001.

Figure 2.

(A) Self-reports of liking increased with reward probability and magnitude. (B) Arousal decreased as reward probability increased and was greater for high-magnitude trials. (C) Estimated probabilities resembled the actual probabilities for each trial type and did not differ significantly by reward magnitude.

In all fMRI analyses, we compared relative activation during the cue and delay periods, before the onset of the response probe. Relative BOLD response amplitudes therefore indicate differences in activation based on anticipated reward and trial difficulty. Critically, our analyses modeled motor responses and reward receipt separately, ruling out these factors as causes of the results that follow.

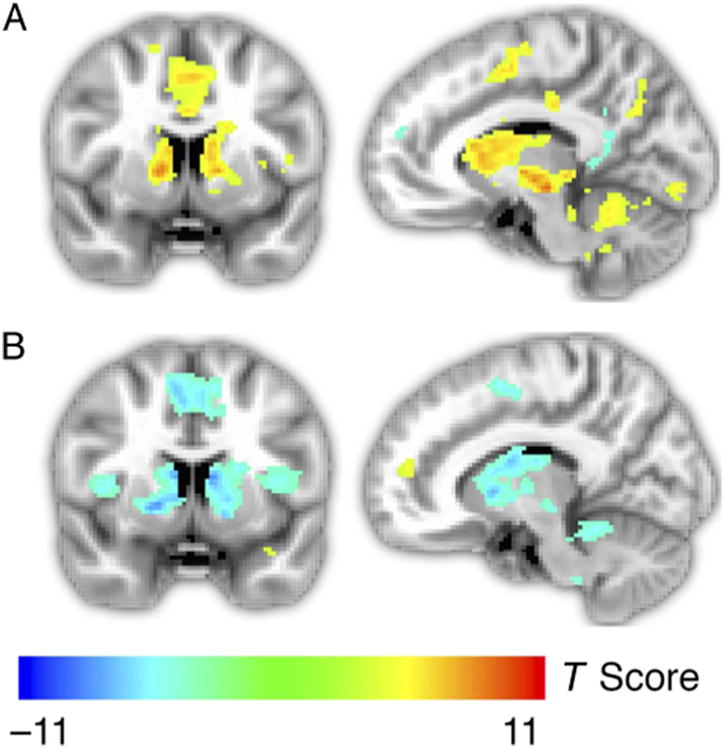

Previous studies have consistently found that anticipation of larger magnitude rewards produces larger neural responses throughout the striatum (Tobler, O'Doherty, Dolan, & Schultz, 2007; Knutson et al., 2001). We therefore tested whether magnitude had the expected effect of scaling neural responses in this study as well (Table 1). Figure 3A presents whole-brain activation maps for the high > low reward magnitude contrast at p < .05, corrected for multiple comparisons. Significant differences were found bilaterally throughout the caudate and putamen. There were no significant voxels in the NAcc for this contrast, although such an effect would be predicted based on previous work employing the MID task (e.g., Knutson et al., 2001). Instead, we found that activation in the NAcc increased with ratings of liking and probability of reward, both determinants of subjective expected value (Figure 5; discussed in greater detail below). These results are consistent with the CPu and NAcc signaling either expected reward value or motivation, because both value and motivation (indicated by RT) increased with magnitude.

Table 1. Peak Activation Coordinates from Whole-brain Analyses.

| Region | Magnitude (High > Low): Peak T Statistic (Talairach Coordinate) | Probability (Increasing): Peak T Statistic (Talairach Coordinate) |
|---|---|---|
| Right caudate | 8.20 (−9, −11, 6) | −8.35 (−12, 2, 18) |
| Left caudate | 7.94 (13, −7, 4) | −9.12 (8, −2, 14) |
| Right putamen | 7.89 (−13, −3, 6) | −11.25 (−18, −8, 0) |
| Left putamen | 5.93 (−5, 51, −6) | −7.28 (14, −8, 0) |
| Thalamus | 9.31 (−5, 3, 12) | −7.65 (0, 4, 14) |
| Supplementary motor area | 9.36 (−11, 5, 60) | −9.66 (6, 8, 54) |
| Anterior cingulate cortex | 7.69 (−3, −21, 38) | 5.68 (−18, −48, 10) |
| Cerebellum | 10.17 (−31, 49, −26) | −8.70 (1, 49, −16) |
| Right lingual gyrus | 5.34 (−25, 87, −8) | – |
| Left lingual gyrus | 5.09 (11, 89, −8) | – |
| Right precuneus | 7.14 (−11, 63, 30) | 6.80 (−29, 81, 26) |
| Left precuneus | 5.61 (1, 61, 62) | 5.20 (−34, 74, 38) |
| Posterior cingulate cortex | −5.83 (5, 55, 20) | 6.71 (5, 53, 24) |
| Right inferior frontal gyrus | 4.54 (−49, −15, 2) | – |
| Left inferior frontal gyrus | −5.58 (37, −33, 0) | – |
| Left middle frontal gyrus | 5.60 (27, −27, 28) | 5.61 (15, −49, 34) |
| Right middle frontal gyrus | – | −12.72 (−2, −10, 48) |
| Right inferior parietal lobule | 4.62 (−33, 47, 38) | – |
| Left inferior parietal lobule | 5.18 (31, 37, 36) | – |
| Left superior frontal gyrus | −4.10 (15, −27, 48) | 5.89 (13, −29, 50) |
| Right parahippocampal gyrus | −4.38 (−21, 15, −14) | 5.50 (−29, 37, −8) |
| Right precentral gyrus | 4.53 (−37, 9, 44) | −5.23 (−37, 11, 42) |
| Right supramarginal gyrus | 4.73 (−51, 41, 34) | – |
| Left angular gyrus | −5.29 (45, 69, 28) | 5.05 (43, 73, 34) |
| Right angular gyrus | – | 5.87 (−47, 65, 24) |
| Right insula | 9.10 (−30, −26, 4) | 5.85 (−35, 15, 16) |
| Left insula | 5.08 (42, −12, 6) | −9.09 (28, −18, 14) |
| Right middle occipital gyrus | – | 4.99 (−35, 79, 2) |
| Left middle occipital gyrus | – | 5.31 (35, 83, 2) |
| Left superior temporal gyrus | – | 6.41 (59, 17, 4) |
| Cingulate gyrus | – | −5.39 (−3, 21, 28) |
| Right amygdala | −4.28 (−22, 10, −12) | 7.17 (−22, 12, −14) |
| Left amygdala | – | 8.57 (21, 9, −16) |

Results significant at threshold of p < .05, false discovery rate-corrected with minimum cluster volume of 20 voxels. Peak voxels are listed by anatomical region.

Figure 3.

(A) Whole-brain analysis identifying brain areas with a significant difference in fMRI responses during anticipation of reward in high-magnitude trials versus low-magnitude trials. Activation extended throughout the CPu. (B) Whole-brain general linear model of activation that scales linearly with probability of success. As probability of success increased, activation in the CPu decreased, suggesting that activation in these structures scales with motivation rather than expected value. Activation maps were thresholded at p < .05, corrected based on false discovery rate.

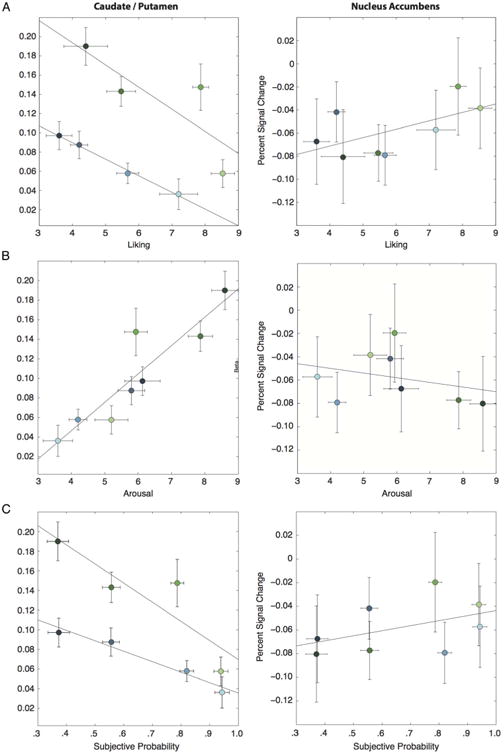

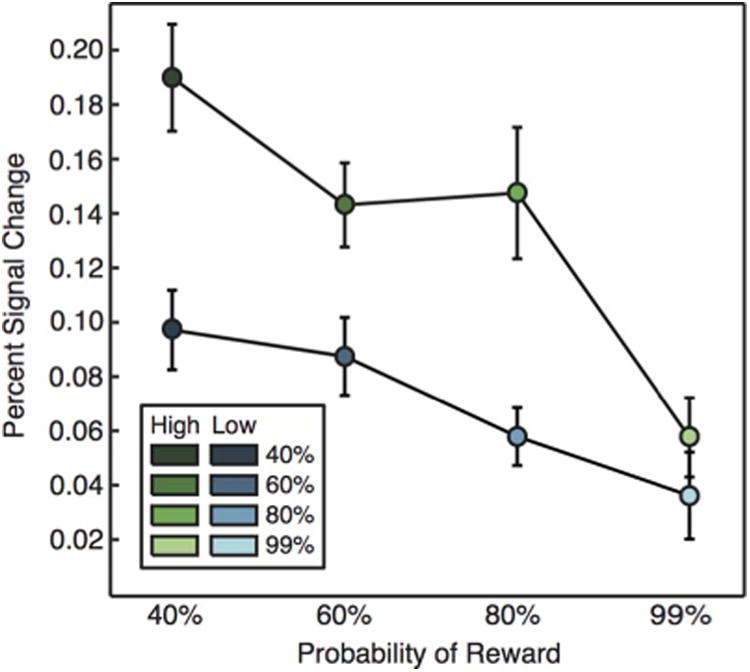

Figure 5.

(A) Activation in the CPu decreased with increasing liking (T = −3.03, p < .01), whereas activation in the NAcc increased with liking (T = 1.93, p = .057). (B) Activation in the CPu increased with arousal (T = 4.75, p < .001), but activation in the NAcc was not related to arousal (T = −.48, p = .653). (C) Activation decreased with subjective probability ratings in the CPu (T = −4.03, p < .001) but increased with subjective probability ratings in the NAcc (T = 2.06, p < .05). Error bars represent ±1 within-subject standard error.

Our primary interest was in determining how striatal activity scaled with reward probability, controlling for reward magnitude. As indicated above, both expected reward and reports of “liking” increased with reward probability. However, as rewards became more probable, motivation (as inferred from RT) decreased. Analyses based on reward probability should therefore permit differentiation of responses related to expected value and motivation.

We conducted two related analyses to determine how CPu and NAcc activity depended on reward probability. First, we ran a whole-brain regression analysis to identify brain areas for which event-related responses scaled linearly with the probability of reward across trials (Figure 3B). Throughout the bilateral CPu, this analysis indicated that activation decreased linearly with increased probability of reward, suggesting that responses in these regions scaled with motivation rather than expected reward. In a second analysis, we extracted average fMRI signals from anatomically defined ROIs that covered the CPu in each participant (Figure 4). Within these ROIs, we then determined how event-related responses scaled with magnitude and reward probability using a mixed model that predicted percent signal change using regressors for magnitude and linear variation in probability. This analysis also revealed that activation in the CPu had a negative association with probability (T = −5.66, p < .001) and a positive association with magnitude (T = 5.42, p < .001). Again, these findings are consistent with the hypothesis that CPu responses scale with motivation but not anticipated reward.
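The mixed model used in the ROI analysis can be written out explicitly. The form below is a sketch under one assumption: that "participant as a random effect" means a random intercept for each participant, which is the simplest specification consistent with the description. Here $y_{ic}$ is the percent signal change for participant $i$ in cue condition $c$, $M_c$ codes reward magnitude, and $P_c$ is the linear probability term.

```latex
y_{ic} = \beta_0 + \beta_M M_c + \beta_P P_c + u_i + \varepsilon_{ic},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),
\quad \varepsilon_{ic} \sim \mathcal{N}(0, \sigma^2)
```

Under this reading, the reported T statistics for probability and magnitude correspond to tests of $\beta_P$ and $\beta_M$, respectively.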

Figure 4.

ROI analysis by trial type in the CPu. Activation decreased linearly with probability (T = −5.66, p < .001) and was greatest on high-magnitude trials (T = 5.42, p < .001). Error bars represent ±1 within-subject standard error.

Although the whole-brain contrast results suggested that CPu activation scaled with motivation, previous work has shown that the NAcc is responsive to differences in the subjective value of anticipated reward (e.g., Knutson et al., 2005). To assess this potential dissociation, we compared responses across anatomical ROIs for the NAcc and CPu (Figure 5). This analysis revealed a clear anatomical distinction with respect to neural activity and participants' ratings of liking, arousal, and probability. First, whereas activation in the CPu decreased with increasing liking (Figure 5A, left; T = −3.03, p < .01), activation in the NAcc increased with liking (Figure 5A, right; T = 1.93, p = .057). A direct comparison between these regions confirmed this dissociation (T = 3.67, p < .001). We also found differences in relation to subjective reports of how arousing each of the stimuli was. Activity was strongly related to arousal in the CPu (Figure 5B, left; T = 4.75, p < .001) but was unrelated to arousal in the NAcc (Figure 5B, right; T = −.48, p = .653) in this study. A direct contrast of the two regions' relationships to arousal was in the direction consistent with this interpretation, although it did not reach statistical significance (T = −1.76, p = .086).

The NAcc and CPu differed in their relationship to participants' estimates of reward probability for each cue type. In the CPu, greater activation was associated with lower estimations of probability (T = −4.03, p < .001), whereas the NAcc showed the opposite relationship (T = 2.06, p < .05). This dissociation was also confirmed by direct contrast between the two regions (T = 3.68, p < .001). Using these subjective probability ratings, we generated a regressor for the expected value of each trial, calculated as reward magnitude ($0.25 or $1.00) times the probability rating endorsed by that participant for that trial type. This analysis showed the expected pattern of results in the NAcc, with greater expected value predicting greater activation. However, this effect was not statistically significant (T = 1.54, p = .169) and should therefore be interpreted with caution.
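As a worked example of the expected value regressor, the sketch below multiplies reward magnitude by a participant's own probability rating. The ratings are hypothetical, and the 0 to 100 percent response scale is an assumption, because the text does not specify how probability estimates were recorded.

```python
def expected_value(magnitude_usd, probability_rating_pct):
    """Expected value = magnitude x subjective probability (rating assumed to be in percent)."""
    return magnitude_usd * probability_rating_pct / 100.0


# Hypothetical ratings from one participant, keyed by (cued probability in %, magnitude in $).
ratings = {
    (40, 0.25): 35.0,
    (40, 1.00): 45.0,
    (99, 0.25): 90.0,
    (99, 1.00): 95.0,
}

# One expected value per cue type; these values would scale that participant's regressor.
ev = {cue: expected_value(cue[1], rating) for cue, rating in ratings.items()}
for cue, value in sorted(ev.items()):
    print(cue, round(value, 4))
```

Because the ratings differ across participants, two people viewing the same cue can be assigned different expected values, which is what distinguishes this regressor from one built on the nominal probabilities.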

Discussion

The major aim of this experiment was to differentiate neural responses to anticipated reward and motivation in the NAcc and CPu. Thus, we framed reward probability as difficulty when describing the task procedures to participants. Perhaps for this reason, trials involving lower probabilities of reward elicited greater motivation in this study. This interpretation is supported by the behavioral results, because RTs were faster for the lower probability and higher magnitude trials. Thus, although the lower probability trials were inferior in terms of expected value, they nonetheless elicited greater motivation.

We found that activation in the CPu decreased with probability and increased with the magnitude of anticipated reward. Together, these findings indicate that activation in the CPu scales with motivation rather than expected reward. The results from the ROI analysis in the CPu support this interpretation. A reward-centric account of striatal activity would predict that activation in the CPu should be greatest for the trial types that received the highest liking ratings. Instead, for trials with equivalent magnitudes of prospective reward, we found the opposite relationship—activation throughout the CPu decreased with liking but increased with ratings of arousal, a construct closely related to motivation. This occurred mainly because, although participants liked the lower probability trials less, they were simultaneously motivated toward more effortful responding because of the greater difficulty of those trials.

These results are difficult to reconcile with theories of dorsal striatal function that assume that activity in this region scales positively with measures of liking or subjective preference (Balleine et al., 2007; Hikosaka, Takikawa, & Kawagoe, 2000). They instead suggest that this common finding may occur in some regions of the striatum because anticipated reward is often conflated with motivation. Our findings therefore have important implications regarding what can be inferred based on activation in the caudate and putamen. In particular, many investigators have inferred a positive emotional state or the anticipation of a positive outcome from activation in this region (e.g., King-Casas et al., 2005; De Quervain et al., 2004). The current results suggest that such interpretations need qualification, because activation in this region can have a positive or negative relationship with expected value, depending on the extent to which value differences affect motivation.

We relied on RT as a behavioral measure of motivation. This is reasonable because faster RTs can only result from greater exertion of effort and greater effort is expected to follow from increased motivation. Our measure of motivation is therefore certainly related to other experiments that manipulate expected effort (Kurniawan et al., 2010; Croxson, Walton, O'Reilly, Behrens, & Rushworth, 2009). However, the aspect of motivation that we investigated here is qualitatively distinct in an important way. In particular, previous studies investigated activation associated with making choices regarding cognitively or physically taxing actions or receiving information that such effort would be required in the future. In these latter cases, the effortful action neither occurred nor was prepared for proximate to the time of choice; rather, effort was only considered hypothetically. Our experiment therefore studied the “energizing” effects of motivation, whereas other studies have focused on discounting value based on future anticipated work. On the basis of the current findings, these two constructs appear to be distinct.

Our results may also appear to differ from those of previous work, which found that activation in the NAcc increased in response to a cue that signaled high effort requirements for the subsequent block (Botvinick, Huffstetler, & McGuire, 2009). This stands in apparent contrast to the current results, which found that activation in the NAcc responded to differences in subjective value, rather than motivation. This discrepancy is likely attributable to a major difference regarding the design of the two studies. In the current task, effort was manipulated via reward probability, which directly influences the likelihood of obtaining reward. In contrast, Botvinick et al. (2009) manipulated effort orthogonally to reward expectancy and therefore targeted a substantially different construct.

Motivation, as assayed in our experiment, is closely related to arousal and motor preparation. However, these labels fail to fully characterize the behavioral and cognitive changes induced by our manipulation. Arousal is defined as an emotional state that can occur even in the absence of active reward seeking (e.g., Watson & Tellegen, 1985). For example, many experiments have studied arousal by having participants passively view emotionally charged stimuli and report activation patterns that differ substantially from those in the present experiment (e.g., Anders, Lotze, Erb, Grodd, & Birbaumer, 2004). Similarly, although optimal performance on our task requires motor preparation, we intentionally investigated activity elicited by a cue that preceded motor activity by several seconds. Moreover, we factored out neural responses related to motor preparation as well as possible in our analyses. Although the motivation we investigate is certainly closely associated with energizing motor preparation, we believe it is distinct from motor acts themselves. Thus, although our task bears important similarities to arousal and motor preparation, it encompasses aspects of motivation that are insufficiently characterized by these alternative labels.

Our results also suggest a reinterpretation of recent claims that the requirement for action strongly impacts activation in the CPu, whereas the valence of the potential outcome (i.e., gaining vs. losing money) has a weaker relationship (Kurniawan et al., 2013; Guitart-Masip et al., 2011, 2012). In these studies, the difference in value between a successful and an unsuccessful response was the same regardless of whether participants attempted to obtain a reward or avoid a punishment. It is therefore likely that, although the potential outcomes differed between the two conditions, the motivation to succeed was similar. Additionally, action in these studies was extrinsically motivated, because trials requiring motor responses only occurred when dictated by cues indicating trial type. In contrast, the novel design employed in the current study enabled the investigation of motivated states that were intrinsically generated.

Our primary finding is that distinct striatal regions subserve different functions and specifically that the NAcc serves a distinct function from the rest of the striatum. We found that activation in the NAcc scaled positively with measures of liking and the subjective probability of obtaining reward. This is consistent with the existing literature and reinforces the role that this region plays in valuation, independent of the actual effort required, especially when the rewards are highly salient (Litt, Plassmann, Shiv, & Rangel, 2010; Knutson et al., 2005).

The ventral striatum, including the NAcc, is particularly sensitive to susceptibility artifacts and signal dropout, which can influence the variability and consistency of analyses that target this subregion (Sacchet & Knutson, 2013). It is therefore possible that our analyses underestimated the response of the NAcc relative to the CPu. However, this would not impact the interpretation of the findings reported here, as they are based on activation patterns that were detectable in spite of potential signal loss.

Although the current task investigated motivation as indexed by RTs, these findings may extend to more general differences in motivation. Motivation manifests in many different ways, only one of which is effort (and reduced RTs). We focused on RT in this study because it provides a relatively unambiguous measure of motivation. That said, motivation is also related to measures such as willingness to pay and a desire to seek out information, both of which have previously been associated with increased activation in the CPu (De Martino, Kumaran, Holt, & Dolan, 2009; Kang et al., 2009; Plassmann, O'Doherty, & Rangel, 2007; Weber et al., 2007).

In summary, these findings bring us closer to understanding the function of the CPu and NAcc in human decision-making and motivated behavior. Whereas some accounts assume that activation in the CPu scales with expected reward, the current results indicate that striatal responses outside the NAcc are more associated with motivation, above and beyond value or liking.

References

- Anders S, Lotze M, Erb M, Grodd W, Birbaumer N. Brain activity underlying emotional valence and arousal: A response-related fMRI study. Human Brain Mapping. 2004;23:200–209. doi: 10.1002/hbm.20048.

- Aron A, Fisher H, Mashek DJ, Strong G, Li H, Brown LL. Reward, motivation, and emotion systems associated with early-stage intense romantic love. Journal of Neurophysiology. 2005;94:327–337. doi: 10.1152/jn.00838.2004.

- Balleine BW, Delgado MR, Hikosaka O. The role of the dorsal striatum in reward and decision-making. Journal of Neuroscience. 2007;27:8161–8165. doi: 10.1523/JNEUROSCI.1554-07.2007.

- Botvinick MM, Huffstetler S, McGuire JT. Effort discounting in human nucleus accumbens. Cognitive, Affective, & Behavioral Neuroscience. 2009;9:16–27. doi: 10.3758/CABN.9.1.16.

- Cloutier J, Heatherton TF, Whalen PJ, Kelley WM. Are attractive people rewarding? Sex differences in the neural substrates of facial attractiveness. Journal of Cognitive Neuroscience. 2008;20:941–951. doi: 10.1162/jocn.2008.20062.

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Cromwell HC, Hassani OK, Schultz W. Relative reward processing in primate striatum. Experimental Brain Research. 2005;162:520–525. doi: 10.1007/s00221-005-2223-z.

- Cromwell HC, Schultz W. Effects of expectations for different reward magnitudes on neuronal activity in primate striatum. Journal of Neurophysiology. 2003;89:2823–2838. doi: 10.1152/jn.01014.2002.

- Croxson PL, Walton ME, O'Reilly JX, Behrens TEJ, Rushworth MFS. Effort-based cost-benefit valuation and the human brain. Journal of Neuroscience. 2009;29:4531–4541. doi: 10.1523/JNEUROSCI.4515-08.2009.

- De Martino B, Kumaran D, Holt B, Dolan RJ. The neurobiology of reference-dependent value computation. Journal of Neuroscience. 2009;29:3833–3842. doi: 10.1523/JNEUROSCI.4832-08.2009.

- De Quervain DJF, Fischbacher U, Treyer V, Schellhammer M, Schnyder U, Buck A, et al. The neural basis of altruistic punishment. Science. 2004;305:1254–1258. doi: 10.1126/science.1100735.

- Delgado MR, Frank RH, Phelps EA. Perceptions of moral character modulate the neural systems of reward during the trust game. Nature Neuroscience. 2005;8:1611–1618. doi: 10.1038/nn1575.

- Delgado MR, Locke HM, Stenger VA, Fiez JA. Dorsal striatum responses to reward and punishment: Effects of valence and magnitude manipulations. Cognitive, Affective, & Behavioral Neuroscience. 2003;3:27–38. doi: 10.3758/cabn.3.1.27.

- Fischl B, Salat DH, Busa E, Albert M, Dieterich M, Haselgrove C, et al. Whole brain segmentation: Automated labeling of neuroanatomical structures in the human brain. Neuron. 2002;33:341–355. doi: 10.1016/s0896-6273(02)00569-x.

- Guitart-Masip M, Chowdhury R, Sharot T, Dayan P, Duzel E, Dolan RJ. Action controls dopaminergic enhancement of reward representations. Proceedings of the National Academy of Sciences, USA. 2012;109:7511–7516. doi: 10.1073/pnas.1202229109.

- Guitart-Masip M, Fuentemilla L, Bach DR, Huys QJM, Dayan P, Dolan RJ, et al. Action dominates valence in anticipatory representations in the human striatum and dopaminergic midbrain. Journal of Neuroscience. 2011;31:7867–7875. doi: 10.1523/JNEUROSCI.6376-10.2011.

- Haber SN, Knutson B. The reward circuit: Linking primate anatomy and human imaging. Neuropsychopharmacology. 2009;35:4–26. doi: 10.1038/npp.2009.129.

- Harbaugh WT, Mayr U, Burghart DR. Neural responses to taxation and voluntary giving reveal motives for charitable donations. Science. 2007;316:1622–1625. doi: 10.1126/science.1140738.

- Hikosaka O, Takikawa Y, Kawagoe R. Role of the basal ganglia in the control of purposive saccadic eye movements. Physiological Reviews. 2000;80:953–978. doi: 10.1152/physrev.2000.80.3.953.

- Kang MJ, Hsu M, Krajbich IM, Loewenstein G, McClure SM, Wang JTY, et al. The wick in the candle of learning: Epistemic curiosity activates reward circuitry and enhances memory. Psychological Science. 2009;20:963–973. doi: 10.1111/j.1467-9280.2009.02402.x.

- Kawagoe R, Takikawa Y, Hikosaka O. Expectation of reward modulates cognitive signals in the basal ganglia. Nature Neuroscience. 1998;1:411–416. doi: 10.1038/1625.

- King-Casas B, Tomlin D, Anen C, Camerer CF, Quartz SR, Montague PR. Getting to know you: Reputation and trust in a two-person economic exchange. Science. 2005;308:78–83. doi: 10.1126/science.1108062.

- Knutson B, Adams CM, Fong GW, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. Journal of Neuroscience. 2001;21:1–5. doi: 10.1523/JNEUROSCI.21-16-j0002.2001.

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. Journal of Neuroscience. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.

- Kouneiher F, Charron S, Koechlin E. Motivation and cognitive control in the human prefrontal cortex. Nature Neuroscience. 2009;12:939–945. doi: 10.1038/nn.2321.

- Kurniawan IT, Guitart-Masip M, Dayan P. Effort and valuation in the brain: The effects of anticipation and execution. Journal of Neuroscience. 2013;33:6160–6169. doi: 10.1523/JNEUROSCI.4777-12.2013.

- Kurniawan IT, Seymour B, Talmi D, Yoshida W, Chater N, Dolan RJ. Choosing to make an effort: The role of striatum in signaling physical effort of a chosen action. Journal of Neurophysiology. 2010;104:313–321. doi: 10.1152/jn.00027.2010.

- Litt A, Plassmann H, Shiv B, Rangel A. Dissociating valuation and saliency signals during decision-making. Cerebral Cortex. 2010;21:95–102. doi: 10.1093/cercor/bhq065.

- Mogenson GJ, Jones DL, Yim CY. From motivation to action: Functional interface between the limbic system and the motor system. Progress in Neurobiology. 1980;14:69–97. doi: 10.1016/0301-0082(80)90018-0.

- Niv Y, Daw ND, Joel D, Dayan P. Tonic dopamine: Opportunity costs and the control of response vigor. Psychopharmacology. 2007;191:507–520. doi: 10.1007/s00213-006-0502-4.

- O'Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- Pessoa L. How do emotion and motivation direct executive control? Trends in Cognitive Sciences. 2009;13:160–166. doi: 10.1016/j.tics.2009.01.006.

- Plassmann H, O'Doherty J, Rangel A. Orbitofrontal cortex encodes willingness to pay in everyday economic transactions. Journal of Neuroscience. 2007;27:9984–9988. doi: 10.1523/JNEUROSCI.2131-07.2007.

- Pyke GH, Pulliam HR, Charnov EL. Optimal foraging: A selective review of theory and tests. Quarterly Review of Biology. 1977;52:137–154.

- Sacchet MD, Knutson B. Spatial smoothing systematically biases the localization of reward-related brain activity. Neuroimage. 2013;66:270–277. doi: 10.1016/j.neuroimage.2012.10.056.

- Tobler PN, O'Doherty JP, Dolan RJ, Schultz W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. Journal of Neurophysiology. 2007;97:1621–1632. doi: 10.1152/jn.00745.2006.

- Watson D, Tellegen A. Toward a consensual structure of mood. Psychological Bulletin. 1985;98:219–235. doi: 10.1037//0033-2909.98.2.219.

- Weber B, Aholt A, Neuhaus C, Trautner P, Elger CE, Teichert T. Neural evidence for reference-dependence in real-market-transactions. Neuroimage. 2007;35:441–447. doi: 10.1016/j.neuroimage.2006.11.034.

- Zink CF, Pagnoni G, Martin-Skurski ME, Chappelow JC, Berns GS. Human striatal responses to monetary reward depend on saliency. Neuron. 2004;42:509–517. doi: 10.1016/s0896-6273(04)00183-7.