Abstract

The persistent use of psychostimulant drugs, despite the detrimental outcomes associated with continued drug use, may be because of disruptions in reinforcement-learning processes that enable behavior to remain flexible and goal directed in dynamic environments. To identify the reinforcement-learning processes that are affected by chronic exposure to the psychostimulant methamphetamine (MA), the current study sought to use computational and biochemical analyses to characterize decision-making processes, assessed by probabilistic reversal learning, in rats before and after they were exposed to an escalating dose regimen of MA (or saline control). The ability of rats to use flexible and adaptive decision-making strategies following changes in stimulus–reward contingencies was significantly impaired following exposure to MA. Computational analyses of parameters that track choice and outcome behavior indicated that exposure to MA significantly impaired the ability of rats to use negative outcomes effectively. These MA-induced changes in decision making were similar to those observed in rats following administration of a dopamine D2/3 receptor antagonist. These data use computational models to provide insight into drug-induced maladaptive decision making that may ultimately identify novel targets for the treatment of psychostimulant addiction. We suggest that the disruption in utilization of negative outcomes to adaptively guide dynamic decision making is a new behavioral mechanism by which MA rigidly biases choice behavior.

Introduction

Flexible decision making, or the ability to adapt choices in response to changes in the external or internal environment, is impaired in individuals dependent upon illicit substances (Ersche et al, 2008; Goldstein and Volkow, 2002; Kubler et al, 2005; Fillmore and Rush, 2006; Ghahremani et al, 2011). These drug-induced, value-based decision-making deficits may contribute to the emergence and persistence of drug-seeking and drug-taking behaviors (Jentsch and Taylor, 1999), and treatments aimed at enhancing decision-making processes have been proposed as potential therapeutics for addiction (cf. Bechara, 2005).

Decision making involves multiple latent behavioral components that involve reinforcement-learning processes (eg, prediction errors, learning rates, etc.) and enable behavior to remain flexible and goal directed (Redish, 2004; Redish et al, 2008; Dayan, 2009; Lucantonio et al, 2012). Any number of these processes can be altered by chronic exposure to drugs of abuse that may engender the development of inflexible or habitual drug-taking behaviors (Jentsch and Taylor, 1999; Everitt and Robbins, 2005). Indeed, substance-dependent individuals have difficulties using outcomes to make optimal decisions (Park et al, 2010; Sebold et al, 2014), adjusting choices when stimulus–reward contingencies are modified (Ersche et al, 2008; Ghahremani et al, 2011), being sensitive to the devaluation of stimuli (Ersche et al, 2016), and maintaining goal-directed behavior such that they are habitual when otherwise healthy controls are not (Sjoerds et al, 2013). Such latent behavioral processes can be characterized computationally using reinforcement-learning algorithms and regression analyses (Lau and Glimcher, 2005; Montague et al, 2012; Huys et al, 2015). Computational models have been used to analyze behavioral mechanism(s) underlying decision-making strategies in addicts (Volkow et al, 2006; Chiu et al, 2008; Park et al, 2010; Harlé et al, 2015), but it is unclear how complex environmental and variable drug histories in humans impact these decision-making processes.

Chronic exposure to psychostimulants in animals also disrupts reinforcement-learning processes in reversal-learning tasks (Jentsch et al, 2002; Schoenbaum et al, 2004; Stalnaker et al, 2009; Izquierdo et al, 2010; Groman et al, 2012; Cox et al, 2016) that have been linked to altered dopaminergic signaling (Groman et al, 2012). To date, such tasks commonly adopted in animal experiments, unlike those used in human studies, employ deterministic schedules of reinforcement (eg, reward or no reward) that do not fully exploit dynamic learning processes. In addition, deterministic tasks may not rely on the same latent behavioral processes and, consequently, neural mechanisms that are recruited by probabilistic schedules of reinforcement (Dalton et al, 2016). Animal studies using probabilistic reversal learning tasks, which can employ computational frameworks akin to analyses used in humans, are warranted to define discrete parameters that track decision-making strategies affected by drug use.

Dopamine acting on D1-like or D2-like receptor subtypes may mediate different aspects of decision making (Frank, 2004; Jenni et al, 2017) that are crucial for understanding the pathophysiology of addiction (Groman and Jentsch, 2012). Only a few studies in animals have combined dopamine pharmacology with computational analyses of flexible decision making analogous to those used in humans (Costa et al, 2015; Groman et al, 2016). Additional studies combining dopamine receptor pharmacology with translationally analogous tasks and analyses in animals may provide insight into the mechanisms mediating drug-induced decision-making impairments.

The current study sought to identify the behavioral and neurochemical processes that mediate reinforcement-based, decision-making deficits following chronic exposure to methamphetamine (MA). Using a novel, three-choice probabilistically reinforced reversal-learning paradigm, decision-making strategies were assessed before and after rats were exposed to an escalating dose regimen of MA (or saline, as control), and choice behavior was characterized computationally with reinforcement-learning algorithms and regression analyses. MA-induced decision-making deficits were compared with those following administration of dopamine receptor antagonists to provide novel insights into the behavioral and neurochemical processes that are affected by chronic MA.

Materials and methods

Subjects

Forty-two male, Long Evans rats (Charles River), ranging from 7 to 9 weeks of age, were pair housed in a climate-controlled vivarium and maintained on a 12 h light/dark cycle (lights on at 0700 h; lights off at 1900 h). Diet was restricted to maintain a body weight ∼90% of their free-feeding weight throughout the experiment. Water was available ad libitum except during behavioral assessments (1–2 h per day). Experimental protocols were consistent with the National Institutes of Health ‘Guide for the Care and Use of Laboratory Animals’ and approved by the institutional animal care and use committee at Yale University.

Drugs

MA hydrochloride, sulpiride (D2/3 receptor antagonist), and SCH23390 (D1 receptor antagonist) were purchased from Sigma-Aldrich. Doses of MA and SCH23390 were prepared fresh daily in sterile saline and the solution was filtered through 0.22 μm Millex syringe filters (Millipore). Sulpiride was dissolved in sterile saline with 1 drop of 0.1 M HCl and pH adjusted to 7.0. MA was administered subcutaneously at a volume of 1 ml/kg. Sulpiride was administered intraperitoneally 20 min before the behavioral sessions and SCH23390 was administered intraperitoneally 10 min before the behavioral sessions. The order of drug administration was counterbalanced across rats.

Dosing Regimen

The dosing regimen was adapted from a previous study in rats (Segal et al, 2003) to mimic the escalation in both frequency of intake and daily dose reported by human users of MA (Han et al, 2011). The elimination half-life of MA is much shorter in rats than in humans (rats: 70 min; humans: 12 h), but plasma levels of MA can be approximated in rats by increasing the MA dose frequency, as is done with this dosing regimen (Cho et al, 2001). Furthermore, use of this dosing regimen in rats has been reported to alter dopaminergic markers and produce pharmacodynamic tolerance that is similar to that observed in human MA users (Segal et al, 2003; O’Neil et al, 2006). Passive administration of MA, compared with self-administration procedures, guarantees that all rats receive the same dose of the drug at the same rate. Given that the amount of MA intake is associated with the magnitude of drug-induced cognitive impairments (Cox et al, 2016) and that the current study sought to isolate the decision-making processes impacted by chronic exposure to MA, it was critical to control the amount of MA that each rat received. Table 1 provides details of the 23-day regimen used. Injections during the first 3 weeks of the dosing regimen were separated by 4 h and during the final week by 3 h. Rats remained under dietary restriction for the first 6 days, but were placed on ad libitum food for the remainder of the dosing regimen. Weights of both experimental groups increased over the course of the dosing regimen, but the increase was more robust in animals exposed to saline than in those exposed to MA (saline: 18%±2; MA: 8%±2; Supplementary Figure 1). The dosing regimen was stopped after the third dose of MA on day 23 because of veterinary concerns and three rats from the MA group were excluded from the study because of marked hyperthermia.

Table 1. Escalating Dosing Regimen Used in the Current Study.

| | Day 1 | Day 2 | Day 3 | Day 4 | Day 5 | Day 6 | Day 7 |
|---|---|---|---|---|---|---|---|
| Injection 1 | 0.1 | 0.4 | 0.7 | 1.0 | 1.3 | 1.6 | Off |
| Injection 2 | 0.2 | 0.5 | 0.8 | 1.1 | 1.4 | 1.7 | |
| Injection 3 | 0.3 | 0.6 | 0.9 | 1.2 | 1.5 | 1.8 | |

| | Day 8 | Day 9 | Day 10 | Day 11 | Day 12 | Day 13 | Day 14 |
|---|---|---|---|---|---|---|---|
| Injection 1 | 1.9 | 2.2 | 2.5 | 2.8 | 3.1 | 3.4 | Off |
| Injection 2 | 2.0 | 2.3 | 2.6 | 2.9 | 3.2 | 3.5 | |
| Injection 3 | 2.1 | 2.4 | 2.7 | 3.0 | 3.3 | 3.6 | |

| | Day 15 | Day 16 | Day 17 | Day 18 | Day 19 | Day 20 | Day 21 |
|---|---|---|---|---|---|---|---|
| Injection 1 | 3.7 | 4.0 | 6.0 | 6.0 | 6.0 | 6.0 | Off |
| Injection 2 | 3.8 | 4.0 | 6.0 | 6.0 | 6.0 | 6.0 | |
| Injection 3 | 3.9 | 4.0 | 6.0 | 6.0 | 6.0 | 6.0 | |
| Injection 4 | | | 6.0 | 6.0 | 6.0 | 6.0 | |

| | Day 22 | Day 23 |
|---|---|---|
| Injection 1 | 6.0 | 6.0 |
| Injection 2 | 6.0 | 6.0 |
| Injection 3 | 6.0 | |
| Injection 4 | 6.0 | |

Values represent dose of methamphetamine hydrochloride (free-base weight; mg/kg) administered to rats across the 23-day regimen. During the first 3 weeks of the dosing regimen, injections were given every 4 h. Injections during the last week of the dosing regimen were given every 3 h.

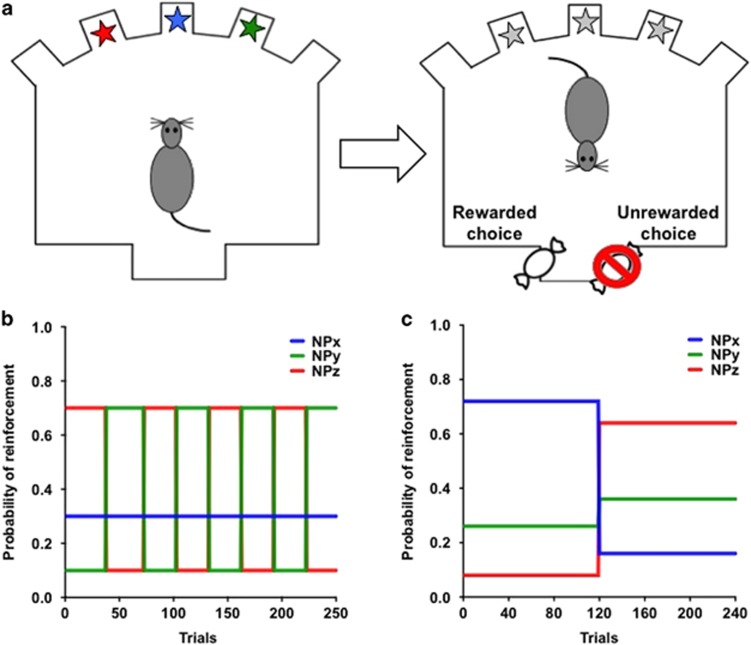

Probabilistic Reversal Learning (PRL) Schedule

Rats were trained to acquire and reverse three-choice spatial discrimination problems within a single session in standard aluminum and Plexiglas operant conditioning chambers (Groman et al, 2016; training procedure described in Supplementary Information). A response into the magazine aperture resulted in the illumination of three noseports (the three interior ports) and rats could respond to any of the illuminated noseports to earn probabilistically delivered rewards (Figure 1). Rats were trained on a variable PRL schedule (Figure 1b) where each noseport was randomly assigned to deliver reward with a probability of 70, 30, or 10% by the program at the start of each session. When rats met a performance criterion (21 choices on the highest reinforced noseport in the last 30 trials), the probabilities reversed between two noseports: the highest reinforced noseport (70%) became the lowest reinforced noseport (10%), whereas the lowest reinforced noseport became the highest reinforced noseport (70%). The noseport associated with a probability of 30% reinforcement remained unaltered. These reinforcement probabilities remained unchanged until the performance criterion was once again met, after which the reinforcement probabilities reversed again between the noseports associated with the highest and lowest reinforcement probabilities. Each time the performance criterion was met, the reinforcement probabilities reversed between the two noseports. The occurrence of a reversal was contingent upon performance and rats could complete as many as 8 reversals in a single session. Sessions terminated when rats completed 250 trials or 75 min had lapsed, whichever occurred first. Rats completed 26 within-session reversal sessions before and once weekly for 4 weeks following the dosing regimen.
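The performance-contingent reversal rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the task program used in the study: the names `swap_best_and_worst` and `run_variable_prl`, the `choose` callback, and the decision to clear the 30-trial window after each reversal are hypothetical, and the 75-min session limit is not modeled.

```python
import random
from collections import deque


def swap_best_and_worst(probs):
    """Return a copy of probs with the highest and lowest entries exchanged."""
    best, worst = probs.index(max(probs)), probs.index(min(probs))
    swapped = list(probs)
    swapped[best], swapped[worst] = probs[worst], probs[best]
    return swapped


def run_variable_prl(choose, n_trials=250, window=30, criterion=21, seed=0):
    """Simulate one session; choose(history) must return a port index 0, 1, or 2."""
    rng = random.Random(seed)
    probs = [0.7, 0.3, 0.1]
    rng.shuffle(probs)              # random port assignment at session start
    recent = deque(maxlen=window)   # True where the best port was chosen
    history, reversals = [], 0
    for _ in range(n_trials):
        choice = choose(history)    # hypothetical policy supplied by the caller
        rewarded = rng.random() < probs[choice]
        recent.append(choice == probs.index(max(probs)))
        history.append((choice, rewarded))
        if sum(recent) >= criterion:
            # 70% and 10% ports trade places; the 30% port is unchanged
            probs = swap_best_and_worst(probs)
            recent.clear()          # assumption: criterion window restarts
            reversals += 1
    return history, reversals, probs
```

For example, `run_variable_prl(lambda history: 0)` simulates a subject that always chooses the first port; such a policy can trigger at most one reversal, because the chosen port becomes the lowest reinforced option once the criterion is met.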

Figure 1.

Diagram of the probabilistic reversal learning (PRL) task. (a) Rats make choices between three illuminated spatial locations in order to earn probabilistically delivered rewards (45 mg sucrose pellets, BioServ). (b) The schedule of reinforcement used in the variable PRL task. (c) The schedule of reinforcement used in the stable PRL task.

Decision making was also assessed using a stable PRL schedule (Figure 1c; Groman et al, 2016) 3 weeks after completing the dosing regimen. In this schedule, each noseport was randomly assigned to deliver reward with a probability of 72, 26, or 8% by the program at the start of each session and rats had 120 trials to learn which one of the three noseports was associated with the highest probability of reinforcement (referred to as the Acquisition Phase). Once rats completed 120 trials, the reinforcement probabilities increased for two of the noseports (8–64% and 26–36%) and decreased for one of the noseports (72–16%). Choice behavior was assessed for an additional 120 trials (referred to as the Reversal Phase). Sessions terminated when rats completed 240 trials or 75 min had lapsed, whichever occurred first. Two MA rats did not complete enough trials to experience a reversal, so there were no choices for these subjects in the reversal phase resulting in N=5 for this analysis.

Logistic Regression Analyses

Our results indicated that the ability of rats to use outcomes to guide subsequent choice behavior was disrupted following exposure to MA. To determine whether this impairment extended to recent past choices and outcomes, a logistic regression was used to examine the influence of outcomes on previous trials (t−1 through t−4) on the current choice behavior in rats before and after the dosing regimen, as previously described (Parker et al, 2016). Positive regression coefficients for the ‘Reward’ and ‘No Reward’ predictors indicate that rats are more likely to persist with the same choice, whereas negative regression coefficients indicate that rats are more likely to shift their choice.

Reinforcement-Learning Algorithm

Reinforcement-learning models predict that choices are based on action values that incrementally accrue over many trials. To determine whether exposure to MA influenced longer-term dependencies than those captured with the logistic regression, we analyzed the choice behavior of all rats using a reinforcement-learning model (Barraclough et al, 2004; Ito and Doya, 2009). In this model, the value for the chosen noseport x (Vx) is updated after each trial (t+1) according to the following model:

$$V_x(t+1) = \gamma V_x(t) + \Delta(t)$$

where the decay rate γ determines how quickly the value for the chosen noseport decays (ie, γ=0 means the value is reset every trial) and Δ(t) indicates the change in the value that depends on the outcome from the chosen noseport in trial t. If the outcome of the trial was reward, then the value function of the chosen noseport Vx(t+1) was updated by Δ(t)=Δ1, the reinforcing strength of reward. However, if the outcome of the trial was the absence of reward, then the value function of the chosen noseport was updated by Δ(t)=Δ2, the aversive strength of no reward. The probability of choosing one noseport over the other two noseports was calculated according to a softmax function. Trial-by-trial choice data of each rat were fit with three parameters (γ, Δ1, and Δ2) selected to maximize the likelihood of each rat’s sequence of choices for all sessions completed by each rat.
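To make the model concrete, the following Python sketch computes the negative log likelihood of a choice sequence for a given set of parameters. It is an illustration under assumptions rather than the fitting code used in the study: it assumes the chosen value is updated as γ·Vx(t)+Δ(t), that all values start at zero, that unchosen values are left unchanged, and that the softmax has no separate inverse-temperature parameter. The function names are hypothetical.

```python
import math


def softmax(values):
    """Convert action values into choice probabilities."""
    m = max(values)                           # subtract max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def negative_log_likelihood(choices, rewards, gamma, delta1, delta2, n_ports=3):
    """Negative log likelihood of the observed choices under the model.

    choices: port index chosen on each trial (0 .. n_ports-1)
    rewards: truthy if the trial was rewarded, falsy otherwise
    """
    values = [0.0] * n_ports                  # assumption: values start at zero
    nll = 0.0
    for choice, rewarded in zip(choices, rewards):
        nll -= math.log(softmax(values)[choice])
        delta = delta1 if rewarded else delta2
        # only the chosen port is updated (assumption for unchosen ports)
        values[choice] = gamma * values[choice] + delta
    return nll
```

Parameter estimates would then be the values of γ, Δ1, and Δ2 that minimize this quantity for each rat, for instance with a general-purpose numerical optimizer.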

Choice behavior in the stable PRL was analyzed using the same reinforcement-learning model. However, based on our previous work (Groman et al, 2016), choices made during the acquisition and reversal were analyzed separately. This resulted in six parameter estimates (acquisition: γACQ, Δ1-ACQ, and Δ2-ACQ, reversal: γREV, Δ1-REV, and Δ2-REV).

Pharmacological Studies of Dopamine Receptors

We conducted a pharmacological study in a separate group of drug-naive rats (N=22) to determine whether dopaminergic dysfunction might explain the MA-induced decision-making deficits observed here. Decision-making processes were assessed using a reinforcement schedule similar to that of the stable PRL (Supplementary Figure 2) so that the time from injection to the reversal was equivalent between rats. Sessions terminated when rats completed 240 trials or 75 min had lapsed, whichever occurred first. For half of these rats (N=11), decision making was assessed 20 min after administration of the D2/3 receptor antagonist sulpiride (60 mg/kg) or vehicle. For the remaining rats (N=11), decision making was assessed 10 min after administration of the selective D1 receptor antagonist SCH23390 (0.03 mg/kg; Bourne, 2001) or saline. Doses used were similar to those used in previous studies of reinforcement learning (Ljungberg and Enquist, 1990; Keeler et al, 2014) and do not produce motoric or behavioral impairments that are observed with higher doses of these drugs (Collins et al, 1991; Mattingly et al, 1993). Choice behavior following administration of sulpiride, SCH23390, or saline was analyzed using the same reinforcement-learning algorithm described above.

Statistical Analyses

All statistical analyses were completed using SPSS (version 21, IBM). Repeated-measures ANOVA or MANOVA were used to examine the effect of the dosing regimen on decision-making processes with experimental group as the between-subjects factor. Decision-making measures for the pharmacological experiment were analyzed independently for the drug conditions (sulpiride vs SCH23390) using repeated-measures ANOVA. In all experiments, omnibus MANOVA was used to examine the influence of MA exposure or pharmacological manipulations on reinforcement-learning parameter estimates. Significant interactions were followed up using t-tests with Bonferroni correction for multiple comparisons.

Results

Decision Making Before and After the Dosing Regimen

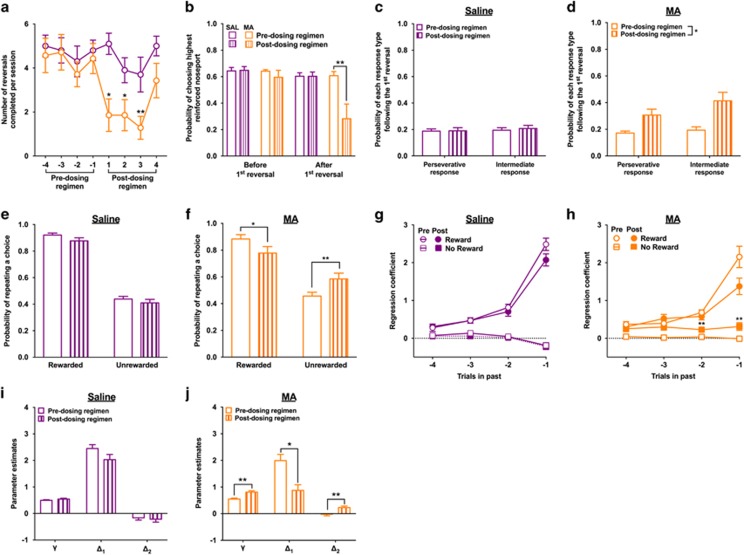

There were no significant differences between the experimental groups for any of the behavioral measures collected before beginning the dosing regimen (all ts<1.34; all ps>0.20). An analysis of the average number of reversals completed before and after the dosing regimen revealed a significant interaction with experimental group (F1, 15=8.91; p=0.009). Rats exposed to MA completed fewer reversals following the dosing regimen compared with their predosing regimen performance (t(6)=3.63; p<0.01). Importantly, the number of trials rats completed each session before and after the MA dosing regimen was not altered (t(6)=0.88; p=0.41; data not shown).

A longitudinal analysis comparing the number of reversals completed in the session immediately before beginning the dosing regimen to the number of reversals completed in the four sessions collected after the dosing regimen indicated that the MA-induced decision-making deficits persisted for 3 weeks following MA exposure (week 1: t(6)=3.0; p=0.02; week 2: t(6)=3.00; p=0.02; week 3: t(6)=3.67; p=0.002; Figure 2a). However, after 4 weeks of forced abstinence from MA the number of reversals completed was comparable to that before the dosing regimen (t(6)=1.17; p>0.05). Given that statistically significant decision-making deficits were only present during the first 3 weeks of forced abstinence from MA, the remaining analyses focused on the choice data collected in the three sessions immediately before (referred to as ‘Pre-dosing regimen’) and the three sessions after the dosing regimen (referred to as ‘Postdosing regimen’).

Figure 2.

The effects of the dosing regimen (MA: orange/light grey; saline: purple/black) on decision-making processes in the variable PRL. (a) Exposure to MA reduced the number of reversals that rats completed in each session. This decision-making impairment was observed during the first 3 weeks of forced abstinence, but had returned to levels comparable to that before the dosing regimen after 4 weeks of abstinence from MA. (b) MA-induced decision-making deficits were not because of impairments in the ability of rats to acquire the initial discrimination, but in their ability to adaptively modify their decisions when the reinforcement probabilities reversed. (c) Exposure to saline did not alter the types of errors rats made following the first reversal, but (d) exposure to MA increased the percentage of trials in which rats chose the noseport that was initially associated with the highest probability of reinforcement (eg, perseverative response) and the noseport that was associated with the intermediate probability of reinforcement (eg, intermediate response). (e) Exposure to saline did not alter feedback-based responding, but (f) exposure to MA decreased the probability that rats would persist with a response following a choice that resulted in reward and increased the probability that rats would persist with a response following a choice that was not rewarded. (g, h) Before the dosing regimen, rats were more likely to make the same choice that was rewarded and less likely to make the same choice that was not rewarded in recent trials. (h) Exposure to MA did not significantly alter the influence of recently rewarded choices on current choice, but increased the influence of recently unrewarded choices on current choice. (i) Exposure to saline did not alter the reinforcement-learning parameters. (j) Exposure to MA increased the γ parameter, decreased the Δ1 parameter, and increased the Δ2 parameter; *p<0.05 and **p<0.01. 
A full color version of this figure is available at the Neuropsychopharmacology journal online.

The reduction in the number of reversals completed following exposure to MA may be because of an inability of rats to acquire the initial discrimination. However, the number of trials required to reach criterion for the first reversal was not affected by the dosing regimen (time-by-experimental group: F1, 15=0.31; p=0.58; data not shown) and the number of reversals completed each session following exposure to MA was greater than zero (all ts>2.51, all ps<0.05), indicating that rats exposed to MA were able to acquire the initial discrimination, but had difficulties when the reinforcement probabilities were reversed. Furthermore, the probability of choosing the highest reinforced noseport before the first reversal was not affected by the dosing regimen (time-by-experimental group: F1, 15=0.49; p=0.49) but the probability of choosing the highest reinforced noseport after the first reversal was reduced in rats exposed to MA (time-by-experimental group: F1, 15=11.42; p=0.004; t(6)=3.26; p=0.01; Figure 2b). The reduction in performance following the reversal was because of an increase in responding to the noseport that was previously associated with the highest probability of reinforcement (eg, perseverative response) and to the noseport associated with the intermediate probability of reinforcement (eg, intermediate response) (time-by-experimental group: F1, 15=13.29; p=0.002; main effect of time for the MA group: F1, 6=12.64; p=0.01; Figure 2c and d).

Influence of Outcomes on Choices

To determine whether exposure to MA disrupted the ability of rats to use outcomes to guide their choices, the probability that rats would persist with the same response following a reward or no reward outcome before and after the dosing regimen between rats exposed to MA or saline was examined. Repeated measure MANOVA indicated that there was a significant interaction between time and experimental group (F1, 15=24.19; p<0.001). Rats exposed to MA were less likely to persist with the same response following a positive outcome (t(6)=3.19; p=0.02) and more likely to persist with the same response following a negative outcome (t(6)=6.36; p=0.001) compared with their predosing regimen performance (Figure 2f). These measures were not significantly altered in rats exposed to saline (all ts<2.10; all ps>0.06; Figure 2e).
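The two feedback-based measures compared here, the probability of persisting after a rewarded trial and after an unrewarded trial, could be computed from trial-by-trial data roughly as follows. This is a minimal sketch; the function name `stay_probabilities` and the input format (parallel lists of port indices and outcomes) are assumptions for illustration.

```python
def stay_probabilities(choices, rewards):
    """Return (P(stay | reward), P(stay | no reward)) for one session.

    A 'stay' is a trial on which the subject repeats the previous choice.
    A probability is None if that outcome type never occurred.
    """
    stays = {True: 0, False: 0}    # repeats following each outcome type
    totals = {True: 0, False: 0}   # transitions following each outcome type
    # pair each trial (choice, outcome) with the choice on the next trial
    for prev_choice, prev_reward, next_choice in zip(choices, rewards, choices[1:]):
        outcome = bool(prev_reward)
        totals[outcome] += 1
        stays[outcome] += int(next_choice == prev_choice)
    p_reward = stays[True] / totals[True] if totals[True] else None
    p_no_reward = stays[False] / totals[False] if totals[False] else None
    return p_reward, p_no_reward
```

Under this definition, a higher value of the second probability corresponds to the pattern reported for MA-exposed rats, namely persisting with a choice that was just unrewarded.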

The current results indicate that the ability of rats to use outcomes to guide their choices is disrupted following exposure to MA. To determine whether this impairment extended into the recent history of choices and outcomes (eg, t−1 to t−4), the regression coefficients for the ‘Reward’ and ‘No Reward’ predictors in the logistic regression model were examined before and after the dosing regimen between the experimental groups. Before the dosing regimen, choices were influenced by previous rewarded choices (for t−1 to t−4: all ts>4.80; all ps<0.001) and unrewarded choices (t−1: t=−2.30; p=0.03), indicating that rats were using a history of previous choices and outcomes to guide their decisions (Figure 2g and h). The regression coefficients for unrewarded choices were small and close to zero, suggesting that these trials had little influence on subsequent choices. This result is expected under probabilistic schedules of reinforcement because choices on the highest reinforced option are not always rewarded. When the regression coefficients were examined before and after the dosing regimen, a significant three-way interaction between predictor type (Reward vs No Reward), experimental group (MA vs saline), and session (before and after the dosing regimen) was detected (F1, 45=18.55; p=0.001). Following exposure to MA, the regression coefficients for the ‘No Reward’ predictor increased compared with those before the dosing regimen (F1, 6=34.24; p=0.001) and, importantly, were significantly higher than 0 for all past trials (all ts>3.23; all ps<0.02): nonrewarded choices significantly influenced choices by increasing the likelihood that the same action would be made on future trials (Figure 2h). Non-reinforced trials have a weak influence on choice-outcome behavior in general. Exposure to MA disrupts the ability of the most recently non-reinforced trial to update choice such that rats fail to lose–switch. Regression coefficients that are zero or negative for controls are greater than zero and more positive on recent trials for MA rats, indicating a deficit in the utilization of negative outcomes on choice behavior. The interaction between the regression coefficients for the ‘Reward’ predictor and experimental group was not significant (F1, 15=0.97; p=0.34) and exposure to saline did not significantly alter the regression coefficients for the ‘No Reward’ predictor (F1, 9=1.95; p=0.19; Figure 2g).

Reinforcement-Learning Algorithm Analysis

Variable PRL

The parameter estimates, negative log likelihoods, and AIC and BIC values obtained using this reinforcement-learning model for the three sessions before and after the dosing regimen are presented in Supplementary Material Table 1. Repeated measures MANOVA of the three free parameters included in the reinforcement model (γ, Δ1, and Δ2) indicated that there was a significant interaction between experimental group, session, and parameter (F2, 30=8.56; p=0.001). Exposure to saline did not significantly alter the parameter estimates (all ts<2.20; all ps>0.05; Figure 2i): the Δ1 parameter estimate was a large, positive value whereas the Δ2 parameter estimate was a small, negative value. Exposure to MA increased the γ parameter (t(6)=4.97; p=0.003) and the Δ2 parameter, such that it became a positive value (t(6)=3.57; p=0.01; Figure 2j), and decreased the Δ1 parameter (t(6)=6.29; p=0.001; Figure 2j), indicating the influence of outcomes on choice behavior using this dynamic schedule was abnormal following exposure to MA.

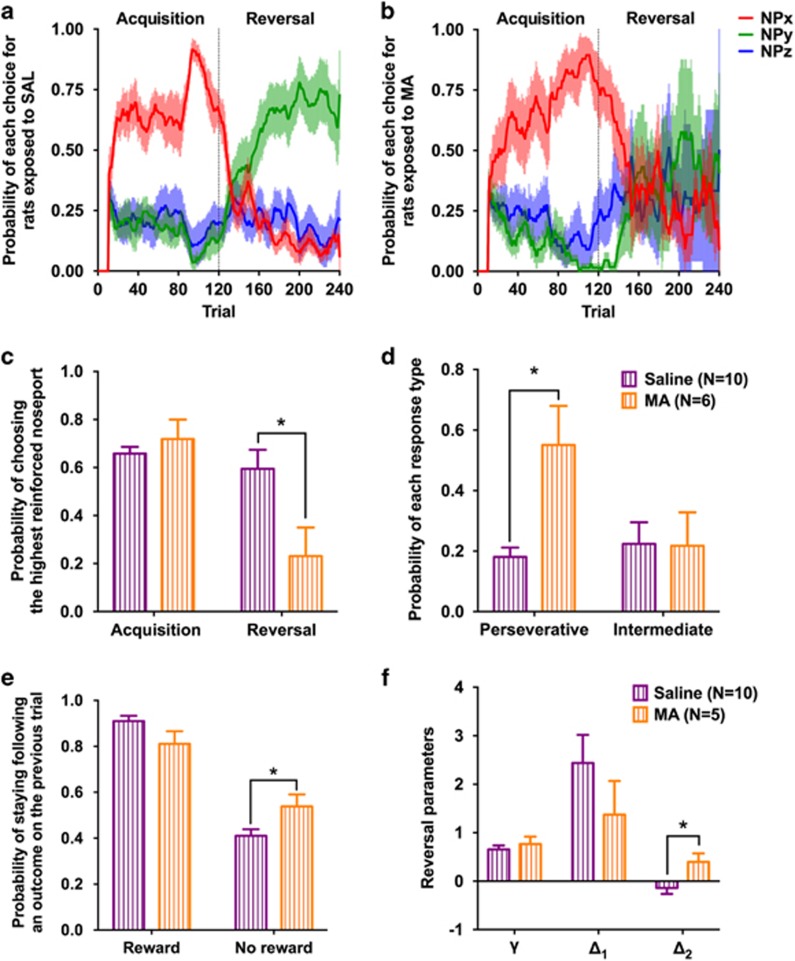

Stable PRL

To ensure that the MA-induced decision-making deficits were not because of the dynamic nature of the variable PRL, the choice behavior of rats was assessed using the stable PRL schedule 3 weeks after completing the dosing regimen (Figure 3a and b). There was a significant interaction between experimental group and the probability that rats would choose the highest reinforced noseport option across the task phases (F1, 14=7.79; p=0.01). Performance in the acquisition phase was similar between the experimental groups (t(14)=0.85; p=0.41), but performance in the reversal phase was significantly lower in rats exposed to MA compared with rats exposed to saline (t(14)=2.65; p=0.01; Figure 3c). During the reversal phase, the proportion of choices directed at the noseport associated with the highest probability during the acquisition phase (ie, perseveration) was significantly higher in rats exposed to MA compared with those exposed to saline (t(14)=3.51; p=0.003), whereas the proportion of choices directed at the noseport that was always associated with an intermediate level of reinforcement did not differ between the groups (t(14)=0.05; p=0.96; Figure 3d). Furthermore, the probability that rats would persist with the same response following a nonrewarded trial was higher in rats exposed to MA compared with rats exposed to saline (t(14)=2.38; p=0.03; Figure 3e).

Figure 3.

Exposure to an escalating dose regimen of MA impairs the performance of rats in the stable PRL. (a, b) Average probability of each choice (red: highest reinforced option during the acquisition phase; green: highest reinforced option during the reversal phase; blue: option associated with an intermediate level of reinforcement in both task phases) during the acquisition and reversal phase of the stable PRL in rats exposed to SAL (left) or MA (right). Shaded area is the SEM. (c) The probability of choosing the highest reinforced option during the acquisition phase of the stable PRL was not different between rats exposed to saline (purple) or MA (orange). However, performance in the reversal phase was significantly lower in rats exposed to MA compared with saline. (d) The impaired performance of rats exposed to MA in the reversal phase was because of an increase in the probability that rats would make a perseverative response. (e) Rats exposed to MA were more likely to persist with the same choice following a trial that did not result in reward than rats exposed to SAL. (f) The computational analysis of choice behavior in the stable PRL indicated that rats exposed to MA had a higher Δ2 parameter than rats exposed to SAL; *p<0.05.

Because the effects of MA were greatest in the reversal phase of the stable PRL schedule, we restricted our analysis to the computational parameters obtained from the choices made during the reversal phase using repeated measures MANOVA. Similar to the results in the variable PRL, the Δ2 parameter was significantly higher in rats exposed to MA compared with those exposed to saline (F1, 13=6.46; p=0.02; Figure 3f), providing additional evidence that the utilization of negative outcomes was disrupted following exposure to MA. Although the other parameter estimates were not significantly different between the experimental groups (γ: F1, 13=0.51; p=0.49; Δ1: F1, 13=1.24; p=0.29) they were in a direction similar to that in the variable PRL task (eg, MA-exposed rats had higher γ and lower Δ1 parameter compared with saline-exposed rats).

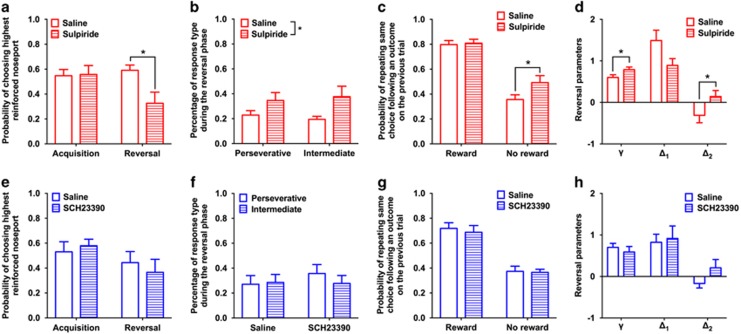

Pharmacological Manipulations

The probability that rats would choose the highest reinforced option in the acquisition phase was compared with that during the reversal phase following administration of vehicle or sulpiride. There was a significant interaction between task phase and drug condition (F1, 10=6.61; p=0.02; Figure 4a). Sulpiride did not alter performance of rats in the acquisition phase (F1, 10=0.01; p=0.91) but decreased performance in the reversal phase (F1, 10=9.69; p=0.01). This decrease in reversal performance was because of an increase in both perseverative and intermediate responding (drug: F1, 18=7.74; p=0.01; drug-by-error type: F1, 18=0.36; p=0.55; Figure 4b). Trial-by-trial analysis of choice based on previous trial outcome indicated that administration of sulpiride increased the probability that rats would persist with the same choice following an unrewarded trial (F1, 10=6.32; p=0.03), but did not affect the probability of persisting with the same response following a rewarded trial (F1, 10=0.14; p=0.72; Figure 4c). A repeated measures MANOVA of the free parameter estimates of choices made during the reversal phase indicated that the γ and Δ2 parameters increased following administration of sulpiride, compared with saline (γ: F1, 9=8.15; p=0.01; Δ2: F1, 9=5.26; p=0.04), similar to the increase we observed in rats exposed to MA, and that there was a trend-level decrease in the Δ1 parameter (F1, 9=4.58; p=0.06; Figure 4d) akin to that observed after MA exposure.

Figure 4.

Dopaminergic mechanisms of decision making. (a–d) D2/3 receptor antagonist (sulpiride) experiment. (a) Compared with the performance of rats following administration of saline (open bars), administration of the dopamine D2/3 receptor antagonist sulpiride (60 mg/kg; dashed bars) did not affect the ability of rats to acquire a spatial discrimination, but reduced the probability of choosing the highest reinforced option during the reversal phase. (b) Administration of sulpiride increased both types of errors rats made. (c) Administration of sulpiride increased the probability that rats would persist with the same choice following a nonrewarded trial, but did not affect the probability that rats would persist with the same choice following a rewarded trial. (d) Computational analysis of choices following administration of saline (open bars) or sulpiride (dashed bars) indicated that sulpiride caused an increase in the γ and Δ2 parameters. (e–h) D1/5 receptor antagonist (SCH 23390) experiment. (e) Compared with the performance of rats following administration of saline (open bars), administration of the dopamine D1/5 receptor antagonist SCH 23390 (0.03 mg/kg; dashed bars) did not affect the probability that rats would choose the highest reinforced option in the acquisition or reversal phase. (f) Administration of SCH 23390 did not alter the types of errors rats made following the reversal or (g) influence the probability that rats would persist with the same response following a rewarded trial or an unrewarded trial. (h) Administration of SCH 23390 did not significantly alter the computational parameters.

Administration of SCH23390 reduced the number of trials rats completed, compared with saline (t(10)=2.53; p=0.03; data not shown), and two rats were excluded from the analysis for failing to complete enough trials to experience a reversal. Administration of SCH23390 did not alter the probability that rats would choose the highest reinforced noseport across the task phases (F1, 8=2.87; p=0.13; Figure 4e) and did not alter the types of error rats made (drug: F1, 16=0.65; p=0.43; Figure 4f) or the influence of rewarded (F1, 8=0.002; p=0.96) or unrewarded trials (F1, 8=0.006; p=0.94; Figure 4g) on subsequent choices. Similarly, there was no effect of SCH23390 on the free parameters derived from choices made during the reversal phase (γ: F1, 8=0.78; p=0.40; Δ1: F1, 8=0.55; p=0.48; Δ2: F1, 8=2.53; p=0.15; Figure 4h).

Discussion

We provide novel insights into the mechanisms of maladaptive value-based decision making caused by exposure to MA. By combining translationally analogous behavioral tasks in rats with computational analytic tools and pharmacology, we demonstrate that exposure to an escalating dose regimen of MA produces aberrant encoding of choice-outcome behavior on future decisions, and may do so by diminishing dopamine D2/3 receptor signaling. Our results have important implications for understanding the pathophysiology of addiction and are the first to apply both logistic regression and formal algorithmic behavioral analyses to psychostimulant-exposed rats.

The decision-making alterations observed in rats following exposure to MA were associated with impairments in the utilization of positive and negative outcomes to guide subsequent choices. Notably, the logistic regression analyses of recent choices and outcomes indicated that exposure to MA increased the probability that rats would persist with the same response following a nonrewarded trial. The model-free reinforcement learning analyses, which examined long-term dependencies of choice and outcome, provided additional evidence that exposure to MA impaired the ability of rats to use negative outcomes in guiding their decision making. However, previous work in animals has reported that utilization of negative outcomes to guide choices remains intact following exposure to MA, such that non-reinforced trials result in lose–shift behavior (Stolyarova et al, 2014). This discrepancy is likely because deterministic schedules were used in that work. Because probabilistic schedules introduce uncertainty into the decision-making process, the behavioral mechanisms that govern decisions in dynamic environments differ from those recruited in stable conditions (Dalton et al, 2016). Our results, using probabilistic schedules of reinforcement, parallel previous observations of impaired utilization of negative outcomes in psychostimulant-dependent humans (Paulus et al, 2003; Parvaz et al, 2015; Ersche et al, 2016). Here, under probabilistic conditions, non-reinforced trials would not be expected to result in marked lose–shift behavior, but exposure to MA resulted in a robust increase in lose–stay behavior. This change in response strategy extended to distant trials, as revealed by the computational and logistic regression analyses (ie, increases in the Δ2 parameter and positive regression coefficients for non-rewarded trials). We interpret this as evidence for maladaptive utilization of negative outcomes on choice behavior that reflects a form of perseverative behavior that extends beyond the most recent outcome.
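The regression analysis discussed above relates the current choice to the history of earlier choices and their outcomes. As an illustrative sketch only, the Python function below builds lagged predictors of that kind. The coding scheme (+1/−1 for the two options, separate regressors for rewarded and unrewarded trials, and the number of lags) and the function name are assumptions for illustration and may differ from the regression actually fitted in this study.

```python
def lagged_design(choices, rewards, n_lags=3):
    """Build lagged regressors for a logistic regression on choice history.

    choices: list of 0/1 option indices, one per trial.
    rewards: list of booleans, True if that trial was rewarded.
    Returns (X, y). Each row of X holds n_lags rewarded-trial regressors
    followed by n_lags unrewarded-trial regressors (lag 1 first); y holds
    the choice made on the current trial.
    """
    # Code option 1 as +1 and option 0 as -1 (assumed convention).
    signed = [1 if c == 1 else -1 for c in choices]
    X, y = [], []
    for t in range(n_lags, len(choices)):
        lags = range(1, n_lags + 1)
        # Signed past choice if that trial was rewarded, else 0.
        rew = [signed[t - k] if rewards[t - k] else 0 for k in lags]
        # Signed past choice if that trial was unrewarded, else 0.
        unrew = [signed[t - k] if not rewards[t - k] else 0 for k in lags]
        X.append(rew + unrew)
        y.append(choices[t])
    return X, y


# Made-up data, for illustration only.
choices = [1, 1, 0, 0, 1]
rewards = [True, False, False, True, True]
X, y = lagged_design(choices, rewards, n_lags=2)
```

Fitting a logistic regression to `X` and `y` would yield one coefficient per lag and outcome type. With this coding, a positive coefficient on an unrewarded-trial regressor means that an unrewarded choice makes repeating that choice more likely, which is the lose–stay pattern described above.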

Slow, escalating exposure of rats to MA, rather than acute exposure to high doses of MA (Izquierdo et al, 2010), results in aspects of pharmacodynamic tolerance (Segal et al, 2003) that may be crucial for modeling addiction pathophysiology. Although previous studies implementing the same dosing regimen used here have not reported health concerns, we observed marked hyperthermia in a subset of the rats exposed to MA (N=3). Hyperthermia is commonly observed following administration of MA (Albers and Sonsalla, 1995) and linked to MA-mediated dopamine neurotoxicity (Ali et al, 1994), but does not completely account for MA-induced neuropathology (Albers and Sonsalla, 1995). Therefore, the MA-induced alterations in decision making detected here may, in part, be due to the biochemical effects of hyperthermia produced by MA.

Following 4 weeks of forced abstinence from MA, decision making in the variable PRL had returned to levels comparable to those before the dosing regimen. We hypothesize that the improvement in performance reflects the recovery of dopamine D2/3 receptor signaling that has been observed following abstinence from drugs (Groman et al, 2012; Rominger et al, 2012). However, the design of the current study does not eliminate the possibility that the improvement in performance is due to the training that rats received during the abstinence period. Future studies that manipulate the extent of training that rats receive during the abstinence period would provide insight into the bases for improvements in drug-induced decision-making deficits that have been observed in animals and humans (Jentsch et al, 2002; Stalnaker et al, 2009; Kohno et al, 2014).

Administration of the dopamine D2/3 receptor antagonist sulpiride impaired the ability of rats to adjust their choices following a change in reinforcement contingencies. This reversal-specific deficit following administration of sulpiride, assessed using the model-free algorithm, was because of a reduction in the utilization of negative outcomes to guide subsequent choices, as indicated by an increase in the Δ2 parameter estimate. These results were qualitatively and quantitatively similar to the results of the model-free analyses of decision making observed here in rats following exposure to MA. These data suggest that disruptions in D2/3 receptor signaling may underlie the MA-induced alterations in decision-making performance. Consistent with this hypothesis is evidence that MA-induced alterations in D2/3 receptor availability correlate with drug-induced changes in decision making in monkeys (Groman et al, 2012).

Administration of the D1/5 receptor antagonist SCH23390 did not disrupt the ability of rats to acquire or reverse a spatial discrimination here. Similar results have been observed in monkeys (Lee et al, 2007). However, viral-mediated disruptions of striatal D1 receptors impair learning from positive, but not negative, outcomes in mice (Higa et al, 2017), and recent work has suggested that D1 receptors facilitate distinct aspects of decision making by acting on different prefrontal networks (Jenni et al, 2017). Systemic administration of D1-acting drugs may, therefore, lack the anatomical specificity needed to delineate the role of this receptor subtype in decision making.

The current study provides insight into the biobehavioral processes that mediate MA-induced deficits in decision-making strategies. Our computational framework and regression analyses highlight alterations in reinforcement learning processes as a mechanism linked to the behavioral psychopathology of addiction. We propose that maladaptive utilization of outcomes on choice behavior caused by drug exposure is a potential mediator of key aspects of addiction, including escalation of drug use, compulsive seeking and taking of drugs, and recidivism to drug use. Ongoing studies are focused on defining how distinct alterations in reinforcement learning processes map on to these features.

Funding and disclosure

The authors declare no conflict of interest.

Acknowledgments

This study was supported by public health service grants DA041480 and DA043443, NARSAD, the Charles B.G. Murphy Fund, and the State of CT Department of Mental Health Services.

Footnotes

Supplementary Information accompanies the paper on the Neuropsychopharmacology website (http://www.nature.com/npp)

References

- Albers DS, Sonsalla PK (1995). Methamphetamine-induced hyperthermia and dopaminergic neurotoxicity in mice: pharmacological profile of protective and nonprotective agents. J Pharmacol Exp Ther 275: 111.

- Ali SF, Newport GD, Holson RR, Slikker W, Bowyer JF (1994). Low environmental temperatures or pharmacologic agents that produce hypothermia decrease methamphetamine neurotoxicity in mice. Brain Res 658: 33–38.

- Barraclough DJ, Conroy ML, Lee D (2004). Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci 7: 404–410.

- Bechara A (2005). Decision making, impulse control and loss of willpower to resist drugs: a neurocognitive perspective. Nat Neurosci 8: 1458–1463.

- Bourne JA (2001). SCH 23390: the first selective dopamine D1-like receptor antagonist. CNS Drug Rev 7: 399–414.

- Chiu PH, Lohrenz TM, Montague PR (2008). Smokers’ brains compute, but ignore, a fictive error signal in a sequential investment task. Nat Neurosci 11: 514–520.

- Cho AK, Melega WP, Kuczenski R, Segal DS (2001). Relevance of pharmacokinetic parameters in animal models of methamphetamine abuse. Synapse 39: 161–166.

- Collins P, Broekkamp CL, Jenner P, Marsden CD (1991). Drugs acting at D-1 and D-2 dopamine receptors induce identical purposeless chewing in rats which can be differentiated by cholinergic manipulation. Psychopharmacology (Berl) 103: 503–512.

- Costa VD, Tran VL, Turchi J, Averbeck BB (2015). Reversal learning and dopamine: a Bayesian perspective. J Neurosci 35: 2407–2416.

- Cox BM, Cope ZA, Parsegian A, Floresco SB, Aston-Jones G, See RE (2016). Chronic methamphetamine self-administration alters cognitive flexibility in male rats. Psychopharmacology (Berl) 233: 2319–2327.

- Dalton GL, Wang NY, Phillips AG, Floresco SB (2016). Multifaceted contributions by different regions of the orbitofrontal and medial prefrontal cortex to probabilistic reversal learning. J Neurosci 36: 1996–2006.

- Dayan P (2009). Dopamine, reinforcement learning, and addiction. Pharmacopsychiatry 42: S56–S65.

- Ersche KD, Gillan CM, Jones PS, Williams GB, Ward LH, Luijten M et al (2016). Carrots and sticks fail to change behavior in cocaine addiction. Science 352: 1468–1471.

- Ersche KD, Roiser JP, Robbins TW, Sahakian BJ (2008). Chronic cocaine but not chronic amphetamine use is associated with perseverative responding in humans. Psychopharmacol 197: 421–431.

- Everitt BJ, Robbins TW (2005). Neural systems of reinforcement for drug addiction: from actions to habits to compulsion. Nat Neurosci 8: 1481–1489.

- Fillmore MT, Rush CR (2006). Polydrug abusers display impaired discrimination-reversal learning in a model of behavioural control. J Psychopharmacol 20: 24–32.

- Frank MJ (2004). By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science 306: 1940–1943.

- Ghahremani DG, Tabibnia G, Monterosso J, Hellemann G, Poldrack RA, London ED (2011). Effect of modafinil on learning and task-related brain activity in methamphetamine-dependent and healthy individuals. Neuropsychopharmacology 36: 950–959.

- Goldstein RZ, Volkow ND (2002). Drug addiction and its underlying neurobiological basis: neuroimaging evidence for the involvement of the frontal cortex. Am J Psychiatry 159: 1642–1652.

- Groman SM, Jentsch JD (2012). Cognitive control and the dopamine D(2)-like receptor: a dimensional understanding of addiction. Depress Anxiety 29: 295–306.

- Groman SM, Lee B, Seu E, James AS, Feiler K, Mandelkern MA et al (2012). Dysregulation of D2-mediated dopamine transmission in monkeys after chronic escalating methamphetamine exposure. J Neurosci 32: 5843–5852.

- Groman SM, Smith NJ, Petrulli JR, Massi B, Chen L, Ropchan J et al (2016). Dopamine D3 receptor availability is associated with inflexible decision making. J Neurosci 36: 6732–6741.

- Han E, Paulus MP, Wittmann M, Chung H, Song JM (2011). Hair analysis and self-report of methamphetamine use by methamphetamine dependent individuals. J Chromatogr B Anal Technol Biomed Life Sci 879: 541–547.

- Harlé KM, Zhang S, Schiff M, Mackey S, Paulus MP, Yu AJ (2015). Altered statistical learning and decision-making in methamphetamine dependence: evidence from a two-armed bandit task. Front Psychol 6: 1910.

- Higa KK, Young JW, Ji B, Nichols DE, Geyer MA, Zhou X (2017). Striatal dopamine D1 receptor suppression impairs reward-associative learning. Behav Brain Res 323: 100–110.

- Huys QJM, Lally N, Faulkner P, Eshel N, Seifritz E, Gershman SJ et al (2015). Interplay of approximate planning strategies. Proc Natl Acad Sci USA 112: 3098–3103.

- Ito M, Doya K (2009). Validation of decision-making models and analysis of decision variables in the rat basal ganglia. J Neurosci 29: 9861–9874.

- Izquierdo A, Belcher AM, Scott L, Cazares VA, Chen J, O’Dell SJ et al (2010). Reversal-specific learning impairments after a binge regimen of methamphetamine in rats: possible involvement of striatal dopamine. Neuropsychopharmacology 35: 505–514.

- Jenni NL, Larkin JD, Floresco SB (2017). Prefrontal dopamine D1 and D2 receptors regulate dissociable aspects of decision making via distinct ventral striatal and amygdalar circuits. J Neurosci 37: 6200–6213.

- Jentsch JD, Olausson P, De La Garza R 2nd, Taylor JR (2002). Impairments of reversal learning and response perseveration after repeated, intermittent cocaine administrations to monkeys. Neuropsychopharmacology 26: 183–190.

- Jentsch JD, Taylor JR (1999). Impulsivity resulting from frontostriatal dysfunction in drug abuse: implications for the control of behavior by reward-related stimuli. Psychopharmacology 146: 373–390.

- Keeler JF, Pretsell DO, Robbins TW (2014). Functional implications of dopamine D1 vs. D2 receptors: A “prepare and select” model of the striatal direct vs. indirect pathways. Neuroscience 282: 156–175.

- Kohno M, Morales AM, Ghahremani DG, Hellemann G, London ED (2014). Risky decision making, prefrontal cortex, and mesocorticolimbic functional connectivity in methamphetamine dependence. JAMA Psychiatry 71: 812–820.

- Kubler A, Murphy K, Garavan H (2005). Cocaine dependence and attention switching within and between verbal and visuospatial working memory. Eur J Neurosci 21: 1984–1992.

- Lau B, Glimcher PW (2005). Dynamic response-by-response models of matching behavior in rhesus monkeys. J Exp Anal Behav 84: 555–579.

- Lee B, Groman S, London ED, Jentsch JD (2007). Dopamine D2/D3 receptors play a specific role in the reversal of a learned visual discrimination in monkeys. Neuropsychopharmacology 32: 2125–2134.

- Ljungberg T, Enquist M (1990). Effects of dopamine D-1 and D-2 antagonists on decision making by rats: no reversal of neuroleptic-induced attenuation by scopolamine. J Neural Transm 82: 167–179.

- Lucantonio F, Stalnaker TA, Shaham Y, Niv Y, Schoenbaum G (2012). The impact of orbitofrontal dysfunction on cocaine addiction. Nat Neurosci 15: 358–366.

- Mattingly BA, Rowlett JK, Lovell G (1993). Effects of daily SKF 38393, quinpirole, and SCH 23390 treatments on locomotor activity and subsequent sensitivity to apomorphine. Psychopharmacology 110: 320–326.

- Montague PR, Dolan RJ, Friston KJ, Dayan P (2012). Computational psychiatry. Trends Cogn Sci 16: 72–80.

- O’Neil ML, Kuczenski R, Segal DS, Cho AK, Lacan G, Melega WP (2006). Escalating dose pretreatment induces pharmacodynamic and not pharmacokinetic tolerance to a subsequent high-dose methamphetamine binge. Synapse 60: 465–473.

- Park SQ, Kahnt T, Beck A, Cohen MX, Dolan RJ, Wrase J et al (2010). Prefrontal cortex fails to learn from reward prediction errors in alcohol dependence. J Neurosci 30: 7749–7753.

- Parker NF, Cameron CM, Taliaferro JP, Lee J, Choi JY, Davidson TJ et al (2016). Reward and choice encoding in terminals of midbrain dopamine neurons depends on striatal target. Nat Neurosci 19: 845–854.

- Parvaz MA, Konova AB, Proudfit GH, Dunning JP, Malaker P, Moeller SJ et al (2015). Impaired neural response to negative prediction errors in cocaine addiction. J Neurosci 35: 1872–1879.

- Paulus MP, Hozack N, Frank L, Brown GG, Schuckit MA (2003). Decision making by methamphetamine-dependent subjects is associated with error-rate-independent decrease in prefrontal and parietal activation. Biol Psychiatry 53: 65–74.

- Redish AD (2004). Addiction as a computational process gone awry. Science 306: 1944–1947.

- Redish AD, Jensen S, Johnson A (2008). A unified framework for addiction: vulnerabilities in the decision process. Behav Brain Sci 31: 415–487.

- Rominger A, Cumming P, Xiong G, Koller G, Böning G, Wulff M et al (2012). [18F]fallypride PET measurement of striatal and extrastriatal dopamine D2/3 receptor availability in recently abstinent alcoholics. Addict Biol 17: 490–503.

- Schoenbaum G, Saddoris MP, Ramus SJ, Shaham Y, Setlow B (2004). Cocaine-experienced rats exhibit learning deficits in a task sensitive to orbitofrontal cortex lesions. Eur J Neurosci 19: 1997–2002.

- Sebold M, Deserno L, Nebe S, Schad DJ, Garbusow M, Hägele C et al (2014). Model-based and model-free decisions in alcohol dependence. Neuropsychobiology 70: 122–131.

- Segal DS, Kuczenski R, O’Neil ML, Melega WP, Cho AK (2003). Escalating dose methamphetamine pretreatment alters the behavioral and neurochemical profiles associated with exposure to a high-dose methamphetamine binge. Neuropsychopharmacology 28: 1730–1740.

- Sjoerds Z, de Wit S, van den Brink W, Robbins TW, Beekman ATF, Penninx BWJH et al (2013). Behavioral and neuroimaging evidence for overreliance on habit learning in alcohol-dependent patients. Transl Psychiatry 3: e337.

- Stalnaker TA, Takahashi Y, Roesch MR, Schoenbaum G (2009). Neural substrates of cognitive inflexibility after chronic cocaine exposure. Neuropharmacology 56: 63–72.

- Stolyarova A, O’Dell SJ, Marshall JF, Izquierdo A (2014). Positive and negative feedback learning and associated dopamine and serotonin transporter binding after methamphetamine. Behav Brain Res 271: 195–202.

- Volkow ND, Wang GJ, Begleiter H, Porjesz B, Fowler JS, Telang F et al (2006). High levels of dopamine D2 receptors in unaffected members of alcoholic families: possible protective factors. Arch Gen Psychiatry 63: 999–1008.
