Abstract

Background

In the search for more straightforward ways of summarizing patient experiences and satisfaction, there is growing interest in the Net Promoter Score (NPS): How likely is it that you would recommend our company to a friend or colleague?

Objective

To assess what the NPS adds to patient experience surveys. The NPS was tested against three other constructs already used in current surveys to summarize patient experiences and satisfaction: global ratings, recommendation questions and overall scores calculated from patient experiences. To establish whether the NPS is a valid measure for summarizing patient experiences, its association with these experiences should be assessed.

Methods

Associations between the NPS and the three other constructs were assessed and their distributions were compared. Also, the association between the NPS and patient experiences was assessed. Data were used from patient surveys of inpatient hospital care (N = 6018) and outpatient hospital care (N = 10 902) in six Dutch hospitals.

Results

Analyses showed that the NPS was moderately to strongly correlated with the other three constructs. However, their distributions proved distinctly different. Furthermore, the patient experiences from the surveys showed weaker associations with the NPS than with the global rating and the overall score.

Conclusions

Because of the limited extent to which the NPS reflects the survey results, it seems less valid as a summary of patient experiences than a global rating, the existing recommendation question or an overall score calculated from patient experiences. In short, it is still unclear what the NPS specifically adds to patient experience surveys.

Keywords: patient satisfaction, patient surveys, quality of care, survey research

Introduction

In recent decades, patient experiences have gained a prominent place in research on quality of care.1, 2 Patients find themselves increasingly involved in assessing the quality of care as ‘health‐care consumers’: How do they themselves perceive the quality of the care they received? Information from patient experience surveys can be used by health‐care providers to see which aspects of care need improvement and which aspects are to the patients’ satisfaction, and also by patients to help them actively choose between health‐care providers.3, 4 Furthermore, health insurance companies or health plans may use patient experiences to contract the best performing health‐care providers and institutions.5

The results of patient experience surveys are usually presented in the form of specific quality indicators (or composites), calculated as an average score over specific survey items. Examples of quality indicators are the attitude of health‐care professionals or the provision of information on treatments. However, stakeholders are still presented with a wide variety of information, without a clear overall view of the results.6, 7, 8 Therefore, global ratings and questions on the recommendation of health‐care providers are commonly included in patient surveys and, in practice, are often used to summarize patient experiences. It has been shown that global ratings are associated with care aspects that are most important to patients and predominantly with patients' experiences regarding care processes.9, 10 Another possibility for summarizing survey results is to construct an overall score from the quality indicators, which also seems to be a promising and valid way to present quality information.11

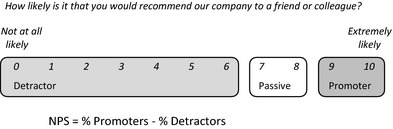

In the search for a simpler and more straightforward way of assessing patient experiences and satisfaction in surveys, there is growing interest in including a Net Promoter Score (or NPS).12, 13 In fact, the NPS is sometimes referred to as ‘the ultimate question’, suggesting it is a summary of consumer (or patient) satisfaction. The NPS stems from management research and was introduced in 2003 by Fred Reichheld.14 The NPS is based on a single question: How likely is it that you would recommend our company to a friend or colleague? Participants can give an answer ranging from 0 (‘not at all likely’) to 10 (‘extremely likely’) (Fig. 1). The assumption is that individuals scoring a 9 or a 10 will give positive word‐of‐mouth advertising; they are called ‘promoters’. Individuals answering 7 or 8 are considered indifferent (‘passives’). Finally, individuals answering 0–6 are likely to be dissatisfied customers and are labelled as ‘detractors’. The Net Promoter Score is then calculated as the percentage of ‘promoters’ minus the percentage of ‘detractors’, giving a score between −100 and +100:

$$\mathrm{NPS} = \%\,\text{promoters} - \%\,\text{detractors}$$

Figure 1.

The Net Promoter Score scale.
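To make the calculation concrete, the following Python sketch computes the score from a list of individual 0–10 answers. The function name `net_promoter_score` and the example answers are hypothetical illustrations, not part of any official NPS tooling; the cut-offs (9–10 for promoters, 0–6 for detractors) follow the description above.

```python
from typing import Iterable


def net_promoter_score(responses: Iterable[int]) -> float:
    """Net Promoter Score in percentage points (from -100 to +100).

    Promoters answer 9-10, passives 7-8 and detractors 0-6; the score is the
    percentage of promoters minus the percentage of detractors.
    """
    scores = list(responses)
    if not scores:
        raise ValueError("at least one response is required")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("responses must lie on the 0-10 scale")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


# Ten hypothetical answers: 4 promoters, 3 passives and 3 detractors.
answers = [10, 9, 9, 10, 8, 7, 8, 6, 3, 5]
print(net_promoter_score(answers))  # 10.0
```

Passives count in the denominator but in neither the promoter nor the detractor share, so they pull the score towards zero without being visible in it.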

Many companies have adopted the NPS as a simple and concise way to examine the satisfaction of customers, even though its methodology and its ability to predict economic growth are disputed.15, 16, 17 However, research regarding its use in the health‐care setting is scarce. A 2005 US study used the NPS to project potential future economic losses and gains for a surgical outpatient clinic.18 However, these projections were not tested in practice. Also, the author observed that the NPS in itself was not useful for identifying why patients were satisfied or dissatisfied with their care; follow‐up was still needed.

Nonetheless, many stakeholders have high expectations regarding the use of the NPS, and some have already adopted it in their patient surveys, such as the Consumer Quality Index (CQI) surveys. The CQI‐surveys are the Dutch standard for measuring patient experiences; their methodology is similar to that of the CAHPS (Consumer Assessment of Healthcare Providers and Systems) questionnaires from the USA.2, 19, 20, 21

For the validity of surveys and their results, it is important to thoroughly research the implications and meaning of newly introduced items. Therefore, in this article, we seek to examine the potential contribution of the NPS to patient experience surveys. To this end, we will test the NPS against three other constructs already available in current surveys: a global rating, a recommendation question and an overall score. An important role of global ratings and recommendation questions is to summarize patient experiences and satisfaction in CAHPS and CQI‐surveys. Both types of items resemble the NPS. Global ratings usually also involve a 0–10 scale, but ask participants to give an overall rating of the quality, instead of the likelihood of recommending the provider or institution. The recommendation question used in the American Hospital CAHPS and CQI questionnaires does involve recommending the provider or institution, but on a labelled scale of 1–4.

The classification of ‘promoters’ (satisfied, scores of 9 and 10) and ‘detractors’ (dissatisfied, scores of 0–6) may present a specific problem for the Netherlands. A 10‐point grading scale is used throughout the Dutch school system, with a score of ‘6’ being the threshold for passing a test, although it is considered a ‘detractor’ in the NPS classification. Also, an ‘8’ is considered a very good result in the Dutch school system, but the NPS considers it ‘passive’. This may have important implications for the validity of the NPS when used in a Dutch setting; it might be expected that participants will respond similarly to the NPS as to the global rating, because their scales are identical. Also, the trichotomy of the NPS may underestimate willingness to recommend by considering ‘6’ a negative and ‘8’ a neutral response.

We will examine the relationship between the NPS, a global rating and a recommendation question. Furthermore, we will examine the association between the NPS and an overall score, constructed from patient experiences. This leads to our first research question:

1. What is the association of the NPS with global ratings, other recommendation questions, and other overall scores of patient experiences?

To establish whether the NPS is a valid measure for summarizing patient experiences, its association with these experiences should be assessed. Therefore, our second research question is:

2. To what extent is the NPS a valid measure for summarizing patient experiences with specific aspects of the quality of care?

Methods

Measurements

NPS (11 categories and 3 categories), global rating and CQI recommendation question

The NPS question was: How likely is it that you would recommend this hospital/clinic to family or friends? The actual Net Promoter Score is an aggregate score at the institutional level (range from −100% to +100%). However, because of the limited number of hospitals included in our data, we examined the NPS question only at the patient level in our analyses (response range of 0–10, i.e. 11 categories; designated NPS11). For an additional analysis, the traditional 3‐category classification of the NPS (NPS3) was also calculated for the individual participants: 0 through 6 for ‘detractors’, 7 and 8 for ‘passives’, and 9 and 10 for ‘promoters’ (see Fig. 1).14 Because of the potentially different psychological boundaries of the NPS classification in the Netherlands, we also constructed an alternative NPS3: 0–5 (detractors), 6–7 (passives) and 8–10 (promoters).
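The two trichotomies can be expressed as cut points on the 0–10 scale. The sketch below is a hypothetical helper (`classify_nps3` and the scheme names `"standard"` and `"alternative"` are ours, not the authors'), assuming integer answers; it returns the category of one participant under either classification.

```python
# Lower bounds of the 'passive' and 'promoter' categories for each scheme.
CUT_POINTS = {
    "standard": (7, 9),     # 0-6 detractor, 7-8 passive, 9-10 promoter
    "alternative": (6, 8),  # 0-5 detractor, 6-7 passive, 8-10 promoter
}


def classify_nps3(score: int, scheme: str = "standard") -> str:
    """Map one 0-10 NPS answer to 'detractor', 'passive' or 'promoter'."""
    if not 0 <= score <= 10:
        raise ValueError("score must lie on the 0-10 scale")
    try:
        passive_from, promoter_from = CUT_POINTS[scheme]
    except KeyError:
        raise ValueError(f"unknown scheme: {scheme!r}") from None
    if score >= promoter_from:
        return "promoter"
    if score >= passive_from:
        return "passive"
    return "detractor"


for answer in (6, 8):
    print(answer, classify_nps3(answer), classify_nps3(answer, "alternative"))
```

An answer of 6 is a ‘detractor’ under the standard scheme but a ‘passive’ under the alternative one, and an 8 moves from ‘passive’ to ‘promoter’; these are the two answers whose classification is discussed in the Results.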

The global rating consisted of a single question: How would you rate the hospital/clinic? It involved 11 response categories, ranging from 0 to 10, in which 0 was labelled the ‘worst possible hospital/clinic’ and 10 labelled the ‘best possible hospital/clinic’.

The CQI recommendation question is: Would you recommend this hospital/clinic to your friends and family? The question involves 4 labelled categories: (i) Definitely no; (ii) Probably no; (iii) Probably yes and (iv) Definitely yes (identical to the American Hospital CAHPS survey).22

CQI patient experiences and the overall score

Each of the items in the CQI‐surveys measures a specific patient experience. Using commonly accepted methods of data reduction, such as factor analysis and reliability analysis, the survey items have been grouped to form quality indicators (or composites). In the analyses, we used these quality indicators to represent patient experiences.

Nine indicators were derived from the CQI Inpatient Hospital Care, each constructed from two or more questions (Cronbach's alpha 0.65–0.82, our data).23 For the outpatient CQI, eight quality indicators were used (Cronbach's alpha 0.79–0.89, our data).24 See Table 2 for the indicators. Indicator scores were constructed by calculating the average score over the items for each participant, provided that the participant answered half or more of the items for that indicator. All indicators had a range of 1 (negative) to 4 (positive). The survey items associated with each quality indicator are presented in an online appendix.
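The scoring rule for a single quality indicator can be sketched as follows. It assumes that each item is already coded on the 1–4 scale and that unanswered items are stored as `None`; both are assumptions, since item coding is not described here (the mapping of items to indicators is in the online appendix). The function name `indicator_score` is hypothetical.

```python
from statistics import mean
from typing import Optional, Sequence


def indicator_score(items: Sequence[Optional[float]]) -> Optional[float]:
    """Average a participant's answered items (1-4) for one quality indicator.

    Unanswered items are None. Returns None when fewer than half of the
    indicator's items were answered.
    """
    answered = [x for x in items if x is not None]
    # "Half or more": comparing 2 * answered with the item count avoids
    # rounding questions for indicators with an odd number of items.
    if not items or 2 * len(answered) < len(items):
        return None
    return mean(answered)


print(indicator_score([4, 3, None]))     # 2 of 3 items answered: scored
print(indicator_score([4, None, None]))  # 1 of 3 items answered: None
```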

Table 2.

Spearman's rank correlations between CQI patient experiences and NPS, global ratings, CQI recommendation and overall scores

| CQI quality indicator | No. of items | α | NPS11 | Global rating of hospital | CQI recommendation | Overall score (item–rest) |
|---|---|---|---|---|---|---|
| Inpatient hospital care (a) | | | | | | |
| Communication on intake | 10 | 0.80 | 0.20 | 0.24 | 0.21 | 0.44 |
| Nurse communication | 3 | 0.82 | 0.40 | 0.49 | 0.41 | 0.56 |
| Doctor communication | 2 | 0.81 | 0.38 | 0.47 | 0.37 | 0.52 |
| Autonomy | 5 | 0.68 | 0.33 | 0.39 | 0.34 | 0.52 |
| Treatment information | 3 | 0.80 | 0.35 | 0.40 | 0.33 | 0.61 |
| Pain treatment | 2 | 0.77 | 0.37 | 0.41 | 0.35 | 0.51 |
| Medication communication | 2 | 0.69 | 0.35 | 0.41 | 0.35 | 0.63 |
| Security | 3 | 0.65 | 0.32 | 0.40 | 0.33 | 0.53 |
| Information at discharge | 5 | 0.76 | 0.23 | 0.28 | 0.24 | 0.41 |
| Outpatient hospital care (b) | | | | | | |
| Reception at clinic | 4 | 0.85 | 0.38 | 0.43 | 0.38 | 0.39 |
| Contact with doctor | 3 | 0.87 | 0.36 | 0.42 | 0.38 | 0.51 |
| Information from doctor | 4 | 0.86 | 0.36 | 0.41 | 0.37 | 0.56 |
| Communication with doctor | 3 | 0.85 | 0.36 | 0.43 | 0.39 | 0.57 |
| Contact with other care professional | 3 | 0.88 | 0.31 | 0.35 | 0.31 | 0.50 |
| Information from other care professional | 4 | 0.89 | 0.31 | 0.37 | 0.32 | 0.59 |
| Communication with other care professional | 3 | 0.88 | 0.33 | 0.37 | 0.34 | 0.60 |
| Aftercare and medication | 3 | 0.79 | 0.20 | 0.29 | 0.21 | 0.31 |

(a) N = 3130–5985.

(b) N = 3743–10 854.

All correlations are significant at P < 0.001.

The overall score of the patient experiences was then calculated at the patient level as the average of all the individual quality indicator scores. The overall score for each participant was calculated if they had a score for at least half of the quality indicators. In practice, this meant scores for at least 4 (hospital inpatients) or 5 (hospital outpatients) of the quality indicators.
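A minimal sketch of the overall score, assuming indicator scores have already been computed per participant, with `None` for indicators that could not be scored. The minimum number of indicators is passed explicitly (`min_indicators`, a hypothetical parameter name) because the text reports the thresholds as fixed numbers (4 for inpatients, 5 for outpatients) rather than as a formula.

```python
from statistics import mean
from typing import Optional, Sequence


def overall_score(
    indicators: Sequence[Optional[float]], min_indicators: int
) -> Optional[float]:
    """Average a participant's available quality-indicator scores.

    Returns None when fewer than `min_indicators` indicator scores exist.
    """
    available = [s for s in indicators if s is not None]
    if not available or len(available) < min_indicators:
        return None
    return mean(available)


# Hypothetical inpatient with 4 of the 9 indicator scores available.
inpatient = [3.5, 3.0, None, None, 4.0, None, 2.5, None, None]
print(overall_score(inpatient, min_indicators=4))
```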

Data

Data were used from two CQI patient experience surveys: inpatient hospital care (short form) and outpatient hospital care.23, 24, 25, 26 Patients were recruited in six Dutch hospitals.27 A random selection of patients from these hospitals is made quarterly, and these patients are invited to participate in the survey, either online or by filling out a paper questionnaire. Ethical approval of the study was not necessary, as research by means of surveys that are not taxing or hazardous for patients (i.e. the once‐only completion of a questionnaire containing questions that do not constitute a serious encroachment on the person completing it) is not subject to the Dutch Medical Research Involving Human Subjects Act (WMO). Subjects were informed by letter about the aim of the survey and were free to respond to the questionnaire. Data collection was performed by a third party and took place in the third quarter of 2013. The data from the CQI inpatient hospital care survey covered the experiences of 6018 inpatients and the data from the CQI outpatient hospital care survey were based on the responses of 10 902 outpatients, corresponding to response rates of 29% (inpatient survey) and 27% (outpatient survey) among the patients invited to participate. If patients were below the age of 18, their parents could fill out the questionnaire on their behalf. Patient characteristics of the two data sets were almost equal for gender (inpatients 48% male; outpatients 52%) and age (inpatients 23% <45 years, 34% 45–64 years and 42% >64 years; outpatients 19% <45 years, 40% 45–64 years and 41% >64 years). These samples were largely representative,26 although women and the youngest age group (<45 years old) were somewhat underrepresented compared to the middle‐aged group.

Analyses

The relationship of the NPS to the three other constructs was first assessed by examining the distributions of the scores. For the NPS and the global rating, this was done by comparing the two 11‐point scales, including an analysis of variance (ANOVA). Because of the ordinal nature and labelled response categories of the CQI recommendation question, its association with the NPS was examined using the standard 3‐category classification of detractors, passives, and promoters (NPS3) and our alternative classification. Conceptually, the ‘Definitely no’ and ‘Definitely yes’ categories of the CQI recommendation question should correspond respectively with the ‘detractor’ and the ‘promoter’ categories of the NPS.
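As an illustration of the cross‐tabulation behind this comparison (Fig. 3 reports CQI recommendation answers within each NPS3 category), the sketch below computes row percentages using only the Python standard library. The function names and the (NPS answer, CQI answer) pair format are assumptions for illustration; the answer labels follow the four CQI categories listed under Measurements, and the standard NPS3 cut-offs are used.

```python
from collections import Counter, defaultdict

NPS3_ORDER = ("detractor", "passive", "promoter")
CQI_ORDER = ("Definitely no", "Probably no", "Probably yes", "Definitely yes")


def nps3(score: int) -> str:
    """Standard NPS classification of a single 0-10 answer."""
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def recommendation_by_nps3(pairs):
    """Row percentages of CQI recommendation answers within each NPS3 group.

    `pairs` is an iterable of (nps_score, cqi_answer) tuples for respondents
    who answered both questions. Empty NPS3 groups are left out.
    """
    counts = defaultdict(Counter)
    for nps_score, cqi_answer in pairs:
        counts[nps3(nps_score)][cqi_answer] += 1
    table = {}
    for group in NPS3_ORDER:
        total = sum(counts[group].values())
        if total:
            table[group] = {a: 100.0 * counts[group][a] / total for a in CQI_ORDER}
    return table


# Six hypothetical respondents.
sample = [(10, "Definitely yes"), (9, "Definitely yes"), (8, "Definitely yes"),
          (8, "Probably yes"), (6, "Probably yes"), (4, "Probably no")]
for group, row in recommendation_by_nps3(sample).items():
    print(group, row)
```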

Second, Spearman's rank correlations were calculated between the NPS11, the global rating, the CQI recommendation question and the overall score.
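Spearman's rank correlation is the Pearson correlation of the ranks, with tied values receiving the average of their ranks. A dependency‐free sketch is given below. Dropping incomplete pairs (pairwise deletion) is our assumption, suggested by, but not stated alongside, the varying N reported under Tables 1 and 2. In practice a library routine such as `scipy.stats.spearmanr` (with `nan_policy="omit"` for missing values) would normally be used instead.

```python
from math import sqrt
from typing import List, Optional, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x: Sequence[Optional[float]], y: Sequence[Optional[float]]) -> float:
    """Spearman's rho as the Pearson correlation of average ranks.

    Pairs with a missing value (None) in either variable are dropped first.
    Raises ZeroDivisionError if either variable is constant.
    """
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    if len(pairs) < 2:
        raise ValueError("need at least two complete pairs")
    rx = average_ranks([a for a, _ in pairs])
    ry = average_ranks([b for _, b in pairs])
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)
```

Average ranks matter here because 0–10 and 1–4 answers contain many ties; ranking tied answers arbitrarily would change the coefficient.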

To determine the validity of the NPS in summarizing patient experiences, we examined the extent to which the NPS was associated with the CQI quality indicators from both surveys. Spearman's rank correlation coefficients were calculated between the quality indicators and the NPS11. The stronger the association, the better the indicator scores were reflected by the NPS. To compare the results, the same analyses were performed for the global rating, the CQI recommendation question and the overall score. However, the associations between the individual quality indicators and the overall score were likely to be exaggerated due to possible mathematical coupling (i.e. the individual indicator is included in the calculation of the overall score).28 Therefore, the item–rest correlation of each quality indicator was calculated. This meant that for each quality indicator, its association with the overall score was assessed while omitting that specific indicator from the overall score. For example, to examine the association between the indicator ‘Doctor communication’ and the overall score, the overall score was calculated using all the quality indicator scores except the score for the ‘Doctor communication’ indicator.

All correlation coefficients were checked pairwise for significant differences.29, 30
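References 29 and 30 are not reproduced in this section, so the exact test is not stated here. One common choice for checking whether two correlations that share a variable differ (for example, NPS11 and the global rating, each correlated with the same quality indicator) is the z‐test of Meng, Rosenthal and Rubin (1992). The sketch below implements that test as an assumption, not as the authors' confirmed method; it relies on Fisher's r‐to‐z transformation and a normal approximation, which is only approximate when applied to Spearman rather than Pearson coefficients.

```python
from math import atanh, erfc, sqrt


def compare_dependent_correlations(r1: float, r2: float, r12: float, n: int):
    """Meng, Rosenthal & Rubin (1992) z-test of H0: rho(x1, y) == rho(x2, y).

    r1  = correlation of x1 with y (e.g. NPS11 with a quality indicator)
    r2  = correlation of x2 with y (e.g. global rating with that indicator)
    r12 = correlation between x1 and x2 (e.g. NPS11 with the global rating)
    n   = number of participants with all three variables
    Returns (z, two-sided p-value).
    """
    z1, z2 = atanh(r1), atanh(r2)  # Fisher r-to-z transformation
    r_sq_bar = (r1 ** 2 + r2 ** 2) / 2
    f = min((1 - r12) / (2 * (1 - r_sq_bar)), 1.0)  # f is capped at 1
    h = (1 - f * r_sq_bar) / (1 - r_sq_bar)
    z = (z1 - z2) * sqrt((n - 3) / (2 * (1 - r12) * h))
    p = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Inpatient 'Nurse communication': NPS11 0.40 vs global rating 0.49 (Table 2),
# NPS11-global rating 0.71 (Table 1); n = 3130 is a hypothetical sample size.
z, p = compare_dependent_correlations(0.40, 0.49, 0.71, n=3130)
print(round(z, 2), p)
```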

Results

NPS, global rating, CQI recommendation question and overall score

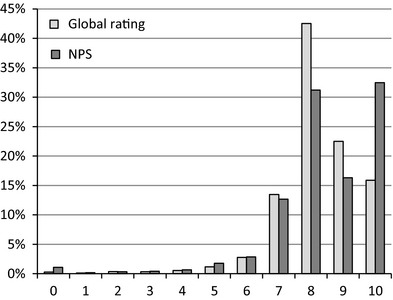

Figure 2 shows the distribution of the NPS‐scores compared to that of the global rating for the outpatient hospital care data. For scores up to 7, the global rating and NPS were almost identical. From scores of 8, however, differences became apparent. Many participants selected an 8 (43%) or a 9 (23%) in the global rating, but only 16% awarded a 10. Conversely, 32% of the participants answered the NPS question with a 10. The ANOVA F‐test showed that the distributions of the global rating and the NPS were significantly different (P < 0.01). These distributions (and their differences) were comparable to the corresponding two distributions for the inpatient hospital data (data not shown).

Figure 2.

Global ratings and NPS11 for outpatient hospital care (N = 10 824).

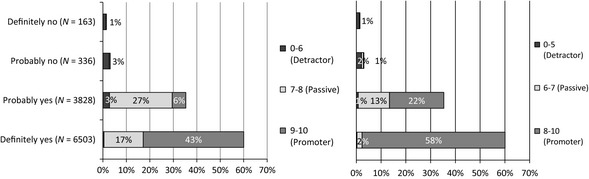

Figure 3 shows the responses on the CQI recommendation question for outpatient hospital data, broken down by scores on the NPS3. Almost all promoters would ‘definitely’ recommend the hospital according to the CQI recommendation question. However, 17% of the ‘passive’ patients would also ‘definitely’ recommend the hospital. An inspection of the data showed that these were mainly participants selecting 8 in the NPS question. Furthermore, the detractors were almost as likely to answer ‘probably yes’ as ‘probably no’ to the CQI recommendation question.

Figure 3.

Responses on CQI recommendation question, broken down by NPS3 scores (standard and alternative; outpatient hospital care) (N = 10 830).

With regard to respondents answering ‘6’ on the NPS, 20% answered ‘probably no’ and 72% answered ‘probably yes’. They therefore seem to fit better in the ‘passive’ than in the ‘detractor’ category, as expected. However, respondents answering ‘8’ on the NPS were about as likely to answer ‘probably yes’ (52%) as ‘definitely yes’ (48%) on the CQI recommendation question, contrary to our hypothesis. The second part of Fig. 3 plots the CQI recommendation against the alternative NPS classification, showing the effect this classification would have on the interpretation of NPS‐scores. Mainly because ‘8’ is categorized differently in the alternative NPS3, there were fewer ‘passives’ in the ‘Definitely yes’ category, but also more ‘promoters’ in the ‘Probably yes’ category.

All results were comparable to those of the inpatient hospital data (data not shown).

Next, the actual correlations between the NPS and the 3 other constructs were assessed, which are presented in Table 1. The NPS11 seemed to be most strongly associated with the global rating. The relationship of the NPS with the CQI recommendation question proved somewhat weaker, but stronger than the association between the CQI recommendation question and the global rating: 0.67 vs. 0.60 (inpatient hospital), and 0.63 vs. 0.59 (outpatient hospital). The overall score had limited associations with each of the other 3 constructs.

Table 1.

Spearman's rank correlations between NPS, global ratings, CQI recommendation and overall scores

| | NPS11 | Global rating of hospital | CQI recommendation |
|---|---|---|---|
| Inpatient hospital care (a) | | | |
| Global rating | 0.71 | – | |
| CQI recommendation | 0.67 | 0.60 | – |
| Overall score | 0.44 | 0.54 | 0.44 |
| Outpatient hospital care (b) | | | |
| Global rating | 0.72 | – | |
| CQI recommendation | 0.63 | 0.59 | – |
| Overall score | 0.44 | 0.52 | 0.44 |

(a) N = 5927–5989.

(b) N = 9713–10 841.

All correlations significant at P < 0.001.

Patient experiences

To answer the second research question, the associations of the NPS11 with the quality indicators of patient experiences were analysed for both inpatient and outpatient hospital care (Table 2). The correlations between the NPS and the quality indicators were fairly low (0.20–0.40). The strongest relationships, in both surveys, were found with regard to the interaction with nursing staff and doctors. In short, the NPS reflected patient experiences only to a limited extent.

Although the same can be said of the global rating, its associations with the quality indicators were stronger than for the NPS. The associations between the quality indicators and the CQI recommendation question did not differ from those of the NPS. The overall score showed the highest correlations with the individual quality indicators, significantly higher than each of the correlations of the other constructs. In short, it could be concluded that the NPS did not reflect patient experiences to the same extent as the global rating or the overall score.

Discussion

Conclusions

To examine the possible contribution of the Net Promoter Score question to patient experience surveys, its relationships with three other constructs used for summarizing survey results were assessed: a global rating, the CQI recommendation question and an overall score, calculated from the quality indicators of patient experiences. Also, the associations of these 4 constructs with actual patient experiences were examined to test their validity in summarizing patient experiences.

Although the NPS was moderately to strongly correlated with the three other constructs, it proved to differ considerably in its distribution from the global rating (NPS11) and especially from the CQI recommendation question (NPS3). More importantly, the NPS showed weaker associations with the patient experiences than the global rating and an overall score, making it a less valid score for summarizing patient experiences.

Discussion of findings

The NPS showed a moderate association with the CQI recommendation question. More importantly, however, a comparison of the NPS3 and the CQI recommendation question showed some inconsistent results. A substantial number of participants answered the CQI recommendation question more positively than their NPS3 classification would suggest. Bearing in mind the potentially different psychological boundaries of a 0–10 scale in the Netherlands compared to the USA, the original NPS3 classification may lead to an overly negative assessment of patients’ willingness to recommend. Judging by the answers to the CQI recommendation question, this was especially clear for respondents answering ‘6’ on the NPS. For respondents answering ‘8’, however, the interpretation can go either way: categorizing them as ‘passives’ underestimates the likelihood to recommend for half of them, whereas categorizing them as ‘promoters’ overestimates it for the rest. Nonetheless, the same problem may occur anywhere if participants interpret the NPS response categories as percentages (0 = 0%, 10 = 100%). An alternative trichotomy of NPS‐scores that treats ‘6’ as ‘passive’ indeed fitted the CQI recommendation responses in our data better, although the classification of ‘8’ remains an issue (0–5; 6–7; 8–10 or 0–5; 6–8; 9–10).

Alternatively, to avoid the problem of the NPS categorization, one could refrain from calculating the Net Promoter Score and report only the average NPS11 for each provider. Indeed, an 11‐point scale allows for more detailed responses from participants and may also be statistically more attractive than the 4‐point scale of the CQI recommendation question. It should be noted, however, that all four response categories of the CQI recommendation question are labelled, whereas the NPS question only has labels at the two extreme ends of the scale. It may be argued that labelled response categories are interpreted more consistently across participants than the NPS classification into detractors, passives and promoters, which is imposed only during analysis, after data collection. At the very least, it is safe to state that replacing the CQI recommendation question with the NPS11 or, even more so, with the original NPS3 may have a substantial effect on the assessment of the quality of care.
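The practical difference between the two summaries can be illustrated with two hypothetical providers whose patients give the same average NPS11 answer. Both have a mean of 8, yet the NPS of provider A is 0 (all passives) and that of provider B is +50 (three promoters, one detractor), because the NPS3 classification discards the distance between answers within a category. The data and provider names are invented for illustration.

```python
from statistics import mean


def net_promoter_score(scores):
    """Promoters (9-10) minus detractors (0-6), in percentage points."""
    promoters = sum(s >= 9 for s in scores)
    detractors = sum(s <= 6 for s in scores)
    return 100.0 * (promoters - detractors) / len(scores)


# Hypothetical providers with identical mean NPS11 answers.
providers = {
    "provider A": [8, 8, 8, 8],
    "provider B": [9, 9, 9, 5],
}
for name, scores in providers.items():
    print(name, "mean NPS11:", mean(scores), "NPS:", net_promoter_score(scores))
```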

In terms of summarizing patient experiences, the NPS does not seem to be favourable. The correlations between the patient experiences and the NPS11 were low and similar to those of the CQI recommendation question. Our findings support the use of the global rating in preference to the NPS and the CQI recommendation question as a summary score of patient experiences, but an overall score may be even better. Nonetheless, in contrast to the global rating, the construction of an overall score does present challenges and may still be difficult to use or interpret for stakeholders.11 A global rating can thus be a pragmatic choice.

Strengths and limitations

As explained in the introduction, the actual NPS of an organization is calculated at an aggregate level, from the individual responses to the NPS question. Due to the limited number of hospitals in our data set, it was not meaningful to examine the characteristics of the NPS at the hospital level.

Even though the response rates in both surveys were just under 30%, participants seemed largely representative of the population visiting hospitals and outpatient clinics regarding gender and age, although younger patients seemed to be somewhat underrepresented. Poor health did not seem to prevent people from participating in the surveys. In the end, we were able to assess the association between the NPS and CQI survey items at the individual level for almost 16 000 patients from two data sets. Therefore, we are confident that our findings are representative of these two patient experience surveys.

For confirmatory purposes, the same analyses were performed on a patient satisfaction survey on inpatient hospital care (3500 cases) and outpatient hospital care (13 500 cases), and a patient experience survey regarding integrated asthma and COPD care (1500 cases). In all surveys, the global rating showed higher associations with both patient satisfaction and patient experiences than the NPS question (data not reported).

The implications of the NPS classification may have presented a specific problem for use in a Dutch setting, because of its school grading system. Nevertheless, it would be interesting to see how the NPS classification works out in other countries compared to a global rating, and whether different response patterns would emerge.

Recommending health‐care institutions and customer loyalty

Our aim in this article was to examine the possible contribution of the NPS to patient experience surveys. In the business world, the NPS is used to measure consumer loyalty in order to predict economic growth: is it likely that customers will return? Although health‐care institutions may compete with each other for ‘customers’, ‘consumer loyalty’ and ‘economic growth’ are difficult issues in health care and may vary considerably between disciplines and health conditions.13 Imagine, for example, relating the NPS of a hospital to the number of patients treated over time. For many health‐care disciplines, it is questionable whether a growing number of patients is feasible, relevant or desirable. In this sense, the usefulness of the NPS in health care is not clear.

It is possible that a more fundamental problem exists with regard to recommending health‐care providers. In 2012, the National Health Service (NHS) explored the use of ‘overarching questions’ in UK patient experience surveys, one of which was the NPS.12 In fact, their research also suggested that a global rating of care was the most suitable option for an ‘overarching measure of patient experience’. With regard to the NPS, they discovered another important problem: people considered it strange to ‘recommend’ a health‐care institution, regardless of its quality of care, because they would not want their family and friends to need the care they themselves needed. In spite of this finding, as of October 2012, NHS trusts in the UK are legally required to add the so‐called Friends and Family Test to patient experience surveys, a question identical to the CQI recommendation question except for a neutral midpoint category: ‘Neither likely nor unlikely’.31 Perhaps adding such a neutral category to the CQI recommendation question will make this item more informative. However, if many patients follow the above line of reasoning, it is questionable whether questions about recommending health‐care providers, such as the NPS and the CQI recommendation question, are valid at all.

It is important to bear in mind that summarizing survey results should not be a goal in itself; it inevitably oversimplifies results and may thus obscure potentially relevant findings for stakeholders.18, 32 Moreover, in this study, correlations between the summarizing constructs and patient experiences proved to be moderate at best. Summarizing constructs should therefore only be used as an addition to survey results; the individual indicator scores and their associated items show specifically which processes need improvement and where differences between providers occur, making them vital for quality assessment and identifying possibilities for health‐care improvement.

Sources of funding

None.

Conflict of interests

None of the authors have any conflict of interests to declare.

Supporting information

Appendix S1. Survey items CQI surveys.

Acknowledgements

The authors would like to thank the Santeon hospitals and Stichting Miletus for providing the data used in this research, as well as the Atrium MC and MeteQ for the use of their data in checking our findings. These data were provided unconditionally and free of charge for the purposes of the manuscript.

References

- 1. Cleary PD. The increasing importance of patient surveys. Now that sound methods exist, patient surveys can facilitate improvement. British Medical Journal, 1999; 319: 720–721. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Delnoij DM, Rademakers JJ, Groenewegen PP. The Dutch consumer quality index: an example of stakeholder involvement in indicator development. BMC Health Services Research, 2010; 10: 88. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. Maarse H, Meulen RT. Consumer choice in Dutch health insurance after reform. Health Care Analysis, 2006; 14: 37–49. [DOI] [PubMed] [Google Scholar]

- 4. Hendriks M, Spreeuwenberg P, Rademakers J, Delnoij DMJ. Dutch healthcare reform: did it result in performance improvement of health plans? A comparison of consumer experiences over time. BMC Health Services Research, 2009; 9: 167. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Enthoven AC, Van de Ven WP. Going Dutch–managed‐competition health insurance in the Netherlands. New England Journal of Medicine, 2007; 357: 2421–2423. [DOI] [PubMed] [Google Scholar]

- 6. Hibbard JH, Slovic P, Peters E, Finucane ML. Strategies for reporting health plan performance information to consumers: evidence from controlled studies. Health Services Research, 2002; 37: 291–313. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Hibbard JH, Peters E. Supporting informed consumer health care decisions: data presentation approaches that facilitate the use of information in choice. Annual Review of Public Health, 2003; 24: 413–433. [DOI] [PubMed] [Google Scholar]

- 8. Damman OC, Hendriks M, Rademakers J, Delnoij DM, Groenewegen PP. How do healthcare consumers process and evaluate comparative healthcare information? A qualitative study using cognitive interviews. BMC Public Health, 2009; 9: 423.

- 9. de Boer D, Delnoij D, Rademakers J. Do patient experiences on priority aspects of health care predict their global rating of quality of care? A study in five patient groups. Health Expectations, 2010; 13: 285–297.

- 10. Rademakers J, Delnoij D, de Boer D. Structure, process or outcome: which contributes most to patients' overall assessment of healthcare quality? BMJ Quality and Safety, 2011; 20: 326–331.

- 11. Krol MW, de Boer D, Rademakers JJ, Delnoij DM. Overall scores as an alternative to global ratings in patient experience surveys; a comparison of four methods. BMC Health Services Research, 2013; 13: 479.

- 12. Graham C, McCormick S. Overarching Questions for Patient Surveys: Development Report for the Care Quality Commission (CQC). Oxford: Picker Institute Europe, 2012. Available at: http://www.nhssurveys.org/Filestore/reports/Overarching_questions_for_patient_surveys_v3.pdf, accessed 22 July 2014.

- 13. Knightley‐Day T. NPS: Will it Work in Health? Portslade: Fr3dom Health Solutions Ltd, 2012. Available at: http://www.friendsandfamilytest.co.uk/PDFS/May%202012_NPS%20Will%20it%20work%20in%20health.pdf, accessed 22 July 2014.

- 14. Reichheld FF. The one number you need to grow. Harvard Business Review, 2003; 81: 46–54.

- 15. Keiningham TL, Cooil B, Andreassen TW, Aksoy L. A longitudinal examination of Net Promoter and firm revenue growth. Journal of Marketing, 2007; 71: 39–51.

- 16. Schneider D, Berent M, Thomas R, Krosnick J. Measuring customer satisfaction and loyalty: improving the ‘Net‐Promoter’ Score, 2008. Available at: http://www.van-haaften.nl/images/documents/pdf/Measuring%20customer%20satisfaction%20and%20loyalty.pdf, accessed 22 July 2014.

- 17. Ramshaw A. Net Promoter Score® Research: the ‘for’ and ‘against’ list, 2011. Available at: http://www.genroe.com/blog/net-promoter-score-research-the-for-and-against-list/779, accessed 22 July 2014.

- 18. Kinney WC. A simple and valuable approach for measuring customer satisfaction. Otolaryngology – Head and Neck Surgery, 2005; 133: 169–172.

- 19. Hargraves JL, Hays RD, Cleary PD. Psychometric properties of the Consumer Assessment of Health Plans Study (CAHPS) 2.0 adult core survey. Health Services Research, 2003; 38: 1509–1527.

- 20. Hays RD, Shaul JA, Williams VS et al. Psychometric properties of the CAHPS 1.0 survey measures. Consumer Assessment of Health Plans Study. Medical Care, 1999; 37: MS22–MS31.

- 21. Delnoij DM, ten Asbroek G, Arah OA et al. Made in the USA: the import of American Consumer Assessment of Health Plan Surveys (CAHPS) into the Dutch social insurance system. European Journal of Public Health, 2006; 16: 652–659.

- 22. Weech‐Maldonado R, Elliott M, Pradhan R, Schiller C, Hall A, Hays RD. Can hospital cultural competency reduce disparities in patient experiences with care? Medical Care, 2012; 50: S48–S55.

- 23. Batterink M. Further Development CQI Hospitalization 2011 [in Dutch]. Barneveld: Significant, 2011. Available at: http://www.zorginstituutnederland.nl/binaries/content/documents/zinl-www/kwaliteit/toetsingskader-en-register/cq-index/cqi-vragenlijsten/cqi-vragenlijsten/cqi-vragenlijsten/zinl%3Aparagraph%5B35%5D/zinl%3Adocuments%5B5%5D/1401-analyserapport-doorontwikkeling-cqi-ziekenhuisopname-significant/Analyserapport+Doorontwikkeling+CQI+Ziekenhuisopname+%28Significant%29.pdf, accessed 22 July 2014.

- 24. Linschoten C, Barf HA, Moorer P, Spoorenberg S. CQ‐index Outpatient Hospital Care: Instrument development [in Dutch]. Groningen: ARGO Rijksuniversiteit Groningen, 2011. Available at: http://www.zorginstituutnederland.nl/binaries/content/documents/zinl-www/kwaliteit/toetsingskader-en-register/cq-index/cqi-vragenlijsten/cqi-vragenlijsten/cqi-vragenlijsten/zinl%3Aparagraph%5B26%5D/zinl%3Adocuments%5B3%5D/1312-cq-index-poliklinische-ziekenhuiszorg-meetinstrumentontwikkeling/CQ-index+Poliklinische+Ziekenhuiszorg+-+meetinstrumentontwikkeling.pdf, accessed 22 July 2014.

- 25. Sixma H, Delnoij DM. Measuring patient experiences in the Netherlands: the case of hospital care. European Journal of Public Health, 2008; 18: 88.

- 26. Sixma H, Spreeuwenberg P, Zuidgeest M, Rademakers J. CQ‐index Hospitalization: Instrument Development. Quality of Care During Hospitalization from the Perspective of Patients [in Dutch]. Utrecht: NIVEL, 2009.

- 27. Santeon Hospitals 2013. Available at: http://www.santeon.nl/en, accessed 22 July 2014.

- 28. Farmery AD. Mathematical coupling in research. British Journal of Anaesthesia, 1999; 82: 147–149.

- 29. Caci HM. CORTESTI: Stata Module to Test Equality of Two Correlation Coefficients. Statistical Software Components, Boston College Department of Economics, 2000. Available at: http://fmwww.bc.edu/RePEc/bocode/c/cortesti.html and http://fmwww.bc.edu/repec/bocode/c/cortesti.ado, accessed 22 July 2014.

- 30. Cohen J, Cohen P. Applied Multiple Regression/Correlation Analysis for the Behavioral Sciences. Hillsdale, NJ: Lawrence Erlbaum, 1983.

- 31. Patient Experience and Outcomes Framework (NHS). Friends and family test, 2013. Available at: http://www.patientexperience.co.uk/en/friends-and-family-test/fft-score-calculation, accessed 22 July 2014.

- 32. Damman OC, Hendriks M, Rademakers J, Spreeuwenberg P, Delnoij DM, Groenewegen PP. Consumers' interpretation and use of comparative information on the quality of health care: the effect of presentation approaches. Health Expectations, 2012; 15: 197–211.