Abstract

Many topics that scientists investigate speak to people’s ideological worldviews. We report three studies—including an analysis of large-scale survey data—in which we systematically investigate the ideological antecedents of general faith in science and willingness to support science, as well as of science skepticism of climate change, vaccination, and genetic modification (GM). The main predictors are religiosity and political orientation, morality, and science understanding. Overall, science understanding is associated with vaccine and GM food acceptance, but not climate change acceptance. Importantly, different ideological predictors are related to the acceptance of different scientific findings. Political conservatism best predicts climate change skepticism. Religiosity, alongside moral purity concerns, best predicts vaccination skepticism. GM food skepticism is not fueled by religious or political ideology. Finally, religious conservatives consistently display a low faith in science and an unwillingness to support science. Thus, science acceptance and rejection have different ideological roots, depending on the topic of investigation.

Keywords: science, religion, conservatism, morality, science skepticism, anti-science

Exploring the Ideological Antecedents of Science Acceptance and Rejection

Throughout history, the relationship between science and religion has been tense and contentious. At various times in history, for example, when Galileo Galilei introduced his heliocentric model, or when Darwin introduced the theory of evolution by natural selection, science and religion seemed to be on a collision course. However, there have also been voices—in religion as well as in science—that claim compatibilism (e.g., Gould, 1997; Sager, 2008). In modern times, science continues to spark controversy among the general public. As a testament to this, public attitudes toward science seem to once again have become more polarized. Although recent large-scale surveys conducted in North America and the United Kingdom suggest that scientists rank among the most respected professions—alongside doctors, nurses, firefighters, and military officers (see Rutjens & Heine, 2016; The Harris Poll, 2014)—others point to an increased public distrust in science and a growing anti-science movement, particularly among conservatives (e.g., Gauchat, 2012; Nature Editorial, 2017; Pittinsky, 2015). However, research examining this alleged link between political conservatism and the rejection of science has produced mixed findings.

Of all the potentially contentious topics that scientists investigate, researchers interested in science skepticism have taken the most interest in the environmental and biomedical sciences, in particular, the topics of climate change, childhood vaccination, and genetic modification (GM). For example, political conservatism and endorsement of free-market ideology reliably predict anthropogenic climate change skepticism (Lewandowsky, Gignac, & Oberauer, 2013; Lewandowsky & Oberauer, 2016; Lewandowsky, Oberauer, & Gignac, 2013). Indeed, this link between political ideology and climate change skepticism was recently confirmed in a meta-analysis (Hornsey, Harris, Bain, & Fielding, 2016). In contrast, conservatives (vs. liberals) were found not to be more prone to anti-vaccine attitudes¹ or to GM food skepticism (a similar observation was made by Scott, Inbar, & Rozin, 2016; see also Kahan, 2015).

But what about religion? Given the tense history of the science–religion relationship, it is striking that relatively little empirical work has investigated how modern rejection of science might be fueled by religiosity (McPhetres & Nguyen, 2017; Rutjens, Heine, Sutton, & van Harreveld, in press). Indeed, measures of religious belief and religious identity are, as far as we are aware, curiously absent (or, at best, religiosity is briefly mentioned as a demographic control variable) in the bulk of the recent work on science skepticism. One notable exception is work showing that religiosity is negatively related to support for nanotechnology funding (Brossard, Scheufele, Kim, & Lewenstein, 2008). A compelling theoretical reason for why it is important to take religion into account is that science and religion both function as ultimate explanatory frameworks (or belief systems) that aim to provide answers to the big questions in life, and that the explanations provided by each framework can be at odds with each other (e.g., in the case of evolution by natural selection; Blancke, De Smedt, De Cruz, Boudry, & Braeckman, 2012; Thagard & Findlay, 2010). Indeed, not only can science provide support for explanations that are incompatible with religious doctrine (Blancke et al., 2012; Farias, 2013; McCauley, 2011; Preston & Epley, 2009), scientific understanding also routinely runs counter to various intuitions about how the world works. These intuitions result from evolved cognitive biases such as teleology and essentialism, and render already counterintuitive scientific theories even more difficult to understand and accept (cf. McCauley, 2011). In addition, scientific and technological progress sometimes runs counter to deeply held religious beliefs and values, for example, in the case of stem cell research, GM, and genome editing (Rutjens, van Harreveld, van der Pligt, van Elk, & Pyszczynski, 2016; see also Heine, Dar-Nimrod, Cheung, & Proulx, 2017).

Religiosity and political ideology reliably intercorrelate; political conservatives are on average more religious than liberals (Graham, Haidt, & Nosek, 2009; Layman, 2001; Malka, Lelkes, Srivastava, Cohen, & Miller, 2012). This means that some of the previous work on science skepticism above may have confounded conservatism with religiosity, so that some but perhaps not all science skepticism might be fueled by religiosity rather than political ideology. In addition, both political conservatives and religious believers place relatively strong emphasis on traditional—or binding—moral values, such as respect for authority, loyalty toward the ingroup, and the importance of maintaining the natural order of things (Graham et al., 2009; McKay & Whitehouse, 2015; Piazza & Sousa, 2014; Rutjens et al., 2016). This brings us to another potential catalyst of science skepticism: morality, in particular, moral concerns about naturalness and purity.

Indeed, one other reason why modern science elicits such ambivalent evaluations is that many fields of research involve topics that speak to people’s deeply held moral views about society and the world. Moralized attitudes (or moral convictions) have been shown to be different from nonmoral attitudes (Skitka, Bauman, & Sargis, 2005). More specifically, they refer to an absolute belief that something is right or wrong, and are therefore not negotiable, even in the light of new information or evidence. In other words, when members of the public read about research on—for example—evolution, nanotechnology, GM, vaccination, equality and fairness, drugs and health, or violence in video games, it is not surprising that their evaluations of the scientific evidence will at least partially be shaped by their preexisting moralized attitudes and ideologies (Blancke et al., 2012; Brossard et al., 2008; Diethelm & McKee, 2009; Douglas & Sutton, 2015; Hornsey et al., 2016; Lewandowsky, Gignac, & Oberauer, 2013, 2015; Scott et al., 2016). The degree to which people take moral offense to science findings predicts their unwillingness to accept these findings (Colombo, Bucher, & Inbar, 2016), and disgust-based concerns about moral purity are a recurring theme (e.g., in the case of GM resistance; Scott et al., 2016). As a consequence, moral convictions might interfere with factual interpretations of the scientific evidence that is presented, leading to increased skepticism and rejection.

Finally, not only are some—or perhaps many—scientific and technological advances hard to reconcile with religious beliefs and values, ideology, and morality, but many of these advances are generally too complex to properly understand. Another reason for general science skepticism might therefore simply be a lack of knowledge among the public. Indeed, many people respect and distrust science and scientists at the same time (e.g., Fiske & Dupree, 2014; Rutjens & Heine, 2016). One observation that speaks to this ambivalence is that the beliefs and attitudes about (the safety of) science and technology held by the general public differ from those held by scientists. As a striking example of this discrepancy, a recent Pew survey reported that 88% of the surveyed scientists (vs. 37% of the public) viewed the consumption of GM foods as safe (Pew Research Center, 2015; see also Blancke, Van Breusegem, De Jaeger, Braeckman, & Van Montagu, 2015). This difference in safety perceptions is arguably caused by differences in knowledge; scientists trust science more than the general public does because they rely on different, more accurate, knowledge about—in this case—GM.

Taking all of the above into account, we can identify four predictors of science acceptance and rejection: Religiosity, political ideology, morality, and knowledge about science (i.e., literacy). However, most of these variables intercorrelate and are therefore potentially confounded. When not measuring all constructs simultaneously, it will be hard to properly assess what the predictive value of each of these is. As an example, if one line of research finds that political conservatism predicts science skepticism but no proper measure of religiosity is included, we cannot be sure what the actual ideological predictor (e.g., religious identity) or combination of predictors (e.g., politically conservative, but not liberal, self-identified religious believers) is. Likewise, when another line of research finds that concerns about moral purity lead to science rejection, this might well reflect underlying effects of political conservatism, or perhaps religious orthodoxy. In a similar vein, a certain level of particular knowledge about science and technology might be confounded with low religious belief or even with political liberalism. Also, one specific ideological motivator of science skepticism may be unique to one particular topic, as seems to be the case with political conservatism, which reliably predicts climate change skepticism but not GM food skepticism.

In short, a systematic investigation of the relative role of religious belief and political ideology—alongside morality and scientific literacy—in predicting belief in science and science skepticism is lacking, and the primary goal of the current research was to address this gap. We set out to scrutinize religious belief and identity, political ideology, and moral concerns as predictors of science acceptance and rejection, across different topics and using various measures (and including a scientific literacy test in the pilot study and Study 3). As these predictors are correlated and therefore potentially confounded, our goal in the current research was to gauge their relative predictive value for general belief in science, willingness to support science, and science skepticism in the fields of environmental science (climate change) and biomedical science (childhood vaccination and GM foods)—currently among science’s most controversial topics.

Overview of Current Research

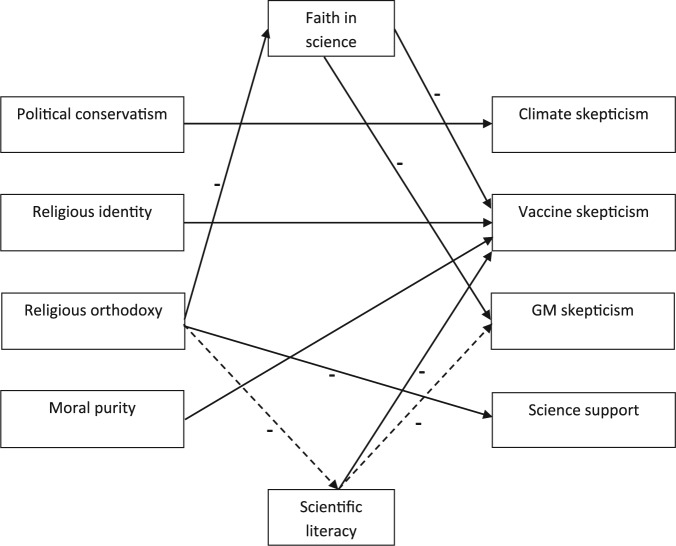

We report the results of three online studies, conducted on Amazon’s Mechanical Turk² (MTurk; total N = 445), and an analysis using existing data from the International Social Survey Program 2010 (ISSP Research Group, 2012; N = 1,430), that aimed to shed more light on the relative weight of the ideological antecedents of belief in science and science skepticism. An a priori power analysis for hierarchical multiple regression in which we set an average effect size of f² = .15, power = .80, alpha = .05 (number of predictors set to 12) yielded a recommended sample size of 103. We deliberately oversampled in Studies 1 and 3. Table 1 provides an overview of the key variables and Figure 1 provides an overview of the key findings.
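For orientation, the effect size used in the power analysis can be translated into explained variance. The conversion below uses the standard definition of Cohen's f² for a regression model; it is a worked illustration and not a computation reported in the studies.

$$
f^2 = \frac{R^2}{1 - R^2}
\quad\Longrightarrow\quad
R^2 = \frac{f^2}{1 + f^2} = \frac{0.15}{1.15} \approx 0.13
$$

In other words, the assumed effect size of f² = .15 corresponds to a model that explains roughly 13% of the variance in the outcome.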

Table 1.

Overview of Key Variables.

| Key predictors | Additional measures | Key dependent measures |
|---|---|---|
| Political conservatism | Demographics | Climate change skepticism |
| Religiosity | Scientific literacy (Study 3) | Vaccine skepticism |
| Morality (Studies 1 and 3) | | GM food skepticism (Studies 2-3) |
| | | Faith in science (Studies 1-3) |
| | | Science support (Studies 1 and 3) |

Note. GM = genetic modification.

Figure 1.

Overview of main findings across studies.

Note. Dashed line indicates single observation in Study 3. All other relations are observed in more than one study.

In a pilot study, we included often employed measures of science rejection (i.e., climate change and childhood vaccination skepticism³; Lewandowsky, Gignac, & Oberauer, 2013; Lewandowsky, Oberauer, & Gignac, 2013), a scientific literacy test (B. C. Hayes & Tariq, 2000; Kahan et al., 2012), and measures of political orientation and religious belief. In Study 1, we replaced the scientific literacy test with a measure of faith in science (Farias, Newheiser, Kahane, & de Toledo, 2013), added more fine-grained measures of political orientation and religious belief (i.e., religious orthodoxy), and included a measure gauging moral concerns (the Moral Foundations Questionnaire [MFQ]; Graham et al., 2009). Moreover, we devised a behavioral measure of science support. Here, participants were presented with a resource allocation task in which they could rearrange a “discretionary spending pie,” which included science (alongside military, transportation, and a number of other domains). This measure allowed participants to prioritize science by allocating federal funding to it. Study 2 aimed to conceptually replicate the results of Study 1 among a more general-population sample by performing secondary analyses on relevant variables from the ISSP 2010–Environment III dataset (ISSP Research Group, 2012). This preexisting dataset did not include a measure of vaccine skepticism, but did include a measure of GM food skepticism. Study 3 replicated and extended Studies 1 and 2, by including both a scientific literacy test and a faith in science measure, and more elaborate measures of climate change, childhood vaccination, and GM food skepticism (Lewandowsky, Gignac, & Oberauer, 2013). In all studies, we provide zero-order correlations and the results of hierarchical regression analyses on science skepticism, faith in science (Studies 1-3), and science support (Studies 1 and 3).

Pilot Study

Method

Participants (105 MTurk workers, 42 women; mean age = 30.19, SD = 8.73) were first asked to respond to four science rejection items (Lewandowsky, Gignac, & Oberauer, 2013; Lewandowsky, Oberauer, & Gignac, 2013). Two items reflected medical facts: “The HIV virus causes AIDS” and “Smoking causes lung cancer.” The other two items were more contentious: “Human CO2 emissions cause climate change” and “Vaccinations cause autism.” All items were scored on 7-point scales ranging from 1 (strongly disagree) to 7 (strongly agree). The first three items were reverse-scored, so that higher scores on all four items indicate stronger rejection of the scientific consensus. Participants then completed a scientific literacy test (nine true-false items, a maximum score of 9; α = .59;⁴ B. C. Hayes & Tariq, 2000; Kahan et al., 2012; see Appendix A).⁵ After completing an attention check (Oppenheimer et al., 2009), participants indicated their gender, age, nationality, occupation, religious identity (“Do you consider yourself to be a religious person?”), religious affiliation (i.e., denomination), belief in God (100-point slider scale ranging from not at all to very much), and political conservatism (100-point slider scale ranging from very liberal to very conservative).
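The reverse-scoring step described above can be illustrated with a minimal sketch. The item keys and the example responses are hypothetical and serve only to show the recoding; they are not taken from the study materials or data.

```python
# Minimal sketch: reverse-score 7-point agreement items so that higher
# values mean stronger rejection of the scientific claim.
# The item keys and the example responses are hypothetical.

SCALE_MIN, SCALE_MAX = 1, 7

# Items worded in line with the scientific consensus are reverse-scored;
# "Vaccinations cause autism" is already worded against it.
REVERSED_ITEMS = {"hiv_aids", "smoking_cancer", "co2_climate"}


def reverse_score(value: int) -> int:
    """Map 1..7 onto 7..1."""
    if not SCALE_MIN <= value <= SCALE_MAX:
        raise ValueError(f"response {value} is outside the 1-7 scale")
    return SCALE_MIN + SCALE_MAX - value


def rejection_scores(responses: dict[str, int]) -> dict[str, int]:
    """Return per-item rejection scores (higher = more skepticism)."""
    return {
        item: reverse_score(value) if item in REVERSED_ITEMS else value
        for item, value in responses.items()
    }


# One hypothetical participant who accepts all four consensus positions
# except for some doubt about anthropogenic climate change.
example = {"hiv_aids": 7, "smoking_cancer": 6, "co2_climate": 2, "vaccines_autism": 1}
scores = rejection_scores(example)
print(scores)
```

After recoding, a low score on every item indicates acceptance of the scientific position, which makes the four items directly comparable in the analyses.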

Results and Discussion

We used hierarchical regression analysis to assess which variables best predict science rejection. Controlling for age, gender, and profession, we entered political conservatism in Model 1, religiosity in Model 2, and scientific literacy in Model 3. For an overview of the means (SD), correlations, and regression tables, please see Appendix B. The results yielded a number of initial insights. Although all four science rejection items were statistically related to scientific literacy, they differed in important ways in terms of how well they were predicted by religious and political ideology. The publicly accepted medical facts that HIV causes AIDS and smoking causes lung cancer were not ideologically fueled. Rejection of anthropogenic climate change was best predicted by political conservatism (and scientific literacy), but not by religion (Model 3 explained 20% of the variance), F(6, 97) = 4.67, p < .001. In contrast, vaccine skepticism was clearly grounded in religious belief. Scientific literacy, however, was the strongest predictor of vaccine skepticism; together with religiosity, it accounted for 47% of the variance, F(6, 97) = 14.08, p < .001. Political conservatism was a weaker predictor⁶ of vaccine skepticism. This suggests that these two prominent forms of science rejection have different ideological antecedents.
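The logic of entering predictors in successive blocks can be sketched as follows. This is an illustration under stated assumptions, not the analysis script used in the studies: the data are synthetic, the demographic control block is omitted for brevity, and the function names (`solve`, `r_squared`, `hierarchical_r2`) are hypothetical helpers written for this example.

```python
import random


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def r_squared(y, predictors):
    """R-squared of an OLS regression of y on the predictor columns plus an intercept."""
    n = len(y)
    cols = [[1.0] * n] + predictors
    xtx = [[sum(u * v for u, v in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(u * v for u, v in zip(ci, y)) for ci in cols]
    beta = solve(xtx, xty)
    fitted = [sum(b * col[i] for b, col in zip(beta, cols)) for i in range(n)]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def hierarchical_r2(y, blocks):
    """Enter predictor blocks cumulatively; return (label, R2, delta R2) per step."""
    results, entered, previous = [], [], 0.0
    for label, columns in blocks:
        entered = entered + columns
        r2 = r_squared(y, entered)
        results.append((label, r2, r2 - previous))
        previous = r2
    return results


# Synthetic toy data with correlated predictors, NOT the study data.
rng = random.Random(0)
n_obs = 200
conservatism = [rng.gauss(0, 1) for _ in range(n_obs)]
religiosity = [0.4 * c + rng.gauss(0, 1) for c in conservatism]
literacy = [-0.3 * r + rng.gauss(0, 1) for r in religiosity]
skepticism = [0.2 * c + 0.3 * r - 0.5 * l + rng.gauss(0, 1)
              for c, r, l in zip(conservatism, religiosity, literacy)]

steps = hierarchical_r2(skepticism, [
    ("Model 1: conservatism", [conservatism]),
    ("Model 2: + religiosity", [religiosity]),
    ("Model 3: + literacy", [literacy]),
])
for label, r2, delta in steps:
    print(f"{label}: R2 = {r2:.3f}, delta R2 = {delta:.3f}")
```

Because the predictors are correlated, the increment attributed to each block depends on the order of entry, which is why the order of the models is reported alongside the results.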

Study 1

In Study 1, our aim was to build on the pilot study results and test the ideological antecedents of science acceptance and rejection with a larger sample and several additional variables. First, we included a measure of faith in science (Farias et al., 2013), which rather than focusing on skepticism about specific science findings taps into a more general belief in science and acceptance of the scientific method. Given that science and religion are perceived by many as competing ultimate explanations (e.g., Blancke et al., 2012; Farias, 2013; Preston & Epley, 2009), we expected that general faith in science would be best predicted by religious rather than political ideology. Furthermore, we expected to replicate the findings of the pilot study: Political conservatism best predicts climate change skepticism and religiosity best predicts vaccine skepticism (note that scientific literacy was not measured in Study 1⁷).

Second, we included the MFQ (Graham et al., 2009; Haidt & Graham, 2007; see also Rozin, Lowery, Imada, & Haidt, 1999; Scott et al., 2016) to assess whether, and to what extent, concerns about what is morally right and wrong underlie the rejection of science. Conservatives as well as highly religious individuals tend to emphasize traditional moral values (i.e., binding moral foundations), especially those that pertain to purity (Graham et al., 2009; Piazza & Sousa, 2014). Moreover, life sciences and their application, such as vaccination and GM, have been shown to flout purity concerns (e.g., Scott et al., 2016) and scientists have been shown to be associated in people’s minds with immoral conduct, especially pertaining to impurity (Rutjens & Heine, 2016; see also Fiske & Dupree, 2014). It remains to be seen, however, whether moral concerns about purity can help predict science rejection when competing for explained variance with political and religious ideology.

Third, we included a measure of religious orthodoxy to tap into religious conservatism (next to the religious identity and belief in God measures). Arguably, the incompatibility of science and religion should be particularly strong for the religious orthodox because orthodoxy implies viewing religion as the main source of truth (Clobert & Saroglou, 2015; Dawkins, 2006; Evans, 2011; Jensen, 1998, 2009; Rutjens et al., 2016). As such, we expected religious orthodoxy in particular to be a strong negative predictor of general faith in science.

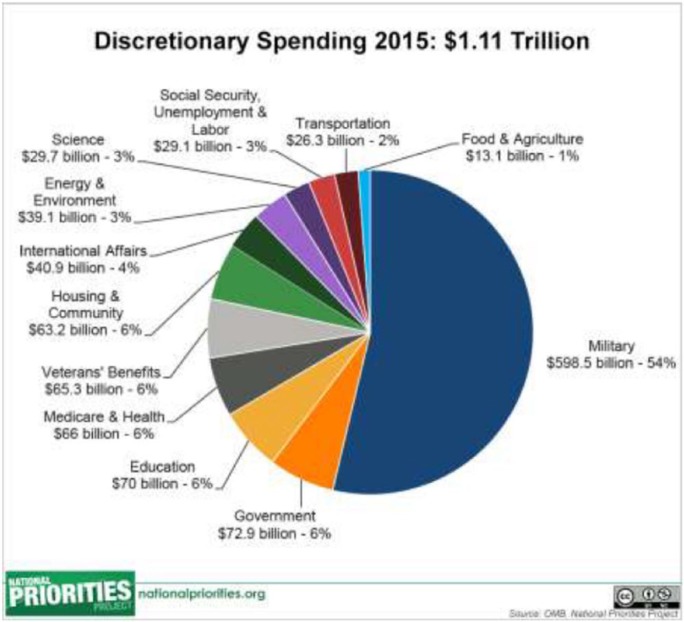

As a final addition, we included a behavioral measure of the willingness to support and prioritize science. We devised a resource allocation task for this purpose, in which participants could indicate their preference with regard to the distribution of federal spending budget, based on a “discretionary spending pie.” Science was one of the 12 spending areas for participants to take into consideration.

Method

Participants

A total of 203 MTurk workers participated in exchange for a monetary reward. Seventeen participants did not complete more than half of the study, and another 13 failed an attention check. They were excluded from analyses. The remaining 173 participants (70 women) had a mean age of 37.43 (SD = 11.76).

Procedure and materials

The study consisted of the following measures (see also Appendix A), and unless reported otherwise, all items were scored on 7-point scales ranging from 1 (strongly disagree) to 7 (strongly agree):

MFQ

Participants completed the moral judgments section of the MFQ (MFQ30-2; Graham et al., 2009). This questionnaire consists of 16 items of which 15 cover the five moral foundations of care/harm, fairness/cheating, loyalty/betrayal, authority/subversion, and purity/degradation, and one was a control item. All items were scored on 5-point scales ranging from 1 (strongly disagree) to 5 (strongly agree).

Rejection of science findings

We asked participants to respond to the same four items regarding HIV, smoking, climate change, and vaccinations as we used in the pilot study.

Faith in science

Participants completed a 5-item Faith in Science scale (Farias et al., 2013; B. C. Hayes & Tariq, 2000). An example item is “Science is the most efficient means of attaining truth” (α = .92).

Religion, political ideology, demographic variables

Participants were asked to indicate their gender, age, nationality, religious identity, religious affiliation, belief in God, and political orientation. In addition to the political conservatism slider item (see pilot study), we added two similar items asking for participants’ political outlook regarding social issues and economic issues separately (see Talhelm et al., 2015). Next, participants completed the Orthodoxy subscale of the Postcritical Belief Scale (Fontaine, Duriez, Luyten, & Hutsebaut, 2003) in which we embedded an attention check (Oppenheimer et al., 2009). Example items are “You can only live a meaningful life if you believe” and “I think that Bible stories should be taken literally, as they are written” (α = .93).

Science support

Finally, a pie chart was presented to participants (see Appendix D), with the accompanying instructions:

Below, you can view the “Discretionary Spending Pie” for the US in 2015. In the next and final part of the study, it is your job to rearrange the percentages to your own liking for the year 2016. [followed by] Below, we present these 12 spending areas in order of spending budget. It is up to you to indicate changes to the spending pie, by rearranging the order of areas. Which spending areas should be prioritized in 2016? You can drag and drop the different items to rearrange the order. (If you are fine with the current order, you can leave it exactly like this.)

Participants could rearrange the 12 spending areas to reflect their preferred order of prioritization. We looked at where participants placed science, which translated to a score on an interval scale ranging from 1 (highest funding allocation) to 12 (lowest funding allocation).
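The scoring of this task amounts to reading off the position of science in each participant's final ordering. A minimal sketch follows; apart from science, military, and transportation, the area labels are placeholders, and the presented order is hypothetical because the actual list appears only in Appendix D.

```python
# Hypothetical sketch of scoring the resource allocation task.
# Only "Science", "Military", and "Transportation" are named in the text;
# the remaining labels and the presented order are placeholders.

def science_rank(ordering: list[str], target: str = "Science") -> int:
    """Return the 1-based position of `target` in a participant's ordering.

    1 = highest priority, len(ordering) = lowest priority.
    """
    if len(set(ordering)) != len(ordering):
        raise ValueError("ordering contains duplicate areas")
    return ordering.index(target) + 1


areas = ["Military", "Area 2", "Area 3", "Transportation", "Area 5", "Area 6",
         "Science", "Area 8", "Area 9", "Area 10", "Area 11", "Area 12"]

# A participant who drags "Science" to the top and leaves the rest unchanged.
reordered = ["Science"] + [a for a in areas if a != "Science"]

print(science_rank(areas))      # position in the placeholder list as presented
print(science_rank(reordered))  # position after moving science to the top
```

Note that with this coding a higher score means a lower priority for science, so positive associations with the score indicate less willingness to support science.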

Results

Correlations

Table 2 provides an overview of focal zero-order correlations.

Table 2.

Correlations Between the Moral Purity Foundation, Climate Change Rejection and Belief in Vaccines–Autism Link, Faith in Science, Resource Allocation, and Political and Religious Variables, Study 1.

| | M (SD) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. MFQ: Purity/degradation | 3.00 (1.03) | – | | | | | | | | | | |
| 2. Climate change skepticism | 2.35 (1.64) | .22** | – | | | | | | | | | |
| 3. Vaccine skepticism | 2.10 (1.44) | .35** | .12 | – | | | | | | | | |
| 4. Faith in science | 4.85 (1.55) | −.42** | −.35** | −.30** | – | | | | | | | |
| 5. Political conservatism | 38.83 (27.57) | .27** | .27** | .13 | −.28** | – | | | | | | |
| 6. Conservatism: Social issues | 33.73 (26.77) | .43** | .31** | .21** | −.37** | .87** | – | | | | | |
| 7. Conservatism: Economic issues | 43.76 (29.21) | .16* | .24** | .03 | −.20** | .89** | .69** | – | | | | |
| 8. Belief in God | 45.52 (41.61) | .42** | .09 | .21** | −.58** | .24** | .37** | .14 | – | | | |
| 9. Religious orthodoxy | 2.57 (1.53) | .49** | .21** | .26** | −.61** | .35** | .47** | .18* | .12 | – | | |
| 10. Religious identity (1 = no; 2 = yes) | 36% yes | .37** | .11 | .09 | −.53** | .28** | .40** | .16* | .77** | .71** | – | |
| 11. Science funding allocation | 6.83 (2.87) | .37** | .13 | .26** | −.31** | .16* | .22** | .10 | .36** | .36** | .27** | – |

Note. Science funding allocation: Higher scores indicate a lower ranking of science on the resource allocation task. MFQ = Moral Foundations Questionnaire.

*p < .05. **p < .01.

Predictors of science rejection and faith in science

We used hierarchical regression analyses to assess which variables best predict faith in science, science skepticism, and science support. We included general demographic variables in Model 1 and added the moral foundations in Model 2, political conservatism in Model 3,⁸ religious identity and orthodoxy in Model 4, and faith in science in Model 5 (except in the regression analysis of faith in science). The final model of each set of analyses, depicting the key predictors, is presented in Table 3 (for the complete regression tables, see Appendix C, Tables C1-C4).

Table 3.

Final Models of Hierarchical Regression Analyses of Faith in Science, Climate Change Skepticism, Vaccine Skepticism, and Science Support, Study 1.

| | Faith in science | Climate change skepticism | Vaccine skepticism | Science funding allocation |
|---|---|---|---|---|
| | Adjusted R² = .42** | Adjusted R² = .16** | Adjusted R² = .16** | Adjusted R² = .22** |
| Purity | −.17* | .11 | .21* | .16 |
| Conservatism | −.05 | .23* | .08 | .04 |
| Religious identity | −.11 | .18 | .29** | .09 |
| Religious orthodoxy | −.43** | .00 | .14 | .21* |
| Faith in science | | −.34** | −.22* | −.06 |

Note. All analyses adjust for demographic variables and moral foundations scores. Science funding allocation: Higher scores indicate a lower ranking of science on the resource allocation task.

*p < .05. **p < .01.

Faith in science

We started by assessing whether faith in science is predicted by the demographic variables we included in the study. Age and gender alone already explained 10% of the variance, p < .001. Men reported more faith in science than women, and faith in science decreased slightly with age (see Appendix C, Table C1). This gender effect disappeared when controlling for the ideological variables entered next. In Model 2, adding the moral foundations increased explained variance to 22%, p < .001. The only moral foundations predictor was moral purity concerns, which negatively predicted faith in science, Beta = −.41. In Model 3, we added political conservatism, which increased the variance explained to 26%, p < .001. Adding religion (religious identity and orthodoxy) in Model 4, however, strongly increased explained variance to 42%, F(10, 171) = 13.55, p < .001. Religious orthodoxy was the strongest negative predictor of faith in science, Beta = −.43, p < .001, 95% confidence interval (CI) [−0.62, −0.25].

HIV and smoking

Skepticism about the link between HIV and AIDS and between smoking and lung cancer was not associated with any of the variables included in the study, except conservatism about social issues (r = .19 and .17, respectively; ps < .05). Dismissing the smoking–lung cancer link also weakly correlated with faith in science, r = −.17, p = .027.⁹

Climate change

Model 2 (moral foundations) was not significant, although concerns about harm/care and purity were significant predictors (see Appendix C, Table C2). Adding political conservatism in Model 3, however, increased explained variance to 10%, with conservatism being a significant predictor of climate change rejection (Beta = .24, p < .01, 95% CI [0.01, 0.03]) and rendering moral concerns no longer significant. Adding religiosity did not further contribute to the explained variance. However, faith in science accounted for an additional 6% of the variance; Model 5 best predicted rejection of anthropogenic climate change, F(11, 171) = 3.95, p < .001. Importantly, while faith in science contributed unique explained variance, adding it to the analyses did not meaningfully reduce the effect of political conservatism (see Appendix C, Table C2).

Vaccinations

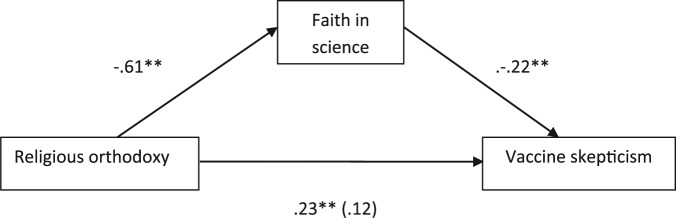

As in the pilot study, vaccine skepticism again yielded a different pattern of results than was the case for climate change skepticism. Here, purity concerns accounted for 11% of the variance, Beta = .27. Political conservatism did not contribute, but religious identity and orthodoxy did, accounting for an additional 2.4% of the variance in Model 4. Faith in science accounted for another 2.1% of the variance in Model 5, F(11, 171) = 3.86, p < .001. In this model, religious identity was the strongest predictor of vaccine skepticism (next to purity concerns and faith in science). Importantly, the effect of religious orthodoxy was no longer significant when faith in science was added (see Appendix C, Table C3). This suggests that while self-identified religious people in general have a problem with vaccines that cannot be attributed to a broader lack of faith in science, religious conservatives in particular are possibly skeptical about vaccines because of a broader distrust in science. A bootstrapping analysis (Model 4 of Process macro; Preacher & Hayes, 2004) of 5,000 samples indeed confirmed mediation of orthodoxy by faith in science, with an indirect effect of .13 (SE = .06) and a 95% CI of [.02, .26] (see Figure 2).

Figure 2.

Mediation of religious orthodoxy on vaccine skepticism by faith in science, Study 1.

*p < .05. **p < .01.
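The bootstrapped indirect effect reported for this mediation model can be sketched as follows. This is an illustration under stated assumptions rather than a reimplementation of the Process macro: it uses a simple percentile interval (the interval type is an assumption), the data are synthetic, and the helper names (`cov`, `indirect_effect`, `bootstrap_ci`) are hypothetical.

```python
import random


def cov(u, v):
    """Sample covariance of two equally long sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def indirect_effect(x, m, y):
    """a * b, where a is the slope of m on x and b the slope of y on m, adjusting for x."""
    vx, vm, cxm = cov(x, x), cov(m, m), cov(x, m)
    a = cxm / vx
    b = (cov(m, y) * vx - cov(x, y) * cxm) / (vx * vm - cxm ** 2)
    return a * b


def bootstrap_ci(x, m, y, n_boot=5000, level=0.95, seed=1):
    """Percentile bootstrap interval for the indirect effect (cases resampled with replacement)."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        estimates.append(indirect_effect([x[i] for i in idx],
                                         [m[i] for i in idx],
                                         [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[round((1 - level) / 2 * n_boot)]
    upper = estimates[round((1 + level) / 2 * n_boot) - 1]
    return lower, upper


# Synthetic toy data, NOT the study data: x plays the role of religious orthodoxy,
# m of faith in science, and y of vaccine skepticism.
rng = random.Random(42)
x = [rng.gauss(0, 1) for _ in range(100)]
m = [-0.6 * xi + rng.gauss(0, 1) for xi in x]
y = [-0.3 * mi + 0.1 * xi + rng.gauss(0, 1) for xi, mi in zip(x, m)]

point = indirect_effect(x, m, y)
lower, upper = bootstrap_ci(x, m, y)
print(f"indirect effect = {point:.3f}, 95% CI [{lower:.3f}, {upper:.3f}]")
```

Mediation is typically inferred when the bootstrap interval for the indirect effect excludes zero, which is the criterion applied to the interval reported above.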

Science support

The mean ranking of science on the resource allocation task was around the midpoint of the measure (which ranged from 1 to 12): 6.83 (SD = 2.87). Also, science funding allocation correlated with moral purity concerns, faith in science, political conservatism, and religion. When only the demographic variables were entered in the hierarchical regression analysis, gender was a significant predictor of allocation, explaining 13.7% of the variance. Adding the moral foundations in Model 2 increased explained variance to 20.5%, with purity concerns being the only moral foundation that predicted allocation, Beta = .25, p < .01, 95% CI [0.19, 1.18]. Adding political conservatism did not increase explained variance, but adding religious orthodoxy in Model 4 did. Faith in science (Model 5) did not further increase explained variance. Thus, the model that explained most of the variance (22%) was Model 4, F(10, 171) = 13.55, p < .001 (see Table 3 and Appendix C, Table C4). Controlling for all other variables, gender and religious orthodoxy were the only significant predictors of science funding allocation.¹⁰

Discussion

Study 1 replicated and extended the results of the pilot study. First, we again observed that HIV-AIDS and smoking-cancer rejection are not ideologically fueled. Second, we replicated the finding that climate change skepticism is best predicted by political conservatism. Although faith in science also contributed, it did not meaningfully reduce the effect of political conservatism. This suggests that political conservatives’ skepticism about climate change is not due to a more general distrust in science. Also consistent with the pilot study results, vaccine skepticism was best predicted by religious identity, moral purity concerns, and faith in science. Although religious participants were skeptical about vaccines, we also observed that the effect of religious orthodoxy specifically was reduced when including faith in science. This suggests that religious people are skeptical about vaccines, and that the more (religiously) conservative among them are skeptical because they maintain a low faith in science, which is indeed what we observed in the mediation analysis. Finally, whereas political conservatism was a weak predictor of vaccine skepticism in the pilot study, it was unrelated to vaccine skepticism in the current study.

Religious conservatives in particular have a low faith in science (see the beta weights in left column of Table 3): Orthodoxy was found to be the strongest negative predictor of faith in science, over and beyond political conservatism. In line with this, religious orthodoxy also was the strongest predictor of reduced willingness to support science; the higher participants scored on the orthodoxy measure, the lower they ranked science on the resource allocation task. As was the case with faith in science, the initial contribution of moral purity concerns was reduced when adding orthodoxy, and political conservatism did not contribute any meaningful variance.11

In sum, Study 1 showed that political conservatism was the best ideological predictor of climate change skepticism, while religious identity was the best ideological predictor of vaccine skepticism. General lack of faith in science—which in turn resulted in vaccine skepticism—and the (un)willingness to support science were best predicted by religious conservatism.

Study 2

Having established that different forms of science acceptance and rejection have different antecedents, we next sought to test whether this pattern of results generalizes beyond the MTurk population. To do so, we used data from the 2010 wave of the ISSP (Environment III) conducted in the United States (ISSP Research Group, 2012) to conceptually replicate the results obtained thus far by using a representative sample of the U.S. population. We identified measures of climate change skepticism, faith in science, as well as political conservatism and religiosity. In addition, the dataset contained a GM food skepticism measure. There were no items on vaccine skepticism, morality, and scientific literacy.

Method

We downloaded the dataset of the ZA5500: International Social Survey Programme: Environment III–ISSP 2010 at https://dbk.gesis.org/dbksearch/sdesc2.asp?ll=10&notabs=&af=&nf=&search=&search2=&db=e&no=5500. The U.S. data (N = 1,430; Mage = 48.08, SDage = 17.81; 823 women) were collected in early 2010 by the National Opinion Research Center (NORC–General Social Survey). There were no data exclusions.12 The following variables were identified as relevant to the purpose of the current research:

Science skepticism

Two items were identified as proxies of climate change and GM food skepticism, respectively: “In general, do you think that a rise in the world’s temperature caused by climate change is . . . ” and “And do you think that modifying the genes of certain crops is . . . ” Both items had an answer scale ranging from 1 (extremely dangerous for the environment) to 5 (not dangerous at all for the environment), and included a “can’t choose” option. Responses to the GM food item were reverse-scored so that higher scores signal greater skepticism on both items.
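The recoding described above can be illustrated with a minimal sketch. It is not the authors' analysis script: the numeric code used for the “can’t choose” option (`CANT_CHOOSE = 8`) and the helper name `score_item` are hypothetical, and the actual ISSP coding may differ.

```python
from typing import List, Optional

# Hypothetical numeric code for the "can't choose" option (an assumption;
# consult the ISSP codebook for the real value).
CANT_CHOOSE = 8


def score_item(raw: int, reverse: bool = False, low: int = 1, high: int = 5) -> Optional[int]:
    """Return a skepticism score for one response, or None if it is missing."""
    if raw == CANT_CHOOSE or not (low <= raw <= high):
        return None  # treat "can't choose" and out-of-range codes as missing
    # Reverse-scoring maps 1 -> 5, 2 -> 4, ..., 5 -> 1.
    return (low + high) - raw if reverse else raw


# Illustrative raw responses (1 = extremely dangerous ... 5 = not dangerous at all).
climate_raw: List[int] = [1, 5, 3, CANT_CHOOSE]
gm_raw: List[int] = [1, 5, 3, CANT_CHOOSE]

# Climate item: rating climate change as "not dangerous" already signals skepticism.
climate_skepticism = [score_item(r) for r in climate_raw]
# GM item: rating gene modification as "dangerous" signals skepticism, so reverse it.
gm_skepticism = [score_item(r, reverse=True) for r in gm_raw]
```

After recoding, higher values indicate more skepticism on both items, and missing responses can be dropped before analysis.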

Faith in science

Two items (r = .37) in the dataset were identified as measuring faith in science: “We believe too often in science, and not enough in feelings and faith” and “Overall, modern science does more harm than good.” Respondents were asked to indicate their agreement on a scale ranging from 1 (agree strongly) to 5 (disagree strongly). There was also a “can’t choose” option.

Religion, political ideology, demographic variables

In addition to respondents’ age and gender, political preference was measured on a 5-point scale consisting of the following response options: 1 (far left), 2 (left/center left), 3 (center/liberal), 4 (right/conservative), and 5 (far right). In total, 500 respondents identified as left/center left, 561 as center/liberal, and 322 as right/conservative. A total of 35 respondents ticked other/no specification. Religious denomination was measured (including “no religion”). As no measure of religious orthodoxy was included, we looked at frequency of religious service attendance as a proxy. Attendance frequency was measured with the item “How often do you attend religious services,” with the following response options: 1 (several times a week or more), 2 (once a week), 3 (2 or 3 times a month), 4 (once a month), 5 (several times a year), 6 (once a year), 7 (less frequently than once a year), and 8 (never). Mean response was 4.67 (SD = 2.46).

Results

Zero-order correlations can be found in Table 4. As in Study 1, we used hierarchical regression analyses to assess which variables best predict faith in science and science skepticism. Model 1 included general demographic variables; we added political ideology in Model 2, religious denomination (dichotomized to no religion vs. religion) and religious attendance in Model 3, and faith in science in Model 4 (except in the regression analysis of faith in science). The final models are depicted in Table 5 (see Appendix C, Tables C5–C7, for the complete regression analyses).
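To make the block-entry logic concrete, the following sketch fits nested ordinary least squares models and reports the change in explained variance at each step. It is an illustration on synthetic data, not the ISSP analysis: the variable names, the simulated effect sizes, and the helpers `solve` and `r_squared` are all assumptions introduced here.

```python
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def r_squared(predictors, y):
    """R^2 of an OLS fit of y on the given predictor columns plus an intercept."""
    n = len(y)
    cols = [[1.0] * n] + [list(p) for p in predictors]
    xtx = [[sum(u * v for u, v in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(u * v for u, v in zip(ci, y)) for ci in cols]
    beta = solve(xtx, xty)
    fitted = [sum(b * c[i] for b, c in zip(beta, cols)) for i in range(n)]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Synthetic data (assumption): skepticism depends mainly on conservatism.
rng = random.Random(0)
n = 500
age = [rng.gauss(0, 1) for _ in range(n)]
gender = [float(rng.randint(0, 1)) for _ in range(n)]
conservatism = [rng.gauss(0, 1) for _ in range(n)]
attendance = [rng.gauss(0, 1) for _ in range(n)]
skepticism = [0.1 * a + 0.5 * c + rng.gauss(0, 1) for a, c in zip(age, conservatism)]

# Enter predictor blocks cumulatively and track R^2 after each step.
blocks = [
    ("Model 1: demographics", [age, gender]),
    ("Model 2: + political ideology", [conservatism]),
    ("Model 3: + religiosity", [attendance]),
]
entered, r2_by_model = [], []
for label, block in blocks:
    entered += block
    r2_by_model.append(r_squared(entered, skepticism))

# Change in R^2 contributed by each block (the first entry is Model 1's R^2).
delta_r2 = [r2_by_model[0]] + [b - a for a, b in zip(r2_by_model, r2_by_model[1:])]
```

Because the models are nested, R² cannot decrease when a block is added; the question of interest is whether the increase is meaningful, which in practice is assessed with an F test on the change.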

Table 4.

Correlations Matrix, Study 2.

| Variable | M (SD) | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|
| 1. Climate change skepticism | 2.20 (0.90) | — | | | | | |
| 2. GM skepticism | 2.53 (1.08) | −.30** | — | | | | |
| 3. Faith in science | 3.09 (0.92) | −.08** | −.19** | — | | | |
| 4. Political conservatism | 4.38 (2.06) | .27** | −.10** | −.01 | — | | |
| 5. Religious denomination (1 = no; 2 = yes) | 81.4% yes | .12** | −.01 | −.24** | .05 | — | |
| 6. Religious orthodoxy | 2.56 (2.33) | .08** | −.04 | −.26** | .05 | .48** | — |

Note. GM = genetic modification.

**p < .01.

Table 5.

Final Models of Hierarchical Regression Analyses of Faith in Science, Climate Change Skepticism, and GM Skepticism, Study 2.

| Predictor | Faith in science | Climate change skepticism | GM skepticism |
|---|---|---|---|
| Adjusted R² | .10** | .10** | .07** |
| Political conservatism | −.01 | .26** | −.08** |
| Religious denomination | −.16** | .08* | −.06 |
| Religious orthodoxy | −.19** | .01 | −.00 |
| Faith in science | | −.06* | −.19** |

Note. All analyses adjust for age and gender. Religious orthodoxy was measured with an item gauging religious attendance frequency. GM = genetic modification.

*p < .05. **p < .01.

Climate change

Model 3 explained 9.2% of the variance, F(3, 1297) = 44.80, p < .001. Political conservatism (alongside age) was a significant predictor of climate change skepticism, Beta = .27, p < .001, 95% CI [0.28, 0.42]. Adding religious denomination, religious attendance frequency, and faith in science did not lead to meaningful increases in explained variance.

GM food

Model 4 explained 6.6% of the variance, F(6, 1162) = 14.70, p < .001. Political conservatism weakly contributed to the explained variance (with conservatives being slightly less skeptical), while religious denomination and religious attendance frequency did not predict GM food skepticism. Faith in science (alongside small effects of gender and age) was a significant predictor, Beta = −.19, p < .001, 95% CI [−0.29, −0.15].

Faith in science

Model 3 explained 9.6% of the variance, F(5, 1383) = 30.23, p < .001. As can be seen in Table 5, the only significant predictors (alongside small effects of gender and age) were religious denomination (Beta = −.16, p < .001, 95% CI [−0.50, −0.24]) and religious attendance frequency, Beta = .19, p < .001, 95% CI [0.05, 0.09] (note that lower scores indicate higher attendance rates).

Discussion

Although the ISSP dataset did not include all measures of interest to the current project, with the data available, we were able to conceptually replicate the findings of Study 1 among a large, representative sample of the U.S. general population. Again, climate change skepticism was found to be primarily political, with religiosity playing no meaningful role. Moreover, faith in science was best predicted by religious orthodoxy, using a measure gauging frequency of religious attendance. Finally, we also found that GM food skepticism was best predicted by faith in science, and not religious or political ideology (political conservatism had a small negative effect).

Study 3

A final study sought to integrate and extend the first two studies. The design was similar to that of Study 1, with the following changes: First, alongside the faith in science measure, we also reintroduced the scientific literacy test from the pilot study. This way, we were able to directly distinguish lack of science literacy (knowledge) from lack of science trust (faith) in predicting science skepticism and support. Second, we replaced the single-item skepticism items used so far with more elaborate scales, targeting skepticism of climate change, vaccines, and GM food.

Method

Participants

A total of 194 MTurk workers participated in exchange for a monetary reward. In all, 21 participants failed the instructional attention check, and another six participants did not complete the study. These participants were excluded from analyses. The remaining 167 participants (73 women) had a mean age of 35.80 (SD = 10.67).

Procedure and materials

The study consisted of the following measures (also see Appendix A):

MFQ

Participants completed the moral judgments section of the MFQ (MFQ30-2; Graham et al., 2009), identical to Study 1.

Scientific literacy

Participants then continued with the same scientific literacy test as used in the pilot study (with a maximum score of 9; α = .59; Kahan et al., 2012).

Science skepticism

Next, we presented participants with three science rejection scales (Lewandowsky, Gignac, & Oberauer, 2013), each consisting of five items: Climate Change Skepticism (e.g., “I believe that the climate is always changing and what we are currently observing is just natural fluctuation”; α = .88); Vaccination Skepticism (e.g., “The risk of vaccinations to maim and kill children outweighs their health benefits”; α = .88) and GM Food Skepticism (e.g., “I believe that because there are so many unknowns, it is dangerous to manipulate the natural genetic material of foods”; α = .91). All items were scored on 7-point scales ranging from 1 (strongly disagree) to 7 (strongly agree).
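For readers unfamiliar with the reliability coefficient reported for each scale, the sketch below computes Cronbach's α from item-level responses. The response matrix is made up for illustration and the function name `cronbach_alpha` is introduced here; it assumes that any reverse-keyed items have already been recoded so that all items point in the same direction.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of item columns (same respondents, same order).

    Assumes reverse-keyed items were recoded beforehand.
    """
    k = len(items)
    totals = [sum(responses) for responses in zip(*items)]  # scale total per respondent
    item_variance_sum = sum(variance(col) for col in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Made-up 7-point responses: rows are respondents, columns are five scale items.
responses = [
    [1, 2, 2, 1, 2],
    [4, 5, 4, 4, 5],
    [7, 6, 7, 7, 6],
    [3, 3, 4, 3, 3],
    [5, 5, 6, 5, 5],
    [2, 1, 2, 2, 1],
]
item_columns = [list(col) for col in zip(*responses)]
alpha = cronbach_alpha(item_columns)
```

α approaches 1 when respondents who score high on one item also score high on the others, which is why it is read as an index of internal consistency.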

Faith in science

Participants completed the 5-item Faith in Science scale (Farias et al., 2013; B. C. Hayes & Tarick, 2000) as in Study 1 (α = .91). The order of presentation of the rejection of science findings and Faith in Science scale was counterbalanced.

Religion, political ideology, demographic variables

As in Study 1, participants were asked to indicate their gender, age, nationality, religious identity, religious affiliation, belief in God, and political conservatism. They also completed the Orthodoxy subscale of the Postcritical Belief Scale again (α = .94), in which we embedded an attention check.

Science support

Finally, the same13 resource allocation task as employed in Study 1 was presented. Again, participants were asked to rearrange 12 spending areas to reflect their preferred order of prioritization, with science being our target area of interest (ranking range from 1 to 12).

Results

Correlations

Table 6 provides an overview of correlations.

Table 6.

Correlation Matrix, Study 3.

| Variable | M (SD) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. MFQ: Purity/degradation | 3.15 (1.12) | — | | | | | | | | | | |
| 2. Climate change skepticism | 2.89 (1.76) | .38** | — | | | | | | | | | |
| 3. Vaccine skepticism | 2.57 (1.48) | .25** | .41** | — | | | | | | | | |
| 4. GM skepticism | 3.85 (1.61) | .20** | .23** | .58** | — | | | | | | | |
| 5. Faith in science | 4.58 (1.64) | −.40** | −.40** | −.30** | −.35** | — | | | | | | |
| 6. Political conservatism | 42.15 (31.29) | .36** | .49** | .11 | .16* | −.41** | — | | | | | |
| 7. Belief in God | 48.48 (43.07) | .56** | .29** | .17* | .21** | −.66** | .34** | — | | | | |
| 8. Religious identity (1 = no; 2 = yes) | 42.5% yes | .53** | .26** | .13 | .12 | −.60** | .30** | .80** | — | | | |
| 9. Religious orthodoxy | 2.95 (1.72) | .62** | .40** | .25** | .15 | −.58** | .33** | .71** | .71** | — | | |
| 10. Scientific literacy | 7.01 (1.77) | −.37** | −.18* | −.23** | −.20* | .22** | −.16* | −.39** | −.32** | −.46** | — | |
| 11. Science funding allocation | 6.80 (3.04) | .32** | .28** | .13 | .27** | −.43** | .37** | .42** | .41** | .46** | −.28** | — |

Note. Science funding allocation: Higher scores indicate a lower ranking of science on the resource allocation task. MFQ = Moral Foundations Questionnaire; GM = genetic modification.

*p < .05. **p < .01.

Hierarchical regression analyses

We again used hierarchical regression analyses to assess which variables best predict our variables of interest. In the current study, we investigated climate change skepticism, vaccination skepticism, GM food skepticism, scientific literacy, faith in science, and resource allocation to science. In addition to demographics, moral foundations were included in Model 2, political conservatism in Model 3, religious identity and orthodoxy in Model 4, and faith in science and scientific literacy in Model 5 (which only applied to analyses on skepticism and science support); see Table 7 (and Appendix C, Tables C8–C13).

Table 7.

Final Model of Hierarchical Regression Analyses of Scientific Literacy, Faith in Science, Climate Change Skepticism, Vaccine Skepticism, GM Skepticism, and Science Support, Study 3.

| Predictor | Scientific literacy | Faith in science | Climate change skepticism | Vaccine skepticism | GM skepticism | Science funding allocation |
|---|---|---|---|---|---|---|
| Adjusted R² | .25** | .48** | .37** | .15** | .19** | .31** |
| Purity | −.12 | −.02 | .24* | .33** | .16 | −.18 |
| Conservatism | −.04 | −.19** | .27** | −.06 | .06 | .21* |
| Religious identity | −.01 | −.30** | .21* | .22* | .21† | .06 |
| Religious orthodoxy | −.36** | −.32** | .24* | .12 | −.12 | .19† |
| Faith in science | | | −.11 | −.24* | −.38** | −.19* |
| Scientific literacy | | | −.01 | −.19* | −.18* | −.06 |

Note. All analyses adjust for demographic variables and moral foundations scores. Scientific literacy did not contribute to the explained variance of faith in science and vice versa; thus, for these variables, the final model is omitted from this table. Science funding allocation: Higher scores indicate a lower ranking of science on the resource allocation task. GM = genetic modification.

†p < .10. *p < .05. **p < .01.

Scientific literacy

Model 4 explained the most variance, F(10, 166) = 6.44, p < .001, with religious orthodoxy (alongside age) as the only significant predictor of literacy, Beta = −.36, p < .001, 95% CI [−0.56, −0.15]. Although faith in science correlated with literacy, it did not contribute unique explained variance.

Science skepticism

Results show that political conservatism is again the strongest predictor of climate change skepticism; Model 3, F(10, 166) = 10.63, p < .001. Faith in science did not explain additional variance. In contrast to Studies 1 and 2, religious identity, orthodoxy, and moral purity explained additional variance over and beyond political ideology.

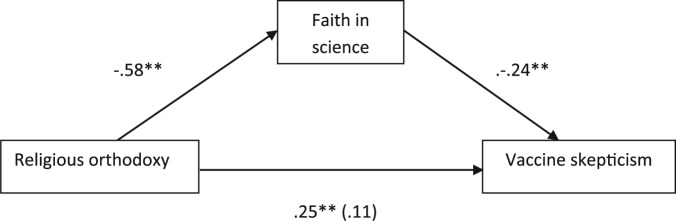

Mirroring the results of Study 1, vaccination skepticism was best predicted by moral purity concerns, religious identity, and faith in science, while political conservatism again played no additional role, Model 5, F(12, 166) = 3.46, p < .001. Importantly, we again found that the effect of religious conservatism (i.e., orthodoxy) on vaccine skepticism was mediated by low faith in science. A bootstrapping analysis of 5,000 samples (Process macro 4; A. F. Hayes, 2012) indeed confirmed mediation of orthodoxy by faith in science, with an indirect effect of .12 (SE = .08) with a 95% CI of [.01, .24]; see Figure 3. Finally, as in the pilot study, scientific literacy also helped predict vaccine skepticism.

Figure 3.

Mediation of religious orthodoxy on vaccine skepticism by faith in science, Study 3.

*p < .05. **p < .01.
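The logic of the bootstrapped mediation test can be sketched as follows. This is not the PROCESS macro itself but a minimal percentile-bootstrap illustration on synthetic data; the simulated path strengths, the sample size, and the function names `indirect_effect` and `bootstrap_ci` are assumptions made for the example.

```python
import random


def _cov(u, v):
    """Sample covariance of two equally long sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def indirect_effect(x, m, y):
    """Indirect effect a*b in the simple mediation model x -> m -> y (OLS paths)."""
    sxx, smm, sxm = _cov(x, x), _cov(m, m), _cov(x, m)
    sxy, smy = _cov(x, y), _cov(m, y)
    a = sxm / sxx  # path a: mediator regressed on predictor
    b = (smy * sxx - sxy * sxm) / (smm * sxx - sxm ** 2)  # path b: mediator -> outcome, controlling for x
    return a * b


def bootstrap_ci(x, m, y, n_boot=5000, alpha=0.05, seed=1):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample respondents with replacement
        estimates.append(
            indirect_effect([x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx])
        )
    estimates.sort()
    lo_idx = int(n_boot * alpha / 2)
    return estimates[lo_idx], estimates[n_boot - 1 - lo_idx]


# Synthetic data (assumption): orthodoxy lowers faith in science,
# and lower faith in science raises vaccine skepticism.
rng = random.Random(42)
orthodoxy = [rng.gauss(0, 1) for _ in range(200)]
faith = [-0.6 * o + rng.gauss(0, 1) for o in orthodoxy]
skepticism = [-0.5 * f + 0.1 * o + rng.gauss(0, 1) for f, o in zip(faith, orthodoxy)]

point_estimate = indirect_effect(orthodoxy, faith, skepticism)
ci_low, ci_high = bootstrap_ci(orthodoxy, faith, skepticism)
```

Mediation is inferred when the confidence interval for the indirect effect excludes zero, which is the criterion applied to the interval reported above.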

GM food skepticism, in turn, was not predicted by religious and political ideology, but primarily by faith in science (in addition to scientific literacy and gender; see Appendix C, Table C12). Moral purity concerns played a marginal role; Model 5, F(12, 166) = 4.24, p < .001. Thus, faith in science and the scientific method, as well as, to a lesser extent, scientific literacy, were negatively related to GM food skepticism. Women were more skeptical of GM food than men.14

Faith in science

Model 4 explained the most variance, F(10, 166) = 16.18, p < .001, with religious orthodoxy again being the strongest predictor, Beta = −.32, p < .001, 95% CI [−0.47, −0.14]. In contrast to Study 1, religious identity and political conservatism also contributed to the explained variance. Scientific literacy did not explain additional variance.

Science support

In predicting the allocation of resources to science, Model 5 explained the highest proportion of variance, F(12, 166) = 7.27, p < .001. Religious orthodoxy was again a predictor of science support, albeit only marginally, and weaker than in Study 1 (Beta = .19, p = .095, 95% CI [−0.06, 0.71]). In addition, political conservatism (Beta = .21, p < .001, 95% CI [0.01, 0.04]) and faith in science (Beta = −.19, p = .04, 95% CI [−0.69, −0.01]) were significant predictors in this model, which we did not observe in Study 1. Also, gender was again a significant predictor, although gender and age alone explained less variance than in Study 1 (3.8%). Contrary to Study 1, we also observed a role for moral concerns; fairness and authority negatively predicted science support (see Appendix C, Table C13).

Discussion

The goal of Study 3 was to replicate and extend Studies 1 and 2, using more elaborate science skepticism measures. Moreover, Study 3 contained measures of both faith in science and scientific literacy, with the goal of directly comparing their impact on science skepticism. Results largely replicated our Studies 1 and 2 findings. Again, climate change skepticism was best predicted by political conservatism, which was not due to a general distrust in science. However, in contrast to Studies 1 and 2, religious identity and orthodoxy also had some predictive value. Vaccination skepticism was, again, predicted by purity concerns, religious identity, and low faith in science. Similar to Study 1, we found that while religious participants were wary of vaccines in general, the orthodox among them seem to be particularly wary of vaccines because they distrust science in general. Scientific literacy also played a role; as in the pilot study, lower literacy was associated with more vaccination skepticism. Regarding GM food skepticism, we replicated the results obtained in Study 2: Low faith in science and low scientific literacy, alongside gender, best predicted GM food skepticism. Religious and political conservatism played no meaningful role in predicting GM food skepticism, although we did find a marginal effect of religious identity.

Religious orthodoxy was again the strongest predictor of faith in science; adding religiosity to the demographic variables and political ideology led to a dramatic increase in explained variance (~20%). This mirrors the results of Studies 1 and 2, and confirms the aforementioned notion that scientific explanations and religious explanations are perceived by many—particularly the orthodox—as competing explananda (e.g., Blancke et al., 2012; Farias, 2013; Jensen, 1998, 2009; Preston & Epley, 2009; Rutjens et al., 2016).

Compared with the results of Study 1, a slightly different, more complex picture emerged for the behavioral measure of science support. Rather than religious orthodoxy being the sole ideological predictor of reduced science support (see Study 1), the current study found that political conservatism and low faith in science also helped predict, to a similar extent, decreased prioritization of science funding. In addition, we found that fairness and authority concerns also were associated with reduced science support. In other words, the more value was attached to bolstering authority and fairness, the lower science was ranked. Bivariate correlations show that all three binding moral foundations correlated positively with reduced science support, which is in line with previous work on evaluations of scientists (Rutjens & Heine, 2016). When controlling for demographics and political and religious ideology, however, fairness and authority concerns remain associated with science support. It is likely that respondents who score high on these measures are more inclined to prioritize other areas (e.g., social security, military) at the expense of science.

The fact that, in contrast to the previous studies, both political conservatism and religious orthodoxy helped predict climate change skepticism and science support in this study could be the result of a temporal fusion of religious and political conservatism. Although a speculative point, the fact that this study was run on the day before the inauguration of Trump as president of the United States (while data for Studies 1 and 2 were collected in early 2016 and 2010, respectively) might signal such a fusion of political ideology and religiosity, which has been argued to increase in times of political uncertainty (Shepherd, Eibach, & Kay, 2017).

General Discussion

Religion and science have repeatedly clashed in the course of modern history. It has been argued that attitudes toward science are becoming more polarized (e.g., Gauchat, 2012; Pittinsky, 2015; Rutjens et al., in press; see also Nature Editorial, 2017) and it is therefore important to gain more insight into the ideological predictors of modern science skepticism. Research investigating the antecedents of science skepticism indeed has made important strides in recent years (e.g., Hornsey & Fielding, 2017; Hornsey et al., 2016; Kahan, 2015; Lewandowsky & Oberauer, 2016). However, one construct that has been given surprisingly limited attention in these endeavors is religiosity. The current work aimed to address this lacuna by systematically scrutinizing religiosity alongside political ideology and morality, while controlling for faith in science and science understanding (i.e., literacy). By simultaneously including the aforementioned constructs and employing various measures and operationalizations of belief in science and science skepticism across different topics, our aim was to shed light on the relative predictive value of political conservatism, religiosity, and morality. Taken together, our results suggest that, with the exception of climate change and GM food skepticism, religiosity plays a pivotal role in predicting science acceptance and rejection. Vaccine skepticism, general faith in science, and the willingness to support science were across studies best predicted by religiosity, over and beyond political ideology, moral concerns, and scientific literacy.

Besides identifying religious identity and religious orthodoxy as being reliably associated with science acceptance and rejection, these results point to the heterogeneous nature of science skepticism. Corroborating previous empirical work (e.g., Lewandowsky, Gignac, & Oberauer, 2013) and a recent meta-analysis (Hornsey et al., 2016), climate change skepticism was consistently found to be best predicted by political ideology. Moreover, we also found that political conservatives’ particular skepticism about climate change could not be attributed to a more general distrust in science. Instead, it is perhaps more likely that our data reflect the argumentation that conservatives worry about the economic and political ramifications of climate science (Lewandowsky, Gignac, & Oberauer, 2013; Lewandowsky & Oberauer, 2016). In contrast, political conservatism did not affect vaccine skepticism: Here, moral purity concerns and religious identity were consistent predictors. In addition, among the religious respondents, religious conservatives were found to be skeptical about vaccines because of a general distrust (i.e., lack of faith) in science. Faith in science may reflect a more existential worldview that is hard to reconcile with orthodoxy in particular (Evans, 2011; Farias et al., 2013; Rutjens et al., 2016). Indeed, the current results also revealed a relatively consistent pattern of religious orthodoxy as the main driver of low faith in science and the unwillingness to support science. In contrast, another contentious topic in the biomedical sciences was not found to be fueled by political or religious belief; GM food skepticism was best predicted by faith in science and knowledge about science. In other words, unlike climate change and vaccine skepticism, GM food skepticism is not driven by political or religious beliefs.

Another way of looking at the antecedents of science acceptance is to pit knowledge constraints (science literacy) against ideological constraints (religious and political convictions).15 Having the background to understand science may help predict overall science acceptance, while ideology differentially predicts acceptance of specific scientific findings. Our data offer insight into this possibility. Indeed, across studies, we find that scientific literacy helps predict both vaccine and GM food skepticism; however, this is not the case for climate science skepticism and general science support (see Figure 1). These findings help formulate recommendations to increase public acceptance of science. First, our findings suggest that to boost acceptance of GM food, it would help to improve public understanding of science. Second, this recommendation may to some extent hold for acceptance of childhood vaccination as well, although based on the predictive power of religiosity, there is more to boosting vaccine acceptance than merely improving scientific literacy. In contrast, to combat climate science skepticism, enhancing literacy may not be very useful at all (and could even backfire; see Drummond & Fischhoff, 2017). Finally, willingness to support science more generally also seems unlikely to increase as a result of merely enhancing public science understanding.

The modest to absent role of political ideology in predicting vaccine and GM food skepticism mirrors earlier work (Kahan, 2015; Lewandowsky, Gignac, & Oberauer, 2013; Scott et al., 2016), and the current research sheds light on which constructs are better suited to predict acceptance of these scientific findings. However, it also highlights the problem of the potentially confounded nature of three overlapping predictors: political ideology, religiosity, and morality. As one example, a visual inspection of the zero-order correlations suggests a relation between political conservatism and vaccine skepticism in the pilot study and Study 1, but the results of Study 1 show that adjusting for moral purity concerns alone is enough to nullify this correlation. In a similar vein, any ideological correlate with GM food skepticism observed in Study 3 disappeared once faith in science was controlled for. As another example, moral purity concerns correlate with all variables included in the correlation matrices in Studies 1 and 3, but the relative predictive value of moral purity is reduced once orthodoxy is controlled for. To distill these examples to one focal point, the zero-order correlations in these data do little to reveal the nature of science acceptance and rejection, as most variables intercorrelate, yet crucially some predictors disappear when controlling for others. This corroborates our reasoning that it is essential to include the full range of ideological, moral, and literacy measures and gauge their relative weight when investigating science skepticism.

It is important to note that the current work is correlational, and we therefore have to be careful about inferring causality. That said, it is unlikely that people become more religious or conservative as a result of skepticism about science. Our aim was to investigate how (relatively stable) ideological differences in religiosity and political orientation impact science acceptance and rejection.

Another important consideration is that the current studies focused exclusively on samples of North American participants. Studies 1 and 3 were conducted using North American participants on MTurk, a pool of online workers that is not directly representative of any specific segment of any specific population, but is more diverse in terms of demographic background than, for example, the population of North American undergraduate students (Mason & Suri, 2012). The results of Study 2, however, which used data from a representative sample of the U.S. population, increase confidence in the generalizability of our results to at least the population of U.S. adults. It remains to be seen whether the current results will generalize to other populations, for example, secular European countries such as the Netherlands, France, or Denmark.

To sum up the current findings, in four studies, both political conservatism and religiosity independently predict science skepticism and rejection. Climate skepticism was consistently predicted by political conservatism, vaccine skepticism was consistently predicted by religiosity, and GM food skepticism was consistently predicted by low faith in science and knowledge of science. General low faith in science and unwillingness to support science in turn were primarily associated with religiosity, in particular religious conservatism. Thus, different forms of science acceptance and rejection have different ideological roots, although the case could be made that these are generally grounded in conservatism.

Coda

A recent editorial in the prestigious science journal Nature (Nature Editorial, 2017) argued for a more nuanced view on modern anti-science sentiments, given that science is not a single entity that people are either for or against. Speaking to this view, the current article extends the statement that “science does not speak with a single voice” (p. 134) to science skepticism, which—like science itself—is a more heterogeneous phenomenon than previously assumed.

Supplementary Material

Appendix A

Scales

Scientific literacy items (pilot study and Study 3)

All items answered true or false

The center of the Earth is very hot.

All radioactivity is made by humans.

Lasers work by focusing sound waves.

Electrons are smaller than atoms.

It is the father’s gene that decides whether the baby is a boy or a girl.

Antibiotics kill viruses as well as bacteria.

All human-made chemicals can cause cancer.

Astrology has some scientific truth.

Humans developed from animals.
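As an illustration of how the nine true/false items yield the 0 to 9 literacy score, the sketch below counts correct answers against an answer key. The key is not printed in this appendix; the one used here reflects the standard scientific answers to each statement and is supplied as an assumption, as is the function name `literacy_score`.

```python
# Assumed answer key, in the order the items are listed above
# (True = the statement is correct). Not taken from the appendix itself.
ANSWER_KEY = [
    True,   # The center of the Earth is very hot.
    False,  # All radioactivity is made by humans.
    False,  # Lasers work by focusing sound waves.
    True,   # Electrons are smaller than atoms.
    True,   # It is the father's gene that decides the baby's sex.
    False,  # Antibiotics kill viruses as well as bacteria.
    False,  # All human-made chemicals can cause cancer.
    False,  # Astrology has some scientific truth.
    True,   # Humans developed from animals.
]


def literacy_score(responses):
    """Number of items answered in line with the key (0 to 9)."""
    if len(responses) != len(ANSWER_KEY):
        raise ValueError("expected one true/false response per item")
    return sum(given == correct for given, correct in zip(responses, ANSWER_KEY))


# Example: a respondent who answers "true" to every item.
all_true_score = literacy_score([True] * 9)
```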

Science skepticism scales (Study 3)

All items answered on scales ranging from 1 (strongly disagree) to 7 (strongly agree)

Climate

I believe that the climate is always changing and what we are currently observing is just natural fluctuation.

I believe that most of the warming over the last 50 years is due to the increase in greenhouse gas concentrations.

I believe that the burning of fossil fuels over the last 50 years has caused serious damage to the planet’s climate.

Human CO2 emissions cause climate change.

Humans are too insignificant to have an appreciable impact on global temperature.

Genetic modification (GM) food

I believe that GM is an important and viable contribution to help feed the world’s rapidly growing population.

I believe genetically engineered foods have already damaged the environment.

The consequences of GM have been tested exhaustively in the lab, and only foods that have been found safe will be made available to the public.

I believe that because there are so many unknowns, that it is dangerous to manipulate the natural genetic material of foods.

GM of foods is a safe and reliable technology.

Vaccine

I believe that vaccines are a safe and reliable way to help avert the spread of preventable diseases.

I believe that vaccines have negative side effects that outweigh the benefits of vaccination for children.

Vaccines are thoroughly tested in the laboratory and wouldn’t be made available to the public unless it was known that they are safe.

The risk of vaccinations to maim and kill children outweighs their health benefits.

Vaccinations are one of the most significant contributions to public health.
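As an illustration of how a five-item scale of this kind might be turned into a single skepticism score, the following Python sketch reverse-keys acceptance-worded items and averages the result. It rests on assumptions that the appendix does not state: that acceptance-worded items are reverse-keyed so that higher scores mean more skepticism, and that the scale score is the item mean. The function names and the choice of which items to reverse are hypothetical.

```python
from statistics import mean

SCALE_MIN = 1  # strongly disagree
SCALE_MAX = 7  # strongly agree


def reverse(score):
    """Reverse-key a single response on the 1-7 scale (7 -> 1, 6 -> 2, ...)."""
    return SCALE_MAX + SCALE_MIN - score


def skepticism_score(responses, reversed_items):
    """Average the items after reverse-keying the given 0-based positions.

    ASSUMPTION: which items are reversed is not given in the appendix;
    the caller supplies the positions of the acceptance-worded items.
    """
    keyed = [
        reverse(r) if i in reversed_items else r
        for i, r in enumerate(responses)
    ]
    return mean(keyed)


# Hypothetical keying for the climate items: items 2-4 are acceptance-worded.
CLIMATE_REVERSED = {1, 2, 3}
```

For example, a respondent who strongly agrees with the two skeptic-worded climate items and strongly disagrees with the three acceptance-worded ones would receive the maximum score under this keying.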

Appendix B

Pilot Study Full Results Description and Tables

Predictors of scientific literacy

First, we assessed whether scientific literacy is predicted by the demographic and ideological variables we included in the study, using a hierarchical regression analysis (see Table 3). In the first step, we entered age, gender, and profession (academia or other). This model explained 4% of the variance, p = .051. Age and gender were associated with scientific literacy. Not surprisingly, literacy increased with age. Also, men scored marginally higher on the scientific literacy test than women. In Model 1, we added political conservatism, which increased the explained variance to 18%. Adding religious belief (religious identity and belief in God) explained some additional variance, with belief in God being a marginally significant predictor of literacy. Thus, the strongest predictor of literacy was political conservatism; the more conservative participants were, the lower they scored on the scientific literacy test.
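The logic of a hierarchical regression, in which blocks of predictors are entered cumulatively and the explained variance is compared across steps, can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' analysis code: the data are invented toy values, the function names (`solve`, `adjusted_r2`, `hierarchical`) are hypothetical, and the fit is plain ordinary least squares via the normal equations, using only the standard library.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def adjusted_r2(columns, y):
    """Fit OLS with an intercept on the predictor columns; return adjusted R^2."""
    n, k = len(y), len(columns)
    rows = [[1.0] + [col[i] for col in columns] for i in range(n)]
    p = k + 1
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve(xtx, xty)
    predicted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / n
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y, predicted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r2 = 1 - ss_res / ss_tot
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def hierarchical(blocks, y):
    """Enter predictor blocks cumulatively; return adjusted R^2 after each step."""
    entered, results = [], []
    for block in blocks:
        entered.extend(block)
        results.append(adjusted_r2(entered, y))
    return results


# Invented toy data (NOT from the paper): eight hypothetical participants.
age = [20, 25, 30, 35, 40, 45, 50, 55]
conservatism = [10, 80, 30, 60, 20, 90, 40, 70]
skepticism = [1.3, 2.5, 1.7, 2.3, 1.4, 2.9, 1.8, 2.4]

# Step 1 enters age only; Step 2 adds conservatism.
steps = hierarchical([[age], [conservatism]], skepticism)
for number, value in enumerate(steps, start=1):
    print(f"Step {number}: adjusted R^2 = {value:.2f}")
```

Because adjusted R² penalizes each added predictor, it can fall slightly below zero when a block explains almost no variance, which is why small negative values can appear in early steps of such tables.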

Predictors of science rejection

Next, we used hierarchical regression analysis to assess which of the variables included in the study best predict science rejection. For each of the four rejection items, we ran the same hierarchical regression as above, adding scientific literacy in a third model and stereotypes about scientists in Model 4 (this measure did not explain any additional variance and is therefore omitted from Table 3 for presentation purposes).

HIV and smoking

As can be inferred from the correlations displayed in Table 1, rejection of the HIV-AIDS and smoking-lung cancer links was not associated with any of the other variables included in the study, except scientific literacy. Indeed, none of the models tested explained a meaningful amount of the variance for these two items, and the only significant predictor for both items was scientific literacy.

Climate change

In a hierarchical regression analysis (see Table 3), adding political conservatism to the demographics in Model 1 (age, gender, profession) increased explained variance from almost 0% to 17%, with conservatism being a significant predictor, Beta = .44, p < .001, 95% confidence interval (CI) [0.02, 0.04]. Religiosity did not explain additional variance (Model 2). The model that best predicted anthropogenic climate change skepticism was Model 3, which also included scientific literacy. This model explained 20% of the variance, F(6, 97) = 4.67, p < .001. Adding the stereotypes measures in a fourth model did not further increase explained variance.

Vaccinations

Predicting vaccination skepticism (i.e., belief that vaccines can cause autism) yielded a pattern of results different from that for climate change skepticism. Again, as can be seen in Table 3, Model 3 explained the largest share of the variance, 47%, F(6, 97) = 14.08, p < .001. Here, however, religious belief, political conservatism, and scientific literacy each significantly explained part of the variance. The strongest predictor was scientific literacy, which accounted for an additional 24% of the explained variance in Model 3, Beta = −.55, p < .001, 95% CI [−0.58, −0.31].

Table B1.

Correlation Matrix, Pilot Study.

| Variable | M (SD) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Rejection 1: HIV | 1.71 (1.25) | — | | | | | | | | | |
| 2. Rejection 2: Smoking | 1.58 (0.99) | .30** | — | | | | | | | | |
| 3. Rejection 3: Climate change | 2.24 (1.57) | .38** | .39** | — | | | | | | | |
| 4. Rejection 4: Vaccinations | 1.87 (1.39) | .16 | .16 | .38** | — | | | | | | |
| 5. Intuitive moral stereotype | 22.6% fallacy | −.01 | −.13 | −.11 | .00 | — | | | | | |
| 6. Explicit moral stereotype | 63.33 (14.38) | .07 | .14 | .23* | .26* | .10 | — | | | | |
| 7. Political conservatism | 34.37 (26.37) | .08 | −.02 | .41** | .41** | .08 | .26** | — | | | |
| 8. Religious identity (1 = no; 2 = yes) | 23% yes | .13 | −.07 | .15 | .14 | .09 | .13 | .36** | — | | |
| 9. Belief in God | 33.44 (39.92) | .10 | −.02 | .17 | .35** | .12 | .38** | .38** | .72** | — | |
| 10. Scientific literacy | 7.18 (1.70) | −.32** | −.20* | −.37** | −.64** | −.14 | −.21* | −.32** | −.17 | −.28** | — |

*p < .05. **p < .01.

Table B2.

Complete Hierarchical Regression Analysis of Scientific Literacy, Pilot Study.

| Step/predictor | Step 1 | Step 2 | Step 3 |
|---|---|---|---|
| 1. Age | .18 | .23* | .24** |
| Gender (M = 1; F = 2) | −.18 | −.13 | −.21* |
| Profession | .14 | .14 | .14 |
| 2. Religious identity | | −.04 | −.09 |
| Belief in God | | −.32* | −.22 |
| 3. Political conservatism | | | −.33** |
| Adjusted R² | .04 | .11** | .19** |

*p < .05. **p < .01.

Table B3.

Complete Hierarchical Regression Analysis of Climate Change Skepticism, Pilot Study.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 | Step 5 |
|---|---|---|---|---|---|
| 1. Age | .03 | .01 | −.02 | .04 | .04 |
| Gender | .09 | .06 | .16 | .11 | .11 |
| Profession | .02 | .02 | .03 | −.01 | −.02 |
| 2. Religious identity | | −.07 | .00 | −.03 | −.03 |
| Belief in God | | .12 | −.02 | −.08 | −.08 |
| 3. Political conservatism | | | .45** | .36** | .34** |
| 4. Scientific literacy | | | | −.27** | −.26* |
| 5. Stereotypes | | | | | .10 |
| Adjusted R² | −.02 | −.01 | .15** | .20** | .20** |

*p < .05. **p < .01.

Table B4.

Complete Hierarchical Regression Analysis of Vaccine Skepticism, Pilot Study.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 | Step 5 |
|---|---|---|---|---|---|
| 1. Age | .01 | −.04 | −.05 | .08 | .08 |
| Gender | .08 | .01 | .09 | −.02 | −.02 |
| Profession | .10 | .09 | .09 | .02 | .02 |
| 2. Religious identity | | .25 | .30* | .25* | .25* |
| Belief in God | | .51** | .42** | .30** | .30** |
| 3. Political conservatism | | | .38** | .20* | .19* |
| 4. Scientific literacy | | | | −.55** | −.54** |
| 5. Stereotypes | | | | | .08 |
| Adjusted R² | −.01 | .12** | .23** | .47** | .47** |

*p < .05. **p < .01.

Appendix C

Complete Hierarchical Regression Tables, Studies 1 to 3

Study 1

Table C1.

Complete Hierarchical Regression Analysis of Faith in Science, Study 1.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 |
|---|---|---|---|---|
| 1. Age | −.20** | −.13 | −.09 | −.14* |
| Gender (M = 1; F = 2) | −.22** | −.22** | −.23** | −.11 |
| 2. Care | | .14 | .09 | .07 |
| Fairness | | .04 | −.01 | .01 |
| Loyalty | | −.03 | −.00 | .04 |
| Authority | | .09 | .16 | .08 |
| Purity | | −.41** | −.35** | −.17* |
| 3. Conservatism | | | −.25** | −.05 |
| 4. Religious identity | | | | −.11 |
| Religious orthodoxy | | | | −.43** |
| Adjusted R² | .10** | .22** | .26** | .42** |

*p < .05. **p < .01.

Table C2.

Complete Hierarchical Regression Analysis of Climate Change Skepticism, Study 1.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 | Step 5 |
|---|---|---|---|---|---|
| 1. Age | .13 | .09 | .05 | .05 | .08 |
| Gender | −.05 | −.00 | −.00 | .01 | −.02 |
| 2. Care | | −.20* | −.15 | −.16 | −.14 |
| Fairness | | −.04 | −.00 | .02 | .03 |
| Loyalty | | .11 | .08 | .07 | .08 |
| Authority | | −.08 | −.15 | −.15 | −.13 |
| Purity | | .23* | .18 | .16 | .11 |
| 3. Conservatism | | | .24** | .24* | .23* |
| 4. Religious identity | | | | .14 | .18 |
| Religious orthodoxy | | | | .14 | .00 |
| 5. Faith in Science | | | | | −.34** |
| Adjusted R² | .00 | .07 | .10** | .10** | .16** |

*p < .05. **p < .01.

Table C3.

Complete Hierarchical Regression Analysis of Vaccine Skepticism, Study 1.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 | Step 5 |
|---|---|---|---|---|---|
| 1. Age | .03 | −.04 | −.04 | −.04 | −.07 |
| Gender | .14 | .14 | .14 | .17* | .15 |
| 2. Care | | −.05 | −.04 | −.06 | −.05 |
| Fairness | | .03 | .05 | .07 | .08 |
| Loyalty | | .16 | .15 | .13 | .14 |
| Authority | | −.02 | −.04 | −.05 | −.03 |
| Purity | | .27** | .26** | .25** | .21* |
| 3. Conservatism | | | .08 | .09 | .08 |
| 4. Religious identity | | | | .26* | .29** |
| Religious orthodoxy | | | | .23* | .14 |
| 5. Faith in Science | | | | | −.22* |
| Adjusted R² | .01 | .11** | .11** | .13** | .16** |

*p < .05. **p < .01.

Table C4.

Complete Hierarchical Regression Analysis of Science Spending on the Resource Allocation Task, Study 1.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 | Step 5 |
|---|---|---|---|---|---|
| 1. Age | .09 | .04 | .02 | .04 | .03 |
| Gender (M = 1; F = 2) | .35** | .30** | .30** | .28** | .27** |
| 2. Care | | .00 | .02 | .01 | .02 |
| Fairness | | .02 | .04 | .05 | .05 |
| Loyalty | | .00 | −.02 | −.04 | −.04 |
| Authority | | .09 | .07 | .09 | .09 |
| Purity | | .25** | .23* | .17 | .16 |
| 3. Conservatism | | | .10 | .04 | .04 |
| 4. Religious identity | | | | .08 | .09 |
| Religious orthodoxy | | | | .24* | .21* |
| 5. Faith in Science | | | | | −.06 |
| Adjusted R² | .14** | .21** | .21** | .22** | .22** |

Note. Higher scores on the resource allocation task indicate less science support.

Study 2

Table C5.

Complete Hierarchical Regression Analysis of Faith in Science, Study 2.

| Step/predictor | Step 1 | Step 2 | Step 3 |
|---|---|---|---|
| 1. Age | .00 | .00 | .05* |
| Gender | −.10** | −.11** | −.08** |
| 2. Conservatism | | −.03 | −.01 |
| 3. Religious identity | | | −.16** |
| Religious orthodoxy | | | −.19** |
| Adjusted R² | .01** | .01** | .10** |

*p < .05. **p < .01.

Table C6.

Complete Hierarchical Regression Analysis of Climate Change Skepticism, Study 2.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 |
|---|---|---|---|---|
| 1. Age | .14** | .14** | .12** | .13** |
| Gender | −.07* | −.03 | −.04 | −.04 |
| 2. Conservatism | | .27** | .26** | .26** |
| 3. Religious identity | | | .08** | .08* |
| Religious orthodoxy | | | −.02 | −.01 |
| 4. Faith in Science | | | | −.06* |
| Adjusted R² | .02** | .09** | .10** | .10** |

*p < .05. **p < .01.

Table C7.

Complete Hierarchical Regression Analysis of GM Food Skepticism, Study 2.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 |
|---|---|---|---|---|
| 1. Age | −.08** | −.08** | −.08** | −.07* |
| Gender | .16** | .15** | .15** | .14** |
| 2. Conservatism | | −.08** | −.08** | −.08** |
| 3. Religious identity | | | −.03 | −.06 |
| Religious orthodoxy | | | −.03 | .00 |
| 4. Faith in Science | | | | −.19** |
| Adjusted R² | .03** | .04** | .03** | .07** |

Note. GM = genetic modification.

*p < .05. **p < .01.

Study 3

Table C8.

Complete Hierarchical Regression Analysis of Scientific Literacy, Study 3.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 | Step 5 |
|---|---|---|---|---|---|
| 1. Age | .25** | .26** | .27** | .24** | .24** |
| Gender | −.21** | −.19* | −.19* | −.14† | −.14† |
| 2. Care | | .04 | .03 | .04 | .04 |
| Fairness | | −.06 | −.08 | −.10 | −.10 |
| Loyalty | | −.06 | −.05 | −.04 | −.04 |
| Authority | | −.04 | −.03 | .01 | .01 |
| Purity | | −.31** | −.30** | −.12 | −.12 |
| 3. Conservatism | | | −.06 | −.04 | −.04 |
| 4. Religious identity | | | | −.01 | −.01 |
| Religious orthodoxy | | | | −.36** | −.36** |
| 5. Faith in Science | | | | | −.02 |
| Adjusted R² | .07** | .19** | .18** | .25** | .24** |

†p < .10. *p < .05. **p < .01.

Table C9.

Complete Hierarchical Regression Analysis of Faith in Science, Study 3.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 | Step 5 |
|---|---|---|---|---|---|
| 1. Age | −.21** | −.21** | −.19** | −.20** | −.20** |
| Gender | −.15 | −.12 | −.12 | −.05 | −.05 |
| 2. Care | | −.02 | −.03 | .02 | .02 |
| Fairness | | .24** | .17* | .12 | .12 |
| Loyalty | | −.04 | −.02 | −.01 | −.01 |
| Authority | | −.07 | −.01 | .06 | .06 |
| Purity | | −.37** | −.30** | −.02 | −.02 |
| 3. Conservatism | | | −.24** | −.19** | −.19** |
| 4. Religious identity | | | | −.30** | −.30** |
| Religious orthodoxy | | | | −.32** | −.32** |
| 5. Scientific literacy | | | | | −.01 |
| Adjusted R² | .07** | .25** | .29** | .48** | .47** |

*p < .05. **p < .01.

Table C10.

Complete Hierarchical Regression Analysis of Climate Change Skepticism, Study 3.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 | Step 5 |
|---|---|---|---|---|---|
| 1. Age | .06 | .05 | .03 | .06 | .04 |
| Gender | .11 | .08 | .09 | .07 | .07 |
| 2. Care | | .08 | .10 | .12 | .12 |
| Fairness | | −.42** | −.34** | −.33** | −.32** |
| Loyalty | | −.08 | −.10 | −.11 | −.11 |
| Authority | | .17 | .11 | .09 | .09 |
| Purity | | .39** | .31** | .24* | .24* |
| 3. Conservatism | | | .29** | .29** | .27** |
| 4. Religious identity | | | | .17 | .21* |
| Religious orthodoxy | | | | .27** | .24* |
| 5. Faith in science | | | | | −.11 |
| Scientific literacy | | | | | −.01 |
| Adjusted R² | .01 | .28** | .34** | .37** | .37** |

*p < .05. **p < .01.

Table C11.

Complete Hierarchical Regression Analysis of Vaccine Skepticism, Study 3.

| Step/predictor | Step 1 | Step 2 | Step 3 | Step 4 | Step 5 |
|---|---|---|---|---|---|
| 1. Age | .12 | .13 | .13 | .16 | .15 |
| Gender | .06 | .04 | .04 | .02 | −.02 |
| 2. Care | | −.03 | −.03 | −.02 | −.01 |
| Fairness | | −.18* | −.18* | −.18* | −.17 |
| Loyalty | | −.12 | −.12 | −.13 | −.14 |