Abstract

An endoscopic imaging system that uses a plenoptic technique to reconstruct 3-D information is demonstrated and analyzed in this Letter. The proposed setup integrates a clinical surgical endoscope with a plenoptic camera and achieves a depth accuracy error of about 1 mm and a precision error of about 2 mm within a 25 mm × 25 mm field of view, operating at 11 frames per second.

Endoscopic imaging provides visualization in minimally invasive surgery and helps reduce the trauma associated with open procedures[1]. Recent advances in endoscopic 3-D reconstruction allow surgeons to assess tissue better and evaluate risk objectively, and help enhance autonomous control in robotic surgery[2–4]. Techniques that enable real-time surface reconstruction during surgery include stereoscopy, time-of-flight (ToF), structured illumination, and plenoptic imaging. To achieve realistic 3-D surface reconstructions of a surgical site in a minimally invasive setting, a 3-D endoscopic system should provide high precision and accuracy within the desired field of view (FOV), and a frame rate adequate for observation and navigation by surgeons.

Stereoscopy uses passive wide-field illumination and acquires two images of an object from two viewing angles to reconstruct depth information via disparity searching. Reported depth resolutions range from 0.05 to 0.6 mm[5,6] and can be achieved with enhanced search algorithms[7–9]. However, a high depth resolution requires a sufficient spatial offset (baseline) between the two views.
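Why the baseline matters can be illustrated with the textbook rectified-stereo relation Z = fB/d (focal length f in pixels, baseline B, disparity d) and its first-order sensitivity ΔZ ≈ Z²Δd/(fB). This is a hedged sketch, not code from the cited works; the focal length, baselines, and working distance are hypothetical values chosen only to show the trend.

```python
def depth_from_disparity(focal_px: float, baseline_mm: float, disparity_px: float) -> float:
    """Rectified pinhole stereo: Z = f * B / d (Z comes out in the units of B)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity_px


def depth_resolution(focal_px: float, baseline_mm: float, depth_mm: float,
                     disparity_step_px: float = 1.0) -> float:
    """First-order depth uncertainty dZ = Z**2 * dd / (f * B)."""
    return depth_mm ** 2 * disparity_step_px / (focal_px * baseline_mm)


if __name__ == "__main__":
    # Hypothetical numbers: f = 1000 px, working distance Z = 50 mm.
    for baseline in (2.0, 5.0, 10.0):
        dz = depth_resolution(1000.0, baseline, 50.0)
        print(f"baseline {baseline:4.1f} mm -> {dz:.2f} mm per pixel of disparity")
```

Doubling the baseline halves ΔZ at a given working distance, which is the trade-off a stereo endoscope with a small housing diameter faces.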

ToF techniques measure the phase and intensity differences of time- or frequency-modulated laser pulses. Depth information can therefore be reconstructed at low computational cost from the known active light modulation. However, the depth resolution of ToF is relatively poor, ranging from 0.89 to 4 mm[10,11]. In addition, this technique often suffers from systematic errors caused by the camera's temperature sensitivity and by varying exposure times. Other contributing factors are the optical properties of the studied samples, such as absorption and scattering coefficients, which can change with the incident light source and ray angle[12].
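For the continuous-wave (phase-measuring) variant of ToF, distance follows from the measured phase shift Δφ as d = cΔφ/(4πf_mod), with an unambiguous range of c/(2f_mod); pulsed ToF instead times the round trip directly. The sketch below assumes the continuous-wave form, and the 20 MHz modulation frequency is a hypothetical value. It shows why millimetre-level depth resolution demands very small phase errors.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(phase_shift_rad: float, modulation_hz: float) -> float:
    """Continuous-wave ToF: d = c * dphi / (4 * pi * f_mod).

    The factor 4*pi (rather than 2*pi) accounts for the round trip to the object and back.
    """
    return SPEED_OF_LIGHT_M_S * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range_m(modulation_hz: float) -> float:
    """Distance at which the measured phase wraps by 2*pi: c / (2 * f_mod)."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


if __name__ == "__main__":
    f_mod = 20e6  # hypothetical 20 MHz modulation frequency
    print(f"unambiguous range: {unambiguous_range_m(f_mod):.3f} m")
    # A 1 mrad phase error corresponds to roughly 1.19 mm of depth error at 20 MHz.
    print(f"depth error for 1 mrad: {tof_distance_m(1e-3, f_mod) * 1e3:.2f} mm")
```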

Structured illumination is a classical 3-D reconstruction technique whose principle is based either on disparity searching, similar to stereoscopy[13], or on phase information reconstructed[14,15] from multiple artificial light patterns projected onto the sample. Structured illumination can achieve a very high depth resolution, up to 0.05 mm[16], and has been employed in several medical applications[17–20]. However, it requires an active projector that cycles between different illumination patterns, which complicates camera calibration and necessitates a high-power light source[21].
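As an illustration of the phase-based approach, the common four-step phase-shifting scheme projects sinusoidal fringes shifted by π/2. If I_k = A + B cos(φ + (k−1)π/2) for k = 1 to 4, then I4 − I2 = 2B sin φ and I1 − I3 = 2B cos φ, so φ = atan2(I4 − I2, I1 − I3). This is a minimal sketch of one standard variant, not necessarily the method of the cited works; the result is wrapped to (−π, π] and still needs unwrapping and calibration to become a height.

```python
import numpy as np


def four_step_phase(i1, i2, i3, i4):
    """Wrapped phase from four fringe images shifted by pi/2.

    Assumes I_k = A + B*cos(phi + (k-1)*pi/2), k = 1..4, so that
    I4 - I2 = 2B*sin(phi) and I1 - I3 = 2B*cos(phi).
    """
    return np.arctan2(np.asarray(i4) - np.asarray(i2), np.asarray(i1) - np.asarray(i3))


if __name__ == "__main__":
    phi_true = np.linspace(-3.0, 3.0, 7)
    a, b = 0.5, 0.4  # hypothetical background and modulation amplitudes
    frames = [a + b * np.cos(phi_true + k * np.pi / 2) for k in range(4)]
    print(np.allclose(four_step_phase(*frames), phi_true))  # True
```

The background A and modulation B cancel in the ratio, which is why the phase is insensitive to uniform changes in illumination intensity.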

In addition to the three techniques described above, plenoptic imaging is a fairly new 3-D reconstruction technique in the biomedical field. The technique uses a microlens array (MLA) integrated onto an imaging sensor, such that each point of the object is viewed and imaged at different angles through adjacent microlenses. Depth information can then be deduced in a manner similar to stereoscopy. A stereoscope relies on triangulation, in which the two imagers must maintain a set angle of separation relative to the object to obtain two distinct views for correspondence searching while ensuring the desired depth accuracy[22–24]. As an extension of the stereoscope, a plenoptic imager uses only one sensor with multiple microlenses to create a larger number of viewing positions and thus improve correspondence searching. Moreover, a plenoptic camera reduces the systematic calibration effort because the separation between microlenses (i.e., the distance between the micro sensors) is known. Plenoptic imaging also extends parallax computation to both the horizontal and vertical directions, compared with one direction in a stereoscope. Currently, plenoptic imaging is widely accepted in industry for multifocus imaging[25,26]; however, its use in medicine is limited, with a current depth precision of 1 mm[27,28] in wide-field imaging. To adapt this technique to an endoscopic setting for minimally invasive surgery, we propose a plenoptic endoscopy design that consists of a clinical surgical endoscope, a plenoptic camera, and a relay optical system. The proposed setup compensates for the aperture mismatch between the endoscope and the MLA fabricated on the plenoptic camera.

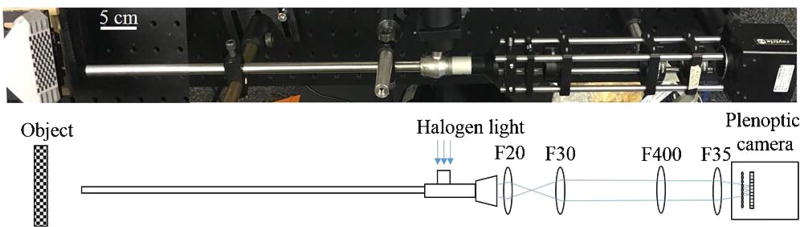

The endoscopic setup shown in Fig. 1 employs a 0° surgical borescope with a 10 mm housing diameter (Stryker, San Jose, California, USA) for both illumination and imaging. Light from a halogen bulb is coupled into the light pipe of the borescope to illuminate the surgical site, and the image is relayed back to the plenoptic camera through the same borescope and a relay lens system. The plenoptic camera is commercially available with a predefined MLA (Raytrix R5, Kiel, Germany). The relay lens system comprises four biconvex lenses with various focal lengths (Thorlabs, New Jersey, USA) to achieve the desired microlens images on the plenoptic camera. In particular, a 20 mm focal length biconvex lens (LA1859) forms an image from the output light rays of the borescope eyepiece. This image is then expanded by three biconvex lenses with focal lengths of 30 mm (LA1805), 400 mm (LA1172), and 35 mm (LA1027) to form microlens images whose aperture matches that of the fabricated microlenses. Beam expansion could be achieved with fewer lenses; however, the combination of the 30 mm and 400 mm lenses is used to fine-tune the image and to provide flexibility in shaping the beam. A 5 cm scale bar is included in the system schematic for optical alignment reference. The imaging system operates at 11 frames per second, with image reconstruction performed on a CUDA-enabled NVIDIA GeForce GTX 690 GPU in a Dell Precision T7600 workstation.
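The aperture-matching goal can be reasoned about with the paraxial Lagrange invariant: imaging with lateral magnification M scales the image-side numerical aperture by 1/|M|, so the working f-number N = 1/(2NA) scales by |M|. The sketch below uses that relation together with the f2/f1 magnification of a two-lens afocal (Keplerian) expander. Treating the 30 mm and 400 mm lenses as such a pair is an assumption, and the f-numbers are hypothetical placeholders because the borescope optics are proprietary.

```python
def keplerian_magnification(f1_mm: float, f2_mm: float) -> float:
    """Magnitude of the lateral magnification of an afocal two-lens expander (spacing f1 + f2)."""
    return f2_mm / f1_mm


def image_side_f_number(object_side_f_number: float, magnification: float) -> float:
    """Paraxial estimate: NA' = NA / |M|, hence N' = |M| * N."""
    return abs(magnification) * object_side_f_number


def required_magnification(source_f_number: float, mla_f_number: float) -> float:
    """Magnification that makes the relayed working f-number equal the MLA f-number."""
    return mla_f_number / source_f_number


if __name__ == "__main__":
    print(f"30 mm / 400 mm pair: {keplerian_magnification(30.0, 400.0):.2f}x")
    # Hypothetical f-numbers, for illustration only.
    m = required_magnification(source_f_number=1.5, mla_f_number=6.0)
    print(f"required magnification: {m:.2f}x")
```

If the relayed f-number is too small, neighbouring microlens images overlap; if it is too large, they shrink and waste sensor area. That is why the relay magnification is tuned rather than fixed.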

Fig. 1.

Schematic of the endoscopic setup with the plenoptic camera and relay lens system.

In the plenoptic imaging setting, a main lens produces an image of a real object; this image then acts as the object for the MLA. The MLA splits the incident light cone into multiple subimages, which are collected by the sensor. From these microlens images, depth is calculated in two steps: a virtual depth is computed first and then converted to a metric distance[29,30].

Depth calculation in a plenoptic setup is analogous to stereoscopy: the MLA acts as a micro camera array that generates multiple views of a small portion of the object, namely the image formed by the main lens. First, a correspondence search is performed in which each pixel location in one micro image is matched to the corresponding pixel location in an adjacent micro image using the sum of absolute differences (SAD) method over a group of pixels along the epipolar lines. The method searches for corresponding pixels between two adjacent micro images by minimizing the absolute difference between the pixel values within a window,

$$\mathrm{SAD}(x, y) = \sum_{i=1}^{m}\sum_{j=1}^{n}\left|I_1(i, j) - I_2(i + x, j + y)\right|, \tag{1}$$

where (i, j) is the pixel index in the adjacent micro images I1 and I2, m and n are the numbers of pixels in the window along the horizontal and vertical axes, and x and y are the disparities along the two directions, respectively.
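A brute-force version of the SAD search can be sketched as follows: for a window of m × n pixels in the first micro image, every candidate disparity (x, y) within a range is scored by the sum of absolute differences against the shifted window in the second image, and the minimum is kept. The function name `sad_match` and the synthetic test images are hypothetical. Searching a full 2-D window is a simplification; an actual plenoptic pipeline would restrict candidates to the epipolar line set by the microlens geometry.

```python
import numpy as np


def sad_match(img1, img2, top, left, m, n, max_disp):
    """Exhaustive SAD search for the window of img1 whose top-left corner is (top, left).

    The window spans m pixels horizontally (index i) and n pixels vertically (index j);
    candidate disparities x, y range over [-max_disp, max_disp].
    Returns (x, y, cost) for the minimum-cost disparity.
    """
    img1 = np.asarray(img1, dtype=float)
    img2 = np.asarray(img2, dtype=float)
    patch = img1[top:top + n, left:left + m]  # rows = vertical (j), cols = horizontal (i)
    best = None
    for y in range(-max_disp, max_disp + 1):
        for x in range(-max_disp, max_disp + 1):
            r, c = top + y, left + x
            if r < 0 or c < 0 or r + n > img2.shape[0] or c + m > img2.shape[1]:
                continue  # shifted window would fall outside the second micro image
            cost = float(np.abs(patch - img2[r:r + n, c:c + m]).sum())
            if best is None or cost < best[2]:
                best = (x, y, cost)
    if best is None:
        raise ValueError("no valid candidate window")
    return best


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    micro1 = rng.random((32, 32))
    micro2 = np.roll(micro1, shift=(2, 3), axis=(0, 1))  # shift 2 px down, 3 px right
    print(sad_match(micro1, micro2, top=10, left=10, m=8, n=8, max_disp=4))  # (3, 2, 0.0)
```

On textureless patches many candidates give nearly equal costs, so the minimum becomes unreliable; this is the same feature dependence discussed later for featureless tissue.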

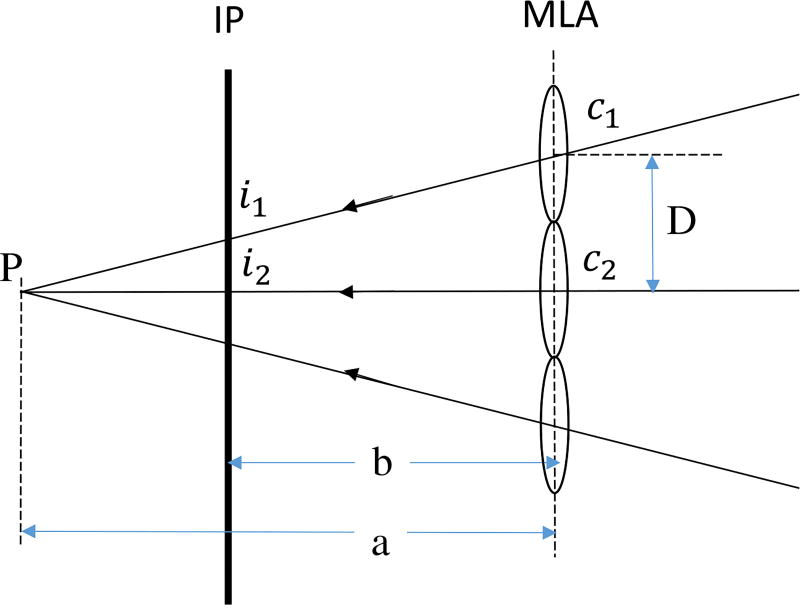

Once the corresponding pixels of these micro images are determined, the intersection of the rays projected through these pixels into the virtual 3-D space determines the virtual depth of the object point. This virtual depth is related to the distance between the observed object and the camera. Fig. 2 illustrates the calculation of the virtual depth υp[30],

$$\upsilon_p = \frac{a}{b} = \frac{D}{(i_1 - c_1) - (i_2 - c_2)}, \tag{2}$$

where a is the distance from the MLA to the virtual parallax point, and b is the distance between the sensor and the MLA. D is the baseline distance between two microlenses with centers c1 and c2, and i1 and i2 are the image pixels of the same object point projected by the two microlenses.

Fig. 2.

Triangulation principle for virtual depth estimation. IP: Image plane.

From the virtual depth, the corresponding metric distance bL is computed as

$$b_L = h + \upsilon_p\, b, \tag{3}$$

where h is the distance between the main lens and the MLA.
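The triangulation in Fig. 2 can be sketched with a pinhole model of each microlens. Assume the virtual point lies a distance a behind the MLA, on the sensor side and beyond the sensor, so that a > b. A ray through a lens centre c then reaches the sensor at i = c + (P − c)·b/a, where P is the lateral position of the point. For two lenses this gives (i1 − c1) − (i2 − c2) = D·b/a, hence υp = a/b = D/[(i1 − c1) − (i2 − c2)], and the virtual image lies at bL = h + υp·b from the main lens. The helper names, the 1-D geometry, and this sign convention are assumptions for illustration and are not taken from the Raytrix software.

```python
def project(point_x: float, a: float, lens_center: float, b: float) -> float:
    """1-D pinhole projection through one microlens onto the sensor.

    The virtual point sits at lateral position point_x, a distance a behind the MLA;
    the sensor sits a distance b behind the MLA (b < a).
    """
    return lens_center + (point_x - lens_center) * b / a


def virtual_depth(i1: float, c1: float, i2: float, c2: float) -> float:
    """Virtual depth v = a / b = D / ((i1 - c1) - (i2 - c2)), with D = c2 - c1 > 0."""
    baseline = c2 - c1
    parallax = (i1 - c1) - (i2 - c2)
    if baseline <= 0 or parallax <= 0:
        raise ValueError("expected c2 > c1 and a positive parallax")
    return baseline / parallax


def image_distance_from_main_lens(v: float, b: float, h: float) -> float:
    """Metric distance b_L = h + v * b of the virtual image point from the main lens."""
    return h + v * b


if __name__ == "__main__":
    a, b, h = 5.0, 1.0, 100.0  # hypothetical distances in mm
    c1, c2 = 0.0, 0.2          # hypothetical microlens centres in mm
    i1, i2 = project(0.3, a, c1, b), project(0.3, a, c2, b)
    v = virtual_depth(i1, c1, i2, c2)
    print(v, image_distance_from_main_lens(v, b, h))  # approximately 5.0 and 105.0
```

If the virtual image instead formed in front of the MLA, the parallax would change sign. That is why the function rejects non-positive parallax rather than silently taking an absolute value.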

Because the optical design of the endoscope is proprietary, the overall system magnification cannot be derived theoretically; instead, we determine it empirically using a height standard of known dimensions, and use this magnification to scale the reconstructed object heights.
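One way to determine such a magnification empirically is a least-squares fit through the origin, M = Σ r_k t_k / Σ t_k², where t_k are the known heights of the standard and r_k are the reconstructed heights. This sketch assumes that reconstructed heights scale linearly with true heights, with no offset. The function names and data are hypothetical, and the authors' actual calibration procedure may differ.

```python
import numpy as np


def fit_magnification(true_heights_mm, reconstructed_heights):
    """Least-squares scale M minimising sum((r - M * t)**2), i.e. a line through the origin."""
    t = np.asarray(true_heights_mm, dtype=float)
    r = np.asarray(reconstructed_heights, dtype=float)
    return float(np.dot(r, t) / np.dot(t, t))


def scale_heights(reconstructed_heights, magnification):
    """Convert reconstructed heights back to object-space millimetres."""
    return np.asarray(reconstructed_heights, dtype=float) / magnification


if __name__ == "__main__":
    known = [5.0, 10.0, 15.0]        # hypothetical step heights of a standard, mm
    measured = [11.9, 24.1, 36.0]    # hypothetical reconstructed values
    m = fit_magnification(known, measured)
    print(f"M = {m:.3f}", scale_heights([18.0], m))
```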

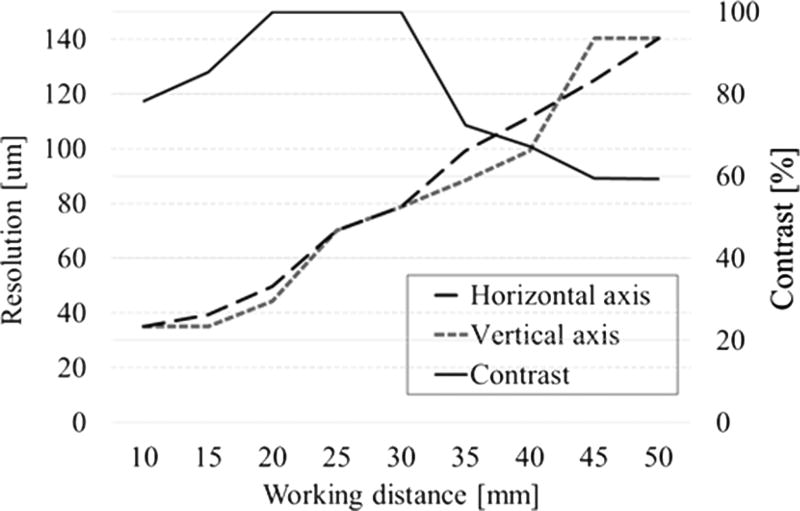

To evaluate the system performance, we determine the depth of field (DOF) from resolution and contrast measurements over a range of working distances. The resolution is defined as the smallest resolvable width of a horizontal or vertical line on a 1951 USAF test target (R3L3S1P, Thorlabs, New Jersey, USA). The target is moved away from the distal end of the borescope without refocusing, in equal steps of 5 mm from 10 to 50 mm, and the contrast at each working distance is also recorded. The resolution and DOF plot in Fig. 3 shows that the best contrast occurs at 20 to 30 mm from the borescope. Within this range, the resolution is 50 to 80 µm, which is sufficient for imaging biological samples such as intestinal organs in anastomosis surgery.
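The quantities in this measurement can be computed with standard relations. A 1951 USAF element in group g and element e resolves 2^(g + (e − 1)/6) line pairs per mm, and one bar is half a line pair wide. Contrast is commonly reported as Michelson contrast (I_max − I_min)/(I_max + I_min). Defining the DOF as the span of working distances whose contrast exceeds a chosen threshold is an assumption here, and the sample data are hypothetical.

```python
def usaf_lp_per_mm(group: int, element: int) -> float:
    """1951 USAF target: resolution = 2 ** (group + (element - 1) / 6) line pairs/mm."""
    return 2.0 ** (group + (element - 1) / 6.0)


def usaf_line_width_um(group: int, element: int) -> float:
    """Width of one bar (half a line pair) in micrometres."""
    return 1000.0 / (2.0 * usaf_lp_per_mm(group, element))


def michelson_contrast(i_max: float, i_min: float) -> float:
    """Contrast between the brightest and darkest intensities across a bar pattern."""
    return (i_max - i_min) / (i_max + i_min)


def depth_of_field(distances_mm, contrasts, threshold):
    """Span of working distances whose contrast meets the threshold.

    Assumes the above-threshold distances form one contiguous region.
    """
    inside = [d for d, c in zip(distances_mm, contrasts) if c >= threshold]
    if not inside:
        return None
    return min(inside), max(inside)


if __name__ == "__main__":
    print(f"G3E2: {usaf_line_width_um(3, 2):.1f} um, G2E5: {usaf_line_width_um(2, 5):.1f} um")
    # Hypothetical contrast curve sampled in 5 mm steps.
    print(depth_of_field([10, 15, 20, 25, 30, 35], [0.2, 0.4, 0.7, 0.8, 0.7, 0.3], 0.5))
```

For scale, group 3 element 2 corresponds to bars about 55.7 µm wide and group 2 element 5 to about 78.7 µm. These figures only illustrate how the 50 to 80 µm range maps onto the target; they do not identify which elements were resolved in this study.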

Fig. 3.

Resolution and contrast measurements.

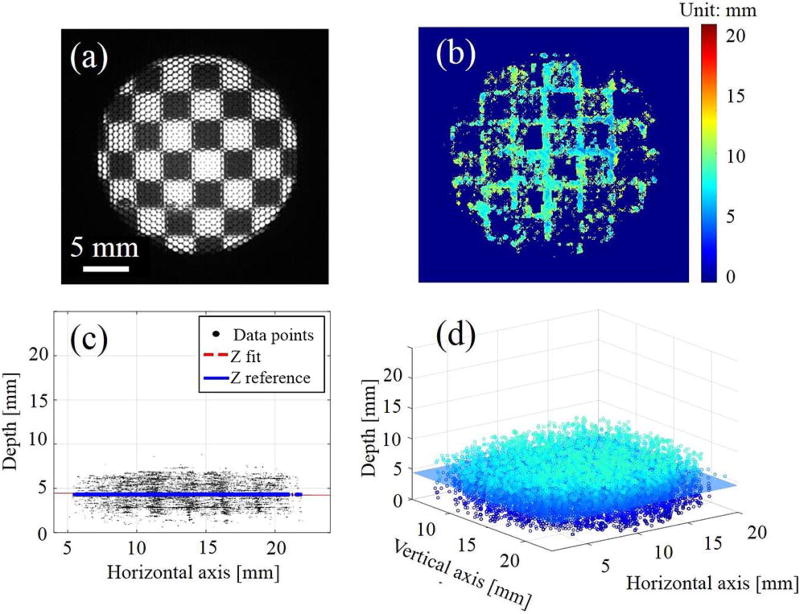

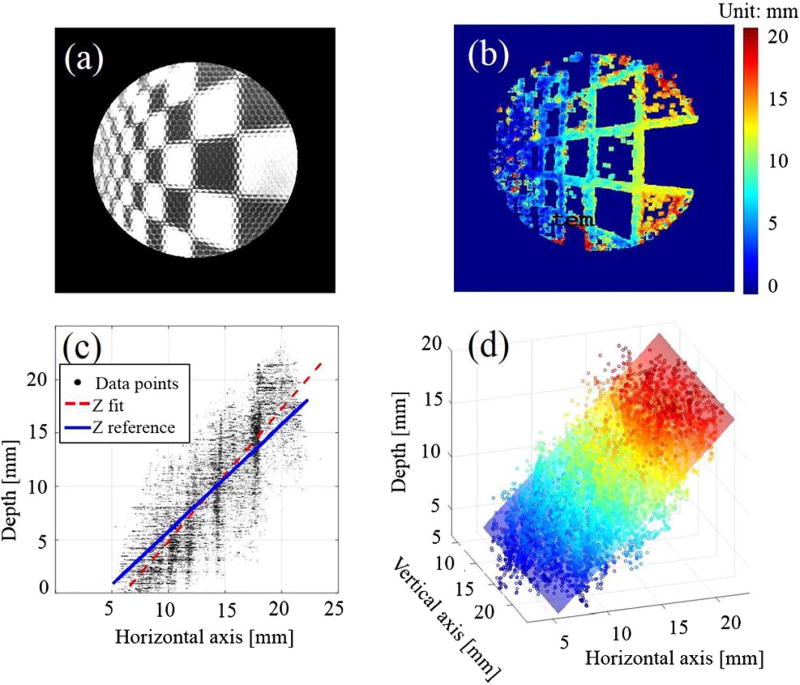

To further validate the system's accuracy and precision, we used a checkerboard pattern with a known square size of 3.5 mm on a DOF target serving as the height standard (DOF 5–15, Edmund Optics, Barrington, New Jersey). The target is oriented at 0° and 45° and placed 20 mm from the distal end plane of the borescope, as shown in Figs. 4(a) and 5(a). The accuracy error is characterized by the mean distance and standard deviation between the fitted plane and the reference plane modeled at 0° and 45°, whereas the precision error is defined by the variation (mean distance and standard deviation) of the collected data points from the fitted planes [see Figs. 4(c), 4(d), 5(c), and 5(d)].
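These definitions can be sketched with least-squares plane fitting. Precision is taken as the distribution of point distances from their own fitted plane. Accuracy is taken as the distances between the fitted plane, sampled at the data (x, y) positions, and the reference plane. Fitting z = ax + by + c, using perpendicular distances, and passing the reference plane as coefficients (a, b, c) are all assumptions, since the text does not specify these details.

```python
import numpy as np


def fit_plane(points):
    """Least-squares plane z = a*x + b*y + c through an (N, 3) point array."""
    pts = np.asarray(points, dtype=float)
    design = np.column_stack([pts[:, 0], pts[:, 1], np.ones(len(pts))])
    coeffs, *_ = np.linalg.lstsq(design, pts[:, 2], rcond=None)
    return coeffs  # (a, b, c)


def distances_to_plane(points, coeffs):
    """Perpendicular distances from points to the plane a*x + b*y - z + c = 0."""
    pts = np.asarray(points, dtype=float)
    a, b, c = coeffs
    return np.abs(a * pts[:, 0] + b * pts[:, 1] - pts[:, 2] + c) / np.sqrt(a * a + b * b + 1.0)


def precision_error(points):
    """Mean and standard deviation of point distances from their own fitted plane."""
    d = distances_to_plane(points, fit_plane(points))
    return d.mean(), d.std()


def accuracy_error(points, reference_coeffs):
    """Mean and standard deviation of distances between the fitted plane, sampled at the
    data (x, y) positions, and a reference plane."""
    pts = np.asarray(points, dtype=float)
    a, b, c = fit_plane(pts)
    on_fit = np.column_stack([pts[:, 0], pts[:, 1], a * pts[:, 0] + b * pts[:, 1] + c])
    d = distances_to_plane(on_fit, reference_coeffs)
    return d.mean(), d.std()
```

For a 45° target the reference plane has slope 1 (for example z = x + c), so a pure 1 mm offset along z appears as a perpendicular accuracy error of 1/√2 ≈ 0.71 mm.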

Fig. 4.

(a) Microlens image of the checkerboard at 0° with (b) its depth map and (c, d) point cloud data from different views.

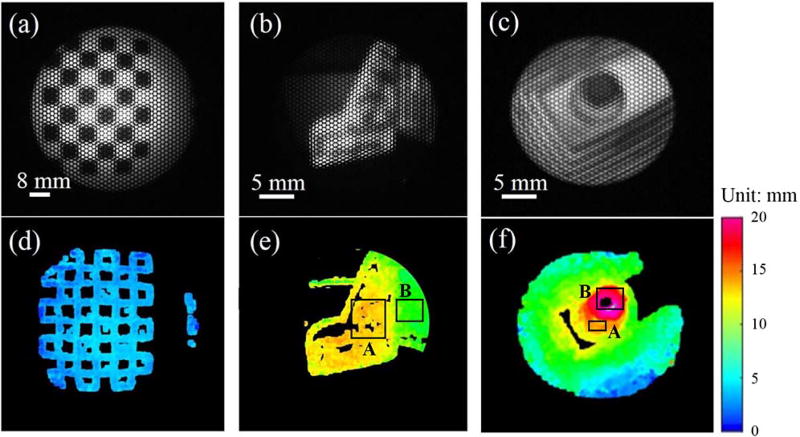

Fig. 5.

(a) Microlens image of the checkerboard at 45° with (b) its depth map and (c, d) point cloud data from different views.

Because the reconstruction relies on triangulation, depth can be recovered only where there are sufficient object features and local contrast, such as at the edges and corners of the checkerboard, as observed in Figs. 4 and 5. As the target angle increases from 0° to 45°, the depth maps and point clouds show the corresponding change in the colormap. The calculated accuracy and precision are listed in Table 1: the mean accuracy error is at most about 1 mm and the mean precision error is about 1–2 mm, within a FOV of 25 mm × 25 mm. The required FOV, resolution, and depth precision depend on the object of interest. For a minimally invasive surgical endoscope, especially for anastomosis surgery, a FOV of 25 mm × 25 mm × 25 mm with a spatial resolution of about 200 µm and a depth precision of about 1 mm is sufficient for 3-D image-guided anastomosis[4].

Table 1.

Reconstruction Accuracy and Precision for Planar Targets at 0° and 45° (Unit: mm)

| Metric | Statistic | 0° | 45° |
|---|---|---|---|
| Accuracy | Mean | 0.085 | 0.818 |
| Accuracy | Standard deviation | 0.032 | 0.440 |
| Accuracy | Maximum | 0.103 | 1.439 |
| Precision | Mean | 1.141 | 2.367 |
| Precision | Standard deviation | 0.721 | 1.800 |
| Precision | Maximum | 3.863 | 11.658 |

A finer grid pattern for testing the system's accuracy and precision in Figs. 4 and 5 could improve the 3-D reconstruction by increasing the number of data points. However, imaging targets in medical applications often lack dense features, so we believe that a sparsely distributed checkerboard provides a more realistic test pattern. We also expect that a custom-developed light-field endoscopic camera with a custom MLA will further improve the precision and accuracy.

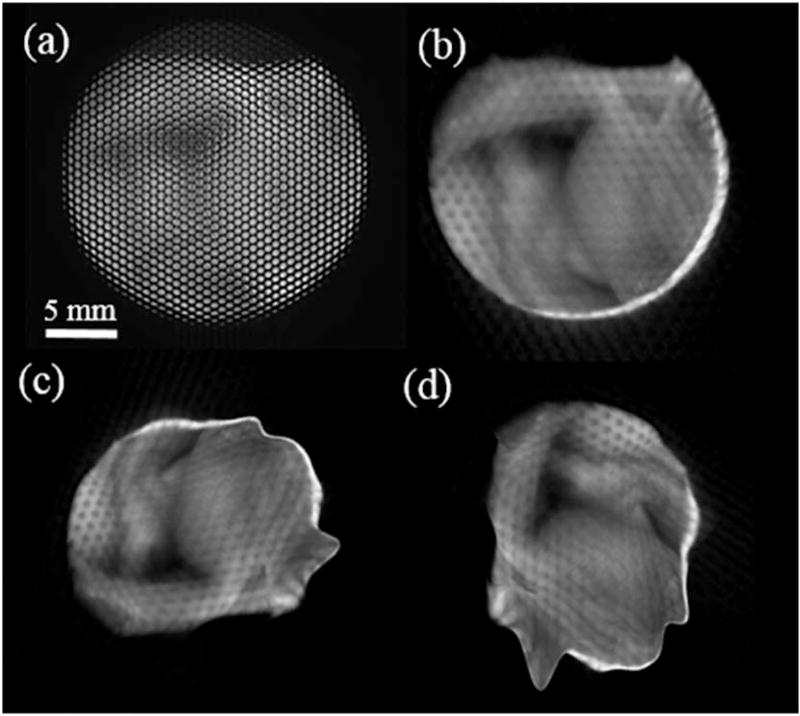

More complex 3-D-printed objects made of polydimethylsiloxane, with defined structures and known dimensions, were also used to examine the spatial reconstruction of the system. The depth colormaps show the distinct curvatures and heights of the objects (see Fig. 6). In particular, the average reconstructed height from the L-shaped base A to the lower base B of the second object [Figs. 6(b) and 6(e)] is 5.5 mm, compared with the physical height of 6.64 mm, an error of 1.14 mm. For the third 3-D-printed object [Figs. 6(c) and 6(f)], the height error from base A to the podium base B was 0.75 mm. A fowl ventricular specimen was harvested as a biological sample for 3-D measurement (Fig. 7). The structural features of the specimen are preserved in the reconstruction; however, circular patterns between the microlens images were also observed. We attribute these to unresolved depth information at the microlens edges.

Fig. 6.

(a–c) Microlens images of a planar object and inhomogeneous objects and (d–f) their reconstructed depth maps.

Fig. 7.

(a) Microlens image of a fowl ventricular specimen and (b–d) its 3-D reconstructions at multiple angles.

Because the plenoptic technique searches for disparities between adjacent microlens images, the reconstruction algorithm depends strongly on the inherent features of the samples. In other words, featureless or homogeneous regions of the object produce outliers or missing depth information, so data interpolation is essential. To compensate for this limitation, a projector can actively illuminate the object with known features, and an efficient illumination setting can be used to resolve finer details of the object and to avoid reflectance saturation. Missing data points do not enter the accuracy and precision calculations. Because depth estimation depends on detectable features, their number could be maximized with active illumination. Plenoptic endoscopy with active illumination has several advantages over conventional structured illumination. First, the plenoptic approach allows the user to observe the scene from a variety of angles in both the horizontal and vertical directions owing to the MLA arrangement. Second, the entire scene can be brought into focus provided that it is within the FOV. Finally, the structure of the illumination need not be known beforehand and is therefore not a source of error. Typical structured light approaches rely on a precisely known projection pattern, whereas the plenoptic approach seeks only high-contrast features, which can be provided in a myriad of ways.
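As one simple way to interpolate missing depth values, holes (non-finite pixels) can be filled by harmonic (Laplace) inpainting. Each missing pixel is repeatedly replaced by the mean of its four neighbours while valid pixels stay fixed. This is a swapped-in illustrative technique, not the interpolation used by the reconstruction software in this work; the function name and iteration count are hypothetical.

```python
import numpy as np


def fill_missing_depth(depth, iterations=500):
    """Fill non-finite depth values by Jacobi iteration of the discrete Laplace equation.

    Valid pixels are held fixed; missing pixels converge towards the average of their
    4-neighbours. Image borders use edge replication.
    """
    filled = np.array(depth, dtype=float)
    missing = ~np.isfinite(filled)
    if missing.all():
        raise ValueError("depth map contains no valid pixels")
    filled[missing] = filled[~missing].mean()  # initial guess for the holes
    for _ in range(iterations):
        padded = np.pad(filled, 1, mode="edge")
        neighbour_mean = (padded[:-2, 1:-1] + padded[2:, 1:-1]
                          + padded[1:-1, :-2] + padded[1:-1, 2:]) / 4.0
        filled[missing] = neighbour_mean[missing]
    return filled
```

Harmonic filling reproduces planar regions exactly but can only smooth across a hole. It cannot recover structure that produced no detectable features, which is why maximizing features with active illumination remains the more effective remedy.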

In conclusion, a 3-D endoscopic system using a plenoptic imaging technique is demonstrated with reconstructed dimensions of both planar and complex samples. We are currently working with the research and development team at Raytrix to improve the MLA design for endoscopic 3-D vision in minimally invasive surgery. The improvement involves matching the f-number of the MLA to that of the optics of the commercial surgical borescope while maintaining a frame rate adequate for surgical guidance (10 frames per second). Moreover, other optical analysis techniques, such as multispectral imaging or laser speckle contrast imaging, can be registered onto the 3-D rendering to provide the dynamic properties of the studied tissue in minimally invasive surgery.

Acknowledgments

This work was supported by the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health under Grant no. 1R01EB020610. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We thank Justin Opfermann and Dr. Xinyang Liu (Children’s National Health System) for the use of their surgical endoscope.

References

1. Bucher P, Pugin F, Ostermann S, Ris F, Chilcott M, Morel P. Surg. Endosc. 2011;25:408. doi: 10.1007/s00464-010-1180-1.
2. Kang CM, Kim DH, Lee WJ, Chi HS. Surg. Endosc. 2011;25:2004. doi: 10.1007/s00464-010-1504-1.
3. Smith R, Day A, Rockall T, Ballard K, Bailey M, Jourdan I. Surg. Endosc. 2012;26:1522. doi: 10.1007/s00464-011-2080-8.
4. Shademan A, Decker RS, Opfermann JD, Leonard S, Krieger A, Kim PC. Sci. Transl. Med. 2016;8:337ra64. doi: 10.1126/scitranslmed.aad9398.
5. Rohl S, Bodenstedt S, Suwelack S, Kenngott H, Müller-Stich BP, Dillmann R, Speidel S. Med. Phys. 2012;39:1632. doi: 10.1118/1.3681017.
6. Atzpadin N, Kauff P, Schreer O. IEEE Trans. Circuits Syst. Video Technol. 2004;14:321.
7. Stoyanov D, Scarzanella MV, Pratt P, Yang GZ. Med. Image Comput. Comput. Assist. Interv. 2010;6361:275. doi: 10.1007/978-3-642-15705-9_34.
8. Stoyanov D. Med. Image Comput. Comput. Assist. Interv. 2012;7510:479. doi: 10.1007/978-3-642-33415-3_59.
9. Chang PL, Stoyanov D, Davison A, Edwards PE. Med. Image Comput. Comput. Assist. Interv. 2013;8149:42. doi: 10.1007/978-3-642-40811-3_6.
10. Penne J, Höller K, Stürmer M, Schrauder T, Schneider A, Engelbrecht R, Feußner H, Schmauss B, Hornegger J. Med. Image Comput. Comput. Assist. Interv. 2009;5761:467. doi: 10.1007/978-3-642-04268-3_58.
11. Groch A, Seitel A, Hempel S, Speidel S, Engelbrecht R, Penne J, Holler K, Rohl S, Yung K, Bodenstedt S, Pflaum F. Proc. SPIE. 2011;7964:796415.
12. Fursattel P, Placht S, Schaller C, Balda M, Hofmann H, Maier A, Riess C. IEEE Trans. Comput. Imaging. 2016;2:27.
13. Maier-Hein L, Mountney P, Bartoli A, Elhawary H, Elson D, Groch A, Kolb A, Rodrigues M, Sorger J, Speidel S, Stoyanov D. Med. Image Anal. 2013;17:974. doi: 10.1016/j.media.2013.04.003.
14. Zhang S. Opt. Lasers Eng. 2010;48:149.
15. Du H, Wang Z. Opt. Lett. 2007;32:2438. doi: 10.1364/ol.32.002438.
16. Wu TT, Qu JY. Opt. Express. 2007;15:10421. doi: 10.1364/oe.15.010421.
17. Schmalz C, Forster F, Schick A, Angelopoulou E. Med. Image Anal. 2012;16:1063. doi: 10.1016/j.media.2012.04.001.
18. Yagnik J, Gorthi GS, Ramakrishnan KR, Rao LK. TENCON 2005–2005 IEEE Region 10 Conference; 2005. p. 1.
19. Hain T, Eckhardt R, Kunzi-Rapp K, Schmitz B. Med. Laser Appl. 2002;17:55.
20. Gomes PF, Sesselmann M, Faria CD, Araújo PA, Teixeira-Salmela LF. J. Biomech. 2010;43:1215. doi: 10.1016/j.jbiomech.2009.12.015.
21. Clancy NT, Stoyanov D, Maier-Hein L, Groch A, Yang GZ, Elson DS. Biomed. Opt. Express. 2011;2:3119. doi: 10.1364/BOE.2.003119.
22. National Instruments. 3D Imaging with NI LabVIEW. 2016. http://www.ni.com/white-paper/14103/en/
23. Rao R. Stereo and 3D vision. 2009. https://courses.cs.washington.edu/courses/cse455/09wi/Lects/lect16.pdf
24. Nguyen H, Wang Z, Quisberth J. Adv. Opt. Methods Exp. Mech. 2016;3:195.
25. Georgiev T, Lumsdaine A. Proc. SPIE. 2012;8299:829908.
26. Perwass C, Wietzke L. Proc. SPIE. 2012;8291:829108.
27. Decker R, Shademan A, Opfermann J, Leonard S, Kim PC, Krieger A. Proc. SPIE. 2015;9494:94940B.
28. Shademan A, Decker RS, Opfermann J, Leonard S, Kim PC, Krieger A. IEEE International Conference on Robotics and Automation (ICRA); 2016. p. 708.
29. Heinze C, Spyropoulos S, Hussmann S, Perwass C. IEEE Trans. Instrum. Meas. 2016;65:1197.
30. Johannsen O, Heinze C, Goldluecke B, Perwaß C. On the calibration of focused plenoptic cameras. In: Time-of-Flight and Depth Imaging. Sensors, Algorithms, and Applications. Springer; 2013. p. 302.