Abstract

Fluorescence optical imaging techniques have revolutionized the field of cardiac electrophysiology and advanced our understanding of complex electrical activities such as arrhythmias. However, traditional monocular optical mapping systems, despite their high spatial resolution, are restricted to a two-dimensional (2D) field of view. Consequently, tracking complex three-dimensional (3D) electrical waves, such as those during ventricular fibrillation, is challenging because the waves rapidly move in and out of the field of view. Panoramic imaging, which uses multiple cameras to measure the electrical activity from the entire epicardial surface, solves this problem. However, the diverse engineering skill set and substantial resources required to design and implement this solution have made it largely inaccessible to the broader biomedical research community. To lower this barrier to entry, we present an open source toolkit for building panoramic optical mapping systems, which includes designs for 3D printing perfusion and imaging hardware as well as software for data processing and analysis. In this paper, we describe the toolkit and demonstrate it on mouse, rat, and rabbit hearts.

Introduction

Cardiac mapping is a powerful technique for understanding the spread of electrical activity during normal cardiac rhythm as well as during abnormal electrical behavior, such as the initiation and maintenance of arrhythmias. Traditionally, cardiac mapping has been performed with surface electrodes. This method, however, is constrained by the number of electrodes that can be placed on the cardiac surface (low spatial resolution) and by the difficulty of maintaining good electrode-tissue contact (poor signal quality)1. Moreover, the interpretation of electrical signals during complex arrhythmias and antiarrhythmic therapies is challenging and ambiguous due to noise, far-field sensing, and the method’s susceptibility to stimulation artifacts (e.g., defibrillation shocks)2. Optical imaging with fluorescent probes (e.g., calcium- and voltage-sensitive dyes) overcomes these problems, enabling high-resolution cardiac mapping without direct tissue contact3,4. Consequently, optical mapping techniques have revolutionized cardiac electrophysiology research, advancing our understanding of cardiac electrical activity, calcium handling, signaling, and metabolism5–8.

While many electrophysiological studies can be carried out using a monocular setup, some require data acquisition from the whole heart surface. Ventricular and atrial arrhythmias are of particular relevance: meandering reentrant rotors and/or dynamic focal sources of activity have been observed to perpetuate arrhythmias9,10. With a single camera, assessment of these rotors and sources is limited to those that lie on the camera-facing epicardial surface and do not meander outside the field of view. Panoramic optical mapping overcomes these limitations by first visualizing the entire heart surface and then eliminating discontinuities in the data by projecting it onto a representative, organ-specific anatomical geometry.

Several laboratories have developed different approaches to panoramic imaging as imaging technology has improved. Lin et al. used two mirrors to project multiple views onto a single CCD camera sensor11. However, the method was constrained by the absence of geometric information and the relatively low quality of the recordings. Bray et al. introduced the reconstruction of realistic epicardial geometry to visualize functional data from small rabbit hearts12. Kay et al. extended the technique to image functional data from larger swine hearts using four CCD cameras13. The Efimov laboratory has progressively developed several generations of panoramic imaging systems and introduced computational 2D-to-3D translation methods to quantify and characterize arrhythmia behavior14.

Over the last decade, panoramic imaging has been used to investigate wavefront and rotor dynamics during ventricular tachycardia and fibrillation15–18, approaches to low-voltage electrotherapy19,20, and drug-induced arrhythmia maintenance21. Additionally, the growing use of transgenic mouse models to investigate the initiation and maintenance of atrial fibrillation (AF) and ventricular fibrillation (VF)22–24 has created a need for panoramic setups that scale easily to small rodent hearts, such as those of the mouse and rat. Despite these accomplishments and a growing unmet need, the technical expertise required to design and implement a panoramic imaging system has made it largely inaccessible. We present here an open source toolkit (https://github.com/optocardiography) for building panoramic optical mapping systems capable of imaging small mammalian hearts; it includes instructions for 3D printing the experimental components and software for data acquisition, processing and analysis. Our aim is to provide a solution that a cardiovascular scientist without specialized computer science or engineering training can easily access and implement. The toolkit will be released under the MIT open source software license.

Results

System Requirements

Running the panoramic imaging software requires LabVIEW (version 14 or later), MATLAB (R2016b or later), and a MATLAB-compatible C compiler (Xcode 8.0 for Mac OS, MinGW 5.3 or Visual Studio 2010 or later for Microsoft Windows, gcc-4.9 for Linux). The recommended hardware is an Intel Core i5-2500K 3.3 GHz or comparable processor, an AMD Radeon 2 GB or comparable graphics card, at least 16 GB of memory (RAM), and at least 1 TB of disk space.

Software Architecture

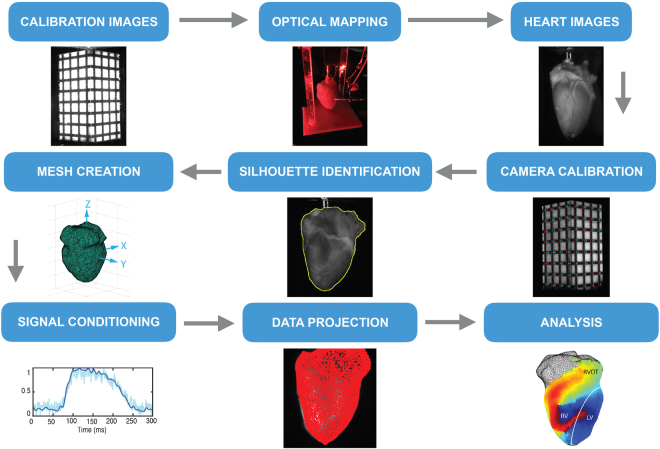

Figure 1 depicts the workflow of the RHYTHM toolkit, which includes open source software modules for geometry reconstruction, optical signal processing, and visualization. The software that rotates the heart after the optical mapping study is implemented in LabVIEW and has been tested on LabVIEW version 14.0. Graphical user interfaces (GUIs) were created in MATLAB for (1) camera calibration, (2) surface reconstruction, and (3) projection and analysis of the optical data. The layout of each GUI guides the user through the process of generating the desired output. Semi-automated routines have been implemented, where feasible, to make data processing faster and less labor intensive. A manual providing a detailed description of each GUI is included in the Data Supplement. Silhouette images collected while rotating the heart are saved as *.tiff files. After camera calibration, all files are saved in MATLAB’s proprietary *.mat file format. To provide readers with a foundation for 3D printing, the toolkit also includes design specifications for building a panoramic optical imaging system.

Figure 1.

RHYTHM panoramic imaging workflow. Producing panoramic data requires a detail-oriented, step-by-step process. RHYTHM provides a concise and intuitive set of tools for accomplishing this.

Camera Calibration. We established the camera calibrations before conducting the experiments. The low-cost geometry camera (UI-322XCP-M, IDS Imaging Development Systems, Obersulm, Germany) shared the same viewing window as optical mapping camera A. To preserve the calibrations of both cameras, the geometry camera was first calibrated from a bracketed position and then moved to the side. Optical mapping camera A was then calibrated and left in place for the duration of the experiment. During calibration, white LEDs (Joby Gorillatorch, DayMen US Inc, Petaluma, CA) were positioned at the illumination windows to increase the contrast between the cuboid and the calibration grid. These were later replaced by the red LEDs for the optical mapping study. Upon completion of the experiment, the geometry camera was returned to the bracketed position to collect the silhouette images (see Surface Generation).

The method for establishing pixel-to-geometry correspondence has been described in detail previously12–14. Briefly, we first solved for the unknown parameters of a perspective camera model25 by imaging an object of known dimensions with easily identifiable landmarks at known positions on its surface. For the rabbit heart we used a rectangular cuboid measuring 1 × 1 × 2 inches (L × W × H); smaller cuboids were used for mouse and rat hearts. A grid was placed on the outward-facing surfaces so that, with the cuboid positioned such that two faces were visible from each camera, each camera saw sixty-four grid intersections.

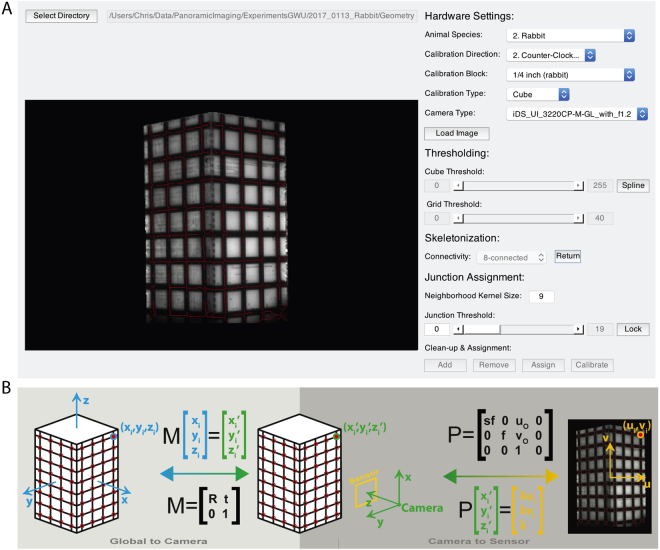

We created a graphical user interface (GUI) to ease the calibration process (Fig. 2A). In the ideal scenario (i.e., all intersections visible), an automated algorithm identifies and labels all intersections based on a user-created mask of the cuboid and its grid. Tools are provided that allow the user to correct poorly identified junctions prior to labeling. In the non-ideal scenario (i.e., not all intersections visible), the user is asked for the numbers of the visible junctions in the top-left and bottom-right corners, and all other visible junctions are then labeled automatically. Detailed descriptions of the algorithms used are included in the toolkit. A Levenberg-Marquardt nonlinear optimization algorithm takes the 2D junction locations from each camera and the corresponding 3D intersection coordinates and iteratively solves for the unknown extrinsic and intrinsic camera parameters, from which the transformation matrices connecting the two data sets are built.
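The Levenberg-Marquardt step can be illustrated with a minimal, self-contained sketch. It is not the toolkit's MATLAB code: to stay short it assumes a hypothetical simplified camera with identity rotation, the camera on the z axis, and only four unknowns (focal length `f`, image center `u0`, `v0`, and camera distance `tz`); all function names are illustrative. The residuals are the differences between projected and observed junction positions, and the damping factor `lam` is lowered after a successful step and raised after a rejected one.

```python
import math

# Hypothetical simplified camera: identity rotation, camera on the z axis,
# parameters p = (f, u0, v0, tz). The full model also has R, t and aspect ratio s.
def project(p, point):
    f, u0, v0, tz = p
    x, y, z = point
    depth = z + tz  # distance of the point in front of the camera
    return f * x / depth + u0, f * y / depth + v0

def residuals(p, points, observed):
    """Stacked (u, v) reprojection errors; None if a point falls behind the camera."""
    res = []
    for pt, (u_obs, v_obs) in zip(points, observed):
        if pt[2] + p[3] <= 0:
            return None
        u, v = project(p, pt)
        res.extend([u - u_obs, v - v_obs])
    return res

def cost(res):
    return math.inf if res is None else sum(r * r for r in res)

def jacobian(p, points, observed):
    """Central finite-difference Jacobian (rows: residuals, columns: parameters)."""
    cols = []
    for j in range(len(p)):
        h = 1e-6 * max(1.0, abs(p[j]))
        p_plus, p_minus = list(p), list(p)
        p_plus[j] += h
        p_minus[j] -= h
        r_plus = residuals(p_plus, points, observed)
        r_minus = residuals(p_minus, points, observed)
        cols.append([(a - b) / (2 * h) for a, b in zip(r_plus, r_minus)])
    return [list(row) for row in zip(*cols)]

def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [A[i][:] + [b[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def levenberg_marquardt(p, points, observed, iters=200, lam=1e-3):
    p = list(p)
    res = residuals(p, points, observed)
    for _ in range(iters):
        if cost(res) < 1e-18:
            break
        J = jacobian(p, points, observed)
        n = len(p)
        A = [[sum(row[i] * row[j] for row in J) for j in range(n)] for i in range(n)]
        g = [sum(row[i] * r for row, r in zip(J, res)) for i in range(n)]
        # Marquardt damping: (A + lam * diag(A)) delta = -g
        damped = [[A[i][j] * (1 + lam) if i == j else A[i][j] for j in range(n)]
                  for i in range(n)]
        delta = solve(damped, [-gi for gi in g])
        trial = [a + d for a, d in zip(p, delta)]
        trial_res = residuals(trial, points, observed)
        if cost(trial_res) < cost(res):
            p, res, lam = trial, trial_res, lam / 10  # accept: lean toward Gauss-Newton
        else:
            lam *= 10  # reject: lean toward gradient descent
    return p
```

In this sketch, noiseless synthetic junctions generated from known parameters should be recovered from a perturbed starting guess; with real junction picks the residual stays nonzero and the fitted parameters absorb the picking error.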

Figure 2.

Calibration of geometry and optical cameras. (A) A MATLAB graphical user interface was used to semi-automate the calibration of each camera needed to facilitate the projection of optical data onto the geometric surface. Detailed explanations and code are included in the toolkit. (B) The cuboid is used to create a global coordinate system whose origin is at the cuboid’s center. All grid junctions on the cuboid surface have known coordinates in the global coordinate system. Identification of these points in a 2D image provides the necessary number of known values to solve for the unknown components of the global-to-camera (i.e., transformation) and the camera-to-sensor (i.e., perspective projection) matrices.

The extrinsic camera parameters are the camera position (t) and rotation (R) with respect to the global coordinate system defined by the cuboid. These translation and rotation values make up the transformation matrix (M), as shown in Fig. 2B. The transformation from the camera coordinate system to the imaging plane, known as the perspective transformation (P), uses the intrinsic parameters: focal length (f), aspect ratio (s), and image center (u0, v0). The final component of the transformation corrects for lens distortion. Our setup exhibits little to no lens distortion; however, this component is still included in the calibration GUI and has been described in detail in previous work. The optimization is run for all four optical cameras and the geometry camera, providing each with a unique set of transformation matrices that are later used to project data onto the reconstructed geometry.
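The chain from world coordinates to pixels described above can be written out as a short sketch. It assumes a standard pinhole convention (camera coordinates `Xc = R X + t`, then `u = f*xc/zc + u0` and `v = f*s*yc/zc + v0`), which may differ in sign or axis conventions from the toolkit's own matrices, and it omits the lens-distortion term. The helper `rotation_about_y` is hypothetical and only builds one example camera pose.

```python
import math

def rotation_about_y(theta_deg):
    """Rotation matrix about the vertical (y) axis; hypothetical example pose."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def world_to_pixel(X, R, t, f, s, u0, v0):
    """Pinhole projection of a world point X; lens distortion is ignored."""
    # Extrinsic step (M): world -> camera coordinates, Xc = R X + t
    xc, yc, zc = (sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3))
    if zc <= 0:
        raise ValueError("point lies behind the camera")
    # Intrinsic step (P): perspective division, focal length f,
    # aspect ratio s and image center (u0, v0)
    return f * xc / zc + u0, f * s * yc / zc + v0

# Example: camera 10 units from the cuboid center, looking straight at it.
center_px = world_to_pixel((0, 0, 0), rotation_about_y(0), (0, 0, 10), 800, 1.0, 320, 240)
```

The cuboid center projects to the image center in this pose; each of the five cameras would have its own `R`, `t`, `f`, `s`, `u0` and `v0` from calibration.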

Surface Generation

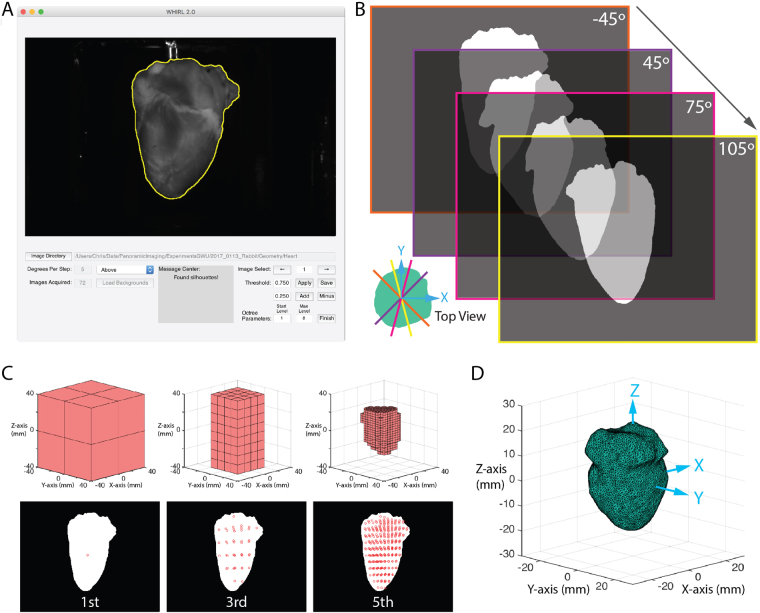

Upon completion of the optical mapping studies, we removed camera A and returned the geometry camera to its bracketed position. The LEDs nearest the geometry camera were replaced with white LEDs, and a backdrop was placed behind the heart to improve contrast. Using a custom-built LabVIEW program, we rotated the motorized rotational stage, to which the cannula was attached, through 360 degrees, collecting an image of the heart every five degrees. We then loaded these images into our custom-written MATLAB GUI (Fig. 3A), which facilitates identification of the heart silhouette in each image using a combination of thresholding and polyline tools. In the resulting binary silhouettes, heart pixels are represented as ones and background pixels as zeros (Fig. 3B).

Figure 3.

Geometric reconstruction. (A) A MATLAB graphical user interface was developed to facilitate accurate identification of the heart silhouettes needed for geometric reconstruction. A detailed description and the code are included in the toolkit. (B) The heart was rotated, and binary silhouette images were collected every 5 degrees. (C) Using the occluding contours method paired with an octree algorithm, voxels are iteratively subdivided to identify the heart volume. (D) The surface mesh is generated by MATLAB from this volume and smoothed by an external C function.

We then used the occluding contour method26,27, in conjunction with an octree algorithm, to efficiently identify the volume represented by the silhouettes, similar to previously described work13. Briefly, the octree divides a volume, slightly larger than the heart and centered at the world coordinate system origin, into eight constituent voxels (octants) arranged in a 2 × 2 × 2 block, as seen in the first iteration of Fig. 3C. The vertices of each voxel are projected onto each silhouette in succession, with the volume rotated five degrees between silhouettes. Voxels whose vertices all fall inside the heart (i.e., all assigned a one) or all fall outside the heart (i.e., all assigned a zero) are not subdivided further. Voxels that lie along the border (i.e., whose vertices project onto a mix of ones and zeros) are subdivided again using the octree. The process is repeated until the desired resolution is reached.
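A compact sketch of silhouette-based octree carving follows. Two simplifications are assumptions, not the toolkit's method: each view is projected orthographically after rotating about the vertical axis (instead of through the calibrated geometry-camera matrices), and the first `min_depth` levels are always subdivided, because a pure vertex test can discard a large voxel whose corners all project onto background even though the heart lies inside it. Each silhouette is a function returning `True` for heart pixels (the ones) and `False` for background (the zeros); all names are hypothetical.

```python
import math

def make_views(silhouettes, angles_deg):
    """Pair each binary silhouette test with the cos/sin of its rotation angle."""
    return [(sil, math.cos(math.radians(a)), math.sin(math.radians(a)))
            for sil, a in zip(silhouettes, angles_deg)]

def classify(center, half, views):
    """'out' if all 8 vertices are background in some view, 'in' if all are
    heart in every view, otherwise 'border'."""
    cx, cy, cz = center
    corners = [(cx + dx * half, cy + dy * half, cz + dz * half)
               for dx in (-1, 1) for dy in (-1, 1) for dz in (-1, 1)]
    all_inside = True
    for is_heart, c, s in views:
        # Rotate about the vertical (y) axis, then project orthographically onto (x, y)
        flags = [is_heart(x * c + z * s, y) for x, y, z in corners]
        if not any(flags):
            return "out"
        if not all(flags):
            all_inside = False
    return "in" if all_inside else "border"

def carve(center, half, views, max_depth, min_depth=2, depth=0, out=None):
    """Octree carving: keep 'in' voxels, drop 'out' voxels, subdivide 'border' voxels."""
    if out is None:
        out = {"in": [], "border": []}
    if depth >= min(min_depth, max_depth):  # coarser levels are always split
        label = classify(center, half, views)
        if label == "out":
            return out
        if label == "in" or depth == max_depth:
            out[label].append((center, half))
            return out
    h = half / 2
    for dx in (-1, 1):
        for dy in (-1, 1):
            for dz in (-1, 1):
                child = (center[0] + dx * h, center[1] + dy * h, center[2] + dz * h)
                carve(child, h, views, max_depth, min_depth, depth + 1, out)
    return out

# Example with a unit sphere as a stand-in heart, one silhouette every 5 degrees.
disk = lambda u, v: u * u + v * v <= 1.0
angles = [5 * k for k in range(72)]
vox = carve((0.0, 0.0, 0.0), 1.2, make_views([disk] * len(angles), angles), max_depth=4)
inner = sum((2 * h) ** 3 for _, h in vox["in"])
total = inner + sum((2 * h) ** 3 for _, h in vox["border"])
```

For the sphere example, the summed volume of the "in" voxels should fall below the sphere's volume and the "in" plus "border" voxels should exceed it, so the two sums bracket the true volume and tighten as `max_depth` grows.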

Once this was accomplished, the resulting volume was used to generate a triangulated surface mesh in MATLAB in combination with a smoothing algorithm28 implemented in C29. The result is a triangular mesh (Fig. 3D) representative of the epicardium with Ncells cells (i.e. triangles) and Nverts vertices.
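The cited smoothing algorithm is not reproduced here. As a stand-in, the sketch below applies basic Laplacian (umbrella-operator) smoothing, which moves each vertex a fraction `lam` toward the mean of its mesh neighbours; the function names and default parameters are hypothetical.

```python
def vertex_neighbours(n_verts, triangles):
    """Adjacency sets built from triangle connectivity."""
    nbrs = [set() for _ in range(n_verts)]
    for a, b, c in triangles:
        nbrs[a].update((b, c))
        nbrs[b].update((a, c))
        nbrs[c].update((a, b))
    return nbrs

def laplacian_smooth(verts, triangles, lam=0.5, iterations=10):
    """Move each vertex a fraction lam toward the mean of its neighbours."""
    nbrs = vertex_neighbours(len(verts), triangles)
    v = [list(map(float, p)) for p in verts]
    for _ in range(iterations):
        new_v = []
        for i, p in enumerate(v):
            if not nbrs[i]:
                new_v.append(p[:])  # isolated vertex: leave untouched
                continue
            avg = [sum(v[j][k] for j in nbrs[i]) / len(nbrs[i]) for k in range(3)]
            new_v.append([p[k] + lam * (avg[k] - p[k]) for k in range(3)])
        v = new_v  # Jacobi-style update: every vertex uses the previous iterate
    return v
```

Plain Laplacian smoothing gradually shrinks a closed surface; alternating positive and negative steps (Taubin's λ|μ smoothing) is a common way to limit that shrinkage.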

Projection and Visualization

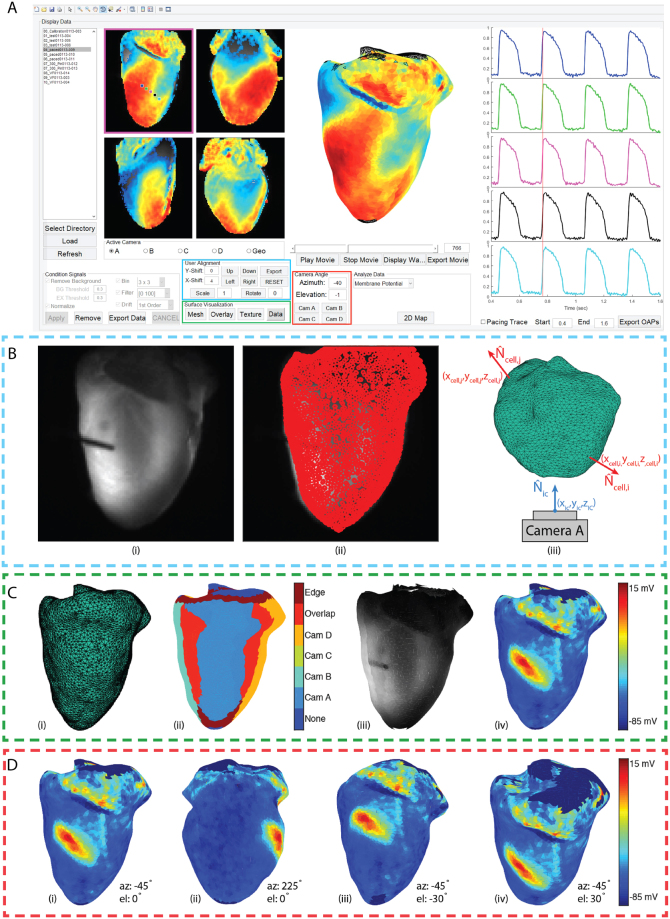

Projection of the data onto the heart surface by texture mapping30, and subsequent analysis, were facilitated by a final GUI (Fig. 4A) that builds on our previously published work on the analysis of single-camera optical mapping signals31. We first loaded a complete data set and removed background pixels lacking physiological data. We then normalized the optical action potentials, performed spatial filtering by binning with a 3 × 3 box-shaped averaging kernel, applied a 100th-order FIR temporal filter with a passband of 0–100 Hz (i.e., a low-pass filter), and performed first-order drift removal as necessary. We have also included a polyline tool to facilitate the removal of undesired regions (e.g., the atria when the focus of the study is the ventricles). The final step before projection is the creation of an image mask. Because surface curvature at the edge of each image decreases the signal-to-noise ratio (SNR) and introduces artifacts32, a weighted gradient was created for each mask, with center pixels assigned a value of 1 and the outermost edge pixels assigned a value of 0.5.
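The conditioning steps above can be sketched for a single pixel trace and a single frame in plain Python. The FIR design (a Hamming-windowed sinc), the 1000 Hz sampling rate used in the example, the edge handling, and the least-squares line used for first-order drift removal are all assumptions; the text specifies only the filter order, the 0–100 Hz passband, the 3 × 3 box kernel, and first-order drift removal. Note that this drift removal also subtracts the trace mean.

```python
import math

def normalize(trace):
    """Scale an optical action potential to the range [0, 1]."""
    lo, hi = min(trace), max(trace)
    span = hi - lo
    return [(x - lo) / span if span else 0.0 for x in trace]

def remove_linear_drift(trace):
    """First-order drift removal: subtract the least-squares straight line."""
    n = len(trace)
    t_mean = (n - 1) / 2
    y_mean = sum(trace) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(trace))
    slope = sxy / sxx
    return [y - (y_mean + slope * (t - t_mean)) for t, y in enumerate(trace)]

def box_bin_3x3(frame):
    """3 x 3 box average of one frame; edge pixels average their valid neighbours."""
    rows, cols = len(frame), len(frame[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            vals = [frame[rr][cc]
                    for rr in range(max(0, r - 1), min(rows, r + 2))
                    for cc in range(max(0, c - 1), min(cols, c + 2))]
            out[r][c] = sum(vals) / len(vals)
    return out

def fir_lowpass(order, cutoff_hz, fs_hz):
    """Hamming-windowed sinc low-pass with order + 1 taps (order must be even)."""
    fc = cutoff_hz / fs_hz
    taps = []
    for n in range(order + 1):
        k = n - order / 2
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        taps.append(ideal * (0.54 - 0.46 * math.cos(2 * math.pi * n / order)))
    gain = sum(taps)
    return [t / gain for t in taps]  # unity gain at 0 Hz

def apply_fir(trace, taps):
    """Zero-delay filtering with a symmetric FIR; the signal is edge-clamped."""
    half = (len(taps) - 1) // 2
    n = len(trace)
    return [sum(h * trace[min(max(i + half - j, 0), n - 1)] for j, h in enumerate(taps))
            for i in range(n)]

# Example: a 100th-order, 100 Hz low-pass for a hypothetical 1000 Hz frame rate.
taps = fir_lowpass(100, 100.0, 1000.0)
```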

Figure 4.

Data processing and projection. (A) A final MATLAB graphical user interface was created to facilitate the processing of optical data and its projection onto the representative geometry. (B) Projection is accomplished by projecting the face centroids of the triangulated mesh onto the optical mapping cameras. Tools are provided to correct minor inaccuracies in projection. Camera-facing centroids are first identified as those whose normals (Ncell) create an angle greater than 90° with the camera normal (Nic). (C) The surface can then be visualized as a triangulated mesh (i), a map of the camera assignments made to each centroid (ii), texture (iii), and membrane potential (iv). (D) Once correspondence has been established, the geometry appears with membrane potential mapped onto the surface. Tools are provided to rotate the heart by click-and-drag, to jump to the view from each camera, and to set specific viewing angles using azimuth and elevation.

Once the data are conditioned and masked, the centroids of the triangles on the mesh are projected onto each optical mapping camera using the transformation matrices derived from the camera calibrations described above (Fig. 4B(i),B(ii)). Assignments are made using the methodology of Kay et al.13. Briefly, for each camera the angle between the camera view (Nic) and each mesh cell normal (Ncell) is calculated (Fig. 4B(iii)). Minor inaccuracies in projection can be corrected using the User Alignment tools in the GUI. All centroids with angles less than 90° are excluded from assignment to that camera. Centroids with angles greater than 115° are assigned the signal from the closest pixel of that camera (e.g., Cam A, B, C, or D). Centroids assigned to multiple cameras are considered overlap pixels; their signals are calculated as a weighted average of the two assignments using the weighted gradient mask described earlier. Finally, the outermost edge cells, with angles between 90° and 115°, are assigned the edge identifier, and their signals are calculated as an edge-weighted average.
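The angle-based assignment rule can be expressed directly. This sketch assumes the camera normal `Nic` points from the camera toward the heart, so a camera-facing cell normal makes an angle above 90° with it, and it treats overlap and edge samples alike as weighted averages using the mask weights, since the exact edge weighting is not specified here. Names such as `fuse` are hypothetical.

```python
import math

def angle_deg(a, b):
    """Angle between two 3D vectors, in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))

def classify_cell(cell_normal, camera_normal):
    """Rule from the text: < 90 deg excluded, > 115 deg assigned, otherwise edge."""
    ang = angle_deg(cell_normal, camera_normal)
    if ang < 90.0:
        return "excluded"
    if ang > 115.0:
        return "assigned"
    return "edge"

def fuse(cell_normal, observations):
    """Combine per-camera samples for one mesh cell.

    observations: list of (camera_normal, pixel_value, mask_weight), where
    mask_weight comes from the 1.0 (center) to 0.5 (edge) gradient mask.
    """
    assigned, edge = [], []
    for cam_normal, value, weight in observations:
        label = classify_cell(cell_normal, cam_normal)
        if label == "assigned":
            assigned.append((value, weight))
        elif label == "edge":
            edge.append((value, weight))
    pool = assigned or edge  # use edge samples only if no camera assigns the cell
    if not pool:
        return None  # cell not seen by any camera
    return sum(v * w for v, w in pool) / sum(w for _, w in pool)
```

A cell seen by two cameras thus gets a mask-weighted average, so the camera viewing it closer to its image center dominates.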

Once projected, the geometry surface visualization tools (Fig. 4A) can be used to view the 3D surface as an untextured triangulated mesh (Fig. 4C(i)), an assignment map (Fig. 4C(ii)), an anatomical texture map (Fig. 4C(iii)), or a texture map of raw fluorescence (Fig. 4C(iv)). The axes in which the 3D surface is displayed are configured automatically so that the user can rotate the model. Azimuth and elevation angles in the Camera Angle toolbar update automatically as the user rotates the heart, or they can be entered manually (Fig. 4D(i–iv)). Additionally, buttons take the user directly to the view from each of the optical mapping cameras.

Software Validation

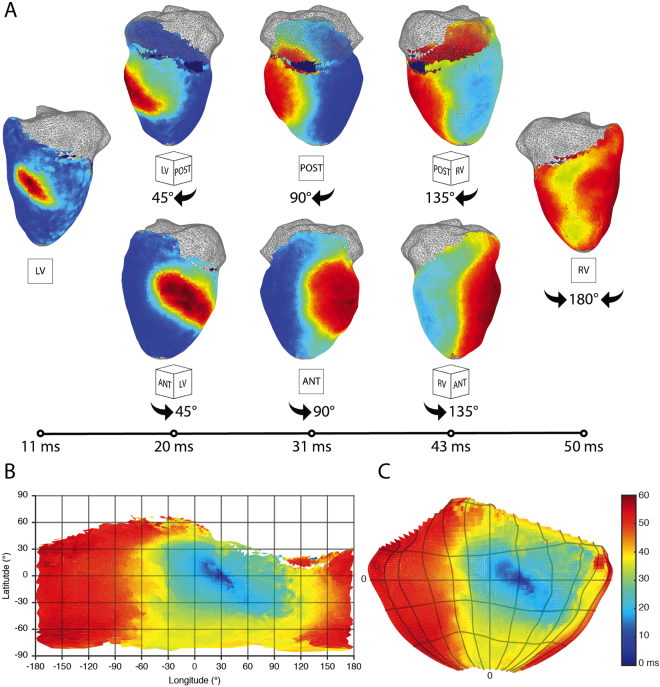

Figure 5 shows the panoramically mapped spread of electrical activity in a rabbit heart. The paced beat originates in the left ventricular free wall and spreads toward both the anterior and posterior sides before the wavefronts collide on the right ventricular free wall (Fig. 5A). The corresponding activation can be visualized using a flat Mercator projection (Fig. 5B) or a Hammer projection (Fig. 5C), which is equal-area and therefore preserves area ratios.
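The two map projections can be written as short functions of latitude and longitude. The mapping from mesh vertices to latitude and longitude (`vertex_to_lat_lon`, with the long axis of the heart along y) is a hypothetical convention, and the Mercator latitude clamp at 85° is an assumption needed because the projection diverges at the poles. The Hammer formulas are the standard equal-area ones.

```python
import math

def vertex_to_lat_lon(x, y, z):
    """Hypothetical convention: long axis along y, longitude measured about it."""
    lon = math.atan2(x, z)
    lat = math.atan2(y, math.hypot(x, z))
    return lat, lon

def mercator(lat, lon, max_lat=math.radians(85)):
    """Conformal cylindrical projection; latitude is clamped near the poles."""
    lat = max(-max_lat, min(max_lat, lat))
    return lon, math.log(math.tan(math.pi / 4 + lat / 2))

def hammer(lat, lon):
    """Equal-area Hammer projection: relative areas on the surface are preserved."""
    d = math.sqrt(1 + math.cos(lat) * math.cos(lon / 2))
    x = 2 * math.sqrt(2) * math.cos(lat) * math.sin(lon / 2) / d
    y = math.sqrt(2) * math.sin(lat) / d
    return x, y
```

Mercator keeps local angles (useful for judging wavefront direction) but inflates areas toward the base and apex, whereas Hammer keeps areas at the cost of shape distortion near the map edges.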

Figure 5.

Activation in representative rabbit panoramic optical data. (A) The time course of pacing activation is tracked using membrane potential. A paced beat starts on the left ventricular free wall and the divergent wavefronts are tracked over time around both the posterior and anterior sides to where they meet on the right ventricular free wall. Wavefronts are uninterrupted by artifact or discontinuity. (B) On the left, a 2D Mercator projection of the isochronal activation map generated using the processing GUI. On the right, a 2D Hammer projection whose latitude and longitude divisions are the same as seen in the Mercator projection.

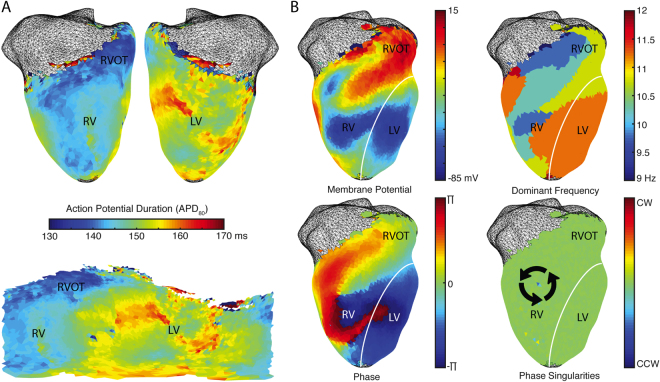

Figure 6 shows action potential duration maps (Fig. 6A). Ventricular fibrillation was induced by burst pacing after pharmacological shortening of action potential duration with pinacidil (see Optical Mapping) and mapped for subsequent analysis, including dominant frequency calculation and the generation of phase and phase singularity maps (Fig. 6B).
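The arrhythmia analyses named above can be sketched per pixel trace. Here the dominant frequency is taken as the largest peak of a direct DFT power spectrum (excluding DC), phase is computed by time-delay embedding, and a phase singularity is detected as a nonzero net phase winding around a closed loop of pixels. These are common choices in the optical mapping literature but are assumptions about, not a description of, the toolkit's implementation; the delay `tau` and the frequency limit `max_hz` are user parameters.

```python
import math

def dominant_frequency(trace, fs_hz, max_hz=None):
    """Frequency (Hz) of the largest DFT power peak, excluding DC."""
    n = len(trace)
    mean = sum(trace) / n
    x = [v - mean for v in trace]
    k_max = n // 2 if max_hz is None else min(n // 2, int(max_hz * n / fs_hz))
    best_k, best_power = 0, -1.0
    for k in range(1, k_max + 1):
        w = 2 * math.pi * k / n
        re = sum(v * math.cos(w * i) for i, v in enumerate(x))
        im = sum(v * math.sin(w * i) for i, v in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_k, best_power = k, power
    return best_k * fs_hz / n

def delay_phase(trace, tau):
    """Phase from time-delay embedding: atan2(V(t + tau) - mean, V(t) - mean)."""
    mean = sum(trace) / len(trace)
    return [math.atan2(trace[i + tau] - mean, trace[i] - mean)
            for i in range(len(trace) - tau)]

def wrap(a):
    """Wrap an angle to [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi

def topological_charge(loop_phases):
    """Net phase winding around a closed pixel loop; nonzero marks a singularity."""
    n = len(loop_phases)
    total = sum(wrap(loop_phases[(i + 1) % n] - loop_phases[i]) for i in range(n))
    return round(total / (2 * math.pi))
```

For full maps, `topological_charge` would be evaluated on the phase values of small pixel loops (for example, each 2 × 2 block) at every time point.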

Figure 6.

Repolarization and arrhythmia analysis in representative rabbit data. (A) Action potential duration can be calculated using projected data and unwrapped into a Mercator 2D representation. (B) Arrhythmias like ventricular fibrillation can be analyzed using calculations of dominant frequency, phase, and phase singularities.

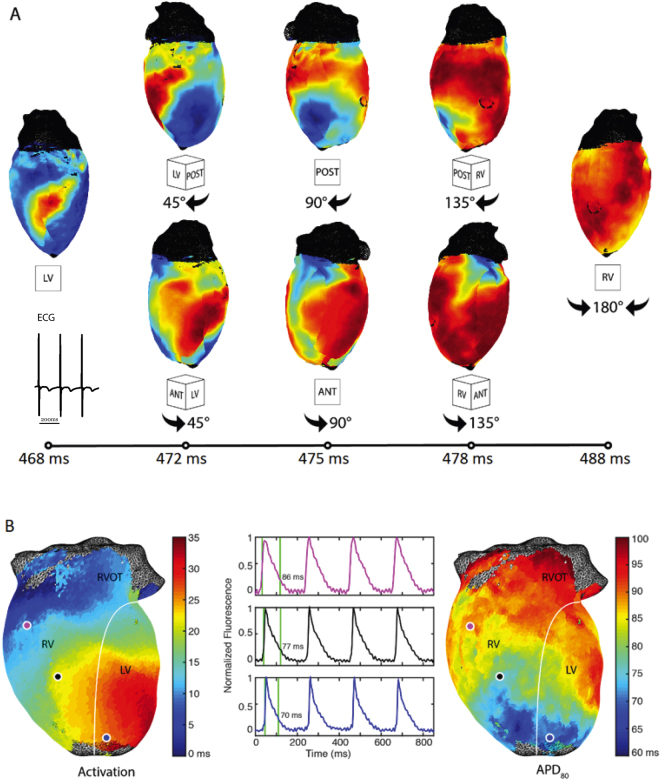

Figure 7 depicts the panoramically mapped propagation wave in a rat heart. The wavefronts of a paced beat are similarly tracked over time around both the posterior and anterior sides to where they meet on the right ventricular free wall (Fig. 7A). Activation and action potential duration maps, together with sample optical action potentials, are also shown (Fig. 7B).

Figure 7.

Representative rat panoramic optical data. (A) The time course of pacing activation is tracked using membrane potential. (B) Activation and the corresponding action potential duration (APD80) maps are seen along with representative action potentials.
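An APD80 measurement like the one mapped in Fig. 7B can be sketched for one trace. The sketch assumes activation at the sample of maximum upstroke slope (dV/dt), takes the baseline as the trace minimum, and linearly interpolates the 80% repolarization crossing; these definitions are assumptions, and the toolkit may use different ones.

```python
def activation_index(trace):
    """Index of the maximum upstroke slope dV/dt (a common activation marker)."""
    return max(range(1, len(trace)), key=lambda i: trace[i] - trace[i - 1])

def apd(trace, dt_ms, repol_fraction=0.8):
    """Action potential duration at the given repolarization fraction (0.8 -> APD80)."""
    act = activation_index(trace)
    peak_i = max(range(len(trace)), key=lambda i: trace[i])
    base, peak = min(trace), trace[peak_i]
    thr = peak - repol_fraction * (peak - base)
    for i in range(peak_i + 1, len(trace)):
        if trace[i] <= thr:
            prev = trace[i - 1]
            # Linear interpolation between the two samples bracketing the threshold
            frac = (prev - thr) / (prev - trace[i]) if prev != trace[i] else 0.0
            return (i - 1 + frac - act) * dt_ms
    return None  # did not repolarize within the recording
```

Applied pixel by pixel, the activation index gives an activation map and `apd` an APD80 map such as those in Fig. 7B.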

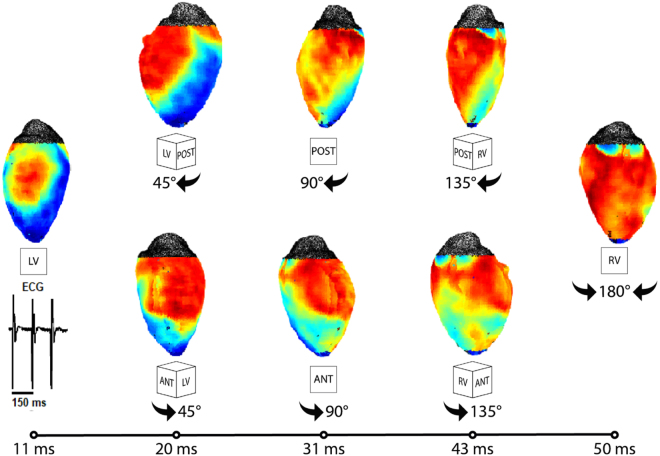

Figure 8 likewise captures the propagation wave in a panoramically imaged mouse heart.

Figure 8.

Representative mouse panoramic optical data. (A) The time course of pacing activation is tracked using membrane potential.

Discussion

In this study, we developed an open source panoramic optical imaging system suitable for whole-heart imaging of small mammalian hearts. Although panoramic imaging was developed over a decade ago, few physiological studies have been performed with it, and only by the groups that developed panoramic methodologies. We believe this is in large part because the technology has always required significant engineering experience, which has acted as a barrier to entry for many in the cardiac physiology community. There has been a recent effort to present a low-cost panoramic imaging system33. While it addresses the issue of cost, it still falls short of providing publicly available and curated software for the acquisition, processing, and analysis of both anatomical and optical data acquired from the complex 3D surface of the heart. Another contributing factor has been the explosion of transgenic small rodent models of atrial and ventricular arrhythmias, whose hearts could not, until now, be panoramically imaged. Our open source system simplifies data acquisition, processing, and analysis, making them more accessible to those without engineering expertise. It also enables imaging of small hearts (e.g., mouse and rat), opening the door to a host of relevant but incompletely understood transgenic models.

Methods

Study subjects included rabbits, rats and mice. All procedures were approved by the George Washington University’s Animal Care and Use Committee and conformed to the Guide for the Care and Use of Laboratory Animals (NIH Pub. No. 85–23, Revised 1996).

Experimental Setup

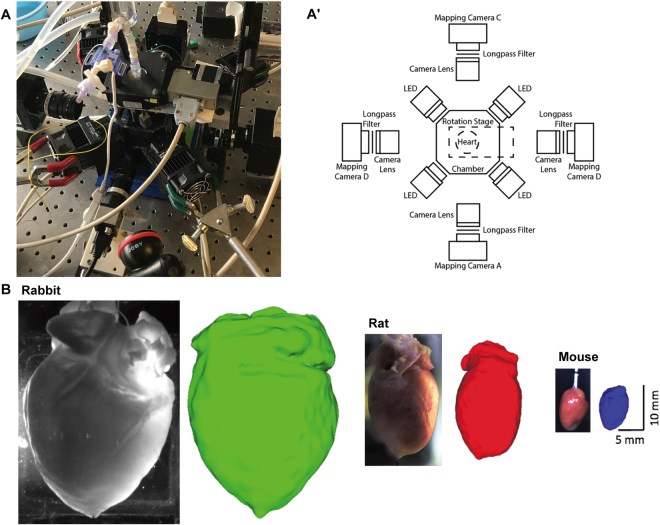

Previous optical mapping systems have been described in detail3,4,34; here we limit our description to the characteristics unique to this open source panoramic system. Our panoramic imaging setup (Fig. 9A,A’) consists of four CMOS Ultima cameras attached to a MiCAM05 acquisition system (SciMedia Ltd, Costa Mesa, CA). The cameras are mounted on magnetic bases, and each is outfitted with a 35 mm C-mount lens (MLV35M1, Thorlabs, Newton, NJ) and a 655 nm long-pass filter (ET655lp, Chroma Technology Corporation, Bellows Falls, VT). Four 630 nm red LEDs (UHP-T-LED-630, Prizmatix Ltd, Givat-Shmuel, Israel) are positioned at 45° angles between the cameras. The perfusion system feeds a cannula mounted on a rotational stage (URS50, Newport Corporation, Irvine, CA), which is powered and rotated using a custom LabVIEW interface, an Arduino, and a stepper driver. A parts list, code, and assembly instructions are included in the Data Supplement.

Figure 9.

Panoramic optical mapping system. (A) Picture and schematic of the optical mapping system. In addition to increasing the number of optical cameras, we designed and 3D printed a number of experimental components, including the perfusion chamber. (B) Representative rabbit, rat and mouse hearts and the corresponding reconstructed geometries.

Recently, multiple laboratories have published efforts to use 3D printing to customize their systems economically and independently to specific experimental needs35,36. Here we present several potential applications of 3D printing in customizing a panoramic imaging system. We used a hanging Langendorff-perfused heart in a 3D printed superfusion chamber (Fig. 9A’). The tissue chamber was designed to maximize access to the heart for the cameras and LEDs while minimizing its volume, which reduces the quantity of drug required to reach the desired concentrations. Because we use a superfusion chamber, the heart must be rotated in front of the camera, rather than the camera around the heart, when collecting images for geometric reconstruction. To prevent the perfusion tubing from becoming tangled, we designed and 3D printed a cannula mount that attaches to the rotating face of the rotational stage directly above the heart, and a bubble trap mount positioned on the opposite side of the stage. The bubble trap and the cannula are connected by a female-to-female Luer lock connector with an O-ring and swivel that allows the two ends of the connector to spin independently of one another, thus preventing entanglement. Additionally, a platform was designed and printed to anchor the cardiac apex without obscuring the field of view of the cameras or LEDs. Items not shown include camera mounts, positioning brackets, and a backdrop for increasing image contrast during rotation of the heart. Images, design specifications, and files for all components are included in the Data Supplement.

All components were designed using either free (e.g., Autodesk Student Version, Autodesk Inc, San Rafael, CA) or heavily discounted (e.g., SketchUp Pro Academic License, Trimble Inc, Sunnyvale, CA) CAD software packages. The completed designs were exported to the stereolithography format (*.stl), and a printer path was created using either the Stratasys Insight 10.8 or the Stratasys Control Center 10.8 software package. Printing was done on a Stratasys Fortus 250mc and a Stratasys uPrint SE Plus (Stratasys, Eden Prairie, MN) using acrylonitrile butadiene styrene (ABS) plastic. Print times ranged from 2 hours for the smallest components to over 30 hours for the large chamber. Sophisticated 3D printers can themselves be cost prohibitive, but 3D printing can be outsourced to online commercial printing services at low cost. Similar designs can also be produced on entry-level 3D printers, but different design approaches must be used to compensate for their general lack of dissolvable support materials.

The design of this panoramic imaging toolkit, in particular the use of 3D printed components, makes it easily scalable for hearts of varying sizes (Fig. 9B). To demonstrate this, we conducted optical imaging studies in rabbit (left), rat (middle) and mouse (right). Typically, the rat heart (2.1 cm external length, 1.2 cm external width) is half the size of a rabbit heart, whereas the mouse heart (1 cm length, 0.4 cm width) is ¼ the size of a rabbit heart.

Optical Mapping

The rabbits were initially anesthetized with a ketamine and xylazine cocktail. We administered a dose of heparin and deepened anesthesia with isoflurane. Once the reflex test failed to elicit a response, we excised the heart via a sternal thoracotomy and immediately cannulated the aorta on a secondary Langendorff perfusion setup. We allowed 3–5 minutes for the beating heart to clear blood from the coronary circulation and cardiac cavities before transferring it to the panoramic perfusion system and lowering it into the tissue chamber. Once in the chamber, the heart was allowed 10 minutes to recover from explantation and cannulation. After the recovery period, we added the excitation-contraction uncoupler blebbistatin (Sigma-Aldrich, St. Louis, MO) to the perfusate, reaching a final concentration of 10 μM by slowly injecting blebbistatin into a drug port just upstream of the cannula bubble trap. Once contraction was suppressed, we added the voltage-sensitive dye di-4-ANBDQBS (Loew Laboratory, University of Connecticut, Storrs, CT) to the bubble trap to a final concentration of 35 μM before beginning the experimental protocol.
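Reaching a target perfusate concentration is a simple mass balance. The sketch assumes a fixed, well-mixed volume `perfusate_ml` receiving a stock of concentration `stock_um`; the same relation holds with flow rates (infusion rate versus perfusion flow) for continuous injection into a single-pass line. The function name and any stock concentrations or volumes used with it are hypothetical, not values from this study.

```python
def stock_volume_ml(final_um, perfusate_ml, stock_um):
    """Stock volume v that brings perfusate_ml to final_um.

    Solves final_um = stock_um * v / (perfusate_ml + v) for v.
    """
    if stock_um <= final_um:
        raise ValueError("stock must be more concentrated than the target")
    return final_um * perfusate_ml / (stock_um - final_um)
```

For example, with a hypothetical 10 mM (10000 μM) stock and 999 mL of perfusate, reaching 10 μM requires 1 mL of stock.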

We first conducted a standard S1S1 pacing restitution protocol at decreasing pacing cycle lengths (PCL) of 300, 270, 240, 210, 180, and 150 ms. We then administered 30 μM of the ATP-sensitive potassium (KATP) channel opener pinacidil (Sigma-Aldrich, St. Louis, MO) to shorten action potential duration and thus create a substrate that could sustain reentrant ventricular arrhythmia. After allowing 10 minutes for the drug to take full effect, we induced VF with a 1-second burst of 60 Hz pacing. Initiation and maintenance of the arrhythmia were panoramically mapped at multiple time points.
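The stimulation timing of the protocols above can be generated as lists of stimulus times. The number of beats per pacing cycle length (`beats_per_pcl`) is not stated in the text and is a hypothetical parameter; the 60 Hz, 1 s burst corresponds to 60 stimuli about 16.7 ms apart.

```python
def s1s1_schedule(pcls_ms, beats_per_pcl):
    """Stimulus times (ms) for a stepwise S1S1 protocol; beats_per_pcl is hypothetical."""
    times, t = [], 0.0
    for pcl in pcls_ms:
        for _ in range(beats_per_pcl):
            times.append(t)
            t += pcl
    return times

def burst_times(freq_hz, duration_s):
    """Stimulus times (ms) for burst pacing at freq_hz lasting duration_s."""
    period_ms = 1000.0 / freq_hz
    return [i * period_ms for i in range(int(round(freq_hz * duration_s)))]

restitution = s1s1_schedule([300, 270, 240, 210, 180, 150], beats_per_pcl=20)
burst = burst_times(60, 1.0)
```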

The rat and mouse studies followed a Langendorff perfusion setup and pacing restitution protocol similar to those described for the rabbit study.

Electronic supplementary material

Acknowledgements

We gratefully acknowledge the generous support of the National Institutes of Health (grants R01 HL115415 and R01 HL126802 to IRE and R01 HL095828 to MWK) and the Leducq Foundation (project RHYTHM) to IRE and NRF; Russian Science Foundation grant 14-31-00024 to RAP; and Russian Foundation for Basic Research grant 18-07-01480 to RAS. We also gratefully acknowledge the contributions of Brianna Cathey and Sofian Obaid to the 3D design and printing of the optical system’s components. In addition, we acknowledge Baichen Li’s contribution to optimizing the electrical components of the panoramic setup.

Author Contributions

I.R.E. and M.W.K. conceived the experiments and provided the intellectual input. C.G. designed and constructed the experimental setup. H.Z., J.R., and C.G. developed the rotational stage controller system. C.G., K.A., and N.R.F. designed and conducted the rabbit studies. K.A. and N.R.F. designed and conducted the rat and mouse studies. C.G., M.W.K., and J.R. wrote the first generation of the panoramic toolkit software. K.A., R.A.S., R.A.P., and S.G. wrote the second generation of the toolkit software. C.G., K.A., and N.R.F. analyzed the results. S.G. wrote the software documentation. C.G., K.A. and I.R.E. wrote the manuscript. R.A.P. developed the heart surface generation algorithm. All authors reviewed the manuscript.

Competing Interests

The authors declare no competing interests.

Footnotes


Supplementary information accompanies this paper at 10.1038/s41598-018-21333-w.

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Boukens BJ, Efimov IR. A Century of Optocardiography. IEEE Reviews in Biomedical Engineering. 2014;7:115–125. doi: 10.1109/RBME.2013.2286296.

- 2. Boukens BJ, Gutbrod SR, Efimov IR. Imaging of Ventricular Fibrillation and Defibrillation: The Virtual Electrode Hypothesis. Advances in Experimental Medicine and Biology. 2015;859:343–365. doi: 10.1007/978-3-319-17641-3_14.

- 3. Salama G, Lombardi R, Elson J. Maps of optical action potentials and NADH fluorescence in intact working hearts. American Journal of Physiology. 1987;252:H384–H394. doi: 10.1152/ajpheart.1987.252.2.H384.

- 4. Efimov IR, Nikolski VP, Salama G. Optical Imaging of the Heart. Circulation Research. 2004;95:21–33. doi: 10.1161/01.RES.0000130529.18016.35.

- 5. Efimov IR, Huang DT, Rendt JM, Salama G. Optical mapping of repolarization and refractoriness from intact hearts. Circulation. 1994;90:1469–1480. doi: 10.1161/01.CIR.90.3.1469.

- 6. Kay M, Swift L, Martell B, Arutunyan A, Sarvazyan N. Locations of ectopic beats coincide with spatial gradients of NADH in a regional model of low-flow reperfusion. American Journal of Physiology – Heart and Circulatory Physiology. 2008;294:H2400–H2405. doi: 10.1152/ajpheart.01158.2007.

- 7. Sulkin MS, et al. Mitochondrial depolarization and electrophysiological changes during ischemia in the rabbit and human heart. American Journal of Physiology – Heart and Circulatory Physiology. 2014;307:H1178–H1186. doi: 10.1152/ajpheart.00437.2014.

- 8.Jaimes R, III, et al. A Technical Review of Optical Mapping of Intracellular Calcium within Myocardial Tissue. American Journal of Physiology – Heart and Circulatory Physiology. 2016;310:H1388–H1401. doi: 10.1152/ajpheart.00665.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Davidenko JM, Pertsov AV, Salomonsz R, Baxter W, Jalife J. Stationary and drifting spiral waves of excitation in isolated cardiac muscle. Nature. 1992;355:349–351. doi: 10.1038/355349a0.

- 10. Rogers JM, Huang J, Smith WM, Ideker RE. Incidence, evolution, and spatial distribution of functional reentry during ventricular fibrillation in pigs. Circulation Research. 1999;84:945–954. doi: 10.1161/01.RES.84.8.945.

- 11. Lin S-F, Wikswo JP. Panoramic Optical Imaging of Electrical Propagation in Isolated Heart. Journal of Biomedical Optics. 1999;4:200–207. doi: 10.1117/1.429910.

- 12. Bray MA, Lin SF, Wikswo JP. Three-dimensional surface reconstruction and fluorescent visualization of cardiac activation. IEEE Transactions on Biomedical Engineering. 2000;47:1382–1391. doi: 10.1109/10.871412.

- 13. Kay M, Amison P, Rogers J. Three-Dimensional Surface Reconstruction and Panoramic Optical Mapping of Large Hearts. IEEE Transactions on Biomedical Engineering. 2004;51:1219–1229. doi: 10.1109/TBME.2004.827261.

- 14. Qu F, Ripplinger CM, Nikolski VP, Grimm C, Efimov IR. Three-dimensional panoramic imaging of cardiac arrhythmias in rabbit heart. Journal of Biomedical Optics. 2007;12:044019. doi: 10.1117/1.2753748.

- 15. Kay MW, Walcott GP, Gladden JD, Melnick SB, Rogers JM. Lifetimes of epicardial rotors in panoramic optical maps of fibrillating swine ventricles. American Journal of Physiology: Heart and Circulatory Physiology. 2006;291:H1935–H1941. doi: 10.1152/ajpheart.00276.2006.

- 16. Rogers JM, Walcott GP, Gladden JD, Melnick SB, Kay MW. Panoramic optical mapping reveals continuous epicardial reentry during ventricular fibrillation in the isolated swine heart. Biophysical Journal. 2007;92:1090–1095. doi: 10.1529/biophysj.106.092098.

- 17. Rogers JM, et al. Epicardial wavefronts arise from widely distributed transient sources during ventricular fibrillation in the isolated swine heart. New Journal of Physics. 2008;10:1–14. doi: 10.1088/1367-2630/10/1/015004.

- 18. Bourgeois EB, Reeves HD, Walcott GP, Rogers JM. Panoramic optical mapping shows wavebreak at a consistent anatomical site at the onset of ventricular fibrillation. Cardiovascular Research. 2012;93:272–279. doi: 10.1093/cvr/cvr327.

- 19. Ripplinger CM, Lou Q, Li W, Hadley J, Efimov IR. Panoramic imaging reveals basic mechanisms of induction and termination of ventricular tachycardia in rabbit heart with chronic infarction: implications for low-voltage cardioversion. Heart Rhythm: Official Journal of the Heart and Rhythm Society. 2009;6:87–97. doi: 10.1016/j.hrthm.2008.09.019.

- 20. Li W, Ripplinger CM, Lou Q, Efimov IR. Multiple monophasic shocks improve electrotherapy of ventricular tachycardia in a rabbit model of chronic infarction. Heart Rhythm: Official Journal of the Heart and Rhythm Society. 2009;6:1020–1027. doi: 10.1016/j.hrthm.2009.03.015.

- 21. Lou Q, Li W, Efimov IR. The role of dynamic instability and wavelength in arrhythmia maintenance as revealed by panoramic imaging with blebbistatin vs. 2,3-butanedione monoxime. American Journal of Physiology: Heart and Circulatory Physiology. 2012;302:H262–H269. doi: 10.1152/ajpheart.00711.2011.

- 22. Levin MD, et al. Melanocyte-like cells in the heart and pulmonary veins contribute to atrial arrhythmia triggers. Journal of Clinical Investigation. 2009;119:3420–3436. doi: 10.1172/JCI39109.

- 23. Wan E, et al. Aberrant sodium influx causes cardiomyopathy and atrial fibrillation in mice. Journal of Clinical Investigation. 2016;126:112–122. doi: 10.1172/JCI84669.

- 24. Nadadur RD, et al. Pitx2 modulates a Tbx5-dependent gene regulatory network to maintain atrial rhythm. Science Translational Medicine. 2016;8:354ra115. doi: 10.1126/scitranslmed.aaf4891.

- 25. Heikkila J. Geometric camera calibration using circular control points. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2000;22:1066–1077. doi: 10.1109/34.879788.

- 26. Martin WN, Aggarwal JK. Volumetric descriptions of objects from multiple views. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1983;5:150–158. doi: 10.1109/TPAMI.1983.4767367.

- 27. Niem W. Robust and fast modeling of 3D natural objects from multiple views. SPIE Proceedings: Image and Video Processing II. 1994;2182:388–397. doi: 10.1117/12.171088.

- 28. Desbrun M, Meyer M, Schröder P, Barr AH. Implicit Fairing of Irregular Meshes using Diffusion and Curvature Flow. SIGGRAPH 99 Conference Proceedings. 1999:317–324.

- 29. Kroon D. Smooth Triangulated Mesh (version 1.1). MATLAB Central File Exchange. 2010. Available from https://www.mathworks.com/matlabcentral/fileexchange/26710-smooth-triangulated-mesh.

- 30. Heckbert PS. Survey of Texture Mapping. IEEE Computer Graphics and Applications. 1986;6:56–67. doi: 10.1109/MCG.1986.276672.

- 31. Laughner JI, Ng FS, Sulkin MS, Arthur RM, Efimov IR. Processing and analysis of cardiac optical mapping data obtained with potentiometric dyes. American Journal of Physiology: Heart and Circulatory Physiology. 2012;303:H753–H765. doi: 10.1152/ajpheart.00404.2012.

- 32. Banville I, Gray RA, Ideker RE, Smith WM. Shock-induced figure-of-eight reentry in the isolated rabbit heart. Circulation Research. 1999;85:742–752. doi: 10.1161/01.RES.85.8.742.

- 33. Lee P, et al. Low-Cost Optical Mapping Systems for Panoramic Imaging of Complex Arrhythmias and Drug-Action in Translational Heart Models. Scientific Reports. 2017;7:43217. doi: 10.1038/srep43217.

- 34. Lou Q, Li W, Efimov IR. Multiparametric Optical Mapping of the Langendorff-perfused Rabbit Heart. Journal of Visualized Experiments. 2011;55:3160. doi: 10.3791/3160.

- 35. Sulkin MS, et al. Three-dimensional printing physiology laboratory technology. American Journal of Physiology: Heart and Circulatory Physiology. 2013;305:H1569–H1573. doi: 10.1152/ajpheart.00599.2013.

- 36. Baden T, et al. Open Labware: 3-D Printing Your Own Lab Equipment. PLOS Biology. 2015;13:e1002086. doi: 10.1371/journal.pbio.1002086.
