Abstract

Importance

Data sharing is an expanding initiative for enhancing trust in the clinical research enterprise.

Objective

To evaluate the feasibility, process, and outcomes of a reproduction analysis of the THERMOCOOL SMARTTOUCH Catheter for the Treatment of Symptomatic Paroxysmal Atrial Fibrillation (SMART-AF) trial using shared clinical trial data.

Design, Setting, and Participants

A reproduction analysis of the SMART-AF trial was performed using the data sets, data dictionary, case report file, and statistical analysis plan from the original trial accessed through the Yale Open Data Access Project using the SAS Clinical Trials Data Transparency platform. SMART-AF was a multicenter, single-arm trial evaluating the effectiveness and safety of an irrigated, contact force-sensing catheter for ablation of drug-refractory, symptomatic paroxysmal atrial fibrillation in 172 participants recruited from 21 sites between June 2011 and December 2011. Analysis of the data was conducted between December 2016 and April 2017.

Main Outcomes and Measures

Effectiveness outcomes included freedom from atrial arrhythmias after ablation and proportion of participants without any arrhythmia recurrence over the 12 months of follow-up after a 3-month blanking period. Safety outcomes included major adverse device- or procedure-related events.

Results

The SMART-AF trial participants’ mean (SD) age was 58.7 (10.8) years, and 72% were men. The time from initial proposal submission to final analysis was 11 months. Freedom from atrial arrhythmias at 12 months postprocedure was similar in the 2 analyses (primary study report, 74.0%; 95% CI, 66.0-82.0 vs reproduction analysis, 76.4%; 95% CI, 68.7-84.1). The success rate was higher in the reproduction analysis than in the primary study report (reproduction analysis, 75.6%; 95% CI, 67.2-82.5 vs primary study report, 65.8%; 95% CI, 56.5-74.2). Major adverse events were infrequent and similar between the 2 analyses, but the analyses based on contact force range and the regression models could not be reproduced.

Conclusions and Relevance

The feasibility of a reproduction analysis of the SMART-AF trial was demonstrated through an academic data-sharing platform. Data sharing can be facilitated by incentivizing collaboration, sharing statistical code, and creating more decentralized data-sharing platforms with fewer restrictions on data access.

Key Points

Question

Is it feasible to reproduce the results of a major cardiovascular clinical trial using publicly available, shared clinical trial data?

Findings

The total time to complete the reproduction was 11 months. The primary effectiveness and safety outcomes were similar between the 2 studies; however, there were differences in the secondary analyses, and some important findings could not be replicated.

Meaning

Reproduction analyses are feasible. Incentivizing collaboration for data sharing, reporting statistical code of primary studies, and decentralizing data sharing platforms may improve clinical trial data sharing.

This study evaluates the feasibility, process, and outcomes of a reproduction analysis of the SMART-AF trial using shared clinical trial data.

Introduction

The National Academy of Medicine, International Committee of Medical Journal Editors, and other leading organizations have highlighted clinical trial data sharing as an important initiative for enhancing trust in the clinical research enterprise. Several data-sharing platforms have been created, including government platforms such as the National Heart, Lung, and Blood Institute’s Biologic Specimen and Data Repository Information Coordinating Center, commercial platforms such as ClinicalStudyDataRequest.com, and academic platforms such as the Yale Open Data Access (YODA) Project.

Replication is an essential component of any scientific process, and reproducible results can bolster clinical and public confidence in trial findings. Reproduction analyses are both a fundamental use and a key potential benefit of data sharing, but they are uncommonly performed. For example, among 172 data requests to the National Heart, Lung, and Blood Institute’s Biologic Specimen and Data Repository Information Coordinating Center between 2000 and 2016, only 2 (1%) were for reproduction analyses. Yet 35% of reanalyses lead to interpretations of the primary outcome results that differ from the original in the direction or magnitude of treatment effects, most commonly because of differing statistical approaches or analytical definitions. Less commonly, reanalyses have uncovered fundamentally flawed results, which is perhaps the most compelling argument for increased data transparency. Because few reports describe the feasibility, process, and outcomes of reanalyzing cardiovascular clinical trial data, we sought to perform a reproduction analysis of the THERMOCOOL SMARTTOUCH Catheter for the Treatment of Symptomatic Paroxysmal Atrial Fibrillation (SMART-AF) trial, the only cardiovascular clinical trial available in the YODA Project database.

Methods

Data Sources and Outcome Definitions

We submitted a research proposal through the YODA Project website to gain access to the original data sets from the SMART-AF trial performed by Natale et al. SMART-AF was a multicenter, single-arm trial evaluating the effectiveness and safety of an irrigated, contact force-sensing catheter for ablation of drug-refractory, symptomatic paroxysmal atrial fibrillation in 172 participants recruited from 21 sites between June 2011 and December 2011. The primary study was published in 2014, and the device was approved by the US Food and Drug Administration in 2014.

The primary effectiveness outcome of the SMART-AF trial was freedom from documented symptomatic atrial fibrillation, atrial tachycardia, or atrial flutter during the evaluation period. All participants had a 90-day postprocedure blanking period to allow for atrial healing and remodeling, during which up to 2 repeat ablations were allowed without being considered treatment failures. After the blanking period, participants were evaluated through 12 months of follow-up for recurrence, defined as any documented atrial fibrillation, atrial tachycardia, or atrial flutter episode lasting at least 30 seconds. Treatment failure also included failure to confirm entrance block in all pulmonary veins at the end of the ablation procedure, repeat ablation after day 90, or a new antiarrhythmic drug requirement during the evaluation period. Secondary outcomes included the overall success rate, defined as the proportion of participants free from arrhythmia recurrence after the initial ablation and remaining off antiarrhythmic medications at the 12-month follow-up. Safety outcomes were defined as major adverse procedure- or device-related events occurring within 7 days after the ablation procedure.
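The treatment-failure rules above can be expressed as a short classification function. The following Python sketch is illustrative only: the record fields (for example, `entrance_block_all_pvs`, `reablation_days`, and `new_aad_day`) are hypothetical and do not correspond to variables in the SMART-AF data sets, and the assumption that the evaluation window ends 365 days after the index ablation is ours; `EVALUATION_END_DAY` would need to change if the window is instead defined as 12 months after the blanking period. Only symptomatic episodes are counted because the primary outcome was defined on documented symptomatic arrhythmia, and limits on the number of repeat ablations during blanking are not modeled.

```python
from dataclasses import dataclass, field
from typing import List, Optional

BLANKING_DAYS = 90          # 90-day postprocedure blanking period
MIN_EPISODE_SECONDS = 30    # recurrence = documented AF/AT/AFL lasting >= 30 s
EVALUATION_END_DAY = 365    # assumption: window ends 12 months after the index ablation


@dataclass
class Episode:
    day: int              # days since the index ablation
    duration_s: float     # documented episode duration, seconds
    symptomatic: bool


@dataclass
class Participant:
    # Hypothetical fields; not the variable names used in the trial data sets.
    entrance_block_all_pvs: bool
    episodes: List[Episode] = field(default_factory=list)
    reablation_days: List[int] = field(default_factory=list)
    new_aad_day: Optional[int] = None


def in_evaluation_period(day: int) -> bool:
    """True for days after blanking and up to the end of the evaluation window."""
    return BLANKING_DAYS < day <= EVALUATION_END_DAY


def primary_effectiveness_failure(p: Participant) -> bool:
    """True if any treatment-failure criterion described in the Methods is met."""
    if not p.entrance_block_all_pvs:
        return True
    # Repeat ablations during blanking are tolerated; later ones count as failure.
    if any(in_evaluation_period(d) for d in p.reablation_days):
        return True
    if p.new_aad_day is not None and in_evaluation_period(p.new_aad_day):
        return True
    return any(
        e.symptomatic
        and e.duration_s >= MIN_EPISODE_SECONDS
        and in_evaluation_period(e.day)
        for e in p.episodes
    )


# Episode and repeat ablation both fall inside blanking, so this is not a failure.
p = Participant(True, [Episode(day=45, duration_s=120, symptomatic=True)], [60])
print(primary_effectiveness_failure(p))  # False
```

Writing the rules as one function makes each definitional choice (window boundaries, symptomatic-only counting) explicit, which is the kind of detail that shared statistical code could settle.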

After we received access to the analysis data sets, data dictionary, case report file, and statistical analysis plan through a SAS-based data-sharing platform, we sought to reproduce the effectiveness and safety outcomes described in the primary study report.

We sought and received approval for our analysis of deidentified trial data through the Northwestern University institutional review board. Participant consent was obtained in the primary study but was not required for this reproduction analysis using deidentified data.

Statistical Analysis

We followed the methods in the statistical analysis plan and original publication. When we identified discrepancies in our results with the primary study report, we contacted the study authors, the YODA Project team, and the trial sponsor for clarification.

We calculated descriptive statistics for all participants in the effectiveness and safety cohorts. For participants in the effectiveness cohort, we created Kaplan-Meier survival curves for the primary effectiveness outcome of time to first recurrence after the ablation procedure and blanking period. We attempted to further stratify our analysis of this outcome by the proportion of time that investigators spent operating within their selected contact force range, dichotomized at 80% as described in the primary study report. We calculated the success rate for the effectiveness cohort as the proportion of participants without any recurrence during the 12-month follow-up period. We attempted to fit a logistic regression model with the primary effectiveness outcome as the dependent variable but could not maintain fidelity to the original analysis because some of the data required for the model were not available. We calculated the rate of major adverse events in the safety cohort as the proportion of participants experiencing such an event during the study period.
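For readers unfamiliar with the Kaplan-Meier (product-limit) estimator, the following dependency-free Python sketch shows the calculation on toy data. It is not the code used in either analysis; in SAS this estimate is typically produced with PROC LIFETEST, and which procedure or options the original or reproduction analyses used is not stated here. Times are assumed to be measured from the end of the blanking period, with censoring for loss to follow-up or end of study.

```python
from typing import List, Tuple


def kaplan_meier(times: List[float], events: List[bool]) -> List[Tuple[float, float]]:
    """Product-limit (Kaplan-Meier) estimate of recurrence-free survival.

    times  -- follow-up time for each participant (e.g., days after blanking)
    events -- True if the first recurrence occurred at that time, False if censored
    Returns (time, estimated survival) pairs at each distinct event time.
    """
    if len(times) != len(events):
        raise ValueError("times and events must have the same length")
    data = sorted(zip(times, events))
    n_at_risk = len(data)
    survival = 1.0
    curve: List[Tuple[float, float]] = []
    i = 0
    while i < len(data):
        t = data[i][0]
        n_events = 0
        n_leaving = 0
        # Everyone with follow-up time t is at risk at t; censored cases at t
        # are treated as leaving just after any events at t (usual convention).
        while i < len(data) and data[i][0] == t:
            n_events += int(data[i][1])
            n_leaving += 1
            i += 1
        if n_events:
            survival *= 1.0 - n_events / n_at_risk
            curve.append((t, survival))
        n_at_risk -= n_leaving
    return curve


# Toy data (not trial data): 5 participants, 3 recurrences, 2 censored.
curve = kaplan_meier([5, 8, 8, 12, 15], [True, True, False, True, False])
print([(t, round(s, 3)) for t, s in curve])  # [(5, 0.8), (8, 0.6), (12, 0.3)]
```

The 12-month freedom-from-arrhythmia estimate is then read off the curve at the last event time at or before the end of follow-up; the handling of ties and censoring is one of the details that can differ between software packages.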

We performed all statistical analyses using SAS Drug Development, version 4.3 (SAS Institute) through the SAS Clinical Trials Data Transparency platform.

Results

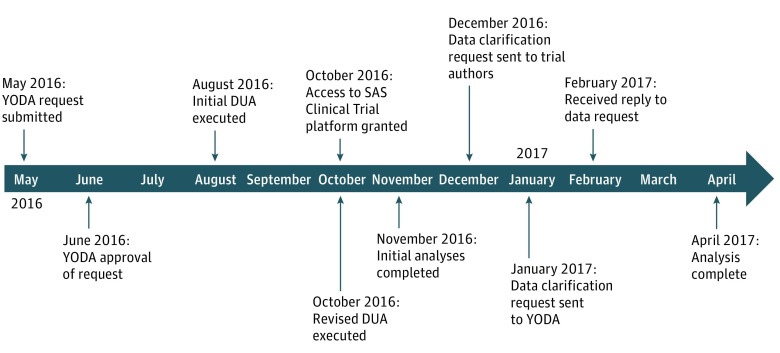

The Figure demonstrates the project timeline. We submitted our data request and research proposal through the YODA Project website in May 2016. We received approval within 1 month, and the data use agreement was finalized in October 2016, after which we received access to the SAS Clinical Trials Data Transparency platform. We completed our initial reproduction analysis by November 2016 and identified and worked through clarifications with the SMART-AF authors, YODA Project team, and trial sponsor between December 2016 and April 2017.

Figure. Reproduction Analysis Timeline.

DUA indicates data use agreement and YODA indicates Yale Open Data Access.

Using the trial data sets and metadata, we confirmed that 172 participants were enrolled in the study. We identified 162 participants in whom the study catheter was inserted (the safety cohort), compared with 161 participants reported by the study authors. After excluding 38 roll-in participants (a study requirement whereby investigators gained initial experience with the catheter in 1 or 2 participants to configure the operating reference ranges for subsequent cases), we identified 124 participants in the effectiveness cohort, compared with 122 participants reported by the study authors. Our count of 5 participants in the effectiveness cohort lost to follow-up during the 12-month study period matched the primary publication.
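The cohort definitions above amount to two successive filters, and a difference in how a single flag is derived could shift the counts (as in 162 vs 161 safety participants). A minimal Python sketch, assuming hypothetical per-participant flags `catheter_inserted` and `roll_in`; the synthetic records below only mirror the reported counts and are not trial data.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Enrollee:
    # Hypothetical flags; the actual SMART-AF variable names may differ.
    participant_id: str
    catheter_inserted: bool
    roll_in: bool


def derive_cohorts(enrolled: List[Enrollee]) -> Tuple[List[Enrollee], List[Enrollee]]:
    """Safety cohort: study catheter inserted.
    Effectiveness cohort: safety cohort excluding roll-in participants."""
    safety = [e for e in enrolled if e.catheter_inserted]
    effectiveness = [e for e in safety if not e.roll_in]
    return safety, effectiveness


# Synthetic records chosen to mirror the counts reported above (not real data).
enrolled = [
    Enrollee(f"P{i:03d}", catheter_inserted=i < 162, roll_in=i < 38)
    for i in range(172)
]
safety, effectiveness = derive_cohorts(enrolled)
print(len(enrolled), len(safety), len(effectiveness))  # 172 162 124
```

Keeping the participant identifiers in each cohort, rather than only the counts, would let independent and original analysts compare lists directly when counts disagree.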

Table 1 demonstrates demographic, clinical history, and imaging data reported by the study authors and in our reproduction analysis. We identified minor differences from the primary study that were likely driven by differences in cohort composition and did not materially alter the baseline characteristics.

Table 1. Baseline Patient Characteristics and Demographic Information.

Values are No. (%) unless otherwise indicated.

| Baseline Characteristic | SMART-AF Analysis, Effectiveness Cohort (n = 122) | SMART-AF Analysis, Safety Cohort (n = 161) | Reproduction Analysis, Effectiveness Cohort (n = 124) | Reproduction Analysis, Safety Cohort (n = 162) |
|---|---|---|---|---|
| Age, mean (SD), y | 58.3 (10.9) | 58.7 (10.8) | 58.3 (10.3) | 58.8 (10.8) |
| Male sex | 87 (71.3) | 116 (72.0) | 88 (71.0) | 116 (71.6) |
| Patient history | | | | |
| Atrial fibrillation duration, median (IQR), y | 4 (1-7) | 4 (2-8) | 4 (1-7) | 4 (1-7) |
| Atrial flutter | 37 (30.3) | 52 (32.3) | 37 (29.8) | 52 (32.1) |
| Hypertension | 74 (60.7) | 96 (59.6) | 74 (59.7) | 96 (59.3) |
| Diabetes | 14 (11.5) | 20 (12.4) | 14 (11.3) | 20 (12.4) |
| Structural heart disease | 15 (12.3) | 19 (11.8) | 15 (12.1) | 19 (11.7) |
| Cerebrovascular disease | 0 | 0 | 0 | 2 (1.2) |
| Transient ischemic attack | 4 (3.3) | 5 (3.1) | 4 (3.2) | 5 (3.1) |
| Prior thromboembolic events | 6 (4.9) | 10 (6.2) | 3 (2.4) | 5 (3.1) |
| NYHA functional class | | | | |
| None | 101 (82.8) | 133 (82.6) | 103 (83.1) | 134 (82.7) |
| I | 15 (12.3) | 18 (11.2) | 15 (12.1) | 18 (11.1) |
| II | 5 (4.1) | 8 (5.0) | 5 (4.0) | 8 (4.9) |
| Unknown | 1 (0.8) | 2 (1.2) | 1 (0.8) | 2 (1.2) |
| LVEF, mean (SD), % | 60.3 (7.8) | 60.2 (7.6) | 60.3 (7.8) | 60.1 (7.6) |
| LA dimension, mean (SD), mm | 38.5 (5.6) | 38.5 (5.7) | 38.3 (5.7) | 38.4 (5.8) |

Abbreviations: IQR, interquartile range; LA, left atrium; LVEF, left ventricular ejection fraction; NYHA, New York Heart Association; SMART-AF, THERMOCOOL SMARTTOUCH Catheter for the Treatment of Symptomatic Paroxysmal Atrial Fibrillation.

Table 2 demonstrates the effectiveness and safety outcomes reported by the study authors and in our reproduction analysis. We calculated a similar rate for the primary effectiveness outcome of freedom from symptomatic atrial arrhythmia at 12 months postprocedure (primary study, 74.0%; 95% CI, 66.0-82.0 vs reproduction analysis, 76.4%; 95% CI, 68.7-84.1). We calculated a higher success rate than the primary study (reproduction analysis, 75.6%; 95% CI, 67.2-82.5 vs primary study, 65.8%; 95% CI, 56.7-74.3). The mean (SD) contact force reported in the SMART-AF trial, 17.9 (9.4) g, was lower than the 29.2 (14.1) g we calculated. Our analysis of the safety cohort identified major adverse event counts similar to those reported by the study authors: vascular access complications (n = 5), cardiac tamponade (n = 4), pericarditis (n = 4), pericardial effusion (n = 3), heart block (n = 1), pulmonary vein stenosis (n = 0), and atrioesophageal fistula (n = 0). We were unable to reproduce the analyses based on the proportion of time that investigators spent operating within their selected contact force range because not all of the required variables, or the information needed to derive them, were available in the data set. Similarly, we could not reproduce the multivariable logistic regression model owing to missing independent (predictor) variables. We attempted to resolve these discrepancies by requesting clarification about discordant results and missing or unavailable data from the study authors, YODA Project team, and trial sponsor analysts. While some of our queries were resolved through this process, other discrepancies remained unresolved.
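The contact force analyses that could not be reproduced depend on derived quantities whose definitions must be fixed in advance. The following Python sketch is a hypothetical illustration, not the SMART-AF derivation: it assumes force data are available as `(duration_s, force_g)` intervals, computes the time-weighted fraction of ablation time within an investigator-selected range, assigns a participant to a stratum at the 80% cut point (treating exactly 80% as the upper stratum, which is our assumption), and shows that a mean force can differ depending on whether it is weighted by time or by sample. Which definitions the original analysis used is not stated here.

```python
from typing import List, Tuple


def time_in_range_fraction(samples: List[Tuple[float, float]], low_g: float, high_g: float) -> float:
    """Time-weighted fraction of ablation time with force in [low_g, high_g].

    samples -- (duration_s, force_g) pairs, one per recorded interval (hypothetical format)
    """
    total_s = sum(duration for duration, _ in samples)
    if total_s <= 0:
        raise ValueError("no ablation time recorded")
    in_range_s = sum(duration for duration, force in samples if low_g <= force <= high_g)
    return in_range_s / total_s


def stratum(fraction: float, threshold: float = 0.80) -> str:
    # Assumption: a fraction exactly at the threshold falls in the upper stratum.
    return f">={threshold:.0%}" if fraction >= threshold else f"<{threshold:.0%}"


def mean_force(samples: List[Tuple[float, float]], time_weighted: bool = True) -> float:
    """Mean contact force, either weighted by interval duration or per sample."""
    if time_weighted:
        total_s = sum(duration for duration, _ in samples)
        return sum(duration * force for duration, force in samples) / total_s
    return sum(force for _, force in samples) / len(samples)


# Toy example (not trial data): investigator-selected range of 10-30 g.
samples = [(10.0, 12.0), (20.0, 25.0), (10.0, 8.0)]
fraction = time_in_range_fraction(samples, low_g=10.0, high_g=30.0)
print(fraction, stratum(fraction))  # 0.75 <80%
print(mean_force(samples), mean_force(samples, time_weighted=False))  # 17.5 15.0
```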

Table 2. Effectiveness and Safety Outcomes and Comparison.

| Outcome | SMART-AF Analysis, No. | SMART-AF Analysis, % (95% CI) | Reproduction Analysis, No. | Reproduction Analysis, % (95% CI) |
|---|---|---|---|---|
| Effectiveness cohort outcomes | | | | |
| 12-mo freedom from arrhythmia<sup>a</sup> | 122 | 74.0 (66.0-82.0) | 124 | 76.4 (68.7-84.1) |
| Success rate<sup>b</sup> | 77 | 65.8 (56.7-74.3) | 90 | 75.6 (67.2-82.5) |
| Reason for failure | | | | |
| AF recurrence post-blanking | 31 | 26.5 (NR) | 23 | 19.3 (12.8-27.4) |
| Repeat ablation | 6 | 5.1 (NR) | 6 | 5.0 (1.9-10.5) |
| Taking new AAD at 12 mo | 4 | 3.4 (NR) | 0 | 0.0 (0.0-2.9) |
| Safety cohort outcomes<sup>c</sup> | | | | |
| Cardiac tamponade | 4 | 2.5 (NR) | 4 | 2.5 (0.7-6.2) |
| Heart block | 1 | 0.6 (NR) | 1 | 0.6 (0.0-3.4) |
| Pericarditis | 3 | 1.9 (NR) | 4 | 2.5 (0.7-6.2) |
| Vascular access problem | 4 | 2.5 (NR) | 5 | 3.1 (1.0-7.1) |
| Pericardial effusion | NR | NR | 3 | 1.9 (0.4-5.4) |

Abbreviations: AAD, antiarrhythmic drug; AF, atrial fibrillation; NR, not reported; SMART-AF, THERMOCOOL SMARTTOUCH Catheter for the Treatment of Symptomatic Paroxysmal Atrial Fibrillation.

<sup>a</sup> Reported results are for symptomatic atrial arrhythmia as displayed in Figure 2 of the primary study report by Natale et al; No. is the initial at-risk population for the Kaplan-Meier analysis.

<sup>b</sup> n = 117 for the SMART-AF analysis (5 lost to follow-up) and n = 119 for the reproduction analysis (5 lost to follow-up).

<sup>c</sup> n = 161 for the SMART-AF analysis and n = 162 for the reproduction analysis.
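The proportions in Table 2 are reported with 95% CIs, but the interval method is not stated in this section. As one common choice, the exact (Clopper-Pearson) binomial interval can be computed without external libraries by bisection on the binomial cumulative distribution function; the Python sketch below assumes that method and is not presented as the method used in either analysis. In SAS, exact binomial limits are available through the BINOMIAL option of PROC FREQ.

```python
from math import comb
from typing import Callable, Tuple


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve_decreasing(g: Callable[[float], float], target: float, iters: int = 60) -> float:
    """Bisection for p in [0, 1] with g(p) == target, where g decreases in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if g(mid) > target:
            lo = mid  # g still above target, so the root lies at larger p
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x: int, n: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Exact (Clopper-Pearson) two-sided CI for the binomial proportion x/n."""
    if not 0 <= x <= n or n == 0:
        raise ValueError("require 0 <= x <= n and n > 0")
    # Lower bound: P(X >= x | p) = alpha/2, i.e., P(X <= x - 1 | p) = 1 - alpha/2.
    lower = 0.0 if x == 0 else _solve_decreasing(lambda p: binom_cdf(x - 1, n, p), 1 - alpha / 2)
    # Upper bound: P(X <= x | p) = alpha/2.
    upper = 1.0 if x == n else _solve_decreasing(lambda p: binom_cdf(x, n, p), alpha / 2)
    return lower, upper


# Example: success rate in the reproduction analysis (90 of 119 participants).
lower, upper = clopper_pearson(90, 119)
print(f"{90 / 119:.1%} (95% CI, {lower:.1%}-{upper:.1%})")
```

Because different interval methods (exact, Wilson, Wald) give slightly different limits for the same counts, stating the method alongside shared code would remove one possible source of small discrepancies between analyses.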

Discussion

This study demonstrates the feasibility, process, and outcomes of a reproduction analysis of the SMART-AF trial and identifies potentially valuable lessons as clinical trial data sharing increases. First, the SMART-AF study authors, YODA Project team, and trial sponsor are vanguards in cardiovascular clinical trial data sharing and were under no obligation to share these data or respond to our requests. Responding to such requests requires time and effort that many investigators may be reluctant to spend, but this engagement is likely necessary for effective clinical trial data sharing.

We closely reviewed the data sets, data dictionary, case report files, and statistical analysis plan and worked to minimize queries to the SMART-AF authors, YODA Project team, and trial sponsor. However, questions invariably arose, even from this relatively small, straightforward single-arm trial. One potential strategy to minimize the need for specific technical questions may be to include the statistical code in data-sharing packages. This would not require replicating authors to use such code as the sole method for reproduction but would provide a valuable reference and further advance transparency in reporting. Some journals, such as Biostatistics, encourage contributing authors to submit all supporting data and code with their original publications, either as supplemental material or through links to online code-sharing repositories such as GitHub. As data sharing evolves, software programs that facilitate replication of analytical working environments may become more prevalent. While these tools may improve transparency in the analytical process, analytical independence also remains important for enhancing trust through reproducible research. There may be an inherent tension between primary study teams, who best understand the study procedures and data, and independent research teams seeking data for hypothesis-generating analyses or evidence synthesis activities. This tension is evident in questions about how to fairly attribute and incentivize contributions to clinical trial data sharing.

Methods for optimal clinical trial data sharing remain under development. Commercial and academic platforms, such as ClinicalStudyDataRequest.com and YODA, require centralized review and approval for access to secure online portals. In contrast, government platforms, such as the National Heart, Lung, and Blood Institute’s Biologic Specimen and Data Repository Information Coordinating Center, require only a letter of approval for the proposed analysis from a local institutional review board to gain access to data files. This more decentralized approach may allow more efficient data sharing and was recently demonstrated by the Systolic Blood Pressure Intervention Trial (SPRINT) Data Analysis Challenge. Investigators will need to use rigorous deidentification methods to ensure participant confidentiality with this type of data sharing, but limiting barriers to access will likely enhance trust in the clinical research enterprise, which is a key goal of data sharing.

Limitations

First, our reproduction was limited by an inability to attempt certain reanalyses, such as the logistic regression models, owing to the limited availability of some data elements through the data-sharing platform. Second, although accessing and analyzing data through an academic data-sharing platform is feasible, the time spent executing data use agreements represented approximately half of the overall project time. The SAS Clinical Trials Data Transparency platform was effective for this reproduction analysis, but other investigators may prefer to download the analyzable data sets and use statistical packages with which they are more familiar. Third, we largely reproduced the direction and magnitude of the effectiveness and safety outcomes of the intervention, but we identified a discrepancy in the success rate, which may represent a true yet imprecise difference in effect that clinicians and patients could judge to be meaningful. Further, we could not reproduce all reported analyses, including inferential analyses and those related to contact force range. Our inability to exactly replicate the analysis likely reflects a number of factors (eg, data availability, interpretation of the inclusion and exclusion criteria, assumptions made during data cleaning, differences in analytic methods and statistical packages, or a combination thereof), but we also acknowledge that these discrepancies may have been related to our own errors.

Conclusions

We demonstrate the feasibility, process, and outcomes of a reproduction analysis of the SMART-AF clinical trial using the YODA Project academic data-sharing platform. Our findings were largely similar to the results reported by the study authors. Based on our experience, we identified and described areas for potentially improving the growing clinical trial data-sharing process and enterprise. These proposals include incentivizing greater collaboration between original investigators and independent researchers, sharing statistical code, and creating more decentralized data-sharing platforms with fewer restrictions on data access.

References

1. Lo B. Sharing clinical trial data: maximizing benefits, minimizing risk. JAMA. 2015;313(8):793-794.

2. Taichman DB, Backus J, Baethge C, et al. Sharing clinical trial data: a proposal from the International Committee of Medical Journal Editors. JAMA. 2016;315(5):467-468.

3. Krumholz HM, Waldstreicher J. The Yale Open Data Access (YODA) project: a mechanism for data sharing. N Engl J Med. 2016;375(5):403-405.

4. Coady SA, Mensah GA, Wagner EL, Goldfarb ME, Hitchcock DM, Giffen CA. Use of the National Heart, Lung, and Blood Institute data repository. N Engl J Med. 2017;376(19):1849-1858.

5. Ebrahim S, Sohani ZN, Montoya L, et al. Reanalyses of randomized clinical trial data. JAMA. 2014;312(10):1024-1032.

6. Baggerly KA, Coombes KR. Deriving chemosensitivity from cell lines: forensic bioinformatics and reproducible research in high-throughput biology. Ann Appl Stat. 2009;3(4):1309-1334. doi:10.1214/09-AOAS291

7. Natale A, Reddy VY, Monir G, et al. Paroxysmal AF catheter ablation with a contact force sensing catheter: results of the prospective, multicenter SMART-AF trial. J Am Coll Cardiol. 2014;64(7):647-656.

8. Premarket Approval (PMA) record for Biosense Webster NaviStar/Celsius ThermoCool diagnostic/ablation deflectable tip catheters. https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfpma/pma.cfm?id=P030031S053. Accessed August 18, 2017.

9. Biostatistics: information for authors. https://academic.oup.com/biostatistics/pages/General_Instructions. Accessed August 18, 2017.

10. Burns NS, Miller PW. Learning what we didn’t know: the SPRINT data analysis challenge. N Engl J Med. 2017;376(23):2205-2207.