Abstract

Wireless capsule endoscopy (WCE) is the most advanced technology for visualizing the whole gastrointestinal (GI) tract in a non-invasive way. Its major disadvantage is the long reviewing time, which is very laborious because continuous manual intervention is necessary. In order to reduce the burden on the clinician, this paper proposes an automatic bleeding detection method for WCE video based on the color histogram of block statistics, namely CHOBS. A single pixel in a WCE image may be distorted due to the capsule motion in the GI tract. Instead of considering individual pixel values, a block surrounding each pixel is chosen for extracting local statistical features. By combining the local block features of the three color planes of RGB color space, an index value is defined. A color histogram extracted from those index values provides a distinguishable color texture feature. A feature reduction technique utilizing the color histogram pattern and principal component analysis is proposed, which can drastically reduce the feature dimension. For bleeding zone detection, blocks are classified using the already extracted local features, which adds no computational burden for feature extraction. In extensive experimentation on several WCE videos and 2300 images collected from a publicly available database, very satisfactory bleeding frame and zone detection performance is achieved in comparison to that obtained by some existing methods. For bleeding frame detection, the accuracy, sensitivity, and specificity obtained from the proposed method are 97.85%, 99.47%, and 99.15%, respectively, and for bleeding zone detection, a precision of 95.75% is achieved. The proposed method offers not only a low feature dimension but also highly satisfactory bleeding detection performance, and it can effectively detect bleeding frames and zones in continuous WCE video data.

Keywords: Bleeding detection, bleeding zone, color histogram, feature extraction, wireless capsule endoscopy

Wireless capsule endoscopy is currently the most advanced technology for noninvasive visualization of the gastrointestinal (GI) tract. The major disadvantage of the technology is its long reviewing time, which is laborious and requires continuous manual intervention. This paper proposes an automatic bleeding detection method for wireless capsule endoscope video which is based on color histogram of block statistics. In experimentation on several endoscopy videos and 2300 images collected from a publicly available database, the accuracy, sensitivity, and specificity of bleeding frame detection obtained from the proposed method are 97.85%, 99.47%, and 99.15%, respectively, and, in the case of bleeding zone detection, 95.75% precision is achieved.

I. Introduction

Wireless capsule endoscopy (WCE) is considered to be the best option for visualizing the entire small bowel [1]. It is the most advanced non-invasive video imaging technology for examining the entire gastrointestinal (GI) tract. The first clinical diagnosis was performed by Given Imaging Company in 2001 [2]. Due to the small size of the capsule (around 11 mm in diameter and 26 mm in length), patients can swallow it without any obstruction. The capsule travels the whole gastrointestinal tract in about 8 hours, and its video camera takes nearly 57,000 color images at 2 frames per second. All images are then stored in a computer.

From the clinical point of view, bleeding detection in the GI tract is one of the vital tasks for diagnosing different GI diseases [3]. The problem with WCE lies in its reviewing process, which usually takes two hours to complete [4]. Furthermore, some bleeding abnormalities are very difficult to identify with the naked eye because of their small size and random distribution. These difficulties have inspired researchers to improve computer-aided bleeding detection methods in order to decrease the burden on the gastroenterologist. With the increasingly wide application of WCE, several methods have been developed to detect bleeding images in WCE video. The very first attempt is the suspected blood indicator (SBI); however, its bleeding detection sensitivity and specificity are found to be unsatisfactory [5]. Hue-saturation-intensity (HSI) based color histogram features are introduced in [6], which involves high algorithmic complexity. In [7], a local binary pattern (LBP) based color texture feature is proposed in the HSI domain. A computer-aided bleeding detection method based on super-pixel segmentation and an SVM classifier is developed in [8], which is computationally complex. In [9], a probabilistic neural network (PNN) based bleeding image identification scheme is proposed. The method proposed in [10] utilizes color statistical features extracted from histogram probability. In [11], the G to R pixel intensity ratio, along with the mean intensity of the red, green, and blue planes, is deployed to detect bleeding frames, while statistical measures of the R/G composite color domain are proposed in [12]. Furthermore, a color histogram feature incorporating the bin frequencies of all three color planes is proposed in [13]. Statistical features from the HSV domain are used in [14]. The YCbCr color plane is used to find a word-based color histogram feature for bleeding frame detection, while bleeding localization is performed by a fusion strategy and thresholding. A region of interest (ROI) based statistical feature of the CMYK domain is proposed in [15]. A frequency domain approach is proposed in [16], where the normalized gray level co-occurrence matrix (NGLCM) is used. Most of the methods described above are confined to individual color channel histograms (R, G, B histograms), whereas a color histogram may offer a potential feature for detecting bleeding frames with high accuracy and sensitivity because it jointly uses information from all three planes.

The objective of this paper is to develop an efficient computer-aided bleeding frame and zone detection scheme for WCE video recordings. A block-based local feature extraction scheme is proposed for each color plane, which offers better feature representation than individual pixel-based features. Color histogram based global features are then computed from the extracted local features. For bleeding and non-bleeding image classification, the dimension of the extracted feature is reduced and a k-nearest neighbor (KNN) classifier is used. To determine the bleeding zone, each block in a bleeding image is first classified using the available local features, and the label of the pixels within a block is determined with the proposed interpolation method. Finally, the acquired bleeding zone is fine-tuned by a morphological operation.

II. Proposed Method for Bleeding Frame Detection

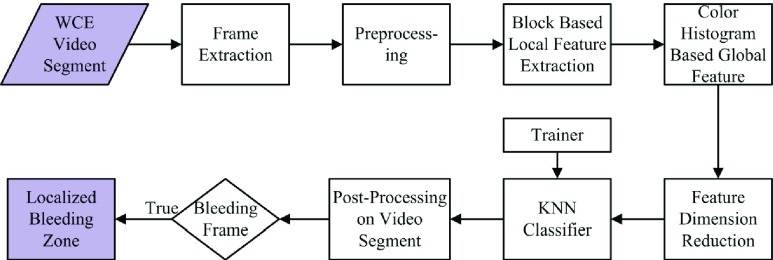

The basic steps involved in the proposed bleeding frame and zone detection method are presented in Fig. 1. From given WCE video data, frame-by-frame analysis is carried out to extract features and classify bleeding and non-bleeding frames. The same preprocessing and feature extraction operations are performed on both training and testing data. Each WCE frame is first preprocessed and then segmented into several spatial blocks. Next, block-based statistical features are extracted and used to construct a color histogram. The bin frequencies of the color histogram are considered as the global feature. Furthermore, a feature dimension reduction scheme is proposed that utilizes the dominance of the color planes. For classification, a KNN classifier is employed. In addition, the bleeding detection algorithm is tested on continuous WCE video segments. Finally, for a bleeding image, a scheme is developed to detect bleeding zones. In what follows, each step of the proposed method is described.

FIGURE 1.

Illustration of basic steps involved in the proposed method.

A. Preprocessing

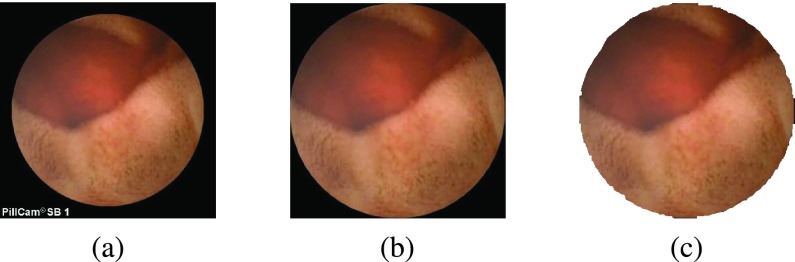

Due to an inherent circular aperture in front of the WCE camera inside the small intestine, the center region (circular or semi-octagonal) of a WCE image contains the essential information, as shown in Fig. 2(a). The pixels surrounding the central region have comparatively very low intensity. In the proposed preprocessing step, the black pixels of the border and corner zones of WCE images are removed, bearing in mind the variation in pixel intensities and the circular shape of the desired region. The step-by-step outcomes of the preprocessing stage are presented in Fig. 2. Fig. 2(b) presents the WCE image after the black portions along the four sides are removed, and Fig. 2(c) shows the final preprocessed image after the black corner regions are removed. Rather than the entire image, the resulting images with the black boundary eliminated are used for further processing.
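The border-removal part of this step can be sketched as follows. This is only an illustrative sketch: the text does not state how dark pixels are identified, so the darkness test (`max` of the three channels against a `threshold` of 20) and the function name `crop_dark_border` are assumptions, and the removal of the corner regions shown in Fig. 2(c) is not covered.

```python
def crop_dark_border(image, threshold=20):
    """Crop rows and columns at the frame border that contain only dark pixels.

    image: list of rows, each row a list of (r, g, b) tuples.
    threshold: assumed darkness limit; a pixel counts as dark when all three
    channel values are <= threshold (not a value taken from the paper).
    """
    def is_dark(pixels):
        return all(max(p) <= threshold for p in pixels)

    bright_rows = [i for i, row in enumerate(image) if not is_dark(row)]
    if not bright_rows:
        return []  # the whole frame is dark
    top, bottom = bright_rows[0], bright_rows[-1]
    kept = image[top:bottom + 1]

    width = len(image[0])
    bright_cols = [j for j in range(width)
                   if not is_dark([row[j] for row in kept])]
    left, right = bright_cols[0], bright_cols[-1]
    return [row[left:right + 1] for row in kept]
```

Only the outer dark rows and columns are removed; dark pixels inside the retained region are kept as they are.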

FIGURE 2.

Illustration of preprocessing step (a) original WCE frame; (b) after removing black portions of the four sides; (c) final preprocessed image after removing corner black regions.

B. Pixel Based Color Histogram (PChist)

A histogram measures the ratio of participation of different sub-classes in given data. A color histogram is formed by considering the pixel intensities of all three color planes [17], [18]. The pixel intensities of all color planes are grouped together according to different ranges of their values (depending on the bin size of the color histogram), and then the number of pixels in each group is counted. By varying the pixel values of R, G, and B, the RGB color space of the image can be segmented into $N$ distinct colors, and the color histogram $H$ can then be formed as

$$H = [h_1, h_2, \ldots, h_N] \tag{1}$$

where $h_j$ holds the number of pixels of the $j$-th color in the image.

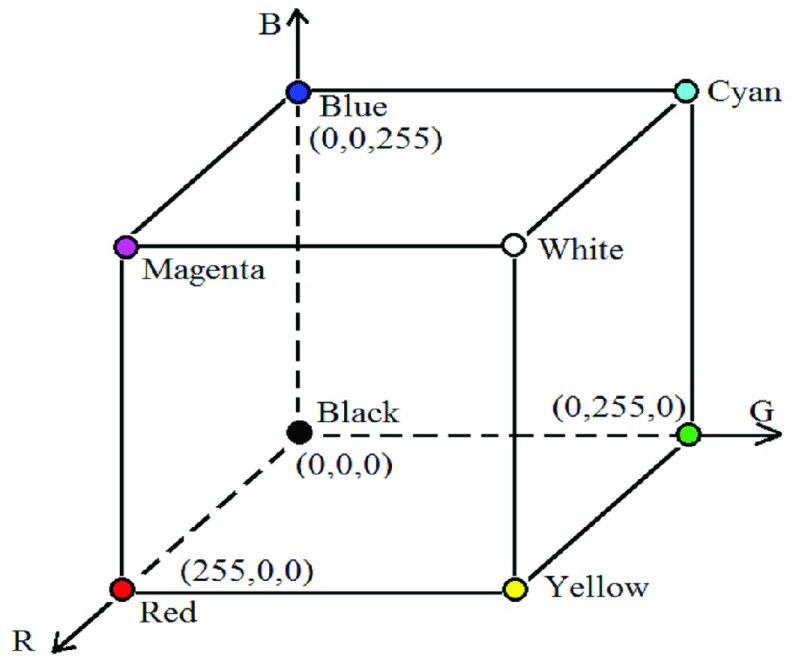

For example, if only one bit per plane is considered to represent the intensity of a pixel, there will be a total of three bits from the three color planes, resulting in $2^3 = 8$ different choices, i.e., eight distinct colors. As each pixel is now represented by three bits from three color planes, the color subspace in which a pixel is located can be represented, in a Cartesian coordinate system, by a cube as shown in Fig. 3. The eight colors obtained in this system are indicated in the figure as per the standard color scheme. Using one bit for a color plane is, in fact, equivalent to assigning 0 to the intensity range 0 to 127 and 1 to the intensity range 128 to 255. To represent the full range of pixel intensity values (0–255), a maximum of 8 bits can be used; e.g., the eight bits in the R plane can be represented as $R_b = r_1 r_2 \ldots r_8$, where the suffix 'b' indicates binary values. For each pixel, information from all three planes is taken into consideration and the pixel is assigned a new three-bit value. In general, the given WCE image is transformed into a composite plane where the pixels are indexed with $3n$ bits, where $n$ indicates the number of bits considered for each color plane, resulting in $2^{3n}$ combinations for each image. For example, considering $n = 2$ there will be $2^6 = 64$ choices.

FIGURE 3.

Cartesian coordinate system of RGB color space.

In order to obtain the index value of each pixel, the binary values of  most significant bits (MSBs) of each color plane are utilized. Let the bit values are

most significant bits (MSBs) of each color plane are utilized. Let the bit values are  ,

,  , and

, and  . Final index value (

. Final index value ( ) is calculated as the decimal value that corresponds to the binary bits

) is calculated as the decimal value that corresponds to the binary bits

|

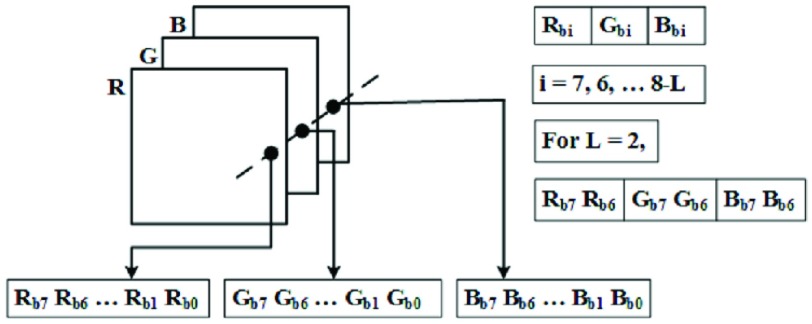

where  . Final index value formation is illustrated in the Fig. 4. In the figure, three color planes (R, G, B) and their bit values are presented, and from those bit value index value is computed. For example, when

. Final index value formation is illustrated in the Fig. 4. In the figure, three color planes (R, G, B) and their bit values are presented, and from those bit value index value is computed. For example, when  and the values of R, G, B planes of a pixel are 150, 240, 30 respectively, binary index value is

and the values of R, G, B planes of a pixel are 150, 240, 30 respectively, binary index value is  and corresponding decimal index value is

and corresponding decimal index value is  . Instead of considering the color distribution in one plane, information for constructing histogram is extracted from the index image plane described above. A detailed example explaining the formation of the index value is presented in Table. 1. First, for each color plane, 1-bit representation is considered, which result in decimal index values from 0 to 7. Next, 2-bit representation per color plane is considered, which provides index values 0 to 63.

. Instead of considering the color distribution in one plane, information for constructing histogram is extracted from the index image plane described above. A detailed example explaining the formation of the index value is presented in Table. 1. First, for each color plane, 1-bit representation is considered, which result in decimal index values from 0 to 7. Next, 2-bit representation per color plane is considered, which provides index values 0 to 63.

FIGURE 4.

Index image construction from RGB planes.

TABLE 1. Representation of Pixel Index Value.

| Pixel value (R,G,B) | R (1 bit) | G (1 bit) | B (1 bit) | Index (0–7) | R (2 bit) | G (2 bit) | B (2 bit) | Index (0–63) |
|---|---|---|---|---|---|---|---|---|
| (100,230,50) | 0 | 1 | 0 | 2 | 01 | 11 | 00 | 28 |
| (150,240,30) | 1 | 1 | 0 | 6 | 10 | 11 | 00 | 44 |
| (50,60,10) | 0 | 0 | 0 | 0 | 00 | 00 | 00 | 00 |
| (200,120,100) | 1 | 0 | 0 | 4 | 11 | 01 | 01 | 53 |
| (240,235,250) | 1 | 1 | 1 | 7 | 11 | 11 | 11 | 63 |
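The index construction in Table 1 can be checked with a short sketch. It assumes 8-bit pixel values and concatenates the MSBs in the order R, G, B, which is the order the table entries imply; the function names `color_index` and `pixel_color_histogram` are illustrative and do not come from the paper.

```python
def color_index(r, g, b, n_bits=1):
    """Index of an 8-bit RGB triple built from the n_bits MSBs of each plane."""
    shift = 8 - n_bits
    r_msb, g_msb, b_msb = r >> shift, g >> shift, b >> shift
    # Concatenate R bits, then G bits, then B bits (R is most significant).
    return (r_msb << (2 * n_bits)) | (g_msb << n_bits) | b_msb


def pixel_color_histogram(pixels, n_bits=1):
    """Count how many pixels fall into each of the 2**(3 * n_bits) colors."""
    hist = [0] * (2 ** (3 * n_bits))
    for r, g, b in pixels:
        hist[color_index(r, g, b, n_bits)] += 1
    return hist


# The five pixel values listed in Table 1.
table1_pixels = [(100, 230, 50), (150, 240, 30), (50, 60, 10),
                 (200, 120, 100), (240, 235, 250)]
one_bit = [color_index(*p, n_bits=1) for p in table1_pixels]  # [2, 6, 0, 4, 7]
two_bit = [color_index(*p, n_bits=2) for p in table1_pixels]  # [28, 44, 0, 53, 63]
```

Both lists agree with the index columns of Table 1.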

A color histogram, as a count-based representation of image pixels, should ensure that any group of bleeding pixels, no matter how small, is represented independently in the feature vector. Thus, a color histogram is considered a more prominent and significant representation of color in a given WCE image; note that bleeding regions are sensitive to color information.

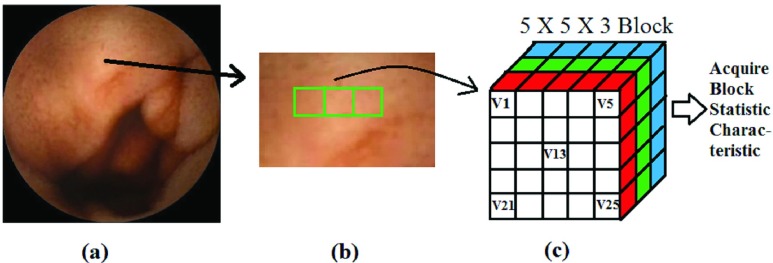

C. Block Based Feature Extraction

In WCE video, an individual pixel of a WCE image may be corrupted or distorted because of motion blur and the slow frame rate of the WCE capsule. Hence, it may not be a good idea to classify each pixel individually as bleeding or non-bleeding. In most bleeding detection methods, however, the analysis is performed at the pixel level; for example, different features are computed from each pixel [13], [14], [19]. To overcome this problem, rather than obtaining features from a single pixel, a neighborhood block of that pixel is considered for feature computation. Since, in general, even a very small bleeding area consists of a number of pixels, such block-based feature extraction is expected to overcome the problem of single-pixel distortion and offer feature consistency. Furthermore, depending on the amount of overlap between two successive blocks, block-based feature extraction may decrease the computational cost. For example, considering a single plane of a WCE image with $P \times Q$ pixels, if pixel values are directly used as features, the feature dimension will be $PQ$. However, if feature extraction is performed on $m \times m$ non-overlapping blocks in one plane, there will be $K$ blocks in total, where $K = PQ/m^2$. If the average value of each color plane of each block is considered as a feature, the feature dimension becomes $K$ per color plane ($3K$ over the three planes). Note that although only $K$ blocks instead of $PQ$ pixels are used in one channel, all pixels in each block are utilized to extract the feature. Thus, conventional feature selection or pixel down-sampling operations are not equivalent to block-based feature extraction. Fig. 5 illustrates block formation and local feature extraction. First, a given image is presented, followed by the formation of spatial blocks from the RGB color space. In the figure, red, green, and blue represent the respective color planes. Finally, local features are computed separately for each color plane.

FIGURE 5.

Representation of neighborhood block characteristic (a) given image; (b) a representation of consecutive block; (c) block and statistic characteristics.

A pixel intensity becomes a three-dimensional vector, denoted as $\mathbf{p} = [p^R, p^G, p^B]$, where each component lies in the range 0 to 255. For a block of size $m \times m$, the intensity of the $j$-th pixel of the $i$-th block is denoted as $\mathbf{p}_{i,j}$, and the mean value of the $i$-th block in each color plane is defined as

$$\mu_i^C = \frac{1}{m^2} \sum_{j=1}^{m^2} p_{i,j}^C \tag{3}$$

where $C \in \{R, G, B\}$ denotes the color plane. Finally, the block local feature for a statistical measure is composed as

$$F_i^{\mathrm{mean}} = [\mu_i^R, \mu_i^G, \mu_i^B] \tag{4}$$

In a similar way, other conventional statistical measures such as the median, minimum, and maximum of the $i$-th block are computed. To obtain better consistency in feature characteristics, overlapping blocks are considered. The percentage of overlap between successive blocks is varied, and the classification performance is tested in each case.
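A minimal sketch of block-wise statistics on one color plane is given below. The square block, the `block` and `step` parameters, and the function name `block_statistics` are illustrative assumptions; `step == block` gives non-overlapping blocks, while `step < block` gives overlapping blocks.

```python
from statistics import mean, median


def block_statistics(plane, block=4, step=4):
    """Compute mean, median, min and max for each block of one color plane.

    plane: 2-D list of pixel intensities (one color plane).
    block: side length of the square block (assumed square for simplicity).
    step:  shift between successive blocks; step < block means overlap.
    """
    features = []
    for top in range(0, len(plane) - block + 1, step):
        for left in range(0, len(plane[0]) - block + 1, step):
            values = [plane[y][x]
                      for y in range(top, top + block)
                      for x in range(left, left + block)]
            features.append({
                "top": top, "left": left,
                "mean": mean(values), "median": median(values),
                "min": min(values), "max": max(values),
            })
    return features
```

For a 4 × 4 plane with `block=2`, `step=2` yields 4 blocks and `step=1` (50% overlap) yields 9, which shows how the overlap percentage changes the number of local features.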

D. Proposed CHOBS Feature

The objective now is to develop a global feature of an image from the extracted block-based features. In order to utilize the statistical measures of all three color planes, a block-based color histogram is proposed. For a particular spatial block, a statistical feature (say, the mean) can be computed in the three different planes. The mean values obtained in those three planes can then be used to construct binary and decimal index values, as in the case of the pixel-based color histogram. The main difference is that, instead of a pixel intensity value in the three planes, the block feature values obtained in the three planes are used to construct the color histogram. Once the index values of all blocks are computed, the color histogram is constructed. Since a pixel value in RGB color space varies from 0 to 255, the value of any block statistic (e.g., mean, median, maximum, or minimum) is also confined to the range 0 to 255. Using the mean value of each block, a color histogram of a bleeding image with 1 bit per plane is constructed and presented in Table 2. In that table, only the MSB of each color plane is considered, which results in 8 different classes. From the table, it is observed that blocks corresponding to a particular color are grouped together.

TABLE 2. Representation of Color Histogram.

| R | G | B | Color Index | Block Count | Block/Bin Probability |

|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 744 | 0.327 |

| 0 | 0 | 1 | 1 | 0 | 0 |

| 0 | 1 | 0 | 2 | 0 | 0 |

| 0 | 1 | 1 | 3 | 0 | 0 |

| 1 | 0 | 0 | 4 | 626 | 0.275 |

| 1 | 0 | 1 | 5 | 0 | 0 |

| 1 | 1 | 0 | 6 | 805 | 0.354 |

| 1 | 1 | 1 | 7 | 100 | 0.044 |
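The construction of the histogram from block statistics can be sketched as follows. The function name `chobs_feature` and the truncation of each block mean to an integer before taking its MSBs are assumptions made for illustration; the block counts are copied from Table 2.

```python
def chobs_feature(block_means, n_bits=1):
    """Bin probabilities of the color histogram built from block means.

    block_means: iterable of (mean_r, mean_g, mean_b), each in 0..255.
    Returns a list of length 2**(3 * n_bits) that sums to 1 (or all zeros
    when no blocks are given).
    """
    shift = 8 - n_bits
    counts = [0] * (2 ** (3 * n_bits))
    for mean_r, mean_g, mean_b in block_means:
        # Assumed: truncate each mean to an integer, then keep its MSBs.
        r, g, b = (int(v) >> shift for v in (mean_r, mean_g, mean_b))
        counts[(r << (2 * n_bits)) | (g << n_bits) | b] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(counts)


# Block counts per color index as listed in Table 2.
table2_counts = [744, 0, 0, 0, 626, 0, 805, 100]
table2_probs = [round(c / sum(table2_counts), 3) for c in table2_counts]
```

The rounded values in `table2_probs` match the Block/Bin Probability column of Table 2.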

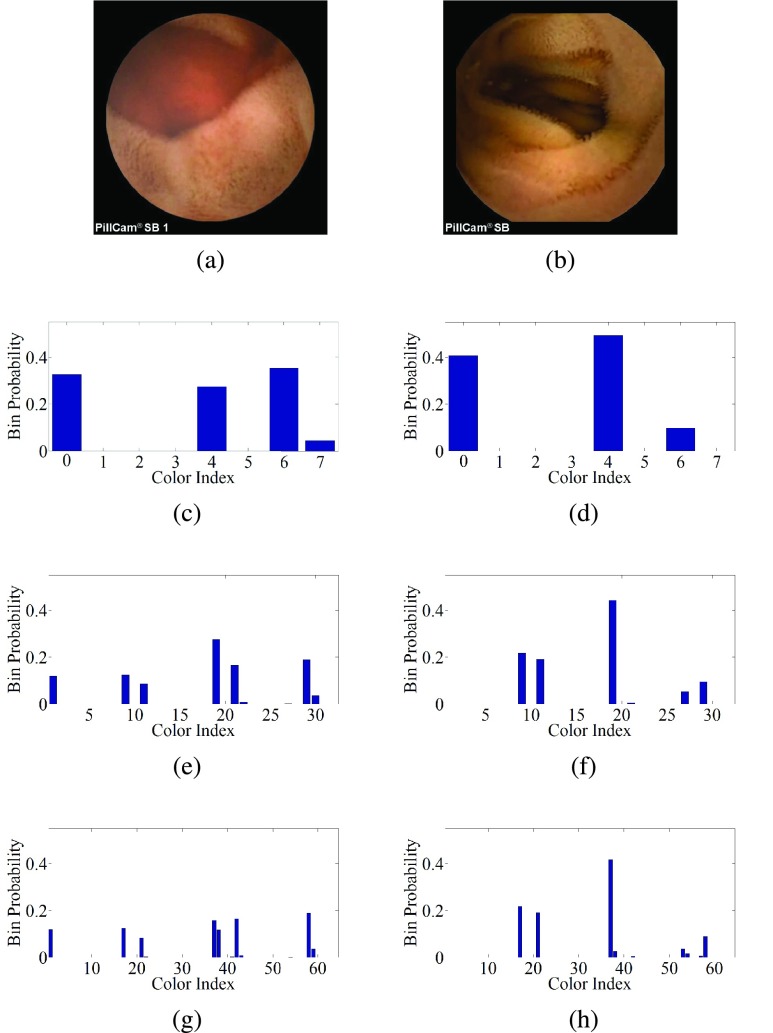

For better understanding, considering the bleeding and non-bleeding images presented in Fig. 6 (a) and (b), respectively, eight-bin histograms (a 1-bit representation gives $2^3 = 8$ bins) constructed from the individual color planes are first shown in Figs. 6 (c)-(d). Next, the 32-bin and 64-bin representations are shown in Figs. 6 (e)-(h). From the figure, it is observed that the 8-bin color histogram shows different patterns for the bleeding and non-bleeding cases, but with significant overlap. With an increase in the number of bins, however, clearly distinguishable color histogram bin patterns are obtained for the bleeding and non-bleeding cases (Figs. 6 (e)-(h)). Hence, the set of occurrence values of each color in the index image histogram is proposed as a potential global feature for classifying bleeding and non-bleeding images. This feature is termed the color histogram of block statistics (CHOBS) feature.

FIGURE 6.

Color histogram from proposed indexed image plane, (a) bleeding image; (b) non-bleeding image; (c) 8-bin (bleeding); (d) 8-bin (non-bleeding); (e) 32-bin (bleeding); (f) 32-bin (non-bleeding); (g) 64-bin (bleeding); (h) 64-bin (non-bleeding).

E. Feature Dimensionality Reduction

When color histograms with a higher number of bins are considered, the feature vector may become quite long. For example, considering 4 bits per color plane gives $2^{3 \times 4} = 4096$ choices, which yields a color histogram with up to 4096 bins. Moreover, the bin frequencies may not all be equally important for differentiating bleeding and non-bleeding images. In this regard, a two-stage scheme is incorporated for feature selection and dimensionality reduction: the first stage utilizes the histogram pattern and the second uses principal component analysis (PCA). Reducing the feature dimension lowers the computational burden on the classifier and also provides faster convergence in decision making.

1). Feature Dimensionality Reduction Utilizing Histogram Pattern

One major observation from Table 2 is that the blue intensity value in a WCE image remains lower than 128 due to the inherent characteristics of the GI tract. From the table, it is observed that when the blue index value is 1, the count is negligibly small or zero. Note that index value 1 represents the intensity range 128–255. In Table 2, $B = 1$ corresponds to index values 1, 3, 5, and 7. In addition, from Figs. 6 (c) and (d), it is observed that at color indices 1, 3, 5, and 7 the bin probability is close to zero, which does not help to distinguish bleeding and non-bleeding frames. Thus, to reduce the feature dimension, we propose to take only the bin probabilities that correspond to the blue intensity range 0–127. For example, for the 8-bin color histogram, the features at color indices 0, 2, 4, and 6 are used. Similarly, for the 64-bin histogram, the features at color indices $0, 1, 4, 5, \ldots, 60, 61$ are used. This approach reduces the feature dimension by 50% without losing any significant information regarding bleeding.
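The bin selection can be sketched as below, assuming the blue bits occupy the least significant positions of the index, as the index values in Table 2 imply. The function name `low_blue_bins` is illustrative.

```python
def low_blue_bins(n_bits):
    """Indices of histogram bins whose blue MSB is 0 (blue intensity 0..127).

    The blue bits are the lowest n_bits of the index, so the blue MSB sits
    at bit position n_bits - 1.
    """
    blue_msb_mask = 1 << (n_bits - 1)
    return [i for i in range(2 ** (3 * n_bits)) if not (i & blue_msb_mask)]


def reduce_histogram(bin_probabilities, n_bits):
    """Keep only the bin probabilities selected by low_blue_bins."""
    return [bin_probabilities[i] for i in low_blue_bins(n_bits)]
```

`low_blue_bins(1)` returns the four indices 0, 2, 4, and 6, and `low_blue_bins(2)` returns 32 of the 64 indices, so the feature dimension is halved in both cases.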

2). Feature Dimension Reduction Utilizing PCA

Principal component analysis is a very popular and efficient orthogonal linear transformation [20]. It projects the original feature vector onto a smaller subspace, which reduces the dimensionality of the feature space. PCA transforms the original $D$-dimensional feature space into the $L$-dimensional linear subspace that is spanned by the leading eigenvectors of the covariance matrix of the feature vectors in each cluster ($L < D$). For given data, PCA provides the optimal transformation in the least-squares sense. For a data matrix $X^T$ with zero empirical mean, where each row represents a different repetition of the experiment and each column gives the result from a particular probe, the PCA transformation is given by

$$Y^T = X^T W = V \Sigma^T \tag{5}$$

where the matrix $\Sigma$ is a $D \times T$ diagonal matrix with nonnegative real numbers on the diagonal and $W \Sigma V^T$ is the singular value decomposition of $X$. If the total number of training and test images is $T$ and the initial feature vector dimension is $D$ per image, the feature space has a dimension of $T \times D$. For the proposed method, applying PCA to the derived feature space can efficiently reduce the feature dimension without losing much information. Therefore, PCA is incorporated to further reduce the dimension of the color histogram feature vector.
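The link between the singular value decomposition and the PCA projection can be written out in a few lines. The symbols are chosen here for illustration: $X$ is the zero-mean data matrix with one column per image, $X = W \Sigma V^T$ is its singular value decomposition, and $W_L$ (an assumed notation) holds the first $L$ columns of $W$.

```latex
\begin{align}
X &= W \Sigma V^{T} \\
X^{T} W &= V \Sigma^{T} W^{T} W = V \Sigma^{T}
  \qquad \text{since } W^{T} W = I \\
Y_{L}^{T} &= X^{T} W_{L}
\end{align}
```

Keeping only the $L$ leading columns of $W$, which correspond to the largest singular values, gives each image an $L$-dimensional feature vector in place of the original one.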

F. K-Nearest Neighbor (KNN) Classifier

To classify bleeding and non-bleeding WCE images, the K-nearest neighbor (KNN) classifier is used. Though it is one of the simplest classifiers, this non-parametric classifier is, due to its high efficiency, one of the most widely used in pattern recognition. The KNN classifier classifies each test WCE image by comparing it with its K nearest neighbors in the training data set, using a distance function computed between the test and training features. After classification, the KNN classifier outputs a class membership, which is determined by the majority vote of the K nearest neighbors. In the proposed method, Euclidean distance is used to classify the test data set considering the class labels of the K nearest image patterns. After extensive experimentation with different values of K, a suitable value is chosen to achieve optimum performance.
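A minimal sketch of KNN classification with Euclidean distance and majority voting is shown below. The function name `knn_predict`, the labels "B" and "N", and the default `k=3` are illustrative assumptions; the value of K actually used is not stated in this section.

```python
from collections import Counter
from math import dist


def knn_predict(train_features, train_labels, query, k=3):
    """Label a query feature vector by majority vote of its k nearest neighbors.

    Distances are Euclidean. On a tied vote, the label that appears first
    among the nearest neighbors wins.
    """
    order = sorted(range(len(train_features)),
                   key=lambda i: dist(train_features[i], query))
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]
```

A brute-force search like this compares the query against every training vector, which is acceptable for a few thousand images with a reduced feature dimension.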

III. Bleeding Zone Localization

Once a bleeding image is detected in a WCE video, automatic marking of the bleeding zone is very helpful for the reviewer in diagnosing diseases. Automatic bleeding zone detection can provide numerous benefits, namely 1) confirming the bleeding detection decision for that image, 2) quick and focused visualization of the region of interest, and 3) exploring the change in bleeding characteristics across consecutive video frames. However, automatic bleeding zone localization is not available in most of the existing bleeding detection methods. In this section, an automatic bleeding zone localization scheme is proposed, which is applied to identify bleeding regions in detected bleeding frames. In the proposed method, local features are first extracted from different blocks; using those local features, the probabilities of the color histogram bins are computed and considered as the global feature, and a feature-based supervised classifier is then used to identify bleeding (B) or non-bleeding (N) images. Next, bleeding zone detection is carried out only on bleeding images. At this stage, the objective is to classify the blocks of a given bleeding image into two classes, bleeding and non-bleeding. After the block labels are obtained, the label of each pixel in a block needs to be determined. Finally, the bleeding zone is identified using pixel-wise marking of the given image. The major steps of the proposed bleeding zone localization procedure are as follows:

- Classify all the blocks of an image into bleeding and non-bleeding classes.
- Identify the label of each pixel in a block as bleeding or non-bleeding.
- Fine-tune the bleeding zone with the help of morphological operations.

A. Block Classification

In order to classify the blocks of a given bleeding image, the available local features of each block are considered. Note that block-based local features have already been extracted for bleeding frame detection, as described in subsection II-C. Because these available local features are reused, block classification adds no computational burden for feature extraction. Along with the block-based local features, the intensity value of the block center pixel in each color channel is included in the final feature vector. The block center pixel intensity is a strong representative of the block, which enhances the feature quality for block classification. The final feature vector of the $i$-th block is

$$F_i = [F_i^{\mathrm{mean}}, F_i^{\mathrm{median}}, F_i^{\mathrm{min}}, F_i^{\mathrm{max}}, P_i] \tag{6}$$

Here $F_i^{\mathrm{mean}}$ is defined in (4), the other block statistical features can be computed in a similar fashion, and $P_i$ is defined as

$$P_i = [p_{i,c}^R, p_{i,c}^G, p_{i,c}^B] \tag{7}$$

Here, $p_{i,c}^C$ corresponds to the central pixel intensity of the $i$-th block in color plane $C$. For classifying each block, a supervised KNN classifier is used with the final block features $F_i$. For the KNN classifier, the training dataset is built from a randomly chosen 10% of the bleeding images in the available database. The ground truth of bleeding localization in those images is marked by an expert physician. Bleeding blocks are collected from the ground truth marking and used as the training dataset.
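The block feature vector used for block classification can be sketched as follows. The choice of the four statistics (mean, median, minimum, maximum), their ordering, and the function name `block_feature_vector` are assumptions based on the statistics named in subsection II-C; a square block with an odd side length is assumed so that a unique center pixel exists.

```python
from statistics import mean, median


def block_feature_vector(block_rgb):
    """Concatenate per-plane block statistics with the center pixel intensity.

    block_rgb: square 2-D list of (r, g, b) tuples with an odd side length.
    Returns 15 values: mean, median, min and max for R, G, B (12 values),
    followed by the R, G, B intensities of the center pixel.
    """
    pixels = [p for row in block_rgb for p in row]
    planes = list(zip(*pixels))  # three tuples: all R, all G, all B values
    features = []
    for statistic in (mean, median, min, max):
        features.extend(statistic(plane) for plane in planes)
    center = len(block_rgb) // 2
    features.extend(block_rgb[center][center])
    return features
```

Because the block statistics are the same ones already computed for frame detection, only the three center-pixel values are new per block.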

B. Identifying the Label of Pixels

Since the labels of all the blocks in a bleeding frame are now known, the next step is to identify the label of each pixel in a block. One common approach is to give the block label (determined in the previous subsection) to every pixel of that block. A problem arises, however, in the case of overlapping blocks: a pixel may receive more than one label when it is a member of several blocks with different labels, which makes it challenging to identify the pixel label. Hence, a method is needed to label the pixels in a bleeding frame. In the proposed method, the membership status of a test pixel is first determined from the number of blocks that contain it. If a test pixel belongs to a single block, it is given the same label as that block. For example, if a test pixel belongs to a non-bleeding block, it is labeled as non-bleeding. From a clinical point of view, declaring a bleeding pixel as non-bleeding has more severe consequences than declaring a non-bleeding pixel as bleeding. Thus, in the proposed method, if a test pixel belongs to multiple blocks and any of those blocks is labeled as bleeding, the pixel is marked as bleeding. For instance, if a test pixel belongs to four overlapping blocks, say block-1, block-2, block-3, and block-4, and only block-3 is bleeding, the test pixel is declared as bleeding. For multiple overlapping blocks, a test pixel is declared as non-bleeding if and only if all the overlapping blocks are non-bleeding.
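The labeling rule amounts to a logical OR over all blocks that contain a pixel, which can be sketched as below. The block representation `(top, left, size, is_bleeding)` and the function name `label_pixels` are illustrative assumptions.

```python
def label_pixels(height, width, blocks):
    """Mark a pixel as bleeding if any block containing it is bleeding.

    blocks: list of (top, left, size, is_bleeding) for square blocks.
    Returns a height x width 2-D list of booleans (True = bleeding).
    """
    mask = [[False] * width for _ in range(height)]
    for top, left, size, is_bleeding in blocks:
        if not is_bleeding:
            continue  # non-bleeding blocks never overwrite a bleeding label
        for y in range(top, min(top + size, height)):
            for x in range(left, min(left + size, width)):
                mask[y][x] = True
    return mask
```

A pixel therefore stays non-bleeding only when every block covering it is non-bleeding, which matches the rule for overlapping blocks stated above.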

C. Finalizing the Bleeding Zone Using Morphology Operation

Pixel-based labeling yields a set of suspected bleeding pixels in the bleeding image. In general, a single isolated pixel is unlikely to belong to a bleeding zone. Such pixels may arise from intensity variation or from bleeding-like areas, and the choice of a hard threshold is another cause of isolated bleeding pixels. Simply discarding these isolated suspected pixels is not a good solution. Instead, we propose to check the homogeneity around each suspected pixel. To this end, two morphological operations are performed: morphological dilation followed by morphological erosion.

Morphological dilation connects closely spaced bleeding regions in a WCE image. It absorbs very small isolated non-bleeding regions or pixels that are surrounded by bleeding regions, and it also expands the boundary of the bleeding regions. This operation ensures that no pixel initially marked as bleeding is lost; rather, it enhances the bleeding (foreground) regions with respect to the non-bleeding background. After dilation, morphological erosion is performed. Erosion discards very small isolated regions and pixels that are candidates for bleeding zones, and it erodes and smooths the boundary of the bleeding zone. Hence, erosion ensures that the final bleeding zone contains only significantly large bleeding areas.
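A minimal sketch of dilation followed by erosion on a binary mask is given below. The 3x3 square structuring element, the one-pixel padding, and the function names are assumptions made for illustration; the structuring elements actually used are not stated in this section. When the same element is used for both steps, the combined operation is a morphological closing.

```python
def _neighbourhood(mask, r, c):
    """Yield the 3x3 neighbourhood of (r, c); cells outside the mask count as False."""
    h, w = len(mask), len(mask[0])
    for rr in range(r - 1, r + 2):
        for cc in range(c - 1, c + 2):
            yield 0 <= rr < h and 0 <= cc < w and mask[rr][cc]


def dilate(mask):
    """Binary dilation: a pixel becomes True if any neighbour is True."""
    return [[any(_neighbourhood(mask, r, c)) for c in range(len(mask[0]))]
            for r in range(len(mask))]


def erode(mask):
    """Binary erosion: a pixel stays True only if all neighbours are True."""
    return [[all(_neighbourhood(mask, r, c)) for c in range(len(mask[0]))]
            for r in range(len(mask))]


def close_mask(mask):
    """Dilation followed by erosion, padded so border pixels are not lost."""
    w = len(mask[0])
    padded = [[False] * (w + 2)]
    padded += [[False] + list(row) + [False] for row in mask]
    padded += [[False] * (w + 2)]
    closed = erode(dilate(padded))
    return [row[1:-1] for row in closed[1:-1]]  # remove the padding again


# Two bleeding regions separated by a one-pixel-wide non-bleeding column.
mask = [[True, True, False, True, True] for _ in range(3)]
closed = close_mask(mask)
```

Here the dilation bridges the narrow gap between the two regions and the subsequent erosion restores the outer boundary, so the two regions are merged into a single zone without losing any pixel that was initially marked as bleeding.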

IV. Simulation Result and Discussion

To illustrate the usefulness of the proposed method in identifying bleeding frames and zones, experiments are performed on several WCE videos. This section presents the simulation results and their discussion in three parts: 1) bleeding frame detection performance, 2) performance on continuous WCE video clips, and 3) bleeding zone detection performance.

A. Data Description

To analyze the performance of the proposed method, several WCE videos are collected from a widely used, publicly available database [21]. The ground truth labeling of bleeding and non-bleeding videos is provided by the database. Experiments are conducted on those WCE videos, and results are reported on 32 of them, of which 12 are labeled as bleeding and 20 as non-bleeding. Images in the bleeding videos are manually annotated as bleeding or non-bleeding by an expert physician, who also outlines the bleeding zones in the bleeding images. For bleeding frame detection, experiments are carried out on 2350 WCE frames, consisting of 450 bleeding images and 1900 non-bleeding images, which are extracted from those 32 WCE videos. The performance of the proposed method in terms of the bleeding detection criteria is compared with that of some recent methods [10], [12], [14]. In what follows, the performance of bleeding frame detection is presented first, considering different scenarios: variation in block size and in the amount of overlap between successive blocks, variation in the number of histogram bins, the effect of different block features and their combinations, the significance of the block-based approach, the effect of using different color planes, and the effect of different color plane histograms. Next, the bleeding detection performance on continuous WCE video clips is described. Finally, the bleeding zone detection performance is reported for some bleeding images.

B. Bleeding Frame Detection Criteria

There are four possible outcomes when detecting bleeding and non-bleeding images: 1) true bleeding ($T_B$), 2) true non-bleeding ($T_N$), 3) false non-bleeding ($F_N$), and 4) false bleeding ($F_B$). $T_B$ cases are bleeding images correctly detected as bleeding; similarly, $T_N$ cases are non-bleeding images correctly detected as non-bleeding. $F_N$ cases are bleeding images wrongly detected as non-bleeding; likewise, $F_B$ cases are non-bleeding images wrongly detected as bleeding. To measure the capability of correctly identifying bleeding and non-bleeding frames, three standard evaluation criteria, namely accuracy, sensitivity, and specificity [22], are used, which are defined as

$$\text{Accuracy} = \frac{T_B + T_N}{T_B + T_N + F_N + F_B} \tag{7}$$

$$\text{Sensitivity} = \frac{T_B}{T_B + F_N} \tag{8}$$

$$\text{Specificity} = \frac{T_N}{T_N + F_B} \tag{9}$$

Sensitivity is a measure of correctness in bleeding frame detection. In equation (8), the denominator ($T_B + F_N$, the true bleeding plus the false non-bleeding cases) represents the total number of actual bleeding images. As the number of false non-bleeding cases $F_N$ increases, sensitivity decreases. For bleeding frame detection, sensitivity plays the most vital role because it directly reflects how reliably true bleeding frames are detected, which is always the prime concern in bleeding frame detection research. Accuracy reflects the overall correctness of bleeding and non-bleeding frame detection, while specificity indicates the correctness in identifying non-bleeding images. For all these performance indicators, higher values indicate better performance.
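As a small worked example, the three criteria can be computed from the four outcome counts (true bleeding, true non-bleeding, false non-bleeding, false bleeding) as sketched below. The function name and the counts are hypothetical and chosen only to make the arithmetic easy to follow; they are not results from the experiments.

```python
def frame_metrics(tb, tn, fn, fb):
    """Accuracy, sensitivity and specificity from the four outcome counts.

    tb: bleeding frames detected as bleeding (true bleeding)
    tn: non-bleeding frames detected as non-bleeding (true non-bleeding)
    fn: bleeding frames detected as non-bleeding (false non-bleeding)
    fb: non-bleeding frames detected as bleeding (false bleeding)
    """
    total = tb + tn + fn + fb
    return {
        "accuracy": (tb + tn) / total,
        "sensitivity": tb / (tb + fn),  # denominator: all actual bleeding frames
        "specificity": tn / (tn + fb),  # denominator: all actual non-bleeding frames
    }


# Illustrative counts only: 100 bleeding and 200 non-bleeding frames.
m = frame_metrics(tb=90, tn=180, fn=10, fb=20)
```

With these counts all three criteria equal 0.9; raising the false non-bleeding count while keeping the number of bleeding frames fixed lowers the sensitivity, as noted above.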

C. Performance of Bleeding Image Detection

First, block-based local features, namely the mean, median, maximum, and minimum, are computed. Next, the CHOBS feature is computed. Finally, a KNN classifier is used, for which various values of k are tested. Unless otherwise specified, the results presented here use a single fixed value of k. Performance is evaluated using 10-fold cross validation.
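The evaluation protocol can be sketched as a KNN classifier wrapped in a 10-fold cross validation loop. The sketch below makes several assumptions that are not taken from the paper: Euclidean distance, majority voting, deterministic interleaved folds instead of random ones, and `k=1` as a placeholder value. The toy feature vectors stand in for the CHOBS features.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k):
    """Label `query` by majority vote among its k nearest training vectors."""
    order = sorted(range(len(train_x)),
                   key=lambda i: math.dist(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def cross_validate(features, labels, k=1, folds=10):
    """Return the fraction of samples classified correctly over all folds."""
    n = len(features)
    correct = 0
    for fold in range(folds):
        test_idx = set(range(fold, n, folds))  # every `folds`-th sample
        train_x = [features[i] for i in range(n) if i not in test_idx]
        train_y = [labels[i] for i in range(n) if i not in test_idx]
        for i in test_idx:
            correct += knn_predict(train_x, train_y, features[i], k) == labels[i]
    return correct / n


# Toy data: two well-separated clusters standing in for the two classes.
features = [[0.01 * i, 0.0] for i in range(10)]
features += [[1.0 + 0.01 * i, 1.0] for i in range(10)]
labels = [0] * 10 + [1] * 10
score = cross_validate(features, labels, k=1, folds=10)
```

Each sample is used for testing exactly once and never appears in the training set of its own fold, which is the property that cross validation relies on.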

Bleeding frame detection performance for block sizes of $3\times 3$, $5\times 5$, $7\times 7$, and $9\times 9$ is presented in Table 3. One may expect a larger block to provide more consistent statistical features, which holds only up to a certain size, because a large block may include both bleeding and non-bleeding pixels. Therefore, a moderate block size is more appropriate for achieving the best performance, as the results in Table 3 show. In this case, the median feature is considered. Sensitivity increases as the block size grows from $3\times 3$ to $7\times 7$, all three performance criteria peak at $7\times 7$, and a further increase in block size to $9\times 9$ degrades the performance.

In Table 4, the bleeding image detection performance of the proposed method is presented for varying amounts of overlap between successive $7\times 7$ blocks. The amount of overlap is measured by the number of rows or columns shared by two successive blocks. For example, if one column of a $7\times 7$ block overlaps, 7 pixels are involved, so the percentage overlap is 7/49 = 14%. Overlaps of 0%, 14%, 28%, and 43% are evaluated and reported in Table 4. More than 50% overlap between successive blocks is not considered, to limit the computational cost. The table shows that a small overlap (14%) provides a slight improvement in performance, whereas larger overlaps degrade it.
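The overlap arithmetic can be made concrete with a short sketch. The function names are hypothetical, and the handling of trailing pixels that do not fill a complete block (they are simply ignored here) is an assumption rather than something stated in the paper.

```python
def overlap_percent(block_size, shared_lines):
    """Percentage of a block's pixels shared with the next block.

    shared_lines is the number of rows or columns that two successive
    blocks have in common.
    """
    return 100.0 * shared_lines * block_size / (block_size * block_size)


def block_starts(length, block_size, shared_lines):
    """Start offsets of successive blocks along one image axis.

    Assumption: trailing pixels that do not fill a whole block are ignored.
    """
    stride = block_size - shared_lines
    return list(range(0, length - block_size + 1, stride))


# Overlap for one, two and three shared columns of a 7x7 block.
percentages = [overlap_percent(7, n) for n in (1, 2, 3)]

# Block positions along a 21-pixel axis without and with one shared column.
starts_no_overlap = block_starts(21, 7, 0)
starts_one_shared = block_starts(21, 7, 1)
```

One, two, and three shared columns correspond to about 14.3%, 28.6%, and 42.9% of the 49 pixels in a block, which match the 14%, 28%, and 43% settings evaluated here. With one shared column the stride drops from 7 to 6 pixels, so more blocks are needed to cover the same image, which is why large overlaps raise the computational cost.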

TABLE 3. The Effect of Block Size on Bleeding Frame Detection Performance.

| Block Size | Sen. | Spec. | Accu. |
|---|---|---|---|
| 3 by 3 | 93.58% | 98.94% | 97.91% |
| 5 by 5 | 93.75% | 98.90% | 97.87% |
| 7 by 7 | 94.22% | 99.22% | 98.26% |
| 9 by 9 | 91.93% | 98.38% | 96.89% |

TABLE 4. The Effect of Block Overlapping on Bleeding Frame Detection Performance.

| Block Size | Overlap (%) | Sen. | Spec. | Accu. |
|---|---|---|---|---|
| 7 by 7 | 0% | 94.22% | 99.22% | 98.26% |
| 7 by 7 | 14% | 94.92% | 99.23% | 98.30% |
| 7 by 7 | 28% | 93.09% | 99.11% | 97.96% |
| 7 by 7 | 43% | 93.84% | 98.88% | 97.91% |

To illustrate how performance varies with the number of bins in the color histogram, the results obtained using 8, 16, 32, 64, 128, 256, 512, 1024, 2048, and 4096 bins are reported in Table 5. Note that the number of bins equals the number of features; therefore, more than 4096 bins are not considered, to avoid an excessively large feature vector. With a higher number of bins, blocks are grouped into more classes, so more discriminative features between bleeding and non-bleeding frames are obtained. As a result, better performance is achieved with a larger number of bins.

TABLE 5. Performance of Bleeding Frame Detection Varying Histogram Bin.

| Block Size | Histogram Bin | Sen. | Spec. | Accu. |
|---|---|---|---|---|
| 7 by 7 | 8 | 46.17% | 87.42% | 79.49% |
| 7 by 7 | 16 | 79.46% | 95.89% | 92.72% |
| 7 by 7 | 32 | 79.61% | 95.95% | 92.81% |
| 7 by 7 | 64 | 85.03% | 96.79% | 94.55% |
| 7 by 7 | 128 | 88.88% | 98.36% | 96.60% |
| 7 by 7 | 256 | 93.09% | 98.74% | 97.66% |
| 7 by 7 | 512 | 94.22% | 99.22% | 98.26% |
| 7 by 7 | 1024 | 94.55% | 99.26% | 98.34% |
| 7 by 7 | 2048 | 95.56% | 99.64% | 98.89% |
| 7 by 7 | 4096 | 97.16% | 99.58% | 99.11% |

Next, the performance of the block-based features, namely the block mean, median, minimum, and maximum, is tested, and the results are reported in Table 6. The best performance is achieved with the block minimum feature. This suggests that the minimum color values of bleeding blocks are very similar to one another but differ markedly from those of non-bleeding blocks.

TABLE 6. Performance of Bleeding Frame Detection Varying Local Feature of Block.

| Block Size | Hist. Bin | Local Feature | Sen. | Spec. | Accu. |
|---|---|---|---|---|---|
| 7 by 7 | 4096 | Mean | 97.46% | 99.12% | 98.77% |
| 7 by 7 | 4096 | Median | 97.16% | 99.58% | 99.11% |
| 7 by 7 | 4096 | Maximum | 96.23% | 99.15% | 98.60% |
| 7 by 7 | 4096 | Minimum | 98.03% | 99.58% | 99.28% |

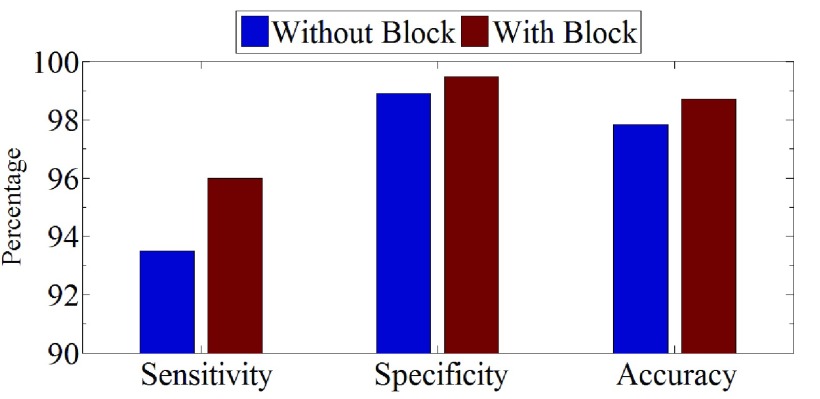

One major contribution of this paper is the block-based feature extraction. To demonstrate the effect of block-based local features on classification performance, Fig. 7 compares the performance obtained with and without the block-based approach. Without blocks, the block-based local features described in Section II-C are not available; in that case, a global feature is obtained from the entire image using each individual pixel. The figure shows the bleeding detection performance in terms of sensitivity, specificity, and accuracy. As expected from the earlier discussion, features obtained with blocks provide better classification performance than those obtained without the block-based approach.

FIGURE 7. Effect of the Block-Based Approach on Bleeding Detection Performance.

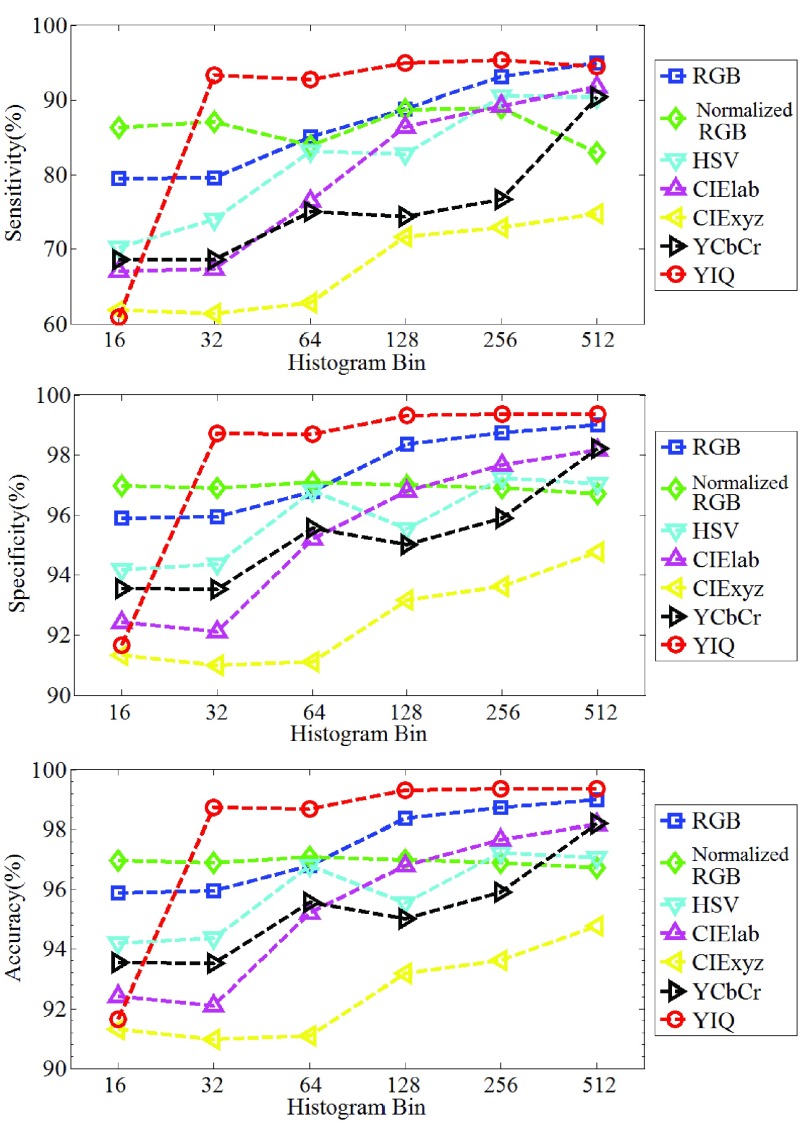

Although all the above results are reported in RGB color space, the proposed CHOBS feature extraction method is also tested in other color spaces, namely normalized RGB, HSV, CIElab, CIExyz, YCbCr, and YIQ. Fig. 8 presents all the performance indicators as the number of color histogram bins varies. Among the seven color spaces, RGB and YIQ show highly satisfactory performance in terms of all criteria.

FIGURE 8. Bleeding Detection Performance in Different Color Spaces.

1). Effect of Feature Dimension Reduction Scheme on Performance

One of the major contributions of this work is the reduction of the dimension of the CHOBS feature. This scheme reduces the computational cost without compromising the bleeding detection performance. Table 7 compares the performance with and without feature dimension reduction. First, the feature dimension is reduced by 50% (from 4096 to 2048) by utilizing the histogram pattern, as described in subsection II-E.1. Second, the feature dimension is further reduced using PCA. The table shows that the reduction scheme offers nearly the same performance while reducing the feature size from 4096 to 16, and the smaller feature vector significantly reduces the computational cost of classification.

TABLE 7. Effect of Feature Dimensionality Reduction on Bleeding Frame Detection Performance.

| Reduction | Feature Dim. | Sen. | Spec. | Accu. |
|---|---|---|---|---|
| Without | 4096 | 98.03% | 99.58% | 99.28% |
| With | 2048 | 97.79% | 99.52% | 99.23% |
| With | 1024 | 97.40% | 99.48% | 99.06% |
| With | 512 | 97.65% | 99.58% | 99.19% |
| With | 256 | 97.57% | 99.57% | 99.19% |
| With | 128 | 97.70% | 99.63% | 99.23% |
| With | 64 | 97.83% | 99.58% | 99.23% |
| With | 32 | 97.57% | 99.58% | 99.19% |
| With | 16 | 97.85% | 99.47% | 99.15% |
| With | 8 | 97.13% | 99.26% | 98.81% |
| With | 4 | 91.60% | 98.11% | 96.89% |

2). Performance Comparison with Other Established Methods

To further evaluate the proposed color histogram, it is compared with histogram features constructed from the individual red, green, and blue planes, and with the combination of the features of all three planes in RGB space. The results are reported in Table 8. The proposed method performs far better than the individual color plane histogram features as well as the concatenated features obtained from all three color planes. One major advantage of the proposed CHOBS feature is the drastic reduction in feature dimension: as shown in Table 8, a feature dimension of only 16 (compared with 256 or 768) yields much better performance.

TABLE 8. Performance Comparison of Different Histogram Bin Features of RGB Plane (%).

| Block Size | Hist. Feature | Feat. Dim. | Sen. | Spec. | Accu. |
|---|---|---|---|---|---|
| 7 by 7 | R plane | 256 | 84.13% | 96.68% | 94.26% |
| 7 by 7 | G plane | 256 | 79.04% | 93.69% | 90.89% |
| 7 by 7 | B plane | 256 | 74.14% | 93.65% | 89.79% |
| 7 by 7 | Combined R, G, B | 768 | 94.50% | 98.95% | 98.06% |
| 7 by 7 | Proposed CHOBS | 16 | 97.85% | 99.47% | 99.15% |

To compare the performance of the proposed method, six recently reported methods are taken into consideration [4], [7], [10], [13]–[15]. The Local Binary Pattern (LBP) based feature [7] is one of the established approaches to bleeding detection; in the LBP-based method, an 8-bin histogram is used for feature extraction. The method reported in [14] uses an 80-bin word-based color histogram for feature extraction. In [10], statistical features computed from the histogram probability are employed to detect bleeding frames; in implementing that method, the best feature combination mentioned in that paper is used. The method in [13] is included for comparison because it uses the histogram of an index image constructed from each pixel of the WCE image. In [23], statistical features computed from the ROI of a given image in CMYK (cyan-magenta-yellow-black) color space are used to detect bleeding frames. Comparison results are presented in Table 9. For a fair comparison, the same WCE image dataset and classifier are used for all six methods. The proposed method is denoted CHOBS (color histogram of block statistics). The table shows that the proposed method outperforms all other reported methods in terms of all performance indicators. The probable reason is its ability to overcome some serious problems that WCE videos usually face, such as single-pixel randomness, illumination changes, and image distortion. With the proposed method, 18 out of 450 bleeding images are incorrectly detected as non-bleeding. Inspection of these failures shows that the method cannot identify those images as bleeding because of image noise, obstacles between the subject and the camera lens, or the absence of a significant bleeding region.

TABLE 9. Performance Comparison of Different Features.

Poor video quality caused by artifacts such as noise, illumination changes, motion blur, or low resolution can degrade capsule endoscopy images. To analyze the effect of a change in resolution on bleeding frame detection performance, an experiment is conducted in which the original images are resized to two lower resolutions. The proposed method is employed to classify the bleeding and non-bleeding frames in all three cases, and the results are shown in Table 10. The proposed method offers satisfactory performance even for low-resolution images.

TABLE 10. Performance Comparison of Different Image Resolution.

| Resolution | Sen. | Spec. | Accu. |
|---|---|---|---|
| Original | 98.03% | 99.58% | 99.28% |
| First reduced resolution | 96.79% | 99.37% | 98.85% |
| Second reduced resolution | 95.13% | 98.79% | 98.09% |

To handle video quality degradation caused by factors other than resolution, different image enhancement schemes are available in the literature [24]–[27]. Some example images are shown in Fig. 9 to illustrate poor video quality. Imtiaz and Wahid [24] proposed a two-stage color image enhancement method that first performs gray-level enhancement and then space-variant chrominance mapping for color reproduction. WCE images may be dark because of the limited illumination of the LEDs and the complex conditions in the GI tract; to enhance such images, Li and Meng proposed an adaptive contrast diffusion method [25]. In WCE recordings, visibility may also be impaired by air bubbles, food residues, and bile pigments, even with good bowel preparation. Sakai et al. [26] studied detectability using flexible spectral imaging color enhancement (FSICE), and Kobayashi et al. [27] investigated the advantages of FSICE in detail for detecting small bowel lesions. A possible remedy for the video quality problem is to apply existing image enhancement techniques as a preprocessing step prior to feature extraction in the proposed method, which is left as potential future work.

FIGURE 9. Sample images that are affected by video artifact.

D. Performance in Continuous WCE Video Clip

This subsection demonstrates bleeding frame detection in continuous WCE video clips. Five WCE bleeding videos are considered, namely 1) D170 bleeding, 2) bleeding5, 3) bleeding3, 4) bleeding2, and 5) 23 bleeding, all of which are publicly available in [21]. The videos are chosen to cover different types of bleeding frames as well as variations in the number and position of frames. First, image frames are extracted from each video clip and the proposed final features are calculated. Then, the bleeding or non-bleeding decision is obtained by applying the KNN classifier, and the performance is reported in Table 11. When classifying a test frame of a particular video, the images of that video are excluded from the training dataset. Four of the five videos show satisfactory performance in terms of all performance criteria; the exception is 23 bleeding, because of numerous frames with faint, small bleeding areas. The overall performance, reported in the last row of the table, is quite satisfactory.
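The exclusion of a test video from the training data can be sketched as a simple split by video name. The record layout `(video_name, feature_vector, label)`, the function name, and the toy records below are hypothetical and serve only to illustrate the idea.

```python
def split_by_video(records, test_video):
    """Split records so that no frame of `test_video` is used for training.

    records: list of (video_name, feature_vector, label) tuples
    (hypothetical layout). Returns (train, test).
    """
    train = [rec for rec in records if rec[0] != test_video]
    test = [rec for rec in records if rec[0] == test_video]
    return train, test


# Toy records; the feature vectors and labels are placeholders.
records = [
    ("bleeding2", [0.9, 0.1], 1),
    ("bleeding2", [0.8, 0.2], 1),
    ("bleeding3", [0.2, 0.7], 0),
    ("bleeding3", [0.7, 0.3], 1),
]
train, test = split_by_video(records, "bleeding3")
```

Splitting by video rather than by frame keeps near-identical consecutive frames of the same clip from appearing on both sides of the split, which would otherwise make the reported performance optimistic.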

TABLE 11. Performance Result of Continuous Video Clip.

| Video Name | No. of Frames (B/N) | Sen. | Spec. | Accu. |
|---|---|---|---|---|
| D170 bl. | 96/4 | 98.96% | 75.00% | 99.00% |
| bleeding5 | 22/78 | 90.91% | 96.15% | 95.00% |
| bleeding3 | 5/95 | 100.00% | 89.47% | 90.00% |
| bleeding2 | 100/0 | 98.00% | N/A | 98.00% |
| 23 bl. | 27/73 | 81.48% | 98.63% | 94.00% |
| overall | 250/250 | 96.00% | 93.60% | 94.90% |

E. Performance Results for Bleeding Zone Detection

1). Quantitative Analysis

To measure the quantitative performance of the proposed bleeding zone detection method, a pixel-wise comparison is performed between the marked bleeding zone and the ground truth labeled by the physicians. The comparison has four possible outcomes: true positive (TP), true negative (TN), false positive (FP), and false negative (FN). There are two true cases: 1) bleeding pixels correctly labeled as bleeding (TP) and 2) non-bleeding pixels correctly labeled as non-bleeding (TN). Similarly, there are two false cases: 1) bleeding pixels that are not labeled as bleeding (FN) and 2) non-bleeding pixels incorrectly labeled as bleeding (FP). From these four outcomes, three criteria are calculated to represent the performance, namely precision, the false positive ratio (FPR), and the false negative ratio (FNR) [28], [29], which are computed according to the following formulas:

|
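A minimal sketch of the pixel-wise comparison is given below. The counting of the four outcomes follows the description above; the three ratios, however, use the conventional confusion-matrix definitions (precision = TP/(TP+FP), FPR = FP/(FP+TN), FNR = FN/(FN+TP)), which are an assumption here and may differ from the definitions in [28], [29]. The function names and the toy masks are hypothetical.

```python
def zone_counts(detected, truth):
    """Count TP, TN, FP and FN by comparing two binary masks pixel by pixel."""
    tp = tn = fp = fn = 0
    for det_row, gt_row in zip(detected, truth):
        for det, gt in zip(det_row, gt_row):
            if det and gt:
                tp += 1  # bleeding pixel labeled as bleeding
            elif not det and not gt:
                tn += 1  # non-bleeding pixel labeled as non-bleeding
            elif det:
                fp += 1  # non-bleeding pixel labeled as bleeding
            else:
                fn += 1  # bleeding pixel missed
    return tp, tn, fp, fn


def zone_ratios(tp, tn, fp, fn):
    """Conventional ratio definitions (assumed, not taken from the paper)."""
    return {
        "precision": tp / (tp + fp),
        "fpr": fp / (fp + tn),
        "fnr": fn / (fn + tp),
    }


# Toy 2x4 masks: 1 marks a bleeding pixel, 0 a non-bleeding pixel.
detected = [[1, 1, 0, 0], [1, 0, 0, 0]]
truth = [[1, 1, 1, 0], [0, 0, 0, 0]]
counts = zone_counts(detected, truth)
ratios = zone_ratios(*counts)
```

For these toy masks the counts are TP = 2, TN = 4, FP = 1, and FN = 1, so one of the three true bleeding pixels is missed and one of the five non-bleeding pixels is falsely marked.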

Precision describes the correctness of bleeding zone detection, so a higher value is desirable. In contrast, FPR and FNR indicate error, so lower values indicate better performance. For the quantitative analysis, 70 bleeding images collected from 10 different bleeding videos are considered. The ground truth of the bleeding zone in those images is marked by physicians. Table 12 presents the bleeding zone detection results of the proposed method for different block sizes. Competitive performance is obtained in all cases. For example, increasing the block size to $9\times 9$ offers the lowest FPR but the highest FNR and the lowest precision. In terms of precision, the $7\times 7$ block provides the most satisfactory performance, with reasonable values of FPR and FNR.

TABLE 12. Bleeding Zone Detection Performance of Different Block Size.

| Block Size | Precision | FPR | FNR |
|---|---|---|---|
| 3 by 3 | 94.28% | 2.14% | 8.46% |
| 5 by 5 | 94.75% | 4.01% | 10.31% |
| 7 by 7 | 95.33% | 3.14% | 10.88% |
| 9 by 9 | 94.07% | 1.34% | 16.35% |

Different amounts of overlap are investigated for the $7\times 7$ block and reported in Table 13. The performance of bleeding zone detection is found to be less sensitive to the amount of overlap between successive blocks. However, as in Table 4, a 14% overlap provides the best results.

TABLE 13. The Effect of Block Overlapping on Bleeding Zone Detection Performance.

| Block Size | Overlap | Precision | FPR | FNR |
|---|---|---|---|---|
| 7 by 7 | 0% | 95.33% | 3.14% | 10.88% |
| 7 by 7 | 14% | 95.75% | 3.11% | 10.38% |
| 7 by 7 | 28% | 94.80% | 3.49% | 16.46% |
| 7 by 7 | 43% | 94.67% | 4.13% | 15.80% |

Next, the performance of the proposed bleeding zone detection method is compared with that of a recently developed method [14]. The comparison is presented in Table 14. The table shows that precision is improved, from 93.61% to 95.75%. The most significant improvement is observed in FNR, which is reduced by about 28 percentage points compared to [14]. However, the FPR is slightly (around 1 percentage point) higher than that of the Yuan method. Overall, the performance of the proposed method is found to be superior to that of [14].

TABLE 14. Performance Comparison of Bleeding Zone Detection.

| Method | Precision | FPR | FNR |
|---|---|---|---|
| Yuan method [14] | 93.61% | 2.14% | 38.60% |
| Proposed block based classification | 95.75% | 3.11% | 10.38% |

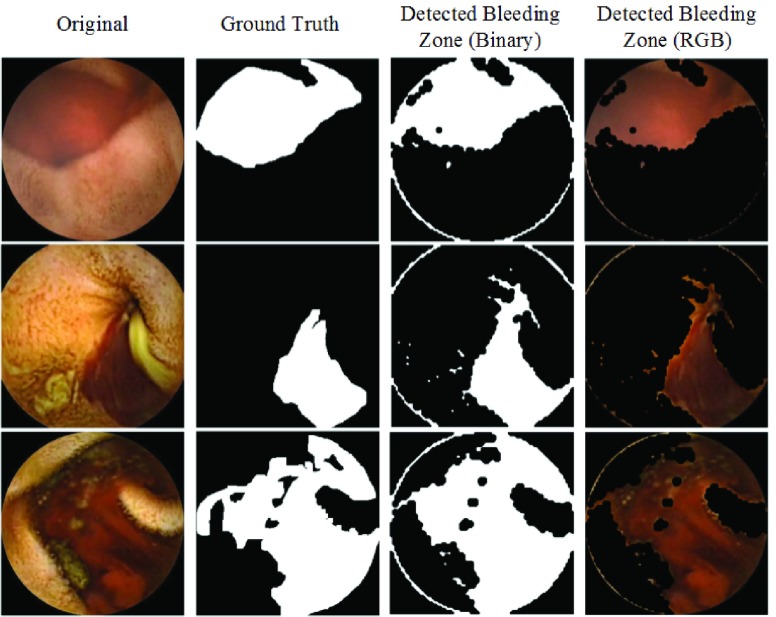

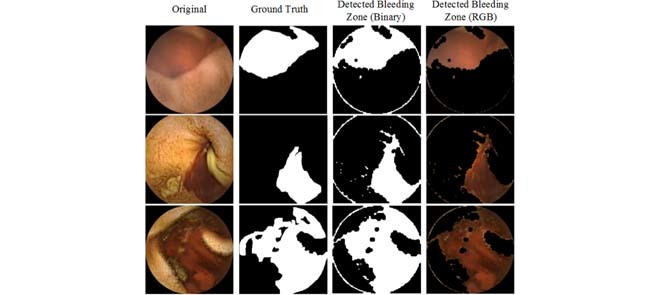

2). Qualitative Analysis

For the qualitative analysis, four bleeding frames from four different videos are presented in Fig. 10. The first column shows the original image, the second column the bleeding zone ground truth, the third column the detected bleeding region before the morphological operation, and the final column the bleeding zone as a binary image after the morphological operation. The proposed method is seen to mark the bleeding zone with high precision.

FIGURE 10. Qualitative analysis of bleeding zone detection.

V. Conclusion

In this paper, a new technique for bleeding frame and zone detection in wireless capsule endoscopy video recordings is presented. The use of block-based local feature extraction overcomes the pixel randomness problem. The proposed CHOBS feature uniquely combines information from all three color planes and provides consistent feature quality. A two-stage feature reduction is incorporated, the first stage utilizing the histogram pattern and the second using PCA. The proposed method exhibits high sensitivity, specificity, and accuracy in comparison with other established methods. Moreover, a bleeding zone localization method is developed using the block local features, which requires no additional feature computation. First, the blocks of a bleeding frame are classified using a supervised classifier, and then pixels are marked using the proposed interpolation scheme. Finally, bleeding zones are delineated by applying morphological operations. The proposed method is thus promising for identifying bleeding zones in a bleeding image.

Acknowledgment

The authors would like to express their sincere gratitude towards Dr. M. G. Kibria and Dr. S. N. F. Rumi of Dhaka Medical College and Hospital, who helped in finalizing the ground truth of bleeding image and zone.

References

- [1].Adler D. G. and Gostout C. J., “Wireless capsule endoscopy,” Hospital Phys., vol. 39, no. 5, pp. 14–22, 2003. [Google Scholar]

- [2].Iddan G., Meron G., Glukhovsky A., and Swain P., “Wireless capsule endoscopy,” Nature, vol. 405, p. 417, May 2000. [DOI] [PubMed] [Google Scholar]

- [3].(2014). The National Digestive Diseases Information Clearinghouse. [Online]. Available: http://digestive.niddk.nih.gov/ddiseases/pubs/bleeding

- [4].Liu J. and Yuan X., “Obscure bleeding detection in endoscopy images using support vector machines,” Optim. Eng., vol. 10, no. 2, pp. 289–299, 2008. [Google Scholar]

- [5].Liangpunsakul S., Mays L., and Rex D. K., “Performance of given suspected blood indicator,” Amer. J. Gastroenterol., vol. 98, no. 12, pp. 2676–2678, 2003. [DOI] [PubMed] [Google Scholar]

- [6].Mackiewicz M. W., Fisher M., and Jamieson C., “Bleeding detection in wireless capsule endoscopy using adaptive colour histogram model and support vector classification,” Proc. SPIE, vol. 6914, p. 69140R, Mar. 2008. [Google Scholar]

- [7].Li B. and Meng M. Q.-H., “Computer-aided detection of bleeding regions for capsule endoscopy images,” IEEE Trans. Biomed. Eng., vol. 56, no. 4, pp. 1032–1039, Apr. 2009. [DOI] [PubMed] [Google Scholar]

- [8].Fu Y., Zhang W., Mandal M., and Meng M. Q.-H., “Computer-aided bleeding detection in WCE video,” IEEE J. Biomed. Health Informat., vol. 18, no. 2, pp. 636–642, Mar. 2014. [DOI] [PubMed] [Google Scholar]

- [9].Guobing P., Guozheng Y., Xiangling Q., and Jiehao C., “Bleeding detection in wireless capsule endoscopy based on probabilistic neural network,” J. Med. Syst., vol. 35, pp. 1477–1484, Dec. 2011. [DOI] [PubMed] [Google Scholar]

- [10].Sainju S., Bui F. M., and Wahid K., “Bleeding detection in wireless capsule endoscopy based on color features from histogram probability,” in Proc. 26th Annu. IEEE Can. Conf. Electr. Comput. Eng. (CCECE), May 2013, pp. 1–4. [Google Scholar]

- [11].Ghosh T., Fattah S. A., and Wahid K. A., “Automatic bleeding detection in wireless capsule endoscopy based on RGB pixel intensity ratio,” in Proc. Int. Conf. Electr. Eng. Inf. Commun. Technol. (ICEEICT), Apr. 2014, pp. 1–4. [Google Scholar]

- [12].Ghosh T., Bashar S. K., Alam M. S., Wahid K., and Fattah S. A., “A statistical feature based novel method to detect bleeding in wireless capsule endoscopy images,” in Proc. Int.Conf. Informat. Electron. Vis. (ICIEV), 2014, pp. 1–4. [Google Scholar]

- [13].Ghosh T., Fattah S. A., Shahnaz C., and Wahid K. A., “An automatic bleeding detection scheme in wireless capsule endoscopy based on histogram of an RGB-indexed image,” in Proc. 36th Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. (EBMC), Aug. 2014, pp. 4683–4686. [DOI] [PubMed] [Google Scholar]

- [14].Yuan Y., Li B., and Meng M. Q.-H., “Bleeding frame and region detection in the wireless capsule endoscopy video,” IEEE J. Biomed. Health Inform., vol. 20, no. 2, pp. 624–630, Mar. 2016. [DOI] [PubMed] [Google Scholar]

- [15].Ghosh T., Bashar S. K., Fattah S. A., Shahnaz C., and Wahid K. A., “A feature extraction scheme from region of interest of wireless capsule endoscopy images for automatic bleeding detection,” in Proc. IEEE Symp. Signal Process. Inf. Technol. (ISSPIT), Dec. 2014, pp. 1–4.

- [16].Hassan A. R. and Haque M. A., “Computer-aided gastrointestinal hemorrhage detection in wireless capsule endoscopy videos,” Comput. Methods Programs Biomed., vol. 122, no. 3, pp. 341–353, 2015.

- [17].Hafner J., Sawhney H. S., Equitz W., Flickner M., and Niblack W., “Efficient color histogram indexing for quadratic form distance functions,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 17, no. 7, pp. 729–736, Jul. 1995.

- [18].Stricker M. and Swain M., “The capacity of color histogram indexing,” in Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 1994, pp. 704–708.

- [19].Sainju S., Bui F. M., and Wahid K., “Automated bleeding detection in capsule endoscopy videos using statistical features and region growing,” J. Med. Syst., vol. 38, pp. 1–11, Apr. 2014.

- [20].Wold S., Esbensen K., and Geladi P., “Principal component analysis,” Chemometrics Intell. Lab. Syst., vol. 2, nos. 1–3, pp. 37–52, 1987.

- [21].(2014) The Capsule Endoscopy. [Online]. Available: http://www.capsuleendoscopy.org

- [22].Altman D. G. and Bland J. M., “Diagnostic tests. 1: Sensitivity and specificity,” Brit. Med. J., vol. 308, no. 6943, p. 1552, 1994.

- [23].Ghosh T. et al., “An automatic bleeding detection technique in wireless capsule endoscopy from region of interest,” in Proc. IEEE Int. Conf. Digit. Signal Process. (DSP), Jul. 2015, pp. 1293–1297.

- [24].Imtiaz M. S. and Wahid K. A., “Color enhancement in endoscopic images using adaptive sigmoid function and space variant color reproduction,” Comput. Math. Methods Med., vol. 2015, May 2015, Art. no. 607407.

- [25].Li B. and Meng M. Q.-H., “Wireless capsule endoscopy images enhancement via adaptive contrast diffusion,” J. Vis. Commun. Image Represent., vol. 23, no. 1, pp. 222–228, 2012.

- [26].Sakai E. et al., “Capsule endoscopy with flexible spectral imaging color enhancement reduces the bile pigment effect and improves the detectability of small bowel lesions,” BMC Gastroenterol., vol. 12, no. 1, p. 83, 2012.

- [27].Kobayashi Y. et al., “Efficacy of flexible spectral imaging color enhancement on the detection of small intestinal diseases by capsule endoscopy,” J. Digestive Diseases, vol. 13, no. 12, pp. 614–620, 2012.

- [28].Conversano F. et al., “Hepatic vessel segmentation for 3D planning of liver surgery: Experimental evaluation of a new fully automatic algorithm,” Acad. Radiol., vol. 18, no. 4, pp. 461–470, 2011.

- [29].Conversano F., Casciaro E., Franchini R., Casciaro S., and Lay-Ekuakille A., “Fully automatic 3D segmentation measurements of human liver vessels from contrast-enhanced CT,” in Proc. IEEE Med. Meas. Appl. (MeMeA), Jun. 2014, pp. 1–5.