Abstract

Forming valid predictions about the environment is crucial to survival. However, whether humans are able to form valid predictions about natural stimuli based on their temporal statistical regularities remains unknown. Here, we presented subjects with tone sequences with pitch fluctuations that, over time, capture long-range temporal dependence structures prevalent in natural stimuli. We found that subjects were able to exploit such naturalistic statistical regularities to make valid predictions about upcoming items in a sequence. Magnetoencephalography (MEG) recordings revealed that slow, arrhythmic cortical dynamics tracked the evolving pitch sequence over time such that neural activity at a given moment was influenced by the pitch of up to seven previous tones. Importantly, such history integration contained in neural activity predicted the expected pitch of the upcoming tone, providing a concrete computational mechanism for prediction. These results establish humans' ability to make valid predictions based on temporal regularities inherent in naturalistic stimuli and further reveal the neural mechanisms underlying such predictive computation.

SIGNIFICANCE STATEMENT A fundamental question in neuroscience is how the brain predicts upcoming events in the environment. To date, this question has primarily been addressed in experiments using relatively simple stimulus sequences. Here, we studied predictive processing in the human brain using auditory tone sequences that exhibit temporal statistical regularities similar to those found in natural stimuli. We observed that humans are able to form valid predictions based on such complex temporal statistical regularities. We further show that neural response to a given tone in the sequence reflects integration over the preceding tone sequence and that this history dependence forms the foundation for prediction. These findings deepen our understanding of how humans form predictions in an ecologically valid environment.

Keywords: 1/f activity, magnetoencephalography, naturalistic stimuli, predictive processing, scale-free dynamics

Introduction

In real-life environments, prior sensory information across multiple time scales continually influences information processing in the present. To date, the relationship between neural integration of information over time and predictive computations remains mysterious (Hasson et al., 2015). The importance of this question is underscored by the fact that forming valid predictions about the features (Summerfield and de Lange, 2014) and timing (Cravo et al., 2011; Jepma et al., 2012) of upcoming stimuli in the environment is crucial for survival. Predictions about stimulus features can be generated through a variety of mechanisms, including associative learning (Schlack and Albright, 2007), contextual influences (Bar, 2004), imagination (Schacter et al., 2007), task cues (Wyart et al., 2012; de Lange et al., 2013), and extrapolation from statistical regularities in sensory input (Saffran et al., 1996; Alink et al., 2010; Chalk et al., 2010). Because statistical regularities are ubiquitous in natural stimuli (Dong and Atick, 1995; Summerfield and de Lange, 2014), extrapolating from statistical regularities in sensory input should provide a fundamental strategy for forming predictions in natural environments.

Previous studies investigating predictions based on statistical regularities have largely used relatively simple stimuli such as the oddball paradigm, in which a novel stimulus is embedded within a sequence of repeated stimuli (Yaron et al., 2012); the local–global paradigm, in which a sequence including two values contains both local and global regularities (Bekinschtein et al., 2009); and repeated presentations of a fixed sequence of stimuli (Erickson and Desimone, 1999; Meyer and Olson, 2011; Gavornik and Bear, 2014). Although such stimuli allow for tight experimental control, they induce the formation of predictions by repeated presentations of items or sequences of items. In contrast, temporally varying stimuli encountered in the natural environment have rich statistical structures that allow for more complex forms of predictions. At present, whether humans can form valid predictions based on temporal statistical regularities inherent in natural stimuli remains unknown.

In particular, one pervasive statistical feature of natural stimuli is that they exhibit power spectra following a P ∝ 1/fβ pattern in spatial (Tolhurst et al., 1992) and temporal frequency domains, where β is an exponent commonly ranging between 0 and 2. In the temporal domain, a 1/fβ pattern is observed in the loudness and pitch fluctuations of music, speech, and ambient noise in urban and rural settings (Voss and Clarke, 1975; De Coensel et al., 2003) and in the rhythms of music (Levitin et al., 2012). Previous work has shown that human perception and neural activity are sensitive to the scaling parameter β of dynamic auditory stimuli such as tone sequences featuring 1/fβ fluctuations in pitch (Schmuckler and Gilden, 1993; Patel and Balaban, 2000; Garcia-Lazaro et al., 2006; Lin et al., 2016). Therefore, 1/fβ statistics are prevalent in natural stimuli, and human perception is sensitive to such statistics. Because a 1/fβ type temporal power spectrum indicates temporal dependence and predictability (Eke et al., 2002; He, 2014), these observations raise the intriguing question of whether humans are able to make valid predictions about upcoming items in such sequences.

Here, we investigate these open questions using a novel experimental paradigm that allows us to probe, for the first time, the ability of human subjects to exploit the temporal statistical regularities found in natural stimuli to predict upcoming stimulus features. We created tone sequences with pitch that fluctuated over time following a 1/fβ pattern where β ranged from 0 (no temporal dependence, “white noise”) to 2 (strong temporal dependence, “random walk”). The expected pitch of a given tone in the sequence can be expressed as a function of the preceding tone pitches. These stimuli are thus well controlled while also capturing the complex statistical regularities exhibited by auditory stimuli in natural environments. We therefore refer to these tone sequences as “naturalistic” rather than “natural” to highlight that, although the elements of the sequences are man-made pure tones, the dynamics of tone pitch fluctuation reflect the statistics of 1/fβ temporal dependence found in natural stimuli. Using a behavioral task based on these stimuli and MEG recordings in humans, we established human subjects' ability to make valid predictions about upcoming stimulus features based on naturalistic temporal statistical regularities and further uncovered the underlying neural mechanisms as implemented in history-dependent neural processing of incoming information.

Materials and Methods

Stimuli creation.

We created auditory tone sequences with pitch fluctuations that had five levels of temporal dependence strength β, ranging from β = 0 (no temporal dependence) to β = 2 (strong temporal dependence). Sequences with β < 1 (β = 0 and 0.5) were fractional Gaussian noise (fGn), whereas sequences with β > 1 (β = 1.01, 1.5, and 2) were fractional Brownian motion (fBm). fBm sequences are nonstationary, self-similar sequences with increments that are stationary fGn sequences and the transition from fGn to fBm sequences occurs at β = 1 (Mandelbrot and Van Ness, 1968; Eke et al., 2002). We used a circulant embedding algorithm (Helgason et al., 2011) to create six unique 33-element long series for each level of β, as follows:

where each element xj of xβ,i is taken to represent the pitch of the jth tone in the sequence. Each xβ,i series was translated and scaled such that its elements ranged from log(220) to log(880). This range of pitch values was chosen to span the iso-loudness region of human hearing (Robinson and Dadson, 1956). To investigate the potential effect of overall pitch variability on judgments of sequence statistics, we scaled half of the sequences such that the SD of its corresponding fGn was 70% of its original value (each fBm series is associated with a fGn series of the same Hurst exponent with a power–law exponent β that differs by 2; Eke et al., 2002). This resulted in sequences that spanned a smaller range (green traces in Figure 1B) than the nonscaled sequences (magenta traces). Next, the series were discretized such that each element took on one of 25 values evenly spaced on the log scale with semitone distance. We refer to these discretized series as pβ,i. Each pβ,i thus represents a series of tone pitches with pitch fluctuation that exhibits temporal dependence prescribed by β. To disentangle predictive processing from instantaneous stimulus processing, all sequences converged onto a pitch value of 440 Hz for the 33rd and penultimate tone. The 34th and final tone of each sequence varied across trials and was selected from one of six possible values of pitch that were 4, 8, or 12 semitone steps above or below 440 Hz (black dots in Fig. 1B). Each unique sequence was presented for a total of 12 times and each of the six final tone pitch values was used twice for each unique sequence in randomized order.

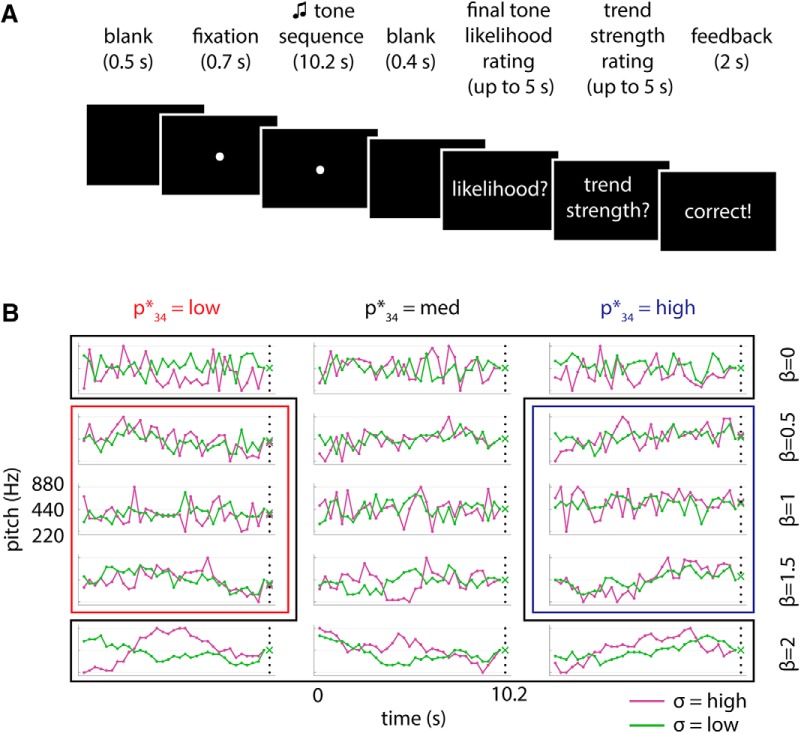

Figure 1.

Task and stimuli. A, Trial structure. At the end of each trial, subjects judged the likelihood of the final tone, given the preceding tone sequence, on a scale of 1–5. Subjects then rated the “trend strength” of the sequence on a scale of 0–4. Feedback on the accuracy of the trend strength rating was provided at the end of every trial. B, Full stimulus set. For each level of β (rows), we generated 6 sequences, 3 of which ranged from 220 to 880 Hz (magenta traces, high σ) and 3 of which spanned a slightly smaller pitch range (green traces, low σ). For all sequences, the penultimate (33rd) tone was 440 Hz and the final (34th) tone was drawn from one of 6 possible values 4, 8, or 12 semitone steps above or below 440 Hz (black dots). For β = 0.5, 1, and 1.5, sequences were chosen to have expected pitch for the final tone (p34*) spanning low (<440 Hz; red outline), medium (440 Hz; black outline), and high (>440 Hz; blue outline) ranges (indicated by x). For β = 0 and 2, the expected final tone pitch was always 440 Hz. Each sequence was presented 12 times, with the 6 possible values for final tone pitch distributed evenly and randomly across the 12 presentations, for a total of 360 trials.

The sound wave for each sequence was constructed according to the following:

where t denotes sample number, i denotes sequence number, j denotes tone number within sequence i, A (amplitude) = 1, and SR (sampling rate) = 44,100 Hz. The 300 ms duration of each tone was chosen for ease of listening, in accordance with prior studies (Patel and Balaban, 2000; Lin et al., 2016). Because each sequence contained 34 tones, it had a total duration of 10.2 s. Cosine waves for each tone j were concatenated such that there was no silence period between consecutive tones. To prevent clicking noises from occurring between tones, ϕj is computed to ensure that yβ,i,j is a smooth and differentiable function across adjacent j values. Audio files of sequence examples can be downloaded from https://med.nyu.edu/helab/sites/default/files/helab/Maniscalco_etal_stim_wav_files_and_figs.zip.

These auditory tone sequences were presented using the PsychPortAudio function of the Psychophysics Toolbox (Brainard, 1997) in MATLAB (The MathWorks). The audio was delivered through specialized ear tubes that were MEG compatible. The Etymotic ER-3 Insert Headphones, which has a frequency response that is flat to 5 kHz, was used. The plastic tubing from the transducer to the earpiece had a speed-of-sound delay of ∼10 ms, which was corrected in the MEG data analyses.

Calculation of p34*.

Let {xn, n = 1, …, N} be a series of data samples drawn from a stationary zero-mean random process, with covariance function rx. Linear prediction of order K, x̂n, for sample n, from past samples {xn−1, xn−2, …, xn−K} is written as follows:

where the vector a = {a1, a2, …, aK} is to be chosen (or estimated) so as to minimize the average (squared) prediction error as follows:

Linear algebra leads to an explicit theoretical solution for â (Scharf, 1991):

where rx is the covariance sequence of process x and denotes the K × K square matrix, with entry = rx (|p − p′|) for p,p′ ∈ {1, …, K}2.

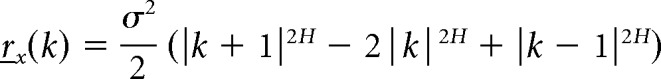

If x is an fGn sequence (−1 < β < 1), then covariance rx can be computed as a function of its Hurst exponent H and variance σ2 (Eke et al., 2002) as follows:

|

where H = (β + 1)/2. We set K = 33 to compute the expected value of the final tone pitch based on the pitch values of all 33 preceding tones.

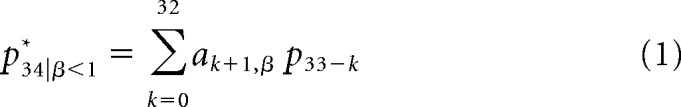

In summary, the calculation of p34* for the (zero-meaned) β = 0 and β = 0.5 sequences can be written as follows:

|

where ak+1, β are the optimal prediction weights for an fGn sequence with scaling parameter β and p33-k are the pitch of the preceding tones. Expected value was calculated for each sequence using zero-meaned pitch series. Because the mean pitch value was log(440), we added log(440) to the calculated expected value (Eq. 1) to yield the final value for p34* used in analyses. For β = 0 sequences, coefficients ak+1, 0 = 0, so the expected value of the upcoming item is equal to the sequence mean, resulting in p34* = log(440) for all β = 0 sequences.

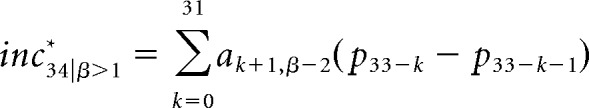

fBm sequences (1 < β < 3) are cumulative sums of fGn sequences with the same Hurst exponent H and are nonstationary. The preceding methodology for computing expected value only holds for fGn sequences and so cannot be applied to fBm sequences. However, the increments of an fBm sequence with scaling parameter β constitute an fGn sequences with scaling parameter β – 2 (Eke et al., 2002). Therefore, to calculate the expected value for fBm sequences, we first computed the difference between successive elements in the fBm sequence, which yielded an fGn sequence (with expected mean = 0). We then computed the expected value for the upcoming item in this fGn sequence, i.e., the expected increment in pitch for the original fBm series, as follows:

|

where ak+1, β-2 are the optimal prediction weights for an fGn sequence with scaling parameter β − 2 and p33-k are the pitch of the preceding tones. Finally, we calculated the expected pitch of the 34th tone in the fBm sequences (β = 1.01, 1.5, and 2) as the pitch of the 33rd tone (which is always 440 Hz) plus the expected increment in pitch, as follows:

To facilitate comparison with equation (1), p34* for fBm sequences can be written as a weighted sum of preceding pitch values as follows:

|

For a β = 2 sequence, the increments form an fGn sequence with β = 0, so the expected increment inc34* = 0, entailing that p34* = p33 = log(440).

Herein, we use the term “optimal prediction weights” (sometimes shortened to “prediction weights”) to refer to the weights placed on the pitch sequence history p33-k (where k ∈ [0 32]) to compute p34* for fGn (Eq. 1) and fBm (Eq. 2) sequences.

Experimental design and statistical analysis.

Each trial began with presentation of a fixation point in the middle of the screen (Fig. 1A). Subjects were instructed to fixate on the fixation point during presentation of the auditory sequence to minimize eye movements. Likelihood ratings and trend strength ratings were entered using two separate button boxes for the left and right hand, respectively. Subjects were instructed to disregard the final tone in their judgment of trend strength because the final tone did not follow the temporal dependence statistics of preceding tones. Higher trend strength was explained to the subjects as the tendency for a trend of high- or low-pitch values to persist over longer periods of time, which captured the concept of temporal dependence in the time series. Performance feedback about the trend strength rating was presented visually for 2 s after entry of both behavioral responses. The feedback indicated what trend strength rating had been entered by the subject, what the true trend strength of the sequence was, and whether the subject's trend strength rating was correct, close to correct (off by one level), or incorrect (off by two or more levels).

Trials were split into 12 blocks of 30 trials each and subjects received a self-terminated period of rest after each block. Between blocks, the head position of the subject was measured with respect to the MEG sensor array using coils placed on the left and right preauricular points and the nasion and the subject self-corrected their head position to the same position recorded at the start of the first block using a custom visual-feedback program written by T.H. inspired by previous work (Stolk et al., 2013) to minimize head displacement across the experiment. Details on statistical analyses can be found in the following sections and in the Results.

Subjects.

The experiment was approved by the Institutional Review Board of the National Institute of Neurological Disorders and Stroke (protocol #14-N-0002). All subjects were right-handed and neurologically healthy with normal hearing and provided written informed consent. Twenty subjects between 21 and 34 years old (mean age 24.1; 15 females) participated in an initial ∼2 h long behavioral session to familiarize themselves with the task. Overall, the initial group of 20 subjects was able to discriminate sequence β (t test on z-transform of Spearman correlation coefficients between sequence β and trend strength rating, t(19) = 7.8, p < 0.001) and to make valid predictions (p34 × p34* interaction as in the analysis of Fig. 2B, F(10,190) = 17.2, p < 0.001; for details, see Results, subjects can make valid predictions about upcoming items based on stimulus sequence history).

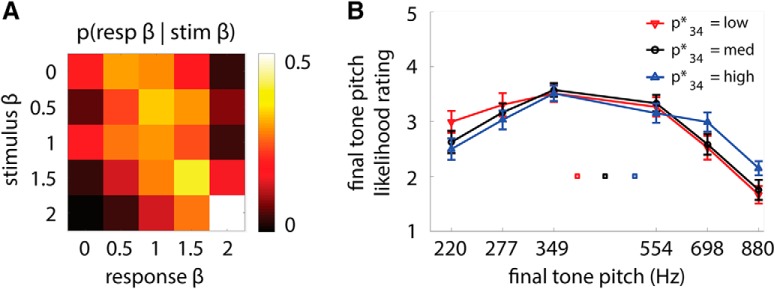

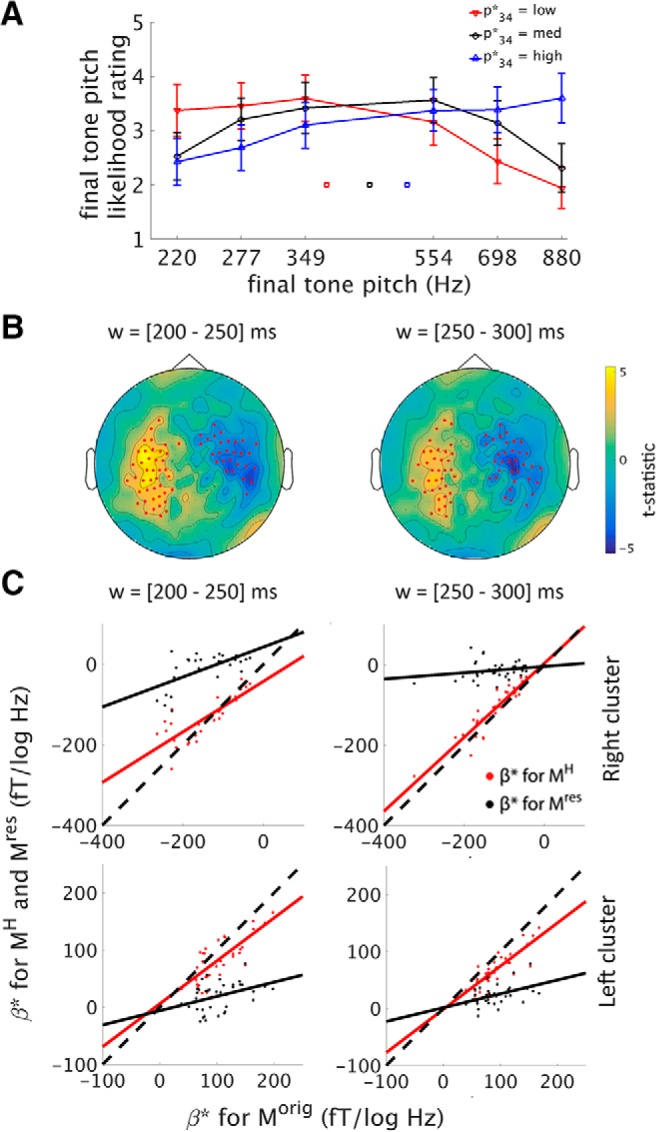

Figure 2.

Behavioral performance. A, Trend strength rating. Heat map of the group-average distribution of trend strength ratings (transformed into the β scaling as “response β”) as a function of true sequence β (“stimulus β”). Responses concentrated along the diagonal, suggesting that subjects could meaningfully perform the trend strength rating task. Spearman's ρ for stimulus β and response β was significantly above chance for every subject (all p < 0.001, n = 360 trials for each subject), with an average rho of 0.45. B, Final tone pitch likelihood rating. Final tone likelihood rating is plotted as a function of presented final tone pitch (p34; abscissa) and expected final tone pitch (p34*; red, low; black, medium; blue, high). A repeated-measures ANOVA revealed a significant interaction between p34 and p34* (F(10,100) = 5.7, p < 0.001, n = 11), which can be observed by noting the crossover effect of the red and blue curves. Red, black, and blue squares indicate mean expected final tone pitch values for the respective conditions. Error bars denote SEM across subjects.

We invited subjects exhibiting adequate behavioral performance (Spearman correlation between sequence β and trend strength rating >0.2 and full usage of the rating scales for both behavioral responses) to perform the task in the MEG scanner. Fourteen subjects were recruited for the MEG portion of the study (lasting ∼3 h including setup time). Three subjects were excluded from analyses due to excessive MEG artifacts from dental implants or eye or head movements (age range for the remaining subjects: 21–34; mean age: 24.1; 7 females). Behavioral results reported in Figure 2 are from the MEG session and included the same subjects who contributed MEG data.

Data acquisition and preprocessing.

Experiments were conducted in a whole-head 275-channel CTF MEG scanner. MEG data were collected with a sampling rate of 600 Hz and an anti-aliasing filter at <150 Hz. Analyses were performed on 271 sensors after excluding four malfunctioning sensors. The analyses were conducted using the Fieldtrip package (Oostenveld et al., 2011) and code custom written in MATLAB. MEG data from each block were demeaned and detrended. To best investigate low-frequency MEG activity, we did not apply a high-pass filter; an earlier study from our laboratory on the same MEG scanner using similar stimuli and much longer trial-length (180 s), as well as resting-state recordings, had demonstrated excellent data quality down to ∼0.005 Hz (Lin et al., 2016). Powerline noise was removed offline by applying a fourth-order Butterworth band-stop filter in frequency ranges 58–62 Hz and 118–122 Hz. Independent component analysis (ICA) was used to remove artifacts related to eye blinks, eye movements, heartbeat, breathing, and slow movement drift.

Phase dissimilarity.

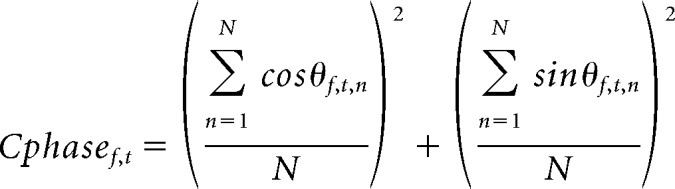

We conducted this analysis closely following the methods used in (Luo and Poeppel, 2007). For every trial, sensor, and subject, we performed a fast Fourier transform on the activity during the 9.9 s presentation of the first 33 tones of the tone sequence using a 6 s length sliding Hanning window that incremented in 100 ms steps. For each time point and frequency bin at each sensor, across-trial phase coherence was computed as follows:

|

where θf,t,n denotes phase at frequency f, time t, and trial n. Phase coherence ranges from 0 to 1, where 0 indicates random phase across trials and 1 indicates identical phase across trials.

We next defined “identical” stimulus sets as the 12 trials in which the same initial 33-tone sequence was presented and “similar” stimulus sets as trials in which sequences with the same β and σ were presented. To match the number of trials in the two conditions, for “similar” stimulus sets, we randomly selected 12 trials from a total of 36 trials for each level of β and σ. We then computed phase dissimilarity at each frequency bin as the difference in mean phase coherence over time between identical and similar stimulus sets as follows:

|

The following analyses involved performing regressions on MEG activity time locked to the onset of tones. For these analyses, we extracted MEG activity using 50-ms-length sliding windows, which resulted in six windows during the presentation of each tone. For analyses using baseline-corrected MEG activity, because there was no period of silence before each tone (aside from the first tone in the sequence), we performed baseline correction by computing the mean MEG activity in the first 20 ms after tone onset and subtracting it from all time points during the presentation of that tone.

History tracking analysis.

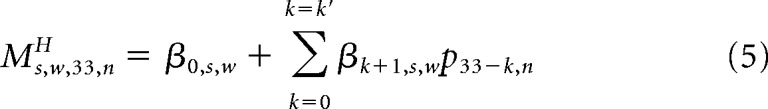

For every tone i in the second half of the tone sequence (16 ≤ i ≤ 32) of every trial n, we extracted a list of the k′ preceding tone pitch values: pi,n, pi−1,n, …, pi−k′,n. (Tone 33 was omitted from the analysis because it always had the same pitch of 440 Hz and tone 34 was omitted because its value was selected independently of the prior sequence.) For each sensor s and trial n, we extracted MEG activity after the onset of tone i using the sliding window approach described earlier. We then regressed this value (call it Ms,w,i,n) onto the current tone as well as the k′ preceding tones as follows:

|

where the βk+1,s,w terms describe how MEG activity at sensor s and time window w depends upon the pitch of the tone presented k tones before the current tone i.

Our aim was to determine what value of k′ (ranging from 0 to 15) provided the best fit to the MEG activity at each sensor and time window. To assess this, we used a cross-validation procedure. For each subject, we partitioned trials into a training set (odd-numbered trials) and a test set (even-numbered trials). We performed 16 regression analyses on the training set (one for each k′ value ranging from 0 to 15). We then used the regression coefficients from the training set to predict MEG activity in the test set. The best value of k′ for a given sensor and time window was defined to be the one that minimized the sum of squared errors of the predicted MEG activity in the test set. This procedure was repeated using even-numbered trials as the training set and odd-numbered trials as the test set. The final optimal k′ assigned to each subject/sensor/time window was the average k′ derived from the two folds. See Figure 4A for a schematic of the analysis. Last, k′ values were averaged across subjects at each sensor and time window. This analysis was performed both with and without baseline correction.

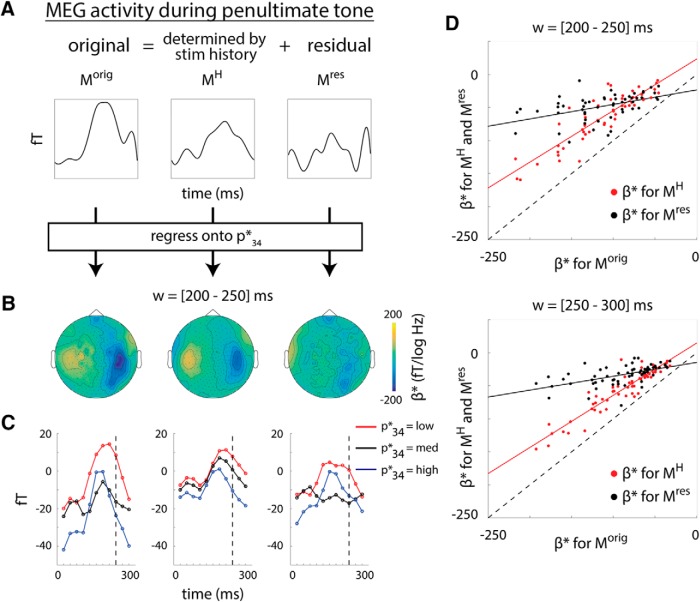

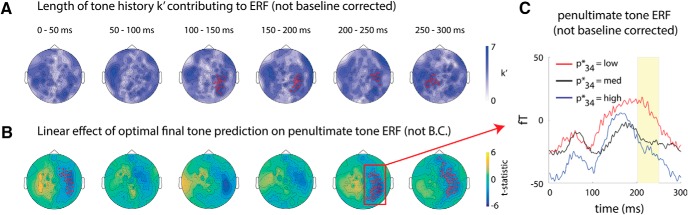

Figure 4.

History-dependent neural responses. A, Analysis schematic. MEG activity in response to the ith tone in the sequence is regressed onto the pitch of tone i, as well as the pitch values of the preceding k′ tones, for all values of i occurring in the second half of the tone sequence. The regression is performed separately for each MEG sensor, in a sliding window w (length 50 ms) and a cross-validation procedure is used to determine the value of k′ that provides the best fit to the data. B, Length of history dependence in baseline-corrected MEG signals (i.e., evoked responses). The optimal k′ value determined for each sensor is plotted for each time window after tone onset. A right-lateralized sensor cluster in the 100–150 ms time window survived correction by cluster-based permutation test (red dots; p = 0.01, n = 11), where MEG response to a given tone is influenced by up to 7 prior tones.

To assess the statistical significance of the (across-subject) mean k′ values against the null hypothesis that mean k′ = 0, we repeated the cross-validation regression analyses described above using random permutations of the tone sequences in the training set while leaving tone sequences in the test set unshuffled. We randomly permuted the order of the first 32 tones in the training set, with the exception that the value of the current tone pitch i in each regression was kept the same as in the original sequence. In other words, information about current tone pitch was retained, whereas information about tone sequence history was destroyed. We repeated this procedure 100 times each using odd and even trials as the training set and found the value of k′ yielding minimum error on the test set, yielding 200 permutation samples of k′perm for each subject, sensor, and time window. Next, we repeatedly sampled (with replacement) from the k′perm values of each subject and calculated the across-subject average 1000 times, yielding a distribution of mean k′ values at each sensor and time window under the null hypothesis that mean k′ = 0. We defined uncorrected p-values for each sensor and time window as the proportion of samples in this null distribution that were greater than or equal to the empirically observed mean k′. These p-values were subsequently used to define clusters for the cluster correction analysis, in which the sum of k′ across sensors in a cluster, k′sum, was used as the cluster statistic (see “Cluster correction” section below).

Prediction effect.

We investigated how MEG activity during the penultimate (33rd) tone was modulated by the expected value of the final (34th) tone by performing the following regression model:

Because the penultimate tone always had a pitch of 440 Hz, it was not necessary to include its pitch in the regression analysis. The β1,s,w* coefficient describes how MEG activity at sensor s and time window w after the penultimate tone onset depends on the expected pitch of the final tone, p34*. We submitted β1,s,w* to a group-level analysis (one-sample t test against zero), which was corrected for multiple comparisons across sensors using a cluster-based permutation test described below.

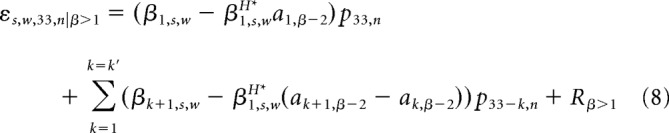

Relating history-dependent MEG response with p34*.

For each subject, sensor s, and time window w, we obtained coefficients βk+1,s,w (0 ≤ k ≤ k′) from the previously conducted history tracking regression analysis on non-baseline-corrected data using the optimal k′ value for that subject, sensor, and time window. Specifically, both the optimal k′ and the coefficients βk+1,s,w were averaged across the two folds for training and testing and noninteger values of k′ were rounded up to the nearest integer (in the rare cases of optimal k′ = 0, it was set to 1 to carry out the regression analysis described below). Next, we partitioned MEG activity in response to the penultimate tone at each sensor s, time window w, and trial n into two components (see Fig. 6A): that which was predicted by the preceding tone sequence and the residual. The former was computed as follows:

|

and the residual was computed as follows:

where Ms,w,33,norig denotes the originally recorded MEG activity. Next, we repeated the prediction effect regression analysis described above, but now using Ms,w,33,nH and Ms,w,33,nres as the dependent variable as follows:

|

Last, we quantified these observations by comparing the group-averaged regression weights for p34* obtained for Morig, MH, and Mres.

Figure 6.

Neural integration of stimulus history accounts for prediction. A, Analysis schematic. The (non-baseline-corrected) MEG activity during the penultimate tone (left column) can be expressed as the component accounted for by stimulus history integration (middle column) plus residual (right column). Prediction effect for each signal is then assessed by regressing the activity onto the expected final tone pitch, as in Figure 5B. B, Regression coefficients of the expected final tone pitch for the original MEG activity during the penultimate tone (left, from the 200–250 ms window), its history-dependent component (middle), and the residual (right). Maps show mean coefficients across subjects. C, MEG activity time courses during penultimate tone as a function of expected final tone pitch. Left, Reproduced from Figure 5C, but with 50-ms-length, half-overlapping sliding windows. Middle and right, Component constructed from history integration effect and the residual, respectively. D, Comparison of regression coefficients for Morig, MH, and Mres across sensors. For sensor clusters showing significant prediction effects in the time windows 200–250 ms (top) and 250–300 ms (bottom) (red dots in Fig. 5B), the original and history-dependent MEG activity exhibit similar sensitivity to p34* (red dots and lines; 200–250 ms: r = 0.89, p < 10−15, n = 53; 250–300 ms: r = 0.93, p < 10−15, n = 59). In comparison, the residual activity is much less sensitive to p34* (shown by the black dots being closer to the ordinate value of 0) and less similar to the original response (200–250 ms: r = 0.48, p = 0.0003, n = 53; 250–300 ms, r = 0.56, p = 4 × 10−6, n = 59). Dashed lines indicate unity.

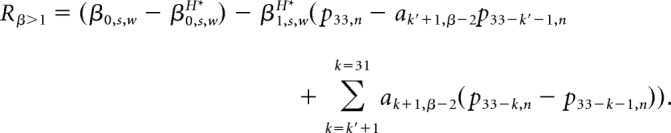

Relationship between history tracking weights and optimal prediction weights.

Both MH (Eq. 5) and p34* (Eqs. 1 and 2) are defined as weighted sums of the preceding pitch values. We can therefore gain further insight into the relationship between history tracking and prediction by investigating the relationship between the weights on the preceding pitch values that determine MH and p34*. Recall from Equation 6 that the regression of MH onto p34* is defined as follows: Ms,w,33,nH = β0,s,wH* + β1,s,wH* p34,n* + εs,w,33,n. By substituting the definitions of MH and p34* into the regression equation and solving for the error term ε, we can see how the regression error depends on the relationship between the history tracking weights and optimal prediction weights. For fGn sequences:

|

where

|

and for fBm sequences:

|

where

|

Therefore, for fGn sequences, the regression error for MH onto p34* partially depends on the (βk+1,s,w − β1,s,wH* ak+1,β) terms; that is, the difference between the history tracking weights βk+1 and the scaled optimal prediction weights ak+1,β (Eq. 1), where the scaling factor β1H* is the regression coefficient on p34*. Similarly, for fBm sequences, regression error partially depends on (β1,s,w − β1,s,wH*a1,β−2) and the (βk+1,s,w − β1,s,wH* (ak+1,β−2 − ak,β−2)) terms; that is, the difference between the history tracking weight and the scaled optimal prediction weight for the fBm sequence (Eq. 2), where the scaling factor is β1H*, the regression coefficient on p34* (Eq. 6).

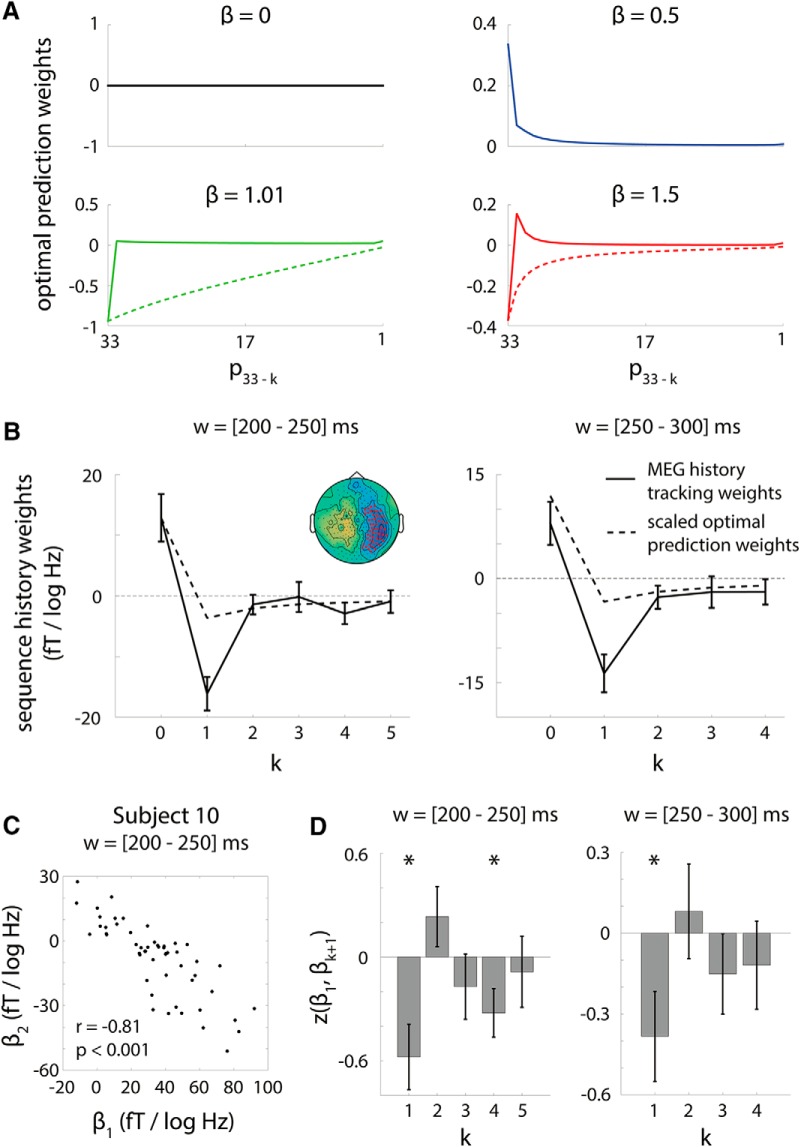

In Figure 7A, we plot optimal prediction weights for different sequence β values (see definition in Eqs. 1 and 2). From the above, we expect MH to better predict p34* the more closely history tracking weights resemble the scaled optimal prediction weights.

Figure 7.

Relationship between history integration weights and optimal prediction weights. A, Optimal prediction weights as a function of sequence β. For 1/fβ-type sequences, the expected value of the upcoming item is a weighted sum of the previous items; these weights (“optimal prediction weights”) are plotted as solid lines for each β level. For β < 1, expected value depends on the ak+1 weights (Eq. 1). For β > 1, expected value depends on the weights [a1, (a2 − a1), …, (ak + 1 − ak), −ak + 1] (Eq. 2). For β > 1 sequences, dashed lines show optimal prediction weights for the corresponding fGn sequence with scaling parameter β-2, which were used to derive the weights in Equation 2 and shown in solid lines. B, History integration weights resemble scaled optimal prediction weights. In the time window of 200–300 ms, the dependence of MEG activity on pitch history (solid lines) in the significant prediction cluster (inset left column, red sensors; reproduced from Fig. 5B, 200–250 ms window) reflected the pattern exhibited by the scaled optimal prediction weights averaged across sequence β (dashed lines). This resemblance accounts for why history-dependent MEG activity carries the prediction effect (Fig. 6; Eqs. 7 and 8). C, Correlation between history integration weights on current and previous pitches. To further explore the apparent opposing effects of the current and previous pitch on MEG activity illustrated in B, we computed across-sensor correlations in the prediction cluster between the weight on the current pitch, β1, and weights on previous pitches, βk + 1, k ≥ 1. Here, we show an example scatterplot of the across-sensor relationship between β1 and β2 for one subject. D, Inverse correlation between current and previous pitch weight. Across subjects, the influence of the current and previous tone pitch on MEG activity was significantly inversely correlated for the 200–250 ms (t(10) = −3.0, p = 0.01) and 250–300 ms (t(10) = −2.3, p = 0.04) time windows, as assessed by a one-sample t test on the Fisher's z-transform of the correlation coefficients. Asterisks denote p < 0.05. Error bars denote SEM across subjects.

To compare history dependence weights and scaled optimal prediction weights, we focused on the significant sensor cluster showing a prediction effect in the 200–250 ms window (see Fig. 5B, reproduced in Fig. 7B, inset). Because optimal k′ differs across subjects and sensors, first, we averaged optimal k′ across sensors in the significant cluster, training folds, and subjects and rounded the result to the nearest integer for the 200–250 ms and 250–300 ms time windows separately (where significant prediction effects are found). Call this rounded average value k′avg, w. We found that k′avg, w = 5 and 4 for the 200–250 ms and 250–300 ms time windows, respectively. For these time windows, we then analyzed history dependence weights from the regressions where k′ = k′avg, w for all subjects and sensors to allow for across-subject and across-sensor averaging. History dependence weights from both training folds were averaged across sensors in the significant cluster and then averaged across subjects. Next, we computed the scaled optimal prediction weights; that is, the β1,s,wH* ak+1,β terms in Equation 7 and the β1,s,wH* a1,β−2 and β1,s,wH* (ak+1,β−2 − ak,β−2) terms in Equation 8 to compare them to the history dependence weights. To determine the β1,s,wH* values, we computed the across-subject, across-sensor average regression coefficient β1,s,wH* for the 200–250 ms and 250–300 ms time windows, respectively. We averaged the scaled prediction weights computed for each level of sequence β to arrive at a single set of scaled prediction weights for each time window (dashed lines in Fig. 7B).

Figure 5.

Neural correlates of history integration and prediction. A, Length of history dependence in non-baseline-corrected MEG signals (i.e., ongoing/accumulated activity). Same as Figure 4B, but for non-baseline-corrected data. Red dots indicate sensors belonging to significant clusters (all p < 0.05, cluster-based permutation test, n = 11). B, Neural correlate of prediction. Using a 50 ms sliding window, non-baseline-corrected MEG activity during the penultimate tone (which always had a pitch of 440 Hz) was regressed onto the expected pitch of the final tone to determine which sensors showed a significant prediction effect. Maps plot the t-values corresponding to a group-level one-sample t test on regression coefficients at each sensor and time location. Red dots indicate sensors belonging to significant clusters (all p < 0.05, cluster-based permutation test, n = 11). C, MEG activity during penultimate tone as a function of expected final tone pitch. The non-baseline-corrected MEG signal for sensors in the significant cluster from the 200–250 ms window (red dots in B) as a function of the expected pitch of the final tone. A monotonic relationship can be seen in the significant time window. Note that, although expected pitch is binned into three levels here for illustration purposes, the regression analysis depicted in B used continuously varying expected values for pitch.

Inspection of the history dependence weights suggests that they feature a pattern in which the pitch of the current and previous tone have large but opposing effects on MEG activity during the current tone (Fig. 7B). We investigated this effect further by calculating the across-sensor correlations between the history dependence weights for the current and previous pitches. In particular, if the current and previous pitch have opposing effects on MEG activity, then we might expect that the weights of the current and previous pitch on MEG activity are inversely correlated across sensors. Therefore, using history dependence weights defined by k′avg, w as described above, we investigated the across-sensor lagged correlation between the first history dependence weight (k = 0; i.e., regression weight for the current tone pitch) and subsequent history dependence weights (k ≥ 1; i.e., regression weights for earlier tones in the sequence) for sensors in the 200–250 ms prediction cluster. We computed the correlation for all possible lags for each subject. Statistical significance was assessed by computing the Fisher's z transform of the correlation coefficients and submitting the z-values to an across-subject, one-sample t test against zero.

Cluster correction.

To correct for multiple comparisons across sensors in the analyses of MEG data, we used cluster-based permutation tests (Maris and Oostenveld, 2007). For a given statistical test performed at each sensor, clusters were defined as neighboring sensors exhibiting test statistics of the same sign (e.g., positive t-values) and p < 0.05. The neighbors for each sensor were defined using the CTF275_neighb.mat template in Fieldtrip. For each such cluster, a summary cluster statistic was defined as the sum of the absolute values of the test statistics across all sensors in the cluster. We generated a null distribution of cluster statistics by repeating the following procedure 1000 times: we randomly permuted the data independently for each subject (described in detail below) and used the same permutation across all sensors. Using the permuted data, we again performed the statistical test for each sensor and extracted the maximum cluster statistic across all clusters for the current permutation iteration. This yielded a null distribution of 1000 cluster statistics. We then used this null distribution to assign a p-value to clusters occurring in the original, unshuffled data. We defined a measure of effect size for clusters in the original data, dcluster, as follows:

where CScluster is the cluster statistic for the cluster in the original data and CSnull is the null distribution of cluster statistics derived from the permutation procedure. dcluster thus measures the effect size of a cluster statistic as the number of SDs by which it exceeds the mean of the null distribution.

For ANOVA analyses (Fig. 3B), we permuted the data by randomly shuffling the assignment of data cells to levels of the ANOVA factor under investigation for each subject. We considered clusters in the original data to be significant if their p-value was <0.05. For regression analyses (Fig. 5B), we used the following procedure. For a given regression coefficient j at time window w, βj,w, we performed group-level analysis at every sensor using a one-sample t test against 0 on βj,w across subjects. For each subject, we permuted the data by randomly shuffling the across-trial correspondence between the dependent variable (MEG activity) and independent variable (the term weighted by βj,w). We considered clusters in the original data to be significant if their p-value was <0.025 (corresponding to a two-tailed test at p < 0.05). For cluster correction of k′ (Figs. 4B, 5A), we defined clusters as spatially neighboring sensors exhibiting k′ > 0 at p < 0.05 (uncorrected) and took the cluster statistic to be the sum of the k′ values of all sensors in a cluster. The permutation procedure and the construction of the null distribution is described in the section “History tracking analysis.” We considered clusters in the original data to be significant if their p-value was <0.05.

Figure 3.

Slow cortical dynamics track tone sequences. A, Analysis schematic. We defined “identical” trials as trials in which an identical tone sequence was presented repeatedly and “similar” trials as trials in which tone sequences were statistically similar (same β and σ) but nonidentical. We identified neural activity encoding tone sequences by comparing MEG activity across-trial correlation/coherence for identical versus similar trials. B, Correlation analysis. Across-subject mean F-values corresponding to the effect of “identical” versus “similar” trial pairs from within-subject ANOVAs using across-trial correlation of the MEG activity time course as the dependent variable. A repeated-measures ANOVA across subjects shows a single significant cluster including all sensors after correction by cluster-based permutation test (p < 0.001, n = 11). Left, Result obtained using full-band (0.05–150 Hz) MEG data. Right, Result obtained using 0.05–3 Hz data. Black dots indicate sensors where tone sequence tracking effect in the full band data was strongest (F > 50). C, Phase dissimilarity analysis. Ci, Group-average phase dissimilarity (difference in phase coherence between identical and similar set of trials) in the <3 Hz band is plotted across sensors. Block dots are the same as in B and were used to define left and right sensor clusters for subsequent analysis. Cii, Phase dissimilarity by frequency for the sensor clusters shown in Ci, as well as for all sensors. Dashed vertical lines indicate tone presentation frequency (3.3 Hz) and its harmonics. Shaded areas denote SEM across subjects. Ciii, Phase coherence across identical (green) and similar (magenta) set of trials, respectively, for the sensor clusters shown in Ci, as well as for all sensors. Peaks in the plots correspond to tone presentation frequency and its harmonics.

Permutation-based cluster correction has the advantage of being a nonparametric test and therefore not dependent upon distributional assumptions about the data. Inspection of the marginal distributions of MEG recordings within single subjects, sensors, and trials confirmed that MEG data was approximately normally distributed.

Replication of main findings in an independent sample.

We performed a follow-up experiment the primary purpose of which was to investigate the influence of tone duration on the history tracking effect. Results for this analysis will be reported in a future study. For the purposes of the present study, we used this new dataset to investigate whether the primary behavioral and neural effects of interest would replicate in an independent sample. For the new experiment, we generated a new set of tone sequences using the same procedures described above with the following exceptions. Tone sequences had scaling parameter β values limited to 0.5, 0.99, or 1.5 and the sequence σ manipulation was omitted. Within each sequence, tone duration was 150 ms, 300 ms, or 600 ms. Subjects completed 324 trials in total (108 trials at each tone duration setting). The behavioral task was identical to that of the main experiment.

In total, 26 subjects completed the experiment in the MEG (lasting ∼3 h including setup time), of which 7 were prescreened for behavioral performance (as described for the main experiment), whereas the remaining 19 subjects performed the task for the first time in the MEG scanner without any behavioral prescreening. Six out of 26 subjects were excluded due to poor performance (not using the full range on rating scales) or excessive MEG artifacts caused by head movements, yielding a final group of 20 subjects for analyses (age range: 19–34; mean age 25.0; 11 females).

Behavioral results reported are from the MEG session and included the same 20 subjects who contributed MEG data. To investigate replication of the primary effects of interest from the main experiment, we analyzed data from the follow-up experiment using only trials with a 300 ms tone duration (108 trials per subject). For these trials, we assessed the behavioral prediction effect (cf. Figs. 8A, 2B), the neural prediction effect (cf. Figs. 8B, 5B), and the relationship between the neural prediction and history tracking effects (cf. Figs. 8C, 6D). All MEG preprocessing and data analysis procedures were conducted as described above for the main experiment.

Figure 8.

Reproduction of main findings in an independent dataset. We collected MEG data from 20 additional subjects on a modified version of the task paradigm (for details, see Materials and Methods). A, Behavioral prediction effect (cf. Fig. 2B). A 6 (p34) × 3 (p34*) repeated-measures ANOVA on likelihood ratings for the final tone pitch yielded a significant p34 × p34* interaction (F(10,190) = 20.4, p < 0.001), indicating that subjects' likelihood ratings for the final tone were sensitive to the expected pitch value. B, Neural correlate of prediction (cf. Fig. 5B). As in the main experiment, a right-lateralized cluster of sensors (red dots) exhibited a significant linear relationship with expected pitch in the 200–250 ms and 250–300 ms time windows. A left-lateralized cluster also emerged as a significant neural correlate of prediction in these data (a similar pattern was observed in the main experiment, which did not pass statistical significance). C, Linking history tracking and prediction (cf. Fig. 6D). Across sensors in the right-lateralized prediction cluster (top row) and left-lateralized prediction cluster (bottom row), the original and history-dependent MEG activity exhibit similar sensitivity to p34* (red dots and lines). In comparison, the residual activity is much less sensitive to p34* (shown by the black dots being closer to the ordinate value of 0) and less similar to the original response. Dashed lines indicate unity. For statistics, see Results.

Results

Task paradigm

On every trial, human subjects listened to a ∼10-s-long sequence of 34 tones, each 300 ms in duration. The pitch (i.e., log of sound–wave frequency) fluctuation of the first 33 tones in the sequence followed different levels of temporal dependence as prescribed by the parameter β, which measures the slope of the temporal power spectrum of the pitch time series (following a P ∝ 1/fβ form). Higher β (i.e., steeper power spectrum) corresponds to stronger temporal dependence (Fig. 1B). Sequence β spanned five levels: 0, 0.5, 1, 1.5, and 2, with β = 0 corresponding to no temporal dependence and β = 2 corresponding to strong temporal dependence. Thirty unique sequences were used in the experiment. For each level of β, three sequences spanned the full range of 220–880 Hz (Fig. 1B, magenta traces) and three sequences spanned a slightly smaller range of pitch (average range = 276–701 Hz; Fig. 1B, green traces, for details, see Materials and Methods). All sequences converged on a pitch of 440 Hz for the penultimate (33rd) tone to disentangle neural activity related to instantaneous stimulus processing from that related to predictive processing (see below).

Given knowledge about a sequence of N − 1 values and the sequence's autocorrelation structure, it is possible to mathematically compute the expected value of the Nth item in the sequence (see Materials and Methods section “Calculation of p34*”). We refer to the expected value of the final tone pitch as p34*. For β = 0.5, 1, and 1.5, sequences varied in p34*, exhibiting low (<440 Hz; Fig. 1B, red outline), medium (440 Hz; black outline), or high (>440 Hz; blue outline) expected values (indicated by the × at the end of each sequence in Fig. 1B). Average values of p34* for the low and high categories were 388.5 Hz (range: [370, 415] Hz) and 504.2 Hz (range: [466, 554] Hz), respectively. For β = 0 sequences, because there is no temporal dependence, p34* is simply the mean value of the sequence and is 440 Hz. For β = 2 sequences, because they are random walks with no drift, p34* is the same as the pitch of the 33rd tone and is also 440 Hz.

Each of the 30 unique sequences was presented 12 times (in randomized order), for a total of 360 trials. For each unique sequence, the actually presented 34th (and final) tone pitch varied across the 12 presentations and was randomly selected from one of six values evenly spaced around the penultimate tone pitch of 440 Hz (black dots in Fig. 1B). After listening to the tone sequence on a given trial, subjects made two responses (Fig. 1A). First, they rated how probable the final tone pitch was given the preceding tone sequence on a scale of 1–5. Second, they indicated the “trend strength” of the first 33 tones of the sequence on a scale of 0–4, where each trend strength rating corresponded to a level of β.

Subjects can make valid predictions about upcoming items based on stimulus sequence history

We first replicated previous findings that subjects can form valid judgment about sequence temporal dependence (Schmuckler and Gilden, 1993; Lin et al., 2016). The across-trial correlation between sequence β and trend strength rating was significant for all subjects (all p < 0.0001, n = 360 trials for each subject; mean ρ = 0.45). Figure 2A plots the group average of stimulus–response matrix, with the distribution of responses concentrated along the diagonal, indicating that subjects' responses about “trend strength” closely tracked sequence β.

Can subjects capitalize on the temporal dependence in a sequence to make valid predictions about upcoming items in the sequence? To investigate this question, we performed the following analysis. For each subject, we calculated the average likelihood rating for the final tone pitch as a function of its expected value (p34*: low, medium, high), its actually presented value (p34) and overall variability in the pitch sequence (σ; magenta vs green traces in Fig. 1B). We then submitted the likelihood ratings to a 6 (p34) × 3 (p34*) × 2 (σ) repeated-measures ANOVA. There was a main effect of p34 (F(5,50) = 20.2, p < 0.001, ηp2 = 0.67), reflecting the overall inverse U shape of the function (Fig. 2B); as expected, subjects rated final tone pitch to be less likely the more it deviated from the penultimate tone pitch of 440 Hz. Crucially, we also observed a p34 × p34* interaction (F(10,100) = 5.7, p < 0.001, ηp2 = 0.36). The interaction can be observed by noting that, when the presented final tone pitch was low (220 Hz), subjects rated the final tone to be more likely when its expected pitch was also low (paired t test for p34* = low vs p34* = high, t(10) = 3.2, p = 0.009, difference = 0.50); in contrast, when the presented final tone pitch was high (880 Hz), subjects rated the final tone to be more likely when its expected pitch was also high (t(10) = −3.4, p = 0.007, difference = −0.49). Therefore, subjects' likelihood ratings for the final tone pitch were sensitive to its expected value, which depends on the previous sequence history. This result suggests that subjects were indeed able to capitalize on sequence temporal dependence to make valid predictions. No other main effect or interaction in the ANOVA was significant (all p > 0.15). Therefore, likelihood ratings did not appear to be sensitive to the pitch sequence variability (σ) manipulation in our stimulus set.

See Materials and Methods “Subjects” section for results from an additional behavioral dataset, which replicated the above findings. We now proceed to investigate the neural mechanisms underlying the predictive computation: What aspects of neural activity encode a particular sequence and what aspects of neural activity contribute to prediction? Finally, how is such prediction constructed?

Slow cortical dynamics track naturalistic tone sequences

To identify neural activity that encodes tone sequences, we searched for components of the MEG activity that were reliably modulated by repeated presentations of an identical tone sequence above and beyond sequences that were only statistically similar. For each subject, we defined “identical” trial pairs as pairs of trials in which the same initial 33-tone sequence was presented and “similar” trial pairs as pairs of trials in which the initial 33-tone sequences were different but exhibited the same sequence β and variability σ (Fig. 3A). For each sensor, we computed the Pearson correlation of MEG activity time courses during the presentation of the first 33 tones in a sequence for all “identical” and “similar” trial pairs. These correlation values were Fisher-z transformed and averaged across trial pairs for the two groups of trials separately and then submitted to a repeated-measures ANOVA across subjects. There was a strong effect of “identical” versus “similar” trial pair including all sensors (Fig. 3B left; all sensor-level p-value < 0.047), which formed a single significant cluster comprising all 271 sensors [p < 0.001, corrected by cluster-based permutation test (henceforth “cluster-corrected”); cluster size = 271, dcluster = 14.9]. The magnitude of the effect was particularly high in sensors overlying bilateral auditory cortices, with a right lateralization. There was no significant interaction effect with the temporal dependence (β) or variability (σ) of stimulus sequences. Strikingly, these findings were almost identical when MEG activity was low-pass filtered at <5, 3, or 1 Hz before computing trial–pair correlations (the <3 Hz result is shown in Fig. 3B, right). Therefore, repetitions of an identical tone sequence consistently modulated MEG activity in the low-frequency range.

To evaluate the frequency content of this activity more thoroughly, we computed a phase dissimilarity measure (for details, see Materials and Methods) for each sensor at each frequency, which quantifies the degree of phase locking to repetitions of an identical stimulus sequence above and beyond that elicited by statistically similar sequences and was computed as the difference in phase coherence between “identical” and “similar” set of trials (Luo and Poeppel, 2007). Reassuringly, mean phase dissimilarity in the ≤3 Hz range (Fig. 3Ci) has a similar spatial pattern to the results from the correlational analysis described above (Fig. 3B). Phase dissimilarity as a function of frequency averaged across all sensors is shown in Figure 3Cii (black trace), which revealed that phase dissimilarity did not peak at a particular frequency, but rather was spread across all frequencies <10 Hz, with the highest values in the lowest frequencies. This pattern suggests phase locking in arrhythmic rather than oscillatory brain activity (He, 2014). The phase dissimilarity plots averaged across sensors in the left and right clusters defined from full-band correlational analysis (black dots in Fig. 3B, left) exhibit similar patterns (Fig. 3Cii, red and blue traces).

The phase dissimilarity curves exhibited sharp dips at the frequency of tone presentation (3.3 Hz) and its harmonics (Fig. 3Cii, dashed vertical lines). To gain additional insight into this pattern, we plotted across-trial phase coherence separately for “identical” and “similar” trials (Fig. 3Ciii). Interestingly, whereas both “identical” and “similar” trials display strong phase locking at the frequency of tone presentation and its harmonics, only identical trial pairs exhibit additional arrhythmic, low-frequency phase locking. Therefore, phase locking at 3.3 Hz and its harmonics reflects consistent modulation of MEG activity locked to the onset of each tone (likely contributed by the event-related field, ERF, due to tone presentation) regardless of the evolving pitch sequence. In contrast, phase locking in the low-frequency arrhythmic activity tracks the specific sequence of pitch values. Together, these results suggest that tone sequences with naturalistic temporal statistical regularities consistently modulate slow, arrhythmic brain activity.

Sequence integration in neural activity

The above results may reflect two possible scenarios: the MEG activity might track the fluctuating tone pitches instantaneously or integrate information in the sequence over a period of time. Next, we tested whether MEG activity at a given moment depended not only on the current tone pitch but also on the history of preceding tone pitches.

To this end, we regressed MEG activity after the onset of a given tone (using a 50-ms-long sliding window applied to full-band data) onto the pitch values of the previous k′ tones, for each value of k′ ranging from 0 to 15. We determined which value of k′ provided the best fit to the data at each sensor and time window using a cross-validation approach (see Materials and Methods, “History tracking analysis” section). The cross-validated k′ value thus describes the length of sequence history that affects neural activity at a given moment. A schematic of this analysis is shown in Figure 4A. We performed this analysis using both conventional baseline-corrected tone ERFs and MEG activity without baseline correction. The former reveals whether the evoked response to a given tone is modulated by preceding tones. However, applying baseline correction also removes any potential effect in neural activity that accumulates over the course of sequence processing. Such ongoing (i.e., non-baseline-corrected) activity is a good candidate for carrying a continuously updated prediction for the upcoming tone pitch (investigated in the next section). Therefore, we also applied the regression analysis on non-baseline-corrected data.

The result using baseline-corrected MEG responses is shown in Figure 4B. As expected, k′ values for all sensors are near 0 within the first 50 ms of tone onset. However, over time, groups of sensors exhibiting sensitivity to the preceding tone sequence begin to emerge. This effect achieves statistical significance in the time window of 100–150 ms (p = 0.01, cluster-corrected; cluster size = 26, dcluster = 4.2, mean k′ in cluster = 5.4, max k′ in cluster = 7.3; red dots in Fig. 4B), when a right-lateralized group of sensors exhibit tone-evoked responses that are sensitive to the pitch of up to 7 previous tones. Therefore, the evoked response to a given tone depends on preceding tones in the sequence. This result suggests history-dependent stimulus processing and is consistent with the idea that, during neural processing of naturalistic temporally extended stimuli, past information continuously influences information processing in the present (Hasson et al., 2015).

The result using non-baseline-corrected MEG data is shown in Figure 5A. This analysis also revealed a right-lateralized sensor cluster in the 100–150 ms window exhibiting significant sensitivity to up to 7 previous tones (p = 0.001, cluster-corrected; cluster size = 25, dcluster = 5.2, mean k′ in cluster = 5.3, max k′ in cluster = 6.8), which persisted until 250 ms after tone onset (150–200 ms: p = 0.01, cluster corrected, cluster size = 21, dcluster = 3.5, mean k′ in cluster = 5.1, max k′ in cluster = 6.8; 200–250 ms: p = 0.045, cluster corrected, cluster size = 13, dcluster = 2.1, mean k′ in cluster = 5.7, max k′ in cluster = 7.0). An additional left-lateralized sensor cluster emerged in the 250–300 ms window (p = 0.017, cluster-corrected; cluster size = 19, dcluster = 3.3, mean k′ in cluster = 5.3, max k′ in cluster = 6.4). Therefore, ongoing (non-baseline-corrected) MEG activity exhibits stronger history dependence than the baseline-corrected, tone-evoked responses, suggesting a build-up effect over time that contains information integrated over sequence history.

Neural correlate of prediction

To identify neural mechanisms underlying sequence prediction, we investigated whether brain activity during the penultimate (33rd) tone (which always had a pitch of 440 Hz) contains information about the expected pitch of the upcoming final tone, p34* (see Materials and Methods, “Prediction effect” section). Because sensory input during the penultimate tone is identical across all trials, this analysis allowed a clean assessment of neural activity underlying the predictive computation without the confounds of concurrent sensory processing. Similar to the previous analysis, we investigated MEG activity both with and without baseline correction to focus on tone-evoked responses and slow fluctuations that build up over multiple tones, respectively. Using ongoing, non-baseline-corrected activity, the activity in a right lateralized sensor cluster predicted the expected final tone pitch in early (0–50 ms) and late (200–300 ms) time windows (Fig. 5B, red dots, cluster-corrected; 0–50 ms: p = 0.02, cluster size = 33, dcluster = 3.1; 200–250 ms: p = 0.001, cluster size = 53, dcluster = 6.5; 250–300 ms: p < 0.001, cluster size = 59, dcluster = 6.1). The average activity time courses in the significant sensor cluster from the 200–250 ms time window are shown in Figure 5C for trials with low, medium, and high expected final tone pitch, respectively, which exhibit a monotonic relationship with the expected pitch of the upcoming tone. No significant effect was found using baseline-corrected data. Therefore, the neural signature of prediction is carried by slow cortical dynamics that accumulates information over the tone sequence.

Sequence integration accounts for prediction

The above result reveals a neural correlate of subjects' predictions about upcoming, but yet unpresented, stimulus. A remaining important question is the computational mechanisms leading to the construction of such predictive neural activity. Given that predicting upcoming items in a sequence requires integrating information over sequence history and extrapolating based on its temporal statistical regularity, could the sequence integration effect observed in our earlier analysis directly form the basis for predictive computation? We reasoned that, if this were the case, then the MEG activity during the penultimate tone that is predicted by sequence history integration (Fig. 5A) should also be predictive of the expected final tone pitch (as assessed in Fig. 5B). This is a substantive empirical question because the results thus far are consistent with the possibility that the neural integration of tone sequence history revealed in our analysis serves no predictive function.

We investigated this question using the following analysis. For each subject, we computed the component of (non-baseline-corrected) MEG activity during penultimate tone presentation that is accounted for by stimulus history (including the present tone) based on the history dependence analysis described earlier (Fig. 5A; for details, see Materials and Methods, “Relating history-dependent MEG response with p34*” section). Call this activity MH, where the “H” superscript denotes sequence history (Fig. 6A, middle). We then computed the residual activity not accounted for by sequence history, Mres (Fig. 6A, right). Therefore, MH captures the portion of neural activity that linearly depends on tone pitch history, whereas Mres captures any additional nonlinear dependencies as well as other influences. We next repeated the above tone pitch prediction analysis by regressing MEG activity onto p34* (as in Fig. 5B), but using MH and Mres as the dependent variables.

The scalp topography of regression weights in the window of 200–250 ms are shown in Figure 6B. Consistent with our hypothesis that sequence history integration reflected in MEG activity contributes to its predictive sensitivity to the upcoming tone's expected pitch, the originally observed relationship between MEG activity and expected pitch was closely mirrored by MH, but not Mres. Using the significant sensor cluster showing a prediction effect in the 200–250 ms window (Fig. 5C, reproduced in Fig. 6C, left, using a 50 ms sliding window), we further found that the component of its activity that is attributable to sequence history (MH; Fig. 6C, middle), but not the residual activity (Mres; Fig. 6C, right), shows a linear relationship with p34*.

We quantified these observations by comparing the regression weights for p34* obtained using the original MEG activity during the penultimate tone (Morig) with those obtained using MH and Mres for the sensor clusters exhibiting a significant prediction effect in the 200–250 ms and 250–300 ms time windows (Fig. 5B, red dots). The regression weights are plotted across sensors for Morig against MH (Fig. 6D, red) and for Morig against Mres (Fig. 6D, black). There was a strong correlation between the regression weights for Morig and MH (200–250 ms: r = 0.89, p < 10−15, regression coefficient = 0.78, n = 53; 250–300 ms: r = 0.93, p < 10−15, regression coefficient = 0.79, n = 59). Therefore, the sensitivity of the MEG activity to the expected upcoming tone pitch is largely explained by its linear sensitivity to the preceding tone sequence. In contrast, the relationship between the regression weights for Morig and Mres was considerably weaker though non-negligible (200–250 ms: r = 0.48, p = 0.0003, regression coefficient = 0.22, n = 53; 250–300 ms: r = 0.56, p = 4 × 10−6 and regression coefficient = 0.21, n = 59). This suggests that the linear history dependence of the MEG activity cannot entirely explain the predictive effect, and nonlinear history dependence may carry additional prediction effect.

Overall, these results suggest that linear integration of sequence history contained in slow, arrhythmic neural activity constitutes a prominent component of predictive processing for upcoming items in the sequence.

Sequence integration weights resemble scaled optimal prediction weights

Further insight into the relationship between history-dependent processing and prediction follows from the fact that both the history-dependent MEG activity MH and the optimal predicted pitch p34* are defined as weighted sums of the preceding pitch values. Therefore, we can investigate the relationship between history-dependent processing and prediction by analyzing the relationship between the weights on the pitch sequence history that determine MH and p34*.

As shown in the Materials and Methods section “Relationship between history tracking weights and optimal prediction weights,” error in the regression of pitch-history-dependent MEG activity MH onto the expected pitch p34* depends on the discrepancy between the weights characterizing history dependence in MEG activity and a scaled version of the weights determining optimal pitch prediction (Eqs. 7 and 8), where the scaling factor is the β1H* coefficient determined by the regression of MH onto p34* (Eq. 6). Optimal prediction weights for β = 0 and β = 0.5 sequences (as defined in Eq. 1) are plotted in Figure 7A, top row. Optimal prediction weights for β = 1.01 and β = 1.5 sequences (as defined in Eq. 2) are plotted as solid lines in Figure 7A, bottom row, along with the prediction weights for the corresponding fGn sequences (dashed lines), which were used to derive the optimal prediction weights for β > 1 sequences (see Materials and Methods for details).

We investigated how history tracking weights compared with scaled optimal prediction weights in the cluster of sensors showing significant prediction effect in the 200–250 ms time window (Fig. 7B, inset, displaying the result from Fig. 5B). We computed the average k′ value in this cluster for the 200–250 ms and 250–300 ms time windows (time period with significant prediction effects; Fig. 5B), k′avg, w, and then compared averaged history tracking and scaled prediction weights across sensors in the cluster extending k′avg, w tones back. As expected, history dependence weights for MEG activity exhibited a pattern qualitatively similar to that of scaled optimal prediction weights (Fig. 7B). This suggests that modulation of neural activity due to pitch sequence history follows a pattern similar to the manner in which the expected pitch for the upcoming tone depends on pitch sequence history and that this similarity between neural history dependence and the calculation of expected pitch is what underlies the neural prediction effect.

The results in Figure 7B suggest that the pitch of the current (k = 0) and previous (k ≥ 1) tones have opposite effects on MEG activity. To quantify this effect, we computed the across-sensor lagged correlation between the weight on the current pitch, β1 (corresponding to k = 0), and the weight on pitch k tones back (k > 0), βk+1, for sensors in the prediction cluster (Fig. 7B, inset). An example correlation between β1 and β2 (corresponding to weights on the current and immediately preceding tones) for a single subject is shown in Figure 7C. For each level of lag (k), we performed a one-sample t test on the Fisher's z-transformed correlation coefficients to assess significance at the group level. We found that the lag-1 correlation was significant at both the 200–250 ms (t(10) = −3.0, p = 0.01, Cohen's d = −0.92) and 250–300 ms (t(10) = −2.3, p = 0.04, Cohen's d = −0.69) time windows (Fig. 7D), indicating that, for sensors in the significant prediction cluster, the current and previous tone pitch had opposing effects on MEG activity. The lag-4 correlation was also significant for the 200–250 ms time window (t(10) = −2.3, p = 0.04, Cohen's d = −0.69), consistent with the observation that both history tracking weight and scaled prediction weight have negative values for k = 4 (Fig. 7B).

Replication of main findings in an independent sample

To assess the robustness of our main findings, we analyzed an independent dataset from 20 additional subjects who performed a slightly modified version of the experiment (for details, see Materials and Methods, “Replication of main results in an independent sample” section).

Consistent with earlier results (Fig. 2A and Lin et al., 2016), subjects were able to discriminate sequence β (t test on z-transform of Spearman correlation coefficients between sequence β and trend strength rating, t(19) = 5.5, p = 3 × 10−5, Cohen's d = 1.23). Following the analysis of Figure 2B, we first established that subjects exhibited a behavioral prediction effect by performing a 6 (p34) × 3 (p34*) repeated-measures ANOVA on likelihood ratings for the final tone pitch. The p34 × p34* interaction was highly significant (F(10,190) = 20.4, p < 0.001, ηp2 = 0.52), reflecting the fact that subjects' likelihood ratings for the final tone pitch were sensitive to the expected pitch (Fig. 8A). The interaction can be observed by noting that, when the presented final tone pitch was low (220 Hz), subjects rated the final tone to be more likely when its expected pitch was also low (paired t test for p34* = low vs p34* = high, t(19) = 3.7, p < 0.002, difference = 0.95); in contrast, when the presented final tone pitch was high (880 Hz), subjects rated the final tone to be more likely when its expected pitch was also high (t(19) = −7.9, p = 2 × 10−7, difference = −1.66). There was also a main effect of p34 (F(5,95) = 10.1, p = 9 × 10−8, ηp2 = 0.35), reflecting the inverse U shape of the function.

Second, following the analysis of Figure 5B, we established that subjects exhibited a neural prediction effect by regressing non-baseline-corrected MEG activity during the penultimate tone onto expected final tone pitch. Replicating the key finding in the main experiment (Fig. 5B), we found a right-lateralized cluster of sensors exhibiting the prediction effect in the 200–250 ms and 250–300 ms time windows (Fig. 8B, red dots, cluster-corrected; 200–250 ms: p = 0.009, cluster size = 28, dcluster = 4.5; 250–300 ms: p = 0.004, cluster size = 34, dcluster = 5.3). In these time windows, there was also a significant left-lateralized prediction cluster (Fig. 8B, red dots, cluster-corrected; 200–250 ms: p < 0.001, cluster size = 42, dcluster = 8.6; 250–300 ms: p = 0.003, cluster size = 36, dcluster = 5.5). We found a similar left-lateralized group of sensors exhibiting a prediction effect in the same time windows in the main experiment (Fig. 5B), although this group of sensors did not pass the significance threshold established by cluster correction in the main experiment.

Third, following the analysis of Figure 6D, we established that the prediction effect is related to the linear history tracking effect. As in Figure 6, we partitioned the original MEG data, Morig, into the component accounted for by history tracking, MH, and the residual, Mres. We then compared the regression weights obtained by regressing Morig, MH, and Mres onto p34* for the 200–250 ms and 250–300 ms time windows. The regression weights are plotted for Morig against MH (Fig. 8C, red) and for Morig against Mres (Fig. 8C, black), separately for the right- and left-lateralized significant prediction clusters (top and bottom rows of Fig. 8C, respectively). For both clusters, there was a strong correlation between the regression weights for Morig and MH (right cluster, 200–250 ms: r = 0.81, p < 3 × 10−7, regression coefficient = 0.63, n = 28; right cluster, 250–300 ms: r = 0.93, p < 3 × 10−15, regression coefficient = 0.92, n = 34; left cluster, 200–250 ms: r = 0.80, p < 3 × 10−10, regression coefficient = 0.75, n = 42; left cluster, 250–300 ms: r = 0.85, p < 9 × 10−11, regression coefficient = 0.76, n = 36). Therefore, this result reproduces the original finding in the main experiment (Fig. 6D) that the sensitivity of the MEG activity to the expected upcoming tone pitch is largely explained by its linear sensitivity to the preceding tone sequence. In addition, as in the main experiment, the relationship between the regression weights for Morig and Mres was considerably weaker though non-negligible (right cluster, 200–250 ms: r = 0.63, p < 4 × 10−4, regression coefficient = 0.37, n = 28; right cluster, 250–300 ms: r = 0.21, p = 0.24, regression coefficient = 0.08, n = 34; left cluster, 200–250 ms: r = 0.40, p = 0.009, regression coefficient = 0.25, n = 42; left cluster, 250–300 ms: r = 0.45, p = 0.006, regression coefficient = 0.24, n = 36).

In summary, the primary behavioral and neural findings pertaining to pitch prediction and its relationship to pitch history tracking (Figs. 2B, 5B, 6D) replicated in a second independent dataset (Fig. 8), demonstrating the robustness of these findings.

Discussion

In summary, we used precisely constructed tone sequences with temporal statistical regularities representative of temporally varying stimuli in the natural environment to investigate predictive processing in the human brain. We first established that humans can form valid predictions about upcoming items in such sequences by extrapolating from sequence history. We then reveal the underlying neural mechanisms for such predictive processing by showing that: (1) these naturalistic stimuli consistently modulate slow, arrhythmic neural activity; (2) ongoing neural activity predicts the expected pitch of the upcoming tone; and (3) such predictive activity is constructed by neural integration of sequence history. These findings further our understanding about predictive computations in the human brain significantly and reveal an important role for slow, arrhythmic brain activity in the processing and prediction of naturalistic stimuli.

We observed that the phase of arrhythmic neural activity in the <10 Hz range tracked pitch fluctuation in a tone sequence. This observation generalizes a previous finding showing that, when tone sequences with 1/fβ pitch fluctuation are amplitude modulated at 40 Hz, the phase of a 40 Hz entrained neural oscillation tracks the evolving pitch sequence (Patel and Balaban, 2000). Here, we show that, in the absence of such artificial amplitude modulation, the phase of low-frequency arrhythmic neural activity tracks the evolving pitch sequence. In addition, we demonstrate that slow, ongoing neural activity that accumulates information over multiple tones plays a crucial role in integrating sequence history and predicting upcoming stimulus input. These findings complement previous research showing phase locking of low-frequency neuronal oscillations to rhythmic stimuli, which may align high-excitability phases to events within a stimulus stream and thereby enhance perceptual sensitivity (Schroeder and Lakatos, 2009). Such phase locking to periodic stimuli (a tone every 300 ms) was also evident in our data (Fig. 3Ciii). However, our results show that the processing of an evolving sequence of pitches over multiple tones was carried by slow, arrhythmic neural activity (Fig. 3Cii). This slow, arrhythmic neural activity was previously shown to have behavioral, developmental, and clinical significance (Monto et al., 2008; He et al., 2010; He, 2014; Voytek et al., 2015; Lin et al., 2016). Our results significantly extend this prior literature by demonstrating that it serves an important role in processing and predicting natural stimuli, consistent with an earlier hypothesis (He et al., 2010).

The current study advances our understanding of the neural mechanisms of prediction in two important directions. First, our results establish that human subjects can make valid predictions of upcoming stimulus features for naturalistic stimulus sequences by extrapolating from their temporal statistical regularities and reveal a neural correlate for such predictions. Second, we describe a mechanism for how the content of prediction is constructed based on neural processing of sequence history. Below we elaborate on these findings.