Abstract

Two foundational questions about sustainability are “How are ecosystems and the services they provide going to change in the future?” and “How do human decisions affect these trajectories?” Answering these questions requires an ability to forecast ecological processes. Unfortunately, most ecological forecasts focus on centennial-scale climate responses, therefore neither meeting the needs of near-term (daily to decadal) environmental decision-making nor allowing comparison of specific, quantitative predictions to new observational data, one of the strongest tests of scientific theory. Near-term forecasts provide the opportunity to iteratively cycle between performing analyses and updating predictions in light of new evidence. This iterative process of gaining feedback, building experience, and correcting models and methods is critical for improving forecasts. Iterative, near-term forecasting will accelerate ecological research, make it more relevant to society, and inform sustainable decision-making under high uncertainty and adaptive management. Here, we identify the immediate scientific and societal needs, opportunities, and challenges for iterative near-term ecological forecasting. Over the past decade, data volume, variety, and accessibility have greatly increased, but challenges remain in interoperability, latency, and uncertainty quantification. Similarly, ecologists have made considerable advances in applying computational, informatic, and statistical methods, but opportunities exist for improving forecast-specific theory, methods, and cyberinfrastructure. Effective forecasting will also require changes in scientific training, culture, and institutions. The need to start forecasting is now; the time for making ecology more predictive is here, and learning by doing is the fastest route to drive the science forward.

Keywords: forecast, ecology, prediction

There are two fundamental questions at the heart of sustainability: “How are ecosystems and the services they provide going to change in the future?” and “How do human decisions affect this trajectory?” Until recently, we could reasonably rely on observed means and variability as the frame of reference for sustainable environmental management (e.g., 100-y floods, historical species ranges). However, the current pace of environmental change is moving us outside the envelope of historic variation (1). It is becoming increasingly apparent that we cannot rely on historical variation alone as the basis for future management, conservation, and sustainability (2, 3).

Addressing these challenges requires ecological forecasts—projections of “the state of ecosystems, ecosystem services, and natural capital, with fully specified uncertainties” (4). Decisions are made every day that affect the health and sustainability of socioenvironmental systems, and ecologists broadly recognize that better informed decision-making requires making their science more useful and relevant beyond the disciplinary community (5, 6). Advancing ecology in ways that make it more useful and relevant requires a fundamental shift in thinking from measuring and monitoring to using data to anticipate change, make predictions, and inform management actions.

Decision-making is fundamentally a question about what will happen in the future under different scenarios and decision alternatives (6), making it inherently a forecasting problem. Such forecasts are happening, but there is currently a mismatch between the bulk of ecological forecasts, which are largely scenario-based projections focused on climate change responses on multidecadal time scales, and the timescales of environmental decision-making, which tend to require near-term (daily to decadal) (7, 8) data-initialized predictions, as well as projections that evaluate decision alternatives. The overall objective of this paper is to focus attention on the need for iterative near-term ecological forecasts (both predictions and projections) that can be continually updated with new data. Rather than reviewing the current state of ecological forecasting (9), we focus on the opportunities and challenges for such forecasts and provide a roadmap for their development.

Recent changes make the time particularly ripe for progress on iterative near-term forecasting. On the scientific side, there has been a massive increase in the accumulation and availability of data, as well as advances in statistics, models, and cyberinfrastructure that facilitate our ability to create, improve, and learn from forecasts. At the same time, the societal demand for ecological forecasts, which was already high, is growing considerably. Near-term ecological forecasts are already in use in areas such as fisheries, wildlife, algal blooms, human disease, and wildfire (Table 1). As demonstrated by the 21st Conference of Parties in Paris, where a majority of nations adopted the Paris Agreement under the United Nations Framework Convention on Climate Change, international recognition of climate change has finally shifted in favor of action. With this comes a greater mandate to manage for novel conditions, and a shift in research needs from detection to mitigation and adaptation, which require accurate forecasts of how systems will respond to intervention. Key priority areas include forecasting the following: ecological responses to droughts and floods; zoonotic and vector-borne diseases; plant pathogen and insect outbreaks; changes in carbon and biogeochemical cycles; algal blooms; threatened, endangered, and invasive species; and changes in phenological cycles.

Table 1.

Examples of iterative near-term ecological forecasts

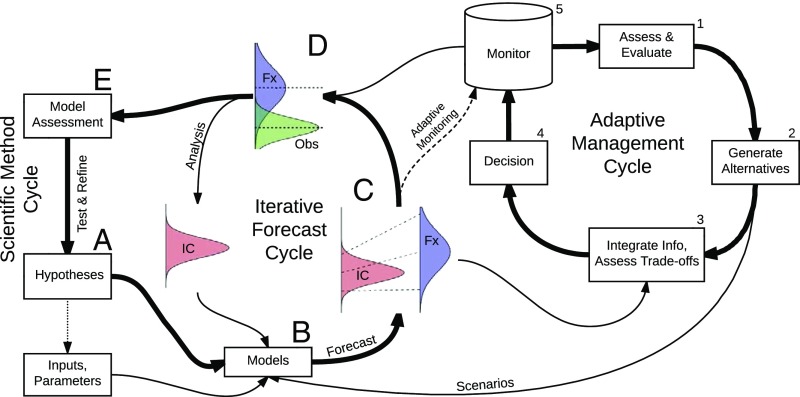

In addition to having greater societal relevance, refocusing ecological forecasting on the near-term also provides greater benefits to basic science. At the core of the scientific method is the idea of formulating a hypothesis, generating a prediction about what will be observed, and then confronting that prediction with data (Fig. 1 A–E). Most scientists view this process as inherently iterative, with our understanding of the natural world continually being refined and updated. Models embody this understanding and make precise our underlying hypotheses. Thus, by using models to make testable quantitative predictions, iterative forecasting is an approach that can represent the scientific method. Quantitative predictions can also provide a more rigorous test of a hypothesis than is commonly found in traditional null-hypothesis testing, where the default null is often trivial (e.g., no effect) and ignores what we already know about a system. Furthermore, by making out-of-sample predictions about a yet-to-be-observed future, ecological forecasts provide a stronger test than in-sample statistical testing and are less susceptible to overfitting, which can arise covertly through multiple comparisons or subconsciously because those building the model have already seen the data.

Fig. 1.

Conceptual relationships between iterative ecological forecasting, adaptive decision-making, and basic science. Hypotheses (A) are embedded in models (B) that integrate over uncertainties in initial conditions (IC), inputs, and parameters to make probabilistic forecasts (Fx, C), often conditioned on alternative scenarios. New observations are then compared with these predictions (D) to update estimates of the current state of the system (Analysis) and assess model performance (E), allowing for the selection among alternative model hypotheses (Test and Refine). Analysis and partitioning of forecast uncertainties facilitates targeted data collection (dotted line) and adaptive monitoring (dashed line). In decision analysis, alternative decision scenarios are generated (2) based on an assessment of a problem (1). Since decisions are based on what we think will happen in the future, forecasts play a key role in assessing the trade-offs between decision alternatives (3). Adaptive decisions (4) lead to an iterative cycle of monitoring (5) and reassessment (1) that interacts continuously with iterative forecasts.

An iterative forecasting approach also has the potential to accelerate the pace of basic ecological research. Because forecasts embody the current understanding of system dynamics, every new measurement provides an opportunity to test predictions and improve models. That said, ecologists do not yet routinely compare forecasts to new data, and thus are missing opportunities to rapidly test hypotheses and get needed feedback about whether predictions are robust. Near-term forecasts provide the opportunity to iteratively test models and update predictions more quickly (10, 11). Research across widely disparate disciplines has repeatedly shown that this iterative process of gaining feedback, building experience, and correcting models and methods is critical for building a predictive capacity (12, 13).

While examples of iterative ecological forecasts do exist (Table 1), there has been no coordinated effort to track the different ecological forecasts in use today and independently assess what factors have made them successful or how they have improved over time. A quantitative assessment of iterative ecological forecasts is severely limited by a lack of open forecast archiving, uncertainty quantification, and routine validation. We can contrast this with weather forecasting, where skill has improved steadily over the past 60 y. Weather forecasts have benefitted not only from technological advances in measurement and computation, but also from insights and improvements that came from continually making predictions, observing results, and using that experience to refine models, measurements, and the statistics for fusing data and models (20, 21). Initial numerical weather forecasts were unspectacular, but rather than retreating to do more basic research until their models were “good enough,” meteorologists embraced an approach focused on “learning by doing” and leveraging expert judgment that accelerated both basic and applied research (22, 23). By contrast, in ecology the prevailing opinion about forecasting has been both pessimistic and conservative, insisting that “biology is too complex” and demanding that any forecasting model work optimally before it is applied (24, 25). Given our rapidly changing world, we can no longer afford to wait until ecological models are “good enough” before we start forecasting. We need to start forecasting immediately: learning by doing is the fastest route to drive science forward and provide useful forecasts that support environmental management decisions.

Herein, we articulate the needs, opportunities, and challenges in developing the capacity for iterative near-term ecological forecasts. Specifically, we provide a roadmap for rapid advancement in iterative forecasting that looks broadly across data, theory, methods, cyberinfrastructure, training, institutions, scientific culture, and decision-making. Overall, taking an iterative near-term forecasting approach will improve science more rapidly and render it more relevant to society. Despite the challenges, ecologists have never been better poised to take advantage of the opportunities for near-term forecasting.

Data

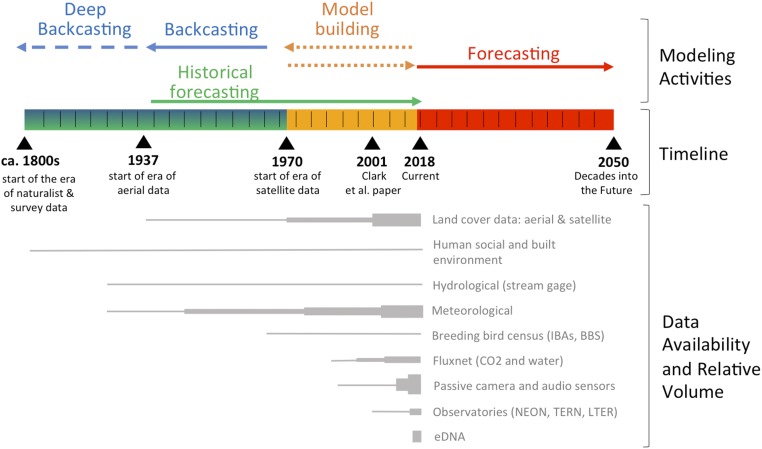

Updating forecasts iteratively requires data. Fortunately, many types of ecological data are increasing rapidly in volume and variety (Fig. 2) (26). In addition, key abiotic model drivers, such as weather forecasts, are also improving, particularly at timescales of weeks to years that are relevant to near-term ecological forecasting. At the same time, scientific culture is evolving toward more open, and in some cases real-time, data. Of particular value for forecasting is the growing fraction of data that comes from coordinated efforts, such as national networks [e.g., National Ecological Observatory Network (NEON), Terrestrial Ecosystem Research Network], global community consortiums [e.g., International Long-Term Ecological Research, FLUXNET, Global Lakes Ecological Observatory Network (GLEON)], open remote sensing (27), grassroots experiments (e.g., NutNet), and citizen science initiatives (28). While some problems will remain data-limited, collectively these trends represent important new opportunities. In what follows, we assess four characteristics of data that are useful for iterative forecasting and address the challenges and opportunities for each: repeated sampling, interoperability, low data latency, and uncertainty reporting.

Fig. 2.

Forms of model–data integration (Top) and the availability of environmental data (Bottom) through time. Changes in relative data volume indicated by line width.

Repeated measurements over time are the first requirement for iterative forecasting. More measurements mean more opportunities to update model results and improve model performance. However, many forecasting problems also require spatial replication, which leads to a trade-off where an increase in temporal frequency usually comes at a compromise on spatial resolution or replication. This can lead to mixed designs, such as measuring subsets of variables and/or sites at higher frequencies to assist with extrapolating back to a larger network. Optimizing this trade-off between space and time requires a better understanding of the spatial and temporal autocorrelation and synchrony in ecological responses (29), as this controls the uncertainties in interpolating between sites and scaling-up forecasts.

When making repeated measurements, iterative forecasting also provides opportunities to collect data in more targeted ways that can lead to improved inference (e.g., more accurate forecasts) at reduced costs (30, 31). First, dynamic adaptive monitoring approaches (Fig. 1) can vary when and where data are collected in response to forecast uncertainty estimates (32). Second, uncertainty analyses can identify which measurements are most crucial to reducing uncertainties (33, 34). Finally, value-of-information analyses can assess the sensitivity of decision-making to forecast uncertainties to identify where better forecasts would improve decision trade-offs (35, 36).
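As a rough illustration of a value-of-information calculation, the sketch below computes the expected value of perfect information (EVPI) for a two-action, two-state decision. The bloom-management scenario, the payoff table, and the function name are hypothetical and chosen only for illustration; real analyses would use elicited utilities and full forecast distributions.

```python
def expected_value_of_perfect_information(payoffs, probs):
    """EVPI = E[best payoff once the state is known] - best expected payoff now.

    payoffs maps each action to a list of payoffs, one per state;
    probs gives the forecast probability of each state.
    """
    # Best action if we must decide using only the forecast probabilities.
    best_under_uncertainty = max(
        sum(p * u for p, u in zip(probs, row)) for row in payoffs.values()
    )
    # Expected payoff if the true state were revealed before deciding.
    with_perfect_info = sum(
        p * max(row[s] for row in payoffs.values()) for s, p in enumerate(probs)
    )
    return with_perfect_info - best_under_uncertainty


# Hypothetical payoffs (arbitrary cost units) for the states [bloom, no bloom].
payoffs = {"treat": [-20.0, -20.0], "wait": [-100.0, 0.0]}

evpi_uncertain = expected_value_of_perfect_information(payoffs, [0.30, 0.70])
evpi_confident = expected_value_of_perfect_information(payoffs, [0.05, 0.95])
print(evpi_uncertain, evpi_confident)
```

In this toy table, resolving the uncertainty is worth about 14 units when the bloom probability is 0.30 but only about 4 units when it is 0.05, which is the sense in which such analyses indicate where a better forecast would change the decision trade-off.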

The second data challenge is interoperability, the ability of one system to work with or use the parts of another system. Interoperability affects both efforts to combine different data sources and the continuity of data sources as instruments change (i.e., harmonization). Technical requirements for interoperability include data standards (variable names, units, etc.) and data formatting and communication protocols to enable machine-to-machine exchange. There are also semantic challenges, due to the use of the same terms to represent different concepts or different terms to describe the same concept, that can be mitigated through machine-readable ontologies that describe the relationships among variables and help translate information between data standards. However, increasing data interoperability goes beyond just information technologies (37). Organizational barriers can arise due to issues related to institutional responsibility for, and authority over, data. Similarly, cultural barriers can occur due to incompatibilities in how people work collaboratively, value and manage data ownership, and handle data latency.

The third data challenge is latency, which is defined as the time between data collection and data availability. Latency on a timescale similar to the ecological processes of interest can significantly limit the ability to make forecasts. Latency increases when measurements require laboratory analyses or have complicated processing and quality assurance/quality control requirements. Forecasts would benefit from shared standards among commercial laboratories and competition to meet timely and strict contractual obligations. For all data, long embargo periods reduce the accuracy of forecasts, potentially rendering them obsolete before they can even be made. For example, high frequency limnology data typically take 2–5 y to appear in publications (38). Data embargo is driven, in part, by concern that data collectors will not receive sufficient credit (39), as well as concerns about releasing data that has not been fully vetted. For the former, ensuring proper attribution is important for maximizing the potential of ecological forecasting. In the latter case, latency can be reduced through a tiered system that clearly distinguishes provisional (but rapid) data from final products.

A fourth issue is data uncertainty reporting. Measurement errors set an upper bound on our ability to validate predictions—beyond this point, we cannot assess model performance or distinguish between competing hypotheses. It is also impossible to assimilate data into forecasts (Fig. 1, Analysis) without estimates of the uncertainty, such as sampling variability, random and systematic instrument errors, calibration uncertainty, and classification errors (e.g., species identification). Because iterative forecasts combine the previous forecast with the data, each weighted by their respective uncertainties, data with incomplete error accounting will be given too much weight, potentially biasing results and giving falsely overconfident predictions. An outstanding challenge is that there are no general standards for reporting measurement uncertainty, and robust uncertainty estimates tend to be the exception rather than the rule.
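The weighting described above can be made concrete with a minimal sketch of a scalar Gaussian update, in which the forecast and the observation are each weighted by the inverse of their variance. The Gaussian assumption, the function name, and all numbers are illustrative assumptions rather than anything prescribed in this paper.

```python
def gaussian_update(prior_mean, prior_var, obs, obs_var):
    """Combine a Gaussian forecast (prior) with one Gaussian observation."""
    weight = prior_var / (prior_var + obs_var)  # weight given to the observation
    post_mean = prior_mean + weight * (obs - prior_mean)
    post_var = (1.0 - weight) * prior_var
    return post_mean, post_var, weight


# Hypothetical numbers: forecast of 10.0 (variance 4.0), observation of 14.0.
full = gaussian_update(10.0, 4.0, 14.0, obs_var=4.0)     # complete error budget
partial = gaussian_update(10.0, 4.0, 14.0, obs_var=1.0)  # errors under-reported
print(full)
print(partial)
```

With the complete error budget, the observation receives a weight of 0.5. When its variance is under-reported, the weight rises to 0.8, the updated state is pulled further toward the observation, and the reported posterior variance shrinks, which is the falsely overconfident outcome described in the text.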

Theory and Methods

Although data are critical to detect change, data by themselves cannot be used to anticipate change; making forecasts requires that data be fused with models. Models translate what we know now into what we anticipate will happen, either in the future, at a new location, or under different decision scenarios. That said, there are a wide range of models that are potentially useful for forecasting, ranging from simple statistical models to complex simulations that integrate physical, chemical, and ecological theory. While there are well-established trade-offs related to model complexity (40), we focus on three important forecasting challenges and opportunities: predictability and forecast uncertainty; model assessment; and model–data assimilation.

Predictability and Forecast Uncertainty.

Because there is no such thing as a “perfect” forecast, it is critical that ecological forecasts be probabilistic. A probability distribution of possible outcomes captures forecast uncertainty, and rather than reflecting doubt and ignorance, its calculation should arise from a careful and quantified accounting of the data in hand, the past performance of predictive models, and the known variability in natural processes (9). Uncertainty is at the core of how people evaluate risk and make decisions. This makes ecological forecasts crucial under high uncertainty and a cornerstone of adaptive management (41). As we embark on the path of learning by doing, we must have every expectation that early forecasts will be poor and associated with high uncertainty. However, even if the amount of variance that can be explained is relatively low, failure to include important sources of uncertainty results in falsely overconfident predictions, which erodes trust in ecological forecasts (42) and provides inadequate inputs for risk-based decision-making.

Ecological research aimed at understanding the limits to ecological predictability is in its infancy (24, 43). Because forecast uncertainty increases with lead time, a key part of understanding predictability lies in understanding how fast uncertainty increases. Multiple sources of uncertainty (initial conditions, covariates/drivers, parameters, process variability, model structure, stochasticity, rare events; Fig. 1B) contribute to forecast uncertainty, and the importance of each is moderated by the sensitivity of the system to each factor (9, 43). Our understanding of a system, and our ability to forecast it, is strongly influenced by which term dominates forecast error. Knowledge about the dominant sources of uncertainty can also have broad impacts on data collection, model development, and statistical methods across a whole discipline. For example, weather forecasts are dominated by initial condition uncertainty (i.e., uncertainty about the current state of the atmosphere) because the high sensitivity to initial conditions causes similar initial states to diverge rapidly through time. As a result, billions of dollars are invested every year collecting weather data primarily to better constrain the initial-state estimates in weather models, and a whole discipline of atmospheric model–data assimilation is dedicated to improving how models ingest these data (20).
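To illustrate how the dominant source of uncertainty can depend on a system's sensitivity, the following sketch tracks forecast variance with lead time for a deliberately simple scalar linear model, x[t+1] = a * x[t] + e. The model, the parameter values, and the restriction to only two uncertainty sources (initial conditions and process error) are assumptions made for transparency; a value of |a| > 1 is only a crude stand-in for the sensitivity to initial conditions seen in weather models.

```python
def forecast_variance(a, ic_var, process_var, horizon):
    """Variance contributions at lead times 1..horizon for
    x[t+1] = a * x[t] + e, with e ~ N(0, process_var).

    Returns two lists: the initial-condition part and the process-error part.
    """
    ic_part, process_part = [], []
    ic, proc = ic_var, 0.0
    for _ in range(horizon):
        ic = a * a * ic                    # initial-condition variance, damped or amplified
        proc = a * a * proc + process_var  # process error accumulated so far
        ic_part.append(ic)
        process_part.append(proc)
    return ic_part, process_part


# Hypothetical stable system (|a| < 1): initial-condition uncertainty fades.
stable_ic, stable_proc = forecast_variance(a=0.5, ic_var=1.0, process_var=0.1, horizon=5)
# Hypothetical unstable system (|a| > 1): initial-condition uncertainty grows fastest.
unstable_ic, unstable_proc = forecast_variance(a=1.5, ic_var=1.0, process_var=0.1, horizon=5)

for lead, (ic, proc) in enumerate(zip(stable_ic, stable_proc), start=1):
    print(lead, round(ic, 4), round(proc, 4))
```

In the stable case, process error overtakes initial-condition uncertainty after the first step, whereas in the unstable case initial-condition uncertainty dominates at every lead time, mirroring the contrast drawn above between weather forecasting and many ecological systems.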

By contrast, there is unlikely to be a single source of uncertainty that dominates across all of ecology. A practical way forward is to develop a comparative approach to predictability by empirically classifying a wide range of ecological systems based on which forecast uncertainties dominate their predictions (9, 43). Do the dominant sources of uncertainty vary by ecological subdisciplines, trophic levels, functional groups, or spatial, temporal, or phylogenetic scales? Answers to these questions provide a high-level means of organizing our understanding of ecological processes and will lead to new theories and research directions. The answers are also practical—given that we cannot measure everything everywhere, such patterns help determine what we can predict and how to direct limited resources when encountering new problems.

Model Assessment and Improvement.

When making ecological forecasts, our current knowledge and theories are embedded in the models we use. Model improvement, however, is not guaranteed simply by making forecasts; model results need to be compared with data and their accuracy evaluated (Fig. 1E). While a wide variety of skill scores are used by ecologists for model assessment [e.g., R², root-mean-square error (RMSE), area under the curve], statisticians advocate the use of proper and local scores, such as deviance, to assess predictive ability (44). A local score depends on data that could actually be collected, while a proper score is one based on the statistical model used for inference and calibration. For example, because maximizing a Gaussian likelihood minimizes RMSE, RMSE would be a proper score for a model calibrated under a Gaussian likelihood. By contrast, mean absolute error would not be proper for this likelihood because another model could score better than the best-fit model. Formalizing repeatable model–data comparisons into explicit model benchmarks (45) can facilitate tracking skill over time and across models.
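The contrast between RMSE and mean absolute error can be seen in a small sketch. Here two constant-prediction "models" are scored against hypothetical observations: the sample mean (the best fit under a Gaussian likelihood) and the sample median. The data, the fixed standard deviation used in the deviance, and the function names are illustrative assumptions.

```python
import math
import statistics


def rmse(pred, obs):
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs))


def mae(pred, obs):
    return sum(abs(p - o) for p, o in zip(pred, obs)) / len(obs)


def gaussian_deviance(pred, sd, obs):
    """-2 * log-likelihood of obs under N(pred, sd^2)."""
    return sum(
        ((o - p) / sd) ** 2 + math.log(2 * math.pi * sd ** 2)
        for p, o in zip(pred, obs)
    )


obs = [1.0, 2.0, 3.0, 4.0, 20.0]                  # hypothetical, right-skewed data
mean_model = [statistics.mean(obs)] * len(obs)     # Gaussian best fit for a constant
median_model = [statistics.median(obs)] * len(obs)

for name, pred in [("mean", mean_model), ("median", median_model)]:
    print(name, rmse(pred, obs), mae(pred, obs), gaussian_deviance(pred, 5.0, obs))
```

The mean model wins on RMSE and Gaussian deviance, as expected for the model fitted under that likelihood, yet the median model wins on mean absolute error. Ranking Gaussian-calibrated models by mean absolute error could therefore favor a model other than the best fit.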

When assessing forecast skill, it is also useful to compare forecasts against an appropriate null model (46). Unlike statistical null models (e.g., slope = 0), the appropriate null for forecasts is often more sophisticated. The range of natural/historical variability (47), which may come from long-term means and variances (e.g., disturbance regimes), natural cycles (e.g., diurnal, seasonal), or first-principle constraints, provides an important null model: the ecological equivalent of comparing a weather forecast to the long-term climatology.
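One common way to express such a comparison is a skill score that measures the reduction in mean squared error relative to the null. The sketch below assumes a climatology-style null (a constant historical mean); the numbers and function names are hypothetical.

```python
def mse(pred, obs):
    return sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)


def skill_score(forecast, null, obs):
    """1 = perfect forecast, 0 = no better than the null, < 0 = worse than the null."""
    return 1.0 - mse(forecast, obs) / mse(null, obs)


obs = [12.0, 15.0, 11.0, 14.0]       # hypothetical new observations
forecast = [11.0, 14.0, 12.0, 13.0]  # hypothetical model forecast
null = [10.0] * len(obs)             # hypothetical historical mean ("climatology")

skill = skill_score(forecast, null, obs)
print(round(skill, 3))
```

A positive score indicates that the forecast adds information beyond historical variability. A score near or below zero suggests that the long-term mean would have served decision-makers equally well.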

Beyond refuting a null model, a combination of model assessment and exploring alternative model structures (either separate models or variants of a single model) is essential for testing hypotheses and improving models (e.g., identifying time lags, feedbacks, and thresholds). However, the concept of parsimony and the statistics of model selection tell us that the “best” model is not always the one that minimizes error (44). Beyond the well-known admonition to avoid overfitting, forecasting requires that we balance forecast skill (e.g., minimizing RMSE) and forecast uncertainty. Forecast uncertainty increases with the number of model parameters, state variables, and inputs/drivers, particularly if there are large uncertainties associated with any of these (43). Forecasters need to moderate the urge to solve every problem by adding model complexity and rely on formal model selection criteria that account for multiple uncertainties.

Finally, it is important to remember that some human responses to a forecast (e.g., management) may influence the outcome, causing the original forecast to be wrong and eliminating our ability to validate the forecast. Care must be taken when validating such models, such as through extensive code testing, validation of the model components, and carefully constructed counterfactuals (i.e., demonstrating that reality is now different from what would have happened without the action).

Model–Data Assimilation and the Forecast–Analysis Cycle.

The increased feasibility of iterative forecasting is not solely driven by increased data availability. Methodologically, the past decade has seen considerable advances in and uptake of sophisticated approaches for bringing models and data together (4). Bayesian methods are particularly relevant to iterative forecasting because Bayes’ theorem is fundamentally an iterative approach to inference—our prior beliefs are updated based on the likelihood of new data, generating a posterior that can be used as the next prior. Bayesian methods also provide a means to make forecasts when data are very sparse (48). Specifically, the use of informative priors, such as through expert elicitation (49), generates a starting point for chronically data-limited problems (e.g., invasive species, emergent diseases) to make formal predictions with uncertainties, and then to iteratively refine these predictions as data become available. Furthermore, Bayesian methods offer a flexible approach for partitioning and propagating uncertainties. In particular, the Bayesian state space framework (a hierarchical model; e.g., hidden Markov model) (50) is frequently used to fit models because it explicitly separates observation and process errors. It can also combine multiple sources of information that inform the same or related processes, as different datasets can have different observation error models but share the same process model. Important special cases of the state space framework include nonlinear iterative data assimilation methods, such as sequential Monte Carlo/particle filters and ensemble filters, which are increasingly being employed with both statistical and process-based models (9).
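The iterative character of Bayes' theorem can be sketched with a conjugate Beta-Binomial model, in which each survey's posterior becomes the prior for the next. The occupancy-survey framing, the prior, and the counts are hypothetical; the conjugate model is chosen only because its update can be written in one line.

```python
def update_beta(prior, successes, failures):
    """Conjugate update of a Beta(a, b) prior with binomial data."""
    a, b = prior
    return (a + successes, b + failures)


# Hypothetical informative prior (e.g., from expert elicitation): mean 0.2.
prior = (2.0, 8.0)
# Hypothetical surveys: (occupied plots, unoccupied plots).
surveys = [(3, 7), (5, 5), (4, 6)]

posterior = prior
for occupied, unoccupied in surveys:
    # Yesterday's posterior is today's prior.
    posterior = update_beta(posterior, occupied, unoccupied)

# Analysing all surveys at once gives the same answer.
batch = update_beta(prior, 12, 18)
posterior_mean = posterior[0] / (posterior[0] + posterior[1])
print(posterior, batch, posterior_mean)
```

The sequential and batch results agree, so nothing is lost by updating as data arrive. The informative prior also supplies a usable estimate with uncertainty before any survey has been run.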

More generally, data assimilation techniques are used in iterative forecasting as part of the forecast–analysis cycle (Fig. 1). During the forecast step, predictions are made probabilistically that integrate over the important sources of uncertainty (e.g., initial conditions, parameters, drivers/covariates). Next, when new data are collected, the state of the system is updated during the analysis step, treating the probabilistic forecast as the Bayesian prior. A challenge in applying the forecast–analysis cycle in ecology is that not all data assimilation techniques make the same assumptions, and the uncritical adoption of tools designed for different uncertainties (e.g., initial condition uncertainty in atmospheric data assimilation) will likely meet with limited success. For example, hierarchical and random-effect models allow ecologists to better account for the heterogeneity and inherent variability in ecological systems (e.g., by letting parameters vary in space and time), and to partition inherent stochasticity (e.g., disturbance) from model uncertainty (50, 51). Such approaches are increasingly used by ecologists for data analysis, but have largely been absent from data assimilation because they are not the dominant uncertainties in weather forecasting.
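A minimal sketch of the forecast–analysis cycle, using a bootstrap particle filter (one of the sequential Monte Carlo methods mentioned earlier), is given below. The discrete logistic growth model, the Gaussian observation error, and every parameter value and observation are assumptions chosen for illustration, and only initial-condition and process uncertainty are represented.

```python
import math
import random
import statistics


def forecast_step(particles, r, k, process_sd, rng):
    """Advance each particle one step: logistic growth plus process error."""
    return [
        max(x + r * x * (1.0 - x / k) + rng.gauss(0.0, process_sd), 0.0)
        for x in particles
    ]


def analysis_step(particles, obs, obs_sd, rng):
    """Weight particles by the Gaussian likelihood of obs, then resample."""
    weights = [math.exp(-0.5 * ((obs - x) / obs_sd) ** 2) for x in particles]
    return rng.choices(particles, weights=weights, k=len(particles))


rng = random.Random(42)
# Uncertain initial state (hypothetical abundance).
particles = [rng.gauss(20.0, 5.0) for _ in range(2000)]
observations = [30.0, 40.0, 50.0]  # hypothetical new data, one per time step

history = []
for obs in observations:
    particles = forecast_step(particles, r=0.3, k=100.0, process_sd=2.0, rng=rng)
    forecast_mean = statistics.mean(particles)  # the forecast acts as the prior
    particles = analysis_step(particles, obs, obs_sd=3.0, rng=rng)
    analysis_mean = statistics.mean(particles)  # updated state estimate
    history.append((forecast_mean, obs, analysis_mean))

for row in history:
    print([round(v, 2) for v in row])
```

Each pass through the loop makes a probabilistic forecast, confronts it with a new observation, and passes the updated ensemble on as the starting point for the next forecast. Parameter and driver uncertainty, which the text identifies as important in ecology, would need to be added to the particles for a realistic application.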

Another important data assimilation challenge is that ecological models can have large structural uncertainties (i.e., that first-principles governing equations are unknown). An important way to account for structural uncertainties is to forecast using multiple models. This approach is particularly important when there are multiple competing hypotheses about long-term dynamics that are all consistent with current short-term data. Multimodel forecasts tend to perform better than individual models because structural biases in models may cancel out (52). Often multimodel approaches use unweighted ensembles (all models count equally), but Bayesian model averaging can be used to obtain weighted forecasts with improved predictive skill (44, 53). Finally, forecasting competitions, targeted grants, and repositories of ecological forecasts represent possible mechanisms for promoting a diverse community of forecasting approaches.
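The difference between unweighted and weighted multimodel forecasts can be sketched as follows. The weights here are computed from each model's log predictive likelihood on past data under equal prior model probabilities, which is a simplification of full Bayesian model averaging (which uses marginal likelihoods). The three models, their scores, and their forecasts are hypothetical.

```python
import math


def model_weights(log_likelihoods):
    """Normalized weights proportional to exp(log-likelihood), equal model priors."""
    top = max(log_likelihoods)  # subtract the maximum for numerical stability
    unnormalized = [math.exp(ll - top) for ll in log_likelihoods]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def mixture_forecast(means, variances, weights):
    """Mean and variance of a weighted mixture of model forecasts."""
    mean = sum(w * m for w, m in zip(weights, means))
    # Total variance = within-model variance + between-model spread.
    var = sum(w * (v + (m - mean) ** 2) for w, m, v in zip(weights, means, variances))
    return mean, var


log_likelihoods = [-10.0, -11.0, -14.0]  # hypothetical past predictive performance
means = [5.0, 7.0, 12.0]                 # hypothetical forecasts from three models
variances = [1.0, 1.0, 1.0]

weights = model_weights(log_likelihoods)
bma_mean, bma_var = mixture_forecast(means, variances, weights)
equal_mean, equal_var = mixture_forecast(means, variances, [1.0 / 3.0] * 3)
print([round(w, 3) for w in weights], round(bma_mean, 2), round(equal_mean, 2))
```

The weighted forecast leans toward the models with the best track record, while the between-model term in the variance keeps structural disagreement in the forecast uncertainty rather than hiding it.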

While model structural error is accommodated via an ensemble across models, other sources of uncertainty are propagated into forecasts by running a model dozens to thousands of times, with each run sampling from the distribution of the model parameters, random effects, inputs/drivers, and initial conditions (Fig. 1B). Additional sampling of stochastic processes (e.g., disturbance) and residual process error is required to generate a posterior predictive distribution and predictive interval. However, it is challenging to construct, calibrate, and validate models of rare events due to limited observational and experimental data (54). Furthermore, some rare events and disturbances are too infrequent to warrant inclusion in a model’s structure; these are best handled by running rare-event scenarios (also known as war games). It is important to remember that forecasts are only as good as the scenarios they consider; the greatest challenge in rare-event forecasting lies in anticipating rare events (55). Indeed, rare events are one of the greatest challenges to ecological forecasting as a whole.
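The ensemble approach to propagating uncertainty can be sketched with a simple Monte Carlo simulation in which every run draws its own parameter, initial condition, and process errors. The exponential growth model, the distributions, and the ensemble size are hypothetical choices for illustration; rare events and driver uncertainty are not represented.

```python
import random


def simulate_ensemble(n_runs, horizon, rng):
    """Run the model n_runs times, sampling all uncertain quantities each run."""
    runs = []
    for _ in range(n_runs):
        r = rng.gauss(0.10, 0.02)  # parameter uncertainty (growth rate)
        x = rng.gauss(50.0, 5.0)   # initial-condition uncertainty
        path = []
        for _ in range(horizon):
            x = x * (1.0 + r) + rng.gauss(0.0, 2.0)  # residual process error
            path.append(x)
        runs.append(path)
    return runs


def predictive_interval(runs, step, lower=0.025, upper=0.975):
    """Empirical quantiles of the ensemble at one lead time."""
    values = sorted(run[step] for run in runs)
    n = len(values)
    return values[int(lower * (n - 1))], values[int(upper * (n - 1))]


rng = random.Random(1)
runs = simulate_ensemble(n_runs=5000, horizon=5, rng=rng)
intervals = [predictive_interval(runs, step) for step in range(5)]
widths = [high - low for low, high in intervals]
for lead, (low, high) in enumerate(intervals, start=1):
    print(lead, round(low, 1), round(high, 1))
```

The predictive interval widens with lead time because the sampled uncertainties compound through the model. Omitting any one of the sampling lines would narrow the interval and overstate confidence in the forecast.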

Cyberinfrastructure

Achieving the goals in this paper will require ecological forecasts to be transparent, reproducible, and accessible. This is especially important for iterative forecasts, where continuous updates are needed as new data become available. Fortunately, there has been a push on many fronts toward making data and code openly available (56, 57). Others have similarly advocated for following best practices in data structure (e.g., standardized, machine-readable data structures, open licenses) (58, 59), metadata (60), and software development (e.g., documentation, version control, open licenses) (61). These trends make data, models, and code easier to access, understand, and reuse, and will maximize data use, model comparison and competition, and contributions by a diverse range of researchers.

Among these trends, reproducibility is particularly important to iterative forecasting: each component of a forecast should be easy to rerun and produce consistent outcomes. Currently, most ecological analyses are done once and never repeated. However, the distinction between such “one-off” analyses and a fully automated iterative pipeline is not all-or-nothing, but a continuum that involves increasing investments of time, personnel, training, and infrastructure to refine and automate analyses [e.g., National Aeronautics and Space Administration (NASA) Application Readiness Levels]. These costs highlight the advantages of developing shared forecasting tools and cyberinfrastructure that can easily be applied to new problems. Workflows that automate the forecast cycle could be built using a combination of existing best practices, continuous integration, and informatics approaches. Forecast infrastructure could benefit from, and build upon, the experience of existing long-term data programs, many of which have developed streamlined, tested, and well-documented data workflows (62).

Improving forecasts, and evaluating their improvement over time, will also benefit from archiving forecasts themselves (46). First, this allows unbiased assessment of forecasts that were clearly made before evaluation data were collected. Second, it allows comparisons of the accuracy of different forecasting approaches developed by different research groups to the same question. Last, it allows the historical comparison of forecast accuracies to determine whether the field of ecological forecasting (and ecology more broadly) is improving over time. Efforts to archive ecological forecasts could take advantage of existing infrastructure for archiving data, while developing associated metadata to allow forecasts to be readily compared. Forecasting will improve most rapidly if forecasts are openly shared, allowing the entire scientific community to assess the performance of different approaches. By publicly archiving ecological forecasts from early on, we are preparing in advance for future syntheses and community-level learning.

Training, Culture, and Institutions

Training.

The next generation of ecological forecasters will require skills not currently taught at most institutions (28, 63). Forecasting benefits from existing trends toward more sophisticated training in statistics and in best practices in data, coding, and informatics (e.g., Data Carpentry, Software Carpentry). However, the additional skills needed range from high-level theory and concepts (e.g., classifying predictability) (43), to specific tools and techniques in modeling, statistics, and informatics (e.g., iterative statistical methods) (10), to decision support, leadership, and team science collaboration skills (28, 63, 64). Furthermore, both new researchers and experienced forecasters would benefit from interdisciplinary forums to allow ideas, methods, and experiences to be shared, fostering innovation and the application of knowledge to new problems.

Some successful models that have emerged to fill gaps in graduate student skillsets are cross-institution fellowship programs (e.g., GLEON Fellows program) (63) and within-institution interdisciplinary training programs [e.g., National Science Foundation (NSF) Research Training Groups]. The next generation of ecological forecasters would also benefit from graduate and postdoctoral fellowships directed at forecasting or supplemental funding aimed at making existing analyses more updatable and automated. Short courses, held over a 1- to 2-wk period to obtain specific skills, are another model that holds promise, not only in academia but also in applied disciplines and professional societies (e.g., water quality, forestry), which often have continuing education certification requirements. By training practitioners in the latest forecast approaches, such certifications may also be particularly helpful for bridging ecological forecasts from research to operations.

From Research to Operations.

Forecasting is an iterative process that improves over years or decades as additional observations are made. A major long-term challenge then is how to bridge from basic research to sustainable operations. Beyond technical skills, moving from research to operations requires high-level planning and coordination, clear communication of end user requirements, and designs that are flexible and adaptive to accommodate new research capabilities (64). It also requires sustained funding, a challenge that may run up against restrictions placed on research agencies that prohibit funding of “operational” tools and infrastructure. For example, in the United States, moving from research to operations requires a hand-off that crosses federal agencies (e.g., from NSF to US Department of Agriculture, US Forest Service, National Oceanic and Atmospheric Administration) with different organizational values and reward structures, often without a clear mechanism for how to do so. NASA’s Ecological Forecasting program provides one model for moving basic research to operations, requiring a phased funding transition where research is operationalized by an external partner identified at the time of grant submission. Whether required upfront or transitioned post hoc, forecasting would be improved if funding agencies did more to actively support bridges between basic research and organizations with the infrastructure to sustain operational forecasting capabilities (65).

Culture.

In addition to technical training and organizational support, there are important shifts in scientific culture that would encourage greater engagement in iterative forecasting. First, prediction needs to be viewed as an essential part of the scientific method for all of ecology (25). The need for iterative forecasting goes beyond informing decisions; so long as forecasts are tested against observations, they have a central role to play in refining models, producing fundamental insights, and advancing basic science.

Second, prediction often requires a perceptual shift in the types of questions we ask and the designs we use to answer them. For example, rather than asking “does X affect Y,” we need to ask “how much does X affect Y.” A simple shift to a greater use of regression-based experimental designs over ANOVA designs does not require sophisticated statistical tools or advanced training, but would immediately shift the questions being asked, make it easier to build on previous work quantitatively, and provide information more relevant to forecasting (25). More broadly, the modeling and measurement communities should be actively encouraged to work together iteratively from the earliest stages of research projects (66). Overall, a change in our approach to many long-standing problems is likely to generate new insights and research directions.
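As a minimal illustration of the "how much" framing, the sketch below estimates an effect size (a slope) by ordinary least squares from a gradient of treatment levels. The variable names and data values are made up for illustration only.

```python
def least_squares_slope(x, y):
    """Return (slope, intercept) of the ordinary least-squares line y = a + b*x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical gradient design: five treatment levels rather than two groups.
nitrogen = [0.0, 1.0, 2.0, 3.0, 4.0]  # made-up treatment levels
biomass = [1.0, 3.0, 5.0, 7.0, 9.0]   # made-up responses
slope, intercept = least_squares_slope(nitrogen, biomass)
print(slope, intercept)  # 2.0 1.0
```

The slope is a quantity that a later study can reuse directly, for example as prior information or as a parameter in a forecast model, which a significant or nonsignificant group difference does not provide.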

Third, while testing explicit, quantitative predictions has the potential to accelerate basic science, it also comes with a much greater chance of being wrong. Indeed, we should expect that individual predictions will often be wrong, particularly in the early stages of forecasting. While “getting it wrong” is an essential part of the scientific method, it is rarely embraced by the scientific community. Because ecologists are not accustomed to making explicit predictions, fear of being wrong may lead to concerns about impacts on professional reputations, career prospects, competition for funding, or even legal liability. Indeed, research forecasts should be labeled as “experimental” to ensure users are aware that such systems are a work in progress and should be treated with caution. That said, there are already parts of ecology (e.g., ecosystem modeling) where model intercomparisons are a common part of the culture and important for both advancing science and building community (66). Furthermore, forecast performance is not black-and-white—in both research and policy, it is better to be honestly uncertain than overconfidently wrong, which reiterates the importance of including robust uncertainty estimates in forecasts. In a decision context, honestly uncertain ecological forecasts are still better than science not being part of decisions—when correctly communicated, uncertain forecasts provide an important signal for the need to seek alternatives that are precautionary, robust to uncertainty, or adaptive (67).

Decision Support

Because decisions are focused on the future consequences of a present action (68), societal need is a major driver for increased ecological forecasting. To facilitate direct incorporation into a decision context, forecasts need to provide decision-relevant metrics for an ecological variable of interest at the appropriate spatial and temporal scale. Many scientists (and some organizations) think there is a predefined “proficiency threshold” a forecast has to reach (24), and thus some of the reluctance among scientists to provide ecological forecasts may stem from misperceptions about when a forecast is “good enough” to be useful for decision-making. Instead, environmental management decisions should be based on the best available information. If the best available forecast is rigorously validated (69) but highly uncertain, it is important that uncertainty be included in risk management decisions. As long as a forecast provides an appropriate uncertainty characterization, and does better than chance, then decision analysis methods can be used to identify the options that are the most desirable (i.e., maximize utility) given the uncertainties and the decision maker’s goals (5, 70).
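To make the decision-analysis step concrete, the sketch below chooses the option that maximizes expected utility over a forecast ensemble. The harvest-quota setting, the utility function, and all numbers are hypothetical assumptions chosen for illustration; they are not taken from the cited decision-analysis literature.

```python
from statistics import mean


def expected_utility(action, forecast_samples, utility):
    """Average the utility of one action over the forecast ensemble."""
    return mean(utility(action, outcome) for outcome in forecast_samples)


def best_action(actions, forecast_samples, utility):
    """Return the action with the highest expected utility."""
    return max(actions, key=lambda a: expected_utility(a, forecast_samples, utility))


def harvest_utility(quota, stock):
    """Toy utility: reward harvest, heavily penalize exceeding half the stock."""
    overshoot = max(0.0, quota - 0.5 * stock)
    return quota - 10.0 * overshoot


stock_forecast = [80.0, 100.0, 120.0]  # ensemble members (made-up numbers)
quotas = [30.0, 40.0, 50.0, 60.0]      # candidate management options

chosen = best_action(quotas, stock_forecast, harvest_utility)
point_only = best_action(quotas, [100.0], harvest_utility)
print(chosen)      # 40.0
print(point_only)  # 50.0
```

In this toy case the ensemble leads to a more cautious quota than the point forecast alone does, which is one way a highly uncertain forecast can still inform a decision.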

The following sections focus on two key opportunities to simultaneously facilitate the development of iterative forecasts and support decisions. First, the process of developing near-term ecological forecasts (on the scientific side) and using them (on the management side) will require long-term partnerships between researchers and managers to refine and improve both forecasts themselves and how they are used. Second, there is an important opportunity for linking iterative near-term forecasts with adaptive management (Fig. 1) and other inherently iterative policy rules (e.g., annual harvest quotas).

Partnerships.

Rather than a “build it and they will come” model of decision support, making ecological forecasts useful for decision-making requires partnerships. Decision-relevant outputs may best be achieved through coproduction processes, where ecological forecast users (e.g., stakeholders, decision makers, and other scientists) collaborate with forecasting experts to mutually learn and understand the desired outputs for a range of decision contexts, as well as the potential or limitations of current forecasting capabilities (71). However, collaborations between scientists and stakeholders can be challenging because each group approaches a particular problem from a different perspective (72). These challenges can be reduced by employing best practices for interdisciplinary teams to help build trust, establish effective communication and a shared lexicon, and identify and manage conflict (63).

Often it can be useful to engage in “boundary processes” to connect information and people focused on the scientific and policy aspects for a particular topic (35). These can be collaborations with individual science translation experts, who represent the perspectives of multiple stakeholders or an institution, or with boundary organizations, whose organizational role is to help translate relevant scientific information to support decision-making. Partnering with such organizations facilitates engagement within their established and trusted networks, increases the impact of the research more quickly, and provides their stakeholder and decision-making networks with credible forecasts that are relevant for their decisions. The effectiveness of these collaborations can be measured over time as forecasts are actually used and value of information is analyzed to determine its influence on decisions (73). Regardless of the collaborator, effective engagement requires a long-term investment in mutual learning and relationship development to achieve successful science policy translation and facilitate increased use of scientific information (7, 74).

Adaptive Management.

Adaptive management is an iterative process of making better environmental decisions, with better outcomes, by reducing uncertainties over time through monitoring, experimentation, and evaluation (ref. 41, figure 1.1-5). Because decisions are ultimately about the future, predictions of how systems will respond to different management choices provide the most relevant ecological information for decision-making (6). Adaptive management acknowledges that our ability to manage ecological systems is based on imperfect and uncertain predictions. A key part of adaptive management is the collection of monitoring data to assess the outcomes of different management actions. These data should then be used to update our understanding of the system and make new predictions. In this way, an iterative approach to forecasting plays an important role in adaptive management, especially if such forecasts are designed to be societally relevant. Iterative forecasting represents an important opportunity to develop knowledge and experience about the capacity of different management strategies to cope with change and maximize resistance and resilience. The development of near-term ecological forecasts could lead to improved decision analysis frameworks and advances in our understanding of coupled human–natural systems.
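The cycle of predicting, monitoring, and updating can be sketched with a very simple state estimate. The example below assumes a random-walk process model and Gaussian errors, so each update is a precision-weighted average of the forecast and the new observation. This is one convenient textbook choice, not the method of any particular adaptive management program, and the function names and default variances are illustrative assumptions.

```python
def forecast_step(state_mean, state_var, process_var):
    """Project the state forward under a random-walk assumption."""
    return state_mean, state_var + process_var


def update_step(prior_mean, prior_var, obs, obs_var):
    """Combine a forecast and a new observation by precision weighting."""
    gain = prior_var / (prior_var + obs_var)
    post_mean = prior_mean + gain * (obs - prior_mean)
    post_var = (1.0 - gain) * prior_var
    return post_mean, post_var


def run_cycle(state_mean, state_var, observations, process_var=1.0, obs_var=1.0):
    """Alternate forecast and update steps as monitoring data arrive."""
    history = []
    for obs in observations:
        f_mean, f_var = forecast_step(state_mean, state_var, process_var)
        state_mean, state_var = update_step(f_mean, f_var, obs, obs_var)
        history.append((f_mean, f_var, state_mean, state_var))
    return history


# Made-up monitoring data: each tuple holds the forecast and the updated state.
for step in run_cycle(0.0, 1.0, [2.0, 2.5, 3.0]):
    print(step)
```

Each pass through the loop issues a forecast before the observation is used, so the stored forecasts can be compared with the data to track how skill changes over time.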

A Vision Forward

The central message here is that ecologists need to start forecasting immediately. Rather than waiting until forecasts are “good enough,” we need to view these efforts as an iterative learning exercise. The fastest way to improve the skill of our predictions, and the underlying science, is to engage in near-term iterative forecasts so that we can learn from our predictions. Ecological forecasting does not require a top-down agenda. It can happen immediately using existing data, often with only modest reframing of the questions asked and the approaches taken.

Ecological forecasting would benefit from cyberinfrastructure for the automation, public dissemination, and archiving of ecological forecasts, which will allow community advances in two important ways. First, by looking across different types of forecasts, we can take a comparative approach to understanding what affects predictability in ecology. Second, forecasts of the same or similar processes facilitate synthesis and let us use small-scale efforts to work toward upscaling. For example, multiple local forecasts could be synthesized using hierarchical models to later assess their spatial and temporal synchrony, leading to further constraints at the local scale.

Beyond cyberinfrastructure, there are additional practical steps that can be taken to advance the field, take advantage of current capabilities, and create new opportunities. To summarize the recommendations from previous sections, at a minimum we suggest that it is necessary to have the following:

Increased training and fellowship opportunities focused on ecological forecasting.

Shifts in the scientific culture to view prediction as integral to the scientific process.

Increased theoretical focus on predictability and practical methods development for iterative forecasting.

Open and reproducible science, with a particular emphasis on reducing data latency and increasing automation for problems amenable to frequent forecasts.

Increased long-term support for the collection of data and development of forecasts, in particular focused on helping researchers at all career stages to bridge projects from research to operations.

Early engagement with boundary organizations and potential stakeholders in developing ecological forecasts.

Two larger initiatives that would advance iterative forecasting are the establishment of an ecological forecasting synthesis center and a “Decade of Ecological Forecasting” initiative. A forecasting synthesis center is a natural next step for technology, training, theory, and methods development. Such a center could contribute to development in these areas, both directly and indirectly (e.g., through working groups), by synthesizing forecasting concepts, data, tools, and experiences, and by advancing theory, training, and best practices. A forecast center could exist in many forms, such as an independent organization, a university center, an interagency program, or an on-campus agency/university partnership. In any of these configurations, a forecast center could provide the institutional memory and continuity required to allow forecasts to mature and improve through time, and to help transition forecasts from research to operations.

Similarly, initiatives in other research communities, such as the International Biological Program, the three International Polar Years, the International Geophysical Year, and the Decade of Predictions in Ungauged Basins, succeeded in bringing widespread public and scientific attention to topics in need of research and synthesis. The opportunity to initiate a Decade of Ecological Forecasting is both timely and critical.

Conclusions

Iterative near-term forecasts have the potential to make ecology more relevant to society and provide immediate feedback about how we are doing at forecasting different ecological processes. In doing so, they will accelerate progress in these areas of research and lead to conceptual changes in how these problems are viewed and studied.

On longer timescales, efforts to broaden the scope of iterative forecasting will lay the foundation for tackling harder interdisciplinary environmental problems. Progress will come in three major ways: improvements in ecological theory, improvements in tools and methods, and accumulated experience. Key conceptual and methodological questions exist about the spatial and temporal scales of predictability, which ecological processes are predictable, and which sources dominate the uncertainty in ecological forecasts. Scientifically, additional experience is required because we currently lack a sufficient sample of iterative ecological forecasts to be able to look for general patterns. Furthermore, gaining forecasting experience is necessary because the community is currently not ready to respond quickly to the needs of environmental managers.

Engaging actively in near-term forecasts represents an opportunity to advance ecology and maximize its relevance to society during what is undoubtedly a critical juncture in our history. The need for iterative near-term ecological forecasts permeates virtually every subdiscipline in ecology. While there are definitely challenges to address, these should not be viewed as requirements that investigators must meet to start forecasting, but rather as a set of goals that forecasters should strive to achieve. There are broad sets of problems where we can begin to tackle iterative approaches to forecasting using current tools and data. The time to start is now.

Acknowledgments

We thank James S. Clark, Tom Hobbs, Yiqi Luo, and Woody Turner, who also participated in the NEON forecasting workshop and contributed useful ideas and discussion. M.C.D. and K.C.W. were supported by funding from NSF Grants 1638577 and 1638575 and K.C.W. additionally by NSF Grant 1137327. E.P.W. and L.G.L. were supported by the Gordon and Betty Moore Foundation’s Data-Driven Discovery Initiative through Grants GBMF4563 and GBMF4555. A.T.T. was supported by an NSF Postdoctoral Research Fellowship in Biology (DBI-1400370). R.V. and H.W.L. acknowledge support from NSF Grant 1347883. M.B.H. acknowledges support from NSF Grants 1241856 and 1614392. This paper benefited from feedback from John Bradford, Don DeAngelis, and two anonymous reviewers, as well as internal reviews by both NEON and USGS. This paper is a product of the “Operationalizing Ecological Forecasts” workshop funded by the National Ecological Observatory Network (NEON) and hosted by the US Geological Survey (USGS) Powell Center in Fort Collins, Colorado. Any use of trade, firm, or product names is for descriptive purposes only and does not imply endorsement by the US Government.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

References

- 1. Smith MD, Knapp AK, Collins SL. A framework for assessing ecosystem dynamics in response to chronic resource alterations induced by global change. Ecology. 2009;90:3279–3289. doi: 10.1890/08-1815.1.

- 2. Milly PC, et al. Climate change. Stationarity is dead: Whither water management? Science. 2008;319:573–574. doi: 10.1126/science.1151915.

- 3. Craig R. “Stationarity is dead”—long live transformation: Five principles for climate change adaptation law. Harvard Environ Law. 2010;34:9–75.

- 4. Clark JS, et al. Ecological forecasts: An emerging imperative. Science. 2001;293:657–660. doi: 10.1126/science.293.5530.657.

- 5. Gregory R, et al. Structured Decision Making: A Practical Guide to Environmental Management Choices. Wiley; Hoboken, NJ: 2012.

- 6. Williams PJ, Hooten MB. Combining statistical inference and decisions in ecology. Ecol Appl. 2016;26:1930–1942. doi: 10.1890/15-1593.1.

- 7. Pouyat R, et al. The role and challenge of federal agencies in the application of scientific knowledge: Acid deposition policy and forest management as examples. Front Ecol Environ. 2010;8:322–328.

- 8. Hobday A, Claire M, Eveson J, Hartog J. Seasonal forecasting for decision support in marine fisheries and aquaculture. Fish Oceanogr. 2016;25:45–56.

- 9. Dietze M. Ecological Forecasting. Princeton Univ Press; Princeton: 2017.

- 10. Collins M, et al. Long-term climate change: Projections, commitments and irreversibility. In: Stocker T, et al., editors. Climate Change 2013: The Physical Science Basis. Cambridge Univ Press; Cambridge, UK: 2013. pp. 1029–1136.

- 11. Loescher H, Kelly E, Lea R. National ecological observatory network: Beginnings, programmatic and scientific challenges, and ecological forecasting. In: Chabbi A, Loescher H, editors. Terrestrial Ecosystem Research Infrastructures: Challenges, New developments and Perspectives. CRC Press; Boca Raton, FL: 2017. pp. 27–52.

- 12. Kahneman D. Thinking, Fast and Slow. Farrar, Straus and Giroux; New York: 2013.

- 13. Tetlock P, Gardner D. Superforecasting: The Art and Science of Prediction. Crown; New York: 2015.

- 14. Chen Y, et al. Forecasting fire season severity in South America using sea surface temperature anomalies. Science. 2011;334:787–791. doi: 10.1126/science.1209472.

- 15. Shaman J, Karspeck A. Forecasting seasonal outbreaks of influenza. Proc Natl Acad Sci USA. 2012;109:20425–20430. doi: 10.1073/pnas.1208772109.

- 16. Stumpf RP, et al. Skill assessment for an operational algal bloom forecast system. J Mar Syst. 2009;76:151–161. doi: 10.1016/j.jmarsys.2008.05.016.

- 17. Hobbs N, et al. State-space modeling to support management of brucellosis in the Yellowstone bison population. Ecol Monogr. 2015;85:525–556.

- 18. US Fish and Wildlife Service 2016 Adaptive Harvest Management: 2017 Hunting Season (US Department of Interior, Washington, DC). Available at www.fws.gov/birds/management/adaptive-harvest-management/publications-and-reports.php. Accessed August 30, 2017.

- 19. NOAA Fisheries 2016 Status of Stocks 2016: Annual Report to Congress on the Status of U.S. Fisheries (NOAA Fisheries, Washington, DC). Available at https://www.fisheries.noaa.gov/sfa/fisheries_eco/status_of_fisheries/status_updates.html. Accessed August 30, 2017.

- 20. Kalnay E. Atmospheric Modeling, Data Assimilation and Predictability. Cambridge Univ Press; Cambridge, UK: 2002.

- 21. Bauer P, Thorpe A, Brunet G. The quiet revolution of numerical weather prediction. Nature. 2015;525:47–55. doi: 10.1038/nature14956.

- 22. Shuman F. History of numerical weather prediction at the National Meteorological Center. Weather Forecast. 1989;4:286–296.

- 23. Murphy A, Winkler R. Probability forecasting in meteorology. J Am Stat Assoc. 1984;79:489–500.

- 24. Petchey OL, et al. The ecological forecast horizon, and examples of its uses and determinants. Ecol Lett. 2015;18:597–611. doi: 10.1111/ele.12443.

- 25. Houlahan J, McKinney S, Anderson T, McGill B. The priority of prediction in ecological understanding. Oikos. 2017;126:1–7.

- 26. LaDeau S, Han B, Rosi-Marshall E, Weathers K. The next decade of big data in ecosystem science. Ecosystems (N Y) 2017;20:274–283.

- 27. Wulder M, Masek J, Cohen W, Loveland T, Woodcock C. Opening the archive: How free data has enabled the science and monitoring promise of Landsat. Remote Sens Environ. 2012;122:2–10.

- 28. Weathers K, et al. Frontiers in ecosystem ecology from a community perspective: The future is boundless and bright. Ecosystems (N Y) 2016;19:753–770.

- 29. Stark S, et al. Toward accounting for ecoclimate teleconnections: Intra- and inter-continental consequences of altered energy balance after vegetation change. Landsc Ecol. 2015;31:181–194.

- 30. Seber G, Thompson S. Handbook of Statistics. Elsevier; Amsterdam: 1994. Environmental adaptive sampling; pp. 201–220.

- 31. Krause S, Lewandowski J, Dahm C, Tockner K. Frontiers in real-time ecohydrology—a paradigm shift in understanding complex environmental systems. Ecohydrology. 2015;8:529–537.

- 32. Hooten M, Wikle C, Sheriff S, Rushin J. Optimal spatio-temporal hybrid sampling designs for ecological monitoring. J Veg Sci. 2009;20:639–649.

- 33. Dietze M, et al. A quantitative assessment of a terrestrial biosphere model’s data needs across North American biomes. J Geophys Res Biogeosci. 2014;119:286–300.

- 34. LeBauer D, et al. Facilitating feedbacks between field measurements and ecosystem models. Ecol Monogr. 2013;83:133–154.

- 35. Moss R, et al. Decision support: Connecting science, risk perception, and decisions. In: Melillo J, Richmond T, Yohe G, editors. Climate Change Impacts in the United States: The Third National Climate Assessment. Chap 26. US Global Change Research Program; Washington, DC: 2014. pp. 620–647.

- 36. Keisler J, Collier Z, Chu E, Sinatra N, Linkov I. Value of information analysis: The state of application. Environ Syst Decis. 2014;34:3–23.

- 37. Vargas R, et al. Enhancing interoperability to facilitate implementation of REDD+: Case study of Mexico. Carbon Manag. 2017;1:57–65.

- 38. Meinson P, et al. Continuous and high-frequency measurements in limnology: History, applications, and future challenges. Environ Rev. 2016;24:52–62.

- 39. Mills JA, et al. Archiving primary data: Solutions for long-term studies. Trends Ecol Evol. 2015;30:581–589. doi: 10.1016/j.tree.2015.07.006.

- 40. Larsen L, et al. Appropriate complexity landscape modeling. Earth Sci Rev. 2016;160:111–130.

- 41. Walters C. Adaptive Management of Renewable Resources. Macmillan; New York: 1986.

- 42. Pilkey O, Pilkey-Jarvis L. Useless Arithmetic: Why Environmental Scientists Can’t Predict the Future. Columbia Univ Press; New York: 2007.

- 43. Dietze MC. Prediction in ecology: A first-principles framework. Ecol Appl. 2017;27:2048–2060. doi: 10.1002/eap.1589.

- 44. Hooten M, Hobbs N. A guide to Bayesian model selection for ecologists. Ecol Monogr. 2015;85:3–28.

- 45. Luo Y, et al. A framework for benchmarking land models. Biogeosciences. 2012;9:3857–3874.

- 46. Harris D, Taylor S, White E. 2017. Forecasting biodiversity in breeding birds using best practices. bioRxiv:191130.

- 47. Whol H. 2017. Historical Range of Variability. Oxford Bibliographies (Oxford Univ Press, New York)

- 48. Ibáñez I, et al. Integrated assessment of biological invasions. Ecol Appl. 2014;24:25–37. doi: 10.1890/13-0776.1.

- 49. Morgan MG. Use (and abuse) of expert elicitation in support of decision making for public policy. Proc Natl Acad Sci USA. 2014;111:7176–7184. doi: 10.1073/pnas.1319946111.

- 50. Berliner L. Hierarchical Bayesian time series models. In: Hanson K, Silver R, editors. Maximum Entropy and Bayesian Methods. Kluwer Academic; Dordrecht, The Netherlands: 1996. pp. 15–22.

- 51. Clark J. Why environmental scientists are becoming Bayesians. Ecol Lett. 2005;8:2–14.

- 52. Weigel A, Liniger M, Appenzeller C. Can multi-model combination really enhance the prediction skill of probabilistic ensemble forecasts? Q J R Meteorol Soc. 2007;133:937–948.

- 53. Hoeting J, Madigan D, Raftery A, Volinsky C. Bayesian model averaging: A tutorial. Stat Sci. 1999;14:382–417.

- 54. Liu S, et al. Simulating the impacts of disturbances on forest carbon cycling in North America: Processes, data, models, and challenges. J Geophys Res Biogeosci. 2011;116:G00K08.

- 55. Taleb N. The Black Swan: The Impact of the Highly Improbable. Random House; New York: 2007.

- 56. Whitlock MC, McPeek MA, Rausher MD, Rieseberg L, Moore AJ. Data archiving. Am Nat. 2010;175:145–146. doi: 10.1086/650340.

- 57. Mislan KA, Heer JM, White EP. Elevating the status of code in ecology. Trends Ecol Evol. 2016;31:4–7. doi: 10.1016/j.tree.2015.11.006.

- 58. Borer E, Seabloom E, Jones M, Schildhauer M. Some simple guidelines for effective data management. Bull Ecol Soc Am. 2009;90:205–214.

- 59. White E, et al. Nine simple ways to make it easier to (re)use your data. Ideas Ecol Evol. 2013;6:1–10.

- 60. Strasser C, Cook R, Michener W, Budden A. 2012 Primer on data management: What you always wanted to know. Available at https://escholarship.org/uc/item/7tf5q7n3. Accessed February 27, 2017.

- 61. Wilson G, et al. Best practices for scientific computing. PLoS Biol. 2014;12:e1001745. doi: 10.1371/journal.pbio.1001745.

- 62. Michener W. Meta-information concepts for ecological data management. Ecol Inform. 2006;1:3–7.

- 63. Read E, et al. Building the team for team science. Ecosphere. 2016;7:e01291.

- 64. Uriarte M, Ewing H, Eviner V, Weathers K. Scientific culture, diversity and society: Suggestions for the development and adoption of a broader value system in science. Bioscience. 2007;57:71–78.

- 65. National Research Council. Satellite Observations of the Earth’s Environment: Accelerating the Transition of Research to Operations. The National Academies Press; Washington, DC: 2003.

- 66. Medlyn B, et al. Using ecosystem experiments to improve vegetation models: Lessons learnt from the free-air CO2 enrichment model-data synthesis. Nat Clim Chang. 2015;5:528–534.

- 67. Bradshaw G, Borchers J. Uncertainty as information: Narrowing the science-policy gap. Conserv Ecol. 2000;4:7.

- 68. Janetos A, Kenney M. Developing better indicators to track climate impacts. Front Ecol Environ. 2015;13:403.

- 69. Nature Editorial: Validation required. Nature. 2010;463:849. doi: 10.1038/463849a.

- 70. Clemen R, Reilly T. Making Hard Decisions: Introduction to Decision Analysis. Duxbury Press; Belmont, CA: 2005.

- 71. Meadow A, et al. Moving toward the deliberate coproduction of climate science knowledge. Weather Clim Soc. 2015;7:179–191.

- 72. Eigenbrode S, et al. Employing philosophical dialogue in collaborative science. Bioscience. 2007;57:55–64.

- 73. Runge M, Converse S, Lyons J. Which uncertainty? Using expert elicitation and expected value of information to design an adaptive program. Biol Conserv. 2011;144:1214–1223.

- 74. Driscoll C, Lambert K, Weathers KC. Integrating science and policy: A case study of the Hubbard Brook Research Foundation Science Links Program. Bioscience. 2011;61:791–801.