Significance

The human cortex operates in a state of restless activity, the meaning and functionality of which are still not understood. A fascinating, though controversial, hypothesis, partially backed by empirical evidence, suggests that the cortex might work at the edge of a phase transition, from which important functional advantages stem. However, the nature of such a transition remains elusive. Here, we adopt ideas from the physics of phase transitions to construct a general (Landau–Ginzburg) theory of cortical networks, allowing us to analyze their possible collective phases and phase transitions. We conclude that the empirically reported scale-invariant avalanches could come about if the cortex operated at the edge of a synchronization phase transition, at which neuronal avalanches and incipient oscillations coexist.

Keywords: cortical dynamics, neuronal avalanches, criticality, neural oscillations, synchronization

Abstract

Understanding the origin, nature, and functional significance of complex patterns of neural activity, as recorded by diverse electrophysiological and neuroimaging techniques, is a central challenge in neuroscience. Such patterns include collective oscillations emerging out of neural synchronization as well as highly heterogeneous outbursts of activity interspersed with periods of quiescence, called “neuronal avalanches.” Much debate has been generated about the possible scale invariance or criticality of such avalanches and their relevance for brain function. Aiming to shed light on this, here we analyze the large-scale collective properties of the cortex by using a mesoscopic approach following the principle of parsimony of Landau–Ginzburg. Our model is similar to that of Wilson–Cowan for neural dynamics but, crucially, includes stochasticity and space; synaptic plasticity and inhibition are considered as possible regulatory mechanisms. Detailed analyses uncover a phase diagram including down-state, synchronous, asynchronous, and up-state phases and reveal that empirical findings for neuronal avalanches are consistently reproduced by tuning our model to the edge of synchronization. This reveals that the putative criticality of cortical dynamics does not correspond to a quiescent-to-active phase transition, as usually assumed in theoretical approaches, but to a synchronization phase transition, at which incipient oscillations and scale-free avalanches coexist. Furthermore, our model also accounts for up and down states as they occur (e.g., during deep sleep). This approach constitutes a framework to rationalize the possible collective phases and phase transitions of cortical networks in simple terms, thus helping to shed light on basic aspects of brain functioning from a very broad perspective.

The cerebral cortex exhibits spontaneous activity even in the absence of any task or external stimuli (1–3). A salient aspect of this so-called resting-state dynamics, as revealed by in vivo and in vitro measurements, is that it exhibits outbursts of electrochemical activity characterized by brief episodes of coherence (during which many neurons fire within a narrow time window) interspersed with periods of relative quiescence, giving rise to collective oscillatory rhythms (4, 5). Shedding light on the origin, nature, and functional meaning of such intricate dynamics is a fundamental challenge in neuroscience (6).

On experimentally enhancing the spatiotemporal resolution of activity recordings, Beggs and Plenz (7) made the remarkable finding that synchronized outbursts of neural activity could actually be decomposed into complex spatiotemporal patterns, henceforth named “neuronal avalanches.” The sizes and durations of such avalanches were reported to be distributed as power laws [i.e., to be organized in a scale-free way, limited only by network size (7)]. Furthermore, they obey finite-size scaling (8), a trademark of scale invariance (9), and the corresponding exponents are compatible with those of an unbiased branching process (10).

Scale-free avalanches of neuronal activity have been consistently reported to occur across neural tissues, preparation types, experimental techniques, scales, and species (11–18). This has been taken as empirical evidence backing the criticality hypothesis (i.e., the conjecture that the awake brain might extract essential functional advantages—including maximal sensitivity to stimuli, large dynamical repertoires, optimal computational capabilities, etc.—from operating close to a critical point, separating two different phases) (19–22).

To make further progress, it is of crucial importance to clarify the nature of the phase transition marked by such an alleged critical point. It is usually assumed that it corresponds to the threshold at which neural activity propagates marginally in the network [i.e., to the critical point of a quiescent-to-active phase transition (7)], justifying the emergence of branching process exponents (23, 24). However, several experimental investigations found evidence that scale-free avalanches emerge in concomitance with collective oscillations, suggesting the presence of a synchronization phase transition (25, 26).

From the theoretical side, on the one hand, very interesting models accounting for the self-organization of neural networks to the neighborhood of the critical point of a quiescent-to-active phase transition have been proposed (27–30). These approaches rely on diverse regulatory mechanisms (31), such as synaptic plasticity (32), spike-timing-dependent plasticity (33), excitability adaptation, etc., to achieve network self-organization to the vicinity of a critical point. These models have in common that they rely on an extremely large separation of dynamical timescales [as in models of self-organized criticality* (36, 37)], which might not be a realistic assumption (27, 30, 38, 39). Some other models are more realistic from a neurophysiological viewpoint (17, 29), but they give rise to scale-free avalanches if and only if causal information, which is available in computational models but not accessible in experiments (40), is considered. Thus, in our opinion, a sound theoretical model justifying the empirical observation of putative criticality is still missing.

On the other hand, from the synchronization viewpoint, well-known simple models of networks of excitatory and inhibitory spiking neurons exhibit differentiated synchronous (oscillatory) and asynchronous phases, with a synchronization phase transition in between (41–44). However, avalanches do not usually appear (or are not searched for) in such modeling approaches (18, 45, 46).

In addition, during deep sleep and under anesthesia, the cortical state has long been known to exhibit so-called “up and down” transitions between states of high and low neural activity, respectively (47, 48), which clearly deviate from the possible criticality of the awake brain and have been modeled on their own (29, 49, 50). Thus, it would be highly desirable to design theoretical models that describe, within a common framework, the possibility of criticality, oscillations, and up–down transitions.

Our aim here is to clarify the nature of the phases and phase transitions of dynamical network models of the cortex by constructing a general unifying theory based on minimal assumptions and allowing us, in particular, to elucidate what the nature of the alleged criticality is.

To construct such a theory, we follow the strategy pioneered by Landau and Ginzburg. Landau proposed a simple approach to the analysis of phases of matter and the phase transitions that they experience. It consists of a parsimonious, coarse-grained, and deterministic description of states of matter in which, relying on the idea of universality, only relevant ingredients (such as symmetries and conservation laws) need to be taken into account and most microscopic details can be safely neglected (9, 51). Ginzburg went a step further by realizing that fluctuations are an essential ingredient to be included in any sound theory of phase transitions, especially in low-dimensional systems. The resulting Landau–Ginzburg theory, including fluctuations and spatial dependence, is regarded as a metamodel of phase transitions and constitutes a firm ground on top of which the standard theory of phases of matter rests (9). Similar coarse-grained theories are nowadays used in interdisciplinary contexts, such as collective motion (52), population dynamics (53), and neuroscience (54–56), where diverse collective phases stem from the interactions among many elementary constituents.

In what follows, we propose and analyze a Landau–Ginzburg theory for cortical neural networks, which can be seen as a variant of the well-known Wilson–Cowan model that crucially includes stochasticity and spatial dependence, allowing us to shed light, from a very general perspective, on the collective phases and phase transitions that dynamical cortical networks can harbor. Using analytical and, mostly, computational techniques, we show that our theory explains the emergence of scale-free avalanches as episodes of marginal and transient synchronization in the presence of a background of ongoing irregular activity, reconciling the oscillatory behavior of cortical networks with the presence of scale-free avalanches. Last but not least, our approach also allows for a unification of existing models describing diverse specific aspects of the cortical dynamics, such as up and down states and up and down transitions, within a common mathematical framework and is amenable to future theoretical (e.g., renormalization group) analyses.

Model and Results

We construct a mesoscopic description of neuronal activity, where the building blocks are not single neurons but local neural populations. These can be interpreted as small sections of neural tissue (57, 58) consisting of a few thousand cells (far from the large-number limit) that can be described by a few variables. Although this effective description is constructed here on phenomenological grounds, more formal mathematical derivations of similar equations from microscopic models exist in the literature (59). In what follows, (i) we model the neural activity at a single mesoscopic “unit,” (ii) we analyze its deterministic behavior as a function of parameter values, and later, (iii) we study the collective dynamics of many coupled units.

Single-Unit Model.

At each single unit, we consider a dynamical model in which the excitatory activity, $\rho$, obeys a Wilson–Cowan equation (60) (that is, following the Landau approach, we truncate it at third order in a Taylor series expansion†):

$$\dot{\rho}(t) = (-a + R)\,\rho + b\,\rho^{2} - \rho^{3} + h, \qquad [1]$$

where $a$ controls the spontaneous decay of activity, which is partially compensated for by the generation of additional activity at a rate proportional to the amount of available synaptic resources, $R$. The quadratic term, with coefficient $b$, controls nonlinear integration effects.‡ Finally, the cubic term imposes a saturation level for the activity, preventing unbounded growth, and $h$ is an external driving field.

A second equation describes the dynamics of the available synaptic resources, $R$, through the combined effect of synaptic depression and synaptic recovery, as encoded in the celebrated model of Tsodyks and Markram (61) for synaptic plasticity (32):

$$\dot{R}(t) = \frac{1}{\tau_R}\left(\xi - R\right) - \frac{1}{\tau_D}\,R\,\rho, \qquad [2]$$

where $\tau_R$ ($\tau_D$) is the characteristic recovery (depletion) time and $\xi$ is the baseline level of nondepleted synaptic resources. Importantly, we have also considered variants of this model that avoid truncating the power series expansion or that include an inhibitory population as the chief regulatory mechanism: either of these extensions leads to essentially the same phenomenology and phases as described in what follows, supporting the robustness of the forthcoming results (SI Appendix).
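As a minimal sketch of the single-unit dynamics, the following Python code integrates Eqs. 1 and 2, i.e., $\dot\rho = (-a+R)\rho + b\rho^2 - \rho^3 + h$ and $\dot R = (\xi - R)/\tau_R - R\rho/\tau_D$, with a forward Euler scheme. The function names and all numerical parameter values are hypothetical placeholders chosen for illustration, not the values used in the paper.

```python
import numpy as np


def unit_rhs(rho, R, xi, a, b, tau_R, tau_D, h):
    """Right-hand sides of Eq. 1 (activity) and Eq. 2 (synaptic resources)."""
    drho = (-a + R) * rho + b * rho**2 - rho**3 + h
    dR = (xi - R) / tau_R - R * rho / tau_D
    return drho, dR


def integrate_unit(rho0, R0, xi, t_max, dt=0.01,
                   a=1.0, b=1.5, tau_R=10.0, tau_D=1.0, h=0.0):
    """Forward-Euler integration; defaults are illustrative placeholders."""
    n_steps = int(round(t_max / dt))
    rho = np.empty(n_steps + 1)
    R = np.empty(n_steps + 1)
    rho[0], R[0] = rho0, R0
    for k in range(n_steps):
        drho, dR = unit_rhs(rho[k], R[k], xi, a, b, tau_R, tau_D, h)
        rho[k + 1] = rho[k] + dt * drho
        R[k + 1] = R[k] + dt * dR
    return rho, R


# Small baseline xi (below a): relaxation toward the down state.
rho, R = integrate_unit(rho0=0.01, R0=0.5, xi=0.5, t_max=100.0)
print(rho[-1], R[-1])
```

With $\xi = 0.5 < a = 1$ and no driving field, the activity decays toward zero while the resources relax back to $\xi$, which is the down state discussed next. Forward Euler is used only for transparency; a higher-order integrator would be preferable for quantitative work.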

Mean Field Analysis.

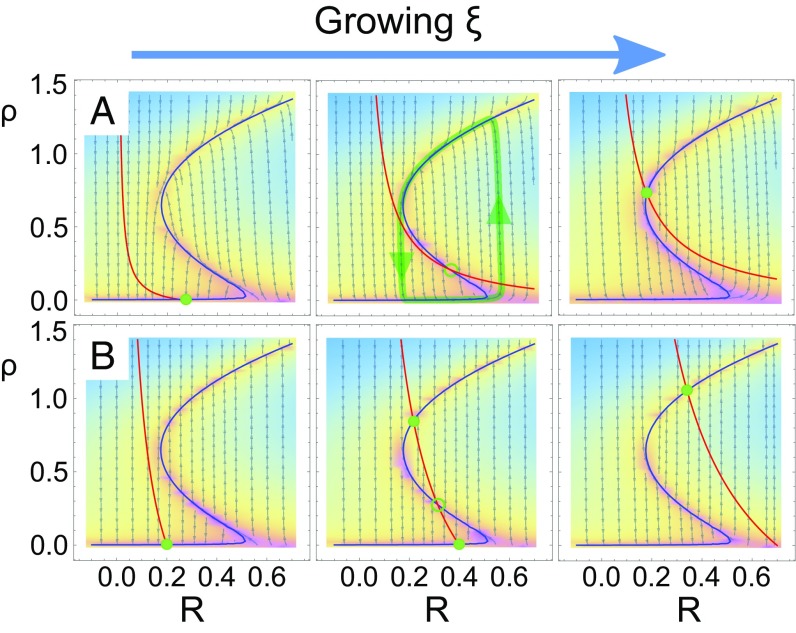

Here, we analyze, both analytically and computationally, the dynamics of a single unit as given by Eqs. 1 and 2. We determine the fixed points (i.e., the possible steady states at which the system can settle) as a function of the baseline level of synaptic resources, $\xi$, which plays the role of a control parameter (all other parameters are kept fixed to standard nonspecific values, as summarized in Fig. 1). For small values of $\xi$, the system falls into a quiescent or down state with $\rho^* \approx 0$ and $R^* \approx \xi$.§ Instead, for large values of $\xi$, there is an active or up state with self-sustained spontaneous activity, $\rho^* > 0$, and depleted resources, $R^* < \xi$. In between these two limiting phases, two alternative scenarios (illustrated in Fig. 1 and summarized in the phase diagram in SI Appendix) can appear, depending on the timescales $\tau_R$ and $\tau_D$.

i) Case A. A stable limit cycle (corresponding to an unstable fixed point with complex eigenvalues) emerges for intermediate values of $\xi$ (in between two Hopf bifurcations), as illustrated in Fig. 1A. This Hopf bifurcation scenario has been extensively discussed in the literature (62), and it underlies the emergence of oscillations in neural circuits.

ii) Case B. An intermediate regime of bistability with three fixed points is found for intermediate values of $\xi$ (in between two saddle-node bifurcations): the up and down ones as well as an unstable fixed point in between (as illustrated in Fig. 1B). This saddle-node scenario is the relevant one in models describing transitions between up (active) and down (quiescent) states (29, 49, 63).
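The fixed-point analysis above can be sketched in a few lines of Python. Setting $\dot R = 0$ in Eq. 2 gives $R^* = \xi/(1 + k\rho^*)$ with $k = \tau_R/\tau_D$; substituting into $\dot\rho = 0$ (Eq. 1) and multiplying by $(1 + k\rho)$ yields a quartic polynomial in $\rho^*$. Stability then follows from the eigenvalues of the Jacobian. The helper names and the parameter values are hypothetical, chosen only to illustrate the procedure.

```python
import numpy as np


def fixed_points(xi, a, b, tau_R, tau_D, h):
    """Non-negative fixed points (rho*, R*) of Eqs. 1 and 2.

    With k = tau_R / tau_D, dR/dt = 0 gives R* = xi / (1 + k rho*); inserting it
    into drho/dt = 0 and multiplying by (1 + k rho) gives a quartic in rho*.
    """
    k = tau_R / tau_D
    coeffs = [-k, b * k - 1.0, b - a * k, xi - a + h * k, h]
    points = []
    for root in np.roots(coeffs):
        if abs(root.imag) < 1e-9 and root.real >= -1e-12:  # real, non-negative
            rho = max(root.real, 0.0)
            points.append((rho, xi / (1.0 + k * rho)))
    return sorted(points)


def jacobian(rho, R, a, b, tau_R, tau_D):
    """Jacobian of (drho/dt, dR/dt) evaluated at (rho, R)."""
    return np.array([
        [-a + R + 2.0 * b * rho - 3.0 * rho**2, rho],
        [-R / tau_D, -1.0 / tau_R - rho / tau_D],
    ])


def classify(rho, R, a, b, tau_R, tau_D):
    """Return (is_stable, has_complex_eigenvalues) for a fixed point."""
    eig = np.linalg.eigvals(jacobian(rho, R, a, b, tau_R, tau_D))
    return bool(np.all(eig.real < 0)), bool(np.any(np.abs(eig.imag) > 1e-12))


params = dict(a=1.0, b=1.5, tau_R=10.0, tau_D=1.0)  # hypothetical values
for xi in (0.5, 5.0):
    for rho, R in fixed_points(xi, h=0.0, **params):
        print(xi, round(rho, 4), round(R, 4), classify(rho, R, **params))
```

With these placeholder values, $\xi = 0.5$ yields only a stable down state, whereas $\xi = 5$ yields an unstable down state and a stable up state whose eigenvalues are complex (a damped focus). Scanning $\xi$ and tracking where a complex pair crosses the imaginary axis (Hopf) or where the number of fixed points changes (saddle-node) is one way to locate the bifurcations of cases A and B.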

Fig. 1.

Phase portraits and nullclines for the (deterministic) dynamics (Eqs. 1 and 2). Nullclines are colored in blue ($\dot{\rho} = 0$) and red ($\dot{R} = 0$), respectively; fixed points, at which nullclines intersect, are highlighted by green (open) circles for stable (unstable) fixed points. The background color code (shifting from blue to purple) represents the intensity of the vector field $(\dot{\rho}, \dot{R})$, whose local directions are represented by small gray arrows. A trajectory illustrating a limit cycle is shown in green in A. The system exhibits either (A) an oscillatory regime or (B) a region of bistability in between a down (Left) state and an up (Right) state. It is possible to shift from case A to case B and vice versa by changing just one parameter [e.g., the timescale of resource depletion, $\tau_D$ ( and for cases A and B, respectively)]. Other parameter values: . The control parameters in Left, Center, and Right are , respectively, in A and , respectively, in B.

Two remarks are in order. First, one can shift from one scenario to the other just by changing one parameter (e.g., the synaptic depletion timescale $\tau_D$).¶ Second, and very importantly, neither of these two scenarios exhibits a continuous transition (transcritical bifurcation) separating the up/active from the down/quiescent regimes. Thus, at this single-unit/deterministic level, there is no precursor of a critical point for marginal propagation of activity.

Stochastic Network Model.

We now introduce stochastic and spatial effects in the simplest possible way. For this, we consider a network of $N$ nodes coupled following a given connection pattern, as described below. Each network node represents a mesoscopic region of neural tissue or unit as described above. On top of this deterministic dynamics, we consider that each unit (describing a finite population) is affected by intrinsic fluctuations (55, 59, 64). More specifically, Eq. 1 is complemented with an additional term, $\sigma\sqrt{\rho}\,\eta(t)$, which includes a zero-mean, unit-variance Gaussian noise $\eta(t)$ and a density-dependent amplitude $\sigma\sqrt{\rho}$# [i.e., a multiplicative noise (65)].

At macroscopic scales, the cortex can be treated as a 2D sheet consisting mostly of short-range connections (66).‖ Although long-range connections are also known to exist and small world effects have been identified in local cortical regions (68), here we consider a 2D square lattice of $N$ mesoscopic units as the simplest way to embed our model into space. Afterward, we explore how our main results are affected by more realistic network architectures, including additional layers of complexity such as long-range connections and spatial heterogeneity.

Following the parsimonious Landau–Ginzburg approach adopted here, the coupling between neighboring units is described up to leading order by a diffusion term. This type of diffusive coupling between neighboring mesoscopic units stems from electrical synapses (57, 69), has some experimental backing (70), and has been analytically derived starting from models of spiking neurons (54).** Thus, finally, the resulting set of coupled stochastic equations is

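$$
\begin{aligned}
\dot{\rho}_i(t) &= (-a + R_i)\,\rho_i + b\,\rho_i^{2} - \rho_i^{3} + h + D\,\nabla^{2}\rho_i + \sigma\sqrt{\rho_i}\,\eta_i(t),\\
\dot{R}_i(t) &= \frac{1}{\tau_R}\left(\xi - R_i\right) - \frac{1}{\tau_D}\,R_i\,\rho_i,
\end{aligned}
\qquad [3]
$$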

where, for simplicity, some time dependences have been omitted; $\rho_i$ and $R_i$ are the activity and resources at a given node $i$ (with $i = 1, \dots, N$) and time $t$, respectively. The term $D\nabla^2\rho_i$ describes the diffusive coupling of unit $i$ with its nearest neighbors, with (diffusion) constant $D$. The physical scales of the system are controlled by the values of the parameters and ; however, given that, as illustrated in SI Appendix, results do not change qualitatively on varying parameter values (as long as they are finite and nonvanishing), here we take for the sake of simplicity.
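On the square lattice, a natural discretization of the diffusive coupling (assumed here, with unit lattice spacing and $\mathrm{nn}(i)$ denoting the four nearest neighbors of unit $i$, for diffusion constant $D$) is

$$
D\,\nabla^{2}\rho_i \;\longrightarrow\; D \sum_{j \in \mathrm{nn}(i)} \left(\rho_j - \rho_i\right).
$$

Written this way, the coupling vanishes for a spatially uniform activity field and only redistributes activity between neighbors: with periodic boundaries, the contributions of each pair of neighbors cancel when summed over all units.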

Eq. 3 constitutes the basis of our theory. In principle, this set of equations is amenable to theoretical analyses, possibly including renormalization group ones (9). However, here we restrict ourselves to computational studies aimed at scrutinizing the basic phenomenology, leaving more formal analyses for the future. In particular, we resort to numerical integration of the stochastic equations (Eq. 3), which is feasible thanks to the efficient scheme developed in ref. 71 to deal with multiplicative noise. We consider as an integration time step and keep, as above, all parameters fixed, except for the baseline level of synaptic resources, $\xi$, which works as a control parameter.
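A minimal sketch of one integration step of Eq. 3 on an $L \times L$ lattice with periodic boundaries follows. For simplicity, it uses a plain Euler–Maruyama update with negative activities clipped to zero; this is a cruder substitute for, not an implementation of, the scheme of ref. 71, which is designed to handle the $\sqrt{\rho}$ noise near $\rho = 0$ accurately. Function names and default parameter values are hypothetical placeholders.

```python
import numpy as np


def laplacian(field):
    """Discrete Laplacian (unit lattice spacing, periodic boundaries)."""
    return (np.roll(field, 1, axis=0) + np.roll(field, -1, axis=0)
            + np.roll(field, 1, axis=1) + np.roll(field, -1, axis=1)
            - 4.0 * field)


def em_step(rho, R, xi, dt, rng, a=1.0, b=1.5, tau_R=1e3, tau_D=1e2,
            h=1e-7, D=1.0, sigma=1.0):
    """One Euler-Maruyama step of Eq. 3 (defaults are placeholder values)."""
    drift = (-a + R) * rho + b * rho**2 - rho**3 + h + D * laplacian(rho)
    # Multiplicative noise: amplitude sigma * sqrt(rho), Wiener increment ~ sqrt(dt).
    noise = sigma * np.sqrt(rho * dt) * rng.standard_normal(rho.shape)
    rho_new = np.maximum(rho + dt * drift + noise, 0.0)  # keep activity >= 0
    R_new = R + dt * ((xi - R) / tau_R - R * rho / tau_D)
    return rho_new, R_new


rng = np.random.default_rng(0)
L = 32
rho = np.full((L, L), 0.01)
R = np.full((L, L), 2.0)
for _ in range(1000):
    rho, R = em_step(rho, R, xi=2.0, dt=0.01, rng=rng)
print(rho.mean(), R.mean())
```

Clipping at zero is the simplest way to keep $\sqrt{\rho}$ well defined, but it biases the dynamics near the quiescent state, which is precisely why dedicated schemes such as the one cited above are preferable for production runs.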

Phases and Phase Transitions: Case A.

We start by analyzing parameter sets within case A above and study the possible phases that emerge as $\xi$ is varied. These are illustrated in Fig. 2, which shows characteristic snapshots and overall activity time series as well as raster plots. For a more vivid visualization, we have also generated videos of the activity dynamics in the different phases (Movie S1).

Fig. 2.

Illustration of the diverse phases emerging in the model (case A). The baseline of synaptic resources, $\xi$, increases from top to bottom: down state, synchronous regime, critical point (for the considered system size), asynchronous phase, and active phase. The first column shows snapshots of typical configurations; the color code represents the level of activity at each unit as shown in the scale. The network spiking or synchronous irregular phase is characterized by waves of activity that grow and transiently invade the whole system before exhausting the resources and coming to an end. In contrast, in the nested oscillation or asynchronous irregular (AI) regime, multiple traveling waves coexist and interfere with each other. In the up state, waves are no longer observed, and a homogeneous state of self-sustained activity emerges (Movie S1). The second column shows the time series of the overall activity averaged over the whole network. In the down state, activity almost vanishes. In the synchronous phase, macroscopic activity appears in the form of almost synchronous bursts interspersed with almost silent intervals. At the critical point, network spikes begin to superimpose, giving rise to complex oscillatory patterns (nested oscillations) and marginally self-sustained global activity all across the asynchronous regime; finally, in the up state, the global activity converges to a steady state with small fluctuations. The third column shows the steady-state probability distribution of the global activity: in the down state and the network spiking regime, the distributions are shown on a double-logarithmic scale; observe the approximate power law for very small values of the global activity, stemming from the presence of multiplicative noise (10).
The fourth column illustrates the different levels of synchronization across phases: a sample of randomly chosen units is mapped into oscillators using their analytic signal representation (Materials and Methods); the plot shows the time evolution of their corresponding phases, $\phi_j$. Observe the almost periodic behavior in the synchronous phase, which starts blurring at the critical point and progressively vanishes as the control parameter is further increased. Parameter values: .

Down-state phase (A1).

If the baseline level $\xi$ is sufficiently small, resources are always scarce, and the system is unable to produce self-sustained activity (i.e., it is hardly excitable), giving rise to a down-state phase characterized by very small values of the network time-averaged activity, $\bar{\rho}$, for large times (Fig. 2, first row). The quiescent state is disrupted only locally by the driving field $h$, which creates local activity that barely propagates to neighboring units.

Synchronous irregular phase (A2).

Above a certain value of the resource baseline $\xi$, there exists a wide region in parameter space in which activity generated at a seed point is able to propagate to neighboring units, triggering a wave of activity that transiently propagates through the network until resources are exhausted, activity ceases, and the recovery process restarts (Fig. 2, second row). Such waves or “network spikes” appear in an oscillatory, although not perfectly periodic, fashion, with an average separation time that decreases with $\xi$. In the terminology of Brunel (43), this corresponds to a synchronous irregular state/phase, since the collective activity is time-dependent (oscillatory) and single-unit spiking is irregular (as discussed below). This waxing-and-waning dynamics resembles that of anomalous (e.g., epileptic) tissues (72).

Asynchronous irregular phase (A3).

For even larger values of the resource baseline $\xi$, the level of synaptic recovery is high enough to allow resource-depleted regions to recover before the previous wave has come to an end. Thereby, diverse traveling waves can coexist and interfere, giving rise to complex collective oscillatory patterns [Fig. 2, fourth row, which is strikingly similar to, for example, EEG data of -rhythms (73)]. The amplitude of these oscillations, however, decreases on increasing network size (because many different local waves are averaged and deviations from the mean tend to be washed away). This regime can be identified with the asynchronous irregular (AI) phase of Brunel's work (43) (see below).

Up-state phase (A4).

For even larger values of $\xi$, plenty of synaptic resources are available at all times, giving rise to a state of perpetual activity with small fluctuations around the mean value (Fig. 2, fifth row) (i.e., an up state). Finally, let us remark that, as explicitly shown in SI Appendix, the AI phase and the up state cannot be distinguished in the infinite network size limit, in which so many waves are averaged that a homogeneous steady state emerges on average in both cases.

Phase Transitions.

Having analyzed the possible phases, we now discuss the phase transitions separating them. For all of the considered network sizes, the time-averaged overall activity, $\bar{\rho}$, starts taking a distinctly nonzero value above a threshold value of $\xi$ (Fig. 3), which marks the upper bound of the down or quiescent state (transition between A1 and A2). This phase transition is rather trivial and corresponds to the onset of network spikes [i.e., oscillations, with a characteristic time that depends on various factors, such as the synaptic recovery time (74) and the baseline level of synaptic resources].

Fig. 3.

Overall network activity state (case A) as determined by the network time-averaged value, $\bar{\rho}$. (A) Order parameter $\bar{\rho}$ as a function of the control parameter $\xi$ for various system sizes $N$ [from bottom (blue) to top (orange)]; observe that $\bar{\rho}$ grows monotonically with $\xi$ and that an intermediate regime, in which $\bar{\rho}$ grows with system size, emerges between the up and down states. (B) SD of the network-averaged overall activity multiplied by $\sqrt{N}$ (see the main text). The point of maximal variability coincides with the point of maximal slope in A for all network sizes $N$. (C) Finite-size scaling analysis of the peaks in B. The distance of the size-dependent peak locations, $\xi_c(N)$, from their asymptotic value for $N \to \infty$, $\xi_c$, scales as a power law of the system size, revealing the existence of true scaling at criticality.

More interestingly, Fig. 3 also reveals that $\bar{\rho}$ exhibits an abrupt increase at (size-dependent) values of $\xi$ between two and three, signaling the transition from A2 to A3. However, the jump amplitude decreases as $N$ increases, suggesting a smoother transition in the large-$N$ limit. Thus, using $\bar{\rho}$ as an order parameter, it is not clear a priori whether there is a true sharp phase transition or just a crossover between the synchronous (A2) and asynchronous (A3) regimes. To elucidate the existence of a true critical point, we measured the standard deviation (SD) over time of the network-averaged global activity. For independent or weakly correlated units, direct application of the central limit theorem (65) would imply that this quantity decreases as $1/\sqrt{N}$ for large $N$, and thus, $\mathrm{SD}\cdot\sqrt{N}$ should converge to a constant. However, Fig. 3B shows that $\mathrm{SD}\cdot\sqrt{N}$ exhibits a very pronounced peak located at the ($N$-dependent) transition point between the A2 and A3 phases; furthermore, its height grows with $N$ (i.e., it diverges in the thermodynamic limit), revealing strong correlations and anomalous scaling, as occurs at critical points. Also, a finite-size scaling analysis of the value of $\xi$ at the peak [for each $N$; i.e., $\xi_c(N)$] reveals the existence of finite-size scaling, as corresponds to a bona fide continuous phase transition at $\xi_c$ in the infinite-size limit (Fig. 3C). Moreover, a detrended fluctuation analysis (75, 76) of the time series reveals the emergence of long-range temporal correlations right at $\xi_c$ (SI Appendix), as expected at a continuous phase transition.
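The central-limit argument above can be checked with a short sketch: for $N$ independent units, the SD of the network average scales as $1/\sqrt{N}$, so $\mathrm{SD}\cdot\sqrt{N}$ stays roughly constant, whereas perfectly correlated units give $\mathrm{SD}\cdot\sqrt{N}$ growing as $\sqrt{N}$. The helper name and the synthetic Gaussian data are illustrative assumptions, not the paper's data or code.

```python
import numpy as np


def scaled_fluctuation(activity):
    """SD over time of the network-averaged activity, multiplied by sqrt(N).

    activity: array of shape (T, N), i.e., T time samples of N units.
    """
    n_units = activity.shape[1]
    network_mean = activity.mean(axis=1)  # one network average per time sample
    return network_mean.std() * np.sqrt(n_units)


rng = np.random.default_rng(0)
T = 2000
for N in (100, 400):
    independent = rng.standard_normal((T, N))                   # uncorrelated units
    common = np.repeat(rng.standard_normal((T, 1)), N, axis=1)  # identical units
    print(N, scaled_fluctuation(independent), scaled_fluctuation(common))
```

A value of $\mathrm{SD}\cdot\sqrt{N}$ that keeps growing with $N$, as in Fig. 3B at the transition, is therefore a signature of correlations that extend over the whole system rather than of independent local fluctuations.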

To shed further light on the nature of this transition, it is convenient to use a more adequate (synchronization) order parameter. In particular, we consider the Kuramoto index, $Z$, customarily used to detect synchronization transitions (77), defined as $Z = \left\langle \left| \frac{1}{N}\sum_{j=1}^{N} e^{i\phi_j} \right| \right\rangle$, where $i$ is the imaginary unit, $|\cdot|$ is the modulus of a complex number, $\langle\cdot\rangle$ here indicates averages over time and independent realizations, and $j$ runs over the $N$ units, each of which is characterized by a phase, $\phi_j$, that can be defined in different ways. For instance, an effective phase can be assigned to the time series at unit $j$, $\rho_j(t)$, by computing its analytic signal representation, which maps any given real-valued time series into an oscillator with time-dependent phase and amplitude (Materials and Methods). Using the resulting phases, $\phi_j(t)$, the Kuramoto index can be calculated. As illustrated in Fig. 4A, it reveals the presence of a synchronization transition: the value of $Z$ clearly drops at the previously determined critical point $\xi_c$. An alternative method to define a time-dependent phase for each unit (details are discussed in Materials and Methods) reveals the synchronization transition at $\xi_c$ even more vividly, as shown in Fig. 4B. Finally, we have also estimated the coefficient of variation (CV) of the time intervals between consecutive crossings of a fixed reference value by each of these effective phases; this analysis reveals a sharp peak of variability that converges, for large network sizes, to the critical point (Fig. 4B, Inset).
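A minimal sketch of the Kuramoto index computed from analytic-signal phases follows. The analytic signal is built with the standard FFT construction (the one used by `scipy.signal.hilbert`), written out in NumPy to keep the example self-contained. Subtracting each unit's temporal mean before extracting the phase is our assumption; the paper's exact preprocessing is described in its Materials and Methods. Averages here are over time only (a single realization), and the synthetic sinusoids are purely illustrative.

```python
import numpy as np


def analytic_signal(x):
    """Analytic signal along the last axis via the FFT (one-sided spectrum)."""
    n = x.shape[-1]
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x, axis=-1) * weights, axis=-1)


def kuramoto_index(activity):
    """Time-averaged Kuramoto index Z for activity of shape (N_units, T)."""
    centered = activity - activity.mean(axis=1, keepdims=True)
    phases = np.angle(analytic_signal(centered))       # phi_j(t)
    order = np.abs(np.exp(1j * phases).mean(axis=0))  # |(1/N) sum_j exp(i phi_j)|
    return order.mean()                                # average over time


t = np.arange(1000) / 1000.0
rng = np.random.default_rng(0)
offsets = rng.uniform(0.0, 2.0 * np.pi, size=(500, 1))
in_phase = np.cos(2.0 * np.pi * 5.0 * t)[None, :].repeat(500, axis=0)
random_phase = np.cos(2.0 * np.pi * 5.0 * t + offsets)
print(kuramoto_index(in_phase), kuramoto_index(random_phase))
```

Identical oscillators give $Z \approx 1$, while oscillators with independent random phase offsets give $Z$ of order $1/\sqrt{N}$, the two limits between which the curves in Fig. 4A interpolate.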

Fig. 4.

Synchronization transition elucidated by measuring the Kuramoto parameter, $Z$, as estimated using (A) the analytic signal representation of activity time series at different units, for various system sizes (red, orange, and green). For illustrative purposes, A, Right Inset shows the analytic representation (including both a real part and an imaginary part) of five sample units as a function of time; A, Left Inset shows the time evolution of one node (gray) together with the amplitude of its analytic representation (blue). Both vividly illustrate the oscillatory nature of the unit dynamics. (B) Results similar to those in A but using a different method to compute time-dependent phases of effective oscillators (Materials and Methods). This alternative method captures the emergence of a transition more clearly; the point of maximum slope of the curves corresponds to the transition points in A. B, Inset shows the CV (ratio of the SD to the mean) of the times between two consecutive crossings of a fixed reference value; it exhibits a peak of variability at the critical point $\xi_c$.

Thus, recapitulating, the phase transition separating the down state from the synchronous irregular regime (A1–A2 transition) is trivial and corresponds to the onset of network spikes, with no sign of critical features. In between the asynchronous and up states (A3–A4), there is no true phase transition, as both phases are indistinguishable in the infinitely large size limit (SI Appendix). However, different measurements clearly reveal the existence of a bona fide synchronization phase transition (A2–A3), at which nontrivial features characteristic of criticality emerge.

Avalanches.

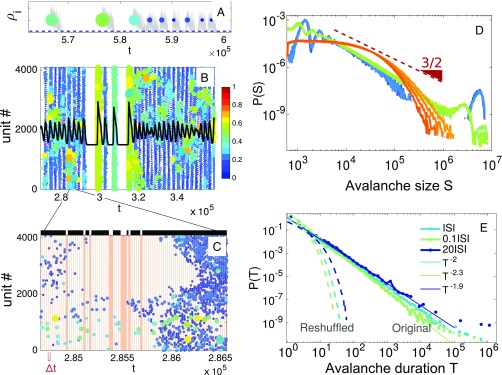

For ease of comparison with empirical results, we define a protocol to analyze avalanches that closely resembles the experimental one introduced by Beggs and Plenz (7). Each activity time series of an individual unit can be mapped into a series of discrete time “spikes” or events as follows. As illustrated in Fig. 5A, a spike corresponds to a period in which the activity at a given unit is above a given small threshold, in between two windows of quiescence (activity below threshold).†† Hence, as illustrated in Fig. 5B, the network activity can be represented as a raster plot of spiking units. Following the standard experimental protocol, a discrete time bin, $\Delta t$, is chosen, and each individual spike is assigned to one such bin. An avalanche is defined as a sequence of temporally contiguous occupied bins preceded and followed by empty bins (Fig. 5 B and C). Quite remarkably, using this protocol, several well-known experimental key features of neuronal avalanches can be faithfully reproduced by tuning $\xi$ to a value close to the synchronization transition.

i) The sizes and durations of avalanches of activity are found to be broadly (power law) distributed at the critical point; these scale-invariant avalanches coexist with anomalously large events or “waves” of synchronization, as revealed by the heaps in the tails of the curves in Fig. 5 D and E.

ii) Changing $\Delta t$, power law distributions with varying exponents are obtained at criticality (the larger the time bin, the smaller the exponent), as originally observed experimentally by Beggs and Plenz (7) (Fig. 5E).

iii) In particular, when $\Delta t$ is chosen to be equal to the interspike interval (ISI; i.e., the time interval between any two consecutive spikes), avalanche sizes and durations obey, at criticality, finite-size scaling with exponent values compatible with the standard ones (i.e., those of an unbiased branching process) (Fig. 5 B and C and SI Appendix).

iv) Reshuffling the times of occurrence of the units' spikes dramatically changes the avalanche statistics, giving rise to exponential distributions (as expected for an uncorrelated Poisson point process) and thus revealing the existence of a nontrivial temporal organization in the dynamics (Fig. 5E).

v) Away from the critical point, both in the subcritical and in the supercritical regime, deviations from this behavior are observed. In the subcritical or synchronous regime, the peak of periodic large avalanches becomes much more pronounced; in the asynchronous phase, such a peak is lost, and the distributions become exponential ones with a characteristic scale (Fig. 5D).
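The event-detection and binning protocol described above (and in the caption of Fig. 5A) can be sketched as follows. This is an illustrative reconstruction under stated assumptions: an event's weight is taken as the integral of the activity over its supra-threshold window (rectangle rule), windows cut by the start or end of the recording are discarded, and the surrogate shuffles each unit's interspike intervals, which is only one possible reading of the reshuffling test in item iv. All function names are hypothetical.

```python
import numpy as np


def detect_events(x, theta, dt):
    """Map one unit's activity series to (event times, event weights).

    An event sits at the activity maximum inside each window where x > theta;
    its weight is the area under x within that window (assumed definition).
    """
    above = np.concatenate(([False], x > theta, [False])).astype(int)
    edges = np.diff(above)
    starts = np.flatnonzero(edges == 1)   # first supra-threshold sample
    ends = np.flatnonzero(edges == -1)    # first sample back below threshold
    times, weights = [], []
    for s, e in zip(starts, ends):
        if s == 0 or e == len(x):         # window cut by the recording edges
            continue
        window = x[s:e]
        times.append((s + np.argmax(window)) * dt)
        weights.append(window.sum() * dt)
    return np.array(times), np.array(weights)


def mean_isi(times_per_unit):
    """Network-averaged interspike interval, pooling all units' ISIs."""
    isis = [np.diff(np.sort(t)) for t in times_per_unit if len(t) > 1]
    return np.concatenate(isis).mean()


def avalanches(times, weights, bin_width, t_max):
    """Sizes (summed weights) and durations of runs of non-empty time bins."""
    n_bins = int(np.ceil(t_max / bin_width))
    idx = np.minimum((times / bin_width).astype(int), n_bins - 1)
    counts = np.bincount(idx, minlength=n_bins)
    binned = np.bincount(idx, weights=weights, minlength=n_bins)
    edges = np.diff(np.concatenate(([False], counts > 0, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    sizes = np.array([binned[s:e].sum() for s, e in zip(starts, ends)])
    durations = (ends - starts) * bin_width
    return sizes, durations


def shuffle_isis(times, rng):
    """Surrogate spike train: same first spike and ISIs, in random order."""
    t = np.sort(times)
    if len(t) < 2:
        return t
    isis = rng.permutation(np.diff(t))
    return t[0] + np.concatenate(([0.0], np.cumsum(isis)))


x = np.array([0.0, 0.0, 1.0, 2.0, 1.0, 0.0, 0.0, 3.0, 0.0])
print(detect_events(x, theta=0.5, dt=1.0))
```

In use, the events of all units would be pooled (concatenating their times and weights) before calling `avalanches`, with `bin_width` set, for example, to `mean_isi(...)` to mimic item iii.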

Fig. 5.

Avalanches measured from activity time series. (A) Illustration of the activity time series (gray) at a given unit $j$. By establishing a threshold value (dashed blue line close to the origin), a single “event” or “unit spike” is defined at the time of maximal activity in between two threshold crossings (the forthcoming results are robust to changes in this criterion) (SI Appendix); a weight equal to the area covered in between the two crossings is assigned to each event (note the color code). This allows us to map a continuous time series into a discrete series of weighted events. The time distance between two consecutive events is called the ISI. (B) Raster plot for a system of $N$ units obtained using the procedure above for each unit. Observe that large events coexist with smaller ones and that the latter occur in a rather synchronous fashion. The overall time-dependent activity is marked with a black curve. (C) Zoomed-in view of a part of B illustrating the time-resolved structure, using a time binning equal to the network-averaged ISI. Shaded columns correspond to empty time bins (i.e., with no spike). Avalanches are defined as sequences of events occurring in between two consecutive empty time bins, and they are represented by the black bars above the plot. (D) Avalanche size distribution (the size of an avalanche is the sum of the weighted spikes that it comprises) for diverse values of $\xi$ (shades of blue, green, and orange correspond to the synchronous phase, the vicinity of the critical point, and the asynchronous phase, respectively), measured from the raster plots. The red triangle, whose slope corresponds to the experimentally measured exponent, is plotted as a reference, illustrating that, near criticality, a power law with an exponent similar to the experimentally measured one is recovered. Away from the critical point, either in the synchronous phase (shades of blue) or in the asynchronous one (shades of orange), clear deviations from power law behavior are observed.
Note the “heaps” in the tails of the distributions, especially in the synchronous regime; these correspond to periodic waves of synchronized activity (SI Appendix). They also appear at criticality, but at progressively larger sizes for larger systems. (E) Avalanche duration distribution for different choices of the time bin. The experimentally measured exponent is reproduced using , whereas deviations from this value are found for smaller (larger) time bins, in agreement with experimental reports. After event times are reshuffled, the distributions become exponential, with characteristic timescales that depend on (dashed lines).

Summing up, our model tuned to the edge of a synchronization/desynchronization phase transition reproduces all the main empirical findings for neuronal avalanches. These results strongly suggest that the critical point alluded to by the criticality hypothesis of cortical dynamics does not correspond to a quiescent/active phase transition, as modeling approaches usually assume, but to a synchronization phase transition, at the edge of which oscillations and avalanches coexist.

It is important to underline that our results regarding the emergence of scale-free avalanches are purely computational. To date, we do not have a theoretical understanding of why the results are compatible with branching-process exponents. In particular, it is not clear to us whether a branching process could emerge as an effective description of the actual (synchronization) dynamics near the phase transition or whether the exponent values are a generic consequence of the way temporally defined avalanches are measured (46). These issues deserve careful scrutiny in future work.
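For reference, an unbiased (critical) branching process yields avalanche size and duration distributions decaying as $P(S) \sim S^{-3/2}$ and $P(T) \sim T^{-2}$. The minimal sketch below simulates such a process, assuming a Galton–Watson process with Poisson-distributed offspring of mean one; the offspring law, the truncation `max_size`, and the helper name `critical_branching_avalanches` are illustrative choices, not part of the model studied here. The size exponent is checked through the complementary cumulative distribution, which for $S^{-3/2}$ decays as $s^{-1/2}$.

```python
import numpy as np


def critical_branching_avalanches(n_avalanches, max_size=10**5, seed=0):
    """Sizes and durations of avalanches in a critical Galton-Watson process.

    Every active unit triggers a Poisson(1)-distributed number of units in the
    next generation (branching ratio 1, i.e., the critical point). The size S
    is the total number of activations; the duration T is the number of
    generations. A run stops once S reaches max_size to bound the run time.
    """
    rng = np.random.default_rng(seed)
    sizes = np.empty(n_avalanches, dtype=np.int64)
    durations = np.empty(n_avalanches, dtype=np.int64)
    for k in range(n_avalanches):
        active, size, duration = 1, 1, 1
        while active > 0 and size < max_size:
            # The sum of `active` independent Poisson(1) draws is Poisson(active).
            active = int(rng.poisson(active))
            size += active
            if active > 0:
                duration += 1
        sizes[k], durations[k] = size, duration
    return sizes, durations


sizes, durations = critical_branching_avalanches(20000, seed=1)
# For P(S) ~ S^(-3/2), the complementary CDF P(S >= s) decays as s^(-1/2).
ccdf_10, ccdf_1000 = np.mean(sizes >= 10), np.mean(sizes >= 1000)
print("estimated CCDF slope:", np.log(ccdf_1000 / ccdf_10) / np.log(100))
```

Comparing the same statistics for avalanches extracted from the model's raster plots is one way to probe whether the agreement with branching-process exponents goes beyond the value of the exponents themselves.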

The Role of Heterogeneity.

Thus far, we have described homogeneous networks with local coupling. However, the cortex also contains long-range connections among local regions, and mesoscopic units are not necessarily homogeneous across space (68, 78). These empirical facts motivated us to perform additional analyses of our theory on slightly modified substrates. We considered small-world networks and verified that our main results (i.e., the existing phases and phase transitions) are insensitive to the introduction of a small percentage of long-range connections (SI Appendix). However, details such as the boundaries of the phase diagram, the shape of propagation waves, and the amplitude of nested oscillations do change.

Moreover, as described in detail in SI Appendix, a simple extension of our theory in which parameters are not homogeneous but position-dependent (i.e., heterogeneous in space) reproduces remarkably well empirical in vitro results for neural cultures with different levels of mesoscopic structural heterogeneity (79).

To further explore the influence of network architecture on dynamical phases, in future work we will extend our model to empirically obtained large-scale networks of the human brain, whose heterogeneous and hierarchical–modular architecture is known to influence the dynamical processes running on them (68, 80).

Phases and Phase Transitions: Case B.

Here, we discuss the much simpler scenario in which the deterministic (mean-field) dynamics predicts bistability (i.e., case B above), which is obtained, for example, by considering slower dynamics for synaptic resource depletion. In this case, the introduction of noise and space does not significantly alter the deterministic picture. Indeed, computational analyses reveal only two phases: a down state and an up state for small and large values of , respectively. These two phases have the same features as their counterparts in case A. However, the phase transition between them is discontinuous (much as in Fig. 1B); thus, in finite networks, fluctuations induce spontaneous transitions between the up and down states when takes intermediate values within the region of phase coexistence. Hence, in case B, our theory constitutes a sound Landau–Ginzburg description of existing models of up and down states and up–down transitions, such as those in refs. 29, 49, and 50.

Conclusions and Discussion

The mammalian brain is in a state of perennial activity, even in the absence of any apparent stimulus or task. Understanding the origin, meaning, and functional significance of such an energetically costly dynamical state is a fundamental problem in neuroscience. The so-called criticality hypothesis conjectures that the dynamics of cortical networks is poised at the edge of a continuous phase transition separating qualitatively different phases or regimes with different degrees of order. Experience from statistical physics and the theory of phase transitions teaches that critical points are rather singular locations in phase diagrams, with remarkable and peculiar features such as scale invariance (i.e., fluctuations of wildly diverse spatiotemporal scales can emerge spontaneously, allowing the system to generate complex patterns of activity in a simple and natural way). A number of features of criticality, including scale invariance, have been conjectured to be functionally advantageous and amenable to exploitation by biological (as well as artificial) computing devices. Thus, the hypothesis that the brain actually operates at the borderline of a phase transition has gained momentum in recent years (20–22), even if some skepticism remains (46). However, what these phases are and what the nature of the putative critical point is remain to be fully settled.

To shed light on these issues, here we followed a classic statistical physics approach. Following the parsimony principle of Landau and Ginzburg in the study of phases of matter and their phase transitions, we proposed a simple stochastic mesoscopic theory of cortical dynamics that allowed us to classify the possible emerging phases of cortical networks under very general conditions. For concreteness, we focused on regulatory dynamics controlled by synaptic plasticity (depletion and recovery of synaptic resources), which prevent the level of activity from exploding, but analogous results were obtained considering, for example, inhibition as the chief regulatory mechanism. Indeed, our main conclusions are robust and general and do not depend essentially on implementation details such as the nature of the regulatory mechanism.

The mesoscopic approach on which our theory rests is certainly not radically novel, as quite a few related models exist in the literature. For instance, neural mass (81–83) and neural field models (66, 67), as well as rate or population activity equations (58, 84), are similar in spirit and have been successfully used to analyze the activity of populations of neurons and synapses and their emerging collective regimes at mesoscopic and macroscopic scales.

Drawing on experience from the theory of phase transitions, we introduce two key ingredients: intrinsic stochasticity stemming from the finite size of mesoscopic regions, and spatial dependence. In this way, our theory consists of a set of stochastic (truncated) Wilson–Cowan equations and can be formulated as a field theory using standard techniques (85). A rather similar (field-theoretic) approach to analyzing fluctuation effects in extended neural networks has been proposed (54).

The theory of ref. 54 turns out to include a continuous phase transition from a quiescent phase to an active phase, with a critical point in between, in contrast with our findings here. Note, however, that the authors of ref. 54 themselves leave the door open to more complex scenarios if refractoriness and thresholds are included. In any case, such a continuous phase transition picture can easily be recovered in our framework just by changing the sign of a parameter (i.e., taking in Eq. 1); with this parameter choice, our theory constitutes a sound Landau–Ginzburg description of microscopic models of neural dynamics exhibiting criticality and a continuous phase transition from a quiescent phase to an active phase (27, 39). We believe, however, that this scenario does not properly capture the essence of cortical dynamics, because actual networks of spiking neurons have spike integration mechanisms, meaning that many inputs are required to trigger further activity.

Using our Landau–Ginzburg approach, we have shown that stochastic, spatially extended neural networks can harbor two different scenarios depending on parameter values: case A, which includes a limit cycle at the deterministic level and hence the possibility of oscillations, and case B, which leads to bistability (Fig. 1).
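At the deterministic level, the two cases can be told apart by locating the fixed points and checking their linear stability. The sketch below does this under an explicit assumption: that the noise-free part of Eq. 1 with $h = 0$ reads $\dot\rho = (R - a)\rho + b\rho^2 - \rho^3$ and $\dot R = (\xi - R)/\tau_R - R\rho/\tau_D$, i.e., a cubic truncation for the activity $\rho$ coupled to synaptic resources $R$ with maximum level $\xi$, recovery time $\tau_R$, and depletion time $\tau_D$. These symbols, the function name `mean_field_fixed_points`, and all numerical values are hypothetical. The code only diagnoses fixed points; whether a limit cycle surrounds an unstable one must be checked by integrating the equations.

```python
import numpy as np


def mean_field_fixed_points(a, b, xi, tau_r, tau_d):
    """Fixed points and Jacobian eigenvalues of the ASSUMED mean-field system
        d(rho)/dt = (R - a) * rho + b * rho**2 - rho**3
        dR/dt     = (xi - R) / tau_r - R * rho / tau_d
    Returns a list of (rho, R, eigenvalues, is_stable) tuples."""
    c = tau_r / tau_d
    points = [(0.0, xi)]  # down state: no activity, fully recovered resources
    # Active states satisfy R = a - b*rho + rho**2 and R = xi / (1 + c*rho),
    # i.e., the cubic c*rho^3 + (1 - b*c)*rho^2 + (a*c - b)*rho + (a - xi) = 0.
    for root in np.roots([c, 1.0 - b * c, a * c - b, a - xi]):
        if abs(root.imag) < 1e-10 and root.real > 0:
            rho = float(root.real)
            points.append((rho, xi / (1.0 + c * rho)))
    results = []
    for rho, R in points:
        jacobian = np.array([
            [R - a + 2.0 * b * rho - 3.0 * rho**2, rho],
            [-R / tau_d, -1.0 / tau_r - rho / tau_d],
        ])
        eig = np.linalg.eigvals(jacobian)
        results.append((rho, R, eig, bool(np.all(eig.real < 0))))
    return results


# Hypothetical parameter values, for illustration only.
for xi in (0.5, 2.0):
    for rho, R, eig, stable in mean_field_fixed_points(1.0, 1.5, xi, 1000.0, 100.0):
        kind = "stable" if stable else "unstable"
        focus = " (focus)" if np.any(np.abs(eig.imag) > 0) else ""
        print(f"xi={xi}: rho={rho:.4f}, R={R:.4f}, {kind}{focus}")
```

Under this assumed form, the Jacobian at the down state is triangular with eigenvalues $\xi - a$ and $-1/\tau_R$, so the down state loses stability when $\xi$ exceeds $a$.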

In the simpler case B, our complete theory generates either a down-state or a homogeneous up-state phase, with a discontinuous transition separating them, and the possibility of up–down transitions when the system operates in the bistability region. In this case, our theory constitutes a sound mesoscopic description of existing microscopic models for up and down transitions (29, 49, 63, 86).

However, in case A, we find diverse phases, including oscillatory and bursting phenomena: down, synchronous irregular, asynchronous irregular, and active states.

As a side remark, let us emphasize that, although we constructed a coarse-grained model for activity propagation, our analyses readily revealed the emergence of oscillations and synchronization phenomena. Hence, our results support the use of effective coupled-oscillator models to scrutinize the large-scale dynamics of brain networks. Indeed, such models have been reported to perform best [e.g., in reproducing empirically observed resting-state networks (68)] when operating close to the synchronization phase transition point (64, 87, 88).

Within our framework, it is possible to define a protocol for analyzing avalanches that closely resembles the experimental one (7, 8, 11, 16, 17). Thus, unlike in other computational models, causal information is not needed here to determine avalanches (they are determined from raw data), and results can be compared directly with experimental ones for neuronal avalanches without conceptual gaps (40).

The model reproduces all of the main features observed experimentally. (i) Avalanche sizes and durations distributed in a scale-free way emerge at the critical point of the synchronization transition. (ii) The corresponding exponent values depend on the time bin required to define avalanches, but (iii) fixing to coincide with the ISI, the same statistics as in empirical networks [i.e., the critical exponents compatible with those of an unbiased branching process (10)] are obtained. Finally, (iv) scale-free distributions disappear if events are reshuffled in time, revealing a nontrivial temporal organization.
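The avalanche-detection protocol (pooled weighted events, time bins of fixed width, avalanches as runs of non-empty bins delimited by empty ones) can be sketched as follows. We assume that a bin width equal to "the ISI" means the mean interval between consecutive events in the pooled sequence of all units; the reshuffling surrogate, which places the same number of events uniformly at random within the recording window, is likewise a hypothetical choice of ours. All function names are illustrative.

```python
import numpy as np


def mean_interevent_interval(event_times):
    """Mean interval between consecutive events of the pooled (all-unit) sequence."""
    t = np.sort(np.asarray(event_times, dtype=float))
    return float(np.mean(np.diff(t)))


def avalanches_from_events(event_times, event_weights, bin_width):
    """Avalanches = runs of non-empty time bins delimited by empty bins.

    Returns (sizes, durations): the size is the summed event weight of a run,
    the duration its number of bins. Runs touching the start or the end of
    the recording are kept, although they are not bracketed by empty bins.
    """
    t = np.asarray(event_times, dtype=float)
    w = np.asarray(event_weights, dtype=float)
    idx = np.floor((t - t.min()) / bin_width).astype(np.int64)
    n_bins = int(idx.max()) + 1
    counts = np.bincount(idx, minlength=n_bins)
    weights = np.bincount(idx, weights=w, minlength=n_bins)
    sizes, durations = [], []
    size, length = 0.0, 0
    for c, wb in zip(counts, weights):
        if c > 0:          # non-empty bin: extend the current avalanche
            size += wb
            length += 1
        elif length > 0:   # empty bin: close the current avalanche
            sizes.append(size)
            durations.append(length)
            size, length = 0.0, 0
    if length > 0:
        sizes.append(size)
        durations.append(length)
    return np.array(sizes), np.array(durations, dtype=np.int64)


def shuffle_times(event_times, rng):
    """Surrogate: same number of events placed uniformly in the same window."""
    t = np.asarray(event_times, dtype=float)
    return rng.uniform(t.min(), t.max(), size=t.size)


times = np.array([0.0, 0.5, 1.2, 3.1, 3.4, 7.0])
weights = np.array([1.0, 2.0, 1.0, 1.0, 1.0, 5.0])
sizes, durations = avalanches_from_events(times, weights, bin_width=1.0)
# sizes == [4, 2, 5], durations == [2, 1, 1]
shuffled = shuffle_times(times, np.random.default_rng(0))
```

In practice, `bin_width` would be set with `mean_interevent_interval` on the full pooled raster, and the size and duration histograms of the original and shuffled events would then be compared.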

Thus, the main outcome of our analyses is that the underlying phase transition at which scale-free avalanches emerge does not separate a quiescent state from a fully active one; instead, it is a synchronization phase transition. This is a crucial observation, as most of the existing modeling approaches for critical avalanches in neural dynamics to date rely on a continuous quiescent/active phase transition.

Consistent with our findings, the highly detailed model put together by the Human Brain Project Consortium suggests that the model best reproduces experimental features when tuned near its synchronization critical point (89). In that study, the concentration of calcium ions, Ca2+, needs to be carefully tuned to its actual nominal value to set the network state. Similarly, in our approach, the role of the calcium concentration is played by the parameter , which regulates the maximum level reachable by synaptic resources. Interestingly, the calcium concentration is well known to modulate the level of available synaptic resources (i.e., neurotransmitter release from neurons) (32, 58, 61); hence, both quantities play a similar role.

Let us emphasize that we have not explored how the network could potentially self-organize to operate in the vicinity of the synchronization critical point without resorting to parameter fine-tuning. Adaptive, homeostatic, and self-regulatory mechanisms accounting for this will be analyzed in future work. Also, we have not looked here for the recently uncovered neutral neural avalanches (40), as these require causal information, and such detailed causal relationships are blurred away in mesoscopic coarse-grained descriptions.

Summing up, our Landau–Ginzburg theory with parameters in case B constitutes a sound description of the cortex during deep sleep or anesthesia, when up and down transitions are observed. In contrast, case A, when tuned close to the synchronization phase transition, can be a sound theory for the awake cortex in a state of alertness. A detailed analysis of how the transition between deep sleep (described by case B) and wakefulness or rapid eye movement sleep (described by case A) may actually occur in these general terms is beyond our scope here, but note that just by modifying the speed at which synaptic resources recover, it is possible to shift between the two cases, making it possible to investigate how such transitions could be induced.

A simple extension of our theory, including spatial heterogeneity, has been shown to be able to reproduce remarkably well experimental measurements of activity in neural cultures with structural heterogeneity, opening the way for more stringent empirical validations of the general theory proposed here.

Although additional experimental, computational, and analytical studies would certainly be required to definitely settle the controversy about the possible existence, origin, and functional meaning of the possible phases and phase transitions in cortical networks, we hope that the general framework introduced here—based on very general and robust principles—helps in clarifying the picture and in paving the way to future developments in this fascinating field.

Materials and Methods

Model Details.

In the Wilson–Cowan model, in its simplest form, the dynamics of the average firing rate or global activity, , is governed by the equation

where is the synaptic strength, is a threshold value that can be fixed to unity, and is a sigmoid (transduction) function [e.g., ] (59, 60). We adopt this well-established model and, for simplicity, keep only the leading terms in a power-series expansion; renaming the constants yields the deterministic part of Eq. 1. To this, we add noise , which is a delta-correlated Gaussian white noise of zero mean and unit variance accounting for stochastic (demographic) effects in finite local populations, as dictated by the central limit theorem; a formal derivation of such intrinsic or demographic noise starting from a discrete microscopic model can be found in ref. 59. A noise term could also be added to the equation for synaptic resources (50), but it does not significantly affect the results. Considering mesoscopic units and coupling them diffusively within some networked structure (e.g., a 2D lattice), we finally obtain the set of Eq. 3.
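To make the construction concrete, the following is a minimal Euler–Maruyama sketch of diffusively coupled units on a periodic 2D lattice. It assumes, as our reading of the description above and of the footnotes on the third-order truncation, the sign of the quadratic term, and the square-root noise, that each unit obeys $\dot\rho_i = (R_i - a)\rho_i + b\rho_i^2 - \rho_i^3 + h + D\nabla^2\rho_i + \sigma\sqrt{\rho_i}\,\eta_i$ and $\dot R_i = (\xi - R_i)/\tau_R - R_i\rho_i/\tau_D$. The symbols, the function names, and all parameter values are hypothetical and are not tuned to any particular phase. Clipping negative activity to zero is a crude stand-in for the dedicated integration scheme for square-root multiplicative noise of ref. 71.

```python
import numpy as np


def laplacian_periodic(field):
    """Nearest-neighbor discrete Laplacian on a periodic 2D square lattice."""
    return (np.roll(field, 1, axis=0) + np.roll(field, -1, axis=0)
            + np.roll(field, 1, axis=1) + np.roll(field, -1, axis=1)
            - 4.0 * field)


def simulate_lattice(L=32, steps=2000, dt=0.01, a=1.0, b=1.5, xi=1.5,
                     tau_r=1000.0, tau_d=100.0, h=1e-3, sigma=0.1, D=1.0,
                     seed=0):
    """Euler-Maruyama integration of the ASSUMED stochastic lattice equations
        d(rho)/dt = (R - a) rho + b rho^2 - rho^3 + h + D lap(rho)
                    + sigma sqrt(rho) eta
        dR/dt     = (xi - R) / tau_r - R rho / tau_d
    All parameter values are hypothetical. Returns the final activity and
    resource fields and the time series of the lattice-averaged activity."""
    rng = np.random.default_rng(seed)
    rho = np.full((L, L), 0.01)
    res = np.full((L, L), xi)
    mean_activity = np.empty(steps)
    for step in range(steps):
        drift = ((res - a) * rho + b * rho**2 - rho**3 + h
                 + D * laplacian_periodic(rho))
        # Demographic noise: amplitude proportional to sqrt(rho), scaled by sqrt(dt).
        noise = sigma * np.sqrt(rho * dt) * rng.standard_normal((L, L))
        # Resource update uses the activity of the previous step (explicit Euler).
        res = res + dt * ((xi - res) / tau_r - res * rho / tau_d)
        # Crude fix: clip negative activity to zero (see ref. 71 for a
        # dedicated scheme for square-root multiplicative noise).
        rho = np.maximum(rho + dt * drift + noise, 0.0)
        mean_activity[step] = rho.mean()
    return rho, res, mean_activity


rho, res, mean_activity = simulate_lattice(L=16, steps=500, seed=3)
```

The time step is kept well below the diffusive stability limit of the explicit scheme ($D\,\Delta t \le 1/4$ on a unit-spacing square lattice); local time series such as `rho[i, j]` recorded over time are the input to the event-detection step described below.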

Analytic Signal Representation.

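The Hilbert transform is a bounded linear operator widely used in signal analysis, as it provides a tool to transform a given real-valued function into a complex analytic function, called the analytic signal representation. For a real-valued signal $x(t)$, this is defined as $x_a(t) = x(t) + i\,\mathcal{H}[x](t)$, where the Hilbert transform of $x(t)$ is given by $\mathcal{H}[x](t) = \frac{1}{\pi}\,\mathrm{P.V.}\int_{-\infty}^{\infty} \frac{x(\tau)}{t - \tau}\,d\tau$. Expressing the analytic signal in terms of its time-dependent amplitude and phase (polar coordinates), $x_a(t) = A(t)\,e^{i\phi(t)}$, makes it possible to represent any signal as an oscillator. In particular, the associated phase is defined by $\phi(t) = \arctan\left(\mathcal{H}[x](t)/x(t)\right)$, taken in the appropriate quadrant.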
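For sampled data, the analytic signal is usually computed in the frequency domain: the Hilbert transform multiplies positive-frequency components by $-i$ and negative-frequency ones by $+i$, so $x + i\mathcal{H}[x]$ keeps the zero-frequency term, doubles positive frequencies, and removes negative ones. The sketch below implements this with NumPy's FFT, treating the record as one period of a periodic signal (the same construction used by `scipy.signal.hilbert`); the function names are illustrative.

```python
import numpy as np


def analytic_signal(x):
    """Discrete analytic signal x + i*H[x], computed with the FFT.

    Positive frequencies are doubled, negative ones removed, and the zero
    frequency (plus the Nyquist bin for even lengths) kept, treating x as
    one period of a periodic signal.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    gain = np.zeros(n)
    gain[0] = 1.0
    if n % 2 == 0:
        gain[n // 2] = 1.0
        gain[1:n // 2] = 2.0
    else:
        gain[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * gain)


def instantaneous_phase(x, unwrap=True):
    """Phase of the analytic signal (four-quadrant arctangent of H[x] / x)."""
    phase = np.angle(analytic_signal(x))
    return np.unwrap(phase) if unwrap else phase


# Amplitude-modulated test signal: 40 cycles modulated by a 3-cycle envelope.
t = np.linspace(0.0, 1.0, 1000, endpoint=False)
x = (1.0 + 0.3 * np.cos(2 * np.pi * 3 * t)) * np.cos(2 * np.pi * 40 * t)
amplitude = np.abs(analytic_signal(x))  # recovers 1 + 0.3 cos(6 pi t)
phase = instantaneous_phase(x)          # recovers 2 pi 40 t
```

Because the periodic assumption introduces edge effects for non-periodic records, it is common practice to discard a short stretch at both ends of the phase time series.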

From Continuous Time Series to Discrete Events.

Local time series at each single unit, , can be mapped onto time sequences of point-like (“unit spiking”) events. To this end, a local threshold is defined, which allows us to assign an on/off state to each single unit/node (depending on whether it is above or below the threshold) at any given time. If the threshold is low enough, the procedure does not depend on its specific value. A single (discrete) event or spike can then be assigned to each node (e.g., at the time of the maximal within the on state), and each event is assigned a weight proportional to the integral of the activity time series between the two consecutive threshold crossings that bound it (Fig. 5A). Other conventions for defining an event are possible, but the results are not sensitive to this choice, as illustrated in SI Appendix.
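A minimal sketch of this mapping for a single unit follows. It assumes a uniformly sampled series with step `dt`, a fixed threshold, the event placed at the maximum of each on episode, and the weight computed as a rectangle-rule integral of the activity over the episode; the function name `events_from_series` is hypothetical.

```python
import numpy as np


def events_from_series(x, threshold, dt=1.0):
    """Map a continuous activity series onto weighted point events.

    An 'on' episode is a maximal run of samples with x > threshold. Each
    episode yields one event at the time of its maximum, with a weight equal
    to the area under x over the episode (rectangle rule).
    Returns (event_times, event_weights).
    """
    x = np.asarray(x, dtype=float)
    on = (x > threshold).astype(np.int8)
    # Pad with 'off' at both ends: +1 marks episode starts, -1 marks ends.
    edges = np.diff(np.concatenate(([0], on, [0])))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)  # exclusive end indices
    times, weights = [], []
    for s, e in zip(starts, ends):
        segment = x[s:e]
        times.append((s + np.argmax(segment)) * dt)
        weights.append(segment.sum() * dt)
    return np.array(times, dtype=float), np.array(weights, dtype=float)


series = [0.0, 0.5, 2.0, 3.0, 1.0, 0.0, 0.0, 4.0, 0.0]
times, weights = events_from_series(series, threshold=0.8)
# times == [3.0, 7.0], weights == [6.0, 4.0]
```

Applying this function to every unit and pooling the resulting events yields the raster from which avalanches are extracted.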

Phases from Spiking Patterns.

An alternative way to define a phase at each unit can be constructed after a continuous time series has been mapped onto a spiking series. In particular, using a linear interpolation, $\phi_j(t) = 2\pi n + 2\pi\,\frac{t - t_n^j}{t_{n+1}^j - t_n^j}$, where $t_n^j \le t < t_{n+1}^j$ and $t_n^j$ is the time of the $n$th spike of node/unit $j$.
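The spike-based phase $\phi_j(t) = 2\pi n + 2\pi (t - t_n^j)/(t_{n+1}^j - t_n^j)$ is exactly the piecewise-linear interpolation computed by `np.interp` between the points $(t_n^j, 2\pi n)$. The sketch below uses it and, as one common synchrony measure that is our addition here rather than part of the text above, computes the Kuramoto order parameter $r(t) = |\langle e^{i\phi_j(t)} \rangle_j|$ across units ($r = 1$ for perfect phase locking; small values indicate incoherence). Function names are illustrative.

```python
import numpy as np


def spike_phase(t, spike_times):
    """Linearly interpolated phase of one unit: 2*pi*n at its n-th spike
    (n = 0, 1, ...), growing linearly in between. Undefined (NaN) outside
    the interval spanned by the spikes."""
    s = np.sort(np.asarray(spike_times, dtype=float))
    t = np.atleast_1d(np.asarray(t, dtype=float))
    phase = np.interp(t, s, 2.0 * np.pi * np.arange(s.size))
    phase[(t < s[0]) | (t > s[-1])] = np.nan
    return phase


def kuramoto_order_parameter(phases):
    """r(t) = |mean_j exp(i*phi_j(t))| over units with a defined phase.

    `phases` has shape (n_units, n_times); NaN entries are ignored.
    Times at which no unit has a defined phase give NaN (0/0).
    """
    phases = np.asarray(phases, dtype=float)
    valid = ~np.isnan(phases)
    z = np.where(valid, np.exp(1j * np.where(valid, phases, 0.0)), 0.0)
    return np.abs(z.sum(axis=0)) / valid.sum(axis=0)


t = np.linspace(1.0, 3.0, 5)
unit_a = spike_phase(t, [0.0, 1.0, 2.0, 3.0])
unit_b = spike_phase(t, [0.5, 1.5, 2.5, 3.5])  # half a period later
r = kuramoto_order_parameter(np.vstack([unit_a, unit_b]))  # near 0: antiphase
```

Unlike the Hilbert-based phase, this definition is insensitive to the shape of the activity between events and depends only on event timing.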

Supplementary Material

Acknowledgments

We thank P. Moretti, J. Hidalgo, J. Mejias, J. J. Torres, A. Vezzani, and P. Villa for useful suggestions and comments. We acknowledge Spanish Ministerio de Economía y Competitividad Grant FIS2013-43201-P (Fondo Europeo de Desarrollo Regional funds) for financial support.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission. V.B. is a guest editor invited by the Editorial Board.

*This theory, developed three decades ago, aims at explaining the seemingly ubiquitous presence of criticality in natural systems as the result of self-organization to the critical point of a quiescent/active phase transition by means of diverse mechanisms, including the presence of two dynamical processes occurring at infinitely separated timescales (34, 35).

†We keep up to third order to include the leading effects of the sigmoid response function; a nontruncated variant of the model has also been considered (SI Appendix).

‡Single neurons integrate many presynaptic spikes to exceed threshold, and thus, their response is nonlinear: the more activity, the more likely it is to be self-sustained (57). Indeed, the Wilson–Cowan model includes a sigmoid response function with a threshold, implying that activity has to be above some minimum value to be self-sustained and entailing in the series expansion (Materials and Methods).

§Deviations from stem from the small but nonvanishing external driving .

¶Note that the slope of the nullclines deriving from Eq. 2 (red in Fig. 1) is proportional to : if it is small enough, there exists only one unstable fixed point, giving rise to a Hopf bifurcation; otherwise, the nullclines intersect at three points, generating the bistable regime. These two possibilities correspond to cases A and B above, respectively.

#In the limit of slow external driving and up to leading order in an expansion in powers of , this can be written as , where is a noise amplitude; this stems from the fact that the spiking of each single neuron is a stochastic process, and the overall fluctuation of the density of a collection of them scales with its square root as dictated by the central limit theorem (65) (ref. 59 has a detailed derivation of the square root dependence).

‖This type of approach is at the basis of so-called neural field models, which have a long tradition in neuroscience (67).

**More elaborate approaches, including coupling kernels between different regions as well as asymmetric couplings, are also often considered in the literature (e.g., ref. 56), but here we stick to the simplest possible coupling.

††Results are quite robust to the specific way in which this procedure is implemented (Materials and Methods, Fig. 5, and SI Appendix).

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1712989115/-/DCSupplemental.

References

- 1. Arieli A, Sterkin A, Grinvald A, Aertsen AD. Dynamics of ongoing activity: Explanation of the large variability in evoked cortical responses. Science. 1996;273:1868–1871. doi: 10.1126/science.273.5283.1868.

- 2. Raichle ME. The restless brain. Brain Connect. 2011;1:3–12. doi: 10.1089/brain.2011.0019.

- 3. Fox MD, Raichle ME. Spontaneous fluctuations in brain activity observed with functional magnetic resonance imaging. Nat Rev Neurosci. 2007;8:700–711.

- 4. Persi E, Horn D, Volman V, Segev R, Ben-Jacob E. Modeling of synchronized bursting events: The importance of inhomogeneity. Neural Comput. 2004;16:2577–2595. doi: 10.1162/0899766042321823.

- 5. Segev R, Shapira Y, Benveniste M, Ben-Jacob E. Observations and modeling of synchronized bursting in two-dimensional neural networks. Phys Rev E. 2001;64:011920. doi: 10.1103/PhysRevE.64.011920.

- 6. Buzsaki G. Rhythms of the Brain. Oxford Univ Press; Oxford: 2009.

- 7. Beggs JM, Plenz D. Neuronal avalanches in neocortical circuits. J Neurosci. 2003;23:11167–11177. doi: 10.1523/JNEUROSCI.23-35-11167.2003.

- 8. Petermann T, et al. Spontaneous cortical activity in awake monkeys composed of neuronal avalanches. Proc Natl Acad Sci USA. 2009;106:15921–15926. doi: 10.1073/pnas.0904089106.

- 9. Binney JJ, Dowrick NJ, Fisher AJ, Newman MEJ. The Theory of Critical Phenomena. Oxford Univ Press; Oxford: 1993.

- 10. di Santo S, Villegas P, Burioni R, Muñoz MA. A simple unified view of branching process statistics: Random walks in balanced logarithmic potentials. Phys Rev E. 2017;95:032115. doi: 10.1103/PhysRevE.95.032115.

- 11. Mazzoni A, et al. On the dynamics of the spontaneous activity in neuronal networks. PLoS One. 2007;2:e439. doi: 10.1371/journal.pone.0000439.

- 12. Pasquale V, Massobrio P, Bologna LL, Chiappalone M, Martinoia S. Self-organization and neuronal avalanches in networks of dissociated cortical neurons. Neuroscience. 2008;153:1354–1369. doi: 10.1016/j.neuroscience.2008.03.050.

- 13. Hahn G, et al. Neuronal avalanches in spontaneous activity in vivo. J Neurophysiol. 2010;104:3312–3322. doi: 10.1152/jn.00953.2009.

- 14. Haimovici A, Tagliazucchi E, Balenzuela P, Chialvo DR. Brain organization into resting state networks emerges at criticality on a model of the human connectome. Phys Rev Lett. 2013;110:178101. doi: 10.1103/PhysRevLett.110.178101.

- 15. Tagliazucchi E, Balenzuela P, Fraiman D, Chialvo DR. Criticality in large-scale brain fMRI dynamics unveiled by a novel point process analysis. Front Physiol. 2012;3:15. doi: 10.3389/fphys.2012.00015.

- 16. Shriki O, et al. Neuronal avalanches in the resting MEG of the human brain. J Neurosci. 2013;33:7079–7090. doi: 10.1523/JNEUROSCI.4286-12.2013.

- 17. Bellay T, Klaus A, Seshadri S, Plenz D. Irregular spiking of pyramidal neurons organizes as scale-invariant neuronal avalanches in the awake state. eLife. 2015;4:e07224. doi: 10.7554/eLife.07224.

- 18. Poil S-S, Hardstone R, Mansvelder HD, Linkenkaer-Hansen K. Critical-state dynamics of avalanches and oscillations jointly emerge from balanced excitation/inhibition in neuronal networks. J Neurosci. 2012;32:9817–9823. doi: 10.1523/JNEUROSCI.5990-11.2012.

- 19. Chialvo DR. Critical brain networks. Phys A. 2004;340:756–765.

- 20. Plenz D, Niebur E. Criticality in Neural Systems. Wiley; New York: 2014.

- 21. Chialvo DR. Emergent complex neural dynamics. Nat Phys. 2010;6:744–750.

- 22. Mora T, Bialek W. Are biological systems poised at criticality? J Stat Phys. 2011;144:268–302.

- 23. Henkel M, Hinrichsen H, Lübeck S. Non-Equilibrium Phase Transitions: Absorbing Phase Transitions. Theoretical and Mathematical Physics. Springer; London: 2008.

- 24. Marro J, Dickman R. Nonequilibrium Phase Transitions in Lattice Models. Collection Aléa-Saclay. Cambridge Univ Press; Cambridge, UK: 2005.

- 25. Gireesh ED, Plenz D. Neuronal avalanches organize as nested theta- and beta/gamma-oscillations during development of cortical layer 2/3. Proc Natl Acad Sci USA. 2008;105:7576–7581. doi: 10.1073/pnas.0800537105.

- 26. Yang H, Shew WL, Roy R, Plenz D. Maximal variability of phase synchrony in cortical networks with neuronal avalanches. J Neurosci. 2012;32:1061–1072. doi: 10.1523/JNEUROSCI.2771-11.2012.

- 27. Levina A, Herrmann JM, Geisel T. Dynamical synapses causing self-organized criticality in neural networks. Nat Phys. 2007;3:857–860.

- 28. de Arcangelis L, Perrone-Capano C, Herrmann HJ. Self-organized criticality model for brain plasticity. Phys Rev Lett. 2006;96:028107. doi: 10.1103/PhysRevLett.96.028107.

- 29. Millman D, Mihalas S, Kirkwood A, Niebur E. Self-organized criticality occurs in non-conservative neuronal networks during ‘up’ states. Nat Phys. 2010;6:801–805. doi: 10.1038/nphys1757.

- 30. Stepp N, Plenz D, Srinivasa N. Synaptic plasticity enables adaptive self-tuning critical networks. PLoS Comput Biol. 2015;11:e1004043. doi: 10.1371/journal.pcbi.1004043.

- 31. Harnack D, Pelko M, Chaillet A, Chitour Y, van Rossum MCW. Stability of neuronal networks with homeostatic regulation. PLoS Comput Biol. 2015;11:e1004357. doi: 10.1371/journal.pcbi.1004357.

- 32. Markram H, Tsodyks MV. Redistribution of synaptic efficacy between pyramidal neurons. Nature. 1996;382:807–810. doi: 10.1038/382807a0.

- 33. Shin C-W, Kim S. Self-organized criticality and scale-free properties in emergent functional neural networks. Phys Rev E. 2006;74:045101(R). doi: 10.1103/PhysRevE.74.045101.

- 34. Dickman R, Muñoz MA, Vespignani A, Zapperi S. Paths to self-organized criticality. Braz J Phys. 2000;30:27–41.

- 35. Bonachela JA, Muñoz MA. Self-organization without conservation: True or just apparent scale-invariance? J Stat Mech. 2009;2009:P09009.

- 36. Bak P. How Nature Works. Copernicus; New York: 1996.

- 37. Jensen HJ. Self-Organized Criticality: Emergent Complex Behavior in Physical and Biological Systems. Cambridge Univ Press; Cambridge, UK: 1998.

- 38. de Arcangelis L. Are dragon-king neuronal avalanches dungeons for self-organized brain activity? Eur Phys J Spec Top. 2012;205:243–257.

- 39. Bonachela JA, de Franciscis S, Torres JJ, Muñoz MA. Self-organization without conservation: Are neuronal avalanches generically critical? J Stat Mech. 2010;2010:P02015.

- 40. Martinello M, et al. Neutral theory and scale-free neural dynamics. Phys Rev X. 2017;7:041071.

- 41. van Vreeswijk C. Partially synchronized states in networks of pulse-coupled neurons. Phys Rev E. 1996;54:5522–5537.

- 42. Amit DJ, Brunel N. Model of global spontaneous activity and local structured activity during delay periods in the cerebral cortex. Cereb Cortex. 1997;7:237–252. doi: 10.1093/cercor/7.3.237.

- 43. Brunel N. Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J Comput Neurosci. 2000;8:183–208. doi: 10.1023/a:1008925309027.

- 44. Brunel N, Hakim V. Sparsely synchronized neuronal oscillations. Chaos. 2008;18:015113. doi: 10.1063/1.2779858.

- 45. Gautam SH, Hoang TT, McClanahan K, Grady SK, Shew WL. Maximizing sensory dynamic range by tuning the cortical state to criticality. PLoS Comput Biol. 2015;11:e1004576. doi: 10.1371/journal.pcbi.1004576.

- 46. Touboul J, Destexhe A. Power-law statistics and universal scaling in the absence of criticality. Phys Rev E. 2017;95:012413. doi: 10.1103/PhysRevE.95.012413.

- 47. Destexhe A. Self-sustained asynchronous irregular states and up down states in thalamic, cortical and thalamocortical networks of nonlinear integrate-and-fire neurons. J Comput Neurosci. 2009;27:493–506. doi: 10.1007/s10827-009-0164-4.

- 48. Steriade M, Nunez A, Amzica F. A novel slow (<1 Hz) oscillation of neocortical neurons in vivo: Depolarizing and hyperpolarizing components. J Neurosci. 1993;13:3252–3265. doi: 10.1523/JNEUROSCI.13-08-03252.1993.

- 49. Holcman D, Tsodyks M. The emergence of up and down states in cortical networks. PLoS Comput Biol. 2006;2:e23. doi: 10.1371/journal.pcbi.0020023.

- 50. Mejias JF, Kappen HJ, Torres JJ. Irregular dynamics in up and down cortical states. PLoS One. 2010;5:e13651. doi: 10.1371/journal.pone.0013651.

- 51. Stanley HE. Introduction to Phase Transitions and Critical Phenomena. Oxford Univ Press; Oxford: 1987.

- 52. Toner J, Tu Y, Ramaswamy S. Hydrodynamics and phases of flocks. Ann Phys. 2005;318:170–244.

- 53. Martín PV, Bonachela JA, Levin SA, Muñoz MA. Eluding catastrophic shifts. Proc Natl Acad Sci USA. 2015;112:E1828–E1836. doi: 10.1073/pnas.1414708112.

- 54. Buice MA, Cowan JD. Field-theoretic approach to fluctuation effects in neural networks. Phys Rev E. 2007;75:051919. doi: 10.1103/PhysRevE.75.051919.

- 55. Bressloff PC. Metastable states and quasicycles in a stochastic Wilson-Cowan model of neuronal population dynamics. Phys Rev E. 2010;82:051903. doi: 10.1103/PhysRevE.82.051903.

- 56. Bressloff PC. Spatiotemporal dynamics of continuum neural fields. J Phys A Math Theor. 2012;45:033001.

- 57. Kandel ER, Schwartz JH, Jessell TM, Siegelbaum SA, Hudspeth AJ. Principles of Neural Science. Vol 4. McGraw-Hill; New York: 2000.

- 58. Dayan P, Abbott LF. Theoretical neuroscience: Computational and mathematical modeling of neural systems. J Cogn Neurosci. 2003;15:154–155.

- 59. Benayoun M, Cowan JD, van Drongelen W, Wallace E. Avalanches in a stochastic model of spiking neurons. PLoS Comput Biol. 2010;6:e1000846. doi: 10.1371/journal.pcbi.1000846.

- 60. Wilson HR, Cowan JD. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys J. 1972;12:1–24. doi: 10.1016/S0006-3495(72)86068-5.

- 61.Tsodyks MV, Markram H. The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proc Natl Acad Sci USA. 1997;94:719–723. doi: 10.1073/pnas.94.2.719. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Mattia M, Sanchez-Vives MV. Exploring the spectrum of dynamical regimes and timescales in spontaneous cortical activity. Cogn Neurodyn. 2012;6:239–250. doi: 10.1007/s11571-011-9179-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Levina A, Herrmann JM, Geisel T. Phase transitions towards criticality in a neural system with adaptive interactions. Phys Rev Lett. 2009;102:118110. doi: 10.1103/PhysRevLett.102.118110. [DOI] [PubMed] [Google Scholar]

- 64.Deco G, Jirsa VK. Ongoing cortical activity at rest: Criticality, multistability, and ghost attractors. J Neurosci. 2012;32:3366–3375. doi: 10.1523/JNEUROSCI.2523-11.2012.

- 65.Gardiner C. Stochastic Methods: A Handbook for the Natural and Social Sciences. Springer Series in Synergetics. Springer; Berlin: 2009.

- 66.Breakspear M. Dynamic models of large-scale brain activity. Nat Neurosci. 2017;20:340–352. doi: 10.1038/nn.4497.

- 67.Deco G, Jirsa VK, Robinson PA, Breakspear M, Friston K. The dynamic brain: From spiking neurons to neural masses and cortical fields. PLoS Comput Biol. 2008;4:e1000092. doi: 10.1371/journal.pcbi.1000092.

- 68.Sporns O. Networks of the Brain. MIT Press; Cambridge, MA: 2010.

- 69.Torres JJ, Varona P. Modeling biological neural networks. In: Rozenberg G, Bäck T, Kok JN, editors. Handbook of Natural Computing. Springer; Berlin: 2012. pp. 533–564.

- 70.Yu S, Klaus A, Yang H, Plenz D. Scale-invariant neuronal avalanche dynamics and the cut-off in size distributions. PLoS One. 2014;9:e99761.

- 71.Dornic I, Chaté H, Muñoz MA. Integration of Langevin equations with multiplicative noise and the viability of field theories for absorbing phase transitions. Phys Rev Lett. 2005;94:100601. doi: 10.1103/PhysRevLett.94.100601.

- 72.Hobbs JP, Smith JL, Beggs JM. Aberrant neuronal avalanches in cortical tissue removed from juvenile epilepsy patients. J Clin Neurophysiol. 2010;27:380–386. doi: 10.1097/WNP.0b013e3181fdf8d3.

- 73.Lopes da Silva F. Neural mechanisms underlying brain waves: From neural membranes to networks. Electroencephalogr Clin Neurophysiol. 1991;79:81–93. doi: 10.1016/0013-4694(91)90044-5.

- 74.Tabak J, Senn W, O’Donovan MJ, Rinzel J. Modeling of spontaneous activity in developing spinal cord using activity-dependent depression in an excitatory network. J Neurosci. 2000;20:3041–3056. doi: 10.1523/JNEUROSCI.20-08-03041.2000.

- 75.Peng C-K, Havlin S, Stanley HE, Goldberger AL. Quantification of scaling exponents and crossover phenomena in nonstationary heartbeat time series. Chaos. 1995;5:82–87. doi: 10.1063/1.166141.

- 76.Linkenkaer-Hansen K, Nikouline VV, Palva JM, Ilmoniemi RJ. Long-range temporal correlations and scaling behavior in human brain oscillations. J Neurosci. 2001;21:1370–1377. doi: 10.1523/JNEUROSCI.21-04-01370.2001.

- 77.Pikovsky A, Rosenblum M, Kurths J. Synchronization: A Universal Concept in Nonlinear Sciences. Vol 12 Cambridge Univ Press; Cambridge, UK: 2003.

- 78.Jbabdi S, Sotiropoulos SN, Haber SN, Van Essen DC, Behrens TE. Measuring macroscopic brain connections in vivo. Nat Neurosci. 2015;18:1546–1555. doi: 10.1038/nn.4134.

- 79.Okujeni S, Kandler S, Egert U. Mesoscale architecture shapes initiation and richness of spontaneous network activity. J Neurosci. 2017;37:3972–3987. doi: 10.1523/JNEUROSCI.2552-16.2017.

- 80.Moretti P, Muñoz MA. Griffiths phases and the stretching of criticality in brain networks. Nat Commun. 2013;4:2521. doi: 10.1038/ncomms3521.

- 81.Freeman WJ. Mass Action in the Nervous System. Elsevier Science & Technology; New York: 1975.

- 82.David O, Friston KJ. A neural mass model for MEG/EEG: Coupling and neuronal dynamics. NeuroImage. 2003;20:1743–1755. doi: 10.1016/j.neuroimage.2003.07.015.

- 83.El Boustani S, Destexhe A. A master equation formalism for macroscopic modeling of asynchronous irregular activity states. Neural Comput. 2009;21:46–100. doi: 10.1162/neco.2009.02-08-710.

- 84.Gerstner W, Kistler WM, Naud R, Paninski L. Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition. Cambridge Univ Press; Cambridge, UK: 2014.

- 85.Täuber UC. Critical Dynamics: A Field Theory Approach to Equilibrium and Non-Equilibrium Scaling Behavior. Cambridge Univ Press; Cambridge, UK: 2014.

- 86.Pittorino F, Ibáñez Berganza M, di Volo M, Vezzani A, Burioni R. Chaos and correlated avalanches in excitatory neural networks with synaptic plasticity. Phys Rev Lett. 2017;118:098102. doi: 10.1103/PhysRevLett.118.098102.

- 87.Cabral J, Hugues E, Sporns O, Deco G. Role of local network oscillations in resting-state functional connectivity. NeuroImage. 2011;57:130–139. doi: 10.1016/j.neuroimage.2011.04.010.

- 88.Villegas P, Moretti P, Muñoz MA. Frustrated hierarchical synchronization and emergent complexity in the human connectome network. Sci Rep. 2014;4:5990. doi: 10.1038/srep05990.

- 89.Markram H, et al. Reconstruction and simulation of neocortical microcircuitry. Cell. 2015;163:456–492. doi: 10.1016/j.cell.2015.09.029.

Associated Data
