Abstract

The identification of bone lesions is crucial in the diagnostic assessment of multiple myeloma (MM). 68Ga-Pentixafor PET/CT can capture the abnormal molecular expression of CXCR-4 in addition to anatomical changes. However, whole-body detection of dozens of lesions on hybrid imaging is tedious and error prone, and it is even more difficult when the lesions are highly heterogeneous. This study employed deep learning methods to automatically combine the characteristics of PET and CT for 3D whole-body detection of MM bone lesions. Two convolutional neural networks (CNNs), V-Net and W-Net, were adopted to segment and detect the lesions. The feasibility of deep learning for lesion detection on 68Ga-Pentixafor PET/CT was first verified on digital phantoms generated using realistic PET simulation methods. The proposed methods were then evaluated on real 68Ga-Pentixafor PET/CT scans of MM patients. The preliminary results showed that deep learning methods can leverage multimodal information for spatial feature representation, and W-Net obtained the best results for both segmentation and lesion detection. It also outperformed traditional machine learning methods such as random forest (RF), k-nearest neighbors (k-NN), and support vector machine (SVM) classifiers. This proof-of-concept study encourages further development of deep learning approaches for MM lesion detection in population studies.

1. Introduction

Multiple myeloma (MM) is a malignancy accounting for 13% of hematological cancers [1–3]. A characteristic hallmark of MM is the proliferation of malignant plasma cells in the bone marrow [4]. The common symptoms of MM are summarized as CRAB: hypercalcemia (C), renal failure (R), anemia (A), and bone lesions (B). Modern treatments have achieved a 5-year survival rate of 45% [5]. Nevertheless, MM currently remains incurable and usually relapses after a period of remission under therapy. The identification of bone lesions plays an important role in the diagnostic and therapeutic assessment of MM.

Radiographic skeletal survey (whole-body X-ray) has traditionally been used to characterize MM bone lesions. However, it can only display a lesion once more than 30% of the trabecular bone around the focus has been lost, which usually leads to underestimation of the lesion extent [6]. 3D computed tomography (CT) allows the detection of smaller bone lesions that are not visible on conventional radiography [7]. Magnetic resonance imaging (MRI) is also more sensitive than skeletal survey in detecting MM lesions, and it can detect diffuse bone marrow infiltration with good soft-tissue differentiation [8, 9]. Comparably high sensitivity for small bone lesions can be achieved with PET/CT, which combines metabolic (18F-FDG PET) and anatomical (CT) information [10–13]. Lesions are usually visualized more clearly when guided by hotspots in fused images, which can potentially improve the diagnosis and prognosis of MM [14]. Recently, overexpression of the chemokine (C-X-C motif) receptor 4 (CXCR4) has been verified in a variety of cancers, leading to the development of targeted PET tracers such as 68Ga-Pentixafor [15]. This emerging tracer has already demonstrated higher sensitivity in visualizing MM lesions [16, 17].

Even with advanced imaging, challenges remain in the identification of MM bone lesions. Dozens of lesions are commonly spread across the whole body. Manual reading of all these distributed lesions is tedious for physicians, is prone to errors, and can result in large interobserver variations [18]. Metabolic lesion volume is a prognostic index for the interpretation of MM PET images [19], but the lesions must first be identified before characteristic quantities such as the maximum standardized uptake value within the tumor (SUVmax) and the total lesion evaluation (TLE) can be calculated [20, 21].

Computer-aided detection (CAD) has been developed to help radiologists extract critical information from complex data, improving the accuracy and robustness of diagnosis [22–25]. Machine learning is the engine of typical CAD approaches. Several methods have been developed for lesion detection or tumor screening in oncological applications [26, 27], in which lesion and nonlesion regions are differentiated and segmented. In hybrid imaging, PET is characterized by low spatial resolution and CT by low lesion contrast, which makes direct detection or cosegmentation of tumors in both modalities difficult. To address this, a fuzzy locally adaptive Bayesian algorithm was developed for volume determination in PET imaging [28, 29] and later applied to lung tumor delineation. In [30–33], Markov random field (MRF) and graph-cut based methods were integrated to encode shape and context priors into the model. Random-walk-based delineation of regions, combined with object/background seed localization, has also been employed for joint segmentation. In [34], a modality-specific visibility weighting scheme based on a fuzzy connectedness (FC) image segmentation algorithm was proposed to determine the boundary correspondences of lesions across imaging modalities.

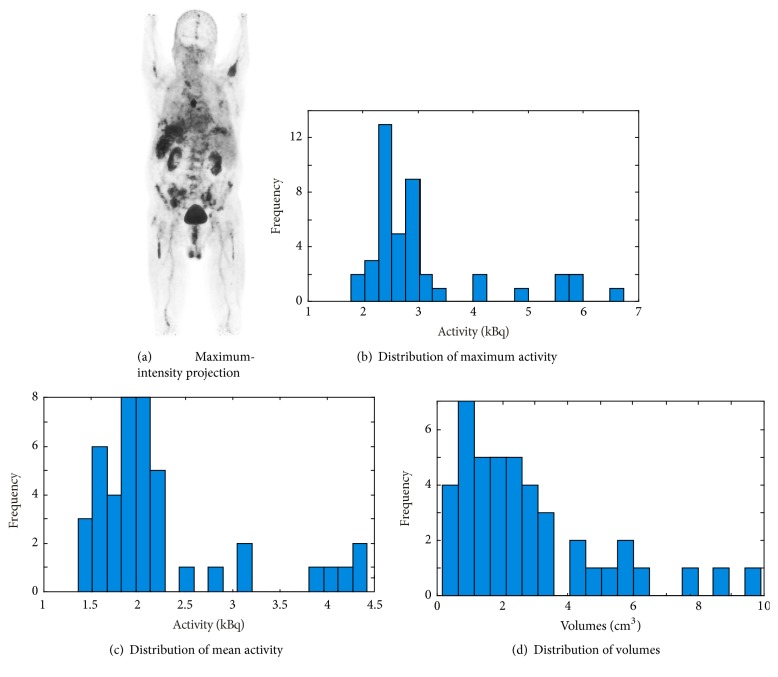

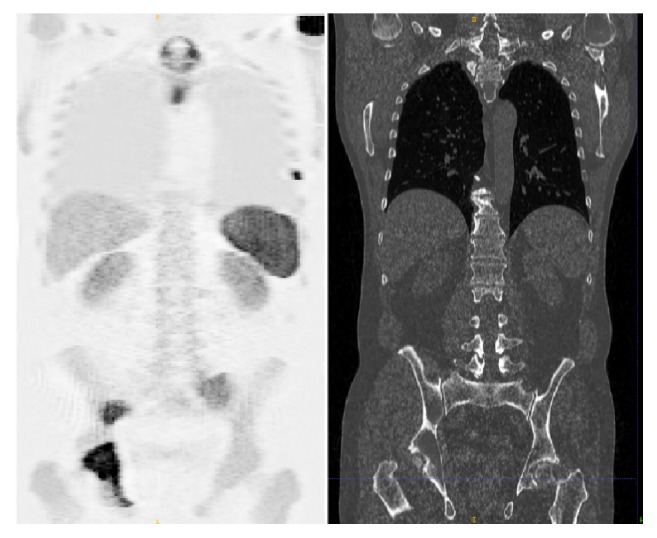

Several methods have been developed to address the detection, classification, and other pathological analyses of multiple myeloma lesions in bone. A virtual calcium subtraction method [35] has been adopted to evaluate MM in the spine. The study in [36] focuses on detecting lesions in the cortical bone of the femur, using a probabilistic, spatially dependent density model to automatically identify bone marrow infiltration in low-dose CT. In [37], semiautomatic software was developed to perform pixel-thresholding-based segmentation for the assessment of bone marrow metabolism, while automatic quantification of bone marrow myeloma volume was conducted in [38]. In [39], the diagnostic performance of conventional radiography (CR) and whole-body low-dose computed tomography (WBLDCT), reconstructed with a hybrid iterative reconstruction technique at a comparable radiation dose, was compared for MM staging. However, none of the above approaches can be directly transferred to the automatic detection of systemic bone lesions on PET imaging. As shown in Figure 1, lesions on 68Ga-Pentixafor PET vary widely in uptake and size, even within the same patient. Such heterogeneity in lesion size and tracer uptake, in a complex context of various nonspecific overexpression, makes whole-body detection of all lesions extremely difficult. To the best of our knowledge, no effective CAD method has been presented for the automated detection of MM bone lesions in the whole body.

Figure 1.

Properties of MM lesions of an exemplary patient with 68Ga-Pentixafor PET imaging: (a) maximum-intensity projection of 68Ga-Pentixafor PET; (b) histogram distribution of maximum activity of the lesions; (c) histogram distribution of mean activity of the lesions; (d) histogram distribution of volumes of the lesions.

Deep learning methods have exceeded both human perception and conventional machine learning methods in extracting useful information from large amounts of data, such as images, in many applications [26, 40, 41]. They have already demonstrated advantages in computerized diagnosis on medical images, such as abnormality detection [42, 43]. Convolutional neural networks (CNNs) are the driving force among the many network architectures, and current state-of-the-art work largely relies on CNNs to address common semantic segmentation and detection tasks [44]. Combining convolutional and deconvolutional layers allows high-level contextual and spatial features to be extracted hierarchically. U-Net offers a 2D CNN framework for segmenting biomedical images, using a contracting path for context extraction and a symmetric expanding path for object localization [45]. V-Net [46] extends this design to 3D and achieves promising results by introducing an objective function based on the Dice coefficient to train the model end-to-end. Similar 3D CNNs have been presented in [47] to learn intermediate features for brain lesion segmentation. In [48], a cascaded CNN was developed to first segment the liver and then the liver lesions.

This paper explores the advantages of cascaded CNNs for lesion prediction and segmentation, with the aim of detecting MM bone lesions in the whole body. For the first time, a deep learning method is developed to combine the anatomical and molecular information of 68Ga-Pentixafor PET/CT imaging to support whole-body lesion identification. Besides employing V-Net, we cascade two enhanced V-Nets to build a W-shaped framework that learns the volumetric feature representation of the skeleton and its lesions from coarse to fine. The whole network requires only minimal preprocessing and no postprocessing. We test the algorithm on 70 digital phantoms generated by realistic simulation of 68Ga-Pentixafor PET images to demonstrate the ability of deep learning architectures to hierarchically extract features and predict MM bone lesions. As a proof of concept, the methods were further evaluated on 12 clinical PET/CT datasets, and the results demonstrate the potential to improve MM bone lesion detection. In addition, we compared the proposed approach with several traditional machine learning methods, including random forest, k-nearest neighbors (k-NN), and support vector machine (SVM) classifiers, a comparison in which the advantages of deep learning are shown even more clearly.

2. Methods and Materials

2.1. Deep Learning Methods: V-Net and W-Net

In this study, we investigate V-Net, a widely used CNN-based deep learning architecture for 3D volumetric image segmentation [46], on CT and PET images. 3D convolutions are performed to extract features from both modalities. The left part of the V-Net is a compression path, in which the resolution is reduced at the end of each stage by using an appropriate stride, while the right part decompresses the signal until its original size is reached. All convolutions are applied with appropriate padding. We stacked PET and CT as the two channels of a combined input volume for lesion segmentation.
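The two-channel PET/CT input can be sketched in NumPy as follows. This is a minimal sketch, assuming PET and CT have already been resampled to the same voxel grid; the function name `stack_pet_ct` and the per-channel z-score normalization are our own assumptions, since the preprocessing is not specified here.

```python
import numpy as np


def stack_pet_ct(pet: np.ndarray, ct: np.ndarray) -> np.ndarray:
    """Stack co-registered PET and CT volumes as two input channels.

    Returns an array of shape (2, D, H, W): channel 0 = PET, channel 1 = CT.
    Per-channel z-score normalization is an assumption of this sketch.
    """
    if pet.shape != ct.shape:
        raise ValueError("PET and CT must be resampled to the same grid")
    channels = []
    for vol in (pet, ct):
        vol = vol.astype(np.float32)
        std = vol.std()
        # Guard against constant volumes to avoid division by zero.
        channels.append((vol - vol.mean()) / (std if std > 0 else 1.0))
    return np.stack(channels, axis=0)
```

The resulting channel-first array is what a 3D network with two input channels would consume; normalizing each modality separately keeps the very different PET (activity) and CT (Hounsfield) value ranges comparable.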

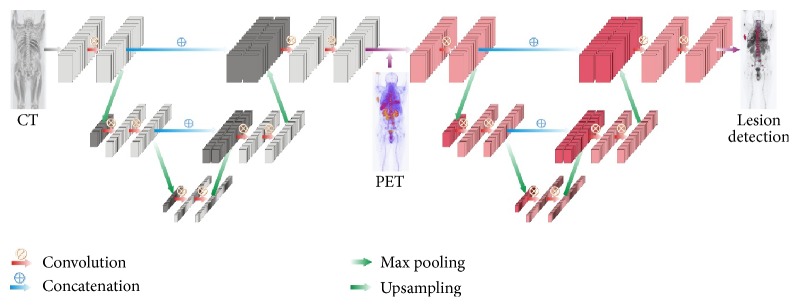

In particular, we cascaded two V-Nets to form a W-Net architecture to improve the segmentation of bone-specific lesions. As illustrated in Figure 2, each V-Net contains a compression (downward) path followed by an approximately symmetric decompression path. The former reduces the volumetric size and broadens the receptive field along the layers, while the latter works the opposite way, expanding the spatial support of the lower-resolution feature maps. For both the contracting and expanding paths, we use 3 × 3 × 3 convolution kernels and a stride of two for max pooling or upsampling. The first V-Net receives only the volumetric CT data in order to learn anatomical knowledge about the bone. Its output is a binary skeleton mask, which adaptively offers a geometric boundary for lesion localization. The second V-Net then takes the PET/CT volumes together with the output of the first network as input, in which PET/CT provides additional feature information to jointly predict the lesions. Because lesions are much smaller than bones, the deeper a network goes, the more fine detail is lost, even when concatenations from the layers in the contracting path are added. We therefore use five layers in the first V-Net of the W-Net and only three layers in the second. The single V-Net architecture also uses three layers.
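The W-Net data flow can be sketched with the two V-Nets treated as black boxes. In this sketch, `wnet_predict`, `bone_net`, `lesion_net`, and the 0.5 binarization threshold are hypothetical; any callable mapping a channel-first volume to a probability map can stand in for a trained V-Net. The helper `stage_sizes` illustrates the depth argument under the assumption that every level below the top halves the resolution: with five levels a 64-voxel edge shrinks to 4 voxels at the bottom, so a lesion only a few voxels wide covers less than one voxel there, whereas three levels stop at 16.

```python
import numpy as np
from typing import Callable, List

# Hypothetical stand-in: a "network" maps a channel-first (C, D, H, W)
# array to a (D, H, W) foreground-probability map.
Net = Callable[[np.ndarray], np.ndarray]


def wnet_predict(ct: np.ndarray, pet: np.ndarray,
                 bone_net: Net, lesion_net: Net,
                 threshold: float = 0.5) -> np.ndarray:
    """Cascade two segmentation networks in the W-Net arrangement.

    Stage 1 sees CT only and produces a skeleton probability map, which is
    binarized (the threshold is an assumption). Stage 2 sees PET, CT and
    the skeleton mask stacked as three channels.
    """
    bone_prob = bone_net(ct[np.newaxis])                   # input (1, D, H, W)
    bone_mask = (bone_prob >= threshold).astype(ct.dtype)  # binary skeleton
    stage2_input = np.stack([pet, ct, bone_mask], axis=0)  # (3, D, H, W)
    return lesion_net(stage2_input)


def stage_sizes(size: int, n_levels: int) -> List[int]:
    """Feature-map edge length at each level, assuming stride-2 downsampling."""
    return [size // 2 ** k for k in range(n_levels)]
```

For a quick check, toy callables such as `lambda x: (x[0] > 0).astype(float)` for the bone stage and `lambda x: x[0] * x[2]` for the lesion stage reproduce the idea that lesion predictions are confined to the skeleton.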

Figure 2.

Overview of a simplified W-Net architecture.

All experiments were implemented in Theano with Python 2.7, and all networks were trained on an NVIDIA TITAN X GPU with 12 GB of memory. We employed 3-fold cross-validation to evaluate the prediction accuracy.
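A patient-level 3-fold split can be sketched as below. Whether the folds were formed per patient or per patch is not stated; splitting by patient, the shuffling seed, and the name `patient_folds` are assumptions of this sketch. With the 12 clinical patients, each fold holds out 4 patients.

```python
import numpy as np


def patient_folds(patient_ids, n_folds=3, seed=0):
    """Split patients (not patches) into n_folds disjoint test folds.

    Returns a list of (train_ids, test_ids) pairs. Splitting at patient
    level keeps patches of one patient from appearing in both the training
    and the test set of the same fold.
    """
    ids = np.array(patient_ids)
    rng = np.random.default_rng(seed)
    shuffled = ids[rng.permutation(len(ids))]
    folds = np.array_split(shuffled, n_folds)
    splits = []
    for k in range(n_folds):
        test = folds[k]
        train = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        splits.append((train.tolist(), test.tolist()))
    return splits
```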

The dataset is highly imbalanced, especially for very small lesions. To better track tiny lesions and to compensate for the different sizes of bone structures, we adopt a weight-balancing strategy similar to that in [48]. For an input volume V and a given voxel i, each label set is binary: for the CT (bone) task, 0 denotes nonbone regions and 1 denotes bone; for the PET (lesion) task, 0 denotes non-myeloma tissue and 1 denotes MM lesions. We define $p(x_i = l \mid V)$ as the probability that voxel i is assigned label l given the volume V, with $l \in \{0, 1\}$. The class-balanced cross-entropy loss L is then defined as follows:

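$$
L = -\frac{1}{N}\sum_{i=1}^{N}\omega_i^{\mathrm{label}}\left[\hat{p}_i\log p_i+\left(1-\hat{p}_i\right)\log\left(1-p_i\right)\right] \tag{1}
$$

In (1), $N$ is the number of voxels, $\hat{p}_i \in \{0, 1\}$ is the ground-truth label of voxel $i$, and $p_i$ is the predicted probability that voxel $i$ belongs to the foreground. The weight $\omega_i^{\mathrm{label}}$ is set inversely proportional to the number of voxels that belong to the label of voxel $i$.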
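Equation (1) with inverse-frequency weights can be sketched in NumPy. This is a minimal sketch, not the original Theano code: the function names are hypothetical, and rescaling the weights to a mean of 1 is our assumption (for a given volume it only multiplies the loss by a constant).

```python
import numpy as np


def inverse_frequency_weights(labels: np.ndarray) -> np.ndarray:
    """Per-voxel weight omega_i, inversely proportional to the number of
    voxels that share voxel i's label, rescaled to a mean of 1."""
    labels = labels.astype(int)
    counts = np.bincount(labels.ravel(), minlength=2).astype(float)
    class_w = np.zeros_like(counts)
    present = counts > 0
    class_w[present] = 1.0 / counts[present]
    w = class_w[labels]
    return w / w.mean()


def weighted_bce(p: np.ndarray, p_hat: np.ndarray, eps: float = 1e-7) -> float:
    """Class-balanced binary cross-entropy of Eq. (1).

    p: predicted foreground probabilities; p_hat: ground-truth labels (0/1).
    """
    p = np.clip(p, eps, 1.0 - eps)  # avoid log(0)
    w = inverse_frequency_weights(p_hat)
    terms = p_hat * np.log(p) + (1.0 - p_hat) * np.log(1.0 - p)
    return float(-np.mean(w * terms))
```

With labels `[0, 0, 0, 1]`, the single foreground voxel receives three times the weight of each background voxel, so both classes contribute equally to the loss.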

In addition, we adopt a patch-based balancing strategy, subsampling the training dataset to narrow the gap between the labels (bone and lesion) and the background. For each patient, we pretrain the network by first extracting patches of size 64 × 64 × 64 across the whole volume with an overlap of 5 voxels in all directions. We then select the top 30 patches per patient volume, ranked by the ratio of label to background voxels in each patch. These selected patches increase the overall label-to-background ratio from 12.35% to 20.16% for the bone label and from 2.54% to 7.01% for the lesion label.
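The overlapping patch grid and the top-30 selection can be sketched as follows. With 64-voxel patches and a 5-voxel overlap, the stride is 59 voxels. Shifting the last patch so that it ends at the volume border, and ranking by the foreground fraction (which orders patches the same way as the label-to-background ratio), are assumptions of this sketch; the names `patch_starts` and `select_patches` are hypothetical.

```python
import numpy as np


def patch_starts(dim: int, patch: int, overlap: int) -> list:
    """Start indices along one axis for patches of length `patch` that
    overlap by `overlap` voxels; the last patch is shifted to end at the border."""
    if dim <= patch:
        return [0]
    stride = patch - overlap
    starts = list(range(0, dim - patch + 1, stride))
    if starts[-1] + patch < dim:
        starts.append(dim - patch)
    return starts


def select_patches(volume, labels, patch=64, overlap=5, top_k=30):
    """Return the top_k (volume_patch, label_patch) pairs ranked by the
    fraction of foreground (label == 1) voxels in each patch."""
    candidates = []
    for z in patch_starts(volume.shape[0], patch, overlap):
        for y in patch_starts(volume.shape[1], patch, overlap):
            for x in patch_starts(volume.shape[2], patch, overlap):
                sl = (slice(z, z + patch), slice(y, y + patch), slice(x, x + patch))
                candidates.append((float(labels[sl].mean()), sl))
    # Highest foreground fraction first.
    candidates.sort(key=lambda c: c[0], reverse=True)
    return [(volume[sl], labels[sl]) for _, sl in candidates[:top_k]]
```

For example, along an axis of 200 voxels the starts are 0, 59, 118, and 136.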

With the class-balancing loss function and the selected patches, we pretrain the V-Net for 1000 iterations. Stochastic gradient descent with a learning rate of 0.001 and a momentum of 0.95 is performed for every 100 iterations. We then fine-tune the network on the entire training set until convergence, using the same setup as in pretraining. This training scheme is employed for both the bone and the lesion segmentation tasks.
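For reference, the classical (heavy-ball) momentum update with the stated hyperparameters is sketched below on a toy quadratic. Whether the original implementation used classical or Nesterov momentum is not stated, so classical momentum is an assumption; `sgd_momentum` is a hypothetical helper.

```python
import numpy as np


def sgd_momentum(grad_fn, w0, lr=0.001, momentum=0.95, n_iter=1000):
    """Classical momentum SGD: v <- mu * v - lr * grad(w); w <- w + v."""
    w = np.array(w0, dtype=float)
    v = np.zeros_like(w)
    for _ in range(n_iter):
        v = momentum * v - lr * grad_fn(w)  # accumulate a velocity
        w = w + v                           # step along the velocity
    return w
```

Minimizing f(w) = ||w||² / 2 (gradient `w`) from `[1.0, -2.0]` drives both coordinates close to zero within 1000 iterations; the high momentum lets the small learning rate still make steady progress.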

The Dice score was calculated to estimate the segmentation accuracy. In addition, the lesionwise detection accuracy (sensitivity, specificity, and precision) was summarized on the segmented results based on patch overlap. Patches of size 9 × 9 × 9 were generated across the lesions with an overlap of 4 voxels in all three directions. A patch was counted as positive (a detected lesion) when at least 10% of its voxels carried lesion labels, and as negative otherwise; precision and the other detection metrics were computed from this patchwise criterion applied to both the annotated ground truth and the predictions.
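The Dice score and the patchwise detection criterion can be sketched as follows, with 9-voxel patches, a 4-voxel overlap (stride 5), and the 10% threshold. The helper names are hypothetical, shifting the last patch to the volume border is an assumption, and returning NaN for an undefined rate (no positive or no negative patches) is our own choice.

```python
import numpy as np


def dice(pred: np.ndarray, gt: np.ndarray) -> float:
    """Voxelwise Dice score 2|A ∩ B| / (|A| + |B|) for binary masks."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    denom = int(pred.sum() + gt.sum())
    if denom == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(2.0 * np.logical_and(pred, gt).sum() / denom)


def _starts(dim: int, patch: int, stride: int) -> list:
    """Patch start indices along one axis; the last patch ends at the border."""
    starts = list(range(0, max(dim - patch, 0) + 1, stride))
    if starts[-1] + patch < dim:
        starts.append(dim - patch)
    return starts


def _rate(num: int, den: int) -> float:
    return num / den if den else float("nan")


def patch_detection_metrics(pred, gt, patch=9, overlap=4, min_frac=0.10):
    """Patchwise sensitivity, specificity and precision.

    A patch is positive if at least `min_frac` of its voxels are lesion
    voxels; the same rule is applied to the prediction and the ground truth.
    """
    stride = patch - overlap
    tp = fp = tn = fn = 0
    for z in _starts(gt.shape[0], patch, stride):
        for y in _starts(gt.shape[1], patch, stride):
            for x in _starts(gt.shape[2], patch, stride):
                sl = (slice(z, z + patch), slice(y, y + patch), slice(x, x + patch))
                g = bool(gt[sl].mean() >= min_frac)
                p = bool(pred[sl].mean() >= min_frac)
                tp += g and p
                fp += (not g) and p
                fn += g and not p
                tn += not g and not p
    return {"sensitivity": _rate(tp, tp + fn),
            "specificity": _rate(tn, tn + fp),
            "precision": _rate(tp, tp + fp)}
```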

2.2. Test on Realistic PET Phantoms

To evaluate the performance of deep learning methods for lesion detection, we generated realistic digital phantoms of 68Ga-Pentixafor PET scans. Digital phantoms based on physical simulations provide a ground truth for in-depth evaluation of algorithm performance [49]. Realistic PET activities extracted from patient data were assigned to a whole-body phantom with CT and anatomical labels such as liver, spleen, and kidney. Bone lesions of various sizes, shapes, and uptakes were generated randomly at different locations in the skeletons of the phantoms to represent a large diversity of lesion presentations. The CT values were modified accordingly, taking the severity of the lesions into account. Realistic PET measurements of the phantoms were simulated with a forward projection model following the procedures described in [50]. This model includes object attenuation as well as a system geometry resembling the characteristics of a real clinical scanner (Siemens Biograph mMR [51]). With this scanner geometry, scattered events within the phantom and detectors were simulated using GATE V6. These events were sorted from the GATE output to form the expected scatter sinogram. In this simulation, 30% scattered and 30% uniformly distributed random events were included, considering the large positron range of Ga-68. Poisson noise was then generated in each sinogram bin. Finally, a set of sinograms (90 bins and 160 projections) was generated with an expected total of 1 million counts. In total, 70 digital phantoms with different lesions were simulated.
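The final noise step of the simulation can be sketched as below. This sketch assumes that the 30% scatter and 30% randoms fractions are shares of the total expected counts, that randoms are uniform over all bins, and that the noise-free true and scatter sinograms are already available (in the study they come from the forward projector and GATE, which are not reproduced here). The sinogram layout `(160, 90)` (projections × bins) and the name `simulate_sinogram` are assumptions.

```python
import numpy as np


def simulate_sinogram(trues, scatter_shape, total_counts=1e6,
                      scatter_frac=0.30, randoms_frac=0.30, seed=0):
    """Combine true, scatter and random components and add Poisson noise.

    `trues` and `scatter_shape` are noise-free sinograms of identical shape,
    e.g. (n_projections, n_bins) = (160, 90). Returns (expected, noisy).
    """
    trues = trues / trues.sum()                      # unit-sum true component
    scatter = scatter_shape / scatter_shape.sum()    # unit-sum scatter component
    randoms = np.full_like(trues, 1.0 / trues.size)  # uniform randoms
    true_frac = 1.0 - scatter_frac - randoms_frac
    expected = total_counts * (true_frac * trues
                               + scatter_frac * scatter
                               + randoms_frac * randoms)
    rng = np.random.default_rng(seed)
    return expected, rng.poisson(expected)           # Poisson noise per bin
```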

2.3. Test on Clinical Data

Twelve patients (3 female and 9 male) with histologically proven primary multiple myeloma were referred for 68Ga-Pentixafor PET/CT imaging (Siemens Biograph mCT 64; Siemens Medical Solutions, Germany). Approximately 90 to 205 MBq of 68Ga-Pentixafor was injected intravenously 1 hour before the scan. A low-dose CT (20 mAs, 120 kV) covering the body from the skull base to the proximal thighs was acquired for attenuation correction. PET emission data were acquired in 3D mode with a 200 × 200 matrix and an emission time of 3 min per bed position. PET data were corrected for decay and scatter and iteratively reconstructed with attenuation correction. This study was approved by the corresponding ethics committees, and all patients gave written informed consent prior to the investigations.

The coregistration of PET and CT was visually inspected using PMOD (PMOD Technologies Ltd., Switzerland). On the fused PET/CT images, all lesions were manually annotated under the supervision of an experienced radiologist, and each lesion was then segmented by local thresholding at half of its maximum.
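Half-maximum thresholding of a single annotated lesion can be sketched as follows. The annotation is represented here by a hypothetical center voxel and cubic search box, and `half_max_segmentation` is a hypothetical name; the sketch does not enforce connectivity, so every voxel in the box at or above 50% of the local maximum is included.

```python
import numpy as np


def half_max_segmentation(pet, center, half_width):
    """Segment one annotated lesion at 50% of its local maximum uptake.

    `center` (z, y, x) and the cubic box of +/- `half_width` voxels stand in
    for the manual annotation. Returns a boolean mask on the full PET grid.
    """
    lo = [max(c - half_width, 0) for c in center]
    hi = [min(c + half_width + 1, s) for c, s in zip(center, pet.shape)]
    box = tuple(slice(a, b) for a, b in zip(lo, hi))
    roi = pet[box]
    mask = np.zeros(pet.shape, dtype=bool)
    mask[box] = roi >= 0.5 * roi.max()  # local half-maximum threshold
    return mask
```

Because the threshold is derived from the local maximum rather than a global value, hot lesions and faint lesions are each delineated relative to their own uptake.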

2.4. Comparison with Traditional Machine Learning Methods

Traditional machine learning methods [52], including random forest, k-NN, and SVM, were employed in this study for comparison with the deep learning methods. Patch-based intensity information was extracted as features for all three algorithms. Multimodal features were obtained by taking the PET and CT intensities in 3 × 3 × 3 patches so that both neighborhood and intensity information are encoded. For training, 2000 lesion samples (patches) and 2000 nonlesion samples were randomly selected from each data volume and normalized to form the feature space. Each sample in the training and test sets was thus represented by a 54-dimensional intensity-based feature vector (27 voxels × 2 modalities). Principal component analysis (PCA) was then applied to reduce the dimensionality to 15, and a grid search with 3-fold cross-validation was used to select the parameters. For random forest, the number of trees n was set to 20. For k-NN, the number of neighbors k was set to 15. For SVM, we chose a linear kernel and set the penalty parameter of the error term C to 0.5.
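The 54-dimensional features and the PCA step can be sketched in NumPy. The z-score normalization and the SVD-based PCA are assumptions about the unspecified implementation, and the function names are hypothetical. The reduced features could then be passed to, for example, scikit-learn's `RandomForestClassifier(n_estimators=20)`, `KNeighborsClassifier(n_neighbors=15)`, or `SVC(kernel="linear", C=0.5)`; the library actually used is not stated.

```python
import numpy as np


def patch_features(pet, ct, centers, r=1):
    """54-dim feature per voxel: its 3x3x3 PET and CT neighbourhoods
    (27 + 27 intensities). Centers must lie at least r voxels from the border."""
    feats = []
    for z, y, x in centers:
        sl = (slice(z - r, z + r + 1), slice(y - r, y + r + 1), slice(x - r, x + r + 1))
        feats.append(np.concatenate([pet[sl].ravel(), ct[sl].ravel()]))
    return np.asarray(feats, dtype=float)


def pca_reduce(X, n_components=15):
    """Z-score each feature, then project onto the top principal components."""
    std = X.std(axis=0)
    Xn = (X - X.mean(axis=0)) / np.where(std > 0, std, 1.0)
    # Rows of Vt are principal directions, ordered by decreasing variance.
    _, _, Vt = np.linalg.svd(Xn, full_matrices=False)
    return Xn @ Vt[:n_components].T
```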

3. Results and Discussions

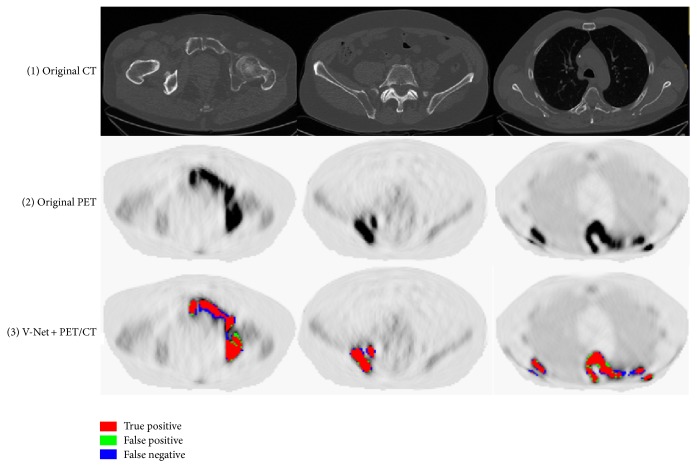

An exemplary coronal slice of simulated 68Ga-Pentixafor PET and the corresponding CT scan is shown in Figure 3. The simulated PET images are visually similar to real PET measurements. Detection results on 3 axial slices from different body regions are shown in Figure 4, and the test results on the 70 realistic digital phantoms are listed in Table 1. V-Net achieves a specificity as high as 99.68% and a Dice score of 89.26%, which indicates that deep learning methods have the potential to detect whole-body MM lesions.

Figure 3.

Synthetic PET data generated from a digital phantom and the corresponding CT scan.

Figure 4.

Exemplary detection results of phantom study: (1) the original axial CT slices; (2) the corresponding PET slices; (3) MM lesion prediction using V-Net.

Table 1.

Experimental results of the phantom study on synthetic PET/CT using V-Net, random forest (RF), k-nearest neighbors (k-NN), and support vector machine (SVM).

| Performance (%) | Sensitivity | Specificity | Precision | Dice |
|---|---|---|---|---|
| V-Net | 89.71 | 99.68 | 88.82 | 89.26 |
| RF with n = 20 | 99.16 | 89.49 | 12.18 | 21.69 |
| k-NN with k = 15 | 98.52 | 90.38 | 12.41 | 23.09 |
| SVM with C = 0.5 | 98.76 | 92.15 | 15.60 | 26.94 |

The comparisons between V-Nets and W-Net on the clinical dataset are summarized in Table 2. Among the deep learning methods, combining PET and CT in V-Net improves the Dice score (69.49%) compared with V-Net using CT alone (26.37%) or PET alone (28.51%). For lesionwise accuracy, V-Net with combined PET/CT achieves higher specificity (99.51%) than V-Net using CT (94.43%) or PET (96.04%), but its sensitivity (71.06%) is lower than that of V-Net with CT alone (73.18%). Thus, for V-Net, combining CT and PET improves lesion segmentation but brings little benefit to sensitivity. A possible reason is that CT depicts anatomical structure well and is sensitive to the structural abnormalities caused by lesions, so adding PET to CT does not increase the chance of capturing such features.

Table 2.

Experimental results of V-Nets and W-Net for MM bone lesion detection. Best results are shown in italics.

| Performance (%) | Sensitivity | Specificity | Precision | Dice |
|---|---|---|---|---|
| V-Net + CT | 73.18 | 94.43 | 16.08 | 26.37 |
| V-Net + PET | 61.77 | 96.04 | 18.53 | 28.51 |
| V-Net + PET/CT | 71.06 | 99.51 | 68.00 | 69.49 |
| W-Net + PET/CT | *73.50* | *99.59* | *72.46* | *72.98* |

W-Net, which also combines PET and CT, reaches the highest segmentation accuracy (Dice score 72.98%) and lesion detection accuracy (sensitivity 73.50%, specificity 99.59%, and precision 72.46%). Unlike V-Net, W-Net processes the CT and PET information separately, and the extracted CT skeleton can act as a form of regularization. Making fuller use of the available information improves both segmentation and lesion detection. However, despite the extra computational cost of an additional V-Net, the more sophisticated W-Net improves sensitivity, precision, and Dice only slightly (by roughly 2.4 to 4.5 percentage points) compared with V-Net using PET/CT input. There are two possible explanations. First, the hybrid input already contains anatomical information, which the single V-Net encodes and learns as important features. Second, the overall performance of the W-Net may be limited by the first V-Net: if the skeleton mask is labeled incorrectly, its segmentation errors propagate to the second V-Net and degrade the subsequent lesion detection. Further improvement of the individual V-Nets may therefore improve the overall performance. In addition, all methods achieve a high specificity (true negative rate) of more than 90%, which demonstrates that the deep learning methods can properly exclude nonlesion regions.

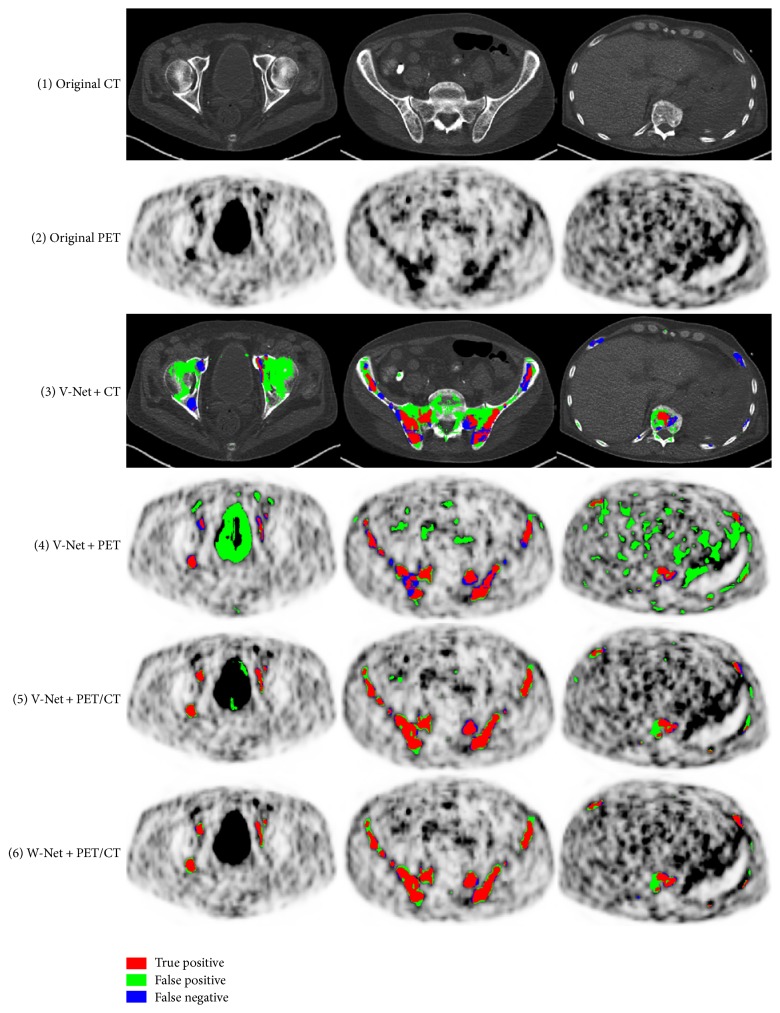

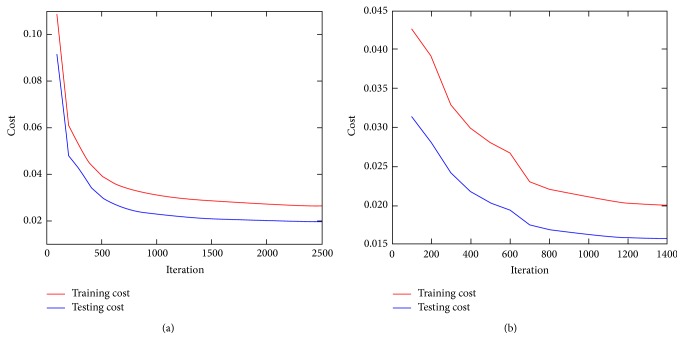

Exemplary detection results on 3 axial slices from different body regions using the deep learning methods are visualized in Figure 5, where false positives and false negatives are marked for in-depth comparison. False negatives typically occur when a lesion is small and its contrast is too low to reveal its presence. False positives are largely intensity driven: regions of nonspecific high tracer uptake are mistaken for lesions. V-Net with CT or PET alone predicted lesions with low accuracy, producing many false negatives and false positives. V-Net with hybrid PET/CT input can learn features from both modalities and largely avoids false positive predictions. In W-Net, the binary skeleton mask is forwarded to the second V-Net together with the PET/CT volumes. W-Net therefore imposes additional geometric, anatomical constraints and reduces the probability of assigning wrong lesion labels, which further improves detection performance compared with V-Net using the same input. The convergence curves of the different networks are shown in Figure 6.

Figure 5.

Exemplary detection results of V-Nets and W-Net: (1) the original axial CT slices; (2) the corresponding PET slices; (3) MM lesion prediction using CT alone in V-Net; (4) MM lesion prediction using PET alone in V-Net; (5) MM lesion prediction using PET/CT in V-Net; (6) MM lesion detection using W-Net.

Figure 6.

Convergence curves of the different network architectures: (a) W-Net; (b) V-Net.

The results of the conventional machine learning methods (RF, k-NN, and SVM) on the phantom data are shown in Table 1. All traditional methods achieve comparably good sensitivity and specificity, but their precision is low (12.18% to 15.60%). This is largely a consequence of class imbalance: because nonlesion regions vastly outnumber lesions, even a false positive rate of about 8% to 11% yields many more false positives than true positives. In terms of exact voxelwise classification, random forest obtains a Dice score of 21.69%, k-NN 23.09%, and SVM 26.94%. The better performance of SVM compared with random forest and k-NN indicates that the SVM hyperplane separates nonlesion from lesion regions more effectively than the other two methods. For random forest, a possible explanation is that bone lesions occupy only a small fraction of the whole body compared with healthy tissue, so the lesion data are rather sparse, which hinders its performance; SVM, in contrast, tends to cope better with such sparse data. For k-NN, the reason may be that a plain Euclidean distance between the features of a test voxel and the training samples does not adequately capture the similarity of bone lesions spread throughout the body.
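The effect of class imbalance on precision can be made concrete by computing the positive predictive value from sensitivity, specificity, and the fraction of positive samples. The helper name `precision_from_rates` is hypothetical, and the 2% prevalence used below is purely illustrative, as the positive fraction of the phantom patches is not reported.

```python
def precision_from_rates(sensitivity, specificity, prevalence):
    """Expected precision (positive predictive value) given the true positive
    rate, the true negative rate and the fraction of positive samples."""
    tp = sensitivity * prevalence                # expected true positives
    fp = (1.0 - specificity) * (1.0 - prevalence)  # expected false positives
    return tp / (tp + fp)
```

With the RF rates from Table 1 (`precision_from_rates(0.9916, 0.8949, 0.02)`), this gives a precision of roughly 0.16, while V-Net's rates (`0.8971`, `0.9968`) give roughly 0.85 at the same prevalence, mirroring the gap between the methods in Table 1.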

For the first time, this study proposed a deep learning method to automatically detect and segment whole-body MM bone lesions on CXCR-4 PET/CT imaging. It can efficiently leverage the information contained in multimodal imaging instead of relying on handcrafted features, which are difficult to identify or to reach consensus on. However, the current study is limited by the small number of patients. Although we augmented the number of training samples by generating patches, the performance of the deep learning methods is still hampered. Nevertheless, this exploratory study demonstrated the potential of deep learning methods to combine multimodal information for lesion detection, and the preliminary results support their further development for whole-body lesion detection. As CXCR-4 imaging and therapy evolve in clinical practice, more subjects will be enrolled for testing, and the performance of deep learning is expected to improve as more data become available. Moreover, this proof-of-concept study focuses only on the detection of multiple myeloma bone lesions; lesions outside the bone are not considered. This is a limitation for multiple myeloma patients, who may have extramedullary lesions. The W-Net architecture can be naturally extended to detect lesions outside the bone by incorporating labels for other tissue types and lesions. However, this requires sufficient data for training and testing to converge, which may be achieved with an increased number of subjects.

4. Conclusion

This study proposed the first computer-aided approach for MM bone lesion detection on whole-body 68Ga-Pentixafor PET/CT imaging. It explored two deep learning architectures, namely V-Net and W-Net, for lesion segmentation and detection. The deep learning methods can efficiently combine the information in multimodal imaging and do not require the extraction of handcrafted features that are difficult to identify with regard to intermodality characteristics. We demonstrated the feasibility of deep learning methods through a realistic digital phantom study. Traditional machine learning methods were also compared to further confirm the advantage of deep learning approaches in handling lesion heterogeneity. The preliminary results, based on a limited amount of data, support the W-Net, which incorporates additional skeletal information as a kind of regularization for MM bone lesion detection. Increasing the amount of data may further enhance the performance of the proposed deep learning method. This study is a step towards an automated tool for the management of multiple myeloma.

Acknowledgments

This work was supported by the China Scholarship Council under Grant no. 201306890013. This work was also supported by the German Research Foundation (DFG) and the Technical University of Munich (TUM) in the framework of the Open Access Publishing Program. The authors gratefully acknowledge NVIDIA Corporation for the donation of the Titan X Pascal GPU used for this research.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Authors' Contributions

Lina Xu, Giles Tetteh, Kuangyu Shi, and Bjoern H. Menze contributed equally to the article.

References

- 1.Terpos E., Kanellias N. Practical Considerations for Bone Health in Multiple MyelomaPersonalized Therapy for Multiple Myeloma. Springer; 2018. [DOI] [Google Scholar]

- 2.Terpos E., Dimopoulos M.-A. Myeloma bone disease: Pathophysiology and management. Annals of Oncology. 2005;16(8):1223–1231. doi: 10.1093/annonc/mdi235. [DOI] [PubMed] [Google Scholar]

- 3.Collins C. D. Problems monitoring response in multiple myeloma. Cancer Imaging. 2005;5(special issue A):S119–S126. doi: 10.1102/1470-7330.2005.0033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Callander N. S., Roodman G. D. Myeloma bone disease. Seminars in Hematology. 2001;38(3):276–285. doi: 10.1053/shem.2001.26007. [DOI] [PubMed] [Google Scholar]

- 5.Lütje S., Rooy J. W. J., Croockewit S., Koedam E., Oyen W. J. G., Raymakers R. A. Role of radiography, MRI and FDG-PET/CT in diagnosing, staging and therapeutical evaluation of patients with multiple myeloma. Annals of Hematology. 2009;88(12):1161–1168. doi: 10.1007/s00277-009-0829-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Dimopoulos M., Terpos E., Comenzo R. L., et al. International myeloma working group consensus statement and guidelines regarding the current role of imaging techniques in the diagnosis and monitoring of multiple Myeloma. Leukemia. 2009;23(9):1545–1556. doi: 10.1038/leu.2009.89. [DOI] [PubMed] [Google Scholar]

- 7.Horger M., Claussen C. D., Bross-Bach U., et al. Whole-body low-dose multidetector row-CT in the diagnosis of multiple myeloma: an alternative to conventional radiography. European Journal of Radiology. 2005;54(2):289–297. doi: 10.1016/j.ejrad.2004.04.015. [DOI] [PubMed] [Google Scholar]

- 8.Dutoit J. C., Verstraete K. L. MRI in multiple myeloma: a pictorial review of diagnostic and post-treatment findings. Insights into Imaging. 2016;7(4):553–569. doi: 10.1007/s13244-016-0492-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Dimopoulos M. A., Hillengass J., Usmani S., et al. Role of magnetic resonance imaging in the management of patients with multiple myeloma: a consensus statement. Journal of Clinical Oncology. 2015;33(6):657–664. doi: 10.1200/jco.2014.57.9961. [DOI] [PubMed] [Google Scholar]

- 10.Van Lammeren-Venema D., Regelink J. C., Riphagen I. I., Zweegman S., Hoekstra O. S., Zijlstra J. M. 18F-fluoro-deoxyglucose positron emission tomography in assessment of myeloma-related bone disease: a systematic review. Cancer. 2012;118(8):1971–1981. doi: 10.1002/cncr.26467. [DOI] [PubMed] [Google Scholar]

- 11.Nakamoto Y. Clinical contribution of PET/CT in myeloma: from the perspective of a radiologist. Clinical Lymphoma, Myeloma & Leukemia. 2014;14(1):10–11. doi: 10.1016/j.clml.2013.12.005. [DOI] [PubMed] [Google Scholar]

- 12.Healy C. F., Murray J. G., Eustace S. J., Madewell J., O'Gorman P. J., O'Sullivan P. Multiple myeloma: a review of imaging features and radiological techniques. Bone Marrow Research. 2011;2011:1–9. doi: 10.1155/2011/583439. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Cavo M., Terpos E., Nanni C., et al. Role of 18 F-FDG PET/CT in the diagnosis and management of multiple myeloma and other plasma cell disorders: a consensus statement by the International Myeloma Working Group. The Lancet Oncology. 2017;18(4):e206–e217. doi: 10.1016/S1470-2045(17)30189-4. [DOI] [PubMed] [Google Scholar]

- 14.Moreau P., Attal M., Caillot D., et al. Prospective evaluation of magnetic resonance imaging and [18F] fluorodeoxyglucose positron emission tomography-computed tomography at diagnosis and before maintenance therapy in symptomatic patients with multiple myeloma included in the IFM/DFCI 2009 trial: results of the IMAJEM study. Journal of Clinical Oncology. 2017;35(25):2911–2918. doi: 10.1200/JCO.2017.72.2975.

- 15.Vag T., Gerngross C., Herhaus P., et al. First experience with chemokine receptor CXCR4-targeted PET imaging of patients with solid cancers. Journal of Nuclear Medicine. 2016;57(5):741–746. doi: 10.2967/jnumed.115.161034.

- 16.Philipp-Abbrederis K., Herrmann K., Knop S., et al. In vivo molecular imaging of chemokine receptor CXCR4 expression in patients with advanced multiple myeloma. EMBO Molecular Medicine. 2015;7(4):477–487. doi: 10.15252/emmm.201404698.

- 17.Lapa C., Schreder M., Schirbel A., et al. [68Ga]Pentixafor-PET/CT for imaging of chemokine receptor CXCR4 expression in multiple myeloma—comparison to [18F]FDG and laboratory values. Theranostics. 2017;7(1):205–212. doi: 10.7150/thno.16576.

- 18.Belton A. L., Saini S., Liebermann K., Boland G. W., Halpern E. F. Tumour size measurement in an oncology clinical trial: comparison between off-site and on-site measurements. Clinical Radiology. 2003;58(4):311–314. doi: 10.1016/S0009-9260(02)00577-9.

- 19.Fonti R., Larobina M., Del Vecchio S., et al. Metabolic tumor volume assessed by 18F-FDG PET/CT for the prediction of outcome in patients with multiple myeloma. Journal of Nuclear Medicine. 2012;53(12):1829–1835. doi: 10.2967/jnumed.112.106500.

- 20.Foster B., Bagci U., Mansoor A., Xu Z., Mollura D. J. A review on segmentation of positron emission tomography images. Computers in Biology and Medicine. 2014;50:76–96. doi: 10.1016/j.compbiomed.2014.04.014.

- 21.Bagci U., Udupa J. K., Mendhiratta N., et al. Joint segmentation of anatomical and functional images: applications in quantification of lesions from PET, PET-CT, MRI-PET, and MRI-PET-CT images. Medical Image Analysis. 2013;17(8):929–945. doi: 10.1016/j.media.2013.05.004.

- 22.Taylor A. T., Garcia E. V. Computer-assisted diagnosis in renal nuclear medicine: Rationale, methodology, and interpretative criteria for diuretic renography. Seminars in Nuclear Medicine. 2014;44(2):146–158. doi: 10.1053/j.semnuclmed.2013.10.007.

- 23.Petrick N., Sahiner B., Armato S. G., III, et al. Evaluation of computer-aided detection and diagnosis systems. Medical Physics. 2013;40(8):087001. doi: 10.1118/1.4816310.

- 24.Gilbert F. J., Astley S. M., Gillan M. G. C., et al. Single reading with computer-aided detection for screening mammography. The New England Journal of Medicine. 2008;359(16):1675–1684. doi: 10.1056/nejmoa0803545.

- 25.Eadie L. H., Taylor P., Gibson A. P. A systematic review of computer-assisted diagnosis in diagnostic cancer imaging. European Journal of Radiology. 2012;81(1):e70–e76. doi: 10.1016/j.ejrad.2011.01.098.

- 26.Shin H., Roth H. R., Gao M., et al. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Transactions on Medical Imaging. 2016;35(5):1285–1298. doi: 10.1109/tmi.2016.2528162.

- 27.Kourou K., Exarchos T. P., Exarchos K. P., Karamouzis M. V., Fotiadis D. I. Machine learning applications in cancer prognosis and prediction. Computational and Structural Biotechnology Journal. 2015;13:8–17. doi: 10.1016/j.csbj.2014.11.005.

- 28.Hatt M., Cheze-Le Rest C., Turzo A., Roux C., Visvikis D. A fuzzy locally adaptive Bayesian segmentation approach for volume determination in PET. IEEE Transactions on Medical Imaging. 2009;28(6):881–893. doi: 10.1109/tmi.2008.2012036.

- 29.Hatt M., Cheze-Le Rest C., Van Baardwijk A., Lambin P., Pradier O., Visvikis D. Impact of tumor size and tracer uptake heterogeneity in 18F-FDG PET and CT non-small cell lung cancer tumor delineation. Journal of Nuclear Medicine. 2011;52(11):1690–1697. doi: 10.2967/jnumed.111.092767.

- 30.Song Q., Chen M., Bai J., Sonka M., Wu X. Surface–region context in optimal multi-object graph-based segmentation: robust delineation of pulmonary tumors. Proceedings of Information Processing in Medical Imaging (IPMI '11); 2011; Berlin, Heidelberg, Germany: Springer; pp. 61–72. (Lecture Notes in Computer Science, vol. 6801).

- 31.Song Q., Bai J., Han D., et al. Optimal Co-segmentation of tumor in PET-CT images with context information. IEEE Transactions on Medical Imaging. 2013;32(9):1685–1697. doi: 10.1109/TMI.2013.2263388.

- 32.Song Q., Bai J., Garvin M. K., Sonka M., Buatti J. M., Wu X. Optimal multiple surface segmentation with shape and context priors. IEEE Transactions on Medical Imaging. 2013;32(2):376–386. doi: 10.1109/TMI.2012.2227120.

- 33.Ju W., Xiang D., Zhang B., Wang L., Kopriva I., Chen X. Random walk and graph cut for co-segmentation of lung tumor on PET-CT images. IEEE Transactions on Image Processing. 2015;24(12):5854–5867. doi: 10.1109/TIP.2015.2488902.

- 34.Xu Z., Bagci U., Udupa J. K., Mollura D. J. Fuzzy connectedness image co-segmentation for hybrid PET/MRI and PET/CT scans. Proceedings of the CMMI-MICCAI; 2015; Springer; pp. 15–24.

- 35.Thomas C., Schabel C., Krauss B., et al. Dual-energy CT: Virtual calcium subtraction for assessment of bone marrow involvement of the spine in multiple myeloma. American Journal of Roentgenology. 2015;204(3):W324–W331. doi: 10.2214/AJR.14.12613.

- 36.Martínez-Martínez F., Kybic J., Lambert L., Mecková Z. Fully automated classification of bone marrow infiltration in low-dose CT of patients with multiple myeloma based on probabilistic density model and supervised learning. Computers in Biology and Medicine. 2016;71:57–66. doi: 10.1016/j.compbiomed.2016.02.001.

- 37.Leydon P., O'Connell M., Greene D., Curran K. Semi-automatic Bone Marrow Evaluation in PETCT for Multiple Myeloma. Annual Conference on Medical Image Understanding and Analysis. Springer; 2017.

- 38.Nishida Y., Kimura S., Mizobe H., et al. Automatic digital quantification of bone marrow myeloma volume in appendicular skeletons—clinical implications and prognostic significance. Scientific Reports. 2017;7(1):12885. doi: 10.1038/s41598-017-13255-w.

- 39.Lambert L., Ourednicek P., Meckova Z., Gavelli G., Straub J., Spicka I. Whole-body low-dose computed tomography in multiple myeloma staging: superior diagnostic performance in the detection of bone lesions, vertebral compression fractures, rib fractures and extraskeletal findings compared to radiography with similar radiation exposure. Oncology Letters. 2017;13(4):2490–2494. doi: 10.3892/ol.2017.5723.

- 40.Silver D., Huang A., Maddison C. J., et al. Mastering the game of Go with deep neural networks and tree search. Nature. 2016;529(7587):484–489. doi: 10.1038/nature16961.

- 41.LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539.

- 42.Tajbakhsh N., Gotway M. B., Liang J. Computer-aided pulmonary embolism detection using a novel vessel-aligned multi-planar image representation and convolutional neural networks. Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI '15); 2015; Springer; pp. 62–69.

- 43.Roth H. R., Lu L., Liu J. Improving computer-aided detection using convolutional neural networks and random view aggregation. IEEE Transactions on Medical Imaging. 2015;35(5):1170–1181. doi: 10.1109/TMI.2015.2482920.

- 44.Long J., Shelhamer E., Darrell T. Fully convolutional networks for semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR '15); June 2015; Boston, MA, USA. IEEE; pp. 3431–3440.

- 45.Ronneberger O., Fischer P., Brox T. U-net: convolutional networks for biomedical image segmentation. Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI '15); November 2015; pp. 234–241.

- 46.Milletari F., Navab N., Ahmadi S.-A. V-Net: fully convolutional neural networks for volumetric medical image segmentation. Proceedings of the 4th International Conference on 3D Vision (3DV '16); October 2016; IEEE; pp. 565–571.

- 47.Kamnitsas K., Ledig C., Newcombe V. F. J., et al. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Medical Image Analysis. 2017;36:61–78. doi: 10.1016/j.media.2016.10.004.

- 48.Christ P. F., Elshaer M. E. A., Ettlinger F., et al. Automatic liver and lesion segmentation in CT using cascaded fully convolutional neural networks and 3D conditional random fields. Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI '16); October 2016; Springer; pp. 415–423.

- 49.Gavrielides M. A., Kinnard L. M., Myers K. J., et al. A resource for the assessment of lung nodule size estimation methods: Database of thoracic CT scans of an anthropomorphic phantom. Optics Express. 2010;18(14):15244–15255. doi: 10.1364/OE.18.15244.

- 50.Cheng X., Li Z., Liu Z., et al. Direct parametric image reconstruction in reduced parameter space for rapid multi-tracer PET imaging. IEEE Transactions on Medical Imaging. 2015;34(7):1498–1512. doi: 10.1109/TMI.2015.2403300.

- 51.Delso G., Fürst S., Jakoby B., et al. Performance measurements of the Siemens mMR integrated whole-body PET/MR scanner. Journal of Nuclear Medicine. 2011;52(12):1914–1922. doi: 10.2967/jnumed.111.092726.

- 52.Anbeek P., Vincken K. L., Viergever M. A. Automated MS-lesion segmentation by k-nearest neighbor classification. MIDAS Journal. 2008.