Key Points

Question

Is diagnosis from whole-slide images (WSI) noninferior to diagnosis from glass slides for cutaneous specimens?

Findings

This large retrospective study of 499 representative dermatopathology cases found 94% intraobserver concordance between WSI and traditional microscopy (TM). Diagnosis from WSI was found statistically noninferior to diagnosis from glass slides compared with ground truth consensus diagnosis.

Meaning

In most cases, we found diagnosis from WSI noninferior to TM. The WSI method approached, but did not achieve, noninferiority for the subset of melanocytic lesions. Discordance in TM diagnosis of this challenging group is broadly recognized and further investigation of melanocytic neoplasms is recommended.

This study compares diagnostic accuracy using whole-slide images with diagnostic accuracy using traditional microscopy for cutaneous diseases.

Abstract

Importance

Digital pathology is a transformative technology that affects dermatologists and dermatopathologists from residency through academic and private practice. Two principal concerns are the accuracy of interpretation from whole-slide images (WSI) and the effect on workflow. Large studies focused on a single organ system are lacking.

Objective

To evaluate whether diagnosis from WSI on a digital microscope is inferior to diagnosis from glass slides by traditional microscopy (TM) in a large cohort of dermatopathology cases, with attention to image resolution (specifically, eosinophils in inflammatory cases and mitotic figures in melanomas), and to measure the workflow efficiency of WSI compared with TM.

Design, Setting, and Participants

Three dermatopathologists established an interobserver ground truth consensus (GTC) diagnosis for 499 previously diagnosed cases proportionally representing the spectrum of diagnoses seen in the laboratory. Cases were distributed to 3 different dermatopathologists, who diagnosed them by both WSI and TM with a minimum 30-day washout between methods. Intraobserver WSI/TM diagnoses were compared, followed by interobserver comparison with GTC. Concordance, major discrepancies, and minor discrepancies were calculated and analyzed by paired noninferiority testing. We also measured pathologists’ read rates to compare the workflow efficiency of WSI and TM. This retrospective study was carried out in an independent, national, university-affiliated dermatopathology laboratory.

Main Outcomes and Measures

Intraobserver concordance of diagnoses between the WSI and TM methods, and interobserver variance from GTC, following College of American Pathologists guidelines.

Results

Mean intraobserver concordance between WSI and TM was 94%. Mean interobserver concordance with GTC was 94% for both WSI and TM, and mean interobserver concordance among WSI, TM, and GTC was 91%. Diagnoses from WSI were noninferior to those from TM. Whole-slide image read rates improved with WSI experience, and the most experienced user achieved parity with TM.

Conclusions and Relevance

Diagnosis from WSI was found noninferior to diagnosis from glass slides using TM in this statistically powerful study of 499 dermatopathology cases. This study supports the viability of WSI for primary diagnosis in the clinical setting.

Introduction

Whole-slide images (WSI) are virtual slides produced by digitally scanning conventional glass slides. Advantages of WSI over traditional microscopy (TM) are found in training and education, tumor boards, research, frozen tissue diagnosis, permanent archiving, teleconsultation, collaboration, inclusion in patient electronic medical records, and quality assurance testing. Whole-slide images permit manual and computer-aided quantitative image analysis of diagnostic and prognostic protein and genetic biomarkers, with several algorithms having US Food and Drug Administration (FDA) approval for clinical use. Whole-slide image biorepositories provide for the development of pattern recognition algorithms as a diagnostic tool. Digital WSI allow annotation, insertion of comments, and enhanced accessibility to specialists, especially from geographically remote locations.

The ability to diagnose from WSI is uniquely pertinent to dermatology, where residency curricula require training and competence that qualifies dermatologists to interpret their own dermatopathology specimens. Moreover, dermatopathology is ideal for WSI diagnosis owing to the relatively small specimen size, low slide counts, and overall higher case volume found in the specialty.

Although WSI are accepted adjunctively in various clinical applications and in several specific tests, the lack of commonly accepted standards for validation studies has impeded acceptance of WSI for primary diagnosis in the United States. However, after years of scrutiny, the FDA announced on April 12, 2017, approval of the first WSI system for primary diagnosis. We interpret this decision as a major step toward widespread adoption of digital pathology in many workflow settings and anticipate that additional WSI systems will receive FDA approval in the near future. In the interim, individual laboratories may validate WSI systems for their own use as laboratory-developed tests.

A further concern for acceptance of WSI for primary diagnosis is the considerable variability among organ system subdisciplines, which may require system-by-system validation. A number of studies have evaluated concordance between WSI and TM; however, they are often small, lack subspecialty specificity, and are dominated by interobserver rather than intraobserver validation methods. More recent investigations have included dermatopathology-specific cases and emphasized intraobserver comparisons. Similar studies have appeared outside the dermatologic literature.

Here, we investigate intraobserver concordance of diagnosis from WSI compared with TM in a large cohort of 499 cases proportionally representing the spectrum of diagnoses encountered in our high-volume dermatopathology laboratory. We compare these diagnoses with an interobserver ground truth consensus (GTC) diagnosis. We tested the hypotheses that interpretation from WSI is noninferior to that from TM and that the discordance observed is no greater than the inherent variability in diagnosis among pathologists using TM.

We also include an efficiency study comparing WSI and TM read rates among pathologists with different levels of WSI experience.

Methods

Before the study, institutional review board (IRB) approval was sought, and the study was deemed exempt by the IRB at the Boonshoft School of Medicine, Wright State University. Whole-slide images were viewed, diagnosed, and reported using a patented, in-house–developed laboratory information system (LIS) platform (Clearpath). The WSI system had previously been validated for primary diagnosis of hematoxylin-eosin (H&E)–stained slides per College of American Pathologists (CAP) guidelines. Special stains and immunohistochemical stains were not included in this study because each stain requires separate validation.

Case Selection

A total of 505 cases, proportionally representing the 30 most frequent diagnoses (approximately 95% of all diagnoses reported in our laboratory), were selected sequentially from cases received in the 3 months before the study began. Eight additional melanomas (2 in situ and 6 invasive) were added, for a total of 15 melanomas. Thus, 513 cases were initially considered for the study, reflecting the spectrum and complexity of specimen types and diagnoses encountered in routine practice.

Ground Truth Consensus

Case materials were deidentified and entered into a database that included a reference number, patient demographics, available clinical information, and the original diagnosis. Glass slides and data, including the original diagnosis, were reviewed simultaneously on a multiheaded microscope by 3 board-certified dermatopathologists (T.O., S.S., and M.M.), who established an interobserver GTC diagnosis. Cases for which consensus could not be reached were excluded from the study. The 499 included cases are listed in Table 1.

Table 1. Case Type and Frequency of Diagnoses in 499 Cases.

| Diagnosis | Cases, No. |
|---|---|
| Benign melanocytic nevi (blue, dermal, compound, junctional) | 90 |
| Basosquamous acanthomas/verrucoid keratoses (stages of seborrheic keratosis and/or verrucae) | 100 |
| Basal cell carcinoma | 80 |
| Atypical nevi | 44 |
| Actinic keratosis | 32 |
| Squamous cell carcinoma in situ | 31 |
| Invasive squamous cell carcinoma | 28 |
| Spongiotic dermatitis | 23 |
| Epidermal cyst | 16 |
| Malignant melanoma | 15 |
| Dermatofibroma | 6 |
| Hemangioma | 5 |
| Sebaceous hyperplasia | 4 |
| Prurigo nodularis | 4 |
| Neurofibroma | 4 |
| Fibrous papule | 4 |
| Pilar cyst | 3 |
| Lipoma | 2 |
| Psoriasis | 1 |
| Bullous pemphigoid | 1 |
| Granuloma annulare | 1 |
| Lichen planus | 1 |
| Onychomycosis | 1 |
| Insect bite | 1 |
| Small vessel necrotizing vasculitis (leukocytoclastic vasculitis) | 1 |
| Chondrodermatitis nodularis helicis | 1 |

Intraobserver WSI and TM Diagnosis

Glass slides were digitized as WSI at ×20 magnification (Aperio AT2 Image Scope, Leica Biosystems). Cases were divided into 3 groups of 166, 166, and 167 cases, each proportionally representing the spectrum of cases seen in the laboratory.

Three board-certified dermatopathologists (intraobserver group: J.M., M.C., and M.J.K.) diagnosed half of their cases by TM and the other half by WSI. Glass slides were read on conventional microscopes, and WSI were read on an in-house WSI system (Clearpath). After a minimum 30-day washout period, each dermatopathologist diagnosed the same cases using the alternate method.
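The Methods do not state how the 3 groups were balanced across diagnoses or how each reader’s cases were split between the 2 methods for the first read. The sketch below shows one simple way to do both, assuming a stratified round-robin deal by diagnosis and a random half/half crossover split; `allocate` and `crossover_schedule` are hypothetical helpers for illustration, not the study’s actual procedure.

```python
import random
from collections import defaultdict


def allocate(cases, n_readers=3, seed=0):
    """Split (case_id, diagnosis) pairs into n_readers groups stratified by diagnosis.

    Hypothetical helper: the cases of each diagnosis are shuffled and dealt
    round-robin, so every group gets a near-proportional share of each
    diagnosis and group sizes differ by at most 1.
    """
    rng = random.Random(seed)
    by_dx = defaultdict(list)
    for case_id, dx in cases:
        by_dx[dx].append(case_id)
    groups = [[] for _ in range(n_readers)]
    k = 0  # counter carried across diagnoses keeps overall group sizes balanced
    for dx in sorted(by_dx):
        ids = by_dx[dx]
        rng.shuffle(ids)
        for case_id in ids:
            groups[k % n_readers].append(case_id)
            k += 1
    return groups


def crossover_schedule(group, seed=0):
    """Map each case to (first method, second method) for a 2-period crossover.

    Hypothetical helper: half of a reader's cases are read by TM first and the
    rest by WSI first; the second read, by the other method, would follow a
    washout of at least 30 days.
    """
    rng = random.Random(seed)
    ids = list(group)
    rng.shuffle(ids)
    half = len(ids) // 2
    return {cid: ("TM", "WSI") if i < half else ("WSI", "TM") for i, cid in enumerate(ids)}
```

With 499 cases, this dealing scheme yields groups of 167, 166, and 166, matching the group sizes reported above.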

Intraobserver concordance between TM and WSI was determined, as was interobserver concordance between each interpretation and the GTC diagnosis. Discrepancies were classified as major or minor according to their effect on patient care and outcome, using the criteria of Bauer et al. A major discrepancy was a difference in diagnosis associated with a change in patient care; a minor discrepancy had no significant clinical impact.

Statistical Analysis

Statistical analysis was performed by the Statistical Consulting Center at Wright State University. Discrepancies were analyzed by paired noninferiority tests with a 4% noninferiority margin. Major and minor discrepancies were analyzed with separate noninferiority hypothesis tests. A Bonferroni correction was applied to control the potentially inflated type I error from performing 2 tests, giving a significance level of α = .05/2 = .025, which was used throughout. Wald-type test statistics and confidence intervals were calculated using methods described by Liu et al.
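As an illustration of how a paired noninferiority test of this kind works, the sketch below implements the simplest Wald-type statistic for paired binary outcomes, using the unrestricted variance estimate. Liu et al also describe restricted maximum likelihood variants, so this is an assumption-laden simplification that is not expected to reproduce Table 3 exactly. The outcome is agreement with GTC; `n10` counts cases in which only WSI agreed with GTC, and `n01` counts cases in which only TM agreed. The function name and the example counts are hypothetical, because the per-group 2 × 2 tables are not reported.

```python
from statistics import NormalDist

MARGIN = 0.04       # noninferiority margin from the Methods
ALPHA = 0.05 / 2    # Bonferroni correction for 2 tests (major, minor) -> .025


def paired_noninferiority(n10: int, n01: int, n: int,
                          margin: float = MARGIN, alpha: float = ALPHA):
    """Wald-type noninferiority test for paired binary data (sketch).

    n10: cases where WSI agreed with GTC but TM did not.
    n01: cases where TM agreed with GTC but WSI did not.
    n:   total number of paired cases.
    Tests H0: p_WSI - p_TM <= -margin against H1: p_WSI - p_TM > -margin.
    Returns (difference, one-sided P value, two-sided CI, noninferior?).
    """
    d = (n10 - n01) / n                        # difference in agreement rates
    var = ((n10 + n01) / n - d ** 2) / n       # unrestricted (Wald) variance
    if var <= 0:
        raise ValueError("variance estimate is zero; Wald test is undefined")
    se = var ** 0.5
    z = (d + margin) / se
    p = 1 - NormalDist().cdf(z)                # one-sided P value
    zcrit = NormalDist().inv_cdf(1 - alpha)    # about 1.96 for alpha = .025
    ci = (d - zcrit * se, d + zcrit * se)      # two-sided 95% CI
    return d, p, ci, p < alpha


# Hypothetical counts (not study data): 100 paired cases, 3 favor WSI, 5 favor TM.
d, p, ci, ok = paired_noninferiority(3, 5, 100)
print(f"d={d:.3f}, P={p:.3f}, CI=({ci[0]:.3f}, {ci[1]:.3f}), noninferior={ok}")
```

Because α = .025 is one-sided, the decision `p < alpha` is equivalent to the lower bound of the two-sided 95% CI lying above −0.04, which is how the CIs in Table 3 can be read.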

Efficiency Study

Separately, we compared the read rates of 3 dermatopathologists, expressed as the ratio of cases read per hour by TM to cases read per hour by WSI, using a proprietary in-house digital pathology system (Clearpath) for WSI. Two dermatopathologists read WSI and TM cases in 1-hour intervals, for a total of 2 hours of reading with each method. A third pathologist read for 1 hour by WSI and 2 hours by TM. Cases were included regardless of the number of slides or level of difficulty; however, cases requiring additional stains or recuts were excluded. Timers were stopped for interruptions such as phone calls or unrelated tasks so that only actual reading times were compared.
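The read-rate comparison reduces to simple arithmetic. The sketch below assumes that timer totals already exclude interruptions and uses hypothetical case counts. Each rate is expressed in cases per hour, so the third pathologist’s unequal reading times (1 hour by WSI, 2 hours by TM) remain comparable. The ratio is oriented TM/WSI, matching the Table 4 header, so values above 1 mean TM was faster and values near 1 indicate parity. The function names are illustrative only.

```python
def cases_per_hour(cases_read: int, active_minutes: float) -> float:
    """Read rate from a case count and timed reading minutes (interruptions excluded)."""
    if active_minutes <= 0:
        raise ValueError("active_minutes must be positive")
    return cases_read / (active_minutes / 60)


def tm_wsi_ratio(tm_rate: float, wsi_rate: float) -> float:
    """TM rate divided by WSI rate: >1 means TM was faster, about 1 means parity."""
    return tm_rate / wsi_rate


# Hypothetical counts for one pathologist (not study data).
tm = cases_per_hour(40, 120)   # 2 hours of TM reading -> 20.0 cases/h
wsi = cases_per_hour(10, 60)   # 1 hour of WSI reading -> 10.0 cases/h
print(round(tm_wsi_ratio(tm, wsi), 2))  # 2.0
```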

Results

Intraobserver concordance between WSI and TM diagnoses was 94% (471 of 499). Twenty-eight cases (6%) had discordant diagnoses: 14 minor and 14 major. Of the major discrepancies (Table 2), 6 involved melanocytic lesions, 2 involved inflammatory lesions, and 6 involved epithelial dysplasia/atypia vs epithelial hyperplasia/hypertrophy. Major discrepancies among melanocytic lesions comprised 2 cases of invasive vs in situ melanoma, 1 case of melanoma vs severely atypical nevus, and 3 cases in which TM showed mild or moderate atypia with involved margins, whereas WSI showed a benign nevus. The 2 inflammatory major discrepancies were spongiotic dermatitis by WSI vs benign keratosis by TM, and spongiotic dermatitis by WSI vs granuloma annulare by TM. The 6 nonmelanocytic major discrepancies involved interpreting the disorganization and cytologic atypia of actinic keratosis vs various epithelial hyperplasias and/or hypertrophies.

Table 2. Major Intraobserver Discrepancies and Agreement With GTC.

| Case No./Group | WSI Diagnosis | TM Diagnosis | GTC Diagnosis |
|---|---|---|---|
| 1/Melanocytic | In situ malignant melanoma | Invasive malignant melanoma | Invasive malignant melanoma |
| 2/Melanocytic | In situ malignant melanoma | Invasive malignant melanoma | In situ malignant melanoma |
| 3/Melanocytic | Invasive malignant melanoma | Atypical lentiginous compound nevus; severe atypia | Atypical lentiginous junctional nevus; severe atypia |
| 4/Melanocytic | Lentiginous junctional nevus | Atypical lentiginous junctional nevus; moderate atypiaᵃ | Atypical lentiginous junctional nevus; moderate atypia |
| 5/Melanocytic | Lentigo/early junctional nevus | Atypical lentiginous junctional nevus; moderate atypiaᵃ | Lentiginous junctional nevus |
| 6/Melanocytic | Lentiginous compound nevus | Atypical lentiginous compound nevus; mild atypiaᵃ | Atypical lentiginous compound nevus; moderate atypia |
| 7/Inflammatory | Spongiotic dermatitis | Solar lentigo-seborrheic keratosis overlap | Spongiotic dermatitis |
| 8/Inflammatory | Spongiotic dermatitis | Granuloma annulare | Spongiotic dermatitis |
| 9/Nonmelanocytic | Verrucous keratosis | Actinic keratosis; hypertrophic variant | Verruca vulgaris |
| 10/Nonmelanocytic | Verrucous benign keratosis | Bowenoid actinic keratosis | Actinic keratosis |
| 11/Nonmelanocytic | Verruca vulgaris | Actinic keratosis; hypertrophic variant | Verruca vulgaris |
| 12/Nonmelanocytic | Verruca vulgaris | Actinic keratosis; hypertrophic variant | Verruca vulgaris |
| 13/Nonmelanocytic | Actinic keratosis | Solar lentigo | Actinic keratosis |
| 14/Nonmelanocytic | Actinic keratosis | Spongiotic/psoriasiform dermatitis | Actinic keratosis |

Abbreviations: GTC, ground truth consensus; TM, traditional microscopy; WSI, whole-slide images.

ᵃ Moderate or mild atypia with involved margins, probably resulting in reexcision.

Interobserver concordance with the GTC diagnosis was 94% for both WSI and TM. Concordance among all 3 observations (GTC, WSI, and TM) was 91%.

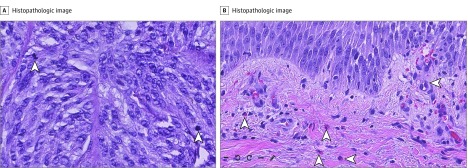

Melanocytic lesions made up 30% of all cases, including 15 malignant melanomas, and inflammatory cases made up 7%. Mitotic figures within melanomas were readily identified on WSI. Inflammatory cases, particularly spongiotic dermatoses, showed a mixed infiltrate in which eosinophils were easily visualized on WSI (Figure).

Figure. Whole-Slide Images

Hematoxylin-eosin stained biopsy samples (original magnification ×20). A, Arrowheads indicate mitotic figures in invasive melanoma. B, Arrowheads indicate eosinophils in perivascular and interstitial zones of spongiotic dermatosis.

Noninferiority was assessed by comparing the discrepancies of paired samples from each readout method with GTC, allowing a 4% noninferiority margin between methods. For all cases, rates of both major and minor discrepancies did not differ significantly between WSI and TM. When major discrepancies were examined in 3 subgroups, there was no significant difference between methods for nonmelanocytic and inflammatory lesions; however, for melanocytic lesions the lower 95% confidence bound (−.05) fell outside the 4% noninferiority margin (Table 3).

Table 3. Major Intraobserver Discrepancies by Lesion Group.

| Case Group | Cases Read, No. | Cases With Agreement, No. | Concordance, % | P Value | 95% CI |
|---|---|---|---|---|---|
| WSI vs TM | | | | | |
| All cases | 499 | 485 | 97 | <.001 | −.01 to .01 |
| Nonmelanocytic lesions | 271 | 265 | 98 | <.001 | −.01 to .03 |
| Melanocytic lesions | 149 | 143 | 96 | .053 | −.05 to .02 |
| Inflammatory conditions | 34 | 32 | 94 | .007 | −.02 to .14 |

Abbreviations: TM, traditional microscopy; WSI, whole-slide images.
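The noninferiority decision in Table 3 can be read directly from the CIs: with a 4% margin, WSI is judged noninferior when the lower bound of the 95% CI lies above −.04. The sketch below applies that rule to the CIs reported in Table 3, assuming (as the Discussion implies) that each interval is for WSI agreement with GTC minus TM agreement with GTC. Under that reading it flags only the melanocytic subgroup, consistent with its P value of .053 exceeding α = .025. The names are illustrative.

```python
MARGIN = 0.04  # noninferiority margin from the Methods

# 95% CIs from Table 3, assumed to be for (WSI - TM) agreement with GTC.
TABLE3_CI = {
    "All cases": (-0.01, 0.01),
    "Nonmelanocytic lesions": (-0.01, 0.03),
    "Melanocytic lesions": (-0.05, 0.02),
    "Inflammatory conditions": (-0.02, 0.14),
}


def is_noninferior(ci: tuple, margin: float = MARGIN) -> bool:
    """Noninferior if the lower CI bound lies above -margin."""
    lower, _upper = ci
    return lower > -margin


for group, ci in TABLE3_CI.items():
    verdict = "noninferior" if is_noninferior(ci) else "noninferiority not shown"
    print(f"{group}: {verdict}")
```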

Efficiency Study

Pathologists’ efficiency, measured as the ratio of TM cases read per hour to WSI cases read per hour, suggests that WSI efficiency can reach parity with TM and that efficiency correlates with a pathologist’s WSI experience (Table 4).

Table 4. TM vs WSI Read Rates.

| Pathologist | TM/WSI Read-Rate Ratio (specimens read per hour) | Experience (total WSI reads) |
|---|---|---|
| 1 | 0.9 | 1843 |
| 2 | 1.9 | 686 |
| 3 | 1.7 | 640 |

Abbreviations: TM, traditional microscopy; WSI, whole-slide images.

Discussion

Acceptance of WSI for primary diagnosis requires rigorous demonstration that patient care will not be adversely affected. The accuracy of the method may vary by subspecialty, so subspecialty-specific validation may be needed. The primary outcome of this large dermatopathology validation study is that diagnosis of dermatologic cases from WSI is noninferior to diagnosis by TM. Noninferiority tests are designed to show that a new method is not worse than an active control by more than a prespecified margin. This testing methodology was the basis for FDA approval of the first WSI system for primary diagnosis. Our findings add to the growing evidence that using WSI does not compromise patient care and should help pathologists gain confidence in WSI for primary diagnosis.

Unlike previous noninferiority testing of WSI, we used paired testing, with the advantage of comparing results from the same sample by the 2 different methods. Our study provides a statistically powerful and comprehensive addition to previous validation studies.

A recent study by Shah et al provides additional support within dermatopathology: intraobserver and interobserver concordance rates between WSI and TM were equivalent in 181 dermatopathology cases when the 2013 CAP validation guidelines were followed. In an earlier, smaller study, Al-Janabi et al found 94% intraobserver concordance between WSI and TM in 100 dermatopathology cases; all 6 discordant cases were graded as minor, with no impact on patient care. In 2016, Goacher et al published a systematic review of 38 validation studies across all pathology subspecialties and found a mean diagnostic concordance between WSI and TM, weighted by the number of cases per study, of 92.4%. Our study would meet the inclusion criteria of the Goacher et al review and further supports the efficacy of WSI.

Intraobserver WSI and TM diagnoses were fully concordant (no major or minor discrepancy) in 94% of all cases, and concordance rose to 97% when minor discrepancies were counted as agreement. Importantly, the 94% interobserver concordance with GTC for both WSI and TM supports the position that the diagnostic method does not affect a pathologist’s interpretation.
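These percentages follow directly from the counts reported in the Results (14 minor and 14 major discrepancies among 499 cases) and the subgroup counts in Table 3. A short check using only those reported counts:

```python
TOTAL = 499   # cases
MINOR = 14    # minor intraobserver WSI/TM discrepancies
MAJOR = 14    # major intraobserver WSI/TM discrepancies


def concordance(agree: int, total: int) -> float:
    """Percent concordance, rounded to 1 decimal place."""
    return round(100 * agree / total, 1)


strict = concordance(TOTAL - MINOR - MAJOR, TOTAL)  # any discrepancy counts as disagreement
lenient = concordance(TOTAL - MAJOR, TOTAL)         # minor discrepancies counted as agreement
print(strict, lenient)  # 94.4 97.2

# Subgroup counts (cases with agreement, cases read) for major discrepancies, from Table 3.
SUBGROUPS = {
    "Nonmelanocytic lesions": (265, 271),
    "Melanocytic lesions": (143, 149),
    "Inflammatory conditions": (32, 34),
}
for name, (agree, n) in SUBGROUPS.items():
    print(name, concordance(agree, n))
```

Rounded to whole numbers, these reproduce the 94% and 97% figures above and the 98%, 96%, and 94% subgroup concordances in Table 3.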

Among the 499 cases reviewed, we evaluated 3 subgroupings: nonmelanocytic lesions, melanocytic lesions, and inflammatory cases, similar to the selected clustering of cases reported by other investigators. The 14 major intraobserver discrepancies by case and subgroup provide a frame of reference for discussion (Table 2).

Nonmelanocytic Lesions

The highest intraobserver concordance between WSI and TM was in the nonmelanocytic group (98%). The 6 major discrepancies involved interpretation of actinic keratosis vs various benign epithelial hyperplasias, including seborrheic keratoses, verrucoid keratoses, and verruca vulgaris. No discordance among actinic keratosis, squamous cell carcinoma in situ (Bowen disease), and invasive squamous cell carcinoma was observed. We interpret the major discrepancies, both intraobserver and interobserver, not as a failure of the WSI method but as a reflection of the inherent subjectivity of pattern recognition and of grading dysplasia in biopsies taken from chronically sun-damaged skin. Although WSI yielded a less malignant diagnosis than TM in 4 of 6 cases, WSI was concordant with GTC in 5 of 6 cases. Shah et al reported similar results, with actinic keratosis a common discordant diagnosis in the nonmelanocytic group, along with 2 discordant cases of actinic keratosis vs invasive squamous cell carcinoma.

Melanocytic Lesions

For melanocytic lesions, although WSI and TM were 96% concordant when only major discrepancies were counted, TM agreement with GTC was greater than WSI agreement with GTC, and the lower confidence bound (−.05) fell below the 4% noninferiority margin. This is not unexpected, because diagnostic discordance among pathologists for this challenging group of lesions, even with TM alone, has long been documented in the literature. We believe the readout method (WSI or TM) is not a factor in the variability inherent in diagnosing this subgroup. Notably, of the 15 melanomas in the study, WSI and TM each had 1 major disagreement with GTC.

As WSI emerges as a new method for diagnosing pathology cases, intraobserver and interobserver discordance in the diagnosis of melanocytic lesions remains evident. Okada et al found 100% concordance after reviewing 23 benign melanocytic lesions and 12 malignant melanomas (in situ and invasive). Leinweber et al, in a larger series of 560 melanocytic neoplasms, reported concordance rates of 94.4% to 96.4%, although with a binary (benign or malignant) reporting system. Shah et al reported a lower overall concordance of 75.6% for melanocytic lesions, which rose to 97.4% intraobserver concordance under the binary system. We expect that further, better-powered WSI studies of melanocytic lesions that consider the effect of scanning magnification and include immunohistochemical stains will better establish whether WSI is noninferior to TM relative to GTC for this challenging group.

Case 1 was a major discrepancy of in situ vs invasive melanoma, with WSI favoring an in situ process and TM favoring invasive melanoma (Table 2). The GTC favored invasive malignant melanoma, and on rereview of the WSI, a section with invasive melanoma was identified. Case 2 was again in situ melanoma by WSI and GTC vs invasive melanoma by TM; further review could not reproduce the TM diagnosis of invasive melanoma. In both cases, the differences in interpretation may reasonably be attributed to variation in sign-out habits when multiple blocks produce multiple fields of view, whether under the microscope or on WSI.

The remaining 4 intraobserver major discrepancies comprised a severely atypical nevus by TM vs melanoma by WSI, and 3 cases in which the WSI diagnosis of nevus was less severe than the dysplastic diagnosis by TM (moderate atypia in 2 cases and mild atypia in 1). These results might suggest lower image resolution with WSI than with TM, particularly because our scanning magnification was ×20 vs the ×40 magnification used by Shah et al and Al Habeeb et al. Indeed, Al Habeeb et al noted that a majority of their surveyed staff pathologists considered WSI at ×40 magnification to produce a better image than the microscope. Nonetheless, because WSI also produced the more severe diagnosis in 1 case (invasive melanoma vs severely atypical nevus by TM), and given the lack of disparity between WSI vs GTC and TM vs GTC agreement, we attribute these differences to the subjective nuances of interpreting melanocytic neoplasms.

Inflammatory Lesions

Concordance was high for inflammatory lesions: 32 of 34 cases, representing a wide range of dermatoses, were concordant. Although the concordance rate for this subgroup was lower than for melanocytic lesions, noninferiority was achieved because WSI agreed with GTC more often than TM did (Table 3). One major discrepancy was spongiotic dermatitis vs verrucoid keratosis. This type of variance, inflammation vs neoplasm, has been observed by others and attributed to the lack of a complete data set, such as clinical photographs. The second major discrepancy, spongiotic dermatitis vs granuloma annulare, was rereviewed, and the diagnosis of granuloma annulare was judged an interpretive error in which ropelike basophilic collagen bundles were interpreted as mucin. Although Massone et al reported a low concordance of 75% for inflammatory lesions, the authors acknowledged limitations of their study, including incomplete clinical data, unfamiliarity with the virtual microscope, and the intrinsic difficulties of inflammatory skin pathology. Given the high concordance for inflammatory lesions in our series, we believe inflammatory skin diseases can be readily interpreted using WSI for primary diagnosis, provided that attention is paid to clinicopathologic correlation and clinical photographs are available for review.

Efficiency Study

There has been little agreement regarding the effect of WSI on pathologists’ workflow efficiency. This is not unexpected: the technology is still nascent, innovative approaches to workflow design and implementation are being tested, and a new generation of pathologists, many accustomed to digital lifestyles, is coming of age. It may also be that, as with implementation of electronic medical records, efficiencies gained in one area compromise those in another, even as the total system improves patient care. Our preliminary findings suggest that experience is a key factor and that parity can be achieved.

Although our efficiency data are limited and relatively uncontrolled, after 5 years of working with WSI our assessment is that, for workflows of 100 cases or fewer, WSI efficiency can equal that of the microscope with training and practice. This is particularly true when there is an integrated LIS with order and result interfaces.

Limitations

We did not evaluate intraobserver TM to TM or WSI to WSI concordance in this study; however, data from the literature and interobserver observations of WSI to GTC and TM to GTC are in agreement with our intraobserver WSI vs TM results. The study did not include rare dermatologic diagnoses or cases referred to other specialists (ie, lymphoreticular processes and cytopathologic diseases). We did not include granulomatous pathologic abnormalities or foreign bodies because, to our knowledge, WSI does not currently include polarizing capabilities.

Conclusions

Diagnosis from WSI was found to be noninferior to diagnosis from TM in this validation study of 499 cases reflecting the spectrum and complexity of specimen types and diagnoses encountered in a dermatopathology practice. Our substudy of reading efficiency suggests that pathologists experienced with WSI can achieve parity with TM. As WSI systems improve and pathologists gain experience with this transformative technology, diagnostic time should not be a barrier to adoption of WSI for primary diagnosis.

References

- 1.Koch LH, Lampros JN, Delong LK, Chen SC, Woosley JT, Hood AF. Randomized comparison of virtual microscopy and traditional glass microscopy in diagnostic accuracy among dermatology and pathology residents. Hum Pathol. 2009;40(5):662-667. [DOI] [PubMed] [Google Scholar]

- 2.Pantanowitz L, Valenstein PN, Evans AJ, et al. . Review of the current state of whole slide imaging in pathology. J Pathol Inform. 2011;2:36-45. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Wilbur DC, Madi K, Colvin RB, et al. . Whole-slide imaging digital pathology as a platform for teleconsultation: a pilot study using paired subspecialist correlations. Arch Pathol Lab Med. 2009;133(12):1949-1953. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Romero Lauro G, Cable W, Lesniak A, et al. . Digital pathology consultations-a new era in digital imaging, challenges and practical applications. J Digit Imaging. 2013;26(4):668-677. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Zembowicz A, Ahmad A, Lyle SR. A comprehensive analysis of a web-based dermatopathology second opinion consultation practice. Arch Pathol Lab Med. 2011;135(3):379-383. [DOI] [PubMed] [Google Scholar]

- 6.US Dept of Health and Human Services Food and Drug Administration. List of Cleared or Approved Companion Diagnostic Devices (In Vitro and Imaging Tools). http://www.fda.gov/medicaldevices/productsandmedicalprocedures/invitrodiagnostics/ucm301431.htm. Accessed March 20, 2017.

- 7.Nielsen PS, Riber-Hansen R, Raundahl J, Steiniche T. Automated quantification of MART1-verified Ki67 indices by digital image analysis in melanocytic lesions. Arch Pathol Lab Med. 2012;136(6):627-634. [DOI] [PubMed] [Google Scholar]

- 8.Madabhushi A, Lee G. Image analysis and machine learning in digital pathology: Challenges and opportunities. Med Image Anal. 2016;33:170-175. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Esteva A, Kuprel B, Novoa RA, et al. . Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115-118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Wei B-R, Simpson RM. Digital pathology and image analysis augment biospecimen annotation and biobank quality assurance harmonization. Clin Biochem. 2014;47(4-5):274-279. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Al-Janabi S, Huisman A, Van Diest PJ. Digital pathology: current status and future perspectives. Histopathology. 2012;61(1):1-9. [DOI] [PubMed] [Google Scholar]

- 12.US Department of Health and Human Services Clinical Laboratory Improvement Amendments of 1988: Final Rule. 42 CFR §493.1449. https://cdn.loc.gov/service/ll/fedreg/fr057/fr057040/fr057040.pdf. Effective September 1, 1992. Accessed April 4, 2017.

- 13.Al Habeeb A, Evans A, Ghazarian D. Virtual microscopy using whole-slide imaging as an enabler for teledermatopathology: A paired consultant validation study. J Pathol Inform. 2012;3:2-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.FDA allows marketing of first whole slide imaging system for digital pathology. Food and Drug Administration. April 12, 2017. https://www.fda.gov/newsevents/newsroom/pressannouncements/ucm552742.htm. Accessed June 12, 2017.

- 15.Whole slide imaging for primary diagnosis: ‘Now it is happening’. CAP Today. May 2017. http://www.captodayonline.com/whole-slide-imaging-primary-diagnosis-now-happening/. Accessed June 12, 2017.

- 16.Pantanowitz L, Sinard JH, Henricks WH, et al. ; College of American Pathologists Pathology and Laboratory Quality Center . Validating whole slide imaging for diagnostic purposes in pathology: guideline from the College of American Pathologists Pathology and Laboratory Quality Center. Arch Pathol Lab Med. 2013;137(12):1710-1722. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Shah KK, Lehman JS, Gibson LE, Lohse CM, Comfere NI, Wieland CN. Validation of diagnostic accuracy with whole-slide imaging compared with glass slide review in dermatopathology. J Am Acad Dermatol. 2016;75(6):1229-1237. [DOI] [PubMed] [Google Scholar]

- 18.Al-Janabi S, Huisman A, Vink A, et al. . Whole slide images for primary diagnostics in dermatopathology: a feasibility study. J Clin Pathol. 2012;65(2):152-158. [DOI] [PubMed] [Google Scholar]

- 19. Mooney E, Hood AF, Lampros J, Kempf W, Jemec GB. Comparative diagnostic accuracy in virtual dermatopathology. Skin Res Technol. 2011;17(2):251-255.

- 20. Bauer TW, Schoenfield L, Slaw RJ, Yerian L, Sun Z, Henricks WH. Validation of whole slide imaging for primary diagnosis in surgical pathology. Arch Pathol Lab Med. 2013;137(4):518-524.

- 21. Piepkorn MW, Barnhill RL, Cannon-Albright LA, et al. A multiobserver, population-based analysis of histologic dysplasia in melanocytic nevi. J Am Acad Dermatol. 1994;30(5 Pt 1):707-714.

- 22. Brennan P, Silman A. Statistical methods for assessing observer variability in clinical measures. BMJ. 1992;304(6840):1491-1494.

- 23. Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med. 2005;37(5):360-363.

- 24. Liu JP, Hsueh HM, Hsieh E, Chen JJ. Tests for equivalence or non-inferiority for paired binary data. Stat Med. 2002;21(2):231-245.

- 25. Crockett J, Olsen TG, Kent MN. Practical implementation of whole slide imaging for primary diagnosis in an independent dermatopathology lab. Poster presented at: 51st Annual Meeting of the American Society of Dermatopathology; November 6-9, 2014; Chicago, IL. Abstract 640.

- 26. Duffy KL, Mann DJ, Petronic-Rosic V, Shea CR. Clinical decision making based on histopathologic grading and margin status of dysplastic nevi. Arch Dermatol. 2012;148(2):259-260.

- 27. Hocker TL, Alikhan A, Comfere NI, Peters MS. Favorable long-term outcomes in patients with histologically dysplastic nevi that approach a specimen border. J Am Acad Dermatol. 2013;68(4):545-551.

- 28. Abello-Poblete MV, Correa-Selm LM, Giambrone D, Victor F, Rao BK. Histologic outcomes of excised moderate and severe dysplastic nevi. Dermatol Surg. 2014;40(1):40-45.

- 29. Kim CC, Swetter SM, Curiel-Lewandrowski C, et al. Addressing the knowledge gap in clinical recommendations for management and complete excision of clinically atypical nevi/dysplastic nevi: Pigmented Lesion Subcommittee consensus statement. JAMA Dermatol. 2015;151(2):212-218.

- 30. Fleming NH, Egbert BM, Kim J, Swetter SM. Reexamining the threshold for reexcision of histologically transected dysplastic nevi. JAMA Dermatol. 2016;152(12):1327-1334.

- 31. Goacher E, Randell R, Williams B, Treanor D. The diagnostic concordance of whole slide imaging and light microscopy: a systematic review. Arch Pathol Lab Med. 2017;141(1):151-161.

- 32. Farmer ER, Gonin R, Hanna MP. Discordance in the histopathologic diagnosis of melanoma and melanocytic nevi between expert pathologists. Hum Pathol. 1996;27(6):528-531.

- 33. Braun RP, Gutkowicz-Krusin D, Rabinovitz H, et al. Agreement of dermatopathologists in the evaluation of clinically difficult melanocytic lesions: how golden is the ‘gold standard’? Dermatology. 2012;224(1):51-58.

- 34. Shoo BA, Sagebiel RW, Kashani-Sabet M. Discordance in the histopathologic diagnosis of melanoma at a melanoma referral center. J Am Acad Dermatol. 2010;62(5):751-756.

- 35. Urso C, Rongioletti F, Innocenzi D, et al. Interobserver reproducibility of histological features in cutaneous malignant melanoma. J Clin Pathol. 2005;58(11):1194-1198.

- 36. Okada DH, Binder SW, Felten CL, Strauss JS, Marchevsky AM. “Virtual microscopy” and the internet as telepathology consultation tools: diagnostic accuracy in evaluating melanocytic skin lesions. Am J Dermatopathol. 1999;21(6):525-531.

- 37. Leinweber B, Massone C, Kodama K, et al. Teledermatopathology: a controlled study about diagnostic validity and technical requirements for digital transmission. Am J Dermatopathol. 2006;28(5):413-416.

- 38. Massone C, Soyer HP, Lozzi GP, et al. Feasibility and diagnostic agreement in teledermatopathology using a virtual slide system. Hum Pathol. 2007;38(4):546-554.

- 39. Gilbertson JR, Ho J, Anthony L, Jukic DM, Yagi Y, Parwani AV. Primary histologic diagnosis using automated whole slide imaging: a validation study. BMC Clin Pathol. 2006;6:4.

- 40. Velez N, Jukic D, Ho J. Evaluation of 2 whole-slide imaging applications in dermatopathology. Hum Pathol. 2008;39(9):1341-1349.

- 41. Treanor D, Jordan-Owers N, Hodrien J, Wood J, Quirke P, Ruddle RA. Virtual reality Powerwall vs conventional microscope for viewing pathology slides: an experimental comparison. Histopathology. 2009;55(3):294-300.

- 42. Nielsen PS, Lindebjerg J, Rasmussen J, Starklint H, Waldstrøm M, Nielsen B. Virtual microscopy: an evaluation of its validity and diagnostic performance in routine histologic diagnosis of skin tumors. Hum Pathol. 2010;41(12):1770-1776.

- 43. Randell R, Ruddle RA, Mello-Thoms C, Thomas RG, Quirke P, Treanor D. Virtual reality microscope vs conventional microscope regarding time to diagnosis: an experimental study. Histopathology. 2013;62(2):351-358.

- 44. Vodovnik A. Diagnostic time in digital pathology: a comparative study on 400 cases. J Pathol Inform. 2016;7:4.

- 45. Treanor D, Gallas BD, Gavrielides MA, Hewitt SM. Evaluating whole slide imaging: a working group opportunity. J Pathol Inform. 2015;6:4-6.

- 46. Thorstenson S, Molin J, Lundström C. Implementation of large-scale routine diagnostics using whole slide imaging in Sweden: digital pathology experiences 2006-2013. J Pathol Inform. 2014;5(1):14-22.