This study compared faculty evaluation of male vs female emergency medicine resident milestone attainment throughout residency training.

Key Points

Question

How does gender affect the evaluation of emergency medicine residents throughout residency training?

Findings

In this longitudinal, retrospective cohort study of 33 456 direct-observation evaluations from 8 emergency medicine training programs, we found that the rate of milestone attainment was higher for male residents throughout training across all subcompetencies. By graduation, this gap was equivalent to more than 3 months of additional training.

Meaning

The rate of milestone attainment throughout training is significantly higher for male than female residents across all emergency medicine subcompetencies, leading to a wide gender gap in evaluations that continues until graduation.

Abstract

Importance

Although implicit bias in medical training has long been suspected, it has been difficult to study using objective measures, and the influence of sex and gender in the evaluation of medical trainees is unknown. The emergency medicine (EM) milestones provide a standardized framework for longitudinal resident assessment, allowing for analysis of resident performance across all years and programs at a scope and level of detail never previously possible.

Objective

To compare faculty-observed training milestone attainment of male vs female residents throughout residency training.

Design, Setting, and Participants

This multicenter, longitudinal, retrospective cohort study took place at 8 community and academic EM training programs across the United States from July 1, 2013, to July 1, 2015, using a real-time, mobile-based, direct-observation evaluation tool. The study examined 33 456 direct-observation subcompetency evaluations of 359 EM residents by 285 faculty members.

Main Outcomes and Measures

Milestone attainment for male and female EM residents as observed by male and female faculty throughout residency and analyzed using multilevel mixed-effects linear regression modeling.

Results

A total of 33 456 direct-observation evaluations were collected from 359 EM residents (237 men [66.0%] and 122 women [34.0%]) by 285 faculty members (194 men [68.1%] and 91 women [31.9%]) during the study period. Female and male residents achieved similar milestone levels during the first year of residency. However, the rate of milestone attainment was 12.7% (0.07 levels per year) higher for male residents through all of residency (95% CI, 0.04-0.09). By graduation, men scored approximately 0.15 milestone levels higher than women, which is equivalent to 3 to 4 months of additional training, given that the average resident gains approximately 0.52 levels per year using our model (95% CI, 0.49-0.54). No statistically significant differences in scores were found based on faculty evaluator gender (effect size difference, 0.02 milestone levels; 95% CI for males, −0.09 to 0.11) or evaluator-evaluatee gender pairing (effect size difference, −0.02 milestone levels; 95% CI for interaction, −0.05 to 0.01).

Conclusions and Relevance

Although male and female residents receive similar evaluations at the beginning of residency, the rate of milestone attainment throughout training was higher for male than female residents across all EM subcompetencies, leading to a gender gap in evaluations that continues until graduation. Faculty should be cognizant of possible gender bias when evaluating medical trainees.

Introduction

Women remain significantly underrepresented in academic medicine, with the greatest attrition in commitment to academia appearing to occur during residency. It has been hypothesized that unconscious bias may be a significant contributor to this attrition.1 This possibility is conceivable considering that within medicine women comprise only one-third of the physician workforce, continue to earn a lower adjusted income, hold fewer faculty positions at academic institutions, and enjoy fewer positions of leadership in medical societies and departments.1,2,3,4 Indeed, a recent study5 surveying more than 1000 US academic medical faculty members found that 70% of women perceived gender bias in the academic environment compared with 22% of men.

To date, only a handful of studies6,7,8,9 have examined the role of sex and gender in medical education evaluations. Among these studies, an analysis6 of 5 years of evaluations of medical trainees rotating through gastroenterology clinics at the Mayo Clinic found that gender differences in evaluation play a larger role at more senior levels of training. A cross-sectional study7 of internal medicine residents during their first 2 years of training at the University of California, San Francisco, revealed that male residents were consistently rated higher than their female colleagues in 9 dimensions of performance. A similarly designed study conducted at Yale University,8 however, found no significant evidence of gender bias in the evaluation of their internal medicine residents. Likewise, Holmboe et al9 asked faculty members to evaluate scripted videos of resident performance and found no differences in evaluation based on faculty or resident gender.

Many of these studies7,8 are now more than a decade old, making comparisons with current demographic data problematic. Moreover, none of these studies6,7,8,9 examined medical trainees across institutions, and many were performed using institutional or unvalidated evaluation scales, limiting the external validity of their findings. In addition, the studies have conflicting outcomes and widely varying methods, which make interpreting the findings difficult and comparisons among these studies nearly impossible. Furthermore, vignette-style studies9 may be prone to the Hawthorne effect, whereby evaluators are less likely to be discriminatory in their evaluations knowing that they are being evaluated. Lastly, few studies have examined bias using direct observation of skills.

The recently adopted Accreditation Council for Graduate Medical Education’s (ACGME’s) Next Accreditation System (NAS) milestone evaluations offer a novel method of studying gender bias. The NAS milestone evaluation system is a competency-based evaluation framework that is now used by all training programs to evaluate resident and fellow progress.10 This nationally standardized, longitudinal system allows for analysis of trainee performance across all years and training programs, at a scope and level of detail never previously possible, and can facilitate multicenter studies on many aspects of graduate medical education.

Emergency medicine (EM) was one of the first specialties to adopt the NAS and develop milestones through a rigorous process that included a consensus of national experts, and it is the only specialty to have engaged most residency programs in a national milestone validation study, resulting in significant revision of the milestones before implementation.11,12,13 To date, EM is the only specialty to have had the reliability and validity of its milestones supported using psychometric analysis by the ACGME, which included data from 100% of EM programs.11,14 This study aims to compare the evaluation of male vs female residents by faculty throughout training using a novel longitudinal, multi-institutional data set that consists of EM milestone evaluations based on direct observation.

Methods

This study was approved as exempt research by the University of Chicago Institutional Review Board. Data from all institutions were pooled, and all identifying information was removed to create a composite data set. Written consent for data use was obtained from all participating programs.

Study Population

Data for this longitudinal, retrospective analysis were collected at 8 hospitals from July 1, 2013, to July 1, 2015. Training programs were included in this study if they had already adopted InstantEval, a direct-observation mobile app for collecting milestone evaluations. For purposes of standardization, only 3-year ACGME-accredited EM training programs were included. Gender was determined by examining the names and photos of all residents and faculty that were submitted to InstantEval by each program. In cases of ambiguity, we looked at the residents’ profiles on their program's website to determine gender.

Data Collection

Data were collected using InstantEval, version 2.0 (Monte Carlo Software LLC), a software application available on the mobile devices and tablets of faculty members to facilitate real-time, direct-observation milestone evaluations. Faculty members chose when to complete evaluations, whom to evaluate, and the number of evaluations to complete, although most programs encouraged set numbers of daily point-of-care or end-of-shift evaluations (generally ranging from 1 to 3 evaluations per shift). Each evaluation consisted of a milestone-based performance level (1, 1.5, 2, 2.5, 3, 3.5, 4, 4.5, or 5) on 1 of 23 possible individual EM subcompetencies, along with an optional text comment given to a resident by a single faculty member (eFigure in the Supplement). Subcompetencies more procedural in nature were grouped as procedural subcompetencies.

When performing an evaluation, faculty members were presented with all descriptors of the individual milestone levels, as written by the ACGME and the American Board of Emergency Medicine. This data set, therefore, represents direct-observation evaluations produced at the individual evaluator level rather than the final evaluations produced by clinical competency committees.

Statistical Analysis

Trainee and faculty demographic data were tabulated, and a 1-sample test of proportions was used to assess gender differences in our study population compared with the national population of EM resident and attending physicians. Differences in the number of evaluations between the 2 groups were assessed using a 2-sample t test. Gaps in training were detected by a time difference greater than 1 month between subsequent evaluations of a given resident.
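The gap-detection rule described above can be sketched in a few lines. This is an illustration only, not the authors' code: the function name, the example dates, and the approximation of "1 month" as 30 days are all assumptions.

```python
from datetime import date

# Assumption: "greater than 1 month" is approximated here as more than 30 days.
GAP_THRESHOLD_DAYS = 30


def find_gaps(evaluation_dates, threshold_days=GAP_THRESHOLD_DAYS):
    """Return (start, end, days) for every interval between consecutive
    evaluations of a single resident that exceeds the threshold."""
    ordered = sorted(evaluation_dates)
    gaps = []
    for earlier, later in zip(ordered, ordered[1:]):
        days = (later - earlier).days
        if days > threshold_days:
            gaps.append((earlier, later, days))
    return gaps


# Hypothetical evaluation dates for one resident.
dates = [date(2014, 1, 3), date(2014, 1, 10), date(2014, 3, 1), date(2014, 3, 4)]
gaps = find_gaps(dates)
for start, end, days in gaps:
    print(f"No evaluations between {start} and {end} ({days} days)")
```

Running the same function per resident and comparing the counts and durations by gender would mirror the comparison of training gaps reported in the Results.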

Given that our sample was large and the milestone scores appeared nearly normally distributed (skewness = −0.2, kurtosis = 2.7) without a substantial number of outliers, we analyzed the milestones as continuous rather than ordinal data. To explore the effect of resident and attending physician gender pairings on evaluations, scores given by male and female attending physicians were averaged separately for each resident and compared using a paired t test for both resident genders.
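The paired comparison can be illustrated as follows: for each resident, average the scores from male and from female attending physicians, then test the within-resident differences. This is a minimal sketch with hypothetical data and hypothetical function names; the study itself used Stata, and the analysis was run separately for male and female residents.

```python
from math import sqrt
from statistics import mean, stdev


def per_resident_means(evaluations):
    """evaluations: iterable of (resident_id, attending_gender, score).
    Returns {resident_id: (mean from male attendings, mean from female attendings)}
    for residents who were evaluated by both."""
    buckets = {}
    for resident, gender, score in evaluations:
        buckets.setdefault(resident, {"M": [], "F": []})[gender].append(score)
    return {
        r: (mean(b["M"]), mean(b["F"]))
        for r, b in buckets.items()
        if b["M"] and b["F"]
    }


def paired_t(pairs):
    """Paired t statistic and degrees of freedom for (male, female) mean pairs."""
    diffs = [m - f for m, f in pairs]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


# Hypothetical evaluations; resident r4 has no female evaluator and is dropped.
rows = [
    ("r1", "M", 3.0), ("r1", "M", 3.5), ("r1", "F", 3.0),
    ("r2", "M", 2.5), ("r2", "F", 3.0),
    ("r3", "M", 4.0), ("r3", "F", 3.5), ("r3", "F", 3.5),
    ("r4", "M", 3.0),
]
means = per_resident_means(rows)
t_stat, df = paired_t(list(means.values()))
print(f"t = {t_stat:.3f} on {df} degrees of freedom")
```

Averaging within resident first keeps each resident as a single paired observation, so residents with many evaluations do not dominate the test.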

A 3-level mixed-effects model with both nested and crossed random effects using restricted maximum likelihood was used to examine the association between milestone evaluation scores and resident gender over time. In our primary model, evaluations (level 1) were nested within residents and attending physicians (crossed at level 2), who were nested in training programs (level 3). Residents were assigned random intercepts. Each model included fixed effects for the amount of time spent in residency, resident gender, and their interaction. To account for potential confounders, factors such as training within a community or academic program, being evaluated by a male or female attending physician, the interaction of attending and resident physician gender, and whether a procedural subcompetency was being evaluated were included as fixed effects in subsequent models. The normality of the standardized residuals was verified using quantile-quantile plots.
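As a reading aid, the primary model described above can be written roughly as follows. The notation is an assumption of this sketch and does not appear in the original analysis: $y_{eijk}$ is the milestone level on evaluation $e$ of resident $i$ by attending physician $j$ in program $k$, $t_{eijk}$ is the time spent in residency (in years) at that evaluation, and $\mathrm{male}_i$ equals 1 for male residents and 0 otherwise. The random intercepts for attending physicians and programs are inferred from the nested and crossed structure described, and should also be read as an assumption.

$$
y_{eijk} = \beta_0 + \beta_1\, t_{eijk} + \beta_2\, \mathrm{male}_i + \beta_3\, \left(t_{eijk} \times \mathrm{male}_i\right) + u_k + r_i + a_j + \varepsilon_{eijk}
$$

Here $u_k$, $r_i$, and $a_j$ are random intercepts for program, resident, and attending physician, and $\varepsilon_{eijk}$ is the residual. Under this reading, $\beta_1$ is the overall rate of milestone attainment, $\beta_2$ is the initial adjustment for male residents, and $\beta_3$ is the difference in rate for male residents. The subsequent models add further fixed-effect terms (program type, attending gender, the attending-by-resident gender interaction, and a procedural-subcompetency indicator) to the right-hand side.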

Differences in training programs were assessed by fitting an analysis of variance model using the mean score per resident for each postgraduate year (PGY) and assessing the training program by resident gender interaction. Analyses were performed using STATA statistical software, version 14 (StataCorp). Statistical significance was presumed at P < .05 (2-tailed test).

Results

Demographic Characteristics

A total of 33 456 direct-observation evaluations were collected from 359 EM residents (237 men [66.0%] and 122 women [34.0%]) by 285 faculty members (194 men [68.1%] and 91 women [31.9%]) during the study period. The proportion of female residents in our study (34.0%) was not significantly different from the proportion of female residents in EM nationally (37.5%; P = .12).15 However, our study sample had a slightly higher proportion of female attending physicians (91 [31.9%]) compared with the national population of EM physicians (25.5%; P = .02).15 Our study included evaluations from 8 training programs (6 academic and 2 community programs) (Table 1). The training programs represent all 4 US Census–designated regions of the United States (Northeast, Midwest, South, West) in a mix of rural, suburban, and urban settings. Training programs ranged from 21 to 54 residents.

Table 1. Characteristics of the Emergency Medicine Resident and Attending Physicians and Evaluations by Gender.

| Characteristic | Men, No. (%) | Women, No. (%) |
|---|---|---|
| Attending physicians (n = 285) | | |
| Total | 194 (68.1) | 91 (31.9) |
| Setting | | |
| Academic program (n = 249 physicians) | 165 (66.3) | 84 (33.7) |
| Community program (n = 36 physicians) | 29 (80.6) | 7 (19.4) |
| Residents (n = 359) | | |
| Total | 237 (66.0) | 122 (34.0) |
| Setting | | |
| Academic program (n = 285 physicians) | 187 (65.6) | 98 (34.4) |
| Community program (n = 74 physicians) | 50 (67.6) | 24 (32.4) |
| Evaluations by resident gender (n = 33 456) | | |
| Postgraduate year | | |
| 1 (n = 9832) | 6898 (70.2) | 2934 (29.8) |
| 2 (n = 13 129) | 8881 (67.6) | 4248 (32.4) |
| 3 (n = 10 495) | 7069 (67.4) | 3426 (32.6) |
| Procedural evaluations | 5094 (67.7) | 2426 (32.3) |
| Evaluations with female attending physician | 6202 (67.2) | 3028 (32.8) |

Because of the adoption of InstantEval by training programs at different times during the study period, this data set represents 350 resident-years of evaluations. A total of 9832 evaluations (29.4%) were of PGY1 residents, 13 129 (39.2%) of PGY2 residents, and 10 495 (31.4%) of PGY3 residents. The mean numbers of evaluations received during the study period were 87 for female residents and 96 for male residents, although this difference was not statistically significant (P = .21). Similarly, the mean numbers of evaluations were 125 for male attending physicians and 101 for female attending physicians, which was also not statistically significant (P = .25). Finally, there were no statistically significant differences in the mean duration or frequency of training gaps between male and female residents (male residents had a mean of 2.77 periods [4 continuous weeks each] with no evaluations vs 2.54 periods with no evaluations for females; P = .85).

Descriptive Analysis

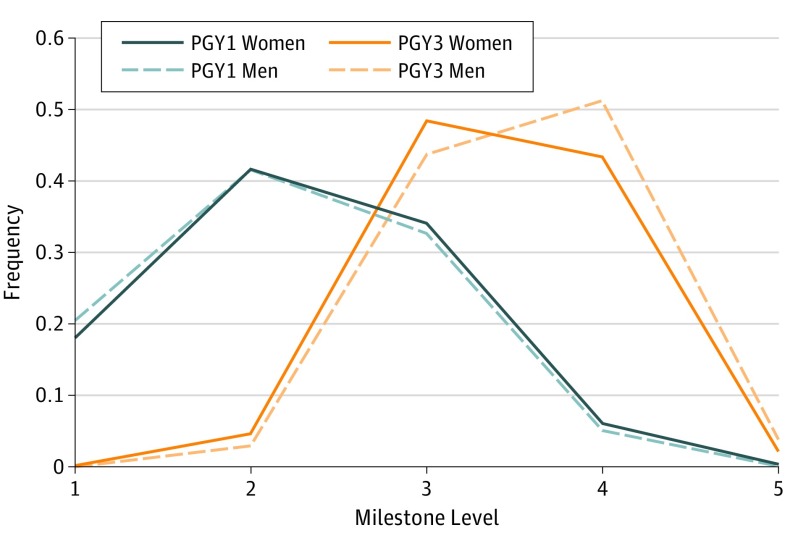

Frequency distributions for the milestone levels assigned to male and female residents in PGY1 and PGY3 are shown in the Figure. The PGY1 score distributions appear to be similar for male and female residents; however, the PGY3 distributions suggest that male residents are evaluated at higher milestone levels more frequently. This trend was observed in 7 of 8 training programs included in the study.

Figure. Frequency Distribution of Milestone Levels for Postgraduate Year (PGY) 1 and PGY3 Attending and Resident Physicians.

Data for the histograms are binned by integer milestone level because few attending physicians chose to use half-milestone intervals (1.5, 2.5, 3.5, and 4.5) when performing evaluations.

Mean scores per EM subcompetency were calculated for PGY1 and PGY3 residents (Table 2). In the first year of residency, male and female residents were evaluated comparably, with female residents receiving higher evaluations in subcompetencies, such as multitasking, diagnosis, and accountability. For PGY3 residents, men were evaluated higher on all 23 subcompetencies. No statistically significant differences were found in the scores given by male and female faculty members, indicating that faculty members of both sexes evaluated female residents lower.

Table 2. Mean Milestone Level for PGY1 and PGY3 Men and Women by Subcompetency.

| Subcompetency | PGY1 Women | PGY1 Men | PGY1 Difference<sup>a</sup> | PGY3 Women | PGY3 Men | PGY3 Difference<sup>a</sup> |
|---|---|---|---|---|---|---|
| Emergency stabilization<sup>b</sup> | 2.38 | 2.37 | 0.01 | 3.6 | 3.75 | −0.16 |
| Focused history and physical examination | 2.52 | 2.46 | 0.06 | 3.62 | 3.78 | −0.16 |
| Diagnostic studies | 2.36 | 2.32 | 0.05 | 3.68 | 3.74 | −0.07 |
| Diagnosis | 2.55 | 2.41 | 0.14 | 3.65 | 3.79 | −0.14 |
| Pharmacotherapy | 2.25 | 2.29 | −0.04 | 3.55 | 3.61 | −0.06 |
| Observation and reassessment | 2.55 | 2.44 | 0.12 | 3.59 | 3.7 | −0.12 |
| Disposition | 2.48 | 2.41 | 0.07 | 3.66 | 3.81 | −0.15 |
| Multitasking (task switching) | 2.62 | 2.44 | 0.18 | 3.73 | 3.88 | −0.15 |
| General approach to procedures<sup>b</sup> | 2.54 | 2.42 | 0.13 | 3.61 | 3.85 | −0.24 |
| Airway management<sup>b</sup> | 2.37 | 2.35 | 0.02 | 3.52 | 3.79 | −0.26 |
| Anesthesia and acute pain management<sup>b</sup> | 2.26 | 2.35 | −0.09 | 3.58 | 3.69 | −0.11 |
| Goal-directed focused ultrasonography<sup>b</sup> | 2.58 | 2.6 | −0.02 | 3.44 | 3.45 | −0.01 |
| Wound management<sup>b</sup> | 2.39 | 2.41 | −0.02 | 3.59 | 3.66 | −0.07 |
| Vascular access<sup>b</sup> | 2.24 | 2.31 | −0.06 | 3.52 | 3.66 | −0.15 |
| Medical knowledge | 2.34 | 2.4 | −0.07 | 3.74 | 3.8 | −0.06 |
| Patient safety | 2.33 | 2.33 | 0.01 | 3.45 | 3.56 | −0.11 |
| Systems-based management | 2.37 | 2.36 | 0.01 | 3.57 | 3.63 | −0.07 |
| Technology | 2.32 | 2.34 | −0.01 | 3.5 | 3.53 | −0.03 |
| Practice-based performance improvement | 2.46 | 2.44 | 0.02 | 3.41 | 3.58 | −0.17 |
| Professional values | 2.6 | 2.52 | 0.08 | 3.68 | 3.75 | −0.08 |
| Accountability | 2.58 | 2.4 | 0.18 | 3.54 | 3.62 | −0.08 |
| Patient-centered communication | 2.61 | 2.55 | 0.07 | 3.64 | 3.73 | −0.09 |
| Team management | 2.41 | 2.43 | −0.01 | 3.56 | 3.72 | −0.16 |

Abbreviation: PGY, postgraduate year.

<sup>a</sup> Difference between female and male resident scores (women minus men).

<sup>b</sup> Procedural milestone.

Mixed-Effects Model Analysis

Results from the mixed-effects linear regression model are given in Table 3. Consistent with the means calculated in Table 2, our model demonstrated that female residents were evaluated higher than male residents at the beginning of residency, but this factor was only weakly significant (−0.07; 95% CI, −0.14 to −0.004). The rate of milestone attainment, defined as the increase in the mean milestone level achieved over time, was 0.52 levels per year (95% CI, 0.49-0.54). Male residents had a significant, 13% higher rate of milestone attainment (0.07 milestone levels per year; 95% CI, 0.04-0.09). This higher rate of attainment led to a higher mean milestone score for men after the first year of residency that continued until graduation. By graduation, men were evaluated approximately 0.15 milestone levels higher than women, equivalent to 3 to 4 months of additional training, given the overall increase of 0.52 milestone levels per year. This effect was consistent for procedural and nonprocedural subcompetencies, as well as across training programs. No overall differences in milestone scores were found based on evaluator gender (effect of 0.02 milestone levels; 95% CI, −0.09 to 0.11) or evaluator-evaluatee gender pairing (effect of −0.02 milestone levels; 95% CI, −0.05 to 0.01), indicating that male and female faculty members evaluated residents similarly. Additional significant predictors of milestone score included time spent in residency (effect of 0.52 levels per year; 95% CI, 0.49-0.54; P < .001) and whether a procedural skill was evaluated (effect of −0.04 levels; 95% CI, −0.06 to −0.03; P < .001) (Table 3).

Table 3. Predictors of Resident Milestone Scores Based on a Mixed-Effects Model<sup>a</sup>.

| Factor | Coefficient (95% CI) | P Value |
|---|---|---|
| Time spent in residency (levels per year) | 0.52 (0.49 to 0.54) | <.001 |
| Time spent in residency adjusted for male residents (levels per year) | 0.07 (0.04 to 0.09) | <.001 |
| Initial adjustment for male residents (levels) | −0.07 (−0.14 to −0.004) | .04 |
| Procedural task (levels) | −0.04 (−0.06 to −0.03) | <.001 |

<sup>a</sup> Time spent in residency adjusted for male residents represents the relative difference in rate of milestone attainment for men compared with women (the resident × time interaction or slope). According to this model, by postgraduate year 3, the mean milestone score will be 0.15 levels higher for men compared with women, representing 3 to 4 months of training. Initial adjustment for male residents represents the relative fixed difference in milestone scores for men compared with women (the intercept for male residents).
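The arithmetic linking the Table 3 coefficients to the "3 to 4 months" figure can be checked with a short calculation. This is a sketch using the rounded published coefficients, so the results only approximate the reported values (which were presumably derived from unrounded estimates); the function names are illustrative.

```python
# Rounded fixed-effect estimates as reported in Table 3.
BASE_RATE = 0.52          # levels per year (time spent in residency)
MALE_RATE_ADJ = 0.07      # additional levels per year for male residents
MALE_INITIAL_ADJ = -0.07  # initial adjustment for male residents (levels)
REPORTED_GAP = 0.15       # gap at graduation quoted in the text (levels)


def gap_at(years):
    """Model-implied male-minus-female difference after `years` of training."""
    return MALE_INITIAL_ADJ + MALE_RATE_ADJ * years


def months_of_training(gap_levels):
    """Convert a gap in milestone levels into months at the overall rate."""
    return 12 * gap_levels / BASE_RATE


relative_rate = MALE_RATE_ADJ / BASE_RATE

print(f"Relative rate difference: {relative_rate:.1%}")
print(f"Gap after 1 year: {gap_at(1):.2f} levels")
print(f"Gap after 3 years: {gap_at(3):.2f} levels")
print(f"Reported gap in months: {months_of_training(REPORTED_GAP):.1f}")
```

With these rounded inputs the implied gap is zero at about 1 year and roughly 0.14 levels at 3 years, in line with the reported 0.15 levels, and both values convert to between 3 and 4 months of training at 0.52 levels per year.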

Discussion

To our knowledge, this is the first study to use the EM milestones, which have strong evidence of validity, to quantify gender bias in trainee evaluations using a longitudinal, multicenter data set. We found that despite starting at similar levels, the rate of milestone attainment throughout training is higher for male than female residents across all EM subcompetencies, leading to a wide gender gap in evaluations by graduation. Because of our data structure, we were able to use robust statistical modeling techniques to test potential mechanisms that may produce the significant gender gap observed, while controlling for other characteristics, such as evaluator gender and grading tendencies.

It is worth exploring the mechanism of these findings. One possibility is that gender differences in this study were at least partially driven by implicit gender bias, defined as an unconscious preference for, or prejudice against, one gender over another. Of importance, evaluators are generally unaware that such biases are operating, and these biases may even be at odds with their professed beliefs. Several aspects of our data support this implicit gender bias hypothesis. We found that men and women were evaluated similarly at the beginning of training, with women, in fact, receiving higher mean scores on several subcompetencies. This finding suggests that male and female residents entered training with similar skills and funds of knowledge. However, as women progressed through the same residency programs, they were consistently evaluated lower than their male colleagues. By PGY3, women were evaluated lower on all 23 EM subcompetencies, including the potentially more objective procedural subcompetencies and potentially more subjective nonprocedural subcompetencies. Such a uniform trend may suggest implicit bias rather than diminished competency or skill, especially considering that men and women began residency with similar skills and knowledge.

Research from the social sciences has yielded a number of insights into conscious and subconscious drivers of gender bias in medical education and the effects they have over time.16,17,18,19,20 Senior residents are expected to assume leadership roles and display agentic traits, such as assertiveness and independence, which are stereotypically identified as male characteristics.18 When female residents assume leadership roles and display agentic qualities during later years of training, they may incur a penalty for violating expected gender roles—a phenomenon that has been described as role incongruity or the likeability penalty.16,18,19,20 Compounding the problem is the concept of stereotype threat, where members of a group characterized by negative stereotypes may actually perform below their actual abilities in situations where the negative stereotype becomes salient.17 Thus, one way to interpret our findings is that a widening gender gap is attributable to the cumulative effects of repeated disadvantages and biases that become increasingly pronounced at the more senior levels of training.

Other factors that may contribute to the observed evaluation gap include disparate opportunities in accessing mentorship, practicing skills, and obtaining meaningful feedback. For example, it has been established that gender plays a strong role in the mentor-mentee relationship.21 However, there are disproportionately fewer female faculty members in EM, which may reduce mentorship opportunities for female residents. It is also possible that male residents had more opportunities to practice their skills in the emergency department, and their higher evaluation scores are attributable to more clinical experience. Although not statistically significant, the lower than expected number of evaluations for female residents may represent less feedback from attending physicians or less participation in observed clinical opportunities.

It is also possible that women have systematic disadvantages in certain domains of clinical practice that are leading to this gap. We found larger differences between men and women in certain subcompetencies, such as airway management and general approach to procedures. A more thorough evaluation of such drivers may allow simple solutions to these problems, such as designing ergonomic laryngoscopes for women or adding protocols to adjust bed height in the case of the airway management subcompetency.

Social determinants, such as motherhood and maternity leave, have been discussed as potential drivers of the gender gap in the workplace in several studies.1,3,22 Such factors would likely be more pronounced during training, which is consistent with our findings. However, few training gaps were detected in our study, and the frequency and duration of these gaps did not differ significantly for male and female residents.

Given the disparity we observed, future research is needed to better understand the mechanisms behind these trends so that we can design effective interventions that promote gender equity in medicine. Although it was beyond the scope of this study, our data include nearly 15 000 text comments along with the numerical evaluations that may provide additional important insights into why the gender gap emerges. In addition, studies of participant observation of medical education have been found to effectively uncover biases. Thus, future research using qualitative methods is warranted to better understand the context in which these evaluations occur.23

Regardless of the specific factors behind our findings, our study highlights the need for awareness of gender bias in residency training, which itself may partially serve to mitigate it. Implementing focused evaluation and communication techniques based on proven models of effective evaluation and feedback strategies, combined with continued recruitment and training efforts to narrow the gender and mentorship gaps in medicine, may also help attenuate gender differences in evaluations during residency.3,17 Training programs may also consider introducing implicit bias training and addressing stereotype threat by promoting a more inclusive and supportive culture.1,17,18

Understanding bias in the NAS is also important because the milestone evaluation system is a critical piece in beginning the transition from the current structure and process system of postgraduate medical education to a competency-based medical education system.13,24 Under a competency-based medical education system, residents will graduate only after demonstrating competency in the core areas of a specialty, which can even lead to variable training lengths from resident to resident. On the basis of the findings of our model, female residents would require an additional 3 to 4 months of training to graduate at the same level as their male counterparts. Because a resident’s milestone evaluations may one day influence how long they spend in training, it is imperative that the evaluation system be rigorously validated and investigated for any possible bias.

Limitations

This study should be interpreted within the context of certain limitations. The influence of sex and gender on evaluations is highly complex, and given the observational nature of our study and the difficulty of establishing causality, many of our explanations will remain speculative until further research provides a fuller understanding. It is possible that we did not attribute gender correctly based on name and photo review. Furthermore, the type of feedback solicited by residents, or given by evaluators, may have varied because of selection bias. In addition, although all programs used the same evaluation tool, use of the app likely varied by program, attending physician, and shift. Although our study includes academic and community training programs throughout the country in urban, suburban, and rural settings of all sizes, our data may not be reflective of all EM programs because only programs that had adopted use of the InstantEval software for resident evaluations were included in the study.

Conclusions

Although male and female EM residents are evaluated similarly at the beginning of residency, the rate of milestone attainment throughout training is higher for male than female residents, leading to a wide gender gap in evaluations across all EM subcompetencies by graduation. Although the specific factors that drive these outcomes remain to be determined, this study highlights the need to be cognizant of gender bias and the necessity of further research in this area.

eFigure. Screenshot of the InstantEval App Displaying the Patient-Centered Communication Subcompetency, Taken on an Apple iPad Mini

References

- 1. Edmunds LD, Ovseiko PV, Shepperd S, et al. Why do women choose or reject careers in academic medicine? a narrative review of empirical evidence. Lancet. 2016;388(10062):2948-2958.

- 2. Wehner MR, Nead KT, Linos K, Linos E. Plenty of moustaches but not enough women: cross sectional study of medical leaders. BMJ. 2015;351:h6311.

- 3. Kuhn GJ, Abbuhl SB, Clem KJ; Society for Academic Emergency Medicine (SAEM) Taskforce for Women in Academic Emergency Medicine. Recommendations from the Society for Academic Emergency Medicine (SAEM) Taskforce on women in academic emergency medicine. Acad Emerg Med. 2008;15(8):762-767.

- 4. Cydulka RK, D’Onofrio G, Schneider S, Emerman CL, Sullivan LM. Women in academic emergency medicine. Acad Emerg Med. 2000;7(9):999-1007.

- 5. Jagsi R, Griffith KA, Jones R, Perumalswami CR, Ubel P, Stewart A. Sexual harassment and discrimination experiences of academic medical faculty. JAMA. 2016;315(19):2120-2121.

- 6. Thackeray EW, Halvorsen AJ, Ficalora RD, Engstler GJ, McDonald FS, Oxentenko AS. The effects of gender and age on evaluation of trainees and faculty in gastroenterology. Am J Gastroenterol. 2012;107(11):1610-1614.

- 7. Rand VE, Hudes ES, Browner WS, Wachter RM, Avins AL. Effect of evaluator and resident gender on the American Board of Internal Medicine evaluation scores. J Gen Intern Med. 1998;13(10):670-674.

- 8. Brienza RS, Huot S, Holmboe ES. Influence of gender on the evaluation of internal medicine residents. J Womens Health (Larchmt). 2004;13(1):77-83.

- 9. Holmboe ES, Huot SJ, Brienza RS, Hawkins RE. The association of faculty and residents’ gender on faculty evaluations of internal medicine residents in 16 residencies. Acad Med. 2009;84(3):381-384.

- 10. Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system–rationale and benefits. N Engl J Med. 2012;366(11):1051-1056.

- 11. Beeson MS, Carter WA, Christopher TA, et al. The development of the emergency medicine milestones. Acad Emerg Med. 2013;20(7):724-729.

- 12. Love JN, Yarris LM, Ankel FK; Council of Emergency Medicine Residency Directors (CORD). Emergency medicine milestones: the next step. Acad Emerg Med. 2015;22(7):847-848.

- 13. Ankel F, Franzen D, Frank J. Milestones: quo vadis? Acad Emerg Med. 2013;20(7):749-750.

- 14. Beeson MS, Holmboe ES, Korte RC, et al. Initial validity analysis of the emergency medicine milestones. Acad Emerg Med. 2015;22(7):838-844.

- 15. Association of American Medical Colleges, Center for Workforce Studies. 2014 Physician Specialty Data Book. Washington, DC: Association of American Medical Colleges; 2014.

- 16. Kolehmainen C, Brennan M, Filut A, Isaac C, Carnes M. Afraid of being “witchy with a ‘b’”: a qualitative study of how gender influences residents’ experiences leading cardiopulmonary resuscitation. Acad Med. 2014;89(9):1276-1281.

- 17. Burgess DJ, Joseph A, van Ryn M, Carnes M. Does stereotype threat affect women in academic medicine? Acad Med. 2012;87(4):506-512.

- 18. Carnes M, Bartels CM, Kaatz A, Kolehmainen C. Why is John more likely to become department chair than Jennifer? Trans Am Clin Climatol Assoc. 2015;126:197-214.

- 19. Eagly AH, Karau SJ. Role congruity theory of prejudice toward female leaders. Psychol Rev. 2002;109(3):573-598.

- 20. Heilman ME, Wallen AS, Fuchs D, Tamkins MM. Penalties for success: reactions to women who succeed at male gender-typed tasks. J Appl Psychol. 2004;89(3):416-427.

- 21. Levine RB, Mechaber HF, Reddy ST, Cayea D, Harrison RA. “A good career choice for women”: female medical students’ mentoring experiences: a multi-institutional qualitative study. Acad Med. 2013;88(4):527-534.

- 22. Leonard JC, Ellsbury KE. Gender and interest in academic careers among first- and third-year residents. Acad Med. 1996;71(5):502-504.

- 23. Jenkins TM. ‘It’s time she stopped torturing herself’: structural constraints to decision-making about life-sustaining treatment by medical trainees. Soc Sci Med. 2015;132(132):132-140.

- 24. Iobst WF, Sherbino J, Cate OT, et al. Competency-based medical education in postgraduate medical education. Med Teach. 2010;32(8):651-656.
