Significance

The peripheral hearing system contains several motor mechanisms that allow the brain to modify the auditory transduction process. Movements or tensioning of either the middle ear muscles or the outer hair cells modifies eardrum motion, producing sounds that can be detected by a microphone placed in the ear canal (e.g., as otoacoustic emissions). Here, we report a form of eardrum motion produced by the brain via these systems: oscillations synchronized with and covarying with the direction and amplitude of saccades. These observations suggest that a vision-related process modulates the first stage of hearing. In particular, these eye movement-related eardrum oscillations may help the brain connect sights and sounds despite changes in the spatial relationship between the eyes and the ears.

Keywords: reference frame, otoacoustic emissions, middle ear muscles, saccade, EMREO

Abstract

Interactions between sensory pathways such as the visual and auditory systems are known to occur in the brain, but where they first occur is uncertain. Here, we show a multimodal interaction evident at the eardrum. Ear canal microphone measurements in humans (n = 19 ears in 16 subjects) and monkeys (n = 5 ears in three subjects) performing a saccadic eye movement task to visual targets indicated that the eardrum moves in conjunction with the eye movement. The eardrum motion was oscillatory and began as early as 10 ms before saccade onset in humans or with saccade onset in monkeys. These eardrum movements, which we dub eye movement-related eardrum oscillations (EMREOs), occurred in the absence of a sound stimulus. The amplitude and phase of the EMREOs depended on the direction and horizontal amplitude of the saccade. They lasted throughout the saccade and well into subsequent periods of steady fixation. We discuss the possibility that the mechanisms underlying EMREOs create eye movement-related binaural cues that may aid the brain in evaluating the relationship between visual and auditory stimulus locations as the eyes move.

Visual information can aid hearing, such as when lip reading cues facilitate speech comprehension. To derive such benefits, the brain must first link visual and auditory signals that arise from common locations in space. In species with mobile eyes (e.g., humans, monkeys), visual and auditory spatial cues bear no fixed relationship to one another but change dramatically and frequently as the eyes move, about three times per second over an 80° range of space. Accordingly, considerable effort has been devoted to determining where and how the brain incorporates information about eye movements into the visual and auditory processing streams (1). In the primate brain, all of the regions previously evaluated have shown some evidence that eye movements modulate auditory processing [inferior colliculus (2–6), auditory cortex (7–9), parietal cortex (10–12), and superior colliculus (13–17)]. Such findings raise the question of where in the auditory pathway eye movements first impact auditory processing. In this study, we tested whether eye movements affect processing in the auditory periphery.

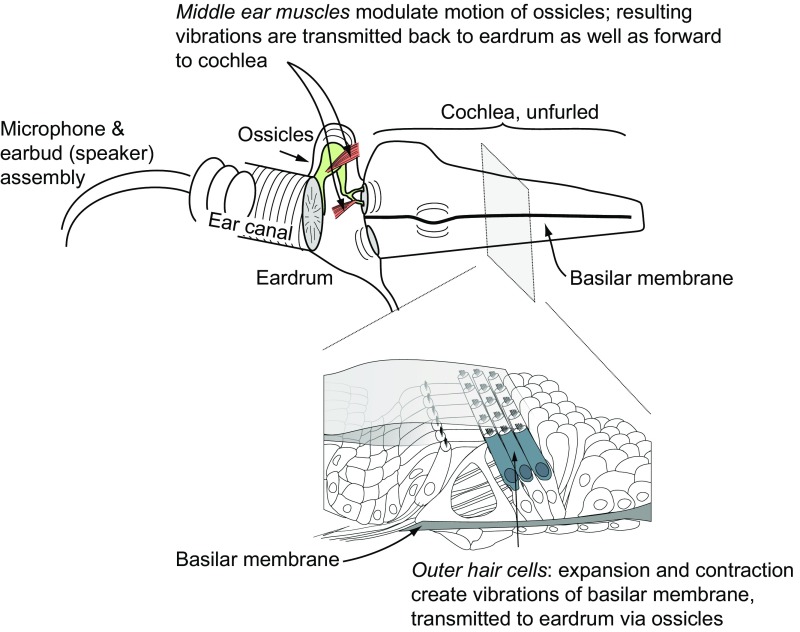

The auditory periphery possesses at least two means of tailoring its processing in response to descending neural control (Fig. 1). First, the middle ear muscles (MEMs), the stapedius and tensor tympani, attach to the ossicles that connect the eardrum to the oval window of the cochlea. Contraction of these muscles tugs on the ossicular chain, modulating middle ear sound transmission and moving the eardrum. Second, within the cochlea, the outer hair cells (OHCs) are mechanically active and modify the motion of both the basilar membrane and, through mechanical coupling via the ossicles, the eardrum [i.e., otoacoustic emissions (OAEs)]. In short, the actions of the MEMs and OHCs affect not only the response to incoming sound but also transmit vibrations backward to the eardrum. Both the MEMs and OHCs are subject to descending control by signals from the central nervous system (reviewed in refs. 18–20), allowing the brain to adjust the cochlear encoding of sound in response to previous or ongoing sounds in either ear and based on global factors, such as attention (21–27). The collective action of these systems can be measured in real time with a microphone placed in the ear canal (28). We used this technique to study whether the brain sends signals to the auditory periphery concerning eye movements, the critical information needed to reconcile the auditory spatial and visual spatial worlds.

Fig. 1.

Motile cochlear OHCs expand and contract in a way that depends both on the incoming sound and on the descending input received from the superior olivary complex in the brain. OHC motion moves the basilar membrane, and subsequently the eardrum, via fluid/mechanical coupling of these membranes through the ossicular chain. The MEMs also pull on the ossicles, directly moving the eardrum. These muscles are innervated by motor neurons near the facial and trigeminal nerve nuclei, which receive input from the superior olive bilaterally. In either case, eardrum motion can be measured with a microphone in the ear canal.

Results

The Eardrums Move with Saccades.

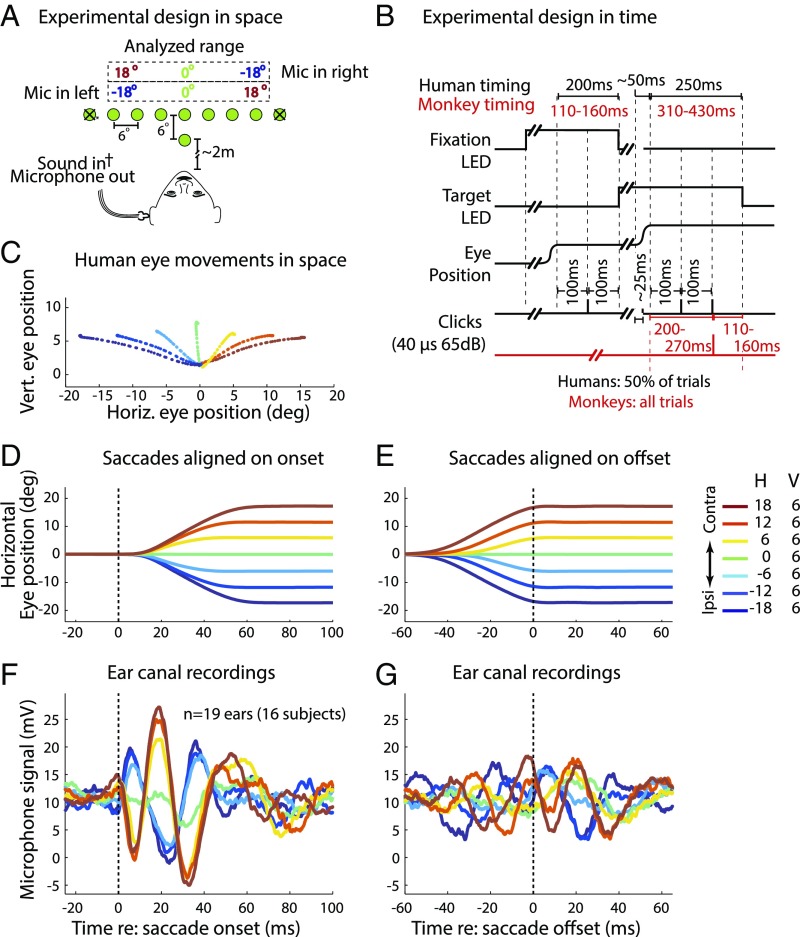

Sixteen humans executed saccades to visual targets varying in horizontal position (Fig. 2A). Half of the trials were silent, whereas the other half incorporated a series of task-irrelevant clicks presented before, during, and at two time points after the saccades (Fig. 2B). This allowed us to compare the effects of eye movement-related neural signals on the auditory periphery in silence as well as in the presence of sounds often used to elicit OAEs.

Fig. 2.

Experimental design and results. (A) Recordings for all subjects were made via a microphone (Mic) in the ear canal set into a custom-fit ear bud. On each trial, a subject fixated on a central LED and then made a saccade to a target LED (−24° to +24° horizontally in 6° increments and 6° above the fixation point) without moving his/her head. The ±24° locations were included on only 4.5% of trials and were excluded from analysis (Methods); other target locations were equally likely (∼13% frequency). (B) Humans (black text) received randomly interleaved silent and click trials (50% each). Clicks were played via a sound transducer coupled with the microphone at four times during these trials: during the initial fixation and saccade and at 100 ms and 200 ms after target fixation. Monkeys’ trials had minor timing differences (red text), and all trials had one click at 200–270 ms after target fixation (red click trace). (C) Average eye trajectories for one human subject and session for each of the included targets are shown; colors indicate saccade target locations from ipsilateral (blue) to contralateral (red). deg, degrees; Horiz., horizontal; Vert., vertical. Mean eye position is shown as a function of time, aligned on saccade onset (D) and offset (E). H, horizontal; V, vertical. Mean microphone recordings of air pressure in the ear canal, aligned on saccade onset (F) and offset (G), indicate that the eardrum oscillates in conjunction with eye movements. The phase and amplitude of the oscillation varied with saccade direction and amplitude, respectively. The oscillations were, on average, larger when aligned on saccade onset than when aligned on saccade offset.

We found that the eardrum moved when the eyes moved, even in the absence of any externally delivered sounds. Fig. 2 D and E shows the average eye position as a function of time for each target location for the human subjects on trials with no sound stimulus, aligned with respect to (w.r.t.) saccade onset (Fig. 2D) and offset (Fig. 2E). The corresponding average microphone voltages are similarly aligned and color-coded for saccade direction and amplitude (Fig. 2 F and G). The microphone readings oscillated, time-locked to both saccade onset and offset, with a phase that depended on saccade direction. When the eyes moved toward a visual target contralateral to the ear being recorded, the microphone voltage deflected positively, indicating a change in ear canal pressure, beginning about 10 ms before eye movement onset. This was followed by a larger negative deflection about 5 ms after the onset of the eye movement, after which additional oscillatory cycles occurred. The period of the oscillation was typically about 30 ms (∼33 Hz). For saccades in the opposite (ipsilateral) direction, the microphone signal followed a similar pattern but with opposite sign: The initial deflection of the oscillation was negative. The amplitude of the oscillations appeared to vary with the amplitude of the saccades, with larger saccades associated with larger peaks.

Comparison of the traces aligned on saccade onset vs. saccade offset reveals that the oscillations continue into at least the initial portion of the period of steady fixation that followed saccade offset. The eye movement-related eardrum oscillation (EMREO) observed following saccade offset was similar in form to that observed during the saccade itself when the microphone traces were aligned on saccade onset, maintaining their phase and magnitude dependence on saccade direction and length. The fact that this postsaccadic continuation of the EMREO is not seen when the traces are aligned on saccade onset suggests a systematic relationship between the offset of the movement and the phase of the EMREO, such that variation in saccade duration obscures the ongoing nature of the EMREO.

To characterize the statistical significance of the relationship between the direction and amplitude of the eye movements and the microphone measurements of ear canal pressure across time, we calculated a regression of microphone voltage vs. saccade target location for each 0.04-ms sample from 25 ms before to 100 ms after saccade onset. The regression was conducted separately for each individual subject. Since this involved many repeated statistical tests, we compared the real results with a Monte Carlo simulation in which we ran the same analysis but scrambled the relationship between each trial and its true saccade target location (details are provided in Methods). As shown in Fig. 3A, the slope of the regression involving the real data (red trace, averaged across subjects) frequently deviates from zero, indicating a relationship between saccade amplitude and direction and microphone voltage. The value of the slope oscillates during the saccade period, beginning 9 ms before and continuing until about 60 ms after saccade onset, matching the oscillations evident in the data in Fig. 2F. In contrast, the scrambled data trace (Fig. 3A, gray) is flat during this period. Similarly, the effect size (R² values) of the real data deviates from the scrambled baseline over a similar but slightly longer time frame, dropping back to the scrambled data baseline at 75–100 ms after saccade onset (Fig. 3C). Fig. 3E shows the percentage of subject ears showing a statistically significant (P < 0.05) effect of saccade target location at each time point. This curve reaches 80–100% during the peaks of the oscillations observed at the population level. A similar, although weaker, dependence of the EMREO on target location was observed when the recordings were synchronized to saccade offset (Fig. 3 B, D, and F).

We repeated this test in an additional dataset (human dataset II; Methods) involving finer-grained sampling within each hemifield (i.e., analyzing target locations within the contralateral or ipsilateral hemifield only). This analysis confirmed the relationship between the microphone voltage and saccade amplitude, as well as saccade direction (Fig. S1).
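
The time-wise regression and its scrambled control can be sketched compactly. This is a minimal illustration, not the authors' code: it assumes NumPy, trials stored as a trials × samples array already aligned on saccade onset, and a hypothetical shuffle count, and the function names are ours. The exact Monte Carlo procedure is the one given in Methods. The sketch fits a least-squares slope and R² at every sample, then repeats the fit with target labels permuted across trials to give a null baseline.

```python
import numpy as np


def pointwise_regression(mic, targets):
    """Regress microphone voltage on saccade target separately at every time sample.

    mic:     array of shape (n_trials, n_samples), one aligned trace per trial
    targets: array of shape (n_trials,), horizontal target location in degrees
    Returns the least-squares slope and R^2 for each sample.
    """
    mic = np.asarray(mic, dtype=float)
    x = np.asarray(targets, dtype=float)
    x = x - x.mean()                      # centring absorbs the intercept
    y = mic - mic.mean(axis=0)
    slope = (x @ y) / np.sum(x ** 2)      # shape (n_samples,)
    residuals = y - np.outer(x, slope)
    r2 = 1.0 - np.sum(residuals ** 2, axis=0) / np.sum(y ** 2, axis=0)
    return slope, r2


def shuffled_baseline(mic, targets, n_shuffles=100, seed=0):
    """Repeat the regression after randomly reassigning targets to trials."""
    rng = np.random.default_rng(seed)
    n_samples = np.asarray(mic).shape[1]
    slopes = np.empty((n_shuffles, n_samples))
    r2s = np.empty((n_shuffles, n_samples))
    for i in range(n_shuffles):
        slopes[i], r2s[i] = pointwise_regression(mic, rng.permutation(targets))
    return slopes, r2s
```

Centring both variables lets one matrix product give every sample's slope at once, which matters when there are thousands of samples per trace.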

Fig. 3.

Regression results for data aligned to saccade onset (A, C, and E) and offset (B, D, and F). (A and B) Mean ± SEM slope of regression of microphone voltage vs. saccade target location (conducted separately for each subject and then averaged across the group) at each time point for real (red) vs. scrambled (gray) data. In the scrambled Monte Carlo analysis, the true saccade target locations were shuffled and arbitrarily assigned to the microphone traces for individual trials. (C and D) Proportion of variance (Var.) accounted for by regression fit (R²). (E and F) Percentage of subject ears showing P < 0.05 for the corresponding time point. Additional details are provided in Methods.

EMREO Phase Is Related to Relative, Not Absolute, Saccade Direction.

Comparison of the EMREOs in subjects who had both ears recorded confirmed that the direction of the eye movement relative to the recorded ear determines the phase pattern of the EMREO. Fig. 4 shows the results for one such subject. When the left ear was recorded, leftward eye movements were associated with a positive voltage peak at saccade onset (Fig. 4A, blue traces), whereas when the right ear was recorded, that same pattern occurred for rightward eye movements (Fig. 4B, blue traces). In terms of eardrum motion, this implies that at a given moment during a given saccade, one eardrum bulges inward while the other bulges outward. Which ear moves in which direction is determined by the direction of the eye movement relative to that ear (contralateral vs. ipsilateral), not by whether the movement is directed to the left or right in space. The other subjects tested in both ears showed similar patterns, and those tested in one ear were also generally consistent, although there were some individual idiosyncrasies in timing and amplitude (the remaining individual subject data are shown in Fig. S2).

Fig. 4.

Recordings in left (A) and right (B) ears in an individual human subject. The eye movement-related signals were similar in the two ears when saccade direction is defined with respect to the recorded ear. The remaining individual subjects’ data can be found in Fig. S2. Contra, contralateral; Ipsi, ipsilateral; Mic, microphone.

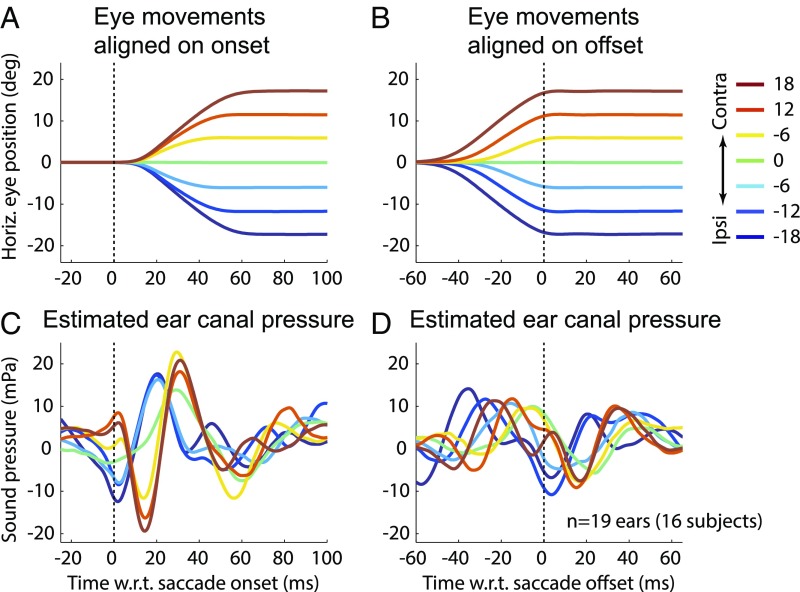

Relating the EMREO Signal to Eardrum Displacement.

At the low frequencies characteristic of EMREO oscillations (∼30 Hz), mean eardrum displacement is directly proportional to the pressure produced in the small volume enclosed between the microphone and the eardrum (∼1 cc for humans). We converted the EMREO voltage signal to pressure using the microphone calibration curve. At frequencies below about 200 Hz, the nominal microphone sensitivity quoted by the manufacturer does not apply, so we used the complex frequency response measured by Christensen et al. (29) (details are provided in Methods). The group average EMREO had peak-to-peak pressure changes of about 42 mPa for 18° contralateral saccades, with an initial phase opposite to that of the microphone voltage (Fig. 5C, maximum excursion of dark red traces). This pressure corresponds to about 57-dB peak-equivalent sound pressure level (SPL) and, using ear canal dimensions typical of the adult ear (30), to a mean peak-to-peak eardrum displacement of ∼4 nm. Thus, the inferred initial deflection of the eardrum was always opposite to the direction of the saccade: When the eyes moved left, the eardrums moved right, and vice versa.
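
The two conversions above can be checked with a short calculation. This is an order-of-magnitude sketch under our own assumptions, not the authors' conversion: peak-equivalent SPL is taken as the level of a sinusoid with the same peak-to-peak pressure, and the displacement step treats the eardrum as a rigid piston compressing a sealed 1-cc cavity adiabatically at atmospheric pressure. The 65-mm² eardrum area is a hypothetical stand-in for the ear canal dimensions of ref. 30, which are not reproduced here.

```python
import math

P_REF = 20e-6        # Pa, standard reference pressure for dB SPL
P_ATM = 101_325.0    # Pa, assumed static pressure in the sealed ear canal
GAMMA = 1.4          # assumed adiabatic index of air


def peak_equivalent_spl(p_peak_to_peak):
    """dB SPL of a sinusoid having the same peak-to-peak pressure (Pa)."""
    p_rms = (p_peak_to_peak / 2) / math.sqrt(2)
    return 20 * math.log10(p_rms / P_REF)


def eardrum_displacement(p_peak_to_peak, cavity_volume_m3, eardrum_area_m2):
    """Piston approximation: the volume change that produces the pressure change
    in a closed cavity, divided by the area of the moving eardrum."""
    delta_volume = cavity_volume_m3 * p_peak_to_peak / (GAMMA * P_ATM)
    return delta_volume / eardrum_area_m2


p = 0.042                                      # 42 mPa peak to peak
print(round(peak_equivalent_spl(p), 1))        # 57.4
print(eardrum_displacement(p, 1e-6, 65e-6))    # roughly 4.6e-09 m
```

The SPL figure reproduces the 57 dB quoted in the text; the displacement lands in the same few-nanometre range as the reported ∼4 nm, with the exact value depending on the assumed area and volume.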

Fig. 5.

Estimated EMREO pressure for human subjects at saccade onset (A and C) and saccade offset (B and D). Pressures were obtained from the measured microphone voltage using the microphone’s complex frequency response measured at low frequencies as described by Christensen et al. (29). Contra, contralateral; deg, degrees; Horiz., horizontal; Ipsi, ipsilateral.

EMREOs in Monkeys.

As noted earlier, eye movements are known to affect neural processing in several areas of the auditory pathway (2–9). Because this previous work was conducted using nonhuman primate subjects, we sought to determine whether EMREOs also occur in monkeys. We tested the ears of rhesus monkeys (Macaca mulatta; n = 5 ears in three monkeys) on a single trial type that was a hybrid of the two used for the humans: Trials were silent until after the eye movement, after which a single click was delivered with a variable delay of 200–270 ms. EMREOs were observed with timing and waveforms roughly similar to those observed in the human subjects, both at the population level (Fig. 6 B–D) and at the individual subject level (Fig. 6E, individual traces are shown in Fig. S3). The time-wise regression analysis suggests that the monkey EMREO begins at about the time of the saccade and reaches a robust level about 8 ms later (Fig. 6 C–E).

Fig. 6.

Eye position (A), microphone signal of ear canal pressure (B), and results of point-by-point regression (C–E) for monkey subjects (n = 5 ears). All analyses were calculated in the same way as for the human data (Figs. 2 and 3). Contra, contralateral; deg, degrees; Ipsi, ipsilateral.

Controls for Electrical Artifact.

Control experiments ruled out electrical artifacts as possible sources of these observations. In particular, we were concerned that the microphone's circuitry could have acted as an antenna and picked up eye movement-related electrical signals, either from the eye movement measurement system used in monkeys (scleral eye coils) or from some other component of the experimental environment: electrooculographic signals resulting from the electrical dipole of the eye, or myogenic artifacts, such as electromyographic signals, originating from the extraocular, facial, or auricular muscles. If such artifacts contributed to our measurements, they should have continued to do so when the microphone was acoustically plugged without additional electrical shielding. Accordingly, we selected four subjects with prominent effects of eye movements on the microphone signal (Fig. 7A) and repeated the test while the acoustic port of the microphone was plugged (Fig. 7B). The eye movement-related effects were no longer evident when the microphone was acoustically occluded (Fig. 7B). This indicates that eye movement-related changes in the microphone signal stem from its capacity to measure acoustic signals rather than from electrical artifacts. Additionally, EMREOs were not observed when the microphone was placed in a 1-mL syringe (the approximate volume of the ear canal) and positioned directly behind the pinna of one human subject (Fig. 7C). In this configuration, any electrical contamination should be similar to that in the regular experiment. We saw none, supporting the interpretation that the regular ear canal microphone measurements are detecting eardrum motion and not electrical artifact.

Fig. 7.

EMREOs recorded normally (A) are not observed when the microphone input port is plugged to eliminate acoustic, but not electrical, contributions to the microphone signal (B). The plugged microphone sessions were run as normal sessions except that, after calibration, the microphone was placed in a closed earbud before the session continued (n = 4 subjects). (C) Similarly, EMREOs were not evident when the microphone was placed in a test tube behind a human subject's pinna while he/she performed the experiment as normal. Contra, contralateral; Ipsi, ipsilateral.

EMREOs and Click Stimuli.

EMREOs occurred not only in silence but also when sounds were presented. During half of our trials for human subjects, acoustic clicks (65-dB peak-equivalent SPL) were delivered during the initial fixation, during the saccade to the target, and at both 100 ms and 200 ms after target fixation was obtained. Clicks presented during the saccade were superimposed on the ongoing EMREO (Fig. 8B1). Subtracting the click waveform measured during the fixation period (Fig. 8A1) indicated that the EMREO was not appreciably changed by the presence of the click (Fig. 8B2). Unlike saccade onset or offset, the click did not appear to reset the phase of the EMREO.
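
The click-removal step amounts to template subtraction. The sketch below is one way to do it, assuming NumPy, a click waveform that does not change over the trial, and known click onset samples; the helper names are hypothetical. The source does not specify whether each trial's own fixation click or an across-trial average served as the template, and the function below supports either (pass a single trial for the former). If sounds and EMREOs superimpose linearly, the residual should show no distortion at the click time.

```python
import numpy as np


def click_template(trials, onsets, length):
    """Average the click waveform across reference epochs (e.g. the click
    delivered during initial fixation).
    Assumes every epoch [onset, onset + length) lies inside its trial."""
    epochs = [np.asarray(trial, dtype=float)[on:on + length]
              for trial, on in zip(trials, onsets)]
    return np.mean(epochs, axis=0)


def subtract_click(trace, template, onset):
    """Return a copy of one trial's trace with the click template removed,
    starting at sample `onset`."""
    residual = np.asarray(trace, dtype=float).copy()
    end = min(onset + len(template), len(residual))
    residual[onset:end] -= template[: end - onset]
    return residual
```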

Fig. 8.

Clicks do not appear to alter EMREOs. (Upper Row) Mean microphone signals for human subjects (A–D) and monkeys (E) for click presentations at various time points are shown: during the initial fixation (A), during the saccade (B, ∼20 ms after saccade onset), and 100 ms (C) and 200 ms (D) after target fixation was obtained in humans, and 200–270 ms after target fixation in monkeys (E). (Insets) Zoomed-in views of the periclick timing for a more detailed view (gray backgrounds). (Middle, B–D) Residual microphone signal after the first click in each trial was subtracted (human subjects) is shown. There were no obvious distortions in the EMREOs at the time of the (removed) click, suggesting that the effects of EMREOs interact linearly with incoming sounds. (Lower, A–E) Mean peak-to-peak amplitude of the clicks (mean ± SE) is shown. There were no clear differences in the peak click amplitudes for any epochs, indicating that the acoustic impedance did not change as a function of saccade target location. Contra, contralateral; Ipsi, ipsilateral.

Clicks delivered after the eye movement was complete (Fig. 8 C1, C2, D1, and D2) revealed no new sound-triggered effects attributable to the different static eye fixation positions achieved by the subjects by that point of the trial. Results in monkeys, for which only one postsaccadic click was delivered (at 75-dB peak-equivalent SPL), were similar (Fig. 8E).

Finally, we tested the peak-to-peak amplitude of the microphone signal corresponding to the click itself. Any changes in the recorded amplitude of a click despite the same voltage having been applied to the earbud speaker would indicate changes to the acoustic impedance of the ear as a function of saccade direction. We calculated the peak-to-peak amplitude of the click as a function of saccade target location, but found no clear evidence of any saccade target location-related differences during any of the temporal epochs (Fig. 8 A3–E3). If the mechanisms causing the eardrum to move produce concomitant changes in the dynamic stiffness of the eardrum, they are too small to be detected with this technique.
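
The impedance check reduces to grouping click amplitudes by saccade target and summarizing each group as mean ± SE, as plotted in Fig. 8 A3–E3. A minimal standard-library sketch follows; the function name and the epoch representation (one list of microphone samples per click) are our assumptions, and no significance test is implied.

```python
import math
from collections import defaultdict
from statistics import mean, stdev


def click_p2p_by_target(click_epochs, targets):
    """Mean and standard error of the click's peak-to-peak amplitude,
    grouped by saccade target location.

    click_epochs: sequences of microphone samples, each spanning one click
    targets:      saccade target (degrees) for the trial each epoch came from
    """
    groups = defaultdict(list)
    for epoch, target in zip(click_epochs, targets):
        groups[target].append(max(epoch) - min(epoch))
    summary = {}
    for target in sorted(groups):
        amps = groups[target]
        se = stdev(amps) / math.sqrt(len(amps)) if len(amps) > 1 else float("nan")
        summary[target] = (mean(amps), se)
    return summary
```

Because the same drive voltage is applied on every trial, a flat profile across targets is what an unchanged acoustic impedance predicts.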

Discussion

Here, we demonstrated a regular and predictable pattern of oscillatory movements of the eardrum associated with movements of the eyes. When the eyes move left, both eardrums initially move right and then oscillate for three to four cycles, with another one to two cycles occurring after the eye movement is complete. Eye movements in the opposite direction produce an oscillatory pattern in the opposite direction. This EMREO is present in both human and nonhuman primates and appears similar across both ears within individual subjects.

The impact of the eye movement-related process revealed here on subsequent auditory processing remains to be determined. In the case of OAEs, the emissions themselves are easily overlooked, near-threshold sounds in the ear canal. However, the cochlear mechanisms that give rise to them have a profound impact on the sensitivity and dynamic range of hearing (31, 32). OAEs thus serve as a biomarker for the health of this important mechanism. EMREOs may constitute a similar biomarker indicating that information about the direction, amplitude, and time of occurrence of saccadic eye movements has reached the periphery and that such information is affecting underlying mechanical processes that may not themselves be readily observed. EMREO assessment may therefore have value in understanding auditory and visual-auditory deficits that contribute to disorders ranging from language impairments to autism and schizophrenia, as well as multisensory deficits associated with traumatic brain injuries.

One important unresolved question is whether the oscillatory nature of the effect observed here reflects the most important aspects of the phenomenon. It may be that the oscillations reflect changes to internal structures within the ear that persist statically until the eye moves again. If so, then the impact on auditory processing would not be limited to the period of time when the eardrum is actually oscillating. Instead, processing could be affected at all times, updating to a new state with each eye movement.

If the oscillations themselves are the most critical element of the phenomenon, and auditory processing is affected only during these oscillations, then it becomes important to determine what proportion of the time they occur. In our paradigm, we were able to demonstrate oscillations occurring over a total period of ∼110 ms, based on the aggregate results from aligning on saccade onset and offset. They may continue longer than that, but with a phase that changes in relation to some unmeasured or uncontrolled variable: As time passes after the eye movement, the phase on individual trials might drift such that the oscillation disappears from the across-trial average. Even considering only the demonstrated ∼110 ms per saccade, with the eyes moving about three times per second under normal conditions, EMREOs would be present about one-third of the time.

Regardless, evidence for a possible influence on auditory processing can be seen in the size of EMREOs. A head-to-head comparison of EMREOs with OAEs is difficult because auditory detection thresholds are higher in the frequency range of EMREOs than in that of OAEs, but EMREOs appear to be at least comparable to, and possibly larger than, OAEs. For 18° horizontal saccades, EMREOs produce a maximum peak-equivalent pressure of about 57-dB SPL, whereas click-evoked OAEs range from ∼0- to 20-dB SPL in healthy ears. The similar or greater scale of EMREOs supports the interpretation that they, too, likely reflect underlying mechanisms strong enough to make a meaningful contribution to auditory processing.
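
To put the quoted levels on a linear scale, a decibel difference converts to a sound pressure ratio of 10^(ΔL/20). The sketch below applies this to the numbers in the text only. It is a nominal comparison under the caveat already stated: the EMREO figure is a peak-equivalent level of a ∼30-Hz oscillation while OAE levels are measured at higher frequencies, so the ratio says nothing about relative audibility.

```python
def pressure_ratio(level_a_db, level_b_db):
    """Ratio of sound pressures corresponding to a difference in dB SPL."""
    return 10 ** ((level_a_db - level_b_db) / 20)


EMREO_DB = 57.0                      # peak-equivalent SPL for 18-degree saccades
for oae_db in (0.0, 20.0):           # quoted click-evoked OAE range
    print(oae_db, round(pressure_ratio(EMREO_DB, oae_db)))
```

This prints ratios of about 708 and 71, i.e. the EMREO pressure is roughly 70 to 700 times the quoted OAE pressures.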

In whatever fashion EMREOs, or their underlying mechanism, contribute to auditory processing, we surmise that the effect concerns the localization of sounds relative to the visual scene or by means of eye movements. Determining whether a sight and a sound arise from a common spatial position requires knowing the relationship between the visual eye-centered and auditory head-centered reference frames. Eye position acts as a conversion factor from eye- to head-centered coordinates (1), and eye movement-related signals have been identified in multiple auditory-responsive brain regions (2–17). The direction/phase and amplitude of EMREOs contain information about the direction and amplitude of the accompanying eye movements, which places them well to play a causal role in this process.
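
The role of eye position as a conversion factor can be made concrete with a deliberately simplified model. This sketch assumes horizontal positions only and a purely additive relation (head-centred = eye-centred + eye position), ignoring vertical components and eye torsion; the function names and the 5° matching tolerance are hypothetical. It shows why a sound fixed relative to the head shifts in eye-centred terms with every saccade.

```python
def eye_to_head(azimuth_eye_deg, eye_position_deg):
    """Horizontal location relative to the head, given a location relative to gaze."""
    return azimuth_eye_deg + eye_position_deg


def head_to_eye(azimuth_head_deg, eye_position_deg):
    """Horizontal location relative to gaze, given a location relative to the head."""
    return azimuth_head_deg - eye_position_deg


def sight_and_sound_match(visual_eye_deg, sound_head_deg, eye_position_deg,
                          tolerance_deg=5.0):
    """Do a visual stimulus (eye-centred) and a sound (head-centred) share a location?"""
    visual_head_deg = eye_to_head(visual_eye_deg, eye_position_deg)
    return abs(visual_head_deg - sound_head_deg) <= tolerance_deg


# A sound fixed 12 deg right of the head, before and after a saccade
print(head_to_eye(12.0, 0.0))    # 12.0 while looking straight ahead
print(head_to_eye(12.0, -6.0))   # 18.0 after looking 6 deg left
```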

Note that we view the EMREO as most likely contributing to normal and accurate sound localization behavior. Sounds can be accurately localized using eye movements regardless of initial eye position (33), and this is true even for brief sounds presented when the eyes are in flight (34). Although some studies have found subtle influences of eye position on sound perception tasks (35–42), such effects are consistent only when prolonged fixation is involved (43–45). Holding the eyes steady for seconds to minutes represents a substantial departure from the time frame in which eye movements normally occur. It is possible that deficiencies in the EMREO system under such circumstances contribute to these inaccuracies.

The source of the signals that cause EMREOs is not presently known. Because the EMREOs precede or coincide with actual movement onset, it appears likely that they derive from a copy of the motor command to generate the eye movement rather than from a proprioceptive signal from the orbits, which would necessarily lag the actual eye movement. This centrally generated signal must then affect the activity of either MEMs or OHCs, or a combination of both.

Based on similarities between our recordings and known physiological activity of the MEMs, we presume that the mechanism behind this phenomenon is most likely the MEMs. Specifically, the frequency (around 20–40 Hz) is similar to oscillations observed in previous recordings of both the tensor tympani and stapedius muscles (46–51). Furthermore, given the measured fall-off in reverse middle ear transmission at low frequencies (52), it seems unlikely that OHC activity could produce ear canal sounds of the magnitude observed (42-mPa peak-to-peak or 57-dB peak-equivalent SPL). Although MEMs are often associated with bilaterally attenuating loud environmental and self-generated sounds (which their activity may precede), they are also known to be active in the absence of explicit auditory stimuli, particularly during rapid eye movement sleep (53–56) and nonacoustic startle reflexes (51, 57–61), and have been found to exhibit activity associated with movements of the head and neck in awake cats (57, 62). (This latter observation is worthy of further investigation; perhaps there is also a head movement-related eardrum oscillation to facilitate conversion of auditory information into a body-centered reference frame.)

More generally, we have demonstrated that multisensory interactions occur at the most peripheral possible point in the auditory system and that this interaction is both systematic and substantial. This observation builds on studies showing that attention, either auditory-guided or visually guided, can also modulate the auditory periphery (21–27). Our findings also raise the intriguing possibility that efferent pathways in other sensory systems [e.g., those leading to the retina (63–73)] also carry multisensory information to help refine peripheral processing. This study contributes to an emerging body of evidence suggesting that the brain is best viewed as a dynamic system in which top-down signals modulate feed-forward signals; that is, the brain integrates top-down information early in sensory processing to make the best-informed decision about the world with which it has to interact.

Methods

The data in this study are available at https://figshare.com.

Human Subjects and Experimental Paradigm.

Human dataset I.

Human subjects (n = 16, eight females, aged 18–45 y; participants included university students as well as young adults from the local community) were involved in this study. All procedures involving human subjects were approved by the Duke University Institutional Review Board. Subjects had apparently normal hearing and normal or corrected vision. Informed consent was obtained from all participants before testing, and all subjects received monetary compensation for participation. Stimulus (visual and auditory) presentation, data collection, and offline analysis were run on custom software utilizing multiple interfaces [behavioral interface and visual stimulus presentation: Beethoven software (eye position sampling rate: 500 Hz), Ryklin, Inc.; auditory stimulus presentation and data acquisition: Tucker Davis Technologies (microphone sampling rate: 24.441 kHz); and data storage and analysis: MATLAB; MathWorks].

Subjects were seated in a dark, sound-attenuating room. Head movements were minimized using a chin rest, and eye movements were tracked with an infrared camera (EyeLink 1000 Plus). Subjects performed a simple saccade task (Fig. 2 A and B). The subject initiated each trial by obtaining fixation on an LED located at 0° in azimuth and elevation and about 2 m away. After 200 ms of fixation, the central LED was extinguished and a target LED located 6° above the horizontal meridian and ranging from −24° to 24°, in intervals of 6°, was illuminated. The most eccentric targets (±24°) were included even though they lie slightly beyond the range within which gaze shifts are normally made without accompanying head movements, typically about 20° (74, 75), because we found in preliminary testing that including these locations improved performance for the next most eccentric locations (i.e., the ±18° targets). However, because these (±24°) locations were difficult for subjects to perform well, we presented them on only 4.5% of the trials (in comparison to 13% for each of the other locations) and excluded them from analysis. After subjects made saccades to the target LED, they maintained fixation on it (9° window diameter) for 250 ms until the end of the trial. If fixation was dropped (i.e., if the eyes traveled outside of the 9° window at any point throughout the initial or target fixation period), the trial was terminated and the next trial began.
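
The target schedule and fixation rule can be summarized in a few lines. This is an illustrative sketch only; the study used the Beethoven software, not this code. The names are hypothetical, and treating the 9° window as a circle centred on the target is our assumption. The weights reproduce the stated frequencies (4.5% for each ±24° target, 13% for each of the other seven), which sum to 100%.

```python
import math
import random

# Horizontal target positions (degrees); every target is also 6 degrees above fixation.
TARGETS_DEG = [-24, -18, -12, -6, 0, 6, 12, 18, 24]
# Relative trial frequencies from the text: 4.5% for +/-24 degrees, ~13% otherwise.
WEIGHTS = [4.5 if abs(t) == 24 else 13.0 for t in TARGETS_DEG]
ELEVATION_DEG = 6.0
WINDOW_RADIUS_DEG = 9.0 / 2     # 9-degree window diameter, assumed circular


def draw_target(rng):
    """Pick the horizontal target for the next trial."""
    return rng.choices(TARGETS_DEG, weights=WEIGHTS, k=1)[0]


def fixation_held(eye_samples_deg, target_deg):
    """True if every (x, y) gaze sample stays inside the window around the target."""
    tx, ty = target_deg
    return all(math.hypot(x - tx, y - ty) <= WINDOW_RADIUS_DEG
               for x, y in eye_samples_deg)


def included_in_analysis(target_h_deg):
    """The +/-24-degree trials are run but excluded from analysis."""
    return abs(target_h_deg) != 24
```

For example, `draw_target(random.Random(0))` returns one horizontal target, and a trial would be aborted as soon as `fixation_held` is false for the gaze samples collected so far.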

On half of the trials, task-irrelevant sounds were presented via the earphones of an earphone/microphone assembly (Etymotic 10B+ microphone with ER 1 headphone driver) placed in the ear canal and held in position through a custom-molded silicone earplug (Radians, Inc.). These sounds were acoustic clicks at 65-dB peak-equivalent SPL, produced by brief electrical pulses (40-μs positive monophasic pulse) and were presented at four time points within each trial: during the initial fixation period (100 ms after obtaining fixation), during the saccade (∼20 ms after initiating an eye movement), 100 ms after obtaining fixation on the target, and 200 ms after obtaining fixation on the target.

Acoustic signals from the ear canal were recorded with the in-ear microphone throughout all trials, from one ear in 13 subjects (left/right counterbalanced) and from both ears, in separate sessions, in the other three subjects, for a total of n = 19 ears tested. Each subject ear was tested in two sessions, held either on two consecutive days or on the same day with a break of at least 1 h between them. Each session involved about 600 trials and lasted about 30 min. The sound delivery and microphone system was calibrated at the beginning of every session using a custom script (MATLAB) and again after every block of 200 trials. The calibration routine played a nominal 80-dB SPL sound (a click, a broadband burst, and a series of tones from 1 to 12 kHz in 22 steps) in the ear canal and recorded the resulting sound pressure. It then calculated the difference between the requested and measured sound pressures and derived a gain adjustment profile for all sounds tested. Auditory recording levels were set with a custom software calibration routine (MATLAB) at the beginning of each data collection block (200 trials). All conditions were randomly interleaved.
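The gain-adjustment step can be sketched as follows, assuming levels are expressed in dB SPL and that the correction is a simple per-frequency dB difference converted to a linear amplitude factor. The function names are illustrative, and the frequencies and measured levels in the usage lines are hypothetical placeholders, not measurements from this study.

```python
import math


def gain_profile(requested_db, measured_db):
    """Per-frequency gain correction (dB) = requested level - measured level.

    requested_db: nominal level played (e.g., 80 dB SPL)
    measured_db:  dict {frequency_hz: measured dB SPL in the ear canal}
    """
    return {f: requested_db - m for f, m in measured_db.items()}


def db_to_amplitude_factor(gain_db):
    """Convert a dB correction into a linear multiplier for the output waveform."""
    return 10.0 ** (gain_db / 20.0)


def pa_to_db_spl(p_rms_pa, p_ref=20e-6):
    """Sound pressure level (dB SPL) of an RMS pressure, re 20 uPa."""
    return 20.0 * math.log10(p_rms_pa / p_ref)


if __name__ == "__main__":
    # Hypothetical measurements at three of the calibration tone frequencies.
    measured = {1000.0: 83.0, 4000.0: 80.0, 12000.0: 74.0}
    profile = gain_profile(80.0, measured)
    print(profile)  # {1000.0: -3.0, 4000.0: 0.0, 12000.0: 6.0}
    print({f: round(db_to_amplitude_factor(g), 3) for f, g in profile.items()})
```

A frequency measured 3 dB too loud gets a negative correction (a multiplier below 1), and one measured 6 dB too quiet gets roughly a doubling of amplitude.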

Human dataset II.

Additional data were collected from eight ears of four new subjects (aged 20–27 y, three females and one male, one session per subject) to verify that the relationship between microphone voltage and saccade amplitude/target location held when target locations were sampled more finely within a hemifield. All procedures were the same as in the first dataset, with three exceptions: (i) The horizontal target positions ranged from −20° to +20° in 4° increments (the vertical component was still 6°), (ii) the stimuli were presented on a color LCD monitor (70 cm × 49 cm) at a distance of 85 cm, and (iii) no clicks were played during trials. The microphone signal was also calibrated differently: Clicks played between trials were used to monitor the stability of the microphone’s impulse response and overall gain within and across experimental sessions. This helped identify decreasing battery power to the amplifiers, occlusion of the microphone barrel, or a change in microphone position. A Focusrite Scarlett 2i2 audio interface was used for auditory stimulus presentation and data acquisition (microphone sampling rate of 48 kHz). Stimulus presentation and data acquisition were controlled through custom MATLAB scripts using the Psychophysics Toolbox extensions (76–78) and the Eyelink MATLAB toolbox (79).
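One way to use between-trial clicks as described above is to compare each recorded click response with a reference click from the start of the session: a change in RMS gain could flag failing amplifier batteries or a blocked microphone port, and a drop in waveform correlation could flag a shifted microphone. The sketch below assumes that approach; the thresholds (±1 dB gain change, correlation of at least 0.9) and the function names are hypothetical, not values or code from the study.

```python
import math


def rms(x):
    """Root-mean-square amplitude of a click response."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def correlation(a, b):
    """Pearson correlation between two equal-length click responses."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def click_is_stable(reference, current, max_gain_change_db=1.0, min_corr=0.9):
    """True if a between-trial click matches the reference in gain and waveform shape."""
    gain_change_db = 20.0 * math.log10(rms(current) / rms(reference))
    return (abs(gain_change_db) <= max_gain_change_db
            and correlation(reference, current) >= min_corr)
```

Separating gain from shape in this way would, under these assumptions, help distinguish a weakening amplifier (gain drops, shape preserved) from a repositioned probe (shape changes).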

These data are presented in Fig. S1, and the remaining human figures are derived from dataset I.

Monkey Subjects and Experimental Paradigm.

All procedures conformed to the guidelines of the National Institutes of Health (NIH publication no. 86-23, revised 1985) and were approved by the Institutional Animal Care and Use Committee of Duke University. Monkey subjects (n = 3, all female) underwent aseptic surgical procedures under general anesthesia to implant a head post holder to restrain the head and a scleral search coil (Riverbend Eye Tracking System) to track eye movements (80, 81). After recovery with suitable analgesics and veterinary care, monkeys were trained in the saccade task described above for the human subjects. Two monkeys were tested with both ears in separate sessions, whereas the third monkey was tested with one ear, for a total of n = 5 ears tested.

The trial structure was similar to that used in human dataset I, with the following differences (Fig. 2B, red traces): (i) Eye tracking was done with a scleral eye coil; (ii) task-irrelevant sounds were presented on all trials, but only one click was presented, 200–270 ms after the saccade to the visual target (the exact time was jittered within this range and therefore coincided with, or came slightly later than, the fourth click for human subjects); (iii) the ±24° targets were presented in the same proportion as the other target locations but were likewise excluded from analysis, and additional targets at ±9° were also discarded because they were not used with the human subjects; (iv) initial fixation lasted 110–160 ms (jittered), and target fixation lasted 310–430 ms; (v) monkeys received a fluid reward for correct trial performance; (vi) disposable plastic ear buds containing the earphone/microphone assembly described above were placed in the ear canal for each session; (vii) auditory recording levels were set at the beginning of each data collection session (the ear bud was not removed during a session, so no recalibration occurred within a session); (viii) sessions were not divided into blocks (the monkeys typically performed consistently throughout an entire session, so dividing it into blocks was unnecessary); and (ix) whereas humans were always presented exactly 1,200 trials over the course of the entire study, the number of trials per monkey session varied with the monkey’s performance tolerance and capabilities on that day. Monkeys MNN012 and MYY002 were each run for four sessions per ear (no more than one session per day) over the course of 2 wk; per session, MNN012 correctly performed an average of 889 of 957 trials at included target locations (averaged across both ears), while MYY002 correctly performed an average of 582 of 918 trials. Monkey MHH003 was recorded on only 1 d and performed 132 of 463 trials correctly (about 29%). Despite this much lower performance, visual inspection suggested that its data were consistent with those of the other subjects, so they were included in the analysis. Notably, this case shows that the effect reported in this study can be seen with comparatively few trials.

Control Sessions.

To verify that the apparent effects of eye movements on ear-generated sounds were genuinely acoustic in nature and did not reflect electrical contamination from sources such as myogenic potentials of the extraocular muscles or the changing orientation of the electrical dipole of the eyeball, we ran a series of additional control studies. Some subjects (n = 4 for plugged microphone control, n = 1 for syringe control) were invited back as participants in these control studies.

In the first control experiment, the microphone was placed in the ear canal and subjects performed the task, but the microphone’s input port was physically plugged to prevent it from detecting acoustic signals (Fig. 7). This was accomplished by placing the microphone in a custom ear mold in which the canal-side opening for the microphone was blocked. The microphone was thus in the same physical position as during normal sessions, and should therefore still have picked up any electrical artifacts that might be present, but its acoustic input was greatly attenuated by the plug. Four subject ears were retested in this paradigm in a separate pair of data collection sessions from their initial “normal” sessions. The control sessions were handled exactly as the normal sessions, including calibration with the regular open ear mold; only after calibration was the open ear mold replaced with the plugged one.

In the second control, trials were run exactly as a normal session except that the microphone was set into a 1-mL syringe [approximately the average volume of the human ear canal (82)] using a plastic ear bud and the syringe was placed on top of the subject’s ear behind the pinna. Acoustic recordings were taken from within the syringe while a human subject executed the behavioral paradigm exactly as normal. Sound levels were calibrated to the syringe at the start of each block.

Data Analysis.

Initial processing.

Unless specifically stated otherwise, all data are reported as the raw voltage recorded from the Etymotic microphone system. Results for individual human and monkey subjects were based on all of a subject’s correct trials at included target locations (with the ±24° and ±9° targets excluded, the average number of correct trials per human subject ear was 150 per 200-trial block, for a total of 900 across the six blocks).

Trial exclusion criteria.

Trial exclusion criteria were based on saccade performance and microphone recording quality. For saccade performance, trials were excluded if (i) the reaction time to initiate the saccade was >250 ms or (ii) the eyes deviated from the path between the fixation point and the target by more than 9°. These criteria excluded 18.5 ± 11.1% of trials per recorded ear.
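The two saccade-performance rules can be expressed as a per-trial filter. This sketch assumes that "deviating from the path" means the largest distance, in degrees, between any eye sample and the straight segment joining the fixation point and the target; the function names and the (x, y) sample format are hypothetical.

```python
import math


def point_to_segment_deg(p, a, b):
    """Shortest distance (deg) from eye sample p to the segment a->b; all points are (x, y) in deg."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(px - ax, py - ay)
    # Clamp the projection to [0, 1] so distance is measured to the segment, not the infinite line.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def passes_saccade_criteria(reaction_time_ms, eye_xy, fixation_xy, target_xy,
                            max_rt_ms=250.0, max_dev_deg=9.0):
    """Apply the reaction-time and path-deviation exclusion rules to one trial."""
    if reaction_time_ms > max_rt_ms:
        return False
    worst = max(point_to_segment_deg(p, fixation_xy, target_xy) for p in eye_xy)
    return worst <= max_dev_deg
```

For example, for a saccade from (0°, 0°) to (18°, 6°), an eye sample at (9°, 15°) lies about 11.4° from the path and would exclude the trial.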

Microphone recording quality was examined for click and no-click trials separately, but followed the same guidelines. The mean and SD of microphone recordings over a whole block were calculated from all successful trials (after exclusions based on saccade performance). If the SD of the voltage values of a given trial was more than threefold the SD of the voltage values across the whole block, it was excluded. Sample-wise z-scores were calculated relative to the mean and SD of successful trials within a block. Trials with 50 samples or more with z-scores in excess of 10 were also excluded. These requirements removed trials containing noise contamination from bodily movements (e.g., swallowing, heavy breaths) or from external noise sources (acoustic and electric). These two criteria excluded an average of 1.7 ± 1.5% of trials that had passed the saccade exclusion criteria. Overall, 20% of trials were excluded because of saccade performance or recording quality.
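A minimal sketch of the two recording-quality rules for one block, to be applied separately to click and no-click trials. It assumes that the block mean and SD are computed from all samples of the surviving trials pooled together, that the z-score criterion uses absolute values, and that trials are equal-length lists of voltages; quality_mask is a hypothetical name.

```python
from statistics import fmean, pstdev


def quality_mask(trials, sd_factor=3.0, z_thresh=10.0, max_bad_samples=50):
    """Return True for each trial that passes both recording-quality criteria.

    trials: list of equal-length lists of microphone voltages from one block
    (only trials that already passed the saccade criteria).
    """
    pooled = [v for trial in trials for v in trial]
    block_mean = fmean(pooled)
    block_sd = pstdev(pooled)
    keep = []
    for trial in trials:
        # Rule 1: trial SD more than threefold the block SD.
        too_noisy = pstdev(trial) > sd_factor * block_sd
        # Rule 2: 50 or more samples with |z| > 10 relative to the block.
        n_extreme = sum(1 for v in trial if abs(v - block_mean) / block_sd > z_thresh)
        keep.append(not too_noisy and n_extreme < max_bad_samples)
    return keep
```

Because the noisy trial also contributes to the block SD, a single very noisy trial inflates the reference it is compared against; the threefold margin still catches large artifacts such as swallowing.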

Saccade-microphone synchronization.

Eye position data were resampled to the microphone sampling rate (from 500 Hz to about 24.4 kHz) and smoothed, to limit the error that nonmonotonic recording artifacts would otherwise compound with each successive differentiation of eye position into eye velocity, acceleration, and jerk (the time derivative of acceleration).
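The resampling and differentiation chain can be sketched as below. The study does not specify its interpolation or smoothing methods, so linear interpolation and a centered moving average (with a hypothetical width in samples) are stand-ins, and smoothing is applied after every differentiation so that small sample-to-sample artifacts are not amplified at each stage.

```python
def resample_linear(x, fs_in, fs_out):
    """Linearly interpolate samples x (rate fs_in) onto a grid at fs_out."""
    n_out = int((len(x) - 1) * fs_out / fs_in) + 1
    out = []
    for k in range(n_out):
        pos = k * fs_in / fs_out          # position in input-sample units
        i = min(int(pos), len(x) - 2)
        frac = pos - i
        out.append(x[i] + frac * (x[i + 1] - x[i]))
    return out


def moving_average(x, width):
    """Centered moving average; the window shrinks at the edges."""
    half = width // 2
    out = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out


def derivative(x, fs):
    """Central-difference derivative (one-sided at the ends), in units per second."""
    n = len(x)
    d = [(x[1] - x[0]) * fs]
    d += [(x[i + 1] - x[i - 1]) * fs / 2.0 for i in range(1, n - 1)]
    d.append((x[-1] - x[-2]) * fs)
    return d


def kinematics(position_deg, fs, width=25):
    """Velocity, acceleration, and jerk, smoothing after each differentiation."""
    vel = moving_average(derivative(moving_average(position_deg, width), fs), width)
    acc = moving_average(derivative(vel, fs), width)
    jerk = moving_average(derivative(acc, fs), width)
    return vel, acc, jerk
```

The shrinking window near the edges biases the first and last few samples, so in a sketch like this the analysis window would ideally sit well inside the recorded trace.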

Microphone data were synchronized to two time points in the saccades: initiation and termination. Saccade onsets were determined by locating the time of the first peak in the jerk of the saccades. These onset times for each trial were then used to synchronize the microphone recordings at the beginning of saccades. Because saccades of different lengths took different amounts of time to complete and because performance of saccades to identical targets varied between trials, saccade completions occurred over a range of tens of milliseconds. As a result, recordings of eardrum behavior related to the later stages of saccades would be poorly aligned if synchronized by saccade onset alone. Therefore, saccade termination, or offset, was determined by locating the second peak in the saccade jerk and used to synchronize microphone recordings with saccade completion.
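Onset and offset detection from the jerk trace, followed by event alignment of the microphone signal, might look like the sketch below. It assumes that jerk has been computed along the saccade direction so that onset and offset both appear as positive peaks, and it uses a hypothetical amplitude threshold to skip small noise ripples; the study does not say how peaks were defined. local_maxima, saccade_onset_offset, and align_to_event are illustrative names.

```python
def local_maxima(x, threshold):
    """Indices of strict local maxima above threshold (flat-topped peaks are not detected)."""
    return [i for i in range(1, len(x) - 1)
            if x[i] > threshold and x[i] > x[i - 1] and x[i] > x[i + 1]]


def saccade_onset_offset(jerk, fs, threshold):
    """Times (s) of the first and second jerk peaks, taken as saccade onset and offset."""
    peaks = local_maxima(jerk, threshold)
    if len(peaks) < 2:
        raise ValueError("fewer than two jerk peaks above threshold")
    return peaks[0] / fs, peaks[1] / fs


def align_to_event(signal, fs, event_time_s, pre_s, post_s):
    """Cut signal from (event - pre) to (event + post), e.g., onset-locked microphone data."""
    i0 = round((event_time_s - pre_s) * fs)
    i1 = round((event_time_s + post_s) * fs)
    if i0 < 0 or i1 > len(signal):
        raise ValueError("window extends beyond the recording")
    return signal[i0:i1]
```

Calling align_to_event once with the onset time and once with the offset time yields the two synchronized versions of each trial used in the analysis.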

Statistical analyses.

We evaluated the statistical significance of the effects of eye movements on signals recorded with the ear canal microphone with a regression analysis at each time point (microphone signal vs. saccade target location) for each subject. This produced a time series of statistical results (slope, R², and P value; Fig. 3 A–F). This analysis was performed twice: first, with all trials synchronized to saccade onset and, second, with all trials synchronized to saccade offset.
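The per-time-point regression can be sketched as an ordinary least-squares fit of voltage on target azimuth at each sample. For brevity, this stdlib-only version returns only slopes and R²; P values would come from a t test on each slope (for example via scipy.stats.linregress, which also returns a pvalue). The function name and the data layout (one list of samples per trial, all aligned to the same saccade event) are assumptions.

```python
def regress_each_timepoint(mic, targets_deg):
    """OLS of microphone voltage on target azimuth, separately at every time sample.

    mic: list of trials, each a list of voltages aligned to the same saccade event
    targets_deg: horizontal target location for each trial
    Returns lists of slopes (V/deg) and R^2 values, one per time sample.
    """
    n = len(targets_deg)
    mx = sum(targets_deg) / n
    sxx = sum((x - mx) ** 2 for x in targets_deg)
    slopes, r2s = [], []
    for t in range(len(mic[0])):
        y = [trial[t] for trial in mic]
        my = sum(y) / n
        sxy = sum((x - mx) * (v - my) for x, v in zip(targets_deg, y))
        syy = sum((v - my) ** 2 for v in y)
        slopes.append(sxy / sxx)
        # R^2 of a simple linear regression is the squared correlation.
        r2s.append(sxy * sxy / (sxx * syy) if syy > 0 else 0.0)
    return slopes, r2s
```

Running it once on onset-aligned and once on offset-aligned trials gives the two slope and R² time series described above.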

Because the dependence of each sample on the next was unknown, a post hoc correction for multiple comparisons (e.g., Bonferroni correction) was not practical. Instead, we used a Monte Carlo technique to estimate the chance-related effect size and false-positive rate of this test. We first scrambled the relationship between each trial and its saccade target location and then ran the same analysis as before. This provided an estimate of how our results should look if there were no relationship between eye movements and the acoustic recordings.
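The Monte Carlo procedure amounts to a permutation test. The sketch below shows it at a single time point; applying it to the full time series would mean shuffling the target labels once per permutation and rerunning the whole regression, which preserves whatever dependence exists between neighboring samples. The (extreme + 1)/(n + 1) P-value convention and all function names are assumptions rather than details from the study.

```python
import random


def slope(x, y):
    """Least-squares slope of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def permutation_null(y, targets_deg, n_perm=1000, seed=0):
    """Null distribution of the slope after scrambling trial-to-target assignments."""
    rng = random.Random(seed)
    shuffled = list(targets_deg)
    null = []
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        null.append(slope(shuffled, y))
    return null


def permutation_p(observed, null):
    """Two-sided Monte Carlo P value, counting the observed value as one permutation."""
    extreme = sum(1 for s in null if abs(s) >= abs(observed))
    return (extreme + 1) / (len(null) + 1)
```

The spread of the null slopes also gives the chance-level effect size: observed slopes well outside that spread are unlikely to arise without a real eye movement relationship.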

Peak click amplitudes were calculated for each trial involving sounds by isolating the maximum and minimum peaks during the click stimuli. This allowed us to look for possible changes in acoustic impedance in the ear canal.
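Extracting the click peaks is a maximum/minimum search over a short window around each click. In this sketch, the window start and duration are hypothetical parameters (the study does not give them), and click_peaks is an illustrative name; comparing the returned values across saccade conditions is one way to look for impedance-related changes.

```python
def click_peaks(trace, fs, click_start_s, click_dur_s):
    """Maximum, minimum, and peak-to-peak value of the microphone trace within the click window."""
    i0 = round(click_start_s * fs)
    i1 = round((click_start_s + click_dur_s) * fs)
    window = trace[i0:i1]
    hi, lo = max(window), min(window)
    return hi, lo, hi - lo
```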

Estimation of eardrum motion.

The sensitivity of the microphone used in the experiments (Etymotic Research ER10B+) rolls off at frequencies below about 200 Hz, where it also introduces a frequency-dependent phase shift (29). Consequently, the microphone voltage waveform is not simply proportional to the ear canal pressure (or eardrum displacement) at frequencies characteristic of the EMREO waveform. To obtain the ear canal pressure, and estimate the eardrum motion that produced it, we converted the microphone voltage to pressure using published measurements of the microphone’s complex-valued frequency response (29).

Because the measured frequency response ($H_{mic}$, in volts per pascal) was sampled at 48 kHz, we first transformed it into the time-domain representation of the microphone’s impulse response, resampled the result at our sampling rate of 24.4 kHz, and then transformed it back into the frequency domain as $H'_{mic}$. For each trial, the fast Fourier transform of the microphone voltage ($V_{mic}$) was divided by $H'_{mic}$, and the inverse fast Fourier transform of the quotient gave the estimate of ear-canal pressure, $P_{ec}$:

$$P_{ec} = \mathcal{F}^{-1}\left\{ \frac{\mathcal{F}\{V_{mic}\}}{H'_{mic}} \right\}$$

Eardrum displacement, $x_{ed}$, was computed from the measured pressure using the equation $x_{ed} = \frac{V}{A}\,\frac{P_{ec}}{\rho_0 c^2}$, where $V$ is the volume of the residual ear canal space (∼2 cc), $A$ is the cross-sectional area of the eardrum (∼60 mm²), $\rho_0$ is the density of air, and $c$ is the speed of sound.
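The two conversions described above (microphone voltage to ear-canal pressure, then pressure to eardrum displacement) can be sketched as follows. This is a minimal, stdlib-only illustration under stated assumptions, not the authors' code. A naive DFT stands in for the FFT (numpy.fft.fft and numpy.fft.ifft would be used in practice). The h_mic argument is assumed to already hold the resampled complex response $H'_{mic}$ at every DFT bin. The real part of the inverse transform is kept on the assumption that $H'_{mic}$ corresponds to a real impulse response. The air density (1.2 kg/m³) and speed of sound (343 m/s) are assumed room-temperature values. The displacement formula follows from treating the residual canal as a small closed cavity: an inward eardrum excursion $x$ reduces its volume by $A x$, which raises the pressure by about $\rho_0 c^2 A x / V$.

```python
import cmath

RHO0 = 1.2       # density of air, kg/m^3 (assumed, room temperature)
C_SOUND = 343.0  # speed of sound, m/s (assumed, room temperature)
V_CANAL = 2e-6   # residual ear-canal volume, m^3 (~2 cc)
A_DRUM = 60e-6   # eardrum cross-sectional area, m^2 (~60 mm^2)


def dft(x):
    """Naive O(N^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * k * m / n) for k in range(n))
            for m in range(n)]


def idft(spec):
    """Inverse of dft()."""
    n = len(spec)
    return [sum(spec[m] * cmath.exp(2j * cmath.pi * k * m / n) for m in range(n)) / n
            for k in range(n)]


def voltage_to_pressure(v_mic, h_mic):
    """P_ec = IDFT(DFT(V_mic) / H'_mic); h_mic in V/Pa, one complex value per DFT bin."""
    if len(h_mic) != len(v_mic):
        raise ValueError("need one frequency-response value per DFT bin")
    quotient = [s / h for s, h in zip(dft(v_mic), h_mic)]
    # Imaginary parts are only rounding noise when h_mic is conjugate-symmetric.
    return [p.real for p in idft(quotient)]


def eardrum_displacement(p_ec_pa, volume=V_CANAL, area=A_DRUM, rho0=RHO0, c=C_SOUND):
    """x_ed = (V / A) * P_ec / (rho0 * c**2), in metres."""
    return (volume / area) * p_ec_pa / (rho0 * c ** 2)


if __name__ == "__main__":
    # Hypothetical 10 mPa pressure, chosen only to show the scale of the conversion.
    print(f"{eardrum_displacement(0.01) * 1e9:.2f} nm")  # 2.36 nm
```

Because the microphone's sensitivity falls off below about 200 Hz, the division boosts those bins; bins where $H'_{mic}$ is close to zero would amplify noise and might need to be excluded or regularized in a real pipeline.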

Supplementary Material

Acknowledgments

We thank Tom Heil, Jessi Cruger, Karen Waterstradt, Christie Holmes, and Stephanie Schlebusch for technical assistance. We thank Marty Woldorff, Jeff Beck, Tobias Overath, Barbara Shinn-Cunningham, Valeria Caruso, Daniel Pages, Shawn Willett, and Jeff Mohl for numerous helpful discussions and comments during the course of this project.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: The data and code reported in this paper have been deposited in figshare (https://doi.org/10.6084/m9.figshare.c.3972495.v1).

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1717948115/-/DCSupplemental.

References

1. Groh JM, Sparks DL. Two models for transforming auditory signals from head-centered to eye-centered coordinates. Biol Cybern. 1992;67:291–302. doi: 10.1007/BF02414885.

2. Groh JM, Trause AS, Underhill AM, Clark KR, Inati S. Eye position influences auditory responses in primate inferior colliculus. Neuron. 2001;29:509–518. doi: 10.1016/s0896-6273(01)00222-7.

3. Zwiers MP, Versnel H, Van Opstal AJ. Involvement of monkey inferior colliculus in spatial hearing. J Neurosci. 2004;24:4145–4156. doi: 10.1523/JNEUROSCI.0199-04.2004.

4. Porter KK, Metzger RR, Groh JM. Representation of eye position in primate inferior colliculus. J Neurophysiol. 2006;95:1826–1842. doi: 10.1152/jn.00857.2005.

5. Bulkin DA, Groh JM. Distribution of eye position information in the monkey inferior colliculus. J Neurophysiol. 2012;107:785–795. doi: 10.1152/jn.00662.2011.

6. Bulkin DA, Groh JM. Distribution of visual and saccade related information in the monkey inferior colliculus. Front Neural Circuits. 2012;6:61. doi: 10.3389/fncir.2012.00061.

7. Werner-Reiss U, Kelly KA, Trause AS, Underhill AM, Groh JM. Eye position affects activity in primary auditory cortex of primates. Curr Biol. 2003;13:554–562. doi: 10.1016/s0960-9822(03)00168-4.

8. Fu KM, et al. Timing and laminar profile of eye-position effects on auditory responses in primate auditory cortex. J Neurophysiol. 2004;92:3522–3531. doi: 10.1152/jn.01228.2003.

9. Maier JX, Groh JM. Comparison of gain-like properties of eye position signals in inferior colliculus versus auditory cortex of primates. Front Integr Neurosci. 2010;4:121–132. doi: 10.3389/fnint.2010.00121.

10. Stricanne B, Andersen RA, Mazzoni P. Eye-centered, head-centered, and intermediate coding of remembered sound locations in area LIP. J Neurophysiol. 1996;76:2071–2076. doi: 10.1152/jn.1996.76.3.2071.

11. Mullette-Gillman OA, Cohen YE, Groh JM. Motor-related signals in the intraparietal cortex encode locations in a hybrid, rather than eye-centered reference frame. Cereb Cortex. 2009;19:1761–1775. doi: 10.1093/cercor/bhn207.

12. Mullette-Gillman OA, Cohen YE, Groh JM. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. J Neurophysiol. 2005;94:2331–2352. doi: 10.1152/jn.00021.2005.

13. Jay MF, Sparks DL. Sensorimotor integration in the primate superior colliculus. I. Motor convergence. J Neurophysiol. 1987;57:22–34. doi: 10.1152/jn.1987.57.1.22.

14. Jay MF, Sparks DL. Sensorimotor integration in the primate superior colliculus. II. Coordinates of auditory signals. J Neurophysiol. 1987;57:35–55. doi: 10.1152/jn.1987.57.1.35.

15. Jay MF, Sparks DL. Auditory receptive fields in primate superior colliculus shift with changes in eye position. Nature. 1984;309:345–347. doi: 10.1038/309345a0.

16. Populin LC, Tollin DJ, Yin TC. Effect of eye position on saccades and neuronal responses to acoustic stimuli in the superior colliculus of the behaving cat. J Neurophysiol. 2004;92:2151–2167. doi: 10.1152/jn.00453.2004.

17. Lee J, Groh JM. Auditory signals evolve from hybrid- to eye-centered coordinates in the primate superior colliculus. J Neurophysiol. 2012;108:227–242. doi: 10.1152/jn.00706.2011.

18. Guinan JJ Jr. Cochlear mechanics, otoacoustic emissions, and medial olivocochlear efferents: Twenty years of advances and controversies along with areas ripe for new work. In: Perspectives on Auditory Research. Springer; New York: 2014. pp. 229–246.

19. Guinan JJ Jr. Olivocochlear efferents: Anatomy, physiology, function, and the measurement of efferent effects in humans. Ear Hear. 2006;27:589–607. doi: 10.1097/01.aud.0000240507.83072.e7.

20. Mukerji S, Windsor AM, Lee DJ. Auditory brainstem circuits that mediate the middle ear muscle reflex. Trends Amplif. 2010;14:170–191. doi: 10.1177/1084713810381771.

21. Delano PH, Elgueda D, Hamame CM, Robles L. Selective attention to visual stimuli reduces cochlear sensitivity in chinchillas. J Neurosci. 2007;27:4146–4153. doi: 10.1523/JNEUROSCI.3702-06.2007.

22. Harkrider AW, Bowers CD. Evidence for a cortically mediated release from inhibition in the human cochlea. J Am Acad Audiol. 2009;20:208–215. doi: 10.3766/jaaa.20.3.7.

23. Srinivasan S, Keil A, Stratis K, Woodruff Carr KL, Smith DW. Effects of cross-modal selective attention on the sensory periphery: Cochlear sensitivity is altered by selective attention. Neuroscience. 2012;223:325–332. doi: 10.1016/j.neuroscience.2012.07.062.

24. Srinivasan S, et al. Interaural attention modulates outer hair cell function. Eur J Neurosci. 2014;40:3785–3792. doi: 10.1111/ejn.12746.

25. Walsh KP, Pasanen EG, McFadden D. Selective attention reduces physiological noise in the external ear canals of humans. I: Auditory attention. Hear Res. 2014;312:143–159. doi: 10.1016/j.heares.2014.03.012.

26. Walsh KP, Pasanen EG, McFadden D. Changes in otoacoustic emissions during selective auditory and visual attention. J Acoust Soc Am. 2015;137:2737–2757. doi: 10.1121/1.4919350.

27. Wittekindt A, Kaiser J, Abel C. Attentional modulation of the inner ear: A combined otoacoustic emission and EEG study. J Neurosci. 2014;34:9995–10002. doi: 10.1523/JNEUROSCI.4861-13.2014.

28. Goodman SS, Keefe DH. Simultaneous measurement of noise-activated middle-ear muscle reflex and stimulus frequency otoacoustic emissions. J Assoc Res Otolaryngol. 2006;7:125–139. doi: 10.1007/s10162-006-0028-9.

29. Christensen AT, Ordonez R, Hammershoi D. 2015. Design of an acoustic probe to measure otoacoustic emissions below 0.5 kHz. Proceedings of the Fifty-Eighth Audio Engineering Society International Conference: Music Induced Hearing Disorders (Audio Engineering Society, New York), paper no. 2-4.

30. Abdala C, Keefe DH. Morphological and functional ear development. In: Werner L, Fay RR, Popper AN, editors. Human Auditory Development. Springer; New York: 2012. pp. 19–59.

31. Robinette M, Glattke T, editors. Otoacoustic Emissions–Clinical Applications. Thieme Medical Publishers; New York: 2007.

32. Ramos JA, Kristensen SG, Beck DL. An overview of OAEs and normative data for DPOAEs. Hear Rev. 2013;20:30–33.

33. Metzger RR, Mullette-Gillman OA, Underhill AM, Cohen YE, Groh JM. Auditory saccades from different eye positions in the monkey: Implications for coordinate transformations. J Neurophysiol. 2004;92:2622–2627. doi: 10.1152/jn.00326.2004.

34. Boucher L, Groh JM, Hughes HC. Afferent delays and the mislocalization of perisaccadic stimuli. Vision Res. 2001;41:2631–2644. doi: 10.1016/s0042-6989(01)00156-0.

35. Getzmann S. The effect of eye position and background noise on vertical sound localization. Hear Res. 2002;169:130–139. doi: 10.1016/s0378-5955(02)00387-8.

36. Bohlander RW. Eye position and visual attention influence perceived auditory direction. Percept Mot Skills. 1984;59:483–510. doi: 10.2466/pms.1984.59.2.483.

37. Weerts TC, Thurlow WR. The effects of eye position and expectation on sound localization. Percept Psychophys. 1971;9:35–39.

38. Lewald J, Ehrenstein WH. Effect of gaze direction on sound localization in rear space. Neurosci Res. 2001;39:253–257. doi: 10.1016/s0168-0102(00)00210-8.

39. Lewald J, Getzmann S. Horizontal and vertical effects of eye-position on sound localization. Hear Res. 2006;213:99–106. doi: 10.1016/j.heares.2006.01.001.

40. Lewald J. The effect of gaze eccentricity on perceived sound direction and its relation to visual localization. Hear Res. 1998;115:206–216. doi: 10.1016/s0378-5955(97)00190-1.

41. Lewald J. Eye-position effects in directional hearing. Behav Brain Res. 1997;87:35–48. doi: 10.1016/s0166-4328(96)02254-1.

42. Lewald J, Ehrenstein WH. The effect of eye position on auditory lateralization. Exp Brain Res. 1996;108:473–485. doi: 10.1007/BF00227270.

43. Cui QN, O’Neill WE, Paige GD. Advancing age alters the influence of eye position on sound localization. Exp Brain Res. 2010;206:371–379. doi: 10.1007/s00221-010-2413-1.

44. Cui QN, Razavi B, O’Neill WE, Paige GD. Perception of auditory, visual, and egocentric spatial alignment adapts differently to changes in eye position. J Neurophysiol. 2010;103:1020–1035. doi: 10.1152/jn.00500.2009.

45. Razavi B, O’Neill WE, Paige GD. Auditory spatial perception dynamically realigns with changing eye position. J Neurosci. 2007;27:10249–10258. doi: 10.1523/JNEUROSCI.0938-07.2007.

46. Eliasson S, Gisselsson L. Electromyographic studies of the middle ear muscles of the cat. Electroencephalogr Clin Neurophysiol. 1955;7:399–406. doi: 10.1016/0013-4694(55)90013-4.

47. Borg E, Moller AR. The acoustic middle ear reflex in unanesthetized rabbits. Acta Otolaryngol. 1968;65:575–585. doi: 10.3109/00016486809119292.

48. Borg E. Excitability of the acoustic m. stapedius and m. tensor tympani reflexes in the nonanesthetized rabbit. Acta Physiol Scand. 1972;85:374–389. doi: 10.1111/j.1748-1716.1972.tb05272.x.

49. Borg E. Regulation of middle ear sound transmission in the nonanesthetized rabbit. Acta Physiol Scand. 1972;86:175–190. doi: 10.1111/j.1748-1716.1972.tb05324.x.

50. Teig E. Tension and contraction time of motor units of the middle ear muscles in the cat. Acta Physiol Scand. 1972;84:11–21. doi: 10.1111/j.1748-1716.1972.tb05150.x.

51. Greisen O, Neergaard EB. Middle ear reflex activity in the startle reaction. Arch Otolaryngol. 1975;101:348–353. doi: 10.1001/archotol.1975.00780350012003.

52. Puria S. Measurements of human middle ear forward and reverse acoustics: Implications for otoacoustic emissions. J Acoust Soc Am. 2003;113:2773–2789. doi: 10.1121/1.1564018.

53. Dewson JH 3rd, Dement WC, Simmons FB. Middle ear muscle activity in cats during sleep. Exp Neurol. 1965;12:1–8. doi: 10.1016/0014-4886(65)90094-4.

54. Pessah MA, Roffwarg HP. Spontaneous middle ear muscle activity in man: A rapid eye movement sleep phenomenon. Science. 1972;178:773–776. doi: 10.1126/science.178.4062.773.

55. De Gennaro L, Ferrara M. Sleep deprivation and phasic activity of REM sleep: Independence of middle-ear muscle activity from rapid eye movements. Sleep. 2000;23:81–85.

56. De Gennaro L, Ferrara M, Urbani L, Bertini M. A complementary relationship between wake and REM sleep in the auditory system: A pre-sleep increase of middle-ear muscle activity (MEMA) causes a decrease of MEMA during sleep. Exp Brain Res. 2000;130:105–112. doi: 10.1007/s002210050012.

57. Carmel PW, Starr A. Acoustic and nonacoustic factors modifying middle-ear muscle activity in waking cats. J Neurophysiol. 1963;26:598–616. doi: 10.1152/jn.1963.26.4.598.

58. Holst HE, Ingelstedt S, Ortegren U. Ear drum movements following stimulation of the middle ear muscles. Acta Otolaryngol Suppl. 1963;182:73–89. doi: 10.3109/00016486309139995.

59. Salomon G, Starr A. Electromyography of middle ear muscles in man during motor activities. Acta Neurol Scand. 1963;39:161–168. doi: 10.1111/j.1600-0404.1963.tb05317.x.

60. Yonovitz A, Harris JD. Eardrum displacement following stapedius muscle contraction. Acta Otolaryngol. 1976;81:1–15. doi: 10.3109/00016487609107472.

61. Avan P, Loth D, Menguy C, Teyssou M. Hypothetical roles of middle ear muscles in the guinea-pig. Hear Res. 1992;59:59–69. doi: 10.1016/0378-5955(92)90102-s.

62. Carmel PW, Starr A. Non-acoustic factors influencing activity of middle ear muscles in waking cats. Nature. 1964;202:195–196. doi: 10.1038/202195a0.

63. Gastinger MJ, Tian N, Horvath T, Marshak DW. Retinopetal axons in mammals: Emphasis on histamine and serotonin. Curr Eye Res. 2006;31:655–667. doi: 10.1080/02713680600776119.

64. Gastinger MJ, Yusupov RG, Glickman RD, Marshak DW. The effects of histamine on rat and monkey retinal ganglion cells. Vis Neurosci. 2004;21:935–943. doi: 10.1017/S0952523804216133.

65. Gastinger MJ. 2004. Function of histaminergic retinopetal axons in rat and primate retinas. PhD dissertation (Texas Medical Center, Houston, TX).

66. Repérant J, et al. The evolution of the centrifugal visual system of vertebrates. A cladistic analysis and new hypotheses. Brain Res Brain Res Rev. 2007;53:161–197. doi: 10.1016/j.brainresrev.2006.08.004.

67. Lörincz ML, Oláh M, Juhász G. Functional consequences of retinopetal fibers originating in the dorsal raphe nucleus. Int J Neurosci. 2008;118:1374–1383. doi: 10.1080/00207450601050147.

68. Gastinger MJ, Bordt AS, Bernal MP, Marshak DW. Serotonergic retinopetal axons in the monkey retina. Curr Eye Res. 2005;30:1089–1095. doi: 10.1080/02713680500371532.

69. Gastinger MJ, O’Brien JJ, Larsen NB, Marshak DW. Histamine immunoreactive axons in the macaque retina. Invest Ophthalmol Vis Sci. 1999;40:487–495.

70. Abudureheman A, Nakagawa S. Retinopetal neurons located in the diencephalon of the Japanese monkey (Macaca fuscata). Okajimas Folia Anat Jpn. 2010;87:17–23. doi: 10.2535/ofaj.87.17.

71. Honrubia FM, Elliott JH. Efferent innervation of the retina. I. Morphologic study of the human retina. Arch Ophthalmol. 1968;80:98–103. doi: 10.1001/archopht.1968.00980050100017.

72. Honrubia FM, Elliott JH. Efferent innervation of the retina. II. Morphologic study of the monkey retina. Invest Ophthalmol. 1970;9:971–976.

73. Itaya SK, Itaya PW. Centrifugal fibers to the rat retina from the medial pretectal area and the periaqueductal grey matter. Brain Res. 1985;326:362–365. doi: 10.1016/0006-8993(85)90046-0.

74. Freedman EG, Stanford TR, Sparks DL. Combined eye-head gaze shifts produced by electrical stimulation of the superior colliculus in rhesus monkeys. J Neurophysiol. 1996;76:927–952. doi: 10.1152/jn.1996.76.2.927.

75. Stahl JS. Eye-head coordination and the variation of eye-movement accuracy with orbital eccentricity. Exp Brain Res. 2001;136:200–210. doi: 10.1007/s002210000593.

76. Kleiner M, et al. What’s new in psychtoolbox-3? Perception. 2007;36:1–16.

77. Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spat Vis. 1997;10:437–442.

78. Brainard DH. The Psychophysics Toolbox. Spat Vis. 1997;10:433–436.

79. Cornelissen FW, Peters EM, Palmer J. The Eyelink Toolbox: Eye tracking with MATLAB and the Psychophysics Toolbox. Behavior Research Methods, Instruments, & Computers. 2002;34:613. doi: 10.3758/bf03195489.

80. Robinson DA. A method of measuring eye movement using a scleral search coil in a magnetic field. IEEE Trans Biomed Eng. 1963;10:137–145. doi: 10.1109/tbmel.1963.4322822.

81. Judge SJ, Richmond BJ, Chu FC. Implantation of magnetic search coils for measurement of eye position: An improved method. Vision Res. 1980;20:535–538. doi: 10.1016/0042-6989(80)90128-5.

82. Gerhardt KJ, Rodriguez GP, Hepler EL, Moul ML. Ear canal volume and variability in the patterns of temporary threshold shifts. Ear Hear. 1987;8:316–321. doi: 10.1097/00003446-198712000-00005.
