Abstract

Introduction:

The neuropsychological battery of the Uniform Data Set (UDSNB) was implemented in 2005 by the National Institute on Aging (NIA) Alzheimer Disease Centers program to measure cognitive performance in dementia and mild cognitive impairment due to Alzheimer Disease. This paper describes a revision, the UDSNB 3.0.

Methods:

The Neuropsychology Work Group of the NIA Clinical Task Force recommended revisions through a process of due diligence to address shortcomings of the original battery. The UDSNB 3.0 covers episodic memory, processing speed, executive function, language, and constructional ability. Data from 3602 cognitively normal participants in the National Alzheimer Coordinating Center database were analyzed.

Results:

Descriptive statistics are presented. Multivariable linear regression analyses demonstrated score differences by age, sex, and education and were also used to create a normative calculator available online.

Discussion:

The UDSNB 3.0 provides a valuable nonproprietary resource for conducting research on cognitive aging and dementia.

Key Words: dementia, neuropsychological test, cognition, UDS

Since 2005, the University of Washington’s National Alzheimer’s Coordinating Center (NACC) has collected the Uniform Data Set (UDS) on participants from over 30 past and present US Alzheimer’s Disease Centers (ADC). The UDS consists of data collection protocols used systematically on participants enrolled into the Clinical Cores of each ADC.1,2 Participants with clinical diagnoses of normal cognition (NC), mild cognitive impairment (MCI), and dementia of various etiologies including Alzheimer disease (AD) are recruited, enrolled, and followed annually. Consent is obtained at the individual ADCs, as approved by individual Institutional Review Boards (IRBs), and the University of Washington’s IRB has approved the sharing of deidentified UDS data. The UDS data include demographics, medical history, medication use, clinical and neurological examination findings, measures of function and behavior, clinical ratings of dementia severity [eg, clinical dementia rating (CDR)3], and neuropsychological test scores. Systematic guidelines for clinical diagnosis are based on the most up-to-date published diagnostic research criteria.1,4

All UDS data collection instruments were constructed with the guidance and approval of the Clinical Task Force (CTF), a group originally constituted by the National Institute on Aging (NIA) to develop standardized methods for collecting longitudinal data that would encourage and support collaboration across the ADCs.1,5 As of December 1, 2016, the NACC database contained data on 34,748 UDS participants from past and present ADCs.

ADCs used the first version of the UDSNB starting in September 2005, and in February 2008, a second version, UDSNB 2.0, was implemented with slight revisions to instructions and data collection forms. Tests in the original version of the battery, used until March 2015, were chosen to capture the continuum of cognitive decline from NC through AD dementia, incorporating relevant domains described in detail previously.2 An online calculator was developed to aid in scoring.6

In 2010, encouraged by recognition of the growing importance of diagnostic biomarkers7 and the identification of preclinical stages of AD,8 the Neuropsychology Work Group, a committee to review the UDSNB 2.0 and make recommendations for future data collection, was convened. This paper describes the rationale and procedures for the development of UDSNB 3.0 and provides normative test scores for a cohort of cognitively normal individuals from the NACC database.

METHODS

Rationale and Procedures for Battery Design and Test Selection

The CTF and Neuropsychology Work Group outlined the rationale for change. First, in longitudinal follow-up, healthy controls showed practice effects, especially for the memory task, with some even reciting the story before administration on visits subsequent to baseline. Second, UDSNB 2.0 measures were published tests, increasing the potential for multiple exposures either through clinical practice or in ancillary research conducted at the ADCs. Licensing costs and restrictions on sharing these instruments with intramural, extramural, and international researchers also created challenges for collaboration. Furthermore, the importance of early detection required instruments that would be sensitive to earlier stages of cognitive decline or even “preclinical” states. Finally, UDSNB 2.0 lacked tests of visuospatial functions and nonverbal memory, both of which can constitute areas of early decline, particularly in those with Lewy body disease9,10 or those with the posterior cortical atrophy variant of AD.11–13 Therefore, we created novel tests to address some of the shortcomings of the existing battery, while at the same time providing a mechanism for preserving longitudinal continuity with previous data.

The Work Group included members from several ADCs to ensure multicenter representation, and ex officio members from the NACC and the NIA. The group conducted weekly or monthly conference calls, as needed, and in-person meetings 1 to 2 times per year to outline a strategy to assess options for change. This included considering different platforms for testing (paper-and-pencil vs. computer), considering whether or not to change the types of constructs tested with the UDSNB 3.0, and evaluating existing instruments for inclusion into the new battery. Criteria were developed to aid in decision-making. Early on, after reviewing a library of potential tests, the group decided to adopt nonproprietary measures to allow the ADCs to freely share the battery with collaborators. It was also decided at this stage to postpone computerized testing, as the field was rapidly evolving with increasingly sophisticated technology.

The Work Group faced the serious issue that a new battery could disrupt the longitudinal follow-up of participants tested with the initial battery since 2005. Therefore, the decision was made, after careful review by the Work Group and the CTF and presentations to the centers, to model the new battery on the old one and to drop or replace existing measures. Digit Symbol from the WAIS-R was dropped, whereas the trail making tests and category list generation tests (animals, fruits, and vegetables) were retained. In addition, 4 measures were replaced with similar measures developed previously by several of the centers and tested in published research studies. The section below describes the instruments.

Materials, data recording forms, and a manual for administration and scoring were created and revised with feedback from the centers. A brief period of pilot data collection with the new instruments was used to refine the instructions and address any questions about administration and scoring. We then made additional revisions and conducted a larger pilot study (N=935), a “crosswalk” study,15 that compared the UDSNB 2.0 and 3.0 versions in individuals divided into 4 groups based on their Mini Mental State Examination (MMSE)14 scores (26 to 30, 21 to 25, 16 to 20, 10 to 15). The pairs of scores for the original and corresponding replacement tests were compared using equipercentile equating, and the analyses provided a crosswalk of equivalent test scores between the original and replacement tests [eg, a score of 15 on the Montreal Cognitive Assessment (MoCA) is equivalent to a score of 21 on the MMSE]. The results of the crosswalk study provided good evidence that scores on each pair of measures could be equated with reasonable reliability and that the chosen tests were reasonable replacements for the older tests. Crosswalk scores could also assist in making longitudinal comparisons.
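The idea behind equipercentile equating can be illustrated with a minimal sketch: a score on the replacement test is mapped to the score on the original test that has the same percentile rank. The function names and the sample scores below are hypothetical and are not taken from the crosswalk study, and published equating procedures typically add smoothing steps that are omitted here.

```python
from bisect import bisect_left, bisect_right


def percentile_rank(sample, score):
    """Mid-rank percentile (0 to 100) of `score` within `sample`."""
    ordered = sorted(sample)
    below = bisect_left(ordered, score)          # scores strictly below
    at_or_below = bisect_right(ordered, score)   # scores at or below
    return 100.0 * (below + at_or_below) / (2 * len(ordered))


def quantile(sample, pct):
    """Linearly interpolated quantile of `sample` at percentile `pct`."""
    ordered = sorted(sample)
    pos = (pct / 100.0) * (len(ordered) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (pos - lo) * (ordered[hi] - ordered[lo])


def equate(old_scores, new_scores, new_score):
    """Old-test score with the same percentile rank as `new_score`."""
    return quantile(old_scores, percentile_rank(new_scores, new_score))


# Hypothetical paired samples, for illustration only.
new_test = [10, 12, 14, 16, 18]
old_test = [20, 22, 24, 26, 28]
equivalent = equate(old_test, new_test, 14)
```

In this toy example the median score on the new test maps to the median score on the old test; a full crosswalk table would repeat the mapping for every possible score.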

Selection of Tests for the UDS Neuropsychological Battery 3.0

The Work Group recommended replacing the MMSE with the MoCA,16,17 Logical Memory18 immediate and delayed with the Craft Story 21 immediate and delayed recall19; digit span forward and backward with the number span forward and backward test; and the Boston naming test (BNT) with the multilingual naming test (MINT).20 Each decision was based on the rationale outlined below.

General Cognitive Measure

The MoCA16,17 was selected to replace the MMSE as a measure of overall cognitive impairment. One factor in this decision was that the MoCA is more difficult than the MMSE, as demonstrated in studies showing lower MoCA than MMSE scores in the same samples,21 and is hence more likely to detect subtle cognitive deficits. Furthermore, floor and ceiling effects are less common with the MoCA, which also allows for a broader range of scores in MCI samples than does the MMSE.22 Therefore, the MoCA is more appropriate than the MMSE for detecting early cognitive decline. The MoCA has been validated in white23 and African American24 groups. A disadvantage of the MoCA is that it can yield lower scores in diverse healthy population-based samples.25 However, an abbreviated version reportedly demonstrated predictive ability with respect to diagnosis of MCI in a low-education, illiterate sample.26 In other studies, the MoCA was more sensitive to MCI and discriminated MCI from other samples better than the MMSE.27,28 MoCA scores have also been shown to correlate with the Activities of Daily Living Questionnaire,29 a measure of functional integrity in dementia.30 The MoCA has the further advantage of yielding not only a total score (overall measure) but also index scores based on individual items tapping domains of attention, retentive memory, orientation, language, and executive function.31

The MoCA requires about 10 minutes to administer and yields a total score out of 30 and the above-mentioned domain index scores. The index scores (not included in the present report) offer the potential to identify early profiles of clinical dementia subtypes such as behavioral variant frontotemporal dementia and primary progressive aphasia. The memory index score has been shown to be especially predictive of decline from amnestic MCI to AD dementia.31 The paper-and-pencil version of the MoCA has been translated into multiple languages and dialects within languages32 and is freely available (www.mocatest.org/). The NACC was given permission to use it for 25 years without royalties or restrictions on sharing the test with collaborators.

Development of Domain-specific Neuropsychological Tests

Episodic Memory Tests

Memory loss is the hallmark symptom of the most common clinical dementia syndrome associated with AD.33 Early studies of AD dementia emphasized the importance of measures of episodic memory, such as word list learning and story recall, in the evaluation for dementia. The group decided on a story memory test, as most ADCs were already using the immediate and delayed recall conditions of Logical Memory.

Craft and colleagues had designed multiple forms of a story recall test similar to Logical Memory in a study of the impact of insulin on cognition in mild AD dementia.19,34 The complete set of 22 stories had previously been tested for equivalence in a diverse sample of college-age adults who were administered all of the stories in counterbalanced order in the laboratory of Andrew Saykin (personal oral communication), and these data were provided to the Work Group for consideration. Additional data on alternate sets of stories were included in published studies of patients undergoing systemic chemotherapy for treatment of breast cancer as well as individuals with traumatic brain injury and healthy controls.35–38 In a pilot study to determine the equivalence of the 22 stories in middle-aged and older adults, the Work Group determined that 3 stories showed the strongest relationship to Logical Memory and to one another. These 3 were reviewed by the Work Group and 1, “Craft Story 21,” was chosen for its content relevance to a diverse population.

Scoring of Logical Memory allows several acceptable responses for each item recalled. Following the protocol from Craft et al,34 items were scored in a similar manner to Logical Memory (paraphrase score), but another score was also calculated (verbatim score), allocating a point for each item recalled exactly as delivered in the story. The verbatim score (not included in the present report) was intended to be potentially more sensitive than the paraphrase score in detecting very early memory decline.

Finally, we introduced a novel measure of nonverbal memory, a function not previously included in the UDSNB 2.0. Following the copy of the Benson complex figure39 (see the Visuospatial Tests section), delayed figure reproduction was tested.

Language Tests

The 32-item MINT20,40 was selected to replace the short BNT. The MINT was originally developed to test naming in 4 languages, English, Spanish, Hebrew, and Mandarin Chinese, taking care to equate the level of difficulty of items across languages. The BNT was developed in New England and designed for American English speakers and contains items that either have no equivalent word or different frequencies of usage in other languages. The MINT is sensitive to naming impairment in AD.20

Word fluency is measured with semantic and letter word list generation tests. The former were part of UDSNB 2.0, whereas 2 letter generation tasks (“F” and “L”) were added for UDSNB 3.0. Each task lasts 60 seconds, and correct items are totaled. Errors and rule violations are also noted.

Visuospatial Tests

The UDSNB 2.0 did not contain a visuospatial test. Visuospatial symptoms emerge in later stages of amnestic dementia due to AD but also may appear early in the clinical syndromes of posterior cortical atrophy and dementia associated with cortical Lewy body disease. The Benson complex figure39 was added as a test of constructional ability (Copy condition). Figural elements are scored for presence and placement. Reproduction is tested after a delay to measure retentive memory (see the Episodic Memory section). Comparison between patients with clinical dementia of the Alzheimer type and frontotemporal dementia showed distinctive profiles of performance and associations with frontal and parietal cortical atrophy regions in the groups.39,41

Immediate Attention, Working Memory, Executive Attention Tests

Immediate attention span is commonly tested with Digit Span.18 For studies requiring multiple forms to reduce practice effects, a series of number sets was randomly generated to provide alternatives to the digit span test (Joel Kramer lab, personal oral communication). The number spans for the UDS task were randomly generated with the restriction that no digit would be adjacent to a digit that was one higher or one lower (eg, a “7” would not be succeeded or preceded by a 6 or 8). Every attempt was also made to exclude sequences that contained area codes. The number span is the longest list recalled. The total number of trials administered up to failure on 2 trials at 1 length is also recorded. Backward span is a measure of working memory. The trail making tests were retained from the UDSNB 2.0 to measure processing speed (part A) and executive attention (part B).
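The adjacency restriction described above can be sketched as a simple rejection rule applied while drawing digits. This is an illustration only, not the procedure used to produce the UDS number strings: the digit range of 1 to 9, the function name, and the seed are assumptions, repeated digits are not screened out because the text does not mention them, and the additional screening for area codes is not implemented.

```python
import random


def generate_span(length, rng):
    """Draw `length` digits so that no digit sits next to a digit
    that is one higher or one lower (the restriction in the text).

    The digit range 1-9 is an assumption made for this sketch.
    """
    digits = [rng.randint(1, 9)]
    while len(digits) < length:
        candidate = rng.randint(1, 9)
        # Reject candidates that differ by exactly 1 from the previous digit.
        if abs(candidate - digits[-1]) != 1:
            digits.append(candidate)
    return digits


rng = random.Random(2015)  # fixed seed so the example is reproducible
spans = [generate_span(n, rng) for n in range(3, 10)]
```

Each list in `spans` is one candidate sequence of increasing length; in practice a set of such sequences would still need manual review before use.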

Study Sample

This report is based on analyses of UDS data submitted to NACC by the ADCs between March 15, 2015 and November 30, 2016. The sample was restricted to individuals who received the UDSNB 3.0 and at that visit had a clinical diagnosis of NC and a global CDR score of 0. If a participant had received UDSNB 3.0 more than once, data were included from only the first administration. Although some participants’ scores on the UDSNB 3.0 seemed to be outside the range of normal scores (eg, MoCA score of 9), we chose not to remove any participants from the descriptive analyses because normalcy was not defined by the tests. Therefore, we describe the full range of scores in those with a clinical diagnosis of NC and a global CDR=0.

Data, Analyses, Normative Calculator

First, we describe the demographics of the sample (age, education, and sex). The mean, median, 25th and 75th percentiles, and ranges of scores for the overall sample are presented. Histograms are provided for each of the tests to illustrate the distribution of scores in the overall sample. The mean scores and SDs for each test are provided by age, divided into 5 groups (<60, 60 to 69, 70 to 79, 80 to 89, ≥90 y), and education, divided into 4 groups (≤12, 13 to 15, 16, ≥17 y). Unadjusted linear regression analyses tested for differences by age or education group. Finally, we ran linear regression models to estimate the effect of age (continuous), sex, and education (continuous) on each neuropsychological measure. Unadjusted linear regression models were first run with either age, sex, or education predicting the neuropsychological test score (data not shown), and then multivariable models were run with all 3 demographics included in the model.

We developed a calculator for the UDSNB 3.0 tests based on previously published methods used to produce the calculator for UDSNB 2.0 tests.6 Although our descriptive analyses focused on all participants meeting our eligibility criteria, for the normative calculator, we excluded a handful of participants who performed 5 SDs outside of the mean on any particular test to improve the distribution of residuals and better satisfy model assumptions. This restriction resulted in excluding the following participants from the regression analyses: 5 participants from the analysis of the MoCA, 4 participants from the analysis of the Benson complex figure copy, 16 participants from the analysis of the trail making part A, and 5 participants from the analysis of the MINT.
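The exclusion rule can be illustrated with a short sketch. It assumes that "5 SDs outside of the mean" means an absolute deviation of more than 5 sample SDs from the mean of all eligible scores on that test; the function name and the scores are hypothetical.

```python
from statistics import mean, stdev


def exclude_extreme(scores, max_sd=5.0):
    """Keep scores within `max_sd` sample SDs of the sample mean."""
    center = mean(scores)
    spread = stdev(scores)
    return [x for x in scores if abs(x - center) <= max_sd * spread]


# Hypothetical test scores: 99 typical values and one extreme low score.
scores = [25, 26, 27] * 33 + [2]
kept = exclude_extreme(scores)
```

Because the mean and SD are computed with the extreme value included, a single outlier can only exceed the 5 SD threshold when the sample is reasonably large, as it is here.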

RESULTS

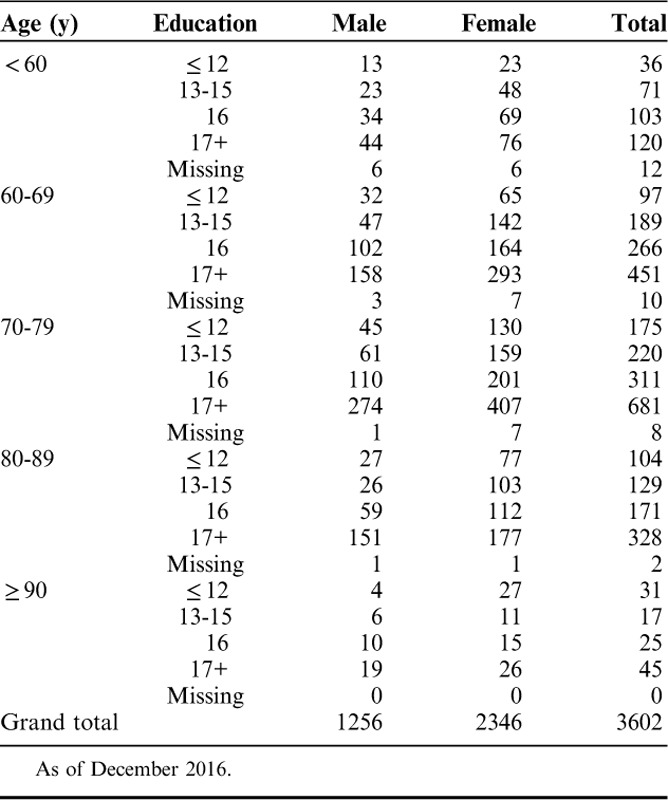

The sample included 3602 cognitively normal participants receiving the UDSNB 3.0 (Table 1). Most participants were women (65%), were between 70 and 89 years of age (67%), and were highly educated (69%). These analyses did not divide the sample by race, as most participants in the sample were white (83%), with an additional 14% African American and 3% other race, reflecting the overall distribution of these groups within the ADCs receiving the UDS.

TABLE 1.

Sample Distribution by Sex, Age, and Education

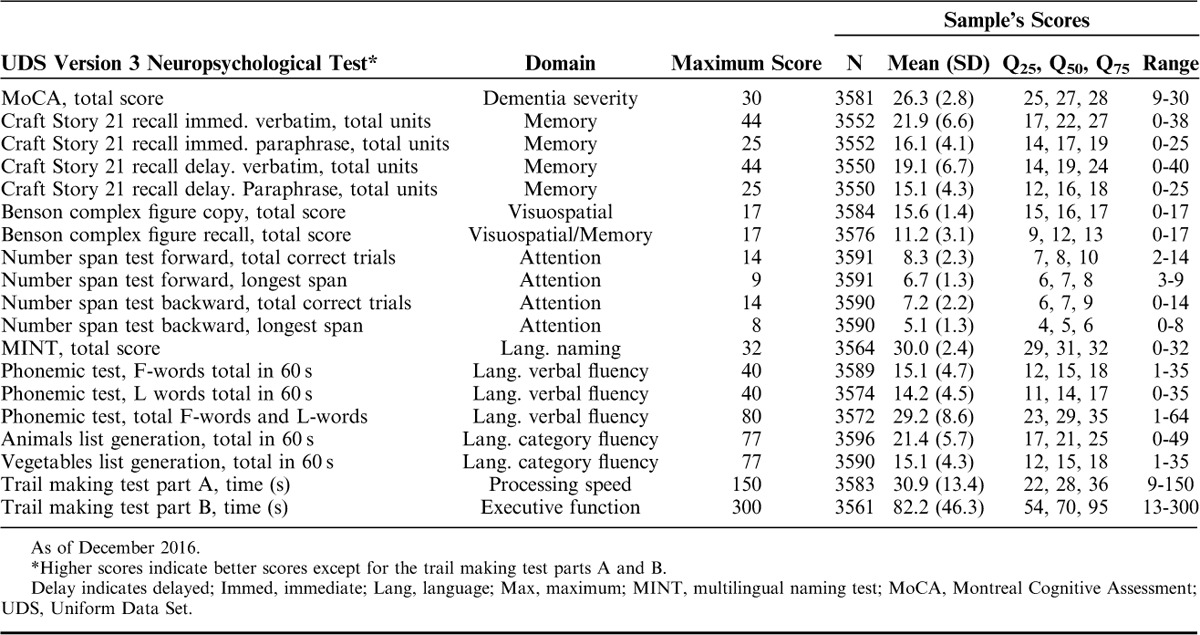

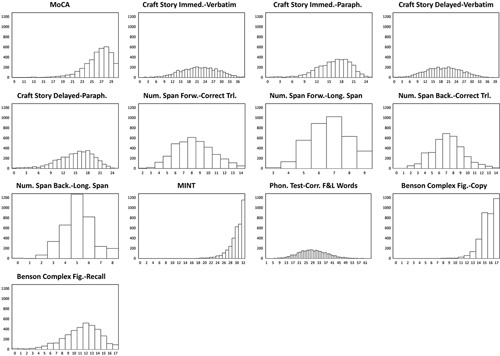

Means, 25th, 50th, and 75th percentiles, and score ranges for each test in the overall sample are reported in Table 2. Histograms show whether the distribution of scores on each test was approximately normal (Fig. 1). Tests with an approximately normal distribution of scores included Craft Story immediate and delayed (paraphrase and verbatim), number span forward and backward (total correct trials and longest span), the letter list generation task (F-words and L-words), and the Benson complex figure recall. Scores on the MoCA, the MINT, and the copy condition of the Benson complex figure were highly skewed due to ceiling effects. However, the MoCA seems to be less affected by ceiling effects than the MMSE.2

TABLE 2.

Summary Statistics for Clinically Cognitively Normal UDS Participants

FIGURE 1.

Histograms showing score distributions for each measure on the UDSNB 3.0. From these graphs, many of the measures have a normal or near normal distribution, with the exception of the MoCA total score, the score for the copy of the Benson complex figure, and the total score for the MINT. Immed. indicates immediate; MINT, multilingual naming test; MoCA, Montreal Cognitive Assessment; Paraph., paraphrase.

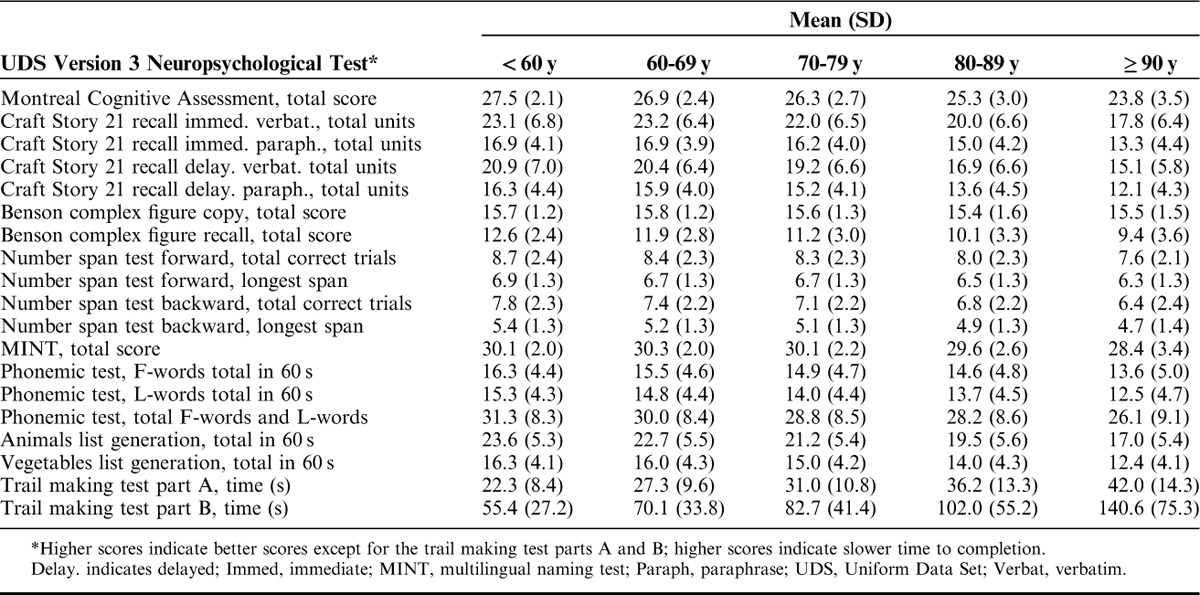

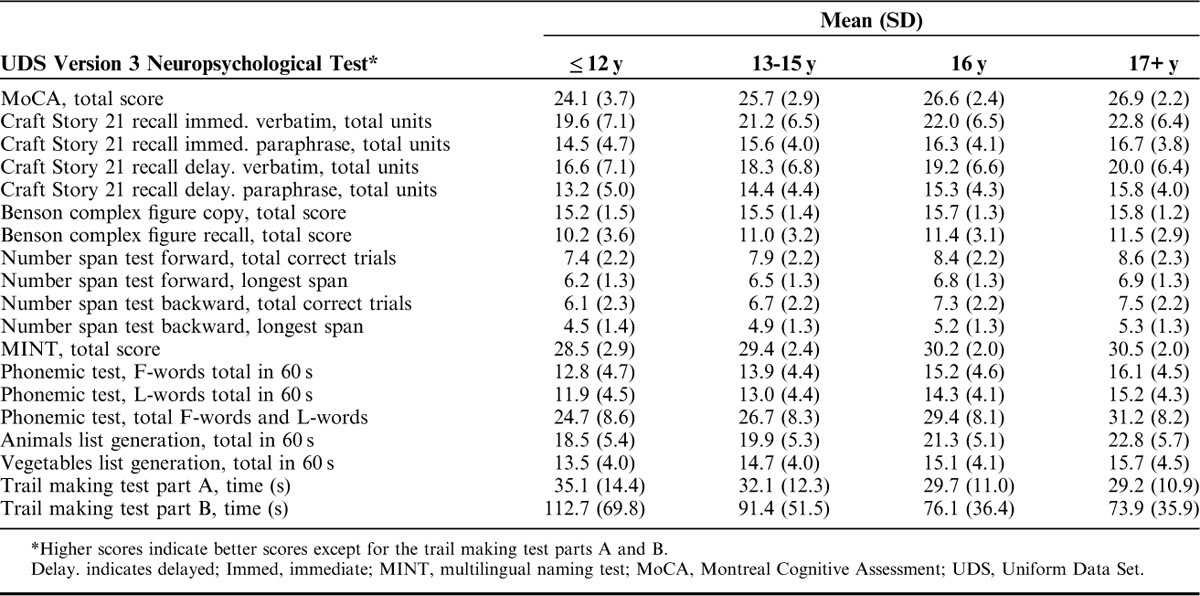

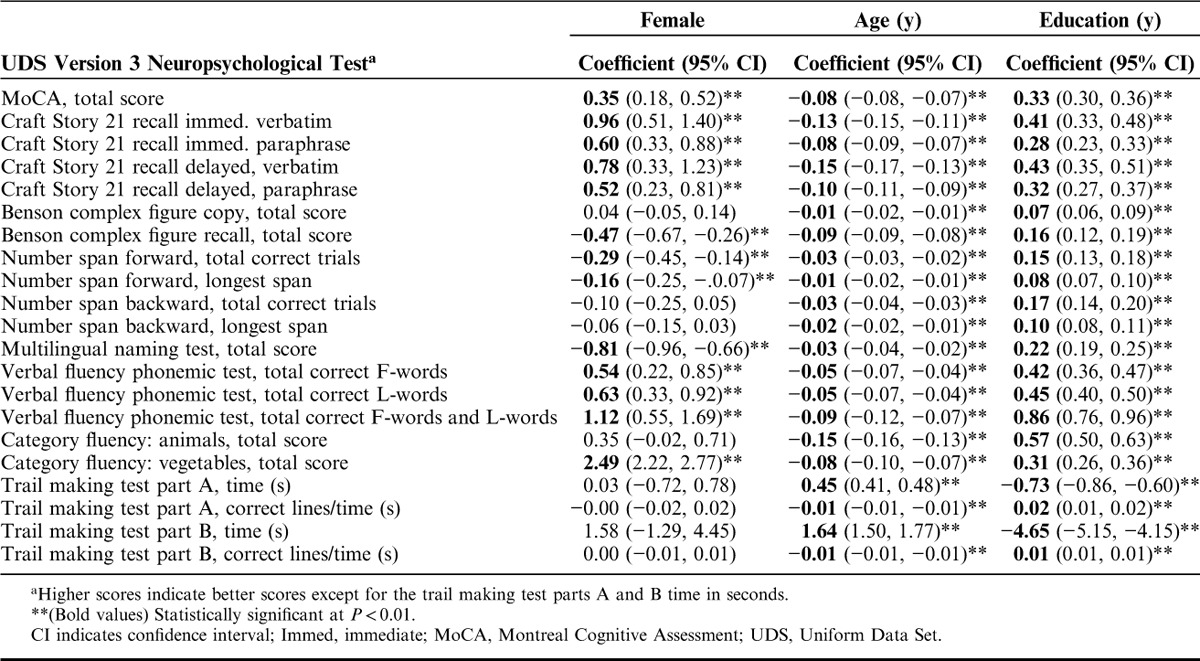

Table 3 shows the means and SDs for each measure across the 5 age groups, and Table 4 shows means and SDs for the 4 education groups. In the multivariable regression analyses (Table 5), women performed statistically significantly (P<0.01) better than men on the Craft Story immediate and delayed, verbal fluency phonemic test, and vegetables list generation, but worse on the Benson complex figure recall, number span forward, and MINT. Women and men performed similarly, without statistically significant differences, on the Benson complex figure copy, number span backward, animal list generation, and trail making parts A and B. Increasing age was associated with worse scores and increasing years of education was associated with better scores on all of the tests (P<0.01).

TABLE 3.

Mean Neuropsychological Test Scores by Age Group

TABLE 4.

Mean Neuropsychological Test Scores by Education Group

TABLE 5.

Multivariable Linear Regression Coefficients and 95% CIs for Sex, Age, and Education

For the data to be useful in characterizing research participants, a calculator was created to indicate the level of performance on each measure. The calculator uses the intercepts, regression coefficients, and root mean square errors resulting from the regression analyses described above to calculate unadjusted and adjusted Z-scores for individuals of a particular sex, age, and/or education level. The root mean square error is the square root of the average squared difference between the observed score and the predicted score, which we use as an estimate of the population SD. The adjusted Z-scores are calculated for each test adjusting for a single demographic characteristic (ie, sex, age, or education) and adjusting for all 3 of these demographics. One can enter an individual’s demographics and raw test scores, and the calculator uses the resulting Z-scores to calculate percentile estimates that indicate the individual’s level of performance on any given test (eg, low average or severely impaired). Two new variables were also added to this calculator to improve the precision of percentile estimates for trail making part A and part B. These 2 tests are terminated if the participant cannot complete them within a specified time limit (150 s and 300 s for A and B, respectively), resulting in the same score regardless of how many lines are correctly connected. We added connections-per-second (correct lines connected divided by the time to completion) for both part A and part B. These 2 new variables provide more accurate Z-scores and percentiles for the trail making tests.
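The arithmetic behind such a calculator can be sketched as follows. This is a minimal illustration under stated assumptions, not the online calculator itself: the coefficient values are hypothetical placeholders rather than the estimates in Table 5, the function names are invented for the example, and the conversion to a percentile assumes normally distributed residuals.

```python
from statistics import NormalDist

# Hypothetical coefficients for a single test; NOT the values in Table 5.
EXAMPLE_MODEL = {
    "intercept": 30.0,
    "female": 0.5,      # difference for women relative to men
    "age": -0.1,        # change in score per year of age
    "education": 0.2,   # change in score per year of education
    "rmse": 2.0,        # root mean square error, used in place of the SD
}


def predicted_score(model, female, age, education):
    """Expected raw score for a person with the given demographics."""
    return (model["intercept"]
            + model["female"] * (1 if female else 0)
            + model["age"] * age
            + model["education"] * education)


def adjusted_z(raw, model, female, age, education):
    """Observed minus predicted score, scaled by the model RMSE."""
    return (raw - predicted_score(model, female, age, education)) / model["rmse"]


def z_to_percentile(z):
    """Percentile of a Z-score under a standard normal distribution."""
    return 100.0 * NormalDist().cdf(z)


def connections_per_second(correct_lines, seconds):
    """Trail making rate: correct lines connected divided by elapsed time."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return correct_lines / seconds


# A 70-year-old woman with 16 years of education and a raw score of 24.7.
z = adjusted_z(24.7, EXAMPLE_MODEL, female=True, age=70, education=16)
pct = z_to_percentile(z)
rate = connections_per_second(correct_lines=24, seconds=150)
```

The rate variable stays informative even when a trail making test is stopped at the time limit, because it distinguishes participants by the number of lines they connected before termination.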

DISCUSSION

This paper reports the results from a study to develop normative data for the version 3.0 revision of the Uniform Data Set Neuropsychological Battery. The complete UDS contains not only the neuropsychological battery but also demographic, medical, family history, neurological, biomarker, psychiatric, and functional data and available post mortem diagnosis on Clinical Core participants who have been followed longitudinally. Earlier versions have been collected since 2005 and stored in the database of the NACC at the University of Washington. All the data are available for sharing with researchers and therefore provide a rich source for generating hypotheses and investigating cognitive aging and dementia in a well-defined cohort.

The current revision of the neuropsychological battery provides an updated set of tests, targeting predominantly the symptoms of the most typical, amnestic presentation of AD. The tests are nonproprietary and have the potential to be more sensitive than the former measures to very early symptoms of cognitive decline in older individuals with different levels of education. The new measures are similar to the old measures but also enrich the standard data collection with novel scores while keeping the battery relatively brief. The normative calculator provides a convenient tool to characterize the level of performance on the measures of the UDSNB 3.0. The calculator (www.alz.washington.edu/WEB/npsych_means.html) and the battery (www.alz.washington.edu/NONMEMBER/UDS/DOCS/VER3/UDS3_npsych_worksheets_C2.pdf) are available online.

There are some limitations to the study reported above. Although the ADCs encourage the participation of a diverse sample with respect to sex, education, and race, there was an overrepresentation of individuals who were white, female, and highly educated. Therefore, the findings are most relevant to research settings where these demographics are representative of the research volunteers. It will be important to expand the normative data for underrepresented groups and also for population-based samples. Another limitation is that the battery focuses on the spectrum from healthy cognition to dementia of the Alzheimer type and does not explicitly target symptoms associated with other forms of dementia. The CTF has introduced additional data collection modules, however, including specialized tests of symptoms related to frontotemporal dementia. Plans are under way to further expand clinical symptom assessment in other dementia syndromes. The availability of the UDSNB 3.0 at no cost to researchers will aid in encouraging more consistent and systematic data collection in disparate studies of cognitive aging and dementia.

ACKNOWLEDGMENTS

The Uniform Data Set Neuropsychology Work Group dedicates this paper to the memory of Dr Steven Ferris, who passed away on April 5, 2017. He was a cherished and highly respected member of our community who made major contributions to the creation of the UDS and to the work that went into this publication. We all mourn his loss.

The Uniform Data Set Neuropsychology Work Group wishes to thank the following individuals for giving their permission to include tests they created in the UDSNB 3.0: Zaid Nasreddine, MD (MoCA); Suzanne Craft, PhD (“Craft Story” and scoring method); Andrew Saykin, PhD for providing data on the equivalence of forms of the Craft Story to Logical Memory; Tamar Gollan, PhD for the MINT; Joel Kramer for providing alternate number strings for the number span test; and Kate Possin and Joel Kramer for the Benson complex figure test. We also wish to thank Nina Silverberg, PhD, for her insightful leadership throughout the conduct of this project.

Footnotes

Steven Ferris died April 5, 2017.

The NACC database is funded by NIA/NIH Grant U01 AG016976. NACC data are contributed by the NIA-funded ADCs: P30 AG019610 (PI Eric Reiman, MD), P30 AG013846 (PI Neil Kowall, MD), P50 AG008702 (PI Scott Small, MD), P50 AG025688 (PI Allan Levey, MD, PhD), P50 AG047266 (PI Todd Golde, MD, PhD), P30 AG010133 (PI Andrew Saykin, PsyD), P50 AG005146 (PI Marilyn Albert, PhD), P50 AG005134 (PI Bradley Hyman, MD, PhD), P50 AG016574 (PI Ronald Petersen, MD, PhD), P50 AG005138 (PI Mary Sano, PhD), P30 AG008051 (PI Thomas Wisniewski, MD), P30 AG013854 (PI M. Marsel Mesulam, MD), P30 AG008017 (PI Jeffrey Kaye, MD), P30 AG010161 (PI David Bennett, MD), P50 AG047366 (PI Victor Henderson, MD, MS), P30 AG010129 (PI Charles DeCarli, MD), P50 AG016573 (PI Frank LaFerla, PhD), P50 AG005131 (PI James Brewer, MD, PhD), P50 AG023501 (PI Bruce Miller, MD), P30 AG035982 (PI Russell Swerdlow, MD), P30 AG028383 (PI Linda Van Eldik, PhD), P30 AG053760 (PI Henry Paulson, MD, PhD), P30 AG010124 (PI John Trojanowski, MD, PhD), P50 AG005133 (PI Oscar Lopez, MD), P50 AG005142 (PI Helena Chui, MD), P30 AG012300 (PI Roger Rosenberg, MD), P30 AG049638 (PI Suzanne Craft, PhD), P50 AG005136 (PI Thomas Grabowski, MD), P50 AG033514 (PI Sanjay Asthana, MD, FRCP), P50 AG005681 (PI John Morris, MD), P50 AG047270 (PI Stephen Strittmatter, MD, PhD).

S.W. is participating in clinical trials of antidementia drugs from Eli Lilly and Company. K.W.-B. received honoraria from Merck, Roche, T3D, Diffusion, and Biogen Companies. K.W.-B. is currently receiving funding from Takeda Pharmaceutical Company in her role as the Neuropsychology Lead for the TOMMORROW clinical trial program. D.M. serves as a consultant for Janssen. Neither J.C.M. nor his family owns stock or has equity interest (outside of mutual funds or other externally directed accounts) in any pharmaceutical or biotechnology company. J.C.M. is currently participating in clinical trials of antidementia drugs from Eli Lilly and Company, Biogen, and Janssen. J.C.M. serves as a consultant for Lilly USA. He receives research support from Eli Lilly/Avid Radiopharmaceuticals and is funded by NIH grants #P50 AG005681; P01AG003991; P01AG026276; and UF01AG032438. The remaining authors declare no conflicts of interest.

REFERENCES

- 1.Beekly DL, Ramos EM, Lee WW, et al. The National Alzheimer’s Coordinating Center (NACC) database: the uniform data set. Alzheimer Dis Assoc Disord. 2007;21:249–258. [DOI] [PubMed] [Google Scholar]

- 2.Weintraub S, Salmon D, Mercaldo N, et al. The Alzheimer’s Disease Centers' Uniform Data Set (UDS): the neuropsychologic test battery. Alzheimer Dis Assoc Disord. 2009;23:91–101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Morris JC. The clinical dementia rating (CDR): current version and scoring rules. Neurology. 1993;43:2412–2414. [DOI] [PubMed] [Google Scholar]

- 4.Morris JC, Weintraub S, Chui HC, et al. The uniform data set (UDS): clinical and cognitive variables and descriptive data from Alzheimer Disease Centers. Alzheimer Dis Assoc Disord. 2006;20:210–216. [DOI] [PubMed] [Google Scholar]

- 5.Beekly DL, Ramos EM, van Belle G, et al. The National Alzheimer’s Coordinating Center (NACC) Database: an Alzheimer disease database. Alzheimer Dis Assoc Disord. 2004;18:270–277. [PubMed] [Google Scholar]

- 6.Shirk SD, Mitchell MB, Shaughnessy LW, et al. A web-based normative calculator for the uniform data set (UDS) neuropsychological test battery. Alzheimers Res Ther. 2011;3:32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Jack CR, Jr, Knopman DS, Jagust WJ, et al. Hypothetical model of dynamic biomarkers of the Alzheimer’s pathological cascade. Lancet Neurol. 2010;9:119–128. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Sperling RA, Aisen PS, Beckett LA, et al. Toward defining the preclinical stages of Alzheimer's disease: recommendations from the National Institute on aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement. 2011;7:280–292. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Galasko D, Katzman R, Salmon DP, et al. Clinical and neuropathological findings in Lewy body dementias. Brain Cogn. 1996;31:166–175. [DOI] [PubMed] [Google Scholar]

- 10.Salmon DP, Galasko D, Hansen LA, et al. Neuropsychological deficits associated with diffuse Lewy body disease. Brain Cogn. 1996;31:148–165. [DOI] [PubMed] [Google Scholar]

- 11.Alladi S, Xuereb J, Bak T, et al. Focal cortical presentations of Alzheimer’s disease. Brain. 2007;130 (pt 10):2636–2645. [DOI] [PubMed] [Google Scholar]

- 12.Hof PR, Vogt BA, Bouras C, et al. Atypical form of Alzheimer’s disease with prominent posterior cortical atrophy: a review of lesion distribution and circuit disconnection in cortical visual pathways. Vision Res. 1997;37:3609–3625. [DOI] [PubMed] [Google Scholar]

- 13.Mendez MF, Ghajarania M, Perryman KM. Posterior cortical atrophy: clinical characteristics and differences compared to Alzheimer’s disease. Dement Geriatr Cogn Disord. 2002;14:33–40. [DOI] [PubMed] [Google Scholar]

- 14.Folstein MF, Folstein SE, McHugh PR. Mini Mental State Examination. Lutz, Florida: Psychological Assessment Resources; 2004. [Google Scholar]

- 15.Monsell SE, Dodge HH, Zhou XH, et al. Results from the NACC Uniform Data Set neuropsychological battery crosswalk study. Alzheimer Dis Assoc Disord. 2016;30:134–139. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Nasreddine ZS, Phillips N, Chertkow H. Normative data for the montreal cognitive assessment (MoCA) in a population-based sample. Neurology. 2012;78:765–766. author reply 766. [DOI] [PubMed] [Google Scholar]

- 17.Nasreddine ZS, Phillips NA, Bedirian V, et al. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005;53:695–699. [DOI] [PubMed] [Google Scholar]

- 18.Wechsler D. Wechsler Memory Scale-Revised Manual. San Antonio, Texas: The Psychological Corporation; 1987. [Google Scholar]

- 19.Craft S, Newcomer J, Kanne S, et al. Memory improvement following induced hyperinsulinemia in Alzheimer’s disease. Neurobiol Aging. 1996;17:123–130. [DOI] [PubMed] [Google Scholar]

- 20.Ivanova I, Salmon DP, Gollan TH. The multilingual naming test in Alzheimer’s disease: clues to the origin of naming impairments. J Int Neuropsychol Soc. 2013;19:272–283.

- 21.Larner AJ. Screening utility of the Montreal Cognitive Assessment (MoCA): in place of—or as well as—the MMSE? Int Psychogeriatr. 2012;24:391–396.

- 22.Trzepacz PT, Hochstetler H, Wang S, et al. Alzheimer’s Disease Neuroimaging I. Relationship between the Montreal Cognitive Assessment and mini-mental state examination for assessment of mild cognitive impairment in older adults. BMC Geriatr. 2015;15:107.

- 23.Lam B, Middleton LE, Masellis M, et al. Criterion and convergent validity of the Montreal Cognitive Assessment with screening and standardized neuropsychological testing. J Am Geriatr Soc. 2013;61:2181–2185.

- 24.Goldstein FC, Ashley AV, Miller E, et al. Validity of the Montreal Cognitive Assessment as a screen for mild cognitive impairment and dementia in African Americans. J Geriatr Psychiatry Neurol. 2014;27:199–203.

- 25.Rossetti HC, Lacritz LH, Cullum CM, et al. Normative data for the Montreal Cognitive Assessment (MoCA) in a population-based sample. Neurology. 2011;77:1272–1275.

- 26.Julayanont P, Tangwongchai S, Hemrungrojn S, et al. The Montreal Cognitive Assessment-basic: a screening tool for mild cognitive impairment in illiterate and low-educated elderly adults. J Am Geriatr Soc. 2015;63:2550–2554.

- 27.Luis CA, Keegan AP, Mullan M. Cross validation of the Montreal Cognitive Assessment in community dwelling older adults residing in the Southeastern US. Int J Geriatr Psychiatry. 2009;24:197–201.

- 28.Roalf DR, Moberg PJ, Xie SX, et al. Comparative accuracies of two common screening instruments for classification of Alzheimer’s disease, mild cognitive impairment, and healthy aging. Alzheimers Dement. 2013;9:529–537.

- 29.Johnson N, Barion A, Rademaker A, et al. The activities of daily living questionnaire: a validation study in patients with dementia. Alzheimer Dis Assoc Disord. 2004;18:223–230.

- 30.Durant J, Leger GC, Banks SJ, et al. Relationship between the activities of daily living questionnaire and the Montreal Cognitive Assessment. Alzheimers Dement. 2016;4:43–46.

- 31.Julayanont P, Brousseau M, Chertkow H, et al. Montreal Cognitive Assessment memory index score (MoCA-MIS) as a predictor of conversion from mild cognitive impairment to Alzheimer’s disease. J Am Geriatr Soc. 2014;62:679–684.

- 32.Conti S, Bonazzi S, Laiacona M, et al. Montreal Cognitive Assessment (MoCA)-Italian version: regression based norms and equivalent scores. Neurol Sci. 2015;36:209–214.

- 33.McKhann G, Drachman D, Folstein M, et al. Clinical diagnosis of Alzheimer’s disease: Report of the NINCDS-ADRDA Work Group under the auspices of Department of Health and Human Services Task Force on Alzheimer’s disease. Neurology. 1984;34:939–944.

- 34.Craft S, Asthana S, Schellenberg G, et al. Insulin effects on glucose metabolism, memory, and plasma amyloid precursor protein in Alzheimer’s disease differ according to apolipoprotein-E genotype. Ann NY Acad Sci. 2000;903:222–228.

- 35.Conroy SK, McDonald BC, Ahles TA, et al. Chemotherapy-induced amenorrhea: a prospective study of brain activation changes and neurocognitive correlates. Brain Imaging Behav. 2013;7:491–500.

- 36.Conroy SK, McDonald BC, Smith DJ, et al. Alterations in brain structure and function in breast cancer survivors: effect of post-chemotherapy interval and relation to oxidative DNA damage. Breast Cancer Res Treat. 2013;137:493–502.

- 37.Ferguson RJ, Ahles TA, Saykin AJ, et al. Cognitive-behavioral management of chemotherapy-related cognitive change. Psychooncology. 2007;16:772–777.

- 38.McDonald BC, Flashman LA, Arciniegas DB, et al. Methylphenidate and memory and attention adaptation training for persistent cognitive symptoms after traumatic brain injury: a randomized, placebo-controlled trial. Neuropsychopharmacology. 2017:1766–1775.

- 39.Possin KL, Laluz VR, Alcantar OZ, et al. Distinct neuroanatomical substrates and cognitive mechanisms of figure copy performance in Alzheimer’s disease and behavioral variant frontotemporal dementia. Neuropsychologia. 2011;49:43–48.

- 40.Gollan TH, Weissburger G, Runnqvist E, et al. Self-ratings of spoken language dominance: A Multilingual Naming Test (MINT) and preliminary norms for young and aging Spanish–English bilinguals. Biling Lang Cogn. 2011;13:215–218.

- 41.Possin KL. Visual spatial cognition in neurodegenerative disease. Neurocase. 2010;16:466–487.