Abstract

Introduction

Automated electronic sniffers may be useful for early detection of acute respiratory distress syndrome (ARDS) for institution of treatment or clinical trial screening.

Methods

In a prospective cohort of 2929 critically ill patients, we retrospectively applied published sniffer algorithms for automated detection of acute lung injury to assess their utility in diagnosis of ARDS in the first 4 ICU days. Radiographic full-text reports were searched for “edema” OR (“bilateral” AND “infiltrate”) and, separately, with a more detailed algorithm for descriptions consistent with ARDS. Patients were flagged as possible ARDS if a radiograph met search criteria and they had a PaO2/FiO2 ≤300 or an SpO2/FiO2 ≤315. Test characteristics of the electronic sniffers and clinical suspicion of ARDS were compared to a gold standard of 2-physician adjudicated ARDS.

Results

Thirty percent of 2841 patients included in the analysis had gold standard diagnosis of ARDS. The simpler algorithm had sensitivity for ARDS of 78.9%, specificity of 52%, positive predictive value (PPV) of 41%, and negative predictive value (NPV) of 85.3% over the 4-day study period. The more detailed algorithm had sensitivity of 88.2%, specificity of 55.4%, PPV of 45.6%, and NPV of 91.7%. Both algorithms were more sensitive but less specific than clinician suspicion, which had sensitivity of 40.7%, specificity of 94.8%, PPV of 78.2%, and NPV of 77.7%.

Conclusions

Published electronic sniffer algorithms for ARDS may be useful automated screening tools for ARDS and improve on clinical recognition, but they are limited to screening rather than diagnosis because their specificity is poor.

Keywords: acute lung injury, acute respiratory distress syndrome, respiratory failure, critical illness, mechanical ventilation

Introduction

Acute respiratory distress syndrome is a prevalent and morbid disease.1 Outcomes can be improved with initiation of life-saving interventions including institution of low tidal volume ventilation, adequate positive end-expiratory pressure, proning, and neuromuscular blockade, depending on disease severity.2–5 Despite the effectiveness of these interventions, clinicians often fail to recognize ARDS.1 With the broad availability of electronic medical records, the potential exists for automated recognition of possible ARDS, prompting clinicians to consider the diagnosis and institute lung-protective ventilation earlier than might otherwise occur. Moreover, with the recent increased emphasis on investigations of early treatment of ARDS, an automated electronic sniffer to rapidly detect patients with possible ARDS may serve as a useful screening tool for clinical trials.6

An electronic “sniffer” for acute lung injury (now ARDS) was described by Herasevich and colleagues based on contemporaneous chest radiograph findings and low PaO2/FiO2 ratios7 and was later extended with the use of additional clinical data for discrimination of cardiogenic from non-cardiogenic pulmonary edema8 and validated prospectively in the same institution.9 Another electronic monitor for ARDS called the ASSIST algorithm was reported to facilitate patient identification for ARDS clinical trials with good sensitivity.6,10 Each of these reports came from a single institution or a single medical system, and the algorithms have not been validated externally. We sought to validate the previously reported electronic sniffer algorithms for use in our institution using a previously collected prospective cohort of critically ill patients at risk for ARDS within which the presence or absence of ARDS was already adjudicated.

Methods

Patients

The study population was derived from the Validating Acute Lung Injury Markers for Diagnosis (VALID) study, consisting of 2,929 patients enrolled between January 2006 and August 2014. VALID, a prospective cohort study approved by Vanderbilt University’s Human Research Protection Program, enrolls adult patients who are admitted to the Vanderbilt University Hospital medical, surgical, trauma or cardiovascular intensive care units (ICU). As previously described,11–13 patients are enrolled on the morning of ICU day two, excluding patients for declination of consent, ICU admission for longer than 48 hours at time of screening, impending discharge from the ICU, severe chronic lung disease, uncomplicated overdose, and cardiovascular ICU patients who are not mechanically-ventilated or are post-operative.

Data Collection

In the VALID study, a broad set of demographic, past medical history, laboratory, and physiological data is collected by trained study nurses on the morning of cohort enrollment, encompassing the preceding 24 hours. Subsequent laboratory and physiologic data are collected each morning up until the morning of ICU calendar day 5, with each study day reflecting the values for the previous 24 hours. The physiologic variables collected include the lowest and the highest PaO2/FiO2 ratio and SpO2/FiO2 ratio for each 24-hour period. In addition to data collected in VALID, for the current study we retrospectively captured the electronic full text of all radiographic reports from the electronic clinical records during the calendar day prior to ICU admission through 96 hours after ICU admission.

Endpoints

In VALID, the presence of acute lung injury, as defined by the American-European Consensus Conference (AECC) definition,14 is prospectively adjudicated by two-physician consensus review for each of the first four ICU days through review of the chest radiographs and clinical data, and for the purposes of this study is taken as the gold standard for acute lung injury diagnosis. Hydrostatic pulmonary edema is diagnosed when chart review demonstrates that the radiographic findings are likely due to elevated left-sided cardiac filling pressures. As the newer Berlin definition of ARDS15 supplants the older AECC definition of acute lung injury, we refer to the outcome as ARDS through the remainder of the manuscript.

The free text Boolean query “edema” OR (“bilateral” AND “infiltrate”), matching the search criteria from the Herasevich sniffer,7 was run against all radiographic reports. Due to the structure of the available data precluding restriction to chest radiograph reports alone, the query was run against all radiology reports. Not all of the patients had an arterial blood gas performed, so we used the previously described cutoff of SpO2/FiO2 less than or equal to 315 as an alternative to PaO2/FiO2 ratio of less than or equal to 300.16 Schmidt et al demonstrated strong agreement in the Herasevich algorithm when employing PaO2/FiO2 or SpO2/FiO2 ratios.17 The initial Herasevich screen used a positive chest radiograph report and a PaO2/FiO2 ratio of less than 300 within 24 hours of each other as a positive screen for acute lung injury. We altered this slightly by flagging patients as possible ARDS by Herasevich criteria if during a clinical day (morning to morning) in the VALID cohort, a radiographic report met the Boolean criteria and the lowest SpO2/FiO2 ratio or PaO2/FiO2 ratio of the same day was less than or equal to 315 or 300, respectively.
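The adapted Herasevich screen described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names `report_matches` and `flag_day` are hypothetical, and case-insensitive substring matching is an assumption (the text does not say how word variants such as "infiltrates" were handled). Only the query terms and the cutoffs of 300 and 315 come from the text.

```python
# Cutoffs quoted in the text; all names below are illustrative.
PF_CUTOFF = 300  # PaO2/FiO2: day qualifies when lowest value <= cutoff
SF_CUTOFF = 315  # SpO2/FiO2: day qualifies when lowest value <= cutoff


def report_matches(report_text):
    """Return True for "edema" OR ("bilateral" AND "infiltrate").

    Assumption: case-insensitive substring matching, so "infiltrates"
    and "bilaterally" also match.
    """
    text = report_text.lower()
    return "edema" in text or ("bilateral" in text and "infiltrate" in text)


def flag_day(reports, lowest_pf=None, lowest_sf=None):
    """Flag one clinical day (morning to morning) as possible ARDS.

    reports   -- full-text radiology reports from that clinical day
    lowest_pf -- lowest PaO2/FiO2 of the day, or None if no blood gas
    lowest_sf -- lowest SpO2/FiO2 of the day, or None if unavailable
    """
    radiograph_positive = any(report_matches(r) for r in reports)
    hypoxemic = (
        (lowest_pf is not None and lowest_pf <= PF_CUTOFF)
        or (lowest_sf is not None and lowest_sf <= SF_CUTOFF)
    )
    return radiograph_positive and hypoxemic
```

Because both criteria are evaluated within the same clinical day, a qualifying report on one day and qualifying hypoxemia on the next would not produce a flag in this sketch, which mirrors the same-day restriction described in the text.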

To adapt the ASSIST sniffer algorithm, we performed a free text Boolean query across all radiologic reports for phrases consistent with acute lung injury or terms describing bilaterality and infiltrates in the same sentence as described by Azzam et al.10 A positive screen by the ASSIST criteria was defined by a positive flag by the Boolean search and a PaO2/FiO2 ratio or SpO2/FiO2 ratio of less than or equal to 300 or 315, respectively, during the same day in the VALID study set.
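A same-sentence search of the kind described for the ASSIST adaptation might look like the sketch below. The term lists are hypothetical placeholders, since the published ASSIST vocabulary is not reproduced in this text, and the sentence splitter is deliberately naive. The point is only to show how requiring bilaterality and infiltrate terms in the same sentence differs from a whole-report Boolean match.

```python
import re

# Hypothetical term lists for illustration only; they are NOT the
# published ASSIST vocabulary.
BILATERAL_TERMS = ("bilateral", "both lungs", "diffuse")
INFILTRATE_TERMS = ("infiltrate", "opacit", "consolidation")
ALI_PATTERN = re.compile(r"\b(ards|acute lung injury)\b")


def split_sentences(text):
    """Naive splitter on periods, semicolons, and newlines (an assumption)."""
    parts = re.split(r"[.;\n]+", text.lower())
    return [p.strip() for p in parts if p.strip()]


def assist_like_match(report_text):
    """True if any single sentence names ARDS/ALI outright, or contains
    both a bilaterality term and an infiltrate term."""
    for sentence in split_sentences(report_text):
        if ALI_PATTERN.search(sentence):
            return True
        has_bilateral = any(t in sentence for t in BILATERAL_TERMS)
        has_infiltrate = any(t in sentence for t in INFILTRATE_TERMS)
        if has_bilateral and has_infiltrate:
            return True
    return False
```

In this sketch a report reading "Bilateral pleural effusions. Right basilar infiltrate." does not match, because the two terms fall in different sentences, whereas a whole-report AND query would match it.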

In addition to applying the Herasevich and ASSIST sniffers independently, we created a third screen for possible ARDS that was positive if either the Herasevich or ASSIST radiograph and oxygenation criteria were met in a clinical day (referred to as “combined screen” moving forward).

We also retrospectively determined the presence of clinician suspicion of ARDS among a sub-cohort of VALID consisting of 1461 medical ICU patients. Clinician suspicion of ARDS was determined by a systematic search of clinical documentation for a possible or probable diagnosis of ARDS during the ICU stay using key words including “acute lung injury”, “acute respiratory distress syndrome”, “ALI”, and “ARDS”. Any documents containing the search phrases were then manually reviewed for the context and assigned as clinical suspicion for ARDS present or absent. The accuracy of this electronic search for clinical suspicion of ARDS was verified by manual review of all clinical documentation from a randomly selected 100 patients in the VALID cohort.

Statistical Analyses

Characteristics of the study population are presented as median and inter-quartile range for continuous data and frequency and proportion for categorical data. Test characteristics of the Herasevich, ASSIST, and combined sniffers were assessed versus the gold standard of VALID diagnosis of ARDS, including sensitivity, specificity, negative predictive value (NPV), and positive predictive value (PPV), with 95% confidence intervals by binomial distribution. For the primary analysis, the four ICU days were pooled such that a sniffer was positive if it was positive on any day, and the patient was identified as having ARDS if they had adjudicated ARDS on any day. Sensitivity analyses were performed restricting the population to patients undergoing mechanical ventilation at the time of cohort enrollment, excluding patients with severe traumatic injuries, and assessing performance of the screen for individual patients over the course of their first four ICU days. Furthermore, the timing of initial flag by the sniffer versus by the gold standard diagnosis was examined. To approximate the use of a sniffer for screening in practice, the cumulative sensitivity of true-positive flags by the sniffers was calculated by ICU day. Finally, similar comparisons were made between the clinician suspicion of ARDS and the gold standard in the MICU subgroup.
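As a worked illustration of these test characteristics, the sketch below computes sensitivity, specificity, PPV, and NPV from a 2x2 table. Two assumptions are worth stating plainly. First, the text says only that confidence intervals were obtained "by binomial distribution"; the Wilson score interval used here is a stand-in choice and may differ slightly from the authors' method. Second, the example counts are back-calculated from figures reported elsewhere in the paper for the Herasevich sniffer (845 ARDS, 1,996 non-ARDS, 1,626 flagged, sensitivity 78.9%) and are not reported directly. All function names are illustrative.

```python
import math


def wilson_ci(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 ~ 95%)."""
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


def screen_metrics(tp, fp, fn, tn):
    """Return {name: (estimate, (ci_low, ci_high))} for a 2x2 table."""
    cells = {
        "sensitivity": (tp, tp + fn),
        "specificity": (tn, tn + fp),
        "ppv": (tp, tp + fp),
        "npv": (tn, tn + fn),
    }
    return {name: (k / n, wilson_ci(k, n)) for name, (k, n) in cells.items()}


def pooled_any_day(daily_values):
    """Pool ICU days as in the primary analysis: positive on any day."""
    return any(daily_values)


# Counts back-calculated from reported percentages (an assumption).
herasevich = screen_metrics(tp=667, fp=959, fn=178, tn=1037)
for name, (estimate, (low, high)) in herasevich.items():
    print(f"{name}: {estimate:.1%} ({low:.1%}-{high:.1%})")
```

`pooled_any_day` would be applied to both the four daily sniffer flags and the four daily adjudications of each patient before the 2x2 table is tallied.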

Results

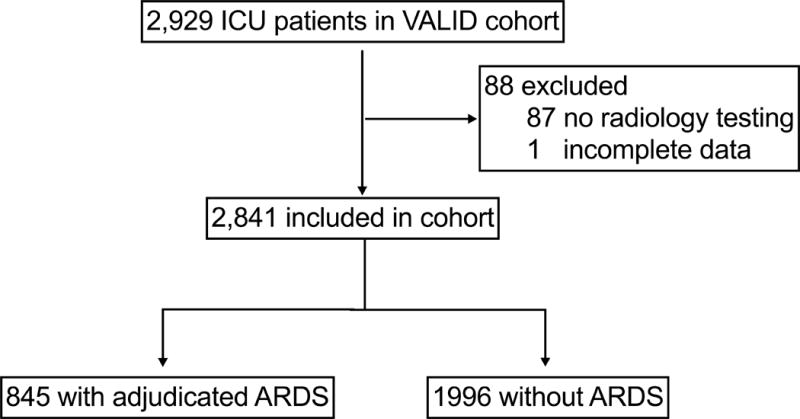

The VALID cohort contains 2,929 patients, of whom one had incomplete data and 87 had no radiology testing. Of the remaining 2,841 cohort participants, 845 were identified by prospective physician adjudication to have ARDS (see Figure 1). Participant characteristics are summarized in Table 1 for the cohort as a whole and divided by those with or without adjudicated ARDS. Median participant age is 55 years [IQR 42–65 years], and median APACHE II score is 25 [IQR 20–31]. The most common ARDS risk factors are sepsis (44.6%), severe trauma (28.2%), and pneumonia (21.1%). Additional demographic information is displayed in Table 1.

Figure 1.

Study Population

Table 1.

Summary Characteristics of Total Cohort and by ARDS Diagnosis

| | Overall Cohort | No ARDS | ARDS | P value |
|---|---|---|---|---|
| | N=2841 | N=1996 | N=845 | |
| Age (years) | 55.0 [42.0;65.0] | 55.0 [43.0;66.0] | 53.0 [39.0;64.0] | 0.011 |
| APACHE II | 25.0 [20.0;31.0] | 24.0 [19.0;30.0] | 27.0 [22.0;33.0] | <0.001 |
| Weight (kg) | 80.0 [68.0;96.0] | 80.0 [68.0;98.0] | 78.0 [66.0;93.0] | 0.001 |
| Male | 1710 (60.2%) | 1215 (60.9%) | 495 (58.6%) | 0.272 |
| Mechanically Ventilated | 1980 (69.7%) | 1311 (65.7%) | 669 (79.2%) | <0.001 |
| NIPPV | 111 (3.91%) | 70 (3.51%) | 41 (4.85%) | 0.113 |
| Source of ICU Admission | | | | <0.001 |
| Emergency Department | 1274 (45.4%) | 902 (45.8%) | 372 (44.3%) | |
| Floor Transfer | 527 (18.8%) | 301 (15.3%) | 226 (26.9%) | |
| OSH Transfer | 498 (17.7%) | 355 (18.0%) | 143 (17.0%) | |
| OR | 457 (16.3%) | 364 (18.5%) | 93 (11.1%) | |
| ICU Type | | | | <0.001 |
| SICU | 471 (16.6%) | 355 (17.8%) | 116 (13.7%) | |
| MICU | 1461 (51.4%) | 1001 (50.2%) | 460 (54.4%) | |
| Trauma Unit | 825 (29.0%) | 562 (28.2%) | 263 (31.1%) | |
| Cardiac ICU | 83 (2.92%) | 77 (3.86%) | 6 (0.71%) | |
| Medical History | | | | |
| Current Smoker | 1009 (35.5%) | 698 (35.0%) | 311 (36.8%) | 0.373 |
| Alcohol Abuse | 529 (18.6%) | 378 (18.9%) | 151 (17.9%) | 0.538 |
| Diabetes | 724 (25.5%) | 527 (26.4%) | 197 (23.3%) | 0.093 |
| Chronic Liver Disease | 279 (9.82%) | 208 (10.4%) | 71 (8.40%) | 0.113 |
| Atrial Fibrillation | 214 (7.53%) | 149 (7.46%) | 65 (7.69%) | 0.895 |
| Congestive Heart Failure | 299 (10.5%) | 223 (11.2%) | 76 (8.99%) | 0.096 |
| Coronary Artery Disease | 302 (10.6%) | 242 (12.1%) | 60 (7.10%) | <0.001 |
| Stroke | 190 (6.69%) | 140 (7.01%) | 50 (5.92%) | 0.323 |
| Hypertension | 1297 (45.7%) | 931 (46.6%) | 366 (43.3%) | 0.112 |
| Hyperlipidemia | 688 (24.2%) | 512 (25.7%) | 176 (20.8%) | 0.007 |
| Chronic Kidney Disease | 451 (15.9%) | 336 (16.8%) | 115 (13.6%) | 0.036 |
| Dialysis | 128 (4.51%) | 101 (5.06%) | 27 (3.20%) | 0.036 |
| ARDS Risk Factor | | | | |
| Sepsis | 1268 (44.6%) | 759 (38.0%) | 509 (60.2%) | <0.001 |
| Pneumonia | 599 (21.1%) | 281 (14.1%) | 318 (37.6%) | <0.001 |
| Pancreatitis | 39 (1.37%) | 33 (1.65%) | 6 (0.71%) | 0.072 |
| Trauma | 801 (28.2%) | 542 (27.2%) | 259 (30.7%) | 0.065 |
| Transfusion | 439 (15.5%) | 373 (18.7%) | 66 (7.81%) | <0.001 |
| Overdose | 48 (1.69%) | 43 (2.15%) | 5 (0.59%) | 0.005 |
| Aspiration | 216 (7.60%) | 80 (4.01%) | 136 (16.1%) | <0.001 |
| Drowning | 3 (0.11%) | 2 (0.10%) | 1 (0.12%) | 1 |
| Other | 33 (1.16%) | 10 (0.50%) | 23 (2.72%) | <0.001 |
| None | 399 (14.0%) | 398 (19.9%) | 1 (0.12%) | <0.001 |
| ARDS Risk Type | | | | <0.001 |
| Direct | 790 (32.1%) | 354 (21.9%) | 436 (51.7%) | |
| Indirect | 1670 (67.9%) | 1262 (78.1%) | 408 (48.3%) | |

Data are presented as median [25th percentile – 75th percentile] or number (percentage). APACHE II is Acute Physiology and Chronic Health Evaluation II – ranging from 0 to 71 with higher scores indicating higher severity of illness. P-value is for the between-groups comparison by Wilcoxon rank-sum test for continuous data or Pearson’s Chi-square test or Fisher’s Exact test for categorical data, as appropriate.

The Herasevich sniffer flagged 1,626 patients as possible ARDS over the four study days. Pooled across the study days (a positive flag on any day versus adjudicated ARDS on any day), the sniffer had a sensitivity of 78.9% (95% CI 76.0-81.6) and a specificity of 52.0% (95% CI 49.7-54.2), with PPV and NPV of 41.0% (95% CI 38.6-43.5) and 85.3% (95% CI 83.2-87.3), respectively (Table 2). However, sensitivity was greatest on the first ICU day (61.3%) and fell below 40% on each of ICU days 2-4 (Appendix A). The ASSIST sniffer outperformed the Herasevich criteria, flagging a total of 1,635 patients in the first four ICU days with an overall sensitivity of 88.2% (95% CI 85.8-90.3) and specificity of 55.4% (95% CI 53.2-57.6) (Table 2). Similar to the Herasevich sniffer, the ASSIST sniffer performed best on the first day (76.7% sensitive and 70.5% specific) and had lower sensitivity on subsequent days, flagging fewer than half of ARDS cases on ICU days 2-4 (Appendix A). As the ASSIST sniffer does not entirely overlap with the Herasevich sniffer (the isolated word “edema” flags for Herasevich but not ASSIST), we additionally considered a combined screen that was positive if either sniffer was positive. This increased sensitivity to 85% (95% CI 82-87.6) on ICU day 1 and 93.8% (95% CI 92-95.4) over the course of the four-day study period (Tables 2 and 3).

Table 2.

Test Characteristics of Sniffers and Clinician Recognition

| Algorithm | Period | Sensitivity | Specificity | PPV | NPV |
|---|---|---|---|---|---|
| ASSIST | Days 1–4 | 88.2% (85.8–90.3) | 55.4% (53.2–57.6) | 45.6% (43.1–48) | 91.7% (90–93.2) |
| Herasevich | Days 1–4 | 78.9% (76–81.6) | 52% (49.7–54.2) | 41% (38.6–43.5) | 85.3% (83.2–87.3) |
| Combined | Days 1–4 | 93.8% (92–95.4) | 45.1% (42.9–47.3) | 42% (39.7–44.2) | 94.5% (92.9–95.9) |
| Clinical Recognition | Days 1–4 | 40.7% (36.1–45.3) | 94.8% (93.2–96.1) | 78.2% (72.5–83.3) | 77.7% (75.2–80) |

Data are presented as percentage (95% confidence interval).

Table 3.

Test Characteristics of Combined Sniffer by ICU Day

| Algorithm | Period | Sensitivity | Specificity | PPV | NPV |
|---|---|---|---|---|---|
| Combined | Day 1 | 85% (82–87.6) | 58.9% (56.8–61) | 38.2% (35.7–40.7) | 92.9% (91.5–94.2) |
| Combined | Day 2 | 53.7% (49.9–57.5) | 79.6% (77.8–81.2) | 45.4% (41.9–48.9) | 84.5% (82.8–86) |
| Combined | Day 3 | 50.7% (46.8–54.5) | 84.6% (83–86.1) | 50.2% (46.4–54.1) | 84.8% (83.2–86.3) |
| Combined | Day 4 | 51.7% (47.8–55.7) | 86.4% (84.9–87.8) | 52% (48–56) | 86.3% (84.8–87.7) |
| Combined | Days 1–4 | 93.8% (92–95.4) | 45.1% (42.9–47.3) | 42% (39.7–44.2) | 94.5% (92.9–95.9) |

Data are presented as percentage (95% confidence interval).

To mimic how an electronic sniffer might be employed in practice, we performed another analysis excluding correctly identified ARDS patients from flagging on subsequent days. Test characteristics were similar to the primary analysis (Appendix B).

As the VALID cohort employs the AECC definition of ARDS, some patients are diagnosed with ARDS in the absence of mechanical ventilation. Restricting the cohort to patients who were invasively mechanically ventilated did not meaningfully change the sensitivity of the flags (not shown). Additional analyses excluding patients with trauma or dividing the cohort by direct or indirect ALI risk factors also did not substantively alter the test characteristics (not shown).

Compared with either of the sniffers, clinical suspicion for ARDS in the medical ICU subgroup was insensitive for the presence of ARDS, at 40.7% over the four study days (95% CI 36.1-45.3). However, clinical suspicion of ARDS had a much higher PPV, at 78.2% (95% CI 72.5-83.3). See Table 2.

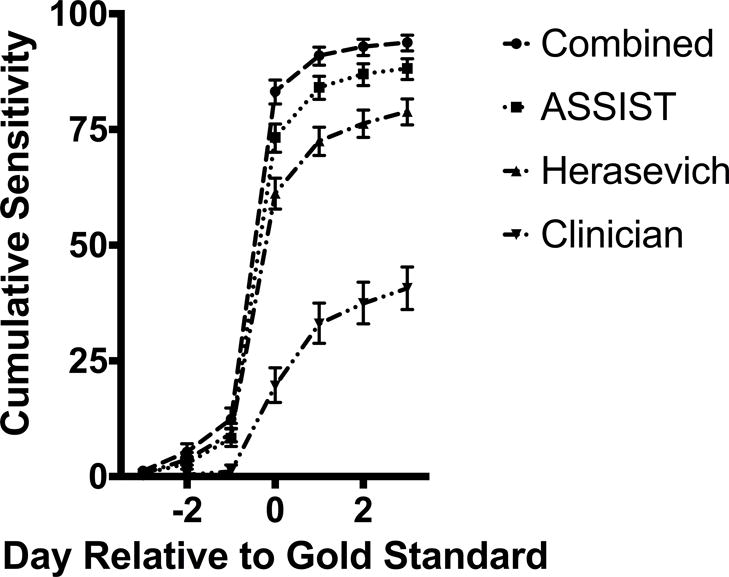

If an automated sniffer were used for early recognition of ARDS, then timing of diagnosis is important. Figure 2 displays the cumulative sensitivity of the sniffers relative to the gold standard diagnosis, and Appendix C summarizes the timing of first flag for each sniffer or clinician suspicion compared with the day of adjudicated diagnosis. Most cases first flagged positive by a sniffer on the same day as gold standard adjudication.

Figure 2.

Cumulative Daily Sensitivity of Electronic Sniffers and Clinician Suspicion of ARDS Compared to Gold Standard
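The cumulative sensitivity plotted in Figure 2 can be thought of as the fraction of gold-standard ARDS patients who have received at least one true-positive flag by a given ICU day. The sketch below is one way to compute it, assuming a hypothetical input of one entry per ARDS patient giving the ICU day of the first flag (or `None` if never flagged); the toy data are invented for illustration and are not study data.

```python
def cumulative_sensitivity(first_flag_day, n_days=4):
    """Fraction of ARDS patients flagged on or before each ICU day.

    first_flag_day -- one entry per gold-standard ARDS patient: the
                      1-based ICU day of the first sniffer flag, or
                      None if the sniffer never flagged the patient.
    """
    total = len(first_flag_day)
    curve = []
    for day in range(1, n_days + 1):
        caught = sum(1 for d in first_flag_day if d is not None and d <= day)
        curve.append(caught / total)
    return curve


# Invented toy data: ten ARDS patients, two never flagged.
toy = [1, 1, 2, None, 3, 1, 4, None, 1, 2]
print(cumulative_sensitivity(toy))
```

By construction the curve never decreases, and its value on the final day equals the pooled any-day sensitivity.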

Notably, the initial report of the ASSIST sniffer was on a population pre-screened to exclude patients with heart failure,10 and subsequent implementation applied rules to minimize inclusion of patients at high risk for heart failure.6 Similarly, the VALID cohort excludes non-intubated and post-operative patients in the cardiovascular ICU to reduce enrollment of patients with hydrostatic pulmonary edema; however, many patients ultimately determined to have hydrostatic edema are enrolled. Hydrostatic pulmonary edema accounted for just 22.4%, 24.0%, and 21.5% of false positives for the Herasevich, ASSIST, and combined flags, respectively. Moreover, exclusion of patients with a history of congestive heart failure did not meaningfully change the PPV for any of the algorithms (Appendix D).

To evaluate the reasons for incomplete sensitivity of the sniffers, the 52 patients diagnosed as ARDS by the gold standard but not identified by the combined sniffer were manually reviewed. The most common cause of sniffer insensitivity was a difference in interpretation between the reading radiologist and the gold standard. Fifteen patients had a radiologist report that included “bilateral atelectasis”, and an additional four had “bibasilar atelectasis.” Eleven patients might have been flagged positive by the Herasevich and ASSIST sniffers as originally published, but because the qualifying radiology read and oxygenation criteria fell on different clinical days in our implementation, they were not flagged positive in our analysis. Seven patients had radiology reports with the singular “opacity” describing findings on both sides of the chest rather than “opacities.” See Supplemental Table 5 for additional details.

Discussion

The application of two previously published automated algorithms for detection of ARDS in a patient cohort from a different institution demonstrated good sensitivity for ARDS over the first four days of ICU stay with good timeliness of diagnosis, significantly exceeding the sensitivity of clinician recognition. As one might expect, the more detailed chest radiograph analysis of the ASSIST algorithm was more sensitive than the Herasevich algorithm, and combining the two was more sensitive still. With good sensitivity but poor specificity, the algorithms would be best deployed as an automated screen for possible ARDS rather than as a definitive diagnosis, as the PPV for any of the flags was between 40 and 50 percent in our population. Conversely, the absence of a positive screen over the first four ICU days strongly excludes ARDS, with an NPV exceeding 90% for the ASSIST and combined flags.

Despite good sensitivity and NPV over the four ICU days, neither algorithm approaches the test characteristics described in the primary publications. The ASSIST algorithm was initially described in a 199-patient trauma cohort with an ARDS incidence similar to that of our own cohort (26.6%).10 The algorithm was 86.8% sensitive and 89.0% specific, with a PPV of 74.2% and an NPV of 94.9%. The ASSIST algorithm performance was later reported by Koenig et al in a broader population at the same institution, comprising medical, general surgery, and trauma ICU patients, with notable exclusion of high-probability CHF patients, those on FiO2 <0.5, and those not mechanically ventilated.6 The ARDS incidence in this validation population of 1270 was 6.6%, and the ASSIST algorithm had excellent sensitivity and specificity, both 97.6%, with a PPV of 73.9% and an NPV of 99.8%. In comparison, when the ASSIST algorithm was applied to our cohort, the sensitivity was 88.2% and the specificity only 55.4%. Importantly, in operationalizing the ASSIST algorithm for this investigation, we were limited to the description of the word search algorithm by Azzam et al.10 Any changes to the ASSIST algorithm that were made for the Koenig publication6 and that might have accounted for its improved performance were not described and thus not applied in our cohort. Additionally, our validation cohort, while incorporating some rules to reduce false positives from heart failure by excluding non-mechanically ventilated and post-operative CCU patients, did not implement all of the rules in the Koenig paper, including a 48-hour post-surgery exclusion in the surgical ICU, which may account for some of the difference in specificity.
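Because the cohorts compared above differ in ARDS incidence as well as in sensitivity and specificity, it can help to see how predictive values follow from all three quantities via Bayes' rule. The sketch below is an illustration rather than an analysis from the study; `predictive_values` is a hypothetical helper name. Using the ASSIST sensitivity and specificity reported for this cohort and its ARDS prevalence of 845/2841 approximately reproduces the PPV and NPV in Table 2.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) implied by Bayes' rule.

    All three arguments are proportions between 0 and 1.
    """
    tp = sensitivity * prevalence              # true-positive fraction
    fp = (1 - specificity) * (1 - prevalence)  # false-positive fraction
    fn = (1 - sensitivity) * prevalence        # false-negative fraction
    tn = specificity * (1 - prevalence)        # true-negative fraction
    return tp / (tp + fp), tn / (tn + fn)


# ASSIST in this cohort: sensitivity 88.2%, specificity 55.4%.
ppv, npv = predictive_values(0.882, 0.554, 845 / 2841)
print(f"PPV {ppv:.1%}, NPV {npv:.1%}")

# Same sensitivity and specificity at a hypothetical lower prevalence.
ppv_low, npv_low = predictive_values(0.882, 0.554, 0.066)
print(f"PPV {ppv_low:.1%}, NPV {npv_low:.1%}")
```

The second call shows that, with sensitivity and specificity held fixed, a lower prevalence pushes the PPV down and the NPV up, which is why PPV and NPV should not be compared across cohorts without reference to incidence.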

The Herasevich algorithm, with a reported sensitivity and specificity of 96% and 89%, respectively, in a population with a case incidence of 8.6%, also underperformed when applied to our patient cohort. Sensitivity in our cohort was only 78.9% over the first four ICU days, and specificity was low at 52%.

Our initial intention was to apply the sniffers for automated screening of ARDS and discrimination between ARDS and cardiogenic pulmonary edema as described by Schmickl and colleagues, but the high frequency of false positives from causes other than cardiogenic pulmonary edema renders the Schmickl discriminator algorithm less useful. Notably, the discrimination algorithm was derived and reported after manual exclusion of non-ARDS and non-cardiogenic pulmonary edema cases, which made up 40% of their positive flags.8,9

The diminished performance of the described algorithms in our cohort demonstrates the importance of local validation of externally derived screening algorithms before deployment. The differences in test characteristics reflect the challenges in applying free-text radiographic interpretation derived at one institution to another institution, where local practice patterns may lead to institution-specific or reader-specific text patterns not employed elsewhere. More advanced semantic processing with natural language processing and machine learning, while more difficult to deploy, may help equalize test characteristics across institutions.18,19

Interestingly, both algorithms were most sensitive for ARDS recognition on the day of cohort enrollment. This could be explained by a carry-forward effect of radiology reads on subsequent days, with later reads referencing clinical data and prior chest radiographs, decreasing the likelihood that the free-text report will contain the characteristic language of the search algorithms. Alternatively, it may be attributed to differential interpretation of the x-rays by the cohort-adjudication physicians and the radiologists.

This validation study has a number of strengths. In particular, although ARDS case definition can be challenging in the absence of a purely objective diagnostic test,20 the use of a prospectively collected cohort with endpoint adjudication independent of our study lends validity to the gold standard diagnostic determination. Moreover, it includes a broad population of patients from multiple types of ICUs. Our study also has some limitations. Whereas prior investigators instituted an automated rolling 24-hour search for the dual radiographic and oxygenation criteria, we were limited to concordance of criteria on a single ICU day due to the data collection algorithm for the VALID study. This is unlikely to have significantly altered the findings, as true ARDS does not rapidly resolve and should be present on multiple days. However, a rolling 24-hour period in practice would have identified 11 additional cases of ARDS. We also used SpO2/FiO2 data when arterial blood gas data were not available. As previously demonstrated, SpO2/FiO2 criteria for ARDS are a reasonable extension and have been shown to provide similar findings17 as well as identify patients with similar clinical outcomes.21 Use of the SpO2/FiO2 allows for deployment of the algorithm in a population where arterial blood gases are not frequently obtained; however, it is conceivable that adding SpO2/FiO2 criteria reduced the specificity of the sniffers compared with their original implementations. Furthermore, we applied the free-text search algorithms to all radiology reports rather than restricting them to chest radiograph reports because of the structure of the available data. This may have contributed to excess false positive rates and reduced the PPV. Finally, the clinician recognition was derived from electronic charting, and the delay between suspicion for ARDS and documentation of that suspicion in routine clinical care may have led to the appearance of more delayed clinical recognition of ARDS than in true practice. Nonetheless, clinician documentation of suspicion of ARDS compared with its presence by gold standard suggests significant under-recognition.

Conclusion

Two previously published sniffer algorithms may be useful automated tools to screen for ARDS in the electronic medical record, with good sensitivity when applied at a new institution that is well above that of clinician recognition. However, their use is limited to screening rather than diagnosis because specificity was poor.

Supplementary Material

Acknowledgments

Source of Funding: Dr. Ware was supported by funding from NIH HL103836. Dr. Wanderer was supported by funding from the Foundation for Anesthesia Education and Research (FAER, Schaumburg, IL, USA) and Anesthesia Quality Institute (AQI, Schaumburg, IL, USA)’s Health Service Research Mentored Research Training Grant (HSR-MRTG). The funding institutions had no role in (1) conception, design, or conduct of the study, (2) collection, management, analysis, interpretation, or presentation of the data, or (3) preparation, review, or approval of the manuscript.

Footnotes

Conflicts of Interest: The authors declare no potential conflicts of interest.

Guarantor statement: A.C.M., J.P.W, and L.B.W. had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

Author contributions: Study concept and design: A.C.M., J.P.W.; Acquisition of data: J.P.W., R.M.B., L.B.W.; Analysis and interpretation of data: A.C.M., J.P.W.; Drafting of the manuscript: A.C.M., J.P.W.; Critical revision of the manuscript for important intellectual content: A.C.M., J.P.W., L.B.W., R.M.B.; Statistical analysis: A.C.M.

Some of the results of this study have been previously reported in the form of abstracts:

Brown RM, Semler MW, Zhao Z, Koyama T, Janz D, Bastarache JA, and Ware L. Clinician Recognition of ARDS in the Medical Intensive Care Unit. Am J Respir Crit Care Med 193;2016:A1831.

McKown AC, Ware LB, Wanderer JP. External Validity of Electronic Sniffers for Automated Recognition of Acute Respiratory Distress Syndrome. Am J Respir Crit Care Med 195;2017:A6806.

References

- 1.Bellani G, Laffey JG, Pham T, Fan E, Brochard L, Esteban A, Gattinoni L, van Haren F, Larsson A, McAuley DF, Ranieri M, Rubenfeld G, Thompson BT, Wrigge H, Slutsky AS, Pesenti A, for the LUNG SAFE Investigators and the ESICM Trials Group Epidemiology, Patterns of Care, and Mortality for Patients With Acute Respiratory Distress Syndrome in Intensive Care Units in 50 Countries. JAMA. 2016;315(8):788–800. doi: 10.1001/jama.2016.0291. [DOI] [PubMed] [Google Scholar]

- 2.The Acute Respiratory Distress Syndrome Network. Ventilation with Lower Tidal Volumes as Compared with Traditional Tidal Volumes for Acute Lung Injury and the Acute Respiratory Distress Syndrome. N Engl J Med. 2000;342(18):1301–1308. doi: 10.1056/NEJM200005043421801. [DOI] [PubMed] [Google Scholar]

- 3.Briel M, Meade M, Mercat A, Brower RG, Talmor D, Slutsky AS, Pullenayegum E, Zhou Q, Cook D, Brochard L, Richard J-CM, Lamontagne F, Bhatnagar N, Stewart TE, Guyatt G. Higher vs lower positive end-expiratory pressure in patients with acute lung injury and acute respiratory distress syndrome: Systematic review and meta-analysis. JAMA. 2010;303(9):865–873. doi: 10.1001/jama.2010.218. [DOI] [PubMed] [Google Scholar]

- 4.Guérin C, Reignier J, Richard J-C, Beuret P, Gacouin A, Boulain T, Mercier E, Badet M, Mercat A, Baudin O, Clavel M, Chatellier D, Jaber S, Rosselli S, Mancebo J, Sirodot M, Hilbert G, Bengler C, Richecoeur J, Gainnier M, Bayle F, Bourdin G, Leray V, Girard R, Baboi L, Ayzac L, for the PROSEVA Study Group Prone Positioning in Severe Acute Respiratory Distress Syndrome. N Engl J Med. 2013;368(23):2159–2168. doi: 10.1056/NEJMoa1214103. [DOI] [PubMed] [Google Scholar]

- 5.Papazian L, Forel J-M, Gacouin A, Penot-Ragon C, Perrin G, Loundou A, Jaber S, Arnal J-M, Constantin J-M, Courant P, Lefrant J-Y, Guérin C, Prat G, Morange S, Roch A, for the ACURASYS Study Investigators Neuromuscular Blockers in Early Acute Respiratory Distress Syndrome. N Engl J Med. 2010;363(12):1107–1116. doi: 10.1056/NEJMoa1005372. [DOI] [PubMed] [Google Scholar]

- 6.Koenig HC, Finkel BB, Khalsa SS, Lanken PN, Prasad M, Urbani R, Fuchs BS. Performance of an automated electronic acute lung injury screening system in intensive care unit patients. Crit Care Med. 2011;39(1):98–104. doi: 10.1097/CCM.0b013e3181feb4a0. [DOI] [PubMed] [Google Scholar]

- 7.Herasevich V, Yilmaz M, Khan H, Hubmayr RD, Gajic O. Validation of an electronic surveillance system for acute lung injury. Intensive Care Med. 2009;35(6):1018–1023. doi: 10.1007/s00134-009-1460-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Schmickl CN, Shahjehan K, Li G, Dhokarh R, Kashyap R, Janish C, Alsara A, Jaffe AS, Hubmayr RD, Gajic O. Decision support tool for early differential diagnosis of acute lung injury and cardiogenic pulmonary edema in medical critically ill patients. Chest. 2012;141(1):43–50. doi: 10.1378/chest.11-1496. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Schmickl CN, Pannu S, Al-Qadi MO, Alsara A, Kashyap R, Dhokarh R, Hersevich V, Gajic O. Decision support tool for differential diagnosis of Acute Respiratory Distress Syndrome (ARDS) vs Cardiogenic Pulmonary Edema (CPE): a prospective validation and meta-analysis. Crit Care. 2014;18(6):659. doi: 10.1186/s13054-014-0659-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Azzam HC, Khalsa SS, Urbani R, Shah CV, Christie JD, Lanken PN, Fuchs BD. Validation Study of an Automated Electronic Acute Lung Injury Screening Tool. J Am Med Inform Assoc. 2009;16(4):503–508. doi: 10.1197/jamia.M3120.

- 11. Siew ED, Ware LB, Gebretsadik T, Shintani A, Moons KGM, Wickersham N, Bossert F, Ikizler TA. Urine Neutrophil Gelatinase-Associated Lipocalin Moderately Predicts Acute Kidney Injury in Critically Ill Adults. J Am Soc Nephrol. 2009;20(8):1823–1832. doi: 10.1681/ASN.2008070673.

- 12. Janz DR, Bastarache JA, Peterson JF, Sills G, Wickersham N, May AK, Roberts LJ, Ware LB. Association Between Cell-Free Hemoglobin, Acetaminophen, and Mortality in Patients With Sepsis: An Observational Study. Crit Care Med. 2013;41(3):784–790. doi: 10.1097/CCM.0b013e3182741a54.

- 13. Kangelaris K, Calfee CS, May AK, Zhuo H, Matthay MA, Ware LB. Is there still a role for the lung injury score in the era of the Berlin definition ARDS? Ann Intensive Care. 2014;4(1):4. doi: 10.1186/2110-5820-4-4.

- 14. Bernard GR, Artigas A, Brigham KL, Carlet J, Falke K, Hudson L, Lamy M, Legall JR, Morris A, Spragg R, the Consensus Committee. The American-European Consensus Conference on ARDS. Definitions, mechanisms, relevant outcomes, and clinical trial coordination. Am J Respir Crit Care Med. 1994;149(3):818–824. doi: 10.1164/ajrccm.149.3.7509706.

- 15. The ARDS Definition Task Force. Acute Respiratory Distress Syndrome: The Berlin Definition. JAMA. 2012;307(23):2526–2533. doi: 10.1001/jama.2012.5669.

- 16. Rice TW, Wheeler AP, Bernard GR, Hayden DL, Schoenfeld DA, Ware LB. Comparison of the Spo2/Fio2 Ratio and the Pao2/Fio2 Ratio in Patients with Acute Lung Injury or ARDS. Chest. 2007;132(2):410–417. doi: 10.1378/chest.07-0617.

- 17. Schmidt MFS, Gernand J, Kakarala R. The use of the pulse oximetric saturation to fraction of inspired oxygen ratio in an automated acute respiratory distress syndrome screening tool. J Crit Care. 2015;30(3):486–490. doi: 10.1016/j.jcrc.2015.02.007.

- 18. Solti I, Cooke CR, Xia F, Wurfel MM. Automated classification of radiology reports for acute lung injury: Comparison of keyword and machine learning based natural language processing approaches. IEEE International Conference on Bioinformatics and Biomedicine Workshop, 2009 BIBMW 2009. 2009:314–319. doi: 10.1109/BIBMW.2009.53320810.

- 19. Yetisgen-Yildiz M, Bejan CA, Wurfel MM. Identification of Patients with Acute Lung Injury from Free-Text Chest X-Ray Reports. ACL 2013. Sofia, Bulgaria: Association for Computational Linguistics; 2013:10–17. http://www.anthology.aclweb.org/W/W13/W13-19.pdf#page=22. Accessed February 13, 2016.

- 20. Rubenfeld GD, Caldwell E, Granton J, Hudson LD, Matthay MA. Interobserver Variability in Applying a Radiographic Definition for ARDS. Chest. 1999;116(5):1347–1353. doi: 10.1378/chest.116.5.1347.

- 21. Chen W, Janz DR, Shaver CM, Bernard GR, Bastarache JA, Ware LB. Clinical Characteristics and Outcomes Are Similar in ARDS Diagnosed by Oxygen Saturation/Fio2 Ratio Compared With Pao2/Fio2 Ratio. Chest. 2015;148(6):1477–1483. doi: 10.1378/chest.15-0169.

Associated Data
