Abstract

The need for high-throughput, precise, and meaningful methods for measuring behavior has been amplified by our recent successes in measuring and manipulating neural circuitry. The largest challenges associated with moving in this direction, however, are not technical but conceptual: what numbers should one put on the movements an animal is performing (or not performing)? In this review, I will describe how theoretical and data-analytical ideas are interfacing with recently developed computational and experimental methodologies to answer these questions across a variety of contexts, length scales, and time scales. I will attempt to highlight commonalities between approaches and areas where further advances are necessary to place behavior on the same quantitative footing as other scientific fields.

As modern techniques for recording and manipulating neural circuits have expanded our toolbox for deconstructing the molecular and cellular components of animals’ nervous systems, an accompanying realization has gradually developed: to more fully comprehend the function of neural circuits and the computations underlying them, we must understand their output in an accordingly precise manner [1, 2]. Specifically, we need to measure behavior. More careful measurements of the actions animals perform are key not just for advancing our basic understanding of nervous system function, but also for assessing and categorizing psychiatric disorders and for developing brain-machine interfaces [3, 4]. But what type of behavior do we want to measure, and once we decide on this, how do we measure it?

Answering these questions has proven difficult, but this is largely due to conceptual limitations rather than technical ones. When watching an animal behave, what precise, yet meaningful, numbers should we use to describe its movements? Is it the center of mass motion of the whole animal? The position and velocity of the organism’s body and limbs? The dynamics of individual myosin motors within muscle tissue? A more coarse-grained measure related to the animal’s “intended” action? A collective variable describing the combined dynamics of many animals? And how do we connect these scales to make inferences from the cellular and the molecular up to the movement of a limb, a wing, a finger, or an eyebrow? This is the dilemma that those of us who attempt to measure behavior commonly face.

While selecting the proper representation for one’s measurements is hardly a problem exclusive to the study of animal behavior (e.g. “more is different” and not wanting to “model bulldozers with quarks” [5, 6]), it is felt acutely by researchers in this field due to the multi-scale and distributed dynamics inherent to almost any behavioral process. Cognition or sensation acts to drive muscles that drive joints that drive limbs that drive locomotion or other motions, which then send feedback signals in the reverse direction, and the cycle continues. Where in this loop do we define behavior? Or is it the whole loop? And what numbers should we use to describe the observed dynamics? These are the questions that I will focus on here, asking how to best represent behavioral data in a manner that bridges length and time scales, highlighting particularly fruitful approaches.

Before progressing, though, it should be noted that there has been a recent proliferation of review articles discussing behavior, detailing concepts ranging from computational techniques for measuring behavior [7–10] to finding simplicity in “big behavioral data” [11, 12] to the advent of computational psychiatry and measuring emotional states [3, 13–17] to the need for measuring behavior in the first place [1, 2] to the reproducibility and robustness of said behavioral measures [18, 19]. While there will inevitably be a great deal of overlap between this review and those that have come before it, here I will focus less on the practical aspects of behavioral quantification and more on the consequences of the representational choices one makes, highlighting areas where further progress is required.

Measuring behavior on the organismal scale

Beyond being a mere technical inconvenience, the relative lack of a quantitative language for measuring behavior has shaped the types of questions we have been able to ask. In a laboratory setting, behavioral experiments have usually been designed to observe a restricted set of actions within the scope of a restricted environment [20, 21]. To wit, the behavior measured in most of these experiments is performed within a “paradigm”, with the accompanying implication that we have tuned the animal to our quantification scheme rather than the other way around. Examples of this approach would be placing an animal in a maze where it can only turn left or right, or head-fixing a rodent and requiring it to lick in a particular direction to obtain a reward. While this reliance on non-naturalistic behavior sometimes emerges from a culture of treating behavior as a read-out variable of the neural hardware in question, more commonly it is driven by an understandable desire to have a repeatable measurement that can generate high-throughput data while recording activity from neurons. Nevertheless, the end result is to measure an over-constrained behavior that likely lies outside of an animal’s typical repertoire of actions.

To move forward with the analysis of more natural behavior, we can try to imagine the best-case scenario, ignoring all of the technical worries. If we have an arbitrarily large amount of high quality data from an animal behaving with minimal artificial constraints, how should we describe it quantitatively? To an extent, the answer here is the same as in most other scientific measurements: we desire consistency (repeatable results), fidelity (describing the system as accurately and completely as possible), interpretability (ease of relating the found numbers to their biological underpinnings), and scalability (requiring minimal manual labor or scoring without impractically taxing computational or human resources).

While consistency and scalability can be theoretically obtained independently of the other two, fidelity and interpretability are, by definition, in tension, with measurements typically becoming more understandable but less accurate as we remove details. Our goal for measuring behavior, then, is to find descriptive representations of these multi-scale processes that are as parsimonious as possible. This trade-off naturally suggests a continuum of solutions, and in the rest of this article we will see how researchers have represented behavioral data in varying ways, tying together the length and time scales of naturalistic behavior at many levels of abstraction.

Selecting a representation

A good place to start investigating behavioral representations is to note the options available to researchers a decade ago if they wished to measure ethological behavior at the organismal scale. One option would have been the previously mentioned paradigmatic approach, where the quantification is ingrained into the experimental apparatus itself. Quantifying behavior in this manner has the advantages of high throughput and consistency, but it yields a very low-dimensional and potentially unnatural measurement [22].

Another approach would be to measure a coarse, yet non-paradigmatic, variable such as mean velocity or the fraction of time moving (including the laser-crossing experiments that are typical in circadian rhythm studies [23]). These measurements are more naturalistic than paradigmatic ones while allowing for a similar level of throughput; however, they only capture dynamics at a single scale. This is a plausible approach when studying the effects of genetic manipulations on sleep-wake cycles, but it may not capture, say, the precise grooming patterns of an animal or other movements that are unlikely to be apparent when the animal is treated as a point moving through space.
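To make the contrast concrete, the minimal Python sketch below computes two such coarse measures, mean speed and fraction of time moving, from a tracked centroid trajectory. The function name `coarse_activity` and the speed threshold separating "moving" from "still" are hypothetical choices for illustration, not a standard taken from any particular study.

```python
import numpy as np

def coarse_activity(xy, fps, speed_threshold):
    """Mean speed and fraction of frames spent moving for a centroid track.

    xy: (T, 2) array of centroid positions (e.g. in mm).
    fps: frames per second.
    speed_threshold: hypothetical cutoff (position units per second) that
    separates "moving" from "still".
    """
    step = np.linalg.norm(np.diff(xy, axis=0), axis=1)  # distance covered per frame
    speed = step * fps                                  # convert to units per second
    return speed.mean(), np.mean(speed > speed_threshold)
```

Because each frame is collapsed to a single speed, two very different movements performed in place (grooming versus resting, say) would both register as "not moving".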

Alternatively, if a researcher desired a richer description of an animal’s behavior, they could have developed a human-defined classification system for an animal’s behavior that was then scored by a trained observer. While providing a great deal more description, this approach is extremely labor-intensive, often requiring significant effort to devise the scoring scheme, followed by potentially months of researcher-hours to apply it. Moreover, although the scheme one uses can be elaborated in detail, there will inevitably be user-specific variability in its application. More problematic, since behaviors are defined and delineated intuitively, it is difficult to quantitatively argue that one individual’s or group’s representation of the behavior is more accurate or appropriate than another’s, further limiting reproducibility. Lastly, this approach implicitly assumes that behavior can be described in terms of hopping between discrete states without showing, from the data, that such a model is indeed a reasonable representation in the first place.

Skipping ahead to the present, all three of these options are still frequently used, often generating novel insights into behavior and the mechanisms driving it. Automation has greatly increased the throughput of the first two options, especially in small organisms like worms [24, 25], flies [26, 27], and zebrafish larvae [28–30]. Moreover, supervised machine learning techniques have greatly improved the repeatability, and decreased the manual effort, of analyzing behavioral data with user-defined behavioral classifications [31–35]. That being said, the fundamental difficulties with these approaches remain: either the level of behavioral description is coarse-grained, or behaviors are intuitively defined, explicitly encoding a human observer’s underlying assumptions about an animal’s behavior. Thus, we come to a fundamental question: how can we leverage modern data-collection techniques to extract complex behavioral representations in a manner that is transparent and repeatable, with explicitly stated and testable assumptions that shed light on particular biological questions? As we will see, answering this requires thinking about general principles.

Stereotypy as a general principle

One area of organismal-scale research where the challenges in measuring behavior have become predominantly technical is biolocomotion, the study of how animals move through their environments [36–39]. Here, while many deep questions regarding the performance, control, and evolution of these behaviors remain to be answered, there is a generally-agreed-upon framework for measuring behavior: most researchers study dynamic trajectories of motion, typically center-of-mass, body bending, and/or limb trajectories. What is it about biolocomotion that has made it amenable to this type of agreed-upon representation?

Part of the reason for this advantage is the clear ethological context of the actions studied: moving from one place to another quickly, efficiently, and robustly. There is also a natural mathematical formalism for translating between scales, namely Newtonian mechanics, and the behaviors in question are clearly separable from other actions that the animal performs. Even in cases where the mathematics underlying this translation are untenably difficult to analyze directly, robots can serve as the physical equivalent of generative models to bridge this gap [40–42]. Moreover, concepts such as optimal control or energy efficiency provide a theoretical basis for interpreting these investigations [43–45]. Another factor is that these behaviors are highly stereotyped, with physical constraints typically allowing for only a small number of movement patterns or gaits [46]. Although animals are capable of moving their limbs in an extremely large number of ways [47], during locomotion their typical dynamics only explore a minute fraction of this vast space. Small perturbations off of these trajectories can be either corrected for or used to actuate control [48–50].

Inspired by these studies, much of the recent progress in developing tools for data-driven and unsupervised (i.e. without the aid of human-labeled examples) analysis of animal behavior has resulted from this observation that a large fraction of animal movements are low-dimensional compared to the animal’s total capacity for movement and are often repeated in a similar manner (Fig. 1) [11, 51–53]. However, in order to proceed, we must have a more precise mathematical description of stereotypy (i.e. defining what we mean by “low-dimensional,” “similar,” and “movement”). The goal here is to put the human at the beginning of the analysis process (defining stereotypy) as well as at the end (interpreting behavioral outputs of the analysis process), rather than in the middle (intuitively defining behaviors), as is the case for label-based, or supervised, methods for behavioral analysis.

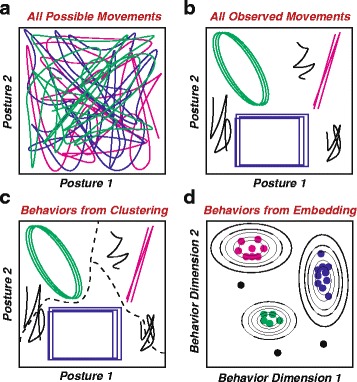

Fig. 1.

Approaches for identifying stereotyped movements. a Representation of all of the movements an animal could theoretically make. For instance, each line could be the dynamics of two joint angles, say, the bending of a knee and an ankle, or another set of postural variables over time. Although an animal could potentially move with any of these postural trajectories, many of the motions here would be only rarely performed. b How we observe most animals to move. Specifically, they use a relatively small portion of their potential behavioral repertoire (stereotyped behaviors, colored lines) along with a few instances of less-commonly-observed ones (non-stereotyped behaviors, black lines). c One way to isolate stereotyped behaviors is to break up the observed trajectories into clusters (denoted by dashed lines). d An alternative means of identifying stereotyped behaviors is to transform the dynamics such that, for instance, each time one of the trajectories in b is performed, a dot is placed in a different, low-dimensional space via an embedding. Similar trajectories are mapped near each other (dots), and stereotyped behaviors could be identified as peaks in the density contours (lines) of this map.

Several recent studies have developed tools for finding these stereotyped behaviors across a range of model organisms during (relatively) free behavior, from worms to rodents [53–59]. Although these researchers have all taken widely differing technical approaches, there are key similarities that join their efforts together. The common logic between these methods points toward a shared definition of stereotyped behavior and forces us to ask a pair of fundamental questions: what does it mean for two behaviors to be similar or different, and how do we place a number on this difference? One important note, though, is that none of the approaches described below is strictly unbiased, despite the term often being brandished when describing their advantages. The implication of calling these unsupervised approaches unbiased is typically that the analyst has been removed fully from the loop. Every choice a researcher makes has consequences, whether or not that choice is made explicitly; the key to all of these approaches is that the consequences of these choices are readily apparent.

Finding stereotyped movements in behavioral data

Although superficially distinct, there are surprising similarities in the underlying bases of different approaches for automatically identifying stereotyped behavior from videos (we will ignore other modalities for the moment) of freely-behaving animals. The general framework has been to first extract a low-dimensional postural time series from a data set, followed by a translation of these postures into a dynamical representation that is used to create a behavioral representation that isolates individual stereotyped actions. If desired, an animal’s dynamics within this behavioral representation can be observed over time, finding patterns and sequences of behavior (Fig. 2).

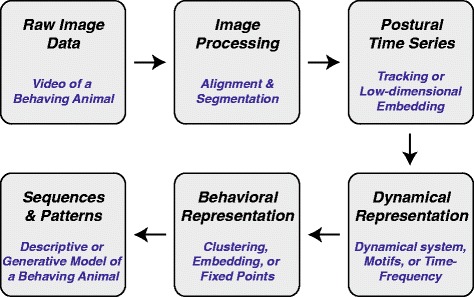

Fig. 2.

Archetypical data analysis pipeline for identifying stereotyped behaviors automatically from data

Extracting postural time series

The first step in almost any of these analyses is to isolate the animal’s posture from the raw video data. Here, by posture, I mean a measure that describes the configuration of an animal’s body and limbs at a given point in time (describing how they move will come in the next section). Usually, we prefer to describe this configuration in a manner that is in the body frame of the animal so that behavior is measured independently from spatial position or orientation. It is from this snapshot that further analyses will be devised, and it is here where organism-specific practicalities are most apparent.
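For tracked keypoints, one simple way to obtain such a body-frame description is to subtract the animal's centroid and rotate each frame so that a chosen body axis points in a fixed direction. The sketch below assumes 2-D keypoints and a hypothetical choice of "head" and "tail" keypoints to define that axis; real pipelines may define the axis differently, and the function name is illustrative only.

```python
import numpy as np

def to_body_frame(points, head_idx, tail_idx):
    """Express tracked 2-D keypoints in an animal-centered frame.

    points: (T, K, 2) array of K keypoints over T frames.
    Each frame is translated so the keypoints' mean sits at the origin and
    rotated so the tail-to-head vector points along +x.
    head_idx / tail_idx: hypothetical indices of the keypoints defining the axis.
    """
    centered = points - points.mean(axis=1, keepdims=True)
    axis = points[:, head_idx] - points[:, tail_idx]           # (T, 2) body axis
    theta = np.arctan2(axis[:, 1], axis[:, 0])                 # heading angle per frame
    c, s = np.cos(-theta), np.sin(-theta)
    # Per-frame rotation matrices by -theta, shape (T, 2, 2).
    rot = np.stack([np.stack([c, -s], -1), np.stack([s, c], -1)], -2)
    return np.einsum("tij,tkj->tki", rot, centered)
```

The output is unchanged if the whole animal is shifted or turned, which is exactly the invariance to spatial position and orientation described above.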

This latter point can be readily seen in the difference between describing a nematode like C. elegans and a fly like D. melanogaster (Fig. 3). While almost all of the dynamics of worm behavior could be described by the motion of its centerline, a fly’s movement is the combination of six legs (each with two joints), two wings (each capable of moving with three degrees of freedom), and other body movements such as abdomen bending. These body plans clearly require different representations, even if we expect both to be relatively low-dimensional over the course of typical activities the animals perform. A rodent, with a more flexible body, or a human, with its typical bipedal walking gait, would require different representations still. In all cases, though, the aim is to take a high-dimensional measurement – say, thousands to millions of pixel values – and reduce it to a low-dimensional set of numbers describing the animal’s posture.
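For an animal like C. elegans, whose posture is captured by its centerline, this reduction can be sketched in two steps: convert each centerline into a sequence of tangent angles (removing the mean angle so that overall orientation drops out), then apply principal components analysis to those angle vectors, in the spirit of the "eigenworm" modes of Fig. 3b. The Python below is an illustrative sketch that assumes centerlines have already been extracted and resampled to a fixed number of points; the function names are hypothetical.

```python
import numpy as np

def centerline_angles(xy):
    """Tangent angles along each centerline.

    xy: (T, N, 2) array of N ordered centerline points in each of T frames.
    Returns (T, N - 1) angles with each frame's mean angle subtracted, so the
    result does not depend on the worm's overall orientation.
    """
    seg = np.diff(xy, axis=1)                                  # segment vectors
    ang = np.unwrap(np.arctan2(seg[..., 1], seg[..., 0]), axis=1)
    return ang - ang.mean(axis=1, keepdims=True)

def postural_modes(angles, k):
    """PCA of angle vectors via SVD.

    Returns the top-k modes (k, N - 1), the (T, k) time series of projections
    onto them, and the fraction of variance each of those modes explains.
    """
    centered = angles - angles.mean(axis=0)
    _, svals, vt = np.linalg.svd(centered, full_matrices=False)
    explained = svals**2 / np.sum(svals**2)
    modes = vt[:k]
    return modes, centered @ modes.T, explained[:k]
```

If a few modes explain nearly all of the variance, each frame's posture is well summarized by a handful of projection values, which is the kind of low-dimensional description sought here.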

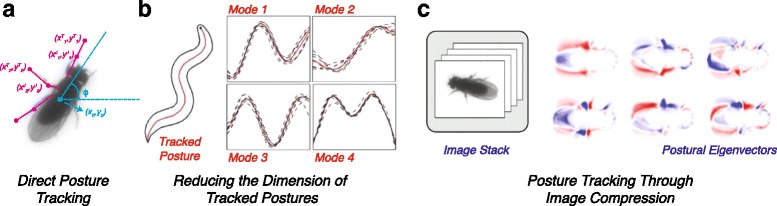

Fig. 3.

Examples of postural representations. a A schematic for how posture is typically represented by assigning body frame coordinates, here for a fruit fly. This assignment is usually created from manual tracking or machine vision techniques. b Using variations in the tracked centerline of the nematode C. elegans (left) to find a set of postural modes (right). Here, principal components analysis is used to find a set of postural modes, or “eigenworms,” where the original centerline can be largely reconstructed through a linear combination of these centerline variations (adapted from [54]). c In cases where tracking is not feasible due to occlusions, high dimensionality, and/or large data sets, an alternative approach has been to use image compression to find postural modes, such as those seen in the fly images here (adapted from [53]). Here, red and blue represent positive and negative eigenvector magnitudes, respectively, that are the result of concentrating as much of the data’s variance into as few directions as possible. The original image can be reconstructed via a linear combination of all the modes plus an overall mean, and time series can be generated by observing sequential images’ projections onto these postural modes.

The traditional way of obtaining a set of low-dimensional time series, and in some senses the optimal one because of its interpretability, has been to track the positions of individual body parts such as joints, leg tips, the tail, or the head. Outside of animals with relatively simple morphologies like C. elegans, this is an extremely difficult computer vision problem that has been the subject of comprehensive discussions elsewhere [7–9]. Even in the case of worms, new image analysis methods have been necessary to account for events where the worm crosses itself [60–62]. For legged animals, most automated methods require either attaching markers to the animal or large amounts of manual correction. Recent advances in experimental design [34, 63, 64] and computational algorithms [7, 65–67] provide hope for improving the state of the art moving forward, but for large data sets containing up to billions of images, tracking individual body parts is not currently practical, especially for 2-dimensional images.

Instead of directly tracking, a common approach has been to think about postural decomposition as an image compression problem. After doing some image processing to isolate the animal from the background and align it translationally and rotationally to a template image, the tactic taken by work in flies [53] and mice [56] has been to perform a dimensionality reduction operation like Principal Components Analysis (PCA) on the raw image pixel data. This process allows for images of an animal with complex morphology to be reproducibly and continuously mapped into a relatively small set of time series, much like direct tracking of joint angles would do, but with vastly fewer errors and no need for manual inspection (Fig. 3c). This process has the disadvantage, however, of creating relatively uninterpretable time series, a fact we need to take into account when moving toward a dynamical representation.
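In its simplest form, this image-compression step can be sketched as PCA on the flattened, aligned frames, with each frame then summarized by its projections onto the leading modes. The sketch below assumes segmentation and alignment have already been done, and it runs a full SVD in memory, which is only feasible for modest numbers of frames and pixels; large data sets would call for subsampling frames or an incremental or randomized PCA. The function names are hypothetical.

```python
import numpy as np

def image_postural_modes(images, k):
    """PCA on aligned images.

    images: (T, H, W) frames already segmented from the background and
    registered to a template. Returns the mean image (flattened), k
    eigen-images (k, H * W), and the (T, k) postural time series of
    projections onto them.
    """
    T = images.shape[0]
    X = images.reshape(T, -1).astype(float)
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    modes = vt[:k]
    return mean, modes, (X - mean) @ modes.T

def reconstruct(mean, modes, proj, shape):
    """Approximate each frame as the mean image plus a linear combination of modes."""
    return (mean + proj @ modes).reshape((-1,) + tuple(shape))
```

The reconstruction mirrors the statement in Fig. 3c that each image is the mean plus a linear combination of modes; the projections themselves, however, carry no built-in anatomical meaning, which is the interpretability cost noted above.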

Building representations of dynamical behavior

When defining stereotyped behavior, we typically think of movements, not postures. For example, we wouldn’t describe walking as bending the right knee at 73.1°, the right ankle at 15.23°, and so on, but rather as a trajectory of these angles through time. As a result, to measure stereotyped behaviors, we need to create a dynamical representation that describes how the measured postural time series are changing. Building such a representation can be achieved either by directly fitting a differential equation to the postural data or by attributing features that incorporate dynamics, such as temporal motifs or time-frequency features, to individual segments of the data. We will see examples of each of these approaches momentarily. From here, one would like to create a behavioral representation, which can be thought of as describing longer-time-scale changes in the underlying postural movements that generate the observed postural motions. For instance, giving the relative velocities of each of an insect’s six legs might be a dynamical representation, but saying that the animal is walking with an alternating tripod gait would be a behavioral representation. Of course, we need to make this idea more precise, and we will see how several different studies have done this, each with associated strengths and challenges.
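One deliberately simple way to attach dynamics to each time point, short of fitting a model, is to describe it by the short postural trajectory that starts there rather than by a single snapshot. The sketch below (hypothetical function name, with a window length chosen by the user) concatenates consecutive postures; it is a stand-in for illustration, not one of the specific methods discussed in this section.

```python
import numpy as np

def delay_embed(postures, window):
    """Describe each time point by `window` consecutive postures.

    postures: (T, d) postural time series.
    Returns (T - window + 1, window * d): row t holds postures t, t+1, ...,
    t+window-1 concatenated, i.e. a short trajectory instead of a snapshot.
    """
    T, d = postures.shape
    idx = np.arange(window)[None, :] + np.arange(T - window + 1)[:, None]
    return postures[idx].reshape(T - window + 1, window * d)
```

The window length is itself a time-scale choice: too short and the features barely differ from postures, too long and distinct movements get blended together.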

The most straightforward process for building a dynamical representation is to skip the step of finding postural time series and instead create a manually curated set of dynamical features that are later used as the input to either a supervised classifier or a clustering/embedding algorithm [32, 68–72]. While relatively easy to implement, this approach risks missing elements of behavioral dynamics not captured in the list, and each of the measurements potentially has different units (e.g. velocity, angular velocity, acceleration, distance from another animal), requiring additional conversion factors or assumptions about equal variance that could affect the outcome of any analysis in subtle ways.
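A toy example shows how the units issue can change an analysis's outcome. Below, three hypothetical frames are described by speed (mm/s), angular velocity (rad/s), and distance to another animal (mm); which frame is nearest to the first depends on whether the features are used raw or standardized to unit variance. The numbers are invented purely for illustration.

```python
import numpy as np

# Columns: speed (mm/s), angular velocity (rad/s), distance to another animal (mm).
# Invented values for illustration only.
features = np.array([
    [12.0, 0.10, 40.0],
    [11.0, 2.50, 41.0],
    [30.0, 0.15, 40.5],
])

def zscore(x):
    """Standardize each column to zero mean and unit variance (an equal-variance assumption)."""
    return (x - x.mean(axis=0)) / x.std(axis=0)

def nearest_to_first(x):
    """Index of the row (other than row 0) closest to row 0 in Euclidean distance."""
    d = np.linalg.norm(x[1:] - x[0], axis=1)
    return 1 + int(np.argmin(d))

# Raw units: the large numeric speed gap dominates, so row 1 wins despite
# turning much faster than row 0.
print(nearest_to_first(features))          # 1
# Standardized: the turning difference now counts, and row 2 wins.
print(nearest_to_first(zscore(features)))  # 2
```

Neither answer is privileged: standardizing simply replaces one implicit weighting with another, which is the subtle dependence described above.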

Ideally, an appropriate dynamical representation would emerge naturally from postural dynamics. To date, the clearest example of using postural data to explicitly generate a dynamical system that provides a natural behavioral representation is the work on C. elegans from Stephens et al. [52, 54]. Here, the authors found that the majority of a worm’s motion can be described by the progression of a single phase variable that can be thought of as the advance of a traveling wave moving up or down the animal’s body. Fitting the observed dynamics of this variable to a model with a deterministic and a stochastic component (Fig. 4a-b), they find that the worm’s behavior can be described as a set of dynamic attractors with switching times that are predictable from the statistics of the underlying noise. Although applying such methods directly to higher-dimensional data sets like those generated from legged animals can be challenging, recent advances in finding dynamical models that best describe a continuous time series provide future avenues for exploration [73–75].
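The phase variable itself is simple to compute once the projections onto the first two eigenworms are available: the pair traces out a roughly circular path (Fig. 4a), so the angle around that circle serves as a phase. The sketch below follows that idea; the sign convention inside `arctan2` and the function name are assumptions for illustration, and fitting the deterministic and stochastic parts of the phase dynamics is not shown.

```python
import numpy as np

def body_wave_phase(a1, a2, fps):
    """Phase and phase velocity from projections onto the first two eigenworms.

    a1, a2: (T,) time series of the first two postural-mode amplitudes.
    fps: sampling rate in frames per second.
    Returns the unwrapped phase (rad) and its rate of change (rad/s); the sign
    of the phase velocity distinguishes the two directions of wave travel.
    """
    phi = np.unwrap(np.arctan2(-a2, a1))   # angle around the (a1, a2) circle
    dphi = np.gradient(phi) * fps          # per-frame change converted to rad/s
    return phi, dphi
```

In such a description, forward and backward crawling appear as phase velocities of opposite sign, and pauses as periods where the phase velocity stays near zero.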

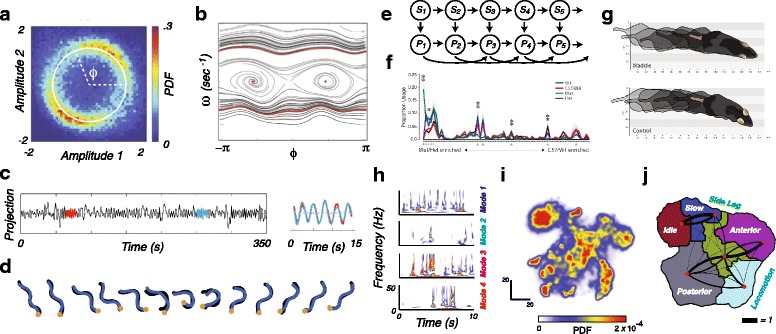

Fig. 4.

Examples of dynamical and behavioral representations. a For C. elegans, a histogram of projections onto the first two postural modes, or “eigenworms” (the left two curves in Fig. 3b), shows a low-dimensional structure that can be parameterized by a single phase variable, ϕ. b Fitting the dynamics of this variable to a deterministic dynamical system yields this phase map, with forward and backward locomotion naturally emerging as traveling wave trajectories at the top and bottom, respectively, and two fixed points in the middle corresponding to two different pause states (a and b are adapted from [54]). c An alternative approach to representing C. elegans behavior is via motif-finding. Here, time series of projections onto the eigenworms are scoured for repeated patterns (e.g. the blue and red curves here). These patterns are then catalogued and used as the basis for a behavioral representation (adapted from [55]). d Instead of using dynamical motifs directly, the worm’s behavior can be captured as a sequence of postures, as seen in this example from [77]. e The approach taken by [56] was to fit an autoregressive hidden Markov model (AR-HMM) to postural data of mouse movements, generated in a similar, but not identical, manner to that seen in Fig. 3c. Here, each Pₜ is a vector of the animal’s postural mode values at time t, and Sₜ is an underlying state that affects the dynamics of postural outputs. Arrows imply direct dependence (i.e. Pₜ is a stochastic function of Sₜ, Pₜ₋₁, Pₜ₋₂, and so on). It is assumed that the time scale for changes in P is much faster than that for changes in S. This latter time scale, a parameter in the model, sets the distribution for the length of time that an animal stays within a particular behavioral state.
f Average behavioral usage frequencies using an AR-HMM for four different mouse genotypes: wild type, C57/BL6, and homozygous (Mut) and heterozygous (Het) mutations in the retinoid-related orphan receptor 1 β (Ror1 β) gene. g Distinct walking gaits found in the Mut (top) and C57/BL6 (bottom) mice (e-g adapted from [56]. Neuron 88(6), Alexander B. Wiltschko, Matthew J. Johnson, Giuliano Iurilli, Ralph E. Peterson, Jesse M. Katon, Stan L. Pashkovski, Victoria E. Abraira, Ryan P. Adams, Sandeep Robert Datta, Mapping Sub-Second Structure in Mouse Behavior, 1121-1135., Copyright 2015, reprinted with permission from Elsevier.) h An example of a time-frequency representation from freely moving fruit flies, where each set of axes represents a mode, and the colormap values indicate the continuous wavelet transform amplitudes at each point in time. This approach allows multiple time scales to enter the dynamical representation. i Probability density resulting from embedding points into 2-D such that two instances when a fruit fly is moving similar parts of its body at similar speeds are mapped nearby. Note the peaks and valleys; the peaks represent stereotyped behaviors. j Breakdown of the behavioral representation in i, with names for the behaviors within each of these regions manually labelled. Black lines are proportional to the transition probability of moving from one coarse region to another, with right-handedness implying the direction of transition. (h-j adapted from [53, 76])

Another approach to building a dynamical system representation is to fit a statistical model to the data. A prominent example is the work of Wiltschko et al. [56], who collected postural time series data from mice and fit an Autoregressive Hidden Markov Model (AR-HMM) to them (Fig. 4e-g). One can think of this approach as fitting short segments of the data (less than 1 s) with linear dynamical systems, with the animal switching between these systems on time scales significantly longer than those of the dynamics within any given system. This method creates a dynamical representation (bottom row of Fig. 4e) at the same time as it creates a behavioral representation (top row of Fig. 4e).
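To make the two levels of this model concrete, the sketch below samples from a simplified, first-order AR-HMM: a hidden state Sₜ follows a Markov chain, and the postural vector Pₜ evolves by the linear dynamics assigned to the current state plus noise. This is a toy generative sketch with hypothetical parameter names, not the fitting procedure of Wiltschko et al., whose model also conditions on more than one previous time step (Fig. 4e).

```python
import numpy as np

def simulate_ar_hmm(A, b, trans, T, noise_sd, seed=0):
    """Sample from a first-order AR-HMM (simplified toy version).

    A: (K, d, d) dynamics matrices and b: (K, d) offsets, one per hidden state.
    trans: (K, K) row-stochastic transition matrix; diagonal entries close to 1
    make states persist much longer than the within-state dynamics.
    Returns hidden states s (T,) and postures p (T, d), starting from state 0
    and posture 0.
    """
    rng = np.random.default_rng(seed)
    K, d, _ = A.shape
    s = np.zeros(T, dtype=int)
    p = np.zeros((T, d))
    for t in range(1, T):
        s[t] = rng.choice(K, p=trans[s[t - 1]])        # slow switching between states
        p[t] = A[s[t]] @ p[t - 1] + b[s[t]] + noise_sd * rng.normal(size=d)  # fast dynamics
    return s, p
```

For example, `trans = np.array([[0.98, 0.02], [0.02, 0.98]])` keeps the chain in each state for about 50 steps on average; this single knob plays the role of the time-scale parameter discussed next.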

While the ability to simultaneously represent dynamics and behavior is a distinctive advantage of the AR-HMM approach, it is also a limitation, since it requires a parameter that sets the overall time scale of staying in a particular behavioral state. One could imagine relaxing this limitation by adding time scale parameters when fitting the model, but this still requires hand-tuning the time scales available to the system, as well as a corollary assumption that the amount of time an animal spends in a particular behavior must follow an exponential distribution. The time spent performing a behavior, however, can range over orders of magnitude, from a reflex lasting tens of milliseconds to a night’s sleep, and long-time-scale dynamics are often observed in behavioral data [52, 76]. Moreover, if one wishes to directly measure the time scales evident in a particular data set, an approach that builds this type of structure in as an assumption can be confounding.
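The exponential assumption follows directly from the Markov structure. Writing p for the constant probability that a hidden state persists from one time step to the next and Δt for the step duration (notation introduced here for illustration, not taken from the cited work), the probability of remaining in the state for exactly n steps, the mean dwell time, and the probability of a dwell longer than m steps are:

```latex
\begin{aligned}
\Pr(n) &= (1-p)\,p^{\,n-1}, \qquad n = 1, 2, \dots \\
\langle \tau \rangle &= \Delta t \sum_{n=1}^{\infty} n\,(1-p)\,p^{\,n-1} = \frac{\Delta t}{1-p} \\
\Pr(n > m) &= p^{\,m} = e^{\,m \ln p}
\end{aligned}
```

The dwell-time distribution is thus geometric, the discrete-time counterpart of an exponential, with a single characteristic scale set by p. For example, with Δt = 1/30 s and p = 0.99, the mean dwell is 1/(1 − 0.99) = 100 steps, or about 3.3 s, and the probability of a dwell exceeding ten times that mean is 0.99^1000 ≈ 4 × 10⁻⁵. A single state of this kind therefore cannot account for dwell times spanning orders of magnitude.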

A complementary approach is to create a multi-scale dynamical representation that forms the basis for a behavioral representation. This can be achieved through finding motifs of varied lengths in a data set [55, 77, 78] or using a time-frequency analysis approach like a wavelet transform [53, 59, 79] to represent postural dynamics across a variety of time scales. For the case of motif-finding (Fig. 4c-d), one finds postural patterns that commonly occur throughout a data set and looks for when the animal exhibits similar dynamics. The relative frequency and patterns of use for these motifs can be used to create “behavioral fingerprints” for individuals or collections of animals differing in genotypes, neural manipulations, or other conditions of interest. The resulting behavioral representation is thus the set, frequency, and ordering of motifs that an animal performs. A difficulty of this approach, however, is that results may not always be robust to slight changes in postural dynamics such as changes in frequency or relative phasing between limbs.
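At its core, motif finding requires a measure of similarity between short windows of a time series. The sketch below is a brute-force illustration, not any of the cited methods: it compares every pair of non-overlapping length-m windows of a single postural time series using z-normalized Euclidean distance, so that windows match on shape regardless of offset and amplitude, and returns the most similar pair. Its O(n²) cost makes it suitable only for short series, and the function name is hypothetical.

```python
import numpy as np

def best_motif_pair(x, m):
    """Most similar pair of non-overlapping length-m windows in a 1-D series.

    Uses z-normalized Euclidean distance, so windows match on shape regardless
    of offset and amplitude. Assumes no window is constant (zero std).
    Returns (start_a, start_b, distance) with start_a < start_b.
    """
    w = np.lib.stride_tricks.sliding_window_view(x, m).astype(float)
    w = (w - w.mean(axis=1, keepdims=True)) / w.std(axis=1, keepdims=True)
    d = np.linalg.norm(w[:, None, :] - w[None, :, :], axis=2)   # all pairwise distances
    n = len(w)
    i, j = np.indices((n, n))
    d[np.abs(i - j) < m] = np.inf          # exclude trivial (overlapping) matches
    a, b = np.unravel_index(np.argmin(d), d.shape)
    return min(a, b), max(a, b), d[a, b]
```

Because the comparison is point-by-point in time, the same movement performed slightly faster, or with a shifted phase, can fail to match, which is the robustness issue noted above.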

An alternative approach to capturing behavioral dynamics across multiple time scales is to use time-frequency analysis (Fig. 4h). Here, one takes the set of postural time series, selects a wide range of frequencies spanning those present in each time series, and measures the relative importance of each of these frequencies as a function of time. This importance is often quantified via a wavelet transform [80], which sacrifices frequency resolution for accurate temporal resolution at high frequencies, and temporal resolution for accurate frequency resolution at low frequencies, to generate a multi-scale representation of the animal’s postural movements. The resulting dynamical representation for a single point in time is thus a set of wavelet amplitudes for a collection of frequencies from each of the observed postural time series. Although the wavelet transform contains both amplitude and phase information, it is typical to use only the amplitude information, as this eliminates many of the robustness issues experienced in the motif-finding case. Behavioral representations can then be obtained from either clustering [58, 79] or low-dimensional embedding (Fig. 4i-j) [53, 59] of the resulting vector of amplitudes. Typically, when these feature vectors are embedded, a highly non-uniform density emerges across the space, with local peaks corresponding to particular stereotyped behaviors. Accordingly, one could treat the behavioral representation as either the density itself or the sequence of peaks that an animal visits.
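The time-frequency step can be sketched with a complex Morlet wavelet, keeping only the amplitude. The Python below computes a continuous wavelet transform by direct convolution; the choice of Morlet wavelet, the parameter ω₀ = 5 (`omega0`), the commonly used Morlet scale-to-frequency relation, and truncation of the wavelet at four scales are all assumptions of this sketch rather than details taken from the cited studies.

```python
import numpy as np

def morlet_amplitudes(x, freqs, fps, omega0=5.0):
    """Amplitudes of a complex-Morlet continuous wavelet transform.

    x: (T,) postural time series; freqs: frequencies in Hz; fps: sampling rate.
    Returns a (len(freqs), T) array of |CWT|; phase information is discarded.
    """
    dt = 1.0 / fps
    out = np.empty((len(freqs), len(x)))
    for k, f in enumerate(freqs):
        # Scale whose Fourier period matches 1/f for a Morlet wavelet.
        s = (omega0 + np.sqrt(2.0 + omega0**2)) / (4.0 * np.pi * f)
        half = int(np.ceil(4.0 * s / dt))               # truncate at +/- 4 scales
        if 2 * half + 1 > len(x):
            raise ValueError("time series is shorter than the wavelet at %g Hz" % f)
        u = np.arange(-half, half + 1) * dt / s          # dimensionless time
        psi = np.pi**-0.25 * np.exp(1j * omega0 * u - 0.5 * u**2)
        # Correlating with psi = convolving with its time-reversed conjugate.
        w = np.convolve(x, np.conj(psi[::-1]), mode="same") * np.sqrt(dt / s)
        out[k] = np.abs(w)
    return out
```

For d postural modes, stacking these amplitude arrays gives a (d × number of frequencies)-dimensional feature vector at every time point, which is the kind of vector that is then clustered or embedded. Logarithmically spaced frequencies, e.g. `np.geomspace(1, 50, 25)`, are a natural choice because the wavelet's frequency resolution scales with frequency.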

Discrete vs. continuous behavioral representations

We have now seen that behavioral representations can be either discrete (e.g. clusters or motifs) or continuous (e.g. densities or non-piecewise dynamical models), and that discrete representations can often be derived from continuous ones (e.g. fixed points or peaks). So which is better? Ideally, one would identify a discrete representation through the fixed points of a dynamical model, but this is not currently practicable for animal morphologies more complicated than a worm’s. For other systems, as with most methodological questions, the answer depends on the experimental exigencies at play, and performing both often provides additional context and information.
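One simple way to derive a discrete representation from a continuous one is to locate local maxima of a smoothed density over embedded points, as with the peaks in Fig. 4i. The sketch below uses a 2-D histogram smoothed with a separable Gaussian kernel and keeps bins that exceed all eight neighbors and a minimum fraction of the maximum density. The bin count, histogram range, smoothing width, and threshold are hypothetical knobs that, like any such choice, affect how many peaks are found, and the range must cover the embedding.

```python
import numpy as np

def density_peaks(z, bins=32, extent=((-6, 6), (-6, 6)), sigma_bins=2.0, min_frac=0.1):
    """Local maxima of a Gaussian-smoothed 2-D histogram of embedded points.

    z: (T, 2) embedded coordinates. Returns an (n_peaks, 2) array of peak
    locations (bin centers) and the smoothed density H[x_bin, y_bin].
    """
    H, xe, ye = np.histogram2d(z[:, 0], z[:, 1], bins=bins, range=extent)
    r = int(np.ceil(3 * sigma_bins))
    g = np.exp(-0.5 * (np.arange(-r, r + 1) / sigma_bins) ** 2)
    g /= g.sum()

    def smooth(v):
        return np.convolve(v, g, mode="same")

    H = np.apply_along_axis(smooth, 0, H)   # smooth along the x-bin axis
    H = np.apply_along_axis(smooth, 1, H)   # then along the y-bin axis
    P = np.pad(H, 1, constant_values=-np.inf)
    nx, ny = H.shape
    neighbors = [P[1 + di:1 + di + nx, 1 + dj:1 + dj + ny]
                 for di in (-1, 0, 1) for dj in (-1, 0, 1) if (di, dj) != (0, 0)]
    is_peak = (H > np.max(neighbors, axis=0)) & (H >= min_frac * H.max())
    ii, jj = np.nonzero(is_peak)
    cx, cy = 0.5 * (xe[:-1] + xe[1:]), 0.5 * (ye[:-1] + ye[1:])
    return np.column_stack([cx[ii], cy[jj]]), H
```

One could then label each time point by its nearest peak, or leave it unlabeled where the density is low; making that second option available is part of the appeal of starting from the continuous representation.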

On one hand, in favor of continuous representations, it is more intellectually satisfying to show that a discrete representation arises naturally out of a data set than to impose such a structure a priori. Even where a discrete representation is appropriate, the interesting measurements may be the subtle distinctions at the edges of the peaks. Additionally, although many of the movements an animal performs are stereotyped, not all of them need to be. An important property of continuous representations is that they allow for periods in which the animal performs non-stereotyped dynamics (i.e. its trajectory does not remain stationary on the map in Fig. 4j). Results from fruit flies show that the animals perform non-stereotyped behaviors approximately half of the time [53, 59], implying that one must be careful when interpreting a representation that assigns every time point to a cluster.
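
One simple way to separate stereotyped from non-stereotyped epochs in a continuous representation is to ask when the embedded trajectory pauses on the map. The Python sketch below assumes a 2-D embedded trajectory and labels a time point as stereotyped when the trajectory's speed across the map stays below a threshold for a minimum duration. Both the threshold and the minimum duration are hypothetical, user-chosen parameters, and this heuristic is not necessarily the procedure used in [53, 59].

```python
import numpy as np


def stereotyped_mask(z, fs, speed_threshold, min_duration=0.05):
    """Flag time points at which an embedded trajectory z of shape (T, 2) is nearly stationary.

    speed_threshold (map units per second) and min_duration (seconds) are
    hypothetical, user-chosen parameters.
    """
    z = np.asarray(z, dtype=float)
    speed = np.linalg.norm(np.gradient(z, 1.0 / fs, axis=0), axis=1)  # speed across the map
    slow = speed < speed_threshold
    min_len = max(1, int(round(min_duration * fs)))
    mask = np.zeros(len(slow), dtype=bool)
    start = None
    for i, is_slow in enumerate(np.append(slow, False)):  # trailing False closes the last run
        if is_slow and start is None:
            start = i
        elif not is_slow and start is not None:
            if i - start >= min_len:  # keep only pauses that last long enough
                mask[start:i] = True
            start = None
    return mask


# Usage: pause, move quickly across the map, pause again (all values hypothetical).
fs = 100.0
x = np.concatenate([np.zeros(100), np.linspace(0.0, 10.0, 50), np.full(100, 10.0)])
z = np.column_stack([x, np.zeros_like(x)])
mask = stereotyped_mask(z, fs, speed_threshold=1.0)
print(mask.mean())  # fraction of time in stereotyped (stationary) epochs: 0.8
```

The fraction `mask.mean()` is the kind of quantity behind statements such as "non-stereotyped about half of the time"; its value depends on the chosen threshold, so reporting it alongside that choice is prudent.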

On the other hand, if the data do indeed contain clusters, one should perform clustering in the original high-dimensional space. That space retains all of the information in the data, partitioning algorithms are more likely to succeed there, and one does not have to worry about the specific form of the length-scale distortions that any nonlinear embedding necessarily creates [58]. However, while formalisms such as AR-HMMs allow one to build a type of dynamical model, they also rely on underlying assumptions about a single time scale that could over- or under-partition the data. Accordingly, researchers need to think carefully about the consequences of their choice of representation and tailor their approach to the questions at hand.

Future challenges

Many of the next steps in measuring behavior involve building representations that link postural dynamics to the dynamics of other variables, including spatial position, other behavioral modalities, other individuals, and neural activity.

Joint representation of space and posture

An interesting observation about almost all of the representations in the previous section is that the quantities typically measured in coarse behavioral assays, namely spatial position, orientation, and their derivatives, are the first to be eliminated. This is done so that one measures motions in the animal’s own frame of reference, but there are numerous scenarios in neuroscience and social behavior where we would like to examine the interactions between location, movement, and behavioral patterns, ideally by generating a joint representation.

A natural question here is: why not simply add the spatial variables as extra time series to be fed into one’s favorite behavioral mapper or classifier? The difficulty is that the variables describing the postural dynamics (derivatives or spectral transforms of joint angles or postural modes) all share the same units, and these units differ from those of the spatial variables. Thus, a unit conversion must occur, requiring at least one arbitrarily chosen parameter.

Current solutions have been to measure behavior conditioned on position or position conditioned on behavior [56, 59, 81], or to measure a response field averaged across individuals [82], but these do not provide a true joint representation. For example, if an animal performs the exact same motion twice, but in slightly different locations, are those two behaviors closer together or farther apart than two slightly different motions performed at the exact same position? Finding systematic and precise quantifications to answer this question (and the answer might change depending on the scientific investigation at hand) will be key to building joint positional-postural representations.
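
The unit-conversion problem can be made explicit with a toy distance. The Python sketch below assumes, purely for illustration, that a joint distance is the Euclidean combination of a postural distance and a spatial distance scaled by a single conversion factor `lam` (a hypothetical parameter with units of posture-feature units per unit of length). It shows that the answer to the question above flips depending on `lam`, which nothing in the data determines.

```python
import numpy as np


def joint_distance(a, b, lam):
    """Euclidean distance between two (posture_features, position) pairs.

    lam is a hypothetical conversion factor (posture-feature units per unit of
    length). Nothing in the data fixes its value, which is the problem raised above.
    """
    d_posture = np.linalg.norm(np.subtract(a[0], b[0]))
    d_position = np.linalg.norm(np.subtract(a[1], b[1]))
    return float(np.hypot(d_posture, lam * d_position))


motion = np.array([1.0, 0.0, 0.0])          # hypothetical postural feature vector
similar_motion = np.array([1.0, 0.5, 0.0])  # a slightly different motion (distance 0.5)
origin, nearby = np.array([0.0, 0.0]), np.array([1.0, 0.0])  # positions 1 length unit apart

results = {}
for lam in (0.1, 10.0):
    same_motion_moved = joint_distance((motion, origin), (motion, nearby), lam)
    other_motion_same_place = joint_distance((motion, origin), (similar_motion, origin), lam)
    results[lam] = same_motion_moved < other_motion_same_place
    print(lam, results[lam])
# 0.1 True    (the repeated motion counts as "closer")
# 10.0 False  (the two motions at the same spot count as "closer")
```

Any fixed choice of `lam` silently answers the question posed above, which is why a principled way of setting (or avoiding) such a parameter is needed.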

Collective and social behavior

Similar to the difficulty of representing space and posture simultaneously, we face a problem when attempting to describe the collective dynamics of many individuals moving together. These dynamics are often characterized by an order parameter related to the degree to which individual velocities point in the same direction [83–85]. Ideally, though, one would like metrics that describe the collective dynamics of many individuals as richly as the previously described approaches do for single animals. Particularly fruitful ideas here borrow techniques from fluid dynamics, including Lagrangian coherent structures [86] and dynamic mode decomposition [87], to generate continuum-based models of many organisms moving collectively.
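
A common example of such an order parameter is the polarization: the magnitude of the average unit heading of all individuals, in the spirit of the order parameter used in self-propelled particle models [83]. The Python sketch below computes it for a single snapshot of velocities; it assumes no individual is exactly stationary, and the example velocities are hypothetical.

```python
import numpy as np


def polarization(velocities):
    """Norm of the mean unit heading of N individuals: 1 when all are aligned, near 0 when disordered."""
    v = np.asarray(velocities, dtype=float)
    speeds = np.linalg.norm(v, axis=1, keepdims=True)
    headings = v / speeds  # assumes no individual has exactly zero velocity
    return float(np.linalg.norm(headings.mean(axis=0)))


rng = np.random.default_rng(0)
aligned = polarization([[1.0, 0.0], [2.0, 0.0], [0.5, 0.0]])  # same direction, different speeds
opposed = polarization([[1.0, 0.0], [-1.0, 0.0]])             # two individuals head-to-head
angles = rng.uniform(0.0, 2 * np.pi, size=10_000)
disordered = polarization(np.column_stack([np.cos(angles), np.sin(angles)]))
print(aligned, opposed, round(disordered, 3))
```

Because it collapses an entire group to one number per time point, this measure illustrates why richer, continuum-style descriptions are attractive for collective behavior.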

An additional challenge arising in social behavior is that much of the research described previously focuses on the physical motion of an animal’s limbs and body, whereas fully describing social interactions will require capturing other aspects of behavior, such as the production of acoustic and substrate-borne signals. There have been many recent successes relating behavioral dynamics to, for example, acoustic dynamics, by asking which behavioral features predict the performance of a particular song or song type using methods such as generalized linear models (GLMs) [88–90], and improvements in automated methods have increased the throughput of audio data analysis [91–94]. Ideally, though, we would be able to create a joint representation of these alternative behavioral modalities and the simultaneous postural movements that more fully links the dynamics of these processes.
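
To make the GLM approach concrete, the Python sketch below fits a Bernoulli GLM with a logit link (logistic regression) that predicts whether a song (or song type) occurs in a time bin from behavioral features, using iteratively reweighted least squares. The features, their number, and the simulated data are hypothetical, and this is a generic illustration rather than the specific models of [88–90].

```python
import numpy as np


def fit_logistic_glm(X, y, n_iter=25, ridge=1e-6):
    """Bernoulli GLM with a logit link, fit by iteratively reweighted least squares (Newton's method).

    X: (T, k) behavioral features; y: (T,) 1 if the song (type) of interest occurs, else 0.
    Returns k + 1 weights, intercept first. The tiny ridge term only stabilizes the solve.
    """
    X = np.column_stack([np.ones(len(X)), np.asarray(X, dtype=float)])
    y = np.asarray(y, dtype=float)
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))  # predicted probability of song
        weights = p * (1.0 - p)  # Bernoulli variance = IRLS weights
        hessian = X.T @ (weights[:, None] * X) + ridge * np.eye(X.shape[1])
        w = w + np.linalg.solve(hessian, X.T @ (y - p))  # Newton step on the log-likelihood
    return w


# Usage on simulated data: two hypothetical behavioral features, only the first matters.
rng = np.random.default_rng(1)
features = rng.normal(size=(5000, 2))
true_w = np.array([-0.5, 2.0, 0.0])
p_song = 1.0 / (1.0 + np.exp(-(true_w[0] + features @ true_w[1:])))
song = (rng.random(5000) < p_song).astype(int)
w_hat = fit_logistic_glm(features, song)
print(np.round(w_hat, 2))  # should be close to [-0.5, 2.0, 0.0]
```

The fitted weights indicate which behavioral features predict song, but, as noted above, such a model links the two modalities in one direction rather than providing a joint representation of both.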

Linking neurons to behavior

As our ability to record from neurons in freely behaving animals grows, the need to represent neural activity jointly with behavior is becoming increasingly apparent. As with multi-modal dynamics, most current approaches to neuro-behavioral analysis [57, 68, 95–100] are correlative or decoding-based: given knowledge of the neural dynamics, what can one predict about behavior, or vice versa? This could take the form of “given a neural stimulation, what did the animal do?” or “is it possible to predict an animal’s behavior from its neural dynamics?” While these are necessary first steps toward understanding how neural circuits drive behavior, to more fully comprehend the interplay between these circuits and how behavior feeds back onto neural responses, we need methods that analyze the combined dynamics of posture and neural activity simultaneously.
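
The decoding direction described above ("predict behavior from neural dynamics") is often sketched with a linear decoder. The Python example below assumes a linear relationship and fits one by closed-form ridge regression on simulated activity from 50 hypothetical neurons, then scores it on held-out time points. The regularization strength, data sizes, and train/test split are illustrative choices, not those of any cited study.

```python
import numpy as np


def fit_ridge_decoder(R, b, alpha=1.0):
    """Linear decoder b ~ R @ w + c, fit by closed-form ridge regression.

    R: (T, N) neural activity; b: (T,) or (T, k) behavioral variable(s).
    """
    R = np.asarray(R, dtype=float)
    b = np.asarray(b, dtype=float)
    r_mean, b_mean = R.mean(axis=0), b.mean(axis=0)
    Rc = R - r_mean  # centring removes the need to penalize the intercept
    w = np.linalg.solve(Rc.T @ Rc + alpha * np.eye(R.shape[1]), Rc.T @ (b - b_mean))
    c = b_mean - r_mean @ w
    return w, c


def r_squared(b_true, b_pred):
    """Fraction of behavioral variance explained on held-out data."""
    ss_res = np.sum((b_true - b_pred) ** 2)
    ss_tot = np.sum((b_true - b_true.mean(axis=0)) ** 2)
    return 1.0 - ss_res / ss_tot


# Usage on simulated data: 50 hypothetical neurons linearly driving one behavioral variable.
rng = np.random.default_rng(2)
R = rng.normal(size=(2000, 50))
b = R @ rng.normal(size=50) + rng.normal(scale=1.0, size=2000)
train, test = slice(0, 1600), slice(1600, None)
w, c = fit_ridge_decoder(R[train], b[train], alpha=1.0)
score = r_squared(b[test], R[test] @ w + c)
print(round(score, 3))
```

A high held-out score shows only that behavior is predictable from activity; it says nothing about how behavior feeds back onto the circuit, which is the gap the paragraph above identifies.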

One potential avenue toward this aim is to combine experimentally tested computational models of neural dynamics with high-resolution behavioral measurements and perturbations. Ideas in this direction have been put forward in the nascent field of computational psychiatry, where neural models, ranging in scale from small collections of neurons to individual brain nuclei to whole-brain dynamics [3, 16, 101, 102], are manipulated or systematically controlled to see how system-wide outputs are affected. Although these studies usually measure outputs in terms of neural activity alone, a joint representation of behavioral outputs in model organisms or human patients and the model-specific control dynamics of the neural circuit presents an intriguing path forward. This would also allow ideas from control theory to inform the discussion [43], building a framework in which the feedback between neural activity and behavior could be more thoroughly understood.

Toward theories of behavior

The previous point about the use of theory in generating behavioral representations brings us back to the beginning of our discussion. Our fundamental challenges still remain at the conceptual rather than the technical level. Despite the significant advances in measuring behavior over the past few years, the ultimate goal of these approaches – understanding how and why animals control and generate particular sequences of physical movements – requires developing theories and models to serve as connective tissue, providing context and justification for the measurements we make and allowing us to make predictions that suggest future experiments.

But what should these theories look like? Does this mean we should turn behavior into particle physics? What is the atom, or proton, or quark of behavior? Does it even make sense to discuss behavior as if there were a set of underlying first principles from which all actions are derived? As with most questions in biology, we can begin to make progress by looking to evolution. Specifically, we cannot forget that almost every behavior has a goal: to increase an animal’s probability of passing its genes to subsequent generations. Thus, every movement can be placed in the context of how it aids in the performance of one or many tasks.

This viewpoint, shared by many of us who refer to ourselves as “computational ethologists” [1] (whether this is different from “ethologists with fancy computers” is a discussion for a different article), argues for a parallel endeavor to the mapping and manipulation of neural circuits: we should search for what Richard Dawkins referred to as “software explanations of behavior” [103]. The most famous example of this type of analysis is Tinbergen’s hypothesis that animals’ behavioral drives can be explained via a hierarchically organized set of competing impulses, based on both observations and ideas about optimality and evolvability [104]. This idea, developed independently by Herbert Simon in the context of engineered systems [105, 106], has testable consequences that have led to further investigations and theories across a wide variety of systems [76, 107–109]. Similarly, ideas about optimal feedback control and energy efficiency have shaped studies of biolocomotion [110], and concepts from reinforcement learning have served as a starting point for investigations into the neural implementation of learning [13, 111].

In each of these examples, observations about behavior have been used to make inferences about the brain’s functioning that do not explicitly rely on detailed models or knowledge of brain dynamics or morphology, potentially providing general principles that apply across systems. When deciding what type of behavior to measure and how to measure it, we either intentionally or unintentionally rely on theories such as these when we choose a behavioral context, select length and time scales, or decide how to analyze the data.

Only through consciously generating and interacting with broad theoretical concepts can we create a fuller understanding of how neural systems function to produce movement and behavior. For example, the idea of using stereotyped movements as a scale for behavioral measurements builds upon observations about low-dimensionality in movements and the commonality of neural circuitry such as central pattern generators devoted to the performance of periodic activities. Taking these assumptions directly into account has allowed the methods discussed in this review to be developed, and the identification of further concepts will be essential to their expansion, refinement, and application. At its core, “What type of behavior do we want to measure?” is a question that relies on theoretical insight for its answer, and future efforts toward quantitatively linking behavior to its physiological underpinnings will greatly benefit from approaching experimental design and analysis accordingly.

Acknowledgements

The author would like to thank Avani Wildani, Alex Gomez-Marin, Itai Pinkoviezky, Jennifer Rieser, Carlos Rodriguez, and Kun Tian for comments and suggestions on the manuscript. This work was partially supported by NIMH 1R01MH115831-01.

Competing interests

The author declares no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Anderson DJ, Perona P. Toward a Science of Computational Ethology. Neuron. 2014;84(1):18–31. doi: 10.1016/j.neuron.2014.09.005.

- 2. Krakauer JW, Ghazanfar AA, Gomez-Marin A, Maciver MA, Poeppel D. Neuroscience Needs Behavior: Correcting a Reductionist Bias. Neuron. 2017;93(3):480–90. doi: 10.1016/j.neuron.2016.12.041.

- 3. Wang XJ, Krystal JH. Computational psychiatry. Neuron. 2014;84(3):638–54. doi: 10.1016/j.neuron.2014.10.018.

- 4. Lebedev MA, Nicolelis MAL. Brain-Machine Interfaces: From Basic Science to Neuroprostheses and Neurorehabilitation. Physiol Rev. 2017;97(2):767–837. doi: 10.1152/physrev.00027.2016.

- 5. Anderson PW. More Is Different. Science. 1972;177(4047):393–6. doi: 10.1126/science.177.4047.393.

- 6. Goldenfeld N, Kadanoff LP. Simple Lessons from Complexity. Science. 1999;284(5411):87–9. doi: 10.1126/science.284.5411.87.

- 7. Dell AI, Bender JA, Branson K, Couzin ID, De Polavieja GG, Noldus LPJJ, et al. Automated image-based tracking and its application in ecology. Trends Ecol Evol. 2014;29(7):417–28. doi: 10.1016/j.tree.2014.05.004.

- 8. Egnor SER, Branson K. Computational Analysis of Behavior. Ann Rev Neuro. 2016;39:217–36. doi: 10.1146/annurev-neuro-070815-013845.

- 9. Robie AA, Seagraves KM, Egnor SER, Branson K. Machine vision methods for analyzing social interactions. J Exp Bio. 2017;220(1):25–34. doi: 10.1242/jeb.142281.

- 10. Calhoun AJ, Murthy M. Quantifying behavior to solve sensorimotor transformations: advances from worms and flies. Curr Opin Neurobiol. 2017;46:90–8. doi: 10.1016/j.conb.2017.08.006.

- 11. Stephens GJ, Osborne LC, Bialek W. Searching for simplicity in the analysis of neurons and behavior. Proc Nat Acad Sci. 2011;108(Supp 3):15565–71. doi: 10.1073/pnas.1010868108.

- 12. Gomez-Marin A, Paton JJ, Kampff AR, Costa RM, Mainen ZF. Big behavioral data: psychology, ethology and the foundations of neuroscience. Nat Neurosci. 2014;17(11):1455–62. doi: 10.1038/nn.3812.

- 13. Montague PR, Dolan RJ, Friston KJ, Dayan P. Computational psychiatry. Trends Cogn Sci. 2012;16(1):72–80. doi: 10.1016/j.tics.2011.11.018.

- 14. Anderson DJ, Adolphs R. A Framework for Studying Emotions across Species. Cell. 2014;157(1):187–200. doi: 10.1016/j.cell.2014.03.003.

- 15. Anderson DJ. Circuit modules linking internal states and social behaviour in flies and mice. Nature Rev Neuro. 2016;17(11):692–704. doi: 10.1038/nrn.2016.125.

- 16. Huys QJM, Maia TV, Frank MJ. Computational psychiatry as a bridge from neuroscience to clinical applications. Nat Neurosci. 2016;19(3):404–13. doi: 10.1038/nn.4238.

- 17. Stephan KE, Mathys C. Computational approaches to psychiatry. Curr Opin Neurobiol. 2014;25:85–92. doi: 10.1016/j.conb.2013.12.007.

- 18. Leshner A, Pfaff DW. Quantification of behavior. Proc Nat Acad Sci. 2011;108(Supp 3):15537–41. doi: 10.1073/pnas.1010653108.

- 19. Fonio E, Golani I, Benjamini Y. Measuring behavior of animal models: faults and remedies. Nat Methods. 2012;9(12):1167–70. doi: 10.1038/nmeth.2252.

- 20. Altmann J. Observational study of behavior: sampling methods. Behaviour. 1974;49(3):227–67. doi: 10.1163/156853974x00534.

- 21. Martin P, Bateson P. Measuring behaviour: an introductory guide. Cambridge, UK: Cambridge University Press; 2007.

- 22. Gao P, Ganguli S. On simplicity and complexity in the brave new world of large-scale neuroscience. Curr Opin Neurobiol. 2015;32:148–55. doi: 10.1016/j.conb.2015.04.003.

- 23. Panda S, Hogenesch JB, Kay SA. Circadian rhythms from flies to human. Nature. 2002;417(6886):329–35. doi: 10.1038/417329a.

- 24. Yemini E, Jucikas T, Grundy LJ, Brown AEX, Schafer WR. A database of Caenorhabditis elegans behavioral phenotypes. Nat Methods. 2013;10(9):877–9. doi: 10.1038/nmeth.2560.

- 25. Churgin MA, Jung SK, Yu CC, Chen X, Raizen DM, Fang-Yen C, et al. Longitudinal imaging of Caenorhabditis elegans in a microfabricated device reveals variation in behavioral decline during aging. eLife. 2017;6:e26652. doi: 10.7554/eLife.26652.

- 26. Ayroles JF, Buchanan SM, O’Leary C, Skutt-Kakaria K, Grenier JK, Clark AG, et al. Behavioral idiosyncrasy reveals genetic control of phenotypic variability. Proc Nat Acad Sci. 2015;112(21):6706–11. doi: 10.1073/pnas.1503830112.

- 27. Branson K, Robie AA, Bender JA, Perona P, Dickinson MH. High-throughput ethomics in large groups of Drosophila. Nat Methods. 2009;6(6):451–7. doi: 10.1038/nmeth.1328.

- 28. Pérez-Escudero A, Vicente-Page J, Hinz RC, Arganda S, De Polavieja GG. idTracker: tracking individuals in a group by automatic identification of unmarked animals. Nat Methods. 2014;11(7):743–8. doi: 10.1038/nmeth.2994.

- 29. Naumann EA, Fitzgerald JE, Dunn TW, Rihel J, Sompolinsky H, Engert F. From Whole-Brain Data to Functional Circuit Models: The Zebrafish Optomotor Response. Cell. 2016;167(4):947–960.e20. doi: 10.1016/j.cell.2016.10.019.

- 30. Orger MB, De Polavieja GG. Zebrafish Behavior: Opportunities and Challenges. Ann Rev Neuro. 2017;40:125–147. doi: 10.1146/annurev-neuro-071714-033857.

- 31. Dankert H, Wang L, Hoopfer ED, Anderson DJ, Perona P. Automated monitoring and analysis of social behavior in Drosophila. Nat Methods. 2009;6(4):297–303. doi: 10.1038/nmeth.1310.

- 32. Kabra M, Robie AA, Rivera-Alba M, Branson S, Branson K. JAABA: interactive machine learning for automatic annotation of animal behavior. Nat Methods. 2013;10(1):64–7. doi: 10.1038/nmeth.2281.

- 33. de Chaumont F, Coura RDS, Serreau P, Cressant A, Chabout J, Granon S, et al. Computerized video analysis of social interactions in mice. Nat Methods. 2012;9(4):410–7. doi: 10.1038/nmeth.1924.

- 34. Kain J, Stokes C, Gaudry Q, Song X, Foley J, Wilson R, et al. Leg-tracking and automated behavioural classification in Drosophila. Nat Commun. 2013;4:1910. doi: 10.1038/ncomms2908.

- 35. Hong W, Kennedy A, Burgos-Artizzu XP, Zelikowsky M, Navonne SG, Perona P, et al. Automated measurement of mouse social behaviors using depth sensing, video tracking, and machine learning. Proc Nat Acad Sci. 2015;112(38):E5351–E5360. doi: 10.1073/pnas.1515982112.

- 36. Dickinson MH, Farley CT, Full RJ, Koehl MAR, Kram R, Lehman S. How Animals Move: An Integrative View. Science. 2000;288(5463):100–6. doi: 10.1126/science.288.5463.100.

- 37. Childress S, Hosoi A, Schultz WW, Wang ZJ, editors. Natural locomotion in fluids and on surfaces: swimming, flying, and sliding. New York: Springer; 2012.

- 38. Holmes P, Full RJ, Koditschek D, Guckenheimer J. The Dynamics of Legged Locomotion: Models, Analyses, and Challenges. SIAM Rev. 2006;48:207–304.

- 39. Miller LA, Goldman DI, Hedrick TL, Tytell ED, Wang ZJ, Yen J, et al. Using computational and mechanical models to study animal locomotion. Int Comp Bio. 2012;52(5):553–75. doi: 10.1093/icb/ics115.

- 40. McInroe B, Astley HC, Gong C, Kawano SM, Schiebel PE, Rieser JM, et al. Tail use improves soft substrate performance in models of early vertebrate land locomotors. Science. 2016;353(6295):154–8. doi: 10.1126/science.aaf0984.

- 41. Aguilar J, Zhang T, Qian F, Kingsbury M, McInroe B, Mazouchova N, et al. A review on locomotion robophysics: the study of movement at the intersection of robotics, soft matter and dynamical systems. Rep Prog Phys. 2016;79(11):110001. doi: 10.1088/0034-4885/79/11/110001.

- 42. Dickinson MH, Lehmann FO, Sane SP. Wing rotation and the aerodynamic basis of insect flight. Science. 1999;284(5422):1954–60. doi: 10.1126/science.284.5422.1954.

- 43. Cowan NJ, Ankarali MM, Dyhr JP, Madhav MS, Roth E, Sefati S, et al. Feedback control as a framework for understanding tradeoffs in biology. Integr Comp Biol. 2014;54(2):223–37. doi: 10.1093/icb/icu050.

- 44. Roth E, Sponberg S, Cowan NJ. A comparative approach to closed-loop computation. Curr Opin Neurobiol. 2014;25:54–62. doi: 10.1016/j.conb.2013.11.005.

- 45. Alexander RM. Optima for Animals. Princeton, NJ: Princeton University Press; 1996.

- 46. Full RJ, Koditschek DE. Templates and anchors: Neuromechanical hypotheses of legged locomotion on land. J Exp Bio. 1999;202(23):3325–32. doi: 10.1242/jeb.202.23.3325.

- 47. Monty Python’s Flying Circus. “The Ministry of Silly Walks”. BBC. 1970;2(1).

- 48. Ristroph L, Bergou AJ, Ristroph G, Coumes K, Berman GJ, Guckenheimer J, et al. Discovering the flight autostabilizer of fruit flies by inducing aerial stumbles. Proc Nat Acad Sci. 2010;107(11):4820–4. doi: 10.1073/pnas.1000615107.

- 49. Jindrich DL, Full RJ. Dynamic stabilization of rapid hexapedal locomotion. J Exp Bio. 2002;205(18):2803–23. doi: 10.1242/jeb.205.18.2803.

- 50. Revzen S, Guckenheimer JM. Finding the dimension of slow dynamics in a rhythmic system. J Royal Soc Interface. 2012;9(70):957–71. doi: 10.1098/rsif.2011.0431.

- 51. Osborne LC, Lisberger SG, Bialek W. A sensory source for motor variation. Nature. 2005;437(7057):412–6. doi: 10.1038/nature03961.

- 52. Stephens GJ, de Mesquita MB, Ryu WS, Bialek W. Emergence of long timescales and stereotyped behaviors in Caenorhabditis elegans. Proc Nat Acad Sci. 2011;108(18):7286–9. doi: 10.1073/pnas.1007868108.

- 53. Berman GJ, Choi DM, Bialek W, Shaevitz JW. Mapping the stereotyped behaviour of freely moving fruit flies. J Royal Soc Interface. 2014;11(99):20140672. doi: 10.1098/rsif.2014.0672.

- 54. Stephens GJ, Johnson-Kerner B, Bialek W, Ryu WS. Dimensionality and dynamics in the behavior of C. elegans. PLoS Comp Bio. 2008;4(4):e1000028. doi: 10.1371/journal.pcbi.1000028.

- 55. Brown AEX, Yemini EI, Grundy LJ, Jucikas T, Schafer WR. A dictionary of behavioral motifs reveals clusters of genes affecting Caenorhabditis elegans locomotion. Proc Nat Acad Sci. 2013;110(2):791–6. doi: 10.1073/pnas.1211447110.

- 56. Wiltschko AB, Johnson MJ, Iurilli G, Peterson RE, Katon JM, Pashkovski SL, et al. Mapping Sub-Second Structure in Mouse Behavior. Neuron. 2015;88(6):1121–35. doi: 10.1016/j.neuron.2015.11.031.

- 57. Kato S, Kaplan HS, Schrödel T, Skora S, Lindsay TH, Yemini E, et al. Global Brain Dynamics Embed the Motor Command Sequence of Caenorhabditis elegans. Cell. 2015;163(3):656–669. doi: 10.1016/j.cell.2015.09.034.

- 58. Todd JG, Kain JS, de Bivort BL. Systematic exploration of unsupervised methods for mapping behavior. Phys Biol. 2017;14(1):015002. doi: 10.1088/1478-3975/14/1/015002.

- 59. Klibaite U, Berman GJ, Cande J, Stern DL, Shaevitz JW. An unsupervised method for quantifying the behavior of paired animals. Phys Biol. 2017;14(1):015006. doi: 10.1088/1478-3975/aa5c50.

- 60. Nagy S, Goessling M, Amit Y, Biron D. A Generative Statistical Algorithm for Automatic Detection of Complex Postures. PLoS Comp Bio. 2015;11(10):e1004517. doi: 10.1371/journal.pcbi.1004517.

- 61. Broekmans OD, Rodgers JB, Ryu WS, Stephens GJ. Resolving coiled shapes reveals new reorientation behaviors in C. elegans. eLife. 2016;5:e17227. doi: 10.7554/eLife.17227.

- 62. Nguyen JP, Shipley FB, Linder AN, Plummer GS, Liu M, Setru SU, et al. Whole-brain calcium imaging with cellular resolution in freely behaving Caenorhabditis elegans. Proc Nat Acad Sci. 2016;113(8):E1074–E1081. doi: 10.1073/pnas.1507110112.

- 63. Mendes CS, Bartos I, Akay T, Márka S, Mann RS. Quantification of gait parameters in freely walking wild type and sensory deprived Drosophila melanogaster. eLife. 2013;2:e00231. doi: 10.7554/eLife.00231.

- 64. Machado AS, Darmohray DM, Fayad J, Marques HG, Carey MR. A quantitative framework for whole-body coordination reveals specific deficits in freely walking ataxic mice. eLife. 2015;4:e07892. doi: 10.7554/eLife.07892.

- 65. Ristroph L, Berman GJ, Bergou AJ, Wang ZJ, Cohen I. Automated hull reconstruction motion tracking (HRMT) applied to sideways maneuvers of free-flying insects. J Exp Bio. 2009;212(9):1324–35. doi: 10.1242/jeb.025502.

- 66. Fontaine EI, Zabala F, Dickinson MH, Burdick JW. Wing and body motion during flight initiation in Drosophila revealed by automated visual tracking. J Exp Bio. 2009;212(9):1307–23. doi: 10.1242/jeb.025379.

- 67. Uhlmann V, Ramdya P, Delgado-Gonzalo R, Benton R, Unser M. FlyLimbTracker: An active contour based approach for leg segment tracking in unmarked, freely behaving Drosophila. PLoS ONE. 2017;12(4):e0173433. doi: 10.1371/journal.pone.0173433.

- 68. Vogelstein JT, Park Y, Ohyama T, Kerr RA, Truman JW, Priebe CE, et al. Discovery of brainwide neural-behavioral maps via multiscale unsupervised structure learning. Science. 2014;344(6182):386–92. doi: 10.1126/science.1250298.

- 69. Geng W, Cosman P, Baek JH, Berry CC, Schafer WR. Quantitative classification and natural clustering of Caenorhabditis elegans behavioral phenotypes. Genetics. 2003;165(3):1117–26. doi: 10.1093/genetics/165.3.1117.

- 70. Ghosh R, Mohammadi A, Kruglyak L, Ryu WS. Multiparameter behavioral profiling reveals distinct thermal response regimes in Caenorhabditis elegans. BMC Biol. 2012;10:85. doi: 10.1186/1741-7007-10-85.

- 71. Jhuang H, Garrote E, Mutch J, Yu X, Khilnani V, Poggio T, et al. Automated home-cage behavioural phenotyping of mice. Nat Commun. 2010;1:68. doi: 10.1038/ncomms1064.

- 72. Golani I, Kafkafi N, Drai D. Phenotyping stereotypic behaviour: collective variables, range of variation and predictability. Appl Anim Behav Sci. 1999;65(3):191–220.

- 73. Bongard J, Lipson H. Automated reverse engineering of nonlinear dynamical systems. Proc Nat Acad Sci. 2007;104(24):9943–8. doi: 10.1073/pnas.0609476104.

- 74. Natale JL, Hofmann D, Hernández DG, Nemenman I. Reverse-engineering biological networks from large data sets. arXiv. 2017.

- 75. Daniels BC, Nemenman I. Automated adaptive inference of phenomenological dynamical models. Nat Commun. 2015;6(1):8133. doi: 10.1038/ncomms9133.

- 76. Berman GJ, Bialek W, Shaevitz JW. Predictability and hierarchy in Drosophila behavior. Proc Nat Acad Sci. 2016;113(42):11943–8. doi: 10.1073/pnas.1607601113.

- 77. Gomez-Marin A, Stephens GJ, Brown AEX. Hierarchical compression of Caenorhabditis elegans locomotion reveals phenotypic differences in the organization of behaviour. J Royal Soc Interface. 2016;13(121):20160466. doi: 10.1098/rsif.2016.0466.

- 78. Schwarz RF, Branicky R, Grundy LJ, Schafer WR, Brown AEX. Changes in Postural Syntax Characterize Sensory Modulation and Natural Variation of C. elegans Locomotion. PLoS Comp Bio. 2015;11(8):e1004322. doi: 10.1371/journal.pcbi.1004322.

- 79. Sakamoto KQ, Sato K, Ishizuka M, Watanuki Y, Takahashi A, Daunt F, et al. Can Ethograms Be Automatically Generated Using Body Acceleration Data from Free-Ranging Birds? PLoS ONE. 2009;4(4):e5379. doi: 10.1371/journal.pone.0005379.

- 80. Debnath L, Shah FA. Wavelet transforms and their applications. New York: Springer; 2015.

- 81. Benjamini Y, Fonio E, Galili T, Havkin GZ, Golani I. Quantifying the buildup in extent and complexity of free exploration in mice. Proc Nat Acad Sci. 2011;108(Supp 3):15580–7. doi: 10.1073/pnas.1014837108.

- 82. Katz Y, Tunstrom K, Ioannou CC, Huepe C, Couzin ID. Inferring the structure and dynamics of interactions in schooling fish. Proc Nat Acad Sci. 2011;108(46):18720–5. doi: 10.1073/pnas.1107583108.

- 83. Vicsek T, Czirók A, Ben-Jacob E, Cohen I, Shochet O. Novel type of phase transition in a system of self-driven particles. Phys Rev Lett. 1995;75(6):1226–9. doi: 10.1103/PhysRevLett.75.1226.

- 84. Toner J, Tu Y. Flocks, herds, and schools: A quantitative theory of flocking. Phys Rev E. 1998;58(4):4828–58.

- 85. Buhl J, Sumpter D, Couzin ID, Hale J, Despland E, Miller E, et al. From disorder to order in marching locusts. Science. 2006;312(5778):1402–6. doi: 10.1126/science.1125142.

- 86. Haller G. Lagrangian Coherent Structures. Ann Rev Fluid Mech. 2015;47(1):137–62.

- 87. Brunton BW, Johnson LA, Ojemann JG, Kutz JN. Extracting spatial-temporal coherent patterns in large-scale neural recordings using dynamic mode decomposition. J Neurosci Methods. 2016;258:1–15. doi: 10.1016/j.jneumeth.2015.10.010.

- 88. Coen P, Clemens J, Weinstein AJ, Pacheco DA, Deng Y, Murthy M. Dynamic sensory cues shape song structure in Drosophila. Nature. 2014;507(7491):233–7. doi: 10.1038/nature13131.

- 89. LaRue KM, Clemens J, Berman GJ, Murthy M. Acoustic duetting in Drosophila virilis relies on the integration of auditory and tactile signals. eLife. 2015;4:e07277. doi: 10.7554/eLife.07277.

- 90. Neunuebel JP, Taylor AL, Arthur BJ, Egnor SER. Female mice ultrasonically interact with males during courtship displays. eLife. 2015;4:e06203. doi: 10.7554/eLife.06203.

- 91. Arthur BJ, Sunayama-Morita T, Coen P, Murthy M, Stern DL. Multi-channel acoustic recording and automated analysis of Drosophila courtship songs. BMC Biol. 2013;11:11. doi: 10.1186/1741-7007-11-11.

- 92.Tabler JM, Rigney MM, Berman GJ, Gopalakrishnan S, Heude E, Al-Lami HA, et al. Cilia-mediated Hedgehog signaling controls form and function in the mammalian larynx. eLife. 2017;6:e19153. doi: 10.7554/eLife.19153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 93.Mets DG, Brainard MS. An Automated Approach to the Quantitation of Vocalizations and Vocal Learning in the Songbird. bioRxiv. 2017. [DOI] [PMC free article] [PubMed]

- 94.Van Segbroeck M, Knoll AT, Levitt P, Narayanan S. MUPET–Mouse Ultrasonic Profile ExTraction: A Signal Processing Tool for Rapid and Unsupervised Analysis of Ultrasonic Vocalizations. Neuron. 2017;94(3):465–85.

- 95.Robie AA, Hirokawa J, Edwards AW, Umayam LA, Lee A, Phillips ML, et al. Mapping the Neural Substrates of Behavior. Cell. 2017;170(2):393–406.e28. doi: 10.1016/j.cell.2017.06.032.

- 96.Clemens J, Girardin CC, Coen P, Guan XJ, Dickson BJ, Murthy M. Connecting Neural Codes with Behavior in the Auditory System of Drosophila. Neuron. 2015;87(6):1332–43. doi: 10.1016/j.neuron.2015.08.014.

- 97.Wang NXR, Olson JD, Ojemann JG, Rao RPN, Brunton BW. Unsupervised Decoding of Long-Term, Naturalistic Human Neural Recordings with Automated Video and Audio Annotations. Front Hum Neurosci. 2016;10:165. doi: 10.3389/fnhum.2016.00165.

- 98.Billings J, Medda A, Shakil S, Shen X, Kashyap A, Chen S, et al. Instantaneous brain dynamics mapped to a continuous state space. Neuroimage. 2017;162:344–52. doi: 10.1016/j.neuroimage.2017.08.042.

- 99.Gepner R, Mihovilovic Skanata M, Bernat NM, Kaplow M, Gershow M. Computations underlying Drosophila phototaxis, odor-taxis, and multi-sensory integration. eLife. 2015;4:e06229. doi: 10.7554/eLife.06229.

- 100.Dunn TW, Mu Y, Narayan S, Randlett O, Naumann EA, Yang CT, et al. Brain-wide mapping of neural activity controlling zebrafish exploratory locomotion. eLife. 2016;5:e12741. doi: 10.7554/eLife.12741.

- 101.Brodersen KH, Deserno L, Schlagenhauf F, Lin Z, Penny WD, Buhmann JM, et al. Dissecting psychiatric spectrum disorders by generative embedding. NeuroImage Clin. 2014;4:98–111. doi: 10.1016/j.nicl.2013.11.002.

- 102.Muldoon SF, Pasqualetti F, Gu S, Cieslak M, Grafton ST, Vettel JM, et al. Stimulation-Based Control of Dynamic Brain Networks. PLoS Comput Biol. 2016;12(9):e1005076. doi: 10.1371/journal.pcbi.1005076.

- 103.Dawkins R. Hierarchical organisation: A candidate principle for ethology. In: Bateson PPG, Hinde RA, editors. Growing Points in Ethology. Cambridge, UK: Cambridge University Press; 1976.

- 104.Tinbergen N. The Study of Instinct. Oxford, UK: Oxford University Press; 1951.

- 105.Simon HA. The Architecture of Complexity. Proc Am Philos Soc. 1962;106(6):467–82.

- 106.Simon HA. The organization of complex systems. In: Pattee HH, editor. Hierarchy Theory. New York: Braziller; 1973.

- 107.Dawkins R, Dawkins M. Hierarchical Organization and Postural Facilitation: Rules for Grooming in Flies. Anim Behav. 1976;24(4):739–55.

- 108.Lefebvre L. Grooming in crickets: timing and hierarchical organization. Anim Behav. 1981;29(4):973–84.

- 109.Solway A, Diuk C, Córdova N, Yee D, Barto AG, Niv Y, et al. Optimal behavioral hierarchy. PLoS Comput Biol. 2014;10(8):e1003779. doi: 10.1371/journal.pcbi.1003779.

- 110.Sponberg S. The Emergent Physics of Animal Locomotion. Phys Today. 2017;70(9):34.

- 111.Niv Y. Reinforcement learning in the brain. J Math Psychol. 2009;53(3):139–54.