Abstract

Purpose

The developmental readiness to produce early sentences with an iPad communication application was assessed with ten 3- and 4-year-old children with severe speech disorders using graduated prompting dynamic assessment (DA) techniques. The participants' changes in performance within the DA sessions were evaluated, and DA performance was compared with performance during a subsequent intervention.

Method

Descriptive statistics were used to examine patterns of performance at various cueing levels and mean levels of cueing support. The Wilcoxon signed-ranks test was used to measure changes within the DA sessions. Correlational data were calculated to determine how well performance in DA predicted performance during a subsequent intervention.

Results

Participants produced targets successfully in DA at various cueing levels, with some targets requiring less cueing than others. Performance improved significantly within the DA sessions—that is, the level of cueing required for accurate productions of the targets decreased during DA sessions. Last, moderate correlations existed between DA scores and performance during the intervention for 3 out of 4 targets, with statistically significant findings for 2 of 4 targets.

Conclusion

DA offers promise for examining the developmental readiness of young children who use augmentative and alternative communication to produce early expressive language structures.

Dynamic assessment (DA) procedures have been used for the past two decades to assess the expressive language skills of children who rely on speech to communicate. DA, with its foundations based in learning theories of Vygotsky (1978), has proven effective in identifying appropriate targets for children with varying language profiles who communicate via natural speech (Bain & Olswang, 1995; Patterson, Rodriguez, & Dale, 2013; Peña et al., 2006). Despite the need to identify comparable expressive communication targets for children with severe speech impairments, applications for children who require augmentative and alternative communication (AAC) are in their infancy.

Overview of DA

With DA, the examiner uses various cues to facilitate the child's performance, with a primary goal of establishing whether or not the targeted skill is within the child's zone of proximal development (Vygotsky, 1978)—that is, the difference between a child's observed performance and the level of potential development is determined. DA is designed to assess a child's readiness to learn (Tzuriel, 2000).

As a general concept, DA may be best understood when compared with static assessment. With static assessment, behaviors are measured at one point in time with no significant assistance offered by the examiner. Static tests are either criterion-referenced or norm-referenced (or both) and are not designed to assess the learning readiness of the child or to identify possible barriers to learning (Tzuriel, 2000). In contrast, DA uses a teach-test approach, in which the child is provided with ongoing supports from the examiner to identify the child's level of potential development. One of the main goals of DA is to determine the level of support a child needs to achieve success, thereby determining if the skill is within the child's zone of proximal development. With this type of adult guidance, children may perform above initial levels (Rogoff, 1990). This new, higher level of performance often becomes the goal for independent performance.

DA Approaches for Children With Communication Disorders

DA is a valuable component of comprehensive assessments and ongoing educational planning within the discipline of communication disorders. For example, DA can be used to predict reading levels (Petersen & Gillam, 2013) and differentiate cultural and linguistic differences from communication disorders (e.g., Peña, Iglesias, & Lidz, 2001). The most common approach to DA is mediated learning, in which tasks can be modified within each session depending on the child's performance (Rogoff, 1990). Static pre- and posttest scores and examiner ratings of the child's performance are used to assess progress. Graduated prompting is another DA approach, which involves using pre-established cueing hierarchies for each trial, where a score is assigned on the basis of the number of cues required for the child to achieve success (e.g., Patterson et al., 2013). Scores can then be used to evaluate the child's learning readiness as well as examiner effort required for achieving success. Several seminal studies used a graduated prompting approach to teach early two-word combinations to preschoolers with language impairments (Bain & Olswang, 1995; Olswang & Bain, 1996), and more recently, Patterson et al. (2013) effectively used this approach to assess the language skills of bilingual preschoolers. DA applications for children with more severe communication disabilities were recently summarized in a review (Boers, Janssen, Minnaert, & Ruijssenaars, 2013). In addition, Olswang, Feuerstein, Pinder, and Dowden (2013) used graduated prompting with six presymbolic communicators with severe disabilities to capture variability across participants, which was not apparent in the static test results, and also used DA to predict intervention performance. Taken as a whole, this body of work demonstrates that DA may have useful clinical applications for children with a wide range of communication disorders.

DA for Children Who Require AAC

Given the DA applications described above, DA holds great promise for children who need AAC. Many skills that children using AAC need to learn are identical to the skills of children who rely exclusively on speech to communicate, including the types of semantic, morphosyntactic, and narrative goals included in the DA studies discussed above. Although these types of language skills have been targeted successfully in intervention (e.g., Binger, Kent-Walsh, Berens, Del Campo, & Rivera, 2008; Binger & Light, 2007; Romski et al., 2010; Soto, Solomon-Rice, & Caputo, 2009), they have not—with two known exceptions discussed below—been examined using DA techniques. In addition, DA may prove useful for evaluating unique AAC skills, such as selecting graphic symbol sets and teaching children to use scanning to access AAC devices.

Although Nigam (2001) discussed AAC applications for DA over a decade ago, only two known AAC studies have included DA analyses such as using DA performance to predict future performance or measuring modifiability within DA sessions. The first focused on phonological awareness skills (Barker, Bridges, & Saunders, 2014), and the second, a precursor to the current investigation, used graduated prompting to evaluate productions of early two- and three-word utterances with four 5-year-old children with severe speech impairments (King, Binger, & Kent-Walsh, 2015). Results from the latter study indicated that the participants benefited from a range of cues to produce the sentences. Two participants evidenced modifiability (i.e., scores on the last two trials exceeded scores on the first two trials), a third did not, and the last encountered ceiling effects. In addition, three participants demonstrated moderate correlations between DA performance and performance on a subsequent experimental task. As with the approach taken in the current study (but unlike typical DA applications), the prompting techniques used during DA did not affect intervention decisions—that is, all participants received the same intervention, regardless of DA performance. This allowed for direct comparisons between DA scores and intervention performance for the same targets for all participants, an important feature given the uncertainty of DA or intervention success for any participants on the basis of past research. A similar approach, therefore, has been taken in the current study.

Overall, findings to date indicate that DA may be a viable way to evaluate readiness for children using AAC to produce simple sentences using graphic symbols. Therefore, the broad goal of the current investigation was to evaluate the feasibility and utility of DA to contribute to intervention planning for young children who use AAC and included the following specific aims:

1. To evaluate the degree of prompting 3- and 4-year-old children with significant speech disorders required to create accurate two- to three-word messages when using single meaning graphic symbols on an iPad communication application (“app”). Although some studies have indicated that this is a challenging task even for typically developing preschoolers (Sutton, Trudeau, Morford, Rios, & Poirier, 2010), emerging evidence indicates that with appropriate intervention, creating multisymbol messages is an achievable goal for young children using aided AAC (Binger et al., 2008; Binger & Light, 2007; Kent-Walsh, Binger, & Buchanan, 2015).

2. To determine if preschoolers using AAC evidence modifiability within a brief graduated prompting DA task; children demonstrating improvement within a single DA session may be more likely to rapidly acquire the target during intervention.

3. To determine if performance during DA is predictive of performance during subsequent intervention.

Method

Participants

The 10 children in the current study, as well as six additional children, served as participants in a language sampling study (Binger, Ragsdale, & Bustos, 2016). In addition, the 10 children in the current investigation participated in the intervention study which is a companion to the current article (Binger, Kent-Walsh, King, & Mansfield, 2017). Participants were recruited through a university clinic and surrounding school district contacts. Initial static assessments were completed for eligibility and descriptive purposes. For the current study, participants were required to meet the following criteria: (a) be between the ages of 3;0 and 4;11 (years;months) at the onset of the study; (b) have receptive language within normal limits, as defined by standard scores no more than 1.5 SD below the mean (i.e., ≥ 78 standard score) on the total score of the Test for Auditory Comprehension of Language–Third Edition (TACL-3; Carrow-Woolfolk, 1999); (c) have a severe speech impairment as defined by less than 50% intelligible speech in the no-context condition of the Index of Augmented Speech Comprehensibility in Children (Dowden, 1997); and (d) have an expressive vocabulary of at least 25 words on the MacArthur Communicative Development Inventories (Fenson et al., 2007) via any communication mode (speech, sign, or graphic symbol; see Table 1 for a list of participant characteristics).
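The numeric inclusion criteria (a) through (d) can be expressed as a simple screen. The sketch below is illustrative only: the function names are hypothetical, and it assumes that TACL-3 standard scores have a mean of 100 and a standard deviation of 15, which is consistent with the stated cutoff of 78 (100 − 1.5 × 15 = 77.5).

```python
def age_in_months(age):
    """Convert a 'years;months' string such as '4;11' to months."""
    years, months = age.split(";")
    return int(years) * 12 + int(months)


# Assumption: standard scores have mean 100 and SD 15, so 1.5 SD below
# the mean is 77.5 and the lowest passing whole-number score is 78.
TACL_CUTOFF = 100 - 1.5 * 15


def meets_numeric_criteria(age, tacl_standard, pct_intelligible, vocab_words):
    """Check criteria (a)-(d): age, receptive language, intelligibility, vocabulary."""
    return (
        age_in_months("3;0") <= age_in_months(age) <= age_in_months("4;11")
        and tacl_standard >= TACL_CUTOFF
        and pct_intelligible < 50   # no-context intelligibility, in percent
        and vocab_words >= 25       # expressive vocabulary via any mode
    )


# Child L's reported values (4;6, standard score 76, 19% intelligible, 117 words)
# fail the receptive language criterion, as described in the text.
print(meets_numeric_criteria("4;6", 76, 19, 117))  # False
```

The screen covers only the numeric criteria; the yes/no criteria (e.g., hearing screening, prior intervention) would need separate checks.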

Table 1.

Participant demographic information including sex, age, race/ethnicity, disability, prior augmentative and alternative communication (AAC) and iPad use, expressive vocabulary test results, and comprehensibility test results.

| Child (a) | Sex | CA (years;months) | Race/ethnicity | Diagnoses | Prior AAC experience | Prior iPad experience | CDI | I-ASCC, no context (%) | I-ASCC, context (%) |
|---|---|---|---|---|---|---|---|---|---|
| G | F | 4;8 | W/NH | Severe speech delay-SSD | None | Frequent use | 601 | 10 | 42 |
| H | M | 4;11 | W/NH | Autism; motor speech disorder-CAS (b) | School evaluation at onset of study with low tech AAC recommended | Minimal | 109 | 0 | 3 |
| I | M | 4;1 | W/H | Severe speech delay | None | Minimal | 601 | 6 | 38 |
| J | M | 4;2 | W/H | Severe speech delay | None | 2–3 years | 323 | 0 | 13 |
| K | M | 4;3 | W/H | Severe speech delay-SSD | None | Moderate | 419 | 3 | 12 |
| L | M | 4;6 | AI/NH | Severe speech delay-suspected CAS | None (school assessment during study) | Minimal | 117 | 19 | 32 |
| M | M | 4;3 | W/H | Severe speech delay-SSD; history of drug exposure in utero; early intervention until 18 months; currently only receiving services for speech | None | Extensive | 628 | 0 | 26 |
| N | F | 4;9 | W/NH | Motor speech disorder-dysarthria secondary to cerebral palsy (b) | Over 1-year experience with Minspeak (c)-based device | Limited | 547 | 3 | 16 |
| O | M | 3;5 | W/NH | Speech sound disorder (b) | None | Extensive | 83 | 6 | 23 |
| P | F | 3;3 | W/H | Severe speech delay | None | Moderate | 45 | 0 | 13 |

Note. All diagnoses are based on evaluations provided by the participants' school or private speech-language pathologists unless otherwise noted. CA = chronological age; CDI = Communication Development Inventory; I-ASCC = Index of Augmented Speech Comprehensibility in Children; SSD = speech sound disorder; CAS = childhood apraxia of speech; W/NH = White/non-Hispanic; W/H = White/Hispanic; AI/NH = American Indian/non-Hispanic.

(a) All participants' primary language is English. Child J is secondarily exposed to Spanish, and Child L to Keres and Towa (Native American languages).

(b) Children F, H, N, and O received differential diagnostic testing at the University of New Mexico Speech and Hearing Clinic.

(c) Minspeak is a graphic symbol-based communication approach in which each symbol represents multiple concepts. These symbols are combined to access words on a speech-generating device. More information can be found at http://www.minspeak.com.

In addition, the participants were required to (a) speak English as their first language; (b) demonstrate comprehension of the targeted semantic-syntactic relations with at least 80% accuracy, using procedures adapted from Miller and Paul (1995); (c) have received no prior intervention targeting semantic-syntactic relations via aided AAC; (d) have functional vision and hearing for the purposes of participating in study activities; (e) have no diagnosis of autism; and (f) have motor skills adequate to direct select on a speech-generating device. All participants passed a pure-tone hearing screening. Children with autism were excluded as they often demonstrate both qualitative and quantitative differences in language learning.

Two children who did not meet all of the inclusion criteria were nonetheless included in the study. The first, Child L, had a TACL-3 standard score that fell below criterion (76; cutoff was 78). He was not excluded from the study for two primary reasons. First, his age equivalent score of 3;7 on the TACL-3 clearly indicated that the targets (producing two- to three-word utterances) should have been well within his reach. Second, because he is a Native American child, his inclusion diversified the participant pool so that it better reflected the general population of the surrounding area. The second, Child H, had a diagnosis of autism that was not known at the onset of the investigation. This participant demonstrated appropriate social skills throughout the investigation, responded positively to feedback from study administrators, readily completed all investigation tasks, and completed tasks in a manner deemed by his parents to be indicative of his true abilities. As a single-case experimental design was used for the intervention study (Binger et al., 2017), thereby allowing for the examination of results on an individual basis, his data are included in both the intervention study and in the present investigation.

Additional measures collected for descriptive purposes included the following (see Table 2): Mullen Scales of Early Learning (Mullen, 1995), Peabody Picture Vocabulary Test–Fourth Edition (Dunn & Dunn, 2006), Leiter International Performance Scale–Revised (Roid & Miller, 1997), and Vineland Adaptive Behavior Scales (Sparrow, Cicchetti, Domenic, & Balla, 2005). Of the 10 participants included in the current study, only Child N and Child H had prior AAC experience.

Table 2.

Percentile scores of test results for all participants.

| Child | PPVT-4 | TACL-3 | Vineland-II Comm | MSEL VR | MSEL FM | MSEL RL | MSEL EL | Leiter-R Full IQ % | Leiter-R Full IQ |
|---|---|---|---|---|---|---|---|---|---|
| G | 13 | 13 | 27 | 5 | 10 | 18 | 18 | 14 | 84 |
| H | 53 | 39 | 10 | 12 | 79 | 37 | 1 | 70 | 108 |
| I | 37 | 23 | 2 | 24 | 27 | 3 | 1 | 53 | 101 |
| J | 92 | 84 | 66 | 38 | 54 | 82 | 18 | 81 | 113 |
| K | 37 | 61 | 13 | 62 | 31 | 54 | 54 | 86 | 116 |
| L | 30 | 5 | 16 | 2 | 14 | 1 | 1 | 37 | 96 |
| M | 45 | 27 | 37 | 21 | 34 | 5 | 4 | 55 | 102 |
| N | 58 | 27 | 19 | 12 | 14 | 27 | 1 | 53 | 101 |
| O | 95 | 77 | 16 | 90 | 82 | 66 | 1 | >99 | 143 |
| P | 30 | 65 | 19 | 90 | 46 | 50 | <1 | 82 | 114 |

Note. PPVT-4 = Peabody Picture Vocabulary Test–Fourth Edition; TACL-3 = Test for Auditory Comprehension of Language–Third Edition, Total Score; Comm = Communication subtest; MSEL = Mullen Scales of Early Learning; VR = visual reception; FM = fine motor; RL = receptive language; EL = expressive language; Leiter-R = Leiter International Performance Scale–Revised.

Setting and Experimenters

The DA and intervention stage procedures were administered by the first and third authors and one additional speech-language pathology graduate student. Prior to administering the DA and subsequent intervention, the two students received training from the first author, an experienced researcher and clinician, and were required to meet a procedural standard. All sessions were conducted in a university setting in a private research room. The experimenter and child were either seated on the floor or at a small table for all tasks. Sessions were conducted approximately twice per week for 60 min. Sony Handicam Digital HD video camera recorders were used to record each session. The camera was moved as needed to maximize video capture of both the child's face and the iPad screen throughout each session.

Targets and Instrumentation

All participants were provided with an iPad containing the Proloquo2Go app for all sessions in which dependent measures were collected. All vocabulary was programmed into this AAC app. Synthesized speech from Acapela Group, the voice output software that comes with the app, was used as voice output. The same semantic-syntactic targets were used for the DA and intervention stages. Targets, which were presented in counterbalanced order across participants, included agent-action-object (AAO; “Pig chase cow”), entity-attribute (“Pig is happy”), entity-locative (“Pig under trash”), and possessor-entity (“Pig plate”). Notably, only AAO was reversible (“Pig chase cow”; “Cow chase pig”). Participants were assigned only those targets for which they demonstrated at least 80% accuracy on a comprehension task at the outset of the study. The comprehension task vocabulary was the same as the DA vocabulary. Children I, L, and P did not pass the comprehension probe for entity-locative; thus, they were not assigned this structure. All four targets were assigned to the remaining seven children.

Vocabulary used during DA for each semantic-syntactic structure was selected from the Communication Development Inventory (Fenson et al., 2007) and included the following: (a) COW, LION, MONKEY, PENGUIN, PIG, and SHEEP (which served as agents, objects, and possessors, plus entities for the entity-attribute and entity-locative structures); (b) SCARE, DROP, TICKLE, CHASE, and KISS (actions); (c) HAPPY, SAD, CLEAN, DIRTY, WET, DRY, RED, BLUE, BIG, and LITTLE (attributes); (d) IN, ON, NEXT TO, BEHIND, and UNDER (locative prepositions); (e) BASKET, CAR, HOUSE, BATHTUB, and TRASH (locative nouns); and (f) CUP, SPOON, PLATE, CARROT, HOT DOG, ORANGE, GRAPES, CORN, BANANAS, and HAMBURGER (possessions). A full list of vocabulary is located in Supplemental Material S3 of the companion article (Binger et al., 2017). In addition, the grammatical markers IS, THE, possessive –'s, and third person singular –s, each represented orthographically, were included as independent symbols. Participants were required to use IS for the attribute productions (“Pig is happy”). The remaining markers were used for the companion study. For example, participants were neither instructed nor expected to use the possessive –'s marker in their possessive-entity productions (“Pig airplane”).

Graphic symbols representing target vocabulary consisted of color line drawings from the Proloquo2Go app. Two communication displays were developed and used for all DA sessions: one display for entity-attribute and entity-locative (28 symbols total), and another for AAO and possessor-entity (23 symbols total). Each display contained all of the vocabulary required for each target. Symbols were organized using a Fitzgerald key (McDonald & Schultz, 1973), a vocabulary organization system in which graphic symbols from different semantic categories are organized in color-coded groups from left to right. Images of all communication displays are in Supplemental Materials S4, S5, S6, and S7 of the companion article (Binger et al., 2017).

Symbol Familiarization

Participants were required to identify the symbols on the communication displays with at least 90% accuracy prior to initiation of the DA task. A paired instructional paradigm (Schlosser & Lloyd, 1997) was used to teach any symbols identified in error: the instructor showed the graphic symbol to the child while simultaneously providing the spoken label and providing a demonstration.

DA Session Procedures

Materials

Puppets, figurines, and additional toys depicting the vocabulary listed above were used during sessions. Puppets and small plastic figures were used for all of the animal characters; plastic items were used for all of the food and possessions (basket, car, etc.); and additional items were used as needed, such as a spray bottle to make the animals wet. Duplicate plastic characters were modified as needed for the entity-attribute target: One set of animals was painted blue and another red, and another set covered with glue and rolled in dirt to depict dirty. The same materials were used for all participants. Children had access to the DA communication displays on the iPads during DA.

Procedures

DA activities took place within 60-min sessions. Ten different trials were administered for each target (i.e., 10 entity-attribute trials for the attribute target, 10 for possessor-entity, etc.). Trials were counterbalanced, with each vocabulary word appearing an equal number of times (e.g., pig appeared twice on each attribute list). For each trial, the examiner used the toy animals and objects to demonstrate each item. For example, for the entity-locative target “Pig under trash,” the examiner placed the pig figurine under the toy trash can. A graduated prompting hierarchy, adapted from Olswang and Bain (Bain & Olswang, 1995; Olswang & Bain, 1996), was used to prompt the correct production of the target (see Table 3). The child's production at each cueing level was recorded for each of the 10 trials for each target. Whenever possible, items for a particular target took place within the same session.

Table 3.

Cueing hierarchy and examples for entity-locative for the target “Pig under trash” (contrast = “Lion in car”).

| Level | Points | Type of prompt | Setup | Example |
|---|---|---|---|---|
| A | 4 | Elicitation question/prompt | Place pig under the trash can. | Tell me about this one. |
| B | 3 | Spoken and aided model of contrast target plus sentence completion | Place lion in the car. | Look, lion is in the car “Lion in car.” Now tell me about this one (placing pig under the trash can again). |
| C | 2 | Spoken model plus elicitation cue | Place pig under the trash can. | See, pig is under the trash. Now you tell me. |
| D | 1 | Direct model plus elicitation statement | Place pig under the trash can. | Tell me, pig is under the trash “Pig under trash.” |

Probe Procedures During the Intervention Stage

For the purposes of the current study, the intervention stage consisted of the baseline and intervention phases of the intervention study (Binger et al., 2017). Throughout the intervention stage, probes (described below) were used to measure progress with the targets. Each child completed a minimum of three baseline sessions (i.e., three sets of probes) for each semantic-syntactic target, with many participants completing more for the purpose of achieving baseline stability. The only task completed during baseline was the probe task. During the intervention phase, children began each session by completing probes (which typically lasted approximately 10 min per probe set) and subsequently participated in an intervention session lasting approximately 30 min. Intervention sessions focused on one target at a time and consisted of two activities: concentrated models of the target (Courtright & Courtright, 1979) followed by a 20-min play-based intervention in which augmented input and output (Romski et al., 2010), contrastive targets, recasts (Camarata, Nelson, & Camarata, 1994), and contingent responses (Warren & Brady, 2007) were used (see the companion article, Binger et al., 2017, for further details).

Materials

For all probe sessions, the participants were provided with a communication display similar to the one used during DA (see Supplemental Materials S4 and S5 of the companion article, Binger et al., 2017). The only difference was that Mickey Mouse Clubhouse characters (Mickey Mouse™, Minnie Mouse™, Donald Duck™, Goofy™, and Pluto™) were used instead of the animals (Cow, Pig, etc.). During each session, one iPad showing the relevant communication display plus an additional iPad containing videos depicting the target relations (described in the section below) were used.

Probes

Ten probe set lists, with each set consisting of 10 different items, were created for each semantic-syntactic target—that is, 10 sets of 10 items for entity-attribute, 10 sets of 10 items for possessor-entity, and so on. Each probe set list was counterbalanced and then randomly ordered. Brief video clips of each item on the set list were created using the video recording function on an iPad. To administer the probes, the examiner showed the child a video clip on one iPad, then provided the child with an opportunity to produce that item on the other iPad. For example, a clip depicting Mickey Mouse chasing Minnie Mouse was shown, and the examiner then provided an elicitation prompt (“What's happening?”) and provided the child with time to produce “Mickey chase Minnie.” During probe sessions, the examiner was not permitted to provide feedback on the accuracy of the responses. Additional details are in the companion article (Binger et al., 2017).

Data Collection and Reduction

DA Scoring

Productions at each cueing level were assigned a score of 0–4 (see Table 3), with point assignments corresponding with the level at which the child produced the target correctly. Correct productions at Level A cueing (i.e., the least amount of support) were scored as 4, Level B as 3, Level C as 2, and Level D as 1. If the child never produced the target accurately for a particular trial, this earned a score of 0. As with any graduated prompting hierarchy, prompting began with the least amount of support, with prompts terminated as soon as a participant produced a target correctly. For example, if a participant produced a target correctly at Level A, the child earned 4 points for this trial, and the examiner moved on to the next trial. Level A and B cueing were considered to be minimal support (no models of the target provided), Level C moderate support (spoken model provided), and Level D maximal support (spoken + aided model provided). All DA scoring procedures mirror those of King et al. (2015).
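The scoring rule for a single trial can be sketched as a walk through the cueing hierarchy that stops at the first correct production. This is an illustration only; the function name and the `is_correct_at` callback are hypothetical stand-ins for the examiner's judgment at each level.

```python
LEVELS = ["A", "B", "C", "D"]               # least to most support
POINTS = {"A": 4, "B": 3, "C": 2, "D": 1}   # point values from Table 3


def score_trial(is_correct_at):
    """Score one DA trial.

    is_correct_at: hypothetical callable that takes a level letter and
    returns True if the child produced the target correctly at that level.
    Prompting ends at the first correct production; a trial with no
    correct production at any level earns 0 points.
    """
    for level in LEVELS:
        if is_correct_at(level):
            return level, POINTS[level]
    return None, 0


# A child who first succeeds once a spoken model is given (Level C):
print(score_trial(lambda level: level in {"C", "D"}))  # ('C', 2)
```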

Probe Mastery Scoring

The percentage of correct productions for each probe set was calculated for each target. To evaluate Aim 3 (the correlation between DA and probe scores), speed of mastery for the probes for each target was assigned a point value. Mastery in the third baseline session (the fewest possible sessions, as mastery was defined as at least 80% accuracy across three consecutive sessions) earned a score of 1, mastery in the fourth baseline session a 2, and so on (see Table 4). Any target not mastered during baseline entered the intervention phase.
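The mastery rule and the point values in Table 4 can be sketched as follows. The function names are hypothetical, and the sketch assumes that the three consecutive sessions must fall within a single phase (baseline or intervention), which is inferred from Table 4 beginning at Intervention 3 rather than stated outright.

```python
# Point values from Table 4: Baseline 3-8 earn 1-6, Intervention 3-7 earn 7-11.
MASTERY_POINTS = {("baseline", s): s - 2 for s in range(3, 9)}
MASTERY_POINTS.update({("intervention", s): s + 4 for s in range(3, 8)})
NEVER_MASTERED = 12


def first_mastery_session(percent_correct, criterion=80, run=3):
    """Return the 1-based session that completes `run` consecutive
    sessions at or above `criterion`, or None if that never happens."""
    streak = 0
    for session, pct in enumerate(percent_correct, start=1):
        streak = streak + 1 if pct >= criterion else 0
        if streak == run:
            return session
    return None


def mastery_points(baseline, intervention):
    """Map probe accuracy (percent correct per session) to Table 4 points.

    Assumption: runs are counted within one phase only. Sessions beyond
    those listed in Table 4 are not handled and raise KeyError.
    """
    for phase, scores in (("baseline", baseline), ("intervention", intervention)):
        session = first_mastery_session(scores)
        if session is not None:
            return MASTERY_POINTS[(phase, session)]
    return NEVER_MASTERED


# Invented data: no mastery in baseline, mastery at the fourth intervention session.
print(mastery_points([0, 10, 0], [50, 80, 90, 80]))  # 8
```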

Table 4.

Probe mastery scoring for the intervention stage.

| Session in which intervention stage mastery was reached | Points assigned |
|---|---|
| Baseline 3 | 1 |
| Baseline 4 | 2 |
| Baseline 5 | 3 |
| Baseline 6 | 4 |
| Baseline 7 | 5 |
| Baseline 8 | 6 |
| Intervention 3 | 7 |
| Intervention 4 | 8 |
| Intervention 5 | 9 |
| Intervention 6 | 10 |
| Intervention 7 | 11 |
| Never | 12 |

Measures of Prompting Levels (Aim 1)

For DA, the mean prompting level for accurate productions was calculated for each target using the scoring system described previously: participant scores on the 10 DA trials for each target were averaged, resulting in a mean score for each DA session ranging from 0 to 4. In addition, the percentages of correct productions achieved at Levels A, B, C, and D were calculated as a measure of examiner effort.
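As an illustration of these two summary measures, the sketch below averages the 10 trial scores for one target and tabulates how often each cueing level was the one at which the child succeeded. The function name and the trial scores are invented, and the sketch assumes that percentages are taken over all trials, including trials never produced correctly.

```python
from collections import Counter

# Map point values back to the cueing level at which the child succeeded.
LEVEL_FOR_POINTS = {4: "A", 3: "B", 2: "C", 1: "D", 0: "never"}


def summarize_da(trial_scores):
    """Return (mean score, percentage of trials at each level) for one target."""
    n = len(trial_scores)
    mean_score = sum(trial_scores) / n
    counts = Counter(trial_scores)
    percentages = {
        label: 100 * counts[points] / n
        for points, label in LEVEL_FOR_POINTS.items()
    }
    return mean_score, percentages


# Invented scores for the 10 trials of one target.
mean_score, percentages = summarize_da([1, 1, 2, 1, 3, 4, 4, 3, 4, 4])
print(mean_score)    # 2.7
print(percentages)   # {'A': 40.0, 'B': 20.0, 'C': 10.0, 'D': 30.0, 'never': 0.0}
```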

Measures of Modifiability (Aim 2)

For each semantic-syntactic target during DA (with each child completing either three or four targets), modifiability was calculated by comparing the child's combined performance on the first five DA trials to the combined performance on the last five DA trials. The scoring procedures described above were used to determine performance. For example, for his first target, a participant could earn a combined score of 5 on the first five trials (e.g., child required Level D for each trial at 1 point each) and a combined score of 20 on the last five trials (i.e., child required Level A cueing at 4 points each). The Wilcoxon signed-ranks test (two-tailed, p < .05) was used to determine if differences existed between the first and second set of five trials. As the data are ordinal, assumptions cannot be made regarding the equality of the differences between each of the steps.
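The modifiability comparison can be sketched in two steps: sum the first five and last five trial scores for each case, then apply the Wilcoxon signed-ranks test to the paired sums. The code below is a sketch under stated assumptions, not the authors' analysis: the function names and data are invented, zero differences are dropped, tied absolute differences receive average ranks, and the two-tailed p value is computed exactly by enumerating all sign assignments, which is practical only for small samples (the source does not state which software or p value method was used).

```python
from itertools import product


def half_sums(trial_scores):
    """Combined score on the first five and last five of 10 DA trials."""
    return sum(trial_scores[:5]), sum(trial_scores[5:])


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def wilcoxon_signed_rank(first, second):
    """Return (statistic, exact two-tailed p) for paired samples."""
    diffs = [b - a for a, b in zip(first, second) if b != a]  # drop zeros
    ranks = average_ranks([abs(d) for d in diffs])
    total = sum(ranks)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    statistic = min(w_plus, total - w_plus)
    # Enumerate every assignment of signs to the ranks (2**n cases).
    extreme = 0
    for signs in product((0, 1), repeat=len(ranks)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if min(w, total - w) <= statistic:
            extreme += 1
    return statistic, extreme / 2 ** len(ranks)


# The worked example from the text: Level D on trials 1-5, Level A on trials 6-10.
print(half_sums([1] * 5 + [4] * 5))  # (5, 20)

# Invented first-half and second-half sums for six cases.
first = [5, 6, 8, 5, 10, 7]
second = [12, 14, 9, 13, 20, 16]
print(wilcoxon_signed_rank(first, second))  # (0, 0.03125)
```

With many more pairs, the enumeration becomes too slow, and a normal approximation or a statistics package would be the usual choice.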

Measures of Response to Intervention (Aim 3)

Response to intervention was addressed by comparing the participants' mean DA performance with their mean probe mastery score; scoring procedures for both conditions are described above. Correlations were calculated to compare the mean DA score and mean probe mastery score for each target using Spearman's rho. Because the data are not independent, correlations were calculated for each target separately.
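Spearman's rho for one target can be sketched as the Pearson correlation of the two sets of ranks, with tied values sharing an average rank. The function names and data below are invented for illustration. Note that, under the scoring described above, higher DA scores mean less support was needed and lower mastery points mean faster mastery, so DA performance that predicts faster mastery would appear as a negative rho.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in rx))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in ry))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (sd_x * sd_y)


# Invented data for one target: mean DA score and probe mastery points per child.
da_scores = [3.0, 2.0, 1.5, 0.5, 3.6]
mastery_points = [2, 7, 9, 12, 1]
print(spearman_rho(da_scores, mastery_points))  # close to -1: a perfect inverse ranking
```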

Fidelity and Reliability Measures

Fidelity Measures

Three trained research assistants (undergraduate and graduate students in speech and hearing sciences) compared the examiners' behaviors against fidelity standards by viewing video recordings of the sessions. Coders were masked to the purposes of the study, order of the tasks (i.e., that DA took place before baseline and intervention), and order of each session. For each child, fidelity measures were calculated for one randomly selected DA session out of a possible three or four sessions (i.e., 25% or 33% for each child; M = 27%). For the probe and play-based intervention sessions, the coders examined at least 20% of the randomly selected sessions for each target for each child (M = 32%; range = 26%–36%). The examiners' behaviors were judged on adherence to DA, probe, and intervention protocols. Across participants, the mean DA fidelity per participant ranged from 89% to 100%, the mean probe fidelity ranged from 88% to 99%, and the mean intervention fidelity ranged from 93% to 100%.

Data Reliability

To establish interrater reliability of the dependent measures, masked research assistants collected DA and probe data by viewing the videotaped sessions. The same sessions used to calculate fidelity were used for data reliability. Interrater agreement was calculated using Cohen's kappa and was 1.0 for DA data and 0.95 (range = 0.82–1.0) for the probe data, indicating nearly perfect reliability for the scoring of the dependent measures.
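For reference, unweighted Cohen's kappa compares the observed agreement between two raters with the agreement expected by chance from each rater's category frequencies. The sketch below uses invented ratings and a hypothetical function name; whether the authors used an unweighted or weighted variant is not stated, so the unweighted form is an assumption.

```python
from collections import Counter


def cohens_kappa(rater1, rater2):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("ratings must be two non-empty lists of equal length")
    n = len(rater1)
    observed = sum(a == b for a, b in zip(rater1, rater2)) / n
    counts1, counts2 = Counter(rater1), Counter(rater2)
    # Chance agreement: product of the raters' marginal proportions per category.
    expected = sum(counts1[c] * counts2[c] for c in counts1) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


# Invented DA trial scores (0-4) from two coders; they disagree on the last trial.
coder1 = [4, 4, 3, 2, 1, 0, 4, 1]
coder2 = [4, 4, 3, 2, 1, 0, 4, 2]
print(round(cohens_kappa(coder1, coder2), 3))  # 0.837
```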

Results

All 10 participants completed all DA and probe sessions, which resulted in data for a total of 37 cases (four targets each for seven participants, three targets each for three participants). Overall results revealed that during DA, 89% of the cases were produced correctly at least once at cueing Level A, B, C, or D (33 out of 37 cases). During the intervention stage, participants mastered 86% of the cases (32 out of 37).

Time Required to Complete DA

The average time for participants to complete each of the 37 DA sessions was 26 min (range = 9–64 min). The mean completion time was similar for AAO (29 min), entity-locative (29 min), and entity-attribute (31 min) but significantly shorter for possessor-entity (16 min). Examining completion time for the different participants, the mean DA session completion time per participant ranged from 13 to 40 min (grand M = 26 min).

Measures of Prompting Levels (Aim 1)

Performance at Each Cueing Level During DA

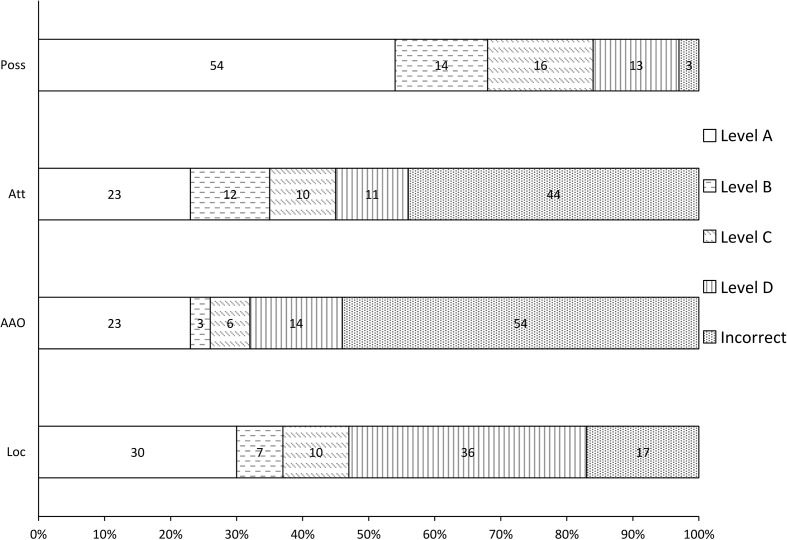

As shown in Figure 1, participants required varying levels of support across the four semantic-syntactic structures, with accurate productions noted for each target at each cueing level. Notably, participants produced numerous correct sentences at Level A cueing—that is, with simply an elicitation question or prompt—with over half of all productions at this level for the possessives. Participants successfully produced sentences at Level B (contrastive model) 3%–14% of the time across targets, Level C (spoken model) 6%–16% of the time, and Level D (spoken + AAC model) 11%–36% of the time. For the possessives, it was rare for children to not achieve success at some point, with only 3% of trials never produced correctly. A relatively low rate of incorrect productions also was noted for the locatives, at 17%. However, the attributes and AAO were more challenging, with participants failing to accurately produce nearly half of the attribute sentences (44%) and slightly over half of the AAO sentences (54%).

Figure 1.

Participants' performance at each cueing level during dynamic assessment. Poss = possessive-entity; Att = entity-attribute; AAO = agent-action-object; Loc = entity-locative.

Mean Level of Support During DA

As depicted in Table 5, the average level of support required for accurate sentence productions varied across semantic-syntactic structures. Participants required minimal support for the possessives (M = 3.0), moderate support for the locatives (2.0), and moderate-to-maximal support for the attributes (1.6) and AAO (1.3). Notably, the vast majority of the children produced each target correctly at least once during DA (89% of the targets). There were four exceptions: Children G and O for AAO, and Children I and P for entity-attribute.

Table 5.

Intervention mastery scores, dynamic assessment (DA) scores, and Spearman's rho correlations for all four targets.

| Target | Mean intervention mastery score | Total DA: mean DA score | Total DA: r² | Total DA: p value | Second half DA: mean DA score | Second half DA: r² | Second half DA: p value |
|---|---|---|---|---|---|---|---|
| AAO | 8.3 | 1.3 | .58 | .01* | 1.6 | .48 | .03* |
| Attributes | 8.1 | 1.6 | .56 | .01* | 2.0 | .46 | .03* |
| Locatives | 7.3 | 2.0 | .53 | .06 | 2.5 | .59 | .04* |
| Possessors | 4.1 | 3.0 | .09 | .93 | 3.0 | .02 | .70 |

Note. AAO = agent-action-object.

*Significant at p ≤ .05.

Modifiability (Aim 2)

Each participant's performance on the first five trials of a DA session for a given target was compared with their performance on the last five trials of that same session. Results of the Wilcoxon signed-ranks test were significant (p = .001), indicating that the participants demonstrated modifiability of performance within this brief learning experience. DA scores were lower for the second five trials in only four out of 36 cases (with one additional data point missing).
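The first-half versus second-half comparison can be sketched as a Wilcoxon signed-ranks test on paired scores. The version below is an illustrative implementation that uses a normal approximation with a tie correction and no continuity correction. Whether the authors used an exact or an approximate p value is not stated here, and the paired scores shown are hypothetical.

```python
import math

def average_ranks(values):
    """Rank values from 1..n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def wilcoxon_signed_rank(first_half, second_half):
    """Return (w_plus, w_minus, z, two_sided_p) for paired scores."""
    # Zero differences carry no sign information and are dropped
    diffs = [b - a for a, b in zip(first_half, second_half) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean_w = n * (n + 1) / 4
    # Tie correction: subtract (t^3 - t) / 48 for each group of t tied ranks
    tie_sizes = {}
    for r in ranks:
        tie_sizes[r] = tie_sizes.get(r, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_sizes.values()) / 48
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal approximation
    return w_plus, w_minus, z, p

# Hypothetical mean scores for the first and last five trials of six cases
first_five = [1.0, 2.0, 1.5, 0.5, 2.5, 1.0]
last_five = [2.0, 3.0, 1.5, 1.0, 2.0, 2.5]
w_plus, w_minus, z, p = wilcoxon_signed_rank(first_five, last_five)
```

A large `w_plus` relative to `w_minus` indicates that most cases scored higher in the second half, which is the pattern the modifiability measure is designed to detect.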

Response to Intervention After DA (Aim 3)

Response to intervention was assessed by comparing the mean level of support required for accurate productions during DA to mastery scores on the probes (see Table 4 for probe scoring). Significant, moderate correlations were found for entity-attribute and AAO, and a moderate but nonsignificant correlation was found for the locatives (see Table 5). A weak, nonsignificant correlation was found for possessor-entity. Notably, only seven data points were used for the locative analysis, as this target was not administered for three participants. For the remaining three targets, 10 data points (one for each participant) were used per target. A post hoc analysis was completed to compare means for the second half of the DA scores with mastery scores on the probes, as the latter DA trials may have been more representative of the children's true readiness to exhibit changes in the intervention stage. Significant, moderate correlations were found again for the attributes and AAO, and also for locatives (p = .04), with nonsignificant results again for the possessors. Mean DA scores were highest for the possessors, followed by locatives, attributes, and AAO. Mean mastery scores (with lower scores indicating faster mastery) were in the same order: the quickest mastery for possessors, followed by locatives, attributes, and AAO.

An additional descriptive analysis revealed a particular pattern of interest. Out of the 37 total cases, participants failed to achieve mastery during intervention in only five cases (i.e., probe mastery scores of 12): Children I, K, and O did not master AAO, and Child P (the youngest participant) did not master AAO or entity-attribute. The DA scores for these targets were 0.1, 0.2, 0.0, 0.0, and 0.4, respectively, indicating that the children produced few-to-no correct productions at any cueing level. These scores constituted five of the seven lowest DA scores (out of 37 total). For the remaining two lowest DA scores, Children I and O earned DA scores of 0.0 and 0.3 and probe mastery scores of 10 and 8, respectively, on the attribute targets.

Discussion

The results of this study, taken as a whole, indicate that investigating DA applications for children who require AAC is a worthwhile pursuit. The 3- and 4-year-old children in the current study benefited from use of various cues as they progressed toward accurate production of semantic-syntactic targets and demonstrated modifiability within individual DA sessions. Further, DA results were significantly correlated with performance in a subsequent AAC intervention for two of four targets, with a third trending toward significance. A discussion of the findings for each research aim follows as well as directions for future research.

Time Required to Complete DA

The time it took the 3- and 4-year-olds in the current study to complete each 10-trial DA session varied widely, from 9 to 64 min per session. In a similar manner, mean completion times per participant (across targets) ranged from 13 to 40 min. Given the young age of the children, such variability may be expected; however, the children in the King et al. (2015) study demonstrated less variability in completion time and also took less time overall on a similar DA task, with mean completion times approximately 40% shorter. Two factors likely contributed to this finding. First, the four participants from King et al. were slightly older at age 5 years (with otherwise similar profiles), which may have made the task easier for numerous reasons (more sustained attention, etc.). Second, and likely related, these 5-year-olds required fewer cues to produce the sentences accurately, with most achieving success at Level A or B cueing; providing fewer cues takes less time. In essence, the lesson learned from these data is to expect DA to take a little more time when working with younger children and when targets present a challenge.

Cueing Levels and Mean Level of Support (Aim 1)

Each of the four cueing levels yielded accurate participant productions of the targets, although levels were not equally distributed. Level A (elicitation question or prompt) was the most common for three of the four targets, with Level D resulting in the most success for the locatives. Levels B (comparison model), C (spoken model), and D (spoken + aided model) resulted in correct productions approximately equally for the possessive and attribute constructions, with more variability noted for the locatives and AAO. As was the case in the King et al. (2015) study, the possessors required the least amount of cueing, as evidenced in Figure 1 and Table 5. This finding is not surprising, given that this was the only two-term target in the current study and also is one of the earliest developing multiterm semantic-syntactic constructions (Leonard, 1976). Overall, children in the present study exhibited relatively high rates of incorrect productions at all cueing levels compared with the participants from King et al. In the latter study, as noted above, most children produced most sentences correctly with Level A and B cueing, and the younger age of the current participants likely contributed to this difference. In fact, this was a major reason for exploring the aided AAC productions of semantic-syntactic targets with younger children in the present investigation.

One point of speculation involves comprehension pretesting. First, the fact that the same vocabulary words were used for both comprehension testing of the targets (which occurred before DA) and DA may have contributed to the children's success during DA. On a related note, three of the 10 participants did not pass the comprehension pretest for locatives (and therefore did not complete this target), whereas all 10 children demonstrated high levels of comprehension for the remaining three targets. We therefore expected the locatives to present challenges for the remaining children. However, participants required less cueing during DA to produce locatives (M DA score = 2.0) than attributes (1.6) and AAO structures (1.3). Further, relatively high failure rates during DA (that is, no correct productions at any cueing level) were noted for attributes at 44% and AAO at 54%, compared with only 17% for the locatives. Although no conclusions can be drawn on the basis of these data, caution may be warranted when deciding whether to include or exclude a particular structure in intervention on the basis of comprehension levels alone. For example, it is not known how the three children who failed the locative comprehension pretest would have performed during DA and intervention, but it is possible that DA might have proven to be a better way to select targets than comprehension pretesting.

Overall, these findings highlight the need to carefully select early linguistic targets for DA and intervention. No two targets appear to be created equal when children are first learning how to produce early sentences using graphic symbols (e.g., possessives vs. locatives vs. AAO), just as they are not the same for children who rely on speech to communicate (Leonard, 1976).

Modifiability and Response to Intervention (Aims 2 and 3)

The modifiability measure was selected to examine changes within each DA session by comparing the first five versus the last five trials for a given sentence type. The participants' performance changed significantly during DA sessions—that is, the children needed fewer cues to produce the sentences correctly in the second half of the brief, graduated prompting–based DA sessions. This encouraging finding expands on the initial work of King et al. (2015), who found a positive trend for modifiability in their study of four 5-year-olds with severe speech disorders. In their study of bilingual preschoolers, Patterson et al. (2013) argued that, given appropriate tasks and levels of difficulty, children who are typically developing should be expected to evidence change within a brief DA task, but children with true underlying language disorders may not. In a similar manner, in the current study, children ready to produce graphic symbol-based sentences would be expected to demonstrate change within a brief DA learning session, with more limited success for children who are not. Such a tool would make a crucial contribution to the AAC field, in part because language expectations for children who use AAC tend to be set extremely low (e.g., Hustad, Keppner, Schanz, & Berg, 2008). Being able to show, relatively quickly, that a child can produce two- and three-word sentences—and having a degree of confidence in the results—may help begin to alleviate this problem and provide children with appropriate communication solutions earlier in life.

The results from Aim 3 provide some initial support toward this goal—that is, DA performance was a moderate predictor of response to the intervention for three of the four targets, with ceiling effects affecting the fourth (possessors). The results in Table 5 further demonstrate the relationship between DA and intervention stage performance: When participants required fewer cues to accurately produce the targets (i.e., higher DA scores), they required fewer sessions to master the targets in the intervention stage (i.e., lower mastery scores). Thus, using DA scores to predict response to intervention—instead of the more common approach of using DA scores to select targets—allowed for this systematic examination of the data across all targets and participants, with the correlational data indicating that DA may be used successfully as a key component of intervention planning. However, several factors may have weakened the correlations and should be addressed, as possible, in future research. First, modifiability results clearly indicated that learning occurred during DA. Therefore, a post hoc analysis in which the latter half of the DA scores were used to predict intervention performance was completed, with these scores better predicting intervention performance than the full DA scores. This type of analysis should be part of the initial planning in future research. Correlations also may have been weakened by choices regarding setup of DA, video probes, and intervention. For example, the use of voice output as well as the use of communication displays with color-coded grids may have facilitated the children's productions of the targets. 
However, although correlations might improve by eliminating voice output or using randomized placement of graphic symbols, it could be argued that if DA research is to be clinically relevant, then conditions during DA research should strive to maximize the child's chances for success and institute ideal clinical conditions as much as possible. In other words, if the goal is to determine when a child is ready to learn a new skill, then the conditions to promote use of that skill should be used during DA.

One additional descriptive analysis also is of direct clinical interest. The seven lowest DA scores ranged from 0.4 to 0.0, indicating little to no success in DA. For example, Child P's score of 0.4 for AAO reflected eight trials with no correct productions at any of the four cueing levels, and two correct productions at Level C (spoken model) cueing. For five of the seven cases falling within this range during DA, mastery was never achieved during intervention. In contrast, every case earning a DA score above 0.4 achieved mastery for that target at some point in the intervention stage. In clinical terms, this may indicate that the exact cueing profile evidenced during DA may be less important than merely observing the child produce the target multiple times at any cueing level. It is clear that more work with a greater number of participants is required to substantiate this hypothesis, but these initial data indicate that this is a promising line of inquiry.
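The arithmetic behind the Child P example can be made concrete with a short sketch. The point values below are an assumption: two Level C successes in 10 trials yielding 0.4 implies that Level C is worth 2 points, and the remaining values (A = 4, B = 3, D = 1, no correct production = 0) are inferred by extension rather than quoted from the scoring procedure. The function and variable names are hypothetical.

```python
# Assumed point values per cueing level. Only Level C = 2 follows directly
# from the Child P example; the other values are inferred, not quoted.
CUE_POINTS = {"A": 4, "B": 3, "C": 2, "D": 1, None: 0}

def mean_da_score(trial_levels):
    """Mean DA score across trials; None marks a trial never produced correctly."""
    if not trial_levels:
        raise ValueError("at least one trial is required")
    return sum(CUE_POINTS[level] for level in trial_levels) / len(trial_levels)

def correct_trials(trial_levels):
    """Number of trials produced correctly at any cueing level."""
    return sum(level is not None for level in trial_levels)

# Child P, AAO: eight trials with no correct production and two trials
# produced correctly at Level C (spoken model).
child_p_aao = [None] * 8 + ["C", "C"]
score = mean_da_score(child_p_aao)
successes = correct_trials(child_p_aao)
```

Counting correct trials separately from the mean score reflects the descriptive observation above, in which the number of successful productions at any level may matter more than the exact cueing profile.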

Clinical Implications

For this initial study involving relatively few participants, clinical implications must be interpreted with caution, but several findings are of note. First, looking broadly at the data, the fact that the vast majority (89%) of these 3- and 4-year-old children with profound speech disorders produced a series of rule-based, semantic-syntactic targets correctly at least once during DA is impressive. In a similar manner, of the 37 cases attempted during the intervention stage (i.e., three or four targets for each of the 10 participants), the preschoolers achieved mastery for 32 (86%). Given previous documentation of the challenges of teaching preschoolers to produce multiword graphic symbol utterances (e.g., Sutton et al., 2010), these results are encouraging and indicate the need to build a body of research to validate effective, efficient intervention techniques for this population.

Second, the findings lend preliminary support for using DA to evaluate the readiness of children who require AAC to begin producing multiterm utterances using graphic symbols. Although a growing body of work supports building early syntax skills with this population (Binger et al., 2008; Binger, Kent-Walsh, Ewing, & Taylor, 2010; Binger & Light, 2007; Kent-Walsh et al., 2015), the current investigation moves this work forward in two primary ways: First, using DA may enable clinicians to improve their ability to predict when children are ready to focus on early syntax when using AAC. Second, focusing on a range of targets may help determine which particular linguistic structures may be viable at a given point in time. For example, some of the past AAC research in this area has focused on a single type of structure for young children—reversible AAO structures (Sutton & Morford, 2008; Sutton et al., 2010). Findings from the current investigation indicate that this structure may be particularly challenging for children compared with other early developing structures. Therefore, a broader range of targets must be investigated before concluding that a child is not capable of creating rule-based utterances when using graphic symbols to communicate.

Third, the fact that the majority of children who performed relatively poorly in DA (that is, those who required maximal cueing for numerous trials) ultimately were successful in the intervention stage requires careful consideration. The play-based AAC intervention provided in the current study consisted of a maximum of ten 30-min intervention sessions per target, or 5 hr of concerted intervention time. Even when children produced relatively few correct productions for a particular sentence type during DA, many still demonstrated improvement in intervention within a relatively short period of time. At this point, then, demonstrating the ability to produce even a small number of targets during DA, even with high levels of cueing support, appears to be a useful predictor of the child's ability to learn the skill.

Directions for Future Research

AAC applications with DA are in their infancy, and therefore any number of valid directions may be taken for future work. First, there is an obvious need to conduct studies involving a greater number of participants. In particular, including groups of children with more varied profiles—for example, examining the same targets for children with various language comprehension levels or with autism—is an initial way to forward this line of research. Examination of additional language goals, such as early symbolic communication, narrative skills, or more advanced morphosyntactic structures, also is needed. The work of language researchers who have investigated these areas for children who rely on speech can assist with initial developments, with modifications specific to AAC made as needed.

In addition, DA applications may be useful for additional skills that are unique to AAC, such as determining readiness for particular scanning techniques, selection of particular symbol sets, and determining layout and organization for aided AAC overlays. DA also may prove useful when it is unclear what AAC instruction, if any, has been provided previously. However, DA applications must be carefully considered and not applied too broadly. For example, even though a child may not, in the span of a single DA session, demonstrate the ability to use a computerized dynamic display, this does not mean that a child should then only be given static displays to use for a lengthy period of time. DA, by its very nature, can be used not only to evaluate but also to re-evaluate, with no limits on the frequency of retesting. Again, note that the children mastered some targets during the intervention stage even when they earned relatively low DA scores. Further research is required to document procedures to clearly differentiate those who truly are and are not ready to begin working on a particular skill.

On a final note, when planning future studies, researchers should take the possible effects of the timing of DA into consideration. For example, as discussed at some length in the companion article (Binger et al., 2017), the fact that the children completed DA prior to the baseline phase likely affected baseline performance for some targets for some children; that is, the children likely learned how to produce some of the targets during the DA sessions, which led to unstable baselines. Although such learning is highly promising from a clinical perspective, future research must carefully consider the timing of DA sessions, pretesting/baseline measures, and posttesting/intervention measures to both preserve experimental control and assist with gaining a more complete understanding of how much learning is truly occurring during DA.

Summary

In summary, findings from this investigation contribute to an emerging body of literature showing promise for use of DA and implementation of interventions focused on rule-based linguistic structures with young children using aided AAC. Findings of particular note include the potential modifiability and predictive benefits of DA for language intervention with children requiring AAC, and the effectiveness of providing direct intervention following completion of DA tasks to target the production of a range of semantic-syntactic structures with these children.

Acknowledgments

This research was supported by the National Institutes of Health Grant 1R03DC011610, awarded to Cathy Binger. Preliminary results were presented at the 2014 Annual Convention of the American Speech-Language-Hearing Association in Orlando, FL, and the Annual Convention of the Texas Speech-Language-Hearing Association in San Antonio, TX. The authors thank University of New Mexico AAC lab students Esther Babej, Nathan Renley, Aimee Bustos, Bryan Ho, Lindsay Mansfield, and Eliza Webb for their assistance; Barbara Rodriguez for inspiring this work; the Albuquerque Public School Assistive Technology team for assistance with locating participants; and the children and families who participated in this study. Thanks also to AssistiveWare for the donation of Proloquo2Go software.

Footnotes

The Apple iPad is a line of tablet computers designed and marketed by Apple Inc. More information about the Apple iPad can be found at http://www.apple.com/ipad.

Proloquo2Go is a product from AssistiveWare and is an AAC software application developed for iPad, iPhone, and iPod touch. More information can be found at http://www.assistiveware.com/product/proloquo2go.

Acapela Group is a company that develops text-to-speech software and services. More information can be found at http://www.acapela-group.com/.

Mickey Mouse Clubhouse is an American animated television series that premiered on Disney Channel in 2006. More information can be found at http://disneyjunior.com/mickey-mouse-clubhouse.

References

- Bain B., & Olswang L. (1995). Examining readiness for learning two-word utterances by children with specific expressive language impairment: Dynamic assessment validation. American Journal of Speech-Language Pathology, 4, 81–91.

- Barker R. M., Bridges M. S., & Saunders K. J. (2014). Validity of a non-speech dynamic assessment of phonemic awareness via the alphabetic principle. Augmentative and Alternative Communication, 30, 71–82. https://doi.org/10.3109/07434618.2014.880190

- Binger C., Kent-Walsh J., Berens J., Del Campo S., & Rivera D. (2008). Teaching Latino parents to support the multi-symbol message productions of their children who require AAC. Augmentative and Alternative Communication, 24, 323–338. https://doi.org/10.1080/07434610802130978

- Binger C., Kent-Walsh J., Ewing C., & Taylor S. (2010). Teaching educational assistants to facilitate the multisymbol message productions of young students who require augmentative and alternative communication. American Journal of Speech-Language Pathology, 19, 108–120. https://doi.org/10.1044/1058-0360(2009/09-0015)

- Binger C., Kent-Walsh J., King M., & Mansfield L. (2017). Early sentence productions of 3-and 4-year-old children who use augmentative and alternative communication. Journal of Speech, Language, and Hearing Research. Advance online publication. https://doi.org/10.1044/2017_JSLHR-L-15-0408

- Binger C., & Light J. (2007). The effect of aided AAC modeling on the expression of multi-symbol messages by preschoolers who use AAC. Augmentative and Alternative Communication, 23, 30–43. https://doi.org/10.1080/07434610600807470

- Binger C., Ragsdale J., & Bustos A. (2016). Language sampling for preschoolers with severe speech impairments. American Journal of Speech-Language Pathology, 25, 493–507. https://doi.org/10.1044/2016_AJSLP-15-0100

- Boers E., Janssen M. J., Minnaert A. E., & Ruijssenaars W. (2013). The application of dynamic assessment in people communicating at a prelinguistic level: A descriptive review of the literature. International Journal of Disability, Development and Education, 60, 119–145. https://doi.org/10.1080/1034912X.2013.786564

- Camarata S. M., Nelson K. E., & Camarata M. N. (1994). Comparison of conversational-recasting and imitative procedures for training grammatical structures in children with specific language impairment. Journal of Speech and Hearing Research, 37, 1414–1423.

- Carrow-Woolfolk E. (1999). Test for Auditory Comprehension of Language–Third Edition. Austin, TX: Pro-Ed.

- Courtright J. A., & Courtright I. C. (1979). Imitative modeling as a language intervention strategy: The effects of two mediating variables. Journal of Speech and Hearing Disorders, 22, 389–402.

- Dowden P. (1997). Augmentative and alternative communication decision making for children with severely unintelligible speech. Augmentative and Alternative Communication, 13, 48–59.

- Dunn L. M., & Dunn D. M. (2006). Peabody Picture Vocabulary Test–Fourth Edition. San Antonio, TX: Pearson.

- Fenson L., Marchman V. A., Thal D. J., Dale P. S., Reznick S. J., & Bates E. (2007). MacArthur-Bates Communicative Development Inventories–Second Edition. Baltimore, MD: Brookes.

- Hustad K. C., Keppner K., Schanz A., & Berg A. (2008). Augmentative and alternative communication for preschool children: Intervention goals and use of technology. Seminars in Speech and Language, 29, 83–91. https://doi.org/10.1055/s-2008-1080754

- Kent-Walsh J., Binger C., & Buchanan C. (2015). Teaching children who use augmentative and alternative communication to ask inverted yes/no questions using aided modeling. American Journal of Speech-Language Pathology, 24, 222–236. https://doi.org/10.1044/2015_AJSLP-14-0066

- King M., Binger C., & Kent-Walsh J. (2015). Using dynamic assessment to evaluate the expressive syntax of children who use augmentative and alternative communication. Augmentative and Alternative Communication, 31, 1–14. https://doi.org/10.3109/07434618.2014.995779

- Leonard L. B. (1976). Meaning in child language: Issues in the study of early semantic development. New York, NY: Grune and Stratton.

- McDonald E., & Schultz A. (1973). Communication boards for cerebral palsied students. Journal of Speech and Hearing Disorders, 38, 73–88.

- Miller J. F., & Paul R. (1995). The clinical assessment of language comprehension. Baltimore, MD: Brookes.

- Mullen E. M. (1995). Mullen Scales of Early Learning. San Antonio, TX: Pearson.

- Nigam R. (2001). Dynamic assessment of graphic symbol combinations by children with autism. Focus on Autism and Other Developmental Disabilities, 16, 190–191.

- Olswang L. B., & Bain B. A. (1996). Assessment information for predicting upcoming change in language production. Journal of Speech and Hearing Research, 39, 414–423.

- Olswang L. B., Feuerstein J. L., Pinder G. L., & Dowden P. (2013). Validating dynamic assessment of triadic gaze for young children with severe disabilities. American Journal of Speech-Language Pathology, 22, 449–462. https://doi.org/10.1044/1058-0360(2012/12-0013)

- Patterson J. L., Rodriguez B. L., & Dale P. S. (2013). Response to dynamic language tasks among typically developing Latino preschool children with bilingual experience. American Journal of Speech-Language Pathology, 22, 103–112. https://doi.org/10.1044/1058-0360(2012/11-0129)

- Peña E. D., Gillam R. B., Malek M., Ruiz-Felter R., Resendiz M., Fiestas C., & Sabel T. (2006). Dynamic assessment of school-age children's narrative ability: An experimental investigation of classification accuracy. Journal of Speech, Language, and Hearing Research, 49, 1037–1057.

- Peña E. D., Iglesias A., & Lidz C. S. (2001). Reducing test bias through dynamic assessment of children's word learning ability. American Journal of Speech-Language Pathology, 10, 138–154.

- Petersen D. B., & Gillam R. B. (2013). Predicting reading ability for bilingual Latino children using dynamic assessment. Journal of Learning Disabilities, 48, 3–21. https://doi.org/10.1177/0022219413486930

- Rogoff B. (1990). Apprenticeship in thinking: Cognitive development in social context. New York, NY: Oxford University Press.

- Roid G. H., & Miller L. J. (1997). Leiter International Performance Scale–Revised. Wood Dale, IL: Stoelting.

- Romski M., Sevcik R. A., Adamson L. B., Cheslock M., Smith A., Barker R. M., & Bakeman R. (2010). Randomized comparison of augmented and nonaugmented language interventions for toddlers with developmental delays and their parents. Journal of Speech, Language, and Hearing Research, 53, 350–365.

- Schlosser R. W., & Lloyd L. L. (1997). Effects of paired-associate learning versus symbol explanations on Blissymbol comprehension and production. Augmentative and Alternative Communication, 13, 226–238.

- Soto G., Solomon-Rice P., & Caputo M. (2009). Enhancing the personal narrative skills of elementary school-aged students who use AAC: The effectiveness of personal narrative intervention. Journal of Communication Disorders, 42, 43–57. https://doi.org/10.1016/j.jcomdis.2008.08.001

- Sparrow S. S., Cicchetti D. V., Domenic V., & Balla D. A. (2005). Vineland Adaptive Behavior Scales–Second Edition. San Antonio, TX: Pearson.

- Sutton A., & Morford J. (2008). Constituent order in picture pointing sequences produced by speaking children using AAC. Applied Psycholinguistics, 19, 525–536. https://doi.org/10.1017/S0142716400010341

- Sutton A., Trudeau N., Morford J., Rios M., & Poirier M.-A. (2010). Preschool-aged children have difficulty constructing and interpreting simple utterances composed of graphic symbols. Journal of Child Language, 37, 1–26. https://doi.org/10.1017/S0305000909009477

- Tzuriel D. (2000). Dynamic assessment of young children: Educational and intervention perspectives. Educational Psychology Review, 12, 385–435. https://doi.org/10.1023/A:1009032414088

- Vygotsky L. S. (1978). Mind in society: The development of higher psychological processes. Cambridge, MA: Harvard University Press.

- Warren S. F., & Brady N. C. (2007). The role of maternal responsivity in the development of children with intellectual disabilities. Mental Retardation and Developmental Disabilities Research Reviews, 13, 330–338. https://doi.org/10.1002/mrdd.20177