Abstract

Introduction

Although substance use is common among probationers in the United States, low rates of treatment initiation remain a persistent problem. Proposed explanations include that probationers are insufficiently motivated to seek treatment and that probation staff lack the training and resources to use evidence-based strategies such as motivational interviewing (MI). A web-based intervention built on motivational enhancement principles may address some of these challenges but has not yet been tested in probation settings. The current study evaluated the cost-effectiveness of a computerized intervention, the Motivational Assessment Program to Initiate Treatment (MAPIT), relative to face-to-face MI and supervision as usual (SAU), delivered at the outset of probation.

Methods

The study took place in probation departments in two U.S. cities. The baseline sample comprised 316 participants (MAPIT=104, MI=103, and SAU=109), 90% (n=285) of whom completed the 6-month follow-up. Costs were estimated from study records and time logs kept by interventionists. The effectiveness outcome was self-reported initiation into any treatment (formal or informal) within 2 and 6 months of the baseline interview. The cost-effectiveness analysis assessed dominance and computed incremental cost-effectiveness ratios and cost-effectiveness acceptability curves. The base case used implementation costs only; it excluded startup costs and a hypothetical license fee to recoup development costs. An intent-to-treat approach was taken.

Results

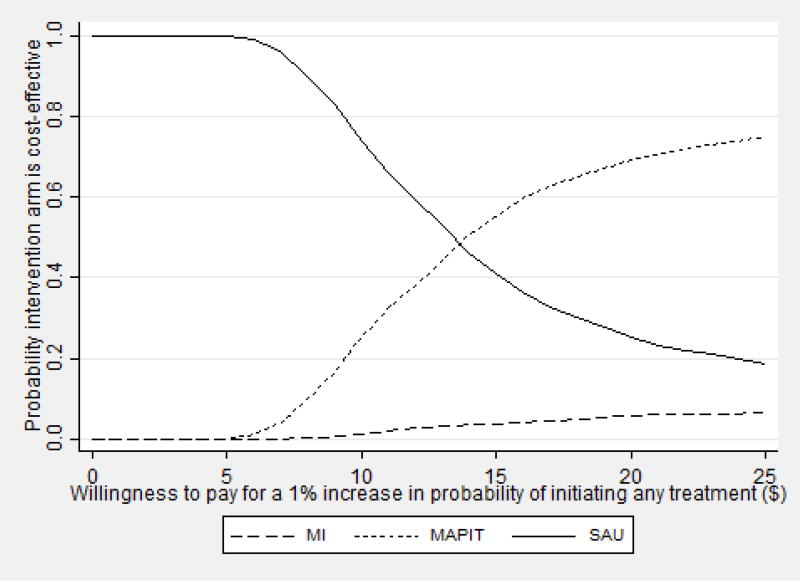

MAPIT cost $79.37 per participant, about $55 less than MI ($134.27 per participant). Appointment reminders accounted for a large proportion of the cost in both the MAPIT and MI arms. In the base case, relative to SAU, MAPIT cost $6.70 per percentage point increase in the probability of initiating treatment at the 6-month follow-up. If a decision-maker is willing to pay $15 or more to increase the probability of initiating treatment by one percentage point, the estimates suggest she can be 70% confident that MAPIT is good value relative to SAU at the 2-month follow-up and 90% confident at the 6-month follow-up.

Conclusions

Web-based MAPIT may be good value compared with in-person alternatives. This conclusion is qualified because the results are not robust to narrowing the outcome to initiation of formal treatment only. Further work should explore ways to improve access to efficacious treatment in probation settings.

Keywords: treatment initiation, substance use, computerized intervention, motivation, probation, cost-effectiveness

1. INTRODUCTION

In the United States, nearly 5 million adults are on probation or parole (Kaeble, Maruschak, & Bonczar, 2015), and a disproportionate number of them have a substance use disorder. In a nationally representative sample of male probationers aged 18 to 49, 45% needed substance use treatment in 2012, yet only 24% received it (SAMHSA, 2014).

Given the high prevalence of substance use among probationers and its adverse consequences, including revocation, rearrest, and incarceration, connecting substance-involved probationers with treatment is critical. However, because funding for probation is limited, the resources spent on reducing recidivism must be justified on fiscal grounds. Linking probationers to treatment is one key factor in reducing recidivism (Drake, 2011; Drake & Aos, 2012; Taxman, 2008), a concern made more pressing by the fact that 8% of probationers are incarcerated for a new offense or through revocation of the terms of probation (Kaeble et al., 2015).

Screening, Brief Intervention, and Referral to Treatment (SBIRT) is one approach to linking clients to treatment. Many SBIRT models include brief counseling of 1 to 4 sessions, and most draw on motivational interviewing (MI) principles designed to increase motivation and readiness for change. Such an approach has been shown to reduce unhealthy alcohol use in the general population in primary care settings (Moyer, 2013), and there is also evidence that MI can increase treatment initiation and compliance among probationers (McMurran, 2009). However, an MI-based intervention may be more difficult to deliver than general, unspecified counseling (Hall, Staiger, Simpson, Best, & Lubman, 2016). Moreover, it can be especially difficult for probation officers to implement an MI-based intervention, given large caseloads and limited training in behavioral health (Chadwick, Dawolf, & Serin, 2015; Taxman, Perdoni, & Caudy, 2013; Walters, Vader, Nguyen, Harris, & Eells, 2010).

Technology-based interventions have emerged as a potential means of addressing substance use in settings where specialized skills are otherwise absent. Several studies document the effectiveness of technology-based interventions at reducing substance use and related risk behaviors in primary care and specialty treatment settings (Marsch, Carroll, & Kiluk, 2014). However, there is relatively little research on technology-based approaches to addressing substance use issues in justice settings (Walters et al., 2014).

The current study is a cost-effectiveness analysis (CEA) of a multisite randomized controlled trial to test the effectiveness of three approaches to encourage substance-involved probationers to initiate treatment: Motivational Assessment Program to Initiate Treatment (MAPIT), a two-session motivational computer intervention; motivational interviewing (MI), a two-session counselor-delivered intervention; and supervision as usual (SAU). The main study found that, compared to SAU, both MAPIT and MI were associated with increases in treatment initiation at the 2-month follow-up, and the increase for MAPIT was statistically significant (OR=2.4, p=.037), whereas the increase for MI was not (OR=2.15, p=.07). At 6 months, MAPIT was associated with an increase in treatment initiation relative to SAU, but the increase fell short of standard levels of statistical significance (OR=1.84, p=.058). No independent effect of site was found (Lerch, Walters, Tang, & Taxman, 2017).

Few studies have assessed the cost-effectiveness of programs for substance-involved probationers, and to our knowledge no study has assessed the cost-effectiveness of a computer-based intervention in this population. However, studies demonstrate that providing traditional services and treatment to substance-involved offenders is cost-effective and cost-beneficial, particularly when the treatment is delivered in the community. For example, in-prison substance use treatment combined with community-based aftercare is particularly cost-effective (Griffith, Hiller, Knight, & Simpson, 1999; McCollister & French, 2003; McCollister, French, Prendergast, Hall, & Sacks, 2004; McCollister et al., 2003), and criminal justice diversion programs for substance-involved offenders have been shown to be cost-beneficial (Zarkin et al., 2005) and cost-effective (Cowell, Broner, & Dupont, 2004). In addition, the general treatment literature finds that web-based and telemedicine initiatives in health care delivery tend to be effective, low-cost, and potentially cost-effective (Barnett, Murphy, Colby, & Monti, 2007; Scott et al., 2007). The current study is the first to examine the cost-effectiveness of a web-based motivational intervention in a probation setting.

2. MATERIAL AND METHODS

2.1 Overview

The main study, including its sample, procedures, and outcomes, is described elsewhere (Taxman, Walters, Sloas, Lerch, & Rodriguez, 2015; Lerch, Walters, Tang, & Taxman, 2017). The current study applies CEA to better understand the resources needed to implement the interventions and the degree to which outcomes improve as intervention costs increase relative to SAU. Results rely on the joint distribution of outcomes and costs and are expressed as the additional cost of achieving a one-unit improvement in outcome under one intervention compared with the next best alternative (Drummond et al., 2015). CEA can also be used to determine which of several interventions is good value at a given level of a decision-maker's hypothetical willingness to pay for a given outcome (Glick, Doshi, Sonnad, & Polsky, 2015; Murphy et al., 2017).

Conducting a CEA requires decisions about the analytic perspective, the study period, and the appropriate outcome. As in other economic analyses, the perspective determines which costs to include and the appropriate measure of effectiveness. The current study adopts the perspective of the probation system because that system makes decisions about the interventions and would incur the costs of implementing them. Other costs, such as the value of participant time, are excluded because the probation system does not incur them. The analysis also excludes costs incurred solely for research purposes. All costs are presented in 2016 U.S. dollars. The outcome of interest is initiation of any treatment, measured at 2 and 6 months after the baseline assessment.

The current study assesses development, startup, and implementation costs. Development refers to creating an intervention; development costs may be recouped by charging a fee for its use. Startup refers to getting an intervention running; startup costs are incurred before the study period begins, do not depend on the number of probationers in the study, and typically are not included in cost-effectiveness estimates (Neumann, Sanders, Russell, Siegel, & Ganiats, 2016). Implementation costs are incurred after probationers are enrolled in the study, increase with the number of participants recruited, and are included in cost-effectiveness estimates.

2.2 Sample and Procedures

Participation in the study was voluntary and included substance-involved people who had recently started probation in Baltimore City, Maryland, or Dallas, Texas. Individuals were provided information on the study during the probation intake process. Those who expressed an interest were screened to determine whether they met the eligibility requirements of any drug use or heavy alcohol use during the past 90 days. Those who were eligible provided consent to participate in the study, were given a baseline assessment, and were randomized to one of the three treatment arms: MI, MAPIT, or SAU. More details on the study procedures are published elsewhere (Taxman, Walters, Sloas, Lerch, & Rodriguez, 2015).

The baseline sample consisted of 316 participants (MAPIT=104, MI=103, and SAU=109), 90% (n=285) of whom completed the 6-month follow-up. Participants randomized to MAPIT or MI were scheduled to receive two intervention sessions lasting roughly 45 minutes each. The first session typically took place on the day of randomization, and the second took place approximately 4 weeks later. MAPIT used theory-based algorithms and a text-to-speech engine to deliver personalized reflections, feedback, and suggestions. At the participant’s request, the program could send emails or text messages reminding participants of their goals. The two MAPIT sessions were self-paced; a research assistant was available to address any technical issues that arose. The development and content of MAPIT are described more fully elsewhere (Walters et al., 2014).* MI sessions were conducted one-on-one with a project counselor, and the MI and MAPIT sessions followed a similar structure. Participants received appointment reminders through a variety of methods, and staff made additional efforts to reach those who missed a scheduled appointment. The study co-Principal Investigator (co-PI) provided clinical supervision to the counselors delivering MI, reviewing session tapes and meeting with the counselors biweekly to provide feedback. MAPIT and MI were delivered in addition to SAU.

2.3 Data

Development, startup, and implementation costs require information on the labor, materials, and space used. Each of these depends on the quantity of resources required (e.g., number of staff hours) and the price per unit (e.g., hourly wage rate).

2.3.1 Development costs

Development costs apply solely to the MAPIT intervention. Although development costs were available from study records, the study charged no fee for using the MAPIT program; a sensitivity analysis, described below, includes a hypothetical fee. The cost of the software contractor who developed and implemented the MAPIT system covered components used for both the intervention and the research. Because software development costs could not be separated into these components, the full software development cost is presented here.

2.3.2 Startup costs

Startup costs are incurred to initiate programs and apply to MAPIT and MI. One of the two study PIs provided training and ongoing clinical supervision on MI to two counselors. The study purchased computers, printers, and licenses for web hosting, message texting, and the text-to-speech software, which were used for the MAPIT and MI interventions. Study records included staff time for training and the amount and cost of purchased items.

2.3.3 Implementation costs

Implementation costs include the value of resources used to support the intervention delivery in the current study. The implementation cost estimates are intended to approximate the value of resources needed to support the interventions if performed in the probation system outside of a research study context. Thus, the cost estimates include those components of the screening and assessment needed to support delivery of the interventions and the interventions themselves and exclude the costs associated with the research.

Implementation costs were mostly driven by the cost of labor used to support the interventions, so data collection focused on estimating the time for these activities. The estimated average screening time was based on all eligible participants and was adjusted to include only the relevant (non-research) questions that were necessary to support and deliver the interventions. The screening time estimate could not feasibly be linked to individual participants. The relevant (non-research) sections of the baseline assessment were timed for a separate subsample of 20% of all assessments; study resources did not permit timing for the entire sample.

Data on the time to deliver and support MAPIT and MI came from daily, structured logs of counselor and computer time spent delivering the interventions. Counselors recorded the total hours worked for the day; apportioned that time into time spent on the current study, research, and other purposes; and further apportioned the time on the current study into (1) preparing for, overseeing, and following up on MAPIT sessions; (2) preparing for, delivering, and following up on MI sessions; (3) scheduling participants for MAPIT or MI sessions; and (4) clinical supervision (MI only). The log included the number of participants who were involved in these activities.

Time spent supporting MAPIT, and time spent preparing for, delivering, and following up on MI sessions, could be linked to individual participants. The other activities, scheduling appointments and clinical supervision, could not be reliably linked to individual participants. Study staff typically scheduled a block of time for calls to participants and moved quickly from one call to the next, and clinical supervision was likewise performed in blocks of time that frequently addressed multiple study participants.

Data on the wages of research assistants and counselors came from study records. To estimate the cost per hour of clinical supervision, a typical salary for a clinical supervisor was used rather than the salary of the PI. The implementation of MI in a real-world setting would likely use an experienced supervisor to perform clinical supervision rather than a senior researcher. The salary and fringe were based on the going market rate for a clinical supervisor in the Dallas/Fort Worth metropolitan area, as determined through the direct experience of the study team, and were assumed to be $60,000 and 26%, respectively.
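To illustrate how an annual salary and fringe rate translate into a labor cost, the minimal sketch below assumes 2,080 paid hours per year (40 hours a week for 52 weeks); the study does not report the annual hours it used, and the function names are hypothetical. Because the clinical supervision cost in Table 2 also reflects counselor time, this rate is not expected to reproduce that figure.

```python
# Hypothetical sketch: turn an annual salary and fringe rate into a loaded
# hourly rate, then cost a block of staff time. ANNUAL_HOURS is an assumed
# value (40 h/week x 52 weeks); the study does not report the figure it used.
ANNUAL_HOURS = 2080


def loaded_hourly_rate(salary: float, fringe_rate: float,
                       annual_hours: float = ANNUAL_HOURS) -> float:
    """Salary plus fringe benefits, spread over the assumed paid hours per year."""
    return salary * (1 + fringe_rate) / annual_hours


def labor_cost(minutes: float, hourly_rate: float) -> float:
    """Cost of `minutes` of staff time at a loaded hourly rate."""
    return minutes / 60 * hourly_rate


# Clinical supervisor assumptions from the text: $60,000 salary, 26% fringe.
supervisor_rate = loaded_hourly_rate(60_000, 0.26)
print(f"Loaded supervisor rate: ${supervisor_rate:.2f}/hour")  # about $36.35/hour
print(f"Cost of 30 minutes: ${labor_cost(30, supervisor_rate):.2f}")
```

Other staff would be costed the same way, substituting their own wage and benefit rates.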

The amount of office space used in the MAPIT and MI conditions was assumed to be 30 square feet; previous evaluations have demonstrated that space costs tend to be relatively small, and so approximating the amount of allocated space has minimal impact on conclusions (e.g., Cowell, Dowd, Mills, Hinde, & Bray, 2017). Data on the value of space came from the office lease rates for Baltimore and Dallas (Newmark Knight Frank, 2013a, 2013b). The cost of the printed materials for the MI and MAPIT sessions was available via study records.

2.3.4 Effectiveness data

The primary effectiveness measure for the study was self-reported treatment initiation at two time points, 2 and 6 months after the baseline assessment, measured with the Timeline Follow-back, a calendar-based recall method that has been widely validated in substance use treatment trials (Sobell & Sobell, 1996). Treatment initiation was defined as 2 or more days of involvement in any treatment, including religious-based groups, detoxification, inpatient treatment, intensive outpatient treatment, medication, outpatient group sessions, outpatient individual sessions, residential treatment, and self-help groups specific to substance abuse.
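The initiation rule can be expressed as a small function. This is a sketch under stated assumptions, not the study's scoring code: the record layout (a calendar date mapped to the set of treatment activities reported that day), the activity labels, and counting only days strictly after baseline are all hypothetical choices.

```python
from datetime import date

# Hypothetical sketch of the initiation rule: 2 or more days of any listed
# treatment involvement within a follow-up window. The record format
# (date -> set of activity labels), the label names, and counting only days
# strictly after baseline are assumptions for illustration.
ANY_TREATMENT = {
    "religious_group", "detoxification", "inpatient", "intensive_outpatient",
    "medication", "outpatient_group", "outpatient_individual",
    "residential", "self_help",
}


def initiated_treatment(daily_records, baseline, window_days,
                        allowed=frozenset(ANY_TREATMENT), min_days=2):
    """Return True if at least `min_days` distinct days within `window_days`
    after `baseline` include at least one allowed treatment activity."""
    qualifying_days = 0
    for day, activities in daily_records.items():
        offset = (day - baseline).days
        if 0 < offset <= window_days and activities & allowed:
            qualifying_days += 1
    return qualifying_days >= min_days


# Toy record for one participant (not study data).
baseline = date(2016, 1, 4)
records = {
    date(2016, 1, 10): {"self_help"},
    date(2016, 1, 17): {"self_help"},
    date(2016, 3, 20): {"outpatient_group"},
}
print(initiated_treatment(records, baseline, window_days=60))   # True: 2 days in window
print(initiated_treatment(records, baseline, window_days=180,
                          allowed={"outpatient_group"}))         # False: only 1 day
```

Passing a narrower `allowed` set is one way a restricted outcome, such as the formal-treatment-only sensitivity analysis, could be scored; which activities count as formal would have to come from the study protocol.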

2.4 Analysis

2.4.1 Estimating costs

Development costs were taken directly from study records. Startup costs were estimated by multiplying the quantity of each resource by its unit cost; space costs were applied as appropriate, and the sum across all relevant resources was computed. Startup costs were allocated to the MAPIT and MI arms.

The implementation cost per participant was estimated by summing the following costs for each participant and then averaging that sum within each treatment arm: screening and assessment costs, oversight (MAPIT) or delivery costs, scheduling costs, and clinical supervision costs. To estimate these costs, the hours of each staff person were multiplied by the relevant wage (base wage plus benefits and employment taxes) and added to space and material costs.

The costs of delivering and supporting MAPIT and MI sessions were calculated at the session level and then linked to the outcomes of individual participants. For participants in the MAPIT and MI arms, the average cost of screening and assessment was calculated for the subsample by intervention arm and then applied to all participants in that arm. Average costs for appointment reminders and clinical supervision were computed across all participants, and the average for each activity was applied to all participants. Because average costs were applied to screening, assessment, appointment reminders, and clinical supervision, these activities cannot be differentiated at the individual level.
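A minimal sketch of this costing rule follows: each participant's linked delivery and support cost is combined with arm-level averages for the activities that could not be linked. The averaged MAPIT components are taken from Table 2, but the three delivery costs are hypothetical, and the simple mean used here stands in for the regression-predicted values reported in the table.

```python
from statistics import mean

# Sketch, assuming the costing rule described above: delivery/support cost is
# linked to each participant, while screening, assessment, appointment
# reminders, and (MI only) clinical supervision enter as one arm-level average
# per activity. Unlike Table 2, this takes a simple mean rather than
# regression-predicted values.


def per_participant_costs(linked_costs, averaged_components):
    """Add the shared (averaged) activity costs to each participant's linked cost."""
    shared = sum(averaged_components.values())
    return [linked + shared for linked in linked_costs]


# Averaged MAPIT components taken from Table 2 ($2016).
mapit_shared = {"screening": 1.18, "baseline_assessment": 2.18,
                "appointment_reminders": 51.89}
# Hypothetical linked delivery/support costs for three participants.
mapit_delivery = [20.12, 24.12, 28.12]

costs = per_participant_costs(mapit_delivery, mapit_shared)
print(f"Mean MAPIT cost per participant: ${mean(costs):.2f}")  # $79.37
```

For the MI arm, the averaged components would also include clinical supervision; SAU participants would all carry a cost of $0.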

The current study focuses on the incremental cost of the MI and MAPIT interventions above standard care. Because no additional services were provided to participants randomized to SAU, this arm was assigned a cost of $0. Implementation cost estimates followed the main study's intent-to-treat approach (Lerch, Walters, Tang, & Taxman, 2017): participants were retained in the arm to which they had been randomized, and their costs and effectiveness were included as observed, regardless of the activities and services they actually received. Also following the main study (Lerch, Walters, Tang, & Taxman, 2017), analyses adjusted for baseline imbalance across study arms in court-referred treatment and for differential follow-up with respect to stable housing and an Addiction Severity Index (ASI) employment/education composite score.

2.4.2 Effectiveness analysis

Following the main study (Lerch, Walters, Tang, & Taxman, 2017), effectiveness was measured as initiation of any treatment within 2 and within 6 months after baseline and was estimated using the same covariate-adjusted specification described above for costs.

2.4.3 Cost-effectiveness analysis

The CEA follows the accepted approach in the literature (Drummond et al., 2015; Glick et al., 2015; Neumann et al., 2016) and consists of two steps: calculating incremental cost-effectiveness ratios (ICERs) and deriving cost-effectiveness acceptability curves (CEACs), which rely on the joint statistical distribution of cost and outcome (Barton, Briggs, & Fenwick, 2008; Fenwick, Claxton, & Sculpher, 2001). The ICER expresses how much more would have to be paid to achieve a given improvement in treatment initiation when comparing two interventions. Only variable costs were included in the ICER; thus, the base-case cost estimates relied on implementation costs alone, not startup costs.
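For reference, the ICER comparing intervention $B$ with the next less costly option $A$ can be written as below. The worked values use the rounded 2-month means in Table 3 and only illustrate the arithmetic; because the reported ICERs use covariate-adjusted differences, rounded means need not reproduce every reported value exactly.

$$
\mathrm{ICER}_{B \text{ vs } A} = \frac{\Delta C}{\Delta E} = \frac{C_B - C_A}{E_B - E_A},
\qquad
\mathrm{ICER}^{\,2\text{ mo}}_{\text{MAPIT vs SAU}} = \frac{\$79.54 - \$0}{20.2 - 10.7} \approx \$8.37 \text{ per percentage point}
$$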

To compute the ICER, the interventions were ranked from lowest to highest cost, and the cost and effectiveness of each intervention were then estimated relative to the previous intervention in the ranking (e.g., the least expensive intervention was compared with the second least expensive intervention). Following Drummond et al. (2015) and Neumann et al. (2016), the analyses eliminated from consideration any intervention that was dominated. An intervention strictly dominates another if it is less expensive and more effective. A more expensive intervention dominates a less expensive one by extension if it is more effective and its ICER relative to the less expensive intervention is lower than the less expensive intervention's own ICER relative to the next cheaper alternative; the less expensive intervention is then excluded.
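The ranking and dominance rules can be sketched in Python as follows. This is an illustrative implementation of the textbook procedure, not the authors' code: it works on the rounded means in Table 3, omits the covariate adjustment behind the reported ICERs, and uses hypothetical class and function names. The two usage examples use the 2-month base case and the 6-month first sensitivity analysis (MAPIT cost plus the $60 fee).

```python
from dataclasses import dataclass

# Sketch of the ranking-and-dominance procedure, applied to rounded mean costs
# and effects. No covariate adjustment; names are illustrative.


@dataclass
class Option:
    name: str
    cost: float    # mean cost per participant ($2016)
    effect: float  # mean probability of initiating treatment (percentage points)


def icers(frontier):
    """ICER of each option relative to the previous (cheaper) option."""
    return [(b.cost - a.cost) / (b.effect - a.effect)
            for a, b in zip(frontier, frontier[1:])]


def cost_effectiveness_frontier(options):
    """Drop strictly and extendedly dominated options; return the remaining
    options (ascending cost) and their sequential ICERs."""
    frontier = []
    for opt in sorted(options, key=lambda o: o.cost):
        # Strict dominance: some cheaper option is at least as effective.
        if frontier and opt.effect <= max(o.effect for o in frontier):
            continue
        frontier.append(opt)
    # Extended dominance: if an option's ICER exceeds the ICER of the next,
    # more expensive option, drop it and recompute.
    while True:
        ratios = icers(frontier)
        worse = next((i for i in range(len(ratios) - 1)
                      if ratios[i] > ratios[i + 1]), None)
        if worse is None:
            return frontier, ratios
        del frontier[worse + 1]


# Base case, 2-month follow-up (Table 3).
two_month = [Option("SAU", 0.0, 10.7), Option("MAPIT", 79.54, 20.2),
             Option("MI", 133.16, 18.6)]
frontier, ratios = cost_effectiveness_frontier(two_month)
print([o.name for o in frontier], [round(r, 2) for r in ratios])
# ['SAU', 'MAPIT'] [8.37]

# First sensitivity analysis, 6-month follow-up: $60 fee added to MAPIT.
with_fee = [Option("SAU", 0.0, 25.0), Option("MI", 134.27, 28.6),
            Option("MAPIT", 79.37 + 60, 37.2)]
frontier, ratios = cost_effectiveness_frontier(with_fee)
print([o.name for o in frontier], [round(r, 2) for r in ratios])
# ['SAU', 'MAPIT'] [11.42]
```

In the second example, the sequential ICERs before MI is removed are about $37.30 (SAU to MI) and $0.59 (MI to MAPIT); because the ratio falls as cost rises, MI is removed by extended dominance.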

CEACs characterize the sampling uncertainty around the ICER using the joint distribution of cost and outcome. A CEAC plots the probability that an intervention is good value relative to the alternative interventions at each willingness to pay for a unit change in the effectiveness outcome (Barton et al., 2008; Fenwick et al., 2001; Glick et al., 2015). Because the CEAC accounts simultaneously for variation in both cost and effectiveness, results may suggest that an intervention is good value even when the associated improvement in an outcome is not statistically significant (Glick et al., 2015).
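One common way to build a two-option CEAC, such as MAPIT versus SAU, is to bootstrap the mean differences in cost and effect and, at each willingness to pay, count the share of replicates with positive net monetary benefit (willingness to pay times the effect difference, minus the cost difference). The sketch below assumes simple resampling within each arm without covariate adjustment; the paper does not describe its exact procedure, and the data are toy values rather than study data.

```python
import random
from statistics import mean

# Sketch of a two-option CEAC built from bootstrap replicates via net monetary
# benefit: NMB = wtp * dE - dC. The resampling scheme and the absence of
# covariate adjustment are assumptions, not the paper's documented procedure.


def bootstrap_deltas(treated, control, n_reps=1000, seed=0):
    """Resample each arm with replacement. Each arm is a list of
    (cost, initiated) pairs, with initiated coded 0/1. Returns lists of
    mean differences in cost ($) and initiation (percentage points)."""
    rng = random.Random(seed)
    d_costs, d_effects = [], []
    for _ in range(n_reps):
        t = [rng.choice(treated) for _ in treated]
        c = [rng.choice(control) for _ in control]
        d_costs.append(mean(x for x, _ in t) - mean(x for x, _ in c))
        d_effects.append(100 * (mean(y for _, y in t) - mean(y for _, y in c)))
    return d_costs, d_effects


def ceac(d_costs, d_effects, wtp_values):
    """Share of replicates in which the intervention has positive net monetary
    benefit at each willingness to pay ($ per percentage point)."""
    n = len(d_costs)
    return [sum(wtp * de - dc > 0 for dc, de in zip(d_costs, d_effects)) / n
            for wtp in wtp_values]


# Toy data only (not study data): costs in $, initiation coded 0/1.
treated = [(79.37, 1), (79.37, 0), (79.37, 0), (79.37, 1), (79.37, 0)]
control = [(0.0, 0), (0.0, 1), (0.0, 0), (0.0, 0), (0.0, 0)]
d_costs, d_effects = bootstrap_deltas(treated, control, n_reps=500)
wtp_grid = [0, 5, 10, 15, 20]
for wtp, p in zip(wtp_grid, ceac(d_costs, d_effects, wtp_grid)):
    print(f"WTP ${wtp:>2}/point: P(good value) = {p:.2f}")
```

With more than two options, the same replicates would instead be used to find, at each willingness to pay, which option has the highest net monetary benefit.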

2.4.4 Sensitivity analyses

Sensitivity analyses assess the robustness of findings to changes in analytic assumptions. Four sensitivity analyses were performed. The first three add a license fee, startup costs, or both; in the base case, development costs were not recouped because MAPIT had no price or fee, and startup costs were omitted from the CEA. The first sensitivity analysis applied a hypothetical license fee for MAPIT of $60 per offender to recoup the development costs. Because it is unknown what fee would be charged if this intervention were widely disseminated, the analysis conservatively uses the fee charged for a virtual program addressing driving under the influence among offenders.† The fee is high relative to the other resources used for MAPIT: it is only slightly less than the estimated cost of implementing MAPIT.

In the second sensitivity analysis, only startup costs were added. The training cost was apportioned across participants in the MI arm, and the computer cost was apportioned across participants in the MAPIT and MI arms, because the computer was also used to gather the data used to deliver MI. In the third sensitivity analysis, both the license fee and startup costs were added.

The fourth sensitivity analysis changed the outcome from initiating any treatment to initiating formal treatment only.

3. RESULTS

3.1 Development Costs and Startup Costs

Software development costs were estimated at $58,406. Table 1 shows the two main components of startup costs: equipment for MAPIT and MI, and training counselors to deliver MI. Startup costs totaled $5,218.

Table 1.

Startup Costs ($2016)

| Cost Category | Cost |
|---|---|
| Counselor training | $1,107.72 |
| Equipment cost | $4,109.96 |
| Total | $5,217.68 |

Note: Total subject to rounding.

3.2 Implementation Cost

Table 2 shows the mean and standard error of time and implementation cost for the MAPIT and MI interventions, relative to SAU. The average time per participant for MAPIT (163 minutes) was about 109 minutes shorter than for MI (272 minutes). MAPIT cost $79.37 per participant compared with $134.27 per participant for MI, a difference of $54.90 per participant.

Table 2.

Time and Implementation Cost for MAPIT and MI Relative to SAU

| Activity | MAPIT Time (minutes) | MI Time (minutes) | MAPIT Cost ($2016) | MI Cost ($2016) |
|---|---|---|---|---|
| Screening<sup>a</sup> | 2.47 (—) | 2.47 (—) | $1.18 (—) | $1.18 (—) |
| Baseline assessment<sup>a</sup> | 4.48 (—) | 4.48 (—) | $2.18 (—) | $2.18 (—) |
| Delivery and support of intervention sessions | 49.21 (0.71) | 101.79 (0.54) | $24.12 ($0.31) | $50.08 ($0.29) |
| Appointment reminders<sup>a</sup> | 106.58 (—) | 106.58 (—) | $51.89 (—) | $51.89 (—) |
| Clinical supervision<sup>a</sup> | — (—) | 56.56 (—) | — (—) | $28.93 (—) |
| Total | 162.73 (0.38) | 271.88 (0.29) | $79.37 ($0.12) | $134.27 ($0.10) |

Note: Values are predicted from linear regression; standard errors in parentheses. MAPIT = Motivational Assessment Program to Initiate Treatment; MI = Motivational Interviewing; SAU = supervision as usual.

<sup>a</sup> Data for this activity are not differentiated at the individual level and so do not have a standard error.

The table shows the costs broken out by main activity. Delivery and support of intervention sessions took about half as much time for MAPIT as for MI and therefore cost about half as much ($24.12 compared with $50.08). Although delivery and support made up a large share of total intervention cost (30% of MAPIT cost and 37% of MI cost), appointment reminders took more time than intervention delivery. On average, appointment reminders took 57 minutes longer than delivery and support in the MAPIT arm and 5 minutes longer in the MI arm.

Clinical supervision helped ensure the quality of the MI sessions. Because clinical supervision involves the time of both clinicians and supervisors, its cost is expected to be relatively high. The average cost of clinical supervision was $29 per participant, just over 20% of the total cost of MI.

Screening and assessment were brief and therefore inexpensive. Combined, they took less than 7 minutes, at a cost of $3.36 ($1.18 for screening plus $2.18 for the assessment). Like appointment reminders and clinical supervision, screening and assessment cannot be differentiated at the individual level and therefore have no standard error.

3.3 Cost-Effectiveness Analysis

Table 3 shows the estimated adjusted mean cost, mean effectiveness, and ICERs at 2 and 6 months after baseline. At both time points, MI was both more expensive and less effective than MAPIT, the next less expensive alternative. Thus, MI was strictly dominated and excluded from further consideration, and no ICER was estimated for it. Compared with SAU, MAPIT cost $8.37 and $6.70 per percentage point increase in the probability of initiating treatment at the 2- and 6-month time points, respectively.

Table 3.

Cost-Effectiveness Analysis Results ($2016)

| Treatment | Mean Cost (C), 2 Months | Mean Effectiveness (E), 2 Months | ICER (ΔC/ΔE), 2 Months | Mean Cost (C), 6 Months | Mean Effectiveness (E), 6 Months | ICER (ΔC/ΔE), 6 Months |
|---|---|---|---|---|---|---|
| SAU | $— (—) | 10.7% (0.03) | — | $— (—) | 25.0% (0.04) | — |
| MAPIT | $79.54 ($0.14) | 20.2% (0.04) | $8.37 | $79.37 ($0.12) | 37.2% (0.05) | $6.70 |
| MI | $133.16 ($0.12) | 18.6% (0.04) | Strictly dominated | $134.27 ($0.10) | 28.6% (0.05) | Strictly dominated |

Note: Effectiveness is initiation of any treatment within 2 or 6 months of baseline; standard errors in parentheses. Treatments are ranked in ascending order of cost. The Δ compares one row of the table to the previous row, adjusting for covariates. Strictly dominated means that another intervention was both less expensive and more effective. ICER = Incremental Cost-Effectiveness Ratio; MAPIT = Motivational Assessment Program to Initiate Treatment; MI = Motivational Interviewing; SAU = supervision as usual.

The power to detect a joint difference in costs and effectiveness may be greater than the power to detect significance when costs and effectiveness are considered independently (Glick et al., 2015). For this reason, the analyses assess the joint significance of costs and effectiveness using the ICER, even when the effectiveness of the intervention only approaches statistical significance.

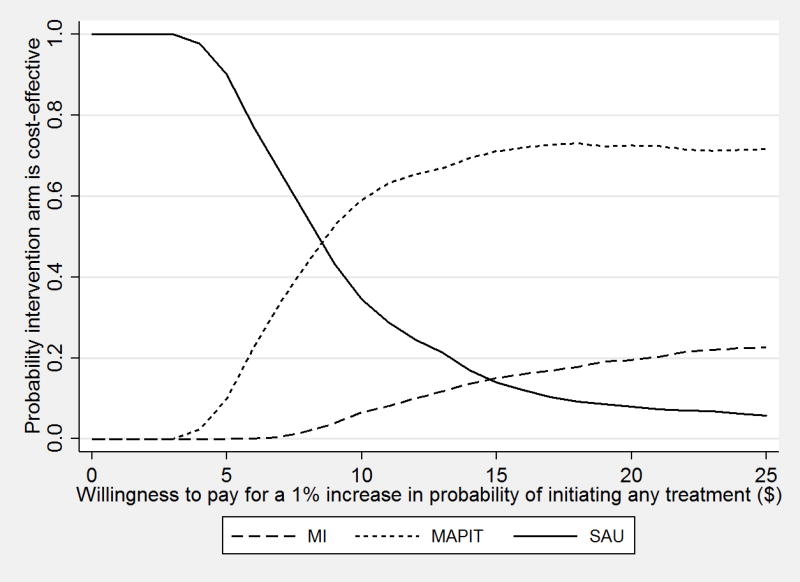

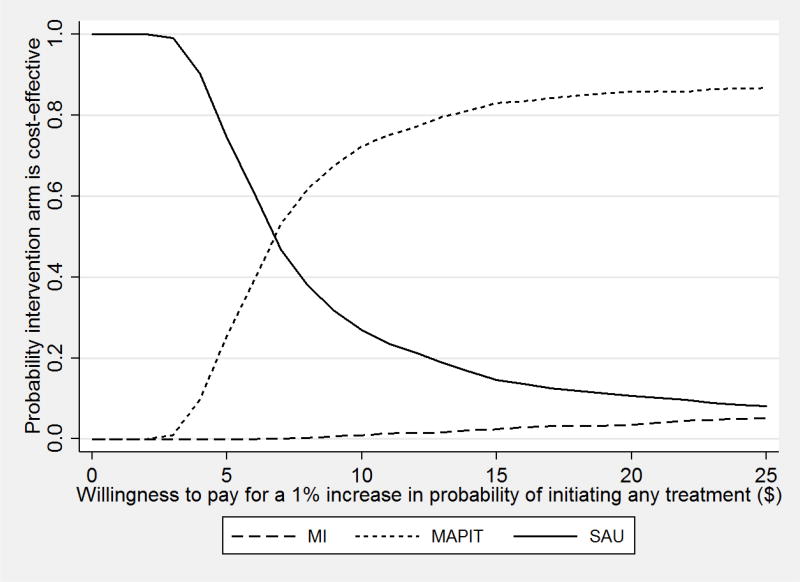

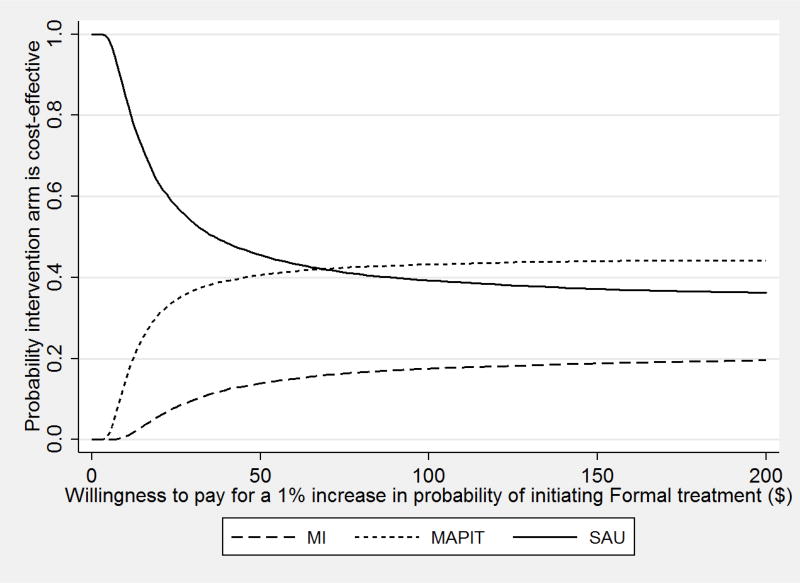

The CEAC for the 2-month follow-up (Figure 1) shows that the probability that MAPIT is good value relative to SAU rises with the decision-maker’s willingness to pay for a 1 percentage point increase in the probability of initiating treatment. The CEAC levels off at a probability of 0.7 at approximately $15 per percentage point. That is, a decision-maker who is willing to pay $15 or more per percentage point increase in the probability of initiating treatment can be 70% confident that MAPIT is good value relative to SAU. For the 6-month follow-up (Figure 2), the CEAC levels off at 0.9, also at approximately $15. Thus, at this willingness to pay and above, the decision-maker can be 90% confident that MAPIT is good value.

Figure 1.

Cost-Effectiveness Acceptability Curve for Treatment Initiation within 2 Months

Figure 2.

Cost-Effectiveness Acceptability Curve for Treatment Initiation within 6 Months

3.4 Sensitivity Analyses

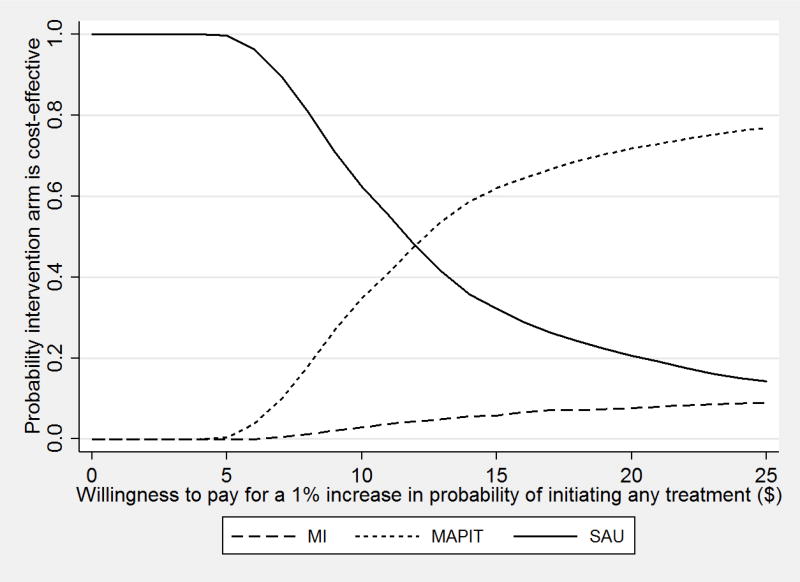

Appendix A provides the full results from the sensitivity analyses, including the relevant CEACs. In the first sensitivity analysis, which added a license fee of $60 per participant to MAPIT, MAPIT (estimated cost of $139.54) cost $6.38 more than MI (estimated cost of $133.16) at the 2-month follow-up. At the 6-month follow-up, the ICER from SAU to MI was $37.30 and from MI to MAPIT was $0.59. Because the ICER decreased across increasingly expensive interventions, MAPIT dominated MI by extension (Neumann et al., 2016, p. 268), which removed MI from consideration. The ICER between SAU and MAPIT was $11.42, about 1.7 times the base-case value of $6.70. Thus, if a license fee to recoup development costs were included, a higher willingness to pay for treatment initiation would be needed for MAPIT to be considered good value.

Under the second sensitivity analysis, adding the startup costs alone, the ICER from SAU to MAPIT increased from $6.39 to $8.13. The increase in the cost differential did not change the study conclusions. Under the third sensitivity analysis, combining the license fee and startup costs, MAPIT was less expensive than MI, and because MAPIT was also more effective than MI, it dominated MI, as in the base case.

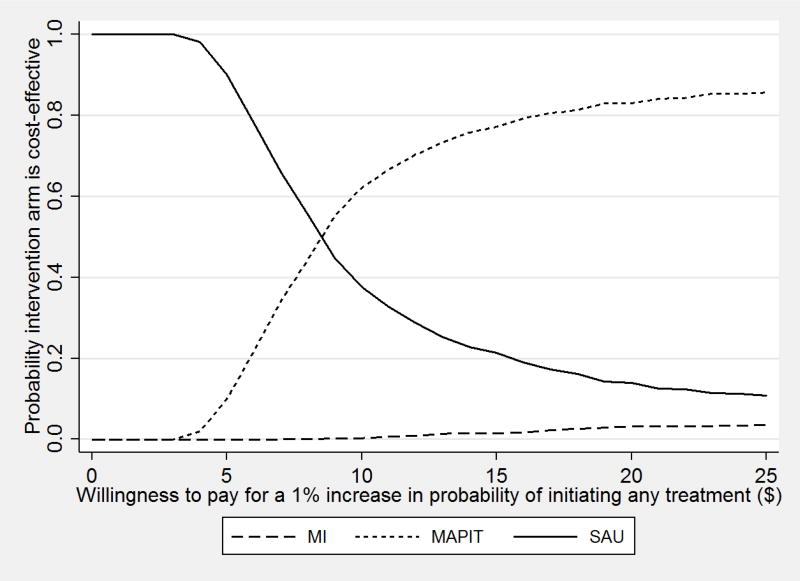

The fourth sensitivity analysis changed the outcome from initiating any treatment (the outcome used in the main study [Lerch, Walters, Tang, & Taxman, 2017]) to initiating formal treatment only. In this analysis, MAPIT led to a small increase in the probability of initiating treatment relative to SAU (less than one percentage point at 6 months), and MI did not increase the probability of initiating treatment. The CEACs, which account for the joint statistical distribution of cost and outcome, showed that MAPIT was not cost-effective when the outcome was restricted to formal treatment.

4. DISCUSSION

Given the low proportion of substance-using probationers who seek treatment, a key short-term step toward reducing their substance use is to motivate probationers to initiate treatment. The current study assesses the cost-effectiveness of two interventions to increase treatment initiation in a probation setting. The main study found that MAPIT improved treatment initiation at 2 months following baseline, with non-significant improvement at 6 months (Lerch, Walters, Tang, & Taxman, 2017). The current study found that MAPIT also cost less than MI.

The cost estimates presented here suggest that MAPIT cost $79.37 per probationer through the 6-month follow-up. After adjusting for inflation, estimates from the literature suggest that supervising one probationer costs $9.36 per day (Alemi, Taxman, Doyon, Thanner, & Baghi, 2004), or about $1,123.20 for 6 months of supervision (excluding weekends). MAPIT therefore adds roughly 7% to the cost of 6 months of community supervision, a relatively small increment.
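
The comparison with supervision costs is simple arithmetic. In this Python sketch, the assumption of 20 weekdays per month is inferred from the reported figures ($9.36 × 120 days = $1,123.20) rather than stated by the authors, and the constant names are illustrative.

```python
DAILY_SUPERVISION_COST = 9.36  # dollars per probationer per day (Alemi et al., 2004, inflation-adjusted)
WORKDAYS_PER_MONTH = 20        # assumption: weekdays only, inferred from the $1,123.20 figure
MONTHS = 6
MAPIT_COST = 79.37             # dollars per probationer (current study)

supervision_cost = DAILY_SUPERVISION_COST * WORKDAYS_PER_MONTH * MONTHS
mapit_share = MAPIT_COST / supervision_cost
print(f"6-month supervision: ${supervision_cost:,.2f}; MAPIT adds {mapit_share:.1%}")
```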

The cost estimates also show that reminding probationers to participate in the experimental conditions accounted for a large proportion of the time and cost of delivering this clinical trial: 65% for MAPIT and 40% for MI. This high proportion is not surprising, given how difficult it is to engage this population. Because the current study was designed to deliver the interventions with high fidelity, staff spent considerable time on appointment reminders, which yielded higher rates of completed intervention delivery than are typical in most probation settings. If MAPIT were implemented in a “real world” probation department, it would be unlikely to engage probationers at such a high level unless individuals respond differently to officers than to study staff during the intake process. Thus, the real-world cost of MAPIT may be lower than presented in the current study, but this reduced effort may also reduce the effectiveness of MAPIT.
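
The reminder shares can be turned into rough dollar figures. This Python sketch assumes the reported percentages apply equally to time and cost, as the paragraph states; the non-reminder portion then approximates the cost floor if reminders were dropped entirely, a change that would likely also lower effectiveness. `split_reminder_cost` is a hypothetical helper.

```python
def split_reminder_cost(total_cost, reminder_share):
    """Split a per-participant cost into reminder and non-reminder parts."""
    reminders = total_cost * reminder_share
    return reminders, total_cost - reminders


# Per-participant costs and reminder shares reported in the study.
for arm, cost, share in [("MAPIT", 79.37, 0.65), ("MI", 134.27, 0.40)]:
    reminders, other = split_reminder_cost(cost, share)
    print(f"{arm}: reminders ${reminders:.2f}, other activities ${other:.2f}")
```

Under these assumptions, reminders account for roughly $52 of MAPIT's cost and roughly $54 of MI's, so MAPIT's non-reminder delivery cost is well under half of MI's.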

The cost-effectiveness analysis shows that, relative to SAU, using MAPIT to increase the probability of initiating treatment within 6 months after baseline cost $6.70 per percentage point increase in treatment initiation. Before deciding whether to implement MAPIT, a probation department would need to determine how much it is willing to pay for such an effect. The benefits to a probation department of implementing MAPIT might include improved treatment access and fewer revocations. Initiating treatment increases the probability of successfully completing treatment, which is intended to lower substance use and may in turn lower crime (Belenko & Peugh, 1998). The main study, however, found no reduction in substance use (Lerch, Walters, Tang, & Taxman, 2017).
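
The decision rule implied here can be written out. As a sketch in standard cost-effectiveness notation (not notation taken from the study), let λ denote the department's willingness to pay per percentage point of treatment initiation, ΔC the difference in mean cost per participant, and ΔE the difference in the percentage initiating treatment:

$$\text{ICER} = \frac{\Delta C}{\Delta E} = \frac{\bar{C}_{\text{MAPIT}} - \bar{C}_{\text{SAU}}}{\bar{E}_{\text{MAPIT}} - \bar{E}_{\text{SAU}}}$$

$$\text{NMB}(\lambda) = \lambda\,\Delta E - \Delta C \ge 0 \iff \lambda \ge \frac{\Delta C}{\Delta E} = \text{ICER} \qquad (\Delta E > 0)$$

At the point estimates, a department willing to pay λ = $15 per percentage point would regard MAPIT as good value relative to SAU, because $15 exceeds the base-case ICER of $6.70. The CEACs extend this comparison by reporting how confident the department can be, given sampling uncertainty in both ΔC and ΔE.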

To implement MAPIT more broadly, decision-makers must consider factors other than its cost-effectiveness, including the startup costs (software, equipment, and training) of MAPIT and of alternative approaches, as well as longer-term maintenance and renewal costs. Funding options for interventions like MAPIT vary by state and jurisdiction and include Medicaid, state funds for efforts to enhance probation, and coordination with the community treatment system to help defray the intervention's costs.

Three separate sensitivity analyses showed that including a hypothetical license fee of $60 per participant and/or startup costs increased the ICER for MAPIT relative to the next best alternative. For example, including the fee increased the ICER relative to SAU by roughly 70% (from $6.70 to $11.42). However, the study conclusion that MAPIT is likely good value at a sufficiently high willingness to pay was robust under each of these sensitivity analyses.
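
Because a license fee or startup cost is a fixed amount per participant added to the intervention arm, it raises ΔC by that amount while leaving ΔE unchanged, so the ICER rises by the added cost divided by ΔE. A minimal Python sketch, using the unadjusted Table A.2 means and a hypothetical helper `icer_with_added_cost`, reproduces the step from Table A.2 ($8.13) to Table A.3 ($13.05). Because the study's ICERs are covariate-adjusted, applying the same shift to other reported figures need not match them to the cent.

```python
def icer_with_added_cost(delta_cost, delta_effect, added_cost):
    """ICER after adding a fixed per-participant cost to the intervention arm."""
    return (delta_cost + added_cost) / delta_effect


# Unadjusted values from Table A.2 (MAPIT with startup costs vs. SAU).
delta_cost = 99.22          # dollars per participant
delta_effect = 37.2 - 25.0  # percentage points
print(round(icer_with_added_cost(delta_cost, delta_effect, 0.0), 2))   # startup costs only
print(round(icer_with_added_cost(delta_cost, delta_effect, 60.0), 2))  # plus $60 license fee
```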

The findings from a fourth sensitivity analysis show that the results are not robust to changing the outcome from initiating any treatment to initiating formal treatment only. This finding, together with the main study's finding that intervention condition was not statistically significantly associated with substance use, suggests that future research should focus on the quantity and quality of treatment that probationers receive. That research would include assessing the degree to which substance use reductions can be achieved by improving probationer access to evidence-based care.

The current study has at least four limitations. First, treatment initiation was assessed only at 2 and 6 months after baseline; a longer follow-up period would be needed to assess the long-term benefits and costs of any intervention. One option for further study is a simulation model that projects outcomes several years beyond baseline (e.g., Zarkin et al., 2012).

A second limitation is that treatment initiation was self-reported, which may be less accurate than administrative records. Two considerations mitigate this concern. First, the Timeline Followback method we used has been validated for use in substance use treatment trials (Sobell & Sobell, 1996). Second, although the degree of any measurement error cannot be assessed, there is little reason to suspect that it varied systematically by study arm.

A third limitation is that the findings may not generalize beyond the probation departments in the two cities where the randomized controlled trial was conducted.

A fourth limitation is that individual-level variation in the cost estimates reflects only variation in the delivery of the intervention. Other activities were not measured at the individual level for the entire sample, which prevents us from estimating their variability across individuals. Little variability across participants would be expected in screening, assessment, and clinical supervision time, however, whereas appointment reminders would be expected to vary.

Despite these limitations, the study suggests that MAPIT is a promising and potentially cost-effective option relative to MI for motivating substance-involved probationers to initiate treatment. Future analyses might examine other criminal justice outcomes. Further work could also address how to implement and integrate MAPIT into the probation process. Involving probation officers to reinforce the concepts in a program like MAPIT may help to sustain its effects beyond the short term.

Highlights.

The cost-effectiveness of a computerized intervention for probationers is assessed.

Effectiveness is self-reported initiation into any treatment at 6 months.

The computerized intervention was relatively good value for the main outcome.

Results are not robust to narrowing the outcome to initiating formal treatment only.

Acknowledgments

Funding: This work was supported by the National Institute on Drug Abuse (grant R01 DA029010-01).

Abbreviations

- MAPIT: Motivational Assessment Program to Initiate Treatment

- MI: motivational interviewing

- SAU: supervision as usual

Appendix A: Sensitivity Analysis

1. Add a $60 license fee to MAPIT

Table A.1.

Cost-Effectiveness Analysis Results with Licensing Fee ($2016)

Any Treatment Initiation 6MFU

| Treatment | Mean Cost (C) | Mean Effectiveness (E) | ICER (ΔC/ΔE) |
|---|---|---|---|
| SAU | — | 25% (0.04) | — |
| MI | $134.27 ($0.10) | 29% (0.05) | Dominated by extension |
| MAPIT | $139.37 ($0.12) | 37.2% (0.05) | $11.42 |

Note: Treatments are ranked in ascending order of cost. 6MFU, 6-Month Follow-Up; ICER, Incremental Cost-Effectiveness Ratio; MAPIT, Motivational Assessment Program to Initiate Treatment; MI, Motivational Interviewing; SAU, supervision as usual. The Δ compares one row of the table with the previous row, adjusting for covariates. In the current study, dominated by extension means that the ICER decreases in value across increasingly expensive interventions, so the intermediate option is removed from consideration.

Figure A.1.

Cost-Effectiveness Acceptability Curve for Treatment Initiation within 6 Months Including MAPIT Licensing Fee

2. Add startup costs to MAPIT and MI

Table A.2.

Cost-Effectiveness Analysis Results with Startup Costs ($2016)

Any Treatment Initiation 6MFU

| Treatment | Mean Cost (C) | Mean Effectiveness (E) | ICER (ΔC/ΔE) |
|---|---|---|---|
| SAU | — | 25% (0.04) | — |
| MAPIT | $99.22 ($0.12) | 37.2% (0.05) | $8.13 |
| MI | $164.88 ($0.10) | 28.6% (0.05) | Strictly dominated |

Note: Treatments are ranked in ascending order of cost. 6MFU, 6-Month Follow-Up; ICER, Incremental Cost-Effectiveness Ratio; MAPIT, Motivational Assessment Program to Initiate Treatment; MI, Motivational Interviewing; SAU, supervision as usual. The Δ compares one row of the table with the previous row, adjusting for covariates. For the current study, strictly dominated means that the intervention was both less effective and more expensive than an alternative.

Figure A.2.

Cost-Effectiveness Acceptability Curve for Treatment Initiation within 6 Months Including Startup Costs

3. Add startup costs to MAPIT and MI and a $60 license fee to MAPIT

Table A.3.

Cost-Effectiveness Analysis Results with Startup Costs and MAPIT Licensing Fee ($2016)

Any Treatment Initiation 6MFU

| Treatment | Mean Cost (C) | Mean Effectiveness (E) | ICER (ΔC/ΔE) |
|---|---|---|---|
| SAU | — | 25% (0.04) | — |
| MAPIT | $159.22 ($0.12) | 37.2% (0.05) | $13.05 |
| MI | $164.88 ($0.10) | 28.6% (0.05) | Strictly dominated |

Note: Treatments are ranked in ascending order of cost. 6MFU, 6-Month Follow-Up; ICER, Incremental Cost-Effectiveness Ratio; MAPIT, Motivational Assessment Program to Initiate Treatment; MI, Motivational Interviewing; SAU, supervision as usual. The Δ compares one row of the table with the previous row, adjusting for covariates. For the current study, strictly dominated means that the intervention was both less effective and more expensive than an alternative.

Figure A.3.

Cost-Effectiveness Acceptability Curve for Treatment Initiation within 6 Months Including Startup Costs and MAPIT Licensing Fee

4. Change the outcome from initiating any treatment (which was used in the main study [Lerch et al., 2017]) to initiating formal treatment only

Table A.4.

Cost-Effectiveness Analysis Results, Formal Treatment ($2016)

Formal Treatment Initiation 6MFU

| Treatment | Mean Cost (C) | Mean Effectiveness (E) | ICER (ΔC/ΔE) |
|---|---|---|---|
| SAU | — | 29% (0.10) | — |
| MAPIT | $79.37 ($0.12) | 30.1% (0.11) | $72.15 |
| MI | $134.27 ($0.10) | 27.5% (0.10) | Strictly dominated |

Note: Treatments are ranked in ascending order of cost. 6MFU, 6-Month Follow-Up; ICER, Incremental Cost-Effectiveness Ratio; MAPIT, Motivational Assessment Program to Initiate Treatment; MI, Motivational Interviewing; SAU, supervision as usual. The Δ compares one row of the table with the previous row, adjusting for covariates. For the current study, strictly dominated means that the intervention was both less effective and more expensive than an alternative.

Figure A.4.

Cost-Effectiveness Acceptability Curve for Formal Treatment Initiation within 6 Months

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Samples of the program can be viewed at http://youtu.be/9yV6bTn1tVE, http://youtu.be/XEZ5o48WwTg, http://youtu.be/u2SHWG0QXe8, and http://youtu.be/wMShVdPpcsw.

Clinical trial registration: NCT01891656 (initiated on October 18, 2011)

Conflict of interest: The authors declare that they have no conflicts of interest.

References

- Alemi F, Taxman F, Doyon V, Thanner M, Baghi H. Activity based costing of probation with and without substance abuse treatment: A case study. Journal of Mental Health Policy and Economics. 2004;7:51–58.

- Barnett NP, Murphy JG, Colby SM, Monti PM. Efficacy of counselor vs. computer-delivered intervention with mandated college students. Addictive Behaviors. 2007;32(11):2529–2548. doi: 10.1016/j.addbeh.2007.06.017.

- Barton GR, Briggs AH, Fenwick EAL. Optimal cost effectiveness decisions: the role of the cost-effectiveness acceptability curve (CEAC), the cost-effectiveness acceptability frontier (CEAF), and the expected value of perfection information (EVPI). Value in Health. 2008;11:886–897. doi: 10.1111/j.1524-4733.2008.00358.x.

- Belenko S, Peugh J. Fighting crime by treating substance abuse. Issues in Science and Technology. 1998;15(1):53–60.

- Chadwick N, Dawolf A, Serin R. Effectively training community supervision officers: A meta-analytic review of the impact on offender outcome. Criminal Justice & Behavior. 2015;42:977–989.

- Cowell AJ, Broner N, Dupont R. The cost-effectiveness of criminal justice diversion programs for people with serious mental illness co-occurring with substance abuse: Four case studies. Journal of Contemporary Criminal Justice. 2004;20(3):292–314.

- Cowell AJ, Dowd WN, Mills MJ, Hinde JM, Bray JW. Sustaining SBIRT in the wild: Simulating revenues and costs for Screening, Brief Intervention and Referral to Treatment programs. Addiction. 2017;112(S2):101–109. doi: 10.1111/add.13650.

- Drake EK. “What works” in community supervision: Interim report. Olympia, WA: Washington State Institute for Public Policy; 2011.

- Drake EK, Aos S. Confinement for technical violations of community supervision: is there an effect on felony recidivism? Olympia, WA: Washington State Institute for Public Policy; 2012.

- Drummond MF, Sculpher MJ, Claxton K, Stoddart GL, Torrance GW. Methods for the economic evaluation of health care programmes. New York, NY: Oxford University Press; 2015.

- Fenwick E, Claxton K, Sculpher M. Representing uncertainty: The role of cost-effectiveness acceptability curves. Health Economics. 2001;10:779–787. doi: 10.1002/hec.635.

- Glick HA, Doshi JA, Sonnad SS, Polsky D. Economic evaluation in clinical trials. Oxford, United Kingdom: Oxford University Press; 2015.

- Griffith JD, Hiller ML, Knight K, Simpson DD. A cost-effectiveness analysis of in-prison therapeutic community treatment and risk classification. The Prison Journal. 1999;79:352–368.

- Hall K, Staiger PK, Simpson A, Best D, Lubman DI. After 30 years of dissemination, have we achieved sustained practice change in motivational interviewing? Addiction. 2016;111:1144–1150. doi: 10.1111/add.13014.

- Kaeble D, Maruschak LM, Bonczar TP. Probation and parole in the United States, 2014. Washington, DC: Bureau of Justice Statistics, U.S. Department of Justice; 2015.

- Lerch J, Walters ST, Tang L, Taxman FS. Effectiveness of a computerized motivational intervention on treatment initiation and substance use: Results from a randomized trial. Journal of Substance Abuse Treatment. 2017;80:59–66. doi: 10.1016/j.jsat.2017.07.002.

- Marsch LA, Carroll KM, Kiluk BD. Technology-based interventions for the treatment and recovery management of substance use disorders: A JSAT special issue. Journal of Substance Abuse Treatment. 2014;46(1):1–4. doi: 10.1016/j.jsat.2013.08.010.

- McCollister KE, French MT. The relative contribution of outcome domains in the total economic benefit of addiction interventions: A review of first findings. Addiction. 2003;98:1647–1659. doi: 10.1111/j.1360-0443.2003.00541.x.

- McCollister KE, French MT, Prendergast M, Hall E, Sacks S. Long-term cost-effectiveness of addiction treatment for criminal offenders. Justice Quarterly. 2004;21:659–679.

- McCollister KE, French MT, Prendergast M, Wexler H, Sacks S, Hall E. Is in-prison treatment enough? A cost-effectiveness analysis of prison-based treatment and aftercare services for substance-abusing offenders. Law & Policy. 2003;25:63–82.

- McMurran M. Motivational interviewing with offenders: A systematic review. Legal & Criminological Psychology. 2009;14:83–100.

- Moyer VA. Screening and behavioral counseling interventions in primary care to reduce alcohol misuse: U.S. preventive services task force recommendation statement. Annals of Internal Medicine. 2013;159:210–218. doi: 10.7326/0003-4819-159-3-201308060-00652.

- Murphy SM, Polsky D, Lee JD, Friedmann PD, Kinlock TW, Nunes EV, O'Brien CP. Cost-effectiveness of extended release naltrexone to prevent relapse among criminal justice-involved individuals with a history of opioid use disorder. Addiction. 2017;112:1440–1450. doi: 10.1111/add.13807.

- Neumann PJ, Sanders GD, Russell LB, Siegel JE, Ganiats TG, editors. Cost-effectiveness in health and medicine. New York, NY: Oxford University Press; 2016.

- Newmark Knight Frank. 2Q13 Baltimore Office Market. 2013a Retrieved from http://www.ngkf.com/home/research/us-market-reports.aspx.

- Newmark Knight Frank. 2Q13 Dallas Office Market. 2013b Retrieved from http://www.ngkf.com/home/research/us-market-reports.aspx.

- Scott RE, McCarthy FG, Jennett PA, Perverseff T, Lorenzetti D, Saeed A, Yeo M. Literature findings 4—cost. Journal of Telemedicine and Telecare. 2007;13:15–22. doi: 10.1258/135763307782213552.

- Sobell LC, Sobell MB. Timeline Followback user’s guide: A calendar method for assessing alcohol and drug use. Toronto: Addiction Research Foundation; 1996.

- Substance Abuse and Mental Health Services Administration (SAMHSA) The NSDUH Report: Trends in substance use disorders among males aged 18 to 49 on probation or parole. Rockville, MD: Substance Abuse and Mental Health Services Administration; 2014.

- Taxman FS. No illusions: Offender and organizational change in Maryland's proactive community supervision efforts. Criminology & Public Policy. 2008;7(2):275–302.

- Taxman FS, Perdoni ML, Caudy M. The plight of providing appropriate substance abuse treatment services to offenders: Modeling the gaps in service delivery. Victims & Offenders. 2013;8(1):70–93.

- Taxman FS, Walters ST, Sloas LB, Lerch J, Rodriguez M. Motivational tools to improve probationer treatment outcomes. Contemporary Clinical Trials. 2015;43:120–128. doi: 10.1016/j.cct.2015.05.016.

- Walters ST, Vader AM, Nguyen ND, Harris TR, Eells J. Motivational interviewing as a supervision strategy in probation: A randomized effectiveness trial. Journal of Offender Rehabilitation. 2010;49(5):309–323.

- Walters ST, Ondersma SJ, Ingersoll KS, Rodriguez M, Lerch J, Rossheim ME, Taxman FS. MAPIT: Development of a web-based intervention targeting substance abuse treatment in the criminal justice system. Journal of Substance Abuse Treatment. 2014;46:60–65. doi: 10.1016/j.jsat.2013.07.003.

- Zarkin GA, Cowell AJ, Hicks KA, Mills MJ, Belenko S, Dunlap LJ, Keyes V. Benefits and costs of substance abuse treatment programs for state prison inmates: Results from a lifetime simulation model. Health Economics. 2012;21(6):633–652. doi: 10.1002/hec.1735.

- Zarkin GA, Dunlap LJ, Belenko S, Dynia PA. A benefit-cost analysis of the Kings County District Attorney’s Office Drug Treatment Alternative to Prison (DTAP) Program. Justice Research and Policy. 2005;7(1):1–25.