Abstract

Cellphones equipped with high-quality cameras and powerful CPUs as well as GPUs are widespread. This opens new prospects for using such existing computational and imaging resources to perform medical diagnosis in developing countries at very low cost. Many relevant samples, like biological cells or waterborne parasites, are almost fully transparent. As they do not exhibit absorption but alter only the light’s phase, they are almost invisible in brightfield microscopy. Expensive equipment and procedures for microscopic contrasting or sample staining are often not available. Dedicated illumination approaches, tailored to the sample under investigation, help to boost the contrast. This is achieved by a programmable illumination source, which also allows the phase gradient to be measured using differential phase contrast (DPC) [1, 2], or even the quantitative phase using the derived qDPC approach [3]. By applying machine-learning techniques, such as a convolutional neural network (CNN), it is possible to learn from a given dataset the relationship between the samples to be examined and their optimal light source shapes, in order to increase e.g. phase contrast and to enable real-time applications. For the experimental setup, we developed a 3D-printed smartphone microscope for less than $100 using only off-the-shelf components, such as a low-cost video projector. The fully automated system assures true Koehler illumination with an LCD as the condenser aperture and a reversed smartphone lens as the microscope objective. We show that varying the light source shape using the pre-trained CNN not only improves the phase contrast but also gives the impression of improved optical resolution without adding any special optics, as demonstrated by measurements.

1 Introduction

In recent years the field of smart microscopy has tried to enhance the user-friendliness as well as the image quality of a standard microscope. The final output of the instrument can thus be more than what the user sees through the eyepiece. Taking a series of images and extracting the phase information using the transport of intensity equation (TIE) [4], extracting the amplitude and phase from a hologram [5, 6], or capturing multi-mode images such as darkfield, brightfield and qDPC [3, 7, 8] at the same time are just some examples.

Translating those concepts to field-portable devices for use in developing countries or education, where cost-effective devices like smartphones are widely spread, brings powerful tools for diagnosis or learning to the masses.

Most biological organisms have only low amplitude contrast, thus are hardly visible in common brightfield configurations. Interferometric or the already mentioned holographic approaches are able to reconstruct the phase in a computational post process. Other methods like the well known differential interference contrast (DIC) or Zernike Phase contrast use special optics to convert the phase into amplitude contrast to visualize these objects.

In the year 1899 Siedentopf [9] suggested a set of rules which can be used to enhance the contrast of an object by manipulating the design of the illumination source. Best contrast for a 2-dimensional sinusoidal grating e.g. can be achieved using a dipole configuration, where two illumination-spots perpendicular to the grating vector are placed in the condenser aperture. Since all objects can be modelled as a sum of an infinite number of sine/cosine patterns, there will always be an optimized source shape, which is, however, not always easy to find by just trial-and-error.

In computational lithography this principle is known as source-mask optimization (SMO) which reduces the critical dimension (CD), defined by the smallest feature size of the mask which can be imaged, by optimizing a freeform light source using e.g. a DMD [10] with an inverse problem. In lithography the subject of optimization is the mask which is well known, whereas in light microscopy the object, more precisely, its complex transmission function is unknown, which can be estimated using methods mentioned above and described below.

We present here an optimization-procedure for the illumination shape. The starting point of the algorithm is given by the estimated complex object transmission function t(x), which is given by inverse filtering of multiple intensity images using the weak-object transfer function (WOTF) developed by Tian et al. [3].

The paper is organized as follows. Section 2 gives a short introduction to the theory of image formation in an incoherent optical system and how it depends on the shape of the light source. Section 3 presents methods for optimizing the light source shape, and Section 4 shows how a neural network can learn this optimization. Section 5 describes the two experimental systems: the lab microscope, which is equipped with a home-made low-cost SLM made from a smartphone display, and the smartphone microscope, which is derived from a low-cost LED projector. The experimental results obtained with both systems are shown in Section 6.

2 Theory

2.1 Contrast formation in partially coherent imaging systems

To optimize the contrast of an object at a given illumination configuration, a forward model has to be defined which is used by the optimization routine in order to evaluate the effect of changes in the light source.

A common way to simulate a partially coherent imaging system in Koehler configuration is to compute the system’s transmission cross coefficient (TCC), introduced by Hopkins [11], and to process the spectrum of the object transmission function with this 4D transfer function, visualized for the 2D case in Fig 1. Abbe’s approach [12] discretizes the effective light source into a sum of infinitesimally small point sources s(νs). Each of these point emitters shifts the object’s spectrum in Fourier space. After summing all intensities of the inverse Fourier transform of the spectrum filtered with the objective’s PSF h(x) over the area of the spatially extended condenser pupil NAc following [13, 14], one gets the partially coherent image (in lithography often called the aerial image).

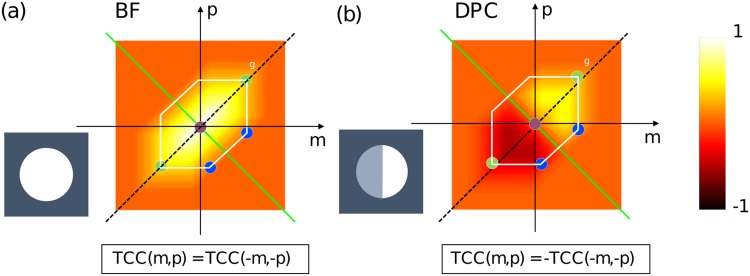

Fig 1. Symmetry properties of the TCC at different illumination configurations.

In (a) the TCC at p = q = 0 gives the partially coherent transfer function for a brightfield system and in (b) for a DPC system. The green line shows the axis of symmetry. The DPC setup offers odd symmetry, which enables phase contrast.

To simplify the derivation of the image formation process, we limit calculus only to frequencies in x-direction. The same holds true if one extends the problem to two dimensions. The intensity then computes to

| (1) |

where NAc = n ⋅ sin(αc) represents the numerical aperture of the condenser. After interchanging the integration boundaries one gets the TCC following Hopkins [11]

| (2) |

| (3) |

| (4) |

where the object spectrum is given by the Fourier transform of the transmission function t(x). H(ν) and S(ν) represent the two-dimensional pupil functions of the objective and the condenser respectively. A detailed investigation can be found in [15].

Following [11] the so-called Transmission-Cross-Coefficient TCC(ν1, ν2) or sometimes partial coherent transfer function PCTF [13], can be extracted from Eq (4) which then describes the imaging properties of a partially coherent imaging system and contains all information about illumination and aberration:

| (5) |

where Cn is the normalization-factor, that is usually the maximum value of the transfer function [15].

This filter function can be understood as the geometrical overlap of the 2D objective pupil H, its conjugate H* and the effective illumination source S. The shift of the H and H* corresponds to a spatial frequency caused by the bilinear object spectrum. Thus the TCC(ν1, ν2) gives the attenuation of an object frequency pair at a certain spatial frequency.

The main advantage of this method is, that the TCC, once computed, can be stored in memory for later reuse, thus it represents a very computationally efficient way to see how the contrast is modulated by the microscope.
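The overlap interpretation and the symmetry properties shown in Fig 1 can be illustrated with a minimal one-dimensional sketch in Python. It assumes ideal, aberration-free binary pupils on an integer frequency grid (so H* = H) and normalizes by TCC(0, 0); the function name `tcc_1d`, the cut-off values and the grid size are illustrative choices and are not taken from the published code.

```python
def tcc_1d(source, pupil, n_max):
    """Discrete 1D TCC: overlap of the source S with two shifted copies of
    the (real-valued) pupil H, normalised so that TCC(0, 0) = 1."""
    def h(v):
        return pupil.get(v, 0.0)

    freqs = range(-n_max, n_max + 1)
    raw = {(v1, v2): sum(w * h(s + v1) * h(s + v2) for s, w in source.items())
           for v1 in freqs for v2 in freqs}
    norm = raw[(0, 0)]
    return {key: value / norm for key, value in raw.items()}


# Assumed toy geometry: objective cut-off 4, condenser cut-off 2 (grid units).
pupil = {v: 1.0 for v in range(-4, 5)}
brightfield = {s: 1.0 for s in range(-2, 3)}   # symmetric source
left = {s: 1.0 for s in (-2, -1)}              # left half of the condenser
right = {s: 1.0 for s in (1, 2)}               # right half of the condenser

tcc_bf = tcc_1d(brightfield, pupil, 6)
tcc_left = tcc_1d(left, pupil, 6)
tcc_right = tcc_1d(right, pupil, 6)
# Difference of the two half-illuminated systems, as used for DPC.
tcc_dpc = {key: tcc_left[key] - tcc_right[key] for key in tcc_left}
```

In this toy geometry the brightfield TCC is even under (ν1, ν2) → (−ν1, −ν2), while the difference of the two half-source TCCs is odd, which is the property exploited for phase contrast.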

A simplification introduced by Cobb et al. [16] and later further developed by Yamazoe et al. [17, 18] reduces this 4D matrix to a set of 2D filter kernels by applying the singular-value decomposition (SVD). The result is a set of eigenvectors, which act as filter kernels, and their eigenvalues, which give the weights. It was shown that the eigenvalues decrease rapidly, so it is possible to use only the first two eigenvectors to simulate an image while keeping the error below 10%.

2.2 Partially coherent image formation

It can be shown, that without adding any additional optics, such as phase masks in Zernike phase contrast or prisms in DIC, the object’s phase contrast can be enhanced by an iterative optimization process which manipulates the shape of the condenser aperture.

A mathematical motivation of this phenomenon can be found in Sheppard and Wilson [1], who state that a symmetric and real-valued optical system, such as the perfect brightfield microscope, is not capable of imaging the phase of an object. To make phase variations visible, one can make the TCC asymmetric by illuminating the object asymmetrically (e.g. DPC) or by adding a phase factor in the pupil plane (e.g. introducing a phase mask or defocusing the object).

This can be shown with a simple example, where we define a sinusoidal phase grating, visualized in Fig 2. Its direction is defined by the k-vector, which can be represented as a scalar kg in the one-dimensional case; m ∈ [0, 1] defines its modulation depth. Its Fourier transform is given by a Taylor series expansion

| (6) |

where J is the Bessel function of the first kind. The aerial image (Fig 2) is given by

| (7) |

Taking only the q = 0 and q = ±1 diffraction orders into account (indicated as red/blue dots in Fig 1) and inserting Eq (6) into Eq (7), the formula reduces to two cases:

| (8) |

| (9) |

| (10) |

| (11) |

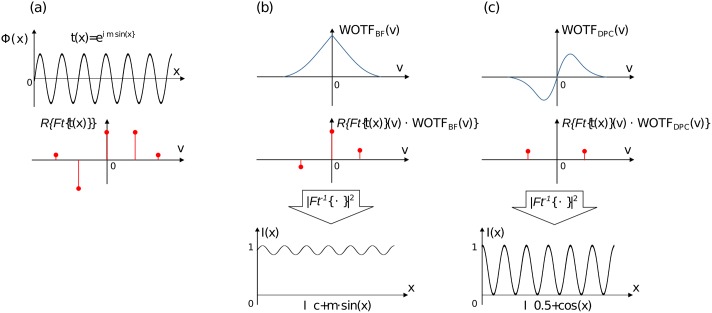

Fig 2. Asymmetric illumination source enables phase-contrast.

(a) shows the transmission function t(x) of the sinusoidal phase object and its spectrum, which gets filtered in (b) by the WOTF of the brightfield microscope and in (c) by the DPC system. One clearly sees that an odd-symmetric optical system is capable of transmitting phase information and images the phase gradient.

The TCC of a “perfect” brightfield microscope has even symmetry and a real-valued pupil shape, which means that no phase factors, e.g. aberrations, are present. Inserting TCC(m, p) = TCC(−m, −p) into the two cases shows that the phase term vanishes and only a DC term dominates the aerial image.

Sequentially shading both sides of the condenser aperture and subtracting the resulting two images from one another gives an odd-symmetric TCC, as can be seen in the 2D-TCC plot in Fig 1(b). Inserting TCC(m, p) = −TCC(−m, −p) into Eq (11), one can see that the intensity follows the phase gradient plus a DC term (I1 + I2), which can also be removed [19]

| (12) |
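As a hedged illustration of the step above, the following Python sketch forms a DPC image from two half-illuminated intensity images. It assumes the normalized difference (I1 − I2)/(I1 + I2) that is commonly used in the DPC literature to remove the DC term; the function name `dpc_image` and the small regularizer `eps` are illustrative and not part of the original work.

```python
def dpc_image(i_left, i_right, eps=1e-12):
    """Normalised difference of two images taken with complementary
    half-apertures; eps avoids division by zero in dark pixels."""
    return [[(a - b) / (a + b + eps) for a, b in zip(row_l, row_r)]
            for row_l, row_r in zip(i_left, i_right)]


# Toy 1 x 2 images: the first pixel sees a phase gradient, the second does not.
i_left = [[3.0, 1.0]]
i_right = [[1.0, 1.0]]
dpc = dpc_image(i_left, i_right)
```

Pixels where both half-illuminations give the same intensity map to zero, so only the gradient-dependent part of the signal remains.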

This brief mathematical description shows that an appropriate light source shape enables phase-contrast imaging by manipulating the condenser’s aperture plane, which can conveniently be done with an SLM, as also shown in [20] and [21].

3 Optimization of the light source

According to the illumination principle introduced by Siedentopf [22], the contrast in the object plane is best enhanced by leaving out those illumination directions which do not contribute to the image formation.

When trying to optimize an m × n pixel grid of a freeform light source, this gives a huge number of degrees of freedom, which can be reduced by describing the effective area with a finite number of parameters that represent a pattern. In [23] this has been done with a set of Zernike coefficients, which reduces the number of parameters considerably. An alternative is to divide the circular condenser aperture into ring segments and weight each of them with a specific value.

A generalized light source can be described as a superposition of individual sub-illumination functions Zs(m, n), where each can be weighted with a factor Ψl:

| (13) |

and the latter one represents the source in matrix notation with Ψ = [Ψ1Ψ2…ΨP]T and m, n, p, q give the discrete pixel coordinates. S holds the light patterns, e.g. i-th Zernike polynomial or circular segment.
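The superposition of weighted sub-illumination patterns can be sketched in a few lines of Python. The example assumes equal angular segments of a single centred disk as the patterns; the helper names `segment_masks` and `compose_source`, the grid size and the weights are hypothetical and only serve to illustrate the idea.

```python
import math


def segment_masks(size, n_segments):
    """Binary patterns: equal angular segments of a centred disk."""
    centre = (size - 1) / 2.0
    width = 2.0 * math.pi / n_segments
    masks = [[[0.0] * size for _ in range(size)] for _ in range(n_segments)]
    for m in range(size):
        for n in range(size):
            y, x = (m - centre) / centre, (n - centre) / centre
            if x * x + y * y <= 1.0:
                angle = math.atan2(y, x) % (2.0 * math.pi)
                index = min(int(angle / width), n_segments - 1)
                masks[index][m][n] = 1.0
    return masks


def compose_source(weights, masks):
    """Weighted sum of the patterns: S(m, n) = sum_l Psi_l * Z_l(m, n)."""
    size = len(masks[0])
    return [[sum(w * z[m][n] for w, z in zip(weights, masks))
             for n in range(size)] for m in range(size)]


masks = segment_masks(size=9, n_segments=4)
psi = [1.0, 0.0, 0.5, 0.0]           # one weight per segment
source = compose_source(psi, masks)
```

With this parametrization a 9 × 9 pixel source is described by only four numbers, which is the reduction in degrees of freedom the optimization relies on.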

Inserting Eq (13) in Eq (5) gives a TCC whose imaging properties now depend on the parameter-set Ψ multiplied with the precomputed four-dimensional TCCS(m, n;p, q), which allows the computation of two-dimensional images

| (14) |

| (15) |

Separating the TCCZ into its Eigenvalues λi and Eigenfunctions Ψi using the SVD gives

| (16) |

thus the intensity of the aerial image reduces to

| (17) |

| (18) |

where k is the maximum number of used filter kernels (e.g. SVD-Eigenvectors).
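The kernel-based image computation can be illustrated with a minimal one-dimensional Python sketch: the aerial image is taken as the eigenvalue-weighted sum of the squared magnitudes of the object filtered with each kernel. The kernels and eigenvalues below are toy values chosen for illustration, not the decomposition of a real TCC, and the function names are assumptions.

```python
import cmath
import math


def convolve_circular(signal, kernel):
    """Circular convolution of a complex signal with a short kernel."""
    n = len(signal)
    return [sum(kernel[j] * signal[(i - j) % n] for j in range(len(kernel)))
            for i in range(n)]


def aerial_image(t, kernels, eigenvalues, k=None):
    """I(x) = sum_i lambda_i * |(kernel_i * t)(x)|^2 over the first k kernels."""
    pairs = list(zip(eigenvalues, kernels))
    if k is not None:
        pairs = pairs[:k]
    image = [0.0] * len(t)
    for lam, kern in pairs:
        field = convolve_circular(t, kern)
        image = [acc + lam * abs(f) ** 2 for acc, f in zip(image, field)]
    return image


# Pure phase grating t(x) = exp(i * m * sin(2 pi x / N)) with m = 0.5.
t = [cmath.exp(1j * 0.5 * math.sin(2 * math.pi * i / 8)) for i in range(8)]

flat = aerial_image(t, kernels=[[1.0]], eigenvalues=[1.0])        # ideal imaging
shaded = aerial_image(t, kernels=[[1.0, 0.5j]], eigenvalues=[1.0])  # asymmetric kernel
```

With the trivial kernel the phase grating produces a constant image, whereas the asymmetric toy kernel yields an intensity of 1.25 + sin(Δφ), where Δφ is the phase difference between neighbouring pixels, so the image follows the phase gradient.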

Varying the source parameters has an influence on contrast, but also on intensity in the specimen plane or spatial resolution, where each image’s quality criterion can be measured by a tailored cost function. One example for a possible cost function can be derived following the so-called fidelity [15], which is defined as

| (19) |

| (20) |

| (21) |

This gives a quality measure of how closely the intensity measurement, e.g. on the camera, follows the quantitative phase estimated from the qDPC image. Another approach could be to maximize the standard deviation of the simulated pixels, and thus the phase contrast, by changing the pixels inside the aperture plane. The next step is to create a cost function using Eqs (21) and (18).

| (22) |

| (23) |

using Eq (18)

| (24) |

| (25) |

3.0.1 Particle swarm algorithm optimization

The simplification in the previous step, which accelerates the computation of an aerial image by using the SVD, complicates the calculation of the analytical gradient ∇{F(Ψ)} needed to feed e.g. a gradient-descent algorithm. Using gradient-free metaheuristics, like the so-called particle swarm optimization algorithm (PSO) [24], bypasses the lack of the analytical gradient.

The PSO finds its analogy in the behaviour of a swarm, such as a flock of birds, where the direction and velocity of its centre depend on the movement of each single individual as well as of the whole swarm. In our approach, each individual of the swarm evaluates one possible illumination situation using the parameter vector Ψ. The swarm size was empirically chosen to be 15 ⋅ lmax, where lmax corresponds to the maximum number of variable parameters, as suggested by Montgommery [25].

The iteration was stopped once the movement of the swarm slowed below a specified threshold or a maximum number of iterations was reached [24]. The algorithm, developed in Matlab 2015a (The MathWorks, Inc., Massachusetts, USA) and Tensorflow [26], is available on GitHub [27, 28].
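The following is a minimal particle swarm sketch in Python, not the published implementation. It assumes a textbook global-best PSO with inertia `w` and acceleration constants `c1`, `c2`, source weights clipped to [0, 1], and a toy quadratic cost standing in for the image-based cost function; all names and parameter values are illustrative.

```python
import random


def pso_minimise(cost, dim, n_particles, n_iter=100, tol=1e-6,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO on the unit hypercube [0, 1]^dim."""
    rng = random.Random(seed)
    pos = [[rng.random() for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]
    best_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: best_cost[i])
    g_pos, g_cost = best_pos[g][:], best_cost[g]
    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                # Keep the source weights inside the physical range [0, 1].
                pos[i][d] = min(1.0, max(0.0, pos[i][d] + vel[i][d]))
            c = cost(pos[i])
            if c < best_cost[i]:
                best_cost[i], best_pos[i] = c, pos[i][:]
                if c < g_cost:
                    g_cost, g_pos = c, pos[i][:]
        # Stop once the swarm has practically come to rest.
        if max(abs(v) for row in vel for v in row) < tol:
            break
    return g_pos, g_cost


# Toy stand-in for the cost function: distance to a known weight vector.
target = [0.2, 0.8, 0.5]
toy_cost = lambda psi: sum((p - q) ** 2 for p, q in zip(psi, target))
best_psi, best_value = pso_minimise(toy_cost, dim=3, n_particles=15 * 3)
```

The swarm size follows the 15 ⋅ lmax rule mentioned above; in a real run `toy_cost` would be replaced by the negative contrast of the simulated aerial image.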

3.0.2 Gradient descent optimization

Alternatively, we used the auto-differentiation functionality of the open-source ML library Tensorflow [26], which enables gradient-based optimization using different optimizers (e.g. Gradient-Descent, Adadelta, etc.). We used the precomputed eigenfunctions and eigenvalues from Eq (16) to predict the intensity of the aerial image following Eq (18). Different error norms can be introduced; the so-called uniform norm, which tries to maximize the absolute difference between the minimum and maximum intensity value of the simulated image,

| (26) |

was chosen as the best error measure for the given situation. In both cases, the initial values of the illumination source were set to one.

4 Using machine learning to optimize the light source

Even after optimizing the code by replacing the 4D-TCC with only two convolution kernels following Eq (16) to simulate one intensity image, finding an optimized set of parameters takes about 20 s on a quad-core computer. This is not acceptable for biologists or for high-throughput applications, such as drug screening or industrial metrology, which need results in real time.

Recent years have brought a rise in computational power and brought the field of Big Data in combination with neural networks back into focus. Machine learning has been applied to many tasks in microscopy, such as automated cell labelling [29] or learning approaches in optical tomography [30]. In our approach we show that a neural network is capable of learning an optimized light source shape from a set of previously generated input-output pairs. A major advantage of a neural network is that, once it is trained, the learned weights can be used on devices with low computational power, such as cellphones.

4.1 Generating a dataset for machine learning

For training the network, we generated a data-set of microscopic-like objects. We exploit the self-similarity of biological objects [31], especially in its ultrastructure and overall shape, to maximize the generality of our application by building a universal dataset. This is possible, because an optimized illumination source rather depends on the object’s structure, than its type.

Therefore we included about 1,000 randomly chosen microscopy images (e.g. epithelial cells, bacteria, diatoms, etc.) from public databases [32, 33] as well as images acquired using the qDPC approach. To expand the dataset, we added artificially created patterns from a 2D discrete cosine transformation (DCT), which mimic grating-like structures, such as muscle cells or metallurgical objects, very well.

The dataset also includes the optimized illumination parameters to enhance the phase contrast of the complex object transfer function, generated with the algorithms explained in Section 3. The transfer function itself was derived by generating pseudo phase maps from the intensity images.

Augmentation of the samples to avoid overfitting in the learning process of the NN was done by adding noise and rotation to the images. In case of the segment-illumination, the parameters have to be rotated likewise, due to the Fourier-relationship, which states, that a rotation around the zero-position corresponds to a rotation in frequency-space, thus no reevaluation of the parameters using the algorithm is necessary.
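For the segment illumination, the label rotation described above can be sketched as a cyclic shift of the weight vector. This minimal Python example assumes equally sized angular segments in a single ring and rotation angles that are integer multiples of the segment width; the function name `rotate_segment_weights` is hypothetical.

```python
def rotate_segment_weights(weights, steps):
    """Rotate the source by `steps` segment widths via a cyclic shift."""
    n = len(weights)
    steps %= n
    return weights[-steps:] + weights[:-steps] if steps else weights[:]


# Four 90-degree segments: rotating the object by 90 degrees moves each
# weight to the neighbouring segment.
psi = [1.0, 0.0, 0.5, 0.0]
psi_rot90 = rotate_segment_weights(psi, 1)
psi_rot360 = rotate_segment_weights(psi, 4)
```

Rotation angles that are not multiples of the segment width would require interpolation between neighbouring weights, which this sketch does not cover.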

To overcome possible phase ambiguities, the complex object was split into real and imaginary part rather than amplitude and phase, which then creates a 2-layer 2D image and can be converted into a N × N × 2 matrix, where N corresponds to the number of pixels along x or y.
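A minimal Python sketch of this input encoding is given below. It converts amplitude and phase maps into an N × N × 2 nested list of real and imaginary parts; the function name `to_two_channel` and the sample values are illustrative assumptions.

```python
import cmath
import math


def to_two_channel(amplitude, phase):
    """Encode t = A * exp(i * phi) as [real, imag] per pixel."""
    rows = []
    for a_row, p_row in zip(amplitude, phase):
        row = []
        for a, p in zip(a_row, p_row):
            t = a * cmath.exp(1j * p)
            row.append([t.real, t.imag])
        rows.append(row)
    return rows


amplitude = [[1.0, 0.8], [0.9, 1.0]]
phase = [[0.0, math.pi / 2], [math.pi, -0.3]]
x = to_two_channel(amplitude, phase)

# The same object with every phase value shifted by 2 pi.
wrapped = to_two_channel(amplitude,
                         [[p + 2 * math.pi for p in row] for row in phase])
```

Both phase maps yield the same two-channel image up to rounding, which is why this representation is insensitive to 2π phase wraps.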

4.2 Architecture and training of the CNN

The cost function was defined as the mean-squared error (MSE) of the predicted and measured values m of the output intensities in the condenser aperture plane as

| (27) |

It turned out that the criterion for the CNN’s accuracy of simply counting exact matches between the continuous output and the dataset values would be too strict. Therefore the continuous output was quantized by rounding to the first decimal place. The accuracy is then calculated by summing over all equal output pairs and normalizing by the number of samples to get the relative accuracy

| (28) |
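The quantized accuracy can be sketched in Python as follows. The sketch assumes that matches are counted per output value after rounding to one decimal place; whether the original implementation counts per value or per complete parameter vector is not stated, so this is an assumption, as is the function name `quantised_accuracy`.

```python
def quantised_accuracy(predicted, target):
    """Fraction of outputs that agree after rounding to one decimal place."""
    matches = sum(round(p, 1) == round(t, 1)
                  for p, t in zip(predicted, target))
    return matches / len(target)


predicted = [0.12, 0.58, 0.91, 0.33]   # continuous CNN outputs (toy values)
target = [0.1, 0.6, 1.0, 0.3]          # optimized weights from the dataset
accuracy = quantised_accuracy(predicted, target)
```

In this toy example three of the four outputs fall into the same quantization bin as their targets.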

The dataset was separated into train, validation and test sets in the fractions 80/10/10%. A batch of 128 training samples was fed into the CNN, where the error was optimized using the ADAM [34] update rule with an empirically chosen learning rate of 10⁻². The architecture was derived from the standard fully supervised convolutional neural network (convnet) by LeCun et al. [35] and Krizhevsky et al. [36] and implemented in the open-source software Tensorflow [26], which also works on mobile devices with Android or iOS.

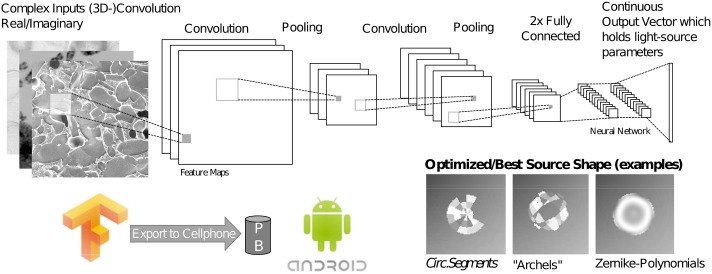

The complex 2D input image (two channels) xi is followed by a series of two 2D-conv layers including a maxpool layer with a feature size of 128 and kernel sizes of 9 × 9 and 7 × 7 respectively, as shown in Fig 3. As activation function the tanh function was chosen, as this, compared e.g. to the rectified linear (ReLU) activation function, is better suited for continuous output values, as suggested in [36]. The top few layers of the network are conventional fully-connected layers using dropout with a probability of 0.5 during training.

Fig 3. Basic architecture of the used CNN.

CNN which takes the complex 2-channel input images and the generated optimized light source parameters as the training data. The learned filters can then be exported to mobile devices i.e. Android smartphones.

The calculation was carried out on a Nvidia GTX TitanX, where the relative accuracy converged after about 10k iterations to a value of 67% in the testing phase, where we used the discretized error-norm described earlier.

The output of the training procedure is a set of filters, where each corresponds to object features which can be enhanced by using an appropriate source shape.

As mentioned earlier, there are always many possible solutions that could increase the phase contrast of an object, so the output describes only a certain probability of an optimized parameter set Ψ. Therefore we calculated the optimized illumination source for the 2D complex images from the test set using the iterative algorithm in Sec 3.0.2 and compared it to the intensity simulations obtained with the illumination source generated by the trained CNN. The learned source parameters even improved the overall contrast, defined as the difference between the minimum and maximum pixel values; the average improvement of the cost function, in this case the uniform norm, was about 2.5%.

The optimization procedure now reduces to a simple convolution, multiplication and summation of the input-vectors with the learned weights and the application of discriminator functions. Thus it can easily be performed by a mobile device such as a cellphone. The evaluation of the new output values resulting from the learned weights takes now tcomp = 30 ms on a computer and tcomp ≈ 430 ms on a cellphone, whereas the original algorithm in section 3 took more than tcomp = 20 s.

5 Methods

To prove that the numerical optimization enhances the phase contrast of a brightfield microscope, a standard upright research microscope (ZEISS Axio Examiner.Z1), equipped with a home-made SLM using a high-resolution smartphone display (iPhone 4S, Apple, USA) in the condenser plane, was used for the first tests. In comparison to LED condensers, as presented in [37], a ZEMAX simulation of the Koehler illumination using an LCD in the aperture plane shows two times better light efficiency, which also improves the SNR and allows lower exposure times of the camera.

The higher pixel density compared to the LED matrix also allows the calculation using the TCC without introducing large errors or artifacts in the acquired intensity images due to missing sampling points.

5.1 Setup of a portable smartphone microscope

The trained neural network shows its full potential when it comes to devices with low computational power, such as modern cellphones. The Tensorflow library [26] allows training the network on a desktop machine and exploiting the trained weights/filters on mobile hardware. Low-cost smartphone microscopes were shown in [37, 38] with single-LED as well as LED-matrix illumination and in a holographic on-chip configuration [39].

Here the Koehler illumination of a standard microscope is adapted to the mobile device, where a low-cost LED video projector (Jiam UC28+, China) was slightly modified by adding two additional lenses. To limit the spectral bandwidth of the illumination, necessary for getting the estimated phase from the DPC measurement, a green color filter (LEE HT 026, λ = 530nm ± 20nm) was chosen to keep the system’s efficiency as high as possible.

5.1.1 Optical and mechanical design

Following the approach by using a reversed smartphone lens (f# = keff = 2.0) of an iPhone 6 (Apple, USA) in [40] as the microscope objective, one can ensure a diffraction limited -1:1 imaging at a numerical aperture of .

The projector’s pattern is generated by an LC display (ILI9341) with a transmission of about 3% at 530 nm and a pixel size of 16 μm, illuminated by a high-power white-light LED equipped with a ground-glass diffuser. It can easily be controlled through the HDMI port (MPI) of the smartphone. To guarantee time-synchronized capture while manipulating the pattern, a customized app [41] was written to trigger the camera frame with the HDMI signal using Google’s Presentation API.

The housing of the video-projector does not allow an appropriate arrangement of the lenses to form even illumination in the sample plane which is mandatory to realize Koehler Illumination. Therefore a new optical design was developed with commercially available lenses.

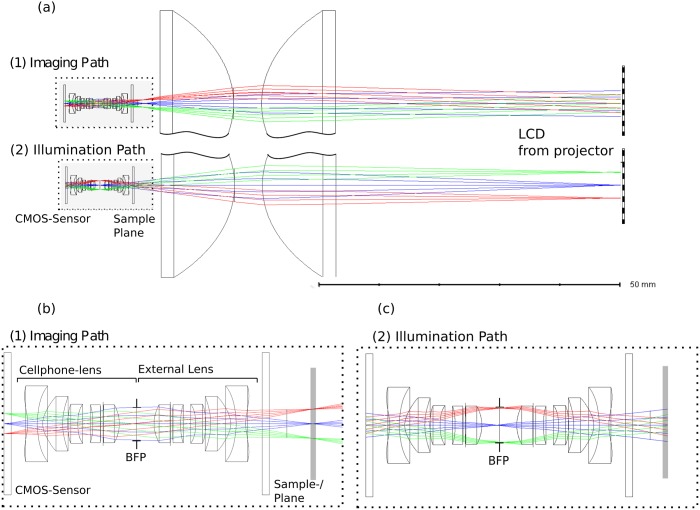

To ensure the Koehler condition, the LCD is imaged into the entrance pupil of the cellphone camera, which falls into the back focal plane (BFP) of the reversed cellphone lens. At the same time, the field diaphragm, which confines the visible field of view and reduces stray light, has to be imaged to infinity. Unlike most conventional microscope schemes, the optical setup is not telecentric and the cellphone lens has a large field angle of about 70°. The developed optical design in Fig 4, composed of two injection-molded aspherical lenses (Thorlabs ACL3026U) and the two cellphone lenses (microscope objective: iPhone 6 lens, tube lens: LG Nexus 5 lens), satisfies these two requirements. It has to be said that the active area of the LCD which forms the effective light source uses only a fraction of the LCD, so only a few pixels contribute to the image formation.

Fig 4. Optical Design of the illumination system.

(a) shows the microscope setup using a reversed camera lens, the location of the sample/slide plane and the LCD from the video projector. (b) shows the enlarged imaging path from (a), where the object is imaged onto the sensor. (c) shows that the illumination system images the condenser aperture (e.g. the LCD) into the BFP of the microscope objective.

An open-source 3D-printed case (Fig 5, available at [42]) was built to maintain the relationship between all optical components as well as to make the microscope portable. To adjust the Koehler condition, the housing of the condenser has a 3D-printed outer thread, which allows the z-position to be changed by simply rotating the lenses. It is therefore possible to set up the optical distances even if the cellphone is not in the correct position or another phone is used. To ensure optimal centring on the optical axis, the reversed cellphone lens was brought into position with a customized magnetic snap-fit adapter.

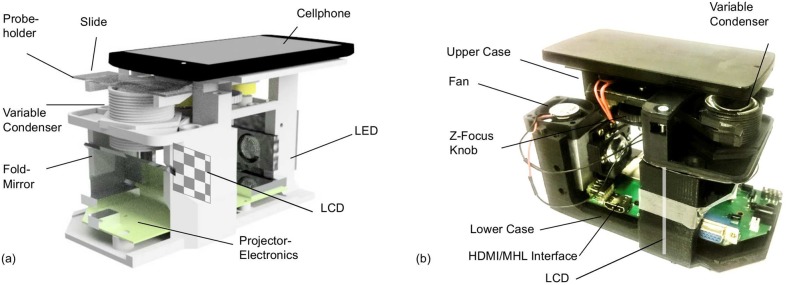

Fig 5. Rendering and 3D printed model of the microscope.

In (a) CAD rendering of the microscope, where the lens-distances were exported from the ZEMAX raytracing software to assure correct optical relationships. In (b) the fully automated microscope which uses a low-cost projector to quantitatively image the object’s phase. The location of the LCD is visualized as a white chessboard before a fold-mirror couples the light into the condenser.

The focusing of the object is likewise realized by a 3D-printed linear stage, which uses dry linear bearings (IGUS Drylin, Germany) on polished chrome rods in order to achieve precise linear motion. The adjustment is made with a micrometer screw (Mxfans, Micrometer 0-25mm, China).

5.1.2 Electrical and software design

The camera used in our experiments is that of an LG D850/Google Nexus 5 [43], which is, so far, the only smartphone camera on the market that fully supports Android’s Camera2 API and thus allows the readout of the raw pixel values of the sensor before post-processing, e.g. demosaicing [44].

Camera-control settings like the ISO value and exposure time, as well as the focus position, can be manipulated manually, which makes it possible to ensure a linear relationship between the illumination pattern and the gray value, as necessary to satisfy the model requirements in [44]. A gamma calibration is performed by taking an image sequence of 256 gray patterns displayed on the projector and estimating the look-up table (LUT) by fitting the pixel average of each image to a sigmoid function, which characterizes the display of the LCD appropriately.

From the acquired images, an algorithm adapted from Tian et al. calculates an estimate of the quantitative phase using the OpenCV4Android framework [45] and then feeds the pretrained neural network. The final result is a parameter vector which represents the intensity weights of the selected illumination pattern (e.g. circular segments).

The optimized result is then displayed on the LCD (e.g. the condenser aperture) using the MPI interface. Thus the entire microscope can work completely autonomously.

6 Results and discussion

The proposed method to improve the phase contrast by manipulating the condenser aperture was first tested on a standard research microscope (ZEISS Axio Examiner.Z1) with a magnification M = 20×, NA = 0.5 in air (ZEISS Plan-Neofluar) at a center wavelength of λ = 530 nm ± 20 nm to give a proof of principle before the method was evaluated on the cellphone microscope.

The relatively low transmission and extinction, the latter usually defined as the ratio of the pixel on/off states, of the iPhone display (ttrans = 6%, tex = 1:800 at λ0 = 530 nm) and the projector (ttrans = 3%, tex = 1:250 at λ0 = 530 nm) result in a low signal-to-noise ratio (SNR) and also in longer exposure times (e.g. >10× higher) compared to a mechanical condenser aperture. This effect was compensated by the high-power LED in the low-cost setup, where it was possible to get exposure times of texp < 10 ms.

An LCD can only approximate a continuous aperture shape such as a circular aperture, but in contrast to the LED-illumination shown in [3, 37], the pixel density is still higher and results in a better approximation of the fundamental imaging theory to calculate e.g. the quantitative DPC.

6.1 Improvement in phase contrast on the cellphone microscope

The method presented here allows for increasing the phase contrast by varying the illumination source and has some major advantages compared to computational post-processing, where phase contrast is enhanced. The SNR of the acquired images can always be maximized in the acquisition process by adjusting the exposure time and per-pixel gain, once the best light source is found, which also results in the optimal dynamic range of the camera sensor.

Further advantages of the incoherent light source are, that no speckle patterns in the image are visible and that it gives two times the optical resolution compared to coherent imaging methods [46].

6.1.1 Quantitative results of microscopic objects

In order to improve the phase contrast of an object, both the iterative and the machine-learning based version of our algorithm need information about the complex object transmission function. Therefore the open-sourced algorithm by Tian et al. [3], which reconstructs the phase from a series of images using the formalism of the WOTF, was implemented in the cellphone’s framework. It acquires a series of images while varying the illumination source.

To test its accuracy we mounted a commercially available glass fiber (Thorlabs SMF 28-100, n(λ = 530nm) = 1.42) in an immersion medium with n(λ = 530nm) = 1.46 and compared it with the expected value of the optical path difference shown in the graph in Fig 6(a).

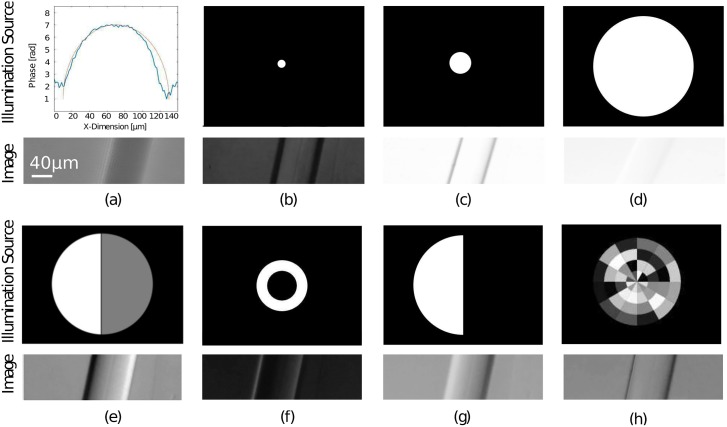

Fig 6. Quantitative and qualitative results produced by the portable microscope.

Quantitatively measured phase of a glass fiber immersed in oil using qDPC mode in (a); Intensity measurements and their corresponding illumination sources using brightfield mode with NAC = 0.1 (b), NAC = 0.2 (c), NAC = 0.5 (d); The computed DPC image in (e), a measurement in Darkfield mode (NAo < NAC) in (f), oblique illumination in (g) and the optimized light-source (NAC = 0.3; magnified for better visualization) using the CNN in (h) using (a) as the input image.

Processing the DPC images (Fig 6(e)) gives the quantitative phase map shown in (a). The phase jumps at the edges of the fiber are due to the discontinuous phase gradient of the object, which no longer satisfies the model of the WOTF. The optical setup is very robust against misalignments in the optical path, such as the position of the cellphone and the condenser relative to each other. A routine automatically “koehlers” the setup by centering the illumination aperture: it varies the aperture position on the LCD and searches for the maximum intensity. Precise quantitative phase reconstructions were possible in almost all cases.

The different acquisition modes (BF, DPC, qDPC, DF and the optimized illumination source) are shown in Fig 6(b)–6(h). Prior to the data acquisition, a series of background and flatfield images was acquired, so that possible inhomogeneities in the illumination caused by an incorrect alignment of the projector setup could be removed by a simple flatfield correction. The images are cropped to illustrate smaller details of the object. A non-technical object with a more complicated structure is shown in Fig 7.
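The automatic centering of the illumination aperture can be illustrated as a plain grid search. This is a sketch under stated assumptions rather than the routine used on the device: `measure_intensity` stands for a hypothetical callback that displays the aperture at a given LCD position and returns the mean camera intensity, and `fake_measure` is a simulated stand-in for the hardware.

```python
import math

def find_aperture_center(measure_intensity, width, height, step=8):
    """Grid search for the LCD aperture position that maximizes the intensity."""
    best_pos, best_val = None, float("-inf")
    for cy in range(0, height, step):
        for cx in range(0, width, step):
            val = measure_intensity(cx, cy)
            if val > best_val:
                best_pos, best_val = (cx, cy), val
    return best_pos, best_val

def fake_measure(cx, cy, true_center=(200, 120), sigma=60.0):
    """Simulated camera response: intensity peaks when the aperture is centered."""
    dist_sq = (cx - true_center[0]) ** 2 + (cy - true_center[1]) ** 2
    return math.exp(-dist_sq / (2.0 * sigma ** 2))

position, intensity = find_aperture_center(fake_measure, 400, 240, step=8)
print(position, intensity)
```

A coarse search followed by a finer one around the best position would reduce the number of exposures, which matters when each measurement requires a camera frame.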
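The flatfield correction mentioned above is commonly written as (raw − background) / (flatfield − background). The sketch below assumes this standard form and assumes that the series of background and flatfield images have already been averaged into one image each; the exact procedure used on the cellphone is not specified in the text.

```python
def flatfield_correct(raw, flat, dark, eps=1e-6):
    """Return (raw - dark) / (flat - dark), pixel by pixel, for 2D lists."""
    corrected = []
    for raw_row, flat_row, dark_row in zip(raw, flat, dark):
        row = []
        for r, f, d in zip(raw_row, flat_row, dark_row):
            denom = max(f - d, eps)  # guard against division by zero
            row.append((r - d) / denom)
        corrected.append(row)
    return corrected

# Toy 2x2 example: the right column is illuminated only half as brightly
dark = [[10.0, 10.0], [10.0, 10.0]]
flat = [[110.0, 60.0], [110.0, 60.0]]
raw = [[60.0, 35.0], [110.0, 60.0]]
result = flatfield_correct(raw, flat, dark)
print(result)
```

In the toy example both pixels in the top row come out with the same value after correction, although the raw values differ because of the uneven illumination.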

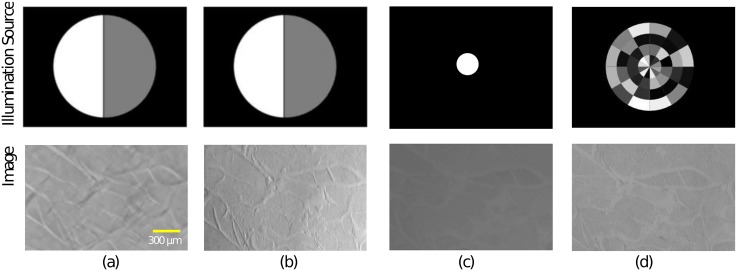

Fig 7. Quantitative and qualitative results produced by the portable microscope.

Quantitatively measured phase of taste buds of a rabbit using qDPC mode in (a); the computed DPC image in (b); the intensity measurement and its corresponding illumination source using brightfield mode with NAC = 0.2 in (c); and the optimized light source (NAC = 0.3; magnified for better visualization) obtained with the CNN in (d), using (a) as the input image.

6.1.2 Results of the optimized light source

A quantitative measure of image quality cannot be defined unambiguously, because the best contrast remains in the eye of the beholder. Nevertheless, we quantified the contrast improvement by computing the error norms that were also used to train the network. Results for the fiber (Fig 6) and the biological sample (Fig 7) are shown in Tables 1 and 2, respectively. The image contrast was calculated from the minimum and maximum pixel values, and the StDv was calculated using Matlab's stdfilt method. The comparison of two images is degraded by the fact that the objects, even though very thin, show multiple-scattering effects, which shift, for example, the intensity information along x/y once the object is illuminated obliquely.
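For illustration, two of these measures can be sketched as follows. The definitions are assumptions, since the formulas are not reproduced here: the contrast is taken to be the Michelson contrast computed from the minimum and maximum pixel values, and the PSNR uses its textbook definition with a peak value of 1. The fidelity measure (Eq (25)) and the local standard deviation are not modeled.

```python
import math

def michelson_contrast(img):
    """Contrast from min/max pixel values: (Imax - Imin) / (Imax + Imin)."""
    pixels = [p for row in img for p in row]
    i_min, i_max = min(pixels), max(pixels)
    if i_max + i_min == 0:
        return 0.0
    return (i_max - i_min) / (i_max + i_min)

def psnr(img, ref, peak=1.0):
    """Peak signal-to-noise ratio in dB of img with respect to a reference."""
    diffs = [(a - b) ** 2
             for row_a, row_b in zip(img, ref)
             for a, b in zip(row_a, row_b)]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return float("inf")
    return 10.0 * math.log10(peak ** 2 / mse)

# Toy images with pixel values normalized to [0, 1]
image = [[0.2, 0.8], [0.5, 0.5]]
reference = [[0.0, 1.0], [0.5, 0.5]]
contrast = michelson_contrast(image)
quality = psnr(image, reference)
print(f"contrast = {contrast:.2f}, PSNR = {quality:.2f} dB")
```

Because a min/max contrast depends on only two pixel values, it is sensitive to outliers such as hot pixels, which is one reason why several measures are reported side by side.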

Table 1. Contrast measurements of intensity acquisitions of the fiber differently illuminated.

| Fig 6 | Method and NA | PSNR | Fidelity | StDv | Contrast |
|---|---|---|---|---|---|
| (b) | BF; NAc = 0.1 | 53.83 | 490.35 | 0.64 | 0.55 |
| (c) | BF; NAc = 0.2 | 59.84 | 15383.45 | 0.42 | 0.18 |
| (d) | BF; NAc = 0.5 | 58.05 | 14835.30 | 0.51 | 0.04 |
| (f) | DF; NAc = 0.4 | 54.18 | 8419.68 | 0.65 | 0.74 |
| (g) | DPC; NAc = 0.5 | 49.47 | 1350.69 | 0.68 | 0.49 |
| (h) | Opt. Illu.; NAc = 0.3 | 55.95 | 303.77 | 0.65 | 0.49 |

Comparison of reference and non-reference image measurements of the intensity acquisitions done with different illumination shapes from the fiber. The qDPC image was chosen to act as the reference image.

Table 2. Contrast measurements of intensity acquisitions of the rabbit taste bud differently illuminated.

| Fig 7 | Method and NA | PSNR | Fidelity | StDv | Contrast |
|---|---|---|---|---|---|
| (b) | BF; NAc = 0.2 | 46.359 | 0.106 | 0.0034 | 0.27 |
| (c) | DPC; NAc = 0.5 | 50.93 | 0.0257 | 0.0053 | 0.99 |
| (d) | Opt. Illu.; NAc = 0.3 | 51.63 | 0.023 | 0.008 | 0.39 |

Comparison of reference and non-reference image quality measurements of the intensity acquisitions done with different illumination shapes from the taste bud of a rabbit. The qDPC image was chosen to act as the reference image.

These results suggest that the fidelity (Eq (25)) improves compared to brightfield mode, without the need to acquire multiple images and post-process them as in DPC mode.

To demonstrate the effect of the optimized source using the algorithm in Sec 4, we imaged a histological thin section of taste-bud cells from a rabbit, which shows almost no amplitude contrast in BF mode with NAc = 0.2 (Fig 7(c)). The resulting optimized light source with NAc = 0.3 is illustrated in Fig 7(d). The darkfield-like aperture, in which the frequencies that do not contribute to the image formation are damped by lowering the intensity of the corresponding segments, improves the contrast compared to BF mode. Introducing oblique illumination results in a higher coherence factor $S = NA_c / NA_o$, where NAo is the numerical aperture of the objective lens. This makes it possible to resolve object structures beyond the limit given by the typical Rayleigh criterion $D_{min} = 1.22\,\lambda / NA_{eff}$, where NAeff = NAc + NAo and Dmin is the diameter of the smallest resolvable object structure.

Consequently, the highest possible spatial frequency is given by $k_{max} = NA_{eff} / \lambda$; thus the pixel pitch for Nyquist sampling must be less than or equal to $\lambda / (2\,NA_{eff})$, which results in a maximum optical resolution of Dmin ≤ 2.44 ⋅ pixel size. Following the theoretical investigation in [40], the Nyquist-limited resolution of the LG Nexus 5, with a pixel pitch of 1.9 μm, is ≈4.4 μm at λ = 550 nm, where the Bayer pattern has already been taken into account.
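The relation between the Rayleigh limit, the Nyquist pixel pitch and the factor 2.44 can be checked numerically. The sketch below assumes the common forms Dmin = 1.22 λ / NAeff and a Nyquist pitch of λ / (2 NAeff); the numerical apertures in the example (NAc = 0.3, NAo = 0.25) are illustrative values taken from elsewhere in the text, and magnification and the Bayer pattern are not modeled.

```python
def rayleigh_limit_um(wavelength_um, na_condenser, na_objective):
    """Smallest resolvable structure, assuming Dmin = 1.22 * lambda / NAeff."""
    na_eff = na_condenser + na_objective
    return 1.22 * wavelength_um / na_eff

def nyquist_pitch_um(wavelength_um, na_condenser, na_objective):
    """Largest pixel pitch that samples the cutoff frequency NAeff / lambda."""
    na_eff = na_condenser + na_objective
    return wavelength_um / (2.0 * na_eff)

def pixel_limited_resolution_um(pixel_pitch_um):
    """Resolution when the pixel pitch, not the optics, is the limit."""
    return 2.44 * pixel_pitch_um

d_min = rayleigh_limit_um(0.55, 0.3, 0.25)
pitch = nyquist_pitch_um(0.55, 0.3, 0.25)
ratio = d_min / pitch  # equals 2.44 regardless of wavelength and NA
sensor_limit = pixel_limited_resolution_um(1.9)
print(f"Dmin = {d_min:.2f} um, Nyquist pitch = {pitch:.2f} um, ratio = {ratio:.2f}")
print(f"pixel-limited resolution at 1.9 um pitch: {sensor_limit:.2f} um")
```

For a pitch of 1.9 μm the plain 2.44 rule gives about 4.6 μm, close to the ≈4.4 μm quoted above, which follows [40] and includes the Bayer-pattern treatment that this sketch leaves out.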

Because we modeled our optimization procedure implicitly using a machine-learning approach, one can hardly judge what the neural network really learned. From the experiments it seems that the algorithm “liked” to give a higher weight to the outer segments of the circular segmented source. This reduces the zeroth order and can eventually increase the contrast, because less “false” light is used for the image formation, as mentioned earlier. Besides that, it appears that the method promotes the major diffraction orders of a sample by illuminating the vertically oriented fiber from the left and right. This illumination scheme is also explicitly represented by the so-called Archels-Method [47].

Not all of the quality measures in Table 1 confirm a better contrast for the optimized illumination source, so it would be bold to claim that the method improves the image quality in every respect. The complexity and large number of parameters of the algorithm can degrade the result of the method. Nevertheless, the experimental results visibly show a better phase contrast without the use of expensive optics, without the drawback of obtaining only the phase gradient as in DPC, and without being limited to higher-order scattering as in DF. The newly introduced method can use the full numerical aperture of the condenser and requires no post-processing of the images once the source pattern has been created.

Conclusion

In most cases, the computational and imaging potential of a cellphone is not fully exploited. Cellphones provide an integrated framework with an already existing infrastructure for image acquisition and hardware synchronization, as well as for rapid development of user-defined image processing applications.

The widespread availability of high-quality cellphone cameras, which are mass-produced in well-controlled production processes, provides a tool that can be further enhanced by adding external hardware, such as the automated brightfield microscope presented here.

The field of computational imaging can then use the hardware in such a way that new imaging techniques, such as qDPC, can be deployed on low-cost devices with high precision. Techniques to surpass the optical resolution limit that have been around for a long time, e.g. the SMO, can take advantage of new machine-learning applications, which make it possible to carry out these usually computationally expensive calculations on embedded devices, as shown in this study.

In recent years, the idea of making the sources for hardware and software available to the public has become widely accepted, and it enables faster development cycles. Techniques such as rapid prototyping then offer ways to produce cost-effective microscopes, e.g. for use in developing countries and educational systems.

Further studies, e.g. [37, 38, 40, 44], show that the quality and characteristics of cellphone cameras improve with each iteration of new hardware. The optical quality of injection-molded cellphone lenses allows diffraction-limited microscopic imaging with an NA = 0.25 at a price of less than 5 $, which opens opportunities to democratize health care or microscopic imaging in general. The results presented here show an optical resolution down to 2 μm with the ability to measure the phase quantitatively at high accuracy, as well as adaptive phase-contrast imaging using an actively controlled illumination source, for a price well below 100 $.

The platform created here makes it possible to integrate other imaging techniques, such as Fourier ptychographic microscopy (FPM) [48–50], or to include further image processing in the cellphone’s software, such as tracking and classification of biological cells [51].

Acknowledgments

The authors thank Joerg Sprenger and Marzena Franek (CZ Microscopy GmbH) for helpful conversations as well as support and Jörg Schaffer (CZ Microscopy GmbH) for providing the research microscope.

Data Availability

All study data is available from GitHub at: https://github.com/bionanoimaging/Beamerscope_TENSORFLOW, https://github.com/bionanoimaging/Beamerscope_MATLAB, https://github.com/bionanoimaging/Beamerscope_CAD and https://github.com/bionanoimaging/Beamerscope-Android.

Funding Statement

The work was funded by the Carl-Zeiss Microscopy GmbH Göttingen as an R&D project and by the free state of Thuringia. The funding organization did not play a role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript and only provided financial support in the form of authors’ salaries and/or research materials. The specific roles of these authors are articulated in the ‘author contributions’ section. Hereby I declare that Carl Zeiss Microscopy GmbH did not play a role in the design of the study and the experimental setups. CZ supported the study with professional lab equipment, such as the microscope and the microscope objective lenses. The contributing authors were free in formulating their working hypothesis and the ways to prove it.

References

- 1. Hamilton DK, Sheppard CJR, Wilson T. Improved imaging of phase gradients in scanning optical microscopy. J Microsc. 1984;135(September):275–286. doi: 10.1111/j.1365-2818.1984.tb02533.x

- 2. Ryde N. Linear phase imaging using differential interference contrast microscopy. October. 2006;214(April 2004):7–12.

- 3. Tian L, Waller L. Quantitative differential phase contrast imaging in an LED array microscope. Optics Express. 2015;23(9):11394 doi: 10.1364/OE.23.011394

- 4. Zuo C, Chen Q, Tian L, Waller L, Asundi A. Transport of intensity phase retrieval and computational imaging for partially coherent fields: The phase space perspective. Optics and Lasers in Engineering. 2015;71:20–32. doi: 10.1016/j.optlaseng.2015.03.006

- 5. Liebling M, Blu T, Unser M. Complex-wave retrieval from a single off-axis hologram. Journal of the Optical Society of America A. 2004;21(3):367 doi: 10.1364/JOSAA.21.000367

- 6. Greenbaum A, Akbari N, Feizi A, Luo W, Ozcan A, Wang J, et al. Field-Portable Pixel Super-Resolution Colour Microscope. PLoS ONE. 2013;8(9):e76475 doi: 10.1371/journal.pone.0076475

- 7. Liu Z, Tian L, Waller L. Multi-mode microscopy in real-time with LED array illumination. International Society for Optics and Photonics; 2015. p. 93362M. Available from: http://proceedings.spiedigitallibrary.org/proceeding.aspx?doi=10.1117/12.2075951

- 8. Jung D, Choi JH, Kim S, Ryu S, Lee W, Lee JS, et al. Smartphone-based multi-contrast microscope using color-multiplexed illumination. Scientific Reports. 2017;7(1):7564 doi: 10.1038/s41598-017-07703-w

- 9. Siedentopf H, Zsigmondy R. Uber Sichtbarmachung und Größenbestimmung ultramikoskopischer Teilchen, mit besonderer Anwendung auf Goldrubingläser. Annalen der Physik. 1903;315(1):1–39. doi: 10.1002/andp.19023150102

- 10. Garetto A, Scheruebl T, Peters JH. Aerial imaging technology for photomask qualification: from a microscope to a metrology tool. Advanced Optical Technologies. 2012;1(4):289–298. doi: 10.1515/aot-2012-0124

- 11. Hopkins HH. On the diffraction theory of optical images. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences. 1952;217(1130):408–432. doi: 10.1098/rspa.1953.0071

- 12. Abbe E. Beiträge zur Theorie des Mikroskops und der mikroskopischen Wahrnehmung. Archiv fuer mikroskopische Anatomie. 1873;9(1):413–418. doi: 10.1007/BF02956173

- 13. Kumar MSB. Phase-Space Perspective of Partially Coherent Imaging: Applications to Biological Phase Microscopy. Thesis. 2010.

- 14. Hofmann C. Die optische Abbildung; 1980. Available from: https://books.google.de/books/about/Die_optische_Abbildung.html?id=_1yEpwAACAAJ&pgis=1

- 15. Gross H, Singer W, Totzeck M, Gross H. Handbook of Optical Systems. vol. 2; 2006.

- 16. Cobb N. Fast Optical and Process Proximity Correction Algorithms for Integrated Circuit Manufacturing [PhD]. University of California at Berkeley; 1998. Available from: http://www-video.eecs.berkeley.edu/papers/ncobb/cobb_phd_thesis.pdf

- 17. Yamazoe K. By Stacked Pupil Shift Matrix. America. 2008;25(12):3111–3119.

- 18. Yamazoe K. Aerial image back propagation with two-dimensional transmission cross coefficient. Journal of Micro/Nanolithography, MEMS, and MOEMS. 2009;8(3):031406 doi: 10.1117/1.3206980

- 19. Mehta SB, Sheppard CJR. Quantitative phase-gradient imaging at high resolution with asymmetric illumination-based differential phase contrast. Optics letters. 2009;34(13):1924–1926. doi: 10.1364/OL.34.001924

- 20. Bian Z. The Applications of Low-cost Liquid Crystal Display for Light Field Modulation and Multimodal Microscopy Imaging. Master’s Theses 746. 2015.

- 21. Iglesias I, Vargas-Martin F. Quantitative phase microscopy of transparent samples using a liquid crystal display. Journal of biomedical optics. 2013;18(2):26015 doi: 10.1117/1.JBO.18.2.026015

- 22. Behrens J. Ueber ultramikroskopische Abbildung linearer Objekte. Zeitschrift fur wissenschaftliche Mikroskopie und fur mikroskopische Technik. 1915;32(1):508.

- 23. Wu X, Liu S, Li J, Lam EY. Efficient source mask optimization with Zernike polynomial functions for source representation. Opt Express. 2014;22(4):3924–3937. doi: 10.1364/OE.22.003924

- 24. Eberhart RC, Shi Y. Computational Intelligence: Concepts to Implementations. March Elsevier; 2007.

- 25. Chen S, Montgomery J, Bolufé-Röhler A. Measuring the curse of dimensionality and its effects on particle swarm optimization and differential evolution. Applied Intelligence. 2015;42(3):514–526. doi: 10.1007/s10489-014-0613-2

- 26. Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015.

- 27. Diederich B. Beamerscope Matlab Github Repository; 2017. Available from: https://github.com/bionanoimaging/Beamerscope_MATLAB

- 28. Diederich B. Beamerscope Tensorflow Github Repository; 2017. Available from: https://github.com/bionanoimaging/Beamerscope_TENSORFLOW

- 29. Sommer C, Gerlich DW. Machine learning in cell biology—teaching computers to recognize phenotypes. Journal of Cell Science. 2013;126(24). doi: 10.1242/jcs.123604

- 30. Kamilov US, Papadopoulos IN, Shoreh MH, Goy A, Vonesch C, Unser M, et al. Learning approach to optical tomography. Optica. 2015;2(6):517 doi: 10.1364/OPTICA.2.000517

- 31. Losa GA, Losa, Angelo G. Fractals in Biology and Medicine. In: Encyclopedia of Molecular Cell Biology and Molecular Medicine. Weinheim, Germany: Wiley-VCH Verlag GmbH & Co. KGaA; 2011. Available from: http://doi.wiley.com/10.1002/3527600906.mcb.201100002

- 32. Brandner D and Withers G. The Cell Image Library; 2010. Available from: http://www.cellimagelibrary.org/

- 33. Larry Page SB. Google Bilder. Available from: https://www.google.de/imghp?hl=de&tab=wi

- 34. Kingma D, Ba J. Adam: A Method for Stochastic Optimization. 2014.

- 35. Le Cun Jackel, B Boser, J S Denker, D Henderson, R E Howard, W Hubbard, et al. Handwritten Digit Recognition with a Back-Propagation Network. Advances in Neural Information Processing Systems. 1990; p. 396–404.

- 36. Krizhevsky A, Sutskever I, Geoffrey E H. ImageNet Classification with Deep Convolutional Neural Networks. Advances in Neural Information Processing Systems 25 (NIPS2012). 2012; p. 1–9.

- 37. Phillips ZF, D’Ambrosio MV, Tian L, Rulison JJ, Patel HS, Sadras N, et al. Multi-Contrast Imaging and Digital Refocusing on a Mobile Microscope with a Domed LED Array. PLOS ONE. 2015;10(5):e0124938 doi: 10.1371/journal.pone.0124938

- 38. Breslauer DN, Maamari RN, Switz NA, Lam WA, Fletcher DA. Mobile phone based clinical microscopy for global health applications. PloS one. 2009;4(7):e6320 doi: 10.1371/journal.pone.0006320

- 39. Tseng D, Mudanyali O, Oztoprak C, Isikman SO, Sencan I, Yaglidere O, et al. Lensfree microscopy on a cellphone. Lab on a chip. 2010;10(14):1787–92. doi: 10.1039/c003477k

- 40. Switz NA, D’Ambrosio MV, Fletcher DA, Petti C, Polage C, Quinn T, et al. Low-Cost Mobile Phone Microscopy with a Reversed Mobile Phone Camera Lens. PLoS ONE. 2014;9(5):e95330 doi: 10.1371/journal.pone.0095330

- 41. Diederich B. Beamerscope Android Github Repository; 2017. Available from: https://github.com/bionanoimaging/Beamerscope-ANDROID

- 42. Diederich B. Beamerscope CAD Github Repository; 2017. Available from: https://github.com/bionanoimaging/Beamerscope_CAD

- 43. Electronics L. LG Consumer & Business Electronics | LG Deutschland. Available from: http://www.lg.com/de

- 44. Skandarajah A, Reber CD, Switz NA, Fletcher DA. Quantitative imaging with a mobile phone microscope. PLoS ONE. 2014;9(5). doi: 10.1371/journal.pone.0096906

- 45. 3 0 O. OpenCV | OpenCV. Available from: http://opencv.org/

- 46. Tian L, Waller L. 3D intensity and phase imaging from light field measurements in an LED array microscope. Optica. 2015;2(2):104–111. doi: 10.1364/OPTICA.2.000104

- 47. Granik Y. Source optimization for image fidelity and throughput. Journal of Microlithography, Microfabrication, and Microsystems. 2004;3(4):509.

- 48. Tian L, Li X, Ramchandran K, Waller L. Multiplexed coded illumination for Fourier Ptychography with an LED array microscope. Biomedical optics express. 2014;5(7):2376–89. doi: 10.1364/BOE.5.002376

- 49. Ou X, Horstmeyer R, Zheng G, Yang C. High numerical aperture Fourier ptychography: principle, implementation and characterization. Optics express. 2015;23(3):5473–5480. doi: 10.1364/OE.23.003472

- 50. Zheng G, Horstmeyer R, Yang C. Ptychographic Microscopy; p. 1–15.

- 51. Angermueller C, Pärnamaa T, Parts L, Oliver S. Deep Learning for Computational Biology. Molecular Systems Biology. 2016;(12):878 doi: 10.15252/msb.20156651
