Abstract

Introduction:

In 2011, the Agence de la santé et des services sociaux de Montréal (ASSSM), in partnership with the region’s Centres de santé et de services sociaux (CSSS), coordinated the implementation of a cardiometabolic risk program based on the Chronic Care Model. The program, intended for patients with diabetes or hypertension, involved a series of individual follow-up appointments, group classes and exercise sessions. Our study assesses how variations among the six participating CSSSs in the implementation of certain aspects of the program affected patient health outcomes.

Methods:

The evaluation used a quasi-experimental “before and after” design. Implementation variables were constructed from data collected during the implementation analysis on resources, compliance with the clinical process set out in the regional program, program experience and internal coordination within the care team. Difference in differences estimates with propensity score matching were calculated for HbA1c, achievement of the blood pressure (BP) target and achievement of two lifestyle targets (exercise level and carbohydrate distribution) at the 6- and 12-month follow-ups, according to patients’ greater or lesser exposure to the implementation of the program aspects studied.

Results:

The results focus on the 1185 patients for whom data were available at the 6-month follow-up and the 992 patients with data at the 12-month follow-up. The difference in differences analysis shows no clear association between the extent of implementation of the program aspects studied and patient health outcomes.

Conclusion:

The program’s effects on selected health indicators appear to be independent of variations in program implementation among the participating CSSSs. The results suggest that the effects of this type of program depend more on the delivery of interventions to patients than on the organizational aspects of its implementation.

Keywords: chronic disease, diabetes, hypertension, primary health care

Highlights

The six CSSSs in the study implemented a program with moderate local variations.

Local variations between the CSSSs with regard to program implementation do not appear to have had an impact on patient health outcomes.

The results seem to indicate that the program’s impact depends more on the patient’s progress through the clinical process, which is based on aspects of the Chronic Care Model, than on the program’s organizational aspects.

Introduction

The steady increase in the prevalence of diabetes mellitus and high blood pressure (HBP) among Canadians is worrisome. Because the two diseases share an etiology and each is a major risk factor for heart disease,1,2 it is logical to consider them jointly in a prevention and management approach.

The Chronic Care Model (CCM) is a framework for organizing chronic disease care that can guide health care reform to optimize chronic disease management.3 In 2011, the Agence de la santé et des services sociaux de Montréal (ASSSM), in partnership with the region’s Centres de santé et de services sociaux (CSSS), coordinated the implementation of an integrated, interdisciplinary cardiometabolic risk prevention and intervention program. The two-year program, inspired by the CCM, aimed to bring about lifestyle changes, restore biological indicators, prevent complications and empower patients with diabetes or hypertension (additional information on the program and the eligibility criteria is available from the authors).

A number of studies have shown that CCM-based interventions not only improve care processes and health outcomes but also reduce costs and service use among patients with chronic diseases,4 particularly in the case of diabetes.5 Although studies have attempted to link the implementation of the CCM to patient outcomes in order to determine which specific elements, or combination of elements, yield the best results, none has been identified to date.6,7 In addition, to our knowledge, no studies have examined how the implementation context, and variations in the implementation of a CCM-inspired intervention across local settings, relate to patient outcomes.

The purpose of this study is to assess, as part of the implementation of the program in the various CSSSs, the effects of variations in the implementation of certain aspects of the program on patient health indicators.

Methods

Study design

Our study is a secondary analysis carried out as part of the evaluation of the cardiometabolic risk program in Montréal.8 A quasi-experimental approach was used to assess the effects of variations in the implementation of certain aspects of the program on patient health outcomes.9

Six of the 12 CSSSs in Montréal took part in the evaluation. They participated voluntarily and agreed to comply with the general program implementation framework suggested by the Agency. Patient recruitment was carried out by CSSS staff and took place from March 2011 to August 2013. The objective was to have each CSSS in the study recruit 300 patients per year for a total of 1500 patients per year, with anticipated attrition of approximately 15%.

Data sources and definition of variables

Data on program implementation were drawn from the implementation analysis, whose purpose was to provide an overall assessment of the program. This analysis was based on the program’s logic model and on a conceptual framework of the factors that explain the degree of implementation. The data are qualitative and were collected in three phases (at the outset of program implementation in March 2011, or implementation T0; 20 months later, in November 2012, or implementation T20; and in June and July 2014, 40 months after implementation began, or implementation T40) using a variety of methods: semi-formal interviews with local and regional officers, collection of official documents, and questionnaires for the managers in charge of the program and the stakeholders involved in it in each territory.

Independent variables

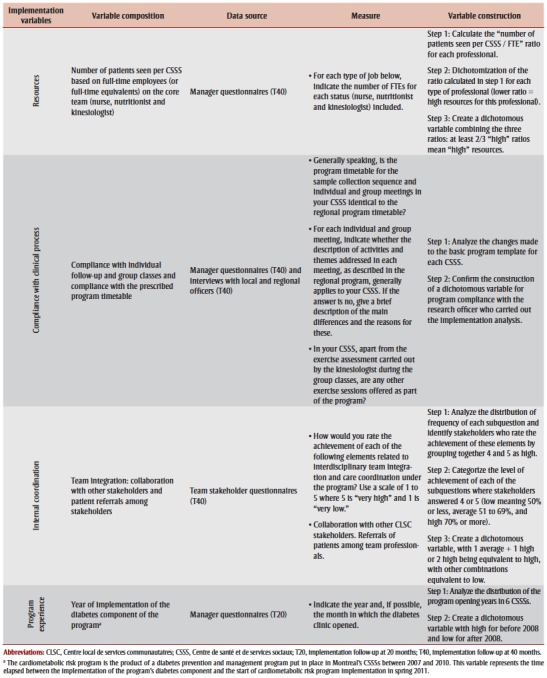

The study’s independent variables are the variations between participating CSSSs, at T40 (i.e. once the implementation analysis was complete), in the implementation of four aspects of the program. We selected the aspects most likely to affect patient health outcomes: resources, compliance with the clinical process planned in the regional program, internal coordination of the health team, and program experience. These “implementation variables” were dichotomized in order to compare two groups of patients: those exposed to the program in CSSSs where the characteristic under study had been implemented more strongly (the “high implementation variable exposure” group), and those exposed to the program in CSSSs where it had been implemented less strongly (the “low implementation variable exposure” group). Resources correspond to the number of patients seen in each CSSS relative to the full-time-equivalent staff on the core team (nurses, nutritionist and kinesiologist). Compliance with the clinical process refers to compliance with the individual follow-ups, group classes and calendar set out in the regional program. Internal coordination refers to team integration in terms of collaboration with other stakeholders and patient referrals among stakeholders. Program experience refers to the number of years since implementation of the first program component (diabetes), and also to the greater stakeholder experience with the program noted in the qualitative implementation analysis carried out prior to this study.

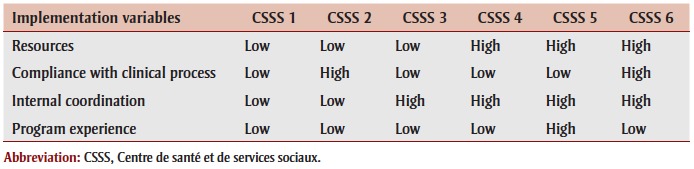

CSSS 1 was weak in its implementation of the four program components. CSSS 2 had more extensive program compliance. CSSS 3 was the strongest in implementing internal coordination. CSSSs 4, 5 and 6 were those that invested the most resources in the program and whose internal coordination was implemented most extensively. In addition, CSSS 6 had high compliance with the prescribed clinical process, and CSSS 5 distinguished itself with its program experience.

Each of the implementation variables was analyzed individually, as it was impossible to compare CSSSs that implemented all the variables with high intensity to those that implemented all the variables with lesser intensity (Table 1).

TABLE 1. Distribution of the four implementation variables for each CSSS.


The implementation variables were dichotomized taking into account their distribution, the implementation analysis findings, the small number of CSSSs, the moderate variability among CSSSs in the extent of implementation of the program aspects studied and, lastly, the choice of analysis method. Data sources and details of variable construction (including dichotomization) are set out in Table 2. The composition of the “high exposure to the implementation variable” and “low exposure to the implementation variable” groups differs for each implementation variable. Details of patient characteristics for each group are available upon request from the authors.

TABLE 2. Implementation variables: definition, data sources and construction.


Dependent variables

The four dependent variables correspond to four health indicators: two clinical indicators, namely glycosylated hemoglobin (HbA1c) and blood pressure (BP), and two lifestyle indicators, namely exercise (EX) level and carbohydrate distribution. Data on the biological parameters (HbA1c and BP) and lifestyle (EX level and carbohydrate distribution) of each patient taking part in the evaluation were extracted from the regional computerized chronic disease registry created by the ASSSM and implemented in the CSSSs as part of the project. Sociodemographic and health characteristics were drawn from a self-administered questionnaire, taking approximately 20 minutes to complete, that patients filled out on entry into the program (T0).

Glycemic control was measured using HbA1c, which is expressed as a percentage and represents the proportion of glycosylated hemoglobin relative to total hemoglobin.10 Achieving the BP target means achieving (yes or no) the treatment target (below 140/90 mm Hg for non-diabetics and below 130/80 mm Hg for diabetics). The EX target, assessed with a brief questionnaire adapted from the Enquête québécoise sur l’activité physique et la santé11 and administered to the patient at each visit, is achieved when the EX level is 3 or 4 on a scale of 1 to 4; the level reflects the number of days on which the patient did at least 30 minutes of exercise, weighted by activity intensity. Achievement of the balanced carbohydrate distribution (BCD) target is determined by the nutritionist, who assesses at each visit whether the patient’s carbohydrate distribution is balanced according to his or her personalized food plan. Food plans are based on the document Meal Planning for People with Diabetes at a Glance.12
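Two of these binary targets follow explicit thresholds and can be written as simple decision rules. The sketch below is illustrative only: the function names are hypothetical, and it assumes that a reading is “below 140/90” only when both the systolic and the diastolic values are below their respective thresholds, an interpretation the text does not spell out. The BCD target is left out because it rests on the nutritionist’s clinical judgment rather than on a threshold.

```python
def bp_target_met(systolic: float, diastolic: float, diabetic: bool) -> bool:
    """Return True if both readings (mm Hg) are below the treatment target.

    Assumption: "below 140/90" means systolic < 140 AND diastolic < 90
    (130/80 for diabetic patients).
    """
    sys_limit, dia_limit = (130, 80) if diabetic else (140, 90)
    return systolic < sys_limit and diastolic < dia_limit


def ex_target_met(ex_level: int) -> bool:
    """EX level on the 1-4 scale; levels 3 and 4 count as meeting the target."""
    if ex_level not in (1, 2, 3, 4):
        raise ValueError("EX level must be an integer from 1 to 4")
    return ex_level >= 3
```

For example, a reading of 135/85 mm Hg meets the target for a non-diabetic patient but not for a diabetic patient.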

Data analysis

The unit of intervention is the same as the unit of analysis: the patient, exposed to the implementation variables of his or her CSSS.

Before the analyses, missing T0 data on the health indicators studied (10% to 15% of the data) were imputed using the hot deck method13 to reduce non-response bias.14
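The article does not specify which hot deck variant was used. As an illustration only, the sketch below implements a random hot deck within adjustment cells: each missing value is replaced by a value drawn at random from a “donor” patient with an observed value in the same cell. The function name and the choice of cell variables are hypothetical.

```python
import random
from collections import defaultdict


def hot_deck_impute(records, var, cell_keys, seed=0):
    """Random hot-deck imputation within adjustment cells.

    records   -- list of dicts, one per patient; a missing value is None
    var       -- name of the variable to impute (e.g. a T0 health indicator)
    cell_keys -- variables defining the cells in which donors are sought
    Returns new dicts; a recipient whose cell has no donor keeps None.
    """
    rng = random.Random(seed)  # seeded so the imputation is reproducible

    # Pool the observed values ("donors") by cell.
    donors = defaultdict(list)
    for rec in records:
        if rec[var] is not None:
            donors[tuple(rec[k] for k in cell_keys)].append(rec[var])

    imputed = []
    for rec in records:
        new = dict(rec)  # copy: the input records are left untouched
        if new[var] is None:
            pool = donors.get(tuple(rec[k] for k in cell_keys), [])
            if pool:
                new[var] = rng.choice(pool)
        imputed.append(new)
    return imputed
```

Drawing donors from the same cell keeps imputed values consistent with patients who resemble the recipient on the cell variables, which is how hot deck imputation aims to limit non-response bias.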

Difference in differences (DID) estimates were calculated to measure the impact of implementation variables on the health indicators studied.15 A separate model was constructed for each implementation variable, each health outcome and each analysis period.
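Assuming that each model compares the change in a health indicator between T0 and a follow-up time in the two exposure groups, the DID estimate can be written as follows, where $H$ and $L$ denote the high- and low-exposure groups, $t \in \{6, 12\}$ months, and $\bar{Y}$ is a group mean (or the proportion of patients achieving a target):

$$
\mathrm{DID}_t = \left(\bar{Y}_{H,t} - \bar{Y}_{H,0}\right) - \left(\bar{Y}_{L,t} - \bar{Y}_{L,0}\right)
$$

For HbA1c, where lower values are better, a negative DID favours the high-exposure group; for the proportions of patients achieving a target, a positive DID does.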

Propensity scores, estimated from the following individual variables, were used in the DID analyses: age; sex; origins (Canadian or other); language spoken in the home (French or other); highest completed level of education (no high school diploma, high school diploma, college studies, university); professional activity in the past six months (working, unemployed, retired); number of comorbidities (none, one, two or more of the following: heart disease, asthma or COPD, bone and joint problems, history of stroke, mental health problems, and cancer); body mass index (BMI) on entry into the program; and type of front-line clinic of the general practitioner treating the patient for diabetes or HBP (family medicine group [FMG]; network clinic [NC]; FMG-NC; local community service centre [CLSC]; family medicine unit [FMU]; non-FMG, non-NC group clinic; solo practice; or orphaned patient). The propensity score, i.e. the conditional probability of belonging to the “high exposure to the implementation variable” group given individual characteristics, makes it possible to balance these characteristics between the groups. Subjects were matched using kernel matching,16 which allows nearly complete matching by pairing each subject with a fictitious counterpart whose outcome is a weighted average of the outcomes of comparison-group subjects, the weights decreasing as the difference in propensity score increases. A different propensity score was calculated for each analysis model. Our analyses showed that this strategy effectively made the “high exposure to the implementation variable” and “low exposure to the implementation variable” groups comparable with respect to these characteristics. We can thus assume that the change observed between two time points in the “low exposure to the implementation variable” group is comparable to the change that would have been observed in the “high exposure to the implementation variable” group had its subjects had lower exposure to the implementation variable studied.
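To make the kernel-matching step concrete, the sketch below computes a kernel-matched DID for panel data, assuming propensity scores have already been estimated (for instance by a logistic regression on the covariates listed above). Each high-exposure patient’s change between T0 and follow-up is compared with a weighted average of the changes observed in low-exposure patients, using an Epanechnikov kernel on propensity-score distance. This is a simplified illustration, not the Stata diff module’s implementation; the function names and the 0.06 bandwidth are hypothetical choices.

```python
from typing import Sequence


def epanechnikov(u: float) -> float:
    """Epanechnikov kernel: weight 0.75 * (1 - u^2) for |u| < 1, else 0."""
    return 0.75 * (1.0 - u * u) if abs(u) < 1.0 else 0.0


def kernel_matched_did(
    ps_high: Sequence[float],  # propensity scores, high-exposure patients
    dy_high: Sequence[float],  # change (follow-up minus T0), high exposure
    ps_low: Sequence[float],   # propensity scores, low-exposure patients
    dy_low: Sequence[float],   # change (follow-up minus T0), low exposure
    bandwidth: float = 0.06,   # hypothetical bandwidth
) -> float:
    """Average, over high-exposure patients, of their own change minus a
    kernel-weighted average of low-exposure changes (weights shrink with
    propensity-score distance)."""
    contrasts = []
    for p_i, d_i in zip(ps_high, dy_high):
        weights = [epanechnikov((p_j - p_i) / bandwidth) for p_j in ps_low]
        total = sum(weights)
        if total == 0.0:
            continue  # off common support: no comparable low-exposure patient
        counterfactual = sum(w * d for w, d in zip(weights, dy_low)) / total
        contrasts.append(d_i - counterfactual)
    if not contrasts:
        raise ValueError("no high-exposure patient lies on common support")
    return sum(contrasts) / len(contrasts)
```

High-exposure patients with no low-exposure counterpart within the bandwidth are skipped, which mirrors the usual common-support restriction of kernel matching. For a binary target, the changes are simply differences between 0/1 indicators.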

The DID analyses, performed using the Stata diff module,17 were carried out on all patients and, separately, on patient subgroups defined by comorbidity profile (with or without comorbidities). Because the program targets (pre)diabetic and hypertensive patients, we assumed that the impact of implementation might differ for patients with comorbidities outside the program’s specific focus.

Ethical approval

This research project received the approval of the ASSSM ethics research committee.

Results

Sample description

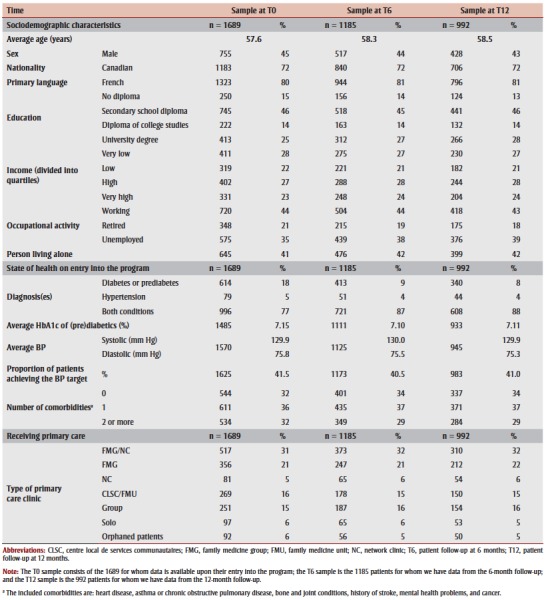

The initial sample was made up of the 1689 patients registered in the program who consented to take part in the evaluation (evaluation participation rate of 60%). At the 6-month (T6) and 12‑month (T12) follow-ups from their individual date of entry into the program, 1185 and 992 patients, respectively, had provided data. The difference in cohort size across the three time points can be explained by both withdrawals and delays in patient follow-up.

At T0, the majority (77%) of patients suffered from diabetes (or prediabetes) or high blood pressure (HBP). Patients in the samples from the 6-month and 12-month follow-ups did not differ from those in the initial sample as regards their characteristics (Table 3), except for the proportion of patients suffering from both chronic diseases on which the program focuses. This proportion was higher in the follow-up samples.

TABLE 3. Characteristics of the samples studied.


Descriptive findings

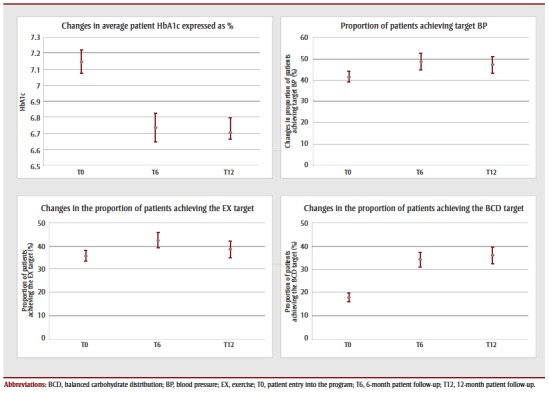

Generally speaking, the average of each health outcome appeared to improve over the course of program follow-up for all patients, more markedly between T0 and T6 (Figure 1). However, the study design does not allow conclusions to be drawn about the program’s impact on patient health outcomes, which is not the subject of this study.

Figure 1. Changes in the four health outcomes studied in all patients at 0, 6 and 12 months, with 95% confidence intervals.

Impact of implementation variables on outcomes: results of the difference in differences analysis

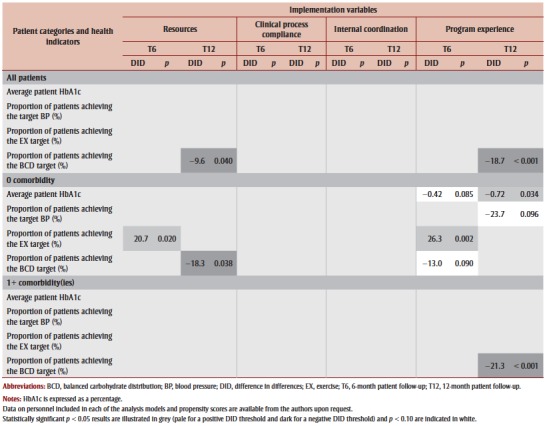

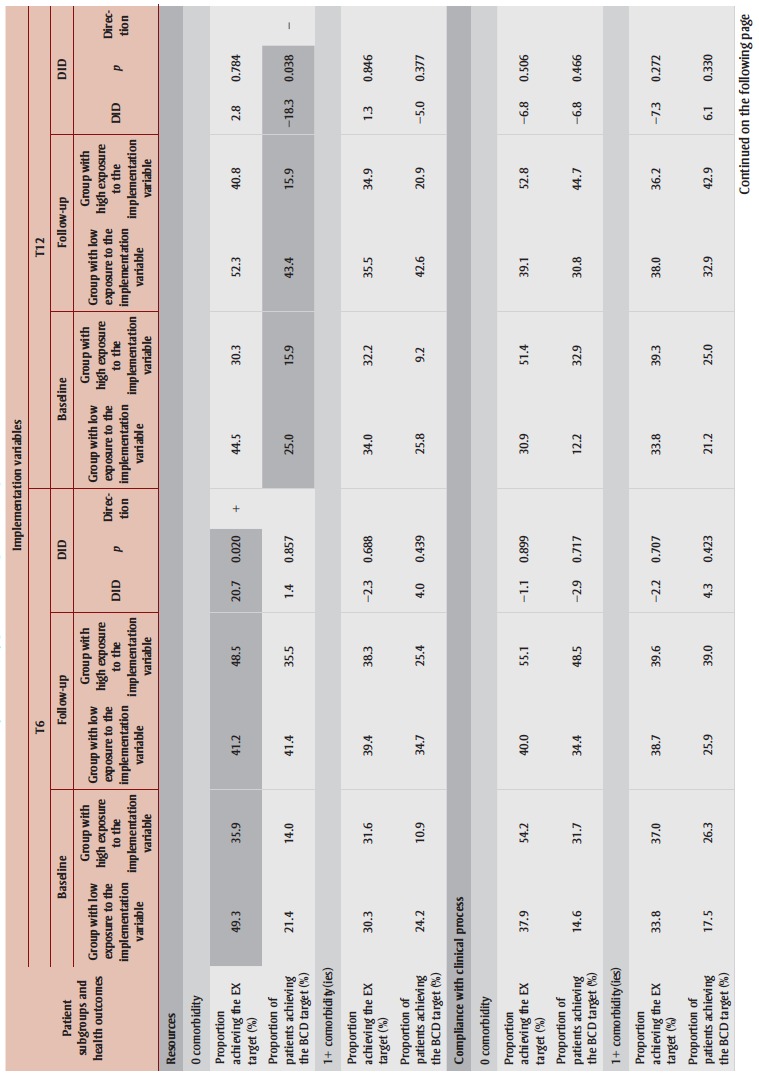

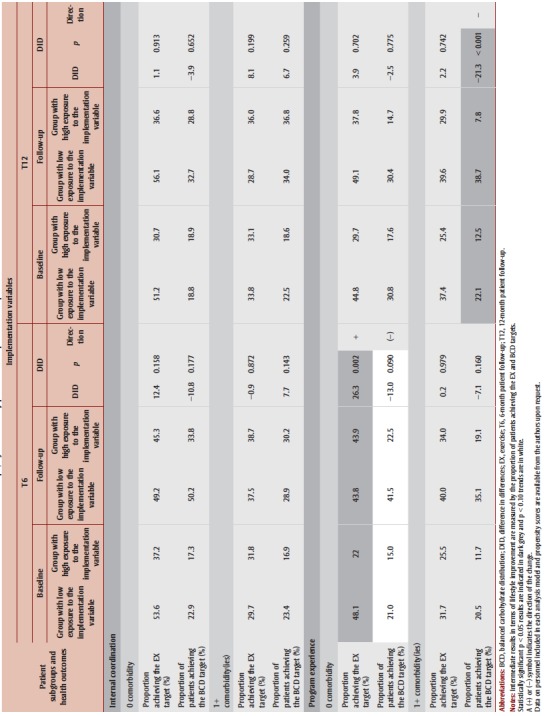

Overall, most analyses showed no effect of implementation variables on the outcomes studied (Table 4). Tables 5 and 6 show the results of the difference in differences (DID) analyses carried out on patient subgroups by comorbidity profile.

TABLE 4. Synthesis of statistically significant results (p < 0.05) and trends (p < 0.10) in analysis of difference in differences.


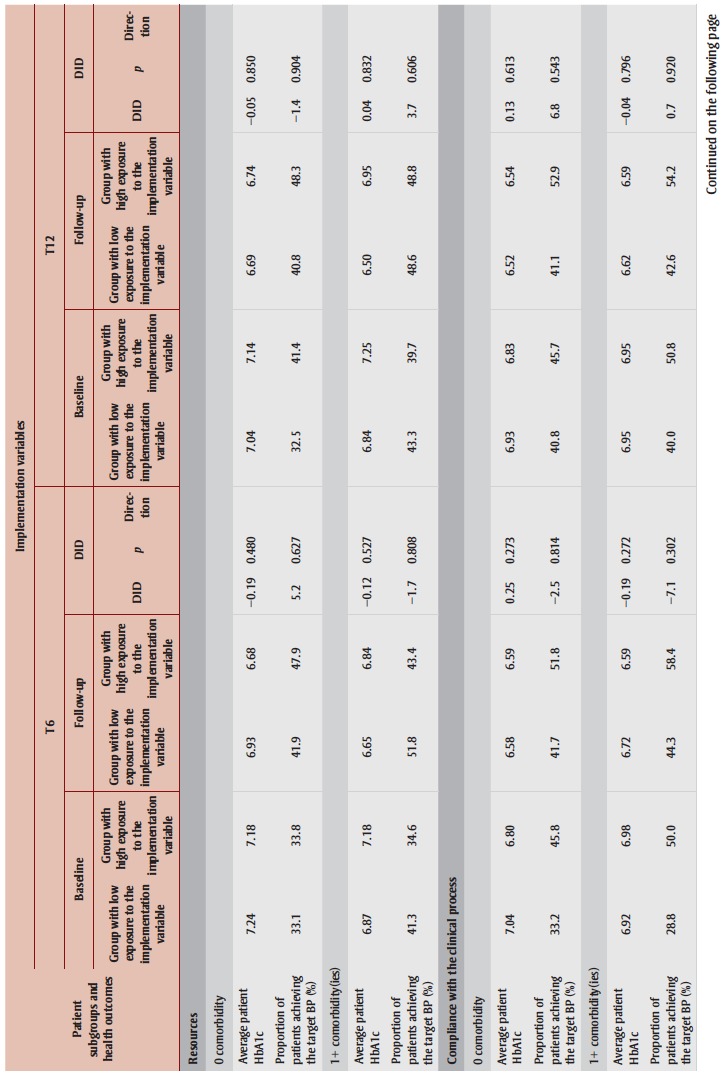

TABLE 5. Clinical results (disease control): average patient HbA1c and proportion of patients achieving the BP target at the 6- and 12-month follow-ups, according to their comorbidity profile and exposure to the implementation variables.


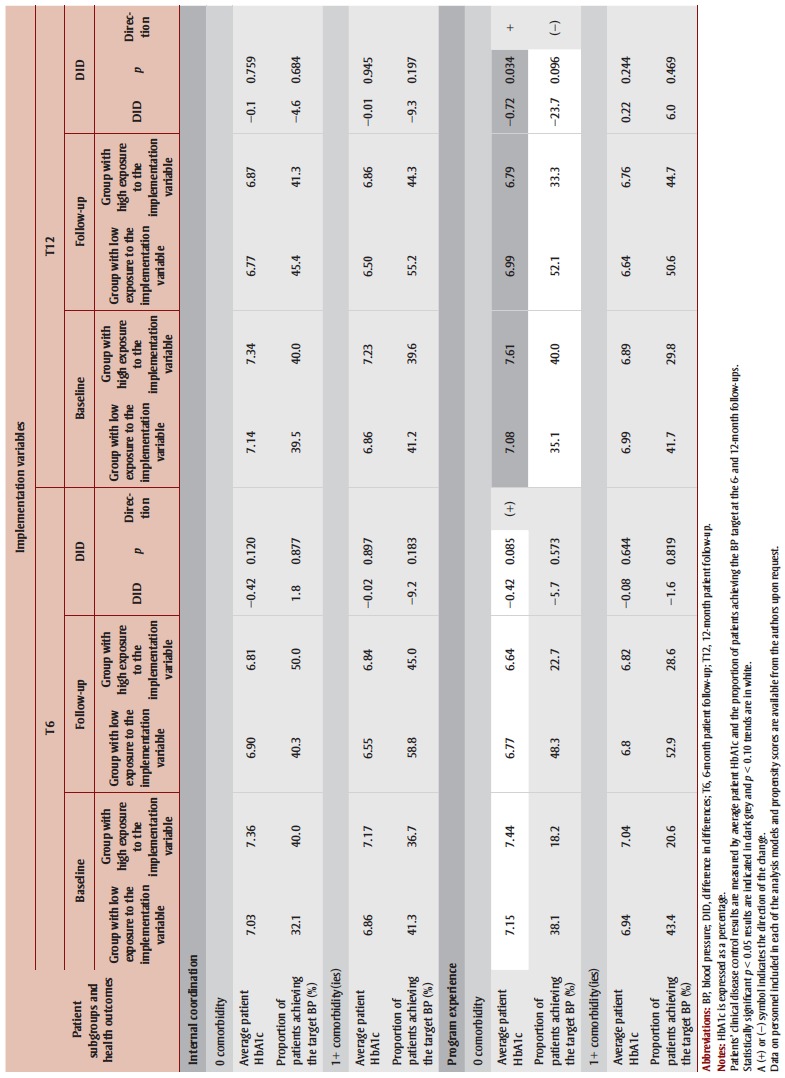

TABLE 6. Intermediate results (lifestyle improvement): proportion of patients achieving exercise and balanced carbohydrate distribution targets at the 6- and 12-month follow-ups, by comorbidity profile and exposure to implementation variables.


Significant DIDs (p < 0.05) are shaded dark grey and marked in the tables with a “+” symbol when positive, i.e. favourable to the “high exposure to the implementation variable” group, and with a “−” symbol when negative. DIDs with p values between 0.05 and 0.10 are left unshaded and considered trends, marked “(+)” or “(−)” to indicate direction.
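The symbol convention can be summarized as a small decision rule. In this sketch (function name hypothetical), “favourable” means a positive DID for proportions achieving a target and, as an assumption based on the HbA1c results reported below, a negative DID for HbA1c, where lower values are better.

```python
MINUS = "\u2212"  # typographic minus sign, as used in the tables


def did_symbol(did: float, p: float, lower_is_better: bool = False) -> str:
    """Return the table marker for one DID estimate.

    "+" or minus: significant (p < 0.05); "(+)" or "(minus)": trend
    (0.05 <= p < 0.10); empty string otherwise.
    lower_is_better=True flips the direction, e.g. for HbA1c.
    """
    favourable = did < 0 if lower_is_better else did > 0
    sign = "+" if favourable else MINUS
    if p < 0.05:
        return sign
    if p < 0.10:
        return f"({sign})"
    return ""
```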

Table 5 shows that the clinical results specific to the program, namely HbA1c and achievement of the BP target, are influenced by implementation variables only in the subgroup of patients with no comorbidities, and only by the program experience variable. Program experience had a positive impact on HbA1c at T12 (−0.72 percentage points). This impact is also present as a trend at the 6-month follow-up, when both groups (“high exposure to the implementation variable” and “low exposure to the implementation variable”) showed improvements in HbA1c. Program experience also appears to have a negative impact on the proportion of patients achieving the BP target. The size of this trend is substantial (−23.7%), with the “high exposure to the implementation variable” group deteriorating and the “low exposure to the implementation variable” group improving.

Table 6 shows that the proportion of patients achieving lifestyle targets also depends little on implementation variables. Achievement of the EX target is influenced only in patients with no comorbidities. The two significant effects involve the “resources” and “program experience” variables and are positive, but only at T6: the “low exposure to the implementation variable” group deteriorated, while the “high exposure to the implementation variable” group improved. The effects were substantial (+20.7% for resources and +26.3% for program experience).

Achievement of the BCD target is negatively influenced by certain implementation variables (resources and program experience) in both subgroups, with and without comorbidities. These negative effects, detected at T12, are substantial (from −12.6% to −21.3%). Among patients without comorbidities, for the resources variable, the proportion achieving the BCD target remained unchanged in the “high exposure to the implementation variable” group, while it improved in the “low exposure to the implementation variable” group. Among patients with comorbidities, for the program experience variable, this proportion deteriorated in the “high exposure to the implementation variable” group, while it improved in the “low exposure to the implementation variable” group. Program experience appears to be the variable with the greatest influence.

Discussion

Low impact of implementation on patient outcomes

The main objective of our study was to assess the influence of variations in the implementation of four program components on patient outcomes. For at least three of these aspects, the expected effects on each health indicator ranged from neutral to positive. The fourth, greater compliance with the clinical process initially set out in the regional program, might have produced more varied effects: adapting the program to patients’ needs, which would likely improve health outcomes, may require departing from the prescribed clinical process.

The results of the DID analyses show that clinical indicators (HbA1c and achievement of the BP target) and lifestyle indicators are little influenced by implementation variables when all patients taking part in the study are considered.

In addition, some variables seem to negatively influence the proportion of patients achieving the BCD target. In the case of program experience, particularly with regard to the diabetes component, it is reasonable to assume that nutritionists in the CSSS with the most program experience have more experience managing and monitoring diabetic patients, which may make them more conservative in assessing whether these patients achieve the BCD target. In the case of resources, some may have been used for purposes other than the cardiometabolic risk program, and CSSSs providing the fewest visits may have provided longer or higher-quality interventions. Lastly, barriers to service delivery may exist, particularly the complexity of scheduling appointments that follow the clinical process calendar.

Apart from a few mixed effects of implementation variables on the carbohydrate distribution indicator, very few effects of these variables on health outcomes were observed for all patients. This is consistent with systematic reviews showing that no single CCM component has, to date, been shown to be solely responsible for the CCM’s positive effects.6,7 It is plausible that the implementation variables used in our study had a synergistic effect when taken together.

Effects in patients with no comorbidity

More significant effects of implementation variables were observed in the subgroup of patients without comorbidities than in the subgroup with comorbidities, particularly with respect to the “program experience” and “resources” variables.

It is possible that as part of the program, patients with comorbidities are given particular attention to meet their specific needs, regardless of variations in the implementation of certain aspects of the program.

The positive impact of program experience on HbA1c in patients with no comorbidities suggests that these patients, when exposed to a more experienced program, are more inclined to improve their diabetes control than patients with comorbidities. Program experience, which corresponds to the time elapsed since implementation of the diabetes component, likely reflects expertise, particularly in managing diabetic patients. Our results suggest that this expertise may be better suited to managing diabetic patients with no comorbidities. Although the proportion of patients achieving the BCD target in the most experienced CSSS appears to have dropped by the 6-month follow-up, nutritionists in the program appear to contribute to the program’s ultimate objective of diabetes control, as measured by improvements in the average HbA1c of patients without comorbidities.

Resources, like program experience, had the expected positive impact on achievement of the EX target at the 6-month follow-up. Patients without comorbidities likely increase their exercise levels in response to greater access to health care professionals who support and encourage their efforts to change, and to the more extensive program expertise developed in their CSSS. Patients with comorbidities benefit less from resource availability, particularly if they face physical or mental obstacles to exercise related to the number and nature of their other health problems.18

Moderate variations in implementation

The implementation analysis showed a few differences among the six participating CSSSs with regard to the program aspects implemented, but overall, the program was implemented fairly similarly across the board. The moderate variation observed can be explained by the fact that the program was very clearly defined and that the CSSSs agreed to follow the general implementation framework suggested by the Agency. Our analyses therefore compared a group with a low level of implementation to a group with a high level of implementation for each variable, but on the basis of variations that proved to be modest. This may in part explain why the variations observed had little effect on patient outcomes and, in some cases, even had unexpected impacts.

Program experience is probably the implementation variable with the greatest variation between groups: a single CSSS was in the “high” category, which may explain its more substantial impact on patient results.

Strengths and limitations

Our study has a number of limitations. First, it is an exploratory study involving post hoc analyses, and the large number of analyses increases the risk of Type I errors. Since the purpose of the study was not to assess the program’s effectiveness, we cannot draw conclusions in that regard, only about the impact of variations in the implementation of the characteristics studied. There is no control group, because the study was carried out in an actual program implementation context, which limits the interpretation of results. Moreover, the quasi-experimental design rests on the assumption that the change observed in the “high exposure to the implementation variable” group would have mirrored that of the “low exposure to the implementation variable” group had its exposure not been high.

To our knowledge, there were no changes in practice in any of the CSSSs that may have affected the study’s results, but we were unable to assess this component directly. We were also unable to assess the program’s effectiveness on cardiometabolic risk across all program participants, since we used a non-probability sample, which prevents us from gauging its representativeness. However, according to the analysis of the data at our disposal, the patients who agreed to take part in the evaluation are identical in terms of age and sex to the patients participating in the program. We do not have any data characterizing the program’s target population in the various CSSS territories.

The sample size was smaller than anticipated owing to the program’s low coverage, which limited the breadth of our analyses. We did not include interaction terms in the analyses (which were exploratory); this allowed us to gauge the impact of each variable within each subgroup but prevented us from comparing the impact of implementation variables between the two patient subgroups (patients with and without comorbidities). Measures of lifestyle indicators are less reliable than those of clinical indicators. The lack of blinding in the assessment of health indicators may introduce information bias; however, neither the patients nor the health care professionals collecting health indicator data knew which group patients belonged to, since the groups were defined after the fact. Lastly, data collection proved more difficult than anticipated early in the implementation phase, which was mainly devoted to training new teams and learning new work methods; this affected the quality of the collected data (entry errors, missing data). Imputation of missing data nonetheless helped to reduce non-response bias.14

The type of analysis selected is one of this study’s major strengths. The difference in differences analysis with propensity scores made it possible to examine potential causal relationships by comparing two groups over time: one exposed to a program in which a given aspect was more strongly implemented, and another in which the implementation of that same aspect was weaker. Propensity score matching made the two groups comparable on measured individual characteristics, which helps isolate the effect of exposure.

Another strength of the study is that it attempted to link variations in the implementation of certain aspects of the program across local settings to patient outcomes, while also relating them to contextual elements identified in the implementation analysis conducted during program implementation. Quantifying qualitative variables in this way is rarely reported in the literature and is an innovative practice. However, identifying variables that, taken independently, may have a direct impact on patient results is a challenge,19 and the aspects selected in our analyses as most likely to influence patient outcomes directly probably acted synergistically.

The patients taking part in the evaluation entered the program at different times throughout the assessment period. We elected to use implementation T40, the conclusion of the evaluation, as the best approximation of the implementation level of each aspect under study. This strategy may, however, have led to some underestimation of the association between variations in program implementation and patient outcomes. The implementation analysis showed that changes under way mid-program (implementation T20) were moving toward the program’s status at implementation T40, which supports this methodological choice.

As mentioned previously, the implementation analysis showed differences among the CSSSs as regards program implementation, but those differences remained fairly modest. Consequently, for each dichotomized implementation variable, the difference between categories is moderate, limiting our ability to draw connections between implementation variables and patient outcomes.

Lastly, each implementation variable is labelled according to the aspect on which the CSSSs varied, and the groups were divided on this basis for analysis purposes. For each implementation variable, however, the two CSSS groups may also differ in characteristics other than the one named in the label. We therefore cannot state that an effect observed for an implementation variable is due exclusively to the concept reflected in its label rather than, at least in part, to another unmeasured characteristic that varies among CSSSs in a similar way.

Linking variations in cardiometabolic risk program implementation to patient health outcomes is one of the study’s great strengths. It allows us to gauge the extent to which variations in program implementation in the field, related to differing local contexts, have an impact on patient results. Combining the results presented in this study with the information on contextual elements collected during the implementation analysis makes it possible to enhance the external validity of the results and the possibility that they can be used, in whole or in part, in similar contexts. These results can guide decision-making with regard to the implementation of future CCM-based projects addressing other chronic diseases in populations in Montréal, in Quebec, or elsewhere in Canada.

Conclusion

The results of this study show that some variations in the implementation of various aspects of the cardiometabolic risk program have little influence on patients’ health outcomes, particularly on the clinical indicators of HbA1c and the achievement of blood pressure treatment targets.

Generally speaking, given that the six CSSSs in the study implemented the program in a fairly similar way, the moderate differences observed do not appear to have had an impact on patient outcomes.

These results are an incentive to continue research that assesses more accurately the impact of variations in program implementation across settings. Integrating qualitative and quantitative methods enriched the interpretation of our results and is a research direction worth pursuing and refining. In that respect, this type of research needs greater cohesion between its qualitative and quantitative components, particularly in collecting data on the implementation of the intervention and on patient outcomes, in order to better assess the impact of the extent of implementation on patient health outcomes.

Acknowledgements

The research project from which the content of this article was drawn was funded by the Canadian Institutes of Health Research and the Pfizer-FRSQ-MSSS (Fonds de recherche en santé du Québec – Ministère de la Santé et des Services sociaux) Chronic Disease Fund. The authors would like to highlight the contributions of collaborators associated with the project at the Agence de la santé et des services sociaux de Montréal and in the participating Centres de santé et de services sociaux (Sud-Ouest–Verdun, Jeanne-Mance, Coeur-de-l'Île, Pointe-de-l'Île, St-Léonard–St-Michel, Bordeaux-Cartierville–St-Laurent).

Conflicts of interest

The authors have no conflicts of interest to declare.

Authors' contributions and statement

All authors took part in designing and drafting the manuscript and interpreting the data. All authors also took part in the critical review and read and approved the final manuscript.

The contents of this article and opinions expressed therein are those of the authors and do not necessarily represent the position of the Government of Canada.

References

- Ransom T, Goldenberg R, Mikalachki A, Prebtani APH, Punthakee Z. Clinical practice guidelines: reducing the risk of developing diabetes. Can J Diabetes. 2013;37 Suppl 1:S16–S19. doi: 10.1016/j.jcjd.2013.01.013. Available from: http://guidelines.diabetes.ca/appthemes/cdacpg/resources/cpg2013fullen.pdf.

- Campbell NS, Lackland D, Niebylski N. Why prevention and control are urgent and important: a 2014 fact sheet from the World Hypertension League and the International Society of Hypertension [Internet]. 2014. doi: 10.1111/jch.12372. Available from: http://ish-world.com/data/uploads/WHLISH2014HypertensionFactSheetlogos.pdf.

- Wagner EH, Austin BT, Davis C, Hindmarsh M, Schaefer J, Bonomi A, et al. Improving chronic illness care: translating evidence into action. Health Aff. 2001;20(6):64–78. doi: 10.1377/hlthaff.20.6.64.

- Coleman K, Austin BT, Brach C, Wagner EH. Evidence on the chronic care model in the new millennium. Health Aff. 2009;28(1):75–85. doi: 10.1377/hlthaff.28.1.75.

- Bodenheimer T, Wagner EH, Grumbach K. Improving primary care for patients with chronic illness: the chronic care model, Part 2. JAMA. 2002;288(15):1909–1914. doi: 10.1001/jama.288.15.1909.

- Davy C, Bleasel J, Liu H, Tchan M, Ponniah S, Brown A, et al. Effectiveness of chronic care models: opportunities for improving healthcare practice and health outcomes: a systematic review. BMC Health Serv Res. 2015. doi: 10.1186/s12913-015-0854-8.

- Stellefson M, Dipnarine K, Stopka C, et al. The Chronic Care Model and diabetes management in US primary care settings: a systematic review. Prev Chronic Dis. 2013. doi: 10.5888/pcd10.120180.

- Provost S, Pineault R, Tousignant P, Hamel M, Silva R, et al. Evaluation of the implementation of an integrated primary care network for prevention and management of cardiometabolic risk in Montréal. BMC Fam Pract. 2011. doi: 10.1186/1471-2296-12-126.

- Shadish WR, Cook TD, Campbell DT. Experimental and quasi-experimental designs for generalized causal inference. 2nd ed. Belmont, CA: Wadsworth; 2002.

- Peterson KP, Pavlovich JG, Goldstein D, Little R, England J, Peterson CM. What is hemoglobin A1c? Clin Chem. 1998;44(9):1951–1958. Available from: http://clinchem.aaccjnls.org/content/44/9/1951.

- Nolin B, Prud'homme D, Godin G, Hamel D, et al. Enquête québécoise sur l'activité physique et la santé 1998. Institut national de santé publique du Québec et Kino-Québec; 2002.

- Blanchet C, Trudel J, Plante C, et al. Résumé du rapport La consommation alimentaire et les apports nutritionnels des adultes québécois: coup d'oeil sur l'alimentation des adultes québécois. Institut national de santé publique du Québec; 2009.

- Andridge RR, Little RJA. A review of hot deck imputation for survey non-response. Int Stat Rev. 2010;78(1):40–64. doi: 10.1111/j.1751-5823.2010.00103.x.

- Haziza D. Inférence en présence d'imputation: un survol [Internet]. Journées de méthodologie statistique; 2002. Available from: http://jms.insee.fr/files/documents/2002/3301-JMS2002SESSION2HAZIZAINFERENCE-PRESENCE-IMPUTATION-UN-SURVOLACTES.PDF.

- Gertler PJ, Martinez S, Premand P, Rawlings LB, et al. Impact evaluation in practice [Internet]. Washington (DC): The World Bank; 2011. Available from: http://siteresources.worldbank.org/EXTHDOFFICE/Resources/5485726-1295455628620/ImpactEvaluationinPractice.pdf.

- Caliendo M, Kopeinig S. Some practical guidance for the implementation of propensity score matching. Forschungsinstitut zur Zukunft der Arbeit (Institute for the Study of Labor); 2005.

- Villa JM. DIFF: Stata module to perform differences in differences estimation [Internet]. Boston (MA): Boston College Department of Economics; 2009. Available from: https://econpapers.repec.org/software/bocbocode/s457083.htm.

- Piette JD, Kerr EA. The impact of comorbid chronic conditions on diabetes care. Diabetes Care. 2006;29(3):725–731. doi: 10.2337/diacare.29.03.06.dc05-2078.

- Brousselle A, Champagne F, Hartz Z, et al. L'évaluation: concepts et méthodes. Presses de l'Université de Montréal; 2011.