Abstract

Background

A growing literature has demonstrated the ability of user-centered design to make clinical decision support systems more effective and easier to use. However, studies of user-centered design have rarely examined more than a handful of sites at a time, and have frequently neglected the implementation climate and organizational resources that influence clinical decision support. The inclusion of such factors was identified by a systematic review as “the most important improvement that can be made in health IT evaluations.”

Objectives

(1) Identify the prevalence of four user-centered design practices at United States Veterans Affairs (VA) primary care clinics and assess the perceived utility of clinical decision support at those clinics; (2) Evaluate the association between those user-centered design practices and the perceived utility of clinical decision support.

Methods

We analyzed clinic-level survey data collected in 2006–2007 from 170 VA primary care clinics. We examined four user-centered design practices: 1) pilot testing, 2) provider satisfaction assessment, 3) formal usability assessment, and 4) analysis of impact on performance improvement. We used a regression model to evaluate the association between user-centered design practices and the perceived utility of clinical decision support, while accounting for other important factors at those clinics, including implementation climate, available resources, and structural characteristics. We also examined associations separately at community-based clinics and at hospital-based clinics.

Results

User-centered design practices for clinical decision support varied across clinics: 74% conducted pilot testing, 62% conducted provider satisfaction assessment, 36% conducted a formal usability assessment, and 79% conducted an analysis of impact on performance improvement. Overall perceived utility of clinical decision support was high, with a mean rating of 4.17 (+/− .67) out of 5 on a composite measure. “Analysis of impact on performance improvement” was the only user-centered design practice significantly associated with perceived utility of clinical decision support, b = .47 (p < .001). This association was present in hospital-based clinics, b = .34 (p < .05), but was stronger at community-based clinics, b = .61 (p < .001).

Conclusions

Our findings are highly supportive of the practice of analyzing the impact of clinical decision support on performance metrics. This was the most common user-centered design practice in our study, and was the practice associated with higher perceived utility of clinical decision support. This practice may be particularly helpful at community-based clinics, which are typically less connected to VA medical center resources.

Keywords: User-centered design, Clinical decision support, Human factors, Usability

1. Introduction

1.1. Background

User-centered design draws on cognitive science, psychology, and computer science to make information systems more useful and easier to use [1]. Though user-centered design has been applied to a range of clinical and operational processes, researchers have found it particularly relevant to clinical decision support (CDS), the tools that make evidence-based medical knowledge accessible and salient [2]. There are good reasons for this: CDS can be highly effective, but there is substantial variability in the usability, efficacy, and even safety of CDS [3–6], and user-centered design offers a way to identify and respond to these potential deficiencies [7].

A growing body of literature on user-centered design has helped to disseminate and refine user-centered design practices and has uncovered important lessons about the application of user-centered design in a clinical context [8]. This research often takes the form of papers that propose new approaches to user-centered design or that describe the application of these approaches in a clinical setting. However, user-centered design in these studies has often been directed or heavily influenced by informatics researchers. This involvement increases the possibility that results may differ in settings that do not benefit from the expertise and regular participation of experts in informatics whose work is frequently supported by a research grant. In addition, most studies on user-centered design of clinical decision support have necessarily been conducted within an individual clinical site or a small network of sites [9]. There remains an opportunity to study user-centered design across many sites with different users, different structural characteristics, and different resources, policies, and challenges. These contextual factors have been underexplored not only in studies of user-centered design but in studies of health IT in general, with one systematic review noting that “the most important improvement that can be made in health IT evaluations is increased measurement, analysis, and reporting of the effects of contextual and implementation factors.”[10]

In this study, we seek to fill these gaps in the literature by analyzing national survey data from a 2006–2007 census of US Veterans Affairs (VA)† health care facilities with large primary care caseloads. The survey data provide information about user-centered design practices and the perceived utility of CDS.

We examine user-centered design practices through the lens of organizational behavior and implementation science, and this lens informs the type of outcome we evaluate and the types of contextual information we consider. We analyze reports of CDS utility from the primary care director at each clinic. In VA health systems, the primary care director is responsible for supporting population health and evidence-based decision-making across the clinic. These reports represent a unique perspective focused on organizational priorities. We also account for clinics’ resources, implementation climate, and structural characteristics – factors that are routinely incorporated in organizational behavior studies, but are rarely represented in studies of user-centered design. We take advantage of the variability in clinical practice and organizational strategies within the VA [11], which provides study sites that are comparable in many respects (e.g. general structure, overall payment model, national leadership) but that differ in meaningful and well-documented ways [12]. In addition, we present rarely accessible information about user-centered design practices that are not necessarily led by informatics researchers.

With these data, we assess which of four user-centered design practices work best to ensure that CDS accomplishes its stated goals. Namely, we consider four practices that are recommended by multiple guidelines for user-centered design [13–15]: 1) pilot testing CDS, 2) assessing provider satisfaction, 3) assessing usability, and 4) analyzing the impact of CDS on performance improvement. We examine the association between each of these practices and the perceived utility of CDS. Each of these practices was hypothesized to be associated with higher perceived utility of CDS.

1.2. User-Centered Design Practices

All four of the user-centered design practices we examined are intended to improve the formatting and framing of CDS, and optimize its fit within the clinical workflow. They are also designed to help determine which applications of CDS should be retained and which should be discarded. The goals and processes of each user-centered design practice are elaborated below:

Pilot testing

Pilot testing is a foundational aspect of software design, human factors, ergonomics, quality improvement, and nearly all frameworks for managing change within a complex system [7,16–18]. Published guidance on user-centered design of CDS recommends not only pilot testing but iterative testing [13,14]; however, the limited time and resources available to local clinical informatics teams may preclude highly iterative processes. In this analysis, we examined pilot testing, a practice that is arguably a bare minimum for user-centered design.

Provider satisfaction assessment

Provider satisfaction assessment is a modest step toward usability testing: it serves as a rough gauge of the acceptability of clinical decision support. In the parlance of quality improvement, provider satisfaction assessment functions as a “balancing measure” [18] that helps to determine whether short-term gains in technical quality of care come at the expense of provider and staff well-being. Reduced provider satisfaction is by no means the only potential unintended consequence of CDS, but it is among the easiest to anticipate and can function as a proxy for other important organizational factors associated with care quality [19,20].

Formal usability assessment

Formal usability assessment is the practice that is perhaps most emblematic of user-centered design. It often involves some combination of interviews, focus groups, questionnaires, and analysis of clinical artifacts to evaluate the three dimensions of usability defined by the International Organization for Standardization (ISO): effectiveness, efficiency, and user satisfaction [2,21]. These dimensions are evaluated as properties of the interaction between a user (e.g. a provider) and the product (CDS) and not as inherent properties of the CDS itself.

Analyzing the impact of CDS on performance improvement

Analyzing the impact of CDS on performance improvement helps to keep CDS goal-oriented, and can provide evidence as to whether CDS efforts are helping clinics meet quality targets. It is particularly germane at the VA because of the VA’s substantial infrastructure for measuring performance at multiple levels of the organization and targeting improvement efforts on the basis of those measures. For example, the VA’s External Peer Review Program (EPRP) defines clinical quality measures at a national level but delegates most development of computerized clinical reminders and disease-specific templates to individual VA medical centers [22]. The specific measures within EPRP have changed over time to reflect changing goals within the VA and new medical evidence, but have consistently included information about preventive care (e.g. the provision of important vaccinations and screenings), and other high-value practices in both inpatient and outpatient settings. This program is one of several performance improvement programs within the VA, with others focusing on, for example, patient experience of care [23], patient safety [24], and overutilization [25].

These four practices do not reflect the entirety of user-centered design, but are commonly recommended, readily implementable strategies for improving the utility of CDS. As illustrated in Table 1, each of the four user-centered design practices studied was explicitly recommended by the Healthcare Information and Management Systems Society (HIMSS) toolkit “Improving Outcomes with Clinical Decision Support: An Implementer’s Guide” [13] and by the United States Department of Health and Human Services (HHS)-funded technical report on “Advancing Clinical Decision Support” [14] – resources that are specifically targeted at user-centered design of CDS for the purpose of local improvement. The practices are also consistent with the Rapid Assessment Process (RAP), a methodological approach that is geared toward understanding how and why health IT systems succeed or fail while providing “actionable information” to organizations about their health IT systems. Two of the four practices are explicitly recommended within the RAP framework and all four are consistent with the RAP approach [15,26,27].

Table 1.

User-Centered Design Practices for Clinical Decision Support

| User-Centered Design Practice | Survey Item | Recommended By |
|---|---|---|
| Which mechanisms are usually used to develop computerized clinical reminders and/or disease-specific templates?: | | |
| Pilot testing | Test piloting reminders prior to full scale implementation | HIMSS; A-CDS; RAP |
| Provider satisfaction assessment | Post implementation assessment of provider satisfaction | HIMSS; A-CDS; RAP |
| Formal usability assessment | Formal evaluation of reminder usability (human factors or usability assessment) | HIMSS; A-CDS |
| Analysis of impact on performance improvement | Analysis of reminder impact on performance improvement | HIMSS; A-CDS |

HIMSS = Improving Outcomes with Clinical Decision Support: An Implementer’s Guide; A-CDS = “Advancing Clinical Decision Support” Technical Report; RAP = Rapid Assessment Process

2. Methods

2.1. Setting and Sample

The VA is the largest integrated healthcare delivery system in the United States, with hospital and community-based clinics that span all 50 states. By the end of 1999, when electronic health record systems were sparsely adopted across the US, all VA facilities had implemented an EHR, on a common platform, with computerized provider order entry and integrated CDS [28]. The VA’s early adoption of health IT makes it a particularly informative setting for this research: in 2006, the VA had already reached a stage of CDS use that many other health care systems have yet to begin, namely the stage of continuously updating and improving the medical knowledge and user interface of a system that is already in place.

VA facilities are organized into VA medical centers, typically anchored at a hospital. Most VA medical centers are affiliated with multiple primary care clinics – usually one clinic based at a hospital and multiple clinics based in the community.

The survey data we use, the Clinical Practice Organizational Survey (CPOS), was developed by VA investigators to study organizational influences on quality of care including but not limited to health information technology [29,30]. The design and execution of the survey have been described by other studies on organizational determinants of quality [31,32]. The content of the survey is based on input from a steering committee composed of representatives from several research and operational offices within the VA, as well as the National Committee for Quality Assurance and the Kaiser Health Institute. The CPOS encompasses over 1,000 variables addressing processes and tools for the management of clinical operations, resource sufficiency, and barriers to quality improvement. The survey has an emphasis on primary care, but includes inpatient care as well. The team that developed the survey used pilot testing and cognitive interviewing to verify that VA clinical and managerial leaders interpreted items as intended [33].

To facilitate the study’s emphasis on clinic-level factors, and to put the investigation into the broader context of research on strategies to support implementing evidence-based practice, we grouped measures according to domains from organizational behavior and implementation research [34]. The use of these domains helps reflect the depth of available information about clinics’ structural characteristics, implementation climate, and available resources.

Data for this study were drawn from two modules of the CPOS administered in 2006–2007: a chief of staff survey and a primary care director survey. The primary care director survey (the clinic-level survey) includes data from 250 clinics, which represents a 90% response rate. The VA medical center chief of staff survey includes data from 111 respondents, representing an 86% response rate [31]. Each chief of staff reported on the use of user-centered design practices for his or her entire VA medical center, and these responses were attributed to all primary care clinics under their control, an average of two clinics for each chief of staff.

Data from the two survey modules were merged, which resulted in a sample of 193 clinics with data from both modules. Because our analyses require data from both modules, clinics with data from only one module were not included. None of the 193 clinics were missing observations of the outcome (perceived CDS utility), but 23 were missing at least one observation of a covariate, which resulted in a final analytic sample of 170 clinics. We examined characteristics of the omitted clinics, and confirmed that they did not systematically differ from clinics with complete data, with the exception of two variables: clinics excluded because of missing data were less likely to report that they conduct analyses of provider satisfaction after implementing CDS (35% vs 62%), and scored lower on a measure of CDS customization by roughly half a point on a 4-level Likert scale. We also ran a model excluding the 4 variables that most contribute to missingness, which yielded a sample size of 185; the coefficient estimates in this model were similar to the estimates in the primary analysis. Our secondary analysis of data was approved by the Institutional Review Boards at the VA and the University of California, Los Angeles.
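The sample construction described above (a one-to-many attribution of medical-center responses to clinics, followed by complete-case exclusion) can be illustrated with a minimal sketch. All identifiers, variable names, and values below are hypothetical toy data, not CPOS records, and the functions are an assumption about how such a merge could be expressed rather than the procedure actually used in the study.

```python
# Toy illustration only: every identifier and value here is hypothetical.

# Medical-center-level responses (chief of staff module), keyed by center ID.
center_module = {
    "center_A": {"pilot_testing": 1, "impact_analysis": 1},
    "center_B": {"pilot_testing": 0, "impact_analysis": 1},
}

# Clinic-level responses (primary care director module).
clinic_module = [
    {"clinic": "A1", "center": "center_A", "cds_utility": 4.3, "it_staffing": 3.0},
    {"clinic": "A2", "center": "center_A", "cds_utility": 4.0, "it_staffing": None},
    {"clinic": "B1", "center": "center_B", "cds_utility": 3.7, "it_staffing": 2.5},
    {"clinic": "C1", "center": "center_C", "cds_utility": 4.7, "it_staffing": 4.0},
]


def merge_modules(clinics, centers):
    """Attach each center's responses to every clinic under that center.

    Clinics whose center has no response are dropped, mirroring the
    requirement that a clinic have data from both modules.
    """
    merged = []
    for row in clinics:
        center_row = centers.get(row["center"])
        if center_row is not None:
            merged.append({**row, **center_row})
    return merged


def complete_cases(rows):
    """Keep only rows with no missing (None) values."""
    return [r for r in rows if all(v is not None for v in r.values())]


merged = merge_modules(clinic_module, center_module)
analytic = complete_cases(merged)
print(len(clinic_module), len(merged), len(analytic))
```

In this toy example, clinic C1 is dropped at the merge step because its center has no response, and clinic A2 is dropped at the complete-case step because a covariate is missing, which parallels the two exclusion stages reported in the text.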

2.2. Measures

Perceived utility of CDS

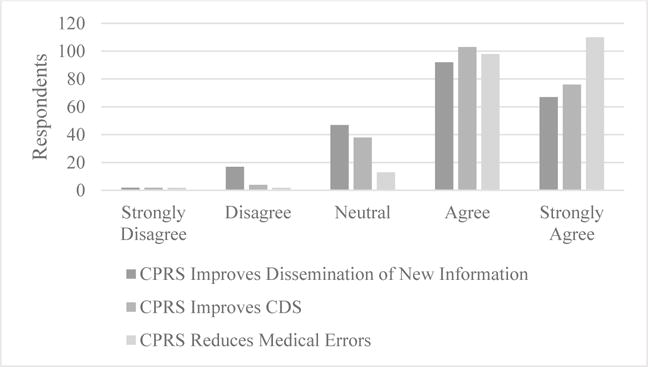

Perceived utility of CDS was operationalized as a 3-item measure (α = .77) reflecting the key intended benefits of CDS: improving the dissemination of new information (e.g. new medical evidence), reducing medical errors, and supporting the clinical decision-making process [35]. This measure was drawn from the primary care director survey module, i.e. was collected directly at the clinic level. A table illustrating the full model specification is available in the appendix.

User-centered design practices

User-centered design practices are represented by four yes/no questions following the prompt: “Which mechanisms are usually used to develop computerized clinical reminders and/or disease-specific templates?”: 1) test piloting reminders prior to full scale implementation; 2) post implementation assessment of provider satisfaction; 3) formal evaluation of reminder usability (human factors or usability assessment); 4) analysis of reminder impact on performance improvement. The use of these practices is reported by the VA medical center chief of staff, and in most cases applies to multiple primary care clinics affiliated with each VA medical center. These indicators were selected because they embody the principles of user-centered design that pertain most directly to the use of CDS and could be ascertained from the Clinical Practice Organizational Survey.

Additional covariates were chosen on the basis of their theoretical or empirical associations with: a) the effective implementation and use of CDS, or b) clinic directors’ perceptions about computer-based approaches to improve the quality of care. This includes clinics’ structural characteristics, implementation climate, and available resources. Constructs with α > .7 were represented in the model as a mean across items; otherwise, items were specified separately.
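The rule for building composite measures (average the items when Cronbach's alpha exceeds .7, otherwise keep items separate) can be sketched as follows. The function names and the toy responses are hypothetical, and the standard sample-variance form of Cronbach's alpha is assumed here; the study's analyses were run in Stata, so this is an illustration of the rule rather than the authors' code.

```python
from statistics import mean, variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of item columns (one list per item).

    alpha = k/(k-1) * (1 - sum of item variances / variance of total score)
    """
    k = len(items)
    totals = [sum(responses) for responses in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


def composite_or_items(items, threshold=0.7):
    """Return (alpha, composite) where composite is the per-respondent mean
    across items if alpha exceeds the threshold, and None otherwise."""
    alpha = cronbach_alpha(items)
    if alpha > threshold:
        return alpha, [mean(responses) for responses in zip(*items)]
    return alpha, None


# Hypothetical responses from five respondents to three 1-5 Likert items.
item_1 = [4, 5, 3, 4, 2]
item_2 = [4, 4, 3, 5, 2]
item_3 = [5, 5, 3, 4, 3]

alpha, composite = composite_or_items([item_1, item_2, item_3])
print(round(alpha, 3), composite)
```

Averaging items in this way also explains why the composite outcome can take non-integer values even though each underlying item is an integer Likert rating.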

Structural Characteristics

We included three measures of the structural characteristics of each primary care clinic: its “type” (i.e. whether it is a hospital-based clinic or a community-based outpatient center), its size as measured by the number of unique patients (in 1,000s) seen at the primary care clinic in the year the survey was administered, and its academic affiliation status (i.e. whether the clinic has a primary care training program) [11,36].

Implementation Climate

Studies of the implementation of innovations in health care organizations have operationalized implementation climate in a number of ways; the construct is typically innovation-specific, in that items that describe it refer to the innovation itself [37]. The measures of implementation climate we included describe the implementation of evidence-based practices, as opposed to the implementation of CDS itself. We chose this approach because the study is focused on the role of CDS in supporting evidence-based practice and performance improvement.

The analysis incorporated 7 different measures of implementation climate. We included a measure indicating whether primary care providers are required to observe explicit practice guidelines, and a measure indicating whether providers sometimes turn guideline prompts off. The latter was included because turning guideline prompts off may suggest that providers are customizing the CDS system to reduce alert fatigue, or, alternatively, may suggest that there are fewer opportunities for CDS to play a role in clinical decisions [38]. This variable was reported at the VA medical center level because it was unavailable at the clinic level. Two items representing competing demands were included: one represents difficulty making changes in the practice because providers and staff are busy seeing patients; the other describes the extent to which competing demands across initiatives are a barrier to improving performance. Items representing resistance to performance improvement were also included: resistance from primary care providers, from local managers, and from local support staff.

Available Resources

Three measures of available resources previously shown to affect implementation and perceived efficacy of health information technology were included [31, 36–38]: A measure of IT staff sufficiency included both technical staff (e.g. Information Resource Management) and the staff in charge of maintaining the clinical content of the electronic record system (α = .80). A measure of the adequacy of health IT training was also included (α = .88), along with a single-item measure of access to medical informatics expertise, specified at the VA medical center level.

2.3. Statistical Analysis

We examined descriptive statistics for the sample, as well as for excluded observations to determine whether missing observations were missing at random. We also examined correlations among independent variables to identify potential collinearity. Only two pairs of items had correlations greater than 0.5: clinic type (hospital-based vs community-based) was correlated with clinic size (r = .589), and resistance to performance improvement from support staff was correlated with resistance to performance improvement from local managers (r = .592). No changes were made to the primary model on the basis of these correlations, but a sensitivity test examined a model excluding clinic size and resistance from local managers. Statistical analyses were conducted with Stata 13 [39].
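The collinearity screen described above amounts to computing pairwise correlations among the independent variables and flagging pairs above a cutoff. A minimal sketch is shown below, assuming Pearson correlations and the 0.5 cutoff mentioned in the text; the variable names and values are hypothetical toy data and the helper functions are not part of the original analysis.

```python
from itertools import combinations
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def flag_correlated_pairs(columns, threshold=0.5):
    """Return (name_a, name_b, r) for every pair with |r| above the threshold."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(columns.items(), 2):
        r = pearson_r(a, b)
        if abs(r) > threshold:
            flagged.append((name_a, name_b, round(r, 3)))
    return flagged


# Hypothetical covariates for six clinics.
covariates = {
    "hospital_based": [1, 1, 0, 0, 1, 0],
    "patients_1000s": [40, 35, 10, 12, 30, 22],
    "training_adequacy": [3, 2, 3, 2, 2, 3],
}

flagged = flag_correlated_pairs(covariates)
print(flagged)
```

In the toy data, only clinic type and clinic size are flagged, which loosely mirrors the pattern reported in the text, where larger patient panels coincide with hospital-based clinics.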

2.3.1. Primary Analysis

A random intercept model was used to account for clustering of clinics within VA medical centers, while permitting examination of cluster-invariant factors (user-centered design practices reported at the medical center level). In initial analyses, a fully unconditional random intercept model had an ICC of 0.243. We also examined a model with random slopes and intercepts, but a likelihood ratio test did not support the addition of random slopes (p = .981). Because the outcome was the mean of three Likert items, with several non-integer values, we treated it as continuous. We also evaluated square root and natural log transformations of the outcome, and neither transformation improved the normality of the distribution. In addition, we examined results stratified by clinic type (hospital-based vs. community-based) to determine whether associations were similar across settings.
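For readers less familiar with multilevel models, the random intercept specification described above can be written in general form as follows. The notation is illustrative shorthand and an assumption on our part, not the specification table in the appendix: $y_{ij}$ denotes perceived CDS utility at clinic $i$ within medical center $j$, $\mathbf{d}_j$ the four user-centered design indicators reported at the medical center level, $\mathbf{x}_{ij}$ the remaining covariates, $u_j$ the medical-center random intercept, and $\varepsilon_{ij}$ the clinic-level residual.

```latex
\begin{align}
y_{ij} &= \beta_0 + \boldsymbol{\beta}_1^{\top}\mathbf{d}_j
          + \boldsymbol{\beta}_2^{\top}\mathbf{x}_{ij} + u_j + \varepsilon_{ij}, \\
u_j &\sim \mathcal{N}(0, \sigma_u^2), \qquad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma_\varepsilon^2), \\
\mathrm{ICC} &= \frac{\sigma_u^2}{\sigma_u^2 + \sigma_\varepsilon^2}.
\end{align}
```

Under this formulation, the ICC of 0.243 from the fully unconditional model (no covariates) indicates that roughly 24% of the variance in perceived CDS utility lies between medical centers rather than between clinics within the same center.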

2.3.2. Sensitivity Analyses

We conducted several sensitivity analyses to evaluate the robustness of our findings: we examined each of the 3 outcome items in separate models to determine whether there were different associations with different aspects of perceived CDS utility. We also tested different modeling approaches to evaluate model fit statistics and to verify that results were consistent across model specifications: we tested a naïve regression model, a clustered regression model with robust standard errors, and a generalized estimating equation model. We excluded some potentially relevant variables in our primary model in order to avoid overfitting, but as a sensitivity test we also evaluated a model with those covariates included – namely, measures of the extensiveness of CDS use at each clinic, a measure of clinic complexity developed by VA researchers [36], a measure of the primary care director’s authority over operational changes within the clinic, and a measure of the sufficiency of available computers. To further protect against overfitting, we examined the sequential inclusion of each domain of covariates, with and without the primary regressor.

3. Results

3.1. Descriptive Results

Perceived CDS utility was relatively high overall, with a mean of 4.17 (+/− .67) out of 5 on the composite measure. The distributions of the items that comprise perceived CDS utility are shown in Figure 1.

Fig. 1.

CDS utility rated by primary care directors. n = 193. CPRS = Computerized Patient Record System

Some user-centered design practices were much more common than others: analysis of reminder impact on performance improvement was reported at 79% of clinics, while formal usability assessment was reported at 36% (Table 2). There was substantial variability in clinics’ structural characteristics, and measures of implementation climate and of available resources were fairly moderate, with average scores falling near the middle of the 5- and 4-level scales.

Table 2.

Characteristics of VA Primary Care Clinics (n = 170)

| Clinic Characteristics | Mean (or %) | SD |
|---|---|---|
| Perceived CDS utility | 4.42 | 0.68 |
| User-centered design practices | | |
| Pilot testing CDS (%) | 73.5 | |
| Assessing provider satisfaction after implementation (%) | 62.4 | |
| Formal usability assessment (%) | 35.9 | |
| Analysis of CDS impact on performance improvement (%) | 79.4 | |
| Structural Characteristics | | |
| Community-based clinic (vs. hospital-based) (%) | 52.9 | |
| Academic affiliate (%) | 50.6 | |
| Unique patients at the clinic (thousands) | 28.0 | 19.4 |
| Implementation Climate | | |
| Required guideline use (%) | 42.4 | |
| Health IT use / customization (1–5) | 2.88 | 1.09 |
| Competing demands (1–5) | 3.46 | 0.71 |
| Hard to make changes because busy seeing patients (1–5) | 3.67 | 1.04 |
| PCP Resistance to Performance Improvement (1–4) | 2.11 | 0.75 |
| Local Manager Resistance to Performance Improvement (1–4) | 1.74 | 0.76 |
| Support Staff Resistance to Performance Improvement (1–4) | 2.05 | 0.84 |
| Available Resources | | |
| IT staff sufficiency (1–5) | 2.91 | 1.13 |
| Access to medical informatics expertise (1–5) | 3.44 | 1.06 |
| Health IT training adequacy (1–5) | 2.69 | 0.92 |

CDS = Clinical Decision Support; IT = Information Technology; PCP = Primary Care Provider

3.2. Regression model results

We hypothesized that each of the four user-centered design practices would be associated with perceived CDS utility. One of the four practices (analysis of CDS impact on performance improvement) had a significant association, b = .47 (p < .001), controlling for other variables in the model and adjusted for clustering (Table 3). A subgroup analysis showed that the association is present in hospital-based clinics, b = .34 (p < .05), but is stronger at community-based clinics, b = .61 (p < .001). There was no observed association for the other three user-centered design practices we examined.

Table 3.

Multilevel Model of Perceived Utility of CDS

| Explanatory Variables | Model Coefficient |
|---|---|
| User-Centered Design Practices | |
| Test piloting reminders | −0.19 |
| Assessments of provider satisfaction after implementation | −0.11 |
| Formal usability assessment | 0.00 |
| Analysis of reminder impact on performance improvement | 0.47*** |
| Structural Characteristics | |
| Community-based clinic (vs. hospital-based) | 0.20 |
| Academic affiliate | 0.12 |
| Unique patients at the clinic (1000s) | 0.00 |
| Implementation Climate | |
| Required guideline use | 0.16 |
| Providers sometimes turn guideline prompts off | −0.02 |
| Hard to make changes because busy seeing patients | −0.07 |
| Competing demands across too many initiatives | −0.05 |
| PCP resistance to performance improvement | 0.05 |
| Local manager resistance to performance improvement | 0.03 |
| Local support staff resistance to performance improvement | 0.00 |
| Available Resources | |
| IT staff sufficiency | 0.02 |
| Access to medical informatics expertise | −0.03 |
| Health IT training adequacy | 0.05 |
| Constant | 4.03 |

Significance levels: * p<.05; ** p<.01; *** p<.001. PCP = Primary Care Provider; IT = Information Technology

None of the additional explanatory variables had a statistically significant association with the outcome. The statistical significance of the explanatory variables of interest (the user-centered design practices) was consistent across all sensitivity analyses. In addition, the direction and approximate magnitude of the statistically significant association were similar across all analyses.

4. Discussion

This study is one of the first to examine user-centered design practices for CDS across more than a handful of clinics. We identified widespread adoption of user-centered design practices with the exception of formal usability assessment, which was reported at only 36% of clinics. Our analyses also revealed high ratings of CDS utility, with the majority of respondents agreeing that CDS is useful, but also identified some variation in these ratings.

The results are highly supportive of the practice of analyzing the impact of CDS on performance improvement. To understand the implications of this finding, it is helpful to understand its context: performance measures are analyzed in multiple ways at multiple levels of the VA. Perhaps most relevant is that site leaders, including primary care directors, are accountable for quality measures, and these measures are monitored regularly by regional and national leadership. At many clinics, individual provider-level measures are also tracked and in some cases presented to providers [40]. Given this robust and long-standing system of performance measurement, it is intuitive that a mechanism linking CDS to these measures would be helpful.

The results of this study also suggest that analyzing the impact of CDS on performance improvement, though related to user-centered design, is distinct from other user-centered design practices, and may have a different type of influence on the effectiveness of CDS. These other user-centered design practices (pilot testing CDS, assessing provider satisfaction, formal usability assessment), which have been shown to be important and meaningful in other studies [41,42], may be inseparable from the implementation climate and the organizational resources that enable them to take place. However, aspects of the study design (described in section 4.1) may bias results toward the null, so the absence of observed associations must be interpreted with caution.

4.1. Study Design and Setting

Our use of two separate surveys, with separate groups of participants, is an important asset to the study. A handicap for a great deal of survey-based research is that it frequently relies on a single person to report both the outcome and explanatory variables. This is a particular concern when the relevant survey items are at all subjective: perhaps administrators who think that their clinic’s activities qualify as “user-centered design” are also more likely to have a charitable opinion of CDS, either because they have a more positive disposition, or because they themselves oversaw these user-centered design practices, and would like to believe that those practices have been effective. This study overcomes that common limitation by linking two surveys together, with one survey providing the explanatory variables of interest, and the other survey providing the outcome and covariates. This design adds to the credibility of the statistically significant findings, but also merits particular caution in the interpretation of the null findings, especially because of the “one to many” linking of the two surveys (i.e. a single VA medical center chief of staff reporting the user-centered design practices on behalf of multiple clinics).

The study also benefited from its inclusion of sites regardless of their funding for informatics research or involvement of informatics researchers in user-centered design, thereby better reflecting user-centered design “in the wild.” This does not mean that sites with informatics research funding were excluded (we do not have data that would permit such an analysis), but it means that the study was able to take a wider, more representative view of user-centered design practices.

The timing of the data collection is also meaningful for the interpretation of the results. Data were collected after all sites had been using some version of CDS for at least 8 years [28], so this study contributes to the body of research on the ongoing improvement and maintenance of CDS, a period that is much less studied than the years immediately following the initial implementation of a clinical decision support system. As the number of organizations using CDS grows, the assessment processes surrounding new CDS “rules” (i.e., new decision support) for existing CDS systems will become increasingly important. The VA has changed since these surveys were fielded in 2006–07, but the timing actually improves the study’s relevance to clinics outside the VA with much shorter histories of CDS use.

The data were also collected before the passage of the Health Information Technology for Economic and Clinical Health (HITECH) Act, which dramatically altered health IT in the private sector of the United States [43]. In some ways, the “meaningful use” provisions of the HITECH Act served to make the environment in the private sector more similar to the environment within the VA: well before the HITECH Act, the VA had fostered the adoption of CDS by a) developing CDS for some conditions at a national level and rolling it out to VA facilities across the country, and b) holding VA medical centers and regional networks accountable for health care quality indicators and encouraging those medical centers to develop CDS related to those indicators. The HITECH Act provided the federal government with a less direct mechanism for encouraging the effective use of CDS in the private sector: namely, adjustments to reimbursement from Medicare and Medicaid. But because most care within the VA is paid for by the VA itself, and not by Medicare or Medicaid, HITECH’s influence on the VA was modest.

This study is among the many health informatics studies conducted at large healthcare systems with homegrown information systems. This category of research can provide insights into what is possible with sufficient flexibility and control of a system, and helps identify some of the negative implications of systems that do not permit health care organizations to make changes on the basis of their own investigations. The vendor-developed (third-party) EHR and CDS systems in use at an increasing number of hospitals are often criticized for lacking this flexibility [44].

The VA’s capacity for performance measurement is also a factor in the interpretation of this analysis. Outside the VA, most health systems have a much smaller infrastructure for performance measurement, and have likely placed relatively less emphasis on those activities in the past. This difference may be diminishing as payers increasingly shift to value-based payment systems (e.g., Medicare’s Merit-based Incentive Payment System), thereby placing pressure on primary care practices to invest in such an infrastructure. Still, the nature of performance measurement remains different at the VA, which has measured and reported its performance to Congress for decades.

4.2. Study Outcome

There are inherent limitations in the use of a subjective measure of CDS utility, and utility from the perspective of primary care clinic directors may diverge from the utility perceived by other clinicians who use the system on a regular basis for patient care. However, supporting clinical decision-making, disseminating information about medical best practices, and reducing medical errors are among the central responsibilities of primary care directors at the VA, which makes them well-positioned to assess the usefulness of CDS in supporting these aims. Evaluations of health IT systems frequently use clinical or operational data from the systems being evaluated, such as clinical quality measures, orders placed, and clicks recorded. But qualitative research on health IT implementation supports the notion that a good way to assess whether a health IT system is functioning well is to ask a person who depends on that system [2].

The outcome measure was also bolstered by sensitivity analyses. Beyond illustrating that the findings were robust, these analyses show that the findings are consistent across each of the three aspects of perceived CDS utility: supporting clinical decisions, improving the dissemination of new information, and reducing medical errors.

4.3. Measures of User-Centered Design

Our findings are best understood in the context of a growing awareness of usability issues and the potential value of user-centered design for CDS: of 120 usability studies published in the last 25 years, 88% were published in the last 10 years [45]. These studies occur at several phases of system development and deployment (requirements/development, prototyping, etc.), but the majority are implementation / post-implementation evaluations, and our analyses shed light on this important subset of user-centered design.

The survey data we use are highly informative but are not a comprehensive representation of user-centered design. The research team that developed the survey conducted cognitive interviews and pilot tests to ensure consistent interpretations of survey questions; however, as with all surveys, some variability in how respondents interpreted questions may have persisted and contributed to measurement error.

4.4. Conclusions

The first implication of these findings is that analyzing the impact of CDS on performance improvement appears to be important and useful, and should be done more consistently. The practice was significantly associated with the perceived utility of CDS across all model specifications adjusting for a wide range of other factors, and indeed it was the only practice to show an association with perceived utility of CDS in this study. This finding provides quantitative support to qualitative work conducted in diverse practice settings that similarly highlighted the value of linking CDS to quality goals and performance improvement [26]. The association does not necessarily mean that VA medical centers that already analyze the impact of CDS on performance improvement should do so more often, or more extensively; we could not evaluate a “dose-response” relationship. However, the study did find that the association was particularly strong for community-based clinics, which further supports the value of this practice as a way to improve CDS at clinics that are less connected to VA medical center resources, and have fewer opportunities to be influenced by the culture of improvement that can be fostered at a large academic medical center.

The process of evaluating the impact of CDS on performance measures is not costless: it requires an investment of time and resources, and we were not able to evaluate these investments in this study. Indeed, this is true for all four of the user-centered design practices we examined: we sought to evaluate their potential benefit, but a description of the costs of these practices was beyond the scope of the study. In addition, the over-application of performance measurement has the potential for unintended consequences, particularly at organizations that have already achieved high levels of performance [46], but this analysis helps identify an underappreciated way that performance measurement can be constructive.

Research on clinical decision support has often highlighted the impact of factors that are extremely difficult to change such as provider workload and time constraints [47]. This study took structural characteristics and implementation climate into account, but explicitly examined individual practices that can be adopted fairly readily. Given the VA’s infrastructure for performance measurement and improvement, drawing connections between these measures and CDS is a highly feasible step that facilities can take to improve CDS [48,49]. These findings have the most direct relevance within the VA, which has a long history of performance improvement and of CDS, but clinics outside the VA increasingly face similar performance improvement imperatives, and are rapidly adopting CDS systems that mirror the ones the VA has used for years [50].

In many respects, CDS is emblematic of the many changes that accompany the transition from volume to value: it has the potential to transform the practice of medicine, but its effectiveness is highly dependent on the way it is implemented and maintained. Our analysis identifies high average levels of perceived CDS utility, but also points toward informative variability. In doing so, the study represents a step toward understanding the mutable factors that distinguish the clinics most successful in using CDS.

Highlights.

We studied user-centered design practices related to clinical decision support

We looked at clinic-level factors in primary care clinics across the VA

Primary care directors rated the utility of clinical decision support highly

Clinics may improve clinical decision support by analyzing its impact on performance

Summary table.

What was already known on this topic:

There is substantial variability in the usability, efficacy, and even safety of clinical decision support (CDS), and user-centered design offers a way to identify and respond to these potential deficiencies.

Studies of user-centered design have frequently drawn data from a relatively small number of sites, and have often failed to account for important contextual factors (e.g. clinic resources, implementation climate, and structural characteristics).

What this study has added to our knowledge:

User-centered design practices for CDS varied across US Veterans Affairs (VA) primary care clinics.

The directors of these VA primary care clinics gave generally positive ratings of the utility of CDS.

One of the four user-centered design practices we studied was “analysis of CDS impact on performance improvement.” This practice was the only one that was associated with higher ratings of CDS utility.

Acknowledgments

This research was supported by NIH/National Center for Advancing Translational Science (NCATS) UCLA CTSI Grant Number TL1TR000121, the VA Office of Quality and Performance, and VA Health Services Research and Development MRC 05-093 (PI: E.M.Y.). Dr. Yano’s time was supported by a Research Career Scientist Award (Project #RCS 05-195). We would like to thank Ismelda Canelo for her help in using Clinical Practice Organizational Survey data and Danielle Rose, Brian Mittman, Douglas Bell, Brennan Spiegel, and Ron Hays for their thoughtful advice at various stages of the analysis.

Appendix: Constructs and Measures

| Domain | Construct | Source | Measures |
|---|---|---|---|
| Perceived Utility of CDS | Perceived Utility of CDS | Primary Care Clinic Director | Mean of 3 items: “CPRS improves dissemination of new information”; “CPRS improves clinical decision support”; “CPRS reduces medical errors” (1=strongly disagree, 5=strongly agree) |
| User-Centered Design | User-Centered Design Practices | VA Medical Center Chief of Staff | Four indicators: 1) Test piloting reminders prior to full scale implementation; 2) Post implementation assessment of provider satisfaction; 3) Formal evaluation of reminder usability (human factors or usability assessment); 4) Analysis of reminder impact on performance improvement (1=yes, 0=no) |
| Structural Characteristics | Clinic type | Administrative Data | 1 = Community-Based Outpatient Center; 0 = VA Medical Center |
| | Academic affiliate | Administrative Data | Presence of a primary care training program (1=yes, 0=no) |
| | Size | Administrative Data | Number of unique patients, in 1000’s |
| Implementation Climate | Required Guideline Use | Primary Care Clinic Director | PCPs required to observe explicit practice guidelines when ordering specified tests or procedures (1=yes, 0=no) |
| | Health IT Use/Customization | VA Medical Center Chief of Staff | Providers sometimes turn guideline prompts off (1=yes, 0=no) |
| | Competing demands | Primary Care Clinic Director | 2 items: 1) Hard to make any changes because so busy seeing patients; 2) Competing demands across too many initiatives [serve as a barrier to improving performance] (1=strongly disagree, 5=strongly agree) |
| | Resistance to Performance Improvement | Primary Care Clinic Director | 3 items: 1) Resistance from PCPs; 2) Resistance from local managers; 3) Resistance among local support staff (1=not a barrier, 4=large barrier) |
| Available Resources | IT staff sufficiency | Primary Care Clinic Director | Mean of 2 items: 1) Sufficiency of IRM or CPRS technical staff; 2) Sufficiency of clinical application coordinators (1=not at all sufficient, 5=completely sufficient) |
| | HIT Expertise | VA Medical Center Chief of Staff | Access to medical informatics expertise (e.g. informatics-trained clinicians) (1=never sufficient, 5=always sufficient) |
| | HIT Training | Primary Care Clinic Director | Mean of 2 items: 1) Adequate types of HIT training; 2) Adequate time for HIT training (1=strongly disagree, 5=strongly agree) |

IRM = Information Resources Management; CPRS = Computerized Patient Record System; IT = Information Technology; CDS = Clinical Decision Support
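As a rough illustration of how the composite outcome (perceived utility of CDS) could be scored for a single respondent, the sketch below averages the three 1–5 items. The function name, argument names, and the handling of missing items (returning `None`) are assumptions made for illustration; the appendix does not specify how missing responses were treated.

```python
from statistics import mean


def perceived_utility(dissemination, decision_support, medical_errors):
    """Mean of three Likert items (1=strongly disagree, 5=strongly agree).

    Returns None if any item is missing (an assumption, not the study's rule).
    """
    items = [dissemination, decision_support, medical_errors]
    if any(item is None for item in items):
        return None
    if any(not 1 <= item <= 5 for item in items):
        raise ValueError("each item must be between 1 and 5")
    return mean(items)


# Example respondent with hypothetical ratings of 4, 5, and 4.
score = perceived_utility(4, 5, 4)
```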

Footnotes


VA = Veterans Affairs, CDS = Clinical Decision Support, RAP = Rapid Assessment Process, CPRS = Computerized Patient Record System

Author Contributions

Julian Brunner conceived and designed the study, analyzed and interpreted the data, and wrote the manuscript.

Emmeline Chuang assisted in study design and in the analysis and interpretation of the data, revised the manuscript for important intellectual content, and approved the final version.

Caroline Goldzweig assisted in the analysis and interpretation of the data, revised the manuscript for important intellectual content, and approved the final version.

Cindy L. Cain assisted in the analysis and interpretation of the data, revised the manuscript for important intellectual content, and approved the final version.

Catherine Sugar assisted in the analysis and interpretation of the data, revised the manuscript for important intellectual content, and approved the final version.

Elizabeth M. Yano acquired the data, assisted in study design, assisted in the analysis and interpretation of the data, revised the manuscript for important intellectual content, and approved the final version.

References

1. Johnson CM, Johnson TR, Zhang J. A user-centered framework for redesigning health care interfaces. J Biomed Inform. 2005;38:75–87. doi: 10.1016/j.jbi.2004.11.005.

2. Yen PY, Bakken S. Review of health information technology usability study methodologies. J Am Med Inform Assoc. 2012;19:413–422. doi: 10.1136/amiajnl-2010-000020.

3. Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, Samsa G, Hasselblad V, Williams JW, Musty MD, Wing L, Kendrick AS, Sanders GD, Lobach D. Effect of clinical decision-support systems: A systematic review. Ann Intern Med. 2012;157:29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

4. Harrison MI, Koppel R, Bar-Lev S. Unintended Consequences of Information Technologies in Health Care - An Interactive Sociotechnical Analysis. J Am Med Inform Assoc. 2007:542–549. doi: 10.1197/jamia.M2384.

5. Koppel R, Cohen A, Abaluck B, Localio AR, Kimmel SE, Strom BL. Role of Computerized Physician Order Entry Systems in Facilitating Medication Errors. J Am Med Assoc. 2005;293:1197–1203. doi: 10.1001/jama.293.10.1197.

6. Campbell E, Sittig DF, Ash JS, Guappone KP, Dykstra RH. Types of Unintended Consequences Related to Computerized Provider Order Entry. J Am Med Inform Assoc. 2006;13:547–556. doi: 10.1197/jamia.M2042.

7. Horsky J, Schiff GD, Johnston D, Mercincavage L, Bell D, Middleton B. Interface design principles for usable decision support: A targeted review of best practices for clinical prescribing interventions. J Biomed Inform. 2012;45:1202–1216. doi: 10.1016/j.jbi.2012.09.002.

8. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform. 2004;37:56–76. doi: 10.1016/j.jbi.2004.01.003.

9. Patel VL, Kannampallil TG. Cognitive informatics in biomedicine and healthcare. J Biomed Inform. 2015;53:3–14. doi: 10.1016/j.jbi.2014.12.007.

10. Jones SS, Rudin RS, Perry T, Shekelle PG. Health information technology: an updated systematic review with a focus on meaningful use. Ann Intern Med. 2014;160:48–54. doi: 10.7326/M13-1531.

11. Yano EM, Soban LM, Parkerton PH, Etzioni DA. Primary care practice organization influences colorectal cancer screening performance. Health Serv Res. 2007;42:1130–1149. doi: 10.1111/j.1475-6773.2006.00643.x.

12. Yano EM, Simon BF, Lanto AB, Rubenstein LV. The evolution of changes in primary care delivery underlying the Veterans Health Administration’s quality transformation. Am J Public Health. 2007;97:2151–2159. doi: 10.2105/AJPH.2007.115709.

13. Pifer EA, Teich JM, Sittig DF, Jenders R. Improving outcomes with clinical decision support: an implementer’s guide. 2nd ed. Chicago: HIMSS; 2011.

14. Byrne C, Sherry D, Mercincavage L, Johnston D, Pan E, Schiff G. Advancing Clinical Decision Support. 2011.

15. McMullen C, Ash J, Sittig D, Bunce A, Guappone K, Dykstra R, Carpenter J, Richardson J, Wright A. Rapid Assessment of Clinical Information Systems in the Healthcare Setting: An Efficient Method for Time-Pressed Evaluation. Methods Inf Med. 2011;50:299–307. doi: 10.3414/ME10-01-0042.

16. Unertl KM, Novak LL, Johnson KB, Lorenzi NM. Traversing the many paths of workflow research: developing a conceptual framework of workflow terminology through a systematic literature review. J Am Med Inform Assoc. 2010;17:265–73. doi: 10.1136/jamia.2010.004333.

17. Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care. 2010;19(Suppl 3):i68–i74. doi: 10.1136/qshc.2010.042085.

18. Langley G, Moen RD, Nolan KM, Nolan TW, Norman CL, Provost LP. The Improvement Guide: A Practical Approach to Enhancing Organizational Performance. John Wiley & Sons; 2009.

19. Mitchell PH, Shortell SM. Adverse outcomes and variations in organization of care delivery. Med Care. 1997;35:NS19–32. doi: 10.1097/00005650-199711001-00003.

20. Shortell SM, Zimmerman JE, Rousseau DM, Gillies RR, Wagner DP, Draper EA, Knaus WA, Duffy J. The performance of intensive care units: does good management make a difference? Med Care. 1994;32:508–25. doi: 10.1097/00005650-199405000-00009.

21. ISO 9241-210. Ergonomics of human-system interaction – Part 210: Human-centred design for interactive systems. 2010. http://www.iso.org/iso/catalogue_detail.htm?csnumber=52075 (accessed October 22, 2015).

22. Hysong SJ, Teal CR, Khan MJ, Haidet P. Improving quality of care through improved audit and feedback. Implement Sci. 2012;7:45. doi: 10.1186/1748-5908-7-45.

23. Cleary PD, Meterko M, Wright SM, Zaslavsky AM. Are Comparisons of Patient Experiences Across Hospitals Fair? A Study in Veterans Health Administration Hospitals. Med Care. 2014;52:619–625. doi: 10.1097/MLR.0000000000000144.

24. Shin MH, Sullivan JL, Rosen AK, Solomon JL, Dunn EJ, Shimada SL, Hayes J, Rivard PE. Examining the validity of AHRQ’s patient safety indicators (PSIs): is variation in PSI composite score related to hospital organizational factors? Med Care Res Rev. 2014;71:599–618. doi: 10.1177/1077558714556894.

25. Saini SD, Powell AA, Dominitz JA, Fisher DA, Francis J, Kinsinger L, Pittman KS, Schoenfeld P, Moser SE, Vijan S, Kerr EA. Developing and Testing an Electronic Measure of Screening Colonoscopy Overuse in a Large Integrated Healthcare System. J Gen Intern Med. 2016;31:53–60. doi: 10.1007/s11606-015-3569-y.

26. Wright A, Ash JS, Erickson JL, Wasserman J, Bunce A, Stanescu A, St Hilaire D, Panzenhagen M, Gebhardt E, McMullen C, Middleton B, Sittig DF. A qualitative study of the activities performed by people involved in clinical decision support: recommended practices for success. J Am Med Inform Assoc. 2014;21:464–472. doi: 10.1136/amiajnl-2013-001771.

27. Ash JS, Sittig DF, Guappone KP, Dykstra RH, Richardson J, Wright A, Carpenter J, McMullen C, Shapiro M, Bunce A, Middleton B. Recommended practices for computerized clinical decision support and knowledge management in community settings: a qualitative study. BMC Med Inform Decis Mak. 2012;12:6. doi: 10.1186/1472-6947-12-6.

28. Evans DC, Nichol WP, Perlin JB. Effect of the implementation of an enterprise-wide Electronic Health Record on productivity in the Veterans Health Administration. Health Econ Policy Law. 2006;1:163–9. doi: 10.1017/S1744133105001210.

29. Yano EM, Fleming B, Canelo I, Lanto AB, Yee T, Wang M. National survey results for the primary care director module of the VHA clinical practice organizational survey. 2008.

30. Yano EM, Fleming B, Canelo I, Lanto AB, Yee T, Wang M. National survey results for the chief of staff module of the VHA clinical practice organizational survey. 2007.

31. Chou AF, Rose DE, Farmer M, Canelo I, Yano EM. Organizational Factors Affecting the Likelihood of Cancer Screening Among VA Patients. Med Care. 2015;53:1040–9. doi: 10.1097/MLR.0000000000000449.

32. Guerrero EG, Heslin KC, Chang E, Fenwick K, Yano E. Organizational Correlates of Implementation of Colocation of Mental Health and Primary Care in the Veterans Health Administration. Adm Policy Ment Heal Ment Heal Serv Res. 2014. doi: 10.1007/s10488-014-0582-0.

33. Beatty PC, Willis GB. Research synthesis: The practice of cognitive interviewing. Public Opin Q. 2007;71:287–311. doi: 10.1093/poq/nfm006.

34. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

35. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330:765. doi: 10.1136/bmj.38398.500764.8F.

36. Carney BT, West P, Neily J, Mills PD, Bagian JP. The Effect of Facility Complexity on Perceptions of Safety Climate in the Operating Room: Size Matters. Am J Med Qual. 2010;25:457–461. doi: 10.1177/1062860610368427.

37. Weiner BJ, Belden CM, Bergmire DM, Johnston M. The meaning and measurement of implementation climate. Implement Sci. 2011;6:78. doi: 10.1186/1748-5908-6-78.

38. Patterson ES, Doebbeling BN, Fung CH, Militello L, Anders S, Asch SM. Identifying barriers to the effective use of clinical reminders: Bootstrapping multiple methods. J Biomed Inform. 2005;38:189–199. doi: 10.1016/j.jbi.2004.11.015.

39. StataCorp. Stata Statistical Software: Release 13, 2013. 2013. doi: 10.2307/2234838.

40. Hysong SJ, Best RG, Pugh JA. Audit and feedback and clinical practice guideline adherence: making feedback actionable. Implement Sci. 2006;1:9. doi: 10.1186/1748-5908-1-9.

41. Russ AL, Zillich AJ, Melton BL, Russell SA, Chen S, Spina JR, Weiner M, Johnson EG, Daggy JK, McManus MS, Hawsey JM, Puleo AG, Doebbeling BN, Saleem JJ. Applying human factors principles to alert design increases efficiency and reduces prescribing errors in a scenario-based simulation. J Am Med Inform Assoc. 2014:1–10. doi: 10.1136/amiajnl-2013-002045.

42. Middleton B, Bloomrosen M, Dente MA, Hashmat B, Koppel R, Overhage JM, Payne TH, Rosenbloom ST, Weaver C, Zhang J. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013;20:e2–8. doi: 10.1136/amiajnl-2012-001458.

43. Blumenthal D. Launching HITECH. N Engl J Med. 2010;362:382–5. doi: 10.1056/NEJMp0912825.

44. Koppel R, Lehmann CU. Implications of an emerging EHR monoculture for hospitals and healthcare systems. J Am Med Inform Assoc. 2014;2:1–5. doi: 10.1136/amiajnl-2014-003023.

45. Ellsworth MA, Dziadzko M, O’Horo JC, Farrell AM, Zhang J, Herasevich V. An appraisal of published usability evaluations of electronic health records via systematic review. J Am Med Inform Assoc. 2016. doi: 10.1093/jamia/ocw046.

46. Powell AA, White KM, Partin MR, Halek K, Christianson JB, Neil B, Hysong SJ, Zarling EJ, Bloomfield HE. Unintended consequences of implementing a national performance measurement system into local practice. J Gen Intern Med. 2012;27:405–12. doi: 10.1007/s11606-011-1906-3.

47. Saleem JJ, Patterson ES, Militello L, Render ML, Orshansky G, Asch SM. Exploring barriers and facilitators to the use of computerized clinical reminders. J Am Med Inform Assoc. 2005;12:438–47. doi: 10.1197/jamia.M1777.

48. Hysong SJ, Khan MM, Petersen LA. Passive monitoring versus active assessment of clinical performance: impact on measured quality of care. Med Care. 2011;49:883–90. doi: 10.1097/MLR.0b013e318222a36c.

49. Hysong SJ, Best RG, Pugh JA. Clinical practice guideline implementation strategy patterns in Veterans Affairs primary care clinics. Health Serv Res. 2007;42:84–103. doi: 10.1111/j.1475-6773.2006.00610.x.

50. Hsiao CJ, Jha AK, King J, Patel V, Furukawa MF, Mostashari F. Office-based physicians are responding to incentives and assistance by adopting and using electronic health records. Health Aff. 2013;32:1470–1477. doi: 10.1377/hlthaff.2013.0323.