Abstract

Objective

Long-term acceptability among computerized clinical decision support system (CDSS) users in pediatrics is unknown. We examine user acceptance patterns over six years of our continuous computerized CDSS integration and updates.

Materials and Methods

Users of Child Health Improvement through Computer Automation (CHICA), a CDSS integrated into clinical workflows and used in several urban pediatric community clinics, completed annual surveys including 11 questions covering user acceptability. We compared responses across years within a single healthcare system and between two healthcare systems. We used logistic regression to assess the odds of a favorable response to each question by survey year, clinic role, part-time status, and frequency of CHICA use.

Results

Data came from 380 completed surveys between 2011 and 2016. Responses were significantly more favorable for all but one measure by 2016 (OR range 2.90–12.17, all p < 0.01). Increasing system maturity was associated with improved perceived function of CHICA (OR range 4.24–7.58, p < 0.03). User familiarity was positively associated with perceived CDSS function (OR range 3.44–8.17, p < 0.05) and usability (OR range 9.71–15.89, p < 0.01) opinions.

Conclusion

We present a long-term, repeated follow-up of user acceptability of a CDSS. Favorable opinions of the CDSS were more likely in frequent users, physicians and advanced practitioners, and full-time workers. CHICA acceptability increased as it matured and users became more familiar with it. System quality improvement, user support, and patience are important in achieving wide-ranging, sustainable acceptance of CDSS.

Keywords: Decision support, user satisfaction, quality improvement, user acceptability, computerized decision support system

1 Introduction

Clinical decision support systems (CDSS) are increasingly integrated into patient care and electronic health record systems. They provide services ranging from simple reminders to complex risk-prediction algorithms. Their efficacy depends on clinician acceptance and use [1–3]. Prior studies have acknowledged the difficulties and successful aspects of implementing computerized CDSS into clinical practice [4].

A large systematic review of CDSS identified 19 CDSS implementation trials that also included provider satisfaction measures [5]. Most described a generally positive user satisfaction, but only four showed significantly improved satisfaction compared to usual care or no CDSS. Six studies reported provider dissatisfaction. Most studies examined CDSS effects over 6–12 months, and only one study described results for more than three years.

A more recent systematic review of factors influencing guideline-based CDSS implementation identified several knowledge gaps, including user satisfaction and service quality [6]. The authors also did not find any studies reporting on satisfaction with specific CDSS functions or benefits from efficiency or error reduction.

Healthcare technology adoption requires time and institutional effort, and theories such as the Technology Acceptance Model illustrate how perceived ease-of-use and perceived usefulness influence adoption [7]. In addition, software-based technology is often updated to fix unanticipated consequences and offer new features. As a result, satisfaction may change over time, necessitating assessment of CDSS acceptability over the long term.

The Child Health Improvement through Computer Automation (CHICA) system is an evidence-based, computerized CDSS that has been in use since 2004 at several urban community clinics in Indianapolis, IN [8]. The CHICA team regularly reviews weekly usage patterns, holds quarterly user meetings to solicit feedback, and continuously refines the system with upgrades based in part on these data [9]. Formative evaluations are an efficient method to understand user and system needs for iterative improvements. As part of ongoing quality improvement, CHICA users complete annual surveys on the acceptance, usability, and perceived efficacy of the system. Results from the first two years of these surveys demonstrated short-term improvement and general, qualitative acceptance [9]. To our knowledge, long-term acceptability results have not been reported among computerized CDSS users, let alone in pediatrics. The current study examines user attitudes and opinions over six years of continuous computerized CDSS integration and updates. We determine factors related to acceptability, and present results through the perspective of the Technology Acceptance Model.

2 Materials and Methods

2.1 CHICA

CHICA has been previously described in technical and clinical literature [8,10–12]. It is a computerized CDSS that uses patient data to generate patient screening questionnaires (Pre-Screener Form, PSF) that are completed in the waiting room. These answers are uploaded to the CHICA server, which combines them with previous answers and medical history from the patient’s medical record. CHICA uses medical logic modules with embedded priority scores [13] written in Arden Syntax (a programming language for encoding medical knowledge [14]) to compute a prioritized list of recommendations for providers. Along with blood pressure, heart rate, temperature, growth information, and an area to document a physical exam, the six highest priority recommendations are displayed on a Provider Worksheet (PWS). Providers can document their responses to the prompts by checking boxes. Responses go back into the CHICA database to inform future encounters and aid clinical research. See online Supplement B for samples and screenshots of CHICA user interfaces. CHICA also produces “just-in-time” handouts to reinforce provider counseling or collect further patient information. Finally, CHICA generates a prose note that includes the patient-identified risks from the PSF, information presented on the PWS, as well as provider answers to the PWS prompts. This text can be quickly imported into the clinical note. All CHICA interactions with patients, including PSF questions and handouts, are available in English and Spanish. Non-provider clinic personnel primarily interact with CHICA by distributing and collecting PSFs, PWSs (when using paper versions), and handouts.
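The selection of the six highest priority recommendations for the PWS can be illustrated with a minimal Python sketch. It is not the CHICA implementation, which uses Arden Syntax medical logic modules; the `Recommendation` record, the rule names, the numeric scores, and the assumption that a larger score means a higher priority are all hypothetical and chosen only for illustration.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    # Hypothetical record; real CHICA rules are Arden Syntax medical logic modules.
    rule_name: str
    priority: float  # assumption: a larger score means a higher priority


def select_worksheet_prompts(candidates, limit=6):
    """Return the `limit` highest-priority recommendations, highest first."""
    return heapq.nlargest(limit, candidates, key=lambda rec: rec.priority)


# Invented candidates for one visit (names and scores are illustrative only).
candidates = [
    Recommendation("autism_screening", 0.91),
    Recommendation("adhd_followup", 0.85),
    Recommendation("lead_risk", 0.40),
    Recommendation("tobacco_exposure", 0.77),
    Recommendation("car_seat_safety", 0.30),
    Recommendation("depression_screen", 0.88),
    Recommendation("iron_deficiency", 0.55),
    Recommendation("tb_risk", 0.62),
]

worksheet = select_worksheet_prompts(candidates)
for rec in worksheet:
    print(f"{rec.priority:.2f}  {rec.rule_name}")
```

With eight candidate rules, only six reach the worksheet; the two lowest-scoring rules are withheld from the provider for that visit.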

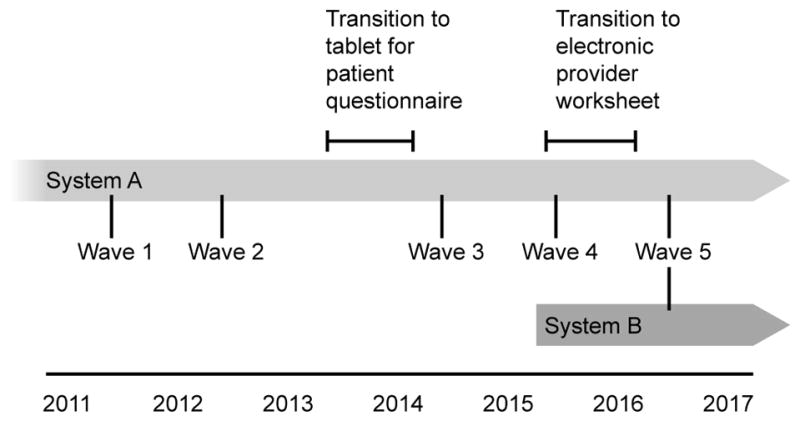

CHICA started within one healthcare system (System A) in 2004 with one clinic and gradually expanded to five clinics by 2013. This healthcare system used the Regenstrief Medical Record System, a homegrown electronic health record (EHR) with origins in 1972 [15,16]. The surveys in this study extended through the last few months of use before System A transitioned to a vendor-based EHR (Epic®, Epic Systems Corporation, Verona, WI), within which CHICA is currently integrated. In 2015, a second health system (System B) adopted CHICA, where it was integrated into a different vendor-based EHR (Cerner®, Cerner Corporation, Kansas City, MO) (see Figure 1 for timeline).

Figure 1. Timeline of relevant CHICA events and survey waves.

2.2 Paper to Electronic Conversion

Over the course of this study, the interfaces and workflow of CHICA changed from scanned paper to electronic methods. Prior to 2013, patients completed a paper PSF that was scanned into image-recognition software, which encoded their responses in a database. The PSF was converted to a tablet interface through a staged rollout over 11 months between the survey administrations in 2012 and 2014 [17]. The basic functionality of prioritized screening questions remained the same. Soon afterwards, the PWS was converted to a webpage that recreated the paper functionality. Providers could access the webpage through a link in the EHR. Between the 2015 and 2016 surveys, providers could use either the webpage or paper versions of the PWS, though paper use dwindled closer to the 2016 survey. The paper PWS version was officially disabled after the conclusion of the 2016 survey. The ability to import the CDSS-generated documentation into the clinic note remained unchanged throughout this process.

2.3 Surveys

The CHICA user acceptability survey consisted of 12 core questions (Table 1, which also includes abbreviations used hereafter) and several provider characteristic questions (e.g., clinic role, percent time in clinic).

Table 1.

Core acceptability questions and associated abbreviations used in this study. Several questions were negatively worded and their scoring was reversed.

| Domain | Question | Abbreviation | Reverse scored (negatively worded) |
|---|---|---|---|
| Function | CHICA sometimes reminds me of things I otherwise would have forgotten. | Reminds me | |
| Function | CHICA makes documentation easier. | Easier documentation | |
| Function | CHICA often makes mistakes. | Makes mistakes | X |
| Function | CHICA has uncovered issues with patients that I might not otherwise have found out about. | Uncovers missed issues | |
| Function | I often disagree with the advice CHICA gives. | Disagree with advice | X |
| Function | The handouts CHICA produces are useful. | Useful handouts | |
| Function | CHICA makes lots of errors. | Makes errors | X |
| Usage | I rarely, if ever, use CHICA. | Rarely use | X |
| Usage | CHICA tends to slow down the clinic. | Slows clinic | X |
| Usage | I would rather not use CHICA. | Rather not use | X |
| Usage | CHICA has too many technical problems. | Technical problems | X |
| Usage | Technical support for CHICA is very good. | Good technical support | |

Five core questions were positively worded and seven were negatively worded. We note that the item “CHICA often makes mistakes” was aimed towards clinical accuracy, and “CHICA makes lots of errors” was oriented more towards technical issues. The survey also included other items relating to specific decision support modules under evaluation, implementing, for example, medical-legal guidance, autism screening guidelines, and Attention-Deficit/Hyperactivity Disorder (ADHD) management. Only core questions—which address the general acceptability of the system—are included in this study. They were prepared as Likert items with a 5-point ordered response scale of strongly agree, somewhat agree, neutral, somewhat disagree, and strongly disagree. In order to encourage candid responses and suggestions for improvement, we did not collect identifiable information from participants.

We distributed surveys within System A annually in summer between 2011 and 2016, except for 2013 due to lack of funding (Figure 1). System B received the survey in 2016 only. As this survey was intended as a census rather than a representative sample, a research assistant (RA) from an independent research support network approached all CHICA users, including administrative staff, nurses, medical assistants, advanced practitioners, and physicians (residents and faculty). The RA identified herself as separate from the CHICA developers, reviewed the anonymous nature of the survey, and invited the CHICA users to complete the surveys. A $5 gift card was offered as compensation. The surveys were completed before new intern physicians started in the clinics each year.

The surveys focused on a variety of concerns, and were both summative and formative, consistent with health information technology evaluation recommendations [18]. The CHICA development team implements continuous quality improvement and regular feature upgrades informed by this survey and regular contact with users [9].

Study data were collected and managed using REDCap, a web-based survey and database tool, hosted at Indiana University [19]. We dichotomized responses into favorable versus unfavorable with respect to the CHICA system (negatively worded questions were reverse scored). Since our primary outcome was an explicitly favorable response, neutral responses were categorized as unfavorable. We classified these core questions into questions about how CHICA functions or users’ usage of CHICA (Table 1). Clinic role was defined as provider (physician or advanced practitioner) or non-provider (nurse, MA, administrative staff). Clinic work time was self-reported as part-time or full-time. CHICA use was determined from a question, “I rarely, if ever, use CHICA”; “disagree” and “strongly disagree” responses were coded as frequent users, and all others were coded as infrequent users. Intention to use CHICA was defined by a negative response to the reverse-coded question, “I would rather not use CHICA.”
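The coding rules in this paragraph can be summarized in a short Python sketch. It is an illustration of the stated rules rather than the analysis code (the analyses were run in R); the function names are ours, and we assume responses arrive as the literal scale labels and that missing responses are excluded beforehand.

```python
# Response labels from the 5-point scale described in the survey section.
AGREE = {"strongly agree", "somewhat agree"}
DISAGREE = {"strongly disagree", "somewhat disagree"}


def is_favorable(response: str, negatively_worded: bool) -> bool:
    """Dichotomize one Likert response with respect to the CDSS.

    Negatively worded items are reverse scored, so disagreement is favorable.
    Neutral responses fall through to unfavorable in both cases.
    """
    answer = response.strip().lower()
    favorable_answers = DISAGREE if negatively_worded else AGREE
    return answer in favorable_answers


def is_frequent_user(rarely_use_response: str) -> bool:
    """Frequent users are those who disagree with 'I rarely, if ever, use CHICA'."""
    return is_favorable(rarely_use_response, negatively_worded=True)


# Illustrative calls (the item wording is taken from Table 1).
print(is_favorable("Somewhat agree", negatively_worded=False))    # Easier documentation
print(is_favorable("Neutral", negatively_worded=False))           # neutral counts as unfavorable
print(is_favorable("Strongly disagree", negatively_worded=True))  # Makes mistakes
print(is_frequent_user("Somewhat agree"))                         # agrees with "rarely use"
```

Treating neutral as unfavorable keeps the outcome an explicitly favorable response, at the cost of some granularity.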

We calculated phi coefficients to test correlation between survey items. We used multiple logistic regression to examine factors associated with a favorable response for each core question, controlling for survey year, clinic role, part-time status, and CHICA use frequency. In order to assess the relative contributions to user satisfaction of familiarity with the software versus the maturity of the software itself, we again used multiple logistic regression to compare System B (new users of a mature system [2016]) with System A over time (new users of a new system [2011] and familiar users of a mature system [2016]). We deemed System A 2011 and System B 2016 users to have similar familiarity with the system, and all users in 2016 to be using a similar system maturity level. We used RStudio [20] and R software [21] to conduct the statistical analyses. This study was reviewed and approved by the Indiana University Human Subjects Office.
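To make the link between a fitted logistic model and a reported odds ratio concrete, the following is a minimal, standard-library Python sketch of maximum-likelihood logistic regression by Newton-Raphson. It is not the study code (the analyses used R), the counts are invented, and the single binary predictor stands in for the full set of covariates; further predictor columns could be appended to each row in the same way.

```python
import math


def solve_linear_system(matrix, vector):
    """Solve matrix @ x = vector by Gaussian elimination with partial pivoting."""
    size = len(vector)
    aug = [row[:] + [value] for row, value in zip(matrix, vector)]
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(aug[r][col]))
        if aug[pivot][col] == 0.0:
            raise ValueError("singular system; predictors may be collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, size):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, size + 1):
                aug[row][k] -= factor * aug[col][k]
    solution = [0.0] * size
    for row in range(size - 1, -1, -1):
        tail = sum(aug[row][k] * solution[k] for k in range(row + 1, size))
        solution[row] = (aug[row][size] - tail) / aug[row][row]
    return solution


def fit_logistic(rows, outcomes, iterations=25):
    """Return coefficients; each row must start with 1.0 for the intercept."""
    width = len(rows[0])
    beta = [0.0] * width
    for _ in range(iterations):
        gradient = [0.0] * width
        information = [[0.0] * width for _ in range(width)]
        for x, y in zip(rows, outcomes):
            linear = sum(b * v for b, v in zip(beta, x))
            p = 1.0 / (1.0 + math.exp(-linear))
            weight = p * (1.0 - p)
            for i in range(width):
                gradient[i] += (y - p) * x[i]
                for j in range(width):
                    information[i][j] += weight * x[i] * x[j]
        step = solve_linear_system(information, gradient)
        beta = [b + s for b, s in zip(beta, step)]
    return beta


# Invented toy counts (not study data): (frequent_user, favorable) -> respondents.
toy_counts = {(1, 1): 30, (1, 0): 10, (0, 1): 15, (0, 0): 25}
rows, outcomes = [], []
for (frequent_user, favorable), count in toy_counts.items():
    rows.extend([[1.0, float(frequent_user)]] * count)
    outcomes.extend([favorable] * count)

coefficients = fit_logistic(rows, outcomes)
odds_ratios = [math.exp(b) for b in coefficients]
print(f"OR for frequent use: {odds_ratios[1]:.2f}")
```

With one binary predictor, the exponentiated coefficient equals the cross-product ratio of the 2×2 table, here (30 × 25) / (10 × 15) = 5, which gives a simple check on the fit.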

3 Results

We collected data from 352 completed surveys out of the 354 surveys distributed to System A members over five waves. Twenty-eight users from System B completed surveys in 2016. An average of 70.4 (SD 6.9) users per year responded in System A. All surveys that were distributed were returned, except for the 2012 wave (97% completion). Complete responses from System A for our variables of interest were available for an average of 93.8% (SD 0.8%) of core questions. Slightly more than half of System A respondents were providers (55.1%) or full-time employees (53.4%). Of the 64 physicians that identified the year they completed residency, the earliest was 1967 and median was 2002. Overall, 80% of respondents reported being frequent CHICA users, and among providers, an average of 92% (SD 2%) identified themselves as frequent users each year (Table 2). The phi coefficients of correlation for pairwise question results ranged between 0.03 and 0.49 (median 0.23).
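For readers less familiar with the phi coefficient reported above, the following Python sketch shows how it is obtained for one pair of dichotomized items. The response vectors are invented for illustration and are not study data; the function name is ours.

```python
import math


def phi_coefficient(item_a, item_b):
    """Phi coefficient for two equally long sequences of 0/1 (favorable) codes."""
    n11 = n10 = n01 = n00 = 0
    for a, b in zip(item_a, item_b):
        if a and b:
            n11 += 1
        elif a:
            n10 += 1
        elif b:
            n01 += 1
        else:
            n00 += 1
    # Product of the four marginal totals of the 2x2 table.
    margins = (n11 + n10) * (n01 + n00) * (n11 + n01) * (n10 + n00)
    if margins == 0:
        raise ValueError("phi is undefined when an item has no variation")
    return (n11 * n00 - n10 * n01) / math.sqrt(margins)


# Invented responses from eight hypothetical respondents to two items.
reminds_me = [1, 1, 1, 0, 0, 1, 0, 1]
useful_handouts = [1, 0, 1, 0, 1, 1, 0, 1]
print(round(phi_coefficient(reminds_me, useful_handouts), 2))
```

Values near 0 indicate that two items capture largely separate opinions, whereas values near 1 would suggest redundant questions.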

Table 2.

Survey and respondent characteristics, divided by health system.

| Characteristic | System A | System B | P* |
|---|---|---|---|
| n | 352 | 28 | |
| Year, n (%) | | | <0.01 |
| 2011 | 71 (20.2) | - | |
| 2012 | 64 (18.2) | - | |
| 2014 | 63 (17.9) | - | |
| 2015 | 79 (22.4) | - | |
| 2016 | 75 (21.3) | 28 (100.0) | |
| Clinic role, n (%) | | | 0.06 |
| Provider | 194 (55.1) | 22 (78.6) | |
| Other | 154 (43.8) | 6 (21.4) | |
| No response | 4 (1.1) | 0 | |
| CHICA usage, n (%) | | | 0.08 |
| Frequent | 293 (83.2) | 19 (67.9) | |
| Infrequent | 53 (15.1) | 8 (28.6) | |
| No response | 6 (1.7) | 1 (3.6) | |
| Work time, n (%) | | | <0.01 |
| Full time | 188 (53.4) | 5 (17.9) | |
| Part time | 153 (43.5) | 22 (78.6) | |
| No response | 11 (3.1) | 1 (3.6) | |
| Physician status, n (%)** | | | <0.001 |
| Faculty | 121 (62.4) | 4 (18.2) | |
| Resident | 67 (34.5) | 18 (81.8) | |
| No response | 6 (3.1) | 0 (0) | |

*Fisher’s exact test

**Limited to subset of respondents identifying as providers

During the 3-month periods surrounding survey waves in 2014, 2015, and 2016, the average weekly PSF response rate was 88.6% (standard deviation 3.3%). In this same period, providers returned 77.6% (SD 11%) of their worksheets.

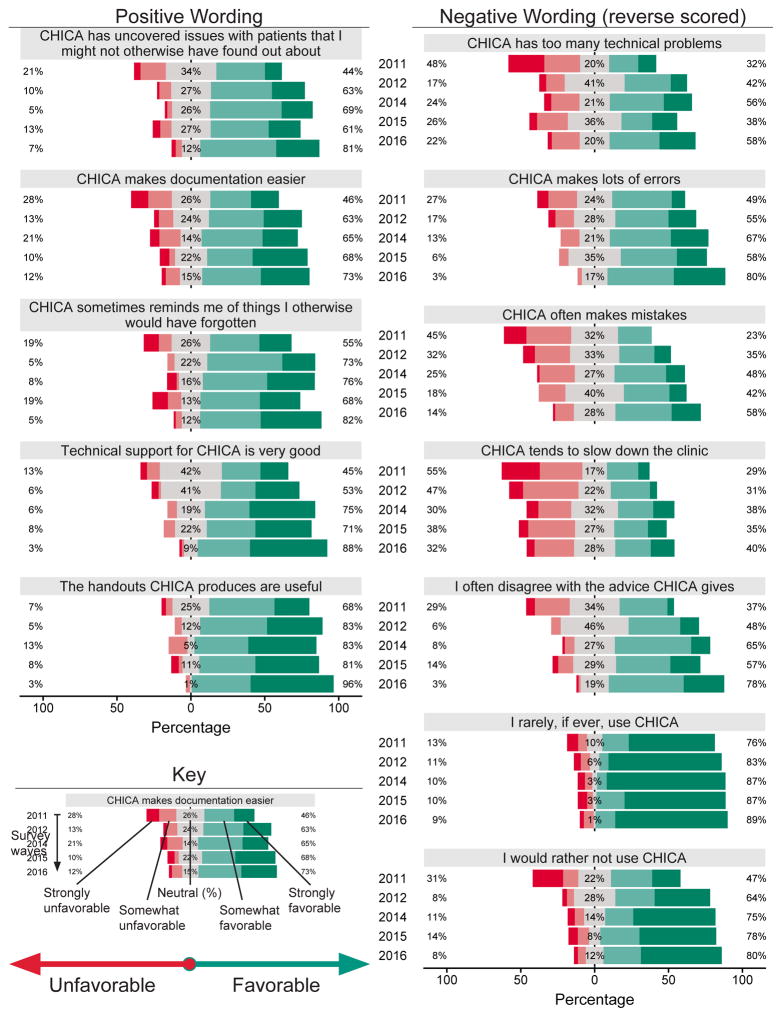

Figure 2 presents the percent of respondents who answered favorably to each question by survey year. The favorable responses generally outweigh the unfavorable opinions, with a notable portion of users within the neutral area. However, over the five waves, neutral responses appeared stable to diminishing, as “stronger” opinions prevailed. A rightward and downward trend visually indicates a satisfaction increase over time.

Figure 2. Results of user responses by question and year.

Percents indicate portion agreeing, disagreeing, or responding neutrally to survey questions in each year. Neutral responses are shown here divided evenly along the agree and disagree axis. Negatively worded questions are reverse scored such that all results are shown with leftward responses being unfavorable to the CDSS and rightward responses being favorable. Numbers may not add to 100% due to rounding.

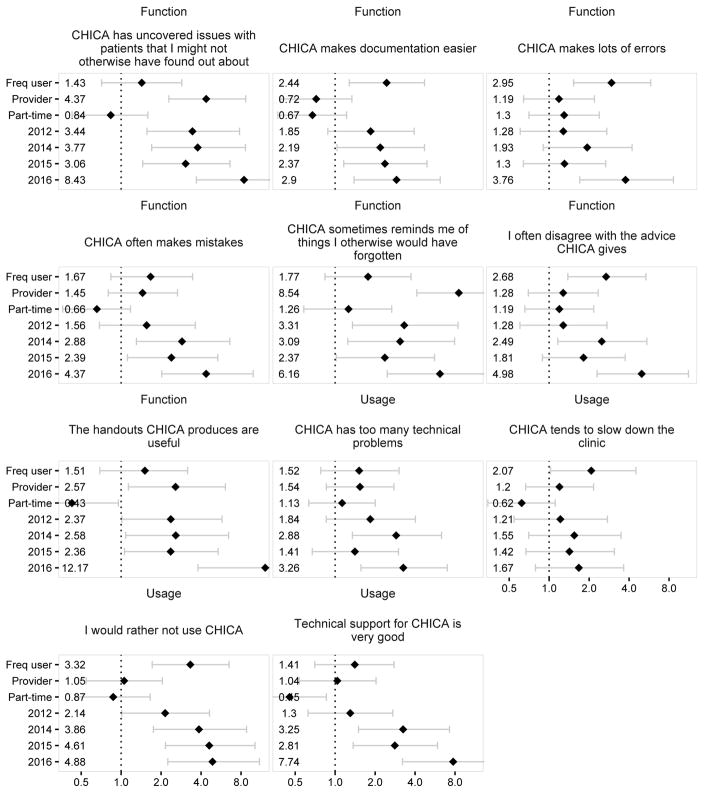

Multiple logistic regression outputs are represented as forest plots in Figure 3. This provides a core question-level understanding of acceptance trends. For example, each year users reported steady improvement in CHICA making documentation easier, whereas markedly more users felt CHICA handouts were useful in 2016. In 2012 (the second year of surveys), significantly more users preferred to use CHICA and reported that CHICA uncovered missed issues and reminded them of forgotten items. By 2016, CHICA acceptance increased in all measures except one (Slows clinic). Compared to the 2011 baseline, CHICA acceptance never significantly declined.

Figure 3. Adjusted logistic regression models of responding favorably to the acceptability survey.

Each question represents a separate, adjusted model. Odds ratio estimates and 95% confidence intervals are depicted. Freq user = frequent CHICA user.

Frequent CHICA users reported easier documentation, fewer errors, and more agreement with advice given by CHICA. Additionally, the frequent users intended to use CHICA significantly more than non-frequent users. Providers were more likely to report CHICA uncovered missed issues, reminded them of things otherwise forgotten, and provided useful handouts. Part-time workers viewed CHICA’s technical support and handouts less favorably than full-time employees.

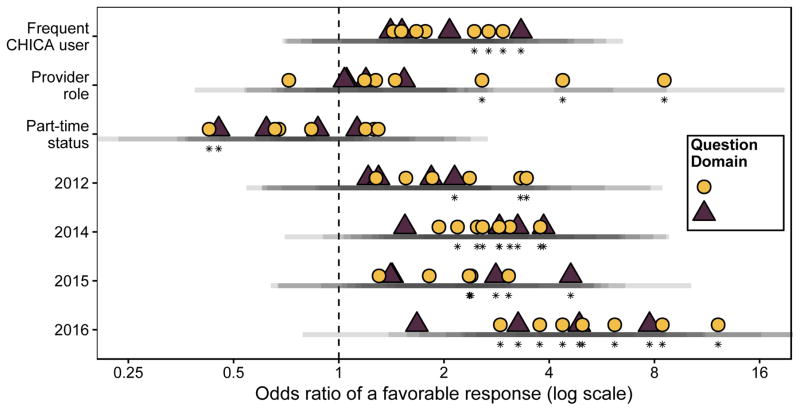

Figure 4 is a summary view of these 11 regression models (one for each acceptance measure), and identifies function versus usage measures across the regression predictors. User acceptance was more heterogeneous in later years. Table A.1 presents the adjusted model results.

Figure 4. Summary of logistic regression models of responding favorably towards CHICA.

Each point represents an odds ratio from a separate, adjusted model for each core acceptability question. The reference year is 2011. Transparent bars below point estimates show overlapping 95% confidence intervals. Asterisks denote significance at alpha = 0.05.

3.1 User familiarity and system maturity

Twenty-eight survey responses from System B were separately compared to 71 (2011) and 75 (2016) from System A. Users with the same maturity of software but more familiar using it reported better technical support, easier documentation, fewer errors, more useful handouts, and a stronger preference to use CHICA (Table 3).

Table 3.

Significant associations between system maturity and user familiarity with users’ assessment of system function and usage.

| Domain | Question | OR (95% CI) predicting a favorable response* |
|---|---|---|
| Effect of system maturity (2016 System B versus 2011 System A [reference]) | | |
| Function | CHICA has uncovered issues with patients that I might not otherwise have found out about | 5.18 (1.63–18.78) |
| Function | CHICA often makes mistakes | 7.58 (2.43–26.34) |
| Function | I often disagree with the advice CHICA gives | 4.24 (1.31–15.85) |
| Effect of user familiarity (2016 System A versus 2016 System B [reference]) | | |
| Function | CHICA makes documentation easier | 6.29 (1.9–23.87) |
| Function | CHICA makes lots of errors | 3.44 (1.04–11.68) |
| Function | The handouts CHICA produces are useful | 8.17 (1.73–46.68) |
| Usage | Technical support for CHICA is very good | 15.89 (3.81–85.34) |
| Usage | I would rather not use CHICA | 9.71 (3.16–33.54) |

*Models adjusted for clinic role, part-time status and CHICA use frequency

Respondents with similar familiarity but more mature software more strongly identified CHICA as uncovering missed issues, rarely making mistakes and providing advice with which they agreed – all of which were function-related measures. No measures in these analyses had a significant decrease in favorability related to user familiarity or system maturity. The full results are given in Tables A.2 and A.3.

4 Discussion

This study presents long-term, repeated follow-up data of user acceptance of a CDSS in use since 2004 in busy pediatric ambulatory clinics. CHICA users reported increasing acceptance with both the function and usage of the system over time. Acceptability also changed favorably with user familiarity and system maturity.

The satisfaction reported on our surveys could be due to many factors. In a systematic review of CDSS effects, Bright et al. [5] described several factors associated with high provider satisfaction, including use in academic and ambulatory settings, EHR integration, local development, synchronous and point of care recommendations, and not requiring a mandatory response. More recently, Kilsdonk et al. [6] reiterated several of these same factors in a separate systematic review. CHICA shares these characteristics, and also has regular user support meetings, onsite technical support, and regular system upgrades, which may contribute to the positive results in this study. More importantly, CHICA is built on four factors previously associated [4] with improving clinical practice: integration in clinical workflow, actionable recommendations, point of care decision support, and computer-based methods. We suggest our clinician acceptability is also driven by improved clinical practice.

We can also turn to the science of information technology implementation to illuminate the connections between our users’ responses. The Technology Acceptance Model (TAM) and its variations are widely used to describe adoption of information systems at the individual level, including within the health information technology (IT) setting [7]. At its core, TAM describes a pathway where perceived usefulness (function) and ease of use (usage) influence a user’s attitude toward a technology, their intention to use it, and, in turn, actually using the system [22]. Our study was not designed to test causation, but our results describe associations that fit well with the basis of the TAM. Three function domain questions (addressing “usefulness”: Easier documentation, Makes errors, Disagree with advice) were associated with frequent CHICA use. Recent efforts to extend TAM for health IT show that perceived reliability (Makes mistakes, Makes errors in our study) and compatibility with work goal (Uncovers missed issues, Reminds me, Easier documentation in our study) are significant contributors to the usefulness and ease of use variables [23]. Our results also show significant association of favorable responses over time with questions addressing those topics. Finally, intention to use CHICA was strongly associated with frequent use, the final pathway for technology adoption reflecting intention and its subsequent behavior.

Another factor that may affect CDSS user responses is the advertised vision of health information technology [24]. Satisfaction is dampened when physicians perceive a quality reduction attributed to a gap between the current state and the promise of a meaningfully useful EHR [25]. Even more extreme, the lofty expectations for future systems described in popular media [26] may breed disappointment when a user turns to the reality of day-to-day implementation.

Frequent CHICA users felt CHICA made documentation easier, suggesting familiarity with the system helped efficiency [27]. Additionally, they felt CHICA was more accurate than infrequent users did. We propose the perceived inaccuracy helped drive infrequent use, per models of technology adoption where perceptions drive adoption [7], but this study can only determine association, not causality.

End-user satisfaction can vary by clinical role [28,29]. Based on prior CHICA analyses, clinicians respond to nearly half of the recommendations displayed [9,30,31], which is similar to historical estimates of clinician engagement with CDSS [32]. In our study, nearly all clinicians self-identified as frequent CHICA users. Clinical providers (physicians and mid-level providers) stood out by saying the decision supports identified otherwise unnoticed issues and that the printed handouts were accurate and useful. We expect that some of this was because non-provider staff did not see the decision support prompts intended for the providers. For future implementation, it is important to address and train towards the unique needs of each end user’s role in the healthcare team.

The first areas to show significant improvement were Uncovers missed issues, Reminds me, and Rather not use. These topics reflect the core of computerized CDSS as a method for harnessing technology to improve the “non-perfectibility of man” [32]. Additionally, acceptability with these core topics coincides with an early increase in preference to use the system. By the most recent survey, all measures except one were significantly more favorable than the baseline. As seen in Figure 4, the distribution of odds ratios increased between 2011 and 2016. Specific satisfaction areas increased by differing amounts, reflecting the heterogeneity of user perspectives and patterns. For example, CHICA users may be more sensitive to improving technical support (2016 OR 7.74, CI 3.21–20.51) than easier documentation (2016 OR 2.9, CI 1.39–6.18).

Many questions showed a relative dip in favorable responses in the 2015 survey wave. We suggest this is due to the crossover period when the clinician-facing components of CHICA were using both paper and electronic interfaces. Babbot et al. [33] identified higher stress levels among clinicians during a paper-to-electronic transition phase, compared to a more exclusive paper or electronic record. The electronic interface was increasingly adopted through the time covered under the 2016 survey, and responses were highest at that time. While paper records in some form are integral to clinic flow for the foreseeable future, informaticians can prepare for this phenomenon by minimizing the overlap between health record media types, especially during transition times.

Apart from the 2015 dip, the odds of responding favorably to the core measures increased year-over-year for nearly all questions. The health information technology literature includes sustained customer engagement, responsive support staff, and IT team visibility as factors promoting successful implementation [34,35]. The CHICA development team meets weekly to review feedback and performance, and clinicians and full-time developers implement new modules and features over time. Support staff are available in the various clinics to provide real-time, in situ training and problem resolution. All CHICA users are invited to quarterly user group meetings at their respective clinic sites where development team and medical directors review usage and receive direct feedback. Additionally, the surveys we examine in this study serve as annual feedback to the development team. We propose these multifaceted efforts aided the continually-improving user acceptability [9].

CHICA offers advice that is both guideline-based and tailored (personalized) to individual patients. This model will become increasingly important as machine learning and other forms of personalized medicine are introduced to decision support [36,37]. Our results emphasize the importance of continuously monitoring user feedback, including trust and acceptance of advanced algorithms, and adjusting accordingly.

Users with similar familiarity levels but a more mature CHICA implementation were more likely to report favorably in certain functional areas (e.g., agreeable advice, uncovered missed issues). In contrast, users with the same maturity of CHICA software but less familiarity showed more unfavorable opinions in both function and usage domains (e.g., worse technical support, rather not use CHICA). Our findings concur with prior research on CHICA, which demonstrated that users with more familiarity were more likely to fill out the physician worksheet prompts [31]. Beyond our local findings, other studies show familiarity with health information technology improves ease-of-use and perception [38,39]. Those implementing and maintaining CDSS should exercise patience while they work to continually improve it and respond to user feedback. Our results show that favorable opinions age well in an environment of coordinated effort to expand and integrate.

Our study has some limitations. It does not strictly fall under a repeated cross-sectional analysis. Although many of the respondents were likely the same across survey waves, with an anonymous survey we do not have within-respondent longitudinal data. It should be noted that our surveys gathered user opinions, and we did not conduct objective human factors and usability testing, a separate field that can directly inform the CDSS design [40,41]. Additionally, qualitative user opinions were previously reported for only the first two years of this survey [9]; a re-analysis describing the free-text survey responses is outside the scope of this report but is planned for future work. While CHICA was implemented in 2004, survey data are available starting in 2011. The data granularity was diminished by dichotomizing responses. Our results likely underestimate the true values, since we combined neutral and unfavorable responses. Finally, our analysis with System B was limited by a small sample size, which may have under-estimated the effects of user familiarity and system maturity.

5 Conclusion

Clinical decision support system (CDSS) user acceptability was assessed via repeated surveys over six years of implementation at several urban community pediatric clinics. CHICA users were more favorable towards the function and usage of the system as they gathered more familiarity and as the system matured, much like a positive feedback cycle. We propose the positive user opinions were influenced in part by several features previously identified as factors in short-term CDSS user satisfaction, including point-of-care actionable recommendations, EHR integration, user engagement and feedback, and an active technical team. Significant and ongoing quality improvement, user support, and patience are important in achieving wide-ranging, sustainable acceptance of clinical decision support systems.

Supplementary Material

10 Summary table.

What was already known on the topic


What this study added to our knowledge


Highlights.

User acceptability can affect CDSS efficacy, but only short-term data are known.

Computerized CDSS users completed surveys repeated five times over six years.

Frequent users and clinical providers had more favorable opinions of the CDSS.

CDSS user acceptability improves with time, user experience and system abilities.

Broad and sustainable user acceptability is possible with computerized CDSS.

Acknowledgments

The authors wish to acknowledge the technical expertise and efforts of the individual members of the Child Health Informatics and Research Development Lab (CHIRDL) team that provides programming and technical support for CHICA, the Pediatric Research Network (PResNet) at Indiana University for administering the CHICA satisfaction survey, and the clinic personnel who constantly help us evaluate and improve CHICA.

9 Funding

This work was supported by the National Institutes of Health [grant numbers 1R01HS020640-01A1, 1R01HS018453-01, R01 HS017939, R01 LM010031Z]. Dr. Bauer is an investigator with the Implementation Research Institute (IRI) at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (5R25MH08091607) and the Department of Veterans Affairs, Health Services Research & Development Service, Quality Enhancement Research Initiative (QUERI).

Abbreviations

- CHICA: Child Health Improvement through Computer Automation

- CDSS: Clinical Decision Support System

- PSF: Pre-screener Form

- PWS: Provider Worksheet

- EHR: Electronic Health Record

- TAM: Technology Acceptance Model

Footnotes

6 Authors’ Contributions

All authors contributed to conception and design of the study, or acquisition of data, or analysis and interpretation of data; drafting or revising the article; and final approval of submitted version.

8 Conflicts of Interest

Drs. Carroll and Downs are co-creators of CHICA. CHICA was developed at Indiana University, a non-profit organization. In 2016, one of the authors (SD) co-founded a company to disseminate the CHICA technology. There is no patent, and at this time, there is no licensing agreement. All data presented were collected by an investigative team separate from the authors and developers, and collection occurred prior to the creation of the aforementioned company. The data were analyzed by the first author (RG), who has no conflict of interest. The other authors have indicated they have no potential conflicts of interest to disclose.

References

- 1. Bates DW, Kuperman GJ, Wang S, et al. Ten Commandments for Effective Clinical Decision Support: Making the Practice of Evidence-based Medicine a Reality. J Am Med Inform Assoc. 2003;10:523–30. doi: 10.1197/jamia.M1370.

- 2. Seidling HM, Phansalkar S, Seger DL, et al. Factors influencing alert acceptance: a novel approach for predicting the success of clinical decision support. J Am Med Inform Assoc. 2011;18:479–84. doi: 10.1136/amiajnl-2010-000039.

- 3. Jaspers MWM, Smeulers M, Vermeulen H, et al. Effects of clinical decision-support systems on practitioner performance and patient outcomes: a synthesis of high-quality systematic review findings. J Am Med Inform Assoc. 2011;18:327–34. doi: 10.1136/amiajnl-2011-000094.

- 4. Kawamoto K, Houlihan CA, Balas EA, et al. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330:765. doi: 10.1136/bmj.38398.500764.8F.

- 5. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157:29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

- 6. Kilsdonk E, Peute LW, Jaspers MWM. Factors influencing implementation success of guideline-based clinical decision support systems: A systematic review and gaps analysis. Int J Med Inf. 2017;98:56–64. doi: 10.1016/j.ijmedinf.2016.12.001.

- 7. Holden RJ, Karsh B-T. The Technology Acceptance Model: Its past and its future in health care. J Biomed Inform. 2010;43:159–72. doi: 10.1016/j.jbi.2009.07.002.

- 8. Anand V, Biondich PG, Liu G, et al. Child Health Improvement through Computer Automation: the CHICA system. Stud Health Technol Inform. 2004;107:187–91.

- 9. Bauer NS, Carroll AE, Downs SM. Understanding the acceptability of a computer decision support system in pediatric primary care. J Am Med Inform Assoc. 2014;21:146–53. doi: 10.1136/amiajnl-2013-001851.

- 10. Anand V, Carroll AE, Biondich PG, et al. Pediatric decision support using adapted Arden Syntax. Artif Intell Med. 2015. doi: 10.1016/j.artmed.2015.09.006.

- 11. Carroll AE, Biondich PG, Anand V, et al. Targeted screening for pediatric conditions with the CHICA system. J Am Med Inform Assoc. 2011;18:485–90. doi: 10.1136/amiajnl-2011-000088.

- 12. Carroll AE, Bauer NS, Dugan TM, et al. Use of a computerized decision aid for ADHD diagnosis: a randomized controlled trial. Pediatrics. 2013;132:e623–629. doi: 10.1542/peds.2013-0933.

- 13. Downs SM, Uner H. Expected value prioritization of prompts and reminders. Proc AMIA Symp. 2002:215–9.

- 14. Health Level Seven International. The Arden Syntax for Medical Logic Systems Version 2.9. 2013.

- 15. Duke JD, Morea J, Mamlin B, et al. Regenstrief Institute’s Medical Gopher: A next-generation homegrown electronic medical record system. Int J Med Inf. 2014;83:170–9. doi: 10.1016/j.ijmedinf.2013.11.004.

- 16. McDonald CJ, Overhage JM, Tierney WM, et al. The Regenstrief Medical Record System: a quarter century experience. Int J Med Inf. 1999;54:225–53. doi: 10.1016/s1386-5056(99)00009-x.

- 17. Anand V, McKee S, Dugan TM, et al. Leveraging electronic tablets for general pediatric care: a pilot study. Appl Clin Inform. 2015;6:1–15. doi: 10.4338/ACI-2014-09-RA-0071.

- 18. Kaplan B. Addressing Organizational Issues into the Evaluation of Medical Systems. J Am Med Inform Assoc. 1997;4:94–101. doi: 10.1136/jamia.1997.0040094.

- 19. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–81. doi: 10.1016/j.jbi.2008.08.010.

- 20. RStudio Team. RStudio: Integrated Development Environment for R. Boston, MA: RStudio, Inc; 2016.

- 21. R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; 2016.

- 22. Davis FD. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989;13:319–40.

- 23. Ducey AJ, Coovert MD. Predicting tablet computer use: An extended Technology Acceptance Model for physicians. Health Policy Technol. 2016;5:268–84.

- 24. Mamlin BW, Tierney WM. The Promise of Information and Communication Technology in Healthcare: Extracting Value From the Chaos. Am J Med Sci. 2016;351:59–68. doi: 10.1016/j.amjms.2015.10.015.

- 25. Friedberg MW, Chen PG, Van Busum KR, et al. Factors Affecting Physician Professional Satisfaction and Their Implications for Patient Care, Health Systems, and Health Policy. Santa Monica, CA: RAND Corporation; 2013.

- 26. Cha A. Watson’s next feat? Taking on cancer. Washington Post. http://www.washingtonpost.com/sf/national/2015/06/27/watsons-next-feat-taking-on-cancer/. Published June 27, 2015. Accessed August 1, 2017.

- 27. Meulendijk MC, Spruit MR, Willeboordse F, et al. Efficiency of Clinical Decision Support Systems Improves with Experience. J Med Syst. 2016:40. doi: 10.1007/s10916-015-0423-z.

- 28. Hoonakker PLT, Carayon P, Brown RL, et al. Changes in end-user satisfaction with Computerized Provider Order Entry over time among nurses and providers in intensive care units. J Am Med Inform Assoc. 2013;20:252–9. doi: 10.1136/amiajnl-2012-001114.

- 29. Lambooij MS, Drewes HW, Koster F. Use of electronic medical records and quality of patient data: different reaction patterns of doctors and nurses to the hospital organization. BMC Med Inform Decis Mak. 2017:17. doi: 10.1186/s12911-017-0412-x.

- 30. Downs SM, Anand V, Dugan TM, et al. You Can Lead a Horse to Water: Physicians’ Responses to Clinical Reminders. AMIA Annu Symp Proc. 2010;2010:167–71.

- 31. Bauer NS, Carroll AE, Saha C, et al. Experience with decision support system and comfort with topic predict clinicians’ responses to alerts and reminders. J Am Med Inform Assoc. 2016;23:e125–30. doi: 10.1093/jamia/ocv148.

- 32. McDonald CJ. Protocol-based computer reminders, the quality of care and the non-perfectability of man. N Engl J Med. 1976;295:1351–5. doi: 10.1056/NEJM197612092952405.

- 33. Babbott S, Manwell LB, Brown R, et al. Electronic medical records and physician stress in primary care: results from the MEMO Study. J Am Med Inform Assoc. 2014;21:e100–6. doi: 10.1136/amiajnl-2013-001875.

- 34. Gellert GA, Hill V, Bruner K, et al. Successful Implementation of Clinical Information Technology. Appl Clin Inform. 2015;6:698–715. doi: 10.4338/ACI-2015-06-SOA-0067.

- 35. Cresswell KM, Lee L, Mozaffar H, et al. Sustained User Engagement in Health Information Technology: The Long Road from Implementation to System Optimization of Computerized Physician Order Entry and Clinical Decision Support Systems for Prescribing in Hospitals in England. Health Serv Res. 2016. doi: 10.1111/1475-6773.12581.

- 36. Welch BM, Kawamoto K. Clinical decision support for genetically guided personalized medicine: a systematic review. J Am Med Inform Assoc. 2013;20:388–400. doi: 10.1136/amiajnl-2012-000892.

- 37. Beaudoin M, Kabanza F, Nault V, et al. Evaluation of a machine learning capability for a clinical decision support system to enhance antimicrobial stewardship programs. Artif Intell Med. 2016;68:29–36. doi: 10.1016/j.artmed.2016.02.001.

- 38. El-Kareh R, Gandhi TK, Poon EG, et al. Trends in Primary Care Clinician Perceptions of a New Electronic Health Record. J Gen Intern Med. 2009;24:464–8. doi: 10.1007/s11606-009-0906-z.

- 39. Ryan MS, Shih SC, Winther CH, et al. Does it Get Easier to Use an EHR? Report from an Urban Regional Extension Center. J Gen Intern Med. 2014;29:1341–8. doi: 10.1007/s11606-014-2891-0.

- 40. Ellsworth MA, Dziadzko M, O’Horo JC, et al. An appraisal of published usability evaluations of electronic health records via systematic review. J Am Med Inform Assoc. 2017;24:218–26. doi: 10.1093/jamia/ocw046.

- 41. Richardson S, Mishuris R, O’Connell A, et al. “Think aloud” and “Near live” usability testing of two complex clinical decision support tools. Int J Med Inf. 2017;106:1–8. doi: 10.1016/j.ijmedinf.2017.06.003.
