Abstract

Recent developments in automated tracking allow uninterrupted, high-resolution recording of animal trajectories, sometimes coupled with the identification of stereotyped changes of body pose or other behaviors of interest. Analysis and interpretation of such data represents a challenge: the timing of animal behaviors may be stochastic and modulated by kinematic variables, by the interaction with the environment or with the conspecifics within the animal group, and dependent on internal cognitive or behavioral state of the individual. Existing models for collective motion typically fail to incorporate the discrete, stochastic, and internal-state-dependent aspects of behavior, while models focusing on individual animal behavior typically ignore the spatial aspects of the problem. Here we propose a probabilistic modeling framework to address this gap. Each animal can switch stochastically between different behavioral states, with each state resulting in a possibly different law of motion through space. Switching rates for behavioral transitions can depend in a very general way, which we seek to identify from data, on the effects of the environment as well as the interaction between the animals. We represent the switching dynamics as a Generalized Linear Model and show that: (i) forward simulation of multiple interacting animals is possible using a variant of the Gillespie’s Stochastic Simulation Algorithm; (ii) formulated properly, the maximum likelihood inference of switching rate functions is tractably solvable by gradient descent; (iii) model selection can be used to identify factors that modulate behavioral state switching and to appropriately adjust model complexity to data. To illustrate our framework, we apply it to two synthetic models of animal motion and to real zebrafish tracking data.

Introduction

One of the most fascinating topics in interdisciplinary research is to understand the complexities of animal behavior in naturalistic settings. Many essential questions, explored previously either through direct observations or qualitatively using mathematical models, remain unanswered at the quantitative level that can connect to large scale data: How predictable is individual behavior on a moment-by-moment basis and what factors influence behavioral decisions? How can coordinated and collective motion realistically emerge in groups of interacting animals? How do we include the existence of different “cognitive” or behavioral states into mathematical descriptions of animal motion?

Recent progress in automated recording techniques opened the door to addressing these questions, by making it possible to track single or multiple interacting animals for extended periods of time [1–8]. Traditional models of animal motion, constructed to favor simplicity and provide a qualitative match to the observed collective or aggregate (averaged) behaviors, are today being revisited and carefully fitted to extensive data to provide predictive, quantitative models [1, 4, 5].

In addition to revisiting existing models, the wealth of data also provides the motivation to devise new models and remove some of the previously-made restrictions and assumptions, or at least test rigorously whether they are essential. A central assumption that underlies many models of animal motion and behavior is that each animal continuously performs a universal computation to determine its next action or movement direction [9–11]. Animals, however, often exhibit discrete stereotyped behaviors (termed here behavioral states) or stereotyped switches between these states that result in apparently very different modes of motion through space, as for example “kinematic proxies” for different cognitive or behavioral states described by [12]. These individual animal behavioral states can be extracted from data using various approaches: low-dimensional reduction of the complex behavior [13–15], fragmentation of trajectories into segments based on the functionality of the space [16], machine learning-based detection of behavior types [17, 18] or other data mining methods [19]. Moreover, the states can be defined also for the whole animal groups [20], which we do not consider here. The switches between the individual’s behavioral states seem to occur stochastically, but their rate could in principle be affected by many factors: by spatial preferences, by various environmental signals, by the motion of conspecifics within the group, etc. The question therefore arises about how to combine in a single tractable model, on the one hand, the kinematic description of individual’s motion through space, and on the other, the existence of different discrete behaviors, i.e., behavioral states. If such behavioral states are indeed present and important, then ignoring their existence—which happens in, e.g., classic zonal or force-field models—will enable us to learn only laws of behavior and motion that are “averaged over” various behavioral states. We would thus fail to capture the observed heterogeneity due to the stochastic and discrete nature of individual animal behaviors and, consequently, motion trajectories.

The combination of stochastic state transitions with deterministic laws of motion through space distinguishes our model from the classical models where behavioral rules are assumed fixed in time. Traditionally, such rules are inferred by fitting to all available data, resulting in simulated trajectories which tend to be much smoother than real data. Our approach also differs from the population dynamics models capturing proportions of animals with different tasks or behavioral states. For instance [21] and [22] study behavioral states in honeybees when multiple food sources are present and distinguish between proportion of population feeding on different sources. Similarly, [23], [24], and [25] distinguish behavioral states in ant foraging due to either multiple food sources or due to recruiting of the resting ants. While these works describe different “behavioral states”, they do so in terms of population averaged quantities, while our goal is to incorporate a set of different behaviors at the individual level and fit that to individual trajectory data.

We propose a class of probabilistic models that is sufficiently rich to capture a wide variety of complex individual and collective behaviors, and very flexible in how external factors modulate the focal animal’s behavior. Despite this expressive power, the proposed models remain easy to simulate given the parameters, and support tractable maximum likelihood parameter inference from trajectory data. As described in the following section, these models are technically hybrid models that combine deterministic dynamics for animal motion with discrete behavioral states; stochastic transitions between these states are described in a Generalized Linear Model framework.

This paper is structured as follows. The next section introduces the rationale behind the modeling approach that we propose, specifies the model mathematically, and discusses the forward (simulation) and inverse (parameter inference) approaches. The second half of the paper focuses on three examples: two synthetic toy models of animal behavior (ant motion, bacterial chemotaxis) to showcase and validate the inference as well as illustrate an interesting extension to coarse-grained behavioral states, and one real data example (tracked zebrafish data) to illustrate inference without prior knowledge of laws of motion, as well as model selection to identify kinematic variables that affect behavioral state switching.

Methods and models

The main goal of this work is to develop a probabilistic approach for understanding the behavior and motion of animals, either in an individual setting or as a group in which the animals can interact. We construe the “behavior” very broadly, as spanning the range from the occurrence of stereotyped events that can be identified by the body pose, specific interactions with an environment, vocalization production, etc., to internal behavioral states that manifest with the animal adopting a particular center-of-mass law of motion through space. We assume that each animal switches between the possible behaviors in a stochastic fashion, with rates that depend, in a very general way that we seek to identify, on the animal’s current behavioral state, its location, and the location of other animals in a group.

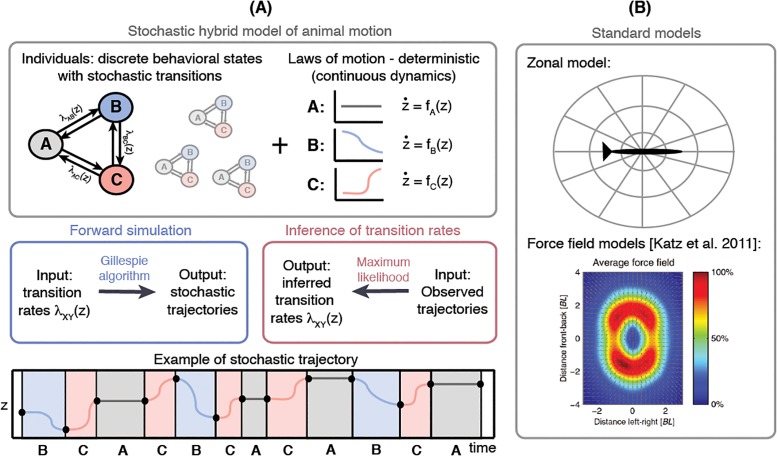

The expressive power of our model stems from the combination of its stochastic and deterministic components, as schematized in Fig 1A and described in detail below. Formally, our model belongs to a class of hybrid models [26–29] with stochastic discrete states and deterministic continuous dynamics, typically called Piecewise-Deterministic Markov Processes (PDMP). While switching between behavioral states is stochastic, within each behavioral state the animal follows a certain law of motion that governs its kinematics (e.g., its center-of-mass trajectory), as specified by a system of ordinary differential equations. This is a powerful generalization of the traditional models, schematized for comparison in Fig 1B: in either zonal or force field models, the focal animal always follows a single, universal law of motion that cannot depend on the behavioral state. Similarly, frameworks that model switching between behavioral states of individual animals often ignore the fact that this behavior plays out in physical space and is modulated by the ever-changing spatial relationship between the focal animal, its conspecifics, and its environment. Our model brings together the analysis of discrete behavioral states and motion through space in a single probabilistic framework.

Fig 1. Models of animal motion and inference of model parameters.

(A) The hybrid model consists of stochastic switching between the behavioral states (denoted as A, B, C) combined with the deterministic laws of motion for each state. When the behavioral states, laws of motion for each state, and the transition rates among them are known, the sample trajectories can be obtained by a stochastic simulation algorithm (i.e., the Gillespie algorithm). However, if the measured animal trajectories can be classified into the known behavioral states but the laws of motion and transition rates are unknown, this leads to an inference problem for the unknown transition rates. (B) Standard models, e.g., the zonal models [5] and the force-field models [4], do not capture discrete stereotyped behaviors and assume a single, universal computation carried out by each animal at every instant to determine the animal’s motion. The bottom subpanel of (B) is reproduced from [4], supporting information.

Given our very general notion of what constitutes a “behavior”, there exists no method for automatically detecting and annotating such behaviors in a way that is independent of the animal species, experimental setup, and the biological question being asked. For example, when describing ants, the most coarse-grained and easily observable behaviors might be the modes of locomotion (such as stopping and moving forward); however, in other experimental setups we might want to sidestep motion details and instead focus on anntenation and grooming behaviors triggered by exposure to specific pathogens. In the absence of generic algorithms for behavioral annotation, we start here by assuming that we are already given individual animal motion trajectories that have been annotated with behaviors of interest; this input data is obtained from raw observations in a problem-specific way that we do not address here. Starting with such input data, our framework will then proceed to identify how the animals switch between the identified behaviors, using a generic, problem-independent inference procedure. In this way, the complex problem of modeling animal behavior and motion can be broken down into manageable pieces, some of which admit generic solutions and thus need to be studied only once.

Even though a generic mechanism for identifying behaviors is currently out of reach, we use several examples of animal motion to show how such identification can be attempted in practice for specific cases where kinematic proxies exist for behavioral states. Our three examples include the case of simulated ant motion and bacterial chemotaxis, and the case of real (tracked) zebrafish data. Because our modeling framework is probabilistic, it naturally captures the stochastic nature of animal decision-making and can tolerate a degree of noise in the data. As we show below, the structure of our model permits straightforward and tractable forward simulation of stochastic animal trajectories (of motion as well as behavioral states) in a group, using a variant of Gillespie’s stochastic simulation algorithm (SSA) [30]. Conversely, given the data, we can use the standard statistical tools to solve the inverse problem of model inference and model comparison to identify environmental and group factors that modulate behavioral transitions. Importantly, since our model is a derivative of well-studied Generalized Linear Models (GLMs) in statistics and neuroscience, we can guarantee that maximum likelihood inference is convex. This means that for identifiable models there is a single set of best fitting parameters for the dependence of behavioral switching rates for each animal, which can be solved sequentially, animal-by-animal, by standard gradient descent. Taken together, these methodological properties of our framework ensure that it remains practically applicable for inference and simulation even while it maintains its large flexibility and expressive power.

Model of animal motion

The model’s main components are schematically displayed in Fig 1. We consider a population of animals of size N where the n-th individual is characterized by its position zn = (z1, …, zd) in d dimensions (see the notation in Table 1). This position can be complemented by other physically relevant kinematic measurements, for instance velocity, orientation, etc; for simplicity, we will nevertheless refer to z simply as “position”. In addition, each individual has an internal behavioral state, xn, chosen from a set of available states, {1, …, S}. Each individual at every time follows a deterministic law of motion which describes the rate of change of the position (velocity, etc.) depending on the current position of all individuals and state of the focal animal

| (1) |

Table 1. Abbreviations and notation.

| N | number of individuals |

| d | dimension of the space of motion |

| kinematic variables (e.g., position) of the n-th individual | |

| z = (z1, …, zN) | kinematic variables of all the individuals |

| S | number of behavioral states |

| X = (1, …, S) | space of available behavioral states |

| xn ∈ X | internal state of the n-th individual |

| x ∈ XN | internal state of all individuals |

| L(⋅) | log-likelihood function |

| α(1) | generalized linear model parameters of the first order |

| α(2) | GLM parameters of the second order (interaction) |

| k′ ∈ KS | time bin indices (with corresponding times tk′) at which the trajectories were sampled |

| k ∈ KT | time bin indices (with corresponding times tk) when state transitions occurred |

The motion of the n-th individual satisfies the rule (1) until a change of state occurs. Stochasticity of the motion arises due to random switching between the internal states (from xn to ) with the transition rates . These transition rates depend not only on the states relevant to this transition (xn and ), but also on the position of (possibly all) other animals, and on other important dynamic or environmental factors, captured by z. The central assumption of our model concerns the mathematical form through which the switching rates λ, characterized by a set of parameters , depend on the internal states and positions:

| (2) |

Animal positions, z, in this relationship serve as a “stimulus” that drives the changes of state by modulating transition rates λ. The dependence on z enters through functions φi and Ψij which, if chosen well, allow not only to approximate arbitrary transition rates but also to represent them efficiently. The particular representation of positions, i.e. the choice of φi and Ψij, used in this work essentially discretizes the space of positions, as discussed below. For example, in Eq (2), representations φi and Ψij parametrize the dependence of switching rates on position coordinates of individual animals (through the coefficients ), and on pairwise spatial relationships between the animals (through ), excluding interactions of more than two individuals. Formally, rates λ are the instantaneous rates of a Poisson point processes describing behavioral state changes that are approximated as infinitely fast. The rates are inhomogeneous, i.e., depend on time through their dependence on position and state variables. This dependence has the Generalized Linear Model form [31, 32]: the transition rates are specified by a nonlinear function, g, acting on a summation over representations of position (φ(zn) and Ψ(zn, zm)) that is linear in sought-for parameters α. In statistical literature, the inverse of the nonlinear function g is also known as the “link function.” In sensory and motor neuroscience, similar models have been used to analyze the dependence of Poisson spiking of neurons on the stimulus and output of other, simultaneously recorded, neurons in a neural circuit [33].

Applied to animal behavior, it is easiest to understand the model of Eq (2) when the animals are not interacting. In this case, , the second term in the argument to g(⋅) in Eq (2) vanishes, and behavioral transition rates can be decomposed into coefficients α(1) (that depend on the kind of behavioral transition) that are multiplicatively modulated by some representation of animal’s position, φi(zn). The choice of representations, φ, is fixed prior to model inference, and can provide a lot of flexibility as well as regularization of the parameters α(1). The parameters α(1) are inferred from data using maximum likelihood inference. If the animals interact in a pairwise fashion, the second term, parametrized by α(2), and also inferred by maximum likelihood, can be added to the argument of the nonlinear function. A typical example for the choice of Ψ in this case could be a function that depends on the distance between the center-of-masses of interacting animals n and m.

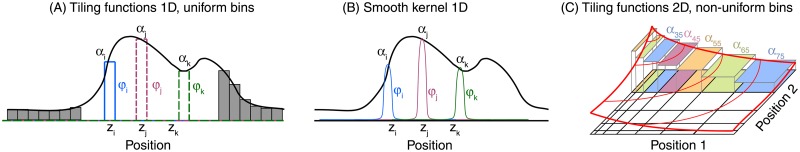

There exist many possibilities for the representation of the kinematic variables z (i.e., for the functions φ and Ψ) and the choice between them is problem-specific. In the absence of any prior information about how animal position could affect the transition rates, we choose here the tiling functions, schematized in Fig 2A and 2C. Tiling functions represent nothing else but some particular discretization of the continuous domain, e.g., , into bins; note that the bins can be non-uniform, as in Fig 2C. The choice of tiling functions is particularly useful when the positions of individuals can be measured with limited resolution. With the domain discretized into bins, the dependence of switching rates on position in Eq (2) is simply represented point-wise, by values of α specified bin-by-bin. Representation functions φ and Ψ can therefore be thought of as a “basis” in which we expand the dependence of switching rates on positions, with α being the coefficients of the expansion in the chosen basis.

Fig 2. Dependence of behavioral transition rates on z using different representations of position.

(A) The simplest way to approximate a transition function without any further assumptions on its shape is to discretize it using equidistant binning. This represents the rates using a linear combination of tiling functions φi, which have value 1 in a narrow region of the position space and 0 otherwise. The multiplicative constants αi, that are inferred in our approach, then determine the shape of the transition rate function. We choose non-overlaping tiling regions which cover the whole position space. Smaller size of the tiling regions leads to a more accurate approximation of the transition rate dependence on the position variables but requires more data for inference. (B) In this example, the rates are expanded into a linear combination of Gaussian bump functions that tile the domain. This enforces the smoothness of the rate at the spatial scale that corresponds to the width of the Gaussians. (C) An example of tiling functions for representing the rate on the 2D domain. In contrast to (A), here the bin sizes are not chosen uniformly.

The benefit of the tiling functions is the simplicity of their interpretation and implementation. In what follows we will see that with the tiling functions the sufficient statistics for maximum likelihood inference are simply contingency tables (or histograms), counting the number of behavioral transition events of each type occurring in every bin. The drawback of the tiling functions is the curse of dimensionality and the absence of smoothness regularization. If dimensionality of the kinematic space, d, is larger than typically 2 or 3, naive discretization along each dimension will result in very high dimensional histograms that will be poorly sampled, and hence the transition rates at many values of the kinematic variables will be unconstrained by the data. Tiling functions also permit the rates to depend on the position in an arbitrary, possibly non-smooth way. If, however, smoothness is expected, other basis choices (for instance, Gaussian bumps that tile the domain as in Fig 2B) may offer better generalization performance even with the basis of lower dimensionality. Ultimately, any choice of position representation is possible, with the inference remaining convex and tractable so long as: (i) the dependence of the argument of nonlinear function, g, is linear in parameters α, to be inferred; (ii) the link function, g−1, is chosen to be convex and log-concave [34]. A convenient choice satisfying these conditions that we adapt here for simplicity is an exponential function g(⋅) = exp(⋅), which also implies that covariates in Eq (2), which are additive as arguments to g, have multiplicative effects on the switching rates λ. Note that the use of the nonlinear link function as exponential is purely based on computational convenience. There are other functions that could work as well and are even computationally tractable, and the choice should be informed by what fits data best.

The stochastic process described in Eq (2) is a non-homogeneous continuous-time Markov chain, since the transition rates of the n-th individual only depend on its current state xn and on the external time-dependent input z1, …, zN of all individuals. Therefore the probability density of occupying state x = (x1, …, xN) and z = (z1, …, zN) obeys a Master equation

| (3) |

where for n ∈ {1, …, N} are disjoint sets of all behavioral states that can be reached from the state x, differing from the state x only in the n-th component. While the first two components in Eq (3) represent changes in the behavioral state of an individual, the last term accounts for the continually changing position of the individuals. The crucial assumption is that the law of motion is deterministic, i.e., all stochastic influences can be characterized as random transitions between suitably defined behavioral states. The total number of states x ∈ {1, …, S}N may be large but discrete and the position space is continuous.

Forward problem: Stochastic simulation

Stochastic simulations of the animal motion are performed by a variant of the Gillespie algorithm [30], which is an exact algorithm generating realizations of the process given by Eq (3). The system starts in the initial state (t0, x(t0), z(t0)), characterized by the initial time and the states and positions of all individuals. Then it undergoes a series of transitions through the states {tk, x(tk), z(tk)} where tk are the transition times, indexed by k. Between these transition times, the states do not change but each individual follows continuous laws of motion determined by the state which the individual currently resides in.

The instantaneous transition rate is the sum of the individual rates out of the current state x through all possible transitions x → x′ which lead to a change of the state:

| (4) |

So if no transition occurs in a time period [t0, tf], the rate accumulates to

| (5) |

where z(t) follows a deterministic dynamics in Eq (1). Because the stochastic process (x, z) is Markovian, the waiting time until the next transition is exponentially distributed with rate Λ:

| (6) |

Each transition can be realized by first choosing the individual that changes its state and then selecting the particular transition. This is true because of the following factorization of the transition probability:

| (7) |

The stochastic simulation algorithm uses the distribution of waiting times until the next transition given by Eq (6) and the probabilistic rule for picking this transition, and can be summarized in the following steps:

Initialize: xn(t0), zn(t0), t0, k = 1.

Compute rate functions: .

Generate random numbers: r1 ∼ Exp(1), r2, r3 ∼ U([0, 1]), where Exp(c) and U([a, b]) stand for an exponential distribution with parameter c and for the uniform distribution on the interval [a, b], respectively.

Compute time tk to the next transition: Λ(x, z(tk−1), tk, tk−1) = r1 where z solves z′ = f(z, x, t).

Choose an individual n randomly (using r2) with weights: λ(xn, z, tk)/λ(x, z, tk).

Choose the transition randomly (using r3) with weights: .

Update xn(tk), zn(tk), tk, set k = k + 1 and repeat from 2 until the terminal condition.

Inverse problem: Parameter inference

The inference uses experimental data and their annotation with the behavioral states to obtain the transition rates between these states. We denote the data as where index k′ ∈ KS is used to specify sampling times tk′. We furthermore say that a transition xk−1 → xk occurred at time tk, k ∈ KT if xk−1 ≠ xk (where KT denotes the set of all transition time indices). In principle, if deterministic laws of motion were known in advance, it would be sufficient to record just the times at which a transition occurs, since the rest of the trajectory can be calculated from the known laws of motion. In practice, however, the laws of motion f(z, xn, t) are often not known and the system has to be sampled finely even between the transition times. This is the regime we assume here. Thus, in case of multiple animal recordings, the two arrays xk−1, xk differ at most in one component, corresponding to a single animal changing its state at any given transition time.

For simplicity, we assume that all animals are identical, i.e., that the parameters α in Eq (2) are equal among the individuals. This assumption is not necessary for the tractability of the inference, and if data is sufficient, parameters can be inferred on a per-animal basis to ask questions about animal-to-animal heterogeneity.

We express the probability of observing a sequence of all transitions {tk, x(tk), z(tk)}, k ∈ KT corresponding to the parameters α as

| (8) |

The probabilities are conditioned on the observed values z and parameters α in Eq (2). We next construct a log-likelihood function given the data , which summarizes the evidence that the data provide about a set of unknown parameters α. Log-likelihood function (which is more convenient to use for large datasets with independent samples than a likelihood function) is a useful tool for inference of these parameters. For a single individual this becomes

| (9) |

where we used Eqs (8) and (6) and we denoted by C some constant independent of α. An analogous log-likelihood function can be also written for a group of individuals with pairwise interactions. Here, p(α) is the prior over parameters, which we take to be uniform. Intuitively, the first term in Eq (9) favors higher rates when more transitions are observed, while the second term favors lower rates when the integrated transition probabilities over intervals become large. The first term depends on the observed transitions, whereas the second term depends on the trajectory between them, accounting for the transitions that could have happened but did not. When dynamical laws are a priori unknown, the sampling of trajectories has to be sufficiently fine to obtain a good quadrature approximation for the integral terms.

Examples of animal motion

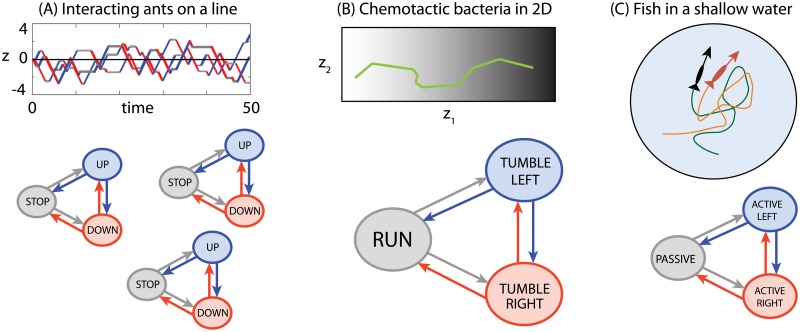

Here we demonstrate three applications of the inference for simple models of animal behavior. The studied examples, schematized in Fig 3, differ in the number of interacting animals, in the spatial dimension in which the motion unfolds, and in the complexity of animal motion that we seek to describe. The examples considered are very simplified toy models, whose purpose is not to provide a quantitative match to any particular dataset; rather, we try to showcase the versatility of the modeling framework and the application of our inference and simulation methodology.

Fig 3. Examples of animal motion studied in this paper.

The examples vary in complexity, in the dimension of the physical / behavioral space, and in the number of interacting individuals. (A) Single ant or several interacting ants with three behavioral states moving in 1D. (B) Bacteria climbing a chemical gradient in 2D using a run-and-tumble motion. The position space is three dimensional (two spatial coordinates plus the direction). The “tumble state” in which bacteria perform directional random walk is a compound state constructed from quick random switches between “tumble right” and “tumble left” states with deterministic laws of motion. (C) Two tracked interacting zebrafish moving in 2D in a shallow water tank. The motion can be split into three kinematic states (shown later): the passive state in which fish does not actively contribute to the motion, and two active states (left/right) where the action of fish results in positive acceleration and change of direction to either left or right direction. The rates of switching between the three kinematic states are assumed to depend on up to three kinematic variables.

Ant motion on a line

In the first example we consider the simplest model of a single ant that is moving along a line. Its motion is represented by three behavioral states, x = {xstop, xup, xdown}, which correspond to motion at a constant velocity in one of the available directions and to a resting state. The equations of motion are

| (10) |

The changes of the behavioral state are considered random, resulting in a stochastic motion of the ant along the line. The transition rates depend on the position of the ant and have the form

| (11) |

where each perceived “stimulus” φi(z) is modulated by the weight αi. For φi(z) we use the tiling functions in the position space z ∈ [−4, 4], i.e. characteristic functions of non-overlapping intervals of equal size covering the position space, as in Fig 2A.

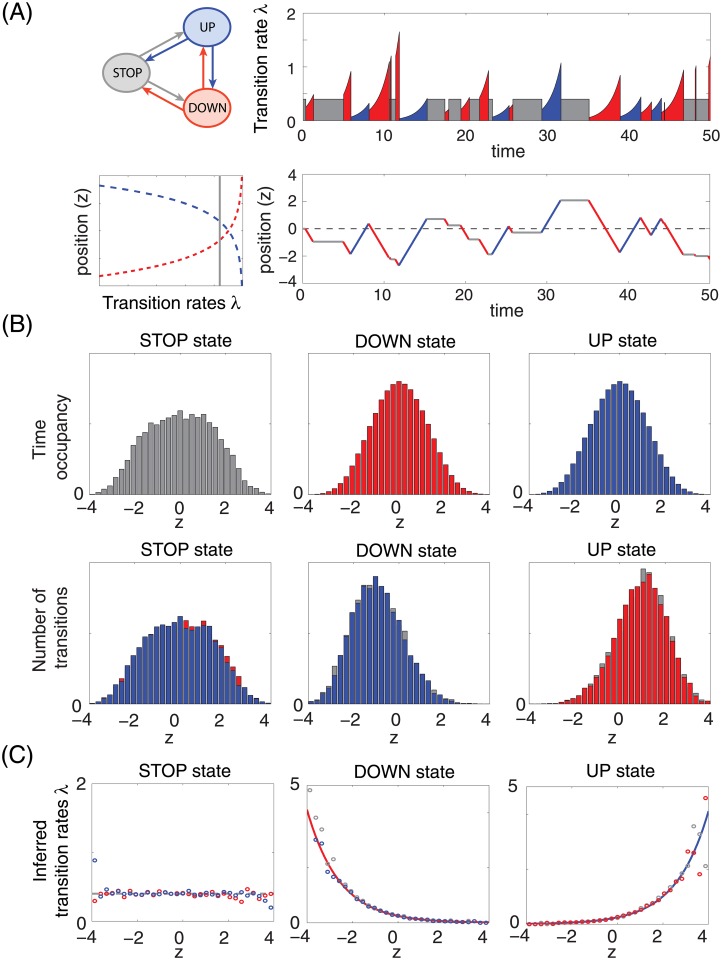

A long stochastic trajectory of the ant motion following Eqs (10) and (11) was simulated with chosen transition rates that depended on the position of the ant; a fraction of the trajectory and the transition rate details are shown in Fig 4A. This trajectory was then used to infer the transition rates, that were considered unknown in the inference step. Due to our choice of the tiling functions {φi}, the inference decouples into independent likelihood optimizations for each bin. Crucially, the inference does not require knowledge of the detailed trajectories, just of their sufficient statistics, i.e., the distribution of ant positions at the transition times and the overall time occupancy of every bin, shown in Fig 4B. This is because the log-likelihood function (9) can be also written as

| (12) |

where and represent the total number of transitions x → x′ at each bin and the total time spent in each bin, respectively. Here zi denote the center of the i-th tiling function, i.e. an average position when in the i-th bin, see the derivation in Appendix D.

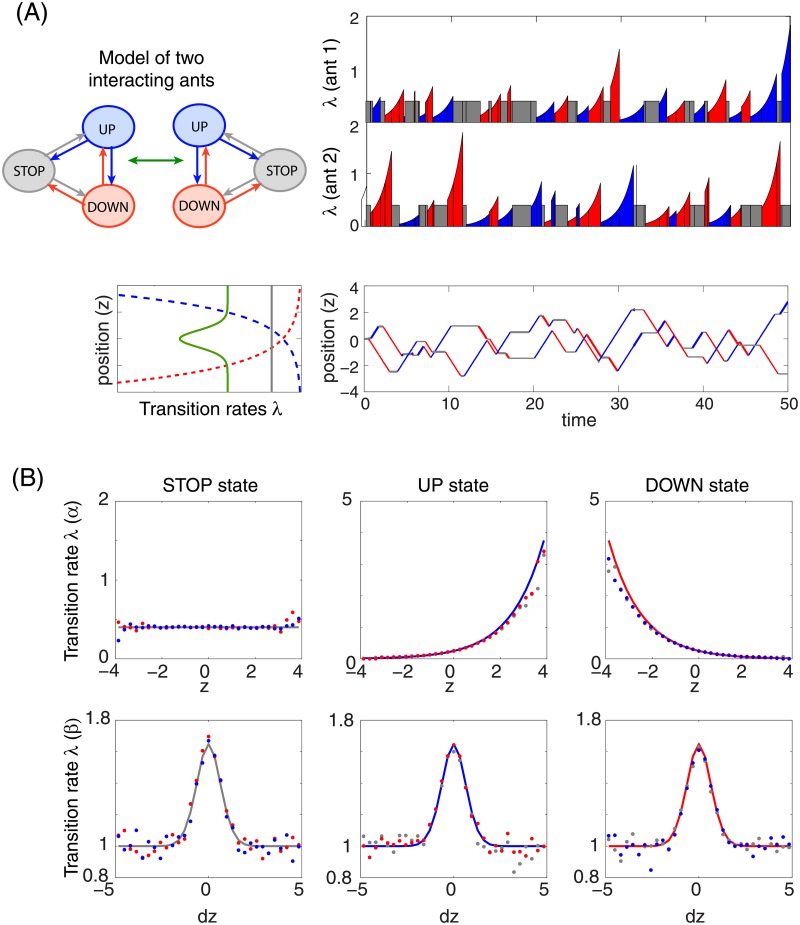

Fig 4. Motion of a single ant on a line.

(A) Animals switch between three behavioral states: STOP (gray), UP (blue), and DOWN (red). The transition rates have a form with , , and . A short segment of a sample stochastic trajectory, following Eq (10), is shown together with instantaneous transition rates from the current state. (B) Because tiling functions are used to represent position, the sufficient statistics for the inference are conditional histograms. The first row shows the histograms of the position given behavioral state during the whole simulation. Second row shows the number of transitions from a given behavioral state in each bin. Since from each state transitions can happen into two other states, each of the panels in the second row shows two (almost identical) histograms that correspond to two different color-coded target states. (C) Inferred transition rates (dots; color denotes the target state) from the simulated stochastic trajectories (100 trajectories for t ∈ [0, 500]), compared with the exact transition rates (solid curves; color denotes the current state). Since each state can transition into two other states, the inference provides separate estimates for each transition, shown as two sets of dots of different color. The true rates for two target states are equal.

The inferred rates, shown in Fig 4C, closely captured the true rates when the space was well sampled. For our choice of transition rates the sampling coverage decreases exponentially as |z| increases, so rate estimates at higher values for |z| are less reliable; nevertheless, low sample numbers in one bin do not affect the accuracy of the estimate in other bins. Generally, the quality of inference is limited by the available data. The ability to sample many long motion trajectories clearly leads to better parameter estimates. A less obvious factor that also influences the results is the sampling frequency, which sets the limit to the temporal precision with which behavioral transitions can be estimated, and to the quality of the approximation for the integral term in Eq (9). We explore the consequences of undersampling in Appendix A.

The single ant model can be extended to multiple interacting ants. In this case, the transition rates of the n-th ant depend not only on its own position, but also on the distance between the ants, as illustrated in Fig 5A. To model the interaction between a pair of ants, we introduced an interaction kernel, ψ(d) = e−d2, that depended on the mutual distance between the two ants, d = |Δz|. Smaller distances lead to a larger additive contribution to the argument of the nonlinear function g(⋅) in Eq (2), and since g in our model is an exponential function, the interaction modulated all other transition rates by a multiplicative factor. In effect, when two ants were close by, all their transition rates increased, leading to fast switching among the behavioral states.

Fig 5. Two interacting ants.

The ants follow dynamics of Eq (10) where the stochastic switching between the states follows transition rates with and the same as for the single ant in Fig 4 and the interaction strength . The interaction with a kernel ψ(d) = e−d2 modulates the rates multiplicatively by a factor which increases all transition rates when ants are close to each other and leaves them unchanged when they are sufficiently distant. (A) Short stochastic simulation of two interacting ants, showing also the instantaneous transition rates from the current state. The interaction modulating factor exp(0.5ψ(d)) is shown together with other transition rates (green) using the same axis. (B) The first and the second rows show the inferred transition rates (dots) for the parameters α(1) and α(2), respectively, compared with the exact rates (solid curves). The inference is based on 500 simulated trajectories, each for T ∈ [0, 500]. We used the tiling functions in the z and Δz = zn − zn′ space and a penalization function of the form to enforce vanishing interaction outside of the range |Δz| > 2. Penalization term also avoids a degeneracy of the rates, ensuring existence of a unique solution of the inference problem.

To perform inference in the case of two interacting ants, we optimized the likelihood written out in terms of the summary statistics, with the results shown in Fig 5B. Summary statistics in this case are the joint distribution of the time spent at each position z and at separation Δz = zn′ − zn between the n-th and the n′-th ant, and the marginal distributions of the transition counts as functions of position z and distance Δz. In case of many interacting ants, the summary statistics may become too high-dimensional, prompting us to revert to Eq (9), where the likelihood is evaluated directly over the trajectories.

Bacterial chemotaxis

Many bacteria have the ability to climb gradients of chemoattractant chemicals, for instance, when searching for food. One strategy for climbing such gradients is the so-called run-and-tumble motion [35]. Here, periods of motion in a straight line with nearly constant velocity, called “runs”, are interspersed with “tumbles”, events when a bacterium randomly reorients itself. The net motion bias towards the gradient source ensues because the rate at which tumbles are initiated depends on whether the bacterium has recently been moving along, or against, the gradient.

The run-and-tumble paradigm does not map directly into our framework, because the dynamics in the tumble state is stochastic: it can be approximated as a directional diffusion or a random walk in orientation. We can, however, propose a three-state model that is able to capture the stochastic tumble state, as we show in Appendix B. The three behavioral states that we introduce for this purpose are: (i) a run state, where the bacteria move at a constant speed and direction; (ii+iii) left/right tumble states, where the bacteria are stationary but rotate to the left or right at a constant angular velocity. The bacterial motion is described by three variables: position z1 = x, z2 = y, and angular direction φ in the 2D plane. Kinematic variables obey the following dynamical equations:

| (13) |

| (14) |

where v is the speed of the bacterium in the run state and ω is the angular velocity in the tumble state, both assumed constant. Since the transitions between the states are stochastic, the length of the trajectory in the run state as well as the net rotation angle in the tumble phase are random variables. The key idea behind the three state model with x = {xrun, xleft, xright} is that if the switching rates between the “tumble left” (xleft) and “tumble right” (xright) states are fast, the rapid succession of random switches between the two deterministic left/right tumble states will generate a random walk in orientation, effectively simulating a single, stochastic, compound “tumble” state, equivalent to the one described in chemotaxis.

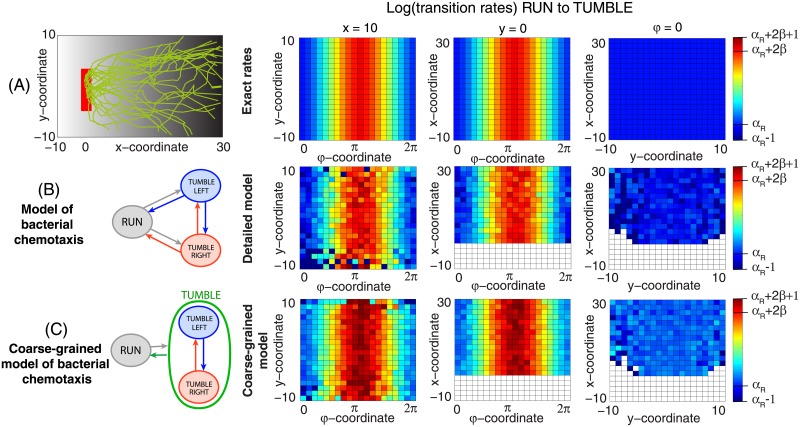

We consider an example where the transition rates from the run state into the tumble states, λ = λ(φ, x, y), depend on the position of the bacterium and its orientation, as displayed in Fig 6A: the rate of exiting the run state is low when the bacterium is oriented parallel to the chemoattractant gradient and high when the bacterium is antiparallel. Simulated trajectories, shown in Fig 6A, show that this bias gives rise to motion in the gradient direction.

Fig 6. Bacterial chemotaxis.

Bacteria execute run-and-tumble motion to climb the chemoattractant gradient (here, in the direction φ* = 0), starting at a random position within the red region. We assume the following transition rates: λ(xrun → xleft/right) = exp(αR + βf(φ)), λ(xleft/right → xrun) = exp(αL), λ(xleft/right → xright/left) = exp(αLR), where αR = αL = αLR = log(0.3), β = 3, v = 2, ω = 1. The constants β, v, and ω represent the strength of the chemical gradient, the speed of the bacterium in the run phase, and the angular velocity of the bacterium in the tumble state, respectively. The transition rate from the run state depends on the internal angle of the bacteria through a response function f(φ). For simplicity we take f(φ) = 1 + cos(φ − φ* − π), which is maximal for an angle antiparallalel to φ*, where φ* determines unobserved location of the source of chemoattractant. (A) Individual trajectories of a run-and-tumble bacterial motion in a chemical gradient simulated by a random switching between three deterministic behavioral states (run, tumble left, tumble right), following the dynamics of Eqs (13) and (14). At right, exact transition log-rates are shown for three different cross-sections in the position space: (φ, 10, y), (φ, x, 0) and (0, x, y). (B) Transition (logarithmic) rates inferred from the simulated trajectories (2000 trajectories that start at location x = 0) assuming a model with three deterministic states. (C) Inferred transition (logarithmic) rates for the coarse-grained model of bacterial chemotaxis with one stochastic, compound “tumble” state. Regions poorly sampled by simulated trajectories are shown in white. The colorscale ranges between [αR − 1, αR + 2β + 1], where αR and αR + 2β are the minimum and the maximum of the true log-rates.

To perform inference, we discretized kinematic variables, z, into Nφ × Nx × Ny bins, and inferred the transition rates bin-by-bin from simulated stochastic trajectories. This approach made no a priori assumptions about how the rates varied with the coordinates. As shown in representative cross-sections in Fig 6B, the inference led to a good estimate of the rates in the parameter regime which was sampled sufficiently by the 2000 simulated trajectories. In a real-world application, further assumptions about the smoothness of the rate dependence, either by a proper choice of position representations or by explicit smoothness regularization, and adaptive discretization selected by, e.g., cross-validation, could further boost the performance of inference given limited data.

Real observations of bacterial chemotaxis do not differentiate between the deterministic “tumble left” and “tumble right” states: in the limit of fast transitions between the two tumble states (relative to the transitions into the run state), the two tumble states merge into a single stochastic “tumble” state, in which the bacterium performs directional diffusion. We therefore asked if we can coarse-grain our framework analytically to infer the dependency of transition rates between the run state and this new, compound “tumble” state, directly. In Appendix B we show that this is indeed possible under moderate assumptions. Direct inference of the two-state coarse-grained model (with a deterministic “run” and a stochastic “tumble” state) tends to give superior performance compared to the three-state model (with deterministic “run”, “tumble left”, and “tumble right” states), as shown in Fig 6C, because the coarse-graining acts as an implicit regularization that integrates over some of the parameters of the three-state model. On the other hand, the coarse-grained model is applicable only under the time-scale separation and so microscopic details about behavioral transitions between “tumble left” and “tumble right” states, as well as the information about angular velocity ω in these states, are lost or are poorly constrained by the data. Using coarse-graining to define new compound behavioral states with stochastic laws of motion, and performing inference on the corresponding coarse-grained models directly, as demonstrated in the chemotaxis example, should significantly expand the modeling domain of our framework.

Motion of fish

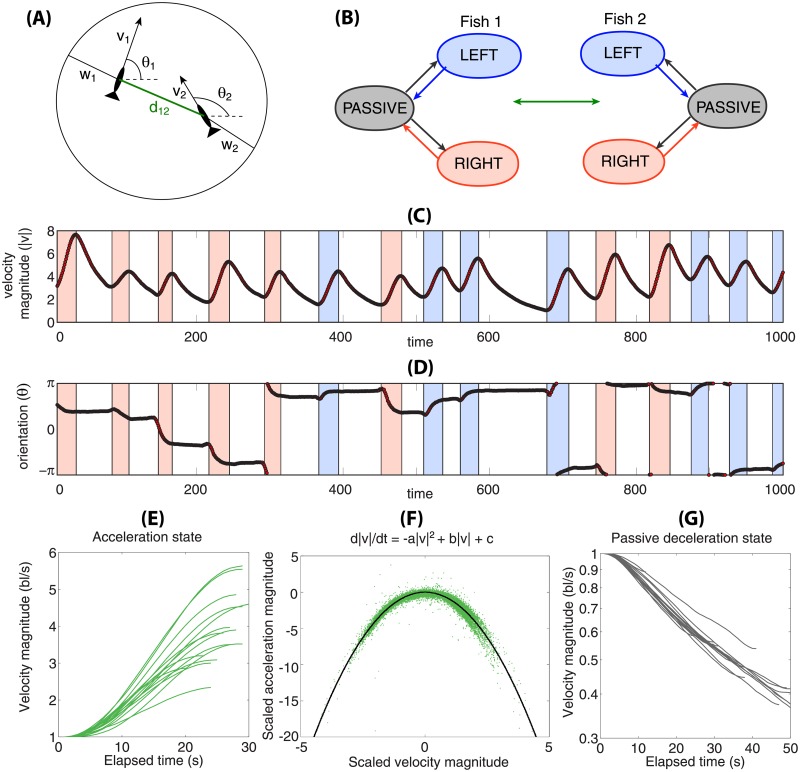

In the last example we show how to proceed from raw data, where the true (generating) model is unknown, to a set of inferred models that can be compared using model selection techniques. We do not aim to construct a perfect model of the animal motion; rather, our goal is a practical demonstration of the applicability of our approach to real data. The data was collected by [12] where tracked zebrafish are swimming in a shallow water tank of a circular shape, roughly a meter in diameter. The duration of the recordings, performed in two replicates, was about 30 minutes and the sampling rate was 100 Hz. We will see that this provides enough data to extract the basic features of fish motion.

Identification of states and laws of motion

Basic physical laws dictate the natural minimal set of kinematic variables, i.e., the position, velocity and acceleration of each animal. The motion of a fish in the shallow water tank is essentially two-dimensional, with position zi = (xi, yi) where i = 1, 2 is the index of the fish (with analogous notation used for the velocity, vi, and acceleration, ai), so that:

| (15) |

Fig 7 shows a typical segment of the trajectory and, in particular, highlights the repeated fluctuations in the velocity magnitude, consistent with the findings of [12]. These fluctuations are generated by a stereotypical force, caused by the tail beats and fin flips of the fish. Notice also that each acceleration event is accompanied by a dynamic adjustment of the angle θi, which measures the orientation of the fish, while in the deceleration phase the orientation stays unchanged. The data thus indicate that individual fish can be characterized by distinct behavioral states that correspond to the increase and decrease in the velocity of the fish. We call these states either active, where the motion of the fish results in a positive acceleration and in the change of orientation, or passive, where the dynamics are caused by the water friction without any active contribution of the fish. We identify the following laws of motion for the two kinds of states that hold to a good approximation:

Fig 7. Motion of two fish in a circular shallow water tank.

(A) Each fish is characterized by a position (x, y), velocity v, orientation θ and acceleration a. (B) Diagram of behavioral state transitions for the two fish with interaction. (C-D) A time window of length 1000 s shows the velocity and orientation of one fish (second fish not shown) from the data in [12]. The trajectory shows alternating regions of acceleration (blue = turning left, red = turning right) or deceleration (no shade), consistent with the states marked in B. (E) Velocity traces in the accelerating phase (data from C), shifted to the same initial value, have a sigmoidal functional form. Acceleration can be fitted empirically to a quadratic function, d|v|/dt = −a|v|2 + b|v| + c where . This is shown in (F) for data containing 3000 accelerating intervals. Data from each accelerating window (dotted green) is fitted separately and then shifted and rescaled to a normal form |a| = −|v|2 (black). (G) Passive state shows an exponential decay of the velocity due to friction, evident by rescaling decelerating trajectories to the same initial value and plotting them on a log-linear scale.

- Passive deceleration: Fig 7G indicates an exponential decay of the velocity magnitude in the passive state. Such dynamics are consistent with a passive motion where the fish exerts no force and decelerates solely due to friction. This can be captured by a dynamical law for the magnitude of the velocity

with an exponentially decaying solution with a rate κ, representing the friction coefficient. The orientation of the fish in the passive state satisfies .(16) - Active acceleration, left/right turn: The fish exerts a pulse-like force as a result of its motion, as in Fig 7E. This motion can be accurately fitted by the following law:

as indicated by Fig 7E and 7F. Here, an initial exponential increase in speed due to the force generated by the fish body motion is followed by saturation due to frictional dissipation of energy. The orientation of the fish changes in the active state, with an approximately exponential relaxation to a target θ* from the initial value θ(0) = θ0. The dynamics can be approximated by a linear mean-reverting process(17) (18)

While the dynamics obviously differ between the passive and active states, they formally differ also within the active state(s). This is because the dynamics in the active states are only fully specified when the five event-specific parameters, i.e. θ* − θ0, τ, k0, k1, and k2, are known. The data suggest that these parameters have relatively narrow distributions. While in principle the states should be parametrized by these five parameters, yielding a large total number of total behavioral states, we disregard this dependence here and use only three behavioral states: passive and active left/right, as shown in Fig 7B. The dynamics in Eqs (17) and (18) would then be approximated by typical values of the parameters (〈θ* − θ0〉, 〈τ〉, 〈k0〉, 〈k1〉 and 〈k2〉) or alternatively, these values can be generated stochastically from a suitable distribution. The data further suggest that the behavioral states alternate and that two active states are separated by a passive state.

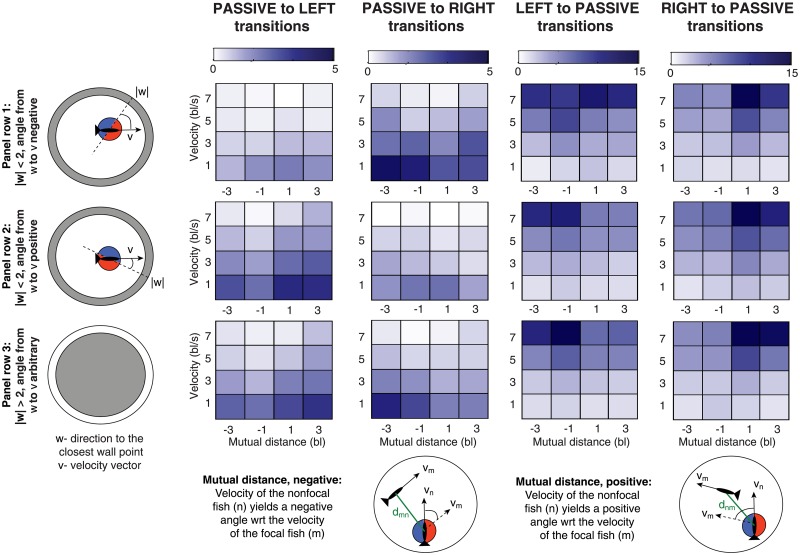

Inference of fish behavior

After identifying the three behavioral states and their laws of motion we now turn our attention to the factors that modulate behavioral state transitions. The first goal is to identify the suitable kinematic variables u for the transition rates. These should account for the behavior of the focal fish, the influence of the environment, but also the interaction between the fish. The fish spend a significant amount of time in a close proximity of the tank walls but also that fish often swim short distance apart from each other. To reflect this we select the following kinematic variables:

Velocity magnitude: , where the velocity of the n-th fish is approximated from the data.

Wall distance with a sign: u2(zn) = σ|wn|. The quantity we use to capture the wall distance is derived from a vector wn, leading from the focal fish position to the closest point at the fish tank wall. The wall distance |wn| provides information on the proximity of the wall, independent of the orientation of the fish. To encode also the direction of swimming along the wall we multiply |wn| by a sign σ, which is positive/negative if the fish swims anticlockwise/clockwise along the wall. The sign can be expressed as a vector product of the wall direction with the direction of swimming, i.e., σ = sgn(wn × vn).

Mutual alignment: u3(zn, zm) = ρdnm. The interaction between the fish n and m depends on their mutual distance and their alignment. We define dnm = |zn − zm| as a distance between the fish, modulated by a sign ρ. The distance (from the perspective of the n-th fish) is positive if the directed angle from vn to vm is in [0, π], zero, if the fish are perfectly aligned, and negative otherwise, i.e., ρ = sgn(vn × vm). Quantity ρdnm, measuring alignment between the orientation of the fish, is an asymmetric quantity, i.e., changing the reference fish changes the sign but not the magnitude of dnm, see Fig 8.

Fig 8. Transition rates inferred from tracked zebrafish data for the model with three kinematic variables.

Transition types are indicated in column headings. For each transition type, λ(s → s′), from state s to s′, the rates are shown by color intensity (colorbar on top), as a function of mutual distance u3 (horizontal axis), velocity magnitude u1 (vertical axis) and wall distance u2 (three rows, see legends at left). We used bin centers {1, 3, 5, 7} for the velocity magnitude u1, bin centers {−3, −1, 1, 3} for the mutual distance u3 and the wall distance is split to three bins: u2 ∈ [−2, 0), [0, 2], and |u2| > 2. The rates were inferred jointly from two experiments, each with two fish; the rates were assumed to be the same for all the fish.

Next we express the transition rates of the n-th fish similarly as in Eq (2):

| (19) |

where u(zn, zm) = (u1, u2, u3) are the specified key kinematic variables and Nu1, Nu2, Nu3 the number of discretization bins for each. This approach does not assume any particular decomposition of the rates, unlike Eq (2) where the no-interaction terms with α(1) and interaction terms with α(2) are assumed to sum additively in the exponent of the transition rates. Thus this ansatz is more general than in the synthetic example of ant motion. Indices n, m in Eq (19) indicate the identity of the focal and non-focal fish, respectively, and the summation goes through all perceived stimuli, captured by the kinematic variables (u1, u2, u3), which are functions of position of the focal and non-focal fish. The basis functions are chosen to be the tiling functions φ in the joint 3-dimensional space of u1 and u2 for single-fish position coordinates, and also u3 for the pairwise-interaction term.

In summary, we consider three kinematic variables u1(zn), u2(zn) and u3(zn, zm). To evaluate the importance of these variables systematically, we construct a hierarchy of seven models by picking different combinations of independent variables (dropping the dependence on zn, zm in the notation):

-

M1–M3

Marginal models with only one kinematic variable, i.e., [u1] OR [u2] OR [u3].

-

M4–M6

Models with a pair of kinematic variables, i.e., [u1, u2] OR [u1, u3] OR [u2, u3].

-

M7

Model with all three kinematic variables, i.e., [u1, u2, u3].

The inferred rates for model M7 are shown in Fig 8. The systematic dependence of the rates on kinematic variables suggests the following interpretable behaviors of the fish, qualitatively consistent with previous reports in [12]:

Speed dependence. A passive fish is more likely to transition to an active state when its speed is low, while the active fish is more likely to transition to a passive state when its speed is high. The first statement may be due to a correlation between the speed and the amount of time spent in the passive state, since the fish decelerate in time.

Collision avoidance. The last two columns of Fig 8 suggest that a fast moving active fish has a tendency to exit the current active turning state when the second fish travels to the same direction. This may be interpreted as a mechanism for avoiding collision between the fish since it applies to large speeds only, and does not depend on the distance from the wall.

Wall asymmetry. A passive fish within a few body lengths of the wall tends to turn in a direction to avoid a collision with the wall. This is evident from the contrast in the intensity of the rates between the first two panels in the top row and in the middle row in Fig 8. The fish swimming to the right/left of the wall will tend to turn to the right/left. Once the fish is far away from the wall (bottom row) this effect vanishes. We observe no similar effect for active fish.

Fish alignment. There is an asymmetry in the transition rates from the passive to the active state due to alignment of the fish. We observe a large transition rate passive→right when u3 < 0 and, similarly, a large transition rate passive→left when u3 > 0. This implies that the passive fish gets recruited by the neighboring fish to become active and align with its neighbor.

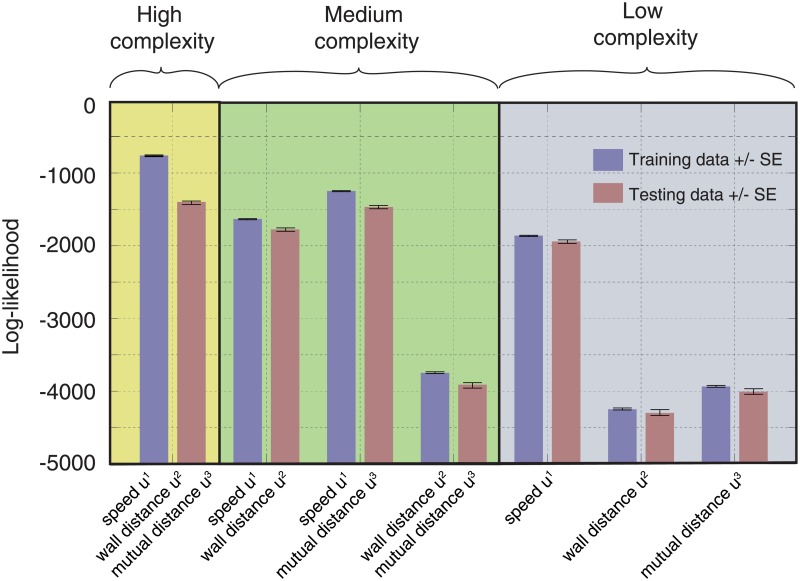

Our probabilistic models M1–M7 are amenable to systematic model comparison, shown in Fig 9. While it is evident that the variable u1 individually has the largest explanatory power, the inclusion of other variables also leads to better generalization performance. Given our data, the best model is the complex, three-variable model M7 that includes self-interaction, effects of environment and the interaction between the fish.

Fig 9. Model comparison.

Seven models (sorted into the “high complexity” model M7 with 3 variables, three “medium complexity” models (M4–M6) with 2 variables each, and 3 “low complexity” models (M1–M3) with one variable each) are compared in terms of their log-likelihood on training (blue) and testing (red) data. The data used in Fig 8 was split into segments of 10 s, containing on average 35 state transitions, for a total of 180 segments. These segments were then randomly assigned to a training and testing set, with probabilities 0.75 (training set) and 0.25 (testing set). The transition rates were inferred from the training set and tested on the testing set. We generated in total 200 random assignments. Bars are averages over sample sets ± standard error.

Conclusion

We introduced a novel approach for understanding the behavior of individual animals or groups of interacting animals. The probabilistic model of animal behavior combines deterministic dynamics, which describe the motion of animals at each of the possible behavioral states, and stochastic switching between these states. Our models are very flexible in terms of what behaviors are being tracked (which is problem specific), in terms of what motions the animals execute (which can be arbitrary deterministic or even stochastic motions), and in terms of which variables influence behavioral state transitions (which we seek to infer). Despite this flexibility, illustrated here by several synthetic and real examples, the forward problem (simulation) and the inverse problem (inference) remain tractable, primarily because the models inherit all the favorable properties of the Generalized Linear Models framework. Our framework therefore opens up the possibility to carry out theoretical explorations of, e.g., collective behavior, using forward simulation within the same model class in which inference from data is possible, bringing together two approaches that have in the past interacted rather sparsely. Because our models are probabilistic, we can use rigorous tools not only to perform inference, but also to select between classes of models of different complexity and to identify biologically relevant variables that modulate animal behavior. It is this ability, in particular, that should prove attractive for biological applications.

The practical use of our method consist of several steps, some of which we described in detail. First, when the laws of motion are unknown, as is usually the case, the motion of the animals needs to be recorded at a fine enough sampling rate. Second, distinct behavioral states of interest must be identified on every tracked trajectory. Third, the list of candidate kinematic variables, which the transition rates between the behavioral states may depend on, have to be selected. Fourth, these rates are decomposed into a linear combination of coefficients, to be inferred, and position basis functions, following which the rates are found by solving a convex maximum likelihood optimization problem. Fifth, model selection is performed to find the set of most relevant kinematic variables and, possibly, also the best choice of basis functions. Finally, we can look for a biological interpretation of the switching rates, or perform forward simulation in the inferred model by examining how changes in the rates affect the emergent animal behaviors. One of the key aspects of our approach is that the laws of motion are only needed for the forward simulation of the model, not for the inference of the transition rates from the data. This makes our method much more applicable to cases where the laws of motion are either unknown or complex, as for example the motion of fish presented here.

Applicability of our framework may be extended to non-Markovian behavioral transitions, where the transition rates are functions of observed kinematic variables but, in addition, they also depend on a slowly varying process that is not observed. Non-Markovianity can be incorporated in two ways. The first approach is to explicitly add a function of time to the collection of kinematic variables to explain the dependence of transition rates on both kinematic and temporal components at once. This would be relevant for example if multiple replicate experimental recordings occur in parallel within the same timeframe during which global changes in the environmental conditions occur. In such a case one would use the time from the start of the measurements as an additional variable. Inference in such a model may reveal not only the motion of an animal or a group of animals in the static conditions, but also to understand the nature of the environmental changes that occured during the recording. The second approach is to split a macrostate A (an observable behavioral state) into several microstates A1, A2, A3, … based on its duration. This is useful if the exit time is not exponential; for example, if a fish is very likely to stay in the passive state either for a very short time or for a very long time but rarely anything between. The microstates A1 and A2 would then correspond to the short and long passive states, classified using thresholding. Along with the transition rates between the macrostates, inference in this model should also reveal the distribution of exit times from a particular macrostate and thus it can be used as a general tool to test the Markov property of the process. Furthermore, the microstates can be also understood as bins with respect to the duration of the macrostate A and thus linked to the first approach. Overall, non-Markovianity may be incorporated into the model either by expanding the space of variables that the transition rates depend on or by expanding the number of behavioral states to include impact of state duration. Our framework may be then used to understand which timescales in the problem matter. For example, a systematic comparison may be performed between the models using different splittings to microstates. The model that reaches the maximum log-likelihood indicates the optimal timescales in the dynamics.

Our approach is to be contrasted with standard approaches which postulate constant (effective, or average) behavior in time. While that single behavior can be complex, e.g., it can represent a complex computation to determine an animal’s movement based on the position of its neighbors, the computation is usually assumed to be static through time and (most often) also deterministic. Our model can be seen as an extension of this standard approach to multiple behaviors (e.g., motion computations), possibly very different from each other, that the animal can stochastically switch between, in a way that is influenced by external and internal variables. While this is relevant for describing motion through space—especially to accurately capture abrupt changes in velocity or direction—there also exist many behaviors of interest that play out in physical space but extend beyond motion computation. Such behaviors often consist of stereotyped, discrete events (body pose changes during courtship dances, vocalizations, tailbeats, grooming behaviors, etc.) that are poorly described by continuous, averaged response models. With a proper choice of behavioral states, our model has the ability to capture this broad range of biological phenomena, either at the level of an isolated individual moving in space or interacting within a group.

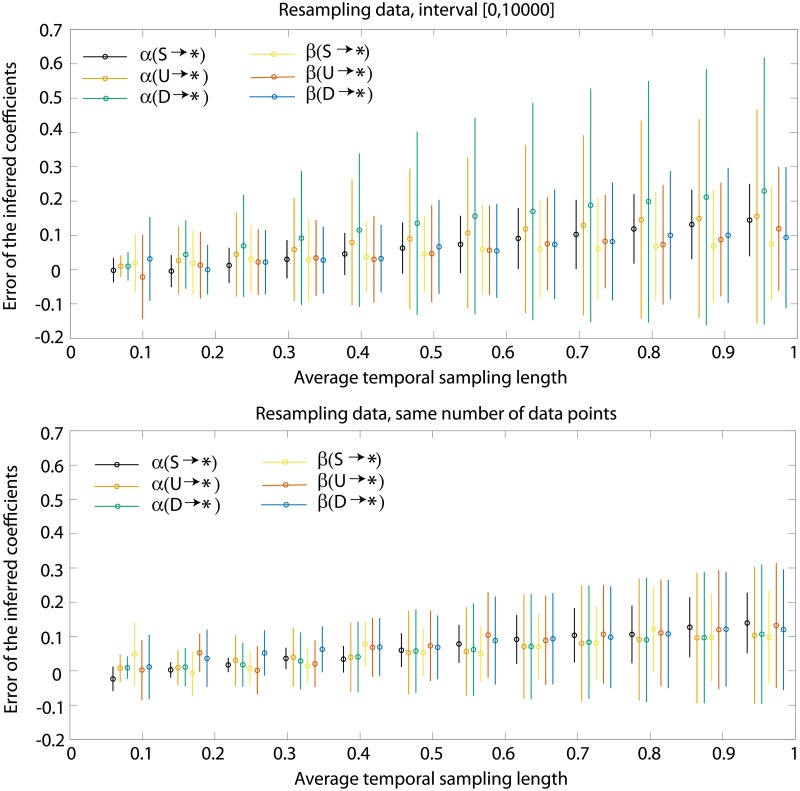

A Impact of sampling frequency

Experimental trajectories contain measurement noise but also a finite resolution due to the minimal sampling frequency that is practical to use in the experiments. If the sampling frequency is very large, a long enough trajectory contains enough information to resolve the transition rates accurately. However, if the sampling frequency is not large, even if all transitions are captured, the inference may be inaccurate due to a low resolution of the integral in (9) (or similarly the second term in (12)).

It is important to understand how this sampling frequency affects the quality of the inference. To test this we chose two interacting ants as a toy example and simulated the stochastic dynamics for a fixed period of time t ∈ [0, 10 000]. We then resampled the trajectory by taking only every k-th datapoint into consideration. Since the simulation ran with an adaptive time step we report only the average time step throughout the full simulation, 〈Δt〉 = 0.0795. The results shown in Fig 10(A) compare the accuracy of the inferred transition rates as a function of the average sampling step for data in t ∈ [0, 10 000]. It is important to note that resampling does not always reduce the number of observed transitions but it reduces the number of data points between them.

Fig 10. Accuracy of the inference on sampling frequency.

Two interacting ants are simulated according to the same model as in Fig 5 for t ∈ [0, 10 000]. The numerical integration of the dynamical equations uses an adaptive time step with a mean 〈Δt〉 = 0.0795. The data are re-sampled by taking every k-th datapoint, for the purpose of error sensitivity analysis. The error is defined as the mean difference between the real and inferred coefficient, (analogous for β). The average is taken only for z, dz ∈ [−2, 2], where enough data are available. (region of no regularization). (A) Error in coefficients α and β inferred from the simulation in t ∈ [0, 10 000] and different sampling rates, 〈Δt〉 = 0.0795k. (B) Error in coefficients α and β inferred from the simulation in t ∈ [0, 10 000k] and different sampling rates, 〈Δt〉 = 0.0795k.

As expected, the inference error grows as the sampling frequency decreases. But is this phenomenon simply a reflection of the decreased number of the data points? We study this by running a few sets of stochastic simulations with different sampling frequencies but with the same number of datapoints in total. This is done by running the coarser trajectories for a longer time, time intervals used are from [0, 104] to [0, 12 × 104]. While the top panel in Fig 10 shows a loss of accuracy due to both the smaller time coverage density and due to a smaller number of datapoints, Fig 10(B) keeps the number of datapoints constant thus reflecting only the density of data points. Despite the inference is done with the same number of data points the error still grows in time at a linear rate with the decrease in the sampling frequency.

B Coarse-graining through stochastic states with constant transition rates

Here we study an example of a hybrid system with a subset of behavioral states, which are not distinguishable in the experiment. We refer to those as microscopic states, whereas the states that are observable are macroscopic by definition. Ideally, we want to infer the rates of the full model (macro + micro) given only information about the macroscopic system. For that to be possible we assume that

All microscopic states are identical, i.e., the transition from any microstate to a given accesible macrostate is the same. Similarly, the transition from a macrostate to any allowed microstate is the same.

The transitions between the microstates are constant and the same.

The microstates may follow different laws of motion.

The idea is then to replace the set of identical microstates by a summary state which is macroscopic. However, this state is no longer deterministic; the laws of motion are randomly switching between the laws of motion of the corresponding microstates. Thus such an approach is an extension of the hybrid model as described in Section.

We chose the tumble-and run motion as a prototype for this demonstration. This is because the tumble left (TL) and tumble right (TR) form a great example of the unobserved internal states that can be clumped together to an observed tumble (T) state. The run (R) state does not contain any unobserved microstates and the transitions between the T and R states are experimentally observable.

Thus, the two levels of the tumble and run model are: the macroscopic level with two internal states SM = {T, R} (both experimentally observable), and the microscopic level with two microstates: Sm = {TL, TR}. The two models differ in replacing two microstates (tumble left and tumble right) by a summary tumble state. The TL and TR states follow different laws of motion but the transitions between them are assumed to be constant, i.e., independent of the position and angle (x, y, φ).

First note that we can compute the probability of the realized microscopic path (8) using all transition points tk but we can also compute a coarse-grained version using just the transition points tk′, where the set of microscopic transition indices k is {1, 2, …, K} and the set of the macroscopic indices is a subset of it. Such a coarse-grained expression leads to a log-likelihood that involves only the transitions among the observable states. To infer these macroscopic rates one needs to have access to a trajectory z(t) during the tumble state. This is an unobserved stochastic process due to random transitions between the left/right motion and thus we will seek a statistical description of it in terms of the probability density function of a position h(z, t) after elapsed time t from the transition into the tumble state.

The problem can be formulated as a set of the coupled advection-reaction equations for the probability densities u(φ, t) and v(φ, t) that the system which entered the composite tumble state at time t = 0 is in the tumble left and right state at time t, respectively with an angle φ. The system has a form

| (20) |

| (21) |

where the reaction terms capture changes of state at the constant rate γ and the advection terms capture the change in angle due to the motion with angular velocity ω. The system is defined for φ ∈ [0, 2π] is complemented by the periodic boundary conditions

| (22) |

| (23) |

At the transition into the tumble state the position and the angle of the individual are (x0, y0, φ0). We assume that the left/right states are initially equally likely, therefore

| (24) |

where δ(x) = 1 if x = 0 and zero otherwise. Note that due to translational symmetry the problem needs to be solved only for one value of φ0 since

| (25) |

and similar for v (where we included dependence on all the parameters). The above process is called a velocity jump process and it has been studied in a mathematical biology literature using the telegrapher’s equation [36–38].

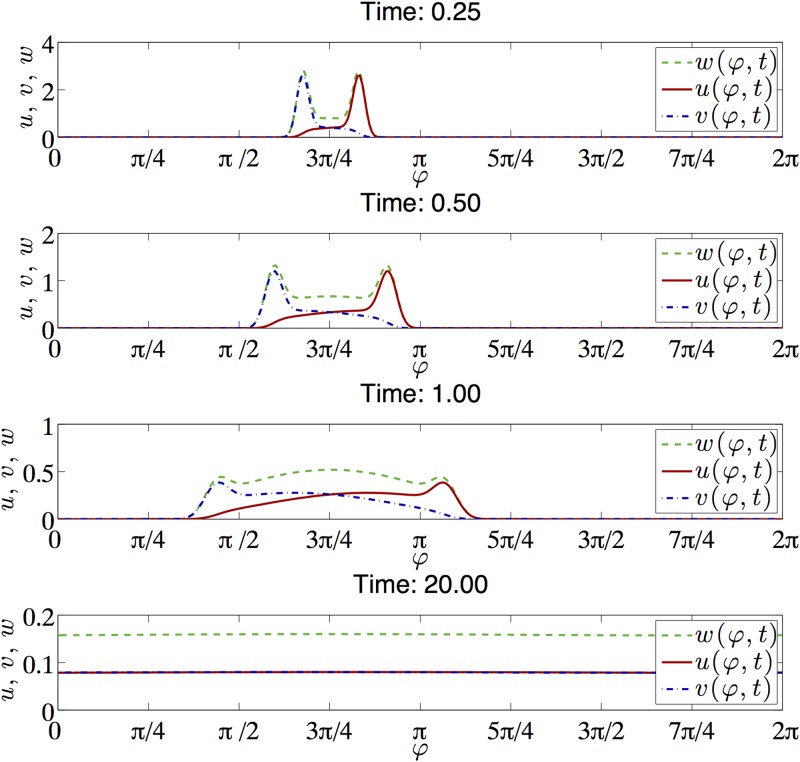

Fig 11 shows the solution of the coupled PDE system at times t = 0.25, 0.5, 1, 20. The function u(φ, t) and v(φ, t) combine into a density function w = u + v for the orientation at time t. For short times t ≪ ω/γ the system carries information about the entrance angle and shorter transitions times give a rise to a positive correlation between the entrance and the exit angles. This correlation disappears when t ≫ ω/γ where the exit distributions u and v become close to uniform distributions.

Fig 11. The solution of the system (20) and (21) at times t = 0.25, 0.5, 1, 20 for parameters ω = 1, γ = 2, φ0 = 3π/4.

We have used an upwind method that replaces the spatial derivatives of u and v using a backward and forward finite differences, respectively and the temporal derivative by a forward difference. This method is stable for that we ensure by choosing .

Once the solution of (20) and (21) is obtained we may formulate the inference problem using the coarse-grained log-likelihood function with an uniform prior

| (26) |

where zijk = (xi, yj, φk) is the center of the ijk-th bin in the tiling functions and m is the number of the divisions of the interval of interest (in Fig 6 we used x ∈ [−10, 30], y ∈ [−10, 10], φ ∈ [0, 2π] and m = 20).

Observing the system’s macroscopic states {x} ∈ SM and positions {z} at transition times leads to the statistics n(x → x′, z). To obtain the statistics T(x, z) one needs to approximate the amount of time the organism has spent at each bin of the domain. This can be calculated from a list of all entrance angles into the tumble state and the corresponding duration before leaving it to the run state. For each event from this list we compute numerically the density function of the angle φ after time t spent in the coarse-grained macrostate (T) with the entrance value and the microscopic transition rates γ

| (27) |

for t ∈ [0, τk] and use it as a proxy of how much time the system spends at a given φ-bin at each time. Note that the parametric dependence on φ0 can be suppressed due to translational symmetry (25) and the answer depends only on the parameters γ and ω, thus the solution of (20) and (21) has to be computed just once. For the given values γ, ω we use the entrance angles {φ0} into the macrostate T and the time spent in this state {τ} to sample the statistics T(x, z) for the state T. For every bin Dijk and a chosen time step Δt we evaluate the sum

| (28) |

composed of summands for every pair (φn, τn) and adding contributions at time t ∈ [0, τ], discretized to Δt-sized intervals. The function χ is a characteristic function, that results in one if the argument is true and zero otherwise.

As long as we have enough data for sampling the bulk part of the distribution T(x, z|γ, ω) the coarse-grained log-likelihood will be close to the actual one. In practice, the parameters γ and ω are not known. Therefore we formulate a composite log-likelihood including also the microscopic parameters γ, ω and find the maximum of a function of macroscopic parameters αM, appended by γ, ω

| (29) |

| (30) |

The stochastic state approach for the bacterial chemotaxis is implemented in the main text.

C Simulation code

To explore further details of our method readers can access our implementation in Matlab which is part of the supplemental information (S1 Code). It contains two toy examples: simplified motion of ants in 1D and bacteria in a chemical gradient in 2D. The code contains stochastic simulator of the motion of the organisms, assuming known transition rates. The simulated trajectories are then used as an input for the inference problem where the transition rates are reconstructed. We used a decomposition of the signal using tiling functions φ, Ψ exclusively. The single ant inference performs optimization in a bin-by-bin matter, while for multiple interacting ants inference is global and uses the whole trajectories. The bacterial chemotaxis is implemented on two scales: microscopic where all states are assumed observable; and macroscopic where only the states tumble and run are observed. More detail can be found in Appendix B.

We use a library minFunc containing the L-BFGS method to compute the optimum of the log-likelihood since this is a recommended method for large parameter unconstrained optimization in the information theory.

D Derivation of Eq (12)

Here we derive a more practical expression for the log-likelihood function for the simplest case of no interaction between individuals. The first term of (9) contains a sum of log-transition rates through all realized transitions, which can be sorted by the type of transition x → x′ and by the value of the kinematic variable zi using the chosen discretization of the space. Therefore

where S1(x → x′, zi) represents the number of realized transitions of type x → x′ at value zi (position, etc.). Next we apply similar reordering of terms in the seccond summand of (9). In the first step we sort the terms based on the outgoing state x and then noticing that the integration covers the entire time from the beginning to the end of the recording we compute (with δ(x) = 1 if x = 0 and zero otherwise)

where represents the total time spent in state x at value zi. Since we assumed that the transition rates do not depend on the out-state x′ S2 does not explicitly depend on it. The latter two equations lead to a formula for the log-likelihood based on summary statistics of the data (12) that is more practical to use. If pairwise (or higher order) interaction between individuals is included in the model these summary statistics will also include higher order statistics.

Supporting information

(ZIP)

Acknowledgments

This work was supported by the Human Frontier Science Program (HFSP) grant to GT and ES.

Data Availability

All data files on fish motion are available from the website https://github.com/harpazone/Zebrafish-swimming-data.

Funding Statement

This work was supported by the Human Frontier Science Program RGP0065/2012 (GT, ES).

References

- 1. Ballerini M, Cabibbo N, Candelier R, Cavagna A, Cisbani E, Giardina I, et al. Interaction ruling animal collective behavior depends on topological rather than metric distance: Evidence from a field study. Proceedings of the national academy of sciences. 2008. January;105(4):1232–7. doi: 10.1073/pnas.0711437105 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Buhl J, Sumpter DJ, Couzin ID, Hale JJ, Despland E, Miller ER, et al. From disorder to order in marching locusts. Science. 2006. June;312(5778):1402–6. doi: 10.1126/science.1125142 [DOI] [PubMed] [Google Scholar]

- 3. Gautrais J, Jost C, Soria M, Campo A, Motsch S, Fournier R, et al. Analyzing fish movement as a persistent turning walker. Journal of mathematical biology. 2009. March;58(3):429–45. doi: 10.1007/s00285-008-0198-7 [DOI] [PubMed] [Google Scholar]

- 4. Katz Y, Tunstrøm K, Ioannou CC, Huepe C, Couzin ID. Inferring the structure and dynamics of interactions in schooling fish. Proceedings of the National Academy of Sciences. 2011. November;108(46):18720–5. doi: 10.1073/pnas.1107583108 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Lukeman R, Li YX, Edelstein-Keshet L. Inferring individual rules from collective behavior. Proceedings of the National Academy of Sciences. 2010. July;107(28):12576–80. doi: 10.1073/pnas.1001763107 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Nagy M, Akos Z, Biro D, Vicsek T. Hierarchical group dynamics in pigeon flocks. arXiv preprint arXiv:1010.5394. 2010 Oct. [DOI] [PubMed]

- 7. Strandburg-Peshkin A, Farine DR, Couzin ID, Crofoot MC. Shared decision-making drives collective movement in wild baboons. Science. 2015. June;348(6241):1358–61. doi: 10.1126/science.aaa5099 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Robinson EJ, Richardson TO, Sendova-Franks AB, Feinerman O, Franks NR. Radio tagging reveals the roles of corpulence, experience and social information in ant decision making. Behavioral ecology and sociobiology. 2009. March;63(5):627–36. doi: 10.1007/s00265-008-0696-z [Google Scholar]

- 9. Couzin ID, Krause J, James R, Ruxton GD, Franks NR. Collective memory and spatial sorting in animal groups. Journal of theoretical biology. 2002. September;218(1):1–1. doi: 10.1006/jtbi.2002.3065 [DOI] [PubMed] [Google Scholar]

- 10. Huth A, Wissel C. The simulation of the movement of fish schools. Journal of theoretical biology. 1992. June;156(3):365–85. doi: 10.1016/S0022-5193(05)80681-2 [Google Scholar]

- 11. Vicsek T, Czirók A, Ben-Jacob E, Cohen I, Shochet O. Novel type of phase transition in a system of self-driven particles. Physical review letters. 1995. August 7;75(6):1226 doi: 10.1103/PhysRevLett.75.1226 [DOI] [PubMed] [Google Scholar]