Abstract

Adaptation to foreign-accented sentences can be guided by feedback that reveals the lexical content of those sentences; such feedback, being an exact match for the target, provides information on all linguistic levels. The extent to which this feedback needs to match the accented sentence was examined by manipulating the degree of match on different linguistic dimensions, including sub-lexical, lexical, and syntactic levels. Conditions in which target and feedback sentences matched or mismatched lexically generated greater transcription improvement than non-English speech feedback did, indicating that listeners can draw upon sources of linguistic information beyond matching lexical items, such as sub- and supra-lexical information, during adaptation.

1. Introduction

In most communicative contexts, listeners must contend with extensive talker-related variability in their speech input, ranging from differences in vocal tract size to the presence of a speech impairment or foreign accent. As such, listeners are highly experienced at adapting to systematic deviations from long-term linguistic regularities, such as those present in foreign-accented speech. This adaptation can occur within just a few sentences (e.g., Clarke and Garrett, 2004) and can have lasting effects on listeners' perceptual systems (Zhang and Samuel, 2014). The present study examines this perceptual adaptation as a linguistically guided process involving multiple levels of linguistic information.

Mechanisms for perceptual adaptation have been found to draw upon information outside of the speech signal itself, including visual lip movements (e.g., Bertelson et al., 2003) and lexical knowledge (e.g., Maye et al., 2008), to facilitate the disambiguation of distorted or ambiguous speech. Such visually or lexically guided disambiguations are posited to yield adaptive adjustments to phonetic categories, resulting in improved classification of subsequent exposures to the ambiguous sound in novel words. A considerable body of research has investigated the specific role of lexical information in adapting to variability (e.g., Davis et al., 2005; Kraljic and Samuel, 2007; Mitterer and McQueen, 2009; Norris et al., 2003; Reinisch and Holt, 2014). For instance, Norris et al. (2003) presented listeners with an ambiguous sound between [f] and [s] in lexical contexts intended to bias them to perceive the ambiguous sound as either [f] or [s] (e.g., “giraffe” or “dinosaur,” respectively). When later asked to identify sounds along an auditory [f]-[s] continuum, listeners' phonetic category boundaries were found to have shifted after exposure, suggesting that listeners' experience with the ambiguous sound in lexically biasing contexts enabled them to recalibrate the appropriate phonetic categories.

Similarly, rapid adaptation to noise-vocoded speech has been found when listeners were given feedback about the lexical content of the speech (Davis et al., 2005). On each trial, listeners first transcribed a vocoded sentence and then heard two further presentations of that target sentence. One group heard the sentence in clear (non-vocoded) speech followed by vocoded speech [distorted-clear-distorted condition (DCD)], and the other group heard it as vocoded speech followed by clear speech [distorted-distorted-clear condition (DDC)]. The DCD condition allowed listeners to hear a repetition of the vocoded sentence after its lexical content had been revealed in the clear presentation, enhancing the intelligibility of that vocoded sentence, a phenomenon that has been termed a “pop-out” effect. Over the course of 30 trials, this enabled listeners in the DCD condition to adapt to the noise-vocoded speech significantly faster than the DDC group.

While prior research has focused considerably on lexical information as a source of disambiguating information, relatively little work has investigated the efficacy of other possible connections between the incoming speech input and different levels of linguistic representation (Cutler et al., 2008). In Davis et al. (2005), clear (non-vocoded) feedback provided information not only about the lexical content of the target sentences but also about their phonemic, prosodic, and syntactic content (as feedback and target sentences were identical, aside from the vocoding). It is therefore conceivable that listeners compared the sub-lexical (e.g., phonemic/phonotactic) and supra-lexical, as well as the lexical, information in the feedback sentences with that in the target sentences, yielding predictions at both non-lexical and lexical levels about upcoming speech input that facilitated generalized adaptation. However, naturally occurring conversations rarely include exact repetitions of “distorted” utterances in their “undistorted” form. The present work investigated the contribution of non-lexical levels of linguistic information by manipulating the degree of match between feedback sentences and foreign-accented target sentences on different linguistic dimensions. To examine this issue, it is important first to establish that linguistically guided adaptation does not depend entirely on exactly matching “feedback.” It is possible that other levels of linguistic structure, particularly those that can become the focus of interlocutor entrainment over the course of a conversation (e.g., prosody), could constrain and guide perceptual adaptation. This would provide a critical link between perceptual adaptation training regimens in the laboratory and naturally occurring, real-world, experience-dependent adaptation.

Using a paradigm similar to that of Davis et al. (2005), listeners in the present study first transcribed Mandarin-accented English sentences in noise. After each transcription, feedback sentences produced by a native English talker were presented that aligned with the target on either (1) all linguistic levels (Lexical Match), (2) sub-lexical, prosodic, and syntactic levels with real words (Lexical Mismatch), or (3) sub-lexical, prosodic, and syntactic levels with non-words (Jabberwocky). These three test conditions were compared to two control conditions: (4) non-English (Korean) speech feedback (Language Mismatch) and (5) Mandarin-accented exposure without any intervening feedback sentences (Accent Control). These controls established a baseline amount of improvement resulting from exposure to Mandarin-accented English alone (Accent Control), as well as the degree to which hearing entirely unrelated speech in the feedback interval facilitated learning (Language Mismatch). Note that a basic assumption of this paradigm is that the Lexical Match condition would (trivially) result in (near) perfect recognition of the target sentence following feedback (i.e., feedback-guided within-trial correction via “revelation” of the intended sentence in clear, native-accented speech), whereas within-trial improvement would in all likelihood be considerably less than perfect in all other conditions. Thus, the critical measure of feedback-guided perceptual adaptation in all conditions was generalization to novel sentences (i.e., improvement in recognition accuracy across trials).

If listeners leverage multiple sources of information during perceptual adaptation, one could hypothesize that the greater the number of connections between the disambiguating information and the input, the more efficiently the system could refine its predictions about future foreign-accented input. This would predict that conditions where the Mandarin-accented target and feedback sentences overlap on a larger number of linguistic dimensions (Lexical Match) should demonstrate greater adaptation relative to conditions with less overlap (Lexical Mismatch and Jabberwocky). Alternatively, for the sake of generalization to novel Mandarin-accented input, the perceptual system may utilize sub-lexical and supra-lexical information shared by target and feedback sentences in the Lexical Mismatch and Jabberwocky conditions to make generalizable adjustments, enabling improved recognition of novel items. Under this scenario, all English feedback conditions should yield comparable learning, showing larger gains relative to the Language Mismatch and Accent Control conditions.

2. Methods

One hundred monolingual American English listeners, self-reporting no speech or hearing deficits at the time of testing, were included in the study and received course credit or were paid for their participation (F = 75; M age = 19.8 years). Monolingual listeners were defined as having no experience with a language other than English prior to the age of 11 for more than 5 h per week, and no exposure to Mandarin-accented English-speaking family members. Listeners were randomly assigned to one of 5 conditions (n = 20 per condition).
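The text states only that assignment was random with 20 listeners per condition. One way to guarantee equal cell sizes is to shuffle a list containing 20 copies of each condition label, as in the Python sketch below. The function name, participant IDs, and seed are hypothetical, and the study does not say which randomization procedure it actually used.

```python
import random
from collections import Counter

CONDITIONS = ["Lexical Match", "Lexical Mismatch", "Jabberwocky",
              "Language Mismatch", "Accent Control"]

def assign_balanced(participant_ids, conditions=CONDITIONS, seed=None):
    """Randomly assign participants so that every condition receives the same number.

    Hypothetical helper: requires the participant count to be a multiple of the
    number of conditions (100 / 5 = 20 here).
    """
    n_per, remainder = divmod(len(participant_ids), len(conditions))
    if remainder:
        raise ValueError("participant count must be a multiple of the condition count")
    labels = [c for c in conditions for _ in range(n_per)]  # n_per copies of each label
    random.Random(seed).shuffle(labels)                     # random order, fixed totals
    return dict(zip(participant_ids, labels))

assignment = assign_balanced([f"P{i:03d}" for i in range(1, 101)], seed=1)
print(Counter(assignment.values()))
```

Shuffling a fixed list of labels (rather than drawing a condition independently for each listener) is what keeps every cell at exactly 20.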

Participants underwent 2 blocks of Mandarin-accented speech training. In all conditions, listeners first heard a Mandarin-accented target sentence presented in speech-shaped noise at +5 dB SNR and transcribed it, with no limit on response time. In the four feedback conditions, participants then received feedback in one of several formats (see Table 1 for example sentences). Feedback consisted of two sentence productions. The first was presented in the clear (without background noise) and was either a native-accented production of the target sentence (Lexical Match), a mismatching sentence (Lexical Mismatch), a jabberwocky sentence (Jabberwocky), or a Korean sentence (Language Mismatch). The second was a repetition of the Mandarin-accented target sentence in noise, separated from the clear production by 500 ms. In the Accent Control condition, listeners heard one repetition of the Mandarin-accented target sentence in noise immediately after transcription, establishing a baseline for how much learning could occur from exposure to Mandarin-accented speech alone. All sentences were presented over headphones at a comfortable listening level.

Table 1.

Trial structure for each condition.

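| Condition | Target (transcribed) | Feedback | Target repetition |
|---|---|---|---|
| Lexical match | Mandarin-accented in noise: “The children dropped the bag” | Native-accented in clear: “The children dropped the bag” | Mandarin-accented in noise: “The children dropped the bag” |
| Lexical mismatch | Mandarin-accented in noise: “The children dropped the bag” | Native-accented in clear: “The wife helped her husband” | Mandarin-accented in noise: “The children dropped the bag” |
| Jabberwocky | Mandarin-accented in noise: “The children dropped the bag” | Native-accented in clear: /The bɛft faɪzd her wʌldɚn/ | Mandarin-accented in noise: “The children dropped the bag” |
| Language mismatch | Mandarin-accented in noise: “The children dropped the bag” | Korean in clear: “ごくろうさまな話だ” | Mandarin-accented in noise: “The children dropped the bag” |
| Accent control | Mandarin-accented in noise: “The children dropped the bag” | ∅ | Mandarin-accented in noise: “The children dropped the bag” |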

Each training block contained 2 blocked repetitions of 13 unique trials: listeners heard and transcribed 13 target sentences (Block 1A) before receiving the same set of 13 trials again (Block 1B). Block 2 then introduced a new set of 13 trials with the same structure (Blocks 2A and 2B). The assignment of target sentence sets to the first and second training blocks was counterbalanced across participants. Training therefore consisted of 52 trials (26 trials × 2 repetitions), where each trial contained either 2 (Accent Control) or 3 sentence productions (all other conditions). All participants heard the same number of Mandarin-accented sentences over the course of the experiment (104 sentences: 52 target presentations for transcription plus 52 target repetitions).
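The trial counts above (2 blocks × 2 repetitions × 13 trials = 52 trials, with 2 Mandarin-accented productions per trial = 104) can be checked with a small Python sketch that builds one participant's schedule. The sentence IDs, helper names, alternating counterbalancing rule, and fixed within-block order are all hypothetical; the text does not describe how trials were ordered or how counterbalancing was implemented.

```python
from dataclasses import dataclass, field

# Feedback heard between transcription and target repetition (None = no feedback).
FEEDBACK = {
    "Lexical Match": "native English, clear: same sentence as target",
    "Lexical Mismatch": "native English, clear: different HINT sentence",
    "Jabberwocky": "native English, clear: pseudoword version of HINT sentence",
    "Language Mismatch": "native Korean, clear: Korean HINT sentence",
    "Accent Control": None,
}

@dataclass
class Trial:
    block: str                                        # "1A", "1B", "2A" or "2B"
    item: str                                         # target sentence ID (hypothetical)
    productions: list = field(default_factory=list)   # (kind, detail) pairs in order

def build_schedule(condition, first_set, second_set):
    """Hypothetical helper: the 52-trial schedule for one participant."""
    trials = []
    for block, items in (("1", first_set), ("2", second_set)):
        for rep in ("A", "B"):            # the same 13 items are presented twice per block
            for item in items:            # within-block order is not specified in the text
                seq = [("accented", item)]            # Mandarin-accented target in noise, transcribed
                if FEEDBACK[condition] is not None:
                    seq.append(("feedback", FEEDBACK[condition]))  # clear speech, then 500 ms gap
                seq.append(("accented", item))        # Mandarin-accented repetition in noise
                trials.append(Trial(block + rep, item, seq))
    return trials

SET_1 = [f"BKB{i:02d}" for i in range(1, 14)]   # hypothetical IDs for 13 sentences
SET_2 = [f"BKB{i:02d}" for i in range(14, 27)]  # and for the other 13

def sets_for(participant_index):
    """Counterbalance which set comes first across participants (assumed alternation)."""
    return (SET_1, SET_2) if participant_index % 2 == 0 else (SET_2, SET_1)

schedule = build_schedule("Jabberwocky", *sets_for(0))
n_accented = sum(kind == "accented" for t in schedule for kind, _ in t.productions)
print(len(schedule), n_accented)  # 52 104
```

Because every condition contributes exactly two Mandarin-accented productions per trial, the total exposure to the accent is identical across conditions; only the presence and type of the middle (feedback) production varies.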

The target materials were 26 sentences taken from the revised Bamford-Kowal-Bench (BKB) Standard Sentence Test (Bamford and Wilson, 1979). These items are declarative, monoclausal sentences, each containing 3 to 4 keywords (e.g., “The boy fell from the window”). They were produced by a male Mandarin-accented talker of medium intelligibility, as determined in Bradlow and Bent (2008), as well as by a male native American English talker (used in the Lexical Match condition). For the Lexical Mismatch condition, 26 Hearing-in-Noise Test (HINT) sentences that did not overlap with the target BKB sentences were taken from the ALLSSTAR database (Bradlow et al., 2011), produced by a male native American English talker. For the Jabberwocky condition, the 26 HINT sentences from the Lexical Mismatch condition were adapted by replacing the content words with English pseudowords, which were created from the phonemes of the content words in each corresponding Lexical Mismatch sentence. Thus, the feedback sentences in the Lexical Mismatch and Jabberwocky conditions contained the same phonemes and syntactic structure and were produced with highly similar phrase-level prosody (declarative intonation) by the same male talker from the other English feedback conditions. Feedback sentences in the Language Mismatch condition were Korean HINT sentences, also from ALLSSTAR (Bradlow et al., 2011), produced by a male native Korean talker.

3. Results

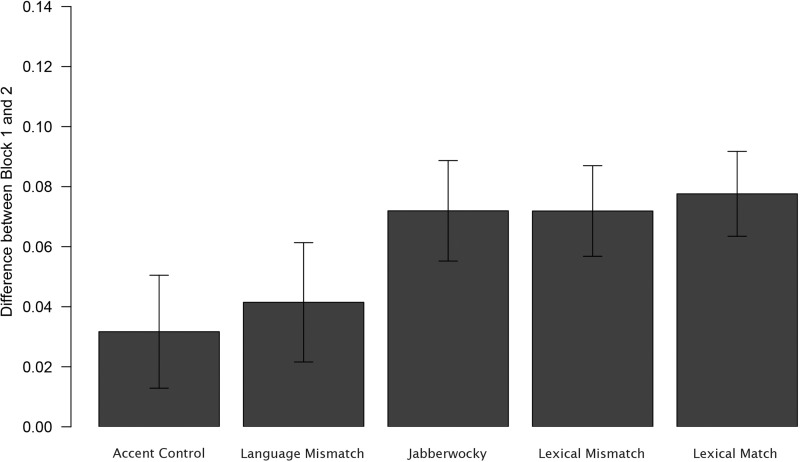

Strict keyword accuracy was tabulated: for a word to be scored as correct, it had to match the target exactly (i.e., incorrect morpheme substitutions or omissions were scored as incorrect), although homophones and apparent spelling errors were accepted. Each keyword transcription was scored as either 1 (correct) or 0 (incorrect) for Block 1A and Block 2A (i.e., transcription accuracy was compared for the first repetition of two different sets of trials). Table 2 provides the mean proportion correct for each condition, and Fig. 1 depicts the mean improvement in accuracy (the difference between Blocks 1A and 2A). The data were analyzed with logistic mixed-effects regression models (Baayen et al., 2008), with keyword transcription accuracy as the dependent variable. The model included Helmert contrast-coded fixed effects of Condition (A: Accent Control vs all other conditions; B: Language Mismatch vs Jabberwocky, Lexical Mismatch, and Lexical Match; C: Jabberwocky vs Lexical Mismatch and Lexical Match; D: Lexical Mismatch vs Lexical Match) and Block (1, 2), along with their interactions. Helmert coding, typically used when the levels of a categorical variable are ordered (e.g., from lowest to highest), reflected our initial hypothesis, with levels ordered from low (Accent Control) to high (Lexical Match) linguistic overlap. The maximal random-effects structure that would converge was used; it included random intercepts for participant and keyword, as well as random slopes for Condition by keyword and Block by participant. We compared performance across blocks rather than from trial to trial within blocks to minimize trial-specific variation arising from intelligibility differences between specific sentences.
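The four Condition contrasts described above can be written as a forward Helmert matrix, in which each contrast compares one level with the mean of all levels after it in the low-to-high overlap ordering. The Python sketch below builds such a matrix with exact fractions. The scaling (focal level at −(m−1)/m and each later level at 1/m, where m counts the focal level plus all later ones) and the sign convention (positive coefficients favour the later, higher-overlap levels) are assumptions; the text does not report the exact numeric codes used. Under this scaling, each coefficient corresponds to the difference between the mean of the later levels and the focal level.

```python
from fractions import Fraction

LEVELS = ["Accent Control", "Language Mismatch", "Jabberwocky",
          "Lexical Mismatch", "Lexical Match"]  # ordered low -> high linguistic overlap

def helmert(levels):
    """Forward Helmert coding: contrast k compares level k with the mean of levels k+1..n-1."""
    n = len(levels)
    matrix = {lvl: [Fraction(0)] * (n - 1) for lvl in levels}
    for k in range(n - 1):
        remaining = n - k  # the focal level plus all later levels
        matrix[levels[k]][k] = Fraction(-(remaining - 1), remaining)
        for later in levels[k + 1:]:
            matrix[later][k] = Fraction(1, remaining)
    return matrix

codes = helmert(LEVELS)
for lvl in LEVELS:
    print(f"{lvl:18s}", [str(c) for c in codes[lvl]])
```

Each column sums to zero and the columns are mutually orthogonal, so with balanced cells the four contrasts partition the Condition variance without overlap; columns 1 to 4 correspond to contrasts A to D in the text.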

Table 2.

Mean proportion correct (standard error in parentheses) by condition and block (first repetition trials) along with the mean proportion correct across blocks.

| Condition | Block 1 | Block 2 | Condition mean |
|---|---|---|---|
| Lexical match | 0.81 (0.013) | 0.88 (0.011) | 0.85 (0.010) |
| Lexical mismatch | 0.78 (0.015) | 0.85 (0.013) | 0.82 (0.010) |
| Jabberwocky | 0.79 (0.014) | 0.87 (0.012) | 0.83 (0.009) |
| Language mismatch | 0.84 (0.013) | 0.88 (0.011) | 0.86 (0.009) |
| Accent control | 0.80 (0.014) | 0.83 (0.013) | 0.82 (0.010) |

Fig. 1.

Mean difference in proportion of keywords correct between Block 1 and Block 2. Error bars indicate ±1 standard error.
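Because Table 2 reports block means, the improvement plotted in Fig. 1 can be approximated by subtracting Block 1 from Block 2 for each condition. The Python sketch below does this with the rounded values from Table 2; since Fig. 1 presumably averages participant-level differences computed from unrounded data, its bar heights may differ slightly from these figures.

```python
# Block 1 and Block 2 mean proportions correct, copied from Table 2
TABLE_2 = {
    "Lexical Match":     (0.81, 0.88),
    "Lexical Mismatch":  (0.78, 0.85),
    "Jabberwocky":       (0.79, 0.87),
    "Language Mismatch": (0.84, 0.88),
    "Accent Control":    (0.80, 0.83),
}

def improvement(table):
    """Block 2 minus Block 1 for each condition, in whole percentage points."""
    return {cond: round((b2 - b1) * 100) for cond, (b1, b2) in table.items()}

gains = improvement(TABLE_2)
english = ["Lexical Match", "Lexical Mismatch", "Jabberwocky"]
english_mean = sum(gains[c] for c in english) / len(english)
print(gains)
print(round(english_mean, 1))
```

The raw pattern (7 to 8 percentage points for the English feedback conditions, 4 for Language Mismatch, and 3 for Accent Control) mirrors the ordering described in the Results; whether these differences are reliable is established by the mixed-effects model, not by this arithmetic.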

Results revealed a significant main effect of Block [β = 0.77, SE β = 0.09, χ2(1) = 59.373, p < 0.001], indicating that, across conditions, keyword identification accuracy improved significantly from Block 1 to Block 2. There was also a significant main effect of Contrast B [Language Mismatch vs Jabberwocky, Lexical Mismatch, and Lexical Match; β = −0.51, SE β = 0.25, χ2(1) = 4.1507, p = 0.04], with listeners in the Language Mismatch condition (M = 86%) performing better across blocks than listeners in the English feedback conditions (M = 83%). Finally, a main effect of Contrast D (Lexical Mismatch vs Lexical Match) was also obtained, with higher overall accuracy in the Lexical Match condition (M = 85%) than in the Lexical Mismatch condition (M = 82%). No other Condition effects reached significance (χ2 < 2.63, p > 0.10).

Critically, a significant Contrast A (Accent Control vs all other conditions) × Block interaction was found [β = 0.77, SE β = 0.33, χ2(1) = 5.0077, p = 0.025], indicating that the presence of speech feedback significantly improved performance relative to exposure to Mandarin-accented speech alone. Furthermore, there was a marginal trend toward greater adaptation in the conditions with English feedback relative to Korean feedback [β = 0.58, SE β = 0.34, χ2(1) = 2.8279, p = 0.09]. The remaining Condition × Block interactions were not significant (χ2 < 0.3844, p > 0.54), indicating that the English feedback conditions did not differ in the amount of adaptation that occurred from Block 1 to Block 2.
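Each effect above is reported with a χ2 statistic on 1 degree of freedom, apparently from likelihood-ratio model comparisons (the text does not say so explicitly). For 1 df the upper-tail probability has the closed form erfc(√(x/2)), so the reported p-values can be checked with the Python standard library alone. The sketch also converts each β to an odds ratio, exp(β); how to read that ratio depends on the numeric scaling of the contrast codes, which the text does not report, so treat those values as illustrative.

```python
import math

def chi2_1df_pvalue(stat):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom.

    A 1-df chi-square is a squared standard normal, so P(X > x) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(stat / 2))

def odds_ratio(beta):
    """exp(beta): multiplicative change in odds per unit change in the coded predictor."""
    return math.exp(beta)

REPORTED = {  # label: (beta, chi-square) as reported in the text
    "Block": (0.77, 59.373),
    "Contrast B": (-0.51, 4.1507),
    "Contrast A x Block": (0.77, 5.0077),
    "Contrast B x Block": (0.58, 2.8279),
}

for label, (beta, chi2) in REPORTED.items():
    print(f"{label:20s} OR = {odds_ratio(beta):.2f}  p = {chi2_1df_pvalue(chi2):.3f}")
```

The recomputed values (for example, p ≈ 0.025 for χ2 = 5.0077 and p ≈ 0.093 for χ2 = 2.8279) agree with the rounded p-values reported above.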

Adaptation was not found to be significantly greater for the Lexical Match condition relative to other types of English feedback; however, to examine whether a “pop-out” effect was obtained, we compared keyword accuracy on the first and second repetition within the first block (Block 1A vs 1B). There was an overall effect of repetition [β = 1.09, SE β = 0.09, χ2(1) = 116.09, p < 0.001], with significantly higher accuracy on the second repetition relative to the first across conditions. Crucially, a significant Condition × Repetition interaction was obtained [β = 3.81, SE β = 0.4, χ2(1) = 93.172, p < 0.001], with a significantly larger increase in performance accuracy on the second repetition for Lexical Match listeners (16%) relative to listeners in other conditions (5%). This pop-out effect was also evident in the second block [Block 2A vs 2B; β = 2.83, SE β = 0.58, χ2(1) = 27.27, p < 0.001], with a 9% difference between first and second repetitions for the Lexical Match condition relative to an average 1% difference for the other conditions.

4. Discussion

The present work found similar adaptation in the English feedback conditions (Lexical Match, Lexical Mismatch, and Jabberwocky), and this adaptation was enhanced relative to the Accent Control condition and, marginally, relative to the Language Mismatch condition. These findings suggest that listeners' perceptual systems leveraged linguistic information present in externally provided feedback in the form of native-accented speech, resulting in improved sentence recognition. Consistent with research reporting a facilitative effect of externally provided, matching lexical feedback on adaptation (Davis et al., 2005; Hervais-Adelman et al., 2008; Mitterer and McQueen, 2009), significantly larger gains were made in the Lexical Match condition than in the Accent Control condition. However, prior work using this particular paradigm (e.g., Davis et al., 2005) always involved a within-trial match of feedback and target sentences (i.e., the target and its feedback sentence were always identical). The present work revealed that the feedback did not need to match the target for enhanced adaptation to occur, suggesting that connections with non-lexical linguistic dimensions guided the adaptation process. The magnitude of these small but significant performance gains is in line with Mitterer and McQueen (2009), who found an average 6% (Scottish English) to 9% (Australian English) improvement for novel items and an 8%–14% improvement for old items when listeners had been provided with English subtitles during exposure.

One might predict that providing listeners with the lexical content of the target sentence would yield the largest gains over the course of training, as the within-trial feedback, being the same sentence as the Mandarin-accented input, aligned with the target on all linguistic dimensions. This alignment might be expected to increase intelligibility, enabling listeners to notice any discrepancies between the accented pronunciation and their predicted pronunciation (that is, the pronunciation predicted by listeners' stored linguistic representations). This might then allow them to adjust the appropriate phonemic categories in a manner that could generalize to the novel input of the subsequent trial. In the Lexical Match condition, larger performance gains were indeed found for items to which listeners had already been exposed (i.e., they did experience a within-trial “pop-out” effect), indicating that providing listeners with the identity of the target sentence enhanced the intelligibility of its later repetition. However, the Lexical Match condition showed no additional advantage over the Lexical Mismatch and Jabberwocky conditions in the recognition of novel sentences. All three English feedback conditions were found to show significantly greater adaptation to novel sentences than accent-only exposure.

The advantage of Lexical Match over the other English feedback conditions on second-repetition transcription is not surprising, given that listeners in the Lexical Match condition were provided with the identity of the target sentence. Why did this not translate into better generalization from Block 1 to Block 2? It is conceivable that a benefit of greater alignment between target and feedback items would emerge only in lower-intelligibility conditions (e.g., higher levels of noise or a more heavily accented talker), where distortions at the segmental (i.e., sub-lexical) and/or suprasegmental levels are extensive enough that, without the lexical mediation provided by the Lexical Match condition, prediction generation and perceptual adjustment remain elusive. In heavily foreign-accented speech, for example, neutralization of phoneme contrasts and of rhythmic interval timing may be so pervasive that phrase, word, and even syllable segmentation is severely challenged, to the extent that connections between a target and feedback item at any of these levels are effectively blocked. It remains for future work to examine whether and how the adversity of the listening context modulates the contribution of different levels of linguistic information.

It is important to note that, although they shared no lexical content words, the feedback and Mandarin-accented input within trials in the Lexical Mismatch and Jabberwocky conditions nonetheless had points of connection. This overlap in the phonemic content and in the prosodic and syntactic structure of these sentences may have facilitated the connection to stored linguistic knowledge and improved predictions about future, novel samples of Mandarin-accented input. Indeed, it is conceivable that part of the benefit derived from the Lexical Mismatch and Jabberwocky conditions stemmed from the fact that target and feedback sentences shared a similar “default” syntactic structure (an initial noun phrase followed by a verb phrase), yielding “default” declarative sentence prosody across trials. The feedback sentences may have facilitated the alignment of the Mandarin-accented targets to this “default” prosody. This could, in turn, have attuned listeners' attention to the expected lexical categories at each point in the sentence, so that listeners could better predict where content and function words would likely occur, facilitating word segmentation. Moreover, target sentences contained an average of 15 phonemes (range 11–19), with an average phonemic overlap of 35% (range 7%–59%) with their associated feedback sentences (Lexical Mismatch or Jabberwocky conditions). This phonemic overlap largely stemmed from shared individual phonemes or clusters (e.g., “found” in the target sentence, “ground” in the feedback sentence) rather than from entire content words. Listeners could have drawn upon this overlap to adjust the relevant phonemic categories when they discerned discrepancies between target and feedback phonemes.
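The text does not define how phonemic overlap was computed. One plausible, position-insensitive definition, consistent with overlap arising from shared individual phonemes rather than whole words, is the proportion of target phonemes that can be paired with an identical phoneme in the feedback sentence, each feedback phoneme being used at most once. The Python sketch below implements that assumed metric; the function names and the toy transcriptions are hypothetical.

```python
from collections import Counter

def phonemic_overlap(target, feedback):
    """Proportion of target phonemes matched by identical feedback phonemes.

    Position-insensitive multiset match: each feedback phoneme is used at most once.
    (An assumed definition; the study's exact metric is not reported.)
    """
    if not target:
        raise ValueError("target must contain at least one phoneme")
    shared = Counter(target) & Counter(feedback)  # keeps the minimum count per phoneme
    return sum(shared.values()) / len(target)

def summarize(pairs):
    """Mean, minimum and maximum overlap across (target, feedback) sentence pairs."""
    scores = [phonemic_overlap(t, f) for t, f in pairs]
    return sum(scores) / len(scores), min(scores), max(scores)

# Word-level toy example echoing the text: "found" vs "ground"
found = ["f", "aʊ", "n", "d"]
ground = ["g", "r", "aʊ", "n", "d"]
print(phonemic_overlap(found, ground))  # 0.75
```

Applied to full sentence transcriptions, `summarize` yields an average and range of the same form as the 35% (7%–59%) reported above, although the values would coincide only if this is the metric the authors used.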
Future work could further tease apart the relative contribution of these different levels of linguistic information by providing feedback sentences that misaligned syntactically and/or prosodically with the target sentences, or feedback sentences consisting of strings of phonemes without syntactic structure or prosody.

The present findings provide insight into how the perceptual system uses different sources of linguistic information during adaptation: some degree of linguistically relevant alignment (shared English language features) between accented target and feedback sentences was sufficient to promote generalized adaptation under these listening conditions. In most naturally occurring conversations, the information available to listeners is typically not concurrently presented, lexically matching feedback but rather sequentially presented, discourse-building information. It is perhaps a necessity, then, for the perceptual system to have developed such that it can capitalize on whatever kinds of information it can extract. The present work provides an important connection between lab-based adaptation paradigms and real-world communicative contexts: a lexical match was not necessary for enhanced comprehension of Mandarin-accented speech, suggesting that the perceptual system draws upon multiple sources of linguistic information (e.g., segmental, lexical, and supra-segmental information) present in the surrounding communicative context.

Acknowledgments

We would like to thank Chun Liang Chan, Emily Kahn, and Alexandra Saldan for their support. Portions of this work were presented at the 56th Annual Meeting of the Psychonomic Society in Chicago, Illinois. This research was supported in part by NIH-NIDCD Grant No. R01-DC005794 to A.R.B.

References and links

- 1. Baayen, R. H., Davidson, D. J., and Bates, D. M. (2008). “Mixed-effects modeling with crossed random effects for subjects and items,” J. Mem. Lang. 59(4), 390–412. doi:10.1016/j.jml.2007.12.005

- 2. Bamford, J., and Wilson, I. (1979). “Methodological considerations and practical aspects of the BKB sentence lists,” in Speech-Hearing Tests and the Spoken Language of Hearing-Impaired Children, edited by J. Bench and J. Bamford (Academic, London), pp. 148–187.

- 3. Bertelson, P., Vroomen, J., and De Gelder, B. (2003). “Visual recalibration of auditory speech identification: A McGurk aftereffect,” Psychol. Sci. 14(6), 592–597. doi:10.1046/j.0956-7976.2003.psci_1470.x

- 4. Bradlow, A. R., Ackerman, L., Burchfield, L. A., Hesterberg, L., Luque, J., and Mok, K. (2011). “Language- and talker-dependent variation in global features of native and non-native speech,” in Proceedings of the 17th International Congress of Phonetic Sciences, Hong Kong, pp. 356–359.

- 5. Bradlow, A. R., and Bent, T. (2008). “Perceptual adaptation to non-native speech,” Cognition 106(2), 707–729. doi:10.1016/j.cognition.2007.04.005

- 6. Clarke, C. M., and Garrett, M. F. (2004). “Rapid adaptation to foreign-accented English,” J. Acoust. Soc. Am. 116(6), 3647–3658. doi:10.1121/1.1815131

- 7. Cutler, A., McQueen, J. M., Butterfield, S., Norris, D., and Planck, M. (2008). “Prelexically-driven perceptual retuning of phoneme boundaries,” in Proceedings of Interspeech 2008, edited by J. Fletcher, D. Loakes, M. Wagner, and R. Goecke, Brisbane, Australia.

- 8. Davis, M. H., Johnsrude, I. S., Hervais-Adelman, A. G., Taylor, K., and McGettigan, C. (2005). “Lexical information drives perceptual learning of distorted speech: Evidence from the comprehension of noise-vocoded sentences,” J. Exp. Psychol.: Gen. 134(2), 222–241. doi:10.1037/0096-3445.134.2.222

- 9. Hervais-Adelman, A. G., Davis, M. H., Johnsrude, I. S., and Carlyon, R. P. (2008). “Perceptual learning of noise vocoded words: Effects of feedback and lexicality,” J. Exp. Psychol.: Human Percept. Perform. 34(2), 460–474. doi:10.1037/0096-1523.34.2.460

- 10. Kraljic, T., and Samuel, A. G. (2007). “Perceptual adjustments to multiple speakers,” J. Mem. Lang. 56, 1–15. doi:10.1016/j.jml.2006.07.010

- 11. Maye, J., Aslin, R. N., and Tanenhaus, M. K. (2008). “The weckud wetch of the wast: Lexical adaptation to a novel accent,” Cogn. Sci. 32(3), 543–562. doi:10.1080/03640210802035357

- 12. Mitterer, H., and McQueen, J. M. (2009). “Foreign subtitles help but native-language subtitles harm foreign speech perception,” PLoS One 4(11), e7785. doi:10.1371/journal.pone.0007785

- 13. Norris, D., McQueen, J. M., and Cutler, A. (2003). “Perceptual learning in speech,” Cogn. Psychol. 47(2), 204–238. doi:10.1016/S0010-0285(03)00006-9

- 14. Reinisch, E., and Holt, L. L. (2014). “Lexically guided phonetic retuning of foreign-accented speech and its generalization,” J. Exp. Psychol.: Human Percept. Perform. 40(2), 539–555. doi:10.1037/a0034409

- 15. Zhang, X., and Samuel, A. G. (2014). “Perceptual learning of speech under optimal and adverse conditions,” J. Exp. Psychol.: Human Percept. Perform. 40(1), 200–217. doi:10.1037/a0033182