Abstract

Patient monitoring algorithms that analyze multiple features from physiological signals can produce an index that serves as a predictive or prognostic measure for a specific critical health event or physiological instability. Classical detection metrics such as sensitivity and positive predictive value are often used to evaluate new patient monitoring indices for such purposes, but since these metrics do not take into account the continuous nature of monitoring, the assessment of a warning system to notify a user of a critical health event remains incomplete. In this article, we present challenges of assessing the performance of new warning indices and propose a framework that provides a more complete characterization of how well a warning index predicts a critical event, including the timeliness of the warning. The framework considers 1) the sensitivity to provide a notification within a meaningful time window, 2) the cumulative sensitivity leading up to an event, 3) whether a warning, once activated, stays on until the event occurs, and 4) the distribution of warning times and the burden of additional warnings (e.g., false-alarm rate) throughout monitoring that may or may not be associated with the event of interest. Using an example from an experimental study of hemorrhage, we examine how this characterization can differentiate two warning systems in terms of timeliness of warnings and warning burden.

Keywords: Patient monitoring, Early warning, Performance assessment

1. Introduction

Patient monitoring has traditionally involved reporting individual vital signs that describe the current state of a patient, with alarm conditions defined by the relationship of individual vital signs to, for example, pre-set high and low limits. This type of alarm system may be included on cardiac, blood pressure, and pulse-oximetry monitors. However, individual vital signs may not provide noticeable changes prior to a critical event [1] and may trigger alarms with low clinical relevance, contributing to high alarm rates and alarm fatigue [2]. Warning indices and notification systems that use physiological measurements to produce comprehensive metrics of patient health [3,4] and identify patterns predictive of patient instabilities [5,6] are a promising tool to provide lead time prior to a critical event when effective mitigations can be taken [7]. A sampling of such methods includes approaches for identifying the need for life-saving interventions in trauma patients [8,9], patients at risk for septic shock in the intensive care unit [10], the need for care escalation from step-down units [11–13], and potential heart failure in at-home monitoring [14,15] environments. Patient monitoring environments are known for their high number of false alarms [16,17], so any new monitoring index and warning system must balance timely warnings prior to an event against a low false-alarm rate.

Warning indices are often evaluated as classification systems using metrics such as sensitivity, specificity, and ROC curve analysis for the index to warn prior to an event [18]. Sensitivity (number of warnings generated prior to an event divided by the total number of events [true positives/(true positives + false negatives)]) and a combination of metrics such as false-alarm rate (number of warnings not associated with an event over some unit of time [false positives/monitoring time]) and positive predictive value (number of warnings generated prior to an event divided by the total number of warnings [true positives/(true positives + false positives)]) may be reported to characterize the performance of this type of detection system. As these metrics alone fail to incorporate the timeliness of the detection [19] and patterns leading up to the event into the performance metrics (see Section 2, Potential warning index patterns leading to an event), they may not always provide a complete characterization of the performance of a continuous warning index to predict a change in patient conditions.
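As a minimal sketch of the three classical definitions given above, the following Python function computes them from event-level counts. The function name, the argument names, and the counts in the usage line are hypothetical and chosen only for illustration; they are not results from this study.

```python
def detection_metrics(true_pos, false_neg, false_pos, monitoring_hours):
    """Classical detection metrics as defined in the text.

    All arguments are illustrative counts; monitoring_hours is the total
    monitoring time over which false positives were observed.
    """
    sensitivity = true_pos / (true_pos + false_neg)
    ppv = true_pos / (true_pos + false_pos)        # positive predictive value
    false_alarm_rate = false_pos / monitoring_hours  # false alarms per hour
    return sensitivity, ppv, false_alarm_rate


# Hypothetical example: 8 detected events, 2 missed events,
# 4 warnings not associated with an event, 10 hours of monitoring.
sens, ppv, far = detection_metrics(8, 2, 4, 10.0)
```

None of the three values says when, relative to the event, the warning was given, which is the gap the rest of the article addresses.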

In some scenarios, detection of an event far in advance may not be useful or distinguishable from a false positive, and detection as the event is occurring or afterwards may be too late for the user to interpret the warning, reassess the patient, and provide a response. In this article we characterize the performance of warning indices in detecting true positive cases of critical events, considering detection accuracy and timeliness of detection together. We demonstrate the performance information that this provides by comparing two warning system configurations using a normalized shock index for predicting a hemorrhage-induced drop in blood pressure in sheep.

2. Potential warning index patterns leading to an event

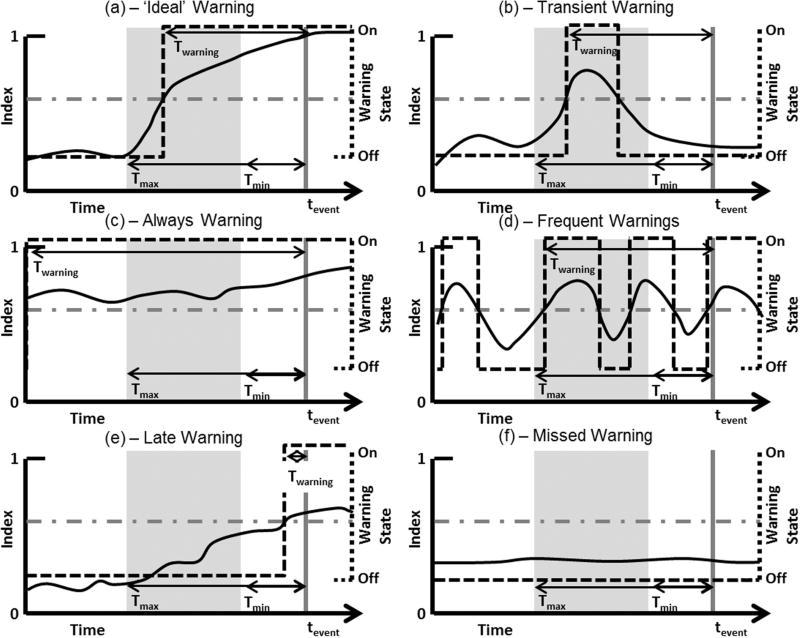

The potential patterns of a warning index prior to a critical event presented in Fig. 1 highlight the need for a comprehensive characterization of warning index performance. In these hand curated examples, tevent corresponds to the time of the event that has either been defined through an annotation process (e.g., through retrospective analysis of clinical data) or as an experimental endpoint (e.g., a target stopping point in a laboratory study), and twarning corresponds to the time when the warning is first activated. Twarning is the warning time (duration) provided from the time the warning is activated until the event (Twarning = tevent – twarning). Tmax and Tmin define the interval prior to tevent where a warning should be considered meaningful. Tmax is the longest duration of notice prior to an event that a warning index was designed to provide warning (e.g., 60 min) and Tmin is the shortest duration of notice prior to an event that a warning index was designed to provide warning (e.g., 1 min). For example, immediately prior to the event it may be expected that a high priority alarm condition is met based on individual vital signs. At this time, the value of an additional warning from an early warning index is likely limited. These values should be defined for the clinical scenario where the warning system is to operate. For the theoretical examples in Fig. 1, a 0–1 index is considered where a warning is triggered when the index crosses a threshold.

Fig. 1.

Potential patterns of a warning index leading up to a time critical health event. (a), (b), (c), and (d) represent scenarios where a warning is provided during a pre-defined meaningful window. (e) and (f) represent scenarios where a warning is not provided during a pre-defined meaningful window. tevent is the time that the known critical health event occurs. Twarning is the time between the warning activation and tevent. Tmax and Tmin are the pre-defined maximum and minimum warning times, respectively, relative to tevent. Solid black lines indicate the index and dashed lines the warning state that is determined by whether the index is above or below the warning condition threshold (horizontal dash-dot line). The gray box represents the pre-defined window for a warning to be considered a true positive, bounded by Tmax and Tmin, and the vertical gray line indicates tevent.

Considering a true positive definition as a warning present anytime within the window from Tmax to Tmin, examples in Fig. 1a–d would all be considered true positives. Alternatively, a true positive could be defined only when a warning is triggered within the window from Tmax to Tmin, which would consider Fig. 1a, b, and d as true positives. However, the index and warning pattern of each of these differs, which may affect the clinical interpretation. Fig. 1a may represent an ideal scenario for an index representative of patient deterioration where the index is monotonically increasing up to the time of a critical health event, and the warning is initiated within the pre-defined window and stays on until the event occurs. Fig. 1b shows an index that crosses the threshold within the window but then retreats below the threshold a short while later. This leaves a time gap between the end of the warning and the event. This scenario could be appropriate depending on the condition being monitored, but the interpretation of any given warning may suffer for a system that sometimes behaves as in 1a and other times as in 1b. When observed in real-time, the pattern in 1b may initially be considered a false alarm, until the event occurs. In Fig. 1c and d we present two additional patterns that would be considered true positives but may not enable a meaningful response. In Fig. 1c the warning is on long before the pre-defined window, which may make it difficult to relate the warning to the event of interest. In Fig. 1d the warning is repeatedly triggered and de-triggered, resulting in many warnings being generated prior to tevent and contributing to alarm fatigue. Fig. 1e and f show potential patterns for false negative events, that is, cases where no warning is present within the pre-defined meaningful window.

As with true positive patterns, these may also have different interpretations/utility. In Fig. 1e the warning is not generated within the window, but the index is monotonically increasing and a warning is eventually generated prior to the event. In Fig. 1f the index is stable and no warning is ever generated.
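The two true positive definitions discussed above can be sketched in Python as follows. This is an illustration under stated assumptions: each warning episode is represented as a hypothetical `(start, end)` pair in minutes, and the episode lists labelled a to f are hand-made to mimic the patterns of Fig. 1, not data from the figure.

```python
def window_bounds(t_event, T_max, T_min):
    """Absolute start and end time of the meaningful window before the event."""
    return t_event - T_max, t_event - T_min


def tp_warning_on(episodes, t_event, T_max, T_min):
    """True positive if any episode overlaps the window (warning 'on' in window)."""
    lo, hi = window_bounds(t_event, T_max, T_min)
    return any(start <= hi and end >= lo for start, end in episodes)


def tp_warning_onset(episodes, t_event, T_max, T_min):
    """True positive only if some episode is triggered inside the window."""
    lo, hi = window_bounds(t_event, T_max, T_min)
    return any(lo <= start <= hi for start, _ in episodes)


# Hypothetical episodes (minutes) with t_event = 100, T_max = 60, T_min = 1,
# so the window spans 40 to 99 min.
patterns = {
    "a": [(70, 100)],                      # turns on in window, stays on
    "b": [(60, 75)],                       # turns on in window, then off
    "c": [(10, 100)],                      # on long before the window
    "d": [(50, 55), (62, 68), (80, 100)],  # repeated on/off
    "e": [(99.5, 100)],                    # turns on only after T_min
    "f": [],                               # never turns on
}
results = {
    name: (tp_warning_on(ep, 100, 60, 1), tp_warning_onset(ep, 100, 60, 1))
    for name, ep in patterns.items()
}
```

Under these assumptions, patterns a to d are true positives by the first definition, and only a, b, and d by the onset-based definition, matching the discussion of Fig. 1.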

In addition to the index patterns, the configuration of the warning system could affect the performance and clinical utility of these cases. For example, instead of having the warning on/off when the index crosses the threshold the warning could stay on for a fixed time period. This would change the warning patterns shown in Fig. 1.

3. Framework to characterize performance

Here, we describe a framework for characterization of the patterns leading up to an event for a warning system. This framework considers the following fiducial points on Fig. 1: tevent – time of the critical health event, Tmax – maximum amount of time (duration) prior to the event when a warning is expected to be meaningful, Tmin – minimum amount of time prior to the event that would allow for meaningful action (depending on the application Tmin may be 0, the time of the event, or negative, after the event), and Twarning – time from the warning onset to tevent. Meaningful numbers for Tmin and Tmax depend on the specific event, patient condition and context of use of the warning system and could range from days to seconds accordingly, and as the performance and clinical utility of a new warning system is better understood the specifications for Tmin and Tmax may change. We also define an independent warning episode when a warning turns on and then turns back off.

The framework assesses a warning index on five aspects: 1) sensitivity within a pre-defined window ([Tmax, Tmin]), 2) sensitivity at time points leading up to the event (e.g., considering the sensitivity 5, 10, and 15 min prior to the event), 3) distribution of warning onset times (Twarning), 4) distribution of warning “stay-on” times, and 5) relationship between the number of warnings and Twarning. To assess the sensitivity within a pre-defined window, we first consider a true positive if a warning is ‘on’ at any point between Tmax and Tmin for each event (alternatively, a true positive could be defined based on warning onset). Sensitivity is then defined as the number of true positives divided by the number of events. This assessment distinguishes the patterns in Fig. 1a–d from 1e and f. As the next step, we assess the time-varying nature of the sensitivity by considering whether the performance improves closer to the event or whether there is a specific time point prior to an event when the system is most informative. Improvement in sensitivity closer to the event may be expected for an index that provides an overall assessment of patient deterioration (e.g., Fig. 1a), but not for an index intended to detect a specific time-varying signature (e.g., Fig. 1b). Assessing the performance leading up to the event can help distinguish warning indices designed with these properties. For each unit time (t) from Tmax until tevent we consider a true positive if a warning is ‘on’ at time t and divide that number by the number of events. This provides a value of sensitivity at each time point (i.e., true positives and false negatives are defined by the value at that time point) from Tmax until tevent (Fig. 4 in Section 5, Results of case study, further illustrates this concept).
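A minimal Python sketch of the first two aspects is given below. It assumes, for illustration only, that the warning state is sampled once per minute and stored per event as a list in which index k holds the state k minutes before the event; records shorter than a given lead time are counted as 'off' at that lead time, which is one possible reading of dividing by the number of events. The function names and the four example events are hypothetical.

```python
def window_sensitivity(states_before_event, T_max, T_min):
    """Fraction of events with the warning 'on' at any lead time in [T_min, T_max]."""
    hits = sum(1 for s in states_before_event if any(s[T_min:T_max + 1]))
    return hits / len(states_before_event)


def sensitivity_by_lead_time(states_before_event, T_max):
    """Sensitivity at each lead time from T_max down to 0 (the event itself)."""
    n_events = len(states_before_event)
    curve = {}
    for lead in range(T_max, -1, -1):
        # An event counts as a true positive at this lead time only if the
        # warning was 'on' exactly `lead` minutes before the event.
        on = sum(1 for s in states_before_event if lead < len(s) and s[lead])
        curve[lead] = on / n_events
    return curve


# Four hypothetical events; index 0 is the event time, index 3 is 3 min before.
events = [
    [True, True, True, True],
    [True, True, False, False],
    [True, False, False, False],
    [False, False, False, False],
]
sens_window = window_sensitivity(events, T_max=3, T_min=1)
sens_curve = sensitivity_by_lead_time(events, T_max=3)
```

In this toy example the per-minute sensitivity rises as the event approaches, the behavior described for an index that tracks overall deterioration.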

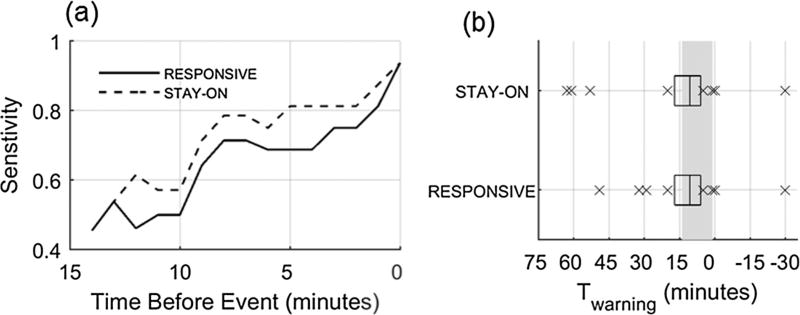

Fig. 4.

(a) Sensitivity considering a true positive at every minute leading up to the event from Tmax until tevent. (b) Distribution of Twarning for true positive warning episodes (time warning is activated for a warning episode present within [Tmax, Tmin]). Box plots where center line is the median, box edges are 25th and 75th percentiles, and crosses are values outside the 25th and 75th percentiles. Gray rectangle indicates [Tmax, Tmin] window.

Distribution of warning times (Twarning) is further assessed in a way that captures all patterns as shown in Fig. 1. Ideally Twarning should be within a narrow interval [Tmax, Tmin] for all events but this may not always occur. Patient physiology and disease severity may vary widely, leading to a range of warning times when the index first detects/predicts a condition. The interpretation of an index at any specific time point may be challenging for a system that provides a range of Twarning from a few seconds to 30 min. This assessment provides information on the range of warning times that can be expected. For each warning, Twarning is defined with respect to the time of event as the duration from twarning to tevent (see Fig. 1). For true positive warnings Twarning is within [Tmax, Tmin]. False positive warnings have Twarning outside of [Tmax, Tmin]. Warnings activated after tevent will have a negative value for Twarning. If no warning is activated for an event (false negative warning) we define a warning as occurring at the end of monitoring, which occurs after tevent, giving it a negative Twarning equal to the monitoring time.
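The warning-time convention above can be sketched as a small Python function. This assumes that a missed event is assigned Twarning = tevent minus the end-of-monitoring time, which is our reading of the convention and is consistent with the −30 min placeholder used in the case study; the function name, argument names, and example times are hypothetical.

```python
def warning_time_for_event(onsets, t_event, t_end):
    """Return Twarning (minutes) for one event.

    onsets  : times at which warnings were activated (may be empty)
    t_event : time of the critical event
    t_end   : time at which monitoring ended (after t_event)
    """
    if not onsets:
        # Missed event: place the warning at the end of monitoring,
        # which yields a negative Twarning.
        return t_event - t_end
    # Otherwise use the first activation; a warning activated after the
    # event also yields a negative value.
    return t_event - min(onsets)


# Hypothetical examples with the event at 80 min and monitoring ending at 110 min.
tw_detected = warning_time_for_event([52, 70], t_event=80, t_end=110)
tw_missed = warning_time_for_event([], t_event=80, t_end=110)
tw_after = warning_time_for_event([85], t_event=80, t_end=110)
```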

Next, we characterize the percentage of time that a warning is ‘on’ between twarning and tevent (%WarningOn). The designer of a warning system may apply different strategies to turn off the warning that will affect the performance, interpretation and frequency of warnings. This assessment indicates whether a warning, once triggered, can be expected to stay on until the event (e.g., Fig. 1a and c) or may turn off prior to the event (e.g., Fig. 1b and d). Each separate warning is considered a warning episode. The start of a warning episode is when the warning turns on and the end is either when it turns off or, if the warning does not turn off, the end of monitoring. For events with %WarningOn of less than 100, we also count the number of separate warning episodes that occur between twarning and tevent (distinguishing Fig. 1b and d). Although this step helps with the quantitative characterization of the performance of a warning index, it should be noted that in practice this representation could be very different depending on how the user interacts with the warning system.
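As an illustration of this step, the sketch below computes %WarningOn and the episode count for a single event. It assumes non-overlapping episodes given as hypothetical `(start, end)` pairs in minutes, clips any episode that runs past the event, and returns 0% when no warning precedes the event; these choices and all names are ours.

```python
def percent_warning_on(episodes, t_event):
    """Return (%WarningOn, number of episodes) between first onset and t_event."""
    # Keep only episodes that start before the event and clip them at t_event.
    before = [(s, min(e, t_event)) for s, e in episodes if s < t_event]
    if not before:
        return 0.0, 0
    t_warning = min(s for s, _ in before)      # first warning activation
    on_time = sum(e - s for s, e in before)    # total minutes spent 'on'
    return 100.0 * on_time / (t_event - t_warning), len(before)


# Hypothetical episodes mimicking Fig. 1a, 1b, and 1d with t_event = 100 min.
stay_on_result = percent_warning_on([(70, 100)], 100)
drop_out_result = percent_warning_on([(60, 75)], 100)
repeated_result = percent_warning_on([(50, 55), (62, 68), (80, 100)], 100)
```

The single drop-out and the repeated pattern both fall below 100%, and the episode count is what separates them.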

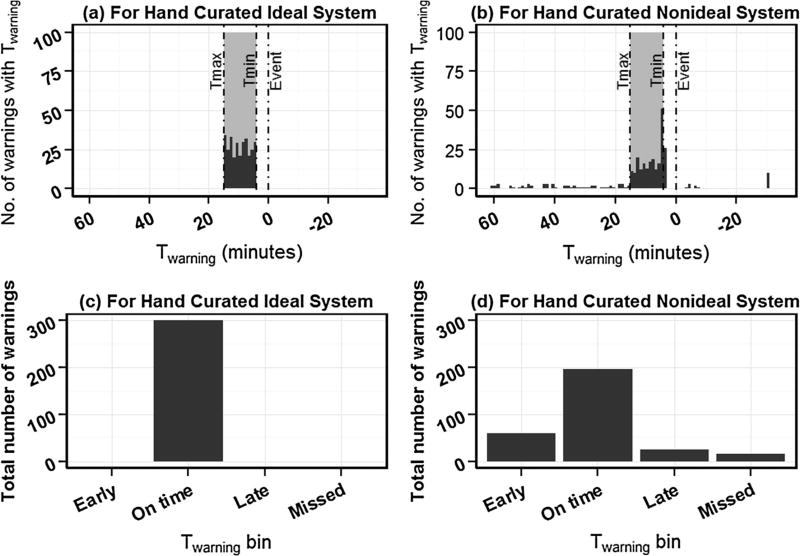

We then summarize all of the above characteristics in one histogram by estimating the relationship between warnings and time to warning (Twarning), which also includes information on the burden of additional warnings (e.g., false alarms). We construct a histogram of the number of warnings against Twarning (Fig. 2). For missed events (when no warning is triggered), we assume that a warning occurred with Twarning at the end of monitoring, which occurs after tevent. Twarning for an ideal warning system will be contained within [Tmax, Tmin] (Fig. 2a). When a system provides warnings too early, the distribution will disperse beyond Tmax, creating a thicker left tail (Fig. 2b). When a system provides warnings too late or when there are missed warnings with negative Twarning values, the distribution will disperse beyond Tmin, creating a thicker right tail (Fig. 2b). We then summarize this histogram into four bins (Fig. 2c and d): before Tmax (early), within [Tmax, Tmin] (on time), after Tmin (late, but still before tevent), and after tevent (missed). Considering the possibility of multiple warnings per event being generated by a warning system (Fig. 1d), we normalize the summary histogram in two ways: with respect to the total number of warnings and with respect to the total number of events. Normalizing the summarized histogram with respect to the total number of warnings gives the time profile of warning proportions, which estimates the time distribution of warnings generated by the system. On the time profile of warning proportions, the ideal warning system will have a distribution contained within [Tmax, Tmin] with the total proportion of warning events reaching 1.0. When normalized with respect to the total number of events, the summary histogram gives the time profile of warnings per event, which estimates the warning distribution with respect to the event. On the time profile of warnings per event, a sum across all four bins greater than 1.0 indicates multiple warnings per single event. When a system provides one warning per event, the time profile of warning proportions and the time profile of warnings per event will look the same.
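The four-bin summary and its two normalizations can be sketched in Python as follows. The function names and the example Twarning values are hypothetical, and the handling of a warning activated exactly at tevent (Twarning = 0, counted here as late) is an assumption, since the text does not specify that boundary.

```python
from collections import Counter

BINS = ("early", "on time", "late", "missed")


def bin_warning(T_warning, T_max, T_min):
    """Assign one warning to a bin based on its Twarning (minutes before event)."""
    if T_warning > T_max:
        return "early"
    if T_warning >= T_min:
        return "on time"
    if T_warning >= 0:      # assumption: a warning at the event counts as late
        return "late"
    return "missed"         # negative Twarning: after the event or no warning


def time_profiles(T_warnings, n_events, T_max, T_min):
    """Return (warning proportions, warnings per event) over the four bins."""
    counts = Counter(bin_warning(t, T_max, T_min) for t in T_warnings)
    n_warnings = len(T_warnings)
    proportions = {b: counts[b] / n_warnings for b in BINS}
    per_event = {b: counts[b] / n_events for b in BINS}
    return proportions, per_event


# Hypothetical data: 4 events and 8 warnings, one of them a -30 min
# placeholder for an event with no warning; T_max = 14 min, T_min = 1 min.
T_warnings = [30, 20, 10, 8, 5, 12, 0.5, -30]
proportions, per_event = time_profiles(T_warnings, n_events=4, T_max=14, T_min=1)
```

Here the proportions sum to 1 by construction, while the per-event profile sums to 2, reflecting two warnings per event on average.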

Fig. 2.

Histograms which estimate the relationship between warnings and time to warnings (Twarning) for (a) hand-curated ideal warning system and (b) hand-curated non-ideal warning system. tevent is the time of critical health event occurrence (shown as 0 min here) and Twarning is the time of warning with respect to tevent. Tmax and Tmin define meaningful maximum and minimum warning times, respectively, relative to tevent. Events which had no warning are artificially added to the histogram at the right end of the monitoring period (Twarning = −30 min for this example). False positives can be artificially added to the histogram at the left end of the histogram (not demonstrated in this figure). Time profile estimators (before normalization with respect to total warnings or total events) which summarize the histograms into four bins: early (before Tmax), on time (within [Tmax, Tmin]), late (after Tmin but still before tevent), and missed (after tevent) for (c) hand-curated ideal warning system and (d) hand-curated non-ideal warning system in (a) and (b) respectively.

4. Experimental methods for case example

To demonstrate the proposed framework we compare two configurations of a warning index applied to predict a hemorrhage-induced drop in blood pressure in conscious sheep from a previously reported study [20]. Experiments were performed at the University of Texas Medical Branch under a protocol reviewed and approved by the Institutional Animal Care and Use Committee and conducted in compliance with the guidelines of the National Institutes of Health and the American Physiological Society for the care and use of laboratory animals. Female Merino sheep (N= 8, 3 years old, weight: 33–40 kg) underwent two hemorrhages separated by at least 3 days. On the day of the first hemorrhage sheep were randomized to either a fast (1.25 mL/kgBW/min) or slow (0.25 mL/kgBW/min) hemorrhage. Hemorrhage on the second day was performed at the alternate rate of the first day. Hemorrhage was stopped when mean arterial pressure dropped by 30 mmHg from baseline mean arterial pressure. The difference in hemorrhage rates produced a range of times from the start until end of hemorrhage. Arterial blood pressure from a pressure transducer placed in a femoral artery was continuously recorded for at least 30 min before the start of hemorrhage (baseline), during the hemorrhage, and 30 min post-hemorrhage. At the end of the first hemorrhage day for each animal, the remaining withdrawn blood was reinfused.

4.1. Normalized shock index as a warning indicator

A custom pulse detector was applied to the arterial pressure waveform to determine heart rate (HR) and systolic blood pressure (SBP). Shock Index was computed as HR/SBP. A normalized Shock Index (SInorm) was then developed to produce an index on a 0–1 scale to be used as the warning index for this example. The population baseline mean and standard deviation of the Shock Index was computed across all experiments. SInorm at each time point was estimated from the Cumulative Distribution Function (CDF) of a normal distribution with mean and standard deviation from the population baseline. Two warning system strategies were prototyped for SInorm. For both warning system strategies, when SInorm was greater than or equal to 0.9, it was considered that the warning condition was met and no delays were included from when the warning condition was met until the warning activated. For the RESPONSIVE approach, whenever SInorm was greater than or equal to 0.9 the warning state was set to ‘on’, and whenever SInorm was below 0.9 the warning state was set to ‘off’. For the STAY-ON approach, the warning state remained ‘on’ unless SInorm dropped below and stayed below the threshold for 10 min.
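The index and the two warning strategies described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the implementation used in the study: the index is assumed to be sampled once per minute, the hold time is expressed in samples, the warning is assumed to turn off on the sample at which the index has been below threshold for the full hold time, and the baseline values and index trace in the example are hypothetical.

```python
from statistics import NormalDist


def si_norm(hr, sbp, baseline_mean, baseline_std):
    """Map Shock Index (HR/SBP) to a 0-1 scale via a normal CDF."""
    return NormalDist(baseline_mean, baseline_std).cdf(hr / sbp)


def responsive(index, threshold=0.9):
    """Warning is 'on' exactly when the index is at or above the threshold."""
    return [x >= threshold for x in index]


def stay_on(index, threshold=0.9, hold=10):
    """Warning stays 'on' until the index has been below threshold
    for `hold` consecutive samples (assumed one sample per minute)."""
    states, on, below = [], False, 0
    for x in index:
        if x >= threshold:
            on, below = True, 0
        elif on:
            below += 1
            if below >= hold:
                on = False
        states.append(on)
    return states


# Hypothetical values: a Shock Index equal to the baseline mean maps to 0.5.
mid_value = si_norm(hr=90, sbp=100, baseline_mean=0.9, baseline_std=0.2)

# Hypothetical index trace; hold shortened to 3 samples to keep the example small.
trace = [0.5, 0.95, 0.7, 0.92, 0.6, 0.6, 0.6, 0.6]
resp_states = responsive(trace)
stay_states = stay_on(trace, hold=3)
```

On this trace the responsive strategy produces two separate warning episodes, while the stay-on strategy merges them into one longer episode.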

We used the proposed framework to compare the performance of the two SInorm warning strategies. tevent was defined by the stopping point of hemorrhage in the previously reported study, i.e., the time at which mean arterial pressure had decreased by 30 mmHg from baseline during hemorrhage [20]. For this demonstration, we defined Tmin and Tmax as 1 and 14 min before tevent, respectively. A true positive was defined if a warning was on within [Tmax, Tmin] prior to the event and a false negative was defined otherwise. Sensitivity was then [true positives/(true positives + false negatives)].

5. Results of case study

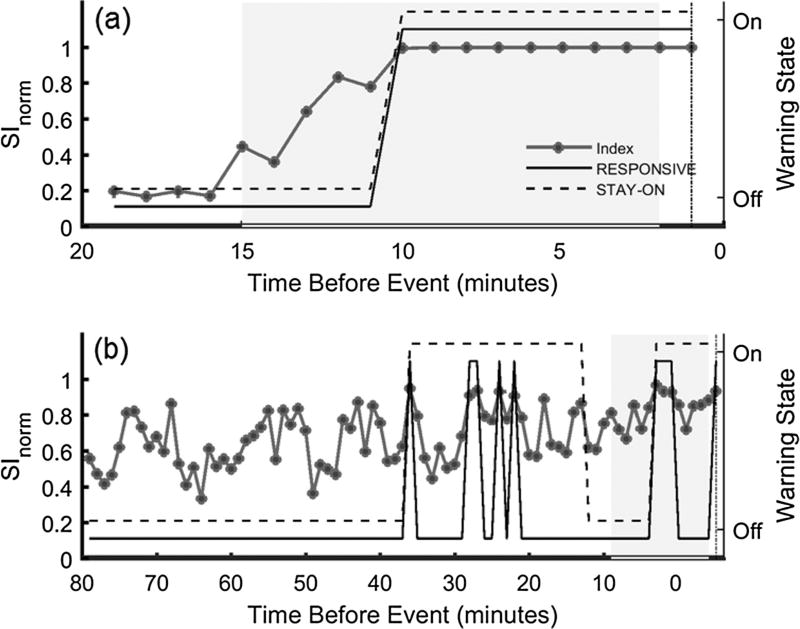

With both the RESPONSIVE and STAY-ON approaches, 14 of 16 events (sensitivity of 87.5%) had an associated true positive warning (warning on at any point within [Tmax, Tmin]) (Table 1). For the two experiments which did not have a warning within [Tmax, Tmin], one had a warning triggered at tevent while the other never triggered a warning. Twarning for these cases was set at 0 and −30 min (end of monitoring), respectively. Defining a true positive instead as a warning being generated within [Tmax, Tmin] would provide a sensitivity of 68.75% (RESPONSIVE) and 62.5% (STAY-ON). The warning patterns differed based on the individual hemorrhage trial and the warning system configuration (example shown in Fig. 3a and b). Fig. 3a shows SInorm crossing the threshold of 0.9 only once, within the pre-defined window. This is the same for both warning configurations. This example is for a fast hemorrhage, so the total monitoring time is relatively short compared to the slow hemorrhage in Fig. 3b. In Fig. 3b, multiple warning episodes with two different patterns are observed for the studied warning system configurations. The warning episodes prior to Tmax in Fig. 3b may be considered false positives. Considering sensitivity only as a warning on at any point within the pre-defined window would group these cases together as true positives.

Table 1.

Characteristics of warnings within [Tmax, Tmin] for the 16 experiments. Results for total monitoring time, Twarning and %WarningOn are displayed as Mean ± STD [min, max]. Sensitivity for a warning within [Tmax, Tmin] is shown as the number of experiments with a warning on within [Tmax, Tmin] over the total number of experiments.

| | Responsive | Stay-on |
|---|---|---|
| Total monitoring time (min) | 43.1 ± 35.7 [7, 114] | 43.1 ± 35.7 [7, 114] |
| Sensitivity for a warning within [Tmax, Tmin] | 87.5% (14/16) | 87.5% (14/16) |
| Twarning (min) | 14.7 ± 13.0 [0, 49] | 19.2 ± 21.3 [0, 63] |
| %WarningOn | 91 ± 21 [36, 100] | 99 ± 3 [90, 100] |

Fig. 3.

Example of SInorm during hemorrhage with the warning state for the RESPONSIVE (solid black line) and STAY-ON (dashed black line) configurations. (a) is for a fast hemorrhage and (b) is a slow hemorrhage. Dot-dashed line indicates tevent and the gray box indicates the period between Tmax and Tmin. The legend used in (a) also applies to (b). When the Warning State is ‘On’ for RESPONSIVE, SInorm is greater than or equal to the threshold of 0.9.

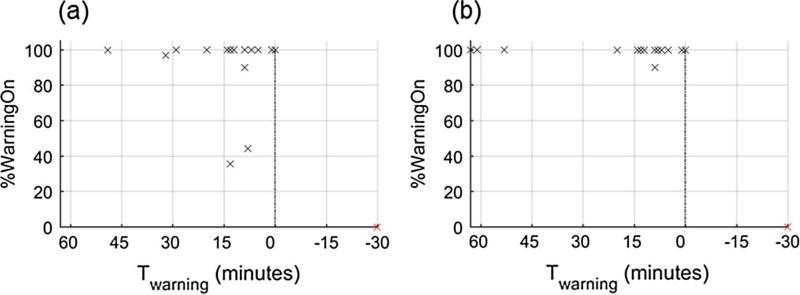

Fig. 4a presents sensitivity at each time index leading up to tevent. Sensitivity improves for both warning configurations closer to the end of hemorrhage (tevent occurs at 0 min in Fig. 4a). This is expected here as the endpoint of the hemorrhage experiment is a drop in mean arterial pressure of 30 mmHg while an input to SInorm is SBP. Distributions of Twarning (one per event) are shown in Fig. 4b. This shows that the median time that a warning is activated for both STAY-ON and RESPONSIVE occurs within [Tmax, Tmin] (i.e., the warning is first activated 1–14 min before the event), but both systems also have 4 warnings that occur prior to Tmax, and for one event no warning is activated, as noted by the cross at −30 min (end of monitoring). Since both methods used the same threshold to initiate the warning, the distributions of Twarning are similar (see Table 1, p = 0.11, paired t-test). However, differences in the warning ‘off’ configurations result in two longer Twarning times of ~60 min for the STAY-ON approach. While Fig. 4b provides information on the time prior to each event that a warning was activated within [Tmax, Tmin], it does not tell us whether each warning stayed on until the event occurred. Fig. 5 displays the percentage of time that each warning is active after twarning (%WarningOn) against the time the warning is activated (Twarning). This shows us that RESPONSIVE has two warnings activated within [Tmax, Tmin] that are on for less than 50% of the time leading up to the event (Fig. 5a), while the STAY-ON approach has additional warnings on for the full time between when the first warning occurs and tevent (Fig. 5b).

Fig. 5.

Percentage of time that the system is in the warning “on” state once a warning is triggered. %WarningOn with respect to Twarning for each warning is shown as a cross for (a) RESPONSIVE and (b) STAY-ON. The marker at 0% and −30 min indicates the experiment where no warning was generated. Box plots where center line is the median, box edges are 25th and 75th percentiles and crosses are values outside the 25th and 75th percentiles.

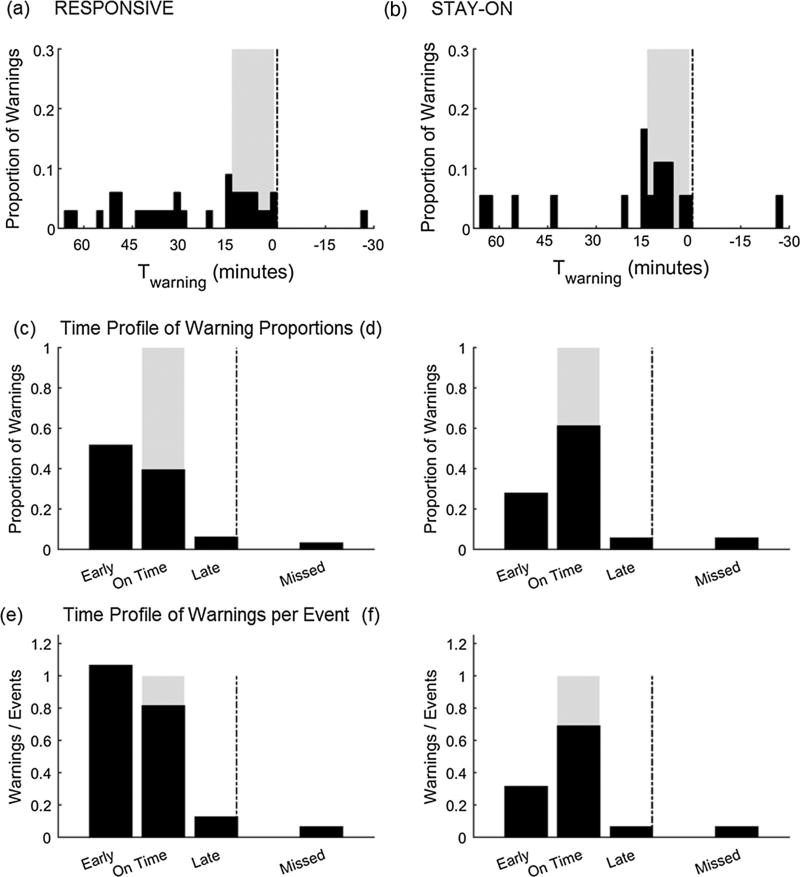

There are a total of 33 warnings before tevent using the RESPONSIVE approach (17 more than the number of events, minimum of 0 and maximum of 7 per event), and 18 for the STAY-ON approach (2 more than the number of events, minimum of 0 and maximum of 2 per event). The time profile of warning proportions (Fig. 6c and d) shows that there is a higher proportion of warning events within the pre-defined window using the STAY-ON approach. Fig. 6c shows us that ~50% of all RESPONSIVE warnings are generated prior to the pre-defined window, and this is reduced to ~25% for the STAY-ON approach (Fig. 6d). The time profile of warnings per event estimator (Fig. 6e and f) shows that the STAY-ON approach has a distribution closer to the ideal distribution (warnings frequently within the pre-defined window and sum across all four bins closer to 1) compared to the RESPONSIVE approach. The larger alarm fatigue burden of the RESPONSIVE approach is evident on the time profile of warnings per event, where the sum across all four bins is well above 1 compared to the STAY-ON approach (ratio of all warnings to all events of 2.06 for RESPONSIVE compared to 1.13 for STAY-ON).

Fig. 6.

Distributions of Twarning for all warnings for (a) RESPONSIVE and (b) STAY-ON approaches, normalized with respect to total number of warnings. The gray box indicates pre-defined window where the warnings may occur in the ‘ideal’ scenario (Twarning occurs within [Tmax, Tmin] for all cases). Negative values indicate cases where no warning was initiated for event. (c) and (d) show distributions for the four bins of Twarning: before Tmax (early), within [Tmax, Tmin] (on time), after Tmin (late, but still before tevent), and after tevent (missed), which we call time profile of warning proportions, for RESPONSIVE and STAY-ON approaches respectively. (e) and (f) show distributions when normalized with total number of events for the four bins of Twarning, which we call time profile of warnings per event for RESPONSIVE and STAY-ON approaches respectively.

6. Discussion

We have proposed a framework that considers the timeliness of detection to assess the performance of a system that warns of critical health events. This approach provides a comprehensive characterization of the performance of a warning system by addressing 1) sensitivity within a pre-defined relevant window, 2) sensitivity changes leading up to the event, 3) the distribution of the system warning times, 4) the percentage of time that the warning is on and the associated number of separate warning episodes before a critical event, and 5) warning time provided by all separate warning episodes in the database to qualitatively and quantitatively assess the performance of the warning system. Similar approaches are used in survival analysis, where both the occurrence of an event (a warning in our case) and the time of the event occurrence (Twarning) are important to assess the performance of a treatment (e.g., the Kaplan-Meier estimator) [21].

We demonstrated how this approach could be used with our example of a normalized Shock Index (SInorm) applied to detect early signs of a drop in blood pressure during hemorrhage. We used this data because the two different hemorrhage rates resulted in a wide range of times for the event of interest, similar to a clinical scenario where the rate of patient deterioration is generally unknown until the event occurs and there may be a range of time scales over which the monitor needs to function. When comparing two different warning strategies, sensitivity alone provided the same information between approaches and both approaches increased in sensitivity in the minutes leading up to the event. This is expected as the event of interest here was a drop in mean arterial pressure of 30 mmHg and SBP is an input to SInorm used as an example here, so the index should be expected to increase closer to the event.

The difference between the warning configurations is displayed in Figs. 4b and 5, where we see that the STAY-ON approach is on for a higher percentage of time (as expected based on the warning system design) and also has a higher percentage of warnings within the target window (~60% vs. ~40% for the RESPONSIVE approach) when considering all warnings generated. This means that the STAY-ON approach generates fewer warnings that are on for longer continuous durations. Neither of these characteristics should be surprising given the design of the warning strategies used here as examples, but the approach we applied for analysis brings out these characteristics, whereas looking only at the sensitivity suggested similar performance. The time profile of warning proportions provides the estimated probability that a warning system warns early, on time, or late, or fails completely to provide a warning. While the time profile of warning proportions describes the time distribution of warnings generated by the system, the time profile of warnings per event describes the warning distribution with respect to the event. In our example, a large portion of the warnings given by the RESPONSIVE approach are likely to be early or on time, while a large portion of the warnings given by the STAY-ON approach are likely to be on time. The time profile of warnings per event provides a snapshot of warning performance in time, including information about alarm burden when there is more than one warning per event. In our example, the RESPONSIVE approach has a high alarm burden, with the sum across all four bins much greater than 1, compared to the STAY-ON approach. If the performance of a warning system were reported to a responder using this framework, the responder could look at the peaks on the time profile of warning proportions to estimate how much time is available for intervention once a warning is heard before the patient reaches the critical event.

Here, we only considered the burden of additional warnings during the hemorrhage period of the experiments. The prevalence of the event of interest in the monitored population, and of similar events that may trigger the warning, will affect the performance of the warning system. In this example we used an enriched data set in which the event of interest was known to occur in every experiment. This allowed us to characterize the warning provided when an event is known to occur. False positives should be assessed in a broader monitored population that reflects the expected prevalence of the condition. Given such a population, with a mix of records with and without events, false positives could be added to the left end of the histogram presented in Fig. 6 (by setting Twarning equivalent to infinity) in the same way that false negatives are added to the right end. False positives will also raise the sum across all four bins of the time profile of warnings per event further above 1. This would add the alarm burden to these plots and penalize the performance of a warning system that has frequent false positives. The method used to handle correlated events within test databases should also be considered when applying this approach. For example, when a long clinical record contains more than one occurrence of the event for the same patient, limits can be imposed on the histogram so that the long record is treated as a series of segments.
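A minimal sketch of this bookkeeping follows. It assumes a hypothetical record layout (an event time, or None when no event occurred, plus a list of warning times that all precede the event) and assumed bin edges of 5 and 30 minutes; none of these names or values come from the study.

```python
import math


def collect_lead_times(records):
    """Return (lead times, number of missed events) for a mixed population.

    records: iterable of (event_time, warning_times) in minutes from the
        start of monitoring; event_time is None for records without an event.
    Warnings in event-free records are false positives and get an infinite
    lead time, mirroring a Twarning set to infinity.
    """
    leads, missed = [], 0
    for event_time, warning_times in records:
        if event_time is None:
            leads.extend(math.inf for _ in warning_times)
        elif not warning_times:
            missed += 1  # false negative
        else:
            leads.extend(event_time - w for w in warning_times)
    return leads, missed


def warning_histogram(leads, missed, window=(5.0, 30.0)):
    """Counts ordered left to right: false positives first, missed events last."""
    lower, upper = window  # assumed target window, in minutes before the event
    bins = {"no_event": 0, "early": 0, "on_time": 0, "late": 0, "missed": missed}
    for lead in leads:
        if math.isinf(lead):
            bins["no_event"] += 1
        elif lead > upper:
            bins["early"] += 1
        elif lead >= lower:
            bins["on_time"] += 1
        else:
            bins["late"] += 1
    return bins


# Hypothetical population: one detected event, one event-free record with a
# false positive, and one missed event.
records = [(60.0, [10.0, 50.0]), (None, [20.0]), (45.0, [])]
leads, missed = collect_lead_times(records)
bins = warning_histogram(leads, missed)
```

Keeping false positives in their own left-most bin, rather than merging them with early warnings, makes the added alarm burden visible separately from warnings that did precede an event.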

Here, we compared two simple approaches for a warning system. There are many other strategies that could be implemented. For example, a warning may stay on until it is responded to, until the index drops below some refractory threshold, or for a fixed amount of time. The configuration will alter the reported performance metrics, and this approach could be used to compare warning configurations as well as different algorithms. In addition, a range of thresholds could be considered for a continuous index.
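Two of the alternative strategies mentioned above can be sketched as follows: a warning that stays on until the index drops below a lower refractory threshold, and a warning that stays on for a fixed amount of time. The function names, thresholds, and index values are hypothetical and chosen only to show how the configuration changes the number of warnings generated from the same index.

```python
def warn_refractory(index, on_threshold, off_threshold):
    """Turn on at index >= on_threshold; turn off once index < off_threshold."""
    state, states = False, []
    for value in index:
        if not state and value >= on_threshold:
            state = True
        elif state and value < off_threshold:
            state = False
        states.append(state)
    return states


def warn_fixed_hold(index, on_threshold, hold_samples):
    """Stay on for hold_samples samples after each sample at or above threshold."""
    remaining, states = 0, []
    for value in index:
        if value >= on_threshold:
            remaining = hold_samples
        states.append(remaining > 0)
        remaining = max(remaining - 1, 0)
    return states


def count_warnings(states):
    """Number of off-to-on transitions, i.e. distinct warnings generated."""
    previous = [False] + states[:-1]
    return sum(1 for prev, cur in zip(previous, states) if cur and not prev)


# Hypothetical index samples that cross the threshold twice.
index = [0.2, 0.9, 0.7, 0.9, 0.4, 0.2]
simple = warn_refractory(index, on_threshold=0.8, off_threshold=0.8)
refractory = warn_refractory(index, on_threshold=0.8, off_threshold=0.5)
held = warn_fixed_hold(index, on_threshold=0.8, hold_samples=2)
```

With these values the plain threshold produces two separate warnings, while the refractory and fixed-hold variants each produce a single longer warning, so the same index yields different warning counts and durations depending on the configuration.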

In this work we considered the evaluation of new warning indices given a small experimental data set with well-defined experimental endpoints and complete physiological monitoring data. Developing this type of study to evaluate a monitoring system in critical care settings is complicated by challenges associated with data handling and database development for critical care systems, as highlighted by Johnson et al. [22]. In addition, annotating events and defining the time windows in which an alert is meaningful may be challenging tasks on their own for clinical databases [18]. However, since these factors will impact performance, it is important to understand system performance under conditions that include the complications that may occur in real-world use. For example, a warning index may be designed to use data from multiple physiological measurements and continue to display a value even if one or more of those measurements are missing. If these points are considered when developing new indices and designing studies, the current approach could help address the question of whether a warning informed of a potential event, missed that event, or added to the alarm burden, by providing information beyond sensitivity/specificity at specific time points. This may provide more descriptive information on the performance of a new warning system to inform caregivers about the true/false positive ratios (i.e., how the new warning may contribute to false alarms), whether the time of warning is appropriate for the criticality of the event, and how much the warning can be relied upon for warning of the event of interest.

7. Conclusion

We have presented an initial framework for characterizing the warning patterns of systems intended to warn of time-critical health events. The process does not provide a single simple metric such as sensitivity, but we believe it provides a more comprehensive assessment, and a better visualization, of how well a system warns of an event. This could aid in the design, evaluation, and clinical interpretation of the index and of any notifications from the system.

Acknowledgments

The authors would like to thank Loriano Galeotti (FDA) and Sandy Weininger (FDA) for helpful comments on this manuscript.

This work was supported in part by the US Food and Drug Administration’s Medical Countermeasures Initiative and an appointment to the Research Participation Program at the Center for Devices and Radiological Health administered by the Oak Ridge Institute for Science and Education through an interagency agreement between the US Department of Energy and the US Food and Drug Administration.

Footnotes

Disclosures

The mention of commercial products, their sources, or their use in connection with material reported herein is not to be construed as either an actual or implied endorsement of such products by the Department of Health and Human Services.

Conflicts of interest

There are no conflicts of interest.

References

- 1. Hong W, Earnest A, Sultana P, Koh Z, Shahidah N, Ong MEH. How accurate are vital signs in predicting clinical outcomes in critically ill emergency department patients. Eur. J. Emergency Med. 2013;20:27–32. doi: 10.1097/MEJ.0b013e32834fdcf3.

- 2. Siebig S, Kuhls S, Imhoff M, Gather U, Schölmerich J, Wrede CE. Intensive care unit alarms--How many do we need? Crit. Care Med. 2010;38:451–456. doi: 10.1097/CCM.0b013e3181cb0888.

- 3. Tarassenko L, Hann A, Young D. Integrated monitoring and analysis for early warning of patient deterioration. Br. J. Anaesthesia. 2006;97:64–68. doi: 10.1093/bja/ael113.

- 4. Tarassenko L, Clifton DA, Pinsky MR, Hravnak MT, Woods JR, Watkinson PJ. Centile-based early warning scores derived from statistical distributions of vital signs. Resuscitation. 2011;82:1013–1018. doi: 10.1016/j.resuscitation.2011.03.006.

- 5. Pinsky MR. Complexity modeling: identify instability early. Crit. Care Med. 2010;38:S649–S655. doi: 10.1097/CCM.0b013e3181f24484.

- 6. Lee J, Mark RG. An investigation of patterns in hemodynamic data indicative of impending hypotension in intensive care. Biomed. Eng. Online. 2010;9:62. doi: 10.1186/1475-925X-9-62.

- 7. Lynn LA, Curry JP. Patterns of unexpected in-hospital deaths: a root cause analysis. Patient Saf. Surg. 2011;5:3. doi: 10.1186/1754-9493-5-3.

- 8. Liu NT, Holcomb JB, Wade CE, Salinas J. Improving the prediction of mortality and the need for life-saving interventions in trauma patients using standard vital signs with heart-rate variability and complexity. Shock (Augusta, Ga). 2015. doi: 10.1097/SHK.0000000000000356.

- 9. Van Haren RM, Thorson CM, Valle EJ, Busko AM, Jouria JM, Livingstone AS, et al. Novel prehospital monitor with injury acuity alarm to identify trauma patients who require lifesaving intervention. J. Trauma Acute Care Surg. 2014;76:743–749. doi: 10.1097/TA.0000000000000099.

- 10. Henry KE, Hager DN, Pronovost PJ, Saria S. A targeted real-time early warning score (TREWScore) for septic shock. Sci. Translational Med. 2015;7:299ra122–299ra122. doi: 10.1126/scitranslmed.aab3719.

- 11. Chen L, Ogundele O, Clermont G, Hravnak M, Pinsky MR, Dubrawski AW. Dynamic and personalized risk forecast in step-down units: implications for monitoring paradigms. Ann. Am. Thoracic Soc. 2016. doi: 10.1513/AnnalsATS.201611-905OC.

- 12. Pimentel MA, Clifton DA, Clifton L, Watkinson PJ, Tarassenko L. Modelling physiological deterioration in post-operative patient vital-sign data. Med. Biological Eng. Comput. 2013;51:869–877. doi: 10.1007/s11517-013-1059-0.

- 13. Hravnak M, DeVita MA, Clontz A, Edwards L, Valenta C, Pinsky MR. Cardiorespiratory instability before and after implementing an integrated monitoring system. Crit. Care Med. 2011;39:65. doi: 10.1097/CCM.0b013e3181fb7b1c.

- 14. Blair TL. Device diagnostics and early identification of acute decompensated heart failure: a systematic review. J. Cardiovasc Nurs. 2014;29:68–81. doi: 10.1097/JCN.0b013e3182784106.

- 15. Gyllensten IC, Bonomi AG, Goode KM, Reiter H, Habetha J, Amft O, et al. Early indication of decompensated heart failure in patients on home-telemonitoring: a comparison of prediction algorithms based on daily weight and noninvasive transthoracic bio-impedance. JMIR Med. Inf. 2016;4. doi: 10.2196/medinform.4842.

- 16. ECRI. Health Devices: 2014 Top 10 Health Technology Hazards. Health Devices, ECRI Institute; 2014.

- 17. Drew BJ, Harris P, Zègre-Hemsey JK, Mammone T, Schindler D, Salas-Boni R, et al. Insights into the problem of alarm fatigue with physiologic monitor devices: a comprehensive observational study of consecutive intensive care unit patients. PLoS One. 2014;9:e110274. doi: 10.1371/journal.pone.0110274.

- 18. Clifton DA, Wong D, Clifton L, Wilson S, Way R, Pullinger R, et al. A large-scale clinical validation of an integrated monitoring system in the emergency department. Biomed. Health Inf., IEEE J. 2013;17:835–842. doi: 10.1109/JBHI.2012.2234130.

- 19. Bai Y, Do DH, Harris PR, Schindler D, Boyle NG, Drew BJ, et al. Integrating monitor alarms with laboratory test results to enhance patient deterioration prediction. J. Biomed. Inf. 2015;53:81–92. doi: 10.1016/j.jbi.2014.09.006.

- 20. Scully CG, Daluwatte C, Marques NR, Khan M, Salter M, Wolf J, et al. Effect of hemorrhage rate on early hemodynamic responses in conscious sheep. Physiol. Rep. 2016;4. doi: 10.14814/phy2.12739.

- 21. Holländer N. Encyclopedia of Statistical Sciences. John Wiley & Sons, Inc.; 2004. Kaplan–Meier Plot.

- 22. Johnson AEW, Ghassemi MM, Nemati S, Niehaus KE, Clifton DA, Clifford GD. Machine learning and decision support in critical care. Proc. IEEE. 2016;104:444–466. doi: 10.1109/JPROC.2015.2501978.