Abstract

The noisy threshold regime, where even a small set of presynaptic neurons can significantly affect postsynaptic spike timing, is suggested as a key requisite for computation in neurons with high variability. It has also been proposed that signals under noisy conditions are successfully transferred by a few strong synapses and/or by an assembly of nearly synchronous synaptic activities. We analytically investigate the impact of a transient signaling input on a leaky integrate-and-fire postsynaptic neuron that receives background noise near the threshold regime. The signaling input models a single strong synapse or a set of synchronous synapses, while the background noise represents many weak synapses. We find an analytic solution that explains how the first-passage time (ISI) density is changed by the transient signaling input. The analysis allows us to connect properties of the signaling input, such as spike timing and amplitude, with the postsynaptic first-passage time density in a noisy environment. Based on the analytic solution, we calculate the Fisher information with respect to the signaling input's amplitude. For a wide range of amplitudes, we observe a non-monotonic behavior of the Fisher information as a function of background noise. Moreover, the Fisher information depends non-trivially on the signaling input's amplitude: as the amplitude is varied, we observe a single maximum at high background noise, which splits into two maxima in the low-noise regime. This finding demonstrates the benefit of the analytic solution in investigating signal transfer by neurons.

Keywords: First-passage time density, Transient signaling input, Strong synapse, Gaussian noise, Threshold regime, Fisher information

Introduction

High variability in the spiking activity of in vivo cortical neurons is considered one of the foundations of information processing by networks of neurons (Softky and Koch 1993; Shadlen and Newsome 1998). Since it is difficult to experimentally control the mechanisms that underlie highly variable neuronal activity, theoretical and computational analyses of stochastically spiking neuron models are invaluable for investigating how information is transferred via variable spiking activity (Abbott et al. 2012). Statistics of spike timing beyond the spike rate convey information in sensory systems; in particular, a neuron's first-spike time after stimulus onset can encode most of the information in the sensory cortex (Petersen et al. 2001; Panzeri et al. 2001; Van Rullen and Thorpe 2001; Furukawa and Middlebrooks 2002; Johansson and Birznieks 2004; Van Rullen et al. 2005). Hence the spike-timing distribution, if obtained with sufficient accuracy, could be a building block in modeling neural computation (Herz et al. 2006); it would explain consequences of activity-dependent plasticity (Babadi and Abbott 2013), information transmission by populations of neurons (Silberberg et al. 2004; De La Rocha et al. 2007; Pitkow and Meister 2012) and even behavior (Pitkow et al. 2015). An analytical solution would serve this purpose; however, the nonlinear dynamics of a single neuron has so far prevented obtaining such a solution.

The variability observed in spike timing is thought to reflect fluctuations of synaptic inputs rather than the intrinsic noise of neurons (Mainen and Sejnowski 1995). A neuron is sensitive to input fluctuations and fires irregularly if inputs from excitatory and inhibitory neurons are balanced at levels near but below the threshold (Shadlen and Newsome 1998). Intracellular recordings from in vivo cortical neurons have revealed the ubiquity of such balanced inputs from excitatory and inhibitory populations (Wehr and Zador 2003; Okun and Lampl 2008). Balanced inputs self-organize in sparsely connected networks with relatively strong synaptic connections and result in asynchronous population activity (van Vreeswijk and Sompolinsky 1996; 1998; Kumar et al. 2008; Renart et al. 2010). Encouragingly, a recent experiment (Tan et al. 2014) demonstrated that the membrane potentials of macaque V1 neurons are dynamically clamped near the threshold when a stimulus is presented to the animal. All this evidence underscores the importance of developing an analytic solution to understand neural behavior near the threshold regime.

On the other hand, the distribution of synaptic strengths is typically log-normal, which indicates the presence of a few extremely strong synapses and a majority of weak synapses (Song et al. 2005; Lefort et al. 2009; Ikegaya et al. 2013; Buzsáki and Mizuseki 2014; Cossell et al. 2015). These strong synapses may form signaling inputs (Abbott et al. 2012), with the aid of other weak synapses (Song et al. 2005; Teramae et al. 2012; Ikegaya et al. 2013; Cossell et al. 2015). Moreover, it has long been debated whether nearly synchronized inputs from multiple neurons act as a strong signal on top of the noisy background input (Stevens and Zador 1998; Diesmann et al. 1999; Salinas and Sejnowski 2001; Takahashi et al. 2012). The strong input is, in many cases, a short-lasting signal. For example, the signaling inputs that code stimulus information in early sensory processing areas, such as primary visual and auditory cortex, are usually known to be transient (Gollisch and Herz 2005; Geisler et al. 2007). Thus, besides the many weak synapses that form a noisy background input, we should also consider strong or temporally coordinated synaptic events that induce strong transient signaling inputs.

The leaky integrate-and-fire (LIF) neuron model is the simplest model that captures the important features of cortical neurons (Rauch et al. 2003; La Camera et al. 2004; Jolivet et al. 2008). This simple model is widely used to investigate the input-output relation of the postsynaptic neuron (Tuckwell 1988; Brunel and Sergi 1998; Burkitt and Clark 1999; Lindner et al. 2005; Burkitt 2006a, 2006b; Richardson 2007, 2008; Richardson and Swarbrick 2010; Helias et al. 2013; Iolov et al. 2014). Analytical studies have obtained the linear response of the neuron to oscillatory signaling input (Bulsara et al. 1996; Brunel and Hakim 1999; Brunel et al. 2001; Lindner and Schimansky-Geier 2001), excitatory and inhibitory synaptic jumps (Richardson and Swarbrick 2010; Helias et al. 2010) or transient input (Herrmann and Gerstner 2001; Helias et al. 2010, 2011). However, a closed-form analytical solution for the impact of a strong transient signaling input on a LIF neuron model subject to Gaussian noise has not yet been achieved.

Here we analytically derive the first-passage time density of a LIF neuron receiving a transient signaling input with arbitrary amplitude; the background input is noisy but balanced at the threshold regime. We extend our solution to transient signaling inputs of arbitrary shape. As an application of this solution, we calculate the Fisher information with respect to the input's amplitude; through the Cramér-Rao bound, the maximum of the Fisher information corresponds to the minimum error in estimating the signaling input's amplitude from spiking activity. We quantify the noise level and signal amplitude that yield the best possible discrimination.

Results

Impact of a transient signaling input on postsynaptic spiking

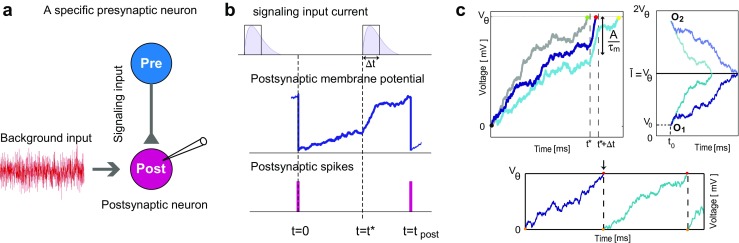

We consider a specified presynaptic neuron that provides the transient signaling input, while the rest of the presynaptic neurons produce noisy background inputs to the postsynaptic neuron (Fig. 1a). The question is how a single spike of the signaling input affects the spike timing of the postsynaptic neuron (Fig. 1b). We use the LIF neuron model for the postsynaptic neuron; the membrane potential V evolves according to:

$$\tau_m \frac{dV}{dt} = -V + I(t) \tag{1}$$

where τm is the membrane time constant and I(t) is the total input current. The neuron produces a spike when its voltage reaches the threshold V𝜃. The membrane voltage then resets to its resting value, Vr, which we assume to be zero without loss of generality.

Fig. 1.

The framework to study the impact of a specified presynaptic neuron on postsynaptic spiking activity. a The effect of the specified presynaptic neuron is separated from the noisy background input, which is produced by the rest of the presynaptic neurons. b A schematic view of the signaling input activity and its effect on the membrane potential and spiking of the postsynaptic neuron. c Postsynaptic membrane potential versus time. Top-left: Because of the noise, there can be different trajectories. Any trajectory that has not reached the threshold before the signaling input arrives at t∗ shows a sharp increase during the time window from t∗ to t∗ + Δt. Top-right: For the particular case of μ = V𝜃, we can use the image method. The membrane potential trajectory and its mirror image coincide on the line V = μ, which fulfills the threshold criterion when this line is the same as V = V𝜃. Bottom: The membrane potential trajectory of the postsynaptic neuron. When the voltage reaches V𝜃, the neuron fires and the voltage resets to 0. Here we consider in particular the first spike after t = 0.

The effects of the specified signaling input and of the remaining excitatory/inhibitory presynaptic neurons enter through the input current I(t):

$$I(t) = I_0(t) + \Delta I(t,t^*) \tag{2}$$

where I0(t) is the background input induced by the presynaptic neurons, and ΔI(t,t∗) is the signaling input from the specified neuron; here t∗ is the arrival time of the signaling input. For a large number of presynaptic neurons, the uncorrelated background input is approximated as I0(t) = μ + ξ(t), where μ is the mean input strength and ξ(t) is zero-mean Gaussian white noise (i.e. ⟨ξ(t)⟩ = 0 and ⟨ξ(t)ξ(t′)⟩ = 2Dδ(t − t′), where δ(t) is the Dirac delta function).

When a spike from a presynaptic neuron arrives at the synaptic terminal, the postsynaptic current instantaneously increases according to the strength of the synapse and decays with a time constant τs. For τs ≪ τm, which holds for the fast currents generated by AMPA and GABAA receptors (Destexhe et al. 1998), we can model the input current as (Stern et al. 1992):

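$$\Delta I(t,t^*) = \begin{cases} A/\Delta t, & t^* \le t \le t^* + \Delta t,\\ 0, & \text{otherwise}, \end{cases} \tag{3}$$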

where Δt ∼ τs. This describes a current that begins at t = t∗, remains constant for a short time window, t ∈ [t∗,t∗ + Δt], and vanishes afterwards. It transmits a net charge A regardless of Δt, and it converges to the Dirac delta function in the limit Δt → 0 (i.e. ΔI(t,t∗) → A δ(t − t∗)). In this limit, the process of Eq. (1) becomes a jump-diffusion process (Kou and Wang 2003).
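A minimal numerical check of Eq. (3), assuming the rectangular form described above (height A/Δt on [t∗, t∗ + Δt]): as the pulse narrows, its peak grows, but the transmitted charge stays at A. The function name `square_pulse` and the quadrature grid are illustrative choices, not part of the original analysis.

```python
import numpy as np

def square_pulse(t, t_star, A, width):
    """Square signaling current (sketch of Eq. (3)): A/width on [t*, t* + width), zero elsewhere."""
    t = np.asarray(t, dtype=float)
    return np.where((t >= t_star) & (t < t_star + width), A / width, 0.0)

h = 1e-4                                   # quadrature step (ms)
t = np.arange(0.0, 60.0, h)                # time grid (ms)
A, tau_m, t_star = 10.0, 20.0, 50.0        # mV ms, ms, ms (values used in Fig. 2)

for width in (2.0, 0.5, 0.05):
    charge = square_pulse(t, t_star, A, width).sum() * h   # Riemann sum of the current
    print(f"width {width} ms: peak {A / width:g} mV, charge {charge:.3f} mV ms, "
          f"voltage jump A/tau_m = {A / tau_m} mV")
```

The peak current grows as 1/Δt while the charge, and hence the jump A/τm in the limit Δt → 0, is unchanged.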

As mentioned, a key element of this article is to predict when the postsynaptic neuron spikes if the signaling input arrives at t∗. We consider the last spike of the postsynaptic neuron as the origin of time, t = 0, and analytically predict when the first postsynaptic spike will happen (Fig. 1b). However, because of the stochastic term in the background input, ξ(t), we cannot predict the exact time of the next spike, but can describe its probability density, J(V𝜃,t).

We consider an ensemble of many postsynaptic neurons (or many repetitions of the same experiment with a single postsynaptic neuron); the life of each neuron in this ensemble is described by a trajectory V(t) governed by the LIF equation (see Fig. 1c, top-left). All trajectories begin at the same point (V = 0 at t = 0), but they do not follow the same path, because each realizes a different sample of the stochastic background noise ξ(t). The neuron initiates a spike when its trajectory passes the threshold voltage V𝜃. Then J(V𝜃,t), which we call the first-passage time density for short, is the probability density that a trajectory first passes V𝜃 at time t. To obtain J(V𝜃,t), however, we need P(V,t), the probability density that a trajectory has the potential V at time t. This membrane potential density satisfies the Fokker-Planck (FP) equation (Risken 1984; Kardar 2007):

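$$\frac{\partial P(V,t)}{\partial t} = -\frac{\partial}{\partial V}\left[\frac{\mu - V + \Delta I(t,t^*)}{\tau_m}\,P(V,t)\right] + \frac{D}{\tau_m^2}\,\frac{\partial^2 P(V,t)}{\partial V^2} \tag{4}$$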

Here, the temporal evolution of P(V,t) is governed by (i) a diffusion term, which is the signature of the stochastic input ξ(t), and (ii) a drift term, which represents both the leak and the deterministic currents (i.e. μ and ΔI(t,t∗)).

The threshold nonlinearity of neuronal spike generation enters Eq. (4) as a boundary condition. The LIF neuron spikes when it passes the threshold voltage. Since each membrane trajectory ends at the threshold, no neuron has V > V𝜃. In the continuum limit, this gives the absorbing boundary condition P(V ≥ V𝜃,t) = 0 (Gerstner et al. 2014). Here we do not consider the reappearance of the trajectory at the resting potential after each spike, because we are interested only in the neuron's first spike (Fig. 1c, bottom). This leads to the absorbing boundary condition instead of the periodic boundary condition widely used to derive firing rates (Brunel and Hakim 1999; Brunel et al. 2001; Lindner and Schimansky-Geier 2001; Richardson and Swarbrick 2010).

Finally, we obtain P(V,t) for t > 0 by solving Eq. (4) under this boundary condition, once we specify the initial distribution of the membrane potential at t = 0. Here we use P(V,0) = δ(V), since all membrane trajectories start from V = 0.
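The ensemble picture can be sketched as a direct Monte Carlo simulation. The snippet below is an illustrative Euler-Maruyama discretization of Eq. (1) with I(t) = μ + ξ(t) + ΔI(t,t∗), μ being the mean background input, under the noise convention ⟨ξ(t)ξ(t′)⟩ = 2Dδ(t − t′); every trajectory starts at V = 0 and is removed when it first reaches V𝜃. The function name, its arguments, and the optional `signal` callable are hypothetical, and this is not the authors' simulation code. Because crossings are checked only at grid points, it slightly underestimates threshold crossings for finite dt.

```python
import numpy as np

def first_passage_times(n_traj, mu, V_th, tau_m, D, dt, T, signal=None, seed=0):
    """Euler-Maruyama sketch of tau_m dV/dt = -V + mu + xi(t) + signal(t), with absorption at V_th.

    Returns each trajectory's first-passage time (np.inf if it has not fired by time T).
    """
    rng = np.random.default_rng(seed)
    V = np.zeros(n_traj)                          # all trajectories start at V = 0 at t = 0
    fpt = np.full(n_traj, np.inf)
    alive = np.ones(n_traj, dtype=bool)           # trajectories not yet absorbed
    noise_sd = np.sqrt(2.0 * D * dt) / tau_m      # <xi(t) xi(t')> = 2 D delta(t - t'), divided by tau_m
    for k in range(int(round(T / dt))):
        drive = mu + (signal(k * dt) if signal is not None else 0.0)
        n_alive = np.count_nonzero(alive)
        V[alive] += dt * (drive - V[alive]) / tau_m + noise_sd * rng.standard_normal(n_alive)
        crossed = alive & (V >= V_th)             # first crossing detected on the grid
        fpt[crossed] = (k + 1) * dt
        alive &= ~crossed
    return fpt

# Threshold regime (mu = V_th) with the Fig. 2 parameters and no signaling input.
fpt = first_passage_times(4000, mu=20.0, V_th=20.0, tau_m=20.0, D=0.74, dt=0.05, T=150.0)
print("fraction fired by 100 ms:", np.mean(fpt <= 100.0))
```

A square excitatory input could be passed, for example, as `signal=lambda t: 10.0 / 0.5 if 50.0 <= t < 50.5 else 0.0` (A = 10 mV ms, Δt = 0.5 ms). Without a signal, the fired fraction can be compared with the closed-form threshold-regime solution.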

Unfortunately, the analytical solution for P(V,t), and consequently for J(V𝜃,t), is not attainable in general, even if we discard the signaling input from the equation. However, we can obtain the analytical solution in a particular regime known as the threshold regime, described in detail below. For a fixed noise strength (i.e., D), the simple ratio μ/V𝜃 determines how P(V,t) and the corresponding first-passage time density J(V𝜃,t) behave. The neuron spikes regularly if μ significantly exceeds V𝜃, because the high mean input robustly drives the neuron to its threshold. If, on the other hand, μ ≪ V𝜃, there are only occasional spikes, whenever the noise or the signaling input happens to be strong enough to bridge the gap between μ and V𝜃. An interesting regime exists in between: for μ ≈ V𝜃, a modest signaling input or ordinary noise fluctuations can make the postsynaptic neuron spike. This is the near-threshold regime. It has been observed empirically (Shadlen and Newsome 1998; Tan et al. 2014) and suggested as a basis of high variability in neural networks (van Vreeswijk and Sompolinsky 1996, 1998). The quest for a closed-form analytical solution of Eq. (4) also leads us to the very same regime: a closed-form solution for P(V,t), and consequently for J(V𝜃,t), exists if (i) μ = V𝜃 and (ii) no signaling input is applied, ΔI(t,t∗) = 0 (Wang and Uhlenbeck 1945; Sugiyama et al. 1970; Bulsara et al. 1996). Below, we describe the reason behind this peculiarity of the threshold regime and revisit the closed-form solution without the signaling input. We then extend this analytical solution to include the effect of the signaling input.

The first-passage time density in the absence of signaling input

We begin with the simpler case in which the signaling input is turned off (ΔI(t,t∗) = 0). Even then, solutions for P(V,t) are in general available only in non-closed forms, such as inverse Laplace transforms (Siegert 1951; Ricciardi and Sato 1988; Ostojic 2011). However, a closed-form analytical solution exists for the particular case of the threshold regime, μ = V𝜃 (Wang and Uhlenbeck 1945; Sugiyama et al. 1970; Tuckwell 1988).

A closed-form solution for the probability density would exist for arbitrary μ if we could neglect the absorbing boundary condition. Suppose that we ignore the absorbing boundary condition and that the membrane potential has a definite value V = V0 at time t = t0. Then Eq. (4) with ΔI = 0 has a closed-form analytical solution for t > t0, the free Green's function (Uhlenbeck and Ornstein 1930):

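$$G_f(V,t;V_0,t_0) = \frac{1}{\sqrt{2\pi\,\frac{D}{\tau_m}\left[1-r^2(t-t_0)\right]}}\,\exp\left\{-\frac{\left[V-\mu-(V_0-\mu)\,r(t-t_0)\right]^2}{2\,\frac{D}{\tau_m}\left[1-r^2(t-t_0)\right]}\right\} \tag{5}$$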

Here V0 and t0 denote the initial condition, and r(t) = exp[−t/τm]. The free Green's function, Gf(V,t;V0,t0), describes the probability density of membrane trajectories that all begin at the point O1 = (t0,V0) but follow different paths due to the noise (see Fig. 1c, top-right). Since we have neglected the threshold for the moment, the trajectories do not end when they pass the threshold line, V = V𝜃. Thus we can freely consider any initiating point for the trajectories, even one above the threshold line. In particular, consider O2 = (t0, 2μ − V0), the mirror image of O1 with respect to the line V = μ (Fig. 1c, top-right). The probability density for this initiating point is Gf(V,t;2μ − V0,t0). The encouraging fact is that the two Green's functions are equal on the mirror line, Gf(μ,t;V0,t0) = Gf(μ,t;2μ − V0,t0), because both have the same variance and their means are displaced from μ by the same distance, |V0 − μ| r(t − t0). We therefore define the main Green's function as:

$$G(V,t;V_0,t_0) = G_f(V,t;V_0,t_0) - G_f(V,t;2\mu - V_0,t_0) \tag{6}$$

This linear combination of free Green's functions satisfies the linear Fokker-Planck equation (Eq. (4)), and it vanishes on the line V = μ. If we now choose μ equal to V𝜃, G(V,t;V0,t0) also satisfies the absorbing boundary condition. Thus the analytical free Green's functions yield an analytical solution under the absorbing boundary condition, but only in the threshold regime.
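The image construction can be checked numerically. The sketch below assumes the Ornstein-Uhlenbeck form of the free propagator, a Gaussian with mean μ + (V0 − μ)r(t − t0) and variance (D/τm)[1 − r²(t − t0)], where μ is the mean background input and r(t) = exp(−t/τm); this follows from Eq. (4) with ΔI = 0 under the noise convention used here. The helper names are illustrative. The check confirms that the free propagator is normalized and that the image combination vanishes on V = μ.

```python
import numpy as np

def green_free(V, t, V0, t0, mu, tau_m, D):
    """Free OU propagator: Gaussian with mean mu + (V0 - mu) r and variance (D/tau_m)(1 - r^2)."""
    r = np.exp(-(t - t0) / tau_m)
    var = (D / tau_m) * (1.0 - r**2)
    return np.exp(-(V - mu - (V0 - mu) * r) ** 2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)

def green_image(V, t, V0, t0, mu, tau_m, D):
    """Image-method Green's function: source at V0 minus a mirror source at 2*mu - V0."""
    return green_free(V, t, V0, t0, mu, tau_m, D) - green_free(V, t, 2.0 * mu - V0, t0, mu, tau_m, D)

params = dict(mu=20.0, tau_m=20.0, D=0.74)      # threshold regime: mu = V_theta = 20 mV
V = np.linspace(10.0, 30.0, 200001)             # voltage grid (mV)
dV = V[1] - V[0]
free_mass = green_free(V, 30.0, 15.0, 0.0, **params).sum() * dV   # total probability of G_f
on_line = green_image(20.0, 30.0, 15.0, 0.0, **params)            # value of G on V = mu
print("free propagator mass:", free_mass, "| image Green's function on V = mu:", on_line)
```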

For our main problem, in which the postsynaptic neuron has the definite voltage V = 0 at t = 0, the probability density of the membrane potential is simply:

$$P_0(V,t) = G(V,t;0,0) = G_f(V,t;0,0) - G_f(V,t;2V_\theta,0) \tag{7}$$

The probability of spiking between t and t + dt is proportional to the number of voltage trajectories that pass the threshold in [t,t + dt] (see Fig. 1c, top-left). It equals J0(V𝜃,t)dt, where J0(V,t) is the probability current density:

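$$J_0(V,t) = \frac{\mu - V}{\tau_m}\,P_0(V,t) - \frac{D}{\tau_m^2}\,\frac{\partial P_0(V,t)}{\partial V} \tag{8}$$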

For V = V𝜃, Eq. (8) yields the first-passage time density; it simplifies to (Wang and Uhlenbeck 1945; Sugiyama et al. 1970; Tuckwell 1988; Bulsara et al. 1996):

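$$J_0(V_\theta,t) = \frac{1}{\tau_m}\sqrt{\frac{2\tau_m V_\theta^2}{\pi D}}\;\frac{r(t)}{\left[1-r^2(t)\right]^{3/2}}\;\exp\left[-\frac{\tau_m V_\theta^2}{2D}\,\frac{r^2(t)}{1-r^2(t)}\right] \tag{9}$$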

where r(t) = exp[−t/τm]. Apart from the 1/τm prefactor in Eq. (9), which carries its inverse-time dimension, the shape of the function is set by a single dimensionless ratio, D/(τmV𝜃²). This ratio quantifies the strength of the background noise relative to the other competing factors, and it also characterizes other relevant quantities. For example, the first-passage time density J0(V𝜃,t) reaches its maximum at t = tmax, where:

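$$t_{\max} = -\frac{\tau_m}{2}\,\ln\left[\frac{\sqrt{\left(\frac{\tau_m V_\theta^2}{D}-1\right)^2 + 8}-\left(\frac{\tau_m V_\theta^2}{D}-1\right)}{4}\right] \tag{10}$$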

For weak enough noise (i.e. D ≪ τmV𝜃²), this function simplifies to tmax ≈ (τm/2) ln(τmV𝜃²/D). Finally, it is important to extend this formalism to the more plausible sub- and supra-threshold cases. In Appendix I, we show how a scaling approach helps us to do so.
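The threshold-regime density and its peak time can be evaluated directly. This sketch assumes the image-method closed form J0(V𝜃,t) = (1/τm)√(2/(πδ)) r/(1 − r²)^{3/2} exp[−r²/(2δ(1 − r²))], with δ = D/(τmV𝜃²) and r = exp(−t/τm). The peak time follows from setting d ln J0/dt = 0, which gives the quadratic 2u² + (1/δ − 1)u − 1 = 0 in u = r²(tmax). The function names are hypothetical. The checks confirm that the density integrates to one, that the numerical peak matches the quadratic root, and that the weak-noise approximation is accurate for the Fig. 2 parameters.

```python
import numpy as np

def fpt_density(t, V_th, tau_m, D):
    """J0(V_theta, t) in the threshold regime mu = V_theta, all trajectories starting at V = 0."""
    t = np.asarray(t, dtype=float)
    delta = D / (tau_m * V_th**2)                  # scaled noise strength
    r = np.exp(-t / tau_m)
    s = 1.0 - r**2
    return np.sqrt(2.0 / (np.pi * delta)) / tau_m * r / s**1.5 * np.exp(-r**2 / (2.0 * delta * s))

def t_max(V_th, tau_m, D):
    """Peak time of J0: positive root u of 2u^2 + (1/delta - 1) u - 1 = 0, with u = exp(-2 t_max / tau_m)."""
    b = tau_m * V_th**2 / D - 1.0
    u = 2.0 / (b + np.sqrt(b**2 + 8.0))            # algebraically equal to (-b + sqrt(b^2 + 8)) / 4
    return -0.5 * tau_m * np.log(u)

V_th, tau_m, D = 20.0, 20.0, 0.74                  # Fig. 2 parameters (mV, ms, mV^2 ms)
dt = 0.01
t = np.arange(dt, 400.0, dt)                       # start at dt to avoid the 0/0 at t = 0
J = fpt_density(t, V_th, tau_m, D)
print("normalization:", J.sum() * dt)
print("numerical peak:", t[np.argmax(J)], "ms | quadratic root:", t_max(V_th, tau_m, D), "ms")
print("weak-noise approximation:", 0.5 * tau_m * np.log(tau_m * V_th**2 / D), "ms")
```

For these parameters the peak lies close to 93 ms, which is why an input arriving at t∗ = 100 ms in Fig. 2 counts as arriving near the peak.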

The transient signaling input modifies the probability density and first-passage time density

In this subsection, we extend the above analysis to the case in which signaling input is additionally applied to the neuron. The presence of the signaling input modifies P(V,t) and consequently J(V𝜃,t). To obtain a clear causal picture, we rewrite Eq. (4) as:

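$$\frac{\partial P}{\partial t} - \frac{\partial}{\partial V}\left[\frac{V-\mu}{\tau_m}\,P\right] - \frac{D}{\tau_m^2}\,\frac{\partial^2 P}{\partial V^2} = -\frac{\Delta I(t,t^*)}{\tau_m}\,\frac{\partial P}{\partial V} \tag{11}$$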

We assume that ΔI(t,t∗) corrects the threshold-regime solution P0(V,t) to P(V,t) = P0(V,t) + ΔP(V,t), where P0(V,t) is the analytical membrane potential density in the absence of the signaling input and ΔP(V,t) is the correction due to the signaling input. ΔP(V,t) would be zero if the signaling input did not exist, i.e. A = 0 (see Eq. (3)). For an arbitrary signaling input, ΔP(V,t) is a function of A, the signaling input's strength. This lets us write ΔP(V,t) as a Taylor series:

$$\Delta P(V,t) = \sum_{n=1}^{\infty} \delta P_n(V,t) \tag{12}$$

where δPn(V,t) ∝ Aⁿ. Since ΔP(V,t) vanishes for A = 0, the series has no A⁰ term; the zeroth-order part of P(V,t) is P0(V,t) itself, which we also denote δP0(V,t). We plug P(V,t) = P0(V,t) + Σn δPn(V,t) into Eq. (11) and, for each n, collect the terms proportional to Aⁿ on both sides as a separate equation. For n = 1 the equation reads:

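$$\frac{\partial\,\delta P_1}{\partial t} - \frac{\partial}{\partial V}\left[\frac{V-\mu}{\tau_m}\,\delta P_1\right] - \frac{D}{\tau_m^2}\,\frac{\partial^2 \delta P_1}{\partial V^2} = -\frac{\Delta I(t,t^*)}{\tau_m}\,\frac{\partial\,\delta P_0}{\partial V} \tag{13}$$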

This is the first-order perturbation equation, as both its sides are proportional to A¹. To address its boundary conditions, we note that ΔP(V,t) is zero before the specified signaling input arrives (i.e. t < t∗); consequently δP1(V,t < t∗) = 0. Moreover, the absorbing boundary condition at V = V𝜃 gives ΔP(V𝜃,t) = 0, from which we conclude that δP1(V𝜃,t) = 0. These let us use the aforementioned Green's function and write δP1(V,t) as a Green's integral over the source term, the right-hand side of Eq. (13):

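$$\delta P_1(V,t) = -\frac{1}{\tau_m}\int_0^t dt_0 \int_{-\infty}^{V_\theta} dV_0\; G(V,t;V_0,t_0)\,\Delta I(t_0,t^*)\,\frac{\partial P_0(V_0,t_0)}{\partial V_0} \tag{14}$$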

Equation (14) simplifies further if we note that ΔI(t0,t∗) is zero for t0 < t∗ or t0 > t∗ + Δt; thus the t0 in G(V,t;V0,t0) and ∂P0(V0,t0)/∂V0 always belongs to [t∗,t∗ + Δt], a short time interval. As Δt ≪ τm, the two functions are almost constant during this interval, and we approximate them by G(V,t;V0,t∗) and ∂P0(V0,t∗)/∂V0, respectively. This further simplifies Eq. (14):

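$$\delta P_1(V,t) \simeq -\frac{1}{\tau_m}\int_{-\infty}^{V_\theta} dV_0\; G(V,t;V_0,t^*)\,\frac{\partial P_0(V_0,t^*)}{\partial V_0}\int_{t^*}^{t} \Delta I(t_0,t^*)\,dt_0 \tag{15}$$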

Taking the time integral in Eq. (15), we have:

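$$\delta P_1(V,t) = -\frac{\mathcal{A}(t)}{\tau_m}\int_{-\infty}^{V_\theta} dV_0\; G(V,t;V_0,t^*)\,\frac{\partial P_0(V_0,t^*)}{\partial V_0} \tag{16}$$

where

$$\mathcal{A}(t) = \int_{t^*}^{t} \Delta I(t_0,t^*)\,dt_0 = \begin{cases} 0, & t < t^*,\\ A\,(t-t^*)/\Delta t, & t^* \le t \le t^* + \Delta t,\\ A, & t > t^* + \Delta t. \end{cases} \tag{17}$$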

For n ≥ 2, the nth-order perturbation equation has the same form as Eq. (13), with δP1(V,t) and δP0(V,t) replaced by δPn(V,t) and δPn−1(V,t). Applying the same steps recursively yields:

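$$\delta P_n(V,t) = \int_{-\infty}^{V_\theta} dV_0\; G(V,t;V_0,t^*)\,Q_n(V_0,t) \tag{18}$$

where

$$Q_n(V_0,t) = \frac{1}{n!}\left[-\frac{1}{\tau_m}\int_{t^*}^{t}\Delta I(t_0,t^*)\,dt_0\right]^{n}\frac{\partial^n P_0(V_0,t^*)}{\partial V_0^n} \tag{19}$$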

This helps us to calculate the series in Eq. (12) and obtain ΔP(V,t). For t > t∗ + Δt, for example, it reads:

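$$\Delta P(V,t) = \int_{-\infty}^{V_\theta} dV_0\; G(V,t;V_0,t^*)\left[\sum_{n=1}^{\infty}\frac{1}{n!}\left(-\frac{A}{\tau_m}\right)^{n}\frac{\partial^n P_0(V_0,t^*)}{\partial V_0^n}\right] \tag{20}$$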

The summation in brackets is the Taylor expansion of P0(V0 − A/τm,t∗) − P0(V0,t∗); thus for t > t∗ + Δt:

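$$\Delta P(V,t) = \int_{-\infty}^{V_\theta} dV_0\; G(V,t;V_0,t^*)\left[P_0\!\left(V_0 - \frac{A}{\tau_m},\,t^*\right) - P_0(V_0,t^*)\right] \tag{21}$$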
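The resummation step can be checked on a Gaussian, since at fixed t∗ the threshold-regime density P0(V0,t∗) is a difference of two Gaussians of equal width. The sketch below uses the standard identity that the nth derivative of the standard normal density φ is (−1)ⁿHeₙ(x)φ(x), with Heₙ the probabilists' Hermite polynomials (NumPy's `numpy.polynomial.hermite_e` module), and compares the truncated series with φ(x − a) − φ(x). The shift a plays the role of A/τm measured in units of the Gaussian width; its value and the helper names are illustrative.

```python
import math
import numpy as np
from numpy.polynomial.hermite_e import hermeval

def gaussian(x):
    """Standard normal density."""
    return np.exp(-x**2 / 2.0) / math.sqrt(2.0 * math.pi)

def taylor_shift_correction(x, a, n_terms=60):
    """Truncated sum over n >= 1 of (-a)^n / n! * d^n gaussian / dx^n, evaluated at x."""
    total = np.zeros_like(x, dtype=float)
    for n in range(1, n_terms):
        coeffs = np.zeros(n + 1)
        coeffs[n] = 1.0                                  # selects He_n in hermeval
        nth_derivative = (-1) ** n * hermeval(x, coeffs) * gaussian(x)
        total += (-a) ** n / math.factorial(n) * nth_derivative
    return total

x = np.linspace(-5.0, 5.0, 101)
a = 1.5                                                  # illustrative shift in units of the width
series = taylor_shift_correction(x, a)
closed_form = gaussian(x - a) - gaussian(x)              # the resummed bracket of Eq. (20)
print("max deviation:", np.max(np.abs(series - closed_form)))
```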

For t∗≤ t ≤ t∗ + Δt, a similar reasoning yields:

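$$\Delta P(V,t) = \int_{-\infty}^{V_\theta} dV_0\; G(V,t;V_0,t^*)\left[P_0\!\left(V_0 - \frac{A\,(t-t^*)}{\tau_m\,\Delta t},\,t^*\right) - P_0(V_0,t^*)\right] \tag{22}$$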

Evidently, δPn(V,t) = 0 for t < t∗. We also use the combination rule for propagators:

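$$\int_{-\infty}^{V_\theta} dV'\; G(V,t;V',t')\,G(V',t';V_0,t_0) = G(V,t;V_0,t_0), \qquad t_0 < t' < t \tag{23}$$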
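The combination rule can be verified numerically for the image-method propagator. Propagating directly from t = 0 to 90 ms should give the same value as propagating to 60 ms and then on to 90 ms, integrating over intermediate voltages below the absorbing line. This is a sketch that assumes the Gaussian (Ornstein-Uhlenbeck) form of the free propagator and the threshold regime μ = V𝜃 with the Fig. 2 parameters; the chosen times and voltages are arbitrary.

```python
import numpy as np

MU, TAU_M, D = 20.0, 20.0, 0.74    # threshold regime mu = V_theta (mV), ms, mV^2 ms

def green_image(V, t, V0, t0):
    """Image-method propagator G(V,t;V0,t0) with an absorbing line at V = MU."""
    r = np.exp(-(t - t0) / TAU_M)
    var = (D / TAU_M) * (1.0 - r**2)
    gauss = lambda src: np.exp(-(V - MU - (src - MU) * r) ** 2 / (2 * var)) / np.sqrt(2 * np.pi * var)
    return gauss(V0) - gauss(2 * MU - V0)   # source minus mirror source

V = 19.8                                    # final voltage (mV), below threshold
Vp = np.linspace(16.0, MU, 40001)           # intermediate voltages V' <= V_theta
dVp = Vp[1] - Vp[0]
direct = green_image(V, 90.0, 0.0, 0.0)     # one step: 0 ms -> 90 ms
two_step = np.sum(green_image(V, 90.0, Vp, 60.0) * green_image(Vp, 60.0, 0.0, 0.0)) * dVp
print("direct:", direct, "| via 60 ms:", two_step)
```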

The final result, P(V,t) = P0(V,t) + ΔP(V,t), simplifies as:

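$$P(V,t) = \begin{cases} P_0(V,t), & t < t^*,\\[4pt] \displaystyle\int_{-\infty}^{V_\theta} dV_0\; G(V,t;V_0,t^*)\,P_0\!\left(V_0 - \frac{A\,(t-t^*)}{\tau_m\,\Delta t},\,t^*\right), & t^* \le t \le t^* + \Delta t,\\[4pt] \displaystyle\int_{-\infty}^{V_\theta} dV_0\; G(V,t;V_0,t^*)\,P_0\!\left(V_0 - \frac{A}{\tau_m},\,t^*\right), & t > t^* + \Delta t. \end{cases} \tag{24}$$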

Finally, we use P(V,t) to obtain the corrected first-passage time density in the presence of the signaling input:

| 25 |

While our formalism is applicable to arbitrary values of the transient signaling input, here we focus on the effect of strong excitatory/inhibitory signaling input on the postsynaptic neuron's response. Figure 1c (top-left) shows how voltage trajectories increase almost uniformly during the arrival of an excitatory signaling input (i.e. t ∈ [t∗,t∗ + Δt]). The short duration of the signal (i.e. Δt ≪ τm) guarantees this uniform increase, which amounts to an overall rise of A/τm. Consequently, if the membrane potential of a particular trajectory is larger than V𝜃 − A/τm upon signal arrival, it passes the threshold during signal arrival and the neuron fires. If, on the other hand, it is smaller than V𝜃 − A/τm, the additional signaling input does not push the membrane potential across the threshold, and the neuron does not fire. This simple picture helps us to understand the results shown in Fig. 2.
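This picture can be quantified: the fraction of all trajectories that fire during the arrival of a short excitatory input is the mass of P0(V,t∗) between V𝜃 − A/τm and V𝜃. Assuming the threshold regime (mean input μ equal to V𝜃, all trajectories starting at V = 0), P0 is a Gaussian with mean V𝜃(1 − r) minus its mirror image with mean V𝜃(1 + r), both with variance (D/τm)(1 − r²), where r = exp(−t∗/τm), so this mass follows from the normal cumulative distribution function. The function below is a hypothetical helper, valid for excitatory inputs (A > 0), evaluated with the Fig. 2 parameters.

```python
import math

def fraction_fired_at_arrival(t_star, A, V_th, tau_m, D):
    """Mass of the threshold-regime density P0(V, t*) within A/tau_m below V_th (requires A > 0)."""
    r = math.exp(-t_star / tau_m)
    sd = math.sqrt((D / tau_m) * (1.0 - r * r))
    Phi = lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))    # standard normal CDF

    def cdf(c):
        """Integral of P0 from -infinity to c (for c <= V_th): Gaussian minus its mirror image."""
        return Phi((c - V_th * (1.0 - r)) / sd) - Phi((c - V_th * (1.0 + r)) / sd)

    return cdf(V_th) - cdf(V_th - A / tau_m)

for t_star in (50.0, 100.0):
    f = fraction_fired_at_arrival(t_star, A=10.0, V_th=20.0, tau_m=20.0, D=0.74)
    print(f"t* = {t_star:.0f} ms: fraction firing at arrival = {f:.3g}")
```

For t∗ = 50 ms the fraction is negligible, consistent with a mere leftward shift, whereas for t∗ = 100 ms nearly half of all trajectories (most of those not yet absorbed) fire at once, producing the sharp peak in Fig. 2a, right.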

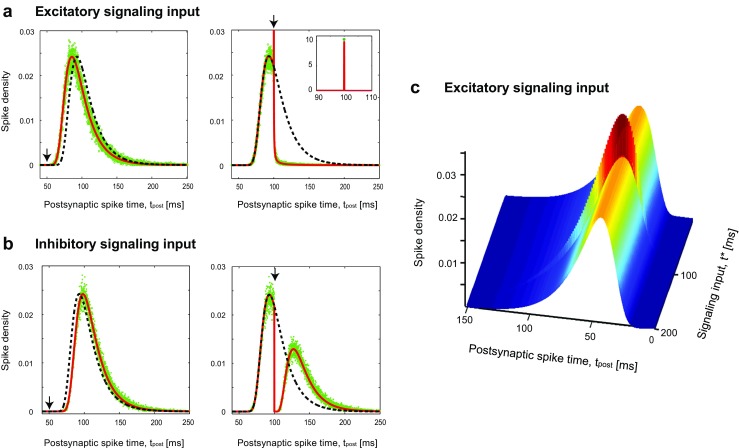

Fig. 2.

First-passage time density when the neuron receives signaling input and background noise in the threshold regime. The black vertical arrows show when the signaling input arrives; it changes the initial ISI distribution, shown as a dashed black curve, to the modified distribution, shown as a thick red curve. Even when the signaling input is remarkably strong, the analytic modified distribution matches the simulation results (empty green circles) well. a Left: An early excitatory signaling input at t∗ = 50 ms causes a leftward temporal shift. Right: A drastic change occurs if the signaling input arrives at t∗ = 100 ms, close enough to the peak of the initial spiking density. b Left: An inhibitory signaling input imposes a mere temporal shift, a delay, if it occurs early, t∗ = 50 ms. Right: If the inhibitory signaling input occurs close enough to the peak, t∗ = 100 ms, the spiking density almost divides into two distinct regions. c The first-passage time density as a function of the presynaptic spike timing, t∗, for small excitatory signaling inputs, A = 0.2 mV ms. The parameter values are chosen within the physiologically plausible range (McCormick et al. 1985): membrane time constant τm = 20 ms, A = 10 mV ms (panels a, b), threshold voltage V𝜃 = 20 mV, diffusion coefficient D = 0.74 mV² ms, and simulation time step Δt = 0.05 ms.

Figure 2a and b show that excitatory and inhibitory signaling inputs can result in quite different spiking behaviors, depending on their arrival time. The dashed black curve in all panels of Fig. 2a, b shows the first-passage time density in the absence of the signaling input, J0(V𝜃,t). In the left panels, the signaling input arrives at t∗ = 50 ms; it has A = 10 mV × ms, which increases or decreases the membrane potential by A/τm = 0.5 mV. In this case, the signaling input shifts the original density leftward for excitation and rightward for inhibition (thick red curves). In contrast, the right panels show the spiking density when the signaling input occurs at t∗ = 100 ms. This is close to tmax, at which J0(V𝜃,t) is maximized. Therefore, when the signal arrives, many trajectories are already close to the threshold potential (i.e., above V𝜃 − A/τm). All such trajectories spike because of the aforementioned excitatory rise of A/τm; this produces a sudden sharp increase of the first-passage time density at t = t∗ (see Fig. 2a, right, and its inset). In contrast, the inhibitory input prevents all trajectories from reaching the threshold, which produces a sharp depletion in the first-passage time density (Fig. 2b, right). The effect of the inhibitory input fades after a while and the trajectories again approach the threshold; this leads to a second rise of the first-passage time density (Fig. 2b, right). These theoretical predictions are confirmed by numerical simulations of the LIF model (green circles). It is also important to see what happens if the signaling input comes much later than tmax. Then most of the trajectories have already reached the threshold voltage and only a tiny portion of them remains, so the signaling input does not change J(V𝜃,t) much.
In other words, if the signaling input comes too late, the postsynaptic neuron has already fired, and the signal cannot change its first spike-time any more (Fig. 2c). This figure also demonstrates that the first-passage time density undergoes the maximum change when the excitatory input arrives around tmax, the peak time of no-signaling input case.
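The statement that a late signal has little left to act on can be made quantitative. Integrating the image-method density P0 over V ≤ V𝜃 gives the survival probability in closed form, S(t) = erf(V𝜃 r / √(2(D/τm)(1 − r²))) with r = exp(−t/τm), assuming the threshold regime (mean input equal to V𝜃) and a start at V = 0; J0(V𝜃,t) is then −dS/dt. The function name is hypothetical and the parameters are those of Fig. 2.

```python
import math

def survival_threshold_regime(t, V_th, tau_m, D):
    """Probability that a trajectory started at V = 0 has not reached V_th (= mean input) by time t."""
    if t <= 0:
        return 1.0
    r = math.exp(-t / tau_m)
    sd = math.sqrt((D / tau_m) * (1.0 - r * r))        # common width of the two image Gaussians
    return math.erf(V_th * r / (math.sqrt(2.0) * sd))

for t_star in (50.0, 100.0, 200.0):
    S = survival_threshold_regime(t_star, V_th=20.0, tau_m=20.0, D=0.74)
    print(f"t* = {t_star:5.0f} ms: fraction not yet fired = {S:.4f}")
```

Essentially no trajectory has fired by 50 ms, about half remain at 100 ms, and well under 1% remain at 200 ms, so an input arriving at 200 ms can modify only a tiny part of the first-passage time density.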

It is also important to note that there is a critical strength of the excitatory signal above which the postsynaptic neuron fires regardless of the signal arrival time. For a signal with A ≥ τmV𝜃, the aforementioned rise is at least V𝜃, which makes almost all trajectories spike, irrespective of the signal arrival time. This introduces another dimensionless ratio, A/(τmV𝜃), which quantifies the strength of the signaling input.
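For reference, the two dimensionless ratios can be evaluated for the parameters of Fig. 2. This small sketch (with a hypothetical helper name) also computes the critical amplitude τmV𝜃 discussed above.

```python
def scaled_ratios(A, D, V_th, tau_m):
    """Return (D/(tau_m V_th^2), A/(tau_m V_th)): scaled noise and scaled signal amplitude."""
    return D / (tau_m * V_th**2), A / (tau_m * V_th)

noise, signal = scaled_ratios(A=10.0, D=0.74, V_th=20.0, tau_m=20.0)   # Fig. 2 parameters
A_critical = 20.0 * 20.0          # tau_m * V_th (mV ms): above this, almost all trajectories fire on arrival
print(f"scaled noise = {noise:.3e}, scaled signal = {signal:.3f}, critical A = {A_critical} mV ms")
```

With A = 10 mV ms the scaled signal is 0.025, far below 1, so the input in Fig. 2 only triggers trajectories that are already close to threshold.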

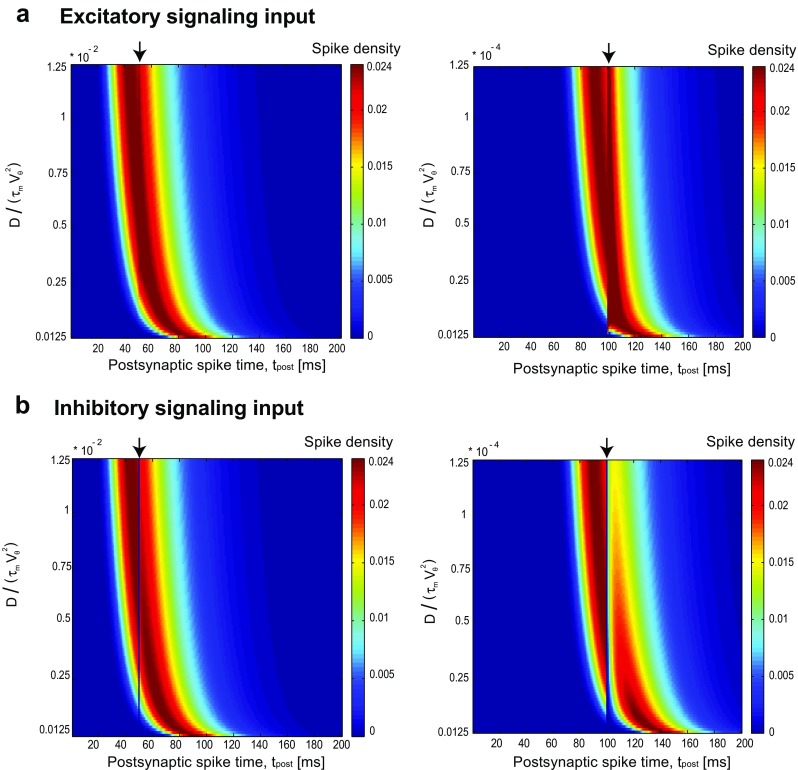

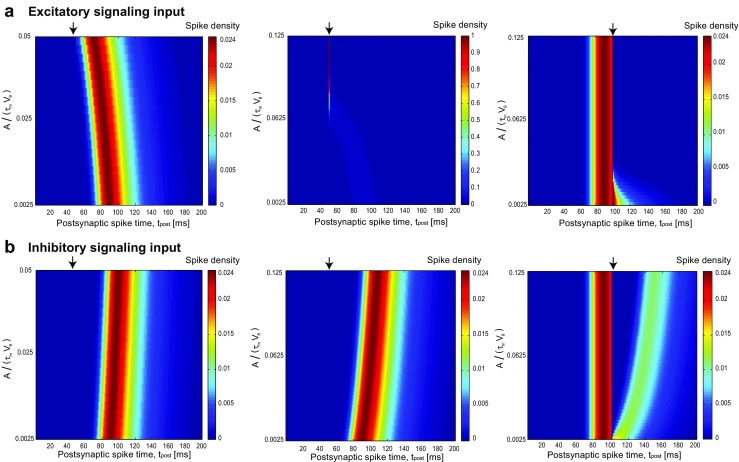

Figure 3 shows how the first-passage time density changes with the diffusion coefficient. When the scaled diffusion coefficient increases, the first-passage time density and tmax shift to the left, so that a signaling input arriving at 50 ms, before tmax, can modulate the density (Fig. 3a, left). When the signaling input arrives at t∗ = 100 ms, close to tmax, the first-passage time density is modulated in the low-diffusion regime (Fig. 3a, right). For an inhibitory presynaptic spike (Fig. 3b) and a high scaled diffusion coefficient, the first-passage time density decreases at the time of the presynaptic spike but, because of the strong diffusion, recovers quickly. As the scaled diffusion decreases, the recovery from inhibition takes longer, which separates the density into two distinct parts.

Fig. 3.

First-passage time density as a function of the scaled diffusion coefficient, D/(τmV𝜃²). a Excitatory signaling input: (Left) The signaling input occurs at t = 50 ms (black arrow). Varying the scaled diffusion modifies the overall shape of the ISI distribution. There is an apparent jump in the spiking density when D/(τmV𝜃²) ≃ 0.25 × 10⁻². This is because D/(τmV𝜃²) controls tmax, the peak time of the ISI distribution in the absence of the signaling input, and for D/(τmV𝜃²) ≃ 0.25 × 10⁻² tmax is close to the signal arrival time. For higher/lower values of the scaled diffusion coefficient, however, we see only minor modifications, because tmax is then sufficiently smaller/larger than the signaling input arrival time. (Right) The signaling input occurs at t = 100 ms; we chose much weaker diffusion coefficients, so that tmax is comparable to this arrival time. The modification is drastically larger than in the left panel, which shows that the influence of the signaling input is amplified for weaker diffusion (noise). b The same as in (a) but with an inhibitory signaling input. Reducing the scaled diffusion coefficient, from left to right, drastically amplifies the modification imposed by the signaling input. The values of the intrinsic parameters are chosen within the physiologically plausible range, V𝜃 = 20 mV and τm = 20 ms (McCormick et al. 1985). The amplitude of the signaling input is fixed at A = 1 mV ms.

The first-passage time density also depends on the scaled amplitude of the signaling input (Fig. 4). For an excitatory input arriving at a time t∗ much earlier than tmax, the density moves to the left as the amplitude increases (Fig. 4a, left), until the amplitude is large enough to make all trajectories spike at the arrival time (Fig. 4a, middle). When an inhibitory signaling input arrives near tmax, the density breaks into two parts (Fig. 4b, right). As the amplitude increases, the spiking density vanishes not only at the time of the presynaptic spike but also for a period afterwards, whose duration depends nonlinearly on the strength of the signaling input (Fig. 4b, right).

Fig. 4.

First-passage time density as a function of the scaled signaling input amplitude A/(τmV𝜃). a Excitatory signaling input: When the amplitude of the signaling input increases, the first-passage time density gradually shifts to the left (a, left); at sufficiently strong amplitudes, the density collapses into a single peak because the signaling input is strong enough to make all trajectories fire at the time of signal arrival (a, middle). The signaling input arrives at t∗ = 50 ms (left and middle) and at t∗ = 100 ms (right). b Inhibitory signaling input: The inhibitory signal arrives at t∗ = 50 ms (left and middle) and at t∗ = 100 ms (right). When the input arrival time is much less than tmax, increasing the amplitude of the inhibitory input just pushes the density to the right (b, left and middle). When t∗ is comparable to tmax, the first-passage time density breaks at the time of input arrival (b, right). As the amplitude increases, the recovery of the distribution from that break takes longer, and the distance between the separated parts increases nonlinearly. The signaling input arrival is shown by black arrows, and the diffusion coefficient is fixed at D = 1 mV² ms. The values of the intrinsic parameters (V𝜃 = 20 mV and τm = 20 ms) are chosen within the physiologically plausible range (McCormick et al. 1985).

Arbitrary shapes of the transient signaling input

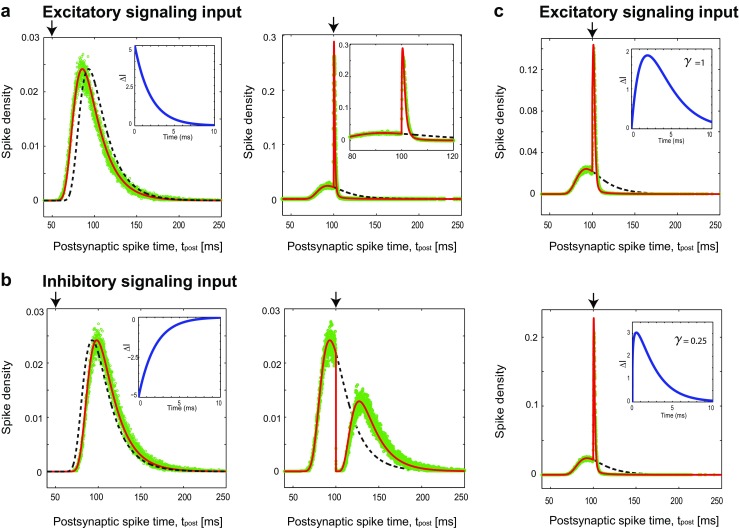

One merit of this formalism is its flexibility to address other shapes of the transient signaling input. We solve the first-passage time problem for the exponentially decaying input, which is more physiologically plausible (i.e. ΔI(t,t∗) ∝ exp(−(t − t∗)/τs), see Appendix II for the derivation and results). Figure 5a and b show how an exponential transient signaling input modifies the first-passage time density.

Fig. 5.

First-passage time density when the neuron receives an exponentially decaying signaling input and background noise in the threshold regime. The black vertical arrows show when the signaling input arrives; it changes the initial ISI distribution, shown as a dashed black curve, to the modified distribution, shown as a thick red curve. Even when the signaling input is remarkably strong, the analytic modified distribution matches the simulation results (empty green circles) well. a Left: An early excitatory signaling input at t∗ = 50 ms causes a leftward temporal shift. The result is very similar to the case of the square input (Fig. 2a, left). Right: A drastic change occurs if the signaling input arrives at t∗ = 100 ms, close enough to the peak of the initial spiking density. The change in density is smoother than in Fig. 2a, right. The inset shows the shape of the signaling input. b Left: An inhibitory signaling input imposes a mere temporal shift, a delay, if it occurs early, t∗ = 50 ms. Right: If the inhibitory signaling input occurs close enough to the peak, t∗ = 100 ms, the spiking density almost divides into two distinct regions. The inset shows the shape of the signaling input. c The first-passage time density when the signaling input is a gamma function with parameter γ = 1 (top) and γ = 0.25 (bottom). The parameter values are chosen within the physiologically plausible range (McCormick et al. 1985): membrane time constant τm = 20 ms, τs = 2 ms, A = 10 mV ms, threshold voltage V𝜃 = 20 mV, diffusion coefficient D = 0.74 mV² ms, and simulation time step Δt = 0.05 ms.

For early signal arrival, the modification due to excitatory (inhibitory) input is a leftward (rightward) shift, very similar to the changes we found for square input (Fig. 2a, b). For an excitatory input at t∗ = 100 ms (Fig. 5a right) there is a jump upon signal arrival; compared with the square excitatory input (Fig. 2a right), the jump is less sharp but wider. Similarly, the inhibitory exponential input at t∗ = 100 ms induces a fall (Fig. 5b right), similar to but less steep than the fall observed for the square inhibitory input (Fig. 2b right). Numerical simulations confirm these analytic results (green circles in Fig. 5a, b).
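The simulation procedure is not spelled out in this section. What follows is only a minimal Euler–Maruyama sketch of how such first-passage time histograms can be generated. It rests on three assumptions:

* Eq. (1) has the form τm dV/dt = −V + V𝜃 + ΔI(t,t∗) + noise, with the mean drive at threshold and reset Vr = 0.
* The white noise is scaled so that D = σ²τm/2.
* The exponential input is normalized as ΔI(t,t∗) = (A/τs) exp(−(t − t∗)/τs), so that a fast input shifts V by A/τm.

These forms and the function name `first_passage_times` are our assumptions. The default parameters follow the Fig. 5 caption.

```python
import numpy as np


def first_passage_times(A=10.0, t_star=100.0, tau_m=20.0, tau_s=2.0,
                        v_theta=20.0, D=0.74, dt=0.05, t_max=400.0,
                        n_trials=20000, seed=0):
    """Euler-Maruyama estimate of first-passage times (ms) of a noisy LIF neuron.

    Assumed (hypothetical) form of the dynamics:
        dV = (V_theta - V + dI(t)) / tau_m * dt + sqrt(2 D) / tau_m * dW,
    with dI(t) = (A / tau_s) * exp(-(t - t_star) / tau_s) for t >= t_star, so a
    fast input shifts V by A / tau_m. Units: ms, mV, D in mV^2 ms, A in mV ms.
    All trajectories start at the reset V_r = 0; NaN marks no spike before t_max.
    """
    rng = np.random.default_rng(seed)
    v = np.zeros(n_trials)
    fpt = np.full(n_trials, np.nan)
    alive = np.ones(n_trials, dtype=bool)
    noise_sd = np.sqrt(2.0 * D * dt) / tau_m        # per-step noise standard deviation
    for k in range(int(round(t_max / dt))):
        t = k * dt
        drive = v_theta                             # mean drive equal to threshold
        if t >= t_star:                             # transient signaling input
            drive += (A / tau_s) * np.exp(-(t - t_star) / tau_s)
        # Update every trajectory; already-absorbed ones are ignored below.
        v += (drive - v) * dt / tau_m + noise_sd * rng.standard_normal(n_trials)
        crossed = alive & (v >= v_theta)            # first threshold crossings
        fpt[crossed] = t + dt
        alive &= ~crossed
        if not alive.any():
            break
    return fpt
```

Compare a histogram of the finite entries of `first_passage_times(A=10.0)` (excitatory) or `first_passage_times(A=-10.0)` (inhibitory) with the unperturbed density from `A=0.0`. With the same seed the noise realizations are identical, so the differences come from the input alone.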

The analytical solutions for square and exponential input suggest a general formula for the probability density in the presence of an arbitrary input current, ΔI(t,t∗), arriving at t∗. If the duration of the signal is sufficiently shorter than the membrane time constant (i.e. τs ≪ τm), we propose the following probability density:

| 26 |

This conjecture covers our results for both the square input (i.e. Eq. (24)) and the exponential decay (i.e. Eq. (54) in Appendix II). We also test it by comparing the analytical result of first-passage time (obtained using Eq. (26)) with simulation results (Fig. 5c) using the Gamma function input current:

| 27 |

where Γ(n) is Euler’s Gamma function (Davis 1959). The signaling inputs are shown in the insets of Fig. 5c, top (bottom) for γ = 1 (γ = 0.25). The inputs arrive at t∗ = 100 ms and have a short duration, τs = 2 ms ≪ τm = 20 ms. Simulation and analytical results agree well. The method can also be extended to the case in which more than one presynaptic spike arrives; the derivation for multiple presynaptic spikes is given in Appendix III.

It is worthwhile to use Eq. (26) to obtain a general solution for the first-passage time density due to a transient signaling input of arbitrary shape. Using Eq. (8), the first-passage time density reads:

| 28 |

where ; it reads:

| 29 |

We use Eq. (7) for the probability density P0(V) and evaluate the integral in Eq. (28) (see Appendix IV). This yields an expression for the first-passage time density in the presence of an arbitrary signaling input (τs ≪ τm):

| 30 |

where and κ(t,t∗), ω(t,t∗) and φ±(t,t∗) are:

| 31 |

The first-passage time density, Eq. (30), is the probability density of the first spike of the postsynaptic neuron when the signaling input arrives at t∗. Here t∗ is the time elapsed from the last spike of the postsynaptic neuron to the signal arrival.

There are experimental studies in which the postsynaptic neuron’s spike times both before and after signal arrival are recorded (Blot et al. 2016). In these studies, t∗ is observed or assumed. For example, studies of spike-timing-dependent plasticity assume knowledge of the last postsynaptic spike (Froemke and Dan 2002; Wang et al. 2005). The timing of the last spike may also be learned by intrinsic mechanisms (Johansson et al. 2016; Jirenhed et al. 2017). In such cases, our formalism can be applied directly. Other experimental studies, however, do not take the timing of the last postsynaptic spike into account (Panzeri et al. 2014), and a downstream neuron may have no access to the neuron’s former spikes. In that case we must integrate over all possible values of t∗, a statistical procedure known as marginalization.

First spike-timing density after signaling input’s arrival

We observed that the timing of the signaling input relative to the last postsynaptic spike significantly affects the first-spiking density of the postsynaptic neuron. However, the postsynaptic neuron (as well as downstream neurons) may have no access to this elapsed time. If we monitor the arrival of a signaling input, it is more convenient to take the arrival time as the time origin. We therefore reset the time origin accordingly and ask for the probability density f(τ) that the postsynaptic neuron first fires at time τ after the signaling input arrives.

As a building block to address this question, we need the probability Pback(t∗) dt∗ that the last postsynaptic spike occurred in a time window between t∗ and t∗ + dt∗ before the signal arrival. In the language of voltage trajectories, this probability is computed by requiring two conditions:

(A) a trajectory began from Vr = 0 in this time window;
(B) it has not yet reached the threshold V = V𝜃.

The answer is the product of the probabilities associated with conditions (A) and (B):

| 32 |

where is the spike rate of the postsynaptic neuron in the absence of any signaling input and J0(V𝜃,s) is given by Eq. (9). Pback(t∗) is known as the density function of the backward recurrence time in point process theory (Cox 1962).

The next question is which portion of these trajectories reaches the threshold between τ and τ + dτ after signal arrival. This sets a temporal distance of t∗ + τ between the starting point of the trajectories at V = Vr = 0 and their spiking point at V = V𝜃. The answer is a conditional probability:

| 33 |

Note that J(V𝜃,τ + t∗) is given by Eq. (30), where the signaling input arrives at t∗, after the last postsynaptic spike. The denominator is a normalization term to achieve .

Having f(τ|t∗) and Pback(t∗), we integrate over all possible values of the backward recurrence time t∗ to obtain f(τ):

| 34 |

Equation (34) gives the probability density of the first-spike timing after input arrival. The time of input arrival (stimulus onset) must be available to the readout through some mechanism in the cortex (Van Rullen et al. 2005; Panzeri et al. 2014).

It is worth seeing how Pback(t∗) connects us to the existing stationary solution for the membrane potential (Brunel and Hakim 1999; Brunel 2000). We seek the probability of finding the membrane potential between V0 and V0 + dV0 at an arbitrary observation time, and we split this task into two questions:

1. What is the probability that the last postsynaptic spike happened in a time window t∗ to t∗ + dt∗ before the observation time?
2. What is the conditional probability that voltage trajectories initiated t∗ before the observation time have a potential V ∈ [V0,V0 + dV0] at the time of observation?

The answer to the first question is simply the probability density of the backward recurrence time. The answer to the second is a conditional probability:

| 35 |

P0(V,t∗) is given by Eq. (7). The denominator is a normalizing factor to ensure: . It has the same origin as the denominator in Eq. (33); in fact, it is easy to verify that the two denominators are equal, due to the conservation of probability: . Combining the answers to the two questions, we obtain the stationary probability density:

| 36 |

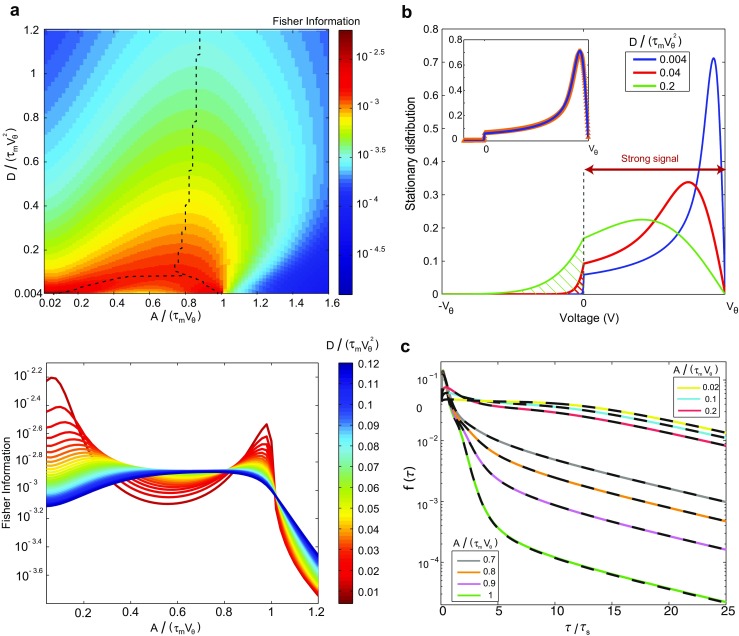

Figure 6b (inset) shows that Ps(V0) coincides with the existing stationary solution found by Brunel and Hakim (Brunel and Hakim 1999; Brunel 2000). To use their solution, we simply set the mean input current equal to the threshold potential, .
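This coincidence can be checked numerically. The sketch works in scaled units (V𝜃 = 1, τm = 1, Vr = 0) and makes two assumptions:

* Eq. (7) is the image-method density of an Ornstein–Uhlenbeck process whose mean drive equals the threshold: a free Gaussian from the reset minus its mirror image about V𝜃.
* The noise maps as s = σ/V𝜃 = (2D/(τmV𝜃²))^{1/2}, with σ as in D = σ²τm/2.

Equation (36) then gives Ps(v) ∝ ∫ P0(v,t) dt, and the sketch compares its shape with the Brunel–Hakim formula. The function names are hypothetical.

```python
import numpy as np


def _trap(y, x):
    """Trapezoid integral of samples y(x)."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def _gauss(v, mean, var):
    return np.exp(-(v - mean) ** 2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)


def image_density(v, t, s):
    """Assumed form of P0(v, t): free OU density from the reset v = 0 minus its image at v = 2."""
    var = 0.5 * s**2 * (1.0 - np.exp(-2.0 * t))
    return _gauss(v, 1.0 - np.exp(-t), var) - _gauss(v, 1.0 + np.exp(-t), var)


def ps_renewal(v, s, t_max=40.0, n_t=200001):
    """Unnormalized Ps(v) ~ int_0^inf P0(v, t) dt, i.e. Eq. (36) up to the factor nu0."""
    t = np.linspace(1e-6, t_max, n_t)
    return _trap(image_density(v, t, s), t)


def ps_brunel_hakim(v, s, n_u=200001):
    """Unnormalized Brunel-Hakim density with mean drive at threshold and reset at 0."""
    w = (v - 1.0) / s
    u = np.linspace(max(w, -1.0 / s), 0.0, n_u)
    return _trap(np.exp(u**2 - w**2), u)   # exponent <= 0 on this range: no overflow
```

Ratios such as `ps_renewal(v, s) / ps_renewal(0.6, s)` and `ps_brunel_hakim(v, s) / ps_brunel_hakim(0.6, s)` should agree, both above and below the reset.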

Fig. 6.

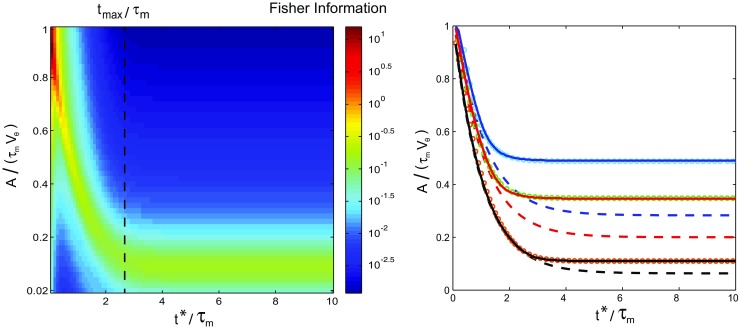

a, Top: Fisher information with respect to the amplitude of the signaling input, in logarithmic scale, as a function of the scaled amplitude, A/(τmV𝜃), and the scaled diffusion coefficient, D/(τmV𝜃²). We use an exponentially decaying signaling input with τs = 1 ms (see Eq. (46)). At high noise levels, the Fisher information has a single maximum at a strong amplitude. Below a specific noise level, it splits into two maxima, at strong and at weak amplitudes. The dashed black line marks the maxima of the Fisher information. Notably, the Fisher information does not decrease monotonically with the noise level: except for A/(τmV𝜃) ≃ 1 and A/(τmV𝜃) < 0.1, the Fisher information is maximized at a certain noise level (stochastic resonance). Bottom: in the low-noise regime, D/(τmV𝜃²) ≤ 0.088, the Fisher information is maximized either by small signaling-input amplitudes, A/(τmV𝜃) < 0.3, or by strong amplitudes, A/(τmV𝜃) ≃ 1. The two maxima approach each other as the diffusion coefficient increases and merge into a single maximum for D/(τmV𝜃²) > 0.088. This single maximum is robust to changes of the amplitude over a wide range. b Stationary distribution of the membrane potential for different scaled diffusion coefficients. When a strong input arrives at low diffusion, nearly all trajectories reach the threshold and only a small portion of them remains (hatched red area). Inset: comparison between the stationary distributions from Eqs. (36) and (77) (Brunel and Hakim 1999; Brunel 2000). c f(τ) in logarithmic scale for weak and strong signaling inputs. The solid colored lines are obtained using Eq. (34), while the black dashed lines are obtained using Eq. (37). The coincidence shows that the two equations produce the same result for the same signaling-input amplitude. The scaled noise level is D/(τmV𝜃²) = 0.04

Ps(V0) provides an alternative route to f(τ). It gives the probability density that the postsynaptic neuron has a membrane potential V = V0 upon signal arrival (i.e. t = t∗). We have also obtained how the probability density evolves after signal arrival (i.e. t > t∗) for a square (see Eq. (24)) or exponential (see Eq. (55)) signaling input. Note that the framework leading to Eq. (24) or Eq. (55) does not depend on the initial choice of P0(V0,t) or Ps(V0). Consequently, to determine the first-spiking density without prior knowledge of the last postsynaptic spike, we simply replace P0(V0,t∗) with Ps(V0) (see Appendix V). This lets us follow our suggestion for J(V𝜃,t) in the presence of an arbitrary transient input, Eq. (30), and obtain f(τ) accordingly:

| 37 |

where is given by Eq. (29). In Fig. 6c, we depict f(τ) using both Eq. (37) (dashed lines) and Eq. (34) (full lines). The two sets of curves coincide, which shows that the two approaches are consistent. Each approach has its own advantage. Eq. (34) has just one temporal integral, and J(V𝜃,τ) is already simplified in Eq. (30), so it is computationally easier and faster to work with. At first glance, Eq. (37) also has a single temporal integral; however, computing the stationary solution Ps(V) requires another integral (see Eq. (36)). Consequently, Eq. (34) is faster to evaluate, but Eq. (37) provides more intuition about how f(τ) behaves.

Fisher information

The analytical first-spiking density after input arrival, Eq. (34), allows us to quantify the minimum error of any unbiased estimator that decodes properties of the signaling input, such as its amplitude (the input’s strength). By the Cramér–Rao inequality (Rao 1973), the inverse of the Fisher information is a lower bound on the variance of any unbiased estimator. Applied to the spike-timing density, maximizing the Fisher information minimizes this lower bound on the error of decoding an input parameter (e.g. the signal’s amplitude) from the spiking activity.

Spike timing of the postsynaptic neuron contains information that spike count does not carry (Rieke et al. 1999; van Vreeswijk 2001; Toyoizumi et al. 2006). Indeed, discarding spike-timing information (specifically first-spike timing) leads to a loss of information (Panzeri et al. 2001). Hence, we investigate the Fisher information of the spike timing with respect to the strength of the signaling input. In this scenario, the decoder must know the input arrival time and the first spike time after it. It has been argued that the input arrival time, serving as a time reference, may be provided by network oscillations or other mechanisms in cortical and sensory systems (Van Rullen et al. 2005; Panzeri et al. 2014). Here, for each noise level, we seek the signaling-input amplitudes that an optimal decoder can discriminate best.

The Fisher information is defined as:

| 38 |

where the expectation is taken with respect to f(τ) itself (see Eq. (34)). We assume an exponentially decaying signaling input (i.e. ΔI(t,0) ∝ exp(−t/τs); see Eq. (46) for details).
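Equation (38) can be evaluated numerically once f(τ) is available on a grid for neighboring amplitudes. The sketch below is a generic central-difference evaluation of the integral of (∂f/∂A)²/f over τ. The callable `density(tau, A)` is a hypothetical stand-in for Eq. (34). The self-check uses a toy exponential density whose Fisher information with respect to its rate λ is 1/λ².

```python
import numpy as np


def _trap(y, x):
    """Trapezoid integral of samples y(x)."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def fisher_information(density, A, tau, dA=1e-4):
    """Central-difference estimate of FI(A) = int (df/dA)^2 / f dtau, cf. Eq. (38).

    density(tau, A) must return the normalized first-spike density on the grid tau.
    """
    f = density(tau, A)
    df = (density(tau, A + dA) - density(tau, A - dA)) / (2.0 * dA)
    integrand = np.zeros_like(f)
    positive = f > 0                         # skip points where the density vanishes
    integrand[positive] = df[positive] ** 2 / f[positive]
    return _trap(integrand, tau)


def exponential_density(tau, lam):
    """Toy density lam * exp(-lam * tau); its Fisher information w.r.t. lam is 1 / lam**2."""
    return lam * np.exp(-lam * tau)
```

By the Cramér–Rao bound, 1/FI(A) then bounds from below the variance of any unbiased estimate of A obtained from the first-spike time.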

Figure 6a (top) shows the Fisher information as a function of two scaled variables: amplitude, A/(τmV𝜃), and noise level, D/(τmV𝜃²). The dashed black lines mark the local maxima. At high noise levels (D/(τmV𝜃²) > 0.088), the Fisher information is maximized at a single amplitude. This single maximum, however, splits into two as the noise decreases. Figure 6a (bottom) depicts the Fisher information as a function of the signal’s amplitude for certain values of the noise level. The dark-red curve, which lies in the low-noise regime D/(τmV𝜃²) ≤ 0.088, has two distinct maxima. The two peaks go down and approach each other as the noise level increases; they finally merge into one peak for D/(τmV𝜃²) > 0.088, shown by the blue and dark-blue curves.

The two maxima that appear in the low-noise regime show that noise plays a major role in optimal decoding. In contrast to the high-noise case, the best discrimination at low noise occurs for two kinds of input strength. The crucial role of noise has also been studied in the context of mutual information, where the maximally informative solutions for a neural population split into two as the noise level decreases (Kastner et al. 2015). Figure 6a shows two branches for the maximum of the Fisher information. The left branch indicates that the maximizing amplitude diminishes as noise decreases. A similar behavior has been observed in an extension of the perfect integrate-and-fire model (Levakova et al. 2016); however, that study found a single maximum rather than two. The existence of the second maximum, due to strong signaling input, is therefore less expected and needs more exploration. Here, we suggest a heuristic argument that intuitively explains the second peak for strong amplitudes at low noise levels.

The Fisher information (Eq. (38)) is an integral over τ. We may expect that maximizing its integrand with respect to A, over certain domains of τ, maximizes the whole integral. The integrand is a fraction with the square of ∂f(τ)/∂A in its numerator and f(τ) in its denominator. Figure 6c shows, in logarithmic scale, how f(τ) changes as A increases. For large signal amplitudes, as A/(τmV𝜃) varies from 0.7 to 1, f(τ) does not change significantly in the domain 0 ≤ τ ≲ 2.5τs. By contrast, for 2.5τs < τ, f(τ) decreases significantly: increasing A/(τmV𝜃) by a constant step of 0.1 always shifts f(τ) markedly downward. In logarithmic scale, the shift in the last step (A/(τmV𝜃): 0.9 → 1) appears almost twice as large as the shift in the previous step. This picture suggests that as A/(τmV𝜃) → 1, f(τ) decreases drastically, whereas ∂f(τ)/∂A remains finite. The integrand therefore grows, and so does the integral over the 2.5τs < τ domain.

Returning to the picture of voltage trajectories, we can further understand this trend. A large transient signaling input boosts all trajectories by an upward shift of A/τm. Consequently, trajectories that are closer to the threshold than A/τm upon signal arrival fire immediately. What remains to fire afterward are the trajectories that were below V𝜃 − A/τm upon signal arrival. This picture lets us introduce two distinct contributions to f(τ), during and after signal arrival. The first measures the portion of trajectories that fire during signal arrival (e.g. 0 < τ < 2.5τs). The second, Fafter(A), measures the portion that reaches the threshold after signal arrival. For the exponentially decaying input, this roughly implies:

| 39 |

Equation (39) does not fully determine how f(τ) behaves after signal arrival, but it hints at how f(τ) varies with A. Figure 6c lets us go one step further. It shows a linear tail of f(τ) in logarithmic scale, f(τ) ∝ exp(−ατ), and all tails show almost the same slope: . This decouples the dependence of f(τ) on A from its temporal dependence: f(τ) ∝ Fafter(A) × exp(−ατ). Consequently, we can estimate ∂f(τ)/∂A as (∂Fafter(A)/∂A) × exp(−ατ), where ∂Fafter(A)/∂A is simply −(1/τm)Ps(V𝜃 − A/τm). These points clarify how the integrand in Eq. (38) varies with A in the 2.5τs < τ domain. The integrand, and the whole integral, behave like:

| 40 |

The right side of Eq. (40) has a simple geometrical interpretation. Consider the green curve in Fig. 6b (D/(τmV𝜃²) = 0.2). The denominator of Eq. (40) equals the hatched area below the curve, while its numerator is simply the square of the height of the curve, Ps(V), at V = V𝜃 − A/τm. As A/τm → V𝜃, the height of the curve remains finite (i.e. Ps(0)), whereas the hatched area decreases to .

We now compare how the curve changes as the noise decreases from the green to the red curve (Fig. 6b), . The heights of the two curves at V = 0 differ by a factor of almost 2, so the numerator decreases by a factor of 1/2². The hatched area, however, decreases by a factor larger than 4. Hence the right side of Eq. (40) grows as the noise level decreases. This trend is much stronger as the noise level decreases further to ; the height decreases by a factor of 0.63, whereas the enclosed area shrinks to something hardly recognizable. In fact, one can show that the right side of Eq. (40) diverges like , as the noise level approaches zero. So the integral of the Fisher information over the after-arrival domain should diverge if both A/τm → V𝜃 and . This intuitive picture explains why the second peak arises for large amplitudes in the low-diffusion regime.

Figure 6a shows that for weak amplitudes (0.02 ≤ A/(τmV𝜃) < 0.1), the Fisher information decreases monotonically as the noise level increases. The same holds for A/(τmV𝜃) ≃ 1. For the remaining amplitudes, however, the Fisher information is a non-monotonic function of the noise level (see the dashed lines in Fig. 6a, which mark its maxima). A certain noise level maximizes the Fisher information (stochastic resonance) (Bulsara et al. 1991).

Finally, the two scaled parameters, diffusion and amplitude, can be related to measurements from neural data. The diffusion coefficient is related to the variance σ² of the noise distribution by D = σ²τm/2, so the scaled diffusion coefficient reads D/(τmV𝜃²) = σ²/(2V𝜃²). If the noise variance, which differs between in vivo and in vitro neurons, is known, the corresponding scaled diffusion coefficient in Fig. 6a can be found. The scaled amplitude, A/(τmV𝜃), likewise reflects the size of the excitatory postsynaptic potential (EPSP), which is available from various experimental studies (Shadlen and Newsome 1994; Song et al. 2005; Lefort et al. 2009; Cossell et al. 2015).
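As a worked example of this mapping, the helper below converts a noise amplitude σ and an EPSP size into the two axes of Fig. 6a. It assumes D = σ²τm/2, as stated above. It also assumes that for τs ≪ τm the EPSP peak is approximately A/τm (the upward shift used in the heuristic argument above), so that A/(τmV𝜃) ≈ EPSP/V𝜃. `scaled_parameters` is a hypothetical name.

```python
def scaled_parameters(sigma_mV, epsp_mV, v_theta_mV):
    """Map measured quantities onto the axes of Fig. 6a (hypothetical helper).

    Assumes D = sigma^2 tau_m / 2, so D / (tau_m V_theta^2) = sigma^2 / (2 V_theta^2),
    and an EPSP peak of about A / tau_m, so A / (tau_m V_theta) ~ EPSP / V_theta.
    tau_m cancels in both ratios.
    """
    scaled_D = sigma_mV**2 / (2.0 * v_theta_mV**2)
    scaled_A = epsp_mV / v_theta_mV
    return scaled_D, scaled_A
```

For instance, σ = 4 mV, an EPSP of 2 mV and V𝜃 = 20 mV give D/(τmV𝜃²) = 0.02 and A/(τmV𝜃) = 0.1.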

Discussion

In this study, we analytically derived the statistical input-output relation of a LIF neuron receiving a transient signaling input on top of noisy balanced inputs. We derived the first-passage time density of the neuron when it receives the signal at the threshold regime. We examined a simple square input signal and then extended the analysis to more physiologically plausible signaling inputs. Our predictions agree well with simulations, which shows that the model applies to more realistic signaling-input shapes. The first-passage time density is a function of the scaled diffusion coefficient and the scaled amplitude of the signaling input. It also depends on the arrival time of the signaling input, measured from the last postsynaptic spike. We further made the analysis independent of knowledge of the last postsynaptic spike by marginalizing over all possible last-spike times relative to input arrival. Based on the analytic expression for the first-spiking density after input arrival, we examined the Fisher information with respect to the signaling input’s amplitude (efficacy). The result reveals that, for each noise level, there are specific signaling-input amplitudes at which decoding is most accurate.

Here, we investigated the LIF neuron model (Stein 1965). Many studies have shown that extended models, such as the adaptive exponential integrate-and-fire (aEIF) model, better explain neural properties (Izhikevich 2004; Ostojic and Brunel 2011). Nevertheless, the LIF neuron model can capture the properties of cortical pyramidal neurons (Rauch et al. 2003; La Camera et al. 2004; Jolivet et al. 2008), and it remains widely studied because it is analytically tractable (Burkitt 2006a, 2006b).

Previous studies of the LIF neuron model have already sought analytical solutions for the first-passage time density or firing rate in the presence of a signaling input in a noisy balanced environment. Bulsara et al. studied the effect of a small oscillatory input on the first-passage time problem, using the image method to solve the Fokker-Planck equation (Bulsara et al. 1996). Several studies have also investigated analytically, within the Fokker-Planck formalism, the linear response of the LIF neuron to oscillatory input and the change of firing rate starting from the stationary distribution (Brunel and Hakim 1999; Brunel et al. 2001; Lindner and Schimansky-Geier 2001). Richardson and Swarbrick provided analytical results, to linear order, for the firing-rate modulation of a neuron receiving excitatory and inhibitory synaptic jumps drawn from an exponential distribution (Richardson and Swarbrick 2010). In addition, some studies investigated the effect of a transient input current (Herrmann and Gerstner 2001; Helias et al. 2010). Herrmann and Gerstner showed how the post-stimulus time histogram is changed by a transient input signal on top of noise, using the escape rate model and hazard function. They provided a numerical solution for the full model, whereas their analytical result holds only to first order. Moreover, Helias and coworkers (Helias et al. 2010) derived, to linear order, the effect of a delta input kick in the Fokker-Planck equation on the firing rate of the postsynaptic neuron. They assumed a steady-state distribution for finite synaptic amplitudes prior to input arrival.

Here, we analytically solved the first-passage time density for the LIF neuron receiving a transient signaling input of arbitrary amplitude and shape on top of Gaussian background noise. The solution, however, rests on the assumption that the mean input drive equals, or is at least near, the threshold potential (see Appendix I). Further, we assumed that the synaptic time constant is considerably smaller than the membrane time constant. Within these limitations, we can analytically obtain various features of the LIF neuron’s spiking density.

Our framework is initially conditioned on knowledge of the last postsynaptic spike. This is useful for experimental studies in which the last postsynaptic spike is assumed known (Froemke and Dan 2002; Wang et al. 2005; Blot et al. 2016) or in which the neuron may learn to time sequential responses (Johansson et al. 2016; Jirenhed et al. 2017). Moreover, using the backward recurrence time distribution, we obtained the first-spiking density after input arrival, which does not depend on the last postsynaptic spike (see Eq. (34)). It can be used in experiments in which knowledge of the last postsynaptic spike is not considered and only the time of input arrival serves as a reference signal for readout by downstream neurons (Van Rullen et al. 2005; Panzeri et al. 2014). We also showed that the marginalized spiking density of Eq. (34) can be reached by another approach (see Eq. (37)), which assumes a stationary distribution at the time of input arrival and uses the Green’s function from Eq. (6). Eq. (34) has just one integral over time and is computationally faster. Eq. (37), in turn, gives intuition about the spiking density after input arrival, which we used in the Fisher information section to explain the second maximum observed in the low-noise regime.

In this study, we modeled the background synaptic activity as white Gaussian noise. This is an oversimplified view of the background activity. For example, background synaptic inputs to neurons may be described as shot noise (Richardson and Swarbrick 2010; Helias et al. 2013) and are synaptically filtered (Brunel and Sergi 1998; Moreno-Bote and Parga 2010). They can also be modeled as temporally correlated (colored) noise because of the long time scales of NMDA- and GABAB-mediated currents (Lerchner et al. 2006; Dummer et al. 2014; Ostojic 2014). Nevertheless, white Gaussian noise remains a reasonable assumption for short-lasting currents generated by AMPA and GABAA receptors (Destexhe et al. 1998). In fact, in the limit τs ≪ τm and with numerous small background synaptic amplitudes, the background activity is approximated by white Gaussian noise. Moreover, experiments have reported temporally uncorrelated noise in animals engaged in a task (Poulet and Petersen 2008; Tan et al. 2014). The noise, however, becomes correlated in anesthetized animals or during quiet wakefulness. Hence it would be important to extend the current formalism to non-Gaussian situations as well.

Moreover, our solution applies to neurons at the threshold regime, in which the membrane potential is very close to the threshold. In this regime, even a small fluctuation brings the membrane potential above the threshold voltage and makes the neuron fire. A recent experimental study (Tan et al. 2014) shows that this scenario holds when a stimulus is presented to the monkey. To check how the solution changes when the mean input deviates from the threshold voltage (sub- or supra-threshold), we also used a scaling approach and compared it with simulations (Appendix I). The results show that, with the scaling relation we introduced, we can go beyond the threshold spiking density to near sub- and supra-threshold densities. This extends our solution to these more plausible regimes.

Finally, we calculated the Fisher information based on spike timing rather than rate or spike count (Rieke et al. 1999; van Vreeswijk 2001; Toyoizumi et al. 2006). The precise spike timing of the postsynaptic neuron relative to signaling-input arrival carries information that may be lost or reduced in spike-count methods, in which spikes (responses) are summed over long time windows (Panzeri et al. 2010). As one application of the first-spiking density, we investigated the Fisher information and found the specific signaling-input amplitudes that downstream neurons can distinguish most accurately. To maximize decoding accuracy at low noise levels, neurons have two choices for their synaptic strength, one weak and one strong amplitude. At higher noise levels, a single strong amplitude maximizes the Fisher information, and this maximum is robust to changes of the amplitude over a wide range (Fig. 6a bottom, blue curves). This effect may be advantageous for neural decoding and learning: strong amplitudes, which might result from causal Hebbian learning (Dan and Poo 2004), can be discriminated most accurately by downstream neurons, even in the low-noise regime. We also revisited stochastic resonance (Douglass et al. 1993; McDonnell and Ward 2011; Teramae et al. 2012; Ikegaya et al. 2013; Levakova et al. 2016). The Fisher information does not behave monotonically with the noise level; instead, for a wide range of signaling-input amplitudes it is maximized at a certain finite noise level (Fig. 6a).

The input-output relation of a single neuron embedded in a network is the building block of the neural activity underlying learning, cognition and behavior. Strong synaptic inputs are pervasive in the neural system (Song et al. 2005; Lefort et al. 2009; Ikegaya et al. 2013; Buzsáki and Mizuseki 2014; Cossell et al. 2015). An analytic solution that captures the effect of strong transient signaling inputs can therefore be widely used to predict the complex activity of networks (Herz et al. 2006). Given that the LIF model describes spike timing with adequate accuracy, the analytic solution presented here is expected to facilitate theoretical investigation of information processing in neural systems.

Acknowledgements

H.S. thanks S. Koyama and R. Kobayashi for valuable discussions; S.RS and SN.R acknowledge T. Fukai’s kind support and are grateful to him and H. Maboudi for valuable discussions.

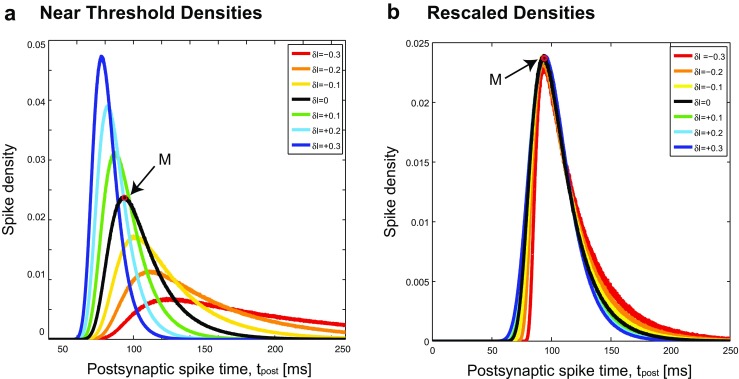

Appendix I: Spiking density near the threshold regime

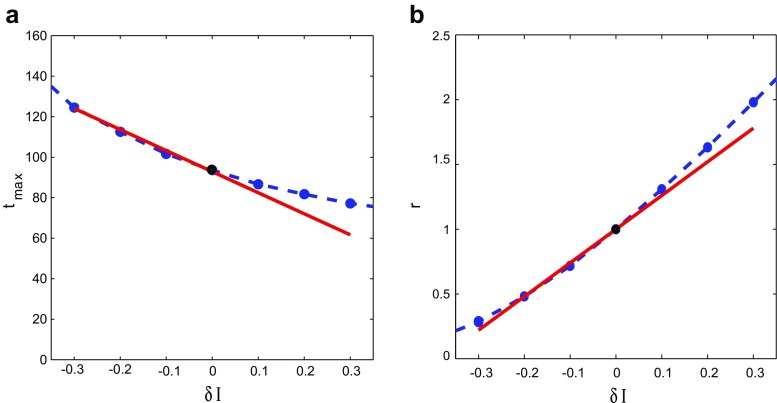

The image-method solution presented above is valid exclusively for the threshold regime, in which the drive current equals the threshold voltage (i.e. ). In the ubiquitous near-threshold regime, however, the drive current is close, but not exactly equal, to the threshold voltage, . We therefore extend our formalism to address this situation. Figure 7a depicts the first-passage time density for various values of δI. In Fig. 7b, we have rescaled all these curves; all of them collapse onto the analytic solution at the threshold (δI = 0).

Fig. 7.

Rescaling the first-passage time densities of different supra-threshold and sub-threshold currents near the threshold. a First-passage time densities for different δI from simulations. Negative δI denotes sub-threshold currents and positive δI supra-threshold currents. Moving further from the threshold regime toward supra-threshold currents, the spiking density becomes sharper and more symmetric. For sub-threshold currents, moving further from the threshold current makes the density more asymmetric, with a long tail, and shifts the peak to the right. The black curve is the density at the threshold regime (δI = 0). The red dot (M) marks the maximum of the threshold density, which is the reference point for scaling the densities. b Collapse of all the rescaled supra-threshold and sub-threshold spiking densities onto the analytic spiking density at the threshold. Each density is rescaled according to Eq. (41). Each curve is obtained from simulations of 10¹¹ runs to yield a smooth graph. The parameters used are τm = 20 ms, V𝜃 = 20 mV, D = 0.74 mV² ms and δI = −0.3, −0.2, −0.1, 0, 0.1, 0.2, 0.3. The simulation time step is 0.05 ms

The scaling is simply based on transferring the maximum point of each curve to point M, the maximum of the threshold curve (red dot in Fig. 7a). We locate tmax(δI) and Jmax(δI), the time and value of the maximum of the spiking density for a given δI. We compute the ratio between Jmax(δI) and the maximum of the threshold curve: r = Jmax(δI)/Jmax(0). Each curve in Fig. 7a is transferred to its corresponding curve in Fig. 7b according to:

| 41 |

The second row states that the height of the curve is rescaled so that its maximum equals the maximum height of the threshold solution. Similarly, the first row shifts the occurrence time of the maximum. Moreover, it scales the width of the curve inversely to its height. This guarantees that the area below the curve does not change but remains normalized to one. After scaling, the curves collapse onto the analytic solution. Therefore we can use the analytic solution in reverse to find the spiking density for the near-threshold curves:

| 42 |

Thus, if we know the analytic expressions for r and tmax(δI), we can easily obtain the spiking density for the near-threshold curves from the analytic threshold density. If and δI ≪ V𝜃, we can find the analytic expressions for r and tmax(δI) to first order in δI:

| 43 |

and

| 44 |

Figure 8 shows that the linear analytic expressions (Eqs. (43) and (44)) agree well with the simulation data, especially in the sub-threshold regime.

Fig. 8.

Comparing simulations with our analytical scaling relations (Eqs. (43) and (44)). a tmax(δI) as a function of the deviation of the drive current from threshold (δI), from simulations (blue dots and blue dashed line), compared with the analytical scaling relation (red line, Eq. (44)). Simulation and analysis agree well, especially in the sub-threshold regime. b The ratio r between Jmax(δI) and the maximum of the threshold curve as a function of δI, for both simulation and analysis. The analytical result for r (Eq. (43), red line) also agrees well with the simulations (blue dots and blue dashed line), especially for sub-threshold currents. The black dot in each graph shows the result at the threshold regime (δI = 0). The parameters are τm = 20 ms, V𝜃 = 20 mV, D = 0.74 mV² ms and δI = −0.3, −0.2, −0.1, 0, 0.1, 0.2, 0.3. The simulation time step is 0.05 ms and each point is obtained from 10^11 runs.
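The simulated curves in Figs. 7 and 8, and the peak quantities tmax(δI), Jmax(δI) and r used for the rescaling, can be estimated along the following lines. This is a minimal Python/NumPy sketch, not the authors' simulation code. It assumes that Eq. (1) has the form τm dV/dt = −V + μ + ξ(t) with ⟨ξ(t)ξ(t′)⟩ = 2Dδ(t − t′) and μ = V𝜃 + δI, which gives D the units mV² ms used in the captions. It further assumes that the reset and initial voltage are 0 and that the dynamics are integrated with the Euler-Maruyama scheme. The function names, the trial count and the histogram bin width are illustrative, and far fewer runs are used than reported in the captions, so the histograms are noisier.

```python
import numpy as np


def simulate_fpt(delta_I, tau_m=20.0, V_theta=20.0, D=0.74, dt=0.05,
                 n_trials=20_000, t_end=500.0, seed=0):
    """First-passage times (ms) of an LIF neuron started at V = 0.

    Assumed dynamics (hypothetical stand-in for Eq. (1)):
        tau_m dV/dt = -V + mu + xi(t),  <xi(t) xi(t')> = 2 D delta(t - t'),
    with mu = V_theta + delta_I. Trials that do not cross by t_end return NaN.
    """
    rng = np.random.default_rng(seed)
    mu = V_theta + delta_I
    noise_scale = np.sqrt(2.0 * D * dt) / tau_m   # std of the noise increment per step
    V = np.zeros(n_trials)
    fpt = np.full(n_trials, np.nan)
    alive = np.arange(n_trials)                   # indices of trials that have not fired yet
    for k in range(1, int(round(t_end / dt)) + 1):
        V[alive] += dt * (mu - V[alive]) / tau_m + noise_scale * rng.standard_normal(alive.size)
        fired = V[alive] >= V_theta
        fpt[alive[fired]] = k * dt                # record the crossing time
        alive = alive[~fired]
        if alive.size == 0:
            break
    return fpt


def density_peak(fpt, bin_width=1.0, t_end=500.0):
    """Histogram estimate of J(t); returns (t_max, J_max) at the highest bin."""
    edges = np.arange(0.0, t_end + bin_width, bin_width)
    counts, _ = np.histogram(fpt[~np.isnan(fpt)], bins=edges)
    J = counts / (fpt.size * bin_width)           # normalized by all trials
    k = int(np.argmax(J))
    return 0.5 * (edges[k] + edges[k + 1]), J[k]
```

For example, density_peak(simulate_fpt(-0.1))[1] / density_peak(simulate_fpt(0.0))[1] estimates r for δI = −0.1 under these assumptions.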

The idea behind these analytic relations (Eqs. (43) and (44)) is as follows. We set the threshold aside for a moment. Without noise, the voltage trajectory of Eq. (1) follows the deterministic solution Vdet. With white noise alone, the LIF voltage additionally deviates from Vdet by a noise term δVnoise. Borrowing ideas from Brownian-motion problems, we put forward a conjecture that we have not proven but that turns out to work well. To estimate when the trajectories, on average, reach the threshold, we consider the time at which Vdet + δVnoise = V𝜃:

| 45 |

where . Expanding Eq. (45) to first order in δI and assuming , we obtain Eq. (44). To find an analytical expression for r, we insert the free Green function (see Eq. (5)) into the first-passage time density J (see Eq. (8)) while . Expanding the result to first order in δI, for , yields Eq. (43).

Appendix II: Exponentially decaying function as signaling input

Here, we extend our method to a more physiologically plausible signaling input, an exponentially decaying function. We assume that the signaling input has the form:

| 46 |

where τs is the synaptic time constant and τs ≪ τm. For this signaling input current, the first-order result follows from solving Eq. (14). To take the time integral in Eq. (15), we use the same reasoning with the assumption τs ≪ τm, which reduces the result to Eq. (16), where f1(t,t∗) for t ≥ t∗ is:

| 47 |

and f1(t,t∗) = 0 for t < t∗. For the second- and higher-order perturbation terms, n ≥ 2, we obtain the same result as before (see Eq. (18)) but with a different fn(t,t∗) (see Appendix II-a):

| 48 |

To obtain the final result, we sum the terms over all n as in Eq. (12), which gives ΔP(V,t). For t > t∗, it reads:

| 49 |

where Q(V0,t,t∗) is:

| 50 |

Substituting fn(t,t∗) from Eq. (48) into Eq. (50), the first term in Eq. (50) simplifies to:

| 51 |

The second term of fn(t,t∗) in Eq. (50) leads to:

| 52 |

The remaining terms in Eq. (50) are calculated in the same way and summed, so that Q(V0,t,t∗) becomes:

| 53 |

Finally, inserting Eq. (53) into Eq. (49), the result P(V,t) = P0(V,t) + ΔP(V,t) simplifies to:

| 54 |

The whole signature of the input current is gathered in a single term, ; this term, however, is . Consequently, the result could also be written as:

| 55 |

The first-passage time density then follows directly by inserting Eq. (55) into Eq. (25):

| 56 |

where .

Appendix II-a

For an exponentially decaying signaling input, Eq. (46), the first-order term (see Eq. (15)) can be simplified by integrating ΔI(t,t∗) over t:

| 57 |

which leads to Eq. (16), where f1(t,t∗) is:

| 58 |

The integral of the current ΔI(t,t∗) for the second perturbation term is:

| 59 |

which leads to Eq. (18) for n = 2 with:

| 60 |

Going further, we can also find the integrals of the current for the third and fourth orders of perturbation. The third-order integral is:

| 61 |

where f3(t,t∗) is:

| 62 |

For the fourth order we have:

| 63 |

This also leads to Eq. (18) for n = 4 with:

| 64 |

Comparing Eqs. (58), (60), (62) and (64), the general form of fn(t,t∗) is:

| 65 |

Appendix III: Extending first-passage time density to multiple presynaptic spike times

If more than one presynaptic spike occurs after the reset time, the first-passage time density is simply a superposition of the single-input results, provided that the input spikes and their shapes do not interfere with each other. To illustrate, suppose that the first presynaptic spike arrives at t1∗ and the second at t2∗ (t2∗ > t1∗). After t1∗, the first-passage time density of the postsynaptic neuron is given by Eqs. (24) and (25), but after t2∗ it is:

| 66 |

where . The integral can be evaluated numerically.

Appendix IV: Analytic expression for first-passage time density

Here we calculate the analytic expression for the first-passage time density. Substituting Eq. (29) and P0 from Eq. (7) into Eq. (28) and changing the variable (V𝜃 − V0 = y), the first-passage time density is:

| 67 |

where γ(t,t∗), α(t − t∗), β(t∗) and S±(t,t∗) are:

| 68 |

For the first (or second) term, S±, the exponent in Eq. (67) can be written in quadratic form:

| 69 |

so that each of the two integral terms in Eq. (67) simplifies to:

| 70 |

By changing the variable , the integral over y in Eq. (70) can be evaluated:

| 71 |

Each of the integrals in Eq. (71) can be split into two terms, one integral from to 0 and the other from 0 to ∞:

| 72 |

The first, second and fourth integrals in Eq. (72) can be evaluated analytically, so the final result of Eq. (70) is:

| 73 |
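After the square is completed as in Eq. (69), integrals of this kind reduce to complementary error functions. Eqs. (70)-(73) are not reproduced here. As a hedged illustration, assuming that the relevant integrands are Gaussian factors exp(−a y² + b y) with a > 0, possibly multiplied by y, the two standard half-line identities are I0 = ∫0^∞ exp(−a y² + b y) dy = ½ √(π/a) exp(b²/(4a)) erfc(−b/(2√a)) and I1 = ∫0^∞ y exp(−a y² + b y) dy = (1 + b I0)/(2a). The sketch below codes both identities and includes a Simpson-rule check. The function names are hypothetical.

```python
import math


def gauss_half_line(a, b):
    """I0(a, b) = integral over y in [0, inf) of exp(-a*y**2 + b*y), a > 0.

    Completing the square, -a*y**2 + b*y = -a*(y - b/(2a))**2 + b**2/(4a),
    gives I0 = 0.5*sqrt(pi/a) * exp(b**2/(4a)) * erfc(-b/(2*sqrt(a))).
    For very large |b|/sqrt(a) a scaled erfc (erfcx) avoids overflow.
    """
    if a <= 0:
        raise ValueError("a must be positive for the integral to converge")
    return (0.5 * math.sqrt(math.pi / a) * math.exp(b * b / (4.0 * a))
            * math.erfc(-b / (2.0 * math.sqrt(a))))


def gauss_half_line_first_moment(a, b):
    """I1(a, b) = integral over y in [0, inf) of y*exp(-a*y**2 + b*y).

    Integrating d/dy exp(-a*y**2 + b*y) = (b - 2*a*y) * exp(...) over [0, inf)
    gives -1 = b*I0 - 2*a*I1, hence I1 = (1 + b*I0) / (2a).
    """
    return (1.0 + b * gauss_half_line(a, b)) / (2.0 * a)


def numeric_half_line(a, b, power=0, y_max=40.0, n=20_000):
    """Composite Simpson check of the same integrals on [0, y_max] (n even)."""
    h = y_max / n
    f = lambda y: y ** power * math.exp(-a * y * y + b * y)
    s = f(0.0) + f(y_max)
    s += 4.0 * sum(f((2 * i - 1) * h) for i in range(1, n // 2 + 1))
    s += 2.0 * sum(f(2 * i * h) for i in range(1, n // 2))
    return s * h / 3.0
```

For a = 1 and b = 0, gauss_half_line returns √π/2, the familiar half-Gaussian integral.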

We thus obtain the analytic expression for the first-passage time density, Eq. (67), for an arbitrary input shape (τs ≤ τm):

| 74 |

where γ = γ(t,t∗), α = α(t − t∗), β = β(t∗) and S± = S±(t,t∗) are given by Eq. (68).

To obtain a clearer picture, we combine the above definitions into new variables:

| 75 |

and the first-passage time density reads:

| 76 |

where .

Appendix V: Probability of spiking after input arrival starting from the stationary distribution

Before the input arrives at t = 0, the membrane potential of the neuron follows the stationary distribution (Brunel and Hakim 1999; Brunel 2000):

| 77 |

where Θ(V) is the Heaviside step function (Θ(V) = 1 for V > 0 and Θ(V) = 0 otherwise), λ is the mean firing rate, Vr is the resting voltage, which is zero here, and the mean current equals V𝜃. In the threshold regime, which is the case studied here, the stationary distribution is also given by Eq. (36). When the square signaling input (see Eq. (3)) arrives at the time origin, the probability density of the trajectories evolves as:

| 78 |

The first-passage time density, Eq. (25), at time τ, starting from the stationary distribution, describes the trajectories that reach the threshold at that time. It reads:

| 79 |

where is given by Eq. (29). In the limit Δt → 0, the square input approaches a delta function, δ(τ). When such a delta input arrives, every trajectory whose stationary-state potential lies within one input amplitude below the threshold is pushed across it and spikes. Eq. (79) then simply becomes:

| 80 |
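Eq. (80) states that a delta input fires every trajectory that lies within one input amplitude A of the threshold in the stationary state. This probability is therefore the stationary mass in [V𝜃 − A, V𝜃]. The hedged Python sketch below assumes that Eq. (77) is the Brunel-Hakim stationary density P(V) = (2λτm/σ) exp(−x²) ∫ from x to yθ of Θ(u − yr) e^{u²} du, with x = (V − μ)/σ, yθ = (V𝜃 − μ)/σ and yr = (Vr − μ)/σ. It also assumes the mapping σ = √(2D/τm) between the diffusion coefficient D and Brunel's σ, which is our assumption about the noise convention of Eq. (1). To avoid overflow of e^{u²}, the inner integral is evaluated through the Dawson function scipy.special.dawsn, and λ is fixed by normalization. As a cross-check, λ is compared with the standard Siegert rate formula. The function names and the grid step are illustrative.

```python
import numpy as np
from scipy.special import dawsn, erfcx


def stationary_lif_density(V_theta=20.0, V_r=0.0, mu=20.0, tau_m=20.0, D=0.74, dV=0.002):
    """Stationary LIF membrane-potential density (Brunel-Hakim form) on a grid.

    Assumes sigma = sqrt(2 D / tau_m) (hypothetical mapping of the paper's D).
    Returns (V, P, lam): P normalized on V <= V_theta, lam in 1/ms.
    """
    sigma = np.sqrt(2.0 * D / tau_m)
    y_th, y_r = (V_theta - mu) / sigma, (V_r - mu) / sigma
    V_lo = min(V_r, mu) - 10.0 * sigma
    V = np.linspace(V_lo, V_theta, int(round((V_theta - V_lo) / dV)) + 1)
    x = (V - mu) / sigma
    a = np.maximum(x, y_r)                        # Heaviside cut at the reset potential
    # g(x) = exp(-x^2) * int_a^{y_th} exp(u^2) du, using int_0^z exp(u^2) du = exp(z^2) * dawsn(z)
    g = np.exp(y_th**2 - x**2) * dawsn(y_th) - np.exp(a**2 - x**2) * dawsn(a)
    Z = np.sum(0.5 * (g[1:] + g[:-1]) * np.diff(V))   # trapezoid integral of g over V
    lam = sigma / (2.0 * tau_m * Z)               # normalization of (2 lam tau_m / sigma) * g
    return V, g / Z, lam


def spike_probability(V, P, A):
    """Stationary mass in [V_theta - A, V_theta]: immediate spike probability for a delta input of amplitude A."""
    seg = 0.5 * (P[1:] + P[:-1]) * np.diff(V)
    mass_above = np.concatenate([np.cumsum(seg[::-1])[::-1], [0.0]])  # int_{V_i}^{V_theta} P dV
    return float(np.interp(V[-1] - A, V, mass_above, left=mass_above[0]))


def lif_rate_siegert(V_theta=20.0, V_r=0.0, mu=20.0, tau_m=20.0, D=0.74, n=400_001):
    """Cross-check: 1/(lam*tau_m) = sqrt(pi) * int_{y_r}^{y_th} exp(u^2) (1 + erf u) du."""
    sigma = np.sqrt(2.0 * D / tau_m)
    u = np.linspace((V_r - mu) / sigma, (V_theta - mu) / sigma, n)
    f = erfcx(-u)                                  # exp(u^2) * (1 + erf u) without overflow
    integral = np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(u))
    return 1.0 / (tau_m * np.sqrt(np.pi) * integral)
```

The spike probability grows monotonically with A and saturates at one once the whole stationary density lies within one amplitude of the threshold.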

The form of Eq. (79) allows us to apply the suggestion made in Eq. (30) once more and to extend Eq. (79) to an arbitrary shape of signaling input:

| 81 |

Appendix VI: Fisher information when the last postsynaptic spike-time is known

Here, we suppose that the decoder has access to the last spike of the postsynaptic neuron, and we compute the Fisher information by substituting Eq. (28) for f(τ) in Eq. (38). This quantity lets us examine the sensitivity of the first-passage time to the amplitude of the signaling input when the precise timing of the input relative to the last postsynaptic spike is known. Figure 9 shows the Fisher information as a function of the signaling input's amplitude (A) and the presynaptic spike time (t∗). When t∗ occurs immediately after the last postsynaptic spike, a strong input amplitude is needed for the voltage to reach the threshold and generate a postsynaptic spike. Because the noise then has little effect on the voltage trajectory, the Fisher information is maximized. In contrast, when t∗ occurs with a delay, the noise and the mean driving current have already contributed to the voltage trajectory, and a smaller signaling input suffices to reach the threshold. This leads to a local maximum of the Fisher information. To understand which amplitude maximizes the Fisher information at each t∗, we present a simple picture. Roughly, the maximum of the Fisher information occurs where ∂J(V𝜃,t)/∂A is maximal. This corresponds to an A that is just strong enough to make the postsynaptic neuron fire immediately upon the presynaptic spike. However, if A is increased beyond this value, J(V𝜃,t) does not increase significantly, so ∂J(V𝜃,t)/∂A decreases or even vanishes for larger A. Such an A is an amplitude that brings most of the trajectories to the threshold. The trajectories, which correspond to the various possible states of the neuron, are spread around a mean trajectory, and the background noise widens this spread. Thus the amplitude that makes most of the trajectories spike should satisfy:

Fig. 9.

Fisher information with respect to the amplitude of the signaling input when the decoder has access to the last postsynaptic spike. Left: Fisher information on a logarithmic scale as a function of the scaled amplitude, A/(τmV𝜃), and the scaled presynaptic time, t∗/τm. The signaling input is the exponentially decaying function (Eq. (46), τs = 1 ms), and the diffusion coefficient is fixed at D/(τmV𝜃²) = 0.004. The Fisher information is maximized when the signaling input arrives immediately with a strong amplitude, A/(τmV𝜃) = 0.92, which makes the postsynaptic neuron fire immediately with minimal contribution from the noise. The dashed black line shows the time when . In the region, the Fisher information decreases monotonically. The later the signaling input arrives, the higher the amplitude needed to maximize the information; this maximum decreases monotonically with the arrival time. For , the maximizing amplitude and the Fisher information are constant and independent of t∗. Right: the maximizing scaled amplitude, A/(τmV𝜃), as a function of the normalized arrival time (t∗/τm) for different diffusion coefficients (D/(τmV𝜃²) = 0.004, 0.04, 0.08, corresponding to black, red and blue). The circles show the maximum of the Fisher information from Eq. (38). The dashed lines show Eq. (83), which matches well for , but deviates for . The solid lines show ∂²P0(V,t)/∂V² = 0 (see Eq. (7)), which agrees excellently with the circles.

| 82 |

which shows a relation between A and t∗:

| 83 |

The dashed lines in Fig. 9 show Eq. (83), which agrees well with the circles (the amplitude that maximizes the Fisher information as a function of t∗) as long as t∗ ≤ tmax. The threshold nonlinearity and the image method disturb this simple picture for t∗ > tmax, and the deviation between the dashed lines and the circles shows how the threshold complicates it. The solid lines are obtained by computing the voltage V that satisfies ∂²P0(V,t)/∂V² = 0 (see Eq. (7)). They agree very well with the location of the maximized Fisher information.

Figure 9 hints at why we observe two maxima in the Fisher information at low noise levels in Fig. 6. The left panel of Fig. 9 shows the Fisher information for a low scaled diffusion coefficient (D/(τmV𝜃²) = 0.004). There, the Fisher information is maximized at a stronger amplitude for earlier t∗ (t∗ ≤ tmax) but at a weaker amplitude for later t∗ (t∗ ≥ tmax). The two peaks in Fig. 6a for small diffusion coefficients likely arise because that result (see Eq. (38)) is obtained, roughly, by averaging the Fisher information of Fig. 9 over t∗ at each signaling-input amplitude.
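Appendix VI evaluates Eq. (38) using J from Eq. (28) as the density f(τ). As a hedged sketch, we assume that Eq. (38) is the standard Fisher information of a one-parameter density, I_F(A) = ∫ (∂f(τ; A)/∂A)² / f(τ; A) dτ. Under that assumption, the quantity can be evaluated numerically for any density supplied on a time grid. The function name, the central-difference step dA and the test densities are illustrative. J from Eq. (28) is not reproduced here, so a caller would have to supply it as the density argument.

```python
import numpy as np


def fisher_information(density, A, tau, dA=1e-4):
    """Fisher information I_F(A) = integral over tau of (df/dA)^2 / f.

    density(tau, A) must return f on the grid tau (e.g. a first-passage time
    density evaluated for input amplitude A). The A-derivative is a central
    difference and the tau-integral uses the trapezoid rule; grid points where
    f <= 0 are skipped.
    """
    tau = np.asarray(tau, dtype=float)
    f = density(tau, A)
    df = (density(tau, A + dA) - density(tau, A - dA)) / (2.0 * dA)
    integrand = np.zeros_like(f)
    mask = f > 0
    integrand[mask] = df[mask] ** 2 / f[mask]
    return float(np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(tau)))
```

As a sanity check, a Gaussian density with mean A and standard deviation s gives approximately 1/s², and an exponential density A e^{−Aτ} gives approximately 1/A². These are the textbook values.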

Compliance with Ethical Standards

Conflict of interests

The authors declare that they have no conflict of interest.

Footnotes

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

References

- Abbott L, Fusi S, Miller KD. Theoretical approaches to neuroscience: examples from single neurons to networks, Vol. 5. New York: McGraw-Hill; 2012. pp. 1601–1618.

- Babadi B, Abbott L. Pairwise analysis can account for network structures arising from spike-timing dependent plasticity. PLoS Computational Biology. 2013;9:e1002906. doi: 10.1371/journal.pcbi.1002906.

- Blot A, Solages C, Ostojic S, Szapiro G, Hakim V, Léna C. Time-invariant feed-forward inhibition of Purkinje cells in the cerebellar cortex in vivo. The Journal of Physiology. 2016;594(10):2729–2749. doi: 10.1113/JP271518.

- Brunel N. Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. Journal of Computational Neuroscience. 2000;8(3):183–208. doi: 10.1023/A:1008925309027.

- Brunel N, Hakim V. Fast global oscillations in networks of integrate-and-fire neurons with low firing rates. Neural Computation. 1999;11:1621. doi: 10.1162/089976699300016179.

- Brunel N, Sergi S. Firing frequency of leaky intergrate-and-fire neurons with synaptic current dynamics. Journal of Theoretical Biology. 1998;195(1):87–95. doi: 10.1006/jtbi.1998.0782.

- Brunel N, Chance FS, Fourcaud N, Abbott L. Effects of synaptic noise and filtering on the frequency response of spiking neurons. Physical Review Letters. 2001;86(10):2186. doi: 10.1103/PhysRevLett.86.2186.

- Bulsara A, Jacobs E, Zhou T, Moss F, Kiss L. Stochastic resonance in a single neuron model: theory and analog simulation. Journal of Theoretical Biology. 1991;152(4):531–555. doi: 10.1016/S0022-5193(05)80396-0.