Abstract

Background

Cardiac auscultation is a cost-effective, noninvasive screening tool that can provide information about cardiovascular hemodynamics and disease. However, with advances in imaging and laboratory tests, the importance of cardiac auscultation is less appreciated in clinical practice. The widespread use of smartphones provides opportunities for nonmedical expert users to perform self-examination before hospital visits.

Objective

The objective of our study was to assess the feasibility of cardiac auscultation using smartphones with no add-on devices for use at the prehospital stage.

Methods

We performed a pilot study of patients with normal and pathologic heart sounds. Heart sounds were recorded on the skin of the chest wall using 3 smartphones: the Samsung Galaxy S5 and Galaxy S6, and the LG G3. Recorded heart sounds were processed and classified by a diagnostic algorithm using convolutional neural networks. We assessed diagnostic accuracy, as well as sensitivity, specificity, and predictive values.

Results

A total of 46 participants underwent heart sound recording. After audio file processing, 30 of 46 (65%) heart sounds were interpretable. Atrial fibrillation and diastolic murmur were significantly associated with failure to acquire interpretable heart sounds. The diagnostic algorithm classified the heart sounds into the correct category with high accuracy: Galaxy S5, 90% (95% CI 73%-98%); Galaxy S6, 87% (95% CI 69%-96%); and LG G3, 90% (95% CI 73%-98%). Sensitivity, specificity, positive predictive value, and negative predictive value were also acceptable for the 3 devices.

Conclusions

Cardiac auscultation using smartphones was feasible. Discrimination using convolutional neural networks yielded high diagnostic accuracy. However, using the built-in microphones alone, the acquisition of reproducible and interpretable heart sounds was still a major challenge.

Trial Registration

ClinicalTrials.gov NCT03273803; https://clinicaltrials.gov/ct2/show/NCT03273803 (Archived by WebCite at http://www.webcitation.org/6x6g1fHIu)

Keywords: cardiac auscultation, physical examination, smartphone, mobile health care, telemedicine

Introduction

Cardiovascular diseases are the most common causes of death, accounting for 31.5% of all deaths globally [1,2]. In 2015, in the United States, 92.1 million adults were estimated to have cardiovascular diseases, and 43.9% of the adult population is projected to have some form of cardiovascular disease by 2030 [3].

The stethoscope has played a key role in the physical examination of patients with cardiac disease since its invention by René Laënnec in 1816 [4]. The opening and closing of the heart valves, as well as blood flow and turbulence through the valves or intracardiac defects, generate rhythmic vibrations that can be heard via the stethoscope [5]. Cardiac auscultation using the stethoscope enables hemodynamic assessment of the heart and can help in the diagnosis of cardiovascular diseases [6].

Recently, the advent of noninvasive imaging modalities has diminished the importance of cardiac auscultation in clinical practice [7,8]. Devices such as handheld ultrasound have enabled detailed on-site visualization of cardiac anatomy and further threaten the role of the stethoscope as a bedside examination tool [9,10]. As a result, cardiac auscultation is less appreciated, and physicians are becoming less proficient and less confident in their examination skills [11-13]. Studies have also suggested a low level of interobserver agreement regarding cardiac murmurs [14].

The smartphone has become a popular device. As of 2015, 64% of Americans and 88% of South Koreans were reported to own a smartphone [15]. Smartphones are frequently used for health purposes, such as counseling or information searches [16]. The modern smartphone has excellent processing capability and is equipped with multiple high-quality components, such as microphones, display screens, and sound speakers. There have been efforts to use smartphone health apps for self-diagnosis [17]. However, some of these software apps have shown poor credibility, and their role in health care is not yet established [18].

Therefore, we sought to develop a smartphone app for cardiac auscultation that could be used by non–medical expert users. Although the importance of cardiac auscultation is declining in the hospital setting, it could serve as a screening tool at the prehospital stage if it can be performed easily by smartphone users themselves. This was a pilot study to test the feasibility of cardiac auscultation using the built-in microphones of smartphones without any add-on devices. The study tested (1) whether heart sound recording using a smartphone is feasible, and (2) whether an automated diagnostic algorithm can classify heart sounds with acceptable accuracy. Heart sounds were recorded using the smartphone microphones and processed electronically. We developed a diagnostic algorithm by applying convolutional neural networks, which we used for the diagnosis of the recorded heart sounds. In this study, we assessed the diagnostic accuracy of the algorithm.

Methods

Description of the App

We developed a smartphone app named CPstethoscope for this study (Figure 1). The app runs on the Android operating system (Google Inc) and is used for research purposes only. Heart sounds were recorded by placing the phone on the skin of the chest, using the built-in microphone. In most smartphones, microphones are located on the lower border of the device. Heart sounds can be best heard in the intercostal spaces. The instructions for this app indicated the anatomical landmarks and auscultation areas. While maintaining the contact of the lower margin of the smartphone with the chest wall, users are required to manipulate the device to start and stop recording. Users can see on the screen whether their heart sounds are properly being captured.

Figure 1.

Heart sound recording using a smartphone app. Left: illustration of how the heart sounds were recorded in this study. Smartphones were placed directly on the chest wall; a dedicated app was used with no add-on devices. Middle and right: representative screenshots of the app (called CPstethoscope) developed for this study. ECG: electrocardiogram.

Study Design

This was a pilot study designed to demonstrate the feasibility of smartphone-based recording and identification of heart sounds. We sought to enroll 50 participants who were 18 years of age or older and had undergone an electrocardiogram (ECG) and echocardiography within the previous 6 months at Seoul National University Bundang Hospital, Seoul, Republic of Korea. Ultimately, we sought to develop an app for self-diagnosis that could be performed by users. However, for this pilot study, heart sounds were recorded by researchers who were familiar with the use of the app and understood the principles of cardiac auscultation. The investigators who recorded heart sounds were not aware of the patients’ diagnoses. Eligible patients were invited to participate in the study by the research doctors at the outpatient clinics or on the wards. After participants provided informed consent, their heart sounds were recorded in a quiet room that was free from environmental noises.

Reference heart sounds were recorded by participating cardiologists (SHK, YY, GYC, and JWS) using an electronic stethoscope (3M Littmann Electronic Stethoscope Model 3200; 3M, St Paul, MN, USA). Study devices were the Samsung Galaxy S5 (model SM-G900) and Galaxy S6 (SM-G920; Samsung Electronics, Suwon, Republic of Korea), and LG G3 (LG-F400; LG Electronics, Seoul, Republic of Korea).

We chose the best site for recording from among the aortic, pulmonic, mitral, and tricuspid areas. The built-in microphones were placed directly on the skin of the chest wall to detect the heart sound. We tested all 3 devices with all study participants. The order in which the devices were tested was not prespecified. No add-on devices were used. Recordings were made for approximately 10 seconds after stable heart sounds were displayed on the screen. Final diagnoses of the reference heart sounds were confirmed by a second cardiologist (SHK and GYC) by listening to the audio files and matching them with the echocardiography reports.

This study was approved by the Seoul National University Bundang Hospital institutional review board on August 24, 2016 (B-1609-361-303), and all participants provided written informed consent. We registered this study protocol (ClinicalTrials.gov NCT03273803). The corresponding author had full access to all the data in the study and takes responsibility for its integrity and the data analysis.

Data Processing and Identification

We transferred the recorded audio files to a desktop computer for processing. After subtracting environmental and thermal noise using the fast Fourier transform, we reconstructed noise-reduced heart sounds in the time domain. We detected the first and second heart sounds without an ECG reference, using a previously reported algorithm [19]. The time-domain signals were then transformed into frequency-domain spectrogram features.

We developed a diagnostic algorithm using convolutional neural networks, a variant of artificial neural networks whose design is inspired by the connections of neurons in the visual cortex. The network received 40×40 heart sound spectrogram matrices through 1 input layer. These matrices were processed by 2 convolution–max pool layers with a kernel size of 5×5; the number of kernels in each of the 2 convolutional layers was either 8 or 16. A dense, fully connected layer followed the second convolution–max pool layer, and a final readout layer with 5 nodes, one for each heart sound category, was appended. The training loss function of the network was the softmax cross-entropy computed from the values of the readout layer. Finally, we trained the network with the Adam optimizer at a learning rate of 0.001 [20]. We used the TensorFlow version 1.2 Python library to build this network [21].

Training was conducted using demonstration heart sounds obtained from open databases (The Auscultation Assistant, C Wilkes, University of California, Los Angeles, Los Angeles, CA, USA; Heart Sound & Murmur Library, University of Michigan Medical School, Ann Arbor, MI, USA; Easy auscultation, MedEdu LLC, Westborough, MA, USA; 50 Heart and Lung Sounds Library, 3M, St Paul, MN, USA; and Teaching Heart Auscultation to Health Professionals, J Moore, Rady Children’s Hospital, San Diego, CA, USA).
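The layer dimensions described above can be checked with simple arithmetic. The stdlib-only Python sketch below is not the authors' code; it assumes stride-1 convolutions with "same" zero padding, non-overlapping 2×2 max pooling, 8 kernels in the first convolutional layer and 16 in the second, and a hypothetical dense layer of 64 units. The paper does not report the padding, pooling size, or dense-layer width.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Shape:
    """Feature-map shape: height x width x channels."""
    height: int
    width: int
    channels: int

    @property
    def size(self) -> int:
        return self.height * self.width * self.channels


def conv_same(shape: Shape, kernel: int, filters: int) -> tuple[Shape, int]:
    """Stride-1 convolution with 'same' zero padding: spatial size is unchanged."""
    params = kernel * kernel * shape.channels * filters + filters  # weights + biases
    return Shape(shape.height, shape.width, filters), params


def max_pool(shape: Shape, pool: int = 2) -> Shape:
    """Non-overlapping max pooling (stride equals pool size); no trainable weights."""
    return Shape(shape.height // pool, shape.width // pool, shape.channels)


def summarize(dense_units: int = 64, n_classes: int = 5) -> list[tuple[str, int, int]]:
    """Return (layer name, output size, trainable parameters) for the assumed network."""
    rows = []
    shape = Shape(40, 40, 1)  # one 40x40 spectrogram, single channel
    for i, filters in enumerate((8, 16), start=1):  # assumed: 8 kernels, then 16
        shape, params = conv_same(shape, kernel=5, filters=filters)
        rows.append((f"conv{i} 5x5x{filters}", shape.size, params))
        shape = max_pool(shape)
        rows.append((f"pool{i} 2x2", shape.size, 0))
    flat = shape.size  # flattened input to the dense layer
    rows.append((f"dense {dense_units}", dense_units, flat * dense_units + dense_units))
    rows.append((f"readout {n_classes}", n_classes, dense_units * n_classes + n_classes))
    return rows


if __name__ == "__main__":
    rows = summarize()
    for name, size, params in rows:
        print(f"{name:<14} outputs={size:>6} params={params:>7}")
    print("total trainable params:", sum(p for _, _, p in rows))
```

Under these assumptions, almost all trainable weights sit in the dense layer that follows the flattened 10×10×16 feature map, which matters when the training corpus is a small set of demonstration recordings.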
We classified heart sounds into 5 categories: normal, third heart sound, fourth heart sound (S4), systolic murmur, and diastolic murmur. The algorithm showed 81% diagnostic accuracy with the training sets. Testing was performed with the samples acquired from this study.
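The loss and the accuracy criterion can be made concrete for these 5 categories. The plain-Python sketch below (function names and the category order are hypothetical) computes a numerically stable softmax over 5 readout values, the softmax cross-entropy loss for one example, and the rule from the Statistical Analysis section that a classification counts as accurate only if the true category receives a probability of at least 50%. TensorFlow provides the same loss as a fused operation (for example, `tf.nn.softmax_cross_entropy_with_logits`); this is an illustration, not the study's training code.

```python
import math

# The 5 readout categories named in the text, in an assumed order.
CATEGORIES = ("normal", "S3", "S4", "systolic murmur", "diastolic murmur")


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the largest logit before exponentiating."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits: list[float], true_index: int) -> float:
    """Softmax cross-entropy for one example, -log p(true class), via log-sum-exp."""
    top = max(logits)
    log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum_exp - logits[true_index]


def is_accurate(logits: list[float], true_index: int, threshold: float = 0.5) -> bool:
    """Count a classification as accurate only if the true class gets >= threshold."""
    return softmax(logits)[true_index] >= threshold


if __name__ == "__main__":
    readout = [0.2, -1.0, -1.0, 2.5, 0.1]  # hypothetical readout values
    truth = CATEGORIES.index("systolic murmur")
    probs = softmax(readout)
    print({c: round(p, 3) for c, p in zip(CATEGORIES, probs)})
    print("loss:", round(cross_entropy(readout, truth), 3))
    print("accurate:", is_accurate(readout, truth))
```

With 5 categories, the most probable class can still receive less than 50% probability, so this criterion is stricter than simply taking the highest-scoring category.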

Statistical Analysis

We summarized continuous variables as mean (SD) or median (range), and categorical variables as counts and percentages. Reference heart sounds were adjudicated by experienced cardiologists. The primary end point of the study was the diagnostic accuracy of the system for heart sound classification. We considered a diagnosis accurate when the algorithm assigned a heart sound to the correct category with a probability of 50% or more. We also estimated the performance of the system using sensitivity, specificity, positive predictive value, and negative predictive value. We defined the study end points as follows: diagnostic accuracy = (TP+TN)/(TP+FP+FN+TN); sensitivity = TP/(TP+FN); specificity = TN/(TN+FP); positive predictive value = TP/(TP+FP); and negative predictive value = TN/(TN+FN), where TP indicates true positive; TN, true negative; FP, false positive; and FN, false negative. We calculated the diagnostic values as simple proportions with corresponding 95% CIs. Statistical analyses were performed using the R programming language version 3.2.4 (The R Foundation for Statistical Computing). A 2-sided P<.05 was considered statistically significant.
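Applied to a 2×2 table of counts, these definitions reduce to a few ratios. The Python sketch below encodes them. Treating an abnormal heart sound as the positive class is an assumption, and the counts in the usage example (TP=15, FP=2, FN=1, TN=12) are hypothetical: they are not reported in the text, but they are consistent with the 16 abnormal and 14 normal interpretable recordings in Table 1 and reproduce the Galaxy S5 column of Table 2 after rounding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """2x2 counts; 'positive' is assumed to mean an abnormal heart sound."""
    tp: int
    fp: int
    fn: int
    tn: int

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.fn + self.tn)

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:  # positive predictive value
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:  # negative predictive value
        return self.tn / (self.tn + self.fn)


if __name__ == "__main__":
    # Hypothetical counts, chosen to be consistent with the Galaxy S5 column
    s5 = Confusion(tp=15, fp=2, fn=1, tn=12)
    for metric in ("accuracy", "sensitivity", "specificity", "ppv", "npv"):
        print(f"{metric:<12} {100 * getattr(s5, metric)():.0f}%")
```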

Results

Patient Profiles

A total of 46 patients participated in this study. Multimedia Appendix 1 shows the Standards for Reporting of Diagnostic Accuracy Studies checklist and flow diagram for the study. Similar numbers of men and women were enrolled, and their median age was 65.5 years. Table 1 describes the participants’ characteristics: 20 (44%) had systolic murmurs, 20 (44%) had normal heart sounds, 5 (11%) had diastolic murmurs, and 1 (2%) had S4.

Table 1.

Characteristics of study participants.

| Characteristics | Interpretable heart sounds: yes | Interpretable heart sounds: no | Total | P value^a |
|---|---|---|---|---|
| Number of participants, n (%) | 30 (65) | 16 (35) | 46 | |
| Male sex, n (%) | 14 (47) | 7 (44) | 21 (46) | >.99 |
| Age (years), median (range) | 62.5 (22.0-90.0) | 72.0 (27.0-88.0) | 65.5 (22.0-90.0) | .07 |
| Body mass index (kg/m²), mean (SD) | 23.8 (3.9) | 23.4 (3.3) | 23.7 (3.7) | .69 |
| Hypertension, n (%) | 14 (47) | 9 (56) | 23 (50) | .76 |
| Diabetes, n (%) | 4 (13) | 5 (31) | 9 (20) | .24 |
| Atrial fibrillation, n (%) | 0 (0) | 5 (31) | 5 (11) | <.001 |
| Primary diagnosis, n (%) | | | | .005 |
| Aortic stenosis | 11 (37) | 2 (13) | 13 (28) | |
| Aortic regurgitation | 0 (0) | 2 (13) | 2 (4) | |
| Mitral stenosis | 0 (0) | 4 (25) | 4 (9) | |
| Mitral regurgitation | 2 (7) | 0 (0) | 2 (4) | |
| Hypertrophic cardiomyopathy | 1 (3) | 1 (6) | 2 (4) | |
| Others | 16 (53) | 7 (44) | 23 (50) | |
| Heart sounds, n (%) | | | | .007 |
| Systolic murmur | 15 (50) | 5 (31) | 20 (44) | |
| Diastolic murmur | 0 (0) | 5 (31) | 5 (11) | |
| S3/S4^b | 1 (2) | 0 (0) | 1 (2) | |
| Normal | 14 (47) | 6 (38) | 20 (44) | |

^a Comparisons were performed using Student t test or Mann-Whitney U test for continuous variables, and chi-square test or Fisher exact test for categorical variables.

^b S3/S4: third and fourth heart sounds.

After audio file processing, including noise reduction, we confirmed 30 of 46 heart sounds (65%) as interpretable. Reasons for failure to acquire interpretable heart sounds included the small amplitude of the acquired heart sounds, background noise, and poor participant cooperation. Younger age tended to be associated with better interpretability (P=.07), whereas body mass index had no apparent impact (P=.69). Atrial fibrillation and diastolic murmur were significantly associated with uninterpretable recordings.

Diagnostic Performance

Figure 2 shows the performance of the diagnostic algorithm by device. Heart sounds recorded with the 3 study devices yielded consistently high diagnostic accuracy: Samsung Galaxy S5, 90% (95% CI 73%-98%); Samsung Galaxy S6, 87% (95% CI 69%-96%); and LG G3, 90% (95% CI 73%-98%). The Samsung Galaxy S5 and S6 showed high sensitivity (S5: 94%, 95% CI 70%-100%; S6: 94%, 95% CI 70%-100%), whereas the LG G3 showed high specificity (100%, 95% CI 68%-100%). Diagnostic performance did not vary significantly according to participants’ age or sex (Table 2). Figure 3 shows representative waveforms and spectrograms of heart sounds (audio files are provided in Multimedia Appendix 2, Multimedia Appendix 3, and Multimedia Appendix 4). No meaningful adverse events occurred during the study.
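The paper reports its diagnostic values as simple proportions with 95% CIs but does not name the interval method. Several of the reported intervals appear consistent with the exact (Clopper-Pearson) binomial interval, which is what R's `binom.test` reports: an accuracy of 27/30 gives roughly 73%-98%, and a sensitivity of 15/16 gives roughly 70%-100%. The stdlib-only Python sketch below computes that interval by bisection on the binomial cumulative distribution; treating it as the authors' method is an assumption, and the function names are hypothetical.

```python
from math import comb
from typing import Callable


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def bisect_root(f: Callable[[float], float], lo: float, hi: float, iters: int = 100) -> float:
    """Root of a monotone function with a sign change on [lo, hi], by bisection."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = (lo + hi) / 2
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact two-sided (1 - alpha) confidence interval for the proportion x/n."""
    # Lower bound solves P(X >= x | p) = alpha/2; it is 0 when x == 0.
    lower = 0.0 if x == 0 else bisect_root(
        lambda p: (1 - binom_cdf(x - 1, n, p)) - alpha / 2, 0.0, 1.0)
    # Upper bound solves P(X <= x | p) = alpha/2; it is 1 when x == n.
    upper = 1.0 if x == n else bisect_root(
        lambda p: binom_cdf(x, n, p) - alpha / 2, 0.0, 1.0)
    return lower, upper


if __name__ == "__main__":
    for label, x, n in (("accuracy 27/30", 27, 30), ("sensitivity 15/16", 15, 16)):
        lo, hi = clopper_pearson(x, n)
        print(f"{label}: {100 * x / n:.0f}% (95% CI {100 * lo:.0f}%-{100 * hi:.0f}%)")
```

Exact intervals are conservative and stay within [0, 1], which is why subgroup intervals such as 9%-99% are so wide at these sample sizes.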

Figure 2.

Diagnostic performance of each study device. Bold broken lines indicate diagnostic accuracy. FN: false negative; FP: false positive; TN: true negative; TP: true positive.

Table 2.

Diagnostic performance (%) of each study device.

| Participants grouped by age and sex | Galaxy S5, estimate (95% CI) | Galaxy S6, estimate (95% CI) | LG G3, estimate (95% CI) |
|---|---|---|---|
| Total (n=30) | | | |
| Diagnostic accuracy | 90 (73-98) | 87 (69-96) | 90 (73-98) |
| Sensitivity | 94 (70-100) | 94 (70-100) | 81 (54-96) |
| Specificity | 86 (57-98) | 79 (49-95) | 100 (68-100) |
| Positive predictive value | 88 (64-99) | 83 (59-96) | 100 (66-100) |
| Negative predictive value | 92 (64-100) | 92 (62-100) | 82 (57-96) |
| Men (n=14) | | | |
| Diagnostic accuracy | 79 (49-95) | 79 (49-95) | 93 (66-100) |
| Sensitivity | 83 (36-100) | 83 (36-100) | 83 (36-100) |
| Specificity | 75 (35-97) | 75 (35-97) | 100 (52-100) |
| Positive predictive value | 71 (29-96) | 71 (29-96) | 100 (36-100) |
| Negative predictive value | 86 (42-100) | 86 (42-100) | 89 (52-100) |
| Women (n=16) | | | |
| Diagnostic accuracy | 100 (71-100) | 94 (70-100) | 88 (62-98) |
| Sensitivity | 100 (59-100) | 100 (59-100) | 80 (44-97) |
| Specificity | 100 (42-100) | 83 (36-100) | 100 (42-100) |
| Positive predictive value | 100 (59-100) | 91 (59-100) | 100 (52-100) |
| Negative predictive value | 100 (42-100) | 100 (36-100) | 75 (35-97) |
| Elderly (≥65 years; n=12) | | | |
| Diagnostic accuracy | 100 (64-100) | 92 (62-100) | 83 (52-98) |
| Sensitivity | 100 (55-100) | 100 (55-100) | 78 (40-97) |
| Specificity | 100 (19-100) | 67 (9-99) | 100 (19-100) |
| Positive predictive value | 100 (55-100) | 90 (55-100) | 100 (47-100) |
| Negative predictive value | 100 (19-100) | 100 (9-100) | 60 (15-95) |
| Young (<65 years; n=18) | | | |
| Diagnostic accuracy | 83 (59-96) | 83 (59-96) | 94 (73-100) |
| Sensitivity | 86 (42-100) | 86 (42-100) | 86 (42-100) |
| Specificity | 82 (48-98) | 82 (48-98) | 100 (62-100) |
| Positive predictive value | 75 (35-97) | 75 (35-97) | 100 (42-100) |
| Negative predictive value | 90 (55-100) | 90 (55-100) | 92 (62-100) |

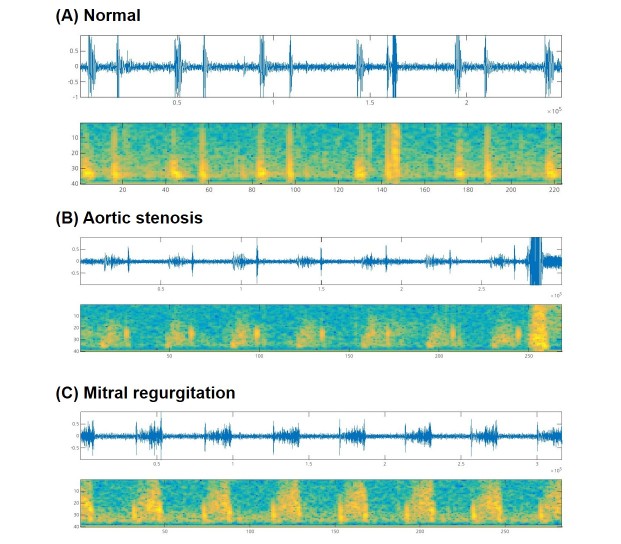

Figure 3.

Representative phonocardiograms and spectrograms. (A) Normal heart sounds from the aortic area of a 22-year-old man with a history of vasovagal syncope. (B) Midsystolic ejection murmur from the aortic area of an 83-year-old woman with aortic stenosis, which was classified as a systolic murmur. (C) Systolic murmur from the mitral area of a 63-year-old woman with mitral valve prolapse and mitral regurgitation, which was classified as a systolic murmur.

Discussion

Principal Findings

This was a pilot study to assess the feasibility of heart sound recording and identification using smartphones. We found that reliable heart sound recording was the greatest difficulty. However, the results of this study suggest that, once interpretable heart sounds are acquired, cardiac murmur diagnosis using convolutional neural networks yields high diagnostic accuracy.

Implications

With the widespread use of smartphones, an increasing number of health care apps have been developed. There were approximately 165,000 health-related apps available in 2016 [22]. These apps span a variety of aspects of medicine, including prevention, diagnosis, monitoring, treatment, compensation, and investigation [23]. However, there are concerns that many of these apps are not evidence based, and information on the research underlying their development is often difficult to find [18]. This study was part of our effort to develop a diagnostic app that can differentiate normal and abnormal heart sounds. We sought to validate the diagnostic performance of the recording and identification system among patients in real-world clinical practice.

There have been attempts to use add-on gadgets with smartphones for health care, but most have not been widely accepted. Modern smartphones are equipped with high-quality built-in microphones that can capture low-pitched, low-amplitude heart sounds. We presumed that an app relying solely on this built-in hardware would have advantages in accessibility and acceptability. However, this study suggested that the acquisition of good-quality heart sounds is still far from perfect. Several factors appeared to affect heart sound recording. First, background noise is difficult to reduce systematically and should therefore be avoided during recording. Recording on the skin of the chest wall was necessary, and choosing the appropriate location was essential. Second, respiration was less important than expected. The frequency spectrum of lung sounds (100-2500 Hz) usually lies well above that of heart sounds (20-100 Hz) [24], so lung sounds were easily attenuated with a simple band-pass filter. Third, patient factors, such as age and the presence of arrhythmia, were also important, although body mass index had no apparent impact in our sample. Fourth, our system failed to recognize heart sounds with diastolic murmur, although the sample size was small.
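The band-pass step can be sketched as follows. The paper does not specify the filter design, so this is an illustrative second-order band-pass biquad using the coefficient formulas from Robert Bristow-Johnson's Audio EQ Cookbook, applied to the 20-100 Hz heart sound band quoted in the text at a hypothetical 4000 Hz sampling rate. The function names are hypothetical.

```python
import math


def bandpass_biquad(signal: list[float], fs: float, f_low: float, f_high: float) -> list[float]:
    """Second-order band-pass filter (RBJ Audio EQ Cookbook, 0 dB peak gain form).

    The centre frequency is the geometric mean of the band edges and
    Q = f0 / bandwidth, so the -3 dB points fall approximately at f_low and f_high.
    """
    f0 = math.sqrt(f_low * f_high)
    q = f0 / (f_high - f_low)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    # Coefficients normalized by a0; b1 is zero for this band-pass form.
    b0, b2 = alpha / a0, -alpha / a0
    a1, a2 = -2 * math.cos(w0) / a0, (1 - alpha) / a0
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in signal:
        y = b0 * x + b2 * x2 - a1 * y1 - a2 * y2  # direct form I difference equation
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


def rms(values: list[float]) -> float:
    return math.sqrt(sum(v * v for v in values) / len(values))


def tone_gain(freq: float, fs: float = 4000.0, band: tuple[float, float] = (20.0, 100.0)) -> float:
    """Steady-state output/input RMS ratio for a 1 s sine tone (first half discarded)."""
    n = int(fs)
    tone = [math.sin(2 * math.pi * freq * i / fs) for i in range(n)]
    filtered = bandpass_biquad(tone, fs, *band)
    half = n // 2
    return rms(filtered[half:]) / rms(tone[half:])


if __name__ == "__main__":
    for freq in (50.0, 500.0, 1000.0):  # heart-sound band vs lung-sound band
        print(f"{freq:>6.0f} Hz -> gain {tone_gain(freq):.3f}")
```

A single biquad passes a 50 Hz tone almost unchanged and reduces a 1000 Hz tone to roughly 6% of its amplitude, but it rolls off slowly near the band edges; a practical pipeline would more likely use a higher-order design, for example SciPy's `scipy.signal.butter(..., btype="bandpass", output="sos")` applied with `scipy.signal.sosfiltfilt`.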

The use of machine learning in clinical medicine is rapidly increasing, with a marked increase in the amount of available data [25]. The interpretation of digitized images and development of prediction models are the leading applications of machine learning in the field of medicine [26,27]. This study suggests that the interpretation of audio signals derived from humans may be a potential application of artificial intelligence.

Comparison With Prior Work

To our knowledge, this study is the first attempt to discriminate heart sounds using a deep learning–based diagnostic algorithm. We showed that the diagnostic algorithm was feasible and reproducible. We found only 1 app for cardiac auscultation that enables heart sound recording, called iStethoscope [28]. It amplifies and filters heart sounds in real time to improve their quality, but it cannot diagnose heart murmurs. AliveCor Kardia, a device approved by the US Food and Drug Administration, enables ECG monitoring and, according to 1 clinical trial, significantly improves the detection of incident atrial fibrillation [29]. Azimpour et al performed an elegant study in which they used an electronic stethoscope to detect stenosis of coronary arteries [30]. Although the idea is interesting, it may be difficult to apply with commonly available smartphones because of the deep location of the coronary arteries and the low amplitude of the resulting acoustic signals. Several apps enable heart rate monitoring. Some require specialized devices, whereas others simply use the built-in smartphone camera and flash, a technique known as photoplethysmography. However, their accuracy and clinical application still require further investigation [31,32].

Limitations

This study had several limitations. First, the sample size was too small to represent a variety of cardiac murmurs. Second, study participants were enrolled selectively rather than consecutively; thus, the sample may be biased toward participants with clear and unambiguous heart sounds. Third, the app developed for this study was used only to record heart sounds. In this pilot study, the audio files were moved to a central server and analyzed afterward. The app therefore needs further development before it can be used in the real world, for example as an all-in-one system that covers everything from acquisition to diagnosis. Fourth, we obtained the heart sounds ourselves, although we ultimately seek to develop an app that can be used by members of the general population.

Fifth, this study showed variations in performance with different devices, which seem to be caused by the differing specifications of each smartphone. This is one of the major hurdles in the development of an app that can be used in a variety of smartphones from different manufacturers. Our pilot testing indicated that the quality of recorded heart sounds depended on the quality of the built-in microphones. For this reason, we included 3 high-end smartphones for this study. System performance may be worse with inexpensive devices. In addition, we tested only devices running the Android operating system in this study, but not the Apple iPhone, which is one of the most widely used smartphones worldwide.

Future Research Steps

The app described in this study requires further development. An all-in-one system that integrates recording, audio processing, and the diagnostic algorithm is crucial. Instructions that help users record their own heart sounds are also needed. We are improving the ability of the app to acquire interpretable heart sounds and to diagnose atrial fibrillation. Another potential application is the use of the diagnostic algorithm with commercially available electronic stethoscopes operated by medical personnel [33]. This may improve the quality of clinical practice by assisting early-career doctors and nurses in assessing patients.

Conclusions

The concept of cardiac auscultation using smartphones is feasible. Indeed, diagnosis using convolutional neural networks yielded a high diagnostic accuracy. However, use of the built-in microphones alone was limited in terms of reproducible acquisition of interpretable heart sounds.

Acknowledgments

This study was funded by a grant from the Seoul National University Bundang Hospital Research Fund (16-2016-006).

Abbreviations

- ECG

electrocardiogram

- FN

false negative

- FP

false positive

- S4

fourth heart sound

- TN

true negative

- TP

true positive

Multimedia Appendix 1

Standards for Reporting of Diagnostic Accuracy Studies checklist and study diagram.

Multimedia Appendix 2

Audio file 1 (normal heart sound).

Multimedia Appendix 3

Audio file 2 (aortic stenosis).

Multimedia Appendix 4

Audio file 3 (mitral regurgitation).

Footnotes

Conflicts of Interest: None declared.

References

1. GBD 2013 DALYs and HALE Collaborators. Murray CJL, Barber RM, Foreman KJ, Abbasoglu OA, Abd-Allah F, Abera SF, Aboyans V. Global, regional, and national disability-adjusted life years (DALYs) for 306 diseases and injuries and healthy life expectancy (HALE) for 188 countries, 1990-2013: quantifying the epidemiological transition. Lancet. 2015 Nov 28;386(10009):2145–2191. doi: 10.1016/S0140-6736(15)61340-X.

2. World Health Organization. The top 10 causes of death: fact sheet. 2017 Jan [accessed 2018-02-09]. http://www.who.int/mediacentre/factsheets/fs310/en/

3. Benjamin EJ, Blaha MJ, Chiuve SE, Cushman M, Das SR, Deo R, de Ferranti SD, Floyd J, Fornage M, Gillespie C, Isasi CR, Jiménez MC, Jordan LC, Judd SE, Lackland D, Lichtman JH, Lisabeth L, Liu S, Longenecker CT, Mackey RH, Matsushita K, Mozaffarian D, Mussolino ME, Nasir K, Neumar RW, Palaniappan L, Pandey DK, Thiagarajan RR, Reeves MJ, Ritchey M, Rodriguez CJ, Roth GA, Rosamond WD, Sasson C, Towfighi A, Tsao CW, Turner MB, Virani SS, Voeks JH, Willey JZ, Wilkins JT, Wu JH, Alger HM, Wong SS, Muntner P, American Heart Association Statistics Committee and Stroke Statistics Subcommittee. Heart disease and stroke statistics-2017 update: a report from the American Heart Association. Circulation. 2017 Mar 07;135(10):e146–e603. doi: 10.1161/CIR.0000000000000485.

4. Roguin A. Rene Theophile Hyacinthe Laënnec (1781-1826): the man behind the stethoscope. Clin Med Res. 2006 Sep;4(3):230–5. doi: 10.3121/cmr.4.3.230. http://www.clinmedres.org/cgi/pmidlookup?view=long&pmid=17048358

5. Luisada AA, MacCanon DM, Kumar S, Feigen LP. Changing views on the mechanism of the first and second heart sounds. Am Heart J. 1974 Oct;88(4):503–14. doi: 10.1016/0002-8703(74)90213-0.

6. Shaver JA. Cardiac auscultation: a cost-effective diagnostic skill. Curr Probl Cardiol. 1995 Jul;20(7):441–530.

7. Frishman WH. Is the stethoscope becoming an outdated diagnostic tool? Am J Med. 2015 Jul;128(7):668–9. doi: 10.1016/j.amjmed.2015.01.042.

8. Bank I, Vliegen HW, Bruschke AVG. The 200th anniversary of the stethoscope: can this low-tech device survive in the high-tech 21st century? Eur Heart J. 2016 Dec 14;37(47):3536–3543. doi: 10.1093/eurheartj/ehw034.

9. Liebo MJ, Israel RL, Lillie EO, Smith MR, Rubenson DS, Topol EJ. Is pocket mobile echocardiography the next-generation stethoscope? A cross-sectional comparison of rapidly acquired images with standard transthoracic echocardiography. Ann Intern Med. 2011 Jul 05;155(1):33–8. doi: 10.7326/0003-4819-155-1-201107050-00005. http://europepmc.org/abstract/MED/21727291

10. Mehta M, Jacobson T, Peters D, Le E, Chadderdon S, Allen AJ, Caughey AB, Kaul S. Handheld ultrasound versus physical examination in patients referred for transthoracic echocardiography for a suspected cardiac condition. JACC Cardiovasc Imaging. 2014 Oct;7(10):983–90. doi: 10.1016/j.jcmg.2014.05.011. http://linkinghub.elsevier.com/retrieve/pii/S1936-878X(14)00546-4

11. Vukanovic-Criley JM, Criley S, Warde CM, Boker JR, Guevara-Matheus L, Churchill WH, Nelson WP, Criley JM. Competency in cardiac examination skills in medical students, trainees, physicians, and faculty: a multicenter study. Arch Intern Med. 2006 Mar 27;166(6):610–6. doi: 10.1001/archinte.166.6.610.

12. Mangione S, Nieman LZ. Cardiac auscultatory skills of internal medicine and family practice trainees. A comparison of diagnostic proficiency. JAMA. 1997 Sep 03;278(9):717–22.

13. Mangione S, Nieman LZ, Gracely E, Kaye D. The teaching and practice of cardiac auscultation during internal medicine and cardiology training. A nationwide survey. Ann Intern Med. 1993 Jul 01;119(1):47–54. doi: 10.7326/0003-4819-119-1-199307010-00009.

14. Lok CE, Morgan CD, Ranganathan N. The accuracy and interobserver agreement in detecting the 'gallop sounds' by cardiac auscultation. Chest. 1998 Nov;114(5):1283–8. doi: 10.1378/chest.114.5.1283.

15. Poushter J. Smartphone ownership and Internet usage continues to climb in emerging economies but advanced economies still have higher rates of technology use. Washington, DC: Pew Research Center; 2016 Feb 22 [accessed 2018-02-09]. http://assets.pewresearch.org/wp-content/uploads/sites/2/2016/02/pew_research_center_global_technology_report_final_february_22__2016.pdf

16. Smith A, Page D. US smartphone use in 2015. Washington, DC: Pew Research Center; 2015 Apr 01 [accessed 2018-02-09]. http://assets.pewresearch.org/wp-content/uploads/sites/14/2015/03/PI_Smartphones_0401151.pdf

17. Lupton D, Jutel A. 'It's like having a physician in your pocket!' A critical analysis of self-diagnosis smartphone apps. Soc Sci Med. 2015 May;133:128–35. doi: 10.1016/j.socscimed.2015.04.004.

18. Armstrong S. Which app should I use? BMJ. 2015 Sep 09;351:h4597. doi: 10.1136/bmj.h4597.

19. Barabasa C, Jafari M, Plumbley MD. A robust method for S1/S2 heart sounds detection without ECG reference based on music beat tracking. 10th International Symposium on Electronics and Telecommunications (ISETC); Nov 15-16, 2012; Timisoara, Romania. 2012. pp. 15–16.

20. Kingma D, Ba J. Adam: a method for stochastic optimization. arXiv:1412.6980. 2017 Jan 30 [accessed 2018-02-09]. https://arxiv.org/abs/1412.6980

21. Rampasek L, Goldenberg A. TensorFlow: biology's gateway to deep learning? Cell Syst. 2016 Jan 27;2(1):12–4. doi: 10.1016/j.cels.2016.01.009. http://linkinghub.elsevier.com/retrieve/pii/S2405-4712(16)00010-7

22. The wonder drug: a digital revolution in health care is speeding up. London, UK: The Economist; 2017 Mar 02 [accessed 2017-11-28]. https://www.economist.com/news/business/21717990-telemedicine-predictive-diagnostics-wearable-sensors-and-host-new-apps-will-transform-how

23. Medicines and Healthcare Products Regulatory Agency. Guidance: medical device stand-alone software including apps (including IVDMDs). 2017 Sep [accessed 2018-02-09]. https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/648465/Software_flow_chart_Ed_1-04.pdf

24. Reichert S, Gass R, Brandt C, Andrès E. Analysis of respiratory sounds: state of the art. Clin Med Circ Respirat Pulm Med. 2008 May 16;2:45–58. doi: 10.4137/ccrpm.s530. http://europepmc.org/abstract/MED/21157521

25. Obermeyer Z, Emanuel EJ. Predicting the future - big data, machine learning, and clinical medicine. N Engl J Med. 2016 Sep 29;375(13):1216–9. doi: 10.1056/NEJMp1606181. http://europepmc.org/abstract/MED/27682033

26. Chen JH, Asch SM. Machine learning and prediction in medicine - beyond the peak of inflated expectations. N Engl J Med. 2017 Jun 29;376(26):2507–2509. doi: 10.1056/NEJMp1702071.

27. Goldstein BA, Navar AM, Carter RE. Moving beyond regression techniques in cardiovascular risk prediction: applying machine learning to address analytic challenges. Eur Heart J. 2017 Jun 14;38(23):1805–1814. doi: 10.1093/eurheartj/ehw302.

28. Bentley PJ. iStethoscope: a demonstration of the use of mobile devices for auscultation. Methods Mol Biol. 2015;1256:293–303. doi: 10.1007/978-1-4939-2172-0_20.

29. Halcox JPJ, Wareham K, Cardew A, Gilmore M, Barry JP, Phillips C, Gravenor MB. Assessment of remote heart rhythm sampling using the AliveCor heart monitor to screen for atrial fibrillation: the REHEARSE-AF Study. Circulation. 2017 Nov 07;136(19):1784–1794. doi: 10.1161/CIRCULATIONAHA.117.030583.

30. Azimpour F, Caldwell E, Tawfik P, Duval S, Wilson RF. Audible coronary artery stenosis. Am J Med. 2016 May;129(5):515–521.e3. doi: 10.1016/j.amjmed.2016.01.015.

31. Gorny AW, Liew SJ, Tan CS, Müller-Riemenschneider F. Fitbit Charge HR wireless heart rate monitor: validation study conducted under free-living conditions. JMIR Mhealth Uhealth. 2017 Oct 20;5(10):e157. doi: 10.2196/mhealth.8233. http://mhealth.jmir.org/2017/10/e157/

32. Vandenberk T, Stans J, Van Schelvergem G, Pelckmans C, Smeets CJ, Lanssens D, De Cannière CH, Storms V, Thijs IM, Vandervoort PM. Clinical validation of heart rate apps: mixed-methods evaluation study. JMIR Mhealth Uhealth. 2017 Aug 25;5(8):e129. doi: 10.2196/mhealth.7254. http://mhealth.jmir.org/2017/8/e129/

33. Leng S, Tan RS, Chai KTC, Wang C, Ghista D, Zhong L. The electronic stethoscope. Biomed Eng Online. 2015 Jul 10;14:66. doi: 10.1186/s12938-015-0056-y. https://biomedical-engineering-online.biomedcentral.com/articles/10.1186/s12938-015-0056-y
