Abstract

Hedges (2018) encourages us to ask new scientific questions concerning the optimization of adaptive interventions in education. In this commentary, we expand on this (albeit briefly) by providing concrete examples of scientific questions and associated experimental designs for optimizing adaptive interventions, and by commenting on some of the ways such designs might challenge us to think differently. A great deal of methodological work remains to be done. For example, we have only begun to consider experimental design and analysis methods for developing “cluster-level adaptive interventions” (NeCamp, Kilbourne, & Almirall, 2017), or to extend methods for comparing the marginal mean trajectories of the adaptive interventions embedded in a SMART (Lu et al., 2016) to accommodate random effects. These methodological advances, among others, can propel educational research on the construction of the more complex, yet meaningful, interventions needed to improve student and teacher outcomes.

What is the Future of Research on Educational Interventions?

Hedges (2018) indicates that the future depends on our ability to design studies that (a) are replicable, (b) are generalizable, and (c) can evaluate the complex interventions needed to improve student learning outcomes. On the third point, Hedges (2018) stresses the importance of testing not only single-point-in-time interventions but also sequences of interventions, and of optimizing those sequences. He also emphasizes that, as a profession, we must expand the portfolio of experimental designs used to optimize and evaluate such interventions. We wholeheartedly agree with Hedges (2018). Sequential interventions that are adapted to the outcomes of students (or educators), known as adaptive interventions, hold great promise for the future. Moreover, a significant advance in statistics over the last decade has been the development of experimental designs and associated analytic methods that allow scientists to optimize adaptive interventions and evaluate their effects. A key innovation has been the development of Sequential Multiple Assignment Randomized Trials (SMARTs), which allow rigorous experimental evidence, like that promoted by the Institute of Education Sciences (IES) and Hedges (2018), to inform the construction of optimized adaptive interventions (Kosorok & Moodie, 2016; Lavori & Dawson, 2004; Murphy, 2005). These designs have been used in clinical and behavioral research for years and are starting to gain traction in education research. We are excited about embracing these designs in education research, as they hold great promise for better interventions and student outcomes. However, we must be careful not to let the elegance of these designs distract us from the goal of better interventions: the study design must always serve the research question, not the other way around.
In this commentary, we give a brief overview of adaptive interventions and of the experimental designs for optimizing them, along with some advice on matching designs to scientific questions.

Adaptive Interventions: What and Why?

An adaptive intervention is a prespecified set of decision rules that guide whether, how, or when (and based on which measure or measures) to offer different intervention options, initially and over time (Almirall & Chronis-Tuscano, 2016; Collins, Murphy, & Bierman, 2004; Nahum-Shani et al., 2012). Typically, an adaptive intervention is needed because treatment effects are heterogeneous: for example, schoolchildren differ in how they respond to, or adhere to, different intervention options. Another reason is that a low-intensity intervention may be insufficient for some students, while offering more intensive intervention to everyone may be cost prohibitive; this cost–benefit trade-off may unfold over time. Yet another reason is that, depending on the changing status of the student, school professional, or school, some intervention options may be more feasible than others.

Adaptive interventions are not new to education: response-to-intervention or tiered-intervention approaches (e.g., Fuchs & Fuchs, 2017; Horner et al., 2009), curriculum-based measurement interventions (e.g., Deno, 2003), many preventive interventions used in school settings (e.g., Bierman, Nix, Maples, & Murphy, 2006; Stormshak, Connell, & Dishion, 2009), and instructional interventions, such as individualized student instruction for reading (e.g., Connor et al., 2011), are just a few examples of adaptive interventions in education. What is new is much of the methodological science around constructing and studying optimized adaptive interventions.

Adaptive Interventions are Multicomponent

Figure 1 provides an example adaptive intervention to improve language outcomes in minimally verbal children with autism (ages 5–8). Here, children are offered a highly structured intervention based on applied behavior analysis known as Discrete Trial Teaching (DTT; Smith, 2001), involving two sessions per week in stage 1 (weeks 1–12). Children who respond by the end of week 12 continue with DTT in stage 2 (weeks 12–24); children who are slower to respond are offered intensified DTT, involving three sessions per week in stage 2. Slow response is defined as less than 25% improvement from baseline to week 12 on a therapist-rated measure of the total number of spontaneous communicative utterances (SCU) in a 20-minute sample. This measure (and its cutoff) is known as a “tailoring variable” because it is used to tailor (decide) the stage 2 intervention. Components of this example adaptive intervention include (a) DTT at entry; (b) the 12-week duration of stage 1; (c) monitoring for change in SCU; (d) using SCU and its 25% improvement cutoff to classify responders vs. slow responders; (e) a “stay the course” strategy for responders; and (f) an “intensified treatment” strategy for slow responders.
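To make the multicomponent structure concrete, the decision rule in Figure 1 can be written as a small record of components plus one function. This is a minimal sketch under stated assumptions: the class and field names are hypothetical, "less than 25% improvement" is read as proportional change in SCU from baseline, and raising an error for a zero baseline count is our own choice, since the text does not say how such children are classified.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdaptiveIntervention:
    """Hypothetical encoding of the components of a two-stage adaptive intervention."""
    stage1: str                 # (a) intervention offered at entry
    stage1_weeks: int           # (b) duration of stage 1
    improvement_cutoff: float   # (d) cutoff on SCU improvement (0.25 = 25%)
    responder_option: str       # (e) "stay the course" strategy
    slow_responder_option: str  # (f) strategy for slow responders

    def is_slow_responder(self, baseline_scu: float, followup_scu: float) -> bool:
        """(c) + (d): classify by proportional change in SCU from baseline."""
        if baseline_scu <= 0:
            # Assumption: proportional change is undefined for a zero baseline,
            # and the source does not say how such children are classified.
            raise ValueError("baseline SCU must be positive")
        improvement = (followup_scu - baseline_scu) / baseline_scu
        return improvement < self.improvement_cutoff

    def stage2_option(self, baseline_scu: float, followup_scu: float) -> str:
        """Return the stage 2 intervention dictated by the tailoring variable."""
        if self.is_slow_responder(baseline_scu, followup_scu):
            return self.slow_responder_option
        return self.responder_option


# The adaptive intervention in Figure 1 (labels are illustrative).
FIGURE_1 = AdaptiveIntervention(
    stage1="DTT, 2 sessions/week",
    stage1_weeks=12,
    improvement_cutoff=0.25,
    responder_option="DTT, 2 sessions/week",
    slow_responder_option="DTT, 3 sessions/week",
)

print(FIGURE_1.stage2_option(baseline_scu=20, followup_scu=24))  # 20% gain -> DTT, 3 sessions/week
print(FIGURE_1.stage2_option(baseline_scu=20, followup_scu=25))  # 25% gain -> DTT, 2 sessions/week
```

Keeping each component as a separate field makes it explicit that changing any one of them (for example, the stage 1 duration) yields a different adaptive intervention.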

Figure 1.

An example of one adaptive intervention for improving language outcomes in children with autism who are minimally verbal. This adaptive intervention is typical of usual care (i.e., business-as-usual) in children with autism. This is an intervention design, not a study design.

The Promise of Optimizing Adaptive Interventions

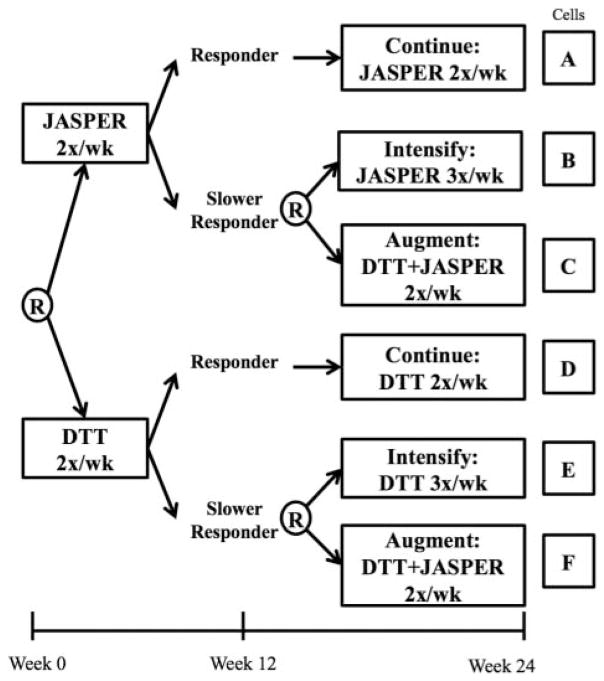

If interventions are to adapt to a student’s baseline and intermediate outcomes, then we would like those adaptations to best meet each student’s needs and to optimize the effectiveness of the entire sequence of interventions (Collins, Nahum-Shani, & Almirall, 2014). In the context of the example 24-week intervention in Figure 1, this may relate to scientific questions such as (Q1) “In stage 1, instead of offering DTT, should we offer a different intervention that specifically targets communication, such as the Joint Attention Symbolic Play Engagement and Regulation intervention (JASPER; Kasari et al., 2014), and who might benefit from this intervention (vs. DTT)?”; (Q2) “Should we wait 6 weeks instead of 12 weeks to decide whether a child is a slow responder to stage 1 treatment, and who might benefit from such a shorter (vs. longer) stage 1 duration?”; or (Q3) “Among slower responders to stage 1 treatment, instead of intensifying stage 1 treatment (to three sessions per week), should we combine JASPER and DTT (with DTT kept at twice weekly), and are there slower responders who might benefit more from this combination (vs. intensification)?” Questions Q1–Q3 concern the development of an optimized adaptive intervention. Their answers could lead to an improved adaptive intervention that is different from, or more individually tailored than, the one shown in Figure 1.
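Read together, Q1–Q3 each vary one component of Figure 1, so answering all three jointly means choosing among 2 × 2 × 2 = 8 candidate adaptive interventions, of which the one in Figure 1 is a single member. The sketch below simply enumerates that space. The option labels are illustrative shorthand rather than protocol definitions, and it assumes (as in Figure 1) that responders always continue their stage 1 treatment.

```python
from itertools import product

# Illustrative labels for the options raised by Q1-Q3 (not protocol definitions).
STAGE1_OPTIONS = ("DTT", "JASPER")                              # Q1: stage 1 treatment
STAGE1_WEEKS = (6, 12)                                          # Q2: when to assess response
SLOW_RESPONDER_OPTIONS = ("intensify", "combine JASPER + DTT")  # Q3: slow-responder strategy


def candidate_interventions():
    """Return every combination of the component options in Q1-Q3.

    Assumes responders always continue their stage 1 treatment, as in Figure 1.
    """
    return [
        {
            "stage1": stage1,
            "stage1_weeks": weeks,
            "responders": "continue " + stage1,
            "slow_responders": slow,
        }
        for stage1, weeks, slow in product(
            STAGE1_OPTIONS, STAGE1_WEEKS, SLOW_RESPONDER_OPTIONS
        )
    ]


candidates = candidate_interventions()
print(len(candidates))  # 8

figure_1 = {
    "stage1": "DTT",
    "stage1_weeks": 12,
    "responders": "continue DTT",
    "slow_responders": "intensify",
}
print(figure_1 in candidates)  # True
```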

Not All Research on Developing an Optimized Adaptive Intervention Requires a SMART

SMARTs are but one type of experimental design that could be used to answer research questions, such as Q1–Q3, about the construction of adaptive interventions. As always, the choice of study design must depend on which question (or set of questions) the investigator is most interested in addressing. One example is the design in Figure 2, which can be used to investigate Q1 only; this design is a standard two-arm trial with a head-to-head comparison of two adaptive interventions. A second example is the design in Figure 3, which can be used to investigate both Q1 and Q3; this design is a SMART. Other study designs are possible. For example, to address Q3 only, in the context of DTT, we could remove the initial randomization in Figure 3 so that all children in the study receive DTT in stage 1; this is a single-randomization study (not a SMART). Or, to address Q2 only, we could replace the randomization in Figure 2 with a randomization to two different stage 1 durations for DTT (6 weeks vs. 12 weeks); again, this is a single-randomization study (not a SMART). Or, to address both Q1 and Q2 (i.e., to understand how the type of stage 1 treatment and its duration work with or against each other to affect outcomes), the first randomization could be a 2 × 2 factorial design (Collins, Dziak, Kugler, & Trail, 2014), randomizing children to DTT vs. JASPER crossed with 6 vs. 12 weeks.
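For the 2 × 2 factorial option, a minimal randomization sketch follows, assuming equal allocation and a sample size divisible by four (both our assumptions; the function and child identifiers are hypothetical). Each child is assigned one level of each factor, so every child contributes to both the DTT vs. JASPER comparison and the 6 vs. 12 week comparison, which is where the efficiency of a factorial design comes from.

```python
import random
from collections import Counter
from itertools import product

# The four cells of the 2 x 2 factorial: stage 1 treatment crossed with duration (weeks).
CELLS = list(product(("DTT", "JASPER"), (6, 12)))


def factorial_randomize(child_ids, seed=None):
    """Randomly assign children to the four cells in equal numbers."""
    if len(child_ids) % len(CELLS) != 0:
        raise ValueError("number of children must be a multiple of 4")
    rng = random.Random(seed)
    labels = CELLS * (len(child_ids) // len(CELLS))  # equal allocation
    rng.shuffle(labels)                              # random order of cell labels
    return dict(zip(child_ids, labels))


children = [f"child{i:02d}" for i in range(40)]
assignment = factorial_randomize(children, seed=2018)

cell_counts = Counter(assignment.values())                      # 10 children per cell
treatment_counts = Counter(t for t, _ in assignment.values())   # 20 DTT, 20 JASPER
duration_counts = Counter(w for _, w in assignment.values())    # 20 at 6 weeks, 20 at 12 weeks
print(cell_counts)
```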

Figure 2.

An example 2-arm randomized trial comparing two adaptive interventions that differ in terms of their stage 1 intervention but are similar in terms of stage 2 strategy (continue intervention for responders, or intensify intervention for slower responders). The adaptive intervention that begins with DTT is the same one shown in Figure 1.

Figure 3.

An example sequential multiple assignment randomized trial (SMART). This SMART includes four embedded adaptive interventions, including the one shown in Figure 1 (cells D and E).
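One consequence of a SMART structured like Figure 3 is that responders are not re-randomized, so each responder's data are consistent with two of the four embedded adaptive interventions. The sketch below estimates the mean outcome under each embedded adaptive intervention with inverse-probability weights, in the spirit of the weighting approach described by Nahum-Shani et al. (2012). It assumes (these are our assumptions, not details stated in the text) that both randomizations are 1:1, that only slow responders are re-randomized (to intensify vs. combine JASPER and DTT), and that the outcome is a single end-of-study score. The class, the function names, and the toy numbers are hypothetical and are not study results.

```python
from dataclasses import dataclass
from typing import Optional

STAGE1 = ("DTT", "JASPER")
STAGE2_SLOW = ("intensify", "combine")
EMBEDDED_AIS = [(a1, a2) for a1 in STAGE1 for a2 in STAGE2_SLOW]  # 4 embedded AIs


@dataclass(frozen=True)
class Child:
    stage1: str             # first randomization (assumed 1:1)
    slow_responder: bool    # tailoring variable at the end of stage 1
    stage2: Optional[str]   # second randomization (assumed 1:1); None for responders
    outcome: float          # hypothetical end-of-study outcome


def consistent(child: Child, ai: tuple) -> bool:
    """Is the child's observed treatment sequence consistent with this AI?"""
    a1, a2 = ai
    if child.stage1 != a1:
        return False
    # Responders were not re-randomized, so they match both AIs sharing their stage 1.
    return not child.slow_responder or child.stage2 == a2


def weight(child: Child) -> float:
    """Inverse of the probability of the child's observed treatment sequence."""
    return 4.0 if child.slow_responder else 2.0  # 1/(1/2 * 1/2) vs. 1/(1/2)


def ai_mean(children, ai) -> float:
    """Weighted mean outcome among children consistent with the given AI."""
    matched = [c for c in children if consistent(c, ai)]
    total_weight = sum(weight(c) for c in matched)
    return sum(weight(c) * c.outcome for c in matched) / total_weight


# Toy data for illustration only.
toy = [
    Child("DTT", False, None, 30.0),
    Child("DTT", True, "intensify", 18.0),
    Child("DTT", True, "combine", 24.0),
    Child("JASPER", False, None, 32.0),
    Child("JASPER", True, "intensify", 20.0),
    Child("JASPER", True, "combine", 26.0),
]
for ai in EMBEDDED_AIS:
    print(ai, ai_mean(toy, ai))  # 22.0, 26.0, 24.0, 28.0 in this order
```

Here the ("DTT", "intensify") entry corresponds to the adaptive intervention in Figure 1. In practice, analyses of this kind are usually carried out with weighted regression and standard errors that account for responders contributing to more than one embedded intervention (see Nahum-Shani et al., 2012); the simple weighted means above only illustrate the idea.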

Preparing vs. Empirically Developing vs. Evaluating an Adaptive Intervention

Q1–Q3 are fundamentally different from questions related to preparing for an adaptive intervention, for example, understanding feasibility and acceptability considerations in developing (the components of) an adaptive intervention using a pilot study (Almirall, Compton, Gunlicks-Stoessel, Duan, & Murphy, 2012). Q1–Q3 are also fundamentally different from questions related to evaluating an already developed adaptive intervention, e.g., estimating the effect of an (already optimized) adaptive intervention versus a suitable control group in a conventional, confirmatory two-arm randomized trial. The designs in Figures 2 and 3 are neither preparation/pilot studies nor conventional confirmatory randomized trials. Rather, they are studies meant to provide the data necessary to design an optimized adaptive intervention, that is, to evaluate the components that make up an adaptive intervention. The ultimate goal of such studies is to generate one or more proposed adaptive interventions that could be evaluated in a subsequent conventional confirmatory randomized trial. The distinction between research that focuses on preparation, optimization, and evaluation (and the process of moving between them) is at the core of the multiphase optimization strategy framework (MOST; Collins et al., 2014; Collins, Kugler, & Gwadz, 2015; there are also two forthcoming books on this topic, see http://www.sbm.org/publications/outlook/issues/fall-2016/obi-sig/full-article), which Hedges (2018) also mentions.

Optimization Studies May Involve Different Considerations as Compared to Conventional, Confirmatory Randomized Trials

For example, it is not uncommon for a conventional, confirmatory trial to include a business-as-usual (BAU) control condition in which it is unknown a priori what (sequence of) interventions a student might receive. This would be unusual, however, for a trial seeking to develop an optimized adaptive intervention. More typically, such trials embed a well-operationalized version of BAU in the design, so that we can learn how best to improve on the BAU intervention. All of the example designs discussed earlier share this feature: the adaptive intervention in Figure 1, which resembles typical practice for children with autism (Kasari & Smith, 2016; Smith, 2010), is embedded within each of them. Another example is that, in optimization studies, we may be more willing to prioritize reducing Type II errors (false negatives) over controlling Type I errors (false positives); strict Type I error control is more typical of confirmatory trials (e.g., Nahum-Shani et al., 2017).
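The trade-off between the two error types can be made concrete with the usual normal approximation for a two-arm comparison of means, where power is approximately $\Phi\left(d\sqrt{n/2} - z_{1-\alpha/2}\right)$ for standardized effect size $d$ and $n$ children per arm. At a fixed sample size, relaxing the Type I error rate $\alpha$ raises power, that is, lowers the Type II error rate. The sketch below assumes $d = 0.5$ and 40 children per arm purely for illustration, ignores the negligible chance of rejecting in the wrong direction, and is a generic two-arm calculation rather than a SMART-specific sample size formula.

```python
import math
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def approx_power(effect_size: float, n_per_arm: int, alpha: float) -> float:
    """Approximate power of a two-sided, two-sample z-test for a standardized
    mean difference, ignoring rejections in the wrong direction."""
    z_crit = STANDARD_NORMAL.inv_cdf(1 - alpha / 2)
    noncentrality = effect_size * math.sqrt(n_per_arm / 2)
    return STANDARD_NORMAL.cdf(noncentrality - z_crit)


for alpha in (0.05, 0.20):
    power = approx_power(effect_size=0.5, n_per_arm=40, alpha=alpha)
    print(f"alpha={alpha:.2f}  power={power:.2f}  type II error={1 - power:.2f}")
```

Under these illustrative assumptions, moving from $\alpha = 0.05$ to $\alpha = 0.20$ raises power from roughly 0.61 to roughly 0.83, so the Type II error rate falls from about 0.39 to about 0.17 without enrolling more children.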

Advancing Research on Adaptive Interventions in Education May Require the Continued Development of More Dynamic Theories of Change

Theories of change (together with practical experience, expertise, or prior data) play a critical role in identifying research questions and, therefore, in selecting an appropriate experimental design. Yet, in our experience conducting research on adaptive interventions, not all theories of change are equally useful for that purpose. To guide the construction of adaptive interventions, theories of change should not only articulate the mechanisms underlying student learning outcomes, but also specify when, or how often, meaningful changes in these mechanisms (or in intermediate outcomes) are expected to occur. Time must be explicit enough in these theories to guide the development of interventions that modify treatment over time.

Optimization Studies Do Not Fit Clearly into IES’s Classification Scheme

In its external grants program, IES distinguishes between development studies, which develop interventions and conduct at most small-scale pilot studies of feasibility and acceptability, and larger-scale evaluation studies, which test the efficacy or effectiveness of already developed interventions. Applied to adaptive interventions, development studies would provide the components, and efficacy/effectiveness trials would test the finalized sequence of interventions. It is not clear where work to optimally piece components together into an adaptive intervention fits in the IES scheme. Given the importance of the IES grants program for promoting rigorous studies of education interventions (Hedges, 2018), a key step toward promoting the use of well-designed and well-tested adaptive interventions may be a change in the IES grants program that clearly specifies a funding outlet for optimization studies aimed at evaluating intervention components, such as SMARTs and related designs.

Acknowledgments

We would like to thank Elizabeth Stuart and Sean Reardon for the invitation to comment on Dr. Hedges’s article.

Funding

Funding was provided by the National Institutes of Health (P50DA039838, R01DA039901, R01HD073975, R01DK108678, R01AA023187, R01AA022113, and U54EB020404) and the Institute of Education Sciences (R324U150001).

References

- Almirall D, Chronis-Tuscano A. Adaptive interventions in child and adolescent mental health. Journal of Clinical Child & Adolescent Psychology. 2016;45(4):383–395. doi: 10.1080/15374416.2016.1152555.

- Almirall D, Compton SN, Gunlicks-Stoessel M, Duan N, Murphy SA. Designing a pilot sequential multiple assignment randomized trial for developing an adaptive treatment strategy. Statistics in Medicine. 2012;31(17):1887–1902. doi: 10.1002/sim.4512.

- Bierman KL, Nix RL, Maples JJ, Murphy SA. Examining clinical judgment in an adaptive intervention design: The fast track program. Journal of Consulting and Clinical Psychology. 2006;74(3):468–481. doi: 10.1037/0022-006X.74.3.468.

- Collins LM, Dziak JJ, Kugler KC, Trail JB. Factorial experiments: Efficient tools for evaluation of intervention components. American Journal of Preventive Medicine. 2014;47(4):498–504. doi: 10.1016/j.amepre.2014.06.021.

- Collins LM, Kugler KC, Gwadz MV. Optimization of multicomponent behavioral and biobehavioral interventions for the prevention and treatment of HIV/AIDS. AIDS and Behavior. 2015;20(Supp 1):197–214. doi: 10.1007/s10461-015-1145-4.

- Collins LM, Murphy SA, Bierman KL. A conceptual framework for adaptive preventive interventions. Prevention Science. 2004;5(3):185–196. doi: 10.1023/B:PREV.0000037641.26017.00.

- Collins LM, Nahum-Shani I, Almirall D. Optimization of behavioral dynamic treatment regimens based on the sequential, multiple assignment, randomized trial (SMART). Clinical Trials. 2014;11(4):426–434. doi: 10.1177/1740774514536795.

- Connor CM, Morrison FJ, Schatschneider C, Toste JR, Lundblom E, Crowe EC, Fishman B. Effective classroom instruction: Implications of child characteristics by reading instruction interactions on first graders’ word reading achievement. Journal of Research on Educational Effectiveness. 2011;4(3):173–207. doi: 10.1080/19345747.2010.510179.

- Deno SL. Developments in curriculum-based measurement. The Journal of Special Education. 2003;37(3):184–192. doi: 10.1177/00224669030370030801.

- Fuchs D, Fuchs LS. Critique of the National Evaluation of Response to Intervention: A case for simpler frameworks. Exceptional Children. 2017;83(3):255–268. doi: 10.1177/0014402917693580.

- Hedges LV. Challenges in building usable knowledge in education. Journal of Research on Educational Effectiveness. 2018;11(1):1–21.

- Horner RH, Sugai G, Smolkowski K, Eber L, Nakasato J, Todd AW, Esperanza J. A randomized, wait-list controlled effectiveness trial assessing school-wide positive behavior support in elementary schools. Journal of Positive Behavior Interventions. 2009;11(3):133–144. doi: 10.1177/1098300709332067.

- Kasari C, Lawton K, Shih W, Landa R, Lord C, Orlich F, … Senturk D. Caregiver-mediated intervention for low-resourced preschoolers with autism: An RCT. Pediatrics. 2014;134(1):72–79. doi: 10.1542/peds.2013-3229.

- Kasari C, Smith T. Forest for the trees: Evidence-based practices in ASD. Clinical Psychology: Science and Practice. 2016;23(3):260–264.

- Kosorok MR, Moodie EE, editors. Adaptive treatment strategies in practice: Planning trials and analyzing data for personalized medicine. Series on Statistics and Applied Probability. Society for Industrial and Applied Mathematics; Philadelphia, PA: 2016.

- Lavori PW, Dawson R. Dynamic treatment regimes: Practical design considerations. Clinical Trials. 2004;1(1):9–20. doi: 10.1191/1740774504cn002oa.

- Lu X, Nahum-Shani I, Kasari C, Lynch KG, Oslin DW, Pelham WE, Almirall D. Comparing dynamic treatment regimens using repeated-measures outcomes: Modeling considerations in SMART studies. Statistics in Medicine. 2016;35(10):1595–1615. doi: 10.1002/sim.6819.

- Murphy SA. An experimental design for the development of adaptive treatment strategies. Statistics in Medicine. 2005;24(10):1455–1481. doi: 10.1002/sim.2022.

- Nahum-Shani I, Ertefaie A, Lu XL, Lynch KG, McKay JR, Oslin DW, Almirall D. A SMART data analysis method for constructing adaptive treatment strategies for substance use disorders. Addiction. 2017;112(5):901–909. doi: 10.1111/add.13743.

- Nahum-Shani I, Qian M, Almirall D, Pelham WE, Gnagy B, Fabiano GA, Murphy SA. Experimental design and primary data analysis methods for comparing adaptive interventions. Psychological Methods. 2012;17(4):457. doi: 10.1037/a0029372.

- NeCamp T, Kilbourne A, Almirall D. Comparing cluster-level dynamic treatment regimens using sequential, multiple assignment, randomized trials: Regression estimation and sample size considerations. Statistical Methods in Medical Research. 2017;26(4):1572–1589. doi: 10.1177/0962280217708654.

- Smith T. Discrete trial training in the treatment of autism. Focus on Autism and Other Developmental Disabilities. 2001;16(2):86–92. doi: 10.1177/108835760101600204.

- Smith T. Early and intensive behavioral intervention in autism. In: Weisz JR, Kazdin AE, editors. Evidence-based psychotherapies for children and adolescents. Guilford Press; New York, NY: 2010.

- Stormshak EA, Connell A, Dishion TJ. An adaptive approach to family-centered intervention in schools: Linking intervention engagement to academic outcomes in middle and high school. Prevention Science. 2009;10(3):221–235. doi: 10.1007/s11121-009-0131-3.