Abstract

Minigames capturing the essence of Public Goods experiments show that even in the absence of rationality assumptions, both punishment and reward will fail to bring about prosocial behavior. This result holds in particular for the well-known Ultimatum Game, which emerges as a special case. But reputation can induce fairness and cooperation in populations adapting through learning or imitation. Indeed, the inclusion of reputation effects in the corresponding dynamical models leads to the evolution of economically productive behavior, with agents contributing to the public good and either punishing those who do not or rewarding those who do. Reward and punishment correspond to two types of bifurcation with intriguing complementarity. The analysis suggests that reputation is essential for fostering social behavior among selfish agents, and that it is considerably more effective with punishment than with reward.

Experimental economics relies increasingly on simple games to exhibit behavior that is blatantly at odds with the assumption that players are uniquely attempting to maximize their own utility (1–4). We briefly describe two particularly well-known games that highlight the prevalence of fairness and solidarity, without delving into experimental details and variations.

In the Ultimatum Game, the experimenter offers a certain sum of money to two players, provided they can split it among themselves according to specific rules. One randomly chosen player (the proposer) is asked to propose how to divide the money. The coplayer (the responder) can either accept this proposal, in which case the money is accordingly divided, or else reject the offer, in which case both players get nothing. The game is not repeated. Because a “rational” responder ought to accept any offer, as long as it is positive, a selfish proposer who thinks that the responder is rational, in this sense, should offer the minimal positive sum. As has been well documented in many experiments, this is not how humans behave, in general. Many proposers offer close to one-half of the sum, and responders who are offered less than one-third often reject the offer (5, 6).

In the Public Goods Game, the experimenter asks each of N players to invest some amount of money into a common pool. This money is then multiplied by a factor r (with 1 < r < N) and divided equally among the N players, irrespective of their contribution. The selfish strategy is obviously to invest nothing, because only a fraction r/N < 1 of each contribution returns to the donor. Nevertheless, a sizeable proportion of players invest a substantial amount. This economically productive tendency is further enhanced if the players, after the game, are allowed to impose fines on their coplayers. These fines must be paid to the experimenter, not to the punisher. In fact, imposing a fine costs a certain fee to the punisher (which also goes to the experimenter). Punishing is therefore an unselfish activity. Nevertheless, even in the absence of future interactions, many players are ready to punish free riders, and this behavior has the obvious effect of increasing the contributions to the common pool (refs. 3, 4, 7–9; for the role of punishment in animal societies, see ref. 10).
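The incentive structure described above can be illustrated with a short sketch. The function name `public_goods_payoffs` and the unit `endowment` are assumptions made for illustration only; they are not taken from any experimental protocol.

```python
def public_goods_payoffs(contributions, r, endowment=1.0):
    """Return each player's payoff in one round of the Public Goods Game.

    Every contribution is multiplied by r and the pool is split equally,
    so a player recovers only the fraction r/N of her own contribution.
    The endowment is a hypothetical starting sum, assumed equal for all.
    """
    n = len(contributions)
    if not 1 < r < n:
        raise ValueError("the social dilemma requires 1 < r < N")
    share = r * sum(contributions) / n
    return [endowment - c + share for c in contributions]


# Four players, r = 2: three invest their whole endowment, one free rides.
payoffs = public_goods_payoffs([1.0, 1.0, 1.0, 0.0], r=2.0)
print(payoffs)
```

In this example the free rider earns more than each contributor, although everybody would be better off under full contribution than under no contribution at all.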

Simple as they are, both games have a large number of possible strategies. For the Ultimatum Game, these consist in the amount offered (when proposer) or the aspiration level (when responder); any amount below the aspiration level is rejected. For the Public Goods Game with Punishment, the strategies are defined by the size of the contribution and the fines meted out to the coplayers. To achieve a better theoretical understanding, it is useful to reduce these simple games even further and to consider minigames with binary options only. In doing this, we are following a distinguished file of predecessors (5, 11, 12). We shall then use the results from Gaunersdorfer et al. (13) (see also refs. 14, 15) to analyze these games by studying the corresponding replicator dynamics. It turns out that the Ultimatum minigame is just a special case of the Public Goods with Punishment minigame. Evolutionary game theory—like the classical theory—predicts the selfish “rational” outcome. But if an arbitrarily small reputation effect is included in the analysis, a bifurcation of the dynamics allows for an outcome that is more “social” and closer to what is actually observed in experiments.

We analyze similarly a minigame describing the Public Goods Game with Rewards (in which case the recipient of a gift has the option of returning part of it to the donor). Again, evolutionary game theory and classical theory predict the selfish outcome: no gifts and no rewards. This time, the corresponding reputation effect introduces another type of bifurcation. The outcome is more complex and less stable than in the punishment case.

It is tempting to suggest that this finding reflects why, in experiments, results obtained by including rewards are considerably less pronounced than those describing punishment (Ernst Fehr, personal communication). We concentrate in this note on the mathematical aspects of the minigames, but we argue in the discussion that reduction to a minigame is also interesting for experimenters, because the options are more clear-cut.

Public Goods with Punishment

For the minigame reflecting the Public Goods Game, we shall assume that there are only two players, and that both can send a gift g to their coplayer at a cost −c to themselves, with 0 < c < g. The players have to decide simultaneously whether to send the gift to their coplayer. They are effectively engaged in a Prisoner's Dilemma. We continue to call it a Public Goods Game, although the reduction to two players may affect an essential aspect of the game.

After this interaction, they are offered the opportunity to punish their coplayer by imposing a fine. The fine amounts to a loss −β to the punished player, but it entails a cost −γ to the punisher. Defecting and refusing to punish is obviously the dominating solution.

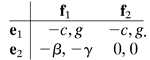

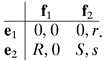

If we assume that players can impose their fine conditionally, fining only those who have failed to help them, the long-term outcome will still be the same as before: no prosocial behavior emerges. Indeed, let us label with e1 those players who cooperate by sending a gift to their coplayer and with e2 those who do not, i.e., who defect; similarly, let f1 denote those who punish defectors and f2 those who do not. The payoff matrix is given by

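$$
\begin{array}{c|cc}
 & f_1 & f_2 \\ \hline
e_1 & (-c,\; g) & (-c,\; g) \\
e_2 & (-\beta,\; -\gamma) & (0,\; 0)
\end{array}
\tag{1}
$$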

Here, the first number in each entry is the payoff for the corresponding row player and the second number, for the column player.

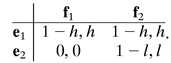

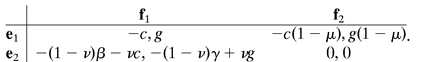

For the minigame corresponding to the Ultimatum Game, we normalize the sum to be divided as 1 and assume that proposers have to decide between two offers only, high and low. Thus proposers have to choose between option e1 (high offer h) and e2 (low offer l) with 0 < l < h < 1. Responders are of two types, namely f1 (accept high offers only) and f2 (accept every offer). In this case, the payoff matrix is

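$$
\begin{array}{c|cc}
 & f_1 & f_2 \\ \hline
e_1 & (1 - h,\; h) & (1 - h,\; h) \\
e_2 & (0,\; 0) & (1 - l,\; l)
\end{array}
\tag{2}
$$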

A Minicourse on Minigames

More generally, let us assume that players are in two roles, I and II, such that players in role I interact only with players in role II and vice-versa. Let there be two possible options, e1 and e2, in role I, and f1 and f2 in role II, and let the payoff matrix be

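$$
\begin{array}{c|cc}
 & f_1 & f_2 \\ \hline
e_1 & (A,\; a) & (B,\; b) \\
e_2 & (C,\; c) & (D,\; d)
\end{array}
\tag{3}
$$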

If players find themselves in both roles, their strategies are G1 = e1f1, G2 = e2f1, G3 = e2f2 and G4 = e1f2. Therefore we obtain a symmetric game, and the payoff for a player using Gi against a player using Gj is given by the (i, j)-entry of the matrix

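$$
M = \begin{pmatrix}
A + a & A + c & B + c & B + a \\
C + a & C + c & D + c & D + a \\
C + b & C + d & D + d & D + b \\
A + b & A + d & B + d & B + b
\end{pmatrix}
\tag{4}
$$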

For instance, a G1 player meeting a G3 opponent plays e1 against the opponent's f2 and obtains B, and plays f1 against the opponent's e2, which yields c. In the Public Goods with Punishment minigame, the two roles are that of potential donor and potential punisher, and both players play both roles. In the Ultimatum Game, a player plays only one role and the coplayer the other, but because they find themselves with equal probability in one or the other role, we only have to multiply the previous matrix with the factor 1/2 to get the expected payoff values. We shall omit this factor in the following.
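The construction of the symmetric game from the two roles can be sketched as follows. The function name `symmetrize` and the nested-list layout are illustrative assumptions; the numerical entries for the punishment minigame are our reading of the verbal description (gift g, cost c, fine β, fee γ) with the parameter values used in the figures.

```python
def symmetrize(role1, role2):
    """Build the 4x4 payoff matrix for G1..G4 from a 2x2 bimatrix.

    role1[i][j] is the role I payoff of option e_(i+1) against f_(j+1);
    role2[i][j] is the role II payoff of option f_(j+1) against e_(i+1).
    """
    # Strategy order G1 = e1f1, G2 = e2f1, G3 = e2f2, G4 = e1f2,
    # stored as (index of the e-option, index of the f-option).
    strategies = [(0, 0), (1, 0), (1, 1), (0, 1)]
    return [[role1[e_me][f_opp] + role2[e_opp][f_me]
             for (e_opp, f_opp) in strategies]
            for (e_me, f_me) in strategies]


# Public Goods with Punishment, c = 1, g = 3, beta = 2, gamma = 1 (assumed reading).
c, g, beta, gamma = 1, 3, 2, 1
role1 = [[-c, -c], [-beta, 0]]   # donor role: pays c, or risks the fine beta
role2 = [[g, g], [-gamma, 0]]    # punisher role: receives g, or pays the fee gamma
M = symmetrize(role1, role2)
for row in M:
    print(row)
```

The entry in row 1, column 3 is the payoff of a G1 player against a G3 opponent, that is, B + c in the general notation.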

We turn now to the standard version of evolutionary game theory, where we consider a large population of players who are randomly matched to play the game. We denote by xi(t) the frequency of strategy Gi at time t and assume that these frequencies change according to the success of the strategies. Thus the state x = (x1, x2, x3, x4) (with xi ≥ 0 and ∑ xi = 1) evolves in the unit simplex S4. The average payoff for strategy Gi is (Mx)i. We shall assume a particularly simple learning mechanism and postulate that the rate according to which a Gi-player switches to strategy Gj is proportional to the payoff difference (Mx)j − (Mx)i (and is 0 if the difference is negative). We then obtain the replicator equation (14, 16, 17),

$$
\dot{x}_i = x_i\bigl[(Mx)_i - \bar{M}\bigr]
\tag{5}
$$

for i = 1, 2, 3, 4, where M̄ = ∑ xj(Mx)j is the average payoff in the population. It is well known that the dynamics does not change if one modifies the payoff matrix M by replacing mij by mij − m1j. Thus, we can use, instead of Eq. 4, the matrix

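$$
M = \begin{pmatrix}
0 & 0 & 0 & 0 \\
R & R & S & S \\
R + r & R + s & S + s & S + r \\
r & s & s & r
\end{pmatrix}
\tag{6}
$$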

where R = C − A, r = b − a, S = D − B and s = d − c. Alternatively, we could have normalized the payoff matrix (Eq. 3) to

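$$
\begin{array}{c|cc}
 & f_1 & f_2 \\ \hline
e_1 & (0,\; 0) & (0,\; r) \\
e_2 & (R,\; 0) & (S,\; s)
\end{array}
\tag{7}
$$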
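That subtracting the first row from every row leaves the replicator dynamics unchanged can be checked directly. The sketch below uses exact rational arithmetic; the helper names are hypothetical, and the matrix is the one we obtain for the punishment minigame with c = 1, g = 3, β = 2, γ = 1, which is an assumption based on the verbal description of the payoffs.

```python
from fractions import Fraction


def replicator_rhs(x, M):
    """Right-hand side of the replicator equation: x_i * ((Mx)_i - mean payoff)."""
    n = len(x)
    fitness = [sum(M[i][j] * x[j] for j in range(n)) for i in range(n)]
    mean = sum(x[i] * fitness[i] for i in range(n))
    return [x[i] * (fitness[i] - mean) for i in range(n)]


def subtract_first_row(M):
    """Replace m_ij by m_ij - m_1j, the normalization described in the text."""
    n = len(M)
    return [[M[i][j] - M[0][j] for j in range(n)] for i in range(n)]


# Unnormalized matrix for the punishment minigame (assumed parameter values).
M = [[2, -2, -2, 2],
     [1, -3, -1, 3],
     [1, -2, 0, 3],
     [2, -1, -1, 2]]
N = subtract_first_row(M)

# An arbitrary interior state; the frequencies sum to 1.
x = [Fraction(2, 5), Fraction(3, 10), Fraction(1, 5), Fraction(1, 10)]
print(replicator_rhs(x, M) == replicator_rhs(x, N))
```

The normalized matrix `N` has the pattern of rows (0, 0, 0, 0), (R, R, S, S), (R + r, R + s, S + s, S + r), (r, s, s, r) with R = −1, S = 1, r = 0, s = 1.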

The matrix M has the property that m1j + m3j = m2j + m4j for each j, so that (Mx)1 + (Mx)3 = (Mx)2 + (Mx)4 for all x. From this equality follows that (x1x3)/(x2x4) is an invariant of motion for the replicator dynamics: the value of this ratio remains unchanged along every orbit. Hence the interior of the state simplex S4 is foliated by the invariant surfaces WK = {x ∈ S4: x1x3 = Kx2x4}, with 0 < K < ∞. Each such saddle-like surface is spanned by the frame G1 − G2 − G3 − G4 − G1 consisting of four edges of S4. The orientation of the flow on these edges can easily be obtained from the previous matrix. For instance, if R = 0, then the edge G1G2 consists of fixed points. If R > 0, the flow points from G1 towards G2 (G2 dominates G1 in the absence of the other strategies), and conversely from G2 to G1 if R < 0. Similarly, the orientation of the edge G2G3 is given by the sign of s, that of G3G4 by the sign of S, and that of G4G1 by the sign of r.
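The invariance of (x1x3)/(x2x4) can also be observed numerically. The following is a minimal sketch, assuming a classical fourth-order Runge-Kutta integrator with a fixed step; the function names, the step size, and the initial state are arbitrary choices for illustration.

```python
def normalized_matrix(R, S, r, s):
    """Normalized payoff matrix with rows (0), (R,R,S,S), (R+r,R+s,S+s,S+r), (r,s,s,r)."""
    return [[0, 0, 0, 0],
            [R, R, S, S],
            [R + r, R + s, S + s, S + r],
            [r, s, s, r]]


def replicator_rhs(x, M):
    fitness = [sum(M[i][j] * x[j] for j in range(4)) for i in range(4)]
    mean = sum(x[i] * fitness[i] for i in range(4))
    return [x[i] * (fitness[i] - mean) for i in range(4)]


def rk4_step(x, M, dt):
    """One classical Runge-Kutta step for the replicator equation."""
    k1 = replicator_rhs(x, M)
    k2 = replicator_rhs([x[i] + 0.5 * dt * k1[i] for i in range(4)], M)
    k3 = replicator_rhs([x[i] + 0.5 * dt * k2[i] for i in range(4)], M)
    k4 = replicator_rhs([x[i] + dt * k3[i] for i in range(4)], M)
    return [x[i] + dt * (k1[i] + 2 * k2[i] + 2 * k3[i] + k4[i]) / 6
            for i in range(4)]


def integrate(x0, M, dt=0.01, steps=200):
    x = list(x0)
    for _ in range(steps):
        x = rk4_step(x, M, dt)
    return x


def invariant(x):
    """The ratio K = x1*x3 / (x2*x4) that labels the surface W_K."""
    return x[0] * x[2] / (x[1] * x[3])


M = normalized_matrix(R=-0.9, S=0.9, r=-0.3, s=0.6)
x0 = [0.4, 0.3, 0.2, 0.1]
x_end = integrate(x0, M)
print(invariant(x0), invariant(x_end))
```

Up to the discretization error of the integrator, the two printed values agree, so the orbit stays on the surface WK on which it started.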

Generically, the parameters R, S, r, and s are nonzero. Therefore we have 16 orientations of G1G2G3G4, which, by symmetry, can be reduced to 4. In Gaunersdorfer et al. (13), all possible dynamics for the generic case have been classified.

Public Goods with Punishment and Ultimatum Minigames

If we apply this to the Public Goods with Punishment minigame, we find R = c − β, S = c, r = 0, and s = γ. For the Ultimatum minigame, we get R = −(1 − h), S = h − l, r = 0, and s = l.

In fact, the Ultimatum minigame is a Public Goods minigame, with l = γ, β = 1 − l, and g = c = h − l. Intuitively, this identification simply means that in the Ultimatum minigame, the gift consists in making the high instead of the low offer. The benefit to the recipient (i.e., the responder) h − l is equal to the cost to the donor (i.e., the proposer). The punishment consists in refusing the offer. This costs the responder the amount l (which had been offered to him) and punishes the proposer by the amount 1 − l, which can be large if the offer has been dismal.
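The correspondence can be verified by comparing the four edge parameters of the two minigames. The function names below are hypothetical, and the offers h = 2/5 and l = 1/10 are arbitrary example values.

```python
from fractions import Fraction


def public_goods_parameters(c, beta, gamma):
    """(R, S, r, s) for the Public Goods with Punishment minigame."""
    return (c - beta, c, 0, gamma)


def ultimatum_parameters(h, l):
    """(R, S, r, s) for the Ultimatum minigame with high offer h and low offer l."""
    return (-(1 - h), h - l, 0, l)


h, l = Fraction(2, 5), Fraction(1, 10)
as_public_goods = public_goods_parameters(c=h - l, beta=1 - l, gamma=l)
print(ultimatum_parameters(h, l) == as_public_goods)
```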

We can therefore concentrate on the Public Goods minigame. Note that it is nongeneric (r is zero), because the punishment option is excluded after a cooperative move (and in the Ultimatum minigame, no responder rejects the high offer).

In the interior of S4 (more precisely, whenever x2 > 0 or x3 > 0) we have (Mx)4 > (Mx)1, and hence x4/x1 is increasing. Similarly, x3/x2 is increasing. Therefore, there is no fixed point in the interior of S4. Thus the fixed points in WK are the corners Gi and the points on the edge G1G4. To check which of these are Nash equilibria, it is enough to check whether they are saturated. We note that a fixed point z is said to be saturated if (Mz)i ≤ M̄ for all i, with zi = 0. G3 is saturated; G2 is not. A point x on the edge G1G4 is saturated whenever (Mx)3 ≤ [x1(Mx)1 + (1 − x1)(Mx)4], i.e., whenever x1 ≥ c/β (using (Mx)4 = (Mx)1). The condition (Mx)2 ≤ M̄ reduces to the same inequality. Thus if c > β, G3 is the only Nash equilibrium. This case is of little interest.

From now on, we restrict our attention to the case c < β: the fine costs more than the cooperative act. We note that this inequality is always satisfied for the Ultimatum minigame and for public transportation. We denote the point (c/β, 0, 0, (β − c)/β) with Q and see that the closed segment QG1 consists of Nash equilibria.

In this case, R < 0, and the orientation of the edges of WK is given by Fig. 1. On the edge G2G4, there exists another fixed point F = (0, c/(β + γ), 0, (β + γ − c)/(β + γ)). It is attracting on the edge and in the face G2G4G1 but repelling on the face G2G4G3. Finally, there is also a fixed point on the edge G1G3, namely the point P = ((c + γ)/(β + γ), 0, (β − c)/(β + γ), 0). It is attracting in the face spanned by that edge and G2 but repelling in the face spanned by that edge and G4. In the absence of other strategies, the strategies G1 and G3 are bistable. The strategy G1 is risk dominant (i.e., it has the larger basin of attraction) if 2c < β − γ. We note that in the special case of the Ultimatum minigame, this reduces to the condition h < 1/2.
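The positions of Q, F, and P and the risk-dominance condition can be checked with exact arithmetic. This is a sketch under the normalized payoffs R = c − β, S = c, r = 0, s = γ; the function names are assumptions made for illustration.

```python
from fractions import Fraction


def payoff_vector(x, R, S, r, s):
    """(Mx)_i for the normalized 4x4 matrix built from R, S, r, s."""
    M = [[0, 0, 0, 0],
         [R, R, S, S],
         [R + r, R + s, S + s, S + r],
         [r, s, s, r]]
    return [sum(M[i][j] * x[j] for j in range(4)) for i in range(4)]


def boundary_fixed_points(c, beta, gamma):
    """The points Q (edge G1G4), F (edge G2G4), and P (edge G1G3) for c < beta."""
    Q = (c / beta, 0, 0, (beta - c) / beta)
    F = (0, c / (beta + gamma), 0, (beta + gamma - c) / (beta + gamma))
    P = ((c + gamma) / (beta + gamma), 0, (beta - c) / (beta + gamma), 0)
    return Q, F, P


def g1_is_risk_dominant(c, beta, gamma):
    """G1 has the larger basin of attraction on the edge G1G3 if 2c < beta - gamma."""
    return 2 * c < beta - gamma


# Parameter values of Fig. 1.
c, beta, gamma = Fraction(1), Fraction(2), Fraction(1)
Q, F, P = boundary_fixed_points(c, beta, gamma)
at_P = payoff_vector(P, c - beta, c, 0, gamma)
at_F = payoff_vector(F, c - beta, c, 0, gamma)
print(Q, F, P)
print(at_P[0] == at_P[2], at_F[1] == at_F[3])
```

At P the strategies G1 and G3 earn the same payoff, and at F the same holds for G2 and G4, which is what makes these points rest points on their edges. For the parameter values of Fig. 1 the condition 2c < β − γ fails, so G1 is not risk dominant there.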

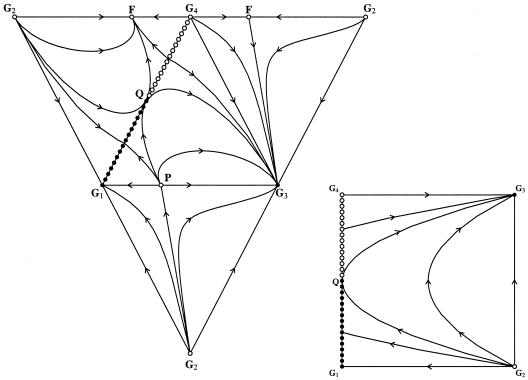

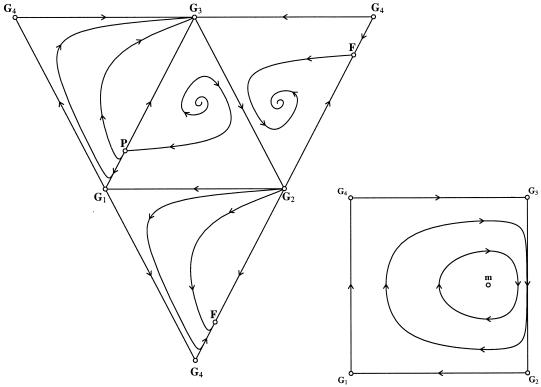

Figure 1.

Public Goods with Punishment but without Reputation. Dynamics on the four faces of the simplex S4 and on the invariant manifold WK with K = 1. The edge G1G4 is a line of fixed points. On G1Q, they are stable (filled circles) and on QG4 unstable (open circles). In addition, there are two saddle points P and F on the edges G1G3 and G2G4. The social state G1 (donations and punishment) and the asocial state G3 (no gifts, no punishment) are both stable. However, random shocks eventually drive the system to the asocial equilibrium G3. Parameters: c = 1, g = 3, β = 2, γ = 1, μ = ν = 0.

Apart from G3 and the segment QG1, there are no other Nash equilibria. Depending on the initial condition, orbits in the interior of S4 converge either to G3 or to a Nash equilibrium on QG1. Selective forces do not act on the edge G1G4, because it consists of fixed points only. But the state x fluctuates along the edge by neutral drift (reflecting random shocks of the system). Random shocks will also introduce occasionally a minority of a missing strategy. If this happens while x is in QG1, selection will send the state back to the edge, but a bit closer to Q (because x4/x1 increases). Once the state has reached the segment QG4 and a minority of G3 is introduced by chance, this minority will be favored by selection and eventually become fixed in the population. Thus in spite of the segment of Nash equilibria, the asocial state G3 will get established in the long run. This result plays the central role in Nowak et al. (18).

Bifurcation Through Reputation

In the Ultimatum Game and the Public Goods Game, experiments are usually performed under conditions of anonymity. The players do not know each other and are not supposed to interact again. But let us now introduce a small probability that players know the reputation of their coplayer and, in particular, whether the coplayer has failed to punish a defector on some previous occasion. This reputation creates a temptation to defect.

Let us assume that with a probability μ, cooperators (e1 players) defect against nonpunishers (f2 players), i.e., μ is the probability that (i) the f2 type becomes known, and (ii) the e1 type decides to defect. Let us similarly assume that with a small probability ν, defectors (e2 players) cooperate against punishers (f1 players), i.e., ν is the probability that (i) the f1 type becomes known, and (ii) the e2 type decides to cooperate. The payoff matrix for this “Public Goods with Second Thoughts” minigame becomes

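$$
\begin{array}{c|cc}
 & f_1 & f_2 \\ \hline
e_1 & (-c,\; g) & (-(1-\mu)c,\; (1-\mu)g) \\
e_2 & (-(1-\nu)\beta - \nu c,\; -(1-\nu)\gamma + \nu g) & (0,\; 0)
\end{array}
\tag{8}
$$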

We obtain R = (1 − ν)(c − β) < 0, S = c(1 − μ) > 0, s = γ − ν(g + γ), which is positive for small ν and r = −gμ < 0. Thus the edge G1G4 consists no longer of fixed points but of an orbit converging to G1. This is a generic situation, and we can use the results from Gaunersdorfer et al. (13).
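The four edge parameters can be recomputed from the expected payoffs of the two roles. The bimatrix entries in the sketch below are our reading of the verbal description of the reputation effect (an e1 player defects against f2 with probability μ, an e2 player cooperates against f1 with probability ν); they are an assumption, as are the function names.

```python
from fractions import Fraction


def second_thoughts_bimatrix(c, g, beta, gamma, mu, nu):
    """Expected payoffs in role I (donor) and role II (punisher)."""
    role1 = [[-c, -(1 - mu) * c],
             [-(1 - nu) * beta - nu * c, 0]]
    role2 = [[g, (1 - mu) * g],
             [-(1 - nu) * gamma + nu * g, 0]]
    return role1, role2


def edge_parameters(role1, role2):
    """R = C - A, S = D - B, r = b - a, s = d - c in the general notation."""
    (A, B), (C, D) = role1
    (a, b), (c_low, d) = role2
    return (C - A, D - B, b - a, d - c_low)


# Parameter values of Fig. 3.
c, g, beta, gamma = 1, 3, 2, 1
mu = nu = Fraction(1, 10)
R, S, r, s = edge_parameters(*second_thoughts_bimatrix(c, g, beta, gamma, mu, nu))
print(R, S, r, s)
```

With these values r is negative, so the edge G1G4 is no longer made up of fixed points.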

The fixed points in the interior of S4 must satisfy (Mx)1 = (Mx)2 = (Mx)3 = (Mx)4 (and, of course, x1 + x2 + x3 + x4 = 1). There exists now a line L of fixed points in the interior of S4, satisfying (Mx)1 = (Mx)2, which reduces to

$$
x_1 + x_2 = \frac{S}{S - R}
\tag{9}
$$

and also satisfying (Mx)1 = (Mx)4, which reduces to

$$
x_1 + x_4 = \frac{s}{s - r}
\tag{10}
$$

This yields solutions in the simplex S4 if, and only if, RS < 0 and rs < 0. Both conditions are satisfied for the new minigame. It is easily verified that the line of fixed points L is given by li = mi + p for i = 1, 3, and li = mi − p for i = 2, 4, with p as parameter and

$$
m = \frac{1}{(S - R)(s - r)}\,\bigl(Ss,\; -Sr,\; Rr,\; -Rs\bigr)
\tag{11}
$$

(see Fig. 2). Setting ν = 0 for simplicity, this yields in our case

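$$
m = \frac{1}{(\beta - c\mu)(\gamma + g\mu)}\,\bigl(c\gamma(1-\mu),\; cg\mu(1-\mu),\; (\beta - c)g\mu,\; (\beta - c)\gamma\bigr)
\tag{12}
$$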

and reduces for the Ultimatum minigame to

$$
m = \frac{1}{k}\,\bigl(l(h-l)(1-\mu),\; (h-l)^2\mu(1-\mu),\; (1-h)(h-l)\mu,\; (1-h)l\bigr)
\tag{13}
$$

with k = (1 − l − μ(h − l))(l + μ(h − l)). This line passes through the quadrangle G1G2G3G4 and hence intersects every WK in exactly one point (it intersects W1 in m). Because Rr > 0, this point is a saddle point for the replicator dynamics in the corresponding WK (see Fig. 3). On each surface, and therefore in the whole interior of S4, the dynamics is bistable, with attractors G1 and G3. Depending on the initial condition, every orbit, with the exception of a set of measure zero, converges to one of these two attractors (see Fig. 3).
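The line of fixed points can be checked numerically. The sketch below assumes that the point m is proportional to (Ss, −Sr, Rr, −Rs), normalized by (S − R)(s − r), which follows from solving (Mx)1 = (Mx)2 and (Mx)1 = (Mx)4 on the surface x1x3 = x2x4; the function names are hypothetical.

```python
from fractions import Fraction


def payoff_vector(x, R, S, r, s):
    M = [[0, 0, 0, 0],
         [R, R, S, S],
         [R + r, R + s, S + s, S + r],
         [r, s, s, r]]
    return [sum(M[i][j] * x[j] for j in range(4)) for i in range(4)]


def interior_fixed_point(R, S, r, s):
    """The point m where the line L of fixed points meets the surface W_1."""
    if not (R * S < 0 and r * s < 0):
        raise ValueError("an interior line of fixed points needs RS < 0 and rs < 0")
    denom = (S - R) * (s - r)
    return (S * s / denom, -S * r / denom, R * r / denom, -R * s / denom)


def point_on_line(m, p):
    """l_i = m_i + p for i = 1, 3 and l_i = m_i - p for i = 2, 4."""
    return (m[0] + p, m[1] - p, m[2] + p, m[3] - p)


# Edge parameters for c = 1, g = 3, beta = 2, gamma = 1, mu = nu = 1/10.
R, S, r, s = Fraction(-9, 10), Fraction(9, 10), Fraction(-3, 10), Fraction(3, 5)
m = interior_fixed_point(R, S, r, s)
shifted = point_on_line(m, Fraction(1, 12))
print(m)
print(payoff_vector(m, R, S, r, s), payoff_vector(shifted, R, S, r, s))
```

All four strategies earn the same payoff at m and at every other point of the line, so each such point is a rest point of the replicator equation.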

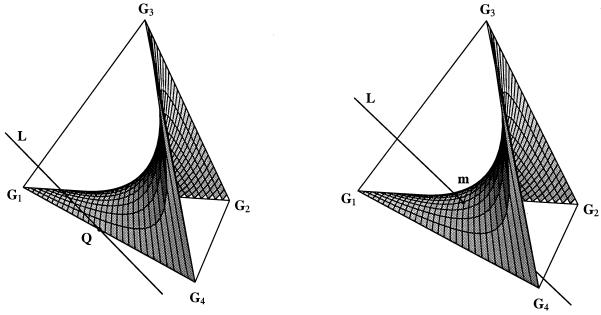

Figure 2.

Invariant manifold WK for K = 1 in the simplex S4. Without reputation (μ = ν = 0), the line of fixed points L intersects S4 in Q. With reputation (μ > 0 and/or ν > 0), L runs through the interior of S4 and intersects WK in m. The graphs refer to the punishment scenario, but in the case of reward, an analogous bifurcation occurs on the edge G2G3.

Figure 3.

Public Goods with Punishment and Reputation. Dynamics on the four faces of the simplex S4 and on the invariant manifold WK with K = 1. Introducing reputation produces a bistable situation. Depending on the initial configuration, the system ends up either close to the asocial equilibrium G3 or near the social equilibrium G1. Replacing the line of fixed points G1G4 (see Fig. 1), a transversal line of fixed points L runs through S4 and intersects WK in m (see Fig. 2). The position of m depends on the parameters and determines which corner, G1 or G3, corresponds to the “risk-dominant” solution. Parameters: c = 1, g = 3, β = 2, γ = 1, μ = 0.1, ν = 0.1.

For μ → 0, the point m, and consequently all interior fixed points (which are all Nash equilibria), converge to the point Q. At μ = 0, we observe a highly degenerate bifurcation. The (very short) segment of fixed points is suddenly replaced by a transversal line of fixed points, namely the edge G1G4, of which one segment, namely QG1, consists of Nash equilibria.

Thus, introducing an arbitrarily small perturbation μ (which is proportional to the probability of having information about the other player's punishing behavior) changes the long-term state of the population. Instead of converging in the long run to the asocial regime G3 (defect, do not punish), the dynamics has now two attractors, namely G3 and the social regime G1 (cooperate, punish defectors). For small μ and ν, this new attractor is even risk-dominant (in the sense that it has the larger basin of attraction on the edge G1G3) provided 2c < β − γ, which for the Ultimatum case reduces to h < 1/2. One can argue that, in this case, random shocks (or diffusion) will favor the social regime.

If μ = 1, i.e., if there is full knowledge about the type of the coplayer, we obtain S = 0. This case yields in some way the mirror image of the case μ = 0. G3G4 is now the fixed point edge; the points on Q̂G3 are Nash (with Q̂ = (0, 0, g/(g + γ), γ/(g + γ)) if we assume additionally that ν = 0), and fluctuations send the state ultimately to the unique other Nash equilibrium, namely G1, the social regime.

Reward and Reputation

Let us now consider another minigame, a variant of Public Goods with Second Thoughts, where reward replaces punishment. More precisely, two players are simultaneously asked whether they want to send a gift to the coplayer (as before, the benefit to the recipient is g, and the cost to the donor −c). Subsequently, recipients have the possibility to return a part of their gift to the donor. We assume that this costs them −γ and yields β to the coplayer (if γ = β, this is simply a payback). We assume 0 < c < β and 0 < γ < g. We label the players who reward their donor with f1 and those who don't with f2. We shall assume that with a small likelihood μ, cooperators defect if they know that the other player is not going to reward them, i.e., μ is the probability that (i) the f2 type becomes known, and (ii) the e1 type decides to defect. Similarly, we denote by ν the small likelihood that defectors cooperate if they know that they will be rewarded. (ν is the probability that (i) the f1 type becomes known and (ii) the e2 type reacts accordingly). We obtain the payoff matrix

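$$
\begin{array}{c|cc}
 & f_1 & f_2 \\ \hline
e_1 & (\beta - c,\; g - \gamma) & (-(1-\mu)c,\; (1-\mu)g) \\
e_2 & (\nu(\beta - c),\; \nu(g - \gamma)) & (0,\; 0)
\end{array}
\tag{14}
$$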

Now R = (c − β)(1 − ν) < 0, S = c(1 − μ) > 0, r = γ − gμ, which is positive if μ is small, and s = (γ − g)ν, which is negative.
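The signs of the four parameters fix the direction of the flow on the four edges of the frame. The sketch below recomputes them for the reward minigame; the bimatrix entries are our reading of the verbal description and the function names are assumptions made for illustration.

```python
from fractions import Fraction


def reward_bimatrix(c, g, beta, gamma, mu, nu):
    """Expected payoffs in role I (donor) and role II (potential rewarder)."""
    role1 = [[beta - c, -(1 - mu) * c],
             [nu * (beta - c), 0]]
    role2 = [[g - gamma, (1 - mu) * g],
             [nu * (g - gamma), 0]]
    return role1, role2


def edge_parameters(role1, role2):
    (A, B), (C, D) = role1
    (a, b), (c_low, d) = role2
    return (C - A, D - B, b - a, d - c_low)   # R, S, r, s


def edge_flows(R, S, r, s):
    """Direction of the flow on the edges G1G2, G2G3, G3G4, and G4G1."""
    def arrow(start, end, sign):
        # A positive sign means that `end` does better than `start` on this edge.
        if sign == 0:
            return start + end + " fixed"
        return start + "->" + end if sign > 0 else end + "->" + start
    return [arrow("G1", "G2", R), arrow("G2", "G3", s),
            arrow("G4", "G3", S), arrow("G1", "G4", r)]


c, g, beta, gamma = 1, 3, 2, 1
mu = nu = Fraction(1, 10)
R, S, r, s = edge_parameters(*reward_bimatrix(c, g, beta, gamma, mu, nu))
print(R, S, r, s)
print(edge_flows(R, S, r, s))
```

For ν > 0 the four arrows form a cycle G1, G4, G3, G2, G1, whereas for ν = 0 the edge G2G3 consists of fixed points.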

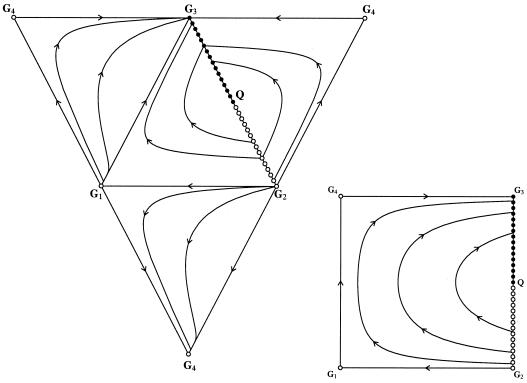

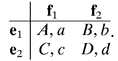

If ν = 0 (no clue that the coplayer rewards), then G2G3 consists of fixed points. As before, we see that the saturated fixed points (i.e., the Nash equilibria) on this edge form the segment QG3 (with Q = (0, c/β, (β − c)/β, 0) if μ is also 0). But now, the flow along the edges leads from G2 to G1, from there to G4, and from there to G3. All orbits in the interior have their α limit on G2Q and their ω limit on QG3. If a small random shock sends a state from the segment G2Q towards the interior, the replicator dynamics first amplifies the frequencies of the new strategies but then eliminates them again, leading to a state on QG3. If a small random shock sends a state from the segment QG3 towards the interior, the replicator dynamics sends it directly back to a state that is closer to G3. Eventually, with a sufficient number of random shocks, almost all orbits end up close to G3, the asocial state (see Fig. 4).

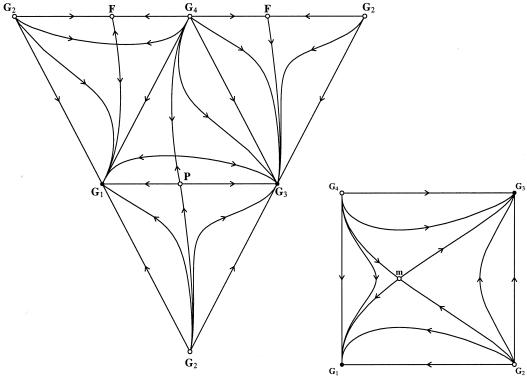

Figure 4.

Public Goods with Reward but without Reputation. Dynamics on the four faces of the simplex S4 and on the invariant manifold WK with K = 1. The edge G2G3 is a line of fixed points, stable on G3Q (closed circles) and unstable on QG2 (open circles). Random shocks eventually drive the system to the stable asocial segment G3Q, where no one makes any gifts but some players would reward a gift-giver. Parameters: c = 1, g = 3, β = 2, γ = 1, μ = ν = 0.

For ν > 0, the flow on the edge G2G3 leads towards G2, so that the frame spanning the saddle-type surfaces WK is cyclically oriented (see Fig. 5). As before, there exists now a line L of fixed points in the interior of S4. The surface W1 consists of periodic orbits. If Δ := (β − γ)(1 − ν) + (g − c)(μ − ν) is negative, all nonequilibrium orbits on WK with 0 < K < 1 spiral away from this line of fixed points. On WK, they spiral towards the heteroclinic cycle G1G2G3G4. All nonequilibrium orbits in WK with K > 1 spiral away from that heteroclinic cycle and towards the line of fixed points. If Δ is positive, the converse holds. If Δ = 0 (for instance, if β = γ and μ = ν), then all orbits off the edges and the line L of fixed points are periodic. For ν → 0, we obtain again a highly degenerate bifurcation replacing a one-dimensional continuum of fixed points (which shrinks towards Q as ν decreases) by another, namely the edge G2G3.
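The case distinction above can be summarized in a small helper. This is only a sketch of the classification stated in the text, assuming ν > 0; the function names and the returned labels are hypothetical.

```python
from fractions import Fraction


def delta(c, g, beta, gamma, mu, nu):
    """The quantity (beta - gamma)(1 - nu) + (g - c)(mu - nu)."""
    return (beta - gamma) * (1 - nu) + (g - c) * (mu - nu)


def long_run_behavior(d, K):
    """Fate of a nonequilibrium orbit on the surface W_K, given the sign of d."""
    if d == 0 or K == 1:
        return "periodic orbits"
    # For d < 0 the orbits with K < 1 approach the heteroclinic cycle and
    # those with K > 1 approach the line L; for d > 0 the roles are swapped.
    toward_cycle = (d < 0) == (K < 1)
    if toward_cycle:
        return "spirals toward the heteroclinic cycle"
    return "spirals toward the line of fixed points"


# Parameter values of Fig. 5.
d = delta(c=1, g=3, beta=2, gamma=1, mu=Fraction(1, 10), nu=Fraction(1, 10))
print(d)
print(long_run_behavior(d, Fraction(1, 2)))
print(long_run_behavior(d, 2))
```

For the parameter values of Fig. 5 the quantity is positive, so under this classification orbits with K < 1 approach the line of fixed points and orbits with K > 1 approach the heteroclinic cycle.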

Figure 5.

Public Goods with Reward and Reputation. Dynamics on the four faces of the simplex S4 and on the invariant manifold WK with K = 1. Introducing reputation destabilizes the asocial segment G3Q (see Fig. 4), leading to complex dynamical behavior. As before, the line of fixed points G2G3 is replaced by the transversal line L running through S4 and intersecting WK in m. For K = 1, periodic orbits appear with a center in m. Depending on the parameter values, Δ determines the dynamics on WK for K ≠ 1. If Δ < 0, then for K < 1, m turns into a source, and the state spirals towards the heteroclinic cycle G1G2G3G4 and for K > 1, m becomes a sink, and all states spiral inwards and converge to m. If Δ > 0, the converse holds, and for Δ = 0 all orbits are periodic. Small random shocks send the state from one manifold to another and hence change the value of K. Therefore, the system never converges. Parameters: c = 1, g = 3, β = 2, γ = 1, μ = 0.1, ν = 0.1.

We stress the highly unpredictable dynamics if ν > 0 and Δ ≠ 0. For one-half of the initial conditions, the replicator dynamics sends the state towards the line L of fixed points. But there, random fluctuations will eventually lead to the other half of the simplex, where the replicator dynamics leads towards the heteroclinic cycle G1G2G3G4. The population seems glued for a long time to one strategy, then suddenly switches to the next, remains there for a still longer time, etc. However, an arbitrarily small random shock will send the state back into the half-simplex where the state converges again to the line L of fixed points, etc. Not even the time average of the frequencies of strategies converges. One can say only that the most probable state of the population is either monomorphic (i.e., close to one corner of S4) or else close to the attracting part of the line of fixed points (all four types present, the proportion of cooperators larger among rewarders than among nonrewarders).

In this paper, we have concentrated on the replicator dynamics. There exist other plausible game dynamics, for instance, the best reply dynamics (see, e.g., ref. 14), where it is assumed that occasionally players switch to whatever is, among all pure available strategies, the best response in the current state of the population. Berger (19) has shown that almost all orbits converge in this case to m. We note that if the values of μ and ν are small, the frequency x1 + x4 of gift-givers is small.
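The last remark can be made quantitative. On the line of fixed points, the condition (Mx)1 = (Mx)4 reads r(x1 + x4) + s(x2 + x3) = 0, so that x1 + x4 = s/(s − r). The sketch below evaluates this expression for the reward minigame with r = γ − gμ and s = (γ − g)ν; the function name is an assumption made for illustration.

```python
from fractions import Fraction


def gift_giver_frequency(g, gamma, mu, nu):
    """Frequency x1 + x4 of gift-givers on the line of fixed points (reward case)."""
    r = gamma - g * mu
    s = (gamma - g) * nu
    if not r * s < 0:
        raise ValueError("an interior line of fixed points needs rs < 0")
    return s / (s - r)


for eps in (Fraction(1, 10), Fraction(1, 100)):
    print(eps, gift_giver_frequency(g=3, gamma=1, mu=eps, nu=eps))
```

With g = 3 and γ = 1, the frequency of gift-givers drops from 2/9 to 2/99 when μ and ν are reduced from 1/10 to 1/100.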

Discussion

In a minigame, players are in two roles with two options in each role. Such games lead to interesting dynamics on the simplex S4. The edges of this simplex span a family of saddle-like surfaces that foliate S4. The orientation on the edges is given by the payoff values, i.e., by the signs of R, S, r, and s. Generically, these numbers are all nonzero. But in many games (especially among those given in extensive form), there exists one option where the payoff is unaffected by the type of the other player. In the Public Goods with Punishment, this is the gift-giving option: the coplayer will never punish. In the Public Goods with Reward, it is the option to withhold the gift: the coplayer will never reward. In each case, one edge of S4 consists of fixed points, one segment of it (from a point Q up to a corner Gi of the edge) being made up of Nash equilibria. A small perturbation leading from a point x on QGi into the interior of the simplex (i.e., introducing missing strategies) is offset by the dynamics, i.e., the new strategies are eliminated again and the state returns to QGi. But in one case, the state is closer to Q than before; in the other case, it is further away. The corresponding bifurcation replaces the fixed points on that edge by a continuum of fixed points, which, in one case, are saddle points (on the invariant surface WK) and in the other case have complex eigenvalues. There are two rather distinct types of long-term behavior—in one case, bistability, and in the other case, a highly complex and unpredictable oscillatory behavior.

It is obviously easy to set up experiments where the reputation of the coplayer is manipulated. In particular, our model seems to predict that in the punishment treatment, what is essential for the bifurcation is a nonzero likelihood (corresponding to the parameter μ) that the cooperator believes that she is faced with a nonpunisher. What is essential for the bifurcation to happen in the rewards treatment, in contrast, is that there is a nonzero likelihood (corresponding to the parameter ν) that the defector believes that he is faced with a rewarder.

The possibly irritating message is that for promoting cooperative behavior, punishing works much better than rewarding. In both cases, however, reputation is essential.

Acknowledgments

K.S. acknowledges support of the Wissenschaftskolleg (Vienna) Grant WK W008 Differential Equation Models in Science and Engineering; Ch.H. acknowledges support of the Swiss National Science Foundation, and M.A.N. acknowledges support from the Packard Foundation, the Leon Levy and Shelby White Initiatives Fund, the Florence Gould Foundation, the Ambrose Monell Foundation, the Alfred P. Sloan Foundation, and the National Science Foundation.

Footnotes

This paper was submitted directly (Track II) to the PNAS office.

References

- 1. Gintis H. J Theor Biol. 2000;206:169–179. doi: 10.1006/jtbi.2000.2111.

- 2. Wedekind C, Milinski M. Science. 2000;288:850–852. doi: 10.1126/science.288.5467.850.

- 3. Fehr E, Gächter S. Euro Econ Rev. 1998;42:845–859.

- 4. Kagel J H, Roth A E, editors. The Handbook of Experimental Economics. Princeton, NJ: Princeton Univ. Press; 1995.

- 5. Bolton G E, Zwick R. Games Econ Behav. 1995;10:95–121.

- 6. Henrich J, Boyd R, Bowles S, Camerer C, Fehr E, Gintis H, McElreath R. Am Econ Rev. 2001;91:73–78.

- 7. Gintis H. Game Theory Evolving. Princeton, NJ: Princeton Univ. Press; 2000.

- 8. Henrich J, Boyd R. J Theor Biol. 2001;208:79–89. doi: 10.1006/jtbi.2000.2202.

- 9. Boyd R, Richerson P J. Ethol Sociobiol. 1992;13:171–195.

- 10. Clutton-Brock T H, Parker G A. Nature (London) 1995;373:209–216. doi: 10.1038/373209a0.

- 11. Gale J, Binmore K, Samuelson L. Games Econ Behav. 1995;8:56–90.

- 12. Huck S, Oechssler J. Games Econ Behav. 1996;28:13–24.

- 13. Gaunersdorfer A, Hofbauer J, Sigmund K. Theor Popul Biol. 1991;29:345–357.

- 14. Hofbauer J, Sigmund K. Evolutionary Games and Population Dynamics. Cambridge, U.K.: Cambridge Univ. Press; 1998.

- 15. Cressman R, Gaunersdorfer A, Wen J F. Int Game Theor Rev. 2000;2:67–82.

- 16. Schlag K. J Econ Theor. 1998;78:130–156.

- 17. Weibull J W. Evolutionary Game Theory. Cambridge, MA: MIT Press; 1996.

- 18. Nowak M A, Page K M, Sigmund K. Science. 2000;289:1773–1775. doi: 10.1126/science.289.5485.1773.

- 19. Berger U. Vienna University of Economics. 2001, preprint.