Abstract

Tragically, the outbreak of Ebola that started in West Africa in 2014 has been far more extensive and damaging than any previous outbreak. The duration of the outbreak has, for the first time, allowed the clinical evaluation of Ebola treatments. This article discusses the designs used for two such clinical trials, which recruited patients in Liberia and Sierra Leone. General principles are outlined for trial designs intended to be deployed quickly, adapt flexibly and provide results soon enough to influence the course of the current epidemic, rather than merely providing evidence for use should Ebola break out again. Lessons are drawn for the conduct of clinical research in future outbreaks of infectious diseases, where the sequence of events may or may not be similar to that of the West African Ebola epidemic.

Keywords: Ebola, infectious disease, multi-stage approach, sequential design, single-arm design, stopping rule

Introduction

Infectious diseases that are not endemic but occur only in sporadic outbreaks represent a unique challenge for clinical research. Clinical trials must be conducted during irregular and unpredictable outbreaks, when medical staff and facilities are severely stretched. Potential treatments must be identified and supplied. At the same time, trial protocols must be developed and approved within a very short timeframe. Failure to react quickly can mean missing the opportunity to collect reliable clinical evidence if the outbreak subsides, or missing the opportunity to save lives if the outbreak continues and an effective therapy exists.

This article stems from experience of the design and organisation of two clinical trials in Ebola within the Rapid Assessment of Potential Interventions & Drugs for Ebola (RAPIDE) programme led by the Centre for Tropical Medicine and Global Health at the University of Oxford. The first trial was initiated during the autumn of 2014 when the West African outbreak was at its height, but began to recruit patients in early 2015, by which time the rate of new cases had fallen substantially. The second trial was planned later, with the knowledge that recruitment was likely to be slow. The designs of the two studies are therefore different, reflecting the different circumstances of their development. The two trials are now complete,1,2 but this article focuses on their design and their results will not be discussed.

Basic design principles

The primary trial endpoint for both RAPIDE trials was survival to Day 14. A minor difference between the studies is that Day 1 was the day on which study treatment commenced in the first study, but the day of admission in the second. This endpoint was chosen because it is objective, requires no invasive procedures or special equipment, and captures mortality from both the disease and the intervention itself. Day 14 was chosen because most deaths from Ebola occur before then and because patients can generally still be traced at that time, even if they have been discharged. Trial protocols also specified the collection and analysis of many other clinical measures that could influence final recommendations.

To inform the design of the studies, data were made available by Médecins Sans Frontières (Doctors Without Borders) on patients treated in four centres up to November 2014. These records had not been cleaned or verified due to the pressures bearing on the centres at that time. Nevertheless, they allowed a preliminary assessment of the Day 14 survival rate for patients treated with standard supportive therapy (denoted here by $p_0$). From 1820 adult confirmed Ebola patients, $p_0$ was estimated to be 0.43, with a 95% confidence interval of (0.40, 0.45). Consequently, a treatment associated with a true Day 14 survival rate of 0.5 or less would not be considered to be promising, and convincing evidence that the survival rate exceeded 0.5 was sought in the studies conducted.

Single-arm studies

In both RAPIDE trials, all study patients received the experimental treatment. The scientific and practical reasons for this are detailed in this section.

Absolute and relative treatment properties

A single-arm trial allows estimation of the absolute merits of an experimental treatment. The RAPIDE studies investigated the probability that a patient from the population represented by those treated in the study would survive to Day 14 (denoted here by $p$). A trial randomising between an experimental and a control group allows estimation of $p - p_0$. The fundamental difference between the designs is that they seek information on different quantities.

Denote the respective numbers of patients receiving the experimental and control treatments by $n$ and $n_0$, and the respective numbers who survive by $S$ and $S_0$. In a single-arm study using a target survival rate of 0.5, $p - 0.5$ is estimated by $S/n - 0.5$ with standard error approximately equal to $\sqrt{S(n - S)/n^3}$. In a comparative trial, the difference $p - p_0$ is estimated by $S/n - S_0/n_0$ with standard error approximately equal to $\sqrt{S(n - S)/n^3 + S_0(n_0 - S_0)/n_0^3}$. The latter expression reduces to the former if we imagine that in the single-arm study $p_0$ is known to equal 0.5: that is, we treat it as estimated from a sample with $S_0/n_0 = 0.5$ and $n_0 = \infty$, in which case $S_0(n_0 - S_0)/n_0^3$ can be taken to be zero. Now suppose that the true values of $p$ and $p_0$ are close to one another. The standard error of the estimate of $p - 0.5$ in a single-arm study with $n_1$ patients will be approximately $\sqrt{p(1 - p)/n_1}$, whereas that of $p - p_0$ in a comparative study with $n_2/2$ patients on each treatment will be approximately $\sqrt{4p(1 - p)/n_2}$. Setting the two equal gives $n_2 = 4n_1$: the total sample size of a comparative study is four times that of a single-arm study of the same precision. For a fixed number of study subjects, the investigators’ choice is therefore between a single-arm study that provides a relatively precise estimate of the absolute effect of treatment and a comparative study that estimates the effect relative to that of the control treatment, but less precisely.
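As a quick numerical check of the fourfold relationship, the sketch below evaluates the approximate standard errors given above for illustrative survival counts (not trial data), assuming an observed survival proportion of 0.5 in every group. The function names are hypothetical.

```python
import math

def se_single_arm(S, n):
    """Approximate SE of S/n - 0.5 when the target 0.5 is treated as known."""
    return math.sqrt(S * (n - S) / n**3)

def se_difference(S, n, S0, n0):
    """Approximate SE of S/n - S0/n0 for two independent groups."""
    return math.sqrt(S * (n - S) / n**3 + S0 * (n0 - S0) / n0**3)

# Illustrative counts only: half of each group survives.
single = se_single_arm(50, 100)                      # 100 patients, all treated
comparative_same_n = se_difference(25, 50, 25, 50)   # 100 patients, 50 per arm
comparative_4x = se_difference(100, 200, 100, 200)   # 400 patients, 200 per arm

print(f"single-arm, 100 patients:   SE = {single:.3f}")              # 0.050
print(f"comparative, 50 + 50:       SE = {comparative_same_n:.3f}")  # 0.100
print(f"comparative, 200 + 200:     SE = {comparative_4x:.3f}")      # 0.050
```

With 100 patients the comparative standard error is twice the single-arm one; the comparative study matches the single-arm precision only when its total size is quadrupled to 400.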

Theoretical findings

A Bayesian criterion can be used to determine optimal sample sizes for an experimental and a control group in a randomised clinical trial giving rise to normally distributed responses.3 If there is more accurate prior information concerning outcomes in the control group than in the experimental group, then the optimal sample size in the former will be smaller. In some cases, the optimal allocation will be for all patients to receive the experimental treatment. Similar conclusions apply to studies based on survival data,4 and methodology for making corresponding analyses for binary endpoints has also been developed.5

Optimal strategies have also been devised for situations in which numerous potential treatments are available but the total sample size (N) is limited.6 The primary patient response is assumed to be binary, and patients are randomised equally between T experimental treatments. The treatment associated with the most successes is selected (if two treatments tie for first place, one is chosen at random). Denoting the true success rate of the selected treatment by p*, T is chosen to maximise the expected value of p*. If N = 60 and the success rate of each treatment independently follows a prior beta distribution with parameters 2 and 8 (prior mean 0.2), then the optimal value for T is 10 or 15. Changing the beta distribution to have parameters 8 and 2 (prior mean 0.8) leads to an optimal value for T of 5 or 6. These results show that the procedure that maximises the expected success rate of the treatment selected after 60 patients have been treated, which tests many treatments, each on few patients, is quite different from a procedure that seeks significant evidence that a particular treatment is in truth the best. By ‘in truth the best’, we refer to true, population success probabilities, rather than the sample estimates that will actually be observed. For example, comparing just two treatments with 90% power of a significant result at the one-sided 2.5% level, when the true success probabilities are 0.50 and 0.80, respectively, requires a total of around 100 patients. Relaxing the one-sided significance level to 10% (relying on a subsequent confirmatory trial to expose any false positives) reduces the total sample size to around 60.
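The two-treatment sample sizes quoted at the end of the paragraph above can be approximately reproduced with the usual normal-approximation formula for comparing two proportions under equal allocation. The sketch below assumes unpooled variances and no continuity correction; other textbook formulae give somewhat different totals, so it is only a sanity check of the ‘around 100’ and ‘around 60’ figures. The function name is hypothetical.

```python
import math
from statistics import NormalDist

def total_sample_size(p1, p2, alpha_one_sided, power):
    """Total patients (equal allocation) to detect p1 vs p2, using
    n per arm = (z_{1-alpha} + z_{power})^2 * (p1 q1 + p2 q2) / (p1 - p2)^2."""
    z = NormalDist().inv_cdf                      # standard normal quantile function
    z_alpha = z(1 - alpha_one_sided)
    z_power = z(power)
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)
    per_arm = (z_alpha + z_power) ** 2 * variance_sum / (p1 - p2) ** 2
    return 2 * math.ceil(per_arm)                 # round each arm up, then double

print(total_sample_size(0.50, 0.80, 0.025, 0.90))  # 96
print(total_sample_size(0.50, 0.80, 0.10, 0.90))   # 60
```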

The papers cited in the two preceding paragraphs are theoretical studies using Bayesian methods in idealised settings, but their findings have qualitative relevance for the design of frequentist clinical trials in Ebola. They show that it can be efficient to devote all resources to the experimental treatments when the performance of conventional treatment is already well understood and that when many promising treatments are available, it can be optimal to try as many as possible rather than devoting attention to just a few. These general principles have influenced the choice of designs within the RAPIDE programme. In particular, trials in which all patients receive the experimental treatment were part of the approach, to allow investigators to learn as quickly as possible about the novel intervention. Although formal multiple treatment trials were not envisaged for the start of the RAPIDE programme, it was intended to study a series of interventions in turn, with the possibility of a randomised comparison of those found promising later. Thus, the strategy of trying many treatments with relatively small numbers of patients, found optimal in more ideal circumstances, was adopted in our more pragmatic setting.
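The simplified selection model described earlier in this section (N patients shared equally among T treatments whose success rates are independent Beta(a, b) draws, with the treatment having most successes selected) can be evaluated exactly, because the expected true rate of the selected treatment depends only on the largest observed success count. The sketch below is our own illustration, not code from the cited paper; that paper’s formulation (for example, the set of T values compared) may differ, so the optimum printed here need not coincide exactly with the values quoted above. Function names are hypothetical.

```python
import math

def beta_binomial_pmf(n, a, b):
    """P(X = x) for x = 0..n when X | p ~ Binomial(n, p) and p ~ Beta(a, b)."""
    def log_beta(u, v):
        return math.lgamma(u) + math.lgamma(v) - math.lgamma(u + v)
    return [math.comb(n, x) * math.exp(log_beta(a + x, b + n - x) - log_beta(a, b))
            for x in range(n + 1)]

def expected_selected_rate(N, T, a, b):
    """E[p*] when N patients are split equally over T treatments with independent
    Beta(a, b) success rates and the treatment with most successes is chosen
    (ties broken at random)."""
    if N % T != 0:
        raise ValueError("T must divide N for equal allocation")
    n = N // T
    expected, cdf_prev, cdf = 0.0, 0.0, 0.0
    for x, prob in enumerate(beta_binomial_pmf(n, a, b)):
        cdf += prob
        p_max_is_x = cdf ** T - cdf_prev ** T   # P(largest success count == x)
        # The winner's posterior mean given its own x successes is (a + x)/(a + b + n);
        # the other arms and the tie-break carry no information about its rate.
        expected += p_max_is_x * (a + x) / (a + b + n)
        cdf_prev = cdf
    return expected

if __name__ == "__main__":
    N = 60
    divisors = [T for T in range(1, N + 1) if N % T == 0]
    for a, b in [(2, 8), (8, 2)]:
        print(f"Beta({a}, {b}) prior, prior mean {a / (a + b):.1f}")
        for T in divisors:
            print(f"  T = {T:2d}: E[p*] = {expected_selected_rate(N, T, a, b):.4f}")
```

The printed rows can be used to explore how the best T shifts with the prior mean; under these assumptions, neighbouring values of T may give very similar expected rates.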

Analogies with cancer research

For many years, the standard approach to developing drugs for patients with solid tumours has consisted of a small single-arm phase II trial followed, if positive, by a large randomised phase III comparison with a standard control treatment.7 The primary analysis of the phase II trial concerns the response rate: that is, the probability that a patient’s tumour responds to the treatment by shrinking by some pre-defined amount. Target response rates, such as 0.2, have been established for various cancers based on the spontaneous rate of shrinkage in untreated patients. Evidence that a treatment exceeds the target is then used to justify a subsequent large phase III trial in which the time to death or disease progression is compared with that in a control group receiving standard treatment. Oncology thus provides a precedent for using single-arm studies to establish whether a treatment is promising, leading to larger randomised studies of the agents so identified; this approach is extended and modified in the designs described here for Ebola.

Practical considerations

Conducting a clinical trial in the midst of an Ebola outbreak involves many challenges. Conducting a randomised trial in such circumstances involves even more. An ideal randomised trial in a stable medical setting includes administering a placebo treatment to patients in the control group. Ebola patients are isolated within a restricted zone of the treatment centre, and the time that medical staff can spend with them is severely limited. Devoting effort to administering placebos is not the best use of this time, and administering placebo infusions or injections adds the element of personal risk of infection for care givers. Of course, similar arguments can be made in the context of many studies, as delivering placebo treatment is never of direct benefit to trial patients. However, the stakes were considerably higher at the height of the Ebola outbreak when overcrowded treatment facilities and the need to wear protective clothing meant that nursing time available for providing care to each patient was extremely limited. In addition, contact with patients carried a particularly high personal risk to the care giver. At the peak of the Ebola epidemic, the acceptability of randomisation itself was being disputed by communities, care givers, experts and ethicists, both in the affected countries and internationally.8,9

An ideal design might involve the simultaneous testing of several alternative candidate treatments in a single study, but such a trial cannot begin until clinical trial batches for all candidates are available, and the use of all the products has been accepted by all relevant bodies, such as ethical and regulatory committees. This may be several months after the first of the candidate treatments is ready to enter a clinical trial. The delays involved are likely to preclude such an approach, especially at the beginning of a new outbreak.

Trial designs for the RAPIDE programme

A multi-stage approach

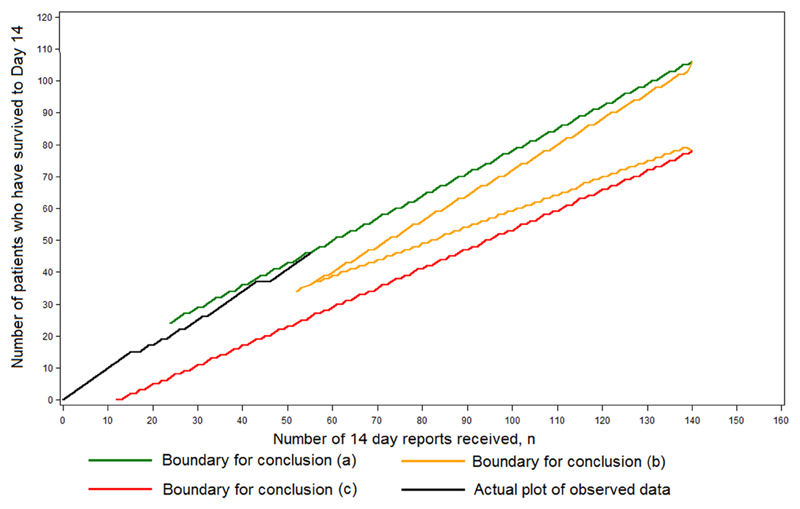

The first RAPIDE trial design was developed during the summer of 2014, when the Ebola outbreak was at its height, as part of a programme of research designed to evaluate a series of potential treatments as they became available. Each treatment would first be evaluated in a phase II trial of at most 140 patients without a concurrent control group, designed as a triage classifying the treatment as (a) very effective, (b) promising or (c) apparently ineffective. Denoting the true Day 14 survival rate of the treatment by p, if p = 0.800, then conclusion (a) was to be reached with probability 0.90; if p = 0.667, then conclusion (b) was to be reached with probability 0.95; and if p = 0.500, then conclusion (c) was to be reached with probability 0.90. The trial was to follow a sequential design, stopping according to a continuously maintained plot of the number of patients who had survived to Day 14 against the total number who had been recruited 14 days earlier. The procedure is shown in Figure 1, where a fictitious plot is included for illustration. For p = 0.889, 0.800, 0.667, 0.500 and 0.333, the median sample sizes were 38, 65, 65, 56 and 25, respectively. At the end of the trial, p was to be estimated and an associated 95% confidence interval constructed using an exact method of calculation.10 The choices of values for p above were pragmatic. As already explained, the value 0.500 came from an analysis of available data, whereas the alternative value 0.800 was set high so that such a treatment might be regarded as indisputably effective based on evidence from two successive single-arm trials (the initial phase II triage and the subsequent phase III single-arm confirmatory trial). The value 0.667 was chosen because the odds ratios between it and 0.500 and 0.800 are 2 and 1/2, respectively.
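Writing 0.667 as 2/3, the odds-ratio argument for this choice can be set out explicitly:

$$
\operatorname{odds}(p) = \frac{p}{1 - p}, \qquad
\operatorname{odds}(0.500) = 1, \quad
\operatorname{odds}(2/3) = 2, \quad
\operatorname{odds}(0.800) = 4,
$$

$$
\frac{\operatorname{odds}(2/3)}{\operatorname{odds}(0.500)} = 2, \qquad
\frac{\operatorname{odds}(2/3)}{\operatorname{odds}(0.800)} = \frac{1}{2}.
$$

Equivalently, 2/3 lies midway between 0.500 and 0.800 on the log-odds scale, since $\log 2$ is the midpoint of $\log 1 = 0$ and $\log 4$.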

Figure 1.

The design used for the phase II component of the multi-stage approach. The figure shows an illustrative, fictitious data plot together with the stopping boundaries.

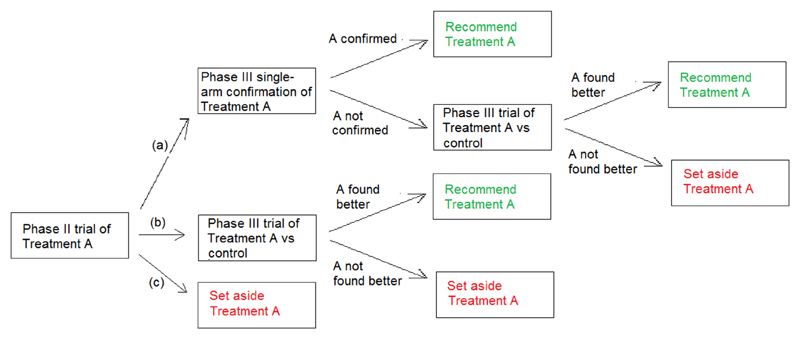

The phase II evaluation described above was to be part of a multi-stage approach (MSA). Conclusion (a) was to be followed by a single-arm confirmatory phase III trial, conducted as a sequential futility design,11 recruiting up to 132 patients and stopping if it ever became apparent that the Day 14 survival rate would not be shown to be significantly in excess of 0.667. Otherwise, the treatment would be rolled out for general use. If the phase II trial reached conclusion (b), or if the confirmatory trial described above failed to confirm that the treatment was very effective, then a randomised comparison with standard care (but without placebo) was to be conducted using a triangular design involving up to 500 patients. Alternative strategies, to be considered as the RAPIDE programme developed, included comparing within a single trial multiple treatments that had been found to be promising (conclusion (b) in phase II). Any treatment for which conclusion (c) was reached would not be considered further within the RAPIDE programme. The overall procedure is presented as a flow chart in Figure 2. The procedure is a variation on the approach used in cancer trials described above. In cancer research, conclusions analogous to (b) and (c) can be drawn. Here, however, there is also the possibility of conclusion (a), which could lead to adoption of the experimental treatment based on evidence from two successive single-arm trials. This was felt appropriate because of the urgency of tackling the Ebola outbreak at the time when the trial was planned, the fact that the primary endpoint related directly to patient survival rather than to response to treatment, and the relatively high chance of discovering a radical treatment breakthrough in a largely unexplored therapeutic area.
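The branching just described can be summarised as a small decision function. This is a hypothetical sketch: the names `Phase2Conclusion` and `next_msa_step` are ours, and it leaves out the alternative multi-treatment comparisons mentioned as possible extensions of the programme.

```python
from enum import Enum
from typing import Optional

class Phase2Conclusion(Enum):
    """Phase II triage outcomes, labelled (a), (b) and (c) in the text."""
    VERY_EFFECTIVE = "a"
    PROMISING = "b"
    INEFFECTIVE = "c"

RANDOMISED = "randomised comparison with standard care"

def next_msa_step(phase2: Phase2Conclusion, confirmed: Optional[bool] = None) -> str:
    """Next action for one treatment under the MSA decision rules.

    `confirmed` only matters after conclusion (a): None if the single-arm
    confirmatory phase III trial has not yet run, True if it confirmed the
    treatment as very effective, False if it stopped for futility.
    """
    if phase2 is Phase2Conclusion.INEFFECTIVE:
        return "drop from the programme"
    if phase2 is Phase2Conclusion.PROMISING:
        return RANDOMISED
    # Conclusion (a): run the confirmatory trial first, then act on its outcome.
    if confirmed is None:
        return "single-arm confirmatory phase III trial"
    return "roll out for general use" if confirmed else RANDOMISED

if __name__ == "__main__":
    print(next_msa_step(Phase2Conclusion.VERY_EFFECTIVE))         # confirmatory trial
    print(next_msa_step(Phase2Conclusion.VERY_EFFECTIVE, False))  # randomised comparison
```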

Figure 2.

Flow chart to show how a single experimental treatment (Treatment A) can be evaluated using the multi-stage assessment procedure.

Further details of the MSA and a comparison with conventional approaches have been presented elsewhere.12 The MSA is designed to identify very effective treatments quickly and accurately when such treatments exist, particularly if several treatments are evaluated within the programme. It can start as soon as one experimental treatment becomes available. Further into the programme, randomisation and the simultaneous availability of multiple treatments may become necessary. Most treatments taken forward by the MSA are likely to receive a randomised comparison with standard care. The fact that the experimental treatment will by then be seen as promising, but not very effective, might make randomisation more acceptable to participants and investigators. Only outstanding treatments that have passed both the phase II stage and the phase III confirmation will be recommended by the MSA without having faced a randomised comparison. In such a case, the design guarantees that the estimated Day 14 survival rate will exceed 0.75, with the lower bound of the corresponding 95% confidence interval exceeding 0.66.

The phase II stage of the MSA was initiated by the RAPIDE team for the oral drug brincidofovir in January 2015 at the ELWA 3 centre in Liberia. The formal trial involved only adult patients, although children were also given the treatment in a separate observational study. By the time that the study started, the outbreak was almost over in Liberia, and admissions to ELWA 3 slowed down markedly. The company manufacturing the drug withdrew from the study when two adults had been recruited, and two children had also been treated. The low recruitment rate meant that by that time, completion of the trial in Liberia had become infeasible.

A futility design

The second RAPIDE design was constructed for an evaluation of TKM-130803, a novel treatment administered by infusion.13 This design was developed after the phase II study of brincidofovir had stopped, and it took place in Sierra Leone where cases were continuing to be observed. There were only 100 courses of the drug available, with no more likely to be available in the short term. The MSA approach relies on a plentiful supply of study subjects and is concerned with rolling out effective treatments as quickly as possible to influence the course of the current outbreak. These considerations no longer applied, and the objective of the second RAPIDE study was to collect data that may indicate whether TKM-130803 is a promising treatment, to be further evaluated as a matter of priority should additional drug supplies become available and the outbreak flare up again, or else in a future Ebola outbreak.

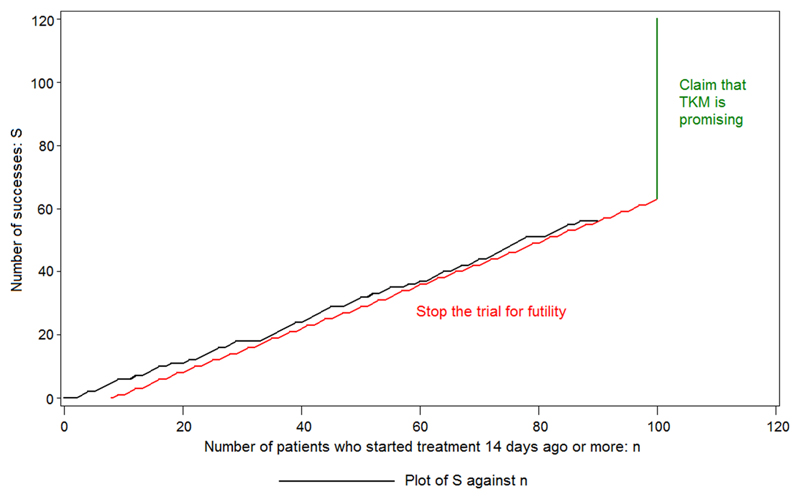

Given the circumstances, and in contrast to the MSA, only a single phase II trial was envisaged, constructed as a futility design.11 The trial would be terminated early if the treatment did not appear to be effective and there was no prospect of rejecting, at the one-sided 0.025 significance level, the null hypothesis that the Day 14 survival rate on treatment was no better than the value suggested by historical data. Otherwise, all 100 courses were to be administered (provided that sufficient patients could be recruited). Only patients who survived the first 48 h following admission to the treatment centre were included in the primary analysis, to avoid premature termination of the trial due to the inclusion of pre-treatment deaths and deaths of moribund patients with little chance of being helped by the candidate treatment. To allow for this new definition of success, the target survival rate was raised from 0.50 to 0.55, based on an approximate analysis of the available Médecins Sans Frontières data. The design was constructed so that the probability of declaring TKM-130803 promising lies below 0.025 if p = 0.55; this probability rises to 0.49 and 0.83 when p = 0.65 and 0.70, respectively. All deaths, including those occurring in the first 48 h following admission, were included in a secondary analysis.

Throughout the trial, the number of patients who had survived to Day 14 (S) was plotted against the total number who had been recruited 14 days earlier (n). If ever S ≤ −4.87 + 0.682 n, then the trial was to be stopped for futility. This boundary cannot be reached until n = 8: if S = 0 at that stage, then the trial would be stopped. If the trial was not stopped according to this rule for any value of n up to and including 100, then TKM-130803 would be claimed to be promising. When n = 100, the limit is 63.33: that is, 64 or more successes are required to make the claim. The procedure is shown in Figure 3, where a fictitious plot is included for illustration. The trial was analysed using an exact approach.10 Given the diminishing rate of new infections in Sierra Leone, it was felt that the collection of data on 100 patients treated with TKM-130803, or on as many as could be collected before either the futility boundary was reached or the supply of patients ran out, would provide better information for deciding on further evaluation of TKM-130803 in any new Ebola outbreak than data on half that number of treated patients from a randomised study. It was accepted that this RAPIDE TKM study would not, by itself, provide definitive evidence of the efficacy of the treatment unless the results were outstanding.
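The operating characteristics of this boundary can be explored by propagating the distribution of S patient by patient and discarding paths that reach S ≤ −4.87 + 0.682 n. The sketch below assumes that the plot is checked after every Day 14 outcome and that patients survive independently with a common probability p; the monitoring actually used in the trial, and any rounding of the boundary coefficients, may differ, so its output need not match the quoted 0.025, 0.49 and 0.83 exactly. Function names are hypothetical.

```python
from collections import defaultdict

INTERCEPT, SLOPE, N_MAX = -4.87, 0.682, 100   # boundary and maximum size quoted in the text

def futility_bound(n):
    """The trial stops for futility once S <= INTERCEPT + SLOPE * n."""
    return INTERCEPT + SLOPE * n

def prob_claim_promising(p, n_max=N_MAX):
    """Probability of never reaching the futility boundary up to n_max patients,
    assuming a check after every outcome and independent survival with probability p."""
    alive = {0: 1.0}   # survivors S -> probability, over paths not yet stopped
    for n in range(1, n_max + 1):
        step = defaultdict(float)
        for s, prob in alive.items():
            step[s + 1] += prob * p          # next patient survives to Day 14
            step[s] += prob * (1 - p)        # next patient dies
        bound = futility_bound(n)
        alive = {s: prob for s, prob in step.items() if s > bound}
    return sum(alive.values())

if __name__ == "__main__":
    for p in (0.55, 0.65, 0.70):
        print(f"p = {p:.2f}: P(claim promising) = {prob_claim_promising(p):.3f}")
```

Because every surviving path must also end with S ≥ 64 at n = 100, the same function can be called with a smaller `n_max` to see how an underrunning trial would have fared at the point it ran out of patients.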

Figure 3.

The design used for the second RAPIDE study. The figure shows an illustrative, fictitious data plot together with the stopping boundaries.

Lessons for the future

At the time of writing, the outlook for the Ebola epidemic in West Africa is improving. Effective public health measures, especially the tracing of contacts of Ebola patients and hygienic burial of the dead, have been responsible for this success. Treatments that target the Ebola virus itself, rather than its consequences, have been administered for limited compassionate use and in small clinical trials, some of which are unfinished. No therapy has yet been proved to be very effective, and none has been used routinely. Ebola treatments have not contributed to gaining control of the epidemic.

It has been an unprecedented achievement to be able to initiate clinical trials during the course of such an epidemic. The concept of the RAPIDE programme originated in August 2014, funding was secured from the Wellcome Trust in September 2014 and the first patient was treated in January 2015. Procedures that would typically take 2 years or more in most therapeutic areas were telescoped into a mere 12 weeks from the first draft of the study protocol to the first patient being enrolled. Nevertheless, by the time that the trial did start, the worst of the epidemic was over. The investigators were chasing patients in the treatment centres that remained open, and it was too late for new treatments to have an effect on the course of the outbreak. Of course, all of the effort involved in setting up the trials was worthwhile. Had the outbreak not been brought under control, the RAPIDE programme and other research efforts could have quickly recruited the necessary sample sizes to determine whether the experimental treatments were worthwhile, and these may have saved many lives and helped to reduce the impact of the epidemic.

In conventional clinical trials of treatments for established diseases, data are normally available on the influence of various factors on patient outcomes. The legacy of the West African outbreak is likely to include such background information, drawn from analysis of the extensive databases that have been compiled. However, at the time when this outbreak started, as will be the case with any new infection, little was known or documented. In the RAPIDE programme, the main responses to this uncertainty were the selection of a primary endpoint that was already documented and the setting of high standards for experimental treatments to achieve. Both analyses of the case fatality rate undertaken on behalf of the World Health Organization14 and the limited evaluation of Day 14 survival rates by the present authors using data from four Médecins Sans Frontières centres made it clear that the background Day 14 survival rate was likely to be less than 50%. For the MSA, a true Day 14 survival rate of 50% or less was treated as indicating an ineffective treatment, a rate of at least 67% would justify a randomised trial and an 80% survival rate or better would correspond to a treatment being considered very effective. An estimated Day 14 survival rate of 75% or more (with a lower 95% confidence limit of 66% or more) would be required for the recommendation of a treatment’s use without a randomised study.

A common concern in developing new medical treatments is the balance between the harm they might cause and the benefit they might confer. Short-term harm is counted within the simple endpoint of survival to Day 14, although subsequent follow-up would be needed to quantify any late mortality or morbidity due to treatment and compare it with the benefit achieved. Such trade-offs are common in assessing acute surgery or cancer treatment. Long-term harm due to treatment can only be guessed at, although it has to be borne in mind that only survivors are at risk of long-term harm, which will usually be preferable to death within 14 days. Of course, serious disability within an underdeveloped care system might not necessarily be the preferred outcome.

As the outbreak comes to an end, trials are being started that risk running out of patients before reaching their planned conclusion. Nevertheless, incomplete trials can still be analysed, and even when adaptive methods are used, unbiased methods of analysis are available to deal with such underrunning.15 Although such analyses are unbiased and achieve the intended type I error rate, they are of course underpowered. These considerations apply irrespective of randomisation, although randomised trials may be more prone to underrunning because they require larger sample sizes.

Any future epidemic will bring its own challenges and will require a statistical approach tailored to its specific characteristics. If the disease spreads quickly and is rapidly lethal, information about its natural history will be available from many patients before any clinical trials begin, allowing identification of a simple primary endpoint and suggesting a target parameter value to be beaten in order to classify a treatment as promising. Non-randomised assessments should then be made to triage candidate treatments in order to identify which to recommend without further delay, which to take forward to further trials and which to discard. If the epidemic is slow to develop, with limited numbers of initial cases but potential for a sudden dramatic increase, then early initiation of clinical research would be important. Randomised strategies seem more appropriate when there is little experience of the natural course of the disease, using simple endpoints such as rating of the patient 14 days after recruitment on a scale with classifications such as recovered, stable, progressing or dead. Data-based rules for moving from one phase of testing to another and for stopping to recommend or abandon a treatment as soon as the evidence warrants it will be essential.

Conclusion

The West African Ebola outbreak has underlined the need for very rapid identification of potentially useful treatments and very rapid implementation of study protocols so as not to miss the outbreak and to have the potential for saving lives while it continues. A range of different trial designs that will yield credible results should be considered by investigators. In an emergency context, an adaptive approach to clinical trial design in which preliminary trial data are used to modify the clinical research programme as it develops has ethical, scientific and economic advantages. The trial design must be capable of answering the question at issue, but it must also be acceptable to the community from which patients are drawn, the organisations that are treating them and the companies and institutes providing and evaluating the experimental treatments. At the peak of the epidemic, with high incidence and fatality rates, the approach taken in the RAPIDE programme was to attempt to triage treatments quickly, in order to identify life-saving interventions that could be recommended for immediate use if they were found to be effective. At the same time, rigorous clinical trial methodology was applied following Good Clinical Practice guidelines in order to ensure that data generated could be used to support product registration.

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the Wellcome Trust of Great Britain (grant numbers 106491/Z/14/Z and 089275/Z/09/Z) and by the EU FP7 project PREPARE (602525). The funders had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript.

Footnotes

Declaration of conflicting interests

The author(s) declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: P.O. is a staff member of the World Health Organization; the authors alone are responsible for the views expressed in this publication and they do not necessarily represent the decisions, policy or views of the World Health Organization. The authors have declared that no other competing interests exist.


References

1. Dunning J, Kennedy SJ, Antierens A, et al. A phase II trial of brincidofovir for the treatment of Ebola Virus Disease. Submitted.

2. Dunning J, Sahr F, Rojek A, et al. A phase II trial of TKM-130803 for the treatment of Ebola Virus Disease. Submitted.

3. Whitehead J, Valdés-Márquez E, Johnson P, et al. Bayesian sample size for exploratory clinical trials incorporating historical data. Stat Med. 2008;27:2307–2327. doi: 10.1002/sim.3140.

4. Cotterill A, Whitehead J. Bayesian methods for setting sample sizes and choosing allocation ratios in phase II clinical trials with time-to-event endpoints. Stat Med. 2015;34:1189–1903. doi: 10.1002/sim.6426.

5. Hampson LV, Whitehead J, Eleftheriou D, et al. Bayesian methods for the design and interpretation of clinical trials in very rare diseases. Stat Med. 2014;33:4186–4201. doi: 10.1002/sim.6225.

6. Whitehead J. Designing phase II studies in the context of a programme of clinical research. Biometrics. 1985;41:373–383.

7. Stallard N. Phase II clinical trials. In: Biswas A, Datta S, Fine JP, et al., editors. Statistical advances in the biomedical sciences: clinical trials, epidemiology, survival analysis and bioinformatics. New York: Wiley; 2008. p. 17.

8. World Health Organization. Ethical issues related to study design for trials on therapeutics for Ebola virus disease. WHO Ethics Working Group meeting. 2014. http://www.who.int/csr/resources/publications/ebola/ethical-evd-therapeutics/en/

9. Presidential Commission for the Study of Bioethical Issues. Ethics and Ebola: Public health planning and response. 2015. http://bioethics.gov/node/4637; http://bioethics.gov/sites/default/files/Ethics-and-Ebola_PCSBI_508.pdf

10. Jovic G, Whitehead J. An exact method for analysis following a two-stage phase II cancer clinical trial. Stat Med. 2010;29:3118–3125. doi: 10.1002/sim.3837.

11. Whitehead J, Matsushita T. Stopping clinical trials because of treatment ineffectiveness: A comparison of a futility design with a method of stochastic curtailment. Stat Med. 2003;22:677–687. doi: 10.1002/sim.1429.

12. Cooper BS, Boni MF, Pan-ngum W, et al. Evaluating clinical trial designs for investigational treatments of Ebola virus disease. PLoS Med. 2015;12:e1001815. doi: 10.1371/journal.pmed.1001815.

13. Thi EP, Mire CE, Lee AC, et al. Lipid nanoparticle siRNA treatment of Ebola-virus-Makona-infected non-human primates. Nature. 2015;521:362–365. doi: 10.1038/nature14442.

14. WHO Ebola Response Team. Ebola virus disease in West Africa – the first 9 months of the epidemic and forward projections. New Engl J Med. 2014;371:1481–1495. doi: 10.1056/NEJMoa1411100.

15. Whitehead J. Overrunning and underrunning in sequential clinical trials. Control Clin Trials. 1992;13:106–121. doi: 10.1016/0197-2456(92)90017-t.