Abstract

Objective

The structure of neurocognition is explored by examining the neurocognitive domains underlying comprehensive neuropsychological assessment of cognitively healthy individuals.

Method

Exploratory factor analysis was conducted on the adult normative dataset of an expanded Halstead-Reitan Battery (eHRB), comprising Caucasian and African American participants. The factor structure contributions of the original HRB, eHRB expansion, and Wechsler intelligence scales were compared. Demographic effects were examined on composite factor scores calculated using confirmatory factor analysis.

Results

The full eHRB had an eight-factor structure, with latent constructs including ‘working memory’, ‘fluency’, ‘verbal episodic memory’, ‘visuospatial cognition’ (visuospatial memory and problem solving), ‘perceptual-motor speed’ (speed for processing visual/tactile material and hand-motor execution), ‘perceptual attention’ (attention to sensory-perceptual information), ‘semantic knowledge’ (knowledge acquired through education and culturally based experiences), and ‘phonological decoding’ (grapheme-phoneme processing essential for sounding out words). ‘Perceptual-motor speed’ and ‘perceptual attention’ were most negatively associated with age, whereas ‘semantic knowledge’ and ‘phonological decoding’ were most resistant to aging. ‘Semantic knowledge’ showed the greatest dependence on demographic background, including education and ethnicity. Gender differences in cognitive performance were negligible across all domains except ‘phonological decoding’, with women slightly outperforming men. The original HRB contributed four neurocognitive domains, the eHRB expansion three domains, and the Wechsler scales one additional domain, while also restructuring the verbal factors.

Conclusion

Eight neurocognitive domains underlie the performance of cognitively healthy individuals during comprehensive neuropsychological assessment. These domains serve as a framework for understanding the constructs measured by commonly used neuropsychological tests and may represent the structure of neurocognition.

Keywords: Halstead-Reitan Battery, Neuropsychological assessment, Factor analysis, Demographic effects, Dyslexia

There is a long tradition of attempts to uncover the make-up of neurocognition and intelligence by examining the factor structure underlying neuropsychological test batteries (e.g., Halstead, 1947; Holdnack, Zhou, Larrabee, Millis, & Salthouse, 2011; Horn & Cattell, 1966; Johnson & Bouchard, 2005; Newby, Hallenbeck, & Embretson, 1983; Thurstone & Thurstone, 1941; Vernon, 1965). The Halstead-Reitan Battery (HRB; Reitan & Wolfson, 1985) has been one of the most widely used neuropsychological test batteries for assessing brain dysfunction in clinical and research settings, and components of the HRB have often been incorporated into these factor analyses (Bornstein, 1983; Fowler, Zillmer, & Newman, 1988; Goldstein & Shelly, 1972; Grant et al., 1978; Leonberger, Nicks, Goldfader, & Munz, 1991; Leonberger, Nicks, Larrabee, & Goldfader, 1992; Moehle, Rasmussen, & Fitzhugh-Bell, 1990; Newby et al., 1983; Ryan, Prifitera, & Rosenberg, 1983; Swiercinsky, 1979).

Despite an extensive history of clinical and research use, no factor analysis of the HRB alone appears to have been published to date (Franzen, 2002; Ross, Allen, & Goldstein, 2013). Previous factor analytic studies have combined selected HRB subtests with a variety of other neuropsychological measures, especially from the Wechsler Adult Intelligence Scales (WAIS and WAIS-R; Wechsler, 1955, 1981) and Wechsler Memory Scales (WMS and WMS-R; Wechsler, 1945, 1987), and have reached no clear consensus on the cognitive constructs underlying the core HRB (see Ross et al., 2013, for a review). Discrepancies notably arose because many studies included several measures from the same test, producing instrumental factors, that is, factors arising from correlations related to shared task demands rather than to an underlying cognitive ability (Cattell, 1961; Larrabee, 2003).

The choice of factor rotation method has also affected results. Most factor analytic studies of the HRB have used orthogonal rotation, which constrains factors to be uncorrelated and, compared with oblique rotation, has been judged less representative of cognitive domains that are in fact related (Browne, 2001; Thurstone, 1947). Most studies that applied orthogonal rotation to combined HRB and WAIS tests found a broadly defined first factor, accounting for the majority of variance and interpreted as education-related general intelligence; a second factor representing visuospatial skills and speed; and one or two additional non-motor factors related to attention and speed, attention and working memory, sensory-perceptual abilities, or rapid visual-motor coordination, depending on the study (e.g., Bornstein, 1983; Grant et al., 1978; Goldstein & Shelly, 1972; Lansdell & Donnelly, 1977; Swiercinsky, 1979). By contrast, a study that used oblique rotation found a simpler factor structure without a broadly defined factor (Fowler et al., 1988).

Sample characteristics have also varied widely across studies, with samples comprising mixed and often unspecified brain-damaged and neuropsychiatric patients (e.g., Bornstein, 1983; Fowler et al., 1988; Goldstein & Shelly, 1972; Grant et al., 1978; Leonberger et al., 1991, 1992; Moehle et al., 1990; Ryan et al., 1983; Swiercinsky, 1979). Yet sample characteristics matter: different patterns of factor loadings, and even different numbers of factors, have been reported in samples with different diagnoses or types of brain dysfunction (e.g., Delis, Jacobson, Bondi, Hamilton, & Salmon, 2003; Russell, 1974; Warnock & Mintz, 1979).

Historical context may also have contributed to the lack of consensus on the cognitive constructs underlying the HRB: the battery was developed atheoretically, guided by the practical and commendable goal of detecting brain dysfunction (Reitan & Wolfson, 2004). The initial test battery was selected based on sensitivity to brain damage and was composed of 13 measures thought to be representative of ‘biological intelligence’ (Halstead, 1947). This battery was later expanded and modified with the goal of maximizing correct diagnostic inferences for a range of known brain damage and diseases (Reitan & Wolfson, 1985, 2009). This process, which took 15 years, involved the systematic testing of thousands of cases and added or kept tests only if they contributed significantly to distinguishing between types of damage or disease, that is, right versus left hemispheric lesions, anterior versus posterior lesions, focal versus diffuse lesions, and acute versus chronic course (Reitan & Wolfson, 2004). For example, because initial findings showed that the WMS did not provide unique discrimination across certain patient groups, formal memory testing was not included in the battery (Reitan & Wolfson, 2004). Ultimately, to form the final HRB, four of Halstead’s original tests were retained and six tests were added. These tests are described in Table 1a. The full WAIS was also routinely administered as part of the final prescribed battery (Table 1b), on the grounds that these tests would provide information about verbal intelligence and visuospatial function and would assist in determining lesion lateralization (Reitan & Wolfson, 2004).

Table 1a.

Test measures of the HRB

| Tests | Task descriptions | Test measures |
|---|---|---|
| Speech-Sounds Perception Test | Task requiring matching auditorily presented nonsense syllables with one of four closely related written syllables, for example, ‘weem,’ ‘weer,’ ‘weez,’ or ‘weeth’ (60 trials) | |
| Seashore Rhythm Test | Task requiring discrimination between two rhythmic beats (30 trials) | |
| Reitan-Indiana Aphasia Screening Test | Short tasks including naming common objects, reading, writing, and repeating simple words, explaining a short sentence, performing simple arithmetic calculations, drawing simple shapes (e.g., a square and a triangle), and copying drawings (e.g., a cross and a key) | |
| Reitan-Kløve Sensory-Perceptual Examination (SPE) | Series of tasks requiring the detection of tactile, auditory, and visual stimuli presented on either or both sides of the body | |
| Tactile Form Recognition Test (TFR) | Task requiring the identification of shapes successively held in the right and then the left hand as quickly as possible | |
| Tactual Performance Test (TPT) | Series of tasks first requiring quickly fitting a number of wood or foam shapes into their proper spaces on a vertical board while blindfolded (three trials successively testing the dominant hand, the nondominant hand, and both hands). After the blindfold is removed, participants are asked to draw the board with its cut-out shapes from memory. | |
| Category Test | Task requiring uncovering the principles underlying four subtests. Each subtest consists of 52 pictures, and participants must judge whether a geometric figure best corresponds to the number 1, 2, 3, or 4. Right or wrong feedback is systematically provided. | |
| Trail Making Test | Tasks requiring quickly tracing lines between numbers and letters scattered on a page. Part A requires simple number sequencing; Part B requires number and letter sequencing and set-switching. | |
| Finger Tapping Test | Task requiring tapping a lever as many times as possible within 10 s with the forefinger of the dominant and then the nondominant hand. Several trials are administered so that a meaningful average performance can be calculated. | |
| Grip Strength Test | Task requiring squeezing a handle with as much force as possible with the dominant and then the nondominant hand | |

Note: HRB = Halstead-Reitan Battery. Test measures starred in the table correspond to pure motor tests and were excluded from the factor analysis to avoid introducing gender differences based on physiology rather than cognition.

The main criticisms of the HRB, notably the absence of formal memory testing and the lack of in-depth language assessment (Lezak, Howieson, & Loring, 2004), have been addressed in the development of an expanded Halstead-Reitan Battery (eHRB; Heaton, Grant, & Matthews, 1991). In addition to the HRB and Wechsler scales tests, the eHRB included tests formally assessing learning and memory and several aspects of language (see Table 1c), as well as tests of executive function (fluency tests), attention and working memory, and processing speed. The eHRB normative dataset derives from perhaps one of the most comprehensive neuropsychological test batteries to have been systematically administered to a large number of individuals, with sizable representation of various demographic groups in terms of age, education, gender, and African American and Caucasian ethnicities (Heaton, Miller, Taylor, & Grant, 2004).

Table 1b.

Test measures of the WAIS and WAIS-R

| Tests | Task descriptions | Test measures |
|---|---|---|
| Information Subtest | Task requiring answering questions about general factual knowledge | |
| Vocabulary Subtest | Task requiring defining words presented visually and orally; higher-quality answers yield more points | |
| Comprehension Subtest | Task requiring answering questions about general principles and social situations involving common sense; higher-quality answers yield more points | |
| Similarities Subtest | Task requiring identifying the common concept underlying pairs of words; more abstract answers yield more points | |
| Digit Span Subtest | Task requiring repeating series of digits of increasing length, first forward and then backward; one point is allocated for each successful trial | |
| Arithmetic Subtest | Task requiring mentally solving orally presented arithmetic problems within a time limit | |
| Picture Completion Subtest | Task requiring identifying missing details in a series of pictures (e.g., a slit in a screw) within a time limit | |
| Picture Arrangement Subtest | Task requiring arranging a set of pictures in order, within a time limit, so that they tell a sensible story | |
| Block Design Subtest | Task requiring arranging two-color blocks as quickly as possible so that they form patterns matching a presented picture | |
| Object Assembly Subtest | Task requiring assembling cut-up drawings of objects (puzzle-like) within a time limit | |
| Digit-Symbol Coding Subtest | Task requiring copying symbols paired with specific digits within a time limit | |

Note: WAIS = Wechsler Adult Intelligence Scales; WAIS-R = Wechsler Adult Intelligence Scales-Revised.

Table 1c.

Test measures of the expansion in the eHRB

| Tests | Task descriptions | Test measures |
|---|---|---|
| Boston Naming Test (BNT) | Task requiring naming 60 visually presented pictures; semantic and phonemic cues are provided | |
| Thurstone Word Fluency | Task requiring writing down as many words as possible within one minute, first words starting with the letter ‘S,’ then four-letter words starting with the letter ‘C’ | |
| Letter Fluency (FAS) | Task requiring saying as many words as possible within one minute starting with the letters F, A, and then S | |
| Category Fluency (Animal) | Task requiring naming as many animals as possible within one minute | |
| Paced Auditory Serial Addition Test (PASAT) | Task requiring adding the two most recent digits in a series of audio-recorded digits presented continuously and at increasing speeds | |
| Digit Vigilance Test | Task requiring crossing out, as rapidly as possible, the number 6 in two large digit arrays | |
| Grooved Pegboard | Task requiring placing 25 pegs into the grooved holes of a metal board using first the dominant and then the nondominant hand | |
| California Verbal Learning Test (CVLT) | A word list is first presented in five learning trials, each followed by immediate recall of as many words as possible. Delayed recall of the word list is then assessed twice, after a short and a long delay. | |
| Story Memory Test | A short story is repeated until participants can recall at least 15 of its 29 pieces of information, with a maximum of five trials. Delayed recall is assessed 4 hr later. | |
| Figure Memory Test | Visual designs are presented on three successive cards, with presentations repeated up to five times until participants can draw the figures from memory with a 15-point accuracy level. Delayed recall is assessed 4 hr later. | |
| Peabody Individual Achievement Test (PIAT) | A series of tasks requiring reading a series of words aloud (i.e., reading recognition), choosing the correct spelling of a word among multiple choices, and choosing, among four scenes, the one that best depicts a short story previously presented in writing | |
| Boston Diagnostic Aphasia Examination (BDAE) Complex Ideational Material | Task requiring answering twelve two-part questions, read aloud, about commonly known facts and brief stories | |

Notes: eHRB = expanded Halstead-Reitan Battery. Test measures that are starred in this table were excluded from the factor analysis due to normative samples that were separately recruited or inadequate in size.

The present study uses this unique dataset to explore the neurocognitive domains underlying performance during comprehensive neuropsychological assessment. Determining the neurocognitive ability structure of the eHRB is a critical step for research seeking to support the reliability and validity of its test scores. Compared with previous factor analytic studies including HRB tests, the present study employed a large, diverse, and well-defined sample of neurocognitively healthy individuals, as well as robust statistical techniques, including control of instrumental factors and oblique factor rotation. Demographic effects were evaluated to support factor interpretation. To provide historical context and insight into the effects of measure selection, the factor structure of the subset of tests composing the original HRB is also reported, and the separate contribution of the WAIS and WAIS-R is examined. By characterizing and comparing the HRB and eHRB factor structures with and without the Wechsler scales, the incremental factors contributed by the expanded battery are evaluated.

Method

Participants and Design

The present study used the extensive dataset collected as part of the eHRB norming effort (Heaton et al., 1991, 2004). Healthy participants were recruited over a period of 25 years in the context of several neuropsychological studies (e.g., Heaton, Grant, Butters et al., 1995; Heaton, Grant, McSweeny, & Adams, 1983; Savage et al., 1988) and the construction of African American norms (Norman, Evans, Miller, & Heaton, 2000; Norman et al., 2011). The same standardized administration procedures and scoring guidelines were used across studies (Heaton & Heaton, 1981). Most participants were paid for their time. Based on structured interviews, subjects were excluded if they endorsed any history of neurological disorder, medical condition that might affect the brain, significant head trauma, learning disability, serious psychiatric disorder, or substance use disorder. Effort was assessed using formal effort testing and examiner ratings.

The majority of subjects were administered most measures as part of the eHRB normative project. However, because the norming project was a multi-study effort with multiple goals, it was not uncommon for a single subject to have some missing measures. Parameter estimation techniques used in latent variable modeling tend to be robust to missing data (Hagenaars & McCutcheon, 2002); however, to limit error, only subjects who had been administered at least half of the eHRB measures, including at least one memory measure, were selected. Remaining missing data were assumed to be missing at random and were handled using full information maximum likelihood (Collins, Schafer, & Kam, 2001; Muthén & Muthén, 2009). The final sample (N = 982; age: M = 47.2 years, SD = 18.0; education: M = 13.7 years, SD = 2.5) consisted of 443 female and 539 male participants and of 618 African American and 364 Caucasian participants, with sizable representation of all age and education groups. This sample composition takes advantage of the largest African American recruitment, by far, of any neuropsychological norming study (Heaton et al., 2004) and provides a diverse framework for studying the structure of neurocognition. All data and methods used in this manuscript comply with University of California, San Diego regulations.
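The inclusion rule described above can be sketched as a simple filter. This is a hypothetical illustration, not the authors’ code: it assumes each participant record is a Python dict mapping a measure name to a scaled score (None when the measure was not administered), and the helper names `meets_inclusion` and `select_sample` as well as the toy measure labels are invented for the example. Measures still missing after this filter are left to full information maximum likelihood rather than imputed.

```python
from typing import Dict, List, Optional, Sequence

# Hypothetical record format: measure name -> scaled score, or None if not administered.
Record = Dict[str, Optional[float]]


def meets_inclusion(record: Record,
                    ehrb_measures: Sequence[str],
                    memory_measures: Sequence[str]) -> bool:
    """True if at least half of the eHRB measures and at least one memory measure are present."""
    n_present = sum(1 for m in ehrb_measures if record.get(m) is not None)
    has_memory = any(record.get(m) is not None for m in memory_measures)
    return 2 * n_present >= len(ehrb_measures) and has_memory


def select_sample(records: List[Record],
                  ehrb_measures: Sequence[str],
                  memory_measures: Sequence[str]) -> List[Record]:
    """Keep participants meeting the rule; their remaining gaps are left for FIML."""
    return [r for r in records if meets_inclusion(r, ehrb_measures, memory_measures)]


# Toy battery of four measures, two of which are memory measures (illustrative labels only).
measures = ["Trails A", "Trails B", "Story Memory", "Figure Memory"]
memory = ["Story Memory", "Figure Memory"]
people = [
    {"Trails A": 9.0, "Trails B": 11.0},       # half present, but no memory measure
    {"Trails A": 10.0, "Story Memory": 12.0},  # half present, including a memory measure
    {"Figure Memory": 8.0},                    # fewer than half present
]
print(len(select_sample(people, measures, memory)))
```

Only the second toy participant passes both conditions, so the filter keeps a single record.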

Measures

Halstead-Reitan Battery and Expanded Halstead-Reitan Battery

Tests administered as part of the eHRB norming effort and their abbreviations are described in Tables 1a–c. Tests administered to fewer than 25% of the sample (Table 2) were not included in the analyses. Test measures were excluded if they were not normally distributed or, to avoid redundancy, if they were combinations of other measures (see notes to Tables 1a–c). Pure motor tests (i.e., Grip Strength and Finger Tapping) were also excluded from the analyses to limit non-cognitive contributions when studying the structure of cognition and to avoid artificially inflating gender differences. Thirty-three eHRB test measures remained in the analysis. Most of these measures were available for more than 80% of the sample, with the exceptions of the Thurstone Word Fluency Test (available for 67% of the sample, N = 658), PASAT (49%, N = 479), Digit Vigilance Test (57%, N = 562), and CVLT (69%, N = 681). Sample sizes, even for those tests, were deemed adequate for factor analysis, and missing values were not expected to have a significant impact on the results.
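The availability figures quoted above are simple proportions of the 982-participant analytic sample. The sketch below, using participant counts from Table 2, reproduces those percentages and applies the 25% administration threshold; the function names are illustrative, and only the threshold criterion is modeled (normality, redundancy, and pure motor content are not).

```python
from typing import Dict, List

N_TOTAL = 982  # final analytic sample


def percent_available(n_available: int, n_total: int = N_TOTAL) -> float:
    """Percentage of the sample that was administered a given test."""
    return 100.0 * n_available / n_total


def below_threshold(counts: Dict[str, int], threshold: float = 25.0,
                    n_total: int = N_TOTAL) -> List[str]:
    """Tests administered to fewer than `threshold` percent of the sample."""
    return [test for test, n in counts.items()
            if percent_available(n, n_total) < threshold]


# Participant counts taken from Table 2.
counts = {
    "Thurstone Word Fluency": 658,
    "PASAT": 479,
    "Digit Vigilance Test": 562,
    "CVLT": 681,
    "PIAT Spelling": 125,
    "PIAT Reading Comprehension": 217,
}

for test, n in counts.items():
    print(f"{test}: {percent_available(n):.0f}% (N = {n})")
print("Below 25%:", below_threshold(counts))
```

The two PIAT subtests flagged by the threshold are the same ones that Table 2 marks as excluded.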

Table 2.

Sample characteristics presented for each test separately

| Tests | Total (n) | Men (n) | Women (n) | Caucasian (n) | African American (n) | Age, M (SD) | Education, M (SD) |
|---|---|---|---|---|---|---|---|
| Halstead-Reitan Battery | | | | | | | |
| Speech-Sounds Perception Test | 893 | 498 | 395 | 311 | 582 | 47.6 (18.2) | 13.8 (2.5) |
| Seashore Rhythm Test | 894 | 499 | 395 | 312 | 582 | 47.7 (18.3) | 13.8 (2.5) |
| Reitan-Indiana Aphasia Screening Test | 863 | 466 | 397 | 286 | 577 | 47.7 (18.5) | 13.8 (2.6) |
| Spatial Relations | 881 | 485 | 396 | 301 | 580 | 47.6 (18.3) | 13.7 (2.6) |
| Reitan-Kløve Sensory-Perceptual Examination (SPE) | 893 | 494 | 399 | 293 | 600 | 46.1 (17.8) | 13.7 (2.6) |
| Tactile Form Recognition Test (TFR) | 837 | 459 | 378 | 257 | 580 | 46.9 (18.1) | 13.7 (2.6) |
| *Finger Tapping Test | 744 | 428 | 316 | 361 | 383 | 50.7 (18.1) | 13.8 (2.6) |
| *Grip Strength Test | 892 | 489 | 403 | 299 | 593 | 46.7 (17.9) | 13.8 (2.6) |
| Tactual Performance Test (TPT) | 893 | 499 | 394 | 321 | 572 | 47.0 (17.9) | 13.8 (2.6) |
| Category Test | 969 | 529 | 440 | 360 | 609 | 46.9 (17.9) | 13.8 (2.5) |
| Trail Making Test | 982 | 539 | 443 | 364 | 618 | 47.2 (18.0) | 13.8 (2.5) |
| Expansion of the Halstead-Reitan Battery | | | | | | | |
| Boston Naming Test (BNT) | 844 | 429 | 415 | 227 | 617 | 49.0 (18.0) | 13.6 (2.4) |
| Thurstone Word Fluency | 658 | 387 | 271 | 276 | 382 | 39.3 (14.5) | 13.9 (2.6) |
| Letter Fluency (FAS) | 806 | 409 | 397 | 203 | 603 | 51.8 (18.0) | 13.5 (2.5) |
| Category Fluency (Animal) | 801 | 405 | 396 | 200 | 601 | 49.0 (18.0) | 13.6 (2.4) |
| Paced Auditory Serial Addition Test (PASAT) | 479 | 268 | 211 | 104 | 375 | 37.8 (12.0) | 13.7 (2.5) |
| Digit Vigilance Test | 562 | 302 | 260 | 179 | 383 | 42.1 (16.0) | 13.7 (2.5) |
| Grooved Pegboard | 950 | 530 | 420 | 361 | 589 | 47.1 (17.7) | 13.8 (2.5) |
| California Verbal Learning Test (CVLT) | 681 | 319 | 362 | 166 | 515 | 51.8 (18.0) | 13.5 (2.5) |
| Story Memory Test | 953 | 519 | 434 | 341 | 612 | 47.3 (18.1) | 13.7 (2.5) |
| Figure Memory Test | 937 | 510 | 427 | 331 | 606 | 47.3 (18.0) | 13.7 (2.5) |
| Peabody Individual Achievement Test (PIAT) | | | | | | | |
| Reading Recognition | 871 | 473 | 398 | 280 | 591 | 47.6 (18.3) | 13.7 (2.6) |
| *Spelling | 125 | 97 | 28 | 125 | 0 | 35.5 (12.8) | 14.8 (2.9) |
| *Reading Comprehension | 217 | 135 | 82 | 203 | 14 | 48.2 (19.4) | 14.4 (2.7) |
| *Boston Diagnostic Aphasia Examination (BDAE) Complex Ideational Material | 0 | 0 | 0 | 0 | 0 | – | – |
| Wechsler Adult Intelligence Scales | | | | | | | |
| WAIS (11 subtests) | 126 | 98 | 28 | 126 | 0 | 35.4 (12.8) | 14.8 (2.9) |
| WAIS-R (11 subtests) | 459 | 217 | 242 | 97 | 362 | 44.9 (16.8) | 13.6 (2.5) |
| WAIS-R, African American participants | | | | | | 39.0 (12.6) | 13.6 (2.5) |
| WAIS-R, Caucasian participants | | | | | | 67.2 (11.1) | 13.7 (2.3) |
| All participants administered at least half of the eHRB measures and one memory test | 982 | 539 | 443 | 364 | 618 | 47.2 (18.0) | 13.7 (2.5) |

Note: M = Mean; SD = Standard Deviation. Test measures that are starred in this table were excluded from the factor analyses due to normative samples that were separately recruited or inadequate in size (i.e., BDAE Complex Ideational Material, PIAT spelling, and PIAT reading comprehension), or to avoid introducing gender differences based on physiology rather than cognition (i.e., Grip Strength Test and Finger Tapping Test).

Wechsler Adult Intelligence Scales

Two versions of the Wechsler scales were administered as part of the eHRB norming effort: the WAIS (Wechsler, 1955) and the WAIS-R (Wechsler, 1981). Merging WAIS and WAIS-R data is supported by the considerable content similarity across batteries (Kaufman & Lichtenberger, 2006) and adequate correlations between scores in counterbalanced administrations (Ryan, Nowak, & Geisser, 1987). However, educational level was significantly higher in the WAIS-R normative sample than in the WAIS normative sample (Kaufman & Lichtenberger, 2006), yielding significantly higher WAIS IQ than WAIS-R IQ scores in individuals tested on both batteries. Further complicating the merge, the WAIS and WAIS-R samples differed significantly in ethnicity and age distributions (Table 2) because of administration timing over the 25-year course of the eHRB norming effort: the WAIS was essentially administered during the first wave, which focused on establishing Caucasian norms (Heaton et al., 1991), whereas the WAIS-R was administered during the second wave, which focused on establishing norms for African Americans and older individuals (Heaton et al., 2004).

Assuming a constant scaled score drift across age and education levels, an adjustment coefficient was calculated for each subtest as the average difference between WAIS and WAIS-R demographically uncorrected scaled scores in two subsamples of Caucasian individuals matched for age and education. The adjustment coefficients (Table 3) were then applied to all WAIS scores (N = 126, Caucasian subjects), whereas WAIS-R scores remained unchanged (N = 459, Caucasian and African American subjects). Adjustment coefficients averaged roughly half a standard deviation, consistent with previous findings (Crawford et al., 1990; Wechsler, 1981).
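A minimal sketch of the adjustment procedure, assuming matched WAIS/WAIS-R pairs are available as two aligned lists of uncorrected scaled scores. The coefficient is the mean paired difference (WAIS minus WAIS-R) with its paired-sample t statistic; applying it by subtracting it from each WAIS score is an assumed reading of "applied to all WAIS scores," not a documented detail. The last lines use the Table 3 coefficients to check the half-standard-deviation summary (scaled-score SD = 3). Function names are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence, Tuple

SCALED_SD = 3.0  # scaled scores have M = 10, SD = 3


def adjustment_coefficient(wais: Sequence[float],
                           wais_r: Sequence[float]) -> Tuple[float, float]:
    """Mean paired difference (WAIS - WAIS-R) and its paired-sample t statistic."""
    if len(wais) != len(wais_r) or len(wais) < 2:
        raise ValueError("need at least two matched pairs of equal length")
    diffs = [a - b for a, b in zip(wais, wais_r)]
    d_bar = mean(diffs)
    se = stdev(diffs) / math.sqrt(len(diffs))
    if se == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return d_bar, d_bar / se


def adjust_wais(scores: Sequence[float], coefficient: float) -> List[float]:
    """Shift WAIS scores onto the WAIS-R metric (assumed: subtract the coefficient)."""
    return [s - coefficient for s in scores]


# Adjustment coefficients reported in Table 3.
table3 = {
    "Information": 1.78, "Vocabulary": 2.00, "Comprehension": 2.67,
    "Similarities": 2.11, "Digit Span": 1.56, "Arithmetic": 2.28,
    "Picture Completion": 2.22, "Picture Arrangement": 0.75,
    "Block Design": 2.06, "Object Assembly": 0.61, "Digit-Symbol Coding": 1.06,
}
mean_coef = mean(table3.values())
print(f"Mean coefficient: {mean_coef:.2f} scaled-score points "
      f"= {mean_coef / SCALED_SD:.2f} SD")
```

With the Table 3 values, the mean coefficient is about 1.74 scaled-score points, or roughly 0.58 SD. A p-value would additionally require the t distribution, for example via `scipy.stats.ttest_rel`.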

Table 3.

Adjustment coefficients representing the scaled score drift between the original WAIS and the WAIS-R

| Subtest | Adjustment coefficient (WAIS − WAIS-R) | Paired-sample t | p |
|---|---|---|---|
| Information | 1.78 | 3.16 | .006 |
| Vocabulary | 2.00 | 3.73 | .002 |
| Comprehension | 2.67 | 5.22 | <.001 |
| Similarities | 2.11 | 3.17 | .006 |
| *Digit Span* | *1.56* | *1.95* | *.068* |
| Arithmetic | 2.28 | 3.06 | .007 |
| Picture Completion | 2.22 | 3.14 | .006 |
| *Picture Arrangement* | *0.75* | *0.77* | *.459* |
| Block Design | 2.06 | 3.51 | .003 |
| *Object Assembly* | *0.61* | *0.70* | *.491* |
| Digit-Symbol Coding | 1.06 | 2.37 | .030 |

Note: WAIS = Wechsler Adult Intelligence Scales; WAIS-R = Wechsler Adult Intelligence Scales-Revised. The adjustment coefficients represent the difference between the average uncorrected scaled scores obtained on the WAIS minus those obtained on the WAIS-R for a sample of Caucasian subjects matched for age and education (N = 34). Significance for these differences was tested using paired sample t-tests (t- and p-value presented in the table). The differences across versions that were nonsignificant are indicated in italics.

Analyses

Demographically uncorrected scaled scores (mean of 10, standard deviation of 3, with higher scores indicating better performance) were used in all analyses to permit same-scale comparisons across test measures (see Heaton et al., 2004). Exploratory factor analyses (EFAs; Mulaik, 2010) with oblique factor rotations (Geomin, Yates, 1987; CF-Varimax, Crawford, 1975) were conducted on four test selections (HRB, HRB + WAIS, eHRB, eHRB + WAIS). For each EFA, the final solution among models with increasing numbers of factors was selected by comparing Akaike’s Information Criterion (AIC; Akaike, 1987), the Bayesian Information Criterion (BIC; Schwarz, 1978), the sample-size-adjusted BIC (adjBIC; Hagenaars & McCutcheon, 2002; Sclove, 1987), the chi-square statistic (χ²; Hu & Bentler, 1999), the Comparative Fit Index (CFI; Bentler, 1990), the Root Mean Square Error of Approximation (RMSEA; Steiger, 1990), and the Standardized Root Mean Square Residual (SRMR; Byrne, 1998). The variance accounted for by the solution, the variance accounted for by each individual factor, and the interpretability of the factors were also evaluated to determine the plausibility of the factor structure. All analyses were run using Mplus™ (Muthén & Muthén, 2009). To reduce the risk of producing instrumental factors (Cattell, 1961; Larrabee, 2003), only one measure per test was included in the EFAs. Same-test measures were kept only if they assessed markedly different cognitive constructs: Trail Making Test–Part A and –Part B, involving simple sequencing and sequencing with set-switching, respectively (Reitan, 1955); Digit Vigilance–Time and –Error, assessing speed and error monitoring, respectively (Lewis, 1995); and Tactual Performance Test–Speed and –Memory, assessing speed and memory abilities, respectively (Reitan & Wolfson, 1985).
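The information criteria and degrees of freedom reported in Table 4 follow from standard formulas, sketched below. The parameter count is an assumption checked only against the tabled values: an m-factor EFA on p variables is taken to estimate p intercepts, p residual variances, and p·m loadings less m(m − 1)/2 rotational constraints. AIC = −2LL + 2k, BIC = −2LL + k ln N, and the sample-size-adjusted BIC replaces N with (N + 2)/24 (Sclove, 1987). The log-likelihood in the example is back-calculated from the tabled AIC of the 1-factor HRB model; it is not a reported value. Function names are illustrative.

```python
import math
from typing import Dict


def efa_free_parameters(p: int, m: int) -> int:
    """p intercepts + p residual variances + p*m loadings - m(m-1)/2 rotation constraints."""
    return 2 * p + p * m - m * (m - 1) // 2


def efa_df(p: int, m: int) -> int:
    """Chi-square df: p(p+1)/2 covariance moments minus covariance-structure parameters."""
    return p * (p + 1) // 2 - (p + p * m - m * (m - 1) // 2)


def information_criteria(loglik: float, k: int, n: int) -> Dict[str, float]:
    """AIC, BIC, and sample-size-adjusted BIC (n* = (n + 2) / 24)."""
    neg2ll = -2.0 * loglik
    return {
        "AIC": neg2ll + 2 * k,
        "BIC": neg2ll + k * math.log(n),
        "adjBIC": neg2ll + k * math.log((n + 2) / 24),
    }


N = 982
# df for HRB (p = 10) with 1 and 4 factors, and eHRB (p = 22) with 8 factors.
print(efa_df(10, 1), efa_df(10, 4), efa_df(22, 8))

# Log-likelihood back-calculated from the tabled AIC of the 1-factor HRB model.
k = efa_free_parameters(10, 1)
loglik = -(42_879 - 2 * k) / 2
print({name: round(value) for name, value in information_criteria(loglik, k, N).items()})

# Lower BIC is better; among the HRB solutions in Table 4 it is lowest for 4 factors.
hrb_bic = {1: 43_026, 2: 42_910, 3: 42_902, 4: 42_899, 5: 42_928}
print(min(hrb_bic, key=hrb_bic.get))
```

The rounded criteria (AIC 42,879, BIC 43,026, adjBIC 42,930) match the first HRB row of Table 4, which suggests the sketch's parameter count is consistent with the reported models. The study's final selection also weighed fit indices and interpretability, which no single criterion captures.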

To examine demographic effects, composite factor scores were constructed using confirmatory factor analysis (CFA; Lawley & Maxwell, 1971). CFA was especially useful because it allowed same-test measures to be included in the model while their within-instrument correlations were modeled, thus circumventing the issues of instrumental factors and arbitrary measure selection. The CFA model parameters consisted of factor loadings guided by the EFA results of the most inclusive battery, between-factor correlations, factor variances (factor means were set to 0), and residual correlations relating any two same-test measures. The CFA model was progressively simplified by iteratively fixing small and nonsignificant loadings to zero. The remaining loadings were interpreted based on criteria proposed by Comrey and Lee (1992). Adequate fit was verified using the same statistical indices as for the EFAs. Composite factor scores were computed for the final model using Bartlett’s method (Estabrook & Neale, 2013; Muthén, 1998–2004, Appendix 11). Hierarchical multiple linear regression was then carried out on these composite scores, using models incrementally including age, education, ethnicity, and gender. Quadratic and interaction terms were kept only if they were significant and explained at least 1% of the variance.
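Bartlett’s method is a weighted least squares estimate of each person’s factor scores, weighting the observed measures by the inverse residual covariance: f̂ = (Λ′Ψ⁻¹Λ)⁻¹Λ′Ψ⁻¹(x − μ). The NumPy sketch below assumes the loadings Λ, intercepts μ, and residual covariance matrix Ψ have already been estimated; in the model described above, Ψ would carry the same-test residual covariances as off-diagonal terms. It is not Mplus output. The second pair of helpers computes the R² increment used for the 1% retention rule; the accompanying significance test is omitted. All names and toy values are illustrative.

```python
from typing import Sequence

import numpy as np


def bartlett_scores(x, mu, loadings, psi) -> np.ndarray:
    """Bartlett factor scores (L' Psi^-1 L)^-1 L' Psi^-1 (x - mu).

    x: (n, p) observed scores; mu: (p,) intercepts; loadings: (p, m) matrix L;
    psi: (p, p) residual covariance, which may hold same-test covariances off-diagonal.
    """
    x = np.atleast_2d(np.asarray(x, dtype=float))
    lam = np.asarray(loadings, dtype=float)
    psi_inv_lam = np.linalg.solve(np.asarray(psi, dtype=float), lam)  # Psi^-1 L, (p, m)
    weights = np.linalg.solve(lam.T @ psi_inv_lam, psi_inv_lam.T)     # (m, p)
    return (x - np.asarray(mu, dtype=float)) @ weights.T


def r_squared(y, predictors: Sequence) -> float:
    """R^2 of an OLS regression of y on an intercept plus the given predictor columns."""
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones(len(y))] + [np.asarray(c, dtype=float) for c in predictors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return 1.0 - float(resid @ resid) / float(np.sum((y - y.mean()) ** 2))


def delta_r2(y, base: Sequence, extra: Sequence) -> float:
    """R^2 gained by adding `extra` predictors to a model already containing `base`."""
    return r_squared(y, list(base) + list(extra)) - r_squared(y, base)


# Toy check: scores generated exactly from two hypothetical factors are recovered.
lam = np.array([[0.8, 0.0], [0.7, 0.0], [0.0, 0.6], [0.0, 0.9]])
psi = np.diag([0.36, 0.51, 0.64, 0.19])
mu = np.full(4, 10.0)
f_true = np.array([[1.0, -0.5], [0.2, 0.3]])
print(np.allclose(bartlett_scores(mu + f_true @ lam.T, mu, lam, psi), f_true))

# Toy check of the 1% rule: does a quadratic age term add at least .01 to R^2?
age = np.arange(20.0, 85.0, 5.0)
score = 12.0 - 0.002 * (age - 20.0) ** 2
print(delta_r2(score, [age], [age ** 2]) >= 0.01)
```

Because Bartlett weights satisfy W·Λ = I, noise-free data reproduce the generating factor scores exactly, which is what the first toy check verifies.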

Results

Exploratory Factor Analyses

For all EFAs, indices of model fit and proportions of variance explained are presented in Table 4. In the first EFA, on HRB tests alone, indices of model fit and factor interpretability suggested a 4-factor solution, accounting for 71% of the variance. A 5-factor solution was considered because some model fit indices improved, but it was discarded because AIC did not improve (42,635 for both solutions), BIC and adjBIC were worse, and interpretability was poor (i.e., only one item loaded on the fifth factor). Based on geomin-rotated factor loadings (Table 5a) and a review of the cognitive processes theoretically involved in each test measure, the factors were interpreted as representing ‘language/verbal attention,’ ‘visuospatial cognition,’ ‘perceptual-motor speed,’ and ‘perceptual attention.’ Correlations between factors are presented in Table 5b.

Table 4.

Results of the EFA: indices of model fit

| Number of factors | df | χ² | CFI (good > .9) | AIC (lower = better) | BIC (lower = better) | adjBIC (lower = better) | RMSEA (good < .05) | SRMR (good < .05) | Cumulative % variance |
|---|---|---|---|---|---|---|---|---|---|
| EFA on HRB tests (10 variables) | | | | | | | | | |
| 1 | 35 | 310.4 | 0.931 | 42,879 | 43,026 | 42,930 | 0.090 | 0.040 | 49.8 |
| 2 | 26 | 132.6 | 0.973 | 42,719 | 42,910 | 42,786 | 0.065 | 0.030 | 57.3 |
| 3 | 18 | 69.5 | 0.987 | 42,672 | 42,902 | 42,753 | 0.054 | 0.021 | 64.6 |
| 4 | 11 | 18.3 | 0.998 | 42,635 | 42,899 | 42,727 | 0.026 | 0.009 | 70.8 |
| 5 | 5 | 6.3 | >.999 | 42,635 | 42,928 | 42,738 | 0.016 | 0.005 | 75.9 |
| EFA on eHRB tests (22 variables) | | | | | | | | | |
| 1 | 209 | 2,066.3 | 0.803 | 84,974 | 85,297 | 85,087 | 0.095 | 0.078 | 43.8 |
| 2 | 188 | 1,055.1 | 0.908 | 84,005 | 84,430 | 84,154 | 0.069 | 0.043 | 53.1 |
| 3 | 168 | 664.0 | 0.947 | 83,654 | 84,177 | 83,837 | 0.055 | 0.031 | 58.7 |
| 4 | 149 | 452.3 | 0.968 | 83,480 | 84,096 | 83,696 | 0.046 | 0.026 | 62.8 |
| 5 | 131 | 339.3 | 0.978 | 83,403 | 84,107 | 83,650 | 0.040 | 0.023 | 66.7 |
| 6 | 114 | 250.7 | 0.986 | 83,349 | 84,136 | 83,625 | 0.035 | 0.018 | 70.0 |
| 7 | 98 | 166.0 | 0.993 | 83,296 | 84,161 | 83,599 | 0.027 | 0.014 | 73.3 |
| 8 | 83 | 117.9 | 0.996 | 83,278 | 84,217 | 83,607 | 0.021 | 0.012 | 76.1 |
| EFA on HRB + WAIS tests (21 variables) | | | | | | | | | |
| 1 | 189 | 2,227.4 | 0.774 | 77,619 | 77,927 | 77,727 | 0.105 | 0.095 | 43.7 |
| 2 | 169 | 997.27 | 0.908 | 76,429 | 76,835 | 76,571 | 0.071 | 0.044 | 54.8 |
| 3 | 150 | 537.1 | 0.957 | 76,007 | 76,506 | 76,182 | 0.051 | 0.035 | 60.4 |
| 4 | 132 | 372.0 | 0.973 | 75,878 | 76,465 | 76,083 | 0.043 | 0.025 | 64.9 |
| 5 | 115 | 223.9 | 0.988 | 75,764 | 76,434 | 75,998 | 0.031 | 0.017 | 68.9 |
| 6 | 99 | 161.8 | 0.993 | 75,734 | 76,482 | 75,996 | 0.025 | 0.014 | 71.9 |
| EFA on eHRB + WAIS tests (33 variables) | | | | | | | | | |
| 1 | 495 | 4,742.9 | 0.724 | 119,411 | 119,895 | 119,581 | 0.093 | 0.100 | 41.7 |
| 2 | 463 | 2,579.2 | 0.863 | 117,311 | 117,952 | 117,536 | 0.068 | 0.054 | 51.2 |
| 3 | 432 | 1,617.4 | 0.923 | 116,412 | 117,204 | 116,689 | 0.053 | 0.040 | 56.3 |
| 4 | 402 | 1,309.7 | 0.941 | 116,164 | 117,103 | 116,493 | 0.048 | 0.034 | 60.2 |
| 5 | 373 | 1,079.2 | 0.954 | 115,992 | 117,072 | 116,370 | 0.044 | 0.031 | 63.4 |
| 6 | 345 | 866.2 | 0.966 | 115,835 | 117,052 | 116,261 | 0.039 | 0.024 | 66.0 |
| 7 | 318 | 696.7 | 0.975 | 115,719 | 117,068 | 116,192 | 0.035 | 0.021 | 68.4 |
| 8 | 292 | 569.6 | 0.982 | 115,644 | 117,121 | 116,161 | 0.031 | 0.020 | 70.8 |
| 9 | 267 | 466.9 | 0.987 | 115,591 | 117,190 | 116,152 | 0.028 | 0.016 | 72.9 |

Note: EFA = Exploratory Factor Analysis; HRB = Halstead-Reitan Battery; eHRB = expanded Halstead-Reitan Battery; WAIS = Wechsler Adult Intelligence Scales; df = number of degrees of freedom in the models; χ² = chi-square index of model fit; AIC = Akaike’s Information Criterion; BIC = Bayesian Information Criterion; adjBIC = sample-size-adjusted Bayesian Information Criterion; CFI = Comparative Fit Index; RMSEA = Root Mean Square Error of Approximation; SRMR = Standardized Root Mean Square Residual; Cumulative % variance = proportion of variance accounted for by each model (i.e., the sum of eigenvalues divided by the number of variables). For each EFA, the selected model is highlighted in bold.
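The note above defines cumulative variance as a sum of eigenvalues divided by the number of variables. Assuming these are the leading eigenvalues of the observed correlation matrix, the computation can be sketched in Python as follows. The toy 2 × 2 correlation matrix is purely illustrative; in practice the eigenvalues would come from a routine such as `numpy.linalg.eigvalsh`.

```python
def cumulative_variance_pct(eigenvalues, n_factors):
    """Percent of total standardized variance carried by the n_factors largest eigenvalues.

    The eigenvalues of a p x p correlation matrix sum to p (its trace),
    so dividing their partial sum by p yields a proportion of total variance.
    """
    p = len(eigenvalues)
    largest = sorted(eigenvalues, reverse=True)[:n_factors]
    return 100.0 * sum(largest) / p


# Toy example: the correlation matrix [[1, r], [r, 1]] has eigenvalues 1 + r and 1 - r.
r = 0.6
toy_eigenvalues = [1 + r, 1 - r]
print(cumulative_variance_pct(toy_eigenvalues, 1))
print(cumulative_variance_pct(toy_eigenvalues, 2))
```

With one factor the toy matrix retains 80% of the variance, and with as many factors as variables it retains all of it, which is why the cumulative percentages in Table 4 increase monotonically with the number of factors.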

Table 5a.

EFA of the HRB: geomin-rotated factor loadings of the 4-factor solution

| Test measures | F1: Language/verbal attention | F2: Visuospatial cognition | F3: Perceptual-motor speed | F4: Perceptual attention |
|---|---|---|---|---|
| HRB | | | | |
| Aphasia Screening | 0.651 | −0.055 | 0.019 | −0.027 |
| Speech-Sounds Perception | 0.376 | 0.076 | 0.068 | 0.322 |
| Category | 0.125 | 0.520 | 0.186 | 0.071 |
| TPT (memory) | 0.065 | 0.774 | −0.035 | −0.043 |
| TPT (time – total) | −0.086 | 0.658 | 0.311 | 0.027 |
| Trail Making Test—Part A | 0.031 | 0.007 | 0.832 | −0.042 |
| Trail Making Test—Part B | 0.249 | 0.039 | 0.629 | 0.071 |
| SPE (right side) | 0.036 | 0.071 | −0.024 | 0.693 |
| TFR (right hand) | −0.032 | −0.056 | 0.157 | 0.587 |
| Seashore Rhythm | 0.294 | 0.053 | −0.010 | 0.285 |

Note: EFA = Exploratory Factor Analysis; HRB = Halstead-Reitan Battery. Light gray-shaded areas indicate loadings ≥ 0.24 (i.e., items with more than 5% overlapping variance with the factor); and dark gray-shaded areas indicate loadings ≥ 0.4 considered priorities for factor interpretation.
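The two shading thresholds in the table notes can be expressed as a small classification rule. The Python sketch below labels loadings as primary (≥ 0.40), secondary (≥ 0.24, since 0.24² ≈ 0.058 exceeds 5% shared variance), or negligible, and lists the factors on which an item reaches the 0.24 threshold, which flags possible cross-loadings. Applying the thresholds to absolute values is an assumption, as the notes do not say how negative loadings were shaded; the function names are hypothetical.

```python
# Thresholds stated in the notes to Tables 5a-8a.
SALIENT = 0.24   # 0.24 ** 2 = 0.0576, i.e., more than 5% overlapping variance
PRIMARY = 0.40   # loadings prioritized for factor interpretation


def classify_loading(loading):
    """Label one loading; uses the absolute value (an assumption, not stated in the notes)."""
    size = abs(loading)
    if size >= PRIMARY:
        return "primary"
    if size >= SALIENT:
        return "secondary"
    return "negligible"


def salient_factors(row, factor_names):
    """Factors on which an item loads at or above 0.24 in absolute value (possible cross-loadings)."""
    return [name for name, value in zip(factor_names, row) if abs(value) >= SALIENT]


FACTORS = ["Language/verbal attention", "Visuospatial cognition",
           "Perceptual-motor speed", "Perceptual attention"]
speech_sounds = [0.376, 0.076, 0.068, 0.322]  # Speech-Sounds Perception row of Table 5a

print([classify_loading(x) for x in speech_sounds])
print(salient_factors(speech_sounds, FACTORS))
```

For the Speech-Sounds Perception Test, the rule shows two secondary loadings, on ‘language/verbal attention’ and ‘perceptual attention,’ matching its split profile in Table 5a.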

Table 5b.

EFA of the HRB: correlations between factors

| | Language/Verbal Attention | Visuospatial Cognition | Perceptual-Motor Speed | Perceptual Attention |
|---|---|---|---|---|
| Language/Verbal Attention | 1 | | | |
| Visuospatial Cognition | 0.479 | 1 | | |
| Perceptual-Motor Speed | 0.560 | 0.682 | 1 | |
| Perceptual Attention | 0.433 | 0.748 | 0.717 | 1 |

Note: EFA = Exploratory Factor Analysis; HRB = Halstead-Reitan Battery. Effect sizes are gauged based on Cohen’s criteria (Cohen, Cohen, West, & Aiken, 2003), that is, small (r² ≥ .02, i.e., r ≥ .14), medium (r² ≥ .13, i.e., r ≥ .36), and large (r² ≥ .26, i.e., r ≥ .51), and are color-coded using very light gray, light gray, and dark gray-shaded areas, respectively.
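The correlation cut-offs in this note are square roots of Cohen’s r² criteria. The Python sketch below derives the r equivalents and classifies a correlation on its squared value, ignoring its sign; the function names are hypothetical. Because the quoted r values are rounded, a correlation of exactly .14 falls just below r² = .02, so the sketch classifies on r² directly.

```python
import math

# Cohen's r-squared cut-offs quoted in the table notes, largest first.
CUTOFFS = [(0.26, "large"), (0.13, "medium"), (0.02, "small")]


def effect_size_label(r):
    """Classify a correlation by its squared value; the sign is ignored."""
    r2 = r * r
    for cutoff, label in CUTOFFS:
        if r2 >= cutoff:
            return label
    return "negligible"


def r_equivalent(r2_cutoff):
    """Correlation magnitude corresponding to an r-squared cut-off."""
    return math.sqrt(r2_cutoff)


print([round(r_equivalent(c), 2) for c, _ in reversed(CUTOFFS)])
# Two correlations from Table 5b and one from Table 9.
print(effect_size_label(0.479), effect_size_label(0.682), effect_size_label(-0.261))
```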

In the second EFA, on eHRB tests, a 7-factor solution best explained the data, accounting for 73% of the variance. An 8-factor solution was considered based on slight improvement of some model fit indices, but was discarded based on worse BIC and adjBIC and on poor factor interpretability (i.e., only one item loaded on the 8th factor). Based on geomin-rotated factor loadings (Table 6a), the factors were interpreted as representing ‘working memory/learning,’ ‘fluency,’ ‘language,’ ‘episodic/semantic memory,’ ‘visuospatial cognition,’ ‘perceptual-motor speed,’ and ‘perceptual attention.’ Correlations between factors are presented in Table 6b.

Table 6a.

EFA of the eHRB: geomin-rotated factor loadings of the 7-factor solution

| Test measures | F1: Working memory/learning | F2: Fluency | F3: Language | F4: Episodic/semantic memory | F5: Visuospatial cognition | F6: Perceptual-motor speed | F7: Perceptual attention |
|---|---|---|---|---|---|---|---|
| HRB | | | | | | | |
| Aphasia Screening | 0.289 | 0.011 | 0.517 | 0.112 | −0.142 | 0.089 | 0.012 |
| Speech-Sounds Perception | 0.073 | 0.162 | 0.257 | −0.057 | 0.173 | 0.114 | 0.295 |
| Category | 0.239 | 0.002 | −0.017 | 0.085 | 0.521 | 0.102 | 0.046 |
| TPT (memory) | −0.015 | 0.180 | −0.035 | −0.024 | 0.745 | −0.052 | −0.020 |
| TPT (time – total) | 0.024 | −0.006 | −0.011 | 0.020 | 0.618 | 0.263 | 0.067 |
| Trail Making Test—Part A | 0.203 | 0.014 | 0.031 | −0.061 | 0.102 | 0.649 | −0.015 |
| Trail Making Test—Part B | 0.450 | 0.014 | 0.031 | −0.004 | −0.004 | 0.599 | 0.009 |
| SPE (right side) | 0.309 | 0.049 | −0.206 | 0.013 | 0.027 | −0.018 | 0.585 |
| TFR (right hand) | −0.030 | −0.025 | 0.015 | 0.020 | −0.004 | 0.209 | 0.556 |
| Seashore Rhythm | 0.217 | 0.191 | 0.018 | −0.174 | 0.098 | 0.026 | 0.222 |
| eHRB expansion | | | | | | | |
| PASAT | 0.525 | 0.106 | −0.041 | 0.067 | −0.001 | 0.340 | −0.012 |
| Digit Vigilance (Error) | 0.566 | −0.114 | 0.171 | −0.130 | 0.014 | −0.071 | 0.043 |
| Thurstone Word Fluency | 0.018 | 0.727 | 0.051 | 0.006 | 0.206 | 0.083 | −0.016 |
| Letter Fluency (FAS) | −0.012 | 0.775 | 0.025 | 0.062 | −0.054 | −0.024 | 0.192 |
| Category Fluency (Animal) | 0.082 | 0.322 | 0.023 | 0.299 | 0.016 | 0.131 | 0.093 |
| PIAT reading recognition | 0.001 | 0.220 | 0.653 | −0.008 | 0.003 | −0.065 | −0.080 |
| BNT | −0.013 | 0.041 | 0.536 | 0.304 | 0.218 | −0.011 | 0.043 |
| Story Memory (Learning) | 0.51 | −0.002 | 0.047 | 0.494 | 0.043 | −0.024 | −0.001 |
| CVLT (Trials 1–5) | 0.334 | 0.064 | −0.006 | 0.351 | 0.123 | 0.166 | <0.001 |
| Figure Memory (Learning) | 0.203 | −0.027 | 0.051 | 0.052 | 0.633 | 0.028 | 0.023 |
| Digit Vigilance (Time) | −0.069 | 0.053 | −0.118 | 0.015 | −0.003 | 0.674 | 0.076 |
| Grooved Pegboard (dom. hand) | −0.009 | −0.092 | 0.035 | 0.086 | 0.245 | 0.373 | 0.283 |

Note: EFA = Exploratory Factor Analysis; HRB = Halstead-Reitan Battery; eHRB = expanded Halstead-Reitan Battery. Light gray-shaded areas indicate loadings ≥ 0.24 (i.e., items with more than 5% overlapping variance with the factor); and dark gray-shaded areas indicate loadings ≥ 0.4 considered priorities for factor interpretation.

Table 6b.

EFA of the eHRB: correlations between factors

| | Working memory/learning | Fluency | Language | Episodic/semantic memory | Visuospatial cognition | Perceptual-motor speed | Perceptual attention |
|---|---|---|---|---|---|---|---|
| Working memory/learning | 1 | | | | | | |
| Fluency | 0.428 | 1 | | | | | |
| Language | 0.427 | 0.498 | 1 | | | | |
| Episodic/semantic memory | 0.287 | 0.375 | 0.245 | 1 | | | |
| Visuospatial cognition | 0.604 | 0.277 | 0.174 | 0.420 | 1 | | |
| Perceptual-motor speed | 0.372 | 0.462 | 0.136 | 0.376 | 0.567 | 1 | |
| Perceptual attention | 0.438 | 0.353 | 0.151 | 0.320 | 0.653 | 0.585 | 1 |

Note: EFA = Exploratory Factor Analysis; eHRB = expanded Halstead-Reitan Battery. Effect sizes are gauged based on Cohen’s criteria (Cohen et al., 2003), that is, small (r² ≥ .02, i.e., r ≥ .14), medium (r² ≥ .13, i.e., r ≥ .36), and large (r² ≥ .26, i.e., r ≥ .51), and are color-coded using very light gray, light gray, and dark gray-shaded areas, respectively.

In the third EFA, on combined HRB and WAIS tests, a 5-factor solution best explained the data, accounting for 69% of the variance. A 6-factor solution was considered based on slight improvement of most model fit indices, but was discarded based on worse BIC and poor factor interpretability (i.e., only one item loaded on the 6th factor). In the 5-factor solution, geomin rotation also yielded a poorly defined 5th factor (all loadings < 0.4). An alternative rotation method, CF-varimax, was therefore tested; it yielded a consistent structure with slightly more robust loadings (Table 7a) and was retained for better interpretability. The factors were interpreted as representing ‘working memory/verbal attention,’ ‘semantic knowledge,’ ‘visuospatial cognition,’ ‘perceptual-motor speed,’ and ‘perceptual attention.’ Correlations between factors are presented in Table 7b.
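To make the two rotation criteria concrete, the Python sketch below evaluates the geomin and CF-varimax criteria for a fixed loading matrix. A rotation algorithm searches over rotations to minimize such a criterion, which this sketch does not do; it only shows that simpler structure scores lower. It assumes the Crawford-Ferguson family formulation with κ = 1/p (p = number of variables) for CF-varimax; the geomin ε value and the toy loading matrices are arbitrary illustrative choices, not values from this study.

```python
import math


def cf_criterion(loadings, kappa):
    """Crawford-Ferguson family criterion for a p x m loading matrix (lower = simpler)."""
    p, m = len(loadings), len(loadings[0])
    sq = [[v * v for v in row] for row in loadings]
    # Row complexity: products of squared loadings of one variable on different factors.
    row_term = sum(sq[i][j] * sq[i][l]
                   for i in range(p) for j in range(m) for l in range(m) if l != j)
    # Column complexity: products of squared loadings of different variables on one factor.
    col_term = sum(sq[i][j] * sq[k][j]
                   for j in range(m) for i in range(p) for k in range(p) if k != i)
    return (1 - kappa) * row_term + kappa * col_term


def cf_varimax(loadings):
    """CF-varimax uses kappa = 1/p, where p is the number of variables."""
    return cf_criterion(loadings, kappa=1 / len(loadings))


def geomin(loadings, eps=0.01):
    """Geomin: sum over variables of the geometric mean of (squared loading + eps)."""
    m = len(loadings[0])
    return sum(math.prod(v * v + eps for v in row) ** (1 / m) for row in loadings)


# Hypothetical 4-variable, 2-factor loading matrices.
simple = [[0.8, 0.0], [0.7, 0.0], [0.0, 0.6], [0.0, 0.9]]
complex_ = [[0.5, 0.5], [0.5, 0.5], [0.4, 0.4], [0.6, 0.6]]
print(cf_varimax(simple), cf_varimax(complex_))
print(geomin(simple), geomin(complex_))
```

Both criteria penalize variables that load on several factors, but they weight that complexity differently, which is consistent with the two rotations converging on a similar structure while differing in how sharply a weak factor is defined.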

Table 7a.

EFA of the combined HRB and WAIS/WAIS-R: CF-varimax-rotated factor loadings of the 5-factor solution

| Test measures | F1: Working memory/verbal attention | F2: Semantic knowledge | F3: Visuospatial cognition | F4: Perceptual-motor speed | F5: Perceptual attention |
|---|---|---|---|---|---|
| HRB | | | | | |
| Aphasia Screening | 0.302 | 0.299 | −0.052 | 0.280 | −0.221 |
| Speech-Sounds Perception | 0.336 | 0.126 | 0.034 | 0.227 | 0.267 |
| Category | 0.139 | 0.057 | 0.528 | 0.166 | 0.171 |
| TPT (memory) | 0.156 | −0.038 | 0.488 | 0.025 | 0.245 |
| TPT (time – total) | 0.020 | −0.058 | 0.543 | 0.271 | 0.269 |
| Trail Making Test—Part A | −0.027 | −0.004 | 0.059 | 0.744 | 0.106 |
| Trail Making Test—Part B | 0.166 | 0.034 | 0.089 | 0.678 | 0.069 |
| SPE (right side) | 0.142 | 0.074 | 0.167 | 0.140 | 0.443 |
| TFR (right hand) | 0.052 | 0.057 | 0.034 | 0.287 | 0.392 |
| Seashore Rhythm | 0.498 | −0.035 | −0.051 | 0.050 | 0.272 |
| WAIS/WAIS-R | | | | | |
| Digit Span | 0.883 | −0.033 | −0.001 | −0.028 | −0.017 |
| Arithmetic | 0.311 | 0.298 | 0.270 | 0.132 | −0.262 |
| Information | 0.051 | 0.669 | 0.221 | −0.005 | −0.262 |
| Vocabulary | 0.128 | 0.828 | −0.059 | 0.065 | −0.032 |
| Comprehension | 0.014 | 0.779 | −0.018 | −0.058 | 0.209 |
| Similarities | 0.019 | 0.561 | 0.234 | 0.125 | −0.033 |
| Picture Completion | 0.057 | 0.321 | 0.338 | 0.110 | 0.213 |
| Picture Arrangement | 0.069 | 0.236 | 0.367 | 0.147 | 0.226 |
| Block Design | 0.149 | 0.101 | 0.688 | 0.067 | 0.001 |
| Object Assembly | 0.046 | 0.026 | 0.708 | 0.101 | 0.013 |
| Digit-Symbol Coding | 0.030 | −0.049 | −0.028 | 0.741 | −0.036 |

Note: EFA = Exploratory Factor Analysis; HRB = Halstead-Reitan Battery; WAIS = Wechsler Adult Intelligence Scales; WAIS-R = Wechsler Adult Intelligence Scales-Revised. Light gray-shaded areas indicate loadings ≥0.24 (i.e., items with more than 5% overlapping variance with the factor); and dark gray-shaded areas indicate loadings ≥0.4 considered priorities for factor interpretation. For better interpretability, the oblique rotation method CF-varimax was used here instead of geomin, yielding a consistent factor structure overall but more robust loadings on the fifth factor.

Table 7b.

EFA of the combined HRB and WAIS/WAIS-R: correlations between factors

| | Working memory/verbal attention | Semantic knowledge | Visuospatial cognition | Perceptual-motor speed | Perceptual attention |
|---|---|---|---|---|---|
| Working memory/verbal attention | 1 | | | | |
| Semantic knowledge | 0.452 | 1 | | | |
| Visuospatial cognition | 0.435 | 0.419 | 1 | | |
| Perceptual-motor speed | 0.539 | 0.282 | 0.574 | 1 | |
| Perceptual attention | 0.175 | −0.045 | 0.314 | 0.381 | 1 |

Note: EFA = Exploratory Factor Analysis; HRB = Halstead-Reitan Battery; WAIS = Wechsler Adult Intelligence Scales; WAIS-R = Wechsler Adult Intelligence Scales-Revised. Effect sizes are gauged based on Cohen’s criteria (Cohen et al., 2003), that is, small (r² ≥ .02, i.e., r ≥ .14), medium (r² ≥ .13, i.e., r ≥ .36), and large (r² ≥ .26, i.e., r ≥ .51), and are color-coded using very light gray, light gray, and dark gray-shaded areas, respectively.

In the fourth EFA, on combined eHRB and WAIS tests, an 8-factor solution best explained the data, accounting for 71% of the variance. A 9-factor solution was considered based on slight improvement of most model fit indices, but was discarded due to worse BIC and poor factor interpretability (i.e., only one item loaded on the 8th and 9th factors of that solution). Based on geomin-rotated factor loadings (Table 8a), the factors were interpreted as representing ‘working memory,’ ‘fluency,’ ‘phonological decoding,’ ‘semantic knowledge,’ ‘verbal episodic memory,’ ‘visuospatial cognition,’ ‘perceptual-motor speed,’ and ‘perceptual attention.’ Correlations between factors are presented in Table 8b.

Table 8a.

EFA of the combined eHRB and WAIS/WAIS-R: geomin-rotated factor loadings of the 8-factor solution

| Test measures | F1: Working memory | F2: Fluency | F3: Phonological decoding | F4: Semantic knowledge | F5: Verbal episodic memory | F6: Visuospatial cognition | F7: Perceptual-motor speed | F8: Perceptual attention |
|---|---|---|---|---|---|---|---|---|
| HRB | | | | | | | | |
| Aphasia Screening | 0.196 | 0.007 | 0.336 | 0.322 | 0.080 | −0.059 | 0.082 | 0.013 |
| Speech-Sounds Perception | −0.005 | 0.072 | 0.333 | 0.024 | 0.095 | 0.212 | 0.076 | 0.416 |
| Category | 0.130 | 0.006 | −0.068 | 0.047 | 0.185 | 0.533 | 0.087 | 0.061 |
| TPT (memory) | 0.004 | 0.160 | −0.002 | −0.108 | 0.168 | 0.567 | −0.069 | 0.066 |
| TPT (time – total) | −0.010 | 0.045 | −0.038 | −0.058 | 0.097 | 0.606 | 0.247 | 0.042 |
| Trail Making Test—Part A | 0.111 | 0.049 | 0.021 | −0.007 | −0.013 | 0.136 | 0.607 | 0.054 |
| Trail Making Test—Part B | 0.277 | 0.040 | 0.026 | 0.046 | 0.078 | 0.094 | 0.524 | 0.098 |
| SPE (right side) | 0.125 | 0.039 | −0.142 | −0.028 | 0.122 | 0.213 | 0.151 | 0.328 |
| TFR (right hand) | −0.043 | −0.006 | −0.027 | 0.051 | −0.060 | 0.150 | 0.378 | 0.294 |
| Seashore Rhythm | 0.293 | 0.072 | 0.051 | −0.072 | −0.010 | 0.051 | 0.012 | 0.414 |
| eHRB expansion | | | | | | | | |
| PASAT | 0.497 | 0.093 | −0.017 | 0.030 | 0.142 | −0.001 | 0.342 | −0.011 |
| Digit Vigilance (Error) | 0.323 | −0.224 | 0.250 | 0.012 | 0.232 | 0.080 | −0.020 | 0.050 |
| Thurstone Word Fluency | 0.011 | 0.686 | 0.168 | 0.015 | 0.045 | 0.087 | 0.054 | 0.044 |
| Letter Fluency (FAS) | 0.057 | 0.802 | 0.041 | 0.040 | −0.042 | −0.035 | −0.025 | 0.084 |
| Category Fluency (Animal) | 0.002 | 0.470 | −0.107 | 0.190 | 0.103 | 0.054 | 0.139 | −0.056 |
| PIAT reading recognition | 0.042 | 0.044 | 0.546 | 0.467 | −0.175 | −0.007 | −0.004 | −0.025 |
| BNT | −0.125 | 0.062 | 0.161 | 0.632 | 0.025 | 0.206 | −0.005 | 0.064 |
| Story Memory (Learning) | 0.078 | 0.043 | <0.001 | 0.351 | 0.564 | 0.001 | 0.029 | −0.020 |
| CVLT (Trials 1–5) | −0.023 | 0.165 | 0.079 | 0.054 | 0.565 | 0.047 | 0.142 | 0.001 |
| Figure Memory (Learning) | 0.039 | −0.031 | 0.062 | −0.015 | 0.203 | 0.728 | −0.009 | 0.001 |
| Digit Vigilance (Time) | −0.034 | 0.074 | −0.006 | −0.047 | −0.162 | −0.033 | 0.779 | −0.007 |
| Grooved Pegboard (dom. hand) | −0.131 | −0.051 | 0.017 | 0.061 | 0.078 | 0.283 | 0.464 | 0.177 |
| WAIS/WAIS-R | | | | | | | | |
| Digit Span | 0.566 | 0.048 | 0.127 | 0.050 | −0.027 | −0.015 | −0.028 | 0.354 |
| Arithmetic | 0.496 | −0.036 | −0.003 | 0.396 | 0.014 | 0.093 | 0.035 | −0.096 |
| Information | 0.085 | 0.052 | 0.020 | 0.744 | 0.007 | 0.102 | −0.134 | −0.176 |
| Vocabulary | 0.019 | −0.003 | 0.134 | 0.856 | −0.003 | −0.088 | −0.014 | 0.086 |
| Comprehension | −0.071 | −0.006 | −0.061 | 0.753 | 0.028 | −0.023 | −0.019 | 0.177 |
| Similarities | −0.009 | 0.030 | 0.016 | 0.595 | 0.052 | 0.210 | 0.032 | −0.048 |
| Picture Completion | 0.034 | −0.031 | −0.088 | 0.377 | −0.032 | 0.373 | 0.123 | 0.148 |
| Picture Arrangement | 0.053 | 0.014 | −0.081 | 0.204 | 0.201 | 0.377 | 0.037 | 0.129 |
| Block Design | 0.150 | −0.010 | 0.010 | 0.200 | −0.064 | 0.731 | 0.012 | −0.055 |
| Object Assembly | −0.032 | 0.029 | 0.126 | 0.046 | −0.059 | 0.873 | 0.004 | −0.140 |
| Digit-Symbol Coding | 0.107 | −0.018 | 0.257 | −0.029 | 0.022 | −0.013 | 0.736 | −0.112 |

Note: EFA = Exploratory Factor Analysis; HRB = Halstead-Reitan Battery; eHRB = expanded Halstead-Reitan Battery; WAIS = Wechsler Adult Intelligence Scales; WAIS-R = Wechsler Adult Intelligence Scales-Revised. Light gray-shaded areas indicate loadings ≥0.24 (i.e., items with more than 5% overlapping variance with the factor); and dark gray-shaded areas indicate loadings ≥0.4 considered priorities for factor interpretation.

Table 8b.

EFA of the combined eHRB and WAIS/WAIS-R: correlations between factors

| | Working memory | Fluency | Phonological decoding | Semantic knowledge | Verbal episodic memory | Visuospatial cognition | Perceptual-motor speed | Perceptual attention |
|---|---|---|---|---|---|---|---|---|
| Working memory | 1 | | | | | | | |
| Fluency | 0.385 | 1 | | | | | | |
| Phonological decoding | 0.326 | 0.348 | 1 | | | | | |
| Semantic knowledge | 0.434 | 0.531 | 0.313 | 1 | | | | |
| Verbal episodic memory | 0.377 | 0.366 | 0.096 | 0.380 | 1 | | | |
| Visuospatial cognition | 0.427 | 0.420 | 0.048 | 0.454 | 0.558 | 1 | | |
| Perceptual-motor speed | 0.287 | 0.51 | 0.085 | 0.228 | 0.536 | 0.594 | 1 | |
| Perceptual attention | 0.079 | 0.401 | 0.022 | 0.174 | 0.340 | 0.425 | 0.412 | 1 |

Note: EFA = Exploratory Factor Analysis; HRB = Halstead-Reitan Battery; eHRB = expanded Halstead-Reitan Battery; WAIS = Wechsler Adult Intelligence Scales; WAIS-R = Wechsler Adult Intelligence Scales-Revised. Effect sizes are gauged based on Cohen’s criteria (Cohen et al., 2003), that is, small (r² ≥ .02, i.e., r ≥ .14), medium (r² ≥ .13, i.e., r ≥ .36), and large (r² ≥ .26, i.e., r ≥ .51), and are color-coded using very light gray, light gray, and dark gray-shaded areas, respectively.

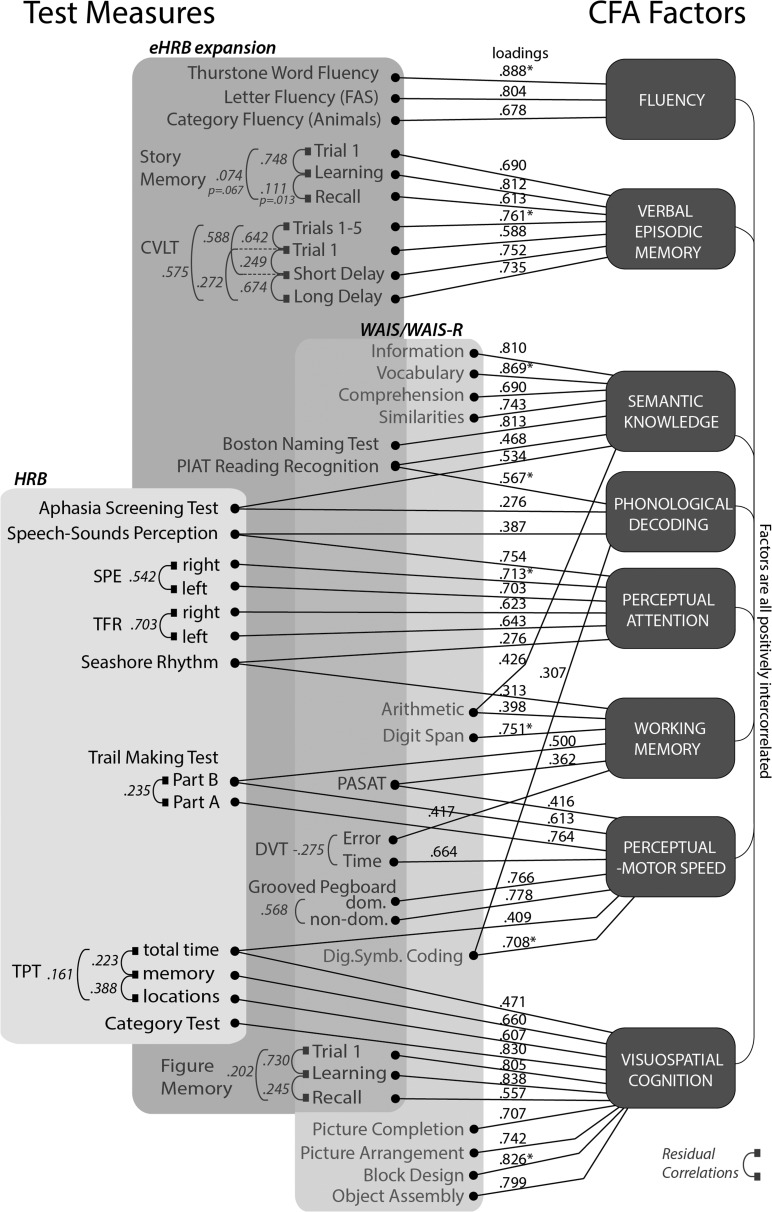

Confirmatory Factor Analysis

The final CFA model, presented in Fig. 1, fit the data well (χ²(845) = 2,105.4, CFI = .951, AIC = 153,274, BIC = 154,199, adjBIC = 153,598, RMSEA = 0.039, SRMR = 0.058), with most factors comprising at least three loadings deemed very good to excellent, supporting adequate stability. The two exceptions were ‘working memory’ (1 excellent, 1 good, and 5 poor loadings) and ‘phonological decoding’ (1 good and 3 poor loadings). These factors had more cross-loading items, that is, items whose explained variance was divided across two factors, yielding smaller loadings on both. Alternative CFA models that did not permit cross-loadings were tested, but they required somewhat arbitrary modeling decisions and produced worse data fit and higher correlations between factors. Factor variances and between-factor correlations are presented in Table 9. Residual correlations between same-test measures (Fig. 1) were mostly positive and significant, with large effect sizes. Exceptions were the small residual correlations between Trail Making Test-Part A and Part B, between TPT time (total) and memory/location, and between Digit Vigilance-Time and -Error, supporting the assumption that these same-test measures would not yield instrumental factors in the EFA. Notably, Digit Vigilance Test-Time and -Error had a negative residual correlation, suggesting a speed-accuracy trade-off on that test.
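RMSEA, one of the fit indices reported above, is a function of χ², its degrees of freedom, and sample size N. A minimal Python sketch of the usual point estimate follows. Some programs use N rather than N − 1 in the denominator, and the inputs below are toy values rather than this study’s data.

```python
import math


def rmsea(chi2, df, n):
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Toy values, not taken from this study.
print(rmsea(300.0, 100, 101))  # chi-square exceeds df: positive misfit
print(rmsea(90.0, 100, 500))   # chi-square below df: truncated to 0
```

Because the χ² excess over df is divided by N, a large χ² in a large normative sample can still correspond to a small RMSEA, which is why χ² and RMSEA are read together.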

Fig. 1.

Standardized loadings and residual correlations resulting from the Confirmatory Factor Analysis (CFA) on the combined tests of the Halstead-Reitan Battery (HRB), the Wechsler Adult Intelligence Scales (WAIS/WAIS-R), and the expansion tests of the expanded Halstead-Reitan Battery (eHRB). The CFA model was identified by fixing one loading per factor to 1 (indicated by an asterisk), fixing the factor means to 0, and freely estimating the factor variances. For better interpretability, standardized loadings and residual correlations are presented instead of unstandardized model parameters. Residual correlations between same-test measures were all significant at p < .001 unless otherwise specified.
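Because each factor was scaled by fixing one loading to 1, standardized values are obtained by rescaling with the factor and indicator variances. The Python sketch below shows the standard conversions for a single loading and for a residual covariance. The factor variance of 5.72 is the Table 9 value for ‘perceptual attention’; the indicator variance of 9 (a scaled-score SD of 3) and the residual values are hypothetical.

```python
import math


def standardized_loading(loading, factor_var, indicator_var):
    """Rescale an unstandardized loading: lambda * SD(factor) / SD(indicator)."""
    return loading * math.sqrt(factor_var) / math.sqrt(indicator_var)


def residual_correlation(resid_cov, resid_var_1, resid_var_2):
    """Convert a residual covariance between two indicators into a correlation."""
    return resid_cov / math.sqrt(resid_var_1 * resid_var_2)


# Marker indicator (loading fixed to 1) of a factor with variance 5.72 (Table 9).
# The indicator variance of 9.0 is a hypothetical value (SD = 3 scaled-score units).
print(round(standardized_loading(1.0, 5.72, 9.0), 3))
# Hypothetical residual covariance and residual variances.
print(residual_correlation(0.5, 1.0, 4.0))
```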

Table 9.

CFA of the combined eHRB and WAIS/WAIS-R: factor variance and factor correlations

| | Working memory | Fluency | Phonological decoding | Semantic knowledge | Verbal episodic memory | Visuospatial cognition | Perceptual-motor speed | Perceptual attention |
|---|---|---|---|---|---|---|---|---|
| Factor variances (unit = squared scaled scores) | 4.25 | 7.45 | 3.01 | 5.32 | 5.21 | 6.09 | 4.57 | 5.72 |
| Factor correlations | | | | | | | | |
| Working memory | 1 | | | | | | | |
| Fluency | 0.658 | 1 | | | | | | |
| Phonological decoding | 0.340 | 0.287 | 1 | | | | | |
| Semantic knowledge | 0.602 | 0.683 | 0.244 | 1 | | | | |
| Verbal episodic memory | 0.706 | 0.728 | 0.081 | 0.753 | 1 | | | |
| Visuospatial cognition | 0.638 | 0.627 | −0.129 | 0.664 | 0.821 | 1 | | |
| Perceptual-motor speed | 0.498 | 0.629 | −0.130 | 0.404 | 0.706 | 0.802 | 1 | |
| Perceptual attention | 0.555 | 0.638 | −0.261 | 0.448 | 0.692 | 0.828 | 0.860 | 1 |

Notes: CFA = Confirmatory Factor Analysis; HRB = Halstead-Reitan Battery; eHRB = expanded Halstead-Reitan Battery; WAIS = Wechsler Adult Intelligence Scales; WAIS-R = Wechsler Adult Intelligence Scales-Revised. Effect sizes are gauged based on Cohen’s criteria (Cohen et al., 2003), that is, small (r² ≥ .02, i.e., r ≥ .14), medium (r² ≥ .13, i.e., r ≥ .36), and large (r² ≥ .26, i.e., r ≥ .51), and are color-coded using very light gray, light gray, and dark gray-shaded areas, respectively.

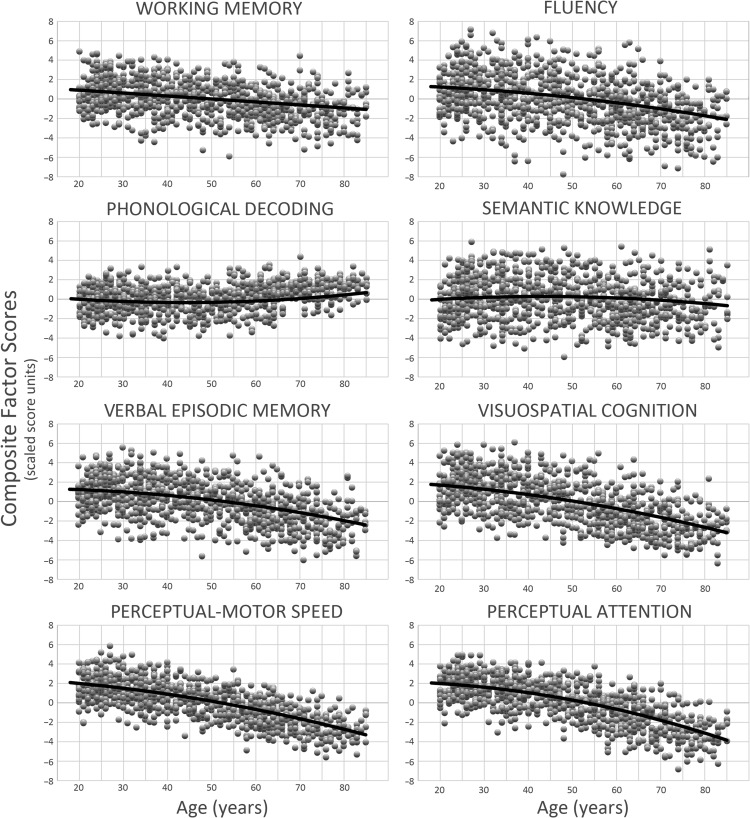

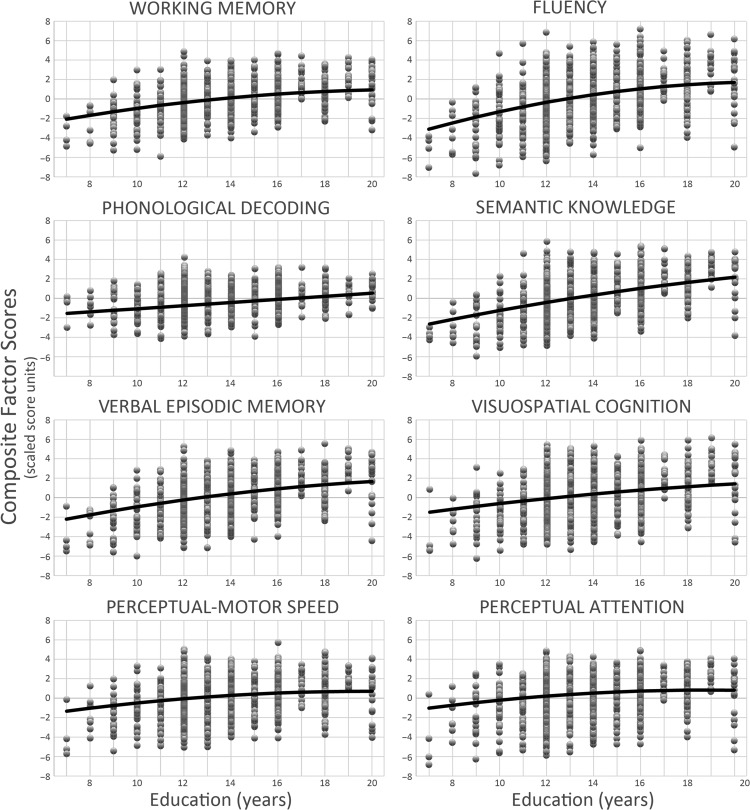

Hierarchical Multiple Linear Regression

Results from the hierarchical multiple linear regressions are presented in Table 10 and Figs 2 and 3. Age, education, and ethnicity each contributed significant incremental portions of variance, with effect sizes varying across cognitive domains (see R² change in Table 10). Quadratic terms for age and education were significant in most models, generally indicating an accelerating decline in performance with age, and an improvement in performance with education that was roughly linear but tapered at high educational levels. Most interaction terms were nonsignificant or accounted for less than 1% of variance and were removed from the models. The only nonnegligible interaction was that of ethnicity with age in ‘perceptual attention,’ characterized by a slightly steeper decrease in performance with age in African American participants (R² = 0.622, F = 505.6, p < .001) than in Caucasian participants (R² = 0.522, F = 197.2, p < .001); even so, this term added very little to the model (R² change = 0.018). After accounting for age, education, and ethnicity, the incremental contribution of gender was minimal to negligible in all factors except ‘phonological decoding,’ in which women outperformed men with a small effect size (R² change = 2.3%). Gender differences were also significant in ‘visuospatial cognition’ (men > women), ‘perceptual-motor speed’ (women > men), and ‘fluency’ (women > men), but were minimal in effect size (R² change = 0.3% to 0.4%).
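The R² change and F change entries of Table 10 follow the standard incremental F test for nested ordinary least squares models. The Python sketch below computes them from the R² of two successive models. The sample size and predictor counts are toy values, not those of this study; a p-value could then be taken from the F distribution (for example with `scipy.stats.f.sf`).

```python
def r2_change_test(r2_reduced, r2_full, n, k_reduced, k_full):
    """Incremental-variance F test for nested OLS models.

    Returns (R2 change, F change, df1, df2), where k_reduced and k_full count
    predictors (excluding the intercept) and n is the number of participants.
    """
    delta = r2_full - r2_reduced
    df1 = k_full - k_reduced
    df2 = n - k_full - 1
    f_change = (delta / df1) / ((1.0 - r2_full) / df2)
    return delta, f_change, df1, df2


# Toy numbers: one predictor added to a two-predictor model, n = 103.
print(r2_change_test(0.20, 0.30, n=103, k_reduced=2, k_full=3))
```

The R² change itself needs no sample size: for ‘working memory,’ adding education raises R² from 0.148 to 0.284, and 0.284 − 0.148 = 0.136 matches the corresponding entry in Table 10.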

Table 10.

Hierarchical multiple linear regression: incremental effects of age, education, ethnicity, and gender on the composite factor scores derived from the CFA

| | | Working memory | Fluency | Phonological decoding | Semantic knowledge | Verbal episodic memory | Visuospatial cognition | Perceptual-motor speed | Perceptual attention |
|---|---|---|---|---|---|---|---|---|---|
| Regression model including age | | | | | | | | | |
| Model fit | R² | 0.148 | 0.175 | 0.039 | 0.036 | 0.282 | 0.396 | 0.545 | 0.550 |
| | F | 85.0 | 104.2 | 15.5 | 18.0 | 192.6 | 321.6 | 586.9 | 599.4 |
| | p | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 |
| Regression model including age and education | | | | | | | | | |
| Model fit | R² | 0.284 | 0.335 | 0.160 | 0.280 | 0.448 | 0.484 | 0.597 | 0.589 |
| | F | 96.8 | 123.1 | 48.4 | 126.7 | 198.2 | 305.6 | 362.3 | 350.3 |
| | p | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 |
| Model comparison | R² change | 0.136 | 0.160 | 0.121 | 0.244 | 0.166 | 0.087 | 0.052 | 0.039 |
| | F change | 92.6 | 117.4 | 109.9 | 331.9 | 146.4 | 165.5 | 63.2 | 46.0 |
| | p change | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 |
| Regression model including age, education, and ethnicity | | | | | | | | | |
| Model fit | R² | 0.396 | 0.413 | 0.205 | 0.463 | 0.586 | 0.634 | 0.656 | 0.673 |
| | F | 128.1 | 137.1 | 48.9 | 210.4 | 276.2 | 422.8 | 371.6 | 334.5 |
| | p | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 |
| Model comparison | R² change | 0.113 | 0.077 | 0.044 | 0.183 | 0.138 | 0.150 | 0.058 | 0.084 |
| | F change | 182.6 | 128.7 | 42.4 | 332.5 | 325.3 | 400.3 | 165.2 | 125.1 |
| | p change | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 |
| Regression model including age, education, ethnicity, and gender | | | | | | | | | |
| Model fit | R² | 0.396 | 0.416 | 0.228 | 0.465 | 0.587 | 0.637 | 0.660 | 0.673 |
| | F | 128.3 | 115.9 | 44.8 | 169.5 | 231.2 | 342.0 | 315.3 | 286.5 |
| | p | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 | <.001 |
| Model comparison | R² change | <0.001 | 0.004 | 0.023 | 0.002 | 0.001 | 0.003 | 0.004 | <0.001 |
| | F change | 0.472 | 6.1 | 22.9 | 3.8 | 3.2 | 7.6 | 12.3 | 0.4 |
| | p change | .492 | .014 | <.001 | .053 | .076 | .006 | <.001 | .847 |
| Regression coefficients | | | | | | | | | |
| Age | b_age² | ~ | −4.33 × 10⁻⁴ | 5.94 × 10⁻⁴ | −5.51 × 10⁻⁴ | −5.86 × 10⁻⁴ | −6.02 × 10⁻⁴ | −5.81 × 10⁻⁴ | −9.49 × 10⁻⁴ |
| | b_age | −3.03 × 10⁻² | −5.82 × 10⁻³ | −5.18 × 10⁻² | 4.78 × 10⁻² | 5.61 × 10⁻³ | −1.21 × 10⁻² | −2.03 × 10⁻² | 3.13 × 10⁻² |
| Education | b_edu² | −1.39 × 10⁻² | −2.29 × 10⁻² | ~ | ~ | −1.24 × 10⁻² | ~ | −1.25 × 10⁻² | −1.22 × 10⁻² |
| | b_edu | 6.09 × 10⁻¹ | 9.91 × 10⁻¹ | 1.61 × 10⁻¹ | 3.59 × 10⁻¹ | 6.35 × 10⁻¹ | 2.15 × 10⁻¹ | 4.94 × 10⁻¹ | 4.71 × 10⁻¹ |
| Ethnicity | b_ethn | −1.28 | −1.56 | −0.71 | −1.92 | −1.72 | −1.91 | −1.10 | 0.36 |
| | b_ethn×age | ~ | ~ | ~ | ~ | ~ | ~ | ~ | −3.41 × 10⁻² |
| Gender | b_sex | 6.33 × 10⁻² | 3.19 × 10⁻¹ | 4.36 × 10⁻¹ | −2.04 × 10⁻¹ | 1.62 × 10⁻¹ | −2.64 × 10⁻¹ | 2.76 × 10⁻¹ | 1.63 × 10⁻² |

Notes: CFA = Confirmatory Factor Analysis. R² represents the proportion of variance accounted for by each regression model; F and p represent the F-ratio and p-value indicative of model fit. The label ‘change’ refers to measures of improvement of one model compared with the previous model in the hierarchy. b_age, b_age², b_edu, b_edu², b_ethn, and b_sex represent the regression coefficients corresponding to the effects of age (linear and quadratic terms expressed in number of standard deviations per year and year-squared), education (linear and quadratic terms expressed in scaled score units per year and year-squared), and ethnicity and gender (expressed in scaled score units using dummy coding 0 for Caucasian/men and 1 for African American/women). b_edu×age, b_ethn×age, and b_ethn×edu×age are regression coefficients for the interaction of age with education and age with ethnicity. All other interaction terms were nonsignificant or contributed less than 1% of variance and were removed from the models. Effect sizes are gauged based on Cohen’s criteria (Cohen et al., 2003), that is, small (R² ≥ .02), medium (R² ≥ .13), and large (R² ≥ .26), and are color-coded using gray-shaded areas. The symbol ‘~’ represents quadratic or interaction coefficients that were removed from the models (effects that were nonsignificant or contributed < 1% of variance). Linear regression coefficients that were nonsignificant (p ≥ .05) are italicized.
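Putting the notes together, the fullest model for a composite factor score y can be sketched as below. This rests on stated assumptions: the intercept b_0 is not reported here, terms marked ‘~’ drop out for a given factor, the interaction is taken to be the product of the 0/1 ethnicity dummy and age in years, and whether age and education were centered is not stated.

$$
\begin{aligned}
\hat{y} &= b_0 + b_{age}\,\mathrm{age} + b_{age^2}\,\mathrm{age}^2 + b_{edu}\,\mathrm{edu} + b_{edu^2}\,\mathrm{edu}^2 \\
&\quad + b_{ethn}\,\mathrm{ethn} + b_{ethn\times age}\,(\mathrm{ethn}\times\mathrm{age}) + b_{sex}\,\mathrm{sex}, \\
\frac{\partial \hat{y}}{\partial\,\mathrm{age}} &= b_{age} + 2\,b_{age^2}\,\mathrm{age} + b_{ethn\times age}\,\mathrm{ethn}.
\end{aligned}
$$

Under these assumptions, the age slopes of the two groups differ by exactly b_ethn×age at any given age, whatever the centering of age. For ‘perceptual attention,’ b_ethn×age = −3.41 × 10⁻², so over a 40-year span the predicted decline is larger for African American than for Caucasian participants by about 0.0341 × 40 ≈ 1.36 units of the composite score.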

Fig. 2.

Effect of age (in years) on the ‘working memory,’ ‘fluency,’ ‘phonological decoding,’ ‘semantic knowledge,’ ‘verbal episodic memory,’ ‘visuospatial cognition,’ ‘perceptual-motor speed,’ and ‘perceptual attention’ CFA composite factor scores (in scaled score units). CFA = Confirmatory Factor Analysis.

Fig. 3.

Effect of education (in years) on the ‘working memory,’ ‘fluency,’ ‘phonological decoding,’ ‘semantic knowledge,’ ‘verbal episodic memory,’ ‘visuospatial cognition,’ ‘perceptual-motor speed,’ and ‘perceptual attention’ CFA composite factor scores (in scaled score units). CFA = Confirmatory Factor Analysis.

Discussion

The neurocognitive domains underlying performance during comprehensive neuropsychological assessment were characterized using exploratory and confirmatory factor analysis of the eHRB normative dataset. In particular, the incremental contributions of the HRB and eHRB, with and without the Wechsler Intelligence Scales, were evaluated. The HRB alone had a 4-factor structure, covering the neurocognitive domains ‘language/verbal attention,’ ‘visuospatial cognition,’ ‘perceptual-motor speed,’ and ‘perceptual attention.’ These results are generally consistent with a synthesis of previous factor analytic studies suggesting a complex factor structure for the HRB with four to possibly five factors (Ross et al., 2013). The eHRB expansion contributed three additional factors, by covering two new cognitive domains (‘fluency’ and ‘episodic/semantic memory’) and by dividing the ‘language/verbal attention’ factor into two more specific constructs (‘working memory/learning’ and ‘language’). The inclusion of the Wechsler measures contributed one additional factor, predominantly defined by the ‘semantic knowledge’ construct. This finding is compatible with the importance of the Wechsler scales, relative to the HRB measures alone, in measuring educational attainment and thus premorbid functioning in clinical settings (Heaton et al., 1996). Beyond adding a ‘semantic knowledge’ factor, the Wechsler scales also restructured the other eHRB verbal factors into more specific and stable constructs: ‘working memory,’ ‘verbal episodic memory,’ and ‘phonological decoding.’

Overall, six of the factors were consistent and stable across test batteries: ‘visuospatial cognition,’ ‘perceptual-motor speed,’ ‘perceptual attention,’ ‘fluency,’ ‘verbal episodic memory,’ and ‘semantic knowledge.’ The other factors, involving verbal attention, working memory, and basic language skills, were less stable and varied in emphasis depending on the tests included in the analyses. This instability can largely be attributed to the complexity (or non-purity) of the tests on which these factors relied. For example, within the eHRB, tests that recruited working memory also involved perceptual speed (e.g., PASAT, Trail Making Test-Part B), and tests recruiting phonological decoding also involved semantic knowledge (i.e., PIAT Reading Recognition, Aphasia Screening) or perceptual attention (i.e., Speech-Sounds Perception). Because factor analysis requires at least two similar items to extract a factor, these underlying constructs could emerge most clearly in the larger battery, once enough of these complex tests were included.

Neurocognitive Domains

Perceptual Attention

A ‘perceptual attention’ factor was consistently extracted, with dominant loadings from the Speech-Sounds Perception Test and the Seashore Rhythm Test, two tests included in the original HRB to measure alertness and attention (Reitan & Wolfson, 2004). Secondary loadings from the Reitan-Kløve Sensory-Perceptual Examination (SPE) and the Tactile Form Recognition Test (TFR) also indicated a sensory-perceptual component to this factor. Because cognitively healthy individuals are not typically expected to make errors on the SPE and TFR (Spreen & Strauss, 1998), performance on those tests was also likely to reflect attention abilities. Factors representing attention and concentration have been reported before, but usually as less pure attention factors encompassing constructs such as psychomotor speed (e.g., Grant et al., 1978; Ryan et al., 1983), verbal and spatial working memory (e.g., Bornstein, 1983; Leonberger et al., 1991, 1992), or general verbal intelligence (e.g., Leonberger et al., 1991). The only study that found a similar perceptual attention factor was that of Fowler and colleagues (1988), likely because of their use of oblique rotation.

Working Memory

Working memory likely underlies performance on the factor characterized by loadings from the PASAT, Trail Making Test-Part B, WAIS Digit Span, WAIS Arithmetic, Digit Vigilance-Error, and the Seashore Rhythm Test. Primary involvement of working memory processes has been demonstrated in performance on the PASAT (Tombaugh, 2006), Trail Making Test-Part B (Sánchez-Cubillo et al., 2009), and the Digit Span and Arithmetic subtests (cf. the working memory index in a newer version of the WAIS; Wechsler, 1997). Working memory is also likely involved in Digit Vigilance, which requires remembering which digit to find and monitoring locations already searched (Oh & Kim, 2004), and in the Seashore Rhythm Test, which requires holding in mind and comparing two rhythmic beats (Seashore & Bennett, 1946). In previous factor analyses including the HRB, tests involving working memory have generally been absorbed into a global verbal comprehension or verbal intelligence factor (e.g., Fowler et al., 1988; Grant et al., 1978). Expanding the HRB and adding the Wechsler scales made it possible to separate working memory from a more general language/verbal attention construct.

Verbal Episodic Memory

Consistent with previous accounts (e.g., Heilbronner et al., 1989; Leonberger et al., 1992), the inclusion of verbal memory tests led to the extraction of a separate verbal memory factor. The structure of that factor, however, varied subtly with test selection. In the EFA on the eHRB tests alone (Table 6a), the factor was characterized by loadings from the Story Memory Test and CVLT, but also from the Category Fluency Test and BNT, thus representing abilities involving both episodic and semantic memory (Saumier & Chertkow, 2002; Tulving, 1972). In the EFA on combined eHRB and WAIS tests (Table 8a), the verbal memory factor shifted toward a more exclusively episodic memory construct, with substantial loadings arising only from the CVLT and Story Memory Test, at equivalent strength. Interestingly, a very small loading from the Figure Memory Test also emerged on that factor, possibly suggesting cross-modal episodic memory mechanisms.

Semantic Knowledge

The partial loadings from the BNT, Category Fluency Test, and Story Memory Test that had been associated with semantic memory in the EFA on eHRB tests alone were transferred to a new ‘semantic knowledge’ factor in the EFA on combined eHRB and WAIS tests. This new factor, primarily characterized by the WAIS Vocabulary, Comprehension, Information, and Similarities subtests, has consistently emerged in factor analytic studies including the WAIS/WAIS-R (e.g., Kaufman & Lichtenberger, 2006; Silverstein, 1982). These tests have been noted to reflect the amount of knowledge acquired through education and overlearned, culturally driven experiences, and have been shown to be resistant to aging (e.g., Heaton, Ryan, Grant, & Matthews, 1996). This concept has also been termed ‘crystallized intelligence’ (Cattell, 1963; Horn & Cattell, 1966).

Phonological Decoding

An additional language factor, distinct from ‘semantic knowledge’ and ‘working memory,’ emerged in the EFA on combined eHRB and WAIS tests, with a dominant loading from PIAT Reading Recognition and secondary loadings, in decreasing order of magnitude, from the Aphasia Screening Test, Speech-Sounds Perception Test, WAIS Digit-Symbol Coding, and Digit Vigilance-Error. The common cognitive construct underlying those tasks was interpreted as grapheme-phoneme processing, that is, the ability to represent written symbols and speech sounds and to match them to one another. This ability is central to sounding out words when reading, and a deficit in it is a hallmark of developmental dyslexia (e.g., Pennington, Van Orden, Smith, Green, & Haith, 1990; Rack, Snowling, & Olson, 1992). Consistent with literature suggesting that the occurrence of dyslexia is not related to general cognitive ability or IQ (Lyon, Shaywitz, & Shaywitz, 2003; Siegel, 1988), the ‘phonological decoding’ factor had only small to negligible correlations with the other factors. Further, the loadings of the PIAT Reading Recognition and Aphasia Screening tests on both the ‘phonological decoding’ and ‘semantic knowledge’ factors were consistent with leading dual-route theories of reading, which posit (1) a phonological, or sounding-out, route that constructs phonological representations from the written parts of words; and (2) a lexical route that recognizes entire known words directly by sight, a mechanism that benefits from reading experience and acquired lexical knowledge (Coltheart, Curtis, Atkins, & Haller, 1993; Marshall & Newcombe, 1973; Pritchard, Coltheart, Palethorpe, & Castles, 2012).

Visuospatial Cognition

A general ‘visuospatial cognition’ factor was consistently found across the HRB, eHRB, and WAIS batteries, encompassing visuospatial construction, reasoning, memory, and abstraction, as reflected by loadings from the Category Test, TPT, Figure Memory Test, and WAIS Block Design, Object Assembly, Picture Completion, and Picture Arrangement subtests.

The single loading of the Category Test on the ‘visuospatial cognition’ factor may seem surprising for a test thought to recruit abstraction, problem solving, logical analysis, and organized planning (Reitan & Wolfson, 2004), but it has been consistently reported in previous studies (e.g., Fowler et al., 1988; Lansdell & Donnelly, 1977; Leonberger et al., 1992; Moehle et al., 1990). Dual loadings have also been reported for the Category Test, but these have usually involved a visuospatial cognition factor together with a general intelligence factor (e.g., Bornstein, 1983; Boyle, 1988; Goldstein & Shelly, 1972; Grant et al., 1978). The absence of a separate abstraction factor for the Category Test may be attributable to the absence of another test of nonverbal concept formation in the battery, as factor analysis requires two or more tests sharing an underlying construct to extract a common factor.
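The identification argument in the preceding paragraph can be illustrated with a model-implied correlation matrix. The Python sketch below uses hypothetical loadings (not estimates from this study) and, for simplicity, orthogonal factors; all names are illustrative. A factor defined by a single test contributes only to that test's diagonal entry, where it is indistinguishable from unique variance, so a two-factor model and a one-factor model imply exactly the same correlations.

```python
import numpy as np

# Hypothetical standardized loadings (NOT estimates from this study):
# four tests, two orthogonal factors. Tests 0, 1, and 3 load only on a
# shared factor F1; test 2 also loads on F2, of which it is the ONLY indicator.
loadings_full = np.array([
    [0.70, 0.00],
    [0.60, 0.00],
    [0.50, 0.60],
    [0.65, 0.00],
])

def implied_correlation(loadings):
    """Model-implied correlation matrix R = L L' + Psi for orthogonal factors,
    with unique variances Psi chosen so that every diagonal entry equals 1."""
    common = loadings @ loadings.T
    uniqueness = 1.0 - np.diag(common)
    return common + np.diag(uniqueness)

# Two-factor model versus a one-factor model with F2 dropped:
# F2's variance simply moves into test 2's unique variance.
R_full = implied_correlation(loadings_full)
R_one_factor = implied_correlation(loadings_full[:, :1])

# Identical matrices: correlational data cannot distinguish the two models,
# so a single-indicator factor cannot be extracted as a common factor.
print(np.allclose(R_full, R_one_factor))  # True
```

Adding a second hypothetical indicator of F2 (a nonzero entry in another row of the second column) would create an off-diagonal covariance that the one-factor model cannot reproduce, which is the condition the text describes.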

Measures of visuospatial memory (i.e., TPT-memory and Figure Memory Test) also failed to form a separate memory factor, a finding consistent with most studies that combined WMS tests with HRB and WAIS tests (e.g., Heilbronner et al., 1989; Leonberger et al., 1992). Separate visuospatial memory factors have been reported in some factor analyses including HRB tests (e.g., Leonberger et al., 1991; Newby et al., 1983; Russell, 1982), but closer inspection suggested that these were instrumental factors (e.g., Larrabee, 2003). Compatible with the view that figural stimuli are more difficult to encode than verbal stimuli (e.g., Brown, Patt, Sawyer, & Thomas, 2016), these findings may indicate that, in cognitively healthy individuals, the core challenge of visuospatial memory tasks lies in encoding the visuospatial properties of stimuli rather than in retaining them. The number of visuospatial memory tests included in the analysis may also have been insufficient to form a separate factor.

Fluency

A ‘fluency’ factor emerged systematically from the three eHRB fluency tests. Given the very similar task demands across the three tests, this factor is likely to have an instrumental component representative of speeded word-retrieval tasks. Interestingly, though, and consistent with the well-documented brain-based distinction between phonemic and semantic fluency (e.g., Baldo, Schwartz, Wilkins, & Dronkers, 2006; Gourovitch et al., 2000; Henry & Crawford, 2004), loadings were stronger for the two phonemic fluency tests (Letter and Thurstone Word Fluency, requiring oral and written fluency, respectively) and weaker for the semantic fluency test (Animal Fluency, requiring oral fluency). This distinction suggests an underlying executive function construct beyond task-bound correlations.

Perceptual-Motor Speed

A nonverbal speed factor was consistently found across all test selections, characterized by strong loadings from the Trail Making Test-Parts A and B (A stronger than B), Digit Vigilance-Time, WAIS Digit-Symbol Coding, and Grooved Pegboard Test, and by secondary loadings from TPT-time, PASAT, and TFR. A ‘perceptual-motor speed’ factor has rarely emerged in previous factor analyses including HRB tests; these tests have usually loaded on a visuospatial cognition factor (e.g., Fowler et al., 1988; Goldstein & Shelly, 1972; Grant et al., 1978; Moehle et al., 1990) or on both a visuospatial and an attentional factor (e.g., Leonberger et al., 1992; Swiercinsky, 1979). Extraction of this factor in the present study was likely enabled by the use of oblique rotation and of a large sample composed of cognitively healthy participants.
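The role of oblique rotation can be sketched as follows. This section does not specify the rotation method, so the Python example below assumes promax, one common oblique method, implemented from scratch with NumPy (raw varimax without Kaiser normalization, followed by a least-squares fit to a powered target). The loadings and the factor correlation of 0.5 are hypothetical. Unlike an orthogonal rotation, which forces factor correlations to zero, the oblique solution estimates them, so correlated but distinct factors can keep a simple structure.

```python
import numpy as np

def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation (raw loadings, no Kaiser normalization)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Gradient matrix of the varimax criterion at the current rotation
        grad = loadings.T @ (rotated**3 - rotated @ np.diag(np.sum(rotated**2, axis=0)) / p)
        # Closest orthogonal matrix to the gradient (polar factor via SVD)
        u, s, vt = np.linalg.svd(grad)
        rotation = u @ vt
        new_criterion = np.sum(s)
        if criterion > 0 and new_criterion < criterion * (1 + tol):
            break
        criterion = new_criterion
    return loadings @ rotation

def promax(loadings, power=4):
    """Promax: varimax, then a least-squares transformation toward a powered
    target. Returns (pattern loadings, factor correlation matrix)."""
    A = varimax(loadings)
    target = A * np.abs(A) ** (power - 1)             # sign-preserving power target
    U, *_ = np.linalg.lstsq(A, target, rcond=None)    # A @ U approximates target
    U = U @ np.diag(np.sqrt(np.diag(np.linalg.inv(U.T @ U))))  # unit factor variances
    pattern = A @ U
    phi = np.linalg.inv(U.T @ U)                      # factor correlations
    return pattern, phi

# Hypothetical simple-structure pattern with factors correlated at 0.5
true_pattern = np.array([[0.8, 0], [0.7, 0], [0.6, 0], [0, 0.8], [0, 0.7], [0, 0.6]])
true_phi = np.array([[1.0, 0.5], [0.5, 1.0]])
# An orthogonal starting solution with the same common variance:
# L0 = Lambda T, where Phi = T T' (Cholesky factor)
unrotated = true_pattern @ np.linalg.cholesky(true_phi)
pattern, phi = promax(unrotated)
print(np.round(pattern, 2))
print(np.round(phi, 2))
```

With these inputs the estimated factor correlation should be close to 0.5 in absolute value (the sign of a rotated factor is arbitrary), and each test should load mainly on one factor, whereas the varimax step alone leaves noticeable cross-loadings. For real data, an established implementation such as R's `stats::promax` would be preferable to this sketch.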

Demographic Effects

In neuropsychology, demographic effects and normative corrections are usually established separately for each neurocognitive test (e.g., Heaton et al., 2004; Norman et al., 2000, 2011; Schretlen, Testa, & Pearlson, 2010). By examining the effects of age, education, ethnicity, and gender on composite factor scores, the present study extends this literature to the underlying cognitive constructs, with less dependence on task modality and stimulus attributes.
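Composite factor scores can be derived from a fitted CFA model in several ways. The Python sketch below assumes the regression (Thurstone) method, with weights W = Σ⁻¹ΛΦ applied to standardized test scores; the scoring method used in the study is not stated in this section, and the loadings Λ and factor correlations Φ below are hypothetical.

```python
import numpy as np

# Hypothetical CFA estimates (NOT values from this study):
# 4 standardized tests, 2 correlated factors with simple structure.
Lambda = np.array([
    [0.8, 0.0],
    [0.7, 0.0],
    [0.0, 0.8],
    [0.0, 0.6],
])
Phi = np.array([[1.0, 0.4], [0.4, 1.0]])                 # factor correlations
Psi = np.diag(1.0 - np.diag(Lambda @ Phi @ Lambda.T))    # unique variances

# Model-implied correlation matrix of the tests
Sigma = Lambda @ Phi @ Lambda.T + Psi

# Regression (Thurstone) factor-score weights: W = Sigma^{-1} Lambda Phi
W = np.linalg.solve(Sigma, Lambda @ Phi)

def factor_scores(z):
    """z: (n_people, n_tests) array of standardized test scores."""
    return z @ W

profiles = np.array([
    [0.0, 0.0, 0.0, 0.0],   # exactly average on every test
    [1.0, 1.0, 0.0, 0.0],   # above average only on the factor-1 tests
])
print(np.round(factor_scores(profiles), 2))
```

An exactly average profile receives scores of zero on both factors. A profile that is above average only on the first factor's tests receives a clearly positive first-factor score and a smaller positive second-factor score, because the two factors are correlated. Demographic effects would then be estimated on such composite scores rather than on individual tests.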

First, age-related cognitive declines were found in most cognitive domains, generally affecting perceptual more than verbal abilities. ‘Perceptual-motor speed’ and ‘perceptual attention’ were most negatively associated with age, whereas ‘semantic knowledge’ and ‘phonological decoding’ were for the most part resistant to aging. These results are consistent with previous conclusions (e.g., Hedden & Gabrieli, 2004; McArdle et al., 2002; Park & Reuter-Lorenz, 2009).

After accounting for age, education had a significant positive effect across all cognitive domains, with a notably greater impact on verbal than on perceptual abilities (medium versus small effects, respectively). This differential effect is consistent with a dual impact of education on (1) the general development of positive attitudes and strategies toward testing, and (2) the specific learning of language-based content (e.g., Ardila, 2007). The effect of education on ‘phonological decoding’ was small, compatible with previous research suggesting that the incidence of developmental dyslexia is independent of access to instruction (Lyon et al., 2003). Consistent with previous findings (e.g., Ardila, 1998), a quadratic dampening was observed, indicating diminishing gains in cognitive performance at higher educational levels.
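The quadratic dampening of education can be illustrated with an ordinary least squares model that includes age, education, and squared education. In the Python sketch below, the data are simulated from hypothetical coefficients (they are not the study's estimates), and education is centered before squaring to limit collinearity. A negative quadratic coefficient makes the marginal effect of one additional year of education shrink as education increases.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 1000

# Simulated (hypothetical) data: age in years, education in years (8 to 20)
age = rng.uniform(20, 80, n)
edu = rng.integers(8, 21, n).astype(float)
# Hypothetical generating model with diminishing returns to education
score = 50 - 0.15 * age + 2.0 * edu - 0.05 * edu**2 + rng.normal(0, 1, n)

# Center education before squaring to reduce collinearity between edu and edu^2
edu_mean = edu.mean()
edu_c = edu - edu_mean
X = np.column_stack([np.ones(n), age, edu_c, edu_c**2])
beta, *_ = np.linalg.lstsq(X, score, rcond=None)
b0, b_age, b_edu, b_edu2 = beta

def marginal_effect(years):
    """Estimated gain per additional year of education at a given level."""
    return b_edu + 2 * b_edu2 * (years - edu_mean)

print(round(b_edu2, 3))  # negative: quadratic dampening
print(round(marginal_effect(8), 2), round(marginal_effect(20), 2))
```

Under this hypothetical model, the estimated gain per year is clearly positive at 8 years of education and close to zero at 20 years, which is the pattern the term "quadratic dampening" describes.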

Differences between Caucasians and African Americans remained after accounting for age and education, stressing the importance of using separate norms to avoid misdiagnosis of African American individuals (e.g., Heaton et al., 1996; Manly, Byrd, Touradji, & Stern, 2004; Norman et al., 2000, 2011; Reynolds, Chastain, Kaufman, & McLean, 1987). These effects have been suggested to relate to differences in acculturation, socio-economic status, education quality, and lifelong experiences of facing negative bias (Ardila, 2007; Manly et al., 2004; Steele & Aronson, 1995). In support of a cultural contribution, smaller effects of ethnicity were found in tests using stimuli with lower cultural loads (e.g., digits, letters, phonemes/graphemes, rhythms, sensory-perceptual stimuli) than in tests using stimuli with higher cultural loads (e.g., words, stories, or complex designs). ‘Semantic knowledge’ showed the greatest dependence on demographic background, including education and ethnicity.

To confirm the consistency of the factor structure and neuropsychological constructs across ethnicity, post hoc confirmatory factor analyses were carried out on each group separately. The model described in Fig. 1 fit reasonably well for both the African American group (N = 618, CFI = 0.944, RMSEA = 0.041, SRMR = 0.069) and the Caucasian group (N = 364, CFI = 0.938, RMSEA = 0.037, SRMR = 0.073), generally supporting the measurement of similar neurocognitive constructs across ethnicity. Two notable differences in the loadings were nonetheless found. The first consisted of weaker loadings on the ‘phonological decoding’ factor for Caucasian than for African American participants. This finding likely reflected greater variance in ‘phonological decoding’ in the African American group than in the Caucasian group, perhaps partly related to its larger sample size (618 vs. 364). The second difference pertained to the Seashore Rhythm Test, which loaded predominantly on the ‘working memory’ factor in the African American group and predominantly on the ‘perceptual attention’ factor in the Caucasian group. This finding suggests that the groups used different cognitive strategies on that test. Larger samples will be required for a more elaborate comparison of the structure of neuropsychological test performance across ethnicity.
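The fit indices reported above can be related to their usual definitions and to commonly cited rule-of-thumb cutoffs (CFI ≥ 0.95, RMSEA ≤ 0.06, SRMR ≤ 0.08). The Python sketch below assumes those cutoffs, the N - 1 convention for RMSEA (some software uses N), and an independence baseline model for CFI; the chi-square values in the last line are hypothetical. Applied to the reported indices, both group models meet the RMSEA and SRMR cutoffs while falling slightly short of the CFI cutoff, which is consistent with a reasonable but not excellent fit.

```python
import math

def rmsea(chi2, df, n):
    """Root mean square error of approximation (N - 1 convention;
    some programs use N instead)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

def cfi(chi2_model, df_model, chi2_baseline, df_baseline):
    """Comparative fit index relative to the independence (baseline) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_model - df_model, chi2_baseline - df_baseline, 0.0)
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base

# Commonly cited rule-of-thumb cutoffs (an assumption, not taken from the study)
CUTOFFS = {"CFI": 0.95, "RMSEA": 0.06, "SRMR": 0.08}

def check_fit(cfi_value, rmsea_value, srmr_value):
    """Report which indices meet the assumed cutoffs."""
    return {
        "CFI": cfi_value >= CUTOFFS["CFI"],
        "RMSEA": rmsea_value <= CUTOFFS["RMSEA"],
        "SRMR": srmr_value <= CUTOFFS["SRMR"],
    }

# Indices reported in the text for the two group-specific CFAs
print(check_fit(0.944, 0.041, 0.069))  # {'CFI': False, 'RMSEA': True, 'SRMR': True}
print(check_fit(0.938, 0.037, 0.073))  # {'CFI': False, 'RMSEA': True, 'SRMR': True}

# Hypothetical chi-square values, to show the formulas in use
print(round(rmsea(150.0, 100, 501), 4))  # 0.0316
```

Cutoffs of this kind are heuristics rather than strict tests, which is why the text speaks of a reasonable fit rather than a rejected or confirmed model.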

Consistent with reviews and meta-analyses (Hyde, 2005; Zell et al., 2015), the effect of gender was minimal to negligible across most cognitive domains. Statistically significant differences with minimal effect sizes were noted in visuospatial tasks (men > women) and in verbal fluency and perceptual-motor speed tasks (women > men). The only non-negligible gender difference was in ‘phonological decoding’ (women > men), consistent with the documented two- to three-fold higher prevalence of reading disability in men than in women (Liederman, Kantrowitz, & Flannery, 2005; Rutter et al., 2004).
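Group differences of the kind summarized above are commonly expressed as a standardized mean difference. The Python sketch below computes Cohen's d with a pooled standard deviation and labels it using Cohen's conventional benchmarks (0.2, 0.5, 0.8). This section does not state which effect size measure or thresholds were used, so both are assumptions, and treating |d| < 0.2 as negligible is a labeling choice made here.

```python
import statistics

def cohens_d(group_a, group_b):
    """Standardized mean difference (a minus b) using the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    pooled_sd = (((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)) ** 0.5
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd

def label(d):
    """Cohen's conventional benchmarks; |d| < 0.2 treated as negligible (assumption)."""
    size = abs(d)
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"

# Toy sanity check with made-up scores (not study data)
d = cohens_d([1, 2, 3], [2, 3, 4])
print(d, label(d))  # -1.0 large
```

In practice, d would be computed on the composite factor scores for women and men within each domain, ideally after adjusting for age, education, and ethnicity.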

Limitations