Abstract

Emerging perspectives in neuroscience indicate that the brain functions predictively, constantly anticipating sensory input based on past experience. According to these perspectives, prediction signals impact perception, guiding and constraining experience. In a series of six behavioral experiments, we show that predictions about facial expressions drive social perception, deeply influencing how others are evaluated: individuals are judged as more likable and trustworthy when their facial expressions are anticipated, even in the absence of any conscious changes in felt affect. Moreover, the effect of predictions on social judgments extends both to real-world situations where such judgments have particularly high consequence (i.e., evaluating presidential candidates for an upcoming election) and to more basic perceptual processes that may underlie judgment (i.e., facilitated visual processing of expected expressions). The implications of these findings, including their relevance for cross-cultural interactions, social stereotypes, and mental illness, are discussed.

Keywords: Predictions, emotion, facial expressions, social perception, evaluative judgments

Predictions as Drivers of Social Perception

Emerging perspectives indicate that the brain functions predictively, constantly anticipating sensory input based on past experience (see, e.g., Barrett & Simmons, 2015; Chanes & Barrett, 2016; Clark, 2013; Friston, 2005; Hohwy, 2013). Predictions are continuously issued and compared with incoming sensory input, which is in turn used to update the predictions. Predictions are thought to occur at multiple time scales and levels of specificity, from highly specialized perceptual levels related to real-time sampling of the environment to more abstract (i.e., general) and stable levels based on our core internal model of the world (e.g., Kiebel, Daunizeau, & Friston, 2008). Research within this framework has primarily come from the neurosciences, where predictions are assessed as top-down neural signals (see, e.g., Gilbert & Li, 2013, for a review on visual processing). According to these neuroscience perspectives, predictions importantly impact perception, guiding and constraining how we experience the world. In the behavioral domain, however, the posited role of predictions has largely been assessed only indirectly (although see Pinto, van Gaal, de Lange, Lamme, & Seth, 2015) and under various labels (for recent discussions in terms of predictive coding, see, e.g., Otten, Seth, & Pinto, 2017; Panichello, Cheung, & Bar, 2013). In this paper, we explicitly test the hypothesis that predictions significantly shape high-level social perception, with important consequences for everyday life, in particular for judgments of others’ likability and trustworthiness. In so doing, we suggest that predictive coding accounts of perception offer a unique explanatory lens that can help unify a wide variety of social perception effects within a common framework by identifying predictive signals as a potential shared mechanism.

Evidence of Predictions in Social Perception Across Different Research Domains

Research on stereotypes offers a prominent example of how predictions are often indirectly studied in social psychology. Stereotypes can be thought of as predictions (Barrett, 2017; Otten et al., 2017), as they represent implicitly held expectations about shared properties of category members, and recent theoretical work has proposed that they constrain initial perceptions rather than being downstream products of social perception, as long presumed (Freeman & Johnson, 2016). Moreover, research has shown that violating stereotypes can carry negative consequences for the violators. For example, women who violate gender stereotypes at work (e.g., women who succeed in stereotypically ‘male’ professions) are less liked and perceived as more interpersonally hostile than their male counterparts (Heilman, Wallen, Fuchs, & Tamkins, 2004), with potentially career-affecting outcomes (Heilman, 2001; Heilman et al., 2004). Similarly, Mendes and colleagues (2007) found that participants rated individuals whose socioeconomic status violated racial/ethnic stereotypes (e.g., a white individual with low socioeconomic status, a Latino/a individual with high socioeconomic status) less favorably than individuals whose socioeconomic status matched those stereotypes. Although these findings are consistent with our hypothesis that predictions can influence high-level social perception, stereotypes are stable predictions that are deeply rooted in society and frequently involve historical and political dimensions. Moreover, stereotype predictions are often assumed by researchers rather than evaluated at the individual level. Thus, isolating the role of flexible, dynamic predictions on social perception requires novel or modified paradigms that can assess a dynamic range of predictions at the individual level.

Research examining emotion congruence in verbal and nonverbal communication has also indirectly approached the role of predictions in social perception. Findings in this domain suggest a preference for individuals who present prediction-consistent information in social interaction. For example, leaders whose affect is congruent with the emotional content of their message (e.g., sad nonverbal displays when conveying saddening information) are rated more positively than leaders whose affect is incongruent with their message (Newcombe & Ashkanasy, 2002). Similarly, school-age children prefer to request information from consistent speakers (e.g., speakers providing a negative statement with negative affect) rather than from inconsistent or unfamiliar speakers (Gillis & Nilsen, 2016), and they rely on emotional congruence to determine whether a speaker is lying or telling the truth (Rotenberg, Simourd, & Moore, 1989). In these emotion congruence studies, however, two cues of the same or opposite valence are typically displayed simultaneously by a single target person (e.g., the target person’s words and tone of voice). An incongruent pairing could be perceived as a flagrant inconsistency in the target person’s internal state, making it difficult to assess the impact of the perceiver’s own dynamic expectations.

Recent behavioral work has also investigated the effects of nonverbal predictive cues (e.g., gaze direction) on social perception. This work has demonstrated that individuals are more liked and/or trusted when they display nonverbal cues that are predictive of task-relevant events (e.g., target location) for a perceiver (Bayliss, Griffiths, & Tipper, 2009; Bayliss & Tipper, 2006; Heerey & Velani, 2010). In these studies, however, the predictive cues that individuals displayed also benefited the perceiver’s task performance, so greater liking and trust may have reflected those benefits rather than predictability itself; the role of predictability was thus confounded with potential benefits for the perceiver. Thus, again, the effect of predictions per se was not explored.

Finally, a variety of research has investigated the impact of context on emotion perception (for discussions, see Aviezer, Hassin, Bentin, & Trope, 2008; Barrett, Mesquita, & Gendron, 2011), which is also relevant for social perception. Contextual cues set up predictions that importantly impact how we perceive emotion in other individuals’ faces (for a review, see Barrett et al., 2011). For example, participants rely on situational information, including presented scenarios (Carroll & Russell, 1996) and visual scenes (Righart & de Gelder, 2008), when judging emotional responses from facial configurations. Body configurations are also used as disambiguating contexts, so that identical facial configurations are interpreted differently depending on simultaneous contextual information from the body (Aviezer, Trope, & Todorov, 2012; Aviezer et al., 2008). These findings do not directly examine the influence of emotion predictions on social judgments, but they do demonstrate that context guides emotion predictions and can encourage participants to issue specific, dynamic predictions about emotional displays. Thus, we can leverage emotional context in our experimental designs to explicitly explore the impact of predictions about emotional displays on social perception, which is the goal of the current research.

Although previous research provides some evidence that predictions play a role in social perception, these studies have typically not been discussed in terms of predictive coding, nor have they been designed to specifically assess the role of predictions as the underlying mechanism driving differences in social perception. Thus, the impact of flexible, dynamic, individualized predictions on social perception has remained largely unexplored and its study requires novel experimental designs.

The Present Studies

In this paper, we explicitly assessed how predictions of facial expressions embedded in emotional contexts impact individuals’ evaluation of others. We directly examined whether facial expression predictions drive social perception, deeply influencing how individuals experience social input. We hypothesized that individuals would be evaluated as more likable and trustworthy when their facial expressions were anticipated (Experiments 1, 2, 3, and 4), an effect that we predicted would occur across and within emotion categories (e.g., for fear, happiness, and sadness) as well as across affective categories (e.g., pleasant and unpleasant, high and low arousal emotion categories). We also hypothesized that the effect of predictions would extend beyond flagrant violations of stereotypical expressions to more nuanced and individualized predictions concerning what one expects to see another person look like in a given emotional context (Experiments 3 and 4). We also explored whether the effect of predictions on social perception would extend beyond well-controlled lab environments to real-world situations where such judgments have particularly high consequence, such as evaluating presidential candidates for an upcoming election (Experiment 4). Finally, we hypothesized that changes in consciously felt affect would not underlie these effects (i.e., that they would not be attributable to affective misattribution mechanisms, Clore, Gasper, & Garvin, 2001; Experiment 5). Instead, we hypothesized that predictions would drive social perception directly, operating as a kind of processing fluency (Winkielman, Schwarz, Fazendeiro, & Reber, 2003), such that the perceptual processing of predicted facial expressions would be facilitated, leading to more positive ratings (Experiment 6). 
To the extent that our hypotheses are supported, the present studies will demonstrate that predictive coding theories offer a unique explanatory lens for integrating seemingly disparate social perception effects as driven by explicit or implicit predictions at various levels of specificity. Those levels would span from specific visual predictions about what one should expect to see on another’s face in the very next moment to very abstract, general predictions about what another person may do over a much longer time course, such as over the next several minutes, days, weeks, or years.

Experiments 1 and 2: Stereotypical Facial Expressions and Social Perception

In a first set of experiments, we explored whether an individual’s perception of another person is shaped by whether that person’s facial expression matches or mismatches predicted stereotypical expressions. We hypothesized that individuals would be experienced as more likable and trustworthy when their facial expressions matched the perceiver’s predictions compared to when they did not. To test this, we implemented a novel task design wherein, on each trial, participants read an emotionally evocative story (scenario) about a target person and were asked to imagine how the person would look in that scenario, leading them to generate specific perceptual predictions. Next, participants saw the person’s facial expression. On each trial, the facial expression either matched the prediction evoked by the scenario (e.g., a smiling face after a happy scenario) or did not match it (e.g., a smiling face after a sad scenario). Thereafter, participants rated the likability (Experiment 1) or trustworthiness (Experiment 2) of the target person. These experiments represent a critical first step in looking for evidence that facial expression predictions can impact social perception. Accordingly, our design focused on highly stereotypical facial expressions for different emotion categories and flagrant mismatches from the predictions elicited by the scenarios, allowing us first to attempt to detect effects on social perception under conditions with robust and apparent prediction violations. If our hypotheses are supported within this paradigm, then we can begin to examine how facial expression predictions may shape the perception of social others on a more nuanced and individualized basis.

Method

Participants

Two separate groups of participants took part in the two experiments. For each experiment, participants were 35 young adults¹ recruited from Northeastern University (Mean Age±SD: 19±2 y.o., 22 female for Experiment 1; Mean Age±SD: 19±1 y.o., 19 female for Experiment 2). All participants reported normal or corrected-to-normal visual acuity, were native English speakers, and received course credit for their participation. The target sample size of n=35 was chosen because samples of 30–40 are thought to provide enough power to detect a medium to large behavioral effect (see Wilson VanVoorhis & Morgan, 2007).
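The sample-size rationale above can be sanity-checked with a quick calculation. The sketch below is our own illustration, not part of the original method: it assumes a two-sided within-subjects comparison at α=.05, expresses effect size as Cohen's d_z (mean paired difference divided by the SD of the differences), and uses a normal approximation, which slightly overstates power relative to the exact noncentral t distribution at this sample size. Applying Cohen's small/medium/large benchmarks (0.2, 0.5, 0.8) to d_z is likewise an assumption; the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def approx_paired_power(d_z: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided within-subjects (paired) test.

    Uses a normal approximation to the test statistic; the exact
    noncentral-t calculation gives slightly lower power for small n.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    shift = d_z * sqrt(n)                       # expected statistic under H1
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)

# Cohen's conventional small, medium, and large benchmarks, applied to d_z.
for d_z in (0.2, 0.5, 0.8):
    print(f"d_z = {d_z}: power = {approx_paired_power(d_z, 35):.2f}")
```

Under these assumptions, n=35 gives approximate power of about .84 for a medium effect (d_z=0.5) and above .99 for a large one, in line with the stated rationale; a dedicated power-analysis tool would be needed for an exact figure.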

Materials and Procedure

Participants completed 3 practice trials and 45 experimental trials of a social perception task on a computer. Instructions and stimuli were presented using E-Prime 2 running on a Dell OptiPlex 745 and a 17-inch Samsung LCD flat-screen monitor (1280×1024). A diagram of the structure for each trial of the task is given in Figure 1a. Each trial started with a fixation screen (7 s), followed by a photograph of a neutral face of a target person (5 s). A short written story (scenario) about the target person, designed to be emotionally evocative, was then displayed for 20 s. The scenario was meant to evoke one of three emotions: fear, sadness, or happiness. Participants were asked to imagine how the target person would look in that scenario while reading. Then a second photograph of the target person was displayed for 5 s, portraying either a neutral facial expression or a stereotypical facial expression for one of the three emotions (e.g., a pout depicting sadness). The face could “match” the evoked emotion (e.g., fear scenario followed by a stereotypical fear face; 21 trials), be neutral (e.g., fear scenario followed by a neutral face; 12 trials), or “not match” the evoked emotion (e.g., fear scenario followed by a stereotypical sad or happy face; 12 trials). In these experiments, trials with neutral faces were included only to generate variability (i.e., to avoid having only stereotypical facial expressions). They were not included in the analyses, which contrasted performance on trials with the same category of faces (stereotypical happy, sad, fear) when they were predicted (matched) and not predicted (non-matched).
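To make the trial composition concrete, the following sketch encodes one trial's event sequence and one possible 45-trial list. It is illustrative only: the experiment itself ran in E-Prime 2, and the even split of trials across emotions, the pairing of non-match faces with scenarios, and the randomized order are our assumptions rather than reported details. The names `TIMELINE`, `Trial`, and `build_trials` are hypothetical.

```python
from dataclasses import dataclass
from itertools import permutations
import random

# Event durations (s) from the procedure described above; the rating
# screen is a 2 s upper bound (it may end sooner once a response is made).
TIMELINE = [("fixation", 7), ("face_1_neutral", 5), ("scenario", 20),
            ("face_2", 5), ("rating_max", 2)]
EMOTIONS = ("fear", "sad", "happy")

@dataclass
class Trial:
    scenario: str   # emotion evoked by the scenario
    face_2: str     # expression shown on the second photograph
    condition: str  # "match", "neutral", or "non-match"

def build_trials(seed=None):
    """Hypothetical 45-trial list: 21 match, 12 neutral, 12 non-match."""
    trials = []
    for i in range(21):                       # 7 match trials per emotion
        emo = EMOTIONS[i % 3]
        trials.append(Trial(emo, emo, "match"))
    for i in range(12):                       # 4 neutral-face trials per scenario emotion
        trials.append(Trial(EMOTIONS[i % 3], "neutral", "neutral"))
    # Every ordered (scenario, different face) pair appears twice: 6 pairs x 2 = 12.
    for scen, face in list(permutations(EMOTIONS, 2)) * 2:
        trials.append(Trial(scen, face, "non-match"))
    random.Random(seed).shuffle(trials)       # randomized presentation order (assumed)
    return trials

trials = build_trials(seed=1)
print(len(trials), "trials; max trial length:", sum(d for _, d in TIMELINE), "s")
```

Balancing the non-match trials over every (scenario, face) pair gives each face category the same number of non-matched presentations (four), which is what allows matched and non-matched trials to be compared within a face category.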

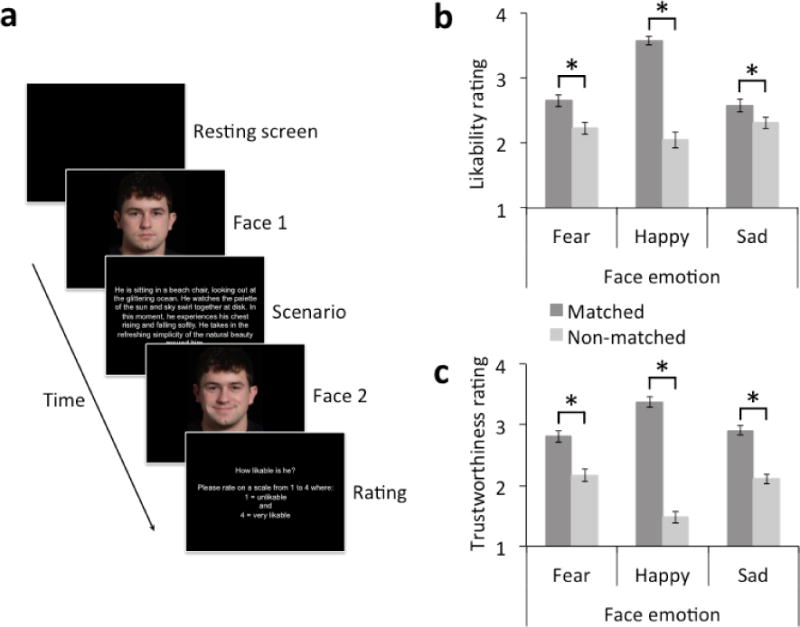

Fig. 1. Schematic representation of a trial and results in Experiments 1 and 2.

Note: (a) Each trial started with the presentation of a fixation screen (7 s) followed by a photograph of a target person displaying a neutral expression (Face 1; 5 s) and then a short story (Scenario; 20 s). Then, a new photograph of the target person was presented, this time portraying a facial expression that could match the scenario emotion, be neutral, or not match the scenario emotion (Face 2; 5 s). Participants were asked to rate how likable (Experiment 1) or trustworthy (Experiment 2) the target person was. (b) Individuals exhibiting predicted facial expressions (matching the emotion evoked by the scenario) were rated as more likable than those exhibiting unpredicted ones (non-matching) across the three emotion categories explored (Experiment 1). (c) Similar results were observed for trustworthiness ratings (Experiment 2). Asterisks indicate p<.05.

Participants were then asked to quickly perform one rating (up to 2 s). In Experiment 1, participants rated how likable the target person was. In Experiment 2, they rated how trustworthy the target person was. Ratings were performed on a scale from 1 to 4 (1=unlikable/4=very likable; 1=untrustworthy/4=very trustworthy).

We used photographs from the Interdisciplinary Affective Science Laboratory Face Set (www.affective-science.org). We used a different target person (identity) for each of the 48 trials (3 practice trials: 2 female, 1 male; 45 experimental trials: 28 female, 17 male). For each facial expression (sad, fear, happy, neutral) and identity, two versions were available: one with mouth closed and one with mouth open. Half of the initial neutral faces corresponded to each version (i.e., mouth open or closed). If the second face displayed a neutral expression, the other version (i.e., mouth open or closed) was used to avoid repeating the same image. If the second face displayed a fear, happy, or sad expression, the open- or closed-mouth version was selected at random. Normed ratings of intensity, attractiveness, and stereotypicality (i.e., accuracy in identifying the emotion category) for the mouth-closed face set stimuli for the emotion categories used in this experiment are provided in the supplemental materials (Table S1). See Figure S1 in the supplemental materials for sample face stimuli.
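The stimulus-version rule above can be written as a short function. This is a sketch under our own assumptions: the function and argument names are hypothetical, and we assume the balanced first-face versions were distributed across trials in random order, which the text does not specify.

```python
import random

VERSIONS = ("open", "closed")

def assign_mouth_versions(second_expressions, seed=None):
    """Return a (face_1_version, face_2_version) pair for each trial.

    second_expressions: expression shown on the second photograph of each
    trial ("neutral", "fear", "happy", or "sad").
    """
    rng = random.Random(seed)
    n = len(second_expressions)
    # Balanced first-face versions (counts differ by at most one when n is odd),
    # shuffled so that versions are spread across trials.
    first = [VERSIONS[i % 2] for i in range(n)]
    rng.shuffle(first)
    pairs = []
    for v1, expr in zip(first, second_expressions):
        if expr == "neutral":
            # Use the other version so the identical image is not shown twice.
            v2 = "closed" if v1 == "open" else "open"
        else:
            # Emotional expressions: either version, chosen at random.
            v2 = rng.choice(VERSIONS)
        pairs.append((v1, v2))
    return pairs

print(assign_mouth_versions(["neutral", "happy", "sad", "neutral"], seed=3))
```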

Written stories (scenarios) were taken from a set of emotion scenarios developed and pilot-tested in a prior set of experiments (Wilson-Mendenhall, Barrett, & Barsalou, 2013). The scenarios used were sampled from all four quadrants of the affective circumplex. Positive valence was represented by happy scenarios, whereas negative valence was represented by sad and fear scenarios. All emotion categories (happiness, fear, sadness) included high and low arousal scenarios. As described by Wilson-Mendenhall and colleagues (2013), an independent set of participants rated the scenarios to verify that they elicited the intended variation in subjectively experienced valence and arousal, and participants reported that it was relatively easy to immerse themselves in these scenarios (details can be found in the supplemental materials of Wilson-Mendenhall, Barrett, & Barsalou, 2013). For the purpose of the present experiment, we removed the last sentence of each scenario, which explicitly mentioned the specific emotion category. See Table S2 in the supplemental materials for sample scenarios.

Results

Experiment 1: Perceived Likability

A 2 (face match: matched, non-matched) by 3 (face emotion category: sad, fear, happy) repeated-measures analysis of variance (ANOVA) on likability judgments supported our hypothesis; target persons were rated as more likable when displaying predicted (matched) facial expressions (M=2.94, SE=.07) than when displaying non-matched facial expressions (M=2.19, SE=.07), F(1, 34)=75.97, p<.001, ηp²=.691. We also observed a main effect of face emotion category, F(2, 68)=12.00, p<.001, ηp²=.261. Bonferroni comparisons revealed that target persons displaying stereotypical happy faces were rated as more likable (M=2.81, SE=.07) than those displaying stereotypical sad (M=2.46, SE=.08), p=.003, or fear faces (M=2.42, SE=.08), p=.001 (sad vs. fear faces: p=1.00).

This analysis also revealed a significant interaction between face match and face emotion category on ratings of likability, F(2, 68)=55.65, p<.001, ηp²=.621. To explore this interaction, we conducted a series of repeated-measures ANOVAs, one for each face emotion category, with face match as the within-subjects factor. As anticipated, for all three emotion categories, individuals were rated as more likable when displaying predicted facial expressions (matched) than when displaying non-matched facial expressions (fear faces: F(1, 34)=22.53, p<.001, ηp²=.399; sad faces: F(1, 34)=5.70, p=.023, ηp²=.144; happy faces: F(1, 34)=126.52, p<.001, ηp²=.788).² See Figure 1b.
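Because partial eta squared for an effect can be recovered from its F statistic and degrees of freedom, ηp² = (F × df_effect) / (F × df_effect + df_error), the effect sizes reported above can be cross-checked directly. The short sketch below is our own consistency check, not part of the original analysis pipeline; it recomputes the Experiment 1 values from the reported F statistics.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """eta_p^2 = (F * df_effect) / (F * df_effect + df_error)."""
    return f * df_effect / (f * df_effect + df_error)

# (effect, F, df_effect, df_error, reported eta_p^2) for Experiment 1.
reported = [
    ("face match", 75.97, 1, 34, 0.691),
    ("face emotion category", 12.00, 2, 68, 0.261),
    ("match x category", 55.65, 2, 68, 0.621),
    ("fear faces", 22.53, 1, 34, 0.399),
    ("sad faces", 5.70, 1, 34, 0.144),
    ("happy faces", 126.52, 1, 34, 0.788),
]
for label, f, df1, df2, eta in reported:
    print(f"{label}: recomputed {partial_eta_squared(f, df1, df2):.3f}, reported {eta:.3f}")
```

Each recomputed value rounds to the reported one; the same check can be applied to the Experiment 2 statistics.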

Experiment 2: Perceived Trustworthiness

A 2 (face match: matched, non-matched) by 3 (face emotion category: fear, sad, happy) repeated-measures ANOVA on trustworthiness judgments supported our hypothesis; target persons were rated as more trustworthy when displaying predicted (matched) facial expressions (M=3.04, SE=.07) than when displaying non-matched facial expressions (M=1.92, SE=.07), F(1, 34)=152.89, p<.001, ηp²=.818. No main effect of face emotion category was observed (F<1).

This analysis also revealed a significant interaction between face match and face emotion category on trustworthiness ratings, F(2, 68)=72.69, p<.001, ηp²=.681. To explore this interaction, we conducted a series of repeated-measures ANOVAs, one for each face emotion category, with face match as the within-subjects factor. As anticipated, for all three emotion categories, individuals were rated as more trustworthy when displaying predicted facial expressions (matched) than when displaying non-matched facial expressions (fear faces: F(1, 34)=36.04, p<.001, ηp²=.515; sad faces: F(1, 34)=70.63, p<.001, ηp²=.675; happy faces: F(1, 34)=222.56, p<.001, ηp²=.867).³ See Figure 1c.⁴

Discussion

In Experiments 1 and 2, we observed initial evidence that perceivers evaluate individuals more positively when provided with social information (in these experiments, facial expressions) that matches their predictions. Specifically, we found that participants rated individuals as more likable and trustworthy when those individuals exhibited expected facial expressions. Importantly, the impact of predictions on social perception held across facial emotion categories (i.e., even individuals displaying stereotypical sad expressions were liked and trusted more if their expression was expected).

These experiments provide initial evidence that predictions influence social perception, but critically, we did not directly assess participants’ predictions in these experiments. Instead, we assumed the displayed facial expression roughly matched or mismatched the perceiver’s predictions based on the presentation of highly stereotypical facial expressions and normed emotion scenarios. Thus, we were not able to rule out the possibility that the effect observed was driven by blatant violations of stereotypes in non-match trials, where the target person might be perceived as displaying a highly inappropriate response (e.g., smiling in response to a tragedy or displaying a fearful face in response to meeting a good friend for coffee). Such blatantly inappropriate responses may have led participants to judge target persons in those trials as bizarre or maladaptive. Indeed, many mental illnesses are characterized by symptoms involving contextually-inappropriate affect displays (e.g., Liddle, 1987). Thus, blatant mismatches may have triggered judgments regarding that individual’s emotional or mental stability, with subsequent consequences regarding judgments of their character.

Thus, in Experiment 3, we attempted to replicate our observations while additionally asking participants to explicitly report the degree to which each facial expression matched their predictions on each trial. This approach allows for a more direct measure of the effects of the predictability of facial expressions on social judgments and, critically, allows us to examine the effects of predictability within only those trials in which the target person presented an “appropriate” facial expression, with no blatant violations of stereotypes or conventionality (i.e., within match trials).

Experiment 3: Predictions and Social Perception

In Experiment 3, we explicitly assessed whether individual facial expression predictions underlie participants’ social perception (in particular, likability ratings) by asking participants to additionally rate how similar each target person looked to what they expected (predictability). These trial-by-trial predictability ratings provide us with a measure of the degree of subjective prediction fulfillment, as participants were instructed to imagine how the target person would look in each scenario as they read. Moreover, the inclusion of a trial-by-trial measure of predictability allowed us to examine whether social perception was driven by more subtle shifts in individual facial expression predictions, as opposed to blatant violations of stereotypicality (e.g., a smiling face in what should be a terrifying scenario). That is, we were able to examine whether a target individual was perceived as more likable when his or her displayed facial expression more closely matched a specific perceiver’s predictions, irrespective of whether that stereotypical expression matched the emotion scenario on that trial. We examined this not only across all experimental trials, where we expected robust differences in predictability to emerge (i.e., across match and non-match trials), but also across trials where we expected predictability to vary less and to reflect more idiographic differences in facial expression predictions (e.g., within match trials only, where predictability should be higher on average, or within non-match trials only, where predictability should be lower on average).
Analyzing only match trials, where the target person displays an “appropriate” facial expression, allows us to overcome an important potential confound of Experiments 1 and 2: that target identities displaying blatant norm/stereotype violations (e.g., cross-valence mismatches) may have been judged as callous, maladaptive, or even mentally disabled, with consequent impact on evaluative judgments of likability and trustworthiness.

We predicted that individuals would be judged as more likable on trials where their facial expression more closely matched the perceiver’s predictions, even when controlling for blatant stereotypical face matching and mismatching, and that predictability would mediate the impact of stereotypical match and mismatch on social perception. Importantly, we also predicted that the effect of predictions on social perception would emerge when considering only those trials in which the individuals presented an “appropriate” facial expression (match trials). To the extent that we find that predictability of facial expressions still predicts social judgment ratings in the match trials, we will be able to rule out the possible confound that our effect is driven by judgments of blatant (e.g., cross-valence) facial expression and scenario mismatches.

Method

Participants

Participants were 35 young adults⁵ recruited from Northeastern University (Mean Age±SD: 20±2 y.o., 25 female). All participants reported normal or corrected-to-normal visual acuity, were native English speakers, and received course credit for their participation. The target sample size of n=35 was based on the results of Experiments 1 and 2.

Materials and Procedure

For Experiment 3, materials and procedure were identical to Experiments 1 and 2 except that participants first rated how similar the facial expression looked to what they expected (predictability rating), then how likable the target person was (likability rating) on scales from 1 to 4 (1=not at all similar/4=very similar; 1=unlikable/4=very likable).

Results

We utilized hierarchical linear modeling (HLM; Raudenbush & Bryk, 2002), which allowed us to avoid aggregation across trials and model variability in trial-by-trial performance nested within each participant. We utilized a continuous sampling model with random effects, and a restricted maximum likelihood method of estimation for model parameters. All Level-1 (trial-level) variables were group centered (i.e., centered around each participant’s mean; Enders & Tofighi, 2007).
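Group-mean centering, as used here, subtracts each participant's own mean from that participant's trial-level scores, so that Level-1 predictors capture only within-participant deviations (e.g., whether a face was rated as more or less predicted than that participant typically rated faces). The minimal sketch below assumes a long-format list of trial records with a hypothetical `participant` field; it illustrates only the centering step, not the multilevel model itself.

```python
from collections import defaultdict
from statistics import fmean

def group_mean_center(rows, key):
    """Add f"{key}_c": each trial's value minus its own participant's mean."""
    by_participant = defaultdict(list)
    for row in rows:
        by_participant[row["participant"]].append(row[key])
    means = {pid: fmean(vals) for pid, vals in by_participant.items()}
    # Build new dicts so the input rows are left unchanged.
    return [{**row, f"{key}_c": row[key] - means[row["participant"]]} for row in rows]

# Hypothetical trial-level data: two participants with different baseline ratings.
rows = [
    {"participant": "p01", "predictability": 4},
    {"participant": "p01", "predictability": 2},
    {"participant": "p02", "predictability": 3},
    {"participant": "p02", "predictability": 1},
]
for row in group_mean_center(rows, "predictability"):
    print(row["participant"], row["predictability_c"])
```

After centering, two participants who use the rating scale differently (here, p01 rates every face one point higher than p02) contribute identical within-participant deviations, which is the point of the transformation.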

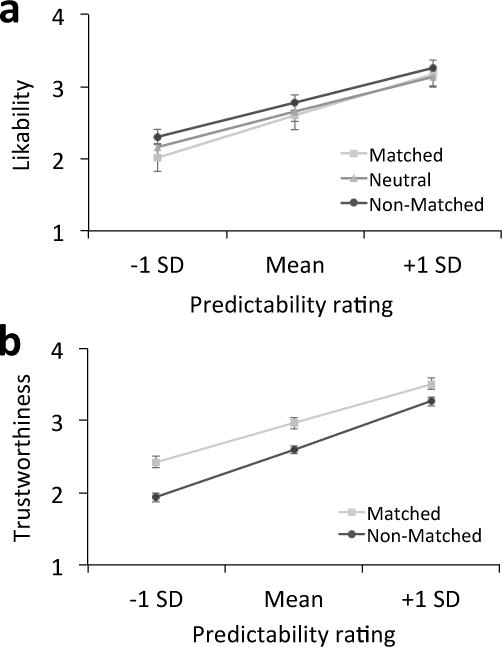

Mediational Analyses

Consistent with findings from Experiments 1 and 2, our HLM analysis revealed that individuals were rated as significantly more likable when displaying matched facial expressions (M=2.97, SE=.08) than when displaying non-matched facial expressions (M=2.30, SE=.06), t(34)=8.62, p<.001. As expected, this analysis also showed that matched facial expressions were rated as significantly more predicted (M=3.10, SE=.08) than non-matched facial expressions (M=1.68, SE=.05), t(34)=17.62, p<.001. Crucially, analyses also revealed that the relationship between face match condition (matched, non-matched) and likability ratings was significantly mediated by ratings of predictability. Predictability ratings accounted for 97.5% of the relationship between face match condition and ratings of likability and remained a significant predictor of likability ratings in this model (B=.47, SE=.04, t(34)=10.95, p<.001). A Sobel test confirmed significant mediation (Z=9.30, p<.001). When controlling for the effect of predictability, match condition was no longer a significant predictor of likability (B=.02, SE=.07; t(34)=0.25, p =.804), suggesting the relationship was fully mediated by ratings of predictability.
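The Sobel statistic referred to above tests whether the indirect path (match condition → predictability → likability) differs from zero, using the standard single-level formula z = ab / √(b²·SE_a² + a²·SE_b²). The sketch below is a hedged illustration: the function names are hypothetical, the coefficients are invented for demonstration because the path-a coefficient and its standard error are not reported in this section, and a multilevel mediation analysis such as the one described here may estimate the paths and the proportion mediated differently.

```python
from math import sqrt
from statistics import NormalDist

def sobel_test(a, se_a, b, se_b):
    """Sobel (first-order delta method) test of the indirect effect a*b.

    a: effect of the predictor on the mediator (e.g., match condition -> predictability)
    b: effect of the mediator on the outcome, controlling for the predictor
    Returns (z, two-sided p).
    """
    se_ab = sqrt(b**2 * se_a**2 + a**2 * se_b**2)
    z = a * b / se_ab
    p = 2 * NormalDist().cdf(-abs(z))  # two-sided p from the standard normal
    return z, p

def proportion_mediated(a, b, c_prime):
    """Indirect effect as a share of the total effect (a*b + c')."""
    return a * b / (a * b + c_prime)

# Hypothetical coefficients for illustration only (not this study's estimates).
z, p = sobel_test(a=1.0, se_a=0.1, b=0.5, se_b=0.05)
print(f"Sobel z = {z:.2f}, p = {p:.2g}")
print(f"proportion mediated = {proportion_mediated(1.0, 0.5, 0.02):.3f}")
```

The decomposition total = a·b + c′ holds exactly for single-level least-squares models; in multilevel models it holds only approximately, which is one reason reported mediation percentages can differ slightly from this simple ratio.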

Predictability Predicts Likability Ratings

In addition, to move beyond broad condition-based differences, we utilized HLM to examine whether within-subject differences in ratings of predictability significantly predicted within-subject differences in perceptions of likability, ignoring match condition and including neutral face trials. This analysis revealed that individuals who portrayed expressions that were rated as more predicted were also rated as more likable (B=.46, SE=.04; t(34)=11.45, p<.001). We also examined this relationship separately within matched, non-matched, and neutral facial expression conditions. This analysis revealed that, within subjects, ratings of predictability predicted ratings of likability within just the match trials (B=.50, SE=.05; t(34)=10.77, p<.001), within just the non-match trials (B=.41, SE=.06; t(34)=6.77, p<.001), and within only the neutral face trials (B=.42, SE=.05; t(34)=8.50, p<.001). Moreover, the strength of the effect did not differ across conditions (matched vs. non-matched: B=.08, SE=.07; t(34)=1.28, p=.201; matched vs. neutral: B=.08, SE=.05; t(34)=1.57, p=.127; non-matched vs. neutral: B=.01, SE=.05; t(34)=0.12, p=.905). See Figure 2a.⁶
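For intuition, the within-subject relationship tested above can be approximated by a pooled least-squares slope computed on participant-centered scores (a "within" or fixed-effects estimator). This is a simplification we introduce for illustration only: it ignores the random-effects structure and REML estimation of the reported HLM models, so its estimates would not reproduce the reported coefficients. The data layout and function name are hypothetical.

```python
from collections import defaultdict
from statistics import fmean

def within_subject_slope(trials):
    """Pooled slope of likability on predictability after centering both
    variables on each participant's own mean.

    trials: iterable of (participant_id, predictability, likability) tuples.
    """
    x_by, y_by = defaultdict(list), defaultdict(list)
    for pid, x, y in trials:
        x_by[pid].append(x)
        y_by[pid].append(y)
    sxy = sxx = 0.0
    for pid in x_by:
        mx, my = fmean(x_by[pid]), fmean(y_by[pid])
        for x, y in zip(x_by[pid], y_by[pid]):
            sxy += (x - mx) * (y - my)   # within-participant co-deviation
            sxx += (x - mx) ** 2         # within-participant variation in predictability
    return sxy / sxx

# Hypothetical ratings: p02 rates everyone 1 point more likable than p01,
# but within each participant likability rises 0.5 per predictability step.
trials = [("p01", 1, 1.5), ("p01", 3, 2.5), ("p02", 2, 3.0), ("p02", 4, 4.0)]
print(within_subject_slope(trials))
```

Because each participant's mean is removed first, the baseline difference between p01 and p02 does not inflate the slope; only how ratings co-vary within each participant contributes.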

Fig. 2. Models for within-subjects predictability ratings predicting social perceptions in Experiments 3 and 4.

Note: (a) HLM model for within-subject likability ratings predicted by within-subject predictability ratings, face match condition (match, non-match, neutral), and their interactions. Predictability ratings significantly predict perceived likability, and this effect does not differ across face match conditions (match, non-match, neutral). Consistent with the mediational analyses, this model shows that there is no longer a significant difference in average perceived likability between match and non-match trials when controlling for predictability ratings. (b) HLM model for within-subject trustworthiness ratings predicted by within-subject predictability ratings, face match condition (match, non-match), and their interaction. Predictability ratings significantly predict perceived trustworthiness, and this effect does not differ across face match conditions (match, non-match). Consistent with the mediational analyses, which found evidence of significant but only partial mediation, this model shows that there is still a significant difference in average perceived trustworthiness between match and non-match trials even when controlling for predictability ratings.

Discussion

In Experiment 3, we replicated and extended the findings of Experiments 1 and 2, including a trial-by-trial measure of facial expression predictions that allowed us to assess predictions directly instead of making assumptions about a perceiver’s predictions based on stereotypicality. Using HLM, we replicated the results of Experiment 1, finding that an individual is judged as more likable when displaying a facial expression that matches the emotion category of the preceding scenario than when his or her facial expression does not match it. Moreover, we extended these results by conducting a mediational analysis that demonstrated that participants’ individual predictions about facial expressions underlie this effect.

Importantly, the trial-by-trial measure of predictability also allowed us to evaluate whether the effect of predictions was driven primarily by trials in which a stereotype or convention was violated (non-match trials), which could have led participants to judge the individual as bizarre or maladaptive. The effect of predictions on evaluative judgments was still observed when considering only the match trials, in which an “appropriate” facial expression was always displayed (i.e., an expression congruent with the emotion evoked by the scenario: a smiling face after a happy scenario, a pouting face after a sad scenario, a startled face after a fear scenario). Similarly, as expected, the effect of predictions on perception was also observed when examining only trials with stereotypical facial expressions that did not match the emotion category evoked by the scenario (non-match trials), and when examining only trials with neutral facial expressions (for which predictability ratings may be expected to fall, on average, between those for match and non-match trials). These observations indicate that the impact of predictability on social perception extends beyond blatant violations of stereotypical expressions and holds even when deviations from expectations are more nuanced and individualized.

Experiment 4: Generalizability

In Experiment 4, we sought to replicate the results of Experiment 2 and to extend our findings from Experiment 3 to trustworthiness by explicitly investigating whether individual facial expression predictions also underlie perceptions of trustworthiness. As in Experiment 3, we used trial-by-trial predictability ratings as a measure of the degree of subjective prediction fulfillment and examined the relationship between prediction fulfillment and judgments of trustworthiness. We predicted that individuals would be judged as more trustworthy when their facial expressions fulfilled the perceiver’s predictions, and that predictability ratings would mediate the impact of stereotypical matching on judgments of trustworthiness (as they did for judgments of likability in Experiment 3). As in Experiment 3, we also performed separate analyses for match trials, in which only “appropriate” (stereotype-congruent) facial expressions were presented, to rule out the possibility that the impact of predictions on social perception is driven by non-match trials in which a blatant stereotype or norm violation occurred.

Critically, in Experiment 4, we also assessed whether perceived predictability impacted social judgments in a real-world situation of high consequence: the 2016 United States presidential election. To do so, we asked participants to rate the perceived predictability, likability and trustworthiness of the candidates of the two major political parties (Hillary Clinton and Donald Trump). In the context of a political campaign, basic social judgments of a candidate (i.e., likability or trustworthiness) are critical factors that shape voting behavior and could sway the results of an election (Kinder, 1983; Miller & Miller, 1976; Rosenberg & Sedlak, 1972). Consistent with our findings in Experiments 1–3, we hypothesized that candidates’ perceived predictability would be positively related to perceptions of the candidates’ likability and trustworthiness.

Furthermore, both presidential candidates consistently violated various stereotypes throughout the electoral campaign. Hillary Clinton was an unconventional presidential candidate because of her gender: she was the first woman in American history to be nominated for president by a major political party. Donald Trump was an unconventional presidential candidate in that he lacked a background in politics and consistently violated expectations of the decorum with which a presidential candidate is expected to behave (e.g., by repeatedly using non-normatively negative language; for a review, see Conway, Repke, & Houck, 2017). Thus, we also explored whether the degree to which participants expected stereotypical facial expressions (measured through our paradigm) predicted social judgments of the candidates or voting behavior. That is, we wanted to explore whether individuals whose predictions are strongly tied to stereotype consistency/violation within our task are also particularly sensitive to stereotype consistency/violation in a real-world context. We used predictability ratings in our task to assess the degree to which participants expected stereotypical expressions: when a perceiver experienced non-matched faces as less similar to their expectations (e.g., gave a pouting face after a happy scenario a lower predictability rating), this indicates that their facial expression predictions were fairly stereotypical (e.g., the participant was likely expecting a smiling face after a happy scenario); by contrast, when a perceiver experienced non-matched faces as more similar to their expectations, this indicates that their facial expression predictions were less stereotypical. We used these metrics to examine whether the stereotypicality of participants’ predictions was related to their perceptions of the candidates.
We hypothesized that individuals who made more stereotypical facial expression predictions would like and trust the candidates less and would even be less likely to vote for them, given that the candidates routinely violated various stereotypes/norms about presidential candidates throughout their campaigns.

Finally, we tested the generalizability of our effect by recruiting Experiment 4 participants from Amazon Mechanical Turk, which provides an older and more diverse population than typical university-based samples (Ross, Zaldivar, Irani, & Tomlinson, 2010). To the extent that our hypotheses for Experiment 4 are supported, our findings may generalize to how people make real-world social judgments with widespread consequences.

Method

Participants

Participants were 90 young adult United States citizens recruited through Amazon Mechanical Turk (Mean Age±SD: 31±5 y.o.; 38 female; one participant did not report gender). Sample size was based on Experiment 3 and was increased to account for the smaller number of trials in the task and for the higher response attrition anticipated for participants completing the task online rather than in a controlled lab environment. Participants received $10 or $5 for their participation. All participants had HIT approval ratings of at least 95%. To protect against negligent participation, only individuals who responded to at least 2/3 of the experimental trials within the task were remunerated for their participation and included in the analyses.⁷ Sixteen participants identified themselves as Republicans, 49 identified themselves as Democrats, and 25 identified with neither party. The majority of participants (82 of 90) were registered voters.
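The 2/3 response criterion amounts to a simple per-participant filter. The sketch below is illustrative only: the data format (one entry per experimental trial, with None marking a missed response) and the function name are hypothetical, and exact fractions are used so that a participant who answered exactly 2/3 of the trials (e.g., 24 of 36) is not excluded by floating-point rounding.

```python
from fractions import Fraction

MIN_RESPONSE_RATE = Fraction(2, 3)  # respond to at least 2/3 of experimental trials


def meets_inclusion(responses):
    """True if a participant answered at least 2/3 of the experimental trials.

    `responses` holds one entry per experimental trial, with None marking a
    trial without a response (a hypothetical data format).
    """
    if not responses:
        return False
    answered = sum(r is not None for r in responses)
    return Fraction(answered, len(responses)) >= MIN_RESPONSE_RATE
```

With 36 experimental trials, for example, a participant with 24 responses is retained and one with 23 is not.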

Materials and Procedure

For Experiment 4, instructions and stimuli were presented using the Qualtrics online research platform. Participants performed the same task as in Experiment 3, with the following changes: (i) the total number of trials was 39 (3 practice trials: 2 female, 1 male; 36 experimental trials: 18 female, 18 male); (ii) only sad, fear, and happy faces were presented after the scenario (no neutral faces); (iii) only the mouth-closed version of the faces was used; and (iv) participants first rated how similar the target person looked to what they had expected (predictability rating) and then how trustworthy the target person was (trustworthiness rating), on scales from 1 to 4 (1=not at all similar/4=very similar; 1=untrustworthy/4=very trustworthy). Eight trials in total were excluded from analyses because an error in the task program caused a target person to be repeated. Additionally, at the end of the task, participants answered the following questions regarding their political preferences and their evaluations of the candidates in the then-upcoming 2016 United States presidential election: (1) Do you consider yourself a Republican? (yes/no); (2) Do you consider yourself a Democrat? (yes/no); (3) Are you a registered voter? (yes/no); (4) and (5) How likely are you to vote for Donald Trump [Hillary Clinton]? (1=unlikely, 4=very likely); (6) and (7) How predictable is Donald Trump [Hillary Clinton]? (1=unpredictable, 4=very predictable); (8) and (9) How likable is Donald Trump [Hillary Clinton]? (1=unlikable, 4=very likable); (10) and (11) How trustworthy is Donald Trump [Hillary Clinton]? (1=untrustworthy, 4=very trustworthy). The order of the questions regarding the two presidential candidates was counterbalanced across participants. Means, standard deviations, and inferential statistics comparing ratings of Trump and Clinton in our sample can be found in Table S3 in the supplemental materials.

Results

Mediational Analyses

Data were again analyzed using HLM. Consistent with findings from Experiments 1–3, this analysis revealed that individuals exhibiting matched facial expressions were rated as significantly more trustworthy (M=3.13, SE=.06) than those exhibiting non-matched facial expressions (M=2.23, SE=.05), t(89)=15.56, p<.001. As expected, it also revealed that matched facial expressions received significantly higher predictability ratings (M=3.13, SE=.06) than non-matched facial expressions (M=1.68, SE=.06), t(89)=25.95, p<.001. Crucially, the relationship between face match condition and trustworthiness was significantly mediated by predictability ratings. Predictability ratings accounted for 85.7% of the relationship between face match condition and trustworthiness ratings and remained a significant predictor of trustworthiness ratings in this model (B=.52, SE=.03, t(89)=16.62, p<.001). A Sobel test confirmed significant mediation (Z=13.99, p<.001). However, face match condition also remained a significant predictor of trustworthiness ratings in this model (B=.13, SE=.03, t(89)=4.24, p<.001), suggesting that predictability ratings only partially mediated this relationship.
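For readers less familiar with these statistics, the sketch below gives the standard single-level formulas: the Sobel statistic z = ab / √(b²·SE_a² + a²·SE_b²) and the proportion mediated (c − c′)/c. Here a is the effect of face match condition on predictability, b is the effect of predictability on trustworthiness controlling for match condition, c is the total effect, and c′ is the direct effect. How these quantities were extracted from the HLM models is not detailed here, so the function names and inputs are illustrative assumptions rather than the analysis code actually used.

```python
import math


def sobel_test(a, se_a, b, se_b):
    """Sobel z and two-tailed normal p-value for the indirect effect a*b.

    a, se_a: path from predictor (face match) to mediator (predictability).
    b, se_b: path from mediator to outcome, controlling for the predictor.
    """
    indirect = a * b
    se_indirect = math.sqrt(b**2 * se_a**2 + a**2 * se_b**2)
    z = indirect / se_indirect
    p = math.erfc(abs(z) / math.sqrt(2))  # two-tailed p under a standard normal
    return z, p


def proportion_mediated(total_effect, direct_effect):
    """Share of the total effect c carried by the mediator: (c - c') / c."""
    return (total_effect - direct_effect) / total_effect
```

A proportion mediated below 1 together with a direct effect that remains significant is the pattern described above as partial mediation.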

Predictability Predicts Trustworthiness Ratings

In addition, we again used HLM to examine whether within-person differences in predictability ratings significantly predicted within-person differences in perceived trustworthiness, ignoring match condition. This analysis revealed that individuals whose expressions were rated as more predicted by a perceiver were also rated as more trustworthy (B=.56, SE=.03; t(89)=18.12, p<.001). As in Experiment 3, this effect reflected more nuanced changes in predictability and was not simply driven by the larger changes in predictability across face match conditions (matched and non-matched). That is, predictability ratings predicted trustworthiness ratings within subjects when looking only at matched trials (B=.48, SE=.03; t(89)=14.84, p<.001) and only at non-matched trials (B=.59, SE=.03; t(89)=15.61, p<.001), although the effect was significantly stronger within non-matched than within matched trials (B=−.11, SE=.03; t(89)=3.75, p<.001). See Figure 2b.⁸
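Within-person effects of this kind are commonly estimated by centering each predictor on the participant’s own mean before fitting the multilevel model. The sketch below, which uses a hypothetical data format, shows person-mean centering and a pooled within-person (fixed-effects) slope. It is a simplification of the HLM reported above: it ignores random effects and yields no standard errors.

```python
from collections import defaultdict


def person_mean_center(rows):
    """Subtract each participant's own mean from their trial-level ratings.

    rows: list of (subject_id, predictability, trustworthiness) tuples
    (hypothetical format). Returns (subject_id, x_centered, y_centered) tuples.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0])
    for subj, x, y in rows:
        s = sums[subj]
        s[0] += x
        s[1] += y
        s[2] += 1
    means = {subj: (sx / n, sy / n) for subj, (sx, sy, n) in sums.items()}
    return [(subj, x - means[subj][0], y - means[subj][1]) for subj, x, y in rows]


def pooled_within_slope(rows):
    """OLS slope of centered trustworthiness on centered predictability.

    This is the classic 'within' estimator: only variation around each
    participant's own mean contributes, so stable between-person differences
    in rating style cannot drive the slope.
    """
    centered = person_mean_center(rows)
    sxy = sum(x * y for _, x, y in centered)
    sxx = sum(x * x for _, x, _ in centered)
    return sxy / sxx
```

If two participants differ in how high they rate overall but show the same trial-to-trial relationship, the within slope recovers that shared relationship, whereas a regression on raw scores would be pulled toward the between-participant pattern.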

Judgments of Presidential Candidates

To assess whether predictability was a determinant of perceived likability and trustworthiness in situations where such social perceptions have high real-world consequence, we regressed the likability and trustworthiness ratings of each presidential candidate (Clinton and Trump) on ratings of their predictability. As hypothesized, perceiving a candidate as more predictable predicted greater perceived likability of the candidate (βClinton=.17, F(1,89)=2.73, p=.102; βTrump=.29, F(1,89)=8.06, p=.006) and greater perceived trustworthiness of the candidate (βClinton=.23, F(1,89)=4.98, p=.028; βTrump=.23, F(1,89)=4.86, p=.030). However, the relationship between predictability and likability did not reach conventional levels of significance for Clinton.
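With a single predictor, the standardized regression coefficient β equals Pearson’s r between predictor and outcome, and the regression F statistic, with 1 and n − 2 degrees of freedom for an ordinary least-squares fit with an intercept, equals r²(n − 2)/(1 − r²). The minimal sketch below illustrates these textbook identities; the function names are our own and this is not the analysis code used in the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation; equals the standardized slope in a one-predictor OLS."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simple_regression_f(r, n):
    """F statistic with (1, n - 2) df for a one-predictor OLS regression."""
    return r**2 * (n - 2) / (1 - r**2)
```

Because F grows with both r and n, a modest standardized coefficient can reach significance in one model (e.g., trustworthiness) while a slightly smaller one falls short in another (e.g., Clinton’s likability).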

Stereotypicality of Predictions and Real-World Judgments

Further, we examined whether reactions to stereotypical prediction violations within our paradigm predicted reactions to individuals violating stereotypes in real-world situations of high consequence. Specifically, we assessed whether holding more stereotypical facial expression predictions (indexed by lower predictability ratings for non-matched faces) predicted perceptions of trustworthiness and likability for the 2016 US presidential candidates, both of whom regularly violated behavioral and/or stereotype-based norms.
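The stereotypicality index described above can be sketched as each participant’s mean predictability rating on non-matched trials, where lower values correspond to more stereotypical predictions. The trial format and condition labels below are hypothetical; each resulting score would then serve as the predictor in a simple regression on a candidate rating.

```python
from collections import defaultdict


def nonmatch_predictability(trials):
    """Mean predictability rating on non-matched trials, per participant.

    trials: iterable of (subject_id, condition, predictability) tuples, with
    condition in {"match", "nonmatch"} (hypothetical coding) and None for a
    missing rating. Lower scores indicate more stereotypical predictions.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for subj, condition, rating in trials:
        if condition == "nonmatch" and rating is not None:
            totals[subj] += rating
            counts[subj] += 1
    return {subj: totals[subj] / counts[subj] for subj in counts}
```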

As predicted, a series of linear regressions revealed that lower predictability ratings for non-matched faces within our task (i.e., holding more stereotypical facial expression predictions) significantly predicted perceiving Clinton as less likable (β=.37, F(1,89)=13.64, p<.001) and less trustworthy (β=.30, F(1,89)=8.48, p=.005), and even predicted a decreased likelihood of voting for her (β=.25, F(1,89)=5.67, p=.019). For Trump, holding more stereotypical facial expression predictions (i.e., lower predictability ratings for non-matched faces within our task) significantly predicted perceiving him as less trustworthy (β=.23, F(1,89)=4.85, p=.030), but did not predict his perceived likability (β=.06, F(1,89)=0.27, p=.602) or the likelihood of voting for him (β=.07, F(1,89)=0.43, p=.513).

Discussion

In Experiment 4, we extended our findings from Experiment 3 to trustworthiness and showed that facial expression predictions influence social judgments in a more diverse group of participants, supporting the generalizability of the effect. Critically, this study also showed that the perceived predictability of real-world individuals, the 2016 US presidential candidates, predicted judgments of their likability and trustworthiness, two important social judgments that can impact voting decisions (Kinder, 1983; Miller & Miller, 1976; Rosenberg & Sedlak, 1972). In line with our hypotheses, participants who thought a candidate was more predictable judged that candidate as more likable and more trustworthy. However, the relationship between predictability and likability did not reach significance for Clinton (p=.102).

Both Clinton and Trump consistently violated stereotypes about presidential candidates throughout the presidential campaign (e.g., gender stereotypes in the case of Clinton and conventionality stereotypes in the case of Trump). Thus, in Experiment 4 we also examined whether holding more stereotypical facial expression predictions within our experimental task predicted social perceptions of these two candidates, who regularly violated behavioral norms and expectations throughout their campaigns. As expected, participants who held more stereotypical facial expression predictions perceived both Clinton and Trump as less trustworthy than those who held less stereotypical facial expression predictions. Holding more stereotypical facial expression predictions was also associated with perceiving Clinton (but not Trump) as less likable and with being less likely to vote for her. One possible explanation for these partially divergent patterns concerns whether the norm/stereotype violations of the two candidates were, or were perceived to be, similarly expectancy-violating and equally relevant to the social context. That is, although both candidates violated normative expectations for presidential candidates, we did not assess the degree to which our sample perceived their violations as equivalent. Of note, our sample rated Clinton as significantly more predictable than Trump (see Table S3 in the supplemental materials). Thus, it is possible that a violation of gender stereotypes (i.e., a woman running for United States president) has less impact on how predictable a person is judged to be than constant violations of behavioral and social norms. At the same time, a violation of gender stereotypes might be considered a more serious norm violation in the context of a presidential election than a violation of conventionality, such that holding more stereotypical expectations would affect perceptions of Clinton more negatively than perceptions of Trump.
Future research should examine the extent to which such effects are moderated by awareness of stereotype violations and by perceptions of the severity or relevance of such violations. In addition, our sample included more individuals identifying as Democrats (n=49) than as Republicans (n=16) or as affiliated with neither party (n=25), which may have contributed to Clinton being rated more positively than Trump overall in the present study. Overall, however, our findings demonstrate that our task has real-world implications, predicting perceptions of presidential candidates and even self-reported likelihood of voting for them, which bears on real-world decisions of great import.

Thus far, we have demonstrated that the extent to which social information blatantly confirms or violates predictions influences social judgments (Experiments 1 and 2), that this effect holds for more nuanced prediction violations that do not necessarily involve blatant norm violations, that this effect is mediated by explicit predictions of the perceivers (Experiments 3 and 4), and that this effect extends to real-world social evaluative judgments of high import (Experiment 4). However, we have yet to examine potential mechanisms for this effect. In the remaining studies, we examine the role of changes in felt affect and processing fluency as two potential causal explanations for our findings.

Experiment 5: Predictions and Reported Affect

Findings from Experiment 4 demonstrate that expectations about facial expressions are important drivers of social perception that extend beyond laboratory-based measures to influence real-world social judgments of high consequence. However, the mechanism by which predictions influence social perception remains unexplored. In the present experiment, we examine whether changes in felt (conscious) affect offer a viable explanation for our previous findings.

According to affect-as-information theory (Clore et al., 2001; Clore & Huntsinger, 2007; Schwarz & Clore, 1983), individuals use their feelings as a source of information when making decisions or social judgments, particularly when they are unsure of the source of their feelings. Indeed, previous research suggests that incidental affect (i.e., affect unrelated to the decision at hand) can influence how individuals perceive and respond to others, including judgments about whether they pose a threat (Baumann & DeSteno, 2010; Wormwood, Lynn, Barrett, & Quigley, 2016), judgments about whether to cooperate with them or offer assistance (Bartlett, Condon, Cruz, Baumann, & DeSteno, 2012; Isen & Levin, 1972), and even judgments about whether they should be admitted to medical school (Redelmeier & Baxter, 2009). Most relevant to the current investigation, previous research has also demonstrated that incidental affect can influence the perceived trustworthiness and likability of others (Anderson, Siegel, White, & Barrett, 2012). Thus, it is possible in our experiments that perceivers experience positive affect when their expectations about a facial expression are fulfilled and, in turn, misattribute those positive feelings to the individual being perceived, leading them to evaluate the target person more positively (i.e., as more likable and trustworthy). If so, predictions may drive social perception because perceivers misattribute the positive or negative feelings that result from a met or violated prediction, respectively, to the individual being perceived.

In order to test this possibility, we conducted an experiment using a paradigm nearly identical to that of Experiments 1 and 2, but instead of asking participants to make judgments about the individuals being displayed, participants were asked to self-report their own affective feelings on each trial (i.e., their felt valence and arousal). To the extent that changes in conscious affective feelings causally underlie our findings, we would expect to see more positive affect reported on matched trials than on non-matched trials, regardless of the specific emotion category being evoked by the scenario or the specific face emotion category being displayed. Conversely, if changes in felt affect are associated with the emotion categories evoked by the scenarios (e.g., greater self-reported positive affect following happy scenarios than fear and sad scenarios) or with the emotion categories of the stereotypical facial expressions presented (e.g., greater self-reported positive affect following stereotypical happy expressions than stereotypical fear and sad expressions), this pattern of findings would suggest that affective misattribution is not a viable mechanism underlying the influence of predictions on social perception.

Method

Participants

Participants were 37 young adults⁹ recruited from Northeastern University (Mean Age±SD: 19±1 y.o.; 21 female). All participants reported normal or corrected-to-normal visual acuity, were native English speakers, and received course credit for their participation. The target sample size of n=37 was based on the results of Experiments 1 and 2.

Materials and Procedure

Materials and procedure were identical to Experiments 1 and 2 except that, instead of rating the likability or trustworthiness of the target person, participants rated how pleasant they felt (valence rating) followed by how activated they felt (arousal rating) on 4-point scales (1=unpleasant/4=very pleasant; 1=deactivated/4=very activated).

Results

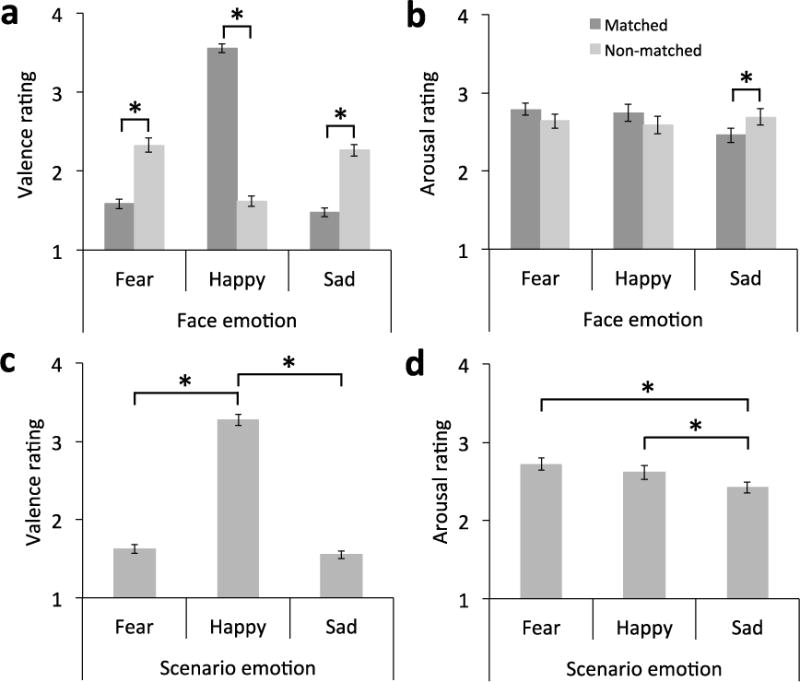

Valence Ratings

A 2 (face match: matched, non-matched) by 3 (face emotion category: sad, fearful, happy) repeated-measures ANOVA on valence ratings revealed a significant main effect of face emotion category, F(2, 72)=93.40, p<.001, ηp²=.722. Bonferroni tests demonstrated that participants reported significantly more positive affect on trials with happy faces (M=2.58, SE=.04) than on trials with either sad faces (M=1.87, SE=.05), p<.001, or fear faces (M=1.96, SE=.06), p<.001 (sad vs. fear: p=.077). Consistent with an affective-misattribution account, this analysis additionally revealed that participants reported more positive affective feelings after matched faces (M=2.21, SE=.03) than after non-matched faces (M=2.07, SE=.06), F(1, 36)=5.98, p=.020, ηp²=.142.

Inconsistent with an affective-misattribution account, however, this analysis also revealed a significant interaction between face match and face emotion category, F(2, 72)=233.57, p<.001, ηp²=.866. To examine this interaction, we conducted a series of repeated-measures ANOVAs, one for each face emotion category, with face match as the within-subject factor. For trials with stereotypical happy expressions, participants reported significantly more positive affect on matched trials than on non-matched trials, F(1, 36)=403.93, p<.001, ηp²=.918. However, the opposite was true for trials with stereotypical fear and sad expressions (F(1, 36)=42.31, p<.001, ηp²=.540 and F(1, 36)=79.63, p<.001, ηp²=.689, respectively), where participants reported significantly more positive affect on non-matched trials than on matched trials. See Figure 3a.
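Partial eta squared can be recovered from an F statistic and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). The short check below applies this standard identity to the F values reported for the valence ratings; it reproduces the effect sizes .722, .142, .866, .918, .540, and .689 to three decimals.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its df."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error, reported eta_p^2) for the Experiment 5 valence ANOVAs
reported = [
    (93.40, 2, 72, 0.722),   # main effect of face emotion category
    (5.98, 1, 36, 0.142),    # main effect of face match
    (233.57, 2, 72, 0.866),  # face match x face emotion category interaction
    (403.93, 1, 36, 0.918),  # happy faces: matched vs. non-matched
    (42.31, 1, 36, 0.540),   # fear faces: matched vs. non-matched
    (79.63, 1, 36, 0.689),   # sad faces: matched vs. non-matched
]

for f, df1, df2, eta in reported:
    recomputed = partial_eta_squared(f, df1, df2)
    print(f"F({df1}, {df2}) = {f}: eta_p^2 = {recomputed:.3f} (reported {eta:.3f})")
```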

Figure 3. Results in Experiment 5.

Note: (a) Trials with predicted facial expressions (matching the emotion evoked by the scenario) did not yield higher (more positive) valence ratings, which is inconsistent with affective misattribution as a viable mechanism underlying the effect of predictions on social perception. (b) Similarly, arousal ratings were not driven by prediction. (c) Valence ratings were higher (more positive) for happy than fear or sad scenarios. (d) Arousal ratings were lower for sad than happy and fear scenarios. Asterisks indicate p<.05.

Arousal Ratings

A 2 (face match: matched, non-matched) by 3 (face emotion category: sad, fearful, happy) repeated-measures ANOVA failed to reveal a significant main effect of face emotion category on self-reported arousal, F(2, 72)=1.99, p=.145, ηp²=.052. Inconsistent with an affective-misattribution account, this analysis also failed to reveal a significant main effect of face match on self-reported arousal, F<1. Also inconsistent with an affective-misattribution account, the interaction between face match and face emotion category reached significance, F(2, 72)=4.09, p=.021, ηp²=.102. To examine this interaction, we conducted a series of repeated-measures ANOVAs, one for each face emotion category, with face match condition as the within-subject factor. These analyses revealed no differences in self-reported arousal between matched and non-matched trials for either happy or fear faces (F(1, 36)=1.40, p=.245, ηp²=.037 and F(1, 36)=1.74, p=.195, ηp²=.046, respectively). However, participants reported significantly lower arousal on matched trials (M=2.46, SE=.09) than on non-matched trials (M=2.69, SE=.11) for sad faces, F(1, 36)=4.28, p=.046, ηp²=.106. See Figure 3b.

Felt Affect By Emotion Scenario

In light of these results, we hypothesized that felt affect was driven by scenario emotion category rather than by predictions. To test this, we conducted two repeated-measures ANOVAs, one for valence ratings and one for arousal ratings, with emotion scenario condition as the within-subject factor. These analyses revealed a significant main effect of emotion scenario on valence ratings, F(2, 72)=247.75, p<.001, ηp²=.873. Post-hoc Bonferroni comparisons revealed that participants reported significantly higher (more positive) valence on trials with happy scenarios (M=3.27, SE=.07) than on trials with sad scenarios (M=1.55, SE=.05), p<.001, or fear scenarios (M=1.62, SE=.06), p<.001. There were no differences in reported valence between trials with sad and fear scenarios, p=.207. See Figure 3c. The analyses also revealed a significant effect of emotion scenario condition on arousal ratings, F(2, 72)=8.24, p=.001, ηp²=.186. Post-hoc Bonferroni comparisons revealed that participants reported significantly lower arousal on trials with sad scenarios (M=2.42, SE=.07) than on trials with happy scenarios (M=2.62, SE=.09), p=.045, or fear scenarios (M=2.72, SE=.08), p<.001. There were no differences in reported arousal between trials with happy and fear scenarios, p=.590. See Figure 3d.
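With three scenario categories there are three pairwise post-hoc comparisons (happy vs. sad, happy vs. fear, sad vs. fear). Under the standard Bonferroni correction, each uncorrected p-value is multiplied by the number of comparisons (capped at 1) and the result is compared against .05. We assume, but the text does not state, that the reported post-hoc p-values were adjusted in this way; the function below is a generic sketch.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

For example, with three comparisons an uncorrected p of .02 becomes an adjusted p of .06, which would no longer be significant at the .05 level.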

Discussion

The pattern of results observed suggests that changes in conscious affect are unlikely to underlie the effect of predictions on social perception. Across the four previous experiments, we found that predicted faces were evaluated as more likable and trustworthy than non-predicted faces, regardless of the emotion category. For affective misattribution to account for these findings, Experiment 5 would have needed to reveal that participants felt more affectively positive when presented with predicted faces than with unpredicted faces across all face emotion categories. Instead, the present experiment revealed that changes in felt affect differed across face emotion categories and scenario emotion categories rather than across face match and non-match conditions. That is, while participants did experience changes in both felt pleasantness and activation across different conditions in Experiment 5, their affect changed in response to the affective value of scenarios and facial expressions rather than in response to the predictability of the facial expressions (i.e., whether the facial expression matched the emotion category evoked by the preceding scenario or not). The pattern of results appears to be best explained by a series of main effects that directly follow from existing literature on emotion and emotion perception (e.g., Russell & Pratt, 1980): (1) participants reported more positive affect following happy scenarios than sad or fear scenarios (e.g., Wilson-Mendenhall et al., 2013); (2) participants reported more positive affect following happy faces than pouting (sad) or startled (fear) faces (e.g., Wild, Erb, & Bartels, 2001); and (3) participants reported lower arousal following sad scenarios than happy or fear scenarios (e.g., Wilson-Mendenhall et al., 2013).
Thus, our findings suggest that affective misattribution is unlikely to be the mechanism underlying the impact of predictions on social perception, as changes in felt affect accompanying prediction fulfillment and violation are not consistent across emotion categories.

Experiment 6: Processing of Predicted Faces

Another possible underlying mechanism for the impact of predictions on social perception, and one that should be consistent across emotion categories, is that prediction leads to a form of processing fluency (Winkielman et al., 2003) whereby the processing of predicted stimuli may be facilitated. According to the literature on processing fluency, ease of processing is associated with more positive evaluations (Winkielman et al., 2003). Thus, within this view, and consistent with our findings in Experiments 1–4, if the processing of predicted facial expressions is facilitated, then individuals displaying predicted expressions should be perceived more positively than those displaying non-predicted expressions. From a predictive coding perspective, we would expect facilitated processing of predicted stimuli, as the representation of expected sensory input has been shown to be highly efficient (Jehee, Rothkopf, Beck, & Ballard, 2006; Kok, Jehee, & de Lange, 2012).

A first step towards testing this explanation involves exploring whether the processing of predicted faces is indeed facilitated. In Experiment 6, we tested whether predicted (matched) faces exhibit privileged processing using a modified version of the paradigm from Experiments 1–5, in which the final facial expression was initially suppressed from conscious awareness using Continuous Flash Suppression (CFS; Tsuchiya & Koch, 2005). Instead of making person judgments on each trial, participants in Experiment 6 reported when they could first see each face as the contrast of the face image was slowly raised over the course of the trial, eventually breaking the suppression effect achieved through CFS. We hypothesized that expected facial expressions would be processed more efficiently than non-predicted facial expressions, and that this would be true across emotion categories. Thus, we predicted that participants would report seeing facial expressions earlier on trials where the stereotypical expression matched the preceding scenario’s emotion category than on trials where it did not.

Method

Participants

Participants were 42 young adults¹⁰ (24 female) recruited from Northeastern University and the surrounding Boston community through fliers and Craigslist.com advertisements (Mean Age±SD: 21±5 y.o.; age missing for 4 undergraduate participants from Northeastern University). Desired sample size was estimated based on previous work using a similar binocular suppression technique (Anderson, Siegel, Bliss-Moreau, & Barrett, 2011; Study 2). Participants received course credit or $10 for their participation. All participants reported normal or corrected-to-normal visual acuity. Participants wearing glasses were excluded from the analyses because glasses interfere with the proper functioning of the mirror stereoscope used to present stimuli in this experiment. Some of the participants (n=25) performed additional, unrelated tasks as part of a different study.

Materials and procedure

Instructions and stimuli were presented using MATLAB R2011a running on a Dell OptiPlex 980 with a 17-inch Dell LCD flat-screen monitor (resolution of 1280×1024). Participants sat with their heads on chin and forehead rests and viewed stimuli displayed on the screen through a mirror stereoscope at a distance of approximately 47 cm. The stereoscope allows a different stimulus to be presented simultaneously to each eye. Stimuli were presented in grayscale, surrounded by a white frame to facilitate binocular fusion. The task consisted of 3 practice trials followed by 45 experimental trials. Participants were asked to remain still during each trial with their forehead and chin on the rests. Prior to the task, eye dominance was determined for each participant using the Dolman method (Dolman, 1919; Fink, 1938), as research has shown that suppression under CFS is more effective when the image to be suppressed is presented to the non-dominant eye.

As in the experiments reported above, on each trial, a photograph of a neutral face of a target person was displayed for 5 s to both eyes (Figure 4a). A scenario was then displayed for 20 s, also to both eyes. Participants were asked to imagine the facial expression of the target person while reading. After a brief fixation screen (0.5 s), the dominant eye was presented with a series of high-contrast Mondrian patterns. The patterns alternated at a rate of 10 Hz for a maximum duration of 10 s. These patterns decreased in contrast linearly, updated every 10 ms, over the first 5 s, from full contrast to a final log10 contrast of −1. At the same time, the non-dominant eye was presented with an initially low-contrast face of the target person, portraying either a neutral facial expression or a stereotypical facial expression for one of the three emotion categories (e.g., a pout depicting sadness). The contrast of the suppressed face ramped up linearly, updated every 10 ms, over the first 1 s of the trial, from a very low initial log10 contrast of −3 to a final log10 contrast of −0.5. The face was presented in one of the four corners of the image and, as in Experiments 1–3, could “match” the evoked emotion (matched faces; 21 trials), be neutral (12 trials), or “not match” the evoked emotion (non-matched faces; 12 trials). As in Experiments 1 and 2, we focused our analyses on comparing responses to the same facial expressions when they either matched or did not match the preceding emotion scenario (i.e., neutral faces were not included in the analyses).
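The two contrast schedules described above can be sketched as follows. This is a minimal Python illustration, not the authors' Matlab code; it assumes that "linearly" means linear in log10 contrast (the endpoints are given in log10 units), and all function and constant names are hypothetical. Both ramps are sampled on the 10 ms update grid and held at their final values once the ramp ends.

```python
import numpy as np

UPDATE_S = 0.010     # contrast updated every 10 ms (from the Method)
MONDRIAN_HZ = 10.0   # Mondrian patterns alternate at 10 Hz
MAX_CFS_S = 10.0     # maximum duration of the CFS display

def log_contrast_ramp(t, start_log, end_log, ramp_s):
    """Log10 contrast at time t (s after CFS onset).

    Assumption: the ramp is linear in log10 contrast, running from start_log
    to end_log over ramp_s seconds and held at end_log afterwards.
    """
    frac = np.clip(np.asarray(t, dtype=float) / ramp_s, 0.0, 1.0)
    return start_log + frac * (end_log - start_log)

def mondrian_log_contrast(t):
    # dominant eye: full contrast (log10 = 0) down to log10 = -1 over 5 s
    return log_contrast_ramp(t, 0.0, -1.0, 5.0)

def face_log_contrast(t):
    # non-dominant eye: log10 = -3 up to log10 = -0.5 over 1 s
    return log_contrast_ramp(t, -3.0, -0.5, 1.0)

def mondrian_index(t):
    # index of the Mondrian pattern on screen at time t (new pattern every 100 ms)
    return int(np.floor(t * MONDRIAN_HZ))

def schedule(duration_s=MAX_CFS_S, update_s=UPDATE_S):
    # sample both ramps on the 10 ms update grid, including t = duration_s
    n = int(round(duration_s / update_s)) + 1
    t = np.arange(n) * update_s
    return t, mondrian_log_contrast(t), face_log_contrast(t)

t, mondrian, face = schedule()
# linear contrast values, if needed, are 10 ** (log10 contrast)
linear_mondrian = 10 ** mondrian
linear_face = 10 ** face
```

Note that under this reading the face reaches its final contrast after 1 s while the Mondrians keep fading until 5 s, so most breakthrough would be driven by the fading mask rather than the rising face.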

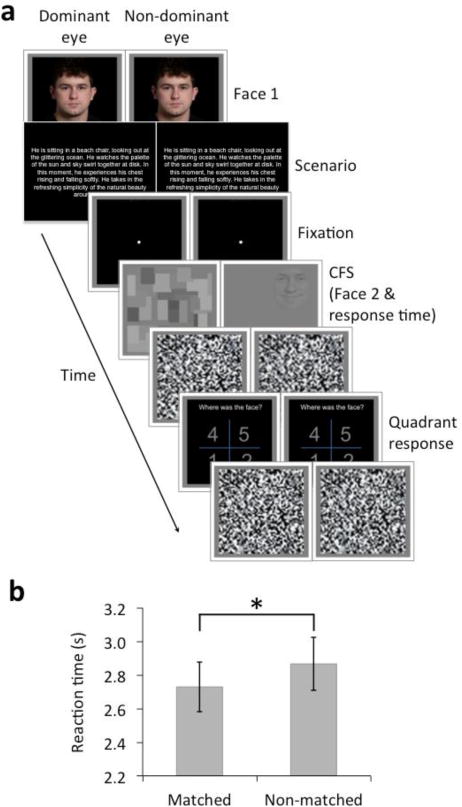

Fig. 4. Schematic representation of a trial and results in Experiment 6.

Note: (a) Each trial started with the presentation (to both eyes) of a photograph of a target person displaying a neutral face (Face 1; 5 s) followed by a short story (Scenario; 20 s). After a brief fixation screen, rapidly changing Mondrian patterns of decreasing contrast were presented to the dominant eye, while an image of the target person displaying a facial expression (that either matched or did not match the emotion scenario or was neutral) of increasing contrast was presented to the non-dominant eye (Face 2; max. time: 10 s). Participants were asked to press the spacebar as soon as they perceived the face and then to report in which quadrant the face was presented. (b) Faces portraying predicted facial expressions (matching the emotion evoked by the scenario) were processed faster than faces portraying unpredicted facial expressions (not matching), as revealed by faster reaction times. Asterisk indicates p<.05.

When using CFS, participants typically see only the Mondrian patterns presented to the dominant eye at first; the face presented to the non-dominant eye becomes visible once its contrast is high enough (and that of the Mondrian patterns low enough) to break suppression. Participants were instructed to press the spacebar as soon as they saw the face (within 10 s), and reaction time (RT) was collected as the outcome measure. Participants were then asked to report in which of the four corners of the image the face was located (unlimited time). The number of errors was expected to be low; this question served as a control to ensure that participants performed the task correctly and actually saw the faces. The inter-trial interval was 3 s.
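The trial timeline can be summarized as a sequence of phases. The sketch below is a hypothetical encoding: the `Phase` type, the phase names, and the rule that a missing or late keypress yields no RT are illustrative assumptions, since the text does not say how trials without a response were coded. It computes the longest possible timed portion of one trial, excluding the self-paced location report.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Phase:
    name: str
    duration_s: Optional[float]  # None marks a self-paced (untimed) phase

# Durations taken from the Method; the CFS phase ends early at the spacebar press.
TRIAL_PHASES = (
    Phase("neutral_face", 5.0),
    Phase("scenario", 20.0),
    Phase("fixation", 0.5),
    Phase("cfs_face", 10.0),            # maximum duration
    Phase("location_report", None),     # unlimited time
    Phase("inter_trial_interval", 3.0),
)

def max_timed_duration(phases=TRIAL_PHASES) -> float:
    """Upper bound on the timed portion of one trial, in seconds."""
    return sum(p.duration_s for p in phases if p.duration_s is not None)

def breakthrough_rt(press_s: Optional[float], max_s: float = 10.0) -> Optional[float]:
    """RT measured from CFS onset, or None if there was no valid press.

    Hypothetical coding rule: the paper does not specify how missed
    trials were handled.
    """
    if press_s is None or press_s < 0 or press_s > max_s:
        return None
    return press_s
```

With these durations, the timed portion of a trial lasts at most 38.5 s, plus however long the participant takes to report the face location.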

We used the same set of photographs as in Experiments 1–5. In addition, for faces presented during CFS, we used modified versions of these photographs, edited with Adobe Photoshop. A full-contrast black-and-white image of each face was cropped into an oval shape so that only the facial features remained and the hair, ears, and neck were removed. This cropped image was placed on a neutral gray background, and the edges of the oval were blended with the background. Finally, each image was cropped into a 113×113 pixel square such that the eyes, eyebrows, nose, mouth, and chin all remained in the square (see Figure S1 for sample stimuli). Twelve different identities were included (6 female, 6 male), each of which was used in 3 or 4 trials to yield the total of 45 experimental trials. We used the same set of scenarios as in the experiments above.
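The stimulus preparation steps (oval crop, blending into a gray background, square crop) were performed by hand in Adobe Photoshop. For readers who want to approximate them programmatically, a minimal NumPy sketch follows. The linear edge feathering, the 8-pixel feather width, the gray level of 0.5, and the centered square crop are all assumptions rather than values from the paper; a centered crop only preserves the features if the face sits near the image center.

```python
import numpy as np

def oval_blend(img, center, axes, feather_px=8.0, background=0.5):
    """Keep an elliptical region of a grayscale image (values in [0, 1]) and
    blend its edge into a uniform gray background.

    Assumed feathering: alpha ramps linearly from 0 at the oval boundary to 1
    at feather_px pixels inside it.
    """
    h, w = img.shape
    yy, xx = np.mgrid[0:h, 0:w]
    cy, cx = center
    ay, ax = axes
    # normalized elliptical radius: 0 at the center, 1.0 on the oval boundary
    r = np.sqrt(((yy - cy) / ay) ** 2 + ((xx - cx) / ax) ** 2)
    # approximate distance (px) inside the boundary, using the mean semi-axis
    inside_px = (1.0 - r) * (ay + ax) / 2.0
    alpha = np.clip(inside_px / feather_px, 0.0, 1.0)
    return alpha * img + (1.0 - alpha) * background

def center_crop(img, size=113):
    """Square crop of side `size` taken from the image center (assumption)."""
    h, w = img.shape
    if size > h or size > w:
        raise ValueError("crop larger than image")
    top = (h - size) // 2
    left = (w - size) // 2
    return img[top:top + size, left:left + size]

# Usage with a placeholder all-white "face" image
face = np.ones((200, 160))
stimulus = center_crop(oval_blend(face, center=(100, 80), axes=(90, 60)))
```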

Results

As expected, there were very few trials on which participants incorrectly reported the location of the target face (mean accuracy ± SD: 0.99 ± 0.02). A 2 (face match: matched, non-matched) by 3 (face emotion category: sad, fear, happy) repeated-measures ANOVA revealed facilitated processing for expected facial expressions. Face match condition significantly affected RT, with matched faces yielding faster RTs (M=2.74 s, SE=.15 s) than non-matched faces (M=2.87 s, SE=.16 s), F(1, 41)=5.37, p=.026, ηp²=.116. See Figure 4b.
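The reported effect sizes can be recovered from the F statistics with the standard identity for partial eta squared, ηp² = (F · df_effect) / (F · df_effect + df_error). The short check below applies it to the values reported in the Results. It assumes an uncorrected repeated-measures ANOVA, in which the error degrees of freedom for a within-subject effect are (n − 1) times the product of (k − 1) over the factors involved; the reported error df of 41 and 82 are consistent with n = 42 participants. The helper names are illustrative.

```python
from math import prod

def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)

def within_error_df(n_subjects: int, *levels: int) -> int:
    """Error df for a within-subject effect (no sphericity correction)."""
    return (n_subjects - 1) * prod(k - 1 for k in levels)

# (F, df_effect, df_error) as reported in the Results
reported = {
    "face match": (5.37, 1, 41),
    "match x emotion category": (2.47, 2, 82),
    "emotion category": (7.78, 2, 82),
}

for name, (f, d1, d2) in reported.items():
    print(f"{name}: partial eta^2 = {partial_eta_squared(f, d1, d2):.3f}")
```

The loop prints 0.116, 0.057 and 0.159, matching the values given in the text, and `within_error_df(42, 2)` and `within_error_df(42, 2, 3)` return 41 and 82, respectively.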

This effect did not differ across emotion categories (i.e., the interaction was not statistically significant; F(2, 82)=2.47, p=.091, ηp²=.057). However, the analysis also revealed a significant main effect of face emotion category on RTs, F(2, 82)=7.78, p<.001, ηp²=.159. Bonferroni-corrected pairwise comparisons revealed that fear faces yielded faster RTs (M=2.64 s, SE=.13 s) than either sad (M=2.94 s, SE=.17 s), p=.001, or happy faces (M=2.84 s, SE=.17 s), p=.025, which did not differ from each other, p=.675. This agrees with prior work reporting preferential processing of stereotypical fear faces (Yang, Zald, & Blake, 2007), likely because stereotypical fear expressions feature widened eyes that expose a large area of sclera, giving them higher contrast than expressions that display less sclera (see, e.g., Hedger, Adams, & Garner, 2015).
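The Bonferroni correction used for the three pairwise emotion-category comparisons multiplies each uncorrected p value by the number of comparisons (here m = 3) and caps the result at 1. A minimal sketch follows; the uncorrected p values in the usage lines are made-up placeholders for illustration, since the text reports only corrected values.

```python
from itertools import combinations

def bonferroni(p_values):
    """Bonferroni-adjusted p values: each p multiplied by m, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

categories = ("sad", "fear", "happy")
pairs = list(combinations(categories, 2))  # the 3 pairwise comparisons

# Placeholder uncorrected p values (illustrative only, not from the study)
uncorrected = [0.01, 0.02, 0.40]
for (a, b), p_adj in zip(pairs, bonferroni(uncorrected)):
    print(f"{a} vs {b}: adjusted p = {p_adj:.3f}")
```

Because the correction scales with the number of comparisons, an uncorrected p value must fall below .05 / 3 ≈ .017 to remain significant at the .05 level after adjustment.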

Discussion

Experiment 6 shows that the processing of predicted faces is facilitated relative to the processing of non-predicted faces. Given that the literature on processing fluency suggests that ease of processing is associated with more positive evaluations (Winkielman et al., 2003), these findings suggest that processing fluency may underlie the observed effect of facial expression predictions on social perception. These findings are also consistent with the neuroscience perspectives on predictive coding that motivate this research, which have reported highly efficient low-level processing of predicted stimuli (Jehee et al., 2006; Kok et al., 2012).

It is worth noting, however, that the exact level at which the facilitated processing observed here occurs remains unclear; there is continued debate about whether CFS paradigms can isolate non-conscious or pre-conscious processing of suppressed stimuli, or whether they merely capture differences in conscious processing after the suppressed stimulus has already reached awareness (see, e.g., Stein & Sterzer, 2014; Yang, Brascamp, Kang, & Blake, 2014). Thus, while our results indicate that predicted faces are processed more efficiently than non-predicted faces, the present paradigm cannot address whether this facilitation extends to unconscious processing.

General Discussion

Taken together, data across these six experiments provide evidence that facial expression predictions strongly contribute to our experience of other people. We demonstrated that individuals are evaluated as more likable and more trustworthy when displaying predicted facial expressions than when displaying non-predicted facial expressions (Experiments 1–4). Moreover, the effects of facial expression predictions on social perception proved broad, emerging across all three emotion categories explored; even for negative emotions, participants liked a person more for expressing the emotion when it was expected (Experiments 1–4). Importantly, the observed effects also extended beyond the laboratory to ratings of a real-world situation, namely, the 2016 United States presidential election: presidential candidates were evaluated as more likable and trustworthy if they were also perceived as more predictable (Experiment 4). Interestingly, we also found evidence suggesting that sensitivity to stereotypical prediction violations may represent a stable individual difference: participants who exhibited more stereotypical facial expression predictions within our experimental task also liked and trusted a presidential candidate who violated gender norms (Clinton) less, and were even less likely to vote for her (Experiment 4). Finally, in two experiments, we found that processing fluency, rather than affective misattribution, appears to be a better candidate for the mechanism underlying the effect of facial expression predictions on social perception (Experiments 5 and 6); predicted faces were processed more efficiently, which could in turn lead to more positive evaluations.

Our experimental design allowed us to examine the role of predictions in social perception directly, moving in several ways beyond the existing literature, which has typically studied the role of predictions only indirectly. First, we were able to assess the power of complex, multimodal predictions beyond perceptual priming (e.g., mere exposure; see Zajonc, 1968), as predictions were generated by participants imagining the target individual’s facial expression, not by presenting images of potential facial expressions to shape predictions perceptually. Second, our findings go beyond emotion congruence effects (e.g., Mehrabian & Wiener, 1967), in which the source of predictions and the prediction violations are presented simultaneously by the target individual. In our paradigm, predictions were evoked not by the target person in the moment but by the emotion scenario, and they were established before the target person’s facial expression was presented. Third, the findings demonstrate the effect of predictions on social perception above and beyond stereotype violation effects (e.g., Heilman, Wallen, Fuchs, & Tamkins, 2004; Heilman, 2001), as the effect of individual predictions on social perception was observed both across and within trials where the facial expressions were stereotypical matches or mismatches to the emotion evoked by the preceding scenario. Participants liked and trusted a target individual more when the displayed facial expression more closely matched their own predictions, even when examining only trials where all displayed facial expressions would be considered a stereotypical match to the predictions evoked by the preceding emotion scenario (e.g., a smiling face after a happy scenario).

The present experiments leave a number of intriguing questions open for future exploration. First, here we focused on emotion categories that varied in valence and arousal but that might all be considered to have an affiliative social quality. Future research could assess whether the effects described here are also observed for other emotion categories that are stereotypically non-affiliative, such as anger or disgust. Based on the strong across-emotion effects observed here, we hypothesize that facial expression predictions would also drive social perception for stereotypically non-affiliative emotion displays, such that a scowling face would still be evaluated more positively when displayed following a matching (i.e., angering) scenario, like being stuck in traffic. Still, this remains an empirical question.

Second, while our findings from Experiments 3 and 4 demonstrate that the role of predictions in social perception is not limited to instances involving blatant mismatches (e.g., cross-valence mismatches, like smiling in a horrifying situation), future research should further examine social perception in contexts involving such extreme prediction violations. In particular, future work could assess whether inferences about the person’s mental health or intellectual capabilities moderate the impact of predictions on evaluative judgments. Conversely, there is also evidence that norm violators may actually be preferred in specific situations in which unpredictable, inappropriate behavior may be beneficial for the task at hand (Kiesler, 1973). For example, it has been hypothesized that many voters favored Donald Trump’s language choices because they violated norms of conventionality and political correctness (Conway et al., 2017). Situational demands could be manipulated in future studies to examine the factors that might lead perceivers to form more favorable impressions of prediction-violating behavior, as well as whether such situations extend to emotion expression displays.

Future research should also further examine the mechanisms underlying the observed effects. Of specific interest is whether processing fluency mediates the impact of predictions on social perception. Although a critical precondition was established here (predicted facial expressions did indeed show evidence of facilitated processing), future work should more directly examine whether prediction-facilitated processing of facial expressions leads to more positive social perceptions of the individuals displaying those expressions. Given the existing literature on the effect of other kinds of processing fluency on social evaluative judgments (Winkielman et al., 2003), we would expect this relationship to hold for the processing and evaluation of facial expressions as well. However, future studies could test this directly by examining whether the effect of predictions on social perception is eliminated when processing ease is manipulated or held constant.