Abstract

Background

Much of the cancer burden in the USA is preventable through application of existing knowledge. State-level funders and public health practitioners are in ideal positions to affect programs and policies related to cancer control. Mis-implementation refers to ending effective programs and policies prematurely or continuing ineffective ones. Greater attention to mis-implementation should lead to use of effective interventions and more efficient expenditure of resources, which, in the long term, will lead to more positive cancer outcomes.

Methods

This is a three-phase study that takes a comprehensive approach, leading to the elucidation of tactics for addressing mis-implementation. Phase 1: We will assess the extent to which mis-implementation is occurring among state cancer control programs in public health. This initial phase will involve a survey of 800 practitioners representing all states. The programs represented will span the full continuum of cancer control, from primary prevention to survivorship. Phase 2: Using data from phase 1 to identify organizations in which mis-implementation is particularly high or low, the team will conduct eight comparative case studies to get a richer understanding of mis-implementation and to understand contextual differences. These case studies will highlight lessons learned about mis-implementation and identify hypothesized drivers. Phase 3: Agent-based modeling will be used to identify dynamic interactions between individual capacity, organizational capacity, use of evidence, funding, and external factors driving mis-implementation. The team will then translate and disseminate findings from phases 1 to 3 to practitioners and practice-related stakeholders to support the reduction of mis-implementation.

Discussion

This study is innovative and significant because it will (1) be the first to refine and further develop reliable and valid measures of mis-implementation of public health programs; (2) bring together a strong, transdisciplinary team with significant expertise in practice-based research; (3) use agent-based modeling to address cancer control implementation; and (4) use a participatory, evidence-based, stakeholder-driven approach that will identify key leverage points for addressing mis-implementation among state public health programs. This research is expected to provide replicable computational simulation models that can identify leverage points and public health system dynamics to reduce mis-implementation in cancer control and may be of interest to other health areas.

Keywords: Mis-implementation, Cancer control, Agent-based models

Background

Cancer continues to be the second most common cause of death in the USA [1, 2]; however, much of this burden is preventable through evidence-based interventions [3]. Substantial potential for cancer control exists at the state level [4, 5], where all states retain enormous authority to protect the public’s health [6]. States shoulder their broad public health responsibilities through work carried out by state and local health agencies. Over $1.1 billion annually is expended on state cancer control programs (i.e., primary and secondary prevention) [7, 8], which is significantly higher than spending in any other area of chronic disease prevention and control. However, cancer control covers a broad spectrum of programs, and funding can be limited in areas and population groups with high cancer burdens [9]. With the limited resources available to state-level programs, the need to utilize the best available evidence to implement and sustain these programs is key to the efficiency of cancer control at the state level [10].

Evidence-based approaches to cancer control can significantly reduce the burden of cancer [10–13]. This approach begins with an estimate of the preventable burden. Depending on the methods, between one third and one half of deaths due to cancer can be preventable [3, 14, 15]. Large-scale efforts such as Cancer Control P.L.A.N.E.T. and the Community Guide have now placed a wide array of evidence-based interventions in the hands of cancer control practitioners [13, 16, 17]. Despite those efforts, a set of agency-level structures and processes (e.g., leadership, organizational climate and culture, access to research information) needs to be present for evidence-based decision-making (EBDM) to grow and thrive [10, 18–20]. While efforts are building to make sure practitioners have access to and the capacity for EBDM [21], the need for the exploration of mis-implementation of these programs in public health is growing.

Importance and potential impact of mis-implementation

The scientific literature has begun to highlight the importance of considering de-implementation in health care and public health [22, 23]. While de-implementation looks at the retraction of unnecessary or overused care [23, 24], it does not fully examine the processes that sustain non-evidence-based programs or the de-implementation of programs that are, in fact, evidence-based. A notable example of the discontinuation of an evidence-based program is the VERB campaign in the USA, which demonstrated effectiveness in increasing physical activity of children but was then discontinued [25, 26]. On the other end of the mis-implementation spectrum is the continuation of non-evidence-based programs, such as the DARE (Drug Abuse Resistance Education) program, which has continued even though many evaluations have demonstrated its limited effectiveness [27, 28]. That is why researchers have come to define mis-implementation as the process where effective interventions are ended or ineffective interventions are continued in health settings (i.e., EBDM is not occurring) [22, 24]. Most of the current literature focuses on the overuse and underuse of clinical interventions and the cultural and organizational shifts needed toward the acceptance of de-adoption within medicine [29]. Currently, over 150 commonly used medical practices have been deemed ineffective or unsafe [23]. Despite this discovery within the medical realm, there is still sparse literature on mis-implementation in the field of public health or cancer control.

It is already known that there are a number of cancer control programs that continue without a firm basis in scientific evidence [12]. Hannon and colleagues reported that less than half of cancer control planners had ever used evidence-based resources [12]. Previous studies have suggested that between 58 and 62% of public health programs are evidence-based [30, 31]. Even among programs that are evidence-based, 37% of chronic disease prevention staff in state health departments reported programs are often or always discontinued when they should continue [22].

Factors likely to affect mis-implementation

In the delivery of mental health services, Massatti and colleagues made several key points regarding mis-implementation: (1) the right mix of contextual factors (e.g., organizational support) is needed for the continuation of effective programs in real-world settings; (2) there is a significant cost burden of the programs to the agency; and (3) understanding the nuances of early adopters promotes efficient dissemination of effective interventions [32]. Management support for EBDM in public health agencies is associated with improved public health performance [18, 33], but little is known about the processes and factors that affect mis-implementation specifically. Pilot data indicate that organizational supports for EBDM may be protective against mis-implementation (e.g., leadership support for EBDM, having a work unit with the necessary EBDM skills) [22]. In addition, engaging a diverse set of partners may also lower the likelihood of mis-implementation.

The utility of agent-based modeling for studying public health practice

Agent-based modeling (ABM) is a powerful tool being used to inform practice and policy decisions in numerous health-related fields [34]. ABM is a type of computational simulation modeling in which individual agents—who may be people, organizations, or other entities—are defined according to mathematical rules and interact with one another and with their environment over time [35]. ABM is a useful tool to observe the dynamic and interdependent relationships between heterogeneous agents within a complex system and how system-level behavior and outcomes evolve over time from the interaction between these individual agents (emergent behavior) [35–37].

Agent-based models have a strong track record in social and biological sciences and have been widely used to study infectious disease control [38, 39] and health care delivery [40, 41]. More recently, ABM has begun to be applied to chronic disease control, ranging from the study of etiology, to intervention design, to policy implementation [35, 40, 42–44]. In topics specific to cancer prevention, ABMs are now being used to show how individuals respond to tobacco control policies [45] and to better understand the community-level, contextual factors involved in implementing childhood obesity interventions [43]. Some of the advantages of employing ABMs include that they can (1) model bi-directional and non-linear interactions between individuals, organizations, and external contextual factors [46]; (2) describe dynamic decision-making processes [47]; (3) simulate adaptation, counterfactuals, and relational structures such as networks [34, 42]; (4) consider and capture extensive heterogeneity across different entities or populations [48]; and (5) act as “policy laboratories” for researchers when real-world experimentation is not feasible or is too costly [48]. ABM has the potential to pinpoint both the factors within state health departments that have the greatest effect on the mis-implementation of cancer control programs and the leverage points that may be good targets to improve successful implementation.

Early on, ABMs primarily relied on simple heuristic rules as models of human behavior and were generally limited in their ability to predict behaviors of larger populations and complex interactions. In recent years, however, ABMs have provided (1) more refined representations of behavior and decision-making [49, 50], (2) increasingly sophisticated representations of relational/environmental structures such as geography and networks [34, 42], and (3) greater focus on “co-evolution” across levels of scale across settings, including organizational dynamics as in political science [34, 47].

Methods/design

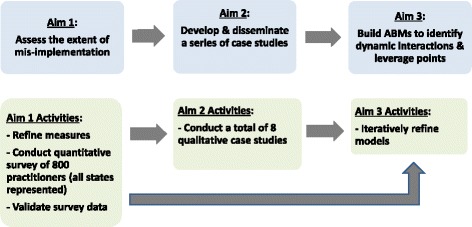

This is a multi-part, observational study funded by the National Cancer Institute that will examine the factors that affect mis-implementation of cancer control programs. ABM will be used here to understand influences of individual, organizational, and external factors on program implementation, decision-making, and the behaviors of professionals within state health departments. See Fig. 1 for a visual of the study schema. The project is outlined in the following three phases: (1) assessing mis-implementation, (2) conducting comparative case studies, and (3) agent-based modeling. The study design has been approved by the Washington University Institutional Review Board.

Fig. 1. Study schema

Phase 1, assessing mis-implementation (Aim 1)

The measures to assess the scope and patterns of the mis-implementation problem are vastly under-developed. There has been limited pilot work in this area [22]; therefore, phase 1 will focus on the refinement of measures and assessment of the patterns of mis-implementation in cancer control in the USA. The project begins by refining and pilot testing measures to assess mis-implementation within state health departments. The foundation of these measures comes from a pilot survey previously completed by members of the team, the Mis-Implementation Survey for Cancer Control [22, 51–58]. The project team has engaged a group of public health practitioners who will serve as an advisory group throughout the duration of the project and will help inform the development of the measures.

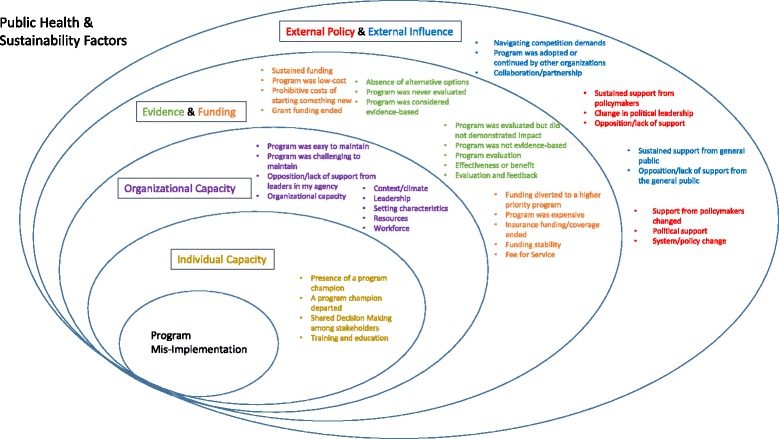

The first step in exploring mis-implementation is to build the self-report survey instrument. To do so, the team will search for existing instruments using formal queries of the published literature. The team will search public health, sustainability, and clinical and agent-based modeling literature. Search terms include Evidence-based decision making AND De-Implement* AND Measures; sustain*; de-implement* AND Measures; de-adopt* OR de-implement*; de-implement AND Health AND instrument; Organization* Capacity AND Measures. Candidate instruments will also be provided by the research team, lead authors of collected literature, and bibliography reviews [59]. Identified measures will be cataloged, and the authors will be queried for the related instruments. Relevant instruments will be summarized into evidence tables, highlighting core constructs, audience, and measurement properties. See Fig. 2 for the conceptual framework that will guide the survey development.

Fig. 2. Conceptual framework for mis-implementation

Using the evidence tables, a draft instrument will be developed. It is likely to cover seven main domains: (1) biographical information; (2) frequency of mis-implementation; (3) reasons for mis-implementation; (4) barriers in overcoming mis-implementation; (5) specific programs being mis-implemented; (6) use of management supports for EBDM; and (7) ratings on current level of individual skills essential for implementing evidence-based interventions.

New measures will undergo expert review for content validity, relying on the advisory group of state health department practitioners. Before the instrument goes into the field, a series of individual interviews will be completed for cognitive response testing of newly developed items. Cognitive response testing is routinely used in refining questionnaires to improve the quality of data collection [60–62]. Cognitive response testing will be used to determine: (1) question comprehension (what does the respondent think the question is asking?); (2) information retrieval (what information does the respondent need to recall from memory in order to answer the question?); and (3) decision processing (how does the respondent choose their answer?).

Once cognitive testing is completed, additional edits will be made to the survey, and a test-retest will be employed to assess reliability of the instrument. The team intends to recruit around 100 practitioners via the advisory group to complete the survey and then complete it again 2 weeks after initial administration. Appropriate statistics will be calculated for each type of question to assess the reliability between the two test time points [63, 64].
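
As one illustration of such a statistic, the sketch below computes Cohen's kappa for a single categorical item answered at both time points. It is a minimal example, not the team's analysis code: the function name `cohens_kappa`, the answer categories, and the ten paired answers are all made up for illustration, and continuous items would call for a different statistic (e.g., an intraclass correlation coefficient).

```python
from collections import Counter

def cohens_kappa(first, second):
    """Cohen's kappa for paired categorical answers (test vs. retest)."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty, equal-length answer lists")
    n = len(first)
    # Observed agreement: share of respondents giving the same answer twice.
    p_observed = sum(a == b for a, b in zip(first, second)) / n
    # Chance agreement from the marginal answer frequencies at each time point.
    freq_1, freq_2 = Counter(first), Counter(second)
    p_chance = sum(freq_1[c] * freq_2[c] for c in freq_1) / (n * n)
    if p_chance == 1.0:
        return 1.0  # only one category was ever used, at both time points
    return (p_observed - p_chance) / (1 - p_chance)

# Hypothetical answers from 10 practitioners to one item, 2 weeks apart.
test = ["often", "never", "often", "sometimes", "never",
        "often", "sometimes", "never", "often", "sometimes"]
retest = ["often", "never", "sometimes", "sometimes", "never",
          "often", "sometimes", "never", "often", "never"]
kappa = cohens_kappa(test, retest)
print(round(kappa, 3))
```

With these made-up answers, eight of ten pairs agree and chance agreement is 0.33, so kappa comes out at roughly 0.70.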

Study participants

Study participants will include cancer control public health practitioners, which include those individuals who direct and implement population-based intervention programs in state health departments. These practitioners may be directly involved in program delivery, may set priorities, or allocate resources for programs related to cancer risk factors. The target audience will be inter-disciplinary; that is, they will be drawn from diverse backgrounds including health educators, epidemiologists, and evaluators. Examples of the individuals in the target audience include (1) the director of a Centers for Disease Control and Prevention (CDC)-funded comprehensive cancer control program for the state; (2) the director of a state program addressing primary prevention of cancer (tobacco, inactivity, diet, sun protection); (3) the director of state programs promoting early detection of breast, cervical, and colorectal cancers among underserved populations; or (4) state health department epidemiologists, evaluators, policy officers, and health educators supporting cancer control programs.

Participants will be randomly drawn from the 3000-person membership of National Association of Chronic Disease Directors (NACDD) and program manager lists from key CDC-supported programs in cancer and cancer risk factors. The team has an established partnership with NACDD and has worked extensively with them on previous projects [30, 54–57, 65–68]. In phase 1, the team will recruit 1040 individuals for a final sample size of 800. The team anticipates a 60% response rate based on evidence that supports a series of emails, follow-up phone calls, and endorsements from NACDD leadership and officials in the states with enrolled participants [51, 52, 54, 56, 69–74]. Similar to successful approaches in previous studies [56, 74], data will be collected using an online survey (Qualtrics software [75]) that will be delivered via email. The survey will remain open for a 2-month time period with four email reminders and two rounds of phone calls to bolster response rates [72]. All respondents will be offered a gift card incentive.

Analyzing the survey data

Survey data will be analyzed in three ways. First, descriptive statistics (e.g., frequencies, central tendencies, and variabilities) and diagnostic plots (e.g., stem and leaf plots, q-q plots) will be completed on all variables. Data will be examined for outliers and tested as appropriate for normality, linearity, and homoscedasticity. Appropriate corrective strategies will be used if problems are identified. Bivariate and multivariate analyses will rely on data at the single time point of the phase 1 survey. These preliminary analyses are necessary to ensure high-quality data and to test assumptions of the proposed models. The team will also compare demographic and regional variations between respondents and non-respondents to assess potential response bias.

Next, the measurement properties of the instrument will be comprehensively assessed. To do so, the team will conduct confirmatory factor analysis within an explanatory framework using structural equation models [76, 77]. Factor analysis seeks to reduce the anticipated large number of items in the survey tool to a smaller number of underlying latent variables while examining construct validity of the measures [78]. The initial domains to be used in factor analysis are shown in Fig. 2.

Finally, multivariate analyses will use general linear or logistic mixed-effects modeling. A mixed-effects approach allows the team to include continuous predictors and both fixed and random categorical effects, and to model the variability due to the nested design (individuals nested in health departments). The mixed-effects model will assess effects of multiple predictors on two key dependent variables ($Y_{ij}$): ending programs that should continue and continuing programs that should end. The team will be able to assess covariate effects of numerous variables such as gender, educational background, and time in current job position. Here is a sample model:

$$Y_{ij} = P_{ij} + S_{ij} + R_{ij} + (PR)_{ij} + \mu_j + \varepsilon_{ij}$$

where, for individual $i$ in state $j$, $P_{ij}$ and $S_{ij}$ are the fixed effects for program type and state population size, $R_{ij}$ is a fixed effect for reason for mis-implementation, $(PR)_{ij}$ is the program by reason interaction, and $\mu_j$ and $\varepsilon_{ij}$ are the variance components at the state and individual levels, respectively. The mixed-effects modeling allows for random effects at the state ($\mu_j$) level. A mixed-effects model will examine state-level variability and account for the nested design of the study [79].

Sample size calculations

For the factor analysis, given a minimum of four items per factor and expected factor loading of 0.6 or higher, the survey will need a sample of 400 [80]. For reliability testing, for statistically significant (p < 0.05) kappa values of 0.50 and 0.70, the sample size requirements are 50 and 25 pairs, respectively, in each of the two groups. To estimate an intraclass correlation coefficient of 0.90 or above (power 0.80, p < 0.05), 45 pairs are required in each subgroup. Sample size estimates are based on Dunn’s recommendations [81]. Therefore, a sample of 100 for reliability testing will provide high power.

For population-level estimates of mis-implementation and multivariate modeling, sample sizes are based on a power ≥ 90% with two-sided α = 5% [22]. To estimate a prevalence of mis-implementation of 37% (± 3%), a sample of 750 is needed. To compare rates of mis-implementation by program area (e.g., cancer screening estimated at 19% versus primary prevention of cancer estimated at 29%), a sample size of 800 is required at power > 0.90 and p < 0.05.
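
The program-area comparison can be roughly checked with a standard normal-approximation formula for two independent proportions. The protocol does not state which formula was used, so the sketch below is an assumption: it takes equal group sizes, a two-sided z-test with unpooled variance, and the helper name `n_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(p1, p2, alpha=0.05, power=0.90):
    """Per-group n to detect p1 vs. p2 (two-sided z-test, unpooled variance)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for target power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)

# Estimated rates from the text: cancer screening 19%, primary prevention 29%.
n = n_per_group(0.19, 0.29)
print(n, 2 * n)
```

Under these assumptions the calculation gives 379 per group, or 758 in total, which sits below the planned sample of 800. Unequal group sizes or a different test would change the figure.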

Phase 2, comparative case studies (Aim 2)

Often, the key issue in understanding the translation of research to practice is not the evidence-based intervention itself, but rather the EBDM processes by which knowledge is transferred (i.e., contextual evidence as described by Rychetnik et al. [82] and Brownson et al. [10]) [83]. Building on data collected in phase 1, the goal of phase 2 is to better understand the context for mis-implementation via case studies, which will involve key informant interviews. These interviews will involve sites that are successful or less than successful in addressing mis-implementation. The purpose of key informant interviews is to collect information from a range of people who have first-hand knowledge of an issue within a specific organization or community.

Sampling, recruitment, and interview domains

The team will utilize purposive sampling to select participants [84]. Based on phase 1 data, eight states will be selected—that is, four states where mis-implementation is high and four where mis-implementation is low. Participants will be state health department practitioners who work in the identified states. By examining extreme cases, the study can maximize the likelihood that the qualitative approach will provide deeper understanding of mis-implementation, building on Aim 1 activities. While Aim 1 will determine the extent (the “how much”) and some underlying reasons (the “why”), Aim 2 will give a deeper understanding of the “why” and “how” of mis-implementation. The interviews will focus on several major areas (organized around domains in Fig. 2): (1) inputs related to mis-implementation, (2) factors affecting mis-implementation (individual, organizational, external), and (3) methods for reducing mis-implementation.
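
The extreme-case selection can be sketched as a simple ranking of states. The protocol does not specify how "high" and "low" mis-implementation will be operationalized, so the example below assumes one plausible rule: the share of a state's respondents who report mis-implementation. The function name, the state labels (`S00` to `S09`), and the responses are hypothetical.

```python
from collections import defaultdict

def select_extreme_states(responses, k=4):
    """Return (k highest, k lowest) states by share reporting mis-implementation.

    `responses` is an iterable of (state, reported) pairs, one per respondent.
    """
    counts = defaultdict(lambda: [0, 0])  # state -> [reports, respondents]
    for state, reported in responses:
        counts[state][0] += int(reported)
        counts[state][1] += 1
    rates = {s: hits / total for s, (hits, total) in counts.items()}
    if len(rates) < 2 * k:
        raise ValueError("need at least 2*k states to pick disjoint groups")
    # Sort ascending by rate; ties are broken alphabetically by state label.
    ranked = sorted(rates, key=lambda s: (rates[s], s))
    return ranked[-k:][::-1], ranked[:k]

# Made-up data: state S0i has i of 10 respondents reporting mis-implementation.
responses = [(f"S{i:02d}", j < i) for i in range(10) for j in range(10)]
high, low = select_extreme_states(responses)
print("high:", high)
print("low: ", low)
```

In practice, small per-state respondent counts would make such rates unstable, so a minimum number of respondents per state might be required before a state is eligible for selection.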

Data collection

Study staff will make initial contact via email and by telephone to invite identified participants to the study and arrange an appointment for the telephone interview. Participants will receive consent information in accordance with the Washington University Institutional Review Board standards. The team anticipates approximately 12 interviews from each of the eight state health departments (a total of 96 interviews) and will conduct interviews until the team reaches thematic saturation [85]. Participants will be offered a gift card incentive.

Data coding and analysis

Digital audio recordings of the interviews will be transcribed verbatim. Two project team members will analyze each of these transcripts via consensus coding. After reviewing the research questions [86, 87], the team members will read five of the transcripts using a first draft of a codebook. Each coder will be asked to systematically review the data and organize each of the statements into categories that summarize the concept or meaning articulated [88]. Once the first five transcripts are coded, they will be discussed in detail to ensure the accuracy of the codebook and inter-coder consistency. The codebook will be edited as needed prior to coding the remainder of the transcripts. All transcripts will be analyzed using NVivo 11 [89]. After refinement of the codebook, each transcript will be coded independently by two team members. The two team members will then review non-overlapping coding in the text blocks and reach agreement on text blocking and coding. Themes from the coded transcripts will be summarized and highlighted with exemplary quotes from participants. Data analysis may also include quantification or some other form of data aggregation. The study team will use the interview guide questions to establish major categories (e.g., individual factors, organizational factors). All information that does not fit into these categories will be placed in an “other” category and then analyzed for new themes. Comparisons will be made to identify key differences in thematic issues between those in high and low mis-implementation settings. After initial analyses by research team members, one focus group will be conducted remotely or in-person with state health department staff in each of the eight states to get input on other interim theme summaries.

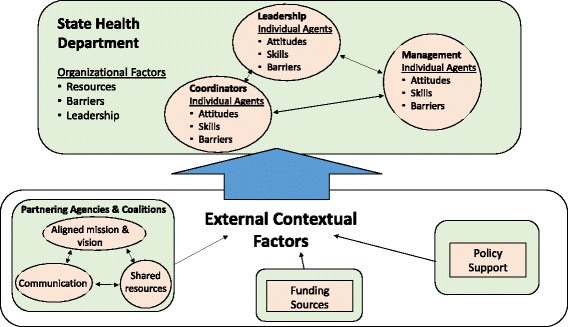

Phase 3, agent-based modeling

Agent-based modeling will allow the study team to explore how mis-implementation emerges through interactions between individuals who are situated within public health organizations. The general approach will be to model a hypothetical public health program environment, populated by individuals (agents) who are programmatic decision-makers or influencers within state health departments. The key behavior of interest in the simulation models will be the decision to continue or discontinue strategies within cancer prevention and control programs in a state health department. The resulting model will have the ability to simulate both overall trajectory of decisions being made as well as the patterns of interacting factors that emerge in these types of decision-making processes [34]. The models will consider individual characteristics (from the survey) and organizational characteristics (from the survey and archival data) across an agency hierarchy as well as influences from contextual, external forces that affect the state health department environments (e.g., policy changes, strengths of partnerships) and their potential effects on mis-implementation (Fig. 3 and Table 1).

Fig. 3. Agent-based model flowchart

Table 1. Sample agents and contextual factors

| Domain | Model-relevant construct | Sample elements | Sources |
|---|---|---|---|
| Agent (individual) | Attitudes | Appeal of evidence-based practices; openness to innovation | Aarons et al. [97] |
| Agent (individual) | Skills | Use economic evaluation in the decision-making process; adapt programs for different communities and settings | Gibbert et al. [31]; Jacob et al. [56] |
| Agent (individual) | Barriers | Lack of knowledge of EBDM; general resistance to changing old practices | Maylahn et al. [98]; Jacobs et al. [57] |
| Agent (organizational) | Resources | Program funding; expertise of available staff | Erwin et al. [99]; Brownson et al. [52] |
| Agent (organizational) | Leadership/management support | Agency leadership values EBDM; management practices of direct supervisor | Brownson et al. [18]; Jacob et al. [56] |
| Agent (organizational) | Barriers | Lack of incentives in the agency for EBDM; organizational culture does not support EBDM | Jacobs et al. [57]; Gibbert et al. [31] |
| Contextual (external) | Partnerships | Maintains a diverse set of partners; compatible processes between partners | Brownson et al. [18]; Massatti et al. [32] |
| Contextual (external) | Policy support | Supportive state legislature; supportive governor | Brownson et al. [52] |

Defining the agents and contextual factors

Agents in an ABM are generally defined by individual characteristics (properties), behavior rules that govern choices or actions (possibly dependent on both the agent’s own state and that of the environment) [36, 37], and a social environment that characterizes relationships between agents. In this case, both individual agents (the public health professionals working in cancer prevention and control within state health departments) and organizational agents (the state health departments in which decisions are being made) will be influenced by elements outside the state health department, as well as by interactions with each other.

The complexity of the systems in which the organizational and external factors operate and influence public health practitioner decision-making is such that ABM will be able to provide greater insight than traditional experimental design or epidemiological and econometric analytic tools which require assumptions about homogeneity, linearity, etc. that are not appropriate for complex organizational systems [36, 90]. By developing a model that is informed by survey and case study data, we will be able to help explain how mis-implementation arises from decisions made by individuals within specific organizational and external contexts. Additionally, ABM can provide insight into potential counterfactuals and implications these may have for intervention designs and targeting (e.g., if a particular organizational climate had been present, mis-implementation would not have occurred). The potential benefits of using an ABM approach include anticipating both the individual impact of modifiable influences on decision-making and the organizational impacts of mis-implementation in varying conditions [43].

In our planned initial model design, individual-level agents will work within a health department, making periodic choices about whether to continue or discontinue specific intervention strategies within cancer prevention and control programs. The team will explore the impact that the underlying factors of state health departments have on the patterns of mis-implementation. The team has developed the initial set of individual and organizational agent constructs and external influences from pilot research in mis-implementation and in evidence-based decision-making [18, 21, 22, 31, 56, 57, 74] and draws on previous literature from systems science on organizational dynamics both within health systems and other fields [91–96]. Findings from phases 1 and 2 will allow the team to refine the core list of agent factors and also estimate the relative importance of different agent behaviors and attributes using a “bottom-up” approach (i.e., real-world data collected from individuals and organizations). Several of the agent factors listed in Table 1 have predicted mis-implementation in pilot research.
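
To make the idea of agents making periodic continue/discontinue choices concrete, here is a deliberately small toy model. It is not the study's model: every rule, coefficient, and name in it (`Practitioner`, `simulate`, the linear `p_correct` rule, majority voting, the 60% share of effective programs) is an assumption chosen for illustration, and it omits the agency hierarchy, social networks, and external factors described in this section.

```python
import random
from dataclasses import dataclass

@dataclass
class Practitioner:
    skill: float  # hypothetical EBDM skill score in [0, 1]

    def judges_correctly(self, org_support: float, rng: random.Random) -> bool:
        # Assumed rule (not from the protocol): the chance of reading the
        # evidence correctly rises with own skill and organizational support.
        p_correct = 0.35 + 0.30 * self.skill + 0.30 * org_support
        return rng.random() < p_correct

def simulate(org_support=0.5, mean_skill=0.5, n_staff=7, n_programs=40,
             n_rounds=10, seed=0):
    """Run the toy model and return rates of both kinds of mis-implementation."""
    rng = random.Random(seed)
    staff = [Practitioner(min(1.0, max(0.0, rng.gauss(mean_skill, 0.15))))
             for _ in range(n_staff)]
    # True = effective program (should continue); False = should end.
    programs = [rng.random() < 0.6 for _ in range(n_programs)]
    ended_effective = continued_ineffective = 0
    for _ in range(n_rounds):
        for effective in programs:
            votes_to_continue = 0
            for person in staff:
                correct = person.judges_correctly(org_support, rng)
                believes_effective = effective if correct else not effective
                votes_to_continue += believes_effective
            continued = votes_to_continue > n_staff / 2  # simple majority
            if effective and not continued:
                ended_effective += 1
            elif continued and not effective:
                continued_ineffective += 1
    total = n_rounds * n_programs
    return {"ended_effective": ended_effective / total,
            "continued_ineffective": continued_ineffective / total}

low = simulate(org_support=0.1)
high = simulate(org_support=0.9)
print("low support: ", low)
print("high support:", high)
```

The two returned rates mirror the two forms of mis-implementation defined earlier (ending programs that should continue, continuing programs that should end). In this toy version every program is re-evaluated each round regardless of earlier decisions, which a fuller model would likely replace with persistent program states.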

Individual

Individual-level agents in the initial models will represent the staff members who work within state health departments. These individuals, who exist in a hierarchy within a state health department, include leaders, managers, and coordinators (Fig. 3). The potential characteristics for modeling include their attitudes (e.g., openness to innovation) [97], skills and knowledge (e.g., ability to use economic evaluation) [31, 56], and barriers (e.g., lack of knowledge of EBDM processes) [57, 98], specifically those that may contribute to mis-implementation. Individual agents will also have social connections with other agents that affect how information flows through the organization and how organization-level decisions about implementation are made.

Organizational

The second set of planned agents are organizations representing state health departments. These organizations have characteristics that influence mis-implementation [22]. Several organizational agent characteristics likely to affect mis-implementation are management supports [18, 56], resources [52, 99], and organizational barriers [31, 57].

Contextual (external) factors

In addition, the team plans to model external contextual factors. While these are not directly present within health departments, context can have a significant influence on whether program strategies are continued or discontinued. The initial set of external factors has been drawn from pilot research and previous conceptual frameworks and includes variables such as a diverse, multi-disciplinary set of partners with EBDM skills [18, 32], funding climate, policy inputs, and political support [52]. The exploration of external factors within the models will allow the team to observe how agents adapt to different contexts—including through departmental and organizational communication channels—and how adaptation affects outcomes related to mis-implementation [34].

Developing the computational simulation

The development of the ABM will follow established computational modeling best practices [100]. The modeling process will have four key steps:

Step 1: model design and internal consistency testing

Design of the models begins with identifying key concepts and structures from the literature, the pilot studies, and phases 1 and 2, and operationalizing them into appropriate model constructs. Table 1 offers a starting point based on the pilot data and will be refined using what the team learns in phases 1 and 2. The models are then implemented in a computational architecture, with each piece undergoing testing to ensure appropriate representation of concepts and implementation. Revisions of the initial models’ implementation are undertaken as needed based on partial model testing and consultation with experts.

Step 2: test for explanatory insights

Once the initial models are complete, their generative explanatory power (that is, their ability to reproduce real-world observations about mis-implementation) can be tested under a variety of conditions. The testing procedure will have two parts. In part one, the ABM will be used to examine mis-implementation related to ending programs that should continue. In part two, the team will examine mis-implementation related to continuing programs that should end. For each type of mis-implementation, the team will focus on the ability of the models to reproduce “stylized facts” about mis-implementation obtained from pilot studies, from activities in phases 1 and 2, and from the advisory group. These “stylized facts” are used to calibrate the models and include variables such as how skilled individuals are in EBDM and how strongly the organizational climate and culture support EBDM. The engagement of the advisory group will be essential in this step.
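Calibration against a stylized fact can be sketched as a simple grid search: run the model for each candidate parameter value and keep the value whose output is closest to the observed target. The toy `simulated_rate` function stands in for a full ABM run, and the target of 0.30 is a placeholder, not a study result.

```python
import random


def simulated_rate(mean_skill, n_decisions=2000, seed=42):
    """Toy stand-in for an ABM run: each decision is wrong with probability 1 - mean_skill."""
    rng = random.Random(seed)  # same seed for every candidate (common random numbers)
    wrong = sum(rng.random() < (1 - mean_skill) for _ in range(n_decisions))
    return wrong / n_decisions


def calibrate(target_rate, candidates):
    """Return the candidate skill level whose simulated rate is closest to the target."""
    return min(candidates, key=lambda skill: abs(simulated_rate(skill) - target_rate))


TARGET = 0.30  # placeholder "stylized fact", not a study result
grid = [i / 20 for i in range(21)]
best = calibrate(TARGET, grid)
print(f"calibrated mean skill: {best:.2f}, simulated rate: {simulated_rate(best):.3f}")
```

A real calibration would typically match several stylized facts at once and would draw on advisory group input about which facts matter most.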

Step 3: sensitivity analyses

Model behavior will be explored systematically as key parameters and assumptions are varied. During this step, the model’s contextual environmental factors will be held constant, allowing the team to explore how sensitive outcomes (patterns of mis-implementation) are to changes in assumptions about agent behavior and characteristics. Leveraging computational power to build a robust statistical portrait of model dynamics across these parameter and assumption settings will allow for appropriate interpretation of model behavior, including the relationships between individual, organizational, and external contextual factors.
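A one-at-a-time sensitivity sweep of this kind might look like the sketch below, in which a single agent attribute is varied while the contextual inputs stay fixed and each setting is replicated across random seeds. The `run_model` function and its functional form are hypothetical stand-ins for a full ABM run.

```python
import random
import statistics


def run_model(skill, support, funding, seed, n_decisions=200):
    """Toy stand-in for one ABM run; returns the share of wrong continue/end decisions."""
    rng = random.Random(seed)
    p_wrong = (1 - skill) * (1 - 0.5 * support) * (1.5 - funding)
    p_wrong = min(max(p_wrong, 0.0), 1.0)
    return sum(rng.random() < p_wrong for _ in range(n_decisions)) / n_decisions


def sweep(param_values, fixed, replicates=30):
    """Vary staff skill while the other inputs stay fixed; summarize replicates per value."""
    summary = {}
    for skill in param_values:
        rates = [run_model(skill, seed=s, **fixed) for s in range(replicates)]
        summary[skill] = (statistics.mean(rates), statistics.stdev(rates))
    return summary


fixed_inputs = {"support": 0.5, "funding": 0.5}   # context held constant
results = sweep([0.2, 0.4, 0.6, 0.8], fixed_inputs)
for skill, (mean, sd) in results.items():
    print(f"skill={skill:.1f}  mean rate={mean:.3f}  sd={sd:.3f}")
```

Reusing the same seeds at every parameter value reduces noise in the comparison, so differences between settings reflect the parameter rather than the random draws.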

Step 4: model analysis to generate insights by manipulating potential levers

In this step, the team will introduce changes in individual and organizational agent knowledge and behavior and in contextual variables to explore effects on mis-implementation. This will help point to modifiable individual and organizational agent characteristics that, if addressed, can reduce mis-implementation in a variety of external conditions (i.e., essential leverage points). The ABMs can provide not only aggregate outcomes, but also information about how the relative importance and effect of different factors may vary across contexts and state health departments.
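The idea that the most useful lever can differ by context is illustrated in the sketch below, which compares two hypothetical levers against a baseline under two funding contexts. The `expected_rate` formula, the lever sizes, and the context values are all assumptions made for the example.

```python
def expected_rate(skill, support, funding):
    """Toy expected mis-implementation rate; management support only helps when funds allow action."""
    return (1 - skill) * (1 - 0.8 * support * funding)


BASELINE = {"skill": 0.5, "support": 0.5}
LEVERS = {
    "training": {"skill": 0.7},        # hypothetical EBDM training raises staff skill
    "management": {"support": 0.9},    # hypothetical boost to management support
}
CONTEXTS = {"tight funding": 0.2, "stable funding": 0.9}


def best_lever(funding):
    """Return the lever with the largest reduction relative to baseline, plus all reductions."""
    base = expected_rate(funding=funding, **BASELINE)
    reductions = {
        name: base - expected_rate(funding=funding, **{**BASELINE, **change})
        for name, change in LEVERS.items()
    }
    return max(reductions, key=reductions.get), reductions


for label, funding in CONTEXTS.items():
    lever, reductions = best_lever(funding)
    print(label, "->", lever, {k: round(v, 3) for k, v in reductions.items()})
```

Under these assumed values, training yields the larger reduction when funding is tight and management support yields the larger reduction when funding is stable, which is the type of context-dependent finding this step is meant to surface.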

Discussion

Mis-implementation has not been studied in depth, nor have adequate measures been developed to fully assess its impact in the field of population-based cancer control and prevention. This study is timely and important because (1) cancer is highly preventable, yet existing evidence-based interventions are not being adequately applied despite their potential impact; (2) pilot research shows that mis-implementation in cancer control is widespread; and (3) ABM is a useful tool to more fully understand mis-implementation complexities and dynamics. Results from this study can help shape and inform how state health departments guide and implement effective cancer control programs and can further test the use of agent-based modeling to inform chronic disease and cancer control research.

Furthering the debate about terminology

As the multi-disciplinary field of implementation science has developed over the past 15 years, scholars from diverse professions have attempted to document the many overlapping and sometimes inconsistently defined terms [101]. Many important contributions to implementation science originate from non-health sectors (e.g., agriculture, marketing, communications, management), increasing the breadth of literature and terminology. To bridge these sectors, a common lexicon will help accelerate progress by facilitating comparisons of methods and findings, supporting methods development and communication across disciplinary areas, and identifying gaps in knowledge [102, 103].

When we began the pilot work for this project in 2014 [22], we cataloged a number of established, relevant terms related to our scope, including:

De-implementation: “abandoning ineffective medical practices and mitigating the risks of untested practices” [23];

De-adoption: “rejection of a medical practice or health service found to be ineffective or harmful following a previous period of adoption…” [104];

Termination: “the deliberate conclusion or cessation of specific government functions, programs, policies or organizations” [105];

Overuse: “clinical care in the absence of a clear medical basis for use or when the benefit of therapy does not outweigh risks” [106];

Underuse: “the failure to deliver a health service that is likely to improve the quality or quantity of life, which is affordable” [107];

Misuse: typically synonymous with “medical errors” [108].

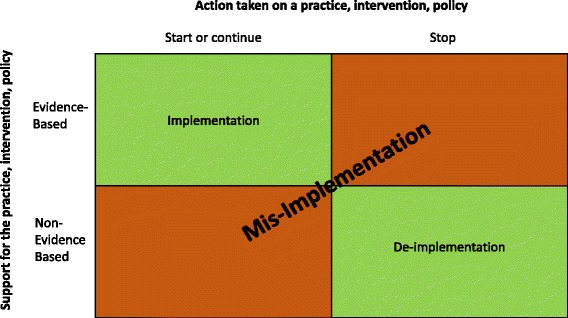

Because the goal of our project is to describe and model two related phenomena (ending of effective interventions and continuation of ineffective interventions), no existing term described the dual processes that we are addressing. Figure 4 depicts the bi-directional meaning of mis-implementation. The green cells show the desired implementation of effective programs and de-implementation of ineffective programs. The orange cells show the undesirable ending of effective programs and continuation of ineffective programs, which we label as mis-implementation. We hypothesize that some of the underlying reasons and interactions of agents may differ for ending effective interventions versus continuing ineffective interventions. Testing this hypothesis will allow us to continue to refine terminology over time, and we welcome input on terminology as we learn more about these processes.

Fig. 4.

Conceptualization of the definition of mis-implementation
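The two-by-two logic of Fig. 4 can be written as a small classification function. The function name and the string labels are illustrative choices; the mapping itself follows the green and orange cells described in the text.

```python
def classify(effective: bool, continued: bool) -> str:
    """Map a program's effectiveness and its fate onto the four cells of the 2x2 grid."""
    if effective and continued:
        return "implementation"            # desired: effective program continues
    if not effective and not continued:
        return "de-implementation"         # desired: ineffective program ends
    return "mis-implementation"            # effective program ended, or ineffective one continued


decisions = [(True, True), (True, False), (False, True), (False, False)]
share = sum(classify(e, c) == "mis-implementation" for e, c in decisions) / len(decisions)
print(share)
```

Keeping both undesirable cells under one label mirrors the dual definition used throughout the study, while the two boolean inputs preserve the distinction between the two directions for later analysis.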

While planning the current project, we also realized that the term mis-implementation may make some practitioners uneasy: they may worry that our study will reflect negatively on their day-to-day programs in the eyes of decision-makers (e.g., public health leaders, policy makers), rather than identify areas for improvement. These concerns have been addressed with a practice advisory group from the beginning of the project, and the team intends to frame the study goals carefully, including use of a project title and description that convey positive, actionable outcomes: “Public Health in Appropriate Continuation of Tested Interventions” (Public Health in ACTION).

Utility of agent-based modeling

A strength of the study is the use of ABMs in combination with quantitative and qualitative methods to provide insights about how and why mis-implementation occurs and how specific policies may affect mis-implementation. These ABMs will explicate the dynamic interaction between individual decision-makers operating within organizations and influenced by external factors. An important policy utility comes from using the models to capture how variation in existing individual and organizational factors may reduce mis-implementation. Experiments using the models will allow comparison of baseline outcomes to outcomes as individual and organizational factors are changed to represent potential intervention scenarios. Insights generated by the models can be used to design novel strategies and policies that have the potential to effectively reduce mis-implementation. As these strategies become evident, the team will frequently seek input from the advisory group in order to maximize the real-world utility of the findings.

Like all ABMs, the planned models will require the team to make certain stylized assumptions about real-world structures and processes, especially when relevant data are not available. Translating the data the team collects in the initial phases of the project into the models in order to characterize individual and organizational behavior will require us to identify the most appropriate factors for inclusion, and develop appropriate ways to quantify these in a computational framework [43]. Many elements included in the models—including those related to external support and opposition for programs, barriers to decision-making, organizational climate, and individual capacity—although theoretically justified, are inherently difficult to measure and quantify. Therefore, while the models will elucidate important dynamics involved in mis-implementation and identify key leverage points for improving decision-making, they are not intended to provide precise, quantitative forecasts of how organizational changes will affect implementation decisions. Additionally, this is the first known attempt to computationally model the process involved in the implementation and mis-implementation of programs within health departments. As such, future modeling may be warranted to further enhance our findings and apply the models to other specific contexts.

Limitations

The study has a few limitations. High turnover and workload demands among state health department workers may impede data collection efforts [109, 110]. Drawing on previous research and state-of-the-art methods [51, 52, 54, 72, 73], the team will take multiple steps to maximize the response rate and will also compare respondents with non-respondents. In addition, self-reported survey responses are limited in how fully and accurately they can capture mis-implementation frequency and patterns across complex, multi-faceted statewide programs.

Summary

A richer understanding of mis-implementation will help us allocate already limited resources more efficiently, especially among health departments, where a significant portion of cancer control work in the USA is contracted or performed [22]. This knowledge will also help researchers and practitioners prevent the continuation of ineffective programs and the discontinuation of effective programs [22]. The team anticipates that the study will result in replicable models that can help reduce mis-implementation in cancer control and can be applied to other health areas.

Acknowledgements

We acknowledge our Practice Advisory Group members, Paula Clayton, Heather Dacus, Julia Thorsness, and Virginia Warren, who have provided integral guidance for this project. We thank Alexandra Morshed for her help with the implementation science terminology and the development of Fig. 4. We also acknowledge our partnership with NACDD in developing, implementing, and disseminating findings from this study.

Funding

This study is funded by the National Cancer Institute of the National Institutes of Health under award number R01CA214530. The findings and conclusions in this article are those of the authors and do not necessarily represent the official positions of the National Institutes of Health.

Availability of data and materials

Not applicable

Abbreviations

- ABM: Agent-based model(ing)

- CDC: Centers for Disease Control and Prevention

- EBDM: Evidence-based decision-making

- NACDD: National Association of Chronic Disease Directors

Authors’ contributions

RCB conceived the original idea, with input from the team, and led the initial grant writing. MP wrote sections of the original grant, is coordinating the study, and coordinated input from the team on the protocol. PA, PCE, RAH, DAL, and SMR were all involved in the initial grant writing, provided continual support and input to the progress of the study, and provided substantial edits and revisions to this paper. MF, BH, and MK provided continual support and input to the progress of the study and provided substantial edits and revisions to this paper. RCB is the principal investigator of this study. All authors read and approved the final manuscript.

Ethics approval and consent to participate

The study was approved by the institutional review board of Washington University in St. Louis (reference number: 201611078). This study also received approval from Washington University’s Protocol Review and Monitoring Committee.

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Footnotes

We use the term “programs” broadly to include a wide range of interventions from structured behavioral change strategies to broad policy approaches to cancer screening promotion initiatives.

Contributor Information

Margaret Padek, Email: mpadek@wustl.edu

Peg Allen, Email: pegallen@wustl.edu

Paul C. Erwin, Email: perwin@utk.edu

Melissa Franco, Email: mifranco@wustl.edu

Ross A. Hammond, Email: rhammond@brookings.edu

Benjamin Heuberger, Email: bheuberger@brookings.edu

Matt Kasman, Email: mkasman@brookings.edu

Doug A. Luke, Email: dluke@wustl.edu

Stephanie Mazzucca, Email: smazzucca@wustl.edu

Sarah Moreland-Russell, Email: smoreland-russell@wustl.edu

Ross C. Brownson, Email: rbrownson@wustl.edu

References

- 1. Cancer Facts & Figures 2015. http://www.cancer.org.

- 2. American Cancer Society. Cancer facts and figures 2016. Atlanta: American Cancer Society; 2016.

- 3. Colditz GA, Wolin KY, Gehlert S. Applying what we know to accelerate cancer prevention. Sci Transl Med. 2012;4(127):127rv124. doi: 10.1126/scitranslmed.3003218.

- 4. DeGroff A, Carter A, Kenney K, et al. Using evidence-based interventions to improve cancer screening in the National Breast and Cervical Cancer Early Detection Program. J Public Health Manag Pract. 2016;22(5):442–449. doi: 10.1097/PHH.0000000000000369.

- 5. Rochester PW, Townsend JS, Given L, Krebill H, Balderrama S, Vinson C. Comprehensive cancer control: progress and accomplishments. Cancer Causes Control. 2010;21(12):1967–1977. doi: 10.1007/s10552-010-9657-8.

- 6. McGowan A, Brownson R, Wilcox L, Mensah G. Prevention and control of chronic diseases. In: Goodman R, Rothstein M, Hoffman R, Lopez W, Matthews G, editors. Law in public health practice. 2nd ed. New York: Oxford University Press; 2006.

- 7. FY2014 Grant Funding Profiles. http://wwwn.cdc.gov/fundingprofiles/.

- 8. Sustaining State Funding For Tobacco Control. https://www.cdc.gov/tobacco/stateandcommunity/tobacco_control_programs/program_development/sustainingstates/index.htm.

- 9. Carter AJR, Nguyen CN. A comparison of cancer burden and research spending reveals discrepancies in the distribution of research funding. BMC Public Health. 2012;12:526. doi: 10.1186/1471-2458-12-526.

- 10. Brownson RC, Fielding JE, Maylahn CM. Evidence-based public health: a fundamental concept for public health practice. Annu Rev Public Health. 2009;30:175–201. doi: 10.1146/annurev.publhealth.031308.100134.

- 11. Ellis P, Robinson P, Ciliska D, Armour T, Brouwers M, O’Brien MA, Sussman J, Raina P. A systematic review of studies evaluating diffusion and dissemination of selected cancer control interventions. Health Psychol. 2005;24(5):488–500. doi: 10.1037/0278-6133.24.5.488.

- 12. Hannon PA, Fernandez ME, Williams RS, Mullen PD, Escoffery C, Kreuter MW, Pfeiffer D, Kegler MC, Reese L, Mistry R, et al. Cancer control planners’ perceptions and use of evidence-based programs. J Public Health Manag Pract. 2010;16(3):E1–E8. doi: 10.1097/PHH.0b013e3181b3a3b1.

- 13. Sanchez MA, Vinson CA, Porta ML, Viswanath K, Kerner JF, Glasgow RE. Evolution of Cancer Control P.L.A.N.E.T.: moving research into practice. Cancer Causes Control. 2012;23(7):1205–1212. doi: 10.1007/s10552-012-9987-9.

- 14. Byers T, Mouchawar J, Marks J, Cady B, Lins N, Swanson GM, Bal DG, Eyre H. The American Cancer Society challenge goals. How far can cancer rates decline in the U.S. by the year 2015? Cancer. 1999;86(4):715–727. doi: 10.1002/(SICI)1097-0142(19990815)86:4<715::AID-CNCR22>3.0.CO;2-O.

- 15. Willett WC, Colditz GA, Mueller NE. Strategies for minimizing cancer risk. Sci Am. 1996;275(3):88–91. doi: 10.1038/scientificamerican0996-88.

- 16. Briss PA, Brownson RC, Fielding JE, Zaza S. Developing and using the guide to community preventive services: lessons learned about evidence-based public health. Annu Rev Public Health. 2004;25:281–302. doi: 10.1146/annurev.publhealth.25.050503.153933.

- 17. Guide to Community Preventive Services. https://www.thecommunityguide.org/.

- 18. Brownson RC, Allen P, Duggan K, Stamatakis KA, Erwin PC. Fostering more-effective public health by identifying administrative evidence-based practices: a review of the literature. Am J Prev Med. 2012;43(3):309–319. doi: 10.1016/j.amepre.2012.06.006.

- 19. Allen P, Brownson R, Duggan K, Stamatakis K, Erwin P. The makings of an evidence-based local health department: identifying administrative and management practices. Front Public Health Serv Syst Res. 2012;1(2). doi: 10.13023/FPHSSR.0102.02.

- 20. Kohatsu ND, Robinson JG, Torner JC. Evidence-based public health: an evolving concept. Am J Prev Med. 2004;27(5):417–421. doi: 10.1016/j.amepre.2004.07.019.

- 21. Jacobs JA, Duggan K, Erwin P, Smith C, Borawski E, Compton J, D’Ambrosio L, Frank SH, Frazier-Kouassi S, Hannon PA, et al. Capacity building for evidence-based decision making in local health departments: scaling up an effective training approach. Implement Sci. 2014;9(1):124. doi: 10.1186/s13012-014-0124-x.

- 22. Brownson RC, Allen P, Jacob RR, Harris JK, Duggan K, Hipp PR, Erwin PC. Understanding mis-implementation in public health practice. Am J Prev Med. 2015;48(5):543–551. doi: 10.1016/j.amepre.2014.11.015.

- 23. Prasad V, Ioannidis JP. Evidence-based de-implementation for contradicted, unproven, and aspiring healthcare practices. Implement Sci. 2014;9:1. doi: 10.1186/1748-5908-9-1.

- 24. Gnjidic D, Elshaug AG. De-adoption and its 43 related terms: harmonizing low-value care terminology. BMC Med. 2015;13:273. doi: 10.1186/s12916-015-0511-4.

- 25. Huhman ME, Potter LD, Nolin MJ, et al. The influence of the VERB campaign on children’s physical activity in 2002 to 2006. Am J Public Health. 2010;100(4):638–645. doi: 10.2105/AJPH.2008.142968.

- 26. Huhman M, Kelly RP, Edgar T. Social marketing as a framework for youth physical activity initiatives: a 10-year retrospective on the legacy of CDC’s VERB campaign. Curr Obes Rep. 2017;6(2):101–107. doi: 10.1007/s13679-017-0252-0.

- 27. West SL, O’Neal KK. Project D.A.R.E. outcome effectiveness revisited. Am J Public Health. 2004;94:1027–1029. doi: 10.2105/AJPH.94.6.1027.

- 28. Vincus AA, Ringwalt C, Harris MS, Shamblen SR. A short-term, quasi-experimental evaluation of D.A.R.E.’s revised elementary school curriculum. J Drug Educ. 2010;40(1):37–49. doi: 10.2190/DE.40.1.c.

- 29. Gunderman RB, Seidenwurm DJ. De-adoption and un-diffusion. J Am Coll Radiol. 2015;12(11):1162–1163. doi: 10.1016/j.jacr.2015.06.016.

- 30. Dreisinger M, Leet TL, Baker EA, Gillespie KN, Haas B, Brownson RC. Improving the public health workforce: evaluation of a training course to enhance evidence-based decision making. J Public Health Manag Pract. 2008;14(2):138–143. doi: 10.1097/01.PHH.0000311891.73078.50.

- 31. Gibbert WS, Keating SM, Jacobs JA, Dodson E, Baker E, Diem G, Giles W, Gillespie KN, Grabauskas V, Shatchkute A, et al. Training the workforce in evidence-based public health: an evaluation of impact among US and international practitioners. Prev Chronic Dis. 2013;10:E148. doi: 10.5888/pcd10.130120.

- 32. Massatti RR, Sweeney HA, Panzano PC, Roth D. The de-adoption of innovative mental health practices (IMHP): why organizations choose not to sustain an IMHP. Admin Pol Ment Health. 2008;35(1–2):50–65. doi: 10.1007/s10488-007-0141-z.

- 33. Harris AL, Scutchfield FD, Heise G, Ingram RC. The relationship between local public health agency administrative variables and county health status rankings in Kentucky. J Public Health Manag Pract. 2014;20(4):378–383. doi: 10.1097/PHH.0b013e3182a5c2f8.

- 34. Hammond R. Considerations and best practices in agent-based modeling to inform policy. Paper commissioned by the Committee on the Assessment of Agent-Based Models to Inform Tobacco Product Regulation. In: Committee on the Assessment of Agent-Based Models to Inform Tobacco Product Regulation, editor. Assessing the use of agent-based models for tobacco regulation. Appendix A. Washington, DC: Institute of Medicine of The National Academies; 2015. pp. 161–193.

- 35. Burke JG, Lich KH, Neal JW, Meissner HI, Yonas M, Mabry PL. Enhancing dissemination and implementation research using systems science methods. Int J Behav Med. 2015;22(3):283–291. doi: 10.1007/s12529-014-9417-3.

- 36. Luke DA, Stamatakis KA. Systems science methods in public health: dynamics, networks, and agents. Annu Rev Public Health. 2012;33:357–376. doi: 10.1146/annurev-publhealth-031210-101222.

- 37. Bonabeau E. Agent-based modeling: methods and techniques for simulating human systems. Proc Natl Acad Sci U S A. 2002;99(Suppl 3):7280–7287. doi: 10.1073/pnas.082080899.

- 38. Brown ST, Tai JH, Bailey RR, Cooley PC, Wheaton WD, Potter MA, Voorhees RE, LeJeune M, Grefenstette JJ, Burke DS, et al. Would school closure for the 2009 H1N1 influenza epidemic have been worth the cost?: a computational simulation of Pennsylvania. BMC Public Health. 2011;11:353. doi: 10.1186/1471-2458-11-353.

- 39. Lee BY, Brown ST, Korch GW, Cooley PC, Zimmerman RK, Wheaton WD, Zimmer SM, Grefenstette JJ, Bailey RR, Assi TM, et al. A computer simulation of vaccine prioritization, allocation, and rationing during the 2009 H1N1 influenza pandemic. Vaccine. 2010;28(31):4875–4879. doi: 10.1016/j.vaccine.2010.05.002.

- 40. Kumar S, Nigmatullin A. Exploring the impact of management of chronic illness through prevention on the U.S. healthcare delivery system—a closed loop system’s modeling study. Information Knowledge Systems Management. 2010;9:127–152. doi: 10.1142/S0219649210002589.

- 41. Marshall DA, Burgos-Liz L, IJzerman MJ, Osgood ND, Padula WV, Higashi MK, Wong PK, Pasupathy KS, Crown W. Applying dynamic simulation modeling methods in health care delivery research-the SIMULATE checklist: report of the ISPOR simulation modeling emerging good practices task force. Value Health. 2015;18(1):5–16. doi: 10.1016/j.jval.2014.12.001.

- 42. Hammond RA, Ornstein JT. A model of social influence on body mass index. Ann N Y Acad Sci. 2014;1331:34–42. doi: 10.1111/nyas.12344.

- 43. Hennessy E, Ornstein JT, Economos CD, Herzog JB, Lynskey V, Coffield E, Hammond RA. Designing an agent-based model for childhood obesity interventions: a case study of ChildObesity180. Prev Chronic Dis. 2016;13:E04. doi: 10.5888/pcd13.150414.

- 44. Nianogo RA, Arah OA. Agent-based modeling of noncommunicable diseases: a systematic review. Am J Public Health. 2015;105(3):e20–e31. doi: 10.2105/AJPH.2014.302426.

- 45. Feirman S, Donaldson E, Pearson J, Zawistowski G, Niaura R, Glasser A, Villanti AC. Mathematical modelling in tobacco control research: protocol for a systematic review. BMJ Open. 2015;5(4):e007269. doi: 10.1136/bmjopen-2014-007269.

- 46. Fioretti G. Agent-based simulation models in organization science. Organ Res Methods. 2012;16(2):227–242. doi: 10.1177/1094428112470006.

- 47. Harrison J, Lin Z, Carroll G, Carley K. Simulation modeling in organizational and management research. Acad Manag Rev. 2007;32(4):1229–1245. doi: 10.5465/AMR.2007.26586485.

- 48. Epstein J. Why model? Journal of Artificial Societies and Social Simulation. 2008;11(4):12.

- 49. Bruch E, Hammond R, Todd P. Co-evolution of decision-making and social environments. In: Scott R, Kosslyn S, editors. Emerging trends in the social and behavioral sciences. Hoboken: John Wiley and Sons; 2014.

- 50. Hammond RA, Ornstein JT, Fellows LK, Dubé L, Levitan R, Dagher A. A model of food reward learning with dynamic reward exposure. Front Comput Neurosci. 2012;6:82. doi: 10.3389/fncom.2012.00082.

- 51. Brownson RC, Ballew P, Brown KL, Elliott MB, Haire-Joshu D, Heath GW, Kreuter MW. The effect of disseminating evidence-based interventions that promote physical activity to health departments. Am J Public Health. 2007;97(10):1900–1907. doi: 10.2105/AJPH.2006.090399.

- 52. Brownson RC, Ballew P, Dieffenderfer B, Haire-Joshu D, Heath GW, Kreuter MW, Myers BA. Evidence-based interventions to promote physical activity: what contributes to dissemination by state health departments. Am J Prev Med. 2007;33(1 Suppl):S66–S73. doi: 10.1016/j.amepre.2007.03.011.

- 53. Dobbins M, Hanna SE, Ciliska D, Manske S, Cameron R, Mercer SL, O’Mara L, DeCorby K, Robeson P. A randomized controlled trial evaluating the impact of knowledge translation and exchange strategies. Implement Sci. 2009;4:61. doi: 10.1186/1748-5908-4-61.

- 54. Allen P, Sequeira S, Best L, Jones E, Baker EA, Brownson RC. Perceived benefits and challenges of coordinated approaches to chronic disease prevention in state health departments. Prev Chronic Dis. 2014;11:E76. doi: 10.5888/pcd11.130350.

- 55. Elliott L, McBride TD, Allen P, Jacob RR, Jones E, Kerner J, Brownson RC. Health care system collaboration to address chronic diseases: a nationwide snapshot from state public health practitioners. Prev Chronic Dis. 2014;11:E152. doi: 10.5888/pcd11.140075.

- 56. Jacob RR, Baker EA, Allen P, Dodson EA, Duggan K, Fields R, Sequeira S, Brownson RC. Training needs and supports for evidence-based decision making among the public health workforce in the United States. BMC Health Serv Res. 2014;14(1):564. doi: 10.1186/s12913-014-0564-7.

- 57. Jacobs JA, Dodson EA, Baker EA, Deshpande AD, Brownson RC. Barriers to evidence-based decision making in public health: a national survey of chronic disease practitioners. Public Health Rep. 2010;125(5):736–742. doi: 10.1177/003335491012500516.

- 58. Rabin BA, Brownson RC, Kerner JF, Glasgow RE. Methodologic challenges in disseminating evidence-based interventions to promote physical activity. Am J Prev Med. 2006;31(4 Suppl):S24–S34. doi: 10.1016/j.amepre.2006.06.009.

- 59. Basch CE, Eveland JD, Portnoy B. Diffusion systems for education and learning about health. Fam Community Health. 1986;9(2):1–26. doi: 10.1097/00003727-198608000-00003.

- 60. Forsyth BH, Lessler JT. Cognitive laboratory methods: a taxonomy. In: Biemer PP, Groves RM, Lyberg LE, Mathiowetz NA, Sudman S, editors. Measurement errors in surveys. New York: Wiley-Interscience; 1991. pp. 395–418.

- 61. Jobe JB, Mingay DJ. Cognitive research improves questionnaires. Am J Public Health. 1989;79(8):1053–1055. doi: 10.2105/AJPH.79.8.1053.

- 62. Jobe JB, Mingay DJ. Cognitive laboratory approach to designing questionnaires for surveys of the elderly. Public Health Rep. 1990;105(5):518–524.

- 63. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174. doi: 10.2307/2529310.

- 64. Maclure M, Willett WC. Misinterpretation and misuse of the kappa statistic. Am J Epidemiol. 1987;126(2):161–169. doi: 10.1093/aje/126.2.161.

- 65. Brownson RC, Baker EA, Leet TL, Gillespie KN. Evidence-based public health. New York: Oxford University Press; 2003.

- 66. Kreitner S, Leet TL, Baker EA, Maylahn C, Brownson RC. Assessing the competencies and training needs for public health professionals managing chronic disease prevention programs. J Public Health Manag Pract. 2003;9(4):284–290. doi: 10.1097/00124784-200307000-00006.

- 67. O’Neall MA, Brownson RC. Teaching evidence-based public health to public health practitioners. Ann Epidemiol. 2005;15(7):540–544. doi: 10.1016/j.annepidem.2004.09.001.

- 68. Yarber L, Brownson CA, Jacob RR, Baker EA, Jones E, Baumann C, Deshpande AD, Gillespie KN, Scharff DP, Brownson RC. Evaluating a train-the-trainer approach for improving capacity for evidence-based decision making in public health. BMC Health Serv Res. 2015;15(1):547. doi: 10.1186/s12913-015-1224-2.

- 69.Ballew P, Brownson RC, Haire-Joshu D, Heath GW, Kreuter MW. Dissemination of effective physical activity interventions: are we applying the evidence? Health Educ Res. 2010;25(2):185–198. doi: 10.1093/her/cyq003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Boehmer TK, Luke DA, Haire-Joshu DL, Bates HS, Brownson RC. Preventing childhood obesity through state policy predictors of bill enactment. Am J Prev Med. 2008;34(4):333–340. doi: 10.1016/j.amepre.2008.01.003. [DOI] [PubMed] [Google Scholar]

- 71.Dodson EA, Fleming C, Boehmer TK, Haire-Joshu D, Luke DA, Brownson RC. Preventing childhood obesity through state policy: qualitative assessment of enablers and barriers. J Public Health Policy. 2009;30(Suppl 1):S161–S176. doi: 10.1057/jphp.2008.57. [DOI] [PubMed] [Google Scholar]

- 72.Dillman D, Smyth J, Melani L. Internet, mail, and mixed-mode surveys: the tailored design method. Third. Hoboken: John Wiley & Sons, Inc; 2009. [Google Scholar]

- 73. Mokdad AH. The behavioral risk factors surveillance system: past, present, and future. Annu Rev Public Health. 2009;30:43–54. doi: 10.1146/annurev.publhealth.031308.100226.

- 74. Brownson RC, Reis RS, Allen P, Duggan K, Fields R, Stamatakis KA, Erwin PC. Understanding administrative evidence-based practices: findings from a survey of local health department leaders. Am J Prev Med. 2013;46(1):49–57. doi: 10.1016/j.amepre.2013.08.013.

- 75. Qualtrics: Survey Research Suite. http://www.qualtrics.com/.

- 76. Kim J-O, Mueller C. Introduction to factor analysis: what it is and how to do it. Thousand Oaks: Sage Publications; 1978.

- 77. Pett MA, Lackey NR, Sullivan JJ. Making sense of factor analysis: the use of factor analysis for instrument development in health care research. Thousand Oaks: Sage Publications; 2003.

- 78. Bollen KA. Latent variables in psychology and the social sciences. Annu Rev Psychol. 2002;53:605–634. doi: 10.1146/annurev.psych.53.100901.135239.

- 79. Luke D. Multilevel modeling. Thousand Oaks: Sage Publications; 2004.

- 80. Gagne P, Hancock G. Measurement model quality, sample size, and solution propriety in confirmatory factor models. Multivar Behav Res. 2006;41(1):65–83. doi: 10.1207/s15327906mbr4101_5.

- 81. Dunn G. Design and analysis of reliability studies. Stat Methods Med Res. 1992;1(2):123–157. doi: 10.1177/096228029200100202.

- 82. Rychetnik L, Hawe P, Waters E, Barratt A, Frommer M. A glossary for evidence based public health. J Epidemiol Community Health. 2004;58(7):538–545. doi: 10.1136/jech.2003.011585.

- 83. Green LW. From research to “best practices” in other settings and populations. Am J Health Behav. 2001;25(3):165–178. doi: 10.5993/AJHB.25.3.2.

- 84. Higginbottom GM. Sampling issues in qualitative research. Nurse Res. 2004;12(1):7–19. doi: 10.7748/nr2004.07.12.1.7.c5927.

- 85. Curry LA, Nembhard IM, Bradley EH. Qualitative and mixed methods provide unique contributions to outcomes research. Circulation. 2009;119(10):1442–1452. doi: 10.1161/CIRCULATIONAHA.107.742775.

- 86. Strauss A. Qualitative analysis for social scientists. Cambridge: Cambridge University Press; 1987.

- 87. Strauss A, Corbin J. Basics of qualitative research: grounded theory procedures and techniques. Newbury Park: Sage; 1990.

- 88. Patton MQ. Qualitative evaluation and research methods. 3rd ed. Thousand Oaks: Sage; 2002.

- 89. NVivo 10 for Windows. http://www.qsrinternational.com/products_nvivo.aspx.

- 90. Miller JH, Page SE. Complex adaptive systems: an introduction to computational models of social life. Princeton: Princeton University Press; 2007.

- 91. Morgan GP, Carley KM. Modeling formal and informal ties within an organization: a multiple model integration. In: The garbage can model of organizational choice: looking forward at forty. 2012. p. 253–92.

- 92. Noriega P. Coordination, organizations, institutions, and norms in agent systems II: AAMAS 2006 and ECAI 2006 International Workshops, COIN 2006, Hakodate, Japan, May 9, 2006, Riva del Garda, Italy, August 28, 2006: revised selected papers. Berlin; New York: Springer; 2007.

- 93. Gibbons DE. Interorganizational network structures and diffusion of information through a health system. Am J Public Health. 2007;97(9):1684–1692. doi: 10.2105/AJPH.2005.063669.

- 94. Hovmand PS, Gillespie DF. Implementation of evidence-based practice and organizational performance. J Behav Health Serv Res. 2010;37(1):79–94. doi: 10.1007/s11414-008-9154-y.

- 95. Sastry MA. Problems and paradoxes in a model of punctuated organizational change. Adm Sci Q. 1997;42(2):237–275. doi: 10.2307/2393920.

- 96. Larsen E, Lomi A. Representing change: a system model of organizational inertia and capabilities as dynamic accumulation processes. Simul Model Pract Theory. 2002;10(5):271–296. doi: 10.1016/S1569-190X(02)00085-0.

- 97. Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: the Evidence-Based Practice Attitude Scale (EBPAS). Ment Health Serv Res. 2004;6(2):61–74. doi: 10.1023/B:MHSR.0000024351.12294.65.

- 98. Maylahn C, Bohn C, Hammer M, Waltz E. Strengthening epidemiologic competencies among local health professionals in New York: teaching evidence-based public health. Public Health Rep. 2008;123(Suppl 1):35–43. doi: 10.1177/00333549081230S110.

- 99. Erwin PC, Mays GP, Riley WJ. Resources that may matter: the impact of local health department expenditures on health status. Public Health Rep. 2012;127(1):89–95. doi: 10.1177/003335491212700110.

- 100. Tesfatsion L, Judd K, editors. Handbook of computational economics: agent-based computational economics. Amsterdam: North-Holland; 2006.

- 101. Rabin BA, Brownson RC, Haire-Joshu D, Kreuter MW, Weaver NL. A glossary for dissemination and implementation research in health. J Public Health Manag Pract. 2008;14(2):117–123. doi: 10.1097/01.PHH.0000311888.06252.bb.

- 102. Ciliska D, Robinson P, Armour T, Ellis P, Brouwers M, Gauld M, Baldassarre F, Raina P. Diffusion and dissemination of evidence-based dietary strategies for the prevention of cancer. Nutr J. 2005;4:13. doi: 10.1186/1475-2891-4-13.

- 103. Rabin B, Brownson R. Terminology for dissemination and implementation research. In: Brownson R, Colditz G, Proctor E, editors. Dissemination and implementation research in health: translating science to practice. 2nd ed. New York: Oxford University Press; 2018. pp. 19–45.

- 104. Rogers EM. Diffusion of innovations. 5th ed. New York: Free Press; 2003.

- 105. Brewer GD, deLeon P. The foundations of policy analysis. Homewood: Dorsey Press; 1983.

- 106. Institute of Medicine. Crossing the quality chasm: a new health system for the 21st century. Washington, DC: Institute of Medicine National Academy Press; 2001.

- 107. Glasziou P, Straus S, Brownlee S, Trevena L, Dans L, Guyatt G, Elshaug AG, Janett R, Saini V. Evidence for underuse of effective medical services around the world. Lancet. 2017;390(10090):169–177. doi: 10.1016/S0140-6736(16)30946-1.

- 108. Kohn L, Corrigan J, Donaldson M. To err is human: building a safer health care system. Washington, DC: National Academies Press; 2000.

- 109. Pourshaban D, Basurto-Davila R, Shih M. Building and sustaining strong public health agencies: determinants of workforce turnover. J Public Health Manag Pract. 2015;21(Suppl 6):S80–S90. doi: 10.1097/PHH.0000000000000311.

- 110. Drehobl P, Stover BH, Koo D. On the road to a stronger public health workforce: visual tools to address complex challenges. Am J Prev Med. 2014;47(5 Suppl 3):S280–S285. doi: 10.1016/j.amepre.2014.07.013.

Data Availability Statement

Not applicable