ABSTRACT

Joseph Halpern and Judea Pearl ([2005]) draw upon structural equation models to develop an attractive analysis of ‘actual cause’. Their analysis is designed for the case of deterministic causation. I show that their account can be naturally extended to provide an elegant treatment of probabilistic causation.

- 1 Introduction
- 2 Preemption
- 3 Structural Equation Models
- 4 The Halpern and Pearl Definition of ‘Actual Cause’
- 5 Preemption Again
- 6 The Probabilistic Case
- 7 Probabilistic Causal Models
- 8 A Proposed Probabilistic Extension of Halpern and Pearl’s Definition
- 9 Twardy and Korb’s Account
- 10 Probabilistic Fizzling
- 11 Conclusion

1 Introduction

The investigation of actual (or ‘token’) causal relations, in addition to the investigation of generic (or ‘type’) causal relations, is an important part of scientific practice. For example, on various occasions in the history of science, paleontologists and geologists have sought the actual cause or causes of the extinction of the dinosaurs, cosmologists the actual cause of the cosmic microwave background, astronomers the actual causes of the perturbation of the orbit of Uranus and the perihelion precession of Mercury, and epidemiologists the actual cause of the outbreak of the H7N9 avian influenza virus. Yet, despite the scientific importance of the discovery of actual causes, there remains a significant amount of philosophical work to be done before we have a satisfactory understanding of the nature of actual causation.

Halpern and Pearl ([2001], [2005]) have made progress on this front. They draw upon structural equation models (SEMs) to provide an innovative and attractive analysis (or ‘definition’, as they call it) of actual causation. Their analysis1 is closely related to analyses proposed by Pearl ([2009], Chapter 10),2 Hitchcock ([2001a], pp. 286–7, 289–90), and Woodward ([2005], pp. 74–86). Halpern and Pearl’s analysis handles certain cases that are counterexamples to these closely related accounts (see Pearl [2009], pp. 329–30; Weslake [forthcoming], Section 2), as well as handling many cases that pose problems for more traditional, non-structural equation-based analyses of actual causation (Halpern and Pearl [2001], pp. 197–202, [2005], pp. 856–69).

One limitation of Halpern and Pearl’s analysis and related accounts is that they are designed for the case of deterministic causation (see Halpern and Pearl [2005], p. 852; Hitchcock [2007], p. 498; Pearl [2009], p. 26). An extension of their analysis to enable it to handle probabilistic actual causation would be worthwhile, particularly in light of the probabilistic nature of many widely accepted scientific theories. In the following, I propose such an extension.

Before proceeding, it is worth noting that a refinement to Halpern and Pearl’s analysis has been proposed in (Halpern [2008], pp. 200–5; Halpern and Hitchcock [2010], pp. 389–94, 400–3, [2015], Section 6). The refined account preserves the core of Halpern and Pearl’s original analysis, but tweaks it slightly by strengthening one of its conditions so as to rule out certain alleged non-causes that are counted as actual causes by Halpern and Pearl’s analysis.3 However, doubt has been cast by Halpern ([unpublished], Section 1) and by Blanchard and Schaffer ([forthcoming], Section 3) upon whether this refinement to Halpern and Pearl’s original analysis is necessary (that is, whether the alleged counterexamples to Halpern and Pearl’s analysis are genuine).4 I will therefore take Halpern and Pearl’s analysis as my starting point in attempting to develop an analysis of actual causation adequate to the probabilistic case. As I shall explain in Section 5 below, if the proposed refinement to Halpern and Pearl’s analysis is necessary, it is plausible that it can be incorporated into my proposed analysis of probabilistic causation too.

The road map is as follows: In Section 2, I give an example of (deterministic) preemption, which poses problems for many traditional attempts to analyse actual causation in terms of counterfactuals (and, indeed, in terms of regularities and causal processes). In Section 3, I introduce the notion of an SEM. In Section 4, I outline Halpern and Pearl’s analysis of ‘actual cause’, which appeals to SEMs. In Section 5, I show that Halpern and Pearl’s analysis provides an attractive treatment of deterministic preemption. In Section 6, I describe an example of probabilistic preemption, which Halpern and Pearl’s analysis can’t (and wasn’t designed to) handle. In Section 7, I outline the notion of a probabilistic causal model. In Section 8, I draw upon the notion of a probabilistic causal model in proposing an extension of Halpern and Pearl’s analysis of ‘actual cause’ to the probabilistic case. I show that this extension yields an elegant treatment of probabilistic preemption. In Section 9, I outline an alternative attempt to extend analyses of actual causation in terms of SEMs to the probabilistic case, due to Twardy and Korb ([2011]). In Section 10, I show that Twardy and Korb’s proposal is subject to counterexamples which mine avoids. Section 11 concludes.

2 Preemption

Preemption makes trouble for attempts to analyse causation in terms of counterfactual dependence. Here’s an example.

PE: The New York Police Department is due to go on parade at the parade ground on Saturday. Knowing this, Don Corleone decides that when Saturday comes, he will order Sonny to go to the parade ground and shoot and kill Police Chief McCluskey. Not knowing Corleone’s plan, Don Barzini decides that when Saturday comes, he will order Turk to shoot and kill McCluskey. Turk is perfectly obedient and an impeccable shot; if he gets the chance, he will shoot and kill McCluskey. Nevertheless, Corleone’s headquarters are closer to the police parade ground than Barzini’s headquarters. If both Sonny and Turk receive their orders, then Sonny will arrive at the parade ground first, shooting and killing McCluskey before Turk gets the chance. Indeed, even if Sonny were to shoot and miss, McCluskey would be whisked away to safety before Turk had the chance to shoot. Sure enough, on Saturday, the dons order their respective minions to perform the assassination. Sonny arrives at the parade ground first, shooting and killing McCluskey before Turk arrives on the scene. Since Turk arrives too late, he does not shoot.

In this scenario, Corleone’s order is an actual cause of McCluskey’s death; Barzini’s order is not an actual cause, but merely a preempted backup. Still, McCluskey’s death doesn’t counterfactually depend upon Corleone’s order: if Corleone hadn’t issued his order and so Sonny hadn’t attempted to assassinate McCluskey, then Barzini would still have ordered Turk to shoot and kill McCluskey, and Turk would have obliged.

Such preemption cases pose a challenge for anyone attempting to analyse actual causation in terms of counterfactual dependence (see, for example, Lewis [1986a], pp. 200–2, [2004], pp. 81–2). Though I shall not attempt to show it here, they also pose a challenge for those seeking to analyse actual causation in terms of regularities (see Mackie [1965], p. 251; Lewis [1973a], p. 557; Strevens [2007], pp. 98–102; Baumgartner [2013], pp. 99–100; Paul and Hall [2013], pp. 74–5), and for those seeking to analyse actual causation in terms of causal processes (see Paul and Hall [2013], pp. 55–7, 77–8). Halpern and Pearl ([2001], [2005]) attempt to deal with such cases by appealing to SEMs.

3 Structural Equation Models

An SEM, $\mathcal{M}$, is an ordered pair, $\langle \mathcal{V}, \mathcal{E} \rangle$, where $\mathcal{V}$ is a set of variables, and $\mathcal{E}$ is a set of structural equations.5 Each of the variables in $\mathcal{V}$ appears on the left-hand side of exactly one structural equation in $\mathcal{E}$. The variables in $\mathcal{V}$ comprise two (disjoint) subsets: a set, $\mathcal{U}$, of ‘exogenous’ variables, the values of which do not depend upon the values of any of the other variables in the model; and a set, $\mathcal{Y}$, of ‘endogenous’ variables, the values of which do depend upon the values of other variables in the model. The structural equation for each endogenous variable, $Y \in \mathcal{Y}$, expresses the value of Y as a function of other variables in $\mathcal{V}$. That is, it has the form $Y = f_Y(V_i, V_j, V_k, \ldots)$, where $V_i, V_j, V_k, \ldots \in \mathcal{V} \setminus \{Y\}$. Such a structural equation conveys information about how the value of Y counterfactually depends upon the values of the other variables in $\mathcal{V}$.

Specifically, suppose that $X \in \mathcal{V}$ and that $Z \in \mathcal{V} \setminus \{X\}$. Then, X appears as an argument in the function on the right-hand side of the structural equation for Z just in case there is a pair, $\{x, x'\}$ (with $x \neq x'$), of possible values of X; a pair, $\{z, z'\}$ (with $z \neq z'$), of possible values of Z; and a possible assignment of values, $W_1 = w_1, \ldots, W_n = w_n$ (abbreviated as $\vec{W} = \vec{w}$)6 to the variables in $\mathcal{V} \setminus \{X, Z\}$ such that it is true that (a) if it had been the case that $X = x$ and that $\vec{W} = \vec{w}$, then it would have been the case that $Z = z$; and (b) if it had been the case that $X = x'$ and that $\vec{W} = \vec{w}$, then it would have been the case that $Z = z'$. In other words, X appears on the right-hand side of the equation for Z just in case there is some assignment of values to the other variables in the model such that the value of Z depends upon that of X when the other variables take the assigned values (see Pearl [2009], p. 97; Hitchcock [2001a], pp. 280–1). If no variable appears on the right-hand side of the equation for Z, then Z is an exogenous variable. In that case, the structural equation for Z simply takes the form $Z = z^*$, where $z^*$ is the actual value of Z.

Any variables that appear as arguments in the function on the right-hand side of the equation for variable V are known as the ‘parents’ of V; V is a ‘child’ of theirs. The notion of an ‘ancestor’ is defined in terms of the transitive closure of parenthood, that of a ‘descendant’ in terms of the transitive closure of childhood.

Since structural equations encode information about counterfactual dependence, they differ from algebraic equations: given the asymmetric nature of counterfactual dependence, a structural equation $Y = f_Y(V_i, V_j, V_k, \ldots)$ is not equivalent to an algebraic rearrangement of it in which one of the right-hand-side variables appears on the left (see Pearl [1995], p. 672, [2009], pp. 27–9; Hitchcock [2001a], p. 280; Halpern and Pearl [2005], pp. 847–8; inter alia). Indeed, given a non-backtracking reading of counterfactuals (Lewis [1979], pp. 456–8), the counterfactuals entailed by such a rearranged equation will typically be false where those entailed by the original equation are true (see, for example, Hitchcock [2001a], p. 280; Halpern and Hitchcock [2015], p. 417). Limiting our attention to models entailing only non-backtracking counterfactuals helps to ensure that the SEMs that we consider possess the property of ‘acyclicity’: they are such that for no variable Vi is it the case that the value of Vi is a function of Vj, which in turn is a function of Vk, which is a function of …Vi. Acyclic models entail a unique solution for each variable.
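The claim that an acyclic model fixes a unique value for every variable can be illustrated with a minimal Python sketch. The representation used here (a dictionary mapping each variable name to a function of already-computed values), the helper `solve`, and the toy variables `U`, `X`, `Y`, `A`, and `B` are illustrative assumptions of mine, not part of Halpern and Pearl's formalism.

```python
def solve(equations):
    """Return the solution of an acyclic set of structural equations.

    `equations` maps each variable name to a function that reads the
    values of its parents from a dict and returns the variable's value.
    """
    values = {}
    pending = list(equations)
    while pending:
        progressed = False
        for var in list(pending):
            try:
                values[var] = equations[var](values)
            except KeyError:
                continue  # some parent has not been computed yet
            pending.remove(var)
            progressed = True
        if not progressed:
            # No remaining equation can be evaluated: the dependence is circular.
            raise ValueError(f"cyclic dependence among {sorted(pending)}")
    return values


# Hypothetical acyclic model: U is exogenous, X copies U, Y negates X.
acyclic = {
    "U": lambda v: 1,
    "X": lambda v: v["U"],
    "Y": lambda v: 1 - v["X"],
}
solution = solve(acyclic)

# Hypothetical cyclic model: A is a function of B, and B is a function of A.
cyclic = {"A": lambda v: v["B"], "B": lambda v: v["A"]}
try:
    solve(cyclic)
    cyclic_rejected = False
except ValueError:
    cyclic_rejected = True
```

Because each equation is evaluated only once its parents have values, the order in which the equations are listed makes no difference to the solution of the acyclic model, whereas the cyclic model never reaches a point at which any equation can be evaluated.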

Analyses of actual causation in terms of SEMs typically appeal to only those models that encode only non-backtracking counterfactuals (Hitchcock [2001a], p. 280; Halpern and Hitchcock [2015], p. 417). Doing so is important if such analyses are to deliver the correct results about causal asymmetry. In virtue of their appeal to models encoding only non-backtracking counterfactuals, analyses of actual causation in terms of SEMs can be seen as continuous with the tradition, initiated by Lewis ([1973a]), of attempting to analyse causation in terms of such counterfactuals (see Hitchcock [2001a], pp. 273–4; Halpern and Pearl [2005], pp. 877–8).

An SEM, $\mathcal{M} = \langle \mathcal{V}, \mathcal{E} \rangle$, can be given a graphical representation by taking the variables in $\mathcal{V}$ as the nodes or vertices of the graph and drawing a directed edge (or ‘arrow’) from a variable Vi to a variable Vj ($V_i, V_j \in \mathcal{V}$), just in case Vi is a parent of Vj according to the structural equations in $\mathcal{E}$. A ‘directed path’ can be defined as an ordered sequence of variables, $\langle V_i, V_j, \ldots, V_k \rangle$, such that there is a directed edge from Vi to Vj, and a directed edge from Vj to …Vk (in other words, directed paths run from variables to their descendants).
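As a rough illustration of these graphical notions, the following Python sketch computes descendants and checks for directed paths. The parent sets and the names `V1` to `V4` are hypothetical, chosen only to show the idea that edges run from parents to children.

```python
def descendants(parents, var):
    """Variables reachable from `var` along directed edges (parent -> child)."""
    children = {v: set() for v in parents}
    for child, its_parents in parents.items():
        for parent in its_parents:
            children[parent].add(child)
    reached, frontier = set(), [var]
    while frontier:
        for child in children[frontier.pop()]:
            if child not in reached:
                reached.add(child)
                frontier.append(child)
    return reached


def has_directed_path(parents, source, target):
    """True if a directed path runs from `source` to `target`."""
    return target in descendants(parents, source)


# Hypothetical parent sets, as they might be read off the right-hand
# sides of a model's structural equations.
parents = {"V1": [], "V2": ["V1"], "V3": ["V2"], "V4": ["V1"]}
```

Here `V3` counts as a descendant of `V1` because descendanthood is the transitive closure of childhood, while no directed path runs back from `V3` to `V1`.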

In the terminology of Halpern and Pearl ([2005], pp. 851–2), where yi is a possible value of Yi and $Y_i \in \mathcal{Y}$ (the set of endogenous variables), a formula of the form Yi = yi is a ‘primitive event’. In their notation, $\phi$ is a variable ranging over primitive events and Boolean combinations of primitive events (Halpern and Pearl [2005], p. 852).

One can evaluate a counterfactual of the form $V_i = v_i \wedge V_j = v_j \wedge \ldots \wedge V_k = v_k \mathrel{\Box\!\!\rightarrow} \phi$ with respect to an SEM, $\mathcal{M} = \langle \mathcal{V}, \mathcal{E} \rangle$, by replacing the equations for $V_i, V_j, \ldots,$ and $V_k$ in $\mathcal{E}$ with the equations $V_i = v_i, V_j = v_j, \ldots,$ and $V_k = v_k$ (thus treating each of $V_i, V_j, \ldots,$ and $V_k$ as an exogenous variable), while leaving all other equations in $\mathcal{E}$ intact. The result is a new set of equations $\mathcal{E}'$. The counterfactual holds in the original model, $\mathcal{M}$, just in case, in the solution to $\mathcal{E}'$, $\phi$ holds. This gives us a method for evaluating, with respect to $\mathcal{M}$, even those counterfactuals whose truth or falsity isn’t implied by any single equation in $\mathcal{E}$ considered alone (Hitchcock [2001a], p. 283), for example, counterfactuals concerning how the value of a variable would differ if the values of its grandparents were different.

This ‘equation replacement’ method for evaluating counterfactuals models what would happen if the variables $V_i, V_j, \ldots,$ and $V_k$ were set to the values $V_i = v_i, V_j = v_j, \ldots,$ and $V_k = v_k$ by means of ‘interventions’ (Woodward [2005], p. 98) or small ‘miracles’ (Lewis [1979], p. 468).7 By replacing the normal equations for $V_i, V_j, \ldots,$ and $V_k$ (that is, the equations for these variables that appear in $\mathcal{E}$) with the equations $V_i = v_i, V_j = v_j, \ldots,$ and $V_k = v_k$, while leaving all other equations intact, we are not allowing the values of $V_i, V_j, \ldots,$ and $V_k$ to be determined in the normal way, in accordance with their usual structural equations. Rather, we are taking them to be ‘miraculously’ set to the desired values (or at least set to the desired values via some process that is exogenous to the system being modelled, and which interferes with its usual workings; see Woodward [2005], p. 47). Evaluating counterfactuals in this way ensures the avoidance of backtracking (cf. Lewis [1979], pp. 456–8). Specifically, it ensures that we get the result that if it had been the case that $V_i = v_i, V_j = v_j, \ldots,$ and $V_k = v_k$, then the parents (and more generally, ancestors) of $V_i, V_j, \ldots,$ and $V_k$ would have had the same values (except where some of the variables $V_i, V_j, \ldots,$ and $V_k$ themselves have ancestors that are among $V_i, V_j, \ldots,$ and $V_k$), while the children (and, more generally, descendants) of $V_i, V_j, \ldots,$ and $V_k$ are susceptible to change. This is because the structural equations for the ancestor and descendant variables (provided that they are not themselves among $V_i, V_j, \ldots,$ and $V_k$) are left unchanged (cf. Pearl [2009], p. 205).
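The equation replacement method can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the dictionary representation of equations, the helpers `solve` and `replace_equations`, and the toy chain of variables `U`, `X`, `Y`, and `Z` are hypothetical and are not drawn from the works cited.

```python
def solve(equations):
    """Solve an acyclic set of equations (variable name -> function of a value dict)."""
    values = {}
    pending = list(equations)
    while pending:
        progressed = False
        for var in list(pending):
            try:
                values[var] = equations[var](values)
            except KeyError:
                continue  # a parent is not yet computed
            pending.remove(var)
            progressed = True
        if not progressed:
            raise ValueError("the equations are cyclic")
    return values


def replace_equations(equations, setting):
    """Build the new equation set: each variable in `setting` gets a constant
    equation, and every other equation is left intact."""
    replaced = dict(equations)
    for var, value in setting.items():
        replaced[var] = lambda v, value=value: value
    return replaced


# Hypothetical chain: U is exogenous, X = U, Y = X, Z = Y.
model = {
    "U": lambda v: 1,
    "X": lambda v: v["U"],
    "Y": lambda v: v["X"],
    "Z": lambda v: v["Y"],
}
actual = solve(model)
# Evaluate the counterfactual 'if Y had been 0, then ...'.
counterfactual = solve(replace_equations(model, {"Y": 0}))
```

In the counterfactual solution, the ancestor `X` keeps its actual value (there is no backtracking), while the descendant `Z` changes along with `Y`, because only the equation for `Y` was replaced.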

As observed by Halpern and Hitchcock ([2015], p. 420), there are at least two different views of the relationship between SEMs and counterfactuals to be found in the literature.8 One view, adopted by Hitchcock ([2001a], pp. 274, 279–84, 287) and Woodward ([2005], pp. 42–3, 110), inter alia, is that structural equations are just summaries of sets of (non-backtracking) counterfactuals: a structural equation of the form $Y = f_Y(V_i, V_j, V_k, \ldots)$ simply summarizes a set of (non-backtracking) counterfactuals of the form $V_i = v_i \wedge V_j = v_j \wedge V_k = v_k \wedge \ldots \mathrel{\Box\!\!\rightarrow} Y = y$, which, taken together, say what the value of Y would be for each possible assignment of values to $V_i, V_j, V_k, \ldots$. More generally, on this view, an SEM, $\mathcal{M}$, ‘encodes’ a set of counterfactuals (namely, the set of counterfactuals that are evaluated as true when the ‘equation replacement’ method is applied to $\mathcal{M}$), which are given a non-backtracking semantics that is quite independent of $\mathcal{M}$.

This independent semantics might be a broadly Lewisian semantics (Lewis [1979]), according to which a counterfactual $V_i = v_i \wedge V_j = v_j \wedge V_k = v_k \wedge \ldots \mathrel{\Box\!\!\rightarrow} Y = y$ is true if Y = y holds in a world in which each of $V_i, V_j, V_k, \ldots$ is set to the value specified in the antecedent by a ‘small miracle’.9 Alternatively, one might appeal to a Woodwardian semantics (Woodward [2005]), according to which the relevant world to consider is one in which each of $V_i, V_j, V_k, \ldots$ is set to the specified value by an intervention.10 These accounts both avoid backtracking because on neither account are we to evaluate counterfactuals with reference to worlds in which their antecedents are realized as a result of different earlier conditions operating via the usual causal processes.

An alternative view of the relationship between structural equations and counterfactuals—adopted by Pearl ([2009], pp. 27–9, 33–8, 68–70, 202–15, 239–40)11—is that structural equations, rather than summarizing sets of counterfactuals, represent causal mechanisms, which are taken as primitives, and which are themselves taken to ground counterfactuals (see Halpern and Hitchcock [2015], p. 420). Pearl ([2009], p. 70), unlike Woodward,12 defines ‘interventions’ as ‘local surgeries’ (Pearl [2009], p. 223) on the causal mechanisms that he takes to be represented by structural equations. He takes such local surgeries to be formally represented by equation replacements (Pearl [2009], p. 70), and takes the equation replacement procedure to constitute a semantics for the sort of counterfactual conditional relevant to analysing actual causation (Pearl [2009], pp. 112–13, Chapter 7).13 As he puts it, this interpretation bases ‘the notion of interventions directly on causal mechanisms’ (Pearl [2009], p. 112), and takes ‘equation replacement’—which he construes as representing mechanism-modification—‘to provide a semantics for counterfactual statements’ (Pearl [2009], p. 113).

On the ‘primitive causal mechanisms’ view, the asymmetry of structural equations and the non-backtracking nature of the counterfactuals that (on this view) are given an ‘equation-replacement’ semantics follow from the asymmetry of the causal mechanisms themselves (cf. Pearl [1995], p. 672, [2009], pp. 27, 29, 69). Specifically, as Pearl notes, where mechanisms exhibit the desired causal asymmetry, the asymmetry of the equations representing those mechanisms (that is, the distinction between the dependent variable to appear on the left-hand side of the structural equation and the independent variables to appear on the right) can be ‘determined by appealing […] to the notion of hypothetical intervention and asking whether an external control over one variable in the mechanism necessarily affects the others’ (Pearl [2009], p. 228). Recalling that Pearl defines interventions in terms of local surgeries on mechanisms, the idea is that where an equation $Y = f_Y(V_i, V_j, V_k, \ldots)$ represents an asymmetric causal mechanism, the value of Y would change under local surgeries on the mechanism that affect the values of Vi, Vj, Vk, …, but the values of Vi, Vj, Vk, … would not change under local surgeries that affect the value of Y.

For present purposes, there is no need to choose between the ‘summaries of counterfactuals’ and ‘primitive causal mechanisms’ construals of structural equations. It is worth noting, however, that the choice between the two approaches may have implications for the potential reductivity of an analysis of actual causation in terms of SEMs. If SEMs represent sets of primitive causal mechanisms, then an analysis of actual causation in terms of SEMs will not reduce actual causation to non-causal facts. By contrast, on the ‘summaries of counterfactuals’ construal, an analysis of actual causation in terms of SEMs will potentially be reductive if the counterfactuals summarized can be given a semantics—perhaps along the lines of (Lewis [1979])—that doesn’t appeal to causal facts. Reduction will not, however, be achieved if one instead adopts a semantics that appeals to causal notions, such as Woodward’s ‘interventionist’ semantics (see Woodward [2005], p. 98).

Nevertheless, even if the analysis is non-reductive, it is plausible that it might still be illuminating. Woodward ([2005], pp. 104–7) has rather convincingly argued that, although non-reductive, an analysis of causation in terms of SEMs that summarize counterfactuals that are given by his interventionist semantics can be illuminating and can avoid vicious circularity.14 Meanwhile, Halpern and Hitchcock ([2015], p. 420) argue that if we adopt the primitive causal mechanisms construal of structural equations, we can still give an illuminating (though non-reductive) analysis of actual causation in terms of SEMs. In particular, they observe that, on this construal, SEMs themselves ‘do not directly represent relations of actual causation’, but merely an ‘underlying “causal structure”’ (Halpern and Hitchcock [2015], p. 420) in terms of which actual causal relations can be understood. A similar view appears to be taken by Pearl ([2009]). On Pearl’s view, such an analysis reduces actual causation to facts about ‘causal mechanisms’ (Pearl [2009], p. 112), which are construed as ‘invariant linkages’ (Pearl [2009], p. 223) or stable, law-like relationships (Pearl [2009], pp. 224–5, 239), which are not themselves to be analysed in terms of actual causation (cf. Halpern and Pearl [2005], p. 849).

I shall not argue here that Halpern and Pearl’s definition of actual causation, or the probabilistic extension that I shall propose in Section 8, can be converted into a fully reductive analysis of actual causation in non-causal terms. I agree with the authors just cited that an analysis can be illuminating without being fully reductive.

4 The Halpern and Pearl Definition of ‘Actual Cause’

Before stating Halpern and Pearl’s analysis of actual causation, it is necessary to introduce some more of their terminology. Recall that, given an SEM, $\mathcal{M}$, Halpern and Pearl ([2005], pp. 851–2) call a formula of the form Y = y a primitive event, where $Y \in \mathcal{Y}$ ($\mathcal{Y}$ being the subset of $\mathcal{V}$ that comprises the endogenous variables) and y is a possible value of Y. They take $\phi$ to be a variable ranging over primitive events and Boolean combinations of primitive events (Halpern and Pearl [2005], p. 852).

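Where $Y_1, \ldots, Y_n$ are variables in $\mathcal{Y}$ (each of which is distinct from any variable that appears in the formula $\phi$), Halpern and Pearl ([2005], p. 852) call a formula of the form $[Y_1 \leftarrow y_1, \ldots, Y_n \leftarrow y_n]\phi$, which they abbreviate $[\vec{Y} \leftarrow \vec{y}]\phi$, a ‘basic causal formula’. Such a formula says that if it had been the case that $Y_1 = y_1, \ldots,$ and $Y_n = y_n$, then it would have been the case that $\phi$ (Halpern and Pearl [2005], p. 852). As such $[Y_1 \leftarrow y_1, \ldots, Y_n \leftarrow y_n]\phi$ is simply a notational variant on $Y_1 = y_1 \wedge \ldots \wedge Y_n = y_n \mathrel{\Box\!\!\rightarrow} \phi$ (Pearl [2009], pp. 70, 108; cf. Halpern and Pearl [2005], p. 852).15 Finally, a ‘context’ is an assignment of values to the variables in $\mathcal{U}$ (that is, the exogenous variables in $\mathcal{V}$) (Halpern and Pearl [2005], p. 849). That is, where $\mathcal{U} = \{U_1, \ldots, U_n\}$, a context is an assignment of a value to each Ui: $U_1 = u_1, \ldots, U_n = u_n$. Such an assignment is abbreviated to $\vec{U} = \vec{u}$ or simply as $\vec{u}$ (Halpern and Pearl [2005], pp. 847, 849).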

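Given context $\vec{u}$, the structural equations for the endogenous variables in acyclic SEM, $\mathcal{M}$, determine a unique value for each of the variables in $\mathcal{Y}$. Halpern and Pearl ([2005], p. 852) write $(\mathcal{M}, \vec{u}) \models \phi$ if $\phi$ holds in the unique solution to the model that results from $\mathcal{M}$ when the equations in $\mathcal{E}$ for the exogenous variables are replaced with equations setting these variables to the values that they are assigned in the context $\vec{u}$. That is, $(\mathcal{M}, \vec{u}) \models \phi$ says that if the exogenous variables in $\mathcal{M}$ were to take the values $\vec{U} = \vec{u}$, then (according to $\mathcal{M}$) $\phi$ would hold. Moreover, Halpern and Pearl ([2005], p. 852) write that $(\mathcal{M}, \vec{u}) \models [\vec{Y} \leftarrow \vec{y}]\phi$ if $\phi$ holds in the unique solution to the model that results from $(\mathcal{M}, \vec{u})$ by replacing the equations for the variables $\vec{Y}$ with equations setting these variables equal to the values $\vec{y}$. That is, $(\mathcal{M}, \vec{u}) \models [\vec{Y} \leftarrow \vec{y}]\phi$ says that given context $\vec{u}$, the causal formula (that is, counterfactual) $[\vec{Y} \leftarrow \vec{y}]\phi$ holds (according to $\mathcal{M}$). By contrast, $(\mathcal{M}, \vec{u}) \not\models [\vec{Y} \leftarrow \vec{y}]\phi$ says that given context $\vec{u}$, the causal formula $[\vec{Y} \leftarrow \vec{y}]\phi$ does not hold (according to $\mathcal{M}$). Similarly, $(\mathcal{M}, \vec{u}) \not\models \phi$ says that, in the context $\vec{u}$, $\phi$ does not hold (according to $\mathcal{M}$).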

The types of events that Halpern and Pearl allow to be actual causes are primitive events and conjunctions of primitive events (for simplicity, I’ll take a primitive event to be a limiting case of a conjunction of primitive events in what follows). That is, actual causes have the form $X_1 = x_1 \wedge \ldots \wedge X_n = x_n$ (for $X_1, \ldots, X_n \in \mathcal{Y}$), abbreviated as $\vec{X} = \vec{x}$ (Halpern and Pearl [2001], p. 196, [2005], p. 853). The events that they allow as effects are primitive events and arbitrary Boolean combinations of primitive events (Halpern and Pearl [2001], p. 196; [2005], p. 853). They define actual cause as follows (Halpern and Pearl [2001], pp. 196–7).16,17,18,19

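AC: $\vec{X} = \vec{x}$ is an actual cause of $\phi$ in $(\mathcal{M}, \vec{u})$ [[that is, in model $\mathcal{M}$ given the context $\vec{u}$]] if the following three conditions hold:

- AC1. $(\mathcal{M}, \vec{u}) \models (\vec{X} = \vec{x}) \wedge \phi$ (that is, both $\vec{X} = \vec{x}$ and $\phi$ are true in the actual world).

- AC2. There exists a partition $(\vec{Z}, \vec{W})$ of $\mathcal{Y}$ [[that is, the set of endogenous variables in the model $\mathcal{M}$]] with $\vec{X} \subseteq \vec{Z}$ and some setting $(\vec{x}', \vec{w}')$ of the variables in $(\vec{X}, \vec{W})$ such that [[where]] $(\mathcal{M}, \vec{u}) \models Z = z^*$ for [[all]] $Z \in \vec{Z}$, [[the following holds:]]

  - (a) $(\mathcal{M}, \vec{u}) \models [\vec{X} \leftarrow \vec{x}', \vec{W} \leftarrow \vec{w}']\neg\phi$. In words, changing $(\vec{X}, \vec{W})$ from $(\vec{x}, \vec{w})$ to $(\vec{x}', \vec{w}')$ changes $\phi$ from true to false.

  - (b) $(\mathcal{M}, \vec{u}) \models [\vec{X} \leftarrow \vec{x}, \vec{W} \leftarrow \vec{w}', \vec{Z}' \leftarrow \vec{z}^*]\phi$ for all subsets $\vec{Z}'$ of $\vec{Z}$. In words, setting $\vec{W}$ to $\vec{w}'$ should have no effect on $\phi$, as long as $\vec{X}$ is kept at its [[actual]] value $\vec{x}$, even if all the variables in an arbitrary subset of $\vec{Z}$ are set to their original values in the context $\vec{u}$.

- AC3. $\vec{X}$ is minimal; no [[strict]] subset of $\vec{X}$ satisfies conditions AC1 and AC2. Minimality ensures that only those elements of the conjunction $\vec{X} = \vec{x}$ that are essential for changing $\phi$ in AC2(a) are considered part of a cause; inessential elements are pruned.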

As Halpern and Pearl ([2001], p. 197) observe, the core of the definition is AC2. They observe that, informally, the variables in $\vec{Z}$ can be thought of as describing the ‘active causal process’ from $\vec{X}$ to $\phi$ (Halpern and Pearl [2001], p. 197).20 They demonstrate (Halpern and Pearl [2005], pp. 879–80) that where a partition ($\vec{Z}, \vec{W}$) is such that AC2 is satisfied, all variables in $\vec{Z}$ lie on a directed path from a variable in $\vec{X}$ to a variable in $\phi$. The variables in $\vec{W}$, on the other hand, are not part of the active causal process (Halpern and Pearl [2005], p. 854).

Condition AC2(a) says that there exists a (non-actual) assignment $\vec{x}'$ of possible values to the variables $\vec{X}$ such that if the variables $\vec{X}$ had taken the values $\vec{x}'$, while the variables $\vec{W}$ had taken the values $\vec{w}'$, then $\neg\phi$ would have held (Halpern and Pearl [2005], p. 854). Condition AC2(a) thus doesn’t require that $\phi$ straightforwardly counterfactually depends upon $\vec{X} = \vec{x}$ but rather requires (more weakly) that $\phi$ counterfactually depends upon $\vec{X} = \vec{x}$ under the contingency (that is, when it is built into the antecedent of the counterfactual) that $\vec{W} = \vec{w}'$ (Halpern and Pearl [2005], p. 854).

On the other hand, condition AC2(b) is designed to ensure that it is $\vec{X} = \vec{x}$, operating via the directed path(s) upon which the variables in $\vec{Z}$ lie, rather than $\vec{W} = \vec{w}'$, that is causally responsible for $\phi$. It does this by requiring that if the variables in $\vec{X}$ had taken the values $\vec{x}$, and any arbitrary subset $\vec{Z}'$ of $\vec{Z}$ had taken their actual values $\vec{z}^*$, while the variables in $\vec{W}$ had taken the values $\vec{w}'$, then $\phi$ would still have held (Halpern and Pearl [2005], pp. 854–5).
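The way in which AC2(a) and AC2(b) work together can be made concrete with a minimal Python sketch that tests both conditions for a given partition and setting. Everything named here is an illustrative assumption of mine rather than part of Halpern and Pearl's presentation: the helpers `solve`, `subsets`, and `ac2_holds`, and a hypothetical toy model in which an effect `E` occurs if either of two variables `A` or `B` takes the value one.

```python
from itertools import chain, combinations


def solve(equations, fixed):
    """Solve an acyclic model with the variables in `fixed` held at given values."""
    values = dict(fixed)
    pending = [v for v in equations if v not in values]
    while pending:
        progressed = False
        for var in list(pending):
            try:
                values[var] = equations[var](values)
            except KeyError:
                continue  # a parent is not yet computed
            pending.remove(var)
            progressed = True
        if not progressed:
            raise ValueError("the equations are cyclic")
    return values


def subsets(variables):
    """All subsets of `variables`, including the empty set."""
    return chain.from_iterable(
        combinations(variables, r) for r in range(len(variables) + 1))


def ac2_holds(equations, context, x_actual, x_alt, w_alt, z_vars, phi):
    """Check AC2 for one partition (z_vars, variables of w_alt) and one setting."""
    actual = solve(equations, context)
    # AC2(a): with X at its alternative value and W at w', phi must fail.
    if phi(solve(equations, {**context, **x_alt, **w_alt})):
        return False
    # AC2(b): with X at its actual value and W at w', phi must hold however
    # many of the Z variables are also held at their actual values.
    for z_subset in subsets(z_vars):
        z_star = {z: actual[z] for z in z_subset}
        if not phi(solve(equations, {**context, **x_actual, **w_alt, **z_star})):
            return False
    return True


# Hypothetical model: A = U1, B = U2, E = max(A, B); actual context U1 = U2 = 1.
equations = {
    "A": lambda v: v["U1"],
    "B": lambda v: v["U2"],
    "E": lambda v: max(v["A"], v["B"]),
}
context = {"U1": 1, "U2": 1}
effect = lambda v: v["E"] == 1

with_contingency = ac2_holds(
    equations, context, {"A": 1}, {"A": 0}, {"B": 0}, ["A", "E"], effect)
without_contingency = ac2_holds(
    equations, context, {"A": 1}, {"A": 0}, {}, ["A", "B", "E"], effect)
```

In this toy case `E = 1` does not straightforwardly depend upon `A = 1`, so AC2 fails when no contingency is built in, but it is satisfied under the contingency `B = 0`.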

Halpern and Pearl’s definition AC relativizes the notion of actual causation to an SEM. This might be thought a slightly odd feature, since ordinarily we take actual causation to be an objective feature of the world that is not model-relative. Others who have attempted to analyse actual causation in terms of SEMs have sought to avoid model-relativity by suggesting that $\vec{X} = \vec{x}$ is an actual cause of $\phi$ simpliciter, provided that there exists at least one ‘appropriate’ SEM relative to which $\vec{X} = \vec{x}$ satisfies the criteria for being a (model-relative) actual cause of $\phi$ (Hitchcock [2001a], p. 287; cf. Woodward [2008], p. 209).21 We could use this strategy to extract a non-model-relative notion of actual causation from Halpern and Pearl’s definition. Of course, this strategy requires us to say what constitutes an appropriate SEM. Though this isn’t an altogether straightforward task, progress has been made (see Hitchcock [2001a], p. 287; Halpern and Hitchcock [2010], pp. 394–9; Blanchard and Schaffer [forthcoming], Section 1). I won’t review all of the criteria for model appropriateness that have been advanced in the literature; suffice it to say that the SEMs outlined below satisfy all of the standard criteria that have been suggested.

One criterion is worth mentioning, however. Hitchcock has suggested that, to be appropriate, a model, $\mathcal{M}$, must ‘entail no false counterfactuals’ (Hitchcock [2001a], p. 287). By this he means that evaluating counterfactuals with respect to $\mathcal{M}$ by means of the equation replacement method doesn’t lead to evaluations of counterfactuals as true when they are, in fact, false (Hitchcock [2001a], p. 283).22 I shall discuss an analogous criterion for the appropriateness of probabilistic causal models when I discuss the latter in Section 7 below.

5 Preemption Again

To see that Halpern and Pearl’s definition AC delivers the correct result in the simple preemption case described in Section 2 above, it is necessary to provide an SEM. I will call the model developed in this section ‘$\mathcal{PE}$’.

Let C, B, S, T, and D be binary variables, where C takes a value of one if Corleone orders Sonny to shoot and kill McCluskey, and takes a value of zero if he doesn’t; B takes a value of one if Barzini orders Turk to shoot and kill McCluskey, zero if he doesn’t; S takes a value of one if Sonny shoots, zero if he doesn’t; T takes a value of one if Turk shoots, zero if he doesn’t; and D takes a value of one if McCluskey dies, and zero if he survives. To these variables, let us add two more binary variables: CI, which takes a value of one if Corleone intends to issue his order and zero if he doesn’t; and BI, which takes a value of one if Barzini intends to issue his order, and zero if he doesn’t.23

The system of structural equations for this example is as follows:

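(i) $CI = 1$;

(ii) $BI = 1$;

(iii) $C = CI$;

(iv) $B = BI$;

(v) $S = C$;

(vi) $T = \mathrm{Min}\{B, 1 - S\}$;

(vii) $D = \mathrm{Max}\{S, T\}$.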

In our model $\mathcal{PE}$, $\mathcal{U} = \{CI, BI\}$. That is, the variables CI and BI are the exogenous variables. Equations (i) and (ii) simply state their actual values, CI = 1 and BI = 1, representing the fact that Corleone forms his intention and that Barzini forms his intention. Thus, the actual context is $\vec{u} = \{CI = 1, BI = 1\}$.

In $\mathcal{PE}$, $\mathcal{Y} = \{C, B, S, T, D\}$. That is, the variables C, B, S, T, and D are the endogenous variables. The structural equations for these variables express their values as a function of other variables in the model. Equation (iii) says that if Corleone had formed the intention to issue his order, then he would have issued it, but that he wouldn’t have issued it if he hadn’t formed the intention to do so. We might express this informally by saying that Corleone issues his order just in case he forms the intention to. Equation (iv) then says that Barzini issues his order just in case he forms the intention to; Equation (v) says that Sonny shoots just in case Corleone issues his order; Equation (vi) says that Turk shoots just in case Barzini issues his order and Sonny does not shoot; and Equation (vii) says that McCluskey dies just in case either Sonny or Turk shoots.

Given the context, $\vec{u} = \{CI = 1, BI = 1\}$, the values of the (endogenous) variables in $\mathcal{Y}$ are uniquely determined in accordance with the structural equations. The unique solution to our set of structural equations is: CI = 1, BI = 1, C = 1, B = 1, S = 1, T = 0, D = 1. That is, Corleone forms the intention to issue his order; Barzini forms the intention to issue his order; Corleone issues his order; Barzini issues his order; Sonny shoots; Turk doesn’t shoot; McCluskey dies.
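The solution just stated can be checked mechanically. The following Python sketch encodes the preemption model under two assumptions of mine: that ‘Turk shoots just in case Barzini issues his order and Sonny does not shoot’ can be written as `min(B, 1 - S)`, and that ‘McCluskey dies just in case either Sonny or Turk shoots’ can be written as `max(S, T)`. The helper `solve` and the dictionary representation are likewise illustrative choices.

```python
def solve(equations, context):
    """Solve an acyclic model given values for the exogenous variables."""
    values = dict(context)
    pending = [v for v in equations if v not in values]
    while pending:
        progressed = False
        for var in list(pending):
            try:
                values[var] = equations[var](values)
            except KeyError:
                continue  # a parent is not yet computed
            pending.remove(var)
            progressed = True
        if not progressed:
            raise ValueError("the equations are cyclic")
    return values


# Equations (iii)-(vii) for the endogenous variables.
PE_EQUATIONS = {
    "C": lambda v: v["CI"],                  # Corleone orders iff he intends to
    "B": lambda v: v["BI"],                  # Barzini orders iff he intends to
    "S": lambda v: v["C"],                   # Sonny shoots iff Corleone orders
    "T": lambda v: min(v["B"], 1 - v["S"]),  # assumed encoding of Equation (vi)
    "D": lambda v: max(v["S"], v["T"]),      # assumed encoding of Equation (vii)
}
# Equations (i) and (ii): the actual context.
ACTUAL_CONTEXT = {"CI": 1, "BI": 1}

solution = solve(PE_EQUATIONS, ACTUAL_CONTEXT)
print(solution)
```

Under these encodings the computed values agree with the solution given in the text: Sonny shoots, Turk does not, and McCluskey dies.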

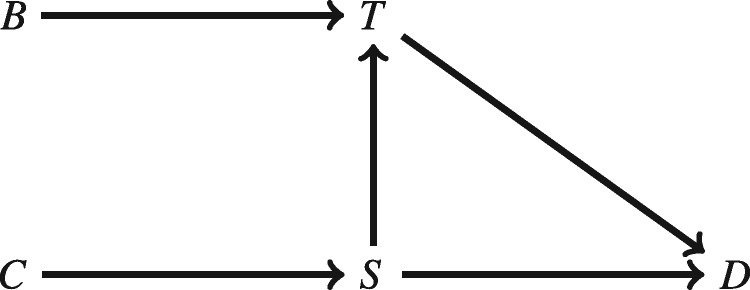

We can give a graphical representation by following the conventions for drawing such graphs that were outlined in Section 3. Following Halpern and Pearl ([2005], p. 862), I omit exogenous variables from the graph. The resulting graph is given as Figure 1.

Figure 1. A graphical representation of the model $\mathcal{PE}$.

With the model of our preemption case in hand, we are in a position to see that AC correctly diagnoses Corleone’s order (C = 1) as an actual cause of McCluskey’s death (D = 1). To see that it does, let $\vec{X} = \{C\}$, with $\vec{x} = \{C = 1\}$ and $\vec{x}' = \{C = 0\}$. Let $\phi$ be D = 1. In the solution to the structural equations, given the actual context, $\vec{u} = \{CI = 1, BI = 1\}$, C = 1 and D = 1 hold. So condition AC1 of AC is satisfied. Condition AC3 is also satisfied, since $\vec{X} = \{C\}$ has no (non-empty) strict subsets. So everything hinges on whether AC2 is satisfied.

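To see that AC2 is satisfied, let $\vec{Z} = \{C, S, D\}$, let $\vec{W} = \{B, T\}$, and let $\vec{w}' = \{B = 1, T = 0\}$. First note that AC2(a) is satisfied because in the set of structural equations that results from replacing Equation (iii) with Equation (iii′) C = 0, and the Equations (iv) and (vi) with Equation (iv′) B = 1 and Equation (vi′) T = 0, the solution for D is D = 0. This means that it is true that

$$(\mathcal{PE}, \vec{u}) \models [C \leftarrow 0, B \leftarrow 1, T \leftarrow 0]\,\neg(D = 1).$$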

That is, in the model $\mathcal{PE}$ and the context $\vec{u}$, it is true that if Corleone hadn’t issued his order and Barzini had issued his order but Turk hadn’t shot, then McCluskey wouldn’t have died.

To see that AC2(b) is satisfied, note that the structural equations of the model ensure that if C = 1, then D = 1, no matter what values are taken by the variables in $\vec{W}$, and that this remains so even if we build into the antecedent of the relevant counterfactual the additional information that S = 1 and/or D = 1 holds (that is, even if an arbitrary subset of the variables in $\vec{Z} \setminus \vec{X}$ were to take the original values that they received in the context $\vec{u}$). For instance it is true that

$$(M, \vec{u}) \models [C \leftarrow 1, B \leftarrow 1, T \leftarrow 0, S \leftarrow 1]\,(D = 1)$$

That is, given the model and the context, D would have taken value D = 1 if C had taken its actual value, C = 1, while B and T had taken the values B = 1 and T = 0, even if S had taken its actual value, S = 1.

So AC2(b) is satisfied. We have already seen that AC1, AC3, and AC2(a) are satisfied. Thus AC yields the correct verdict that C = 1 (Corleone’s order) is an actual cause of D = 1 (McCluskey’s death).

AC also yields the correct verdict that B = 1 (Barzini’s order) is not an actual cause of D = 1. In order to get the sort of contingent dependence of D = 1 upon B = 1 required by condition AC2(a), it will be necessary for S to take the non-actual value S = 0. The trouble is that if it were also the case that certain subsets of the variables on the Barzini process were to take their actual values (in particular, the set {T}), then variable D would take the value D = 0, contrary to the requirement of condition AC2(b).

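For example, consider the obvious partition $\vec{Z} = \{B, T, D\}$ and $\vec{W} = \{C, S\}$, and consider the assignment $\vec{x}' = (B = 0)$, $\vec{w}' = (C = 1, S = 0)$. Condition AC2(a) is satisfied for this partition and this assignment.24 In particular, it is true that

$$(M, \vec{u}) \models [B \leftarrow 0, C \leftarrow 1, S \leftarrow 0]\,(D = 0)$$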

That is to say, in this model and context, if Barzini hadn’t issued his order and Corleone had issued his order, but Sonny hadn’t shot, then McCluskey wouldn’t have died.

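But notice that AC2(b) is not satisfied for this partition and assignment of values to $\vec{W}$. For take $\vec{Z}' = \{T\}$, and observe that

$$(M, \vec{u}) \not\models [B \leftarrow 1, C \leftarrow 1, S \leftarrow 0, T \leftarrow 0]\,(D = 1)$$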

That is, in the model and the context, it is false that if B had taken its actual value B = 1, and the variables in $\vec{W}$ had taken the values C = 1 and S = 0 (the values that they receive under the assignment $\vec{w}'$), while the subset $\vec{Z}' = \{T\}$ of the variables in $\vec{Z}$ had taken their actual values (namely, T = 0), then it would have been that D = 1. Intuitively, it is not the case that if Barzini had issued his order, Corleone had issued his order, but Sonny hadn’t shot, and (as was actually the case) Turk hadn’t shot, then McCluskey would have died.

Nor is there any other partition of the endogenous variables such that AC2 is satisfied. In particular, none of the remaining variables on the Barzini process, {T, D}, can be assigned to $\vec{W}$ instead of $\vec{Z}$, for the value of each of these variables ‘screens off’ B from D. The result would be that for any assignment of values to the variables in $\vec{W}$, not both AC2(a) and AC2(b) are satisfied. On the other hand, at least one of the variables on the initial Corleone process, {C, S}, must be an element of $\vec{W}$, since only by supposing that such a variable takes a value of zero do we get the contingent dependence required by AC2(a). But reassigning the other variable to $\vec{Z}$ will not affect the fact that AC2(b) fails to hold: it will remain true that if B = 1 but T = 0, and some variable on the Corleone process had taken a value of zero, then it would have been that D = 0, so that AC2(b) is violated.
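The verdicts just reached can be checked mechanically with a brute-force sketch. It rests on stated assumptions: the variables are binary, the putative cause is a single variable (so AC1 and AC3 are not tested separately), the effect variable is kept out of the contingency set W, and AC2(b) is read as requiring the effect to persist for every subset of W held at its contingency values and every subset of the remaining variables held at their actual values. The function names are illustrative only.

```python
from itertools import chain, combinations, product

CONTEXT = {"CI": 1, "BI": 1}  # both dons form their intentions
EQUATIONS = [  # Equations (iii)-(vii), in causal order
    ("C", lambda v: v["CI"]),
    ("B", lambda v: v["BI"]),
    ("S", lambda v: v["C"]),
    ("T", lambda v: min(v["B"], 1 - v["S"])),
    ("D", lambda v: max(v["S"], v["T"])),
]
ENDOGENOUS = [name for name, _ in EQUATIONS]


def solve(interventions=None):
    """Solve the model in the actual context, overriding intervened-upon variables."""
    interventions = interventions or {}
    values = dict(CONTEXT)
    for name, equation in EQUATIONS:
        values[name] = interventions[name] if name in interventions else equation(values)
    return values


def subsets(items):
    """All subsets of items, as tuples."""
    items = list(items)
    return chain.from_iterable(combinations(items, r) for r in range(len(items) + 1))


def check_witness(cause, effect, W, w_prime):
    """Test AC2(a) and AC2(b) for one contingency set W with setting w_prime."""
    actual = solve()
    x, y = actual[cause], actual[effect]
    z_rest = [v for v in ENDOGENOUS if v != cause and v not in W]
    # AC2(a): flipping the cause under the contingency must undo the effect.
    if solve({cause: 1 - x, **w_prime})[effect] == y:
        return False
    # AC2(b): with the cause at its actual value, the effect must persist for
    # every subset of W at its contingency values and of Z at its actual values.
    for w_sub in subsets(W):
        for z_sub in subsets(z_rest):
            setting = {cause: x}
            setting.update({w: w_prime[w] for w in w_sub})
            setting.update({z: actual[z] for z in z_sub})
            if solve(setting)[effect] != y:
                return False
    return True


def find_witness(cause, effect):
    """Search every contingency set and setting; return the first witness or None."""
    others = [v for v in ENDOGENOUS if v not in (cause, effect)]
    for W in subsets(others):
        for values in product([0, 1], repeat=len(W)):
            w_prime = dict(zip(W, values))
            if check_witness(cause, effect, W, w_prime):
                return W, w_prime
    return None


print(check_witness("C", "D", ("B", "T"), {"B": 1, "T": 0}))  # partition used in the text for C = 1
print(check_witness("B", "D", ("C", "S"), {"C": 1, "S": 0}))  # the 'obvious partition' for B = 1
print(find_witness("B", "D"))                                  # exhaustive search for B = 1
```

The exhaustive search is feasible here only because the model has five binary endogenous variables; it is meant as a check on the reasoning in the text, not as a general algorithm.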

So AC gives the correct diagnosis of this sort of preemption. It does so, intuitively, on the correct grounds. Specifically, the reason Corleone’s order is counted as a cause is that (i) given Turk’s non-shooting, McCluskey’s death depends upon Corleone’s order; and (ii) there is a complete causal process running from Corleone’s order to McCluskey’s death, as indicated by the fact that for arbitrary subsets of events on the Corleone process, it is true that if Corleone had issued his order, and Turk hadn’t shot, and those events had occurred, then McCluskey would have died.

By contrast, Barzini’s order isn’t counted as a cause because although (i) given Sonny’s non-shooting, McCluskey’s death counterfactually depends upon Barzini’s order,25 nevertheless, (ii) there is no complete causal process from Barzini’s order to McCluskey’s death as indicated by the fact that, for example, if Barzini issued his order and Sonny didn’t shoot but (as was actually the case) Turk didn’t shoot, then McCluskey would have survived.

The example that we have been considering is one of so-called early preemption. Halpern and Pearl show how their account provides similarly intuitive treatments of symmetric overdetermination and partial causation (Halpern and Pearl [2001], pp. 197–8, [2005], pp. 856–8), hastening and delaying (Halpern and Pearl [2001], pp. 198–9, [2005], pp. 859–60), late preemption (Halpern and Pearl [2001], pp. 199–200, [2005], pp. 861–4), causation by omission (Halpern and Pearl [2005], pp. 865–7), double prevention (Halpern and Pearl [2005], pp. 867–9), and a range of other cases (Halpern and Pearl [2001], pp. 200–2).

There are, however, some more subtle cases that they claim their definition does not diagnose correctly (Halpern and Pearl [2005], pp. 869–77). They take the view that, as it stands, AC is too liberal. They attempt to deal with the problem cases (Halpern and Pearl [2005], p. 870) by appealing to the notion of an extended causal model. This is simply defined as an ordered pair, , where is an SEM, and is a set of ‘allowable’ settings for the endogenous variables, .26 A setting of a subset of the endogenous variables is allowable if it can be extended to a setting in . The idea, then, is to require that the variable setting , appealed to in condition AC2 of their definition AC, be an allowable setting. Halpern and Pearl wish to count as non-allowable those settings that correspond to ‘unreasonable’ (Halpern and Pearl [2005], p. 869) or ‘fanciful’ (Halpern and Pearl [2005], p. 870) scenarios.

Elsewhere in the structural equations literature, attempts have been made to analyse actual causation in terms of SEMs that represent only ‘serious possibilities’ (Hitchcock [2001a], pp. 287, 294, 298; Woodward [2005], pp. 86–91). More recently, attempts have been made to provide a more rigorous account of allowable settings in terms of normality rankings over possible worlds (Halpern [2008], pp. 203–5; Halpern and Hitchcock [2010], pp. 400–3, [2015], Section 6; cf. Halpern and Pearl [2001], p. 202).

We needn’t go into the details here. The cases that are claimed to require a restriction to allowable settings tend to be rather subtle. Perhaps a fully adequate analysis of probabilistic actual causation would require a similar restriction. It seems plausible that the criteria for allowable settings that have been developed in the literature on deterministic actual causation carry over to the probabilistic case. Indeed, one of the criteria for normality that has been suggested is statistical frequency (Halpern and Hitchcock [2010], p. 402, [2015], pp. 429–30); clearly such a notion is applicable in a probabilistic context. Yet, Halpern ([unpublished], Section 1) and Blanchard and Schaffer ([forthcoming], Section 3) have raised doubts about the need to supplement Halpern and Pearl’s account with a normality-based restriction on allowable settings. Consequently, I will just focus upon extending the unrestricted version of their definition to the probabilistic case here.

A modification of AC that I will consider in some detail (because it is very plausible, and plausibly ought to be carried across to the probabilistic case too) is what Halpern and Pearl ([2005], p. 859) call ‘a contrastive extension to the definition of cause’. It is rather plausible that actual causation is contrastive in nature (Hitchcock [1996a], [1996b]; Schaffer [2005], [2013]). Often, our judgements of actual causation, rather than taking the form ‘ actually caused ’, instead take the form ‘ rather than actually caused rather than ’, where and is incompatible with (Halpern and Pearl [2005], p. 859). Or, more generally, ‘ rather than actually caused rather than ’, where denotes a set of formulas of the form such that for each such formula, , and where represents a set of formulas of the form such that for each such formula, is incompatible with (cf. Schaffer [2005], pp. 327–8). Following Schaffer ([2005], p. 329), I will call and ‘contrast sets’. The view that actual causation is contrastive both on the cause and on the effect side is thus the view that actual causation is a quaternary relation (Schaffer [2005], p. 327, [2013], p. 46) with , and as its relata, rather than a binary relation with just and as its relata.27 The suggestion is that claims like ‘ is an actual cause of ’ are incomplete and liable to be ambiguous, since no contrast sets are explicitly specified.28

To illustrate the plausibility of the view that actual causation is contrastive, consider a case where Doctor can administer no dose, one dose, or two doses of medicine to Patient. Patient will fail to recover if no dose is administered, but will recover if either one or two doses are administered. Let us suppose that Doctor in fact administers two doses, and Patient recovers. It would be natural to model this causal scenario using a ternary variable M, which takes value 0, 1, or 2 according to whether Doctor administers 0, 1, or 2 doses of medicine, and a binary variable, R, which takes value 0 if Patient fails to recover and 1 if she recovers. We can also add an exogenous variable, I, which takes value 0 if Doctor intends to administer zero doses, 1 if Doctor intends to administer one dose, and 2 if Doctor intends to administer two doses. The three structural equations for this case are then I = 2, M = I, and an equation for R on which R = 1 if M = 1 or M = 2, and R = 0 if M = 0. The actual solution is I = 2, M = 2, and R = 1.

I think that the natural reaction to the claim, ‘Doctor’s administering two doses of Medicine caused Patient to recover’, is one of ambivalence (at least if there are no further contextual factors to pick out one of the two alternative actions available to Doctor as the relevant one). While one of the alternative actions available to Doctor (M = 0) would have made a difference to whether or not Patient recovered, the other (M = 1) would have made no difference. A natural interpretation of our ambivalent attitude is that causation is contrastive in nature, and that ‘Doctor’s administering two doses of Medicine caused Patient to recover’ is ambiguous between ‘Doctor’s administering two doses rather than no doses of Medicine caused Patient to recover’ (to which most people would presumably assent) and ‘Doctor’s administering two doses rather than one dose of Medicine caused Patient to recover’ (to which most people would presumably not assent).

Yet, as it stands, AC unequivocally yields the result that M = 2 was an actual cause of R = 1. Suppose that , and that is R = 1. Since M = 2 and R = 1 are the values of M and R in the solution to the structural equations of the model described, given the actual context AC1 is satisfied. Since has no (non-empty) strict subsets, AC3 is satisfied. To see that AC2 is satisfied, consider the partition () of the endogenous variables in our model such that and . Condition AC2(a) will be satisfied if, for some assignment of values to the variables in , it is true that if the variables in had taken those values and M had taken value M = 0, then R would have taken value R = 0. Since there are no variables in , AC2(a) reduces to the requirement that if M had taken value M = 0, then R would have taken the value R = 0. Since our model implies that this is so, AC2(a) is satisfied. Finally, condition AC2(b) is rather trivially satisfied. Since there are no variables in or in , AC2(b) just reduces to the requirement that if it had been that M = 2, then it would have been that R = 1. Since our model implies that this is so, AC2(b) is satisfied. Since, as we have seen, AC1, AC2(a), and AC3 are also all satisfied, AC yields the result that M = 2 is an actual cause of R = 1.

AC is unequivocal that M = 2 is a cause of R = 1, whereas intuition is equivocal. It would thus seem desirable to modify AC to bring it into closer alignment with intuition. Specifically, it would seem desirable to adjust AC so that it can capture the nuances of our contrastive causal judgements (Halpern and Pearl [2005], p. 859). This is easily achieved. To turn AC into an analysis of rather than being an actual cause of , we simply need to require that AC2(a) hold not just for some non-actual setting of , but for precisely the setting (cf. Halpern and Pearl [2005], p. 859). More generally, to turn AC into an analysis of rather than being an actual cause of , where denotes a set of formulas of the form , we simply need to require that AC2(a) hold for every formula of the form in .

This gives the correct results in the example just considered. The reason that the original version of AC yielded the unequivocal result that M = 2 is an actual cause of R = 1 is that the original version of AC2(a) requires simply that there be some other alternative value of M such that if M had taken that alternative value (and the variables in had taken some possible assignment), then it would have been the case that R = 0. This condition is satisfied because M = 0 is such a value. The revised version of AC just proposed does not give an unequivocal result about whether M = 2 is an actual cause of R = 1. Indeed, it doesn’t yield any result until a contrast set for M = 2 is specified.

The revised version of AC does yield the verdict that M = 2 rather than M = 0 was an actual cause of R = 1. Specifically, taking the contrast set to be {M = 0}, the revised version of AC is satisfied for precisely the same reason that taking $\vec{x}'$ to be M = 0 allowed us to show that the original version of AC is satisfied when we consider M = 2 as a putative cause of R = 1. The revised version of AC also yields the verdict that M = 2 rather than M = 1 is not a cause of R = 1. This is because the revised version of AC2(a) is violated when we take {M = 1} to be the contrast set. Specifically, it’s not the case that if M had taken the value M = 1 (and the variables in $\vec{W}$ had taken some possible assignment, a trivially satisfied condition in this case because $\vec{W} = \emptyset$),29 then variable R would have taken R = 0. The revised AC thus gives the intuitively correct results about these contrastive causal claims. Moreover, it can explain the equivocality of intuition about the claim ‘M = 2 was an actual cause of R = 1’ in terms of its ambiguity between ‘M = 2 rather than M = 0 was an actual cause of R = 1’ (which it evaluates as true) and ‘M = 2 rather than M = 1 was an actual cause of R = 1’ (which it evaluates as false).
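The two contrastive verdicts can be reproduced with a small sketch of the medicine model. It assumes the recovery equation described in the text (recovery just in case at least one dose is administered) and exploits the fact that the contingency set is empty in this model, so the contrastive AC2(a) reduces to a single counterfactual about M. The function names are illustrative.

```python
def recovery(doses):
    """Equation for R: Patient recovers (1) iff at least one dose is administered."""
    return 1 if doses >= 1 else 0


def solve(intention=2, do_m=None):
    """Solve I, M, R; do_m replaces the equation M = I by an intervention on M."""
    m = intention if do_m is None else do_m
    return {"I": intention, "M": m, "R": recovery(m)}


def contrastive_cause(actual_m, contrast_m, effect_r):
    """Is M = actual_m rather than M = contrast_m an actual cause of R = effect_r?"""
    actual = solve()
    # AC1: the putative cause and effect must actually obtain.
    if actual["M"] != actual_m or actual["R"] != effect_r:
        return False
    # Contrastive AC2(a): setting M to the contrast value must undo the effect.
    undone = solve(do_m=contrast_m)["R"] != effect_r
    # AC2(b): setting M to its actual value must yield the effect.
    preserved = solve(do_m=actual_m)["R"] == effect_r
    return undone and preserved


print(contrastive_cause(2, 0, 1))  # M = 2 rather than M = 0
print(contrastive_cause(2, 1, 1))  # M = 2 rather than M = 1
```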

As suggested above, we may find it plausible to build contrast in on the effect side too (Schaffer [2005], p. 328; Woodward [2005], p. 146). To change our previous example somewhat, suppose that one dose of medicine leads to speedy recovery, two doses leads to slow recovery (two doses is an ‘overdose’ that would adversely affect Patient’s natural immune response), while zero doses leads to no recovery. Suppose that Doctor in fact administers two doses, and so Patient recovers slowly. In this case, we might reasonably represent the outcome using a variable that has three possible values: R = 0 represents no recovery, R = 1 represents speedy recovery, and R = 2 represents slow recovery. Taking M and I to be variables with the same possible values (with the same interpretations) as before, the structural equations for this new case are I = 2, M = I, and R = M. The actual solution is I = 2, M = 2, and R = 2.

We might wish to have the capacity to analyse causal claims like ‘Doctor’s administering two doses rather than one dose of Medicine caused Patient to recover slowly rather than quickly’. It is unproblematic to modify AC to achieve this. In order to analyse a claim of the form ‘ rather than actually caused rather than ’ we simply need to replace with in condition AC2(a) (Halpern and Pearl [2005], p. 859) and require that the modified AC2(a) hold not just for some non-actual setting of , but for precisely the setting (cf. Halpern and Pearl [2005], p. 859). This yields the correct result in the present case because while the actual value of M is M = 2 and the actual value of R is R = 2, it is true that if M had taken the value M = 1, then R would have taken the value R = 1.

More generally, suppose that we wish to analyse claims of the form ‘ rather than actually caused rather than ’, where denotes a set of formulas of the form such that for each such formula, , and where represents a set of formulas of the form such that for each such formula, is incompatible with . To do this, we simply need to require that for each formula of the form in , there is some formula of the form in such that AC2(a) holds when is replaced with , and the non-actual setting of appealed to in AC2(a) is taken to be precisely the setting (cf. Schaffer [2005], p. 348).30

This revised definition reduces to the original AC in the case where the putative cause is primitive event X = x (rather than a conjunction of primitive events), and the putative effect is primitive event Y = y (rather than an arbitrary Boolean combination of primitive events), and the variables X and Y representing those primitive events are binary, with their alternative possible values being and (). In such a case, the setting of the putative cause variables appealed to in the unmodified AC is just the setting X = x, and the variable representing the putative effect is simply to be replaced by Y = y. Since, in this case, there is only one possible but non-actual value of X—namely, the value is automatically the non-actual setting of the putative cause variable appealed to in the unmodified AC2(a). Likewise, in such a case, (which appears in AC2(a)) just means , which, because Y is binary, just corresponds to . Moreover, in such a case, and automatically serve as the contrast sets appealed to in AC2(a) where AC is modified (in the way suggested in the previous paragraph) to incorporate contrastivity. This is because there are no other possible but non-actual values of the putative cause and effect variables. So, under these circumstances, both the original and revised version of AC2(a) require the same thing, namely, that Y would take the value if X were to take the value and the variables were to take the values . Since the modified and unmodified versions of AC differ only in AC2(a), it follows that both versions of the analysis will yield the same results in such cases.

This explains why the unmodified definition AC works well in our preemption scenario, where binary variable C taking value C = 1 (representing Corleone’s order) is considered as a putative cause of binary variable D taking value D = 1 (representing McCluskey’s death). Since, where the cause and effect variables are binary, the relevant contrasts are selected automatically, saying that C = 1 is an actual cause of D = 1 is effectively equivalent to saying that C = 1 rather than C = 0 is an actual cause of D = 1 rather than D = 0.

In closing this section, it is worth noting that although the causal notion upon which Halpern and Pearl ([2001], [2005]) focus is that of actual causation, other causal notions can be fruitfully analysed in the SEM framework. In fact, Pearl ([2009]), Hitchcock ([2001b]), and Woodward ([2005]) analyse a range of causal notions in terms of SEMs, including ‘net effect’ (Hitchcock [2001b], p. 372), ‘total cause’ (Woodward [2005], p. 51), ‘component effect’ (Hitchcock [2001b], pp. 374, 390–5), ‘direct cause’ (Woodward [2005], p. 55), ‘direct effect’ (Pearl [2009], pp. 126–8), and ‘contributing cause’ (Woodward [2005], p. 59). While my interest in this article is with actual causation rather than these other causal notions, I do think that there is another causal notion that is very closely related to that of actual causation, and which can be defined simply as a corollary to (the modified) AC, namely, that of ‘prevention’. I’m inclined to think that prevention is just the flip-side of actual causation. Specifically, it seems plausible to me that, if (by the lights of the modified AC) (rather than ) is an actual cause of rather than , then (rather than ) prevents rather than from happening. I shall discuss the issue of probabilistic prevention in Section 8.

6 The Probabilistic Case

In attempting to analyse probabilistic actual causation, philosophers have typically appealed to the notion of ‘probability raising’. The idea is that, at least when circumstances are benign—for example, when there are no preempted potential causes of the effect—an actual cause raises the probability of its effect.31 Turning this insight into a full-blown analysis of probabilistic actual causation depends, among other things, upon giving an account of what it is for circumstances to be ‘benign’ (ideally, an account that does not itself appeal to actual causation). This is part of what I shall seek to do below, drawing inspiration from Halpern and Pearl’s account of actual causation in the deterministic case.32

But first it is worth considering in a bit more detail precisely what the notion of probability raising amounts to. In this context, some notation introduced by Goldszmidt and Pearl ([1992], pp. 669–70; see also Pearl [2009], pp. 23, 70, 85) is helpful. In that notation, $do(\vec{X} = \vec{x})$ represents the set of variables, $\vec{X}$, coming to have the values $\vec{x}$ as a result of ‘local surgeries’ (Pearl [2009], p. 223), or (just as good) as a result of Woodwardian ‘interventions’ (Woodward [2005], p. 98) or Lewisian ‘small miracles’ (Lewis [1979], p. 468ff), as opposed to coming to have the values $\vec{x}$ as a result of different initial conditions operating via ordinary causal processes.33

Suppose that $\vec{X} = \vec{x}$ is a candidate actual cause and $\varphi$ is a putative effect of $\vec{X} = \vec{x}$. One way of cashing out the idea that variables $\vec{X}$ taking the values $\vec{x}$ rather than $\vec{x}'$ raises the probability of $\varphi$ is in terms of the following inequality:

$$P(\varphi \mid do(\vec{X} = \vec{x})) > P(\varphi \mid do(\vec{X} = \vec{x}')) \qquad (1)$$

This says that the probability of $\varphi$ that would obtain if $\vec{X}$ were to be set to $\vec{x}$ by interventions (or by local surgeries or small miracles)34 is higher than the probability of $\varphi$ that would obtain if $\vec{X}$ were to be set to $\vec{x}'$ by interventions.35 Note that $P(\varphi \mid do(\vec{X} = \vec{x}))$ thus represents something different from $P(\varphi \mid \vec{X} = \vec{x})$. The latter is an ordinary conditional probability: the probability that $\varphi$ obtains conditional upon $\vec{X} = \vec{x}$ obtaining. The former, by contrast, represents a counterfactual probability: the probability for $\varphi$ that would obtain if the variables $\vec{X}$ had been set to the values $\vec{x}$ by interventions.

The counterfactual probability, $P(\varphi \mid do(\vec{X} = \vec{x}))$, is liable to diverge from the conditional probability, $P(\varphi \mid \vec{X} = \vec{x})$; witness the difference between the probability of a storm conditional upon the barometer needle pointing towards the word ‘storm’, on the one hand, and the probability that there would be a storm if I intervened upon the barometer needle to point it towards the word ‘storm’, on the other (cf. Pearl [2009], pp. 110–11).

One of the advantages of appealing to counterfactual probabilities rather than to conditional probabilities in analysing actual causation is precisely that when the counterfactuals in question are given a suitably non-backtracking semantics (that is, where their antecedents are taken to be realized by interventions, small-miracles, local surgeries, or the like), we avoid generating probability-raising relations between independent effects of a common cause (see Lewis [1986a], p. 178). For example, the probability of a storm is higher conditional upon the barometer needle pointing to the word ‘storm’ than it is conditional upon the barometer needle’s not doing so (cf. Salmon [1984], pp. 43–4). This is not because the barometer reading is an actual cause of the storm, but rather because an earlier fall in atmospheric pressure is very probable conditional upon the needle of the barometer pointing towards ‘storm’, and a storm is very probable conditional upon a fall in atmospheric pressure. By contrast, it is false that the probability of a storm would be higher if I were to intervene to point the barometer needle towards ‘storm’ than if I were to intervene to point it towards some other word (for example, ‘sun’), precisely because my intervention breaks the normal association between the atmospheric pressure and the barometer reading. Understanding probability raising in terms of (non-backtracking) counterfactuals thus ensures the elimination of probability-raising relationships that are due merely to common causes.
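The contrast between the two kinds of probability in the barometer case can be made concrete with a toy common-cause model. All of the numbers below are hypothetical and chosen only for illustration: a fall in atmospheric pressure is assumed to make both a ‘storm’ reading and a storm more probable, and an intervention on the needle is modelled by discarding the needle's dependence on pressure.

```python
# Hypothetical chances, assumed purely for illustration.
P_FALL = 0.3                      # chance of a fall in atmospheric pressure
P_NEEDLE_STORM = {1: 0.95, 0: 0.05}  # chance the needle reads 'storm', given fall (1) or no fall (0)
P_STORM = {1: 0.8, 0: 0.1}           # chance of a storm, given fall (1) or no fall (0)


def p_fall(f):
    return P_FALL if f == 1 else 1 - P_FALL


def p_needle(n, f):
    p = P_NEEDLE_STORM[f]
    return p if n == 1 else 1 - p


def conditional_storm(needle):
    """Ordinary conditional probability of a storm given the needle reading."""
    joint = sum(p_fall(f) * p_needle(needle, f) * P_STORM[f] for f in (0, 1))
    marginal = sum(p_fall(f) * p_needle(needle, f) for f in (0, 1))
    return joint / marginal


def interventional_storm(needle):
    """Probability of a storm if the needle were set by an intervention.

    The intervention severs the needle from the pressure, so the needle's
    value plays no role: only the undisturbed pressure distribution matters.
    """
    return sum(p_fall(f) * P_STORM[f] for f in (0, 1))


print(conditional_storm(1), conditional_storm(0))        # reading 'storm' raises the conditional probability
print(interventional_storm(1), interventional_storm(0))  # setting the needle makes no difference
```

On these assumed numbers the conditional probabilities differ while the interventional ones coincide, which is the pattern the non-backtracking reading is meant to deliver.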

Another advantage of appealing to counterfactual probabilities rather than conditional probabilities in analysing actual causation is that we retain the possibility of applying our probabilistic analysis of actual causation to the deterministic case (cf. Lewis [1986a], pp. 178–9). Under determinism, an effect counterfactually depends upon its cause when circumstances are benign (that is, where the effect isn’t overdetermined, and where the cause doesn’t preempt a potential alternative cause of the effect). In the probabilistic case, the effect might merely have its probability raised by the cause in such circumstances. This is because in the probabilistic case, it may well be that the effect would have had a residual background chance of occurring, even if the cause had been absent. For example, the probability that an atom will decay within a given interval of time can in some cases be increased by bombarding it with neutrons. If the atom decays within the relevant time interval, then we might reasonably say that the bombardment was an actual cause. Still, if the bombarded atom was already unstable, it is not true that if it hadn’t been bombarded, then it wouldn’t have decayed within the relevant time interval: it still might have decayed (there would have been a positive, and perhaps even reasonably high, chance of its doing so), it’s just that the probability of its doing so would have been lower than it actually was (cf. Lewis [1986a], p. 176).

Still, if probability raising is understood in terms of inequalities like Inequality (1), then counterfactual dependence can be seen as a limiting case of probability raising. Specifically, suppose that $\vec{X} = \vec{x}$ and $\varphi$ actually obtain and that it is true that if, due to an intervention, $\vec{X} = \vec{x}'$ (rather than $\vec{X} = \vec{x}$) had obtained, then $\neg\varphi$ would have obtained. Plausibly, it follows that $P(\varphi \mid do(\vec{X} = \vec{x}')) = 0$; that is, that if $\vec{X} = \vec{x}'$ had obtained (due to an intervention), then the chance of $\varphi$ would have been zero. After all, if the chance of $\varphi$ would have been greater than zero, then it is not true that $\neg\varphi$ would have obtained (Lewis [1986a], p. 176).36

Counterfactual dependence of upon also requires that if had obtained, then would have obtained. That is, it requires that (or in the notation adopted here). But it very plausibly follows from that . Denying this would require accepting that it could be the case that if had occurred, then would have occurred, even though the probability of occurring would have been equal to zero.37 Putting these two results together, we get that where and occur (which is a necessary condition for their standing in an actual causal relation), if counterfactually depends upon its being the case that rather than , then Inequality (1) holds. Counterfactual dependence is thus a special case of the sort of probabilistic dependence captured by Inequality (1).

As hinted at above, we can think of analyses of deterministic actual causation in terms of SEMs, such as Halpern and Pearl’s, as starting with the insight that effects counterfactually depend upon their actual causes when circumstances are benign, and then giving an account of what variables must be held fixed at which values in order to recover benign circumstances (and therefore contingent counterfactual dependence) even where actual circumstances are unbenign. The probabilistic analysis of actual causation developed below starts with the idea that effects have their probability raised by their actual causes when circumstances are benign, and then gives an account of what variables must be held fixed at which values in order to recover benign circumstances (and therefore contingent probability raising) even where actual circumstances are unbenign.38 Given the structural analogy between the two sorts of account, with probability raising playing the role in the one account that counterfactual dependence plays in the other, if counterfactual dependence is a limiting case of probability raising, then the prospects of a unified treatment of deterministic and probabilistic actual causation look good.

If we cashed out the notion of probability raising, not in terms of the counterfactual probabilities that appear in Inequality (1), but rather in terms of an inequality between conditional probabilities, $P(\varphi \mid \vec{X} = \vec{x}) > P(\varphi \mid \vec{X} = \vec{x}')$, then it would be much less clear that deterministic causation could be treated as a limiting case of probabilistic causation (cf. Lewis [1986a], pp. 178–9). The trouble is that under determinism, it is plausible that causes may have a chance of one of occurring (given initial conditions). Indeed, the putative causes in the deterministic preemption scenario described in Section 2 (namely, Corleone’s order and Barzini’s order) were taken to follow deterministically from the context (and thus to have a chance of one given that context). But where $P(\vec{X} = \vec{x}) = 1$, then $P(\vec{X} = \vec{x}') = 0$ where $\vec{x}' \neq \vec{x}$ and, according to standard probability theory, $P(\varphi \mid \vec{X} = \vec{x}')$ is undefined. So our probabilistic analysis of actual causation will run into trouble in the deterministic case if we understand the notion of probability raising in terms of the inequality $P(\varphi \mid \vec{X} = \vec{x}) > P(\varphi \mid \vec{X} = \vec{x}')$. There is no such problem if we understand probability raising in terms of Inequality (1), since the fact that $P(\vec{X} = \vec{x}') = 0$ does not imply that the counterfactual probability $P(\varphi \mid do(\vec{X} = \vec{x}'))$ (where $\vec{x}' \neq \vec{x}$) is undefined.

It is worth emphasizing that, not only is not the same as , the former isn’t a conditional probability at all. is simply a different probability distribution than ; we could just as well denote these distributions ‘’ and ‘’. In particular, isn’t defined in terms of via the ratio definition of conditional probability, that is, it is not the case that . This could not be the case, since (unlike ) is not an event in the probability space over which is defined (see Pearl [1995], pp. 684–5, [2009], pp. 109–11, 332, 386, 421–2, Woodward [2005], pp. 47–8). Rather, is the actually obtaining probability distribution on the field of events generated by our variable set (of which the variables in and those in are subsets), whereas is the probability distribution (on that same field of events) that would obtain if the variables in were set to the values by interventions. Thus, Pearl ([2009], p. 110) suggests that we can construe the intervention as a function that takes the actual probability distribution and a possible event as an input and yields the counterfactual probability distribution as an output.

I have suggested that, when circumstances are benign, actual causation might involve probability raising. Yet, actual causation cannot simply be identified with the probability raising of one event by another. This is because circumstances aren’t always benign. Preemption cases are among the cases in which circumstances aren’t benign. It was seen in Section 2 that deterministic preemption cases show that counterfactual dependence (even under determinism) is not necessary for actual causation. Probabilistic preemption cases show that probability raising is not necessary for actual causation either. Interestingly, such cases also show that probability raising is not sufficient for actual causation (Menzies [1989], pp. 645–7; Menzies [1996], pp. 88–9). This is in contrast to the deterministic case, where counterfactual dependence, arguably, is sufficient for actual causation. We can describe a probabilistic preemption case by simply modifying our earlier deterministic preemption scenario. The modified scenario is as follows:

PE: The New York Police Department is due to go on parade at the parade ground on Saturday. Knowing this, Don Corleone decides that, when Saturday comes around, he will order Sonny to go to the parade ground and shoot and kill Police Chief McCluskey. Not knowing Corleone’s plan, Don Barzini decides that when Saturday comes around, he will order Turk to shoot and kill McCluskey. To simplify, suppose that each of the following chances is negligible: the chance of each of the dons not issuing his order given his intention to do so, the chance of Turk or Sonny shooting McCluskey if not ordered to do so, the chance of McCluskey dying unless he is hit by either Turk’s or Sonny’s bullet, and the chance of Turk shooting if Sonny shoots. Suppose that Sonny is a fairly obedient type, and that his opportunity to shoot will (with a chance approximating one) come earlier than Turk’s (since Corleone’s headquarters are closer to the police parade ground than Barzini’s headquarters). Let us assume that, given Corleone’s order, there is a 0.9 chance that Sonny will shoot McCluskey. Sonny, however, is not a great shot and if he shoots, there’s only a 0.5 chance that he’ll hit and kill McCluskey. Turk is also obedient, but will (with a chance approximating one) have the opportunity to shoot only if Sonny doesn’t shoot (even if Sonny shoots and misses, McCluskey will almost certainly be whisked away to safety before Turk gets a chance to shoot). But if Barzini issues his order and Sonny does not shoot, then there is a 0.9 chance that Turk will shoot. And if Turk shoots, there is a 0.9 chance that he will hit and kill McCluskey. Suppose that, in actual fact, both Corleone and Barzini issue their orders. Sonny arrives at the parade ground first, shooting and killing McCluskey. Turk arrives on the scene afterwards and doesn’t shoot.

Intuitively, just as in the deterministic scenario, Corleone’s order was a cause of McCluskey’s death, while Barzini’s order was not a cause. Still, the chance of McCluskey’s death if Corleone issued his order was:

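$$P(D = 1 \mid \mathit{do}(C = 1)) \approx (0.9 \times 0.5) + (0.1 \times 0.9 \times 0.9) = 0.531 \tag{2}$$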

That is (given the stipulations of the example), the chance of McCluskey’s death if Corleone issues his order is approximately equal to the probability that Sonny shoots if Corleone issues his order (0.9), multiplied by the probability that Sonny hits and kills McCluskey if he shoots (0.5), plus the probability that Sonny doesn’t shoot if Corleone issues his order (0.1), multiplied by the product of the probability that Turk shoots if Sonny doesn’t (0.9), and the probability that Turk hits and kills McCluskey if he shoots (0.9).

By contrast, the chance of McCluskey’s death if Corleone had not issued his order would have been:

$$P(D = 1 \mid \mathit{do}(C = 0)) \approx 0.9 \times 0.9 = 0.81 \tag{3}$$

That is (given the stipulations of the example), the chance of McCluskey’s death if Corleone had not issued his order would be approximately equal to the chance that Turk would shoot if Barzini issued his order and Sonny had not shot (0.9), multiplied by the probability that Turk would hit and kill McCluskey if he shot (0.9).

It is worth noting that in evaluating these probabilities, there is no need to explicitly hold fixed the context (namely, the intentions of the dons to issue their orders, $CI = 1$ and $BI = 1$) by including it as an argument of the $\mathit{do}(\cdot)$ function in the counterfactual probability expressions that appear on the left-hand sides of Approximate Equalities (2) and (3) (so that the expression on the left-hand side of Approximate Equality (3), for example, would become $P(D = 1 \mid \mathit{do}(C = 0, CI = 1, BI = 1))$). This is because the context is already held fixed, implicitly, in virtue of the non-backtracking nature of the counterfactuals. In evaluating the counterfactual probability expressed by Approximate Equality (3), for example, we are to consider a world in which C is set to C = 0 by an intervention (or local surgery or small miracle) that leaves the context, $CI = 1$ and $BI = 1$, undisturbed. The same point applies to all of the counterfactual probabilities considered below.

It follows immediately from Approximate Equalities (2) and (3) that in spite of our intuitive judgement that Corleone’s order was a cause of McCluskey’s death, the former actually lowers the probability of the latter. Specifically,

$$P(D = 1 \mid \mathit{do}(C = 1)) < P(D = 1 \mid \mathit{do}(C = 0)) \tag{4}$$

Intuitively, the reason why Corleone’s order lowers the probability of McCluskey’s death is that Turk is by far the more competent assassin, and a botched assassination attempt by the relatively incompetent Sonny would prevent Turk from getting an opportunity to attempt the assassination. So although Corleone’s order was an actual cause of McCluskey’s death (because Sonny succeeded), Corleone’s order lowered the probability of McCluskey’s death (because it raised the probability that Sonny would carry out a botched attempt that would prevent the far more competent Turk from taking a shot). The example thus illustrates the well-known fact that causes need not raise the probability of their effects.39

Also well-known is the fact that an event can have its probability raised by another event that is not among its causes.40 The above example illustrates this phenomenon too. Since Corleone and Barzini issue their orders independently and since (in the context in which they both form the intention to do so) each does so with a probability of approximately one, the probability of McCluskey’s death if Barzini issues his order is approximately equal to the probability of McCluskey’s death if Corleone issues his order. That is,

$$P(D = 1 \mid \mathit{do}(B = 1)) \approx P(D = 1 \mid \mathit{do}(C = 1)) \approx 0.531 \tag{5}$$

However, the probability of McCluskey’s death if Barzini had not issued his order is approximately equal to the probability that Sonny shoots if Corleone orders him to (0.9), multiplied by the probability that Sonny hits and kills McCluskey if he shoots (0.5). That is,

$$P(D = 1 \mid \mathit{do}(B = 0)) \approx 0.9 \times 0.5 = 0.45 \tag{6}$$

It follows immediately from Approximate Equalities (5) and (6) that Barzini’s order raises the probability of McCluskey’s death. Specifically:

$$P(D = 1 \mid \mathit{do}(B = 1)) > P(D = 1 \mid \mathit{do}(B = 0)) \tag{7}$$

Intuitively, the reason that Barzini’s order raises the probability of McCluskey’s death is that there is some chance that Sonny will fail to shoot and in such circumstances, given Barzini’s order, there is a (fairly high) chance that Turk will shoot and kill McCluskey instead. Since Barzini’s order is nevertheless not an actual cause of McCluskey’s death, the example thus illustrates the fact that probability raising isn’t sufficient for actual causation (even when we understand probability raising in terms of non-backtracking counterfactuals so as to eliminate the influence of common causes),41 as well as not being necessary.
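The arithmetic behind Approximate Equalities (2), (3), (5), and (6) can be checked with a minimal Python sketch. It is an illustration only: the helper `chance_of_death` and the constant names are hypothetical, and the sketch assumes that chances described as negligible are exactly 0 and that chances 'approximating one' are exactly 1.

```python
# Stipulated chances from scenario PE. Chances described as negligible are
# rounded to 0 and chances "approximating one" to 1 (an idealisation).
P_SONNY_SHOOTS = 0.9  # Sonny shoots, given Corleone's order
P_SONNY_KILLS = 0.5   # McCluskey dies, given that Sonny shoots
P_TURK_SHOOTS = 0.9   # Turk shoots, given Barzini's order and no shot by Sonny
P_TURK_KILLS = 0.9    # McCluskey dies, given that Turk shoots


def chance_of_death(c: int, b: int) -> float:
    """Approximate chance of D = 1 when the orders are set to C = c, B = b.

    Hypothetical helper: c and b are 1 if the order is issued, else 0.
    """
    p_sonny = P_SONNY_SHOOTS * c
    p_turk_if_no_sonny = P_TURK_SHOOTS * b
    via_sonny = p_sonny * P_SONNY_KILLS
    via_turk = (1 - p_sonny) * p_turk_if_no_sonny * P_TURK_KILLS
    return via_sonny + via_turk


# In the actual context each don issues his order with a chance close to one,
# so the order that is not intervened upon is set to 1.
p_c1 = chance_of_death(c=1, b=1)  # Approximate Equality (2)
p_c0 = chance_of_death(c=0, b=1)  # Approximate Equality (3)
p_b1 = chance_of_death(c=1, b=1)  # Approximate Equality (5)
p_b0 = chance_of_death(c=1, b=0)  # Approximate Equality (6)

print(f"{p_c1:.3f} {p_c0:.3f} {p_b1:.3f} {p_b0:.3f}")
# prints: 0.531 0.810 0.531 0.450
```

On these assumptions Corleone's order lowers the chance of death (0.531 against 0.81), while Barzini's order raises it (0.531 against 0.45), which is the pattern expressed by Inequalities (4) and (7).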

That probability raising is neither necessary nor sufficient for actual causation creates a difficulty for existing attempts to analyse probabilistic actual causation (see Hitchcock [2004]). And, as it stands, Halpern and Pearl’s definition AC does not give the correct diagnosis of probabilistic preemption cases like the one just described. In particular, it fails to diagnose Corleone’s order as a cause of McCluskey’s death. The reason is that it is no longer true (as it was in the deterministic preemption case considered above) that as condition AC2(a) requires, (i) given Turk’s non-shooting (and Barzini’s issuing his order), McCluskey’s death counterfactually depends upon Corleone’s order. After all, if Corleone hadn’t issued his order and (Barzini had issued his order but) Turk hadn’t shot, then McCluskey might still have died (from a heart attack, say). I said that the chance of his dying in these circumstances was negligible, not that it was zero.42 Moreover, I made the assumption of a negligible probability of his dying in such a situation only for calculational simplicity. In a probabilistic context, one can revise the example so that the probability is rather large, while still ensuring that Corleone’s order is a non-probability-raising cause and Barzini’s order is a probability-raising non-cause of McCluskey’s death.43

Moreover, since the Corleone process is now only probabilistic, it is not true, as condition AC2(b) requires, that (ii) if Corleone had issued his order, and (Barzini had issued his order but) Turk hadn’t shot, then McCluskey would have died. After all, in the probabilistic case, there is some chance that Sonny doesn’t shoot even if Corleone issues his order; it was a stipulation of the example that the chance of Sonny shooting if Corleone issues his order is only 0.9. There is also some chance that Sonny fails to kill McCluskey even if he does shoot; it was a stipulation of the example that the chance of McCluskey dying if Sonny shoots is only around 0.5. So it is not true that if Corleone had issued his order and Turk hadn’t shot, then McCluskey would have died. The chance of his dying under such circumstances is only approximately $0.9 \times 0.5 = 0.45$.44 So, in general, Halpern and Pearl’s definition AC does not (and is not intended to) deliver the correct results in the probabilistic case.

Nevertheless, it seems prima facie plausible that Halpern and Pearl’s account might be extended to provide a satisfactory treatment of probabilistic actual causation by substituting its appeals to contingent counterfactual dependence with appeals to contingent probability raising. Specifically, one might maintain that Corleone’s order was a cause of McCluskey’s death because (i) given Turk’s non-shooting (and Barzini’s order), Corleone’s order raised the probability of McCluskey’s death. After all, given Turk’s non-shooting, the probability of McCluskey’s death would have been lower (approximately zero) if Corleone hadn’t issued his order than if he had (approximately $0.9 \times 0.5 = 0.45$). Moreover, (ii) there is a complete probabilistic causal process running from Corleone’s order to McCluskey’s death, as indicated by the fact that for arbitrary subsets of events on the Corleone process, it is true that given that Turk didn’t shoot (and Barzini issued his order), if Corleone had issued his order, and those events had occurred, then the probability of McCluskey’s death would have remained higher than it would have been if Corleone hadn’t issued his order.

By contrast, plausibly Barzini’s order isn’t an actual cause. It is true that (i) given Sonny’s not shooting (and Corleone’s issuing his order), Barzini’s order raised the probability of McCluskey’s death: given Sonny’s non-shooting, the probability of McCluskey’s death would have been lower (approximately zero) if Barzini hadn’t issued his order than if he had (approximately $0.9 \times 0.9 = 0.81$). It is nevertheless not true that (ii) there is a complete probabilistic causal process from Barzini’s order to McCluskey’s death. This is indicated by the fact that, for example, if Sonny hadn’t shot (and Corleone had issued his order) and (as was actually the case) Barzini issued his order but Turk didn’t shoot, then the probability of McCluskey’s death would have been no higher than if Barzini hadn’t issued his order in the first place.
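The contingent probability-raising comparisons just described can be reproduced with a short Python sketch. It is a sketch under stated assumptions, not a definitive model: the function `p_death` and its `interventions` argument are hypothetical names, negligible chances are rounded to 0 and near-certain ones to 1, and the chance of death when both gunmen shoot (which arises only under interventions) is assumed to combine the two hit chances independently.

```python
def p_death(interventions: dict) -> float:
    """Approximate P(D = 1 | do(interventions)) in the context CI = 1, BI = 1.

    `interventions` maps any of "C", "B", "S", "T" to a forced value 0 or 1.
    """
    def options(var: str, p_one: float):
        # An intervention overrides the variable's usual chance distribution.
        if var in interventions:
            return [(interventions[var], 1.0)]
        return [(1, p_one), (0, 1.0 - p_one)]

    total = 0.0
    for c, pc in options("C", 1.0):          # both dons intend to give orders
        for b, pb in options("B", 1.0):
            for s, ps in options("S", 0.9 * c):
                for t, pt in options("T", 0.9 * b * (1 - s)):
                    # Assumption: independent hit chances if both shoot.
                    p_d = 1 - (1 - 0.5 * s) * (1 - 0.9 * t)
                    total += pc * pb * ps * pt * p_d
    return total


# (i) for Corleone: hold Turk's non-shooting fixed and vary C.
corleone_on = p_death({"C": 1, "T": 0})    # approximately 0.45
corleone_off = p_death({"C": 0, "T": 0})   # approximately 0

# (i) for Barzini: hold Sonny's non-shooting fixed and vary B.
barzini_on = p_death({"B": 1, "S": 0})     # approximately 0.81
barzini_off = p_death({"B": 0, "S": 0})    # approximately 0

# (ii) for Barzini fails: with Turk's actual non-shooting also held fixed,
# the order no longer raises the chance of death.
barzini_actual_process = p_death({"B": 1, "S": 0, "T": 0})
```

Here `corleone_on > corleone_off` and `barzini_on > barzini_off`, but `barzini_actual_process` is no higher than `barzini_off`, which matches the verdict that only Corleone's order has a complete probabilistic causal process running to the death.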

In order to render this suggestion more precise, it will be necessary to appeal to the notion of a ‘probabilistic causal model’.

7 Probabilistic Causal Models

As noted in the previous section, Pearl ([2009], p. 110) suggests that we can construe $\mathit{do}(\cdot)$ as a function that takes a probability distribution $P$ and a formula of the form $\vec{X} = \vec{x}$ as an input, and yields a new probability distribution, $P(\cdot \mid \mathit{do}(\vec{X} = \vec{x}))$, as an output. Thinking of $\mathit{do}(\cdot)$ in these terms, we can construe a probabilistic causal model, $\mathcal{M}$, as an ordered triple $\langle \mathcal{V}, P, \mathit{do}(\cdot) \rangle$, where $\mathcal{V}$ is a set of variables, P is a probability distribution defined on the field of events generated by the variables in $\mathcal{V}$, and $\mathit{do}(\cdot)$ is a function that, when P and any formula of the form $\vec{X} = \vec{x}$ for $\vec{X} \subseteq \mathcal{V}$ are taken as its inputs, yields as an output a new distribution $P(\cdot \mid \mathit{do}(\vec{X} = \vec{x}))$: the probability distribution that would result from intervening upon the variables $\vec{X}$ to set their values equal to $\vec{x}$.

The variable set $\mathcal{V}$ can be partitioned into a set of exogenous variables, $\mathcal{U}$, and a set of endogenous variables, $\mathcal{Y}$. In the probabilistic context, an exogenous variable $U \in \mathcal{U}$ is such that for no possible value u of U is there a pair of possible value assignments, $\{\vec{x}, \vec{x}'\}$, to the variables in $\vec{X} = \mathcal{V} \setminus \{U\}$ such that $P(U = u \mid \mathit{do}(\vec{X} = \vec{x})) \neq P(U = u \mid \mathit{do}(\vec{X} = \vec{x}'))$. That is, U is exogenous if and only if the probability of none of the possible values of U is affected by interventions on the values of the other variables in $\mathcal{V}$. The endogenous variables, $\mathcal{Y}$, are the variables that are not exogenous. An assignment of values to the variables in the set of exogenous variables, denoted $\vec{u}$, is (once again) called a ‘context’.

It was observed in Section 3 that Hitchcock ([2001a], p. 287) takes it to be a condition for the ‘appropriateness’ of an SEM, $\mathcal{M}$, that it ‘entail no false counterfactuals’, by which he means that evaluating counterfactuals with respect to $\mathcal{M}$ by means of the equation replacement method doesn’t lead to evaluations of counterfactuals as true when they are in fact false (Hitchcock [2001a], p. 283). We can make an analogous requirement of probabilistic causal models. Specifically, where $\mathcal{M} = \langle \mathcal{V}, P, \mathit{do}(\cdot) \rangle$ is our probabilistic causal model, it should be required that for any formula of the form $\vec{X} = \vec{x}$, such that $\vec{X} \subseteq \mathcal{V}$, the distribution $P(\cdot \mid \mathit{do}(\vec{X} = \vec{x}))$ that the function $\mathit{do}(\cdot)$ yields as an output (when P and $\vec{X} = \vec{x}$ are its inputs) should be the true objective chance distribution on the field of events generated by the variables in $\mathcal{V}$ that would result from intervening upon the variables $\vec{X}$ to set their values equal to $\vec{x}$.45

In modelling our probabilistic preemption scenario, we can take the variable set $\mathcal{V}$ to comprise the variables CI, BI, C, B, S, T, and D, where these variables all have the same possible values (with the same interpretations) as they did in the deterministic case. To be appropriate, our probabilistic causal model should satisfy the requirement described in the previous paragraph: where $\mathcal{M} = \langle \mathcal{V}, P, \mathit{do}(\cdot) \rangle$, for any formula of the form $\vec{X} = \vec{x}$ such that $\vec{X} \subseteq \mathcal{V}$, the distribution $P(\cdot \mid \mathit{do}(\vec{X} = \vec{x}))$ that the function $\mathit{do}(\cdot)$ yields as an output (when P and $\vec{X} = \vec{x}$ are its inputs) should be the true objective chance distribution on the field of events generated by the variables in $\mathcal{V}$ that would result from intervening upon the variables $\vec{X}$ to set their values equal to $\vec{x}$. Since I am assuming the probabilities described in the probabilistic preemption example (as outlined in the previous section) to be objective chances, these probabilities should be among those that result from the appropriate inputs to $\mathit{do}(\cdot)$.

We can construct a graphical representation of a probabilistic model, $\mathcal{M}$, by taking the variables in $\mathcal{V}$ as the nodes or vertices of the graph and drawing a directed edge (‘arrow’) from a variable $V_i$ to a variable $V_j$ ($i \neq j$) just in case, where $\mathcal{S} = \mathcal{V} \setminus \{V_i, V_j\}$, there is some assignment of values $\mathcal{S} = \vec{s}$, some pair of possible values $\{v_i, v_i'\}$ ($v_i \neq v_i'$) of $V_i$, and some possible value $v_j$ of $V_j$ such that $P(V_j = v_j \mid \mathit{do}(V_i = v_i, \mathcal{S} = \vec{s})) \neq P(V_j = v_j \mid \mathit{do}(V_i = v_i', \mathcal{S} = \vec{s}))$. That is, an arrow is drawn from $V_i$ to $V_j$ just in case there is some assignment of values to all other variables in $\mathcal{V}$ such that the value of $V_i$ makes a difference to the probability distribution over the values of $V_j$ when the other variables in $\mathcal{V}$ take the assigned values. As in the deterministic case, where there is an arrow from $V_i$ to $V_j$, $V_i$ is said to be a parent of $V_j$, and $V_j$ to be a child of $V_i$. Once again, ancestorhood is defined in terms of the transitive closure of parenthood, and descendanthood in terms of the transitive closure of childhood.
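The arrow-drawing criterion can be illustrated for the probabilistic preemption scenario with a short Python sketch. The sketch is hedged in several ways: `chance_of_one` and `edges` are hypothetical helpers, negligible chances are rounded to 0 and near-certain ones to 1, the chance of death when both gunmen shoot is assumed to combine the two hit chances independently, and the value 0.5 for the exogenous intention variables is an arbitrary placeholder that plays no role in the result.

```python
from itertools import product

VARIABLES = ["CI", "BI", "C", "B", "S", "T", "D"]


def chance_of_one(var: str, v: dict) -> float:
    """Chance that `var` takes value 1 when every other variable is set,
    by intervention, to the value it has in `v`."""
    if var in ("CI", "BI"):
        return 0.5  # placeholder prior; unaffected by any intervention
    if var == "C":
        return float(v["CI"])
    if var == "B":
        return float(v["BI"])
    if var == "S":
        return 0.9 * v["C"]
    if var == "T":
        return 0.9 * v["B"] * (1 - v["S"])
    if var == "D":
        # Assumption: independent hit chances if both gunmen shoot.
        return 1 - (1 - 0.5 * v["S"]) * (1 - 0.9 * v["T"])
    raise ValueError(f"unknown variable: {var}")


def edges() -> set:
    """Return the arrows (Vi, Vj) licensed by the difference-making test."""
    found = set()
    for vi, vj in product(VARIABLES, repeat=2):
        if vi == vj:
            continue
        rest = [x for x in VARIABLES if x not in (vi, vj)]
        # Search for an assignment to the remaining variables under which
        # switching Vi changes the chance distribution over Vj.
        for values in product((0, 1), repeat=len(rest)):
            s = dict(zip(rest, values))
            p0 = chance_of_one(vj, {**s, vi: 0})
            p1 = chance_of_one(vj, {**s, vi: 1})
            if abs(p0 - p1) > 1e-12:
                found.add((vi, vj))
                break
    return found


for parent, child in sorted(edges()):
    print(f"{parent} -> {child}")
```

Because every variable here is binary, comparing the chance of value 1 under the two settings of $V_i$ is enough to detect a difference in the whole distribution over $V_j$. On the stated assumptions the sketch yields arrows from CI to C, BI to B, C to S, B to T, S to T, S to D, and T to D.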